Abstract

Advanced Driver-Assistance Systems (ADASs) are used for increasing safety in the automotive domain, yet current ADASs notably operate without taking into account drivers’ states, e.g., whether she/he is emotionally apt to drive. In this paper, we first review the state-of-the-art of emotional and cognitive analysis for ADAS: we consider psychological models, the sensors needed for capturing physiological signals, and the typical algorithms used for human emotion classification. Our investigation highlights a lack of advanced Driver Monitoring Systems (DMSs) for ADASs, which could increase driving quality and security for both drivers and passengers. We then provide our view on a novel perception architecture for driver monitoring, built around the concept of Driver Complex State (DCS). DCS relies on multiple non-obtrusive sensors and Artificial Intelligence (AI) for uncovering the driver state and uses it to implement innovative Human–Machine Interface (HMI) functionalities. This concept will be implemented and validated in the recently EU-funded NextPerception project, which is briefly introduced.

1. Introduction

In recent years, the automotive field has been pervaded by an increasing level of automation. This automation has introduced new possibilities with respect to manual driving. Among all the technologies for vehicle driving assistance, on-board Advanced Driver-Assistance Systems (ADASs), employed in cars, trucks, etc. [1], bring about remarkable possibilities in improving the quality of driving, safety, and security for both drivers and passengers. Examples of ADAS technologies are Adaptive Cruise Control (ACC) [2], Anti-lock Braking System (ABS) [3], alcohol ignition interlock devices [4], automotive night vision [5], collision avoidance systems [6], driver drowsiness detection [7], Electronic Stability Control (ESC) [8], Forward Collision Warnings (FCW) [9], Lane Departure Warning System (LDWS) [10], and Traffic Sign Recognition (TSR) [11]. Most ADASs consist of electronic systems developed to adapt and enhance vehicle safety and driving quality. They are proven to reduce road fatalities by compensating for human errors. To this end, safety features provided by ADASs target accident and collision avoidance, usually realizing safeguards, alarms, notifications, and blinking lights and, if necessary, taking control of the vehicle itself.

ADASs rely on the following assumptions: (i) the driver is attentive and emotionally ready to perform the right operation at the right time, and (ii) the system is capable of building a proper model of the surrounding world and of making decisions or raising alerts accordingly. Unfortunately, even if modern vehicles are equipped with complex ADASs (such as the aforementioned ones), the number of crashes is only partially reduced by their presence. In fact, the human driver is still the most critical factor in about 94% of crashes [12].

However, most of the current ADASs implement only simple mechanisms to take into account drivers’ states or do not take them into account at all. An ADAS informed about the driver’s state could take contextualized decisions compatible with his/her possible reactions. Knowing the driver’s state means continuously recognizing whether the driver is physically, emotionally, and physiologically apt to guide the vehicle, as well as effectively communicating the ADAS decisions to the driver. Such an in-vehicle system to monitor drivers’ alertness and performance is very challenging to obtain and, indeed, comes with many issues.

- The incorrect estimation of a driver’s state as well as of the status of the ego-vehicle (also denoted as subject vehicle or Vehicle Under Test (VUT) and referring to the vehicle containing the sensors perceiving the environment around the vehicle itself) [13,14] and the external environment may cause the ADAS to incorrectly activate or to make wrong decisions. Besides immediate danger, wrong decisions reduce drivers’ confidence in the system.

- Many sensors are needed to achieve such an ADAS. Sensors are prone to errors and require several processing layers to produce usable outputs, where each layer introduces delays and may hide/damage data.

- Dependable systems that recognize emotions and humans’ states are still a research challenge. They are usually built around algorithms that require heterogeneous input data provided by different sensing technologies, which may introduce unexpected errors into the system.

- An effective communication between the ADAS and the driver is hard to achieve. Indeed, human distraction plays a critical role in car accidents [15] and can be caused by both external and internal causes.

In this paper, we first present a literature review on the application of human state recognition for ADAS, covering psychological models, the sensors employed for capturing physiological signals, algorithms used for human emotion classification, and algorithms for human–car interaction. In particular, some of the aspects that researchers are trying to address can be summarized as follows:

- adoption of tactful monitoring of psychological and physiological parameters (e.g., eye closure) able to significantly improve the detection of dangerous situations (e.g., distraction and drowsiness)

- improvement in detecting dangerous situations (e.g., drowsiness) with a reasonable accuracy and based on the use of driving performance measures (e.g., through monitoring of “drift-and-jerk” steering as well as detection of fluctuations of the vehicle in different directions)

- introduction of “secondary” feedback mechanisms, subsidiary to those originally provided in the vehicle, able to further enhance detection accuracy—this could be the case of auditory recognition tasks returned to the vehicle’s driver through a predefined and prerecorded human voice, which is perceived by the human ear in a more acceptable way compared to a synthetic one.

Moreover, the complex processing tasks of modern ADASs are increasingly tackled by AI-oriented techniques. AI can solve complex classification tasks that were previously thought to be very hard (or even impossible). Human state estimation is a typical task that can be approached by AI classifiers. At the same time, the use of AI classifiers brings about new challenges. As an example, an ADAS can potentially be improved by a reliable identification of the driver’s emotional state, e.g., in order to activate haptic alarms in case of imminent forward collisions. Even if such an ADAS could tolerate a few misclassifications, the AI component for human state classification needs to have a very high accuracy to reach automotive-grade reliability. Hence, it should be possible to prove that a classifier is sufficiently robust against unexpected data [16].

We then introduce a novel perception architecture for ADAS based on the idea of Driver Complex State (DCS). The DCS of the vehicle’s driver is estimated by monitoring his/her behavior via multiple non-obtrusive sensors and AI algorithms, providing cognitive and emotional state classifiers to the ADAS. We argue that this approach is a smart way to improve safety for all occupants of a vehicle. We believe that, to be successful, the system must adopt unobtrusive sensing technologies for the detection of human parameters, safe and transparent AI algorithms that satisfy stringent automotive requirements, as well as innovative Human–Machine Interface (HMI) functionalities. Our ultimate goal is to provide solutions that improve in-vehicle ADAS, increasing safety, comfort, and performance in driving. The concept will be implemented and validated in the recently EU-funded NextPerception project [17], which will be briefly introduced.

1.1. Survey Methodology

Our literature review starts by observing a lack of integration in modern ADASs of comprehensive features that take into account the emotional and cognitive states of a human driver. Starting from this observation, we focused the analysis on the following three aspects:

- The state-of-the-art of modern ADASs, considering articles and surveys in a 5-year time-frame. We limit ourselves here to the highly cited articles that are still relevant today.

- Psychological frameworks for emotion/cognition recognition. We have reviewed both the classic frameworks (e.g., Tomkins [18], Russell [19], Ekman [20], etc.) and recent applications of these techniques in the automotive domain. We observe a lack of usage of such specialized frameworks in real-world ADAS technologies.

- Sensors and AI-based systems, proposed in recent literature, that we consider relevant to implementation of the above frameworks in the automotive domain.

Based on this summary of the state-of-the-art, we make a proposal of possible improvements going in two distinct directions: (i) the integration of ADAS with unobtrusive perception sensors and (ii) the human driver’s status recognition using complex psychological analyses based on modern AI methods. We provide illustrative scenarios where the integration could take place, highlighting the difference with existing technologies.

1.2. Article Structure

The rest of the paper is organized as follows. Section 2 reviews the state-of-the-art on ADAS and human emotion recognition through sensing technologies. Section 3 describes the improvements that are proposed in DCS estimation and their impact on ADAS, together with the way the proposed ideas will be validated in the H2020 EU project NextPerception. Section 4 discusses useful recommendations and future directions related to the topics covered in this paper. Finally, in Section 5, we draw our conclusions.

2. ADAS Using Driver Emotion Recognition

In the automotive sector, the ADAS industry is a growing segment aiming at increasing the adoption of industry-wide functional safety in accordance with several quality standards, e.g., the automotive-oriented ISO 26262 standard [21]. ADAS increasingly relies on standardized computer systems, such as the Vehicle Information Access API [22], Volkswagen Infotainment Web Interface (VIWI) protocol [23], and On-Board Diagnostics (OBD) codes [24], to name a few.

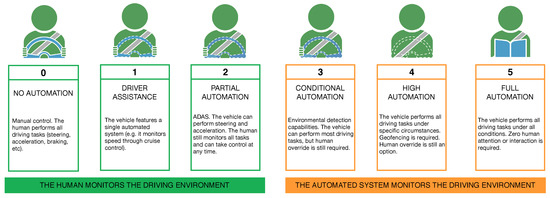

In order to achieve advanced ADAS beyond semiautonomous driving, there is a clear need for appropriate knowledge of the driver’s status. These cooperative systems are captured in the Society of Automotive Engineers (SAE) level hierarchy of driving automation, summarized in Figure 1. These levels range from level 0 (manual driving) to level 5 (fully autonomous vehicle), with intermediate levels representing semiautonomous driving situations, with a mixed driver–vehicle degree of cooperation.

Figure 1. Society of Automotive Engineers (SAE) levels for driving automation.

According to SAE levels, in these mixed systems, automation is partial and does not cover every possible anomalous condition that can happen during driving. Therefore, the driver’s active presence and his/her reaction capability remain critical. In addition, the complex data processing needed for higher automation levels will almost inevitably require various forms of AI (e.g., Machine Learning (ML) components), in turn bringing security and reliability issues.
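The level hierarchy described above can be written down as a small data structure. The following is a minimal sketch, not part of any standard API: the text only names level 0 (manual driving) and level 5 (fully autonomous vehicle), so the intermediate member names follow common SAE J3016 usage and, like the helper `is_mixed_cooperation`, are assumptions made for illustration.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """SAE driving-automation levels, from 0 (manual) to 5 (fully autonomous)."""
    NO_AUTOMATION = 0           # manual driving
    DRIVER_ASSISTANCE = 1       # intermediate names are assumed, see lead-in
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5         # fully autonomous vehicle


def is_mixed_cooperation(level: SAELevel) -> bool:
    """Return True for the intermediate (semiautonomous) levels, where driver
    and vehicle share the driving task to some degree."""
    return SAELevel.NO_AUTOMATION < level < SAELevel.FULL_AUTOMATION


# Hypothetical usage: list the levels with mixed driver-vehicle cooperation.
mixed_levels = [lvl.name for lvl in SAELevel if is_mixed_cooperation(lvl)]
```

In such a representation, a driver monitoring component could use the current level to decide how much weight to give to the driver's readiness, although the exact policy is outside the scope of this sketch.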

Driver Monitoring Systems (DMSs) are a novel type of ADAS that has emerged to help predict driving maneuvers, driver intent, and vehicle and driver states, with the aim of improving transportation safety and driving experience as a whole [25]. For instance, by coupling sensing information with accurate lane changing prediction models, a DMS can prevent accidents by warning the driver ahead of time of potential danger [26]. As a measure of the effectiveness of this approach, progressive advancements of DMSs can be found in a number of review papers. Lane changing models have been reviewed in [27], while in [28,29], developments in driver’s intent prediction with emphasis on real-time vehicle trajectory forecasting are surveyed. The work in [30] reviews driver skills and driving behavior recognition models. A review of the cognitive components of driver behavior can also be found in [7], where situational factors that influence driving are addressed. Finally, a recent survey on human behavior prediction can be found in [31].

In the following, we review the state-of-the-art of driver monitoring ADAS, dealing in particular with emotion recognition (Section 2.1), their combined utilisation with Human–Machine Interfaces (HMIs, Section 2.2), the safety issues brought about by the adoption of AI in the automotive field (Section 2.3), as well as its distribution across multiple components and sensors (Section 2.4).

2.1. Emotions Recognition in the Automotive Field

Emotions play a central role in everyone’s life, as they give flavor to intra- and interpersonal experiences. Over the past two decades, a large number of investigations have been conducted on both the neurological origin and the social function of emotions. Studies from the last century seem to have validated the discrete emotion theory, which states that some specific emotional responses are biologically determined, regardless of ethnic or cultural differences among individuals. Many biologists and psychologists have commented on the characteristics of this set of “primary emotions”, theorizing various emotional sets [32,33] and/or dimensional models of affect [19,34]. A great contribution has come from the seminal work of the American psychologist Paul Ekman, who long theorized a discrete set of physiologically distinct emotions: anger, fear, disgust, happiness, surprise, and sadness [20]. Ekman’s set of emotions is surely one of the most widely considered.

Nowadays, understanding emotions often translates into the possibility of enhancing Human–Computer Interactions (HCIs) with a meaningful impact on the improvement of driving conditions. A variety of technologies exist to automatically recognize human emotions, like facial expression analysis, acoustic speech processing, and biological response interpretation.

2.1.1. Facial Expression and Emotion Recognition

Besides suggesting the primary set of six emotions, Ekman also proposed the Facial Action Coding System (FACS) [35], which puts facial muscle movement in relation with a number of Action Unit (AU) areas. This methodology is widely used in the Computer Vision (CV) field.

Facial expression classifiers can be either geometric-based or appearance-based. The latter category aims to find significant image descriptors based on pixel information only. Thanks to recent and continuous improvements on the ImageNet challenge [36], the application of Deep Learning (DL) algorithms has emerged as a trend among appearance-based algorithms [37]. A lot of DL architectures have been proposed and employed for facial emotion recognition, each of which outperformed its ancestors, thereby constantly improving state-of-the-art accuracy and performance [38].

The most efficient DL architectures to be applied in these scenarios are Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), and Long Short-Term Memory (LSTM) networks. Hybrid frameworks also exist, in which different architectures’ characteristics are combined together in order to achieve better results [39].

State-of-the-art CNNs have reached a noteworthy classification capability, performing reasonably well in controlled scenarios. Hence, research efforts have shifted toward the categorization of emotions in more complex scenarios (namely, wild scenarios), aiming at a similarly high accuracy [40]. This is required to allow the application of these classifiers in any safety-critical scenario (as will be discussed in Section 2.3). Much progress has been made in CV for emotion recognition. However, emotion classification in uncontrolled, wild scenarios still remains a challenge due to the high variability arising from subject pose, environment illumination, and camera resolution. To this end, the literature suggests enriching facial expression datasets by labeling images captured in different conditions (i.e., in real-world conditions and not in a laboratory) and training models to overcome the limitations of available algorithms in emotion recognition [41]; unfortunately, this procedure is still complex and costly.

2.1.2. Valence and Engagement

Besides the prediction of which of Ekman’s six emotions is most likely to be perceived by the user, affect valence is investigated too. In detail, valence expresses the pleasantness of an emotion and thus differentiates between pleasant (positive) and unpleasant (negative) feelings. While having a view on the valence dimension is often useful, as nonbasic affective states may appear, one should not forget that even basic emotions frequently blend together. This is why valence is a commonly investigated dimension, especially in psychology research areas [42].

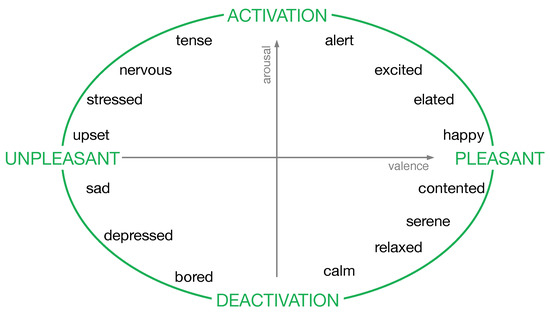

A discrete partition of emotions is a useful simplification to work with. However, the real perception of feelings is far more complex and continuous, which is reflected in the difficulty that many people have in describing and assessing their own emotions. With this in mind, the valence dimension was suggested as part of one of the so-called “circumplex models” of emotions, specifically the Russell model [19], in which linear combinations of valence and arousal levels provide a two-dimensional affective space, as shown in Figure 2.

Figure 2. Graphical representation of Russell’s circumplex model of affect: valence is reported on the horizontal axis (ranging from totally unpleasant to fully pleasant), while arousal is reported on the vertical axis.

On the basis of this classification, the work in [43] found that an optimal driving condition is the one in which the driver is in a medium arousal state with medium-to-high valence.
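To make the two-dimensional affective space more concrete, the sketch below places an affective state in the valence–arousal plane and checks whether it falls in the "medium arousal, medium-to-high valence" region. It is only an illustration: the scaling of both axes to [-1, 1], the threshold values, the coordinates used for anger and fear, and all names (`Affect`, `quadrant`, `is_optimal_for_driving`) are assumptions and are not taken from [19] or [43].

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Affect:
    """A point in the valence-arousal plane; both axes are assumed in [-1, 1]."""
    valence: float  # -1 = totally unpleasant, +1 = fully pleasant
    arousal: float  # -1 = lowest activation, +1 = highest activation


def quadrant(a: Affect) -> str:
    """Name the quadrant of the circumplex in which the point falls."""
    v = "positive" if a.valence >= 0 else "negative"
    r = "high" if a.arousal >= 0 else "low"
    return f"{v} valence / {r} arousal"


def is_optimal_for_driving(a: Affect,
                           arousal_band: float = 0.3,
                           min_valence: float = 0.0) -> bool:
    """Medium arousal (close to the midpoint of the axis) together with
    medium-to-high valence. Both thresholds are illustrative assumptions."""
    return abs(a.arousal) <= arousal_band and a.valence >= min_valence


# Hypothetical coordinates, chosen only to illustrate the idea.
anger = Affect(valence=-0.7, arousal=0.8)
fear = Affect(valence=-0.6, arousal=0.7)
calm_and_content = Affect(valence=0.4, arousal=0.1)
```

With these illustrative coordinates, anger and fear land in the same quadrant, while only the third state satisfies the assumed optimality check. In practice, the thresholds would have to be calibrated on experimental data.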

Finally, psychology has made great strides in understanding the effects of emotions on user attention and engagement [44]. In accordance with the arousal–valence model, it is possible to classify the emotional engagement into active and passive engagement and positive and negative engagement [45]. The engagement level is then obtained as the sum of each Ekman emotion’s probability of occurrence; hence, this can be used as an additional indicator of driving performance, as reported in [46].
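The engagement level described above, i.e., the sum of the probabilities of the six Ekman emotions, can be sketched in a few lines. The example assumes a classifier that outputs seven scores (the six Ekman emotions plus a "neutral" class), which is a common setup but is not stated in [46]; the function names and the example probabilities are hypothetical.

```python
import math

# The six basic emotions listed in the text; "neutral" is an assumed extra class.
EKMAN_EMOTIONS = ("anger", "fear", "disgust", "happiness", "surprise", "sadness")


def softmax(scores):
    """Turn raw classifier scores into probabilities that sum to 1."""
    peak = max(scores.values())  # subtract the maximum for numerical stability
    exps = {label: math.exp(s - peak) for label, s in scores.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


def engagement(probabilities):
    """Sum of the probabilities of occurrence of the six Ekman emotions."""
    return sum(probabilities.get(label, 0.0) for label in EKMAN_EMOTIONS)


# Hypothetical classifier output for one video frame.
probs = {"neutral": 0.40, "anger": 0.10, "fear": 0.05, "disgust": 0.05,
         "happiness": 0.25, "surprise": 0.10, "sadness": 0.05}
level = engagement(probs)  # probability mass assigned to non-neutral emotions
```

Under the seven-class assumption, the engagement equals one minus the probability of the neutral class (about 0.6 in the example), so a mostly neutral face yields a low engagement value.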

2.1.3. Further Factors Influencing Driving Behavior

The literature suggests considering multiple other factors to predict risky driving behavior, such as age [47], gender [48], motivation [49], personality [50], and attitudes and beliefs [51], some of which transcend emotions. While violations leading to an increased risk mainly concern the social context and the user’s motives, attitudes, and beliefs [52], driving errors mostly depend on individual cognitive processes. Violations and driving errors are influenced by gender [53], gender role [54], attention disorders [55], and age-related problems. Some studies have demonstrated that young drivers are more likely to drive fast, to not wear seat belts, and to tailgate and that males consistently exhibit riskier driving than females. However, there is no consensus on the effects of demographics on driving behavior. For instance, an experimental study demonstrated that age is not such a significant predictor of speed and that other factors (such as aggression, lack of empathy and remorse, and sensation seeking) have a deeper influence than age and gender on the attitude toward speeding and on the risk of crashes [56]. For that reason, the present research mainly focuses on the recognition of complex human states rather than demographics. Age, gender, and ethnicity will be treated as fixed attributes of the individual in the analysis of risky driving behavior and will be included as covariates in future experimental analyses.

2.1.4. Emotional Effects on Driving Behavior

In the fields of traffic research and HCI, several studies have been published in the past decades about the detection and exploitation of the driver’s cognitive and emotional states, given the significant impact that such conditions have on driver performance and the consequent effects on road safety [57,58]. The detection of the driver’s state can be exploited to adapt the HMI so that it communicates with the driver in a more suitable way, for example, by overcoming driver distractions to effectively deliver warnings about risky maneuvers or road conditions. On the other hand, emotional states in the car are detected mainly with the aim of applying emotional regulation approaches to lead the driver to a condition of medium arousal and moderate positive valence.

Induced emotions may influence driving performance, perceived workload, and subjective judgment. For instance, the experimental tests reported in [59] demonstrated that induced anger decreases the level of a driver’s perceived safety and leads to a lower driving performance than neutral and fear emotional states. The same result is achieved in the case of happiness.

The survey in [60] considers recent literature from Human Factors (HFs) and HCI domains about emotion detection in the car. It is shown that anger, which is the most investigated basic emotion in the automotive field, convincingly impacts negatively both safety and driving performance.

It is recognized that a workload increase has, as its main effects, an increase in reaction time and an overall impairment of driving performance. Similarly, an increased arousal, which is part of an intense emotion, can cause the same effects, as known from the Yerkes–Dodson law [61]. This result, coupled with the literature reviewing the effects of emotions while driving, suggests that the optimal state for driving [43] is the one where the driver shows medium arousal and slightly positive valence.

Moreover, road traffic conditions, concurrent driving tasks (such as phone conversations and music listening), and other environmental factors may arouse emotions. For instance, music, because of its emotional nature, affects the driver’s attention. Neutral music has been demonstrated to not significantly impact driving attention [62], while sad music leads toward safe driving and happy music leads to risky driving.

Notwithstanding the role and prevalence of affective states in driving, no systematic approaches are reported in the literature to relate the driver’s emotional state and driving performance [63]. Some empirical studies have highlighted that traditional influence mechanisms (e.g., the one based on valence and arousal dimensions) may not be able to explain affective effects on driving. In fact, affective states that share the same valence and arousal dimensions (e.g., anger and fear both belong to negative valence and positive arousal) show different performance results [59]. As a consequence, a more systematic and structured framework must be defined to explain complicated phenomena, such as the effects of emotions on driving [46].

2.1.5. Emotion Induction and Emotion Regulation Approaches

Reliable and specific emotion detection could be exploited by the HMI to implement emotion regulation approaches. Emotion regulation is the function of moderating the emotional state of the driver in order to keep it in a “safe zone” with medium arousal and positive valence. In particular, the HMI can act in order to bring the driver back to a suitable emotional state when the detected emotional state is considered to have a negative impact on driving. Emotion detection and regulation techniques have been studied in isolation (i.e., without other driver state conditions, such as cognitive ones) and in simulated environments to date. In this kind of experimental setting, emotion induction approaches are used to put the participants in different emotional states.

In detail, in [64], different approaches for emotion induction in driving experimental settings—namely, autobiographical recollection, music video priming, and both techniques enhanced with the provisioning of emotion-specific music tracks while driving—were evaluated. The results showed that artificially induced emotions have a mean duration in the interval 2–7 min, adjusted by ±2 min depending on the used technique. The most promising one, according to users’ judgment about subjective-rated intensity of emotion (measured after inducement) and for dwell time (i.e., for the time the induced emotion is felt by the participant after inducement), seems to be the one envisioning autobiographical recollection before driving, coupled with the provision of emotion-specific music tracks used by the “Database for Emotion Analysis using Physiological Signals” (DEAP) dataset [65].

As an example, a participant was asked to think about and write down, for 8 min, an event of his/her life (autobiographical recollection) connected with a low valence, high arousal emotion. After that initial writing, driving began. The participant drove while listening to a music title used in the DEAP database for extreme values of arousal and negative valence. In order to recall the emotion while driving, the participant had to recount the story aloud, preferably in a setting protecting his/her privacy. This approach was tested in a simulated driving environment and was applied in subsequent studies to compare emotion regulation techniques starting from conditions of anger and sadness [66].

According to the review in [64], naturalistic causes of emotions while driving have been investigated in the literature by means of longitudinal studies, brainstorming sessions, newspaper articles, analysis about episodes of aggressive driving, and interviews with drivers. The results are that other drivers’ behaviors, traffic condition, near accident situations, and time constraints are the main sources of negative emotions. A frustrating user interface (UI) can cause the same effects as well. Both a positive and a negative impact on the driver state can be triggered, on the other hand, by verbal personal interaction with other people, the driver’s perception of his/her driving performance, the vehicle performance, and the external environment. These naturalistic causes of emotions have typically a lower dwell time and, because of that, are less useful in an experimental context created to collect data about driving performance and emotion regulation techniques performed by the HMI.

As examples of emotion regulation approaches considered in the literature [64], the following anger and sadness regulation approaches in manual driving can be recalled:

- ambient light, i.e., the exploitation of blue lighting for leveraging calming effects on the level of arousal;

- visual notification, i.e., visual feedback about the current state of the driver;

- voice assistant, i.e., audio feedback about the driver’s status provided in natural language—and obtained with Natural Language Processing (NLP) tasks—with suggestions of regulation strategies;

- empathic assistant, i.e., a voice assistant improved with an empathic tone of voice mirroring the driver’s state suggesting regulation activities.

Among these strategies, the empathic assistant seems the most promising one, according to the effects on emotions felt by the subjects during driving and subjects’ rating about the pleasure of the experience. Other techniques have been investigated, such as adaptive music to balance the emotional state, relaxation techniques such as breathing exercises, and temperature control.

2.1.6. Emotion Recognition Technologies in the Vehicle

Nowadays, the recognition of human emotions can be obtained by applying numerous methods and related technologies at different levels of intrusiveness. Some instruments based on biofeedback sensors (such as electrocardiograph (ECG), electroencephalogram (EEG), as well as other biometric sensors) may influence the user’s behavior, spontaneity, and perceived emotions [67]. Moreover, in real driving conditions, they cannot be easily adopted. Consequently, in the last decade, several efforts have been made to find nonintrusive systems for emotion recognition through facial emotion analysis. The most promising ones are based on the recognition of patterns from facial expression and the definition of theoretical models correlating them with a discrete set of emotions and/or emotional states.

Most of these systems process data using AI, usually with CNNs [68,69], to predict primary emotions in Ekman and Friesen’s framework (as shown in Section 2.1). In fact, most of the currently available facial expression datasets are based on this emotion framework (e.g., EmotioNet [70]). In the literature, numerous emotion-aware car interfaces have been proposed [71,72]: some use bio-signals collected through wearable devices, and others rely on audio signal analysis, e.g., detection of vocal inflection changes [73]. An extended study on the effectiveness of facial expression recognition systems in a motor vehicle context to enable emotion recognition is still lacking.

2.2. Human–Machine Interface (HMI)

Since the complexity of driving is significantly increasing, the role of information systems is rapidly evolving as well. Traditional in-vehicle information systems are designed to inform the driver about dangerous situations without overloading him/her with an excessive amount of information [74]. For this reason, the most relevant issues related to evaluation of the HMI from a human factors perspective are related to inattention and workload topics [75].

Hot topics in modern HMI design concern the modalities to represent the information and the timing to display it. The design of HMIs (including graphical, acoustic, and haptic ones) is progressively facing this transition by shifting the perspective from visualization to interaction.

In detail, interaction foresees a more active role of the user and requires different design strategies. For example, in recent years, the automotive domain has also been experiencing a rise in User-Centered Design (UCD) as the main technique to tailor the HMI design around the users. A crucial role has been assumed by multi-modal interaction systems [76], able to dynamically tailor the interaction strategy around the driver’s state. In this sense, new perspectives have been opened by the advancement in unobtrusive sensor technologies, ML, and data fusion approaches [77,78], dealing with the possibility of combining multiple data sources with the aim of detecting even more complex states of the driver and actual driving situations. This information can be used to design more effective HMI strategies both in manual driving scenarios and in autonomous driving scenarios, with particular reference to takeover strategies [79]. As an example, it could be useful to understand what the driver is doing (e.g., texting by phone), in which kind of driving situation (e.g., overtaking other cars on a highway), with what frequency of visual distraction and level of cognitive distraction, and while experiencing which kind of emotion. This information then allows choosing the most appropriate message to be sent by the HMI in order to lead the driver back to a safer state using the most suitable communication strategy and channels (visual, acoustic, etc.).
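As a purely illustrative sketch of how such fused information could drive the choice of communication channels, the following code groups the quantities mentioned above into a single record and applies two simple rules. Everything in it is hypothetical: the field names, the thresholds, and the rules themselves are assumptions made for the example and do not describe any HMI strategy from the cited literature.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DriverState:
    """Fused description of the driver and of the driving situation."""
    activity: str                    # e.g., "texting"
    situation: str                   # e.g., "overtaking on a highway"
    glances_off_road_per_min: float  # assumed proxy for visual distraction
    cognitive_distraction: float     # assumed scale: 0 (none) to 1 (maximal)
    emotion: str                     # e.g., "anger"; not used by the rules below


def choose_channels(state: DriverState,
                    glance_limit: float = 6.0,
                    cognitive_limit: float = 0.5) -> List[str]:
    """Pick HMI channels with two hypothetical rules."""
    channels = []
    if state.glances_off_road_per_min > glance_limit:
        # Eyes are often off the road, so a visual message may be missed.
        channels.append("acoustic")
    else:
        channels.append("visual")
    if state.cognitive_distraction > cognitive_limit:
        # Reinforce the message on a second channel when attention is low.
        channels.append("haptic")
    return channels


# Hypothetical usage with the example situation from the text.
distracted = DriverState("texting", "overtaking on a highway", 10.0, 0.8, "anger")
selected = choose_channels(distracted)
```

A real system would also adapt the content and tone of the message to the detected emotion, which this sketch deliberately leaves out.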

Indeed, emotion regulation strategies have recently been taken into account to improve interaction performance. These approaches aim to regulate emotional activation towards a neutral emotion [80] using the HMI and, in turn, support a cooperative interaction.

2.2.1. Strategies to Build Human–Automation Cooperation: Teaming

Many studies have investigated possible strategies to enable the cooperation between humans and automated agents, exploiting the cooperative paradigm that establishes the two agents as team players [81]. In contrast with early research that focused on increasing the autonomy of agents, current research seeks to understand the requirements for human–machine cooperation in order to build automated agents with characteristics suitable for making them part of a cooperative system [82,83].

A crucial requirement for cooperation is mutual trust; to gain trust, both team members shall be mutually predictable. They must be able to monitor and correctly predict the intention of the partner (and thus the behavior of the team). However, current agents’ intelligence and autonomy reduce the confidence that people have in their predictability. Although people are usually willing to rely on simple deterministic mechanisms, they are reluctant to trust autonomous agents in complex and unpredictable situations, especially those involving rapid decisions [84]. Ironically, the increase in adaptability and customization will make autonomous agents less predictable, with the consequence that users might be more reluctant to use them because of the confusion that the adaptation might create [85].

2.3. AI Components in ADAS

AI utilization in ADAS has been a very active field of research in the last decade [86]. Roughly speaking, there are two main application lines that can be currently identified.

- Mid-range ADASs (like lane change estimation, blind spot monitoring, emergency brake intervention, and DMSs) are currently deployed in many commercial vehicles. In these ADASs, the research focuses on understanding if AI/ML is capable of providing superior accuracy with regard to rule-based systems or the human driver.

- High-range ADASs for (experimental) autonomous vehicles, like Tesla [87] or Waymo [88], require complex classification tasks to reconstruct a navigable representation of the environment. These tasks are mainly performed using DL components based on CNNs and constitute the emerging field of AI-based Autonomous Driving (AIAD).

The growing interest in the use of AI in vehicles has shown very valuable results, even if there are several problems that still need to be addressed, mostly safety issues—see the infamous “We shouldn’t be hitting things every 15,000 miles.” [89]. Safety concerns for SAE’s level 1 and level 2 vehicles are addressed by the Safety Of The Intended Functionality (SOTIF) ISO/PAS standard 21448:2019 [90], which addresses the fact that some complex ADASs, even in the absence of any hardware faults, can still suffer from safety issues (like a misclassified signal obtained from a sensor), since it is not possible to reasonably consider every possible scenario [91]. Therefore, an accurate ADAS design [92] should consider the safety boundaries of the intended functionalities and should be designed to avoid hazardous actions as much as possible. This can be hard to achieve for AI/ML components.

A significant source of classification issues arises from the imperfect nature of learning-based training of AI models. Any reasonable dataset used for training an AI/ML model will inevitably be finite and limited, thus being subject to uncertainty, instability, and, more generally, lack of transparency [93]. Therefore, the resulting model will not perform perfectly in every possible scenario, and misclassifications are expected with some probability. The successful adoption of ML models in critical ADASs will heavily rely on two factors:

- how the ML/AI component will be capable of ensuring bounded behaviors in any possible scenario (SafeAI [93]);

- how well humans are able to understand and trust the functionality of these components (eXplainable AI, or XAI [94]).

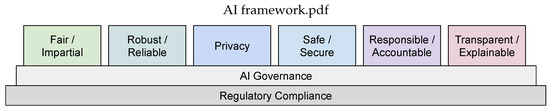

Active research is being conducted on both SafeAI and XAI topics to improve and further advance the state of AI components. Figure 3 shows the areas of research that are identified as the most critical to be improved in order to achieve industrial-grade AI components [95].

Figure 3.

The pillars of the trustworthy artificial intelligence (AI) framework (see [95]).

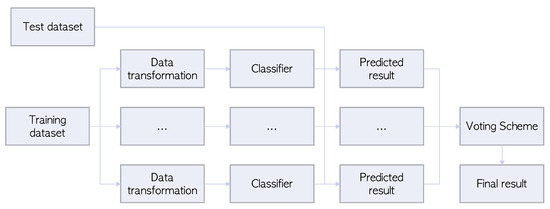

Building safe systems for safety-critical domains additionally requires consideration of redundancy, which can be internal or external. Internal redundancy is aimed at tolerating hardware faults (in particular, transient faults) that may impair certain computations inside the AI components [96], while external redundancy is based on replicating the whole AI system multiple times or on using different forms of classifiers, followed by a majority voting mechanism. The latter approach, shown in Figure 4, is generally referred to as ensemble learning. Upon failure, the system should tolerate the failure, fail in a safe manner (fail-safe), and/or operate in a degraded automation mode (fail-degraded). Unfortunately, detecting failures of AI components is not a trivial task and, indeed, is the subject of active research.

Figure 4.

Framework for ensemble AI [97].
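As a minimal sketch of the majority voting step mentioned above, the following Python fragment combines the labels produced by several redundant classifiers and returns no decision when agreement is insufficient. The function name, the labels, and the `min_agreement` threshold are illustrative assumptions, not part of the framework in [97].

```python
from collections import Counter
from typing import Hashable, Optional, Sequence


def majority_vote(predictions: Sequence[Hashable],
                  min_agreement: float = 0.5) -> Optional[Hashable]:
    """Return the label backed by more than `min_agreement` of the voters.

    None means that no label reached the required agreement, so the caller
    should fall back to a fail-safe or fail-degraded mode.
    """
    if not predictions:
        return None
    label, votes = Counter(predictions).most_common(1)[0]
    if votes / len(predictions) > min_agreement:
        return label
    return None


# Hypothetical outputs of three redundant driver-state classifiers.
agreed = majority_vote(["drowsy", "drowsy", "alert"])
undecided = majority_vote(["drowsy", "alert", "distracted"])
```

In this sketch, an undecided vote is treated as a detectable failure, which is only one of several possible policies.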

To this end, relying on distributed AI systems [98] is one way of facing the challenges posed by the lack of comprehensive data to train AI/ML models as well as of providing redundancy during ADAS online operations [99]. In the former case, models are built relying on a federation of devices, coordinated by Edge or Cloud servers, that contribute to learning with their local data and specific knowledge [98,99]. In the latter case, Edge or Cloud servers contribute to decision making during ADAS operations.

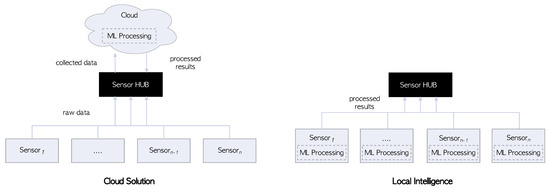

Moreover, the use of distributed AI systems (an example of which is shown in Figure 5) brings many challenges to ADAS safety. For example, resource management and orchestration become much harder when AI/ML models are built collaboratively [100]. Data may be distributed across devices in a nonidentical fashion, i.e., some devices may contribute substantially larger or smaller datasets, which could easily bias the aggregated models. Both during model training and ADAS operations, distributed devices may behave unreliably or may be unable to communicate due to network congestion. Devices may also become unavailable during training or application of algorithms due to faults or the lack of network coverage.

Figure 5.

Cloud vs. local intelligence.
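To make the aggregation bias concrete, the sketch below averages per-device model weights in proportion to each device's number of local samples, in the style of federated averaging. This is an illustrative choice of aggregation rule, not one prescribed by [98,99,100]; the function name and the numbers are hypothetical.

```python
from typing import List, Sequence


def federated_average(weights: Sequence[Sequence[float]],
                      sample_counts: Sequence[int]) -> List[float]:
    """Average device models, weighting each device by its sample count."""
    if not weights or len(weights) != len(sample_counts):
        raise ValueError("need one sample count per device model")
    total = sum(sample_counts)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(weights[0])
    aggregated = [0.0] * dim
    for device_weights, count in zip(weights, sample_counts):
        if len(device_weights) != dim:
            raise ValueError("all device models must have the same size")
        for i, w in enumerate(device_weights):
            aggregated[i] += w * count / total
    return aggregated


# Two hypothetical devices: the first holds nine times more data,
# so the aggregated model is pulled strongly towards its weights.
global_model = federated_average([[1.0, 0.0], [0.0, 1.0]], [900, 100])
```

With these inputs the aggregated model lies close to the first device's weights, which illustrates how unevenly sized local datasets can dominate the result.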

As models are built in a distributed fashion, the attack surface also increases substantially. Some of the attack scenarios described in the literature [101] include (i) network attacks to compromise or disrupt model updates; (ii) model theft and misuse; (iii) malicious or adversarial users/servers that may act to change the environment and influence models’ decisions during operations; and (iv) model poisoning or intentional insertion of biases aiming to tamper with the system.

Finally, safety and security properties to be taken into account in systems handling sensitive data, such as those working in a distributed AI-oriented way in automotive contexts, can be summarized as follows.

- Confidentiality, which is the property through which data is disclosed only as intended by the data owner. Personal data to be collected from people inside a vehicle’s cabin is the main challenge to be faced, as each occupant should be sure that his/her data are not disclosed to unauthorized people, especially for data in which personal features are recognizable (e.g., imaging data).

- Integrity, which is the property guaranteeing that critical assets are not altered in disagreement with the owner’s wishes. In vehicular contexts, this is particularly true if sensor or Electronic Control Unit (ECU) data need to be stored with anti-tamper mechanisms (e.g., data to be used in case of accidents and disputes).

- Availability, which is the property according to which critical assets will be accessible when needed for authorized use.

- Accountability, which is the property according to which actions affecting critical assets can be traced to the actor or automated component responsible for the action.
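As an illustration of the integrity property, the following sketch stores records in a log where each entry carries a keyed hash (HMAC) that also covers the previous entry's tag, so that altering any stored record invalidates the chain. The record fields, the key handling, and the function names are assumptions made for the example; a real ECU would keep the key in protected hardware.

```python
import hashlib
import hmac
import json
from typing import Dict, List


def _tag(prev_tag: str, payload: Dict, key: bytes) -> str:
    # Serialize deterministically so the same payload gives the same tag.
    body = json.dumps(payload, sort_keys=True)
    return hmac.new(key, (prev_tag + body).encode(), hashlib.sha256).hexdigest()


def append_record(log: List[Dict], payload: Dict, key: bytes) -> None:
    """Append a record whose tag chains to the previous record's tag."""
    prev_tag = log[-1]["tag"] if log else ""
    log.append({"payload": payload, "tag": _tag(prev_tag, payload, key)})


def verify_log(log: List[Dict], key: bytes) -> bool:
    """Recompute every tag in order; any altered record breaks the chain."""
    prev_tag = ""
    for entry in log:
        expected = _tag(prev_tag, entry["payload"], key)
        if not hmac.compare_digest(expected, entry["tag"]):
            return False
        prev_tag = entry["tag"]
    return True


key = b"demo-key-not-for-production"  # hypothetical key for the example
log: List[Dict] = []
append_record(log, {"t": 0, "speed_kmh": 92}, key)
append_record(log, {"t": 1, "speed_kmh": 95}, key)
intact = verify_log(log, key)
```

This sketch addresses integrity only; confidentiality, availability, and accountability need separate mechanisms.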

It is worth highlighting that these properties, as well as those discussed before for distributed AI, must also be considered in the case that on-board local intelligence is used as a first decision stage before sending data to external processing entities (e.g., deployed on Cloud infrastructures [102]). To this end, even if not directly discussed in this work and left for future analysis, these safety and security requirements will play a key role in protecting information from tampering attempts, especially those that can occur at intermediate levels, e.g., on Vehicle-to-Vehicle (V2V) and Vehicle-to-Infrastructure (V2I) channels.

2.4. Sensing Components for Human Emotion Recognition

When considering and evaluating technologies for driver’s behavior recognition and, in general, for human monitoring, one must consider that the degree of acceptance of these technologies by the final user largely depends on their unobtrusiveness, i.e., the extent to which the technology is not perceived as infringing his/her privacy. To this end, heterogeneous sensors can be adopted as monitoring technologies, in turn being characterized by their mobility degree, such as stationary sensors and mobile sensors. More in detail, stationary sensors are well suited for unobtrusive monitoring, as they are typically installed in the environment (e.g., in the vehicle’s cabin) and act without any physical contact with the user: a standard example of a stationary sensor is a camera installed in the cabin. On the other hand, mobile sensors, e.g., wearables, have become practical (thanks to the miniaturization of sensors) and appealing, since nowadays people buy versatile smart devices (e.g., smart watches with sensors for health parameters monitoring) that can be exploited for driver’s behavior monitoring and recognition [103].

Then, looking for unobtrusive driver monitoring and aiming to recognize his/her behavior, a DMS could be enhanced with the Naturalistic Driving Observation (NDO) [104] technique, a research methodology which aims to characterize drivers’ behavior on each trip through discreet and noninvasive data collection tools [105]. In detail, private vehicles can be equipped with devices that can continuously monitor driving behaviors, including vehicle’s movements detection and information retrieval (e.g., vehicle’s speed, acceleration and deceleration tasks, positioning of the vehicle inside the road it is traveling, etc.), information on the driver (e.g., head and hands movements, and eye and glance position), and the surrounding environment (e.g., road and atmospheric conditions, travel time, and traffic density). Moreover, NDO allows these data to be observed under “normal” conditions, under “unsafe” conditions, and in the presence of actual collisions. Within an NDO-based gathering scenario, the driver becomes unaware of ongoing monitoring, as the collection of organized data is carried out with discretion and the monitored vehicle is the one commonly used by the driver. Finally, one of the strengths of NDO is its adaptability to various kinds of vehicles (e.g., cars, vans, trucks, and motorcycles); as an additional key point, it is also applicable to simulation scenarios before deployment in real vehicles. Examples of already deployed NDO-based projects are “100-Car Study” [106], “INTERACTION” [107], “PROLOGUE” [108], “DaCoTA” [109], and “2-BE-SAFE” [110].

2.4.1. Inertial Sensors

Considering mobile sensing elements, often worn by the person to be monitored, the adoption of Inertial Measurement Units (IMUs) allows for estimation of the motion level. Since IMUs integrate 3-axis accelerometers and 3-axis gyroscopes, they allow for implementation of inertial estimation solutions for both motion and attitude/pose estimation. Moreover, inertial sensors have to be carefully selected and calibrated, taking into account bias instability, scaling, alignment, and temperature dependence. All these issues are very important, especially in application areas where the size, weight, and cost of an IMU must be limited (namely, small size and wearable monitoring systems for drivers).
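One common way to combine accelerometer and gyroscope readings for attitude estimation is a complementary filter. The sketch below is an illustrative example, not a method taken from the cited works: it assumes rotation confined to the sensor's x-z plane, a hypothetical axis convention, and an arbitrary blending factor `alpha`.

```python
import math


def complementary_pitch(prev_pitch: float, gyro_rate: float,
                        accel_x: float, accel_z: float,
                        dt: float, alpha: float = 0.98) -> float:
    """One update step of a complementary filter for pitch (radians)."""
    # Gyroscope path: integrate the angular rate (rad/s) over the time step.
    gyro_pitch = prev_pitch + gyro_rate * dt
    # Accelerometer path: angle of the measured gravity vector in the x-z plane.
    accel_pitch = math.atan2(accel_x, accel_z)
    # Blend: the gyroscope dominates short term, the accelerometer long term.
    return alpha * gyro_pitch + (1.0 - alpha) * accel_pitch


# Stationary sensor tilted by 0.1 rad, sampled at an assumed 100 Hz.
pitch = 0.0
for _ in range(200):
    pitch = complementary_pitch(pitch, 0.0, math.sin(0.1), math.cos(0.1), dt=0.01)
```

The accelerometer term slowly corrects gyroscope drift, which is why bias instability and calibration matter in practice.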

In the automotive field, inertial sensing can intervene in different areas, from safety systems—like passenger-restraint systems (e.g., airbags and seat-belt pretensioner) and vehicle dynamics control (e.g., ESC)—to monitor driver’s movements when seated. In both areas, current IMUs are mainly built with Micro Electromechanical Systems (MEMS), mostly for miniaturization and cost reasons, thus providing better performance in compact arrangements [111] and additionally exploiting highly advanced algorithms based on ML and data fusion technologies [112]. Moreover, personal motion estimation for health monitoring requires very precise localization of the ego position; to this end, the availability of 3-axis accelerometers and 3-axis gyroscopes is a plus, since sensing elements have to be much more precise compared to traditional industrial-grade IMUs used for automotive safety systems.

2.4.2. Camera Sensors

With regard to stationary and unobtrusive technologies that can be adopted for driver behavior monitoring, a certain importance is given to imaging-based technologies, such as video cameras. To this end, it is well known that the performance of CV algorithms running on top of the imaging streams coming from the cameras is largely influenced by varying lighting and atmospheric conditions. Hence, one way to overcome this deterioration in detection accuracy is to use thermal sensors, which are more robust to adverse conditions than conventional imaging sensors. In detail, thermal or Long Wavelength Infrared (LWIR) cameras [113] have the following characteristics: they (i) can detect and classify objects in several particular conditions (e.g., darkness, rain, and through most fog conditions); (ii) offer increased robustness against reflections, shadows, and car headlights [114]; and (iii) are unaffected by sun glare, improving situational awareness [115]. Typically, the resolution of modern thermal cameras is lower than the resolution of color cameras, but recent on-market high-end thermal cameras are capable of producing full HD video streams.

In addition to driver monitoring based on thermal cameras, a further emphasis can be placed on the use of normal and general-purpose imaging sensors, such as in-vehicle fixed cameras, or the front camera of a smartphone [116,117]. This is further motivated by their well-known portability and widespread diffusion in society, thus representing an optimal way to gather data and information [118]. Therefore, it is interesting to highlight not only that modern vehicles are equipped with built-in sensors (e.g., in 2019, the Volvo car manufacturer released a press announcement regarding the development of a built-in Original Equipment Manufacturer (OEM) camera-based infrastructure targeting the detection of drowsy and drunk people driving a vehicle [7]) but also that vehicle models which were not originally equipped with these kinds of technologies can benefit from these solutions. In detail, in-vehicle and smartphone-equipped cameras [119] can be used to gather information on glance duration, yawning, eye blinking (with frequency and duration; as an example, prolonged and frequent blinks may indicate micro sleeps), facial expression [120], percentage of eyelid closure (PERCLOS, formally defined as the proportion of time within one minute that eyes are at least 80% closed) [121], head pose and movement [122], mouth movement [123,124], eye gaze, eyelid movement, pupil movement, and saccade frequency. Unfortunately, working with these types of cameras requires taking into account that (i) large light variation outside and inside the vehicle and (ii) the presence of eyeglasses covering the driver’s eyes may represent issues in driver behavior monitoring scenarios [125,126].
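The PERCLOS definition given above can be sketched as follows. The example assumes that a hypothetical eyelid tracker delivers a uniformly sampled closure value per frame (0 for fully open, 1 for fully closed) over the observation window; the function and parameter names are illustrative.

```python
from typing import Sequence


def perclos(closure: Sequence[float], closed_threshold: float = 0.8) -> float:
    """Fraction of the window in which the eyes are at least 80% closed.

    `closure` holds one eyelid-closure value in [0, 1] per frame, sampled
    uniformly over the window (e.g., one minute of video).
    """
    if not closure:
        raise ValueError("the observation window is empty")
    closed_frames = sum(1 for c in closure if c >= closed_threshold)
    return closed_frames / len(closure)


# Hypothetical four-frame window: two frames exceed the 80% threshold.
score = perclos([0.1, 0.9, 0.85, 0.2])
```

Because the samples are uniform in time, the fraction of frames equals the fraction of time required by the definition.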

Moreover, considering that, in general, normal cameras work in stereo mode, they can be used as input sources for facial feature tracking, thus creating three-dimensional geometric maps [127] and aiding in characterizing the status of the driver and his/her behavior. As can be easily understood, the outcome of these tasks could improve when multiple sources are considered: this opens the possibility of federating multiple in-vehicle cameras to compose a video network, which in turn could be exploited to detect safety-critical events [128,129].

Finally, camera-based monitoring mechanisms can be applied inside the vehicle’s cabin not only for observing and identifying driver behaviors, but also for recognizing and identifying potentially dangerous situations and behaviors involving the passengers seated inside the vehicle. Thus, this leads to the need to create a sensing network inside the cabin as well as to define proper data fusion algorithms and strategies [130,131].

2.4.3. Sensor-Equipped Steering Wheel and Wearables

Another unobtrusive way to monitor a person driving his/her vehicle is through a biometric recognition system that is gradually becoming part of people’s everyday life and is based on the intrinsic traits of each person. Several biometric indicators are considered, such as Heart Rate Variability (HRV) [132], ECG [133], or EEG [134]. Among these biometric traits, the ECG (i) gives liveness assurance, uniqueness, universality, and permanence [135] and (ii) is surely interesting to explore for biometric purposes. More in detail, this biometric signal is the result of electrical conduction through the heart needed for its contraction [136]. Based on these characteristics, the possibility to perform ECG biometric observations directly inside a vehicle’s cabin may provide some advantages, especially in terms of automatic setting customization (e.g., biometric authentication for ignition lock [137]) and driving pattern modeling aimed at the detection of fatigue-caused accidents [138] and distraction moments [139] (e.g., using ECG signals to assess mental and physical stress and workload [140,141,142] as well as fatigue and drowsiness [143]), and can be operationally deployed through the adoption of hidden sensors underneath the steering wheel rim surface material [144]. In this way, it is possible to detect the location on the steering wheel where the hands make contact [145,146], for both moving and stationary vehicles [147].

Such a biometric-based recognition system can target reliable continuous ECG-based biometric recognition. This can be performed through the acquisition of signals in more general and seamless configurations (e.g., on the steering wheel surface of the vehicle), thus considering that contact loss and saturation periods may be caused by frequent hand movements required by driving. As a consequence, this could lead to lower-quality signals and could constitute a challenge in recognition [148].

Additional biometric indicators useful in automotive scenarios rely on properties based on skin conductance and the exploitation of respiratory rate for further tasks, both collected by the usage of sensors mounted within the steering wheel or anchored on the seat belt. In detail, these data could help in deriving several indicators that may be of interest for the fitness level and driver’s emotional and workload states, while further measurements (e.g., brain waves) would provide various benefits, although requiring other kinds of (wearable and obtrusive) sensors. Nevertheless, when combining these core measurements with vision data (from either normal or thermal cameras), it should be possible to estimate a classification index for workload or stress level, wellness or fitness level, emotional state, and driver intention [149]. Other unobtrusive sensors to be considered may be pressure sensors, useful for detecting (if applied in the seat) the position of the driver. This type of sensor can represent a useful complement to video sensors, since it is well known that a nonoptimal sitting position (e.g., leaning forward or backwards) may degrade the quality of the drive and induce stress [150]. At a “mechanical” level, when a correct seat position is maintained by drivers, pressure is sensed in all cells composing the pressure sensor, whereas a leaning position translates into nonuniform pressure distribution among the pressure cells [151]. Finally, all the previously mentioned sensing technologies may be included in portable devices, such as wearables, which are nowadays finding applicability beyond daily worn devices (e.g., fitness trackers and sleep monitors); moreover, clinical-grade biometric-driven products that can accurately detect health issues are nowadays on the agenda [152]. To this end, an illustrative example [153] may be represented by a wristwatch to be used to detect the onset of seizure attacks through precise analysis of the user’s electro-dermal activity.
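The seat pressure-sensor idea described in this subsection (uniform pressure for a correct posture, nonuniform pressure when leaning) can be sketched with a simple uniformity measure. The coefficient of variation and its threshold are assumptions chosen for illustration and are not taken from [151].

```python
import statistics
from typing import Sequence


def is_leaning(cell_pressures: Sequence[float], cv_threshold: float = 0.5) -> bool:
    """Flag a leaning posture when pressure is spread unevenly over the cells.

    Uses the coefficient of variation (population standard deviation divided
    by the mean) of the per-cell readings; the threshold is hypothetical.
    """
    mean = statistics.mean(cell_pressures)
    if mean <= 0:
        raise ValueError("no pressure detected: seat appears unoccupied")
    cv = statistics.pstdev(cell_pressures) / mean
    return cv > cv_threshold


# Hypothetical four-cell readings in arbitrary units.
upright = is_leaning([10.0, 10.0, 10.0, 10.0])
leaning = is_leaning([20.0, 20.0, 0.0, 0.0])
```

A deployed system would calibrate the threshold per seat and per driver rather than use a fixed value.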

2.4.4. Issues with Sensing Technologies

Lighting is the main factor limiting vision-based and vehicle-based approaches, thus producing noisy effects on both driver movement monitoring and physiological signal recognition using cameras. At night, normal cameras generally have lower performance and infrared cameras should be adopted to overcome this limitation. In addition, the majority of described methods are evaluated only in simulation environments: this has a negative impact on the reliability of this kind of methodology, in which “useful” data can be obtained only after the driver is in a dangerous situation (e.g., she/he becomes drowsy or starts sleeping).

On the other hand, physiological signals generally start changing in the earlier stages of dangerous situations and, therefore, physiological signals can be employed for anomalous behavior detection, with a negligible false positive rate [154]. Analyzing the collected raw physiological data should take into account the noise and artifacts related to all movements made by drivers during driving, but the reliability and accuracy of driver behavior estimation is higher compared to other methods [155]. One of the main inhibitors for the large adoption of methodologies based on physiological signals is related to the typically obtrusive nature of their current implementations. A possible solution is provided by systems with ad hoc sensors that are distributed inside the car, as discussed in Section 2.4.3, so that each time the driver’s hands are maintained on the vehicle steering wheel or gearshift, she/he will be monitored. Consequently, a hybrid sensor fusion solution, in which different monitoring methodologies (camera vision, vehicle monitoring, and lane monitoring) are integrated with ad hoc miniaturized physiological sensing, certainly represents a next step for a DMS to easily achieve an unobtrusive solution integrated in the vehicle and to provide a continuous evaluation of drivers’ “levels of attention,” fatigue, and workload status, as shown in Figure 6. Regardless of the specific sensing technology adopted for driver behavior monitoring (and discussed in Section 2.4.1, Section 2.4.2 and Section 2.4.3), there is a common challenge. Since no sensor works well for all possible considered tasks and for all conditions, sensor fusion, complex algorithms, and intelligent processing capabilities are required to provide redundancy for autonomous functions.

Figure 6.

Example of a prototypical sensing monitor system.

The work in [156] identifies existing physiological sensing technologies that could be used by a DMS, summarized as follows.

- Eye movements and PERCLOS.

- Tracking of gaze direction and head orientation: Electro-OculoGram (EOG).

- Level of attention of the driver, with his/her mental stress derived considering both gaze direction and focus point.

- Pupil diameter, as it has been observed that, in the case of mental stress of the driver, acceleration of the sympathetic nerve causes an increase in pupil diameter.

- Brain activity, especially through an EEG.

- ECG and HRV, which, as mentioned before, allow for monitoring of the tight connection between mental activity and the autonomic nervous system, which in turn influences human heart activity. More in detail, the balance between the sympathetic and parasympathetic nervous systems affects heart rate, which generally increases or decreases following the predominance of the sympathetic or parasympathetic nervous system, respectively.

- Facial muscle activity and facial tracking, for which the related parameters and directions can be monitored through a camera focusing on the driver, aim to detect his/her attention level and drowsiness degree and to alert the occupants of the vehicle (through some on-board mechanisms, e.g., HMIs) in the case that driving conditions are not appropriate (e.g., a weary driving person usually nods or swings his/her head, yawns often, and blinks rapidly and constantly).

- Steering Wheel Movement (SWM), that represents widely recognized information related to the drowsiness level of the driver and can be obtained in an unobtrusive way with a steering angle sensor in order to avoid interference with driving.
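For the ECG and HRV item in the list above, two widely used time-domain HRV indices can be computed from the intervals between successive heartbeats (RR intervals). The choice of SDNN and RMSSD is an assumption made for illustration, since [156] is not quoted here as prescribing specific metrics, and the sample values are hypothetical.

```python
import math
import statistics
from typing import Sequence


def sdnn(rr_ms: Sequence[float]) -> float:
    """Sample standard deviation of the RR intervals, in milliseconds."""
    return statistics.stdev(rr_ms)


def rmssd(rr_ms: Sequence[float]) -> float:
    """Root mean square of successive RR-interval differences, in milliseconds."""
    diffs = [b - a for a, b in zip(rr_ms, rr_ms[1:])]
    if not diffs:
        raise ValueError("need at least two RR intervals")
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Hypothetical RR intervals (ms) extracted from an ECG trace.
rr = [800.0, 810.0, 790.0, 800.0]
overall_variability = sdnn(rr)
beat_to_beat_variability = rmssd(rr)
```

In practice, RR intervals measured at the steering wheel would first need artifact removal, given the contact losses discussed in Section 2.4.3.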

3. The NextPerception Approach

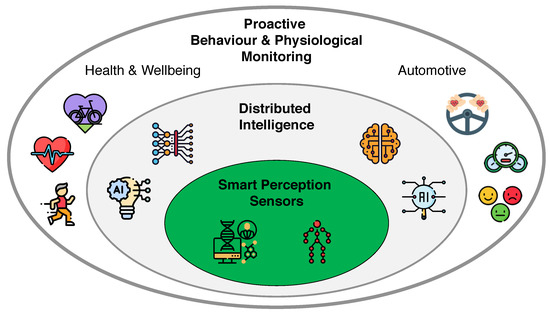

3.1. Statement and Vision

NextPerception [157] is a research project funded in the context of the ECSEL JU [158] framework, which aims to facilitate the integration of versatile, secure, reliable, and proactive human monitoring solutions in the health, well-being, and automotive domains. In particular, NextPerception seeks to advance perception sensor technologies, like RADAR, Laser Imaging Detection and Ranging (LIDAR), and Time of Flight (ToF) sensors, while additionally addressing the distribution of intelligence to accommodate for vast computational resources as well as increasing complexity needed in multi-modal and multi-sensory systems.

The basic NextPerception project concept is depicted in Figure 7. NextPerception brings together 43 partners from 7 countries in the healthcare, wellness, and automotive domains, seeking synergy by combining expertise in physiological monitoring, common in the health domain, with real-time sensor processing, typical of the automotive industry.

Figure 7.

EU-funded NextPerception project concept.

Use Cases (UCs) in NextPerception are tackling challenges in integral vitality monitoring (e.g., in elderly care), driver monitoring (both in consumers’ cars and for professional drivers), as well as comfort and safety in intersections (e.g., predicting the behavior of pedestrians, cyclists, and other vulnerable road users).

With regard to driver monitoring, the use of a variety of sensors to detect compromised driving ability (e.g., by fatigue or emotional state) will contribute to further developments in automated driving, particularly in the transition phase. As also indicated in this paper, driver emotional state assessment demands the combination of information about the driver’s physiological state, emotional state, manipulation of the vehicle, and to some extent the vehicle’s environment. Perception sensors provide the means for many of the necessary observations, including novel applications of technologies for unobtrusive parameter monitoring and cameras for gaze tracking and emotion assessment. NextPerception project’s partners aim to assess the feasibility of applying these perception sensors within the vehicle environment for driver monitoring and for resolving the computational challenges needed to provide timely feedback or to take appropriate preventive actions.

3.2. Development Process

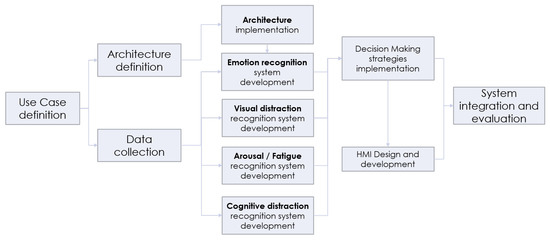

The activity will start with the definition of practical UCs that, on the basis of different User Stories (USs), will be then implemented in an automotive environment (as well as in a driving simulator). In parallel, the system architecture will be defined in order to understand the most appropriate set of sensors and communication requirements to be included in the development. Then, an iterative experimental data collection campaign will be conducted to train the software modules.

Finally, as shown in Figure 8, the state detection system and the Decision Support System (DSS) will be integrated in the driving simulator to test the impact in terms of safety, comfort, acceptability, and trust.

Figure 8.

Implementation process of the Driver Complex State (DCS) monitoring system.

Then, arbitration conditions and HMI-related strategies will be designed to mitigate weakening of the driver’s emotional state, as will a dashboard for checking all the measured parameters in real time.

3.3. Use Case on Driver Behavior Recognition

Among the overall objectives of the NextPerception project, one of the practical UCs focuses on the definition and deployment of a driver behavior recognition system to be applied in the context of partially automated driving. As highlighted in Section 1, driver behavior has a significant impact on safety even when automation is in charge of vehicle control. The scope of this experimental Use Case (UC) will therefore be to stress some of the most critical situations related to the interaction between humans and the automated system. As discussed in Section 2.2.1, conditions that have an impact on safety are often related to control transitions between the agents, in both directions, i.e., from manual driving to automated driving and vice versa. In order to design and evaluate the impact of a technological solution and to establish its functionalities and requirements, a common tool used by designers is the US. This instrument can illustrate complex situations from the user’s point of view and clearly demonstrate the added value provided by technology. The following text presents two short examples of USs to describe the behavior of the monitoring system in a realistic driving situation in Manual Mode (MM) and Automated Mode (AM).

“Peter is driving in manual mode on an urban road. He is in a hurry, since he is going to pick up his daughter Martha at the kindergarten and he is late. While driving, he receives an important mail from his boss. Since he has to reply immediately, he takes his smartphone and starts composing a message, thus starting to drive erratically. The vehicle informs him that his behavior is not safe and invites him to focus on driving. Since he does not react properly, the vehicle establishes that Peter is no longer in the condition to drive. Automation offers the opportunity to take control and informs him that he can relax and reply to the email. Taking into account Peter’s rush, automation adapts the driving style to reach the kindergarten safely but on time.”

This first US highlights the benefits of adopting a DMS in an MM-like driving situation. The system is able to detect Peter’s impairment and provides a solution to mitigate it. This solution is incremental since it is first based on advice and then on intervention. Moreover, the detection of Peter’s hurry allows the vehicle’s behavior to adapt according to his needs. The situation depicted in this short US highlights the added value of complex monitoring, with a significant impact not only on safety but also on trust and acceptability, since Peter will perceive that the vehicle understands his needs and adapts its reaction to be tailored around him.
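The incremental strategy in this US (advice first, intervention only if the driver does not react) can be sketched as a small decision rule. The action names and the number of tolerated warnings are hypothetical and serve only to illustrate the escalation logic.

```python
from enum import Enum


class Action(Enum):
    NONE = "none"
    ADVISE = "advise"                      # invite the driver to focus on driving
    OFFER_AUTOMATION = "offer_automation"  # propose that automation takes control


def next_action(unsafe_behavior: bool, warnings_ignored: int,
                max_warnings: int = 1) -> Action:
    """Escalate from advice to intervention when warnings are ignored."""
    if not unsafe_behavior:
        return Action.NONE
    if warnings_ignored < max_warnings:
        return Action.ADVISE
    return Action.OFFER_AUTOMATION


# Peter starts texting: first a warning, then the offer to take control.
first = next_action(unsafe_behavior=True, warnings_ignored=0)
second = next_action(unsafe_behavior=True, warnings_ignored=1)
```

A real arbitration policy would also weigh the driving context and the confidence of the state detection, which this sketch leaves out.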

The second US is focused on transition from AM to MM.

“Julie is driving on the highway in automated mode. She is arguing with her husband on the phone, and she is visibly upset. Since automation detects that she is going to approach the highway exit, it starts adjusting the interior lighting to calm Julie and, then, plays some calming background music. Since Julie is still upset, the vehicle concludes that she is not in the condition to take control safely and proposes that she take a rest and drink chamomile tea at the closest service station.”

In this second US, the value of the monitoring system consists of the joint detection of behavioral (i.e., even if Julie is looking at the road, she is distracted, since she is involved in a challenging phone discussion) and emotional states. The reaction deriving from this monitoring is, again, incremental. The vehicle, understanding (e.g., from digital maps) that a takeover request will be necessary in a while, first tries to calm Julie, then advises that she is not in the condition to drive. This form of emotional regulation, currently neglected in vehicle interaction design, can have a significant impact on traffic safety as well as on the overall driving experience.

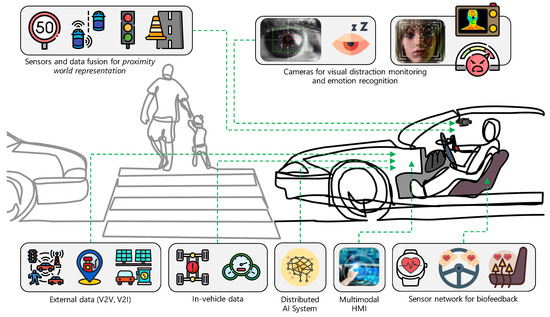

Hence, the main innovation of this UC is the combination of cognitive/behavioral/emotional status recognition in the context of highly automated driving, as shown in Figure 9. This is particularly relevant given the well-known implications related to “driver out-of-the loop” state [159]. In particular, this UC will focus on the combined effect of emotional and cognitive affections on driving performance in safety-critical contexts, such as the transition of control between automated vehicle and human driver. The scope of the research performed in this UC includes the following activities:

Figure 9.

System architecture for the envisioned automotive Use Case (UC) in the NextPerception framework.

- To develop robust, reliable, non-obtrusive methods to infer a combined cognitive/emotional state. To the best of our knowledge, there is no system able to infer the combination of these factors in the automotive domain, and there are few examples of integrated measures [79].

- To develop interaction modalities, e.g., based on the combination of visual and vocal interaction, including shared control modalities to facilitate the transition of control and, in general, the interaction between the driver and the highly automated system.

- To develop an appropriate framework to induce and collect data about the driver state from both cognitive and emotional sides. Indeed, driver cognitive states have been investigated more than emotional states [160]. To date, drivers’ emotions have always been investigated separately from drivers’ cognitive states because of the difficulty of distinguishing the effects of emotional and cognitive load [60,160]. Further research is therefore needed to understand the whole driver state from both perspectives, starting from an appropriate experimental design paradigm to induce both conditions.

3.4. Improving Emotion Recognition for Vehicle Safety

An envisioned vehicle driver-based state detection system would continuously and unobtrusively monitor the driver’s condition at a “micro-performance” level (e.g., minute steering movements) and at a “micro-behavioral” level, such as the driver’s psycho-physiological status, in particular, eye closure and facial expressions. The system may enable the handling (and personalization) of an immediate warning signal when a driver’s state is detected with high certainty or, alternatively, the presentation of a verbal secondary task via a recorded voice as a second-stage probe of driver state in situations of possible drowsiness. In addition, the system adopts an emotional regulation approach by interactively changing the in-cabin scenario (e.g., modulating the lighting and sound of the interior and of the car interface) to orient the driver’s attitude toward safe driving and a comfortable experience. The opportunity to improve road safety by collecting human emotions represents a challenging issue in DMS [161]. Traditionally, emotion recognition has been extensively used to implement the so-called “sentiment analysis”, which has been widely adopted by different companies to gauge consumers’ moods towards their product or brand in the digital world. However, it can also be used to automatically recognize different emotions on an individual’s face to make vehicles safer and more personalized. In fact, vehicle manufacturers around the world are increasingly focusing on making vehicles more personal and safer to drive. In their pursuit to build smarter vehicle features, it makes sense for manufacturers to use AI to help them understand human emotions. As an example, using facial emotion detection, smart cars can interact with the driver to adapt the level of automation or to support the decision-making process if emotions that can affect the driving performance are detected. Indeed, (negative) emotions can alter perception, decision making, and other key driving capabilities, besides affecting physical capabilities.

3.5. Improving Assistance Using Accurate Driver Complex State Monitoring

In the specific UC introduced in Section 3.3, we take into account the conditions and sensors described in Section 2 to develop a DMS that can classify both the driver’s cognitive states (e.g., distraction, fatigue, workload, and drowsiness) and the driver’s emotional state (e.g., anxiety, panic, and anger), as well as the activities and positions of the occupants (including the driver) inside the vehicle.

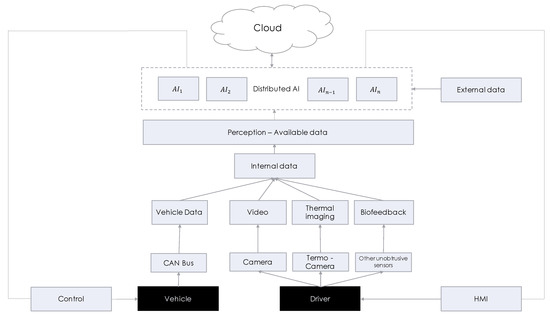

The high-level objective is to use this information to support partially automated driving functions, including takeover requests and driver support. To address this scope, we will base our in-vehicle development on the combination of different unobtrusive acquisition modules able to detect in real time different parameters that will be later fused to obtain a “Fitness-to-Drive Index” (F2DI). To this end, Figure 10 shows a modular representation of the overall acquisition system to be deployed in the driving environment.

Figure 10.

Schematic representation of the proposed perception system.

With regard to Figure 10, three categories of information will be leveraged: (i) vehicle data, (ii) driver data, and (iii) external data.

- Vehicle information will be collected through the in-vehicle Controller Area Network (CAN) in order to recognize driving patterns under impairment conditions, such as cognitive distraction.

- Driver data will be collected through a combination of unobtrusive sensors. For example, as introduced in Section 2.4.2, cameras inside the cockpit will be used to detect driver activity and visual distraction as well as emotional activation from facial expressions; thermal cameras will be used to detect drowsiness and arousal; other unobtrusive sensors (e.g., smartwatches and, more generally, wearable devices) will be used to measure the driver’s engagement and other bio-physiological parameters. The combination of different sensors enables the detection of several parameters influencing the driver’s fitness to drive. We will refer to this combination as the Driver Complex State (DCS), which in turn comprises (i) emotional state; (ii) visual distraction; (iii) cognitive distraction; (iv) arousal; and (v) fatigue/drowsiness.

- Finally, external data, including road conditions, weather conditions, lane occupation, and the actions of other vehicles on the road will be considered.
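As an illustration only, the five DCS components named in the driver-data item above can be pictured as a simple record from which an index is derived. In the following Python sketch, the class and field names, the scaling of every component to an impairment score in [0, 1], the weights, and the linear aggregation into an F2DI are all assumptions made for the example; the text does not specify how the F2DI is actually computed.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class DriverComplexState:
    """Hypothetical container for the five DCS components.

    Assumption: each field is an impairment score in [0, 1],
    where 0 means no impairment and 1 means maximal impairment.
    """
    emotional_state: float
    visual_distraction: float
    cognitive_distraction: float
    arousal: float
    fatigue_drowsiness: float

    def __post_init__(self) -> None:
        # Reject scores outside the assumed [0, 1] range.
        for f in fields(self):
            value = getattr(self, f.name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{f.name} must be in [0, 1], got {value}")


# Illustrative weights (assumed, summing to 1); real ones would be tuned or learned.
WEIGHTS = {
    "emotional_state": 0.20,
    "visual_distraction": 0.25,
    "cognitive_distraction": 0.20,
    "arousal": 0.10,
    "fatigue_drowsiness": 0.25,
}


def fitness_to_drive_index(dcs: DriverComplexState, weights=WEIGHTS) -> float:
    """Toy F2DI: 1 minus the weighted impairment, so 1 means fully fit to drive."""
    impairment = sum(w * getattr(dcs, name) for name, w in weights.items())
    return 1.0 - impairment


if __name__ == "__main__":
    dcs = DriverComplexState(
        emotional_state=0.1,
        visual_distraction=0.8,
        cognitive_distraction=0.3,
        arousal=0.2,
        fatigue_drowsiness=0.1,
    )
    print(f"F2DI = {fitness_to_drive_index(dcs):.3f}")
```

A linear combination is used here only because it is easy to read; any monotone mapping from the DCS components to a single index would fit the same interface.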

The available data will allow a deep comprehension of a joint driver–vehicle state, also correlated with the surrounding environment. In detail, this comprehension will be the result of the combination of the output of different AI modules in a distributed AI-oriented processing environment (“Distributed AI” block in Figure 10). Then, different data fusion strategies will be evaluated, in particular Late Fusion (i.e., high-level inference on each sensor, subsequently combined across sensors) and Cooperative Fusion (i.e., high-level inference on each sensor but taking into account feature vectors or high-level inference from other sensors) [162].
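The difference between the two fusion strategies can be made concrete with a toy example. The Python sketch below is a minimal illustration under stated assumptions: each sensor module is assumed to output per-class probabilities, the sensor names and weights are hypothetical, and the cooperative variant uses one simple refinement rule (multiplying each sensor's output by the mean output of the other sensors) chosen for readability, not taken from [162].

```python
from typing import Dict, List

Probs = List[float]  # per-class probabilities, e.g., [neutral, distracted, drowsy]


def normalize(scores: Probs) -> Probs:
    """Rescale non-negative scores to sum to 1 (uniform if all are zero)."""
    total = sum(scores)
    if total == 0:
        return [1.0 / len(scores)] * len(scores)
    return [s / total for s in scores]


def late_fusion(per_sensor: Dict[str, Probs], weights: Dict[str, float]) -> Probs:
    """Late Fusion: each sensor infers on its own, then outputs are combined."""
    n_classes = len(next(iter(per_sensor.values())))
    fused = [0.0] * n_classes
    for sensor, probs in per_sensor.items():
        for i, p in enumerate(probs):
            fused[i] += weights[sensor] * p
    return normalize(fused)


def cooperative_fusion(per_sensor: Dict[str, Probs], weights: Dict[str, float]) -> Probs:
    """Cooperative Fusion (toy rule): each sensor's inference is first refined
    using the high-level inference of the other sensors, then combined."""
    refined: Dict[str, Probs] = {}
    for sensor, probs in per_sensor.items():
        others = [p for s, p in per_sensor.items() if s != sensor]
        if not others:
            refined[sensor] = list(probs)
            continue
        # Context = mean class probabilities reported by the other sensors.
        context = [sum(col) / len(others) for col in zip(*others)]
        refined[sensor] = normalize([p * c for p, c in zip(probs, context)])
    return late_fusion(refined, weights)


if __name__ == "__main__":
    outputs = {
        "rgb_camera": [0.6, 0.3, 0.1],      # hypothetical module outputs
        "thermal_camera": [0.2, 0.2, 0.6],
    }
    w = {"rgb_camera": 0.5, "thermal_camera": 0.5}
    print("late:       ", late_fusion(outputs, w))
    print("cooperative:", cooperative_fusion(outputs, w))
```

In this toy setting the cooperative variant sharpens the classes on which the sensors agree, whereas late fusion simply averages them.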

Finally, the F2DI parameter, combined with vehicle and environmental conditions, will feed a DSS that generates two main outputs: (i) a dynamic and state-aware level of arbitration and (ii) a suitable HMI strategy. The level of arbitration will be an index used to distribute the vehicle authority (at the decision and control levels) between the human and the automated agents according to the different situations, including possible solutions of shared, traded, and cooperative control. The effects of such an index will have an impact at the vehicle level (“Control” block in Figure 10). On the other hand, the derived HMI strategy will be implemented by the HMI towards the driver (“HMI” block in Figure 10). Depending on the situation, this strategy will comprise multi-modal interaction modalities and visual solutions based on elements aimed at increasing the transparency of the system and at providing explanations instead of warnings (the so-called negotiation-based approach), as well as emotion regulation strategies aimed at mitigating the impairment condition and at smoothly conducting the driver towards the expected reaction.
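The two DSS outputs described above can be sketched as a small decision function. The following Python example is hypothetical: the single `context_risk` input standing in for vehicle and environmental conditions, the formula for the arbitration index, the thresholds, and the strategy names are all assumptions introduced for illustration. It only mirrors the incremental logic of the user stories (explain first, then regulate, then shift control).

```python
from dataclasses import dataclass
from enum import Enum


class HmiStrategy(Enum):
    NONE = "none"
    EXPLANATION = "explanation"                # negotiation-based: explain, do not warn
    EMOTION_REGULATION = "emotion_regulation"  # e.g., interior lighting and sound
    TRANSFER_CONTROL = "transfer_control"      # automation offers to take over


@dataclass(frozen=True)
class Decision:
    automation_authority: float  # assumed arbitration index in [0, 1]
    hmi_strategy: HmiStrategy


def decide(f2di: float, context_risk: float) -> Decision:
    """Map driver fitness and context risk (both in [0, 1]) to the two DSS outputs.

    Assumption: authority shifts towards automation as fitness drops
    and as the driving context becomes riskier.
    """
    if not (0.0 <= f2di <= 1.0 and 0.0 <= context_risk <= 1.0):
        raise ValueError("f2di and context_risk must be in [0, 1]")

    authority = min(1.0, (1.0 - f2di) * (1.0 + context_risk))

    # Illustrative thresholds; a real system would calibrate them experimentally.
    if authority < 0.2:
        strategy = HmiStrategy.NONE
    elif authority < 0.5:
        strategy = HmiStrategy.EXPLANATION
    elif authority < 0.8:
        strategy = HmiStrategy.EMOTION_REGULATION
    else:
        strategy = HmiStrategy.TRANSFER_CONTROL
    return Decision(authority, strategy)


if __name__ == "__main__":
    for f2di, risk in [(1.0, 0.5), (0.7, 0.0), (0.5, 0.2), (0.2, 1.0)]:
        print(f2di, risk, decide(f2di, risk))
```

Returning a continuous authority index rather than a binary switch leaves room for the shared, traded, and cooperative control solutions mentioned in the text.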

3.6. Experimental Setup and Expected Results

In order to train and develop the modules to detect driver state and to test the impact of the different combinations of human states, an experimental testing campaign was conducted. All subjects gave their informed consent for inclusion before they participated in the study. The study was conducted in accordance with the Declaration of Helsinki and the ethical approval of NextPerception (ECSEL JU grant agreement No. 876487). The data collection was performed in a driving simulator based on the SCANeR Studio 1.7 platform [163], enriched with a force-feedback steering wheel and equipped with cameras and sensors to gather the driver’s data. Data collection was performed in two steps. The first step was conducted to gather emotional states, such as anger and fear, together with neutral emotional activation, used as a baseline. In particular, the experimental setup was used as a controlled environment to build a proper dataset to train deep CNNs for emotion recognition. The resulting training set could be merged with other public datasets, such as CK+ [164], FER+ [165], and AffectNet [166], to ensure better accuracy. The emotions were induced with the autobiographical recall method plus an audio boost [64,167]. The second step consisted of gathering visual and cognitive distraction conditions, induced using the dual-task paradigm [168]. Further acquisitions were performed to test the impact on driving of the combination of different conditions. In all the performed experiments, vehicle CAN data as well as sensor data were collected and analyzed in order to design the detection modules and the decision-making strategies.

The possibility of inferring emotional activation directly from driving behavior was explored, as was the definition of the most suitable architecture and combination of sensors to infer the driver’s state. Moreover, the possibility of inferring cognitive states and arousal from video streams was evaluated during the experiments.

4. Recommendations and Future Directions

Based on the proposed ADAS-oriented architecture for DCS estimation, some recommendations and future directions can be highlighted. One aspect that should be taken into account with regard to ADASs concerns the idea of “driver satisfaction”. In more detail, one needs to satisfy particular constraints, knowledge of which might be a significant indicator of user acceptance of ADASs [169] and, in turn, reflects a typical understanding of driver behavior. An illustrative subset of reference parameters, useful to characterize a driver and his/her driving style with adaptive ADAS, is proposed in [170].

Another recommendation concerns the number and type of drivers involved in data collection: the sample should be as large and as heterogeneous as possible. In this way, it would be possible to assign each driver to one or multiple categories on the basis of their captured emotions and driving style, and thus to further investigate the intra-driver variation of trip-related parameters (e.g., following distance and cornering velocity as well as environmental conditions in which the drive is performed, i.e., day/night, sunny/foggy day, etc.). Moreover, this structured data collection allows for the evaluation of all the aspects related to legal rules that should be followed during the trip; culture-related behaviors followed in particular regions or environments (e.g., forbidding a certain category of people from driving or from using the vehicle front seats); and constraints specifically introduced by local governments and to be applied in specific areas.

Other challenges to be considered in scenarios involving driver emotion recognition through HMIs and ADAS refer to the following aspects [25]: (i) to incorporate driver-related personal information and preferences in order to develop more personalized on-board services (e.g., properly “training” on-board models dedicated to these purposes); (ii) to improve the quantity and the quality of the collected datasets, targeting a unified standard data representation for different applications (thus supporting the derivation of more homogeneous models); and (iii) to consider information related to the external context in models generating an estimation of the driver’s state (thus introducing real-time data even from remote-sourced sensing platforms).

Finally, should Vehicle-to-Everything (V2X) mechanisms be added to (IoT-aided) enhanced ADAS, the introduction of cooperative modeling approaches, in which multiple vehicles share their on-board collected data in order to enhance their internal models, will be beneficial. In this way, ADASs will become more powerful systems aiding the driver during his/her trips and, at the same time, improving driving quality estimation [171]. This can be beneficial even for emotional state recognition, since anonymized data would be used by a federation of vehicles to enhance the quality of the recognition, leaving the driver unaware of this smart processing during his/her typical errands.
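The text does not prescribe how such a federation of vehicles would cooperate. One possible realization, named here only as an example, is federated averaging, in which vehicles exchange model parameters rather than raw data and a coordinator averages them weighted by the amount of local data. The Python sketch below illustrates a single aggregation round; the function name, the flat parameter vectors, and the sample counts are hypothetical.

```python
from typing import List, Sequence


def federated_average(
    models: Sequence[Sequence[float]],
    sample_counts: Sequence[int],
) -> List[float]:
    """One aggregation round of federated averaging.

    models:        one flat parameter vector per vehicle (same length each)
    sample_counts: number of local training samples behind each vector
    Returns the sample-weighted mean of the parameter vectors.
    """
    if not models or len(models) != len(sample_counts):
        raise ValueError("need one sample count per model")
    dim = len(models[0])
    if any(len(m) != dim for m in models):
        raise ValueError("all parameter vectors must have the same length")
    total = sum(sample_counts)
    if total <= 0:
        raise ValueError("total sample count must be positive")

    return [
        sum(m[i] * n for m, n in zip(models, sample_counts)) / total
        for i in range(dim)
    ]


if __name__ == "__main__":
    # Two hypothetical vehicles; the second has three times more local data.
    vehicle_a = [1.0, 2.0]
    vehicle_b = [3.0, 4.0]
    print(federated_average([vehicle_a, vehicle_b], [1, 3]))
```

In such a scheme only the averaged parameters would be sent back to the vehicles, which is one way to keep individual drivers' recordings on board.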

5. Conclusions

In this paper, we have presented a comprehensive review of the state-of-the-art of technologies for ADAS, identifying both opportunities (to increase the quality and security of driving) and drawbacks (e.g., the fact that some ADASs implement only simple mechanisms to take into account drivers’ state or do not take it into account at all) of current ADASs. This review focuses on the emotional and cognitive analysis for ADAS development, ranging from psychological models and sensors capturing physiological signals to the adopted ML-based processing techniques and the distributed architectures needed for complex interactions. The literature overview highlights the necessity to improve both cognitive and emotional state classifiers. Hence, for each of the mentioned topics, safety issues are discussed, in turn highlighting currently known weaknesses and challenges. As an example, it is very challenging to implement in-vehicle systems to monitor drivers’ alertness and performance for several reasons: incorrect estimation of the current driver’s state; errors affecting in-vehicle sensing components; and communication issues between the ADAS and the driver. All these shortcomings may cause safety systems to incorrectly activate or to make wrong decisions.