DBA-YOLO: A Dense Target Detection Model Based on Lightweight Neural Networks

Abstract

1. Introduction

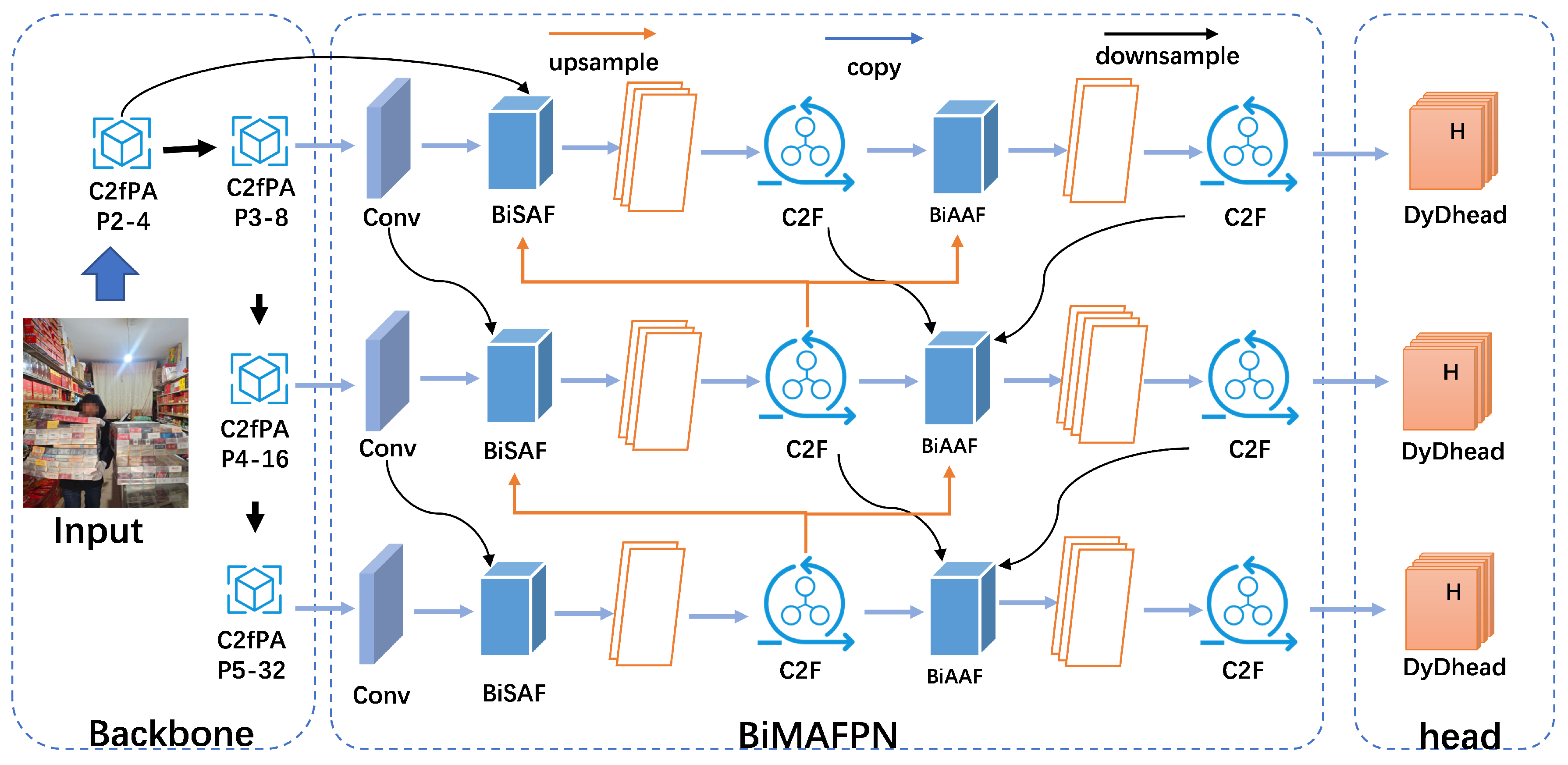

- We propose an improved C2f PA module in the backbone that adaptively reweights features according to their importance, strengthening feature extraction (added alone to the YOLOv10n baseline in the ablation study, it raises mAP from 56.9% to 57.7%). Rather than simply stacking generic attention blocks, C2f PA prioritizes foreground information and selectively enhances shallow, fine-grained cues. This targets a problem common to dense small objects: their features are easily erased in the early stages of the backbone.

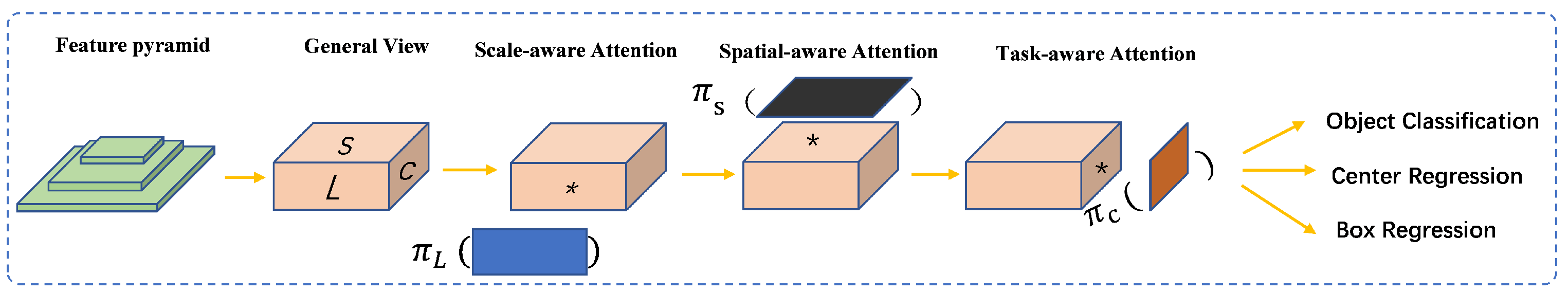

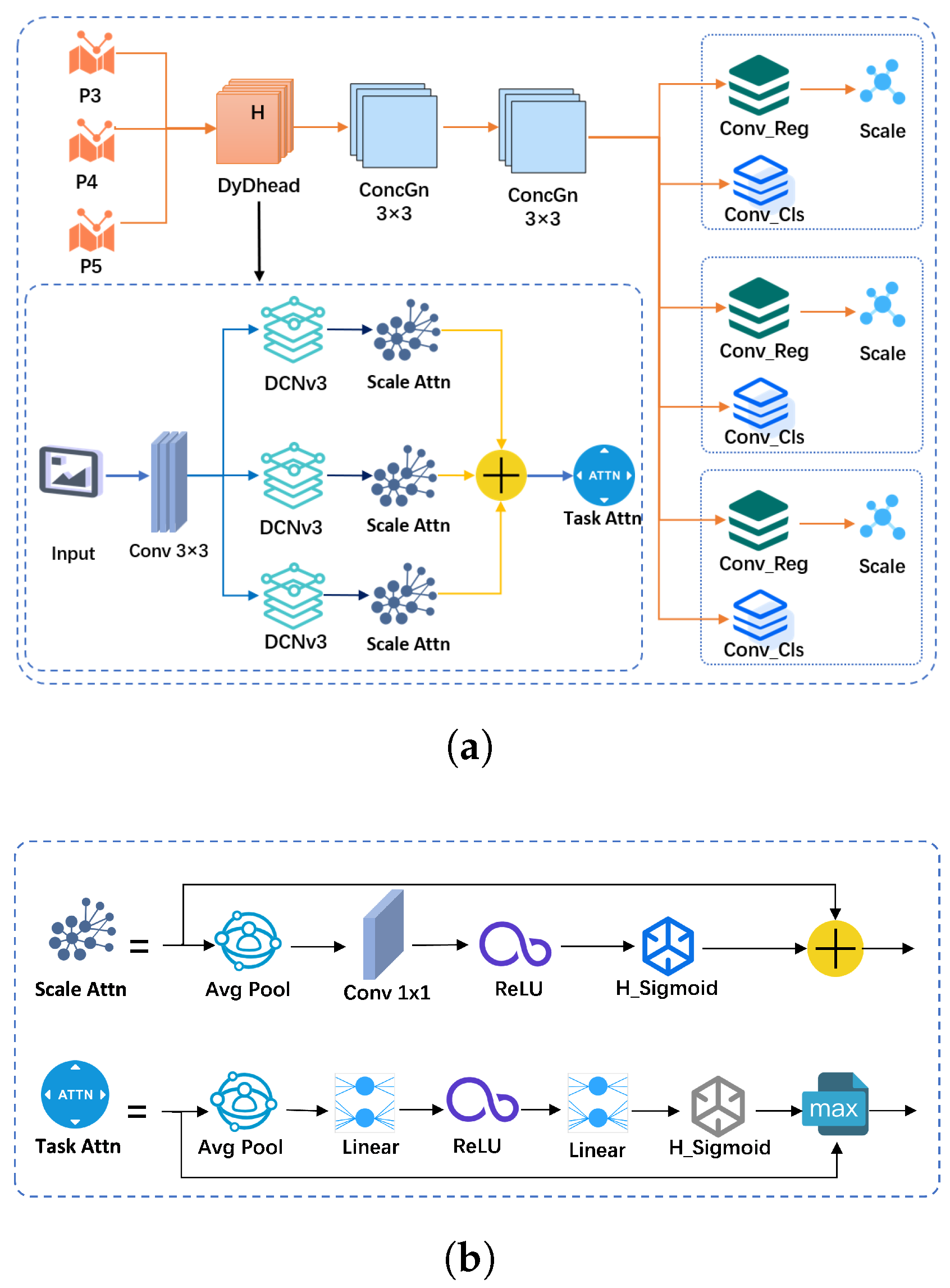

- We present DyDHead, an improved detection head built on YOLOv10 that integrates a novel dynamic convolution, adaptive feature enhancement, and multi-scale semantic awareness to characterize targets more accurately in complex scenes; added alone to the YOLOv10n baseline in the ablation study, it raises mAP from 56.9% to 57.8%. DyDHead combines dynamic deformable sampling with hierarchical attention to reduce localization instability under occlusion and overlap, while a lightweight path design keeps the added cost small (about 3% more parameters and 0.4 additional GFLOPs).

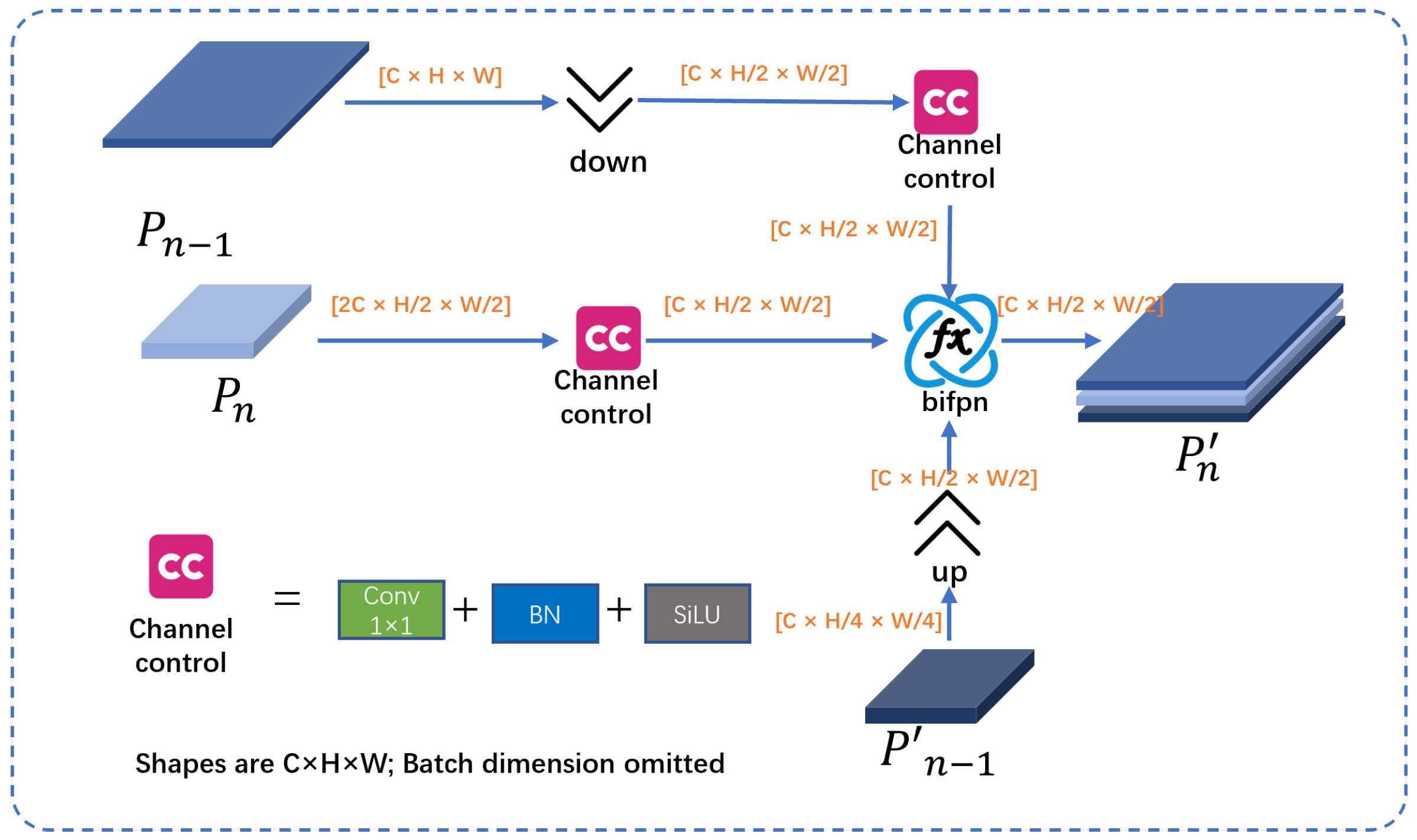

- We propose BIMAFPN, a weighted bidirectional multi-branch assisted FPN that augments BiFPN with auxiliary branches for richer cross-scale interaction and fusion. The BiSAF branch preserves shallow information to keep the network sensitive to small objects; the BiAAF branch enriches the gradients of the output layers through multidirectional links; and BiFPN provides learnable, bidirectional cross-scale fusion. Used alone in the ablation study, BIMAFPN raises mAP from 56.9% to 57.4% while cutting parameters by about 30% and GFLOPs from 7.3 to 6.3. Unlike simply swapping in a generic neck, BIMAFPN uses a dual-assist pathway ("shallow fidelity + high-level gain") explicitly tailored to dense small objects and supplies features matched to the detection head.

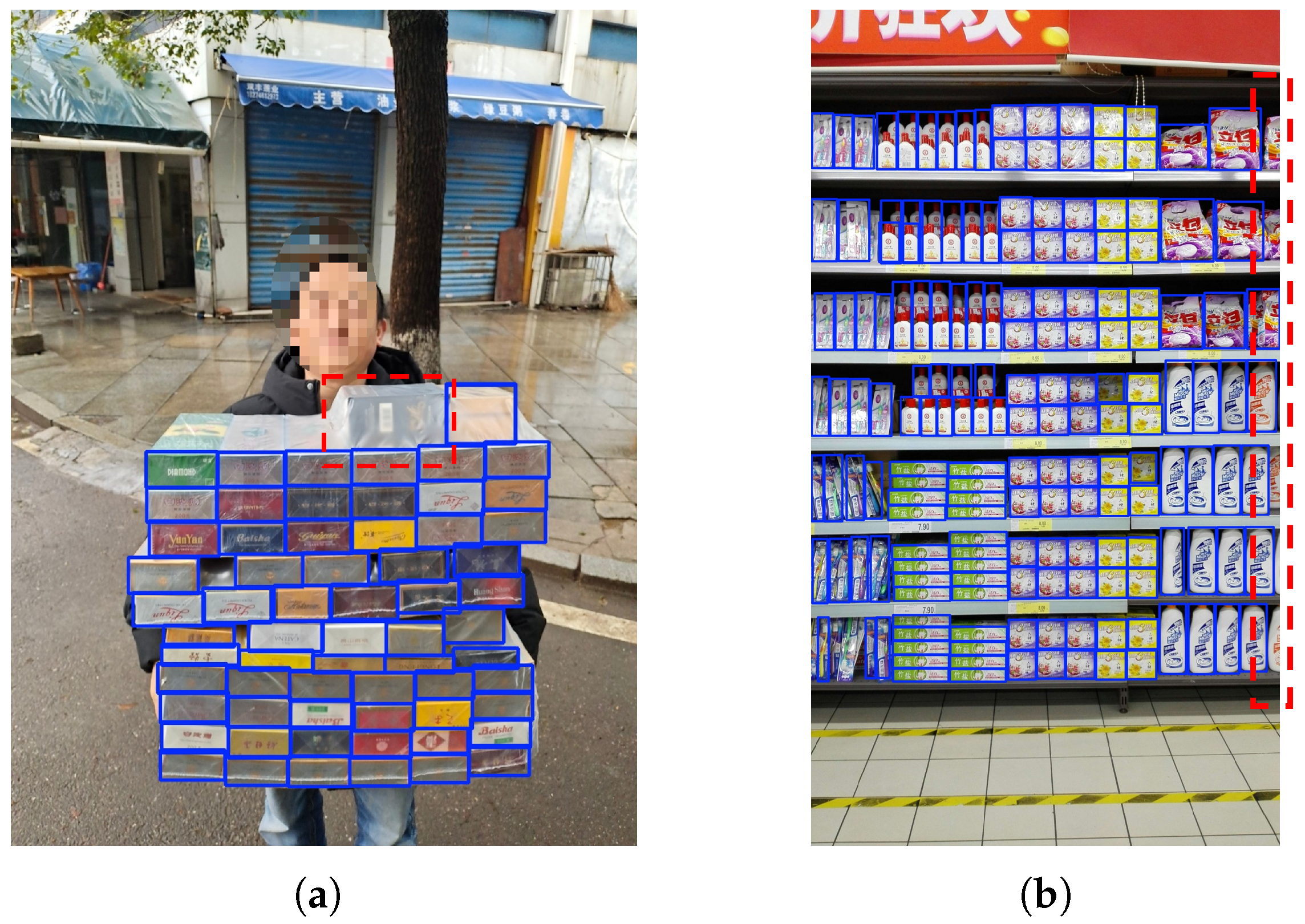

- We build a real-world cigarette package dataset for evaluation, comprising 1073 images at 960 × 1280 resolution with 50,173 annotated instances (about 47 per image). Although collected for one application, it serves as a general dense-object benchmark that supports reproducible evaluation of methods targeting crowded scenes and small objects. We plan to expand it to 3000 real images and, with augmentation, to 5000 images and 200,000 instances for public release.

2. Related Work

2.1. Real-Time Dense Small-Object Detection

2.2. Multi-Scale Feature Fusion

2.3. Detection Head

3. Method

3.1. Overview

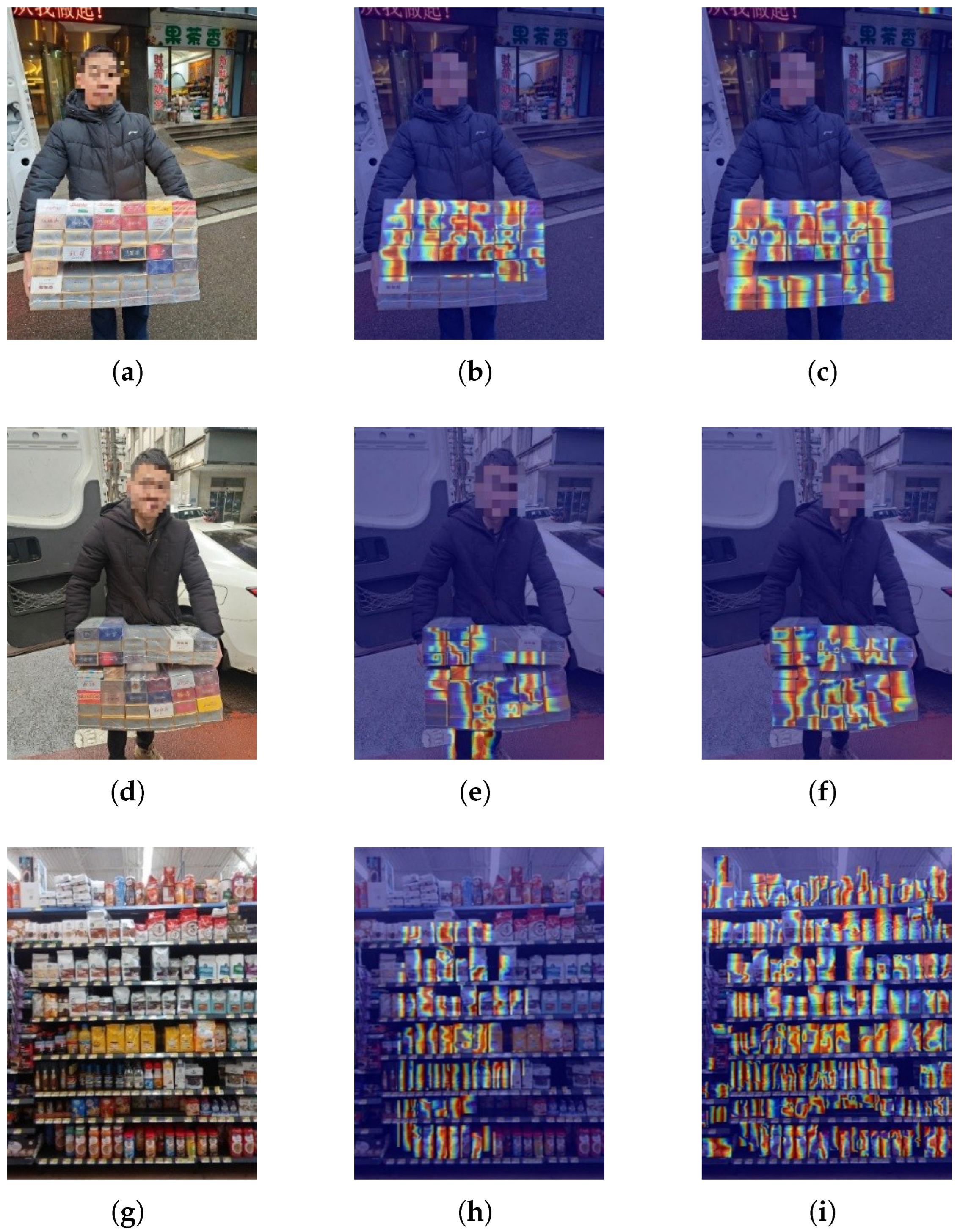

3.2. C2f PA Module
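As summarized in the Introduction, C2f PA reweights features according to their importance. Without reproducing the module's internal layout, the following is only a minimal sketch of that general reweighting principle, assuming a generic squeeze-and-excitation-style channel gate written in plain Python. It is not the C2f PA design (which the authors distinguish from simply stacking generic attention), and the names `channel_reweight`, `w1`, and `w2` are hypothetical; in a trained network `w1` and `w2` would be learned parameters.

```python
import math
from typing import List

Matrix = List[List[float]]      # one channel: H x W values
FeatureMap = List[Matrix]       # C channels


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def channel_reweight(x: FeatureMap, w1: Matrix, w2: Matrix) -> FeatureMap:
    """Hypothetical SE-style gate: pool each channel, score it, rescale it.

    w1 has shape (C_hidden x C) and w2 has shape (C x C_hidden); both would be
    learned in a real network and are plain inputs here (no biases).
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    pooled = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in x]
    # Excitation: hidden = ReLU(w1 @ pooled), gate = sigmoid(w2 @ hidden).
    hidden = [max(0.0, sum(w * p for w, p in zip(row, pooled))) for row in w1]
    gates = [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w2]
    # Scale: every value in a channel is multiplied by that channel's gate.
    return [[[g * v for v in r] for r in ch] for g, ch in zip(gates, x)]
```

For example, with `x = [[[1.0, 1.0], [1.0, 1.0]], [[0.0, 0.0], [0.0, 0.0]]]`, `w1 = [[1.0, 0.0]]`, and `w2 = [[10.0], [-10.0]]`, the active first channel is scaled by sigmoid(10), which is close to 1, while the second channel's gate is close to 0. Because every gate lies in (0, 1), a block of this kind emphasizes informative channels by attenuating the others relative to them.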

3.3. Multi-Scale Attention Feature Fusion Network
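BIMAFPN builds on BiFPN's learnable, bidirectional cross-scale fusion. As a minimal sketch of that fusion step only, the code below implements the fast normalized fusion described for BiFPN in EfficientDet [33], O = Σ_i w_i · I_i / (ε + Σ_j w_j), where each w_i is kept non-negative by a ReLU. It assumes the input maps have already been resized to a common resolution and does not model the BiSAF or BiAAF auxiliary branches; `fast_normalized_fusion` is a hypothetical name.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def fast_normalized_fusion(inputs: Sequence[Matrix], raw_weights: Sequence[float],
                           eps: float = 1e-4) -> Matrix:
    """Fuse same-shaped maps with BiFPN-style fast normalized weights (sketch)."""
    if len(inputs) != len(raw_weights):
        raise ValueError("need exactly one weight per input feature map")
    # ReLU keeps every weight non-negative, so no softmax is needed.
    w = [max(0.0, rw) for rw in raw_weights]
    denom = eps + sum(w)  # eps avoids division by zero if all weights are 0
    h, wid = len(inputs[0]), len(inputs[0][0])
    out = [[0.0] * wid for _ in range(h)]
    for weight, feat in zip(w, inputs):
        for i in range(h):
            for j in range(wid):
                out[i][j] += weight / denom * feat[i][j]
    return out
```

At a fusion node with, say, a top-down input and a same-level lateral input, raw weights `[1.0, 1.0]` give approximately their average, while a weight driven negative during training is clipped to zero and that input is ignored. EfficientDet motivates this normalization as a cheaper alternative to a softmax over the fusion weights.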

3.4. DyDHead Schematic
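DyDHead is described as combining dynamic deformable sampling with hierarchical attention. Without reproducing DyDHead's exact layer configuration, the sketch below shows only the sampling primitive, assuming a DCNv2-style modulated deformable convolution of the kind used in the spatial-aware attention of Dynamic Head [42]: each tap of a 3×3 kernel is displaced by a fractional offset, read with bilinear interpolation, and scaled by a modulation scalar. The function names are hypothetical, and offsets and masks are passed in directly, whereas a real head would predict them from the features with an extra convolution.

```python
import math
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def bilinear(feat: Matrix, y: float, x: float) -> float:
    """Sample feat at a fractional (y, x); pixels outside the map count as zero."""
    h, w = len(feat), len(feat[0])
    y0, x0 = math.floor(y), math.floor(x)
    value = 0.0
    for yy in (y0, y0 + 1):
        for xx in (x0, x0 + 1):
            if 0 <= yy < h and 0 <= xx < w:
                # Bilinear weight shrinks linearly with distance to the pixel.
                value += (1.0 - abs(y - yy)) * (1.0 - abs(x - xx)) * feat[yy][xx]
    return value


def deform_conv_at(feat: Matrix, kernel: Matrix,
                   offsets: Sequence[Tuple[float, float]],
                   masks: Sequence[float], cy: int, cx: int) -> float:
    """One output of a single-channel 3x3 modulated deformable convolution.

    offsets[k] = (dy, dx) shifts tap k off the regular grid; masks[k] in [0, 1]
    scales its contribution. In a real head both are predicted per location.
    """
    taps = [(dy, dx) for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
    out = 0.0
    for k, (dy, dx) in enumerate(taps):
        oy, ox = offsets[k]
        sample = bilinear(feat, cy + dy + oy, cx + dx + ox)
        out += kernel[dy + 1][dx + 1] * masks[k] * sample
    return out
```

With all offsets set to zero and all masks set to one, `deform_conv_at` reduces to an ordinary 3×3 convolution at (cy, cx); non-zero offsets let the kernel follow an object's actual extent, which is the property relied on for occluded and overlapping targets.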

3.5. Loss Function

4. Experiments

4.1. Dataset

4.2. Experimental Environment and Parameter Settings

4.3. Evaluation Metrics

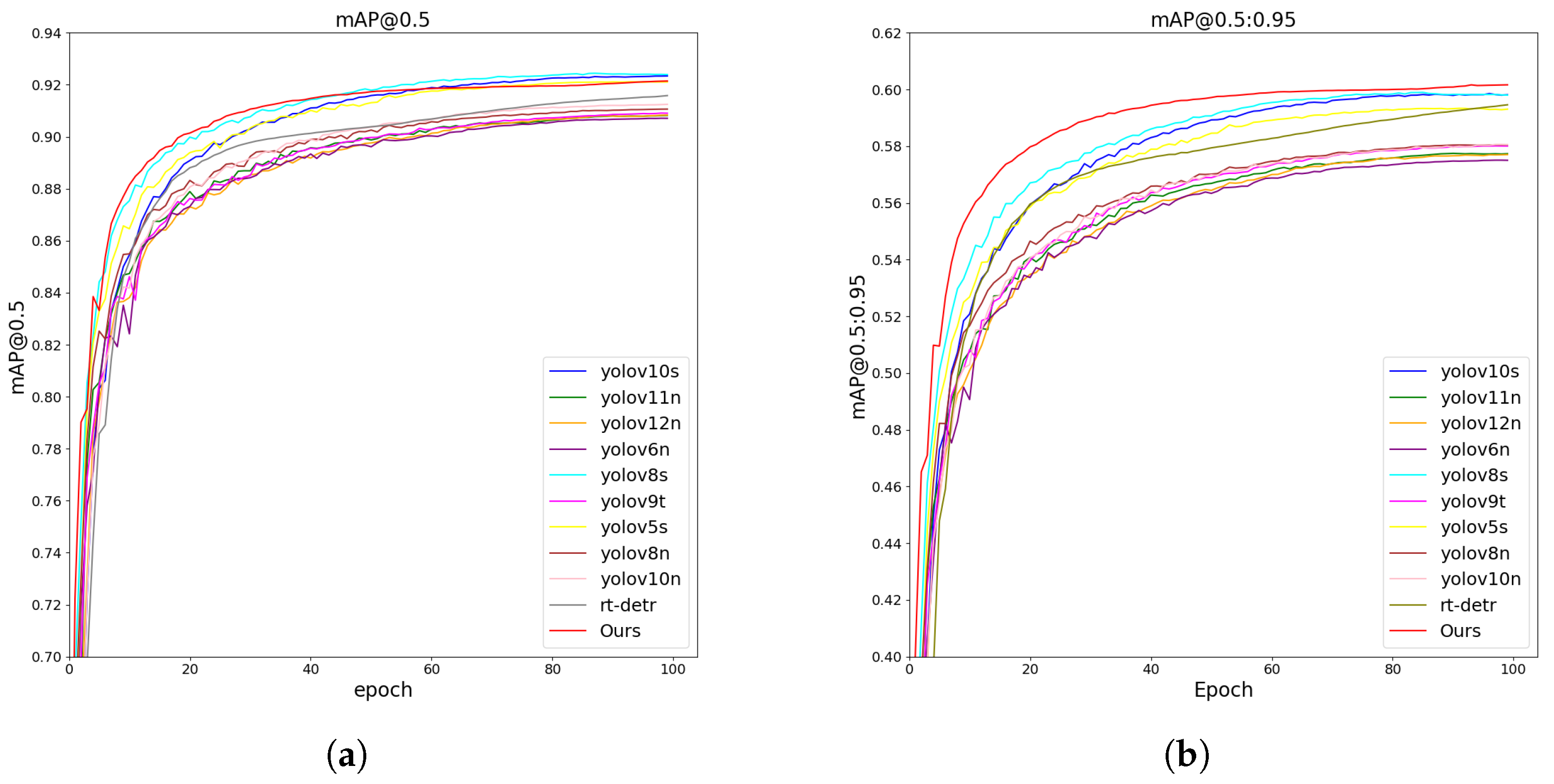

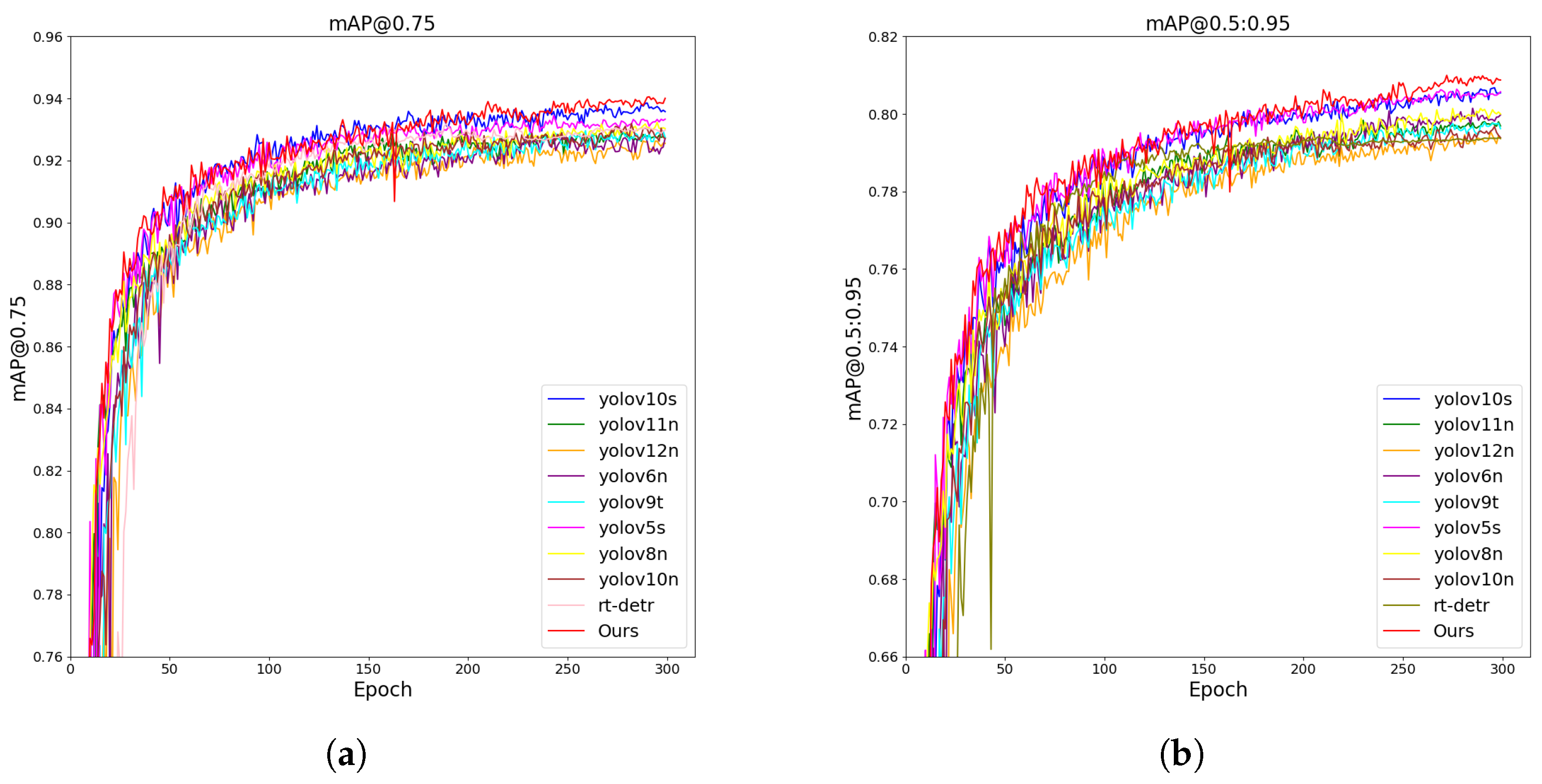

4.4. Results

4.5. Ablation Study and Discussion

4.5.1. Effectiveness of BIMAFPN Module

4.5.2. Effectiveness of C2f PA Module

4.5.3. Effectiveness of DyDHead Module

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Liang, L.; Ma, H.; Zhao, L.; Xie, X.; Hua, C.; Zhang, M.; Zhang, Y. Vehicle detection algorithms for autonomous driving: A review. Sensors 2024, 24, 3088.

2. EL Fadel, N. Facial Recognition Algorithms: A Systematic Literature Review. J. Imaging 2025, 11, 58.

3. AlKendi, W.; Gechter, F.; Heyberger, L.; Guyeux, C. Advancements and challenges in handwritten text recognition: A comprehensive survey. J. Imaging 2024, 10, 18.

4. Haq, M.A. Planetscope nanosatellites image classification using machine learning. Comput. Syst. Sci. Eng. 2022, 42, 1031–1046.

5. Goldman, E.; Herzig, R.; Eisenschtat, A.; Goldberger, J.; Hassner, T. Precise detection in densely packed scenes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 5227–5236.

6. Haq, M.A.; Rahaman, G.; Baral, P.; Ghosh, A. Deep learning based supervised image classification using UAV images for forest areas classification. J. Indian Soc. Remote Sens. 2021, 49, 601–606.

7. Ma, Z.; Liu, D.; Cui, Z.; Zhao, Y. AdaptCD: An adaptive target region-based commodity detection system. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 5486–5495.

8. Alsubai, S.; Dutta, A.K.; Alghayadh, F.; Alamer, B.H.; Pattanayak, R.M.; Ramesh, J.V.N.; Mohanty, S.N. Design of Artificial Intelligence Driven Crowd Density Analysis for Sustainable Smart Cities. IEEE Access 2024, 12, 121983–121993.

9. Haq, M.A. CNN based automated weed detection system using UAV imagery. Comput. Syst. Sci. Eng. 2022, 42, 837–849.

10. Sharif Razavian, A.; Azizpour, H.; Sullivan, J.; Carlsson, S. CNN features off-the-shelf: An astounding baseline for recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Columbus, OH, USA, 24–27 June 2014; pp. 806–813.

11. Zaidi, S.S.A.; Ansari, M.S.; Aslam, A.; Kanwal, N.; Asghar, M.; Lee, B. A survey of modern deep learning based object detection models. Digit. Signal Process. 2022, 126, 103514.

12. Sandler, M.; Howard, A.; Zhu, M.; Zhmoginov, A.; Chen, L.C. MobileNetV2: Inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 4510–4520.

13. Ma, N.; Zhang, X.; Zheng, H.T.; Sun, J. ShuffleNet V2: Practical guidelines for efficient CNN architecture design. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–10 September 2018; pp. 116–131.

14. Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More features from cheap operations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 1580–1589.

15. Wang, A.; Chen, H.; Liu, L.; Chen, K.; Lin, Z.; Han, J.; Ding, G. YOLOv10: Real-time end-to-end object detection. Adv. Neural Inf. Process. Syst. 2024, 37, 107984–108011.

16. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

17. Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

18. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

19. Varghese, R.; Sambath, M. YOLOv8: A novel object detection algorithm with enhanced performance and robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; pp. 1–6.

20. Khanam, R.; Hussain, M. YOLOv11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

21. Tian, Y.; Ye, Q.; Doermann, D. YOLOv12: Attention-centric real-time object detectors. arXiv 2025, arXiv:2502.12524.

22. Carion, N.; Massa, F.; Synnaeve, G.; Usunier, N.; Kirillov, A.; Zagoruyko, S. End-to-end object detection with transformers. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 213–229.

23. Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable transformers for end-to-end object detection. arXiv 2021, arXiv:2010.04159.

24. Lv, W.; Zhao, Y.; Chang, Q.; Huang, K.; Wang, G.; Liu, Y. RT-DETRv2: Improved baseline with bag-of-freebies for real-time detection transformer. arXiv 2024, arXiv:2407.17140.

25. Huang, S.; Lu, Z.; Cun, X.; Yu, Y.; Zhou, X.; Shen, X. DEIM: DETR with improved matching for fast convergence. In Proceedings of the Computer Vision and Pattern Recognition Conference, Nashville, TN, USA, 11–15 June 2025; pp. 15162–15171.

26. Chu, X.; Zheng, A.; Zhang, X.; Sun, J. Detection in crowded scenes: One proposal, multiple predictions. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 12214–12223.

27. Wang, J.; Song, L.; Li, Z.; Sun, H.; Sun, J.; Zheng, N. End-to-end object detection with fully convolutional network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Online, 19–25 June 2021; pp. 15849–15858.

28. Yang, C.; Huang, Z.; Wang, N. QueryDet: Cascaded sparse query for accelerating high-resolution small object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 13668–13677.

29. Zhang, S.; Wang, X.; Wang, J.; Pang, J.; Lyu, C.; Zhang, W.; Luo, P.; Chen, K. Dense distinct query for end-to-end object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 7329–7338.

30. Lin, Z.; Wang, Y.; Zhang, J.; Chu, X. DynamicDet: A unified dynamic architecture for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 6282–6291.

31. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the CVPR, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.

32. Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the CVPR, Salt Lake City, UT, USA, 18–22 June 2018; pp. 8759–8768.

33. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the CVPR, Seattle, WA, USA, 13–19 June 2020; pp. 10781–10790.

34. Guo, C.; Fan, B.; Zhang, Q.; Liu, X.; Zhang, M.; Lu, H. AugFPN: Improving Multi-scale Feature Learning for Object Detection. In Proceedings of the CVPR, Online, 14–19 June 2020; pp. 12559–12568.

35. Luo, Y.; Cao, X.; Zhang, J.; Guo, J.; Shen, H.; Wang, T.; Feng, Q. CE-FPN: Enhancing Channel Information for Object Detection. Multimed. Tools Appl. 2022, 81, 30685–30704.

36. Yang, G.; Lei, J.; Zhu, Z.; Cheng, S.; Feng, Z.; Liang, R. AFPN: Asymptotic Feature Pyramid Network for Object Detection. In Proceedings of the 2023 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Vienna, Austria, 5–8 October 2023; pp. 2184–2189.

37. Chen, Z.; Ji, H.; Zhang, Y.; Zhu, Z.; Li, Y. High-Resolution Feature Pyramid Network for Small Object Detection. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 475–489.

38. Shi, Z.; Hu, J.; Ren, J.; Ye, H.; Yuan, X.; Ouyang, Y.; He, J.; Ji, B.; Guo, J. High Frequency and Spatial Perception Feature Pyramid Network for Object Detection. In Proceedings of the AAAI Conference on Artificial Intelligence, Philadelphia, PA, USA, 27 February–4 March 2025.

39. Li, C.; Li, L.; Jiang, H.; Weng, K.; Geng, Y.; Li, L.; Ke, Z.; Li, Q.; Cheng, M.; Nie, W.; et al. YOLOv6: A Single-Stage Object Detection Framework for Industrial Applications. arXiv 2022, arXiv:2209.02976.

40. Wang, C.Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. arXiv 2023, arXiv:2207.02696.

41. Wang, C.Y.; Yeh, I.H.; Mark Liao, H.Y. YOLOv9: Learning What You Want to Learn Using Programmable Gradient Information. arXiv 2024, arXiv:2402.13616.

42. Dai, Z.; Cai, Q.; Lin, Y.; Chen, Y.; Ding, M.; Xie, E.; Zhang, W.; Hu, H.; Dai, J. Dynamic Head: Unifying Object Detection Heads with Attentions. In Proceedings of the CVPR, Online, 19–25 June 2021.

43. Zhang, T.; Cheng, C.; Lu, C.; Li, K.; Yang, X.; Li, G.; Zhang, L. TOOD: Task-aligned One-stage Object Detection. In Proceedings of the ICCV, Seoul, Republic of Korea, 10–17 October 2021.

44. Zheng, Z.; Wang, P.; Liu, W.; Li, J.; Ye, R.; Ren, D. Distance-IoU loss: Faster and better learning for bounding box regression. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12993–13000.

45. Li, X.; Wang, W.; Wu, L.; Chen, S.; Hu, X.; Li, J.; Tang, J.; Yang, J. Generalized Focal Loss: Learning Qualified and Distributed Bounding Boxes. In Proceedings of the NeurIPS, Online, 6–12 December 2020.

46. Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal Loss for Dense Object Detection. In Proceedings of the ICCV, Venice, Italy, 22–29 October 2017.

47. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part V; Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

48. Zhu, P.; Wen, L.; Du, D.; Bian, X.; Fan, H.; Hu, Q.; Ling, H. Detection and tracking meet drones challenge. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 7380–7399.

49. Yu, Z.; Huang, H.; Chen, W.; Su, Y.; Liu, Y.; Wang, X. YOLO-FaceV2: A scale and occlusion aware face detector. arXiv 2022, arXiv:2208.02019.

50. Chen, X.; Hu, D.; Cheng, Y.; Chen, S.; Xiang, J. EDT-YOLOv8n-Based Lightweight Detection of Kiwifruit in Complex Environments. Electronics 2025, 14, 147.

51. Yan, C.; Xu, E. ECM-YOLO: A real-time detection method of steel surface defects based on multiscale convolution. J. Opt. Soc. Am. A 2024, 41, 1905–1914.

52. Wang, H.; Liu, X.; Song, L.; Zhang, Y.; Rong, X.; Wang, Y. Research on a train safety driving method based on fusion of an incremental clustering algorithm and lightweight shared convolution. Sensors 2024, 24, 4951.

53. Dong, S.; Wang, L.; Du, B.; Meng, X. ChangeCLIP: Remote sensing change detection with multimodal vision-language representation learning. ISPRS J. Photogramm. Remote Sens. 2024, 208, 53–69.

54. Chen, J.; Mai, H.; Luo, L.; Chen, X.; Wu, K. Effective feature fusion network in BIFPN for small object detection. In Proceedings of the 2021 IEEE International Conference on Image Processing (ICIP), Anchorage, AK, USA, 19–22 September 2021; pp. 699–703.

55. Li, H.; Li, J.; Wei, H.; Liu, Z.; Zhan, Z.; Ren, Q. Slim-neck by GSConv: A lightweight-design for real-time detector architectures. J. Real-Time Image Process. 2024, 21, 62.

56. Wang, C.; He, W.; Nie, Y.; Guo, J.; Liu, C.; Wang, Y.; Han, K. Gold-YOLO: Efficient object detector via gather-and-distribute mechanism. Adv. Neural Inf. Process. Syst. 2023, 36, 51094–51112.

57. Kang, M.; Ting, C.M.; Ting, F.F.; Phan, R.C.W. ASF-YOLO: A novel YOLO model with attentional scale sequence fusion for cell instance segmentation. Image Vis. Comput. 2024, 147, 105057.

58. Du, Z.; Hu, Z.; Zhao, G.; Jin, Y.; Ma, H. Cross-layer feature pyramid transformer for small object detection in aerial images. arXiv 2024, arXiv:2407.19696.

59. Kang, M.; Ting, C.M.; Ting, F.F.; Phan, R.C.W. RCS-YOLO: A fast and high-accuracy object detector for brain tumor detection. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Vancouver, BC, Canada, 8–12 October 2023; Springer: Berlin/Heidelberg, Germany, 2023; pp. 600–610.

60. Xu, X.; Jiang, Y.; Chen, W.; Huang, Y.; Zhang, Y.; Sun, X. DAMO-YOLO: A report on real-time object detection design. arXiv 2022, arXiv:2211.15444.

61. Ye, R.; Shao, G.; He, Y.; Gao, Q.; Li, T. YOLOv8-RMDA: Lightweight YOLOv8 network for early detection of small target diseases in tea. Sensors 2024, 24, 2896.

62. Chen, Y.; Zhang, C.; Chen, B.; Huang, Y.; Sun, Y.; Wang, C.; Fu, X.; Dai, Y.; Qin, F.; Peng, Y.; et al. Accurate leukocyte detection based on deformable-DETR and multi-level feature fusion for aiding diagnosis of blood diseases. Comput. Biol. Med. 2024, 170, 107917.

| Dataset | Model | mAP (%) | AP50 (%) | AP75 (%) | Params (×10⁵) | GFLOPs |
|---|---|---|---|---|---|---|
| SKU-110K | yolov5n | 56.3 | 88.9 | 64.1 | 25.09 | 7.2 |
|  | yolov5s | 58.1 | 90.2 | 66.8 | 91.23 | 24.0 |
|  | RTMDet-Tiny | 40.1 | 62.5 | 46.9 | 48.73 | 8.03 |
|  | yolov6n | 56.4 | 89.0 | 64.0 | 42.38 | 11.9 |
|  | yolov8n | 57.0 | 89.3 | 65.1 | 30.11 | 8.2 |
|  | yolov8s | 58.7 | 90.5 | 67.8 | 113.60 | 28.6 |
|  | yolov9t | 57.0 | 89.1 | 65.2 | 20.06 | 7.8 |
|  | yolov10n | 56.9 | 89.6 | 65.0 | 27.07 | 7.3 |
|  | yolov10s | 58.7 | 90.6 | 67.7 | 80.67 | 24.8 |
|  | yolov11n | 56.4 | 88.6 | 64.4 | 25.90 | 6.4 |
|  | yolov12n | 56.6 | 89.1 | 64.5 | 25.68 | 6.5 |
|  | RT-DETR-R18 | 58.2 | 89.6 | 66.9 | 198.73 | 56.9 |
|  | DDQ R-CNN | 38.1 | 90.3 | 57.6 | 632.80 | 50.2 |
|  | Ours | 58.8 | 90.6 | 67.6 | 26.16 | 7.9 |

| Dataset | Model | mAP (%) | AP50 (%) | AP75 (%) | Params (×10⁵) |
|---|---|---|---|---|---|
| Cigarette packet | yolov5n | 79.9 | 98.3 | 92.8 | 25.09 |
|  | yolov5s | 80.6 | 99.1 | 93.3 | 91.23 |
|  | yolov6n | 80.1 | 98.3 | 92.6 | 42.38 |
|  | yolov8n | 80.2 | 98.6 | 93.1 | 30.11 |
|  | yolov8s | 81.0 | 99.2 | 93.5 | 113.6 |
|  | yolov9t | 79.7 | 98.2 | 92.8 | 20.06 |
|  | yolov10n | 79.6 | 98.7 | 92.7 | 27.07 |
|  | yolov10s | 80.7 | 99.1 | 93.7 | 80.67 |
|  | yolov11n | 79.7 | 98.6 | 92.9 | 25.90 |
|  | yolov12n | 79.6 | 98.8 | 92.7 | 25.68 |
|  | RT-DETR-R18 | 79.4 | 99.3 | 93.1 | 198.73 |
|  | Ours | 81.0 | 99.4 | 93.9 | 26.16 |

| Dataset | Model | P | R | mAP (%) | mAP50 (%) | mAP75 (%) | Params (×10⁵) |
|---|---|---|---|---|---|---|---|
| VisDrone-val | yolov10n | 0.458 | 0.35 | 20.3 | 35.2 | 20.4 | 27.07 |
|  | yolov11n | 0.441 | 0.34 | 19.5 | 33.7 | 19.3 | 25.9 |
|  | yolov12n | 0.44 | 0.335 | 19.3 | 33.1 | 19.1 | 25.68 |
|  | Ours | 0.504 | 0.369 | 22.9 | 38.5 | 23.4 | 26.16 |
| VisDrone-test | yolov10n | 0.386 | 0.302 | 14.8 | 27.1 | 14.4 | 27.07 |
|  | yolov11n | 0.393 | 0.296 | 15.1 | 27.1 | 15.1 | 25.9 |
|  | yolov12n | 0.39 | 0.292 | 15.2 | 27 | 15.2 | 25.68 |
|  | Ours | 0.436 | 0.311 | 17.6 | 30.7 | 17.8 | 26.16 |

| Head | mAP (%) | AP50 (%) | AP75 (%) | Params (×10⁵) | GFLOPs |
|---|---|---|---|---|---|
| Ours | 57.8 | 90.0 | 66.4 | 27.8 | 7.7 |
| SEAMHead [49] | 56.6 | 89.3 | 64.4 | 25.2 | 7.3 |
| TADDH [50] | 56.5 | 89.7 | 64.8 | 19.9 | 8.4 |
| MultiSEAM [51] | 56.6 | 89.3 | 64.5 | 67.3 | 9.3 |
| LSCD [52] | 56.9 | 89.5 | 65.1 | 19.5 | 6.2 |
| RSCD [53] | 55.2 | 87.5 | 62.8 | 20.5 | 6.5 |

| Neck Network | mAP (%) | AP50 (%) | AP75 (%) | Params (×10⁵) | GFLOPs |
|---|---|---|---|---|---|
| Ours | 57.4 | 89.7 | 65.8 | 18.9 | 6.3 |
| BiFPN [54] | 57.1 | 89.3 | 65.1 | 17.2 | 6.0 |
| Slim-neck [55] | 56.6 | 89.3 | 64.3 | 23.9 | 5.9 |
| Gold-YOLO [56] | 55.9 | 88.6 | 64.8 | 53.9 | 8.9 |
| ASF [57] | 57.1 | 89.6 | 65.1 | 23.0 | 6.9 |
| CFPT [58] | 56.3 | 89.6 | 63.9 | 18.9 | 6.4 |
| RCSOSA [59] | 57.4 | 90.0 | 65.6 | 41.1 | 15.3 |
| GFPN [60] | 57.0 | 89.5 | 65.2 | 33.2 | 7.0 |
| EfficientRepBiPAN [61] | 56.8 | 89.5 | 64.8 | 27.3 | 6.8 |
| HSFPN [62] | 56.0 | 88.6 | 63.5 | 19.3 | 6.7 |

| C2f PA | BIMAFPN | DyDHead | mAP (%) | AP75 (%) | Params (×10⁵) | GFLOPs |
|---|---|---|---|---|---|---|
| – | – | – | 56.9 | 65.0 | 27.0 | 7.3 |
| ✓ | – | – | 57.7 | 66.2 | 27.7 | 8.4 |
| – | ✓ | – | 57.4 | 65.8 | 18.9 | 6.3 |
| – | – | ✓ | 57.8 | 66.4 | 27.8 | 7.7 |
| ✓ | ✓ | – | 57.9 | 66.4 | 24.0 | 7.4 |
| ✓ | – | ✓ | 58.2 | 66.8 | 28.8 | 8.7 |
| – | ✓ | ✓ | 58.0 | 66.7 | 25.4 | 7.3 |
| ✓ | ✓ | ✓ | 58.8 | 67.6 | 26.1 | 7.9 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


He, Z.; Yang, J.; Ning, H.; Li, C.; Tang, Q. DBA-YOLO: A Dense Target Detection Model Based on Lightweight Neural Networks. J. Imaging 2025, 11, 345. https://doi.org/10.3390/jimaging11100345


Chicago/Turabian Style: He, Zhiyong, Jiahong Yang, Hongtian Ning, Chengxuan Li, and Qiang Tang. 2025. "DBA-YOLO: A Dense Target Detection Model Based on Lightweight Neural Networks" Journal of Imaging 11, no. 10: 345. https://doi.org/10.3390/jimaging11100345

APA Style: He, Z., Yang, J., Ning, H., Li, C., & Tang, Q. (2025). DBA-YOLO: A Dense Target Detection Model Based on Lightweight Neural Networks. Journal of Imaging, 11(10), 345. https://doi.org/10.3390/jimaging11100345