In this section, we present a comprehensive experimental evaluation of our proposed framework on several datasets for the human action recognition task. First, we briefly describe the implementation details and the tools used to conduct our experiments. Next, we briefly discuss the datasets used in this research, followed by a comparative analysis of the results obtained with different settings of the proposed framework. Finally, we compare the proposed framework with state-of-the-art human action recognition methods.

4.3. Assessment of the Baseline Results

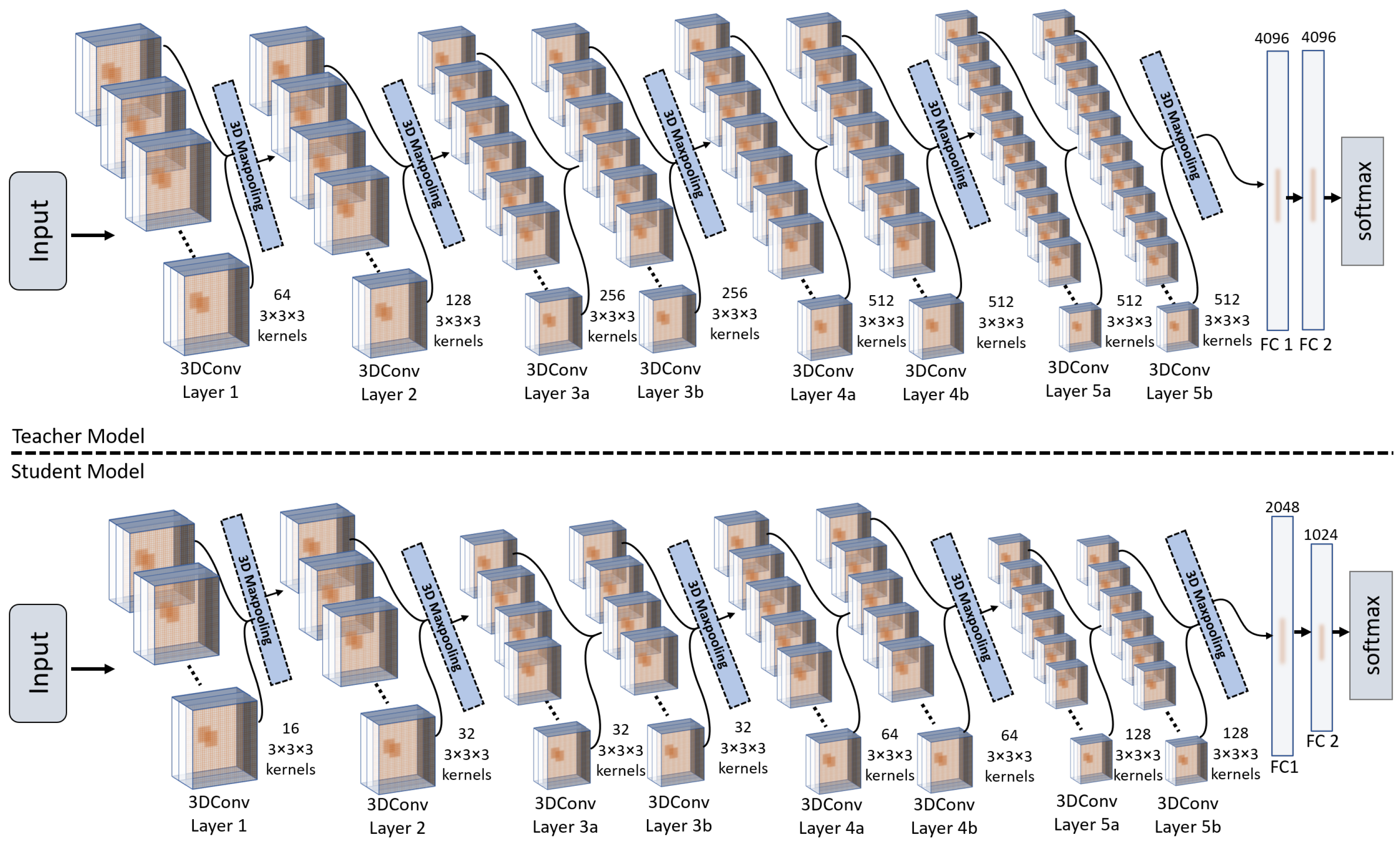

In this research, we have explored the spatio-temporal knowledge distillation from a pre-trained 3DCNN teacher model to a 3DCNN student model with different settings. We have also evaluated the performance of a light-weight 3DCNN model (student) with and without knowledge distillation. To obtain a well-generalized yet an efficient model across each dataset, we have conducted extensive experiments with different settings in our knowledge distillation framework. As our proposed method is based on the offline knowledge distillation approach, which transfers spatio-temporal knowledge from a large 3DCNN teacher model to a lightweight 3DCNN student model. Therefore, first we have trained the 3DCNN teacher model and later we have used the pre-trained 3DCNN model in knowledge distillation training. It is worth mentioning here that we have trained the 3DCNN teacher model with two different settings that include training from the scratch, which we refer to as TFS, and the training using transfer learning from [

9] (using its pre-trained weights), which we refer to as TUTL. In the first setting, we have trained the 3DCNN teacher model TFS from scratch on each dataset and then used it for knowledge distillation. In the second setting, we have trained the 3DCNN teacher model TUTL with pre-trained weights from [

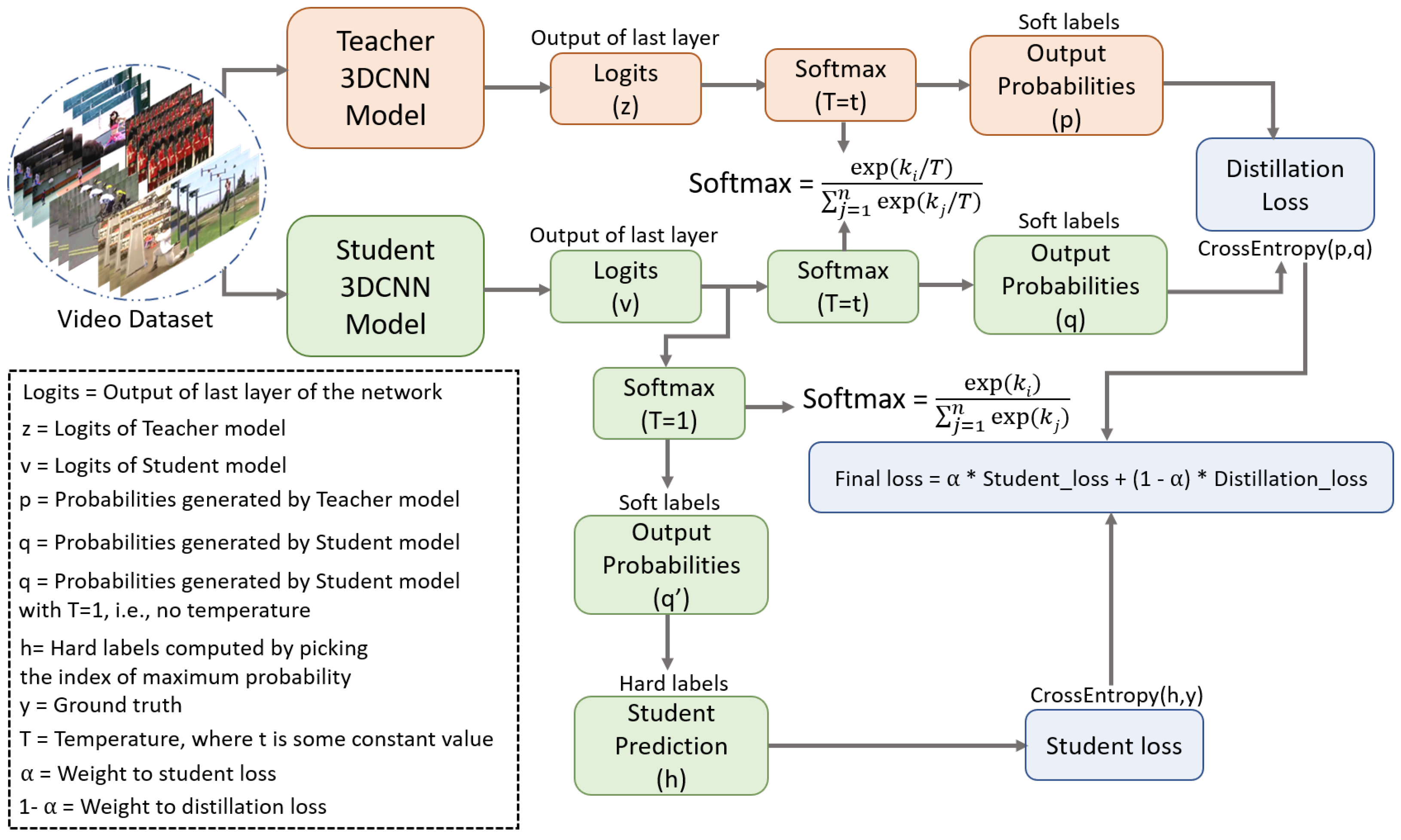

9] using transfer learning technique. We have assessed the performance of knowledge distillation with the two different pre-trained teacher models. Further, we also have investigated the effect the impact of temperature values (a hyperparameter in our knowledge distillation framework as shown in

Figure 4) on knowledge distillation, where we have examined the spatio-temporal knowledge distillation with different temperature values (i.e., T = 1, 5, 10, 15, 20) for both the pre-trained teacher models. The training histories for a set of conducted trainings are depicted in

Figure 5. For instance, in

Figure 5, the left-most plots represent the average training losses for the 3DCNN teacher TFS, for the 3DCNN teacher TUTL, students without knowledge distillation, and students with knowledge distillation with the optimal temperature (T = 10) value over UCF11, HMDB51, UCF50, and UCF101 datasets, respectively. The middle plots in

Figure 5 represent the average training losses of the student models trained under the pre-trained 3DCNN teacher model (TFS) using knowledge distillation with different temperature values including 1, 5, 10, 15, and 20. The right-most plots in

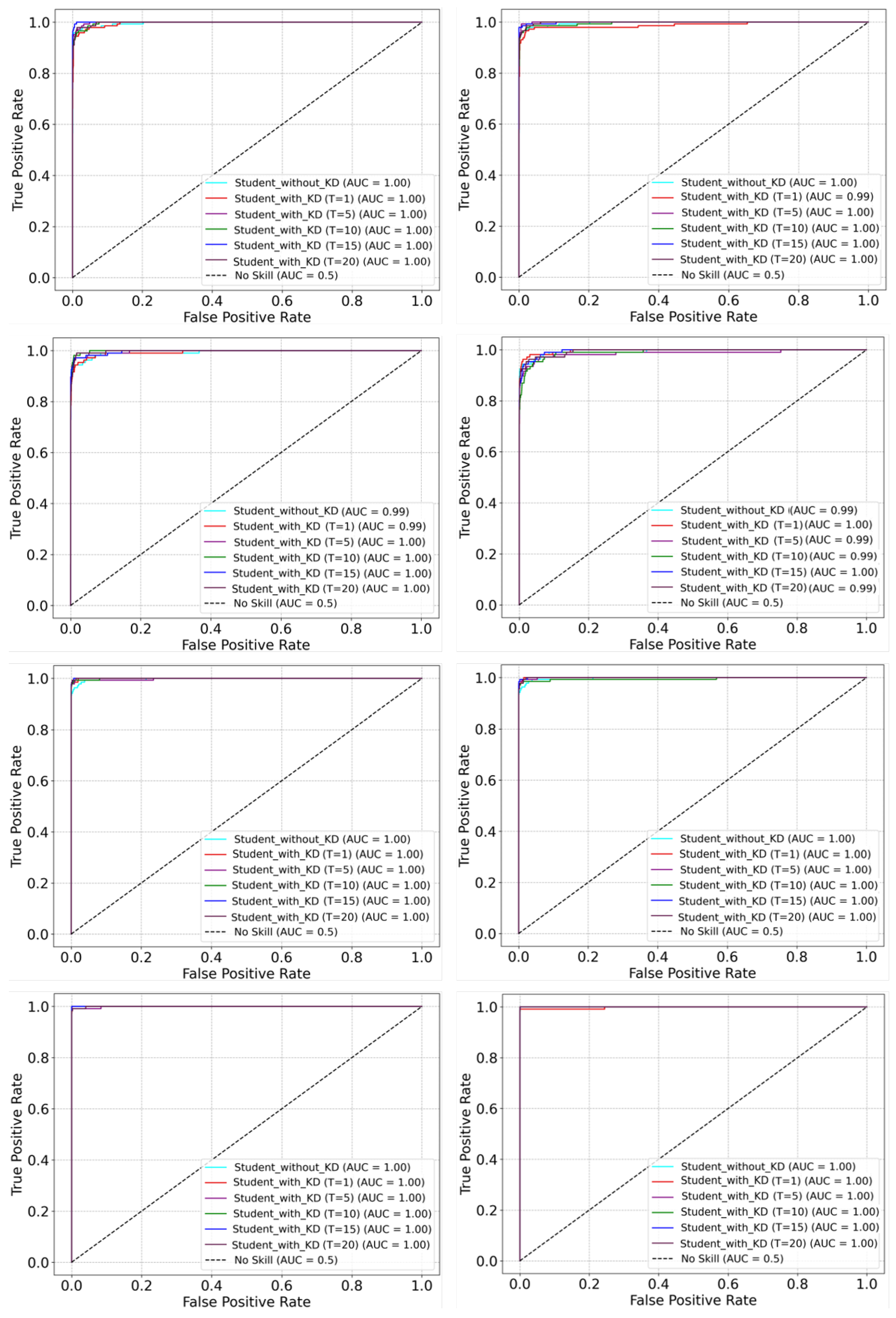

Figure 5, represent the average training loss of the student model trained under the pre-trained 3DCNN teacher model TUTL using knowledge distillation with different temperature values including 1, 5, 10, 15, and 20. Moreover, the effectiveness of the proposed framework is evaluated with different settings using receiver operating characteristics (ROC) curve and the area under the curve (AUC) values as visualized in

Figure 6. Generally, the ROC estimates the contrast between the true positive rate (TPR) and the false positive rate (FPR) for classifier predictions. In

Figure 6, the first column represents the ROC curves for the student models trained with and without knowledge distillation under the TFS teacher model with different temperature values over UCF11, HMDB51, UCF50, and UCF101 datasets. The second column in

Figure 6 represents the ROC curves for the student models trained with and without knowledge distillation under the TUTL teacher model with different temperature values over UCF11, HMDB51, UCF50, and UCF101 datasets. As it can be perceived from

Figure 6, the proposed framework with different settings obtains the best AUC values and ROC curves across all datasets.
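To make the ROC and AUC quantities concrete, the following is a minimal sketch of how a ROC curve and its AUC can be computed for a single class in a one-vs-rest manner. It is an illustration only and is not taken from our implementation: the function names `roc_curve` and `auc` and the toy scores are hypothetical, and the trapezoidal rule is assumed for the area.

```python
from typing import List, Tuple


def roc_curve(labels: List[int], scores: List[float]) -> Tuple[List[float], List[float]]:
    """Return (fpr, tpr) points obtained by sweeping a threshold over the scores.

    labels: 1 for the class of interest, 0 for every other class (one-vs-rest).
    scores: predicted probability (or any confidence score) for that class.
    """
    pos = sum(labels)
    neg = len(labels) - pos
    if pos == 0 or neg == 0:
        raise ValueError("need at least one positive and one negative sample")

    # Visit samples from the highest score to the lowest.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    fpr, tpr = [0.0], [0.0]
    tp = fp = 0
    i = 0
    while i < len(order):
        threshold = scores[order[i]]
        # Samples with identical scores cross the threshold together.
        while i < len(order) and scores[order[i]] == threshold:
            if labels[order[i]] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        fpr.append(fp / neg)
        tpr.append(tp / pos)
    return fpr, tpr


def auc(fpr: List[float], tpr: List[float]) -> float:
    """Area under the ROC curve using the trapezoidal rule."""
    return sum(
        (fpr[k] - fpr[k - 1]) * (tpr[k] + tpr[k - 1]) / 2.0
        for k in range(1, len(fpr))
    )


if __name__ == "__main__":
    # Toy one-vs-rest example (hypothetical scores).
    y_true = [1, 1, 0, 1, 0, 0]
    y_score = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
    fpr, tpr = roc_curve(y_true, y_score)
    print(f"AUC = {auc(fpr, tpr):.4f}")
```

For a multi-class problem such as action recognition, one common choice is to repeat this per class and average the per-class AUC values; whether macro- or micro-averaging is appropriate depends on the class balance of the dataset.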

We have also compared the accuracy of the proposed framework under different knowledge distillation settings (i.e., knowledge distillation with the two teacher models, TFS and TUTL, and with different temperature values). The quantitative comparisons for UCF11, HMDB51, UCF50, and UCF101 are presented in Table 3, Table 4, Table 5 and Table 6, respectively. From the quantitative results given in Table 3, it can be noticed that the proposed framework attains different accuracies with different teachers (i.e., TFS and TUTL) and temperature values. For instance, the proposed 3DCNN student model achieves the best and the runner-up accuracies of 98.78% and 98.17% when distilled by the TUTL teacher model with temperature values of T = 10 and T = 15, respectively. Furthermore, it is worth noticing that the distilled 3DCNN student model obtains an improvement in accuracy of approximately 10% over the same student model trained without knowledge distillation, which has an accuracy of 88.71%. Similarly, for the HMDB51 dataset in Table 4, the proposed 3DCNN student model achieves the best accuracy of 92.89% when distilled by TUTL with T = 10 and the runner-up accuracy of 91.55% when distilled by TFS with T = 10. Furthermore, it can be seen from Table 4 that the TUTL-distilled 3DCNN student model obtains an improvement in accuracy of approximately 4.65% over the student model trained without knowledge distillation. For the UCF50 dataset in Table 5, the proposed 3DCNN student model attains the best accuracy of 97.71% when distilled by TUTL with T = 10 and the runner-up accuracy of 97.60% when distilled by TFS with T = 10. Moreover, the student model distilled by TUTL with T = 10 obtains an improvement in accuracy of around 2% over the student model trained without knowledge distillation. Finally, for the UCF101 dataset in Table 6, the proposed 3DCNN student model achieves the best and runner-up accuracies of 97.36% and 96.80% when distilled by TUTL with temperature values of T = 10 and T = 15, respectively. Furthermore, the distilled student model attains an improvement in accuracy of approximately 3.62% over the student model trained without knowledge distillation. Overall, the results reported in Table 3, Table 4, Table 5 and Table 6 show that the proposed 3DCNN student model performs best when distilled by the TUTL teacher model with temperature value T = 10, compared with the TFS-distilled student model and with the other temperature values. Thus, based on the obtained quantitative results, we determine that distillation under TUTL with T = 10 is the best setting across all datasets used in our experiments.
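The role of the temperature T in the comparisons above can be illustrated with a short sketch of a temperature-scaled distillation loss. This is an assumption-laden illustration and not the exact objective of our framework (which is defined with Figure 4): it uses the widely used formulation in which the teacher and student logits are softened by T, the soft term is a KL divergence scaled by T², and a weight `alpha` (a hypothetical parameter here) balances it against the ordinary cross-entropy on the ground-truth label.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; a larger temperature gives a softer distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(
    student_logits: List[float],
    teacher_logits: List[float],
    label: int,
    temperature: float = 10.0,  # T = 10 performed best in Tables 3 to 6
    alpha: float = 0.5,         # hypothetical weighting, not taken from the paper
) -> float:
    """Weighted sum of a soft (teacher-matching) term and a hard (label) term."""
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)

    # Soft term: KL(teacher || student) on the temperature-softened distributions.
    kl = sum(
        pt * (math.log(pt) - math.log(ps))
        for pt, ps in zip(p_teacher, p_student)
        if pt > 0.0
    )

    # Hard term: cross-entropy of the student (at T = 1) against the true label.
    ce = -math.log(softmax(student_logits)[label])

    # The T^2 factor keeps the soft-term gradients on a comparable scale across T.
    return alpha * (temperature ** 2) * kl + (1.0 - alpha) * ce


if __name__ == "__main__":
    teacher = [6.0, 2.0, 0.5]  # toy logits for a three-class problem
    student = [3.0, 2.5, 0.1]
    for t in (1, 5, 10, 15, 20):
        print(t, round(distillation_loss(student, teacher, label=0, temperature=t), 4))
```

With T = 1 the teacher distribution is close to one-hot and carries little information beyond the label, whereas a very large T flattens it towards uniform; intermediate values expose the relative similarity between classes, which is consistent with an intermediate temperature performing best in our experiments.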

4.4. Comparison with the State-of-the-Art Methods

In this section, we compare our proposed framework with state-of-the-art human action recognition methods with and without knowledge distillation. The detailed quantitative comparison of the proposed framework with non-distillation state-of-the-art human action recognition methods on the UCF11, HMDB51, UCF50, and UCF101 datasets is presented in Table 7, Table 8, Table 9 and Table 10, respectively. For the UCF11 dataset, the proposed Student-TUTL method surpasses the state-of-the-art methods by obtaining the best accuracy of 98.7%, whereas the STDAN method [44] attains the second-best accuracy of 98.2%. Amongst all the compared methods, the local-global features + QSVM method [45] has the lowest accuracy of 82.6% on the UCF11 dataset, whereas the remaining methods, including multi-task hierarchical clustering [46], BT-LSTM [47], deep autoencoder [48], two-stream attention-LSTM [49], weighted entropy-variances based feature selection [50], dilated CNN + BiLSTM-RB [51], and DS-GRU [52], achieve accuracies of 89.7%, 85.3%, 96.2%, 96.9%, 94.5%, 89.0%, and 97.1%, respectively.

For the HMDB51 dataset, which is one of the most challenging video datasets, our proposed Student-TUTL method achieves by far the best result with an accuracy of 92.8%, whereas the evidential deep learning method [62] is the runner-up with an accuracy of 77.0%. The multi-task hierarchical clustering method [46] attains an accuracy of 51.4%, which is the lowest amongst all comparative methods on the HMDB51 dataset. The other comparative methods, which include STPP + LSTM [53], optical-flow + multi-layer LSTM [54], TSN [55], IP-LSTM [56], deep autoencoder [48], TS-LSTM + temporal-inception [57], HATNet [58], correlational CNN + LSTM [59], STDAN [44], DB-LSTM + SSPF [60], DS-GRU [52], TCLC [61], and semi-supervised temporal gradient learning [63], achieve accuracies of 70.5%, 72.2%, 70.7%, 58.6%, 70.3%, 69.0%, 74.8%, 66.2%, 56.5%, 75.1%, 72.3%, 71.5%, and 75.9%, respectively.

For the UCF50 dataset, the proposed Student-TUTL method outperforms all the comparative methods by obtaining the best accuracy of 97.6%, followed by the runner-up LD-BF + LD-DF method [65], which achieves an accuracy of 97.5%. The local-global features + QSVM method [45] attains the lowest accuracy of 69.4% amongst all comparative methods on the UCF50 dataset. The remaining comparative methods, including multi-task hierarchical clustering [46], deep autoencoder [48], ensembled swarm-based optimization [64], and DS-GRU [52], achieve accuracies of 93.2%, 96.4%, 92.2%, and 96.4%, respectively.

Finally, for the UCF101 dataset, the proposed Student-TUTL method surpasses all the comparative methods by obtaining the best accuracy of 97.3%, followed by the RTS method [74], which attains the second-best accuracy of 96.4%. The multi-task hierarchical clustering method [46] obtains the lowest accuracy of 76.3% amongst all the comparative methods on the UCF101 dataset. The remaining comparative methods, which include saliency-aware 3DCNN with LSTM [66], spatio-temporal multilayer networks [67], long-term temporal convolutions [21], CNN + Bi-LSTM [68], OFF [69], TVNet [70], attention cluster [71], VideoLSTM [18], two-stream ConvNets [72], mixed 3D-2D convolutional tube [73], TS-LSTM + temporal-inception [57], TSN + TSM [75], STM [76], and correlational CNN + LSTM [59], obtain accuracies of 84.0%, 87.0%, 82.4%, 92.8%, 96.0%, 95.4%, 94.6%, 89.2%, 84.9%, 88.9%, 91.1%, 94.3%, 96.2%, and 92.8%, respectively.

To further validate the generalization of our method, we compute the confidence interval, as in [77], of our proposed method for each dataset used in this paper and compare it with the average confidence interval of the state-of-the-art methods. We estimate the confidence intervals for our method and for the state-of-the-art methods at a confidence level of 95%. The obtained confidence interval values are listed in Table 11. From these values, it can be seen that on the UCF11 dataset the confidence interval of the proposed method is both higher and narrower than that of the state-of-the-art methods. Specifically, the proposed method has a confidence interval between 97.59 and 99.96 with a small range of 2.37, whereas the state-of-the-art methods have an average confidence interval between 87.81 and 96.52 with a comparatively large range of 8.72. Similarly, for the HMDB51 dataset, the proposed method has a confidence interval between 91.46 and 94.20 with a small range of 2.74, whereas the state-of-the-art methods have an average confidence interval between 64.62 and 72.98 with a comparatively large range of 8.36. For the UCF50 dataset, the proposed method has a confidence interval between 96.78 and 98.48 with a small range of 1.65, whereas the state-of-the-art methods have an average confidence interval between 79.53 and 97.48 with a comparatively large range of 18.98. Finally, for the UCF101 dataset, the proposed method has a confidence interval between 96.72 and 97.94 with a small range of 1.22, whereas the state-of-the-art methods have an average confidence interval between 87.44 and 93.27 with a comparatively large range of 6.27. Across all four datasets, the proposed method therefore has a higher and narrower confidence interval than the state-of-the-art methods, which verifies its effectiveness over these methods.
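As an illustration of how a 95% confidence interval for a classification accuracy can be obtained, the sketch below uses the normal-approximation (Wald) interval for a binomial proportion. This is an assumption made for illustration: the procedure of [77] is not reproduced here, and both the function name `wald_interval` and the test-set size in the example are hypothetical.

```python
import math
from typing import Tuple


def wald_interval(accuracy_pct: float, n_samples: int, z: float = 1.96) -> Tuple[float, float, float]:
    """Normal-approximation confidence interval for an accuracy given in percent.

    z = 1.96 corresponds to a 95% confidence level.
    Returns (lower, upper, width), all in percent.
    """
    if n_samples <= 0:
        raise ValueError("n_samples must be positive")
    p = accuracy_pct / 100.0
    half_width = z * math.sqrt(p * (1.0 - p) / n_samples)
    lower = max(0.0, p - half_width) * 100.0
    upper = min(1.0, p + half_width) * 100.0
    return lower, upper, upper - lower


if __name__ == "__main__":
    # Hypothetical example: 90% accuracy measured on 100 test clips.
    lo, hi, width = wald_interval(90.0, 100)
    print(f"[{lo:.2f}, {hi:.2f}] width {width:.2f}")
```

Under this approximation the interval narrows as the accuracy approaches 100% and as the number of test samples grows, so interval widths are only directly comparable between methods that are evaluated on test sets of similar size.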

Besides comparing the proposed framework with the mainstream human action recognition methods, we also compare it with knowledge distillation-based human action recognition methods. The comparative analysis of the proposed method with the state-of-the-art knowledge distillation-based human action recognition methods on the HMDB51 and UCF101 datasets is presented in Table 12 and Table 13, respectively. The results listed in Table 12 demonstrate the clear advantage of the proposed method on the HMDB51 dataset over the other knowledge distillation-based methods. The proposed method achieves the best accuracy of 92.8% amongst all the comparative methods, followed by the runner-up D3D method [32], which obtains an accuracy of 78.7%. The SKD-SRL method [33] attains the lowest accuracy of 29.8% amongst all the comparative knowledge distillation-based methods on the HMDB51 dataset. The remaining comparative methods, which include STDDCN [29], Prob-Distill [30], MHSA-KD [31], TY [34], (2+1)D Distilled ShuffleNet [36], and Self-Distillation (PPTK) [35], achieve accuracies of 66.8%, 72.2%, 57.8%, 32.8%, 59.9%, and 76.5%, respectively. Similarly, for the UCF101 dataset in Table 13, our proposed framework outperforms the other comparative knowledge distillation-based methods by obtaining the best accuracy of 97.3%, followed by the D3D method [32], which attains the second-best accuracy of 97.0%. The TY method [34] achieves the lowest accuracy of 71.1% amongst all the comparative methods on the UCF101 dataset. The remaining comparative methods, which include STDDCN [29], Prob-Distill [30], SKD-SRL [33], Progressive KD [37], (2+1)D Distilled ShuffleNet [36], Self-Distillation (PPTK) [35], and ConDi-SR [38], achieve accuracies of 93.7%, 95.7%, 71.9%, 88.8%, 86.4%, 94.6%, and 91.2%, respectively.

We also evaluate the generalization of our proposed method in comparison with the state-of-the-art knowledge distillation-based methods using confidence intervals (at a confidence level of 95%) on the HMDB51 and UCF101 datasets. The obtained confidence interval values of the proposed method and the state-of-the-art methods are listed in Table 14. These values show that the proposed method achieves higher and narrower confidence intervals than the state-of-the-art methods on both the HMDB51 and UCF101 datasets. On the HMDB51 dataset, the proposed method has a confidence interval between 91.46 and 94.20 with a small range of 2.74, whereas the state-of-the-art methods have an average confidence interval between 43.59 and 75.03 with a comparatively very large range of 31.44. Similarly, for the UCF101 dataset, the proposed method has a confidence interval between 96.72 and 97.94 with a small range of 1.22, whereas the state-of-the-art methods have an average confidence interval between 80.29 and 95.34 with a comparatively large range of 15.11. The obtained confidence interval values for both datasets verify the generalization and effectiveness of the proposed method over the state-of-the-art knowledge distillation-based methods.

Considering the overall comparative analysis across all datasets, the proposed framework outperforms all the comparative mainstream action recognition methods, obtaining an improvement in accuracy of 7%, 34.88%, 7.7%, and 8% on the UCF11, HMDB51, UCF50, and UCF101 datasets, respectively. Furthermore, in the comparison with knowledge distillation-based human action recognition methods on the HMDB51 and UCF101 datasets, the experimental results reveal that our proposed framework attains an average improvement in accuracy of 56.46% and 6.39%, respectively, over the existing knowledge distillation-based methods.
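The aggregate improvement figures can be related to the per-method accuracies quoted earlier in this section. One reading that reproduces the 34.88% figure for HMDB51 is the relative gain of the proposed method over the mean accuracy of the listed baselines; the sketch below computes it under that assumption. The helper name `relative_improvement` is hypothetical, and the baseline values are the HMDB51 accuracies of the non-distillation methods quoted above.

```python
from statistics import mean
from typing import Sequence


def relative_improvement(ours: float, baselines: Sequence[float]) -> float:
    """Relative gain (in percent) of `ours` over the mean baseline accuracy."""
    base = mean(baselines)
    return 100.0 * (ours - base) / base


# HMDB51 accuracies (%) of the non-distillation methods quoted in this section.
hmdb51_baselines = [
    77.0, 51.4, 70.5, 72.2, 70.7, 58.6, 70.3, 69.0,
    74.8, 66.2, 56.5, 75.1, 72.3, 71.5, 75.9,
]

print(round(relative_improvement(92.8, hmdb51_baselines), 2))  # → 34.88
```

Because this quantity is relative to the mean baseline, it is larger than the absolute gap in percentage points (here 92.8 versus a mean of 68.8, i.e., 24.0 points).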

4.5. Run Time Analysis

To validate the effectiveness and suitability of the proposed method for practical applications in real-time environments, we have computed its inference time for the action recognition task in terms of seconds per frame (SPF) and frames per second (FPS), with and without GPU computational resources. The obtained run time results are compared with those of the state-of-the-art human action recognition methods in Table 15. The run time results listed in Table 15 show that, when using GPU resources, OFF [69] has the best inference time with an SPF of 0.0048 and an FPS of 206, followed by STPP + LSTM [53] with the second-best run time results of 0.0053 SPF and 186.6 FPS. The proposed framework attains the third-best run time results with an SPF of 0.0091 and an FPS of 110. The VideoLSTM method [18] has the worst run time results, with an SPF of 0.0940 and an FPS of 10.6, among all comparative methods when using GPU resources. When using CPU resources, the proposed method obtains the best run time results with an SPF of 0.0106 and an FPS of 93. The optical-flow + multi-layer LSTM method [54] has the second-best run time results with an SPF of 0.18 and an FPS of 3.5.

To provide a fair comparison of the inference speed, we scaled [78] the run time results of the state-of-the-art methods in Table 15 to the hardware specifications (i.e., 2.5 GHz CPU and 585 MHz GPU) used in our experiments. The scaled run time results of the proposed method and the other comparative human action recognition methods are presented in Table 16. From the scaled results in Table 16, it can be noticed that the STPP + LSTM method [53] has the best SPF and FPS values of 0.0063 and 154.6, respectively, for GPU inference. The OFF method [69] has the second-best SPF and FPS values of 0.0082 and 120.5, respectively, followed by the proposed method with the third-best SPF and FPS values of 0.0091 and 110, respectively. For inference on the CPU, on the other hand, our proposed method attains the best SPF and FPS of 0.0106 and 93, respectively, followed by optical-flow + multi-layer LSTM [54], which has the runner-up SPF and FPS values of 0.23 and 2.6, respectively. Although the proposed method is slower than STPP + LSTM [53] and OFF [69] on GPU resources, it is more accurate than both of these methods. Moreover, for CPU inference, the proposed method outperforms the comparative methods in both the scaled and the unscaled inference speed analysis, obtaining an improvement of up to 28× and 37× for the SPF metric and of 37× and 50× for the FPS metric, respectively. It is also worth mentioning that the proposed framework not only obtains the best accuracy on the UCF11 dataset but also attains an improvement of up to 2× in terms of the FPS metric over the runner-up STDAN method [44]. Similarly, on the UCF50 dataset, the proposed method obtains an improvement of up to 13× in terms of the FPS metric over the runner-up LD-BF + LD-DF method [65]. Thus, the obtained quantitative and run time assessment results validate the efficiency and robustness of our proposed framework for the real-time human action recognition task.
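The relation between the two run time metrics, and the idea of rescaling a reported run time to different hardware, can be sketched as follows. The sketch rests on two assumptions that are not taken from [78]: FPS is treated as the reciprocal of SPF, and run time is assumed to scale inversely with the processor clock frequency. The function names and the 1000 MHz source clock in the example are hypothetical.

```python
def fps_from_spf(spf: float) -> float:
    """Frames per second as the reciprocal of seconds per frame."""
    if spf <= 0.0:
        raise ValueError("spf must be positive")
    return 1.0 / spf


def scale_spf(spf: float, source_clock_mhz: float, target_clock_mhz: float) -> float:
    """Rescale a reported SPF from the source hardware to the target hardware.

    Assumes run time is inversely proportional to clock frequency, which
    ignores memory bandwidth, core count, and architectural differences.
    """
    if source_clock_mhz <= 0.0 or target_clock_mhz <= 0.0:
        raise ValueError("clock frequencies must be positive")
    return spf * source_clock_mhz / target_clock_mhz


if __name__ == "__main__":
    # Proposed method on GPU: 0.0091 SPF corresponds to roughly 110 FPS.
    print(round(fps_from_spf(0.0091)))

    # Hypothetical: a method measured at 0.0050 SPF on a 1000 MHz GPU,
    # rescaled to the 585 MHz GPU used in our experiments.
    scaled = scale_spf(0.0050, source_clock_mhz=1000.0, target_clock_mhz=585.0)
    print(round(scaled, 4), round(fps_from_spf(scaled), 1))
```

Since the tabulated SPF and FPS values are reported as published, individual pairs may deviate from an exact reciprocal; the sketch is intended only to show the direction and approximate magnitude of the rescaling.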