Abstract

Background and Objectives: Brain Tumor Fusion-based Segments and Classification-Non-enhancing tumor (BTFSC-Net) is a hybrid system for classifying brain tumors that combines medical image fusion, segmentation, feature extraction, and classification procedures. Materials and Methods: to reduce noise in medical images, the hybrid probabilistic wiener filter (HPWF) is first applied as a preprocessing step. Then, to combine robust edge analysis (REA) properties in magnetic resonance imaging (MRI) and computed tomography (CT) medical images, a fusion network based on deep learning convolutional neural networks (DLCNN) is developed. Here, the slopes and borders of the brain images are detected using REA. To separate the diseased region from the color image, hybrid fuzzy c-means integrated k-means (HFCMIK) clustering is then implemented. To extract hybrid features from the fused image, low-level features based on the redundant discrete wavelet transform (RDWT), empirical color features, and texture characteristics based on the gray-level cooccurrence matrix (GLCM) are also used. Finally, to distinguish between benign and malignant tumors, a deep learning probabilistic neural network (DLPNN) is deployed. Results: the proposed BTFSC-Net model performed better than more traditional preprocessing, fusion, segmentation, and classification techniques, reaching 99.21% segmentation accuracy and 99.46% classification accuracy. Conclusions: the presented method outperformed earlier approaches in image fusion, segmentation, feature extraction, and brain tumor classification. These results illustrate that the designed approach is more effective in terms of quantitative evaluation, with better accuracy as well as visual performance.

1. Introduction

Brain tumor (BT) is the tenth leading cause of death and disability worldwide and has been recognized by organizations such as the World Health Organization (WHO), National Brain Tumor Society (NBTS), and the Indian Society of Neuro-Oncology (ISNO) as one of the most important primary neoplasms causing morbidity and mortality across the world [1]. According to the international agency for cancer research, over 97,000 people in the United States and nearly 126,000 people worldwide suffer disability and related consequences of brain tumors each year [2]. If appropriate diagnosis, intervention, and management are received at an early stage, an increase in survival rates can be achieved.

Medical image fusion (MIF) techniques, which draw on digital imaging, pattern recognition, and machine learning (ML), now have various applications in clinical medicine and are widely used to diagnose neoplasms [3]. These fusion methods overcome the constraints of traditional imaging techniques and are described as more effective than either magnetic resonance imaging (MRI) or computed tomography (CT) [4]. Various images of organs have been created by using different sensor strategies [5]. For diagnosis, it is crucial to differentiate a neoplastic lesion and to derive the characteristics that distinguish it from the surrounding normal tissues. Since lesion size, texture, shape, and placement vary between individuals, images must be evaluated and categorized using tissue segmentation, which has been described in various automated forms, to produce a diagnosis. Curvelet domain coefficients providing substantial structural features and functional mapping are necessary [6]. To distinguish the boundaries between normal and abnormal brain tissue, expert segmentation requires several upgraded datasets and pixel profiles, so fully automated machine learning techniques are preferable in this case. Multimodal medical image fusion was thus designed utilizing the particle swarm optimization (PSO) technique to improve the efficiency of multimodal mapping [7].

The fusion-based techniques help to analyze and evaluate the disease by expanding the visual data and lucidity. Direct fusion techniques frequently create unwanted impacts prompting distortion and low contrast. Multi-scale decomposition (MSD) strategies have made progress in different image fusion issues and the limitations are overcome through the total variation (TV-L1) method [8]. MIF is well-known terminology in this area of satellite imaging and diagnostic medical imaging, which has now expanded its presence in clinical diagnosis and has proven to be beneficial in multimodal medical image fusion (MMIF) [9]. The spatial domain is where multimodal image fusion and pixel-to-pixel fusion take place. Additionally, a max/min for the fusion approaches using weighted pixels is available [10].

Pixel activity, measured using phase congruency and local Laplacian energy, determines the weights used to select the most active pixels [11]. The image features are first scaled by a designed method with average weighting. Yin et al. [12] built their image fusion technique on the maximum average mutual information. MIF has widely used optimization techniques to resolve deficiency issues, such as the whale optimization algorithm (WOA), which is one of the most used optimization algorithms [13].

For image fusion, the authors of [14] designed a method in which different features are extracted by individually multiplying the first convolutional sparsity component of each input image. A few other techniques are also discussed, including the principle of feature measurement [15] and graph filter and sparse representation (GFSR) [16]. Dual-branch CNN (DB-CNN) [17], separable dictionary learning based on Gabor filtering [18], and local difference in the non-subsampled domain (LDNSD) [19] are also considered. These methods, however, tend to produce edge-based blocking artifacts [7].

To match the high-frequency images, the Laplacian re-decomposition (LRD) method offered a way to inverse LRD fusion-based rule by producing pixels surrounding the overlapped domains [20]. Deep learning and MMIF are also created for disease diagnostics in medicine. Deep learning is combined with MDT and actual analysis to characterize the MMIF. It can successfully overcome the difficulty of only one-page processing and make up for the lack of image fusion. It can also be created to address many types of MMIF challenges in batch processing mode [21]. To improve the execution of various methods of image processing, algorithms perform optimization and give an optimal solution for image fusion; various global optimization techniques are imposing procedures that can convey better clarifications for various issues [22].

Positron Emission Tomography (PET) images are also enhanced in terms of spatial resolution and given acceptable color [23]. MRI images also have improved spatial resolution and provide decent color. Using a CNN-based technique, weight maps are created from input medical images. Following that, the weight maps formed are subjected to gaussian pyramid decomposition, and a multi-scale high-resolution image is obtained using the contrast pyramid decomposition approach [24]. To break down the image into sub-band categories, the Non-Subsampled Contourlet Transform (NSCT) is utilized [25]. The presentation of an image fusion framework based on two CNP models using nonsubsampled shearlet transforms (NSST) is made. These two CNP models were used to constrain the fusion of the NSST domain, which operates at lower frequencies. Applying the encoder network to the encoded image, its features are extracted and fused using Lmax norms [10].

Additional segmentation methods include a fast level set based CNN [26], Bayesian fuzzy clustering with hybrid deep auto-encoder (BFC-HDA) [27], and symmetric-driven adversarial network (SDAN) [28]. However, the precision and accuracy of these techniques are limited. Brain tumor classification with segmentation, synthetic data augmentation using multiscale CNN [29], and deep learning [30] have also been proposed. Meningioma is distinguished from non-meningioma brain images using fine-tuning transfer learning [31] and the Dice coefficient index [32]. Three forms of brain tumors were distinguished by the authors of [33] using a transfer learning CNN model. A pre-trained network was modified by expanding the tumor region, dividing it into rings, and using T1-weighted contrast-enhanced MRI [34]. A hybrid of two methods, entropy-based controlling and a multiclass support vector machine (M-SVM), is used for optimal feature extraction [35,36,37]. The differential deep-CNN model for detecting brain cancers in MRI images was tested by the authors of [38]. CNN-based multi-scale analysis was used to create a 3D-CNN [39] utilizing MRI images of pituitary tumors. The classification procedure was improved in [36,40,41] by using variational auto-encoders with generative adversarial networks and a hybrid model. These conventional methods still need to be refined because they do not produce adequate classification and segmentation results.

As dataset sizes grow, high computational complexity has a negative impact on traditional models. Before their implementation in hardware, neural networks (NNs) must first be evaluated for computational complexity, since they use the majority of the CPU and GPU resources [42,43,44]. The complexity, however, depends on the specific deep learning probabilistic neural network (DLPNN) design and can be reduced if an accuracy trade-off is acceptable: changing the values of the hyper-parameters affects the accuracy while also lowering the computational complexity. In addition, the performance of conventional approaches for segmentation, classification, and fusion needs to be improved. The main contributions of the paper, which address these issues, are listed below:

- Preprocessing, fusion, segmentation, and classification steps are combined into a new BTFSC-Net model; to the authors' knowledge, this combination has not been reported before.

- HPWF is used to improve the contrast, brightness, and color qualities of MRI and CT medical images by removing various types of noise from them.

- REA is combined with the input MRI and CT images using a DLCNN-based fusion network, which identifies the tumor region.

- To separate the tumor region from the fused image, the HFCMIK method is used, which further characterizes the major region of the brain tumor.

- Using features extracted with the gray-level cooccurrence matrix (GLCM) and the redundant discrete wavelet transform (RDWT), benign and malignant tumors are classified with the DLPNN.

- Data from simulations illustrate that the designed approach outperformed state-of-the-art methodology.

2. Proposed Methodology

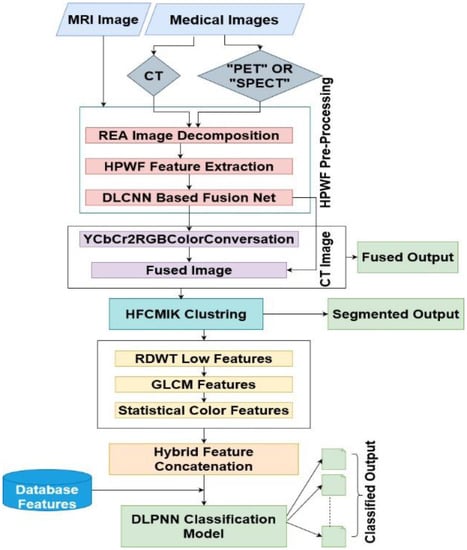

The classification techniques for brain tumors are thoroughly examined in this section. Both Figure 1 and Table 1 present the proposed algorithm for the BTFSC-Net technique. Medical images are first passed through an HPWF to remove noise before being processed further. A new method is designed to fuse MRI and CT medical images while retaining REA capabilities. REA is utilized because it is essential to obtain the slopes and boundaries of the image.

Figure 1.

Segmentation and classification model for a proposed brain tumor fusion (MRI: magnetic resonance imaging, CT: computed tomography, PET: positron emission tomography, SPECT: single photon emission computed tomography, REA: robust edge analysis, HPWF: hybrid probabilistic wiener filter, DLCNN: deep learning convolutional neural networks, HFCMIK: hybrid fuzzy C-means integrated K-means, RDWT: redundant discrete wavelet transform, GLCM: gray-level cooccurrence matrix, DLPNN: deep learning probabilistic neural network).

Table 1.

Fusion of a proposed segmentation and classification system for brain tumors.

HFCMIK clustering is utilized to separate the diseased region from the fused image. Furthermore, the fused image is utilized to integrate empirical color features, low-level features based on the RDWT, and texture characteristics based on the GLCM to form hybrid features [37,45]. The distinction between benign and malignant tumors is made using a DLPNN.

2.1. Hybrid Probabilistic Wiener Filter Method (HPWF)

Using Gaussian mask kernels, the HPWF removes noise from images and enhances them. The HPWF algorithm is given in Table 2. An image is composed of pixels, which are divided into groups. When the HPWF is applied to one of these pixel clusters, the resultant pixel is an improved version of the original pixel. Each image pixel is contaminated by a variety of noise sources. Let Oij denote the original image and Gij the noise term at the pixel in the ith row and jth column; the resulting noisy image is denoted Xij.
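
Under the common assumption of additive noise (an assumption made here for illustration, not a statement of the authors' exact model), the relationship between these three quantities can be written as:

```latex
X_{ij} = O_{ij} + G_{ij}, \qquad 1 \le i \le M,\quad 1 \le j \le N
```

Here M × N is the image size used in the rest of this subsection.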

Table 2.

HPWF Approach.

To estimate the Gaussian noise variance, the Gaussian mask is convolved with the noisy image Xij of size M × N. Eigenvalues are used as a threshold parameter for denoising Gaussian noise in images. Gaussian noise is removed through thresholding in the transform domain rather than the spatial domain. Using spatial coefficients, thresholding is performed to estimate the reliable pixels. The noisy image of size M × N is then mean filtered and stored. By subtracting the noisy image from the mean filtered image, the difference mask (Di,j) is created. This difference mask is then used to keep only the uniformly distributed pixels (V) of the image, based on whether (Di,j) is less or greater than the mean (µ). The denoised image (Yi,j) is created by adjusting the mean filtered image based on the threshold setting.
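
The mean-filter, difference-mask, and threshold steps described above can be sketched as follows. This is a minimal illustration, not the HPWF of Table 2: the function names, the 3 × 3 window, and the use of the mean absolute difference as the threshold µ are all assumptions, and the Gaussian-mask variance estimate and eigenvalue threshold are omitted.

```python
import numpy as np

def mean_filter(img, k=3):
    """k x k mean filter with edge replication (k must be odd)."""
    pad = k // 2
    padded = np.pad(img.astype(float), pad, mode="edge")
    out = np.zeros(img.shape, dtype=float)
    for di in range(k):
        for dj in range(k):
            out += padded[di:di + img.shape[0], dj:dj + img.shape[1]]
    return out / (k * k)

def hpwf_like_denoise(noisy, k=3):
    """Hypothetical reading of the mean-filter / difference-mask steps."""
    noisy = noisy.astype(float)
    smoothed = mean_filter(noisy, k)   # mean-filtered image
    diff = smoothed - noisy            # difference mask D_ij
    mu = np.abs(diff).mean()           # threshold: mean of |D| (assumption)
    # Pixels that deviate little from their neighbourhood are kept as they are;
    # the remaining pixels are replaced by the mean-filtered value.
    return np.where(np.abs(diff) <= mu, noisy, smoothed)
```

Called on a flat image with a single bright outlier, the sketch pulls the outlier toward its neighbourhood mean while leaving distant pixels unchanged.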

2.2. Proposed Fusion Strategy

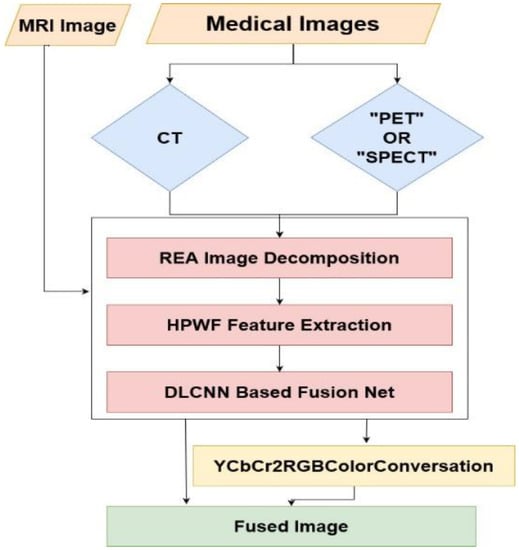

The new technique can combine images from a variety of imaging methods, e.g., MRI-SPECT, MRI-CT, and MRI-PET combinations, by using two distinct structures. The hybrid DLCNN for fusing MRI-CT/PET/SPECT images is shown in Figure 2. To enhance slope analysis in the event of misalignment, image decomposition is performed using the REA technique, which extracts the edges and slopes from the primary input image. Medical images typically have piecewise smooth slopes, with the slope analysis indicating the edges. Once the images are aligned, the edge positions on these images should line up with those of the CT image. This shows that the slope feature is dynamic and changes with the given medical images, a property that has not been examined in the slope analysis literature. Images and signals of this type are referred to as active slope analysis images and signals.

Figure 2.

Hybrid DLCNN for MRI-CT/PET/SPECT image fusion.

Sharp images are produced by subtracting the blurred input images from the HPWF-processed input images. The fused output is then produced by combining the results from the HPWF and REA. The operational procedure of the proposed method is shown in Table 3.

Table 3.

Proposed Fusion algorithm.

2.2.1. Robust Edge Analysis

During the fusion process, contemporaneous registration of the raw medical images is accomplished using an REA approach shown in Table 4. Because misalignment is challenging to correct, accurate registration is necessary when combining MR and CT images. To increase resolution and enable precise image registration, the MR image is first focalized.

Table 4.

REA algorithm.

Similarly, a gradual mismatch reduction enables precise image fusion. These two processes are repeated until convergence is reached. The approach also takes into account the previously ignored intrinsic correlation between bands. A novel active slope methodology has been devised to optimize the total energy value in these bands. Backtracking is employed to extract the slope, while quick REA iterations are used to solve the subproblems efficiently. Unlike conventional variational techniques, the REA has just one non-sensitive parameter. Medical image fusion begins with registration of the input medical images. Large medical images require a trustworthy similarity evaluation that does not increase processing complexity, so REA keeps the data in the spatial domain. A mismatch in the medical images would also degrade the slope analysis. As a result, REA is employed to establish similarity [44]. Simultaneous registration and fusion are formulated in terms of an energy function.

The terms R, down-sampling process, and E stand for the image energy and energy function optimization of the subproblem image, respectively. Because Equation (8) obtains the per-pixel energy cost, a low resolution would cause a significant shift and image blurring [46]. The slope extraction method is employed to prevent this, which effectively avoids iteratively moving an image in the wrong direction. Equation (9) demonstrates that the objective of this method is to optimize energy. To begin with, the problem is solved for R as follows:

Here, the term in Equation (2)’s first part is smooth, however, the second part is not; is an ideal slope parameter.

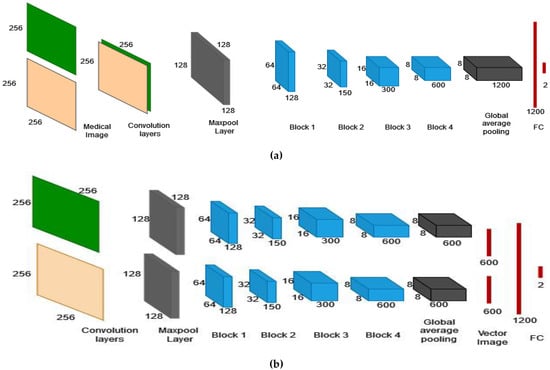

2.2.2. DLCNN-based Fusion Network

Deep learning is widely used in applications for segmenting, classifying, and fusing images. A Fusion-Net architecture based on DLCNN, which fuses several image modalities according to their characteristics, is shown in Figure 3. The proposed Fusion-Net architecture makes use of convolutional layers, max-pooling layers, concatenation, and a two-way SoftMax. Convolution layers are used to extract fine details; their main job is to perform convolution between the kernel-based weights and the input image patch. Convolution layers applied to MR and CT images are shown in Equations (10) and (11), respectively.

Figure 3.

Fusion network based on deep learning convolutional neural networks. (a) Generalised fusion network for medical images fusion, (b) Proposed method of fusion network.

Here, the bias function, which depends on the rectified linear unit (ReLU), is denoted by B1, and W1 is the kernel matrix containing the most recent weight values. Initially, the neural network includes 64 feature maps of size 16 × 16, whereas the convolutional layer kernel is of size 3 × 3. Equation (12) depicts how the ReLU works.

Additionally, the MaxPooling layer receives the information from the convolutional layers as input and precisely extracts each type of feature. The primary objective of the MaxPooling layer is to extract the inter and intra dependencies between MR and CT features. By using both inter and intra dependencies, it is possible to determine how CT affects MRI and vice-versa [46]. Equations (19) and (20) illustrate how the MaxPooling layer operates.

Here, W2 denotes the kernel matrix containing the most recent weight parameters, and B2 denotes a bias function built using a ReLU. The combined MR and CT features produced as MaxPooling layer outputs are denoted F3 and F4, respectively. Additionally, the kernel in the MaxPooling layer in the second stage has 128 feature maps of size 16 × 16.

Here, FR represents the fused feature maps created by the neural network, W3 is the kernel matrix with the most recent weight values, and B3 stands for the bias function based on ReLU.

In Figure 3a, convolution layers are used to analyse the two-channel image and produce feature maps with change information. The final feature vector for these feature maps is derived using global average pooling, which helps to reduce overfitting. The fully connected (FC) layer then outputs the change category. In Figure 3b, each branch of the designed model employs several convolutional layers and global average pooling. The two branch outputs are concatenated and passed to the top network, which is made up of FC layers. The top network acts as a classifier, and the two branches act as two feature extractors.
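
As a rough illustration of the two-branch idea, the sketch below applies a 3 × 3 convolution with ReLU to an MR patch and a CT patch separately and concatenates the resulting feature maps. The function names, the random (untrained) weights, the zero padding, and the zero biases are assumptions made for the example; it is not the trained Fusion-Net, and the pooling and SoftMax stages are left out.

```python
import numpy as np

def conv_relu(patch, kernels, bias):
    """Zero-padded 3x3 convolution (cross-correlation form) followed by ReLU."""
    h, w = patch.shape
    padded = np.pad(patch, 1)  # zero padding keeps the output at h x w
    out = np.empty((len(kernels), h, w))
    for f, kernel in enumerate(kernels):
        for i in range(h):
            for j in range(w):
                out[f, i, j] = np.sum(padded[i:i + 3, j:j + 3] * kernel) + bias[f]
    return np.maximum(out, 0.0)  # ReLU(x) = max(0, x)

def fuse_branches(mr_patch, ct_patch, n_filters=64, seed=0):
    """Run one conv+ReLU branch per modality and concatenate the feature maps."""
    rng = np.random.default_rng(seed)
    w_mr = rng.normal(size=(n_filters, 3, 3))  # placeholder weights (untrained)
    w_ct = rng.normal(size=(n_filters, 3, 3))
    bias = np.zeros(n_filters)
    f_mr = conv_relu(mr_patch, w_mr, bias)
    f_ct = conv_relu(ct_patch, w_ct, bias)
    return np.concatenate([f_mr, f_ct], axis=0)
```

With 16 × 16 input patches and 64 filters per branch, the concatenated output has 128 feature maps of size 16 × 16, which matches the sizes quoted in the text.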

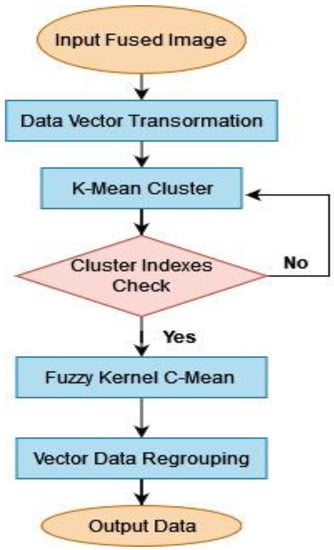

2.3. Proposed Hybrid Fuzzy Segmentation Model

The analysis and segmentation of tumors depend heavily on brain imaging. The intended method for segmenting tumors using HFCMIK is shown in Figure 4. Adaptive cluster index localization, which also introduces similarity matching and the mean characteristic of cluster centers, overcomes the limitations of conventional k-means clustering. Adaptive k-means clustering (AKMC) is used for adaptive segmentation, and the clusters are then refined by the fuzzy kernel c-means (FKCM) clustering approach.

Figure 4.

Proposed hybrid fuzzy segmentation model.

Two stages make up the HFCMIK algorithm. In the first stage, initial centroids are selected using the AKMC approach and remain fixed for the duration of the process, which lowers the number of iterations required to group similar items. By choosing distinct starting centroids, the AKMC algorithm reaches locally optimal results, which in turn lead toward the global optimum solution. In the second stage, the weighted FKCM approach based on Euclidean distance is employed, and the segmentation takes into account the weights related to each feature value range in the data [46]. The proposed algorithm uses a weighted ranking method, which calculates the distance of each data point from the origin to the weighted attribute. Equation (16) calculates the weighted data points.

where the weightage of input is indicated by . Equation (18) shows the format for calculating n numbers of U values for n data points.

The weighted data points (Ui) are subsequently sorted by their distances. The data is then separated into k sets of pixel blocks, where k is the total number of clusters. The center point, or mean value, of each set serves as an initial centroid. These initial centroids promote consistency among cluster members. Because HFCMIK selects group members using distance measurements, the proposed weighted ranking method also selects the initial centroids using the distance formula. The weighted ranking notion is therefore helpful for optimizing the choice of initial centroids. The first phase of the method includes two key components: the selection of distinct initial centroids and the processing of weights based on attribute values. HFCMIK is a clustering method that divides an image into clusters; the proposed hybrid fuzzy segmentation model is shown in Table 5. It re-estimates the segmented output while using centroids to represent each cluster.
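
The weighted ranking initialization described above (weight the attributes, rank points by distance from the origin, split the ranking into k sets, take each set's mean) can be sketched as below. The function names are hypothetical, and the membership function is the standard fuzzy c-means update, used here as a stand-in because the weighted FKCM equations are not reproduced in this text.

```python
import numpy as np

def weighted_rank_centroids(data, weights, k):
    """Initial centroids from a weighted ranking of the data points."""
    data = np.asarray(data, dtype=float)
    weighted = data * np.asarray(weights, dtype=float)  # weighted attributes
    dist = np.linalg.norm(weighted, axis=1)              # distance from the origin
    order = np.argsort(dist)                             # rank points by distance
    groups = np.array_split(order, k)                    # k consecutive sets
    return np.array([data[g].mean(axis=0) for g in groups])

def fcm_memberships(data, centroids, m=2.0, eps=1e-12):
    """Standard fuzzy c-means memberships (plain FCM, not the kernel variant)."""
    data = np.asarray(data, dtype=float)
    d = np.linalg.norm(data[:, None, :] - centroids[None, :, :], axis=2) + eps
    ratio = (d[:, :, None] / d[:, None, :]) ** (2.0 / (m - 1.0))
    return 1.0 / ratio.sum(axis=2)  # each row sums to 1
```

For the one-dimensional points 1, 2, 10, 11 with unit weight and k = 2, the initial centroids are 1.5 and 10.5, so each starts inside one of the two natural groups.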

Table 5.

Proposed hybrid fuzzy segmentation model.

2.4. Proposed Hybrid Feature Extraction

The different lesions can be categorized using a number of features that can be extracted from the brain tumor. Several crucial traits that support the differentiation of brain tumors have been extracted, such as low-level features based on RDWT, texture characteristics based on GLCM, and color features. GLCM is a texture analysis method that takes into account the spatial relationship between the pixels representing the brain tumor. It characterizes the texture of the tumor by counting how frequently pairs of gray-level values co-occur in the brain tumor region. Once the GLCM matrix has been created, statistical texture features can be derived from it. Each entry is a probability measure describing how likely a particular grey level is to be present near another grey level [46].
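
A small NumPy sketch of a co-occurrence matrix and a few statistics derived from it is given below. The choice of offset, the symmetric normalization, and the particular statistics (energy, contrast, homogeneity, entropy) are assumptions for illustration; the text does not list which GLCM features the authors used.

```python
import numpy as np

def glcm(img, levels, dx=1, dy=0):
    """Normalized, symmetric co-occurrence matrix for a non-negative offset (dy, dx)."""
    img = np.asarray(img)
    h, w = img.shape
    first = img[0:h - dy, 0:w - dx]    # reference pixels
    second = img[dy:h, dx:w]           # neighbours at the given offset
    counts = np.zeros((levels, levels), dtype=float)
    np.add.at(counts, (first.ravel(), second.ravel()), 1.0)
    counts = counts + counts.T          # count each pair in both directions
    return counts / counts.sum()

def glcm_features(p):
    """A few common texture statistics of a normalized GLCM."""
    i, j = np.indices(p.shape)
    nonzero = p[p > 0]
    return {
        "energy": float(np.sum(p ** 2)),
        "contrast": float(np.sum(p * (i - j) ** 2)),
        "homogeneity": float(np.sum(p / (1.0 + np.abs(i - j)))),
        "entropy": float(-np.sum(nonzero * np.log2(nonzero))),
    }
```

The input is expected to hold integer gray levels in the range 0 to `levels - 1`; an 8-bit image would first be quantized to the chosen number of levels.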

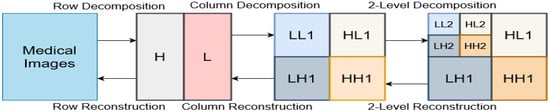

Following that, a two-level RDWT is used to extract the low-level features.

When RDWT is first applied to the segmented result, it yields the LL1, LH1, HL1, and HH1 bands. The entropy, energy, and correlation parameters are calculated from the LL1 band. Applying RDWT once more to the LL1 output band yields the LL2, LH2, HL2, and HH2 bands. As illustrated in Figure 5, the LL2 band is again used to evaluate the entropy, energy, and correlation characteristics.
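
The two-level decomposition can be sketched with an undecimated Haar filter pair, so that every band keeps the size of the input. The Haar wavelet, the circular boundary handling, the function names, and the reading of "correlation" as the correlation between a band and a reference image are assumptions; the text does not specify these details.

```python
import numpy as np

def rdwt_level(x, step):
    """One undecimated Haar level; `step` is the spacing between the two filter taps."""
    x = np.asarray(x, dtype=float)
    lo = (x + np.roll(x, -step, axis=1)) / 2.0    # low-pass along each row
    hi = (x - np.roll(x, -step, axis=1)) / 2.0    # high-pass along each row
    ll = (lo + np.roll(lo, -step, axis=0)) / 2.0  # then filter along each column
    lh = (lo - np.roll(lo, -step, axis=0)) / 2.0
    hl = (hi + np.roll(hi, -step, axis=0)) / 2.0
    hh = (hi - np.roll(hi, -step, axis=0)) / 2.0
    return ll, lh, hl, hh

def two_level_rdwt(img):
    """Apply the transform to the image, then again to the level-1 LL band."""
    level1 = rdwt_level(img, 1)
    level2 = rdwt_level(level1[0], 2)  # taps are spread apart instead of downsampling
    return {"level1": level1, "level2": level2}

def band_features(band, reference, bins=256):
    """Energy, histogram entropy, and correlation with a reference image."""
    hist, _ = np.histogram(band, bins=bins)
    p = hist[hist > 0] / hist.sum()
    return {
        "energy": float(np.mean(band ** 2)),
        "entropy": float(-np.sum(p * np.log2(p))),
        "correlation": float(np.corrcoef(band.ravel(), np.ravel(reference))[0, 1]),
    }
```

Because nothing is downsampled, the four bands of each level sum back to that level's input, which gives a quick sanity check on the decomposition.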

Figure 5.

Two-level RDWT correlation.

The segmented image is then used to obtain statistical color data based on the mean and standard deviation.

Following that, all of these features are integrated using array concatenation, producing a hybrid feature matrix as the output.
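
The concatenation step can be illustrated as follows. The function names are hypothetical, and the texture and wavelet vectors are passed in as plain arrays so that the example stays self-contained; per-channel mean and standard deviation stand in for the color statistics.

```python
import numpy as np

def color_stats(rgb):
    """Per-channel mean and standard deviation of an H x W x C image."""
    rgb = np.asarray(rgb, dtype=float)
    pixels = rgb.reshape(-1, rgb.shape[-1])
    return np.concatenate([pixels.mean(axis=0), pixels.std(axis=0)])

def hybrid_features(texture_vec, wavelet_vec, rgb):
    """Concatenate texture, wavelet, and color statistics into one feature row."""
    return np.concatenate([
        np.asarray(texture_vec, dtype=float),
        np.asarray(wavelet_vec, dtype=float),
        color_stats(rgb),
    ])
```

Stacking one such row per image gives the hybrid feature matrix that is passed to the classifier.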

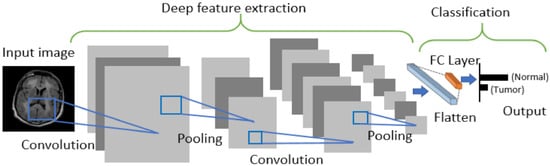

2.5. Proposed DLPNN Classification

Deep learning models are playing an increasingly significant role in feature extraction and classification. Deep learning-based probabilistic neural network (DLPNN) models may be utilized to extract highly correlated, detailed features from the segmented images. The model identifies links between the various segments of the segmented image, and these links are then extracted as features. Finally, the DLPNN models trained on these features perform the classification task. Using distinguishing features from upper-level inputs, DLPNN derives more complex features at earlier stages. The DLPNN method for feature extraction and classification is shown in Figure 6. The configuration of each layer (size, kernel size, and number of filters) and the training settings are as follows: 369 training samples, batch size 90, 300 epochs, 25,000 iterations, and learning rate 0.02; two Conv2D layers with ReLU activation and frame sizes Layer1 {62, 3 × 3, 32} and Layer2 {29, 3 × 3, 64}; two MaxPooling2D layers with frame sizes Layer1 {31, 2 × 2, 32} and Layer2 {14, 2 × 2, 64}; one flatten layer with frame size {1 × 12,544}; and two dense layers with ReLU activation and frame sizes Layer1 {1 × 128} and Layer2 {1 × 21}.
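
The listed frame sizes can be checked with simple shape arithmetic. The 64 × 64 input size used below is an inference from the numbers (it is not stated in the text), as are the unpadded convolutions with stride 1 and the non-overlapping pooling windows.

```python
def conv_out(size, kernel):
    """Output side length of an unpadded convolution with stride 1."""
    return size - kernel + 1

def pool_out(size, window):
    """Output side length of non-overlapping pooling."""
    return size // window

input_side = 64                  # assumed input size (inferred, not stated)
conv1 = conv_out(input_side, 3)  # 3 x 3 kernels, 32 filters
pool1 = pool_out(conv1, 2)       # 2 x 2 pooling
conv2 = conv_out(pool1, 3)       # 3 x 3 kernels, 64 filters
pool2 = pool_out(conv2, 2)       # 2 x 2 pooling
flat = pool2 * pool2 * 64        # flatten over 64 feature maps
print(conv1, pool1, conv2, pool2, flat)
```

Under these assumptions the side lengths come out as 62, 31, 29, and 14, and the flattened vector has 12,544 entries, in agreement with the frame sizes given above.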

Figure 6.

Proposed DLPNN classifier.

The DLPNN model, which performs the joint feature extraction and classification of brain tumors, is constructed by integrating all of the layers.

3. Results and Discussion

This section presents the extensive simulations of the Fusion-Net deep learning model used for image fusion, which were performed in the MATLAB R2019a simulation environment. The simulations use the datasets described below, and both objective and statistical performance are evaluated.

3.1. Data Set

The BraTS 2020 dataset (https://www.kaggle.com/datasets/awsaf49/brats20-dataset-training-validation (accessed on 1 July 2022)) and the CBICA Image Processing Portal (https://ipp.cbica.upenn.edu/ (accessed on 1 July 2022)) are used to study the efficiency of the designed model. The multimodal brain MR studies comprise 369 training, 125 validation, and 169 test cases, each with T1-weighted (T1), T1ce-weighted (T1ce), T2-weighted (T2), and FLAIR sequences. All MR images are 240 × 240 × 155 voxels. Each study was evaluated for enhancing tumor (ET), peritumoral edema (ED), and necrotic and non-enhancing tumor core (NET). The training sets are annotated, but the validation and test sets are not; the latter are intended for online evaluation and the challenge ranking.

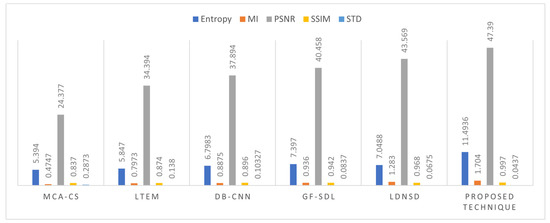

3.2. Fusion Process’s Performance Measurement

MR-CT Fusion approach: the effectiveness of the proposed method for integrating MR and CT medical images is shown both objectively and subjectively. Additionally, its performance is compared with that of other standard techniques in Table 6 and Table 7. Entropy, standard deviation (STD), structural similarity index metric (SSIM), peak signal-to-noise ratio (PSNR), and mutual information (MI) metrics are used to compare performance.
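
For reference, three of these metrics can be computed with NumPy as sketched below, using their textbook definitions for 8-bit images. The function names and the 256-bin histograms are assumptions, and the exact settings behind Tables 6 and 7 are not given in the text. STD is simply `np.std(img)`, and SSIM is omitted because it needs a windowed implementation.

```python
import numpy as np

def entropy(img, bins=256):
    """Shannon entropy (bits) of the gray-level histogram."""
    hist, _ = np.histogram(img, bins=bins, range=(0, 256))
    p = hist[hist > 0] / hist.sum()
    return float(-np.sum(p * np.log2(p)))

def psnr(reference, fused, peak=255.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    diff = np.asarray(reference, dtype=float) - np.asarray(fused, dtype=float)
    mse = np.mean(diff ** 2)
    return float("inf") if mse == 0 else float(10.0 * np.log10(peak ** 2 / mse))

def mutual_information(a, b, bins=256):
    """Mutual information (bits) estimated from the joint gray-level histogram."""
    joint, _, _ = np.histogram2d(np.ravel(a), np.ravel(b), bins=bins,
                                 range=[[0, 256], [0, 256]])
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)   # marginal of a
    py = pxy.sum(axis=0, keepdims=True)   # marginal of b
    nz = pxy > 0
    return float(np.sum(pxy[nz] * np.log2(pxy[nz] / (px @ py)[nz])))
```

In a fusion setting, mutual information is typically computed between the fused image and each source image, and PSNR between the fused image and a chosen reference.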

Table 6.

MR-CT image fusion approaches performance analysis.

Table 7.

MR-PET image fusion approaches performance analysis.

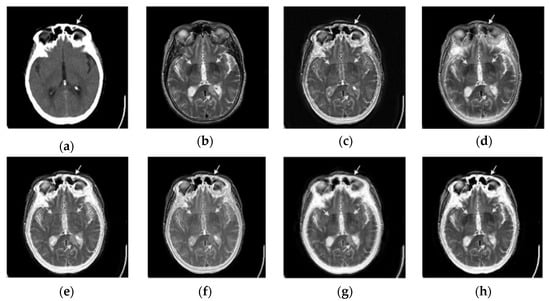

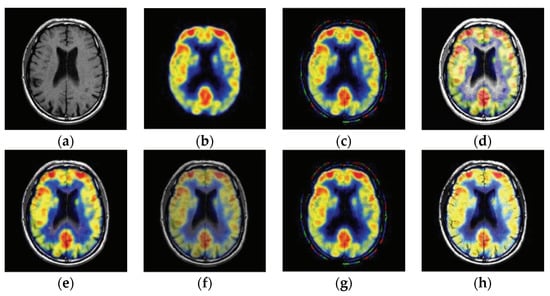

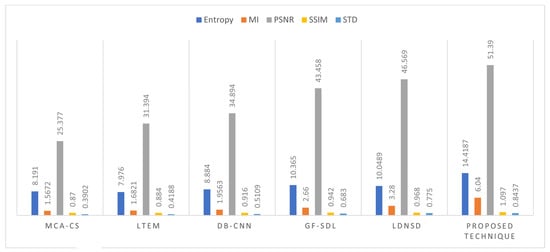

Figure 7a,b show the source CT and MR images, and Figure 7c–h show the results of various fusion techniques. Figure 7 demonstrates that the Fusion-Net performed better than more traditional methods, which struggle to produce good visual outcomes in this situation due to issues with brightness and saturation. Table 6 shows that the proposed approach produced better quantitative results than the conventional approaches for all quality measures, as also shown in Figure 8.

Figure 7.

Simulation outcomes of MR-CT image fusion (a) CT input, (b) MR input, (c) MCA-CS [14], (d) LTEM [15], (e) DB-CNN [17], (f) GF-SDL [18], (g) LDNSD [19], (h) Proposed fused outcome.

Figure 8.

Percentage-based visual display of MR-CT image fusion methods (MCA-CS [14], LTEM [15], DB-CNN [17], GF-SDL [18], LDNSD [19]).

MR-PET Fusion approach: the efficiency of the designed approach for fusing MR and PET medical images is shown in this section, both subjectively and objectively. Additionally, the performance of many standard techniques was compared, including MCA-CS [14], LTEM [15], DB-CNN [17], GF-SDL [18], and LDNSD [19], respectively.

Figure 9a,b show the original MR and PET images, and Figure 9c–h show the results of various fusion techniques. Figure 9 demonstrates that when compared to traditional methods the proposed fused output produced the best subjective performance. The various sorts of noise in this situation prevent the standard procedures from producing better visual outcomes. Table 7 shows that for all of the quality measures for color PET images, the proposed approach produced better objective analysis when compared to conventional approaches. MR-PET image fusion techniques for all metrics are shown in Figure 10.

Figure 9.

Results of MR-PET image fusion simulation (a) MR input, (b) PET input (c) MCA-CS [14], (d) LTEM [15], (e) DB-CNN [17], (f) GF-SDL [18], (g) LDNSD [19], (h) designed fused results.

Figure 10.

Graphical representation of MR-PET image fusion approaches in percentage (MCA-CS [14], LTEM [15], DB-CNN [17], GF-SDL [18], LDNSD [19]).

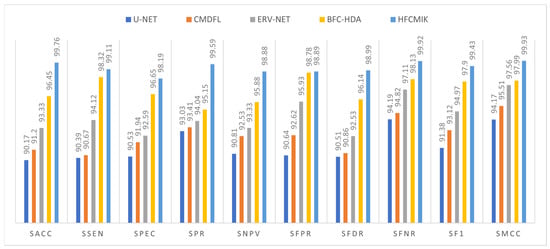

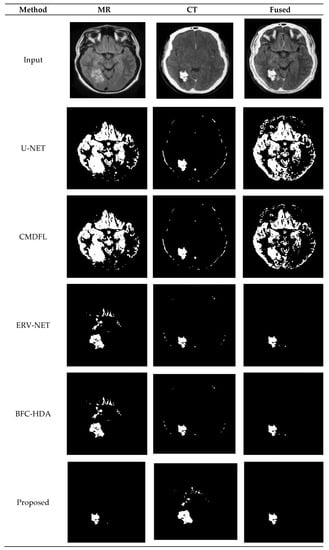

3.3. Proposed Segmentation Method

Figure 11 illustrates how the suggested HFCMIK segmentation strategy improved the localization of the tumor tissue in MR, CT, and fused images compared with current methodologies. All of the traditional methods localize the tumor region incorrectly. The performance of the different techniques is evaluated and compared with that of the proposed method in Table 8. The suggested HFCMIK technique produced higher objective performance, as seen in Table 8. Figure 12 displays a graphic depiction of Table 8.
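
Two measures commonly used to score a binary tumor mask against a reference mask are sketched below. Which measures Table 8 actually reports is not stated in the text, so the choice of pixel accuracy and the Dice coefficient, along with the function names, is an assumption.

```python
import numpy as np

def pixel_accuracy(pred, truth):
    """Fraction of pixels on which the two binary masks agree."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    return float(np.mean(pred == truth))

def dice(pred, truth):
    """Dice coefficient 2|A ∩ B| / (|A| + |B|); defined as 1 for two empty masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    total = pred.sum() + truth.sum()
    if total == 0:
        return 1.0
    return float(2.0 * np.logical_and(pred, truth).sum() / total)
```

Pixel accuracy can look high when the tumor occupies a small part of the image, which is why an overlap measure such as Dice is often reported alongside it.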

Figure 11.

Graphical representation of segmentation performance comparison in percentage (U-NET [20], CMDFL [22], ERV-NET [23], BFC-HDA [27]).

Table 8.

Performance evaluation of various methodologies for segmentation in percentage.

Figure 12.

Segmentation performance comparison of MR, CT, and Fused images (U-NET [20], CMDFL [22], ERV-NET [23], BFC-HDA [27]).

3.4. Proposed Classification Methodology

The designed DLPNN classification strategy is compared with existing techniques. Several evaluation metrics are used in this article to compare the performance of the various methods. Table 9 compares the classification performance of the proposed DLPNN approach with that of cutting-edge approaches and shows that the designed DLPNN method outperformed the state-of-the-art techniques. Figure 13 shows a graphical representation of Table 9.
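
For a benign-versus-malignant classifier, the usual evaluation metrics follow directly from the confusion-matrix counts, as in the sketch below. The specific metrics in Table 9 are not named in the text, so this selection and the function name are assumptions; the counts in the usage note are made-up illustration values, not results from the study.

```python
def classification_metrics(tp, tn, fp, fn):
    """Standard binary metrics from confusion-matrix counts (all denominators assumed non-zero)."""
    sensitivity = tp / (tp + fn)   # recall on the positive (e.g., malignant) class
    specificity = tn / (tn + fp)   # recall on the negative (e.g., benign) class
    precision = tp / (tp + fp)
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
    }
```

For example, `classification_metrics(tp=8, tn=9, fp=1, fn=2)` gives an accuracy of 0.85, a sensitivity of 0.8, and a specificity of 0.9.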

Table 9.

Comparison of different methods’ classification performance in percentage.

Figure 13.

Graphical representation of classification performance in percentage (TL-CNN [33], TL-CNN [38], FTTL [31], GAN-VE [36], VGG19 + GRU [46]).

4. Discussion

Traditional models incur considerable computational complexity as dataset sizes increase. The efficiency of traditional techniques for fusion, segmentation, and classification also needs to improve. In the related work, various deep learning models are designed with a high level of complexity. Therefore, the present study proposes specific models with a distinctive selection of filters, filter widths, stride factors, and layers. Each of these arrangements results in a distinct framework within the proposed fusion classification model.

Additionally, there is at present no standard approach for feature extraction, segmentation, fusion, or classification, and none of these hybrid method combinations are documented in the literature. As a result, a unique BTFSC-Net model including steps for pre-processing, image fusion, image segmentation, and image classification has been designed in this research. Regarding the limitations of the proposed method, the small size of medical datasets is a constraint; it could be partially or fully overcome by using both MRI and CT images, which would also help deep learning models produce acceptable results.

5. Conclusions

This study constructed a segmentation and classification model together with a hybrid fusion scheme. The model helps radiologists and medical professionals locate brain tumors more accurately and is effective for computer-assisted brain tumor categorization. The HPWF filtering method was first used to preprocess the original images and remove noise. A Fusion-Net based on DLCNN was then used to combine the two source images from different modalities. The location of the brain tumor was determined using enhanced segmentation based on HFCMIK. Hybrid features were then extracted from the segmented image using GLCM and RDWT techniques. The trained features were utilized to classify benign and malignant tumors using DLPNN. The simulation results showed that the proposed fusion, segmentation, and classification approaches achieved improved performance. The results also indicated that the suggested strategy can be used in real-time applications to classify brain tumors. Compared with the findings of previous studies, the outcomes of the present study demonstrated the potential capability of the proposed framework. The designed approach may be utilized to produce medical images for a variety of medical conditions in addition to brain tumors. Additionally, this study can be expanded to build a categorization system for brain tumors using bio-optimization techniques.

Author Contributions

Conceptualization, A.S.Y., S.K., S.I. and R.P.; methodology, V.K., S.S., R.G. and S.I.; software, J.C.C.-A., J.L.A.-G. and V.K.; investigation, J.C.C.-A., J.L.A.-G., B.S. and N.S.T.; resources, S.I., R.P. and N.N.; data curation, A.S.Y., S.K., G.R.K. and R.G.; writing—original draft preparation, V.K., S.S., R.G. and S.I.; writing—review and editing, R.P. and N.N.; visualization, A.S.Y., S.K., G.R.K. and J.C.C.-A.; project administration, N.N., B.S. and N.S.T. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The present study uses the Brain Tumor Segmentation (BraTS2020) dataset. Link: https://www.kaggle.com/datasets/awsaf49/brats20-dataset-training-validation (accessed on 1 July 2022).

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

| Abbreviations | Specification | Abbreviations | Specification |
| --- | --- | --- | --- |
| BTFSC-Net | Brain Tumor Fusion-Based Segments and Classification–Non-Enhancing Tumor | FTTL | Fine-Tuning-Based Transfer Learning |
| HPWF | Hybrid Probabilistic Wiener Filter | GAN-VE | Generative Adversarial Networks Based on Variational Autoencoders |
| REA | Robust Edge Analysis | HDNN | Hybrid Deep Neural Network |
| DLCNN | Deep Learning Convolutional Neural Networks | AKMC | Adaptive k-means Clustering |
| HFCMIK | Hybrid Fuzzy C-Means Integrated K-Means | FKCM | Fuzzy Kernel C Means |
| RDWT | Redundant Discrete Wavelet Transform | MRI-MRA | Magnetic Resonance Angiography |
| GLCM | Gray-Level Cooccurrence Matrix | SACC | Segmentation Accuracy |
| DLPNN | Deep Learning Probabilistic Neural Network | SSEN | Sensitivity |
| MIF | Medical Image Fusion | SPEC | Specificity |
| MRI | Magnetic Resonance Imaging | SPR | Precision |
| CT | Computed Tomography | SNPV | Negative Predictive Value |
| PSO | Particle Swarm Optimization | SPVR | Segmentation False Positive Rate |
| MSD | Multi-Scale Decomposition | SFDR | Segmentation False Discovery Rate |
| MMIF | Multimodal Medical Images Fusion | SFNR | Segmentation False Negative Rate |
| MRI-SPECT | Single Photon Emission Computed Tomography | SF1 | Segmentation F1-Score |
| MRI-PET | Positron Emission Tomography | SMCC | Segmentation Matthew’s Correlation Coefficient |
| NSST | Nonsubsampled Shearlet Transform Domain | CACC | Classification Accuracy |
| MCA-CS | Morphological Component Analysis-Based Convolutional Sparsity | CSEN | Sensitivity |
| LTEM | Laws of Texture Energy Measures | CPEC | Specificity |
| GFSR | Graph Filter and Sparse Representation | CPR | Precision |
| PCA | Principal Component Analysis | CNPV | Negative Predictive Value |
| NSCT | Non-Subsampled Contourlet Transform | CPVR | False Positive Rate |
| CNP | Coupled Neural P | CFDR | False Discovery Rate |
| FLS-CNN | Fast Level Set-Based CNN | CFNR | False Negative Rate |
| BFC-HDA | Bayesian Fuzzy Clustering With Hybrid Deep Autoencoder | CF1 | Classification F1-Score |
| SDAN | Symmetric-Driven Adversarial Network | CMCC | Classification Matthew’s Correlation Coefficient |
| DLSDA | Deep Learning With Synthetic Data Augmentation | | |

References

- Rao, C.S.; Karunakara, K. A comprehensive review on brain tumor segmentation and classification of MRI images. Multimed. Tools Appl. 2021, 80, 17611–17643.

- Rasool, M.; Ismail, N.A.; Boulila, W.; Ammar, A.; Samma, H.; Yafooz, W.; Emara, A.H.M. A Hybrid Deep Learning Model for Brain Tumor Classification. Entropy 2022, 24, 799.

- Maqsood, S.; Javed, U. Multi-modal medical image fusion based on two-scale image decomposition and sparse representation. Biomed. Signal Process. Control 2020, 57, 01810.

- Dian, R.; Li, S.; Fang, L.; Lu, T.; Bioucas-Dias, J.M. Nonlocal sparse tensor factorization for semiblind hyperspectral and multispectral image fusion. IEEE Trans. Cybern. 2019, 50, 4469–4480.

- Jose, J.; Gautam, N.; Tiwari, M.; Tiwari, T.; Suresh, A.; Sundararaj, V.; Rejeesh, M.R. An image quality enhancement scheme employing adolescent identity search algorithm in the NSST domain for multimodal medical image fusion. Biomed. Signal Process. Control 2021, 66, 102480.

- Daniel, E. Optimum wavelet-based homomorphic medical image fusion using hybrid genetic–grey wolf optimization algorithm. IEEE Sens. J. 2018, 18, 6804–6811.

- Shehanaz, S.; Daniel, E.; Guntur, S.R.; Satrasupalli, S. Optimum weighted multimodal medical image fusion using particle swarm optimization. Optik 2021, 231, 1–12.

- Padmavathi, K.; Asha, C.S.; Maya, V.K. A novel medical image fusion by combining TV-L1 decomposed textures based on adaptive weighting scheme. Eng. Sci. Technol. Int. J. 2020, 23, 225–239.

- Tirupal, T.; Mohan, B.C.; Kumar, S.S. Multimodal medical image fusion techniques—A review. Curr. Signal Transduct. Ther. 2021, 16, 142–163.

- Li, B.; Luo, X.; Wang, J.; Song, X.; Pérez-Jiménez, M.J.; Riscos-Núñez, A. Medical image fusion method based on coupled neural p systems in nonsubsampled shearlet transform domain. Int. J. Neural Syst. 2020, 31, 2050050.

- Zhu, Z.; Zheng, M.; Qi, G.; Wang, D.; Xiang, Y. A phase congruency and local Laplacian energy based multi-modality medical image fusion method in NSCT domain. IEEE Access 2019, 7, 20811–20824.

- Yin, M.; Li, X.; Liu, Y.; Chen, X. Medical image fusion with parameter-adaptive pulse coupled neural network in nonsubsampled shearlet transform domain. IEEE Trans. Instrum. Meas. 2019, 68, 49–64.

- Dutta, S.; Banerjee, A. Highly precise modified blue whale method framed by blending bat and local search algorithm for the optimality of image fusion algorithm. J. Soft Comput. Paradig. 2020, 2, 195–208.

- Liu, Y.; Chen, X.; Ward, R.K.; Wang, Z.J. Medical image fusion via convolutional sparsity based morphological component analysis. IEEE Signal Process. Lett. 2019, 26, 485–489.

- Padma, G.; Prasad, A.D. Medical image fusion based on laws of texture energy measures in stationary wavelet transform domain. Int. J. Imaging Syst. Technol. 2020, 30, 544–557.

- Li, Q.; Wang, W.; Chen, G.; Zhao, D. Medical image fusion using segment graph filter and sparse representation. Comput. Biol. Med. 2021, 131, 104239.

- Ding, Z.; Zhou, D.; Nie, R.; Hou, R.; Liu, Y. Brain medical image fusion based on dual-branch CNNs in NSST domain. BioMed Res. Int. 2020, 2020, 1–15.

- Hu, Q.; Hu, S.; Zhang, F. Multi-modality medical image fusion based on separable dictionary learning and Gabor filtering. Signal Process. Image Commun. 2020, 83, 115758.

- Kong, W.; Miao, Q.; Lei, Y. Multimodal sensor medical image fusion based on local difference in non-subsampled domain. IEEE Trans. Instrum. Meas. 2019, 68, 938–951.

- Li, X.; Guo, X.; Han, P.; Wang, X.; Li, H.; Luo, T. Laplacian redecomposition for multimodal medical image fusion. IEEE Trans. Instrum. Meas. 2020, 69, 6880–6890.

- Li, Y.; Zhao, J.; Lv, Z.; Li, J. Medical image fusion method by deep learning. Int. J. Cogn. Comput. Eng. 2021, 2, 21–29.

- Faragallah, O.S.; El-Hoseny, H.; El-Shafai, W.; Abd El-Rahman, W.; El-Sayed, H.S.; El-Rabaie, E.S.M.; Abd El-Samie, F.E.; Geweid, G.G. A comprehensive survey analysis for present solutions of medical image fusion and future directions. IEEE Access 2020, 9, 11358–11371.

- Azam, M.A.; Khan, K.B.; Salahuddin, S.; Rehman, E.; Khan, S.A.; Khan, M.A.; Kadry, S.; Gandomi, A.H. A review on multimodal medical image fusion: Compendious analysis of medical modalities, multimodal databases, fusion techniques and quality metrics. Comput. Biol. Med. 2022, 144, 105253.

- Wang, K.; Zheng, M.; Wei, H.; Qi, G.; Li, Y. Multi-modality medical image fusion using convolutional neural network and contrast pyramid. Sensors 2020, 20, 2169.

- Kaur, M.; Singh, D. Multi-modality medical image fusion technique using multi-objective differential evolution based deep neural networks. J. Ambient. Intell. Humaniz. Comput. 2021, 12, 2483–2493.

- Zhao, C.; Wang, T.; Lei, B. Medical image fusion method based on dense block and deep convolutional generative adversarial network. Neural Comput. Appl. 2021, 33, 6595–6610.

- Raja, P.M.S.; Rani, A.V. Brain tumor classification using a hybrid deep autoencoder with Bayesian fuzzy clustering-based segmentation approach. Biocybern. Biomed. Eng. 2020, 40, 440–453.

- Wu, X.; Bi, L.; Fulham, M.; Feng, D.D.; Zhou, L.; Kim, J. Unsupervised brain tumor segmentation using a symmetric-driven adversarial network. Neurocomputing 2021, 455, 242–254.

- Díaz-Pernas, F.J.; Martínez-Zarzuela, M.; Antón-Rodríguez, M.; González-Ortega, D. A deep learning approach for brain tumor classification and segmentation using a multiscale convolutional neural network. Healthc. Multidiscip. Digit. Publ. Inst. 2021, 9, 153.

- Khan, A.; RKhan, S.; Harouni, M.; Abbasi, R.; Iqbal, S.; Mehmood, Z. Brain tumor segmentation using K-means clustering and deep learning with synthetic data augmentation for classification. Microsc. Res. Tech. 2021, 84, 1389–1399.

- Swati, Z.N.K.; Zhao, Q.; Kabir, M.; Ali, F.; Ali, Z.; Ahmed, S.; Lu, J. Brain tumor classification for MR images using transfer learning and fine-tuning. Comput. Med. Imaging Graph. 2019, 75, 34–46.

- Gumaei, A.; Hassan, M.M.; Hassan, M.R.; Alelaiwi, A.; Fortino, G. A hybrid feature extraction method with regularized extreme learning machine for brain tumor classification. IEEE Access 2019, 7, 36266–36273.

- Deepak, S.; Ameer, P.M. Brain tumor classification using deep CNN features via transfer learning. Comput. Biol. Med. 2019, 111, 103345.

- Polat, Ö.; Cahfer, G. Classification of brain tumors from MR images using deep transfer learning. J. Supercomput. 2021, 77, 7236–7252.

- Naik, N.; Rallapalli, Y.; Krishna, M.; Vellara, A.S.; KShetty, D.; Patil, V.; Hameed, B.Z.; Paul, R.; Prabhu, N.; Rai, B.P.; et al. Demystifying the Advancements of Big Data Analytics in Medical Diagnosis: An Overview. Eng. Sci. 2021, 19, 42–58.

- Sharma, D.; Kudva, V.; Patil, V.; Kudva, A.; Bhat, R.S. A Convolutional Neural Network Based Deep Learning Algorithm for Identification of Oral Precancerous and Cancerous Lesion and Differentiation from Normal Mucosa: A Retrospective Study. Eng. Sci. 2022, 18, 278–287.

- Maqsood, S.; Damaševičius, R.; Maskeliūnas, R. Multi-modal brain tumor detection using deep neural network and multiclass SVM. Medicina 2022, 58, 1090.

- Abd El Kader, I.; Xu, G.; Shuai, Z.; Saminu, S.; Javaid, I.; Ahmad, I.S. Differential deep convolutional neural network model for brain tumor classification. Brain Sci. 2021, 11, 352.

- Mzoughi, H.; Njeh, I.; Wali, A.; Slima, M.B.; BenHamida, A.; Mhiri, C.; Mahfoudhe, K.B. Deep multi-scale 3D convolutional neural network (CNN) for MRI gliomas brain tumor classification. J. Digit. Imaging 2020, 33, 903–915.

- Ahmad, B.; Sun, J.; You, Q.; Palade, V.; Mao, Z. Brain Tumor Classification Using a Combination of Variational Autoencoders and Generative Adversarial Networks. Biomedicines 2022, 10, 223.

- Sasank, V.V.S.; Venkateswarlu, S. Hybrid deep neural network with adaptive rain optimizer algorithm for multi-grade brain tumor classification of MRI images. Multimed. Tools Appl. 2022, 81, 8021–8057.

- Modi, A.; Kishore, B.; Shetty, D.K.; Sharma, V.P.; Ibrahim, S.; Hunain, R.; Usman, N.; Nayak, S.G.; Kumar, S.; Paul, R. Role of Artificial Intelligence in Detecting Colonic Polyps during Intestinal Endoscopy. Eng. Sci. 2022, 20, 25–33.

- Devnath, L.; Summons, P.; Luo, S.; Wang, D.; Shaukat, K.; Hameed, I.A.; Aljuaid, H. Computer-Aided Diagnosis of Coal Workers’ Pneumoconiosis in Chest X-ray Radiographs Using Machine Learning: A Systematic Literature Review. Int. J. Environ. Res. Public Health 2022, 19, 6439.

- Armi, L.; Fekri-Ershad, S. Texture image analysis and texture classification methods-A review. Int. Online J. Image Process. Pattern Recognit. 2019, 2, 1–29.

- Khan, M.A.; Ashraf, I.; Alhaisoni, M.; Damaševičius, R.; Scherer, R.; Rehman, A.; Bukhari, S.A.C. Multimodal Brain Tumor Classification Using Deep Learning and Robust Feature Selection: A Machine Learning Application for Radiologists. Diagnostics 2020, 10, 565.

- Gab Allah, A.M.; Sarhan, A.M.; Elshennawy, N.M. Classification of Brain MRI Tumor Images Based on Deep Learning PGGAN Augmentation. Diagnostics 2021, 11, 2343.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).