A Structured and Methodological Review on Vision-Based Hand Gesture Recognition System

Abstract

1. Introduction

1.1. Background

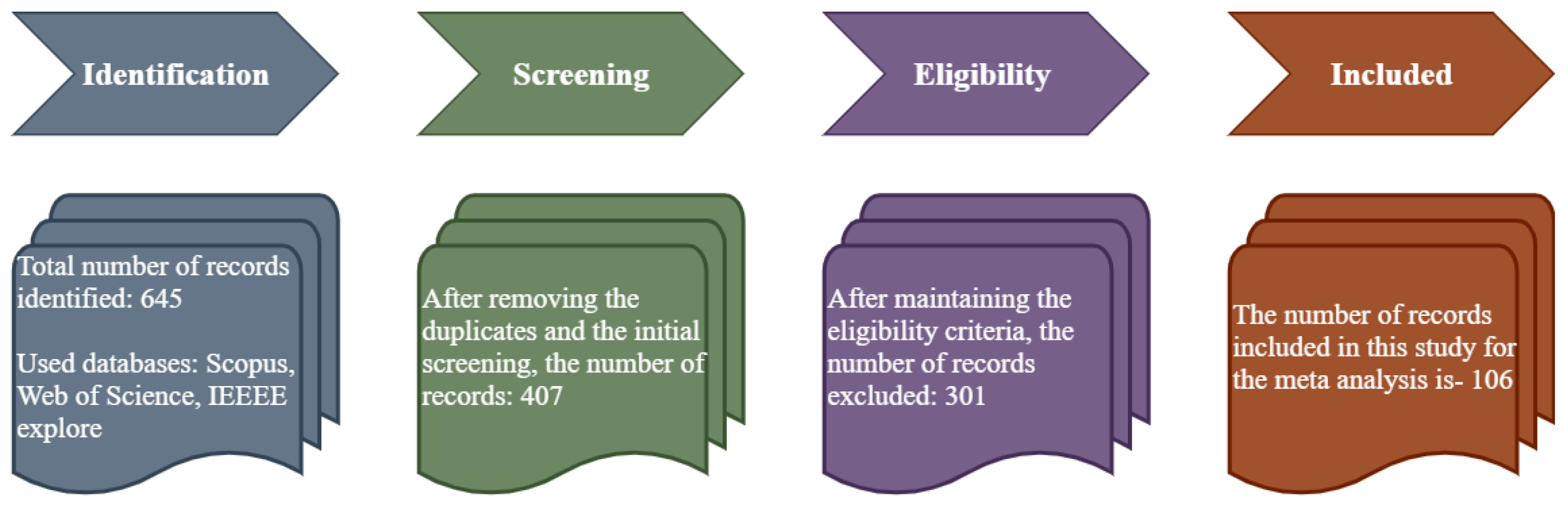

1.2. Survey Methodology

- Search terms: science, technology, or computer science. Publication types: journals, proceedings, and transactions.

- Article type: in-depth analyses and commentaries.

- Topic: vision-based hand gesture recognition systems that can recognize a variety of hand motions.

- Language: English.

1.3. Research Gaps and New Research Challenges

1.4. Contribution

1.5. Research Questions

- What are the main difficulties faced in gesture recognition?

- What are some challenges faced with gesture recognition?

- What are the major algorithms involved in gesture recognition?

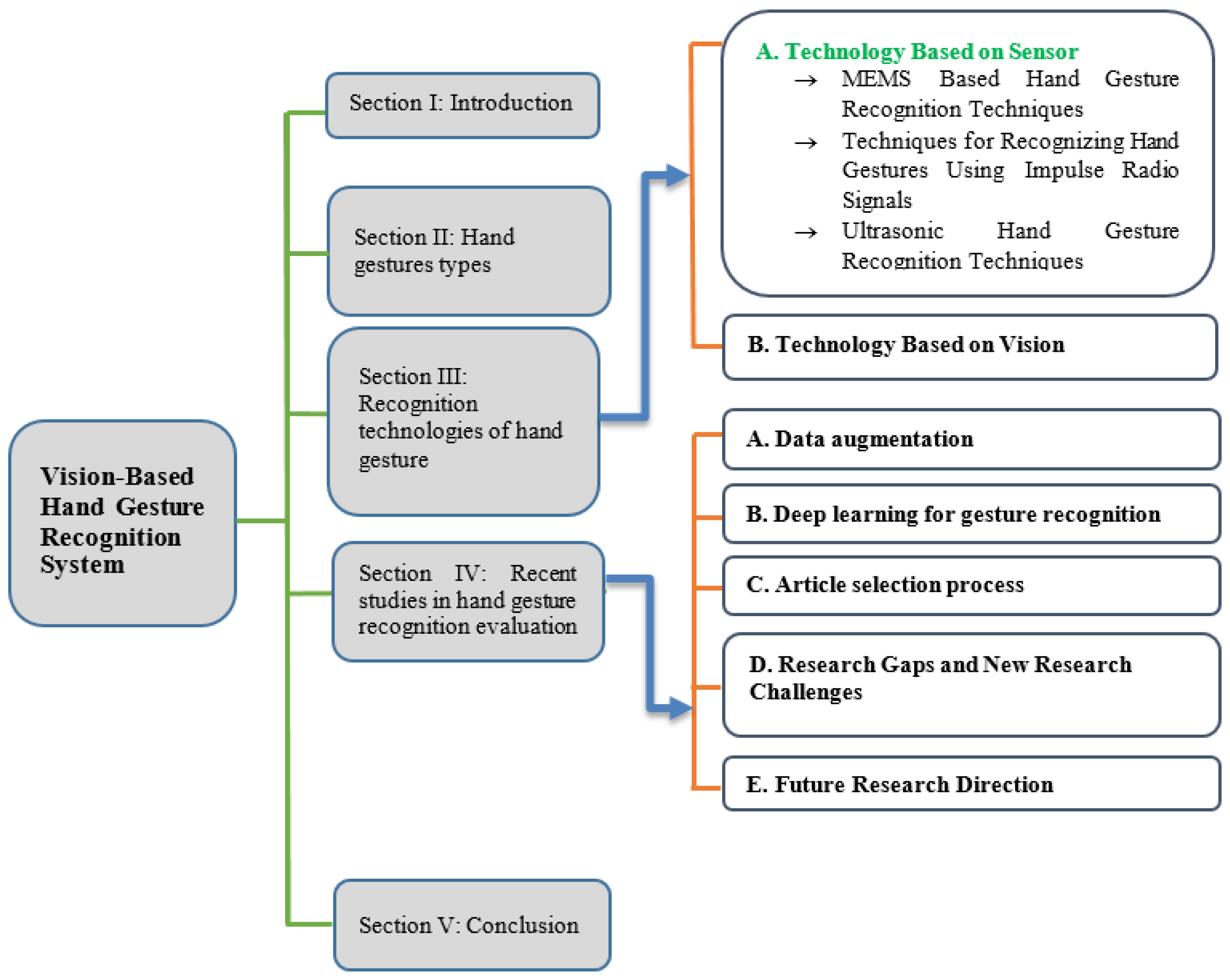

1.6. Organization of the Work

2. Hand Gesture Types

3. Hand Gesture Recognition Technologies

3.1. Sensor-Based Technology

3.1.1. Techniques for Recognizing Hand Gestures Using Impulse Radio Signals

3.1.2. Ultrasonic Hand Gesture Recognition Techniques

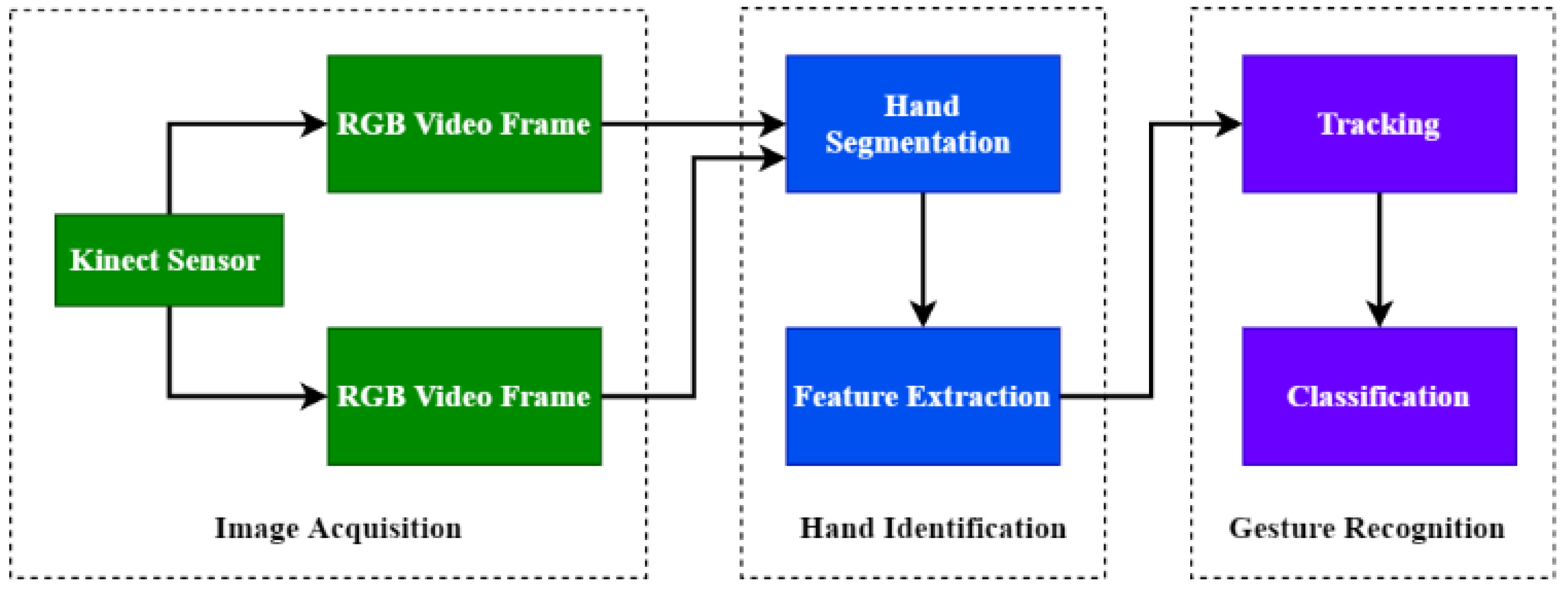

3.2. Vision-Based Technology

4. Significant Research Works on Hand Gesture Recognition

4.1. Data Augmentation

4.2. Deep Learning for Gesture Recognition

4.3. Summary

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Gupta, H.P.; Chudgar, H.S.; Mukherjee, S.; Dutta, T.; Sharma, K. A continuous hand gestures recognition technique for human-machine interaction using accelerometer and gyroscope sensors. IEEE Sens. J. 2016, 16, 6425–6432.

2. Xie, R.; Cao, J. Accelerometer-based hand gesture recognition by neural network and similarity matching. IEEE Sens. J. 2016, 16, 4537–4545.

3. Rautaray, S.S.; Agrawal, A. Vision based hand gesture recognition for human computer interaction: A survey. Artif. Intell. Rev. 2015, 43, 1–54.

4. Zhang, Q.-Y.; Lu, J.-C.; Zhang, M.-Y.; Duan, H.-X. Hand gesture segmentation method based on YCbCr color space and K-means clustering. Int. J. Signal Process. Image Process. Pattern Recognit. 2015, 8, 105–116.

5. Lai, H.Y.; Lai, H.J. Real-time dynamic hand gesture recognition. In Proceedings of the 2014 International Symposium on Computer, Consumer and Control, Taichung, Taiwan, 10–12 June 2014; pp. 658–661.

6. Hasan, M.M.; Mishra, P.K. Features fitting using multivariate gaussian distribution for hand gesture recognition. Int. J. Comput. Sci. Emerg. Technol. Ijcset 2012, 3, 73–80.

7. Bargellesi, N.; Carletti, M.; Cenedese, A.; Susto, G.A.; Terzi, M. A random forest-based approach for hand gesture recognition with wireless wearable motion capture sensors. IFAC-PapersOnLine 2019, 52, 128–133.

8. Cho, Y.; Lee, A.; Park, J.; Ko, B.; Kim, N. Enhancement of gesture recognition for contactless interface using a personalized classifier in the operating room. Comput. Methods Programs Biomed. 2018, 161, 39–44.

9. Zhao, H.; Ma, Y.; Wang, S.; Watson, A.; Zhou, G. MobiGesture: Mobility-aware hand gesture recognition for healthcare. Smart Health 2018, 9, 129–143.

10. Tavakoli, M.; Benussi, C.; Lopes, P.A.; Osorio, L.B.; de Almeida, A.T. Robust hand gesture recognition with a double channel surface EMG wearable armband and SVM classifier. Biomed. Signal Process. Control. 2018, 46, 121–130.

11. Zhang, Y.; Chen, Y.; Yu, H.; Yang, X.; Lu, W.; Liu, H. Wearing-independent hand gesture recognition method based on EMG armband. Pers. Ubiquitous Comput. 2018, 22, 511–524.

12. Li, Y.; He, Z.; Ye, X.; He, Z.; Han, K. Spatial temporal graph convolutional networks for skeleton-based dynamic hand gesture recognition. EURASIP J. Image Video Process. 2019, 2019, 78.

13. Alonso, D.G.; Teyseyre, A.; Soria, A.; Berdun, L. Hand gesture recognition in real world scenarios using approximate string matching. Multimed. Tools Appl. 2020, 79, 20773–20794.

14. Zhang, T.; Lin, H.; Ju, Z.; Yang, C. Hand Gesture recognition in complex background based on convolutional pose machine and fuzzy Gaussian mixture models. Int. J. Fuzzy Syst. 2020, 22, 1330–1341.

15. Tam, S.; Boukadoum, M.; Campeau-Lecours, A.; Gosselin, B. A fully embedded adaptive real-time hand gesture classifier leveraging HD-sEMG and deep learning. IEEE Trans. Biomed. Circuits Syst. 2019, 14, 232–243.

16. Li, H.; Wu, L.; Wang, H.; Han, C.; Quan, W.; Zhao, J. Hand gesture recognition enhancement based on spatial fuzzy matching in leap motion. IEEE Trans. Ind. Inform. 2019, 16, 1885–1894.

17. Köpüklü, O.; Gunduz, A.; Kose, N.; Rigoll, G. Online dynamic hand gesture recognition including efficiency analysis. IEEE Trans. Biom. Behav. Identity Sci. 2020, 2, 85–97.

18. Tai, T.M.; Jhang, Y.J.; Liao, Z.W.; Teng, K.C.; Hwang, W.J. Sensor-based continuous hand gesture recognition by long short-term memory. IEEE Sens. Lett. 2018, 2, 1–4.

19. Ram Rajesh, J.; Sudharshan, R.; Nagarjunan, D.; Aarthi, R. Remotely controlled PowerPoint presentation navigation using hand gestures. In Proceedings of the International conference on Advances in Computer, Electronics and Electrical Engineering, Vijayawada, India, 22 July 2012.

20. Czupryna, M.; Kawulok, M. Real-time vision pointer interface. In Proceedings of the ELMAR-2012, Zadar, Croatia, 12–14 September 2012; pp. 49–52.

21. Gupta, A.; Sehrawat, V.K.; Khosla, M. FPGA based real time human hand gesture recognition system. Procedia Technol. 2012, 6, 98–107.

22. Chen, L.; Wang, F.; Deng, H.; Ji, K. A survey on hand gesture recognition. In Proceedings of the 2013 International Conference on Computer Sciences and Applications, Wuhan, China, 14–15 December 2013; pp. 313–316.

23. Jalab, H.A.; Omer, H.K. Human computer interface using hand gesture recognition based on neural network. In Proceedings of the 2015 5th National Symposium on Information Technology: Towards New Smart World (NSITNSW), Riyadh, Saudi Arabia, 17–19 February 2015; pp. 1–6.

24. Pisharady, P.K.; Saerbeck, M. Recent methods and databases in vision-based hand gesture recognition: A review. Comput. Vis. Image Underst. 2015, 141, 152–165.

25. Plouffe, G.; Cretu, A.M. Static and dynamic hand gesture recognition in depth data using dynamic time warping. IEEE Trans. Instrum. Meas. 2015, 65, 305–316.

26. Rios-Soria, D.J.; Schaeffer, S.E.; Garza-Villarreal, S.E. Hand-gesture recognition using computer-vision techniques. In Proceedings of the 21st International Conference on Computer Graphics, Visualization and Computer Vision, Plzen, Czech Republic, 24–27 June 2013.

27. Cheng, H.; Yang, L.; Liu, Z. Survey on 3D hand gesture recognition. IEEE Trans. Circuits Syst. Video Technol. 2015, 26, 1659–1673.

28. Ahuja, M.K.; Singh, A. Static vision based Hand Gesture recognition using principal component analysis. In Proceedings of the 2015 IEEE 3rd International Conference on MOOCs, Innovation and Technology in Education (MITE), Amritsar, India, 1–2 October 2015; pp. 402–406.

29. Kaur, H.; Rani, J. A review: Study of various techniques of Hand gesture recognition. In Proceedings of the 2016 IEEE 1st International Conference on Power Electronics, Intelligent Control and Energy Systems (ICPEICES), Delhi, India, 4–6 July 2016; pp. 1–5.

30. Sonkusare, J.S.; Chopade, N.B.; Sor, R.; Tade, S.L. A review on hand gesture recognition system. In Proceedings of the 2015 International Conference on Computing Communication Control and Automation, Pune, India, 26–27 February 2015; pp. 790–794.

31. Shimada, A.; Yamashita, T.; Taniguchi, R.I. Hand gesture based TV control system—Towards both user-& machine-friendly gesture applications. In Proceedings of the 19th Korea-Japan Joint Workshop on Frontiers of Computer Vision, Incheon, Korea, 30 January–1 February 2013; pp. 121–126.

32. Palacios, J.M.; Sagüés, C.; Montijano, E.; Llorente, S. Human-computer interaction based on hand gestures using RGB-D sensors. Sensors 2013, 13, 11842–11860.

33. Trigueiros, P.; Ribeiro, F.; Reis, L.P. Generic system for human-computer gesture interaction. In Proceedings of the 2014 IEEE International Conference on Autonomous Robot Systems and Competitions (ICARSC), Espinho, Portugal, 14–15 May 2014; pp. 175–180.

34. Dhule, C.; Nagrare, T. Computer vision based human-computer interaction using color detection techniques. In Proceedings of the 2014 Fourth International Conference on Communication Systems and Network Technologies, Washington, DC, USA, 7–9 April 2014; pp. 934–938.

35. Poularakis, S.; Katsavounidis, I. Finger detection and hand posture recognition based on depth information. In Proceedings of the 2014 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Florence, Italy, 4–9 May 2014; pp. 4329–4333.

36. Dinh, D.L.; Kim, J.T.; Kim, T.S. Hand gesture recognition and interface via a depth imaging sensor for smart home appliances. Energy Procedia 2014, 62, 576–582.

37. Panwar, M. Hand gesture recognition based on shape parameters. In Proceedings of the 2012 International Conference on Computing, Communication and Applications, Dindigul, India, 22–24 February 2012; pp. 1–6.

38. Wang, W.; Pan, J. Hand segmentation using skin color and background information. In Proceedings of the 2012 International Conference on Machine Learning and Cybernetics, Xi’an, China, 15–17 July 2012; Volume 4, pp. 1487–1492.

39. Doğan, R.Ö.; Köse, C. Computer monitoring and control with hand movements. In Proceedings of the 2014 22nd Signal Processing and Communications Applications Conference (SIU), Trabzon, Turkey, 23–25 April 2014; pp. 2110–2113.

40. Suarez, J.; Murphy, R.R. Hand gesture recognition with depth images: A review. In Proceedings of the 2012 IEEE RO-MAN: The 21st IEEE International Symposium on Robot and Human Interactive Communication, Paris, France, 9–13 September 2012; pp. 411–417.

41. Puri, R. Gesture recognition based mouse events. arXiv 2014, arXiv:1401.2058.

42. Wang, C.; Liu, Z.; Chan, S.C. Superpixel-based hand gesture recognition with Kinect depth camera. IEEE Trans. Multimed. 2014, 17, 29–39.

43. Garg, P.; Aggarwal, N.; Sofat, S. Vision based hand gesture recognition. World Acad. Sci. Eng. Technol. 2009, 49, 972–977.

44. Chastine, J.; Kosoris, N.; Skelton, J. A study of gesture-based first person control. In Proceedings of the CGAMES’2013 USA, Louisville, KY, USA, 30 July–1 August 2013; pp. 79–86.

45. Dominio, F.; Donadeo, M.; Marin, G.; Zanuttigh, P.; Cortelazzo, G.M. Hand gesture recognition with depth data. In Proceedings of the 4th ACM/IEEE International Workshop on Analysis and Retrieval of Tracked Events and Motion in Imagery Stream, Barcelona, Spain, 21 October 2013; pp. 9–16.

46. Xu, Y.; Wang, Q.; Bai, X.; Chen, Y.L.; Wu, X. A novel feature extracting method for dynamic gesture recognition based on support vector machine. In Proceedings of the 2014 IEEE International Conference on Information and Automation (ICIA), Hailar, China, 28–30 July 2014; pp. 437–441.

47. Jais, H.M.; Mahayuddin, Z.R.; Arshad, H. A review on gesture recognition using Kinect. In Proceedings of the 2015 International Conference on Electrical Engineering and Informatics (ICEEI), Bali, Indonesia, 10–11 August 2015; pp. 594–599.

48. Czuszynski, K.; Ruminski, J.; Wtorek, J. Pose classification in the gesture recognition using the linear optical sensor. In Proceedings of the 2017 10th International Conference on Human System Interactions (HSI), Ulsan, Korea, 17–19 July 2017; pp. 18–24.

49. Park, S.; Ryu, M.; Chang, J.Y.; Park, J. A hand posture recognition system utilizing frequency difference of infrared light. In Proceedings of the 20th ACM Symposium on Virtual Reality Software and Technology, Edinburgh, Scotland, 11–13 November 2014; pp. 65–68.

50. Jangyodsuk, P.; Conly, C.; Athitsos, V. Sign language recognition using dynamic time warping and hand shape distance based on histogram of oriented gradient features. In Proceedings of the 7th International Conference on PErvasive Technologies Related to Assistive Environments, Rhodes, Greece, 27–30 May 2014; pp. 1–6.

51. Sahoo, J.P.; Prakash, A.J.; Pławiak, P.; Samantray, S. Real-Time Hand Gesture Recognition Using Fine-Tuned Convolutional Neural Network. Sensors 2022, 22, 706.

52. Gadekallu, T.R.; Srivastava, G.; Liyanage, M.; Iyapparaja, M.; Chowdhary, C.L.; Koppu, S.; Maddikunta, P.K.R. Hand gesture recognition based on a Harris hawks optimized convolution neural network. Comput. Electr. Eng. 2022, 100, 107836.

53. Amin, M.S.; Rizvi, S.T.H. Sign Gesture Classification and Recognition Using Machine Learning. Cybern. Syst. 2022.

54. Kong, F.; Deng, J.; Fan, Z. Gesture recognition system based on ultrasonic FMCW and ConvLSTM model. Measurement 2022, 190, 110743.

55. Saboo, S.; Singha, J.; Laskar, R.H. Dynamic hand gesture recognition using combination of two-level tracker and trajectory-guided features. Multimed. Syst. 2022, 28, 183–194.

56. Alnaim, N. Hand Gesture Recognition Using Deep Learning Neural Networks. Ph.D. Thesis, Brunel University, London, UK, 2020.

57. Oudah, M.; Al-Naji, A.; Chahl, J. Computer Vision for Elderly Care Based on Hand Gestures. Computers 2021, 10, 5.

58. Joseph, P. Recent Trends and Technologies in Hand Gesture Recognition. Int. J. Adv. Res. Comput. Sci. 2017, 8.

59. Zhang, Y.; Liu, B.; Liu, Z. Recognizing hand gestures with pressure-sensor-based motion sensing. IEEE Trans. Biomed. Circuits Syst. 2019, 13, 1425–1436.

60. Mujahid, A.; Awan, M.J.; Yasin, A.; Mohammed, M.A.; Damaševičius, R.; Maskeliūnas, R.; Abdulkareem, K.H. Real-Time Hand Gesture Recognition Based on Deep Learning YOLOv3 Model. Appl. Sci. 2021, 11, 4164.

61. Min, Y.; Zhang, Y.; Chai, X.; Chen, X. An efficient PointLSTM for point clouds based gesture recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 5761–5770.

62. Al-Hammadi, M.; Muhammad, G.; Abdul, W.; Alsulaiman, M.; Bencherif, M.A.; Alrayes, T.S.; Mathkour, H.; Mekhtiche, M.A. Deep learning-based approach for sign language gesture recognition with efficient hand gesture representation. IEEE Access 2020, 8, 192527–192542.

63. Neethu, P.; Suguna, R.; Sathish, D. An efficient method for human hand gesture detection and recognition using deep learning convolutional neural networks. Soft Comput. 2020, 24, 15239–15248.

64. Asadi-Aghbolaghi, M.; Clapes, A.; Bellantonio, M.; Escalante, H.J.; Ponce-López, V.; Baró, X.; Guyon, I.; Kasaei, S.; Escalera, S. A survey on deep learning based approaches for action and gesture recognition in image sequences. In Proceedings of the 2017 12th IEEE International Conference on Automatic Face & Gesture Recognition (FG 2017), Washington, DC, USA, 30 May–3 June 2017; pp. 476–483.

65. Cao, C.; Zhang, Y.; Wu, Y.; Lu, H.; Cheng, J. Egocentric gesture recognition using recurrent 3d convolutional neural networks with spatiotemporal transformer modules. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 3763–3771.

66. John, V.; Boyali, A.; Mita, S.; Imanishi, M.; Sanma, N. Deep learning-based fast hand gesture recognition using representative frames. In Proceedings of the 2016 International Conference on Digital Image Computing: Techniques and Applications (DICTA), Gold Coast, QLD, Australia, 30 November–2 December 2016; pp. 1–8.

67. Zhang, X.; Li, X. Dynamic gesture recognition based on MEMP network. Future Internet 2019, 11, 91.

68. Wang, S.; Song, J.; Lien, J.; Poupyrev, I.; Hilliges, O. Interacting with Soli: Exploring fine-grained dynamic gesture recognition in the radio-frequency spectrum. In Proceedings of the 29th Annual Symposium on User Interface Software and Technology, Tokyo, Japan, 16–19 October 2016; pp. 851–860.

69. Funke, I.; Bodenstedt, S.; Oehme, F.; von Bechtolsheim, F.; Weitz, J.; Speidel, S. Using 3D convolutional neural networks to learn spatiotemporal features for automatic surgical gesture recognition in video. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Shenzhen, China, 13–17 October 2019; Springer: Berlin/Heidelberg, Germany, 2019; pp. 467–475.

70. Al Farid, F.; Hashim, N.; Abdullah, J. Vision Based Gesture Recognition from RGB Video Frames Using Morphological Image Processing Techniques. Int. J. Adv. Sci. Technol. 2019, 28, 321–332.

71. Al Farid, F.; Hashim, N.; Abdullah, J. Vision-based hand gesture recognition from RGB video data using SVM. In Proceedings of the International Workshop on Advanced Image Technology (IWAIT) 2019, International Society for Optics and Photonics, NTU, Singapore, 22 March 2019; Volume 11049, p. 110491E.

72. Bhuiyan, M.R.; Abdullah, D.; Hashim, D.; Farid, F.; Uddin, D.; Abdullah, N.; Samsudin, D. Crowd density estimation using deep learning for Hajj pilgrimage video analytics. F1000Research 2021, 10, 1190.

73. Bhuiyan, M.R.; Abdullah, J.; Hashim, N.; Al Farid, F.; Samsudin, M.A.; Abdullah, N.; Uddin, J. Hajj pilgrimage video analytics using CNN. Bull. Electr. Eng. Inform. 2021, 10, 2598–2606.

74. Zamri, M.N.H.B.; Abdullah, J.; Bhuiyan, R.; Hashim, N.; Farid, F.A.; Uddin, J.; Husen, M.N.; Abdullah, N. A Comparison of ML and DL Approaches for Crowd Analysis on the Hajj Pilgrimage. In Proceedings of the International Visual Informatics Conference; Springer: Berlin/Heidelberg, Germany, 2021; pp. 552–561.

75. Bari, B.S.; Islam, M.N.; Rashid, M.; Hasan, M.J.; Razman, M.A.M.; Musa, R.M.; Ab Nasir, A.F.; Majeed, A.P.A. A real-time approach of diagnosing rice leaf disease using deep learning-based faster R-CNN framework. PeerJ Comput. Sci. 2021, 7, e432.

76. Zoph, B.; Cubuk, E.D.; Ghiasi, G.; Lin, T.Y.; Shlens, J.; Le, Q.V. Learning data augmentation strategies for object detection. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 566–583.

77. Xie, Q.; Dai, Z.; Hovy, E.; Luong, M.T.; Le, Q.V. Unsupervised data augmentation for consistency training. arXiv 2019, arXiv:1904.12848.

78. Islam, M.Z.; Hossain, M.S.; ul Islam, R.; Andersson, K. Static hand gesture recognition using convolutional neural network with data augmentation. In Proceedings of the 2019 Joint 8th International Conference on Informatics, Electronics & Vision (ICIEV) and 2019 3rd International Conference on Imaging, Vision & Pattern Recognition (icIVPR), Spokane, WA, USA, 30 May–2 June 2019; pp. 324–329.

79. Mungra, D.; Agrawal, A.; Sharma, P.; Tanwar, S.; Obaidat, M.S. PRATIT: A CNN-based emotion recognition system using histogram equalization and data augmentation. Multimed. Tools Appl. 2020, 79, 2285–2307.

80. Rashid, M.; Bari, B.S.; Yusup, Y.; Kamaruddin, M.A.; Khan, N. A Comprehensive Review of Crop Yield Prediction Using Machine Learning Approaches With Special Emphasis on Palm Oil Yield Prediction. IEEE Access 2021, 9, 63406–63439.

81. Rashid, M.; Sulaiman, N.; PP Abdul Majeed, A.; Musa, R.M.; Bari, B.S.; Khatun, S. Current status, challenges, and possible solutions of EEG-based brain-computer interface: A comprehensive review. Front. Neurorobotics 2020, 14, 25.

82. Mathew, A.; Amudha, P.; Sivakumari, S. Deep Learning Techniques: An Overview. In Proceedings of the International Conference on Advanced Machine Learning Technologies and Applications, Manipal, India, 13–15 February 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 599–608.

83. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016.

84. Ji, S.; Xu, W.; Yang, M.; Yu, K. 3D convolutional neural networks for human action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 35, 221–231.

85. Liu, Z.; Zhang, C.; Tian, Y. 3D-based deep convolutional neural network for action recognition with depth sequences. Image Vis. Comput. 2016, 55, 93–100.

86. Sun, L.; Jia, K.; Yeung, D.Y.; Shi, B.E. Human action recognition using factorized spatio-temporal convolutional networks. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 4597–4605.

87. Escorcia, V.; Heilbron, F.C.; Niebles, J.C.; Ghanem, B. DAPs: Deep action proposals for action understanding. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 768–784.

88. Mansimov, E.; Srivastava, N.; Salakhutdinov, R. Initialization strategies of spatio-temporal convolutional neural networks. arXiv 2015, arXiv:1503.07274.

89. Baccouche, M.; Mamalet, F.; Wolf, C.; Garcia, C.; Baskurt, A. Sequential deep learning for human action recognition. In Proceedings of the International Workshop on Human Behavior Understanding, Amsterdam, The Netherlands, 16 November 2011; Springer: Berlin/Heidelberg, Germany, 2011; pp. 29–39.

90. Feichtenhofer, C.; Pinz, A.; Zisserman, A. Convolutional two-stream network fusion for video action recognition. In Proceedings of the IEEE conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1933–1941.

91. Shou, Z.; Wang, D.; Chang, S.F. Temporal action localization in untrimmed videos via multi-stage CNNs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1049–1058.

92. Varol, G.; Laptev, I.; Schmid, C. Long-term temporal convolutions for action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 1510–1517.

93. Neverova, N.; Wolf, C.; Taylor, G.W.; Nebout, F. Multi-scale deep learning for gesture detection and localization. In Proceedings of the European Conference on Computer Vision, Zurich, Switzerland, 6–12 September 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 474–490.

94. Wang, L.; Qiao, Y.; Tang, X. Action recognition with trajectory-pooled deep-convolutional descriptors. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 4305–4314.

95. Han, S.; Mao, H.; Dally, W.J. Deep compression: Compressing deep neural networks with pruning, trained quantization and Huffman coding. arXiv 2015, arXiv:1510.00149.

96. Zhang, B.; Wang, L.; Wang, Z.; Qiao, Y.; Wang, H. Real-time action recognition with enhanced motion vector CNNs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2718–2726.

97. Xu, Z.; Zhu, L.; Yang, Y.; Hauptmann, A.G. UTS-CMU at THUMOS 2015. THUMOS Chall. 2015, 2015, 2.

98. Gkioxari, G.; Malik, J. Finding action tubes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 759–768.

99. Escalante, H.J.; Morales, E.F.; Sucar, L.E. A naive bayes baseline for early gesture recognition. Pattern Recognit. Lett. 2016, 73, 91–99.

100. Xu, X.; Hospedales, T.M.; Gong, S. Multi-task zero-shot action recognition with prioritised data augmentation. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; Springer: Berlin/Heidelberg, Germany, 2016; pp. 343–359.

101. Montes, A.; Salvador, A.; Pascual, S.; Giro-i Nieto, X. Temporal activity detection in untrimmed videos with recurrent neural networks. arXiv 2016, arXiv:1608.08128.

102. Nasrollahi, K.; Escalera, S.; Rasti, P.; Anbarjafari, G.; Baro, X.; Escalante, H.J.; Moeslund, T.B. Deep learning based super-resolution for improved action recognition. In Proceedings of the 2015 International Conference on Image Processing Theory, Tools and Applications (IPTA), Orleans, France, 10–13 November 2015; pp. 67–72.

| Reference | Findings | Challenges |
|---|---|---|
| [56] | Image processing techniques such as wavelets and empirical mode decomposition were proposed to extract image features for identifying 2D or 3D hand gestures. Artificial neural networks (ANNs) were used for training and classification, in addition to convolutional neural networks (CNNs). | Three-dimensional gesture disparities were measured using the left and right 3D gesture videos. |
| [57] | Deaf-mute elderly people use five distinct hand gestures to request a specific item or service: drink, food, toilet, assistance, or medication. Because these users may be unable to act independently, their requests are delivered to a smartphone. | Reliance on the Microsoft Kinect v2 sensor to extract hand movements in real time confines this study to a restricted area. |
| [58] | The physical coupling between gestures and voice can be relaxed somewhat, allowing such interfaces to be used by people with special needs. Exploring efficient human-computer interaction (HCI) has long been important in developing new approaches and methodologies. | Many methods struggle with occlusion, lighting changes, low resolution, and high frame rates. |
| [59] | A working prototype for real-time gesture-based interaction was built, comprising a wearable gesture-detection device with four pressure sensors and the corresponding computational framework. | The hardware design needs further simplification to make the system more practical, and more research is required on the trade-off between robustness and sensitivity. |
| [60] | A lightweight model based on YOLOv3 (You Only Look Once) and the DarkNet-53 network was proposed for gesture detection without additional preprocessing, image filtering, or image enhancement. The model was highly accurate even against complex backgrounds and recognized gestures effectively even in low-resolution images, at a high frame rate. | The primary challenge for real-time gesture identification is the classification and recognition of gestures. Hand recognition draws on algorithms and ideas from diverse approaches, such as image processing and neural networks, to understand hand movement. |
| [61] | Gesture recognition is formulated as an irregular sequence recognition problem, with the aim of capturing long-term spatial correlations across point clouds. A new, efficient PointLSTM is proposed to propagate information from past to future while preserving spatial structure. | Compared with RGB data, point clouds describe the underlying geometric structure and distance information of object surfaces more accurately, providing additional cues for gesture recognition. |
| [62] | A new system for dynamic hand gesture recognition is presented that uses multiple architectures to learn hand segmentation, local and global features, and sequence features for recognition. | An efficient recognition system must address hand segmentation, local hand-shape representation, global body configuration, and gesture sequence modeling. |
| [63] | Human hand gestures are detected and recognized using convolutional neural network (CNN) classification. The process flow comprises hand-region segmentation using a mask image, finger segmentation, normalization of the segmented finger images, and finger identification with a CNN classifier. | Conventional gesture recognition techniques used SVM and naive Bayes classifiers and required large amounts of data to identify gesture patterns. |
| [64] | They surveyed existing deep learning methods for action and gesture recognition in image sequences and proposed a taxonomy outlining the key components of deep learning for both tasks. | They reviewed the proposed architectures, fusion strategies, main datasets, and competitions in depth, described and analyzed the key works to date with a focus on how they handle the temporal dimension of the data, and identified opportunities and challenges for future research. |
| [65] | They address the problem with an end-to-end trainable recurrent 3D convolutional neural network. A spatiotemporal transformer module with recurrent connections between neighboring time slices dynamically transforms a 3D feature map into a canonical view in both space and time. | The main challenge in egocentric gesture detection is the global camera motion caused by the wearer's spontaneous head movement. |
| [66] | A long-term recurrent convolutional network (LRCN) is used to classify video sequences of hand gestures. In this network-based action classifier, multiple frames sampled from a video sequence are fed into the network for classification. | Besides lowering classifier accuracy, including several frames increases the computational complexity of the system. |
| [67] | The main characteristic of the MEMP (multiple extraction and multiple prediction) network is that it extracts and predicts the temporal and spatial feature information of gesture videos multiple times, yielding high accuracy. | Because each type of neural network structure has its own limitations, they developed MEMP as a network that alternately fuses 3D CNN and ConvLSTM layers. |
| [68] | A new machine learning architecture designed specifically for radio-frequency-based gesture recognition is introduced, with a focus on high-frequency (60 GHz) short-range radar sensing such as Google's Soli sensor. | The signal has unique characteristics, such as resolving motion at a very fine level and allowing segmentation in range and velocity space rather than image space. This enables new kinds of input but makes designing input recognition algorithms much more challenging. |
| [69] | They learn spatiotemporal features from successive video frames using a 3D convolutional neural network (CNN) and evaluate the method on recordings of robot-assisted suturing on a bench-top model from the publicly available JIGSAWS dataset. | Automatic surgical gesture recognition is an important step toward a complete understanding of surgical skill, with applications in automatic skill assessment, intra-operative monitoring of critical surgical steps, and semi-automation of surgical tasks. |
| [70,71] | Video frames are blurred to remove background noise and then converted to the HSV color space. Dilation, erosion, filtering, and thresholding convert each frame to a binary (black-and-white) image. Finally, hand gestures are classified with an SVM. | Gesture-based technology can help people with disabilities, as well as the general public, with their safety and other needs. Because the properties of each gesture vary considerably from person to person, gesture detection in video streams is complex. |
| [72,73] | A convolutional neural network-based method is proposed for Hajj applications, together with a technique for counting people and assessing crowd density. The architecture detects each individual in the crowd, marks their head position with a bounding box, and counts them on the authors' own dataset (HAJJ-Crowd). | Interest has grown in improving video analytics and visual monitoring to enhance the safety and security of pilgrims in Makkah, largely because Hajj is a unique event in which hundreds of thousands of people crowd into a small area. |
| [74] | Crowd density analysis using machine learning is presented. The primary goal is to identify the machine learning method that performs best for crowd density classification. | Crowd control is essential for ensuring crowd safety. Crowd monitoring is an efficient way to observe, control, and understand crowd behavior. |
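The segmentation stages attributed to [70,71] in the table (blurring, HSV conversion, thresholding, and morphological filtering before SVM classification) can be illustrated with a short, dependency-free Python sketch. It is not the authors' implementation: the 3x3 box blur, the HSV skin-tone bounds in `hsv_threshold`, and the choice of an erode-then-dilate opening are assumptions made for illustration, and every function name below is hypothetical. The SVM stage is omitted.

```python
import colorsys
from typing import Callable, Iterable, Iterator, List, Tuple

Pixel = Tuple[float, float, float]  # (R, G, B), each channel in 0..255
Image = List[List[Pixel]]
Mask = List[List[int]]              # 1 = hand (skin) pixel, 0 = background


def _window(h: int, w: int, y: int, x: int) -> Iterator[Tuple[int, int]]:
    """Yield the in-bounds coordinates of the 3x3 window centred on (y, x)."""
    for yy in range(y - 1, y + 2):
        for xx in range(x - 1, x + 2):
            if 0 <= yy < h and 0 <= xx < w:
                yield yy, xx


def box_blur(img: Image) -> Image:
    """3x3 mean filter (assumed blur); border pixels average in-bounds neighbours only."""
    h, w = len(img), len(img[0])
    out: Image = []
    for y in range(h):
        row: List[Pixel] = []
        for x in range(w):
            pts = [img[yy][xx] for yy, xx in _window(h, w, y, x)]
            row.append(tuple(sum(p[c] for p in pts) / len(pts) for c in range(3)))
        out.append(row)
    return out


def hsv_threshold(img: Image,
                  h_range: Tuple[float, float] = (0.0, 0.14),  # hypothetical skin hue band
                  s_range: Tuple[float, float] = (0.2, 0.7),   # hypothetical saturation band
                  v_min: float = 0.5) -> Mask:                 # hypothetical brightness floor
    """Convert each pixel to HSV and keep it (1) if it falls inside the skin box."""
    mask: Mask = []
    for row in img:
        bits = []
        for r, g, b in row:
            h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
            inside = (h_range[0] <= h <= h_range[1]
                      and s_range[0] <= s <= s_range[1]
                      and v >= v_min)
            bits.append(1 if inside else 0)
        mask.append(bits)
    return mask


def _morph(mask: Mask, rule: Callable[[Iterable[int]], bool]) -> Mask:
    """Apply `rule` (all -> erosion, any -> dilation) over each 3x3 window."""
    h, w = len(mask), len(mask[0])
    return [[1 if rule(mask[yy][xx] for yy, xx in _window(h, w, y, x)) else 0
             for x in range(w)] for y in range(h)]


def erode(mask: Mask) -> Mask:
    """A pixel stays 1 only if every in-bounds pixel in its 3x3 window is 1."""
    return _morph(mask, all)


def dilate(mask: Mask) -> Mask:
    """A pixel becomes 1 if any in-bounds pixel in its 3x3 window is 1."""
    return _morph(mask, any)


def segment_hand(img: Image) -> Mask:
    """Blur -> HSV threshold -> morphological opening (erode, then dilate)."""
    return dilate(erode(hsv_threshold(box_blur(img))))


# Synthetic 9x9 frame: a 5x5 skin-coloured patch on black, plus one stray skin pixel.
SKIN, BLACK = (220, 170, 140), (0, 0, 0)
frame = [[BLACK] * 9 for _ in range(9)]
for y in range(2, 7):
    for x in range(2, 7):
        frame[y][x] = SKIN
frame[0][8] = SKIN

mask = segment_hand(frame)
for row in mask:
    print("".join("#" if bit else "." for bit in row))
```

On this synthetic frame, the blur pulls both the isolated speckle and the patch's four corner pixels below `v_min`, so the printed mask is the 5x5 patch with its corners trimmed. On real frames, features computed from such a mask would then be passed to a classifier such as scikit-learn's `sklearn.svm.SVC`; which features [70,71] actually extract is not stated here.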

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Al Farid, F.; Hashim, N.; Abdullah, J.; Bhuiyan, M.R.; Shahida Mohd Isa, W.N.; Uddin, J.; Haque, M.A.; Husen, M.N. A Structured and Methodological Review on Vision-Based Hand Gesture Recognition System. J. Imaging 2022, 8, 153. https://doi.org/10.3390/jimaging8060153