Apart from the usual single-view finger vein capturing devices, several researchers have developed different 3D or multi-perspective finger vein capturing devices. These works are summarised in the following, structured according to their operation principle, starting with rotating devices, followed by multi-camera devices and finally, related works that are dedicated either to other principles of capturing devices or to processing the multi-perspective finger vein data.

3.2.1. Rotation-Based Multi-Perspective Finger Vein Capturing Devices

Qi et al. [10] proposed a 3D hand-vein-capturing system based on a rotating platform and a fixed near-infrared camera, which is located above the hand. The hand is put on a handle (which is mounted on the rotating platform) with an integrated illuminator based on the light transmission principle. The plate rotates around the z-axis. However, the degree of rotation is limited due to the limited movement of the hand in this position. A 3D point cloud is generated from the samples captured at different rotation angles. The 3D point-cloud-based templates are compared using a kernel correlation technique. They evaluated the recognition performance on a small dataset (160 samples) captured with their proposed device, but did not compare their approach with other hand-vein recognition algorithms on the acquired hand-vein samples. The authors claim that their approach should help to overcome the hand registration and posture change problems that arise in hand-vein recognition if only 2D vein patterns/images are available.

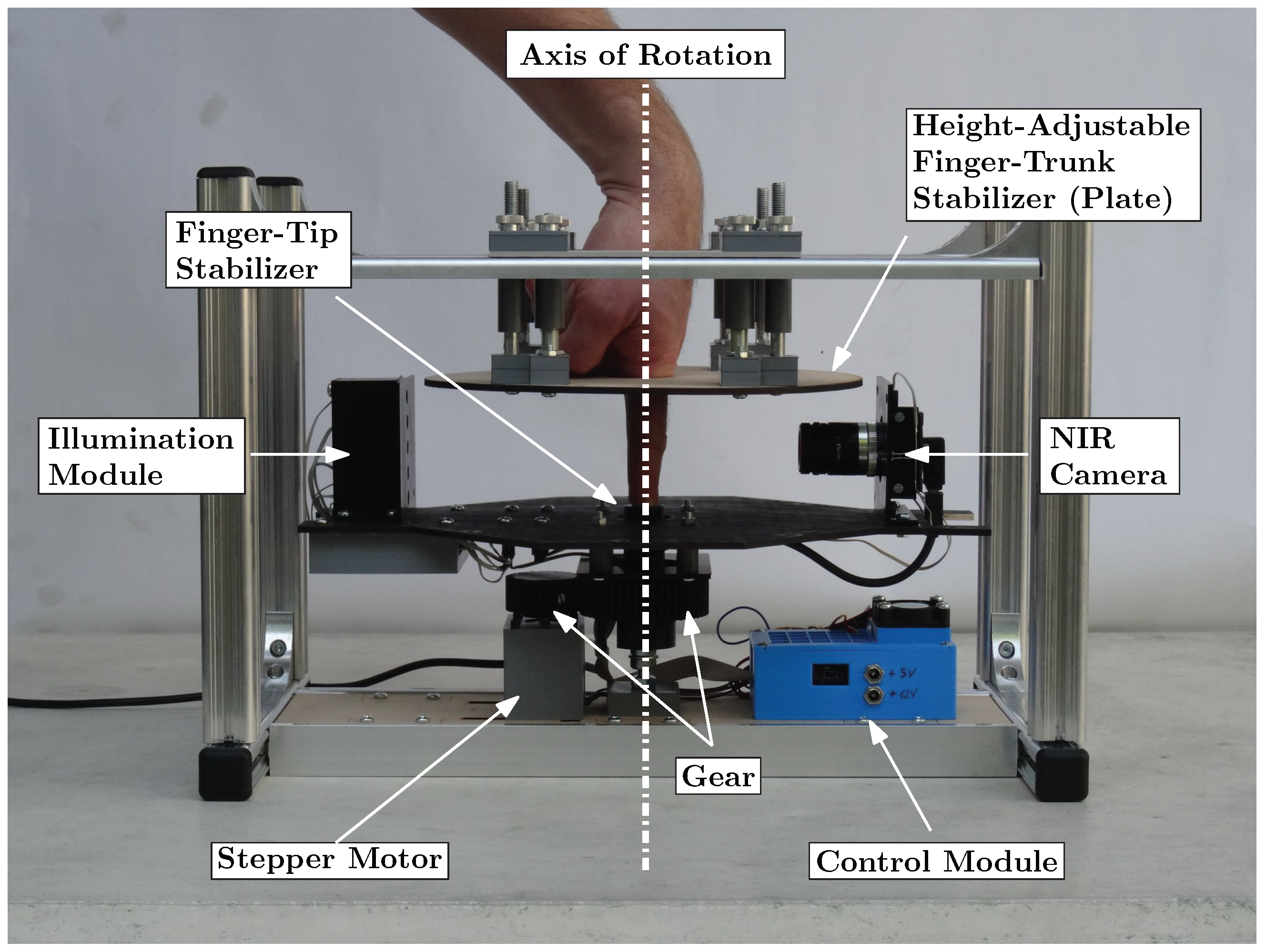

In 2018, Prommegger et al. [

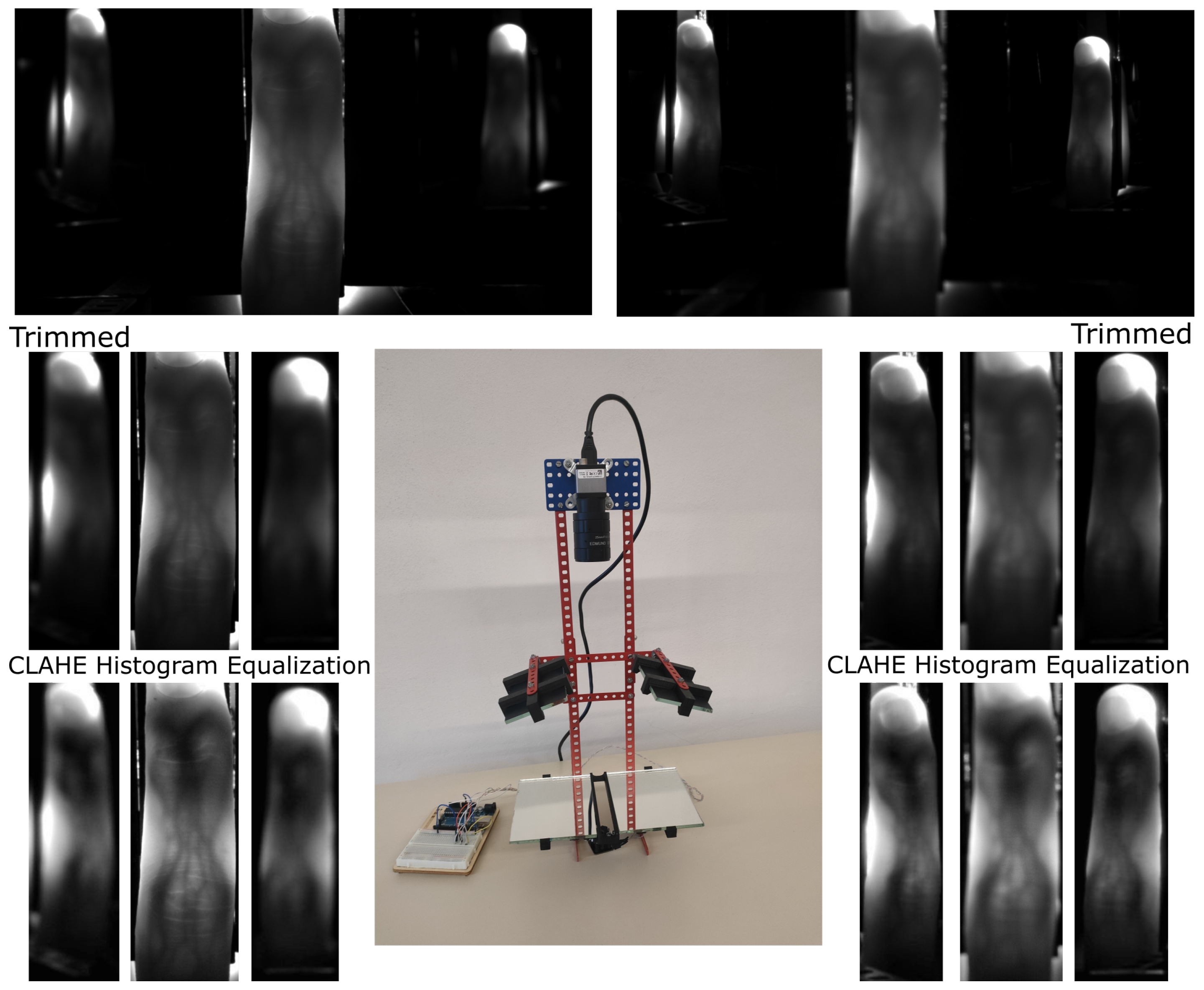

2] constructed a custom, rotating 3D finger-vein-capturing device. This capturing device acquires finger vein images in different projection angles by rotating a near-infrared camera and a illumination unit around the finger. The principle of the device is shown in

Figure 5. The finger is positioned at the axis of rotation, while the camera and the illumination module are placed on the opposite sides, rotating around the finger. The scanner is based on the light transmission principle and uses an industrial near-infrared enhanced camera and five near-infrared laser modules (808 nm) as light source. The acquisition process is semi-automated. After the finger has been inserted into the device, the operator has to initiate the capturing process. The illumination for the finger is then set automatically in order to achieve an optimal image contrast with the help of a contrast measure. Afterwards, the acquisition is started at one video frame per degree of rotation; hence, a full capture includes 360 images, one image per degree of rotation. All perspectives are captured in one run using the same illumination conditions to ensure the comparability of the different captures at different projection angles.

With the help of this custom rotational scanner, they established the first available multi-perspective finger vein dataset, consisting of 252 unique fingers from 63 volunteers, each finger captured 5 times at 360 perspectives (the 0° and 360° perspective are the same); hence, it contains

images in total. Each perspective is stored as an 8-bit grayscale image with a resolution of 1024 × 1280 pixels. The finger is always placed in the centre of the image; hence, the sides can be cut, resulting in an image size of 652 × 1280 pixels.

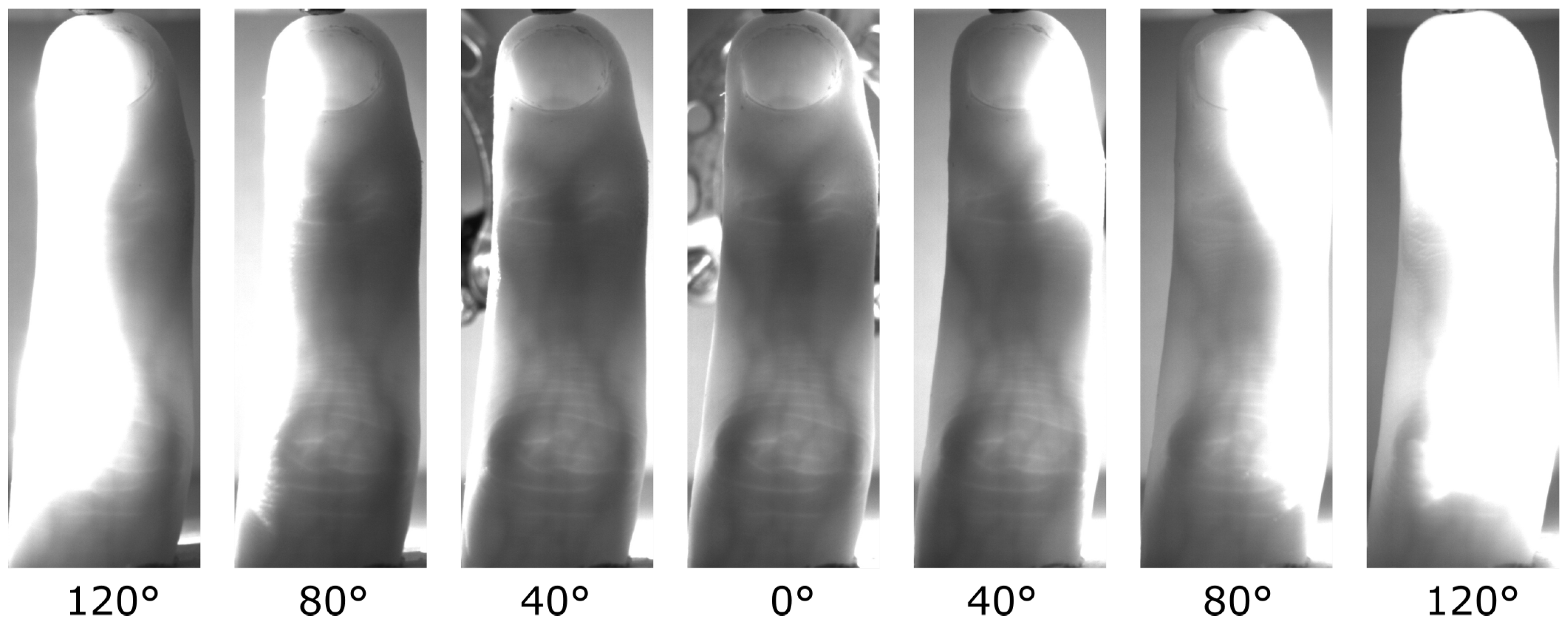

Figure 6 shows some example images of this dataset. For more details on the dataset and the capturing device, refer to our original work [2].

Based on this dataset, they evaluated the recognition performance of the single perspectives as well as a score level fusion based on 2, 3 and 4 perspectives using well-established vein-pattern-based recognition algorithms. Their results confirmed that the palmar perspective (0°) achieved the best performance, followed by the dorsal one (180°), while all other perspectives are inferior.

3.2.2. Multi-Camera-Based Multi-Perspective Finger-Vein-Capturing Devices

In 2013, Zhang et al. [11] proposed one of the first binocular stereoscopic vision devices to capture hand vein images and the shape of the finger knuckles. The recognition is based on a 3D point cloud matching of hand-veins and knuckle shape. Their capture device set-up consisted of two cameras, placed in a relative position of about

next to each other, each one equipped with an NIR-pass filter. There is only a single light transmission illuminator placed underneath the palm of the hand. The 3D point clouds are generated by extracting information from the edges of the hand veins and knuckle shapes. The generated 3D point clouds are then compared utilising a kernel correlation method, especially designed for unstructured 3D point clouds. The authors claim that their proposed method is faster and more accurate compared to 2D vein recognition schemes, yet they did not compare to other hand-vein recognition schemes.

Lu et al. [12] proposed a finger vein recognition system using two cameras. These cameras are separated by a rotation angle of

next to each other, where each camera is located

apart from the palmar view. They applied feature- as well as score-level fusion (based on the min and max rule) to the samples captured simultaneously from the two views by the two cameras and were able to improve the recognition performance over samples captured from only one view.
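The min and max rules for score-level fusion can be illustrated with a short sketch. This is not the implementation of Lu et al.; the function names, the min-max normalisation step and the convention that higher scores mean higher similarity are assumptions made for illustration only.

```python
import numpy as np

def min_max_normalise(scores):
    """Map raw comparison scores to [0, 1] (assumes higher = more similar)."""
    scores = np.asarray(scores, dtype=float)
    span = scores.max() - scores.min()
    if span == 0:
        return np.zeros_like(scores)
    return (scores - scores.min()) / span

def fuse_scores(view_a, view_b, rule="max"):
    """Fuse per-comparison scores of two views with the min or max rule.

    view_a and view_b hold one score per comparison, in the same order.
    The normalisation brings both views to a common range before fusing.
    """
    stacked = np.stack([min_max_normalise(view_a), min_max_normalise(view_b)])
    if rule == "max":
        return stacked.max(axis=0)
    if rule == "min":
        return stacked.min(axis=0)
    raise ValueError(f"unknown rule: {rule}")
```

With the max rule, a comparison is accepted as soon as one view yields a high score; the min rule requires both views to agree.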

Sebastian Bunda [13] proposed a method to reconstruct 3D point cloud data from the finger vascular pattern. His work is based on a multi-camera finger-vein-capturing device constructed by the University of Twente, which is also described in [14]. The device is based on the light transmission principle and captures the finger from the palmar side. It consists of three independent cameras located at the bottom of the device and separated by a rotation angle of about 20°. On the top of the device, there are three near-infrared LED illuminators, also separated by a rotation angle of 20°. The cameras have a field of view of 120° in order to capture the whole finger from a close distance and are equipped with NIR pass filters to block the ambient light. Like the device proposed in [15], this device requires a camera calibration and stereo rectification prior to any further processing of the acquired samples. After those steps, the finger vein lines are extracted from the pair of stereo images (by cropping the image, applying histogram equalisation and Gaussian filtering, and then using the repeated line tracking [16] algorithm). According to the basic stereo vision principles, finger vein lines in the first image should be matched with the corresponding vein lines in the second image. Hence, a standard stereo correspondence algorithm is used to generate the disparity maps and derive the 3D point cloud data from those disparity maps. Note that so far only two pairs of cameras (cam1 and cam2 plus cam2 and cam3) are used to generate the 3D point clouds. Bunda evaluated his three-dimensional model visually and using a flat test object and a round test object. Tests with real fingers showed that the presented method is a good first attempt at creating 3D models of the finger vein patterns. On the other hand, he notes that there is still room for improvement, especially in terms of speed and accuracy. He did not conduct any recognition performance evaluations and there is no indication so far of a full dataset captured with this scanner.
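The step from a disparity map to 3D points follows the standard pinhole stereo relation Z = f·B/d for a rectified camera pair. The sketch below illustrates only this relation; it is not Bunda's code, and the parameter names (`focal_px`, `baseline_mm`, `cx`, `cy`) are assumed calibration outputs rather than values from the original work.

```python
import numpy as np

def disparity_to_points(disparity, focal_px, baseline_mm, cx, cy):
    """Back-project a rectified disparity map to an (N, 3) point cloud.

    Pixels with non-positive disparity are treated as unmatched and skipped.
    focal_px is the focal length in pixels, baseline_mm the camera distance,
    and (cx, cy) the principal point of the rectified reference camera.
    """
    disparity = np.asarray(disparity, dtype=float)
    v, u = np.nonzero(disparity > 0)       # row (v) and column (u) indices
    d = disparity[v, u]
    z = focal_px * baseline_mm / d         # depth from similar triangles
    x = (u - cx) * z / focal_px            # lateral position
    y = (v - cy) * z / focal_px            # vertical position
    return np.column_stack([x, y, z])
```

Because depth is inversely proportional to disparity, small disparity errors on distant points translate into large depth errors, which is one reason why accurate vein line correspondences matter.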

In order to address the limited vein information and the sensitivity to positional changes of the finger, another finger-vein-capturing device with an accompanying finger vein recognition algorithm in 3D space was proposed by Kang et al. [17]. The authors claim that their capturing device is able to collect a full view of the vein pattern information from the whole finger and that their novel 3D reconstruction method is able to build the full-view 3D finger vein image and extract the corresponding 3D finger vein features from the samples acquired by their capturing device. Their capturing device consists of three cameras (placed at rotation angles of 0°, 120° and 240°) and three corresponding illumination units (light transmission), each placed on the opposite side of its camera. The illuminators use NIR LEDs with a wavelength of 850 nm and the cameras are equipped with an 850 nm NIR band-pass filter. The authors point out that using a standard feature-based stereo matching technique for finger vein recognition is difficult as it is hard to find a sufficient number of matched points between two images to obtain all of the deep-vein information, while area-based stereo-matching techniques have difficulties in extracting the vein lines robustly. Hence, they suggest a novel 3D representation as well as a feature extraction and matching strategy based on a lightweight convolutional neural network (CNN). At first, similar to other approaches, their set-up requires a camera calibration and stereo rectification. Afterwards, they generate elliptical approximations of the finger’s cross section at each horizontal point in the 2D images based on the detected finger outline in the three captured 2D images. Using those ellipses and the three 2D images, they can then establish a 3D coordinate system and generate a 3D finger model of the whole finger. Once this is completed, they can map the texture information of the 2D finger vein images onto the 3D finger model.
Afterwards, a 3D finger normalization is applied, which eliminates variations caused by Y-shift or Z-shift movements and pitch or yaw movements, with only the variations caused by rotation along the X-axis (roll movement) and X-shift movement remaining. These remaining variations are addressed in the subsequent feature extraction and comparison step, which utilizes two different CNNs to extract the vein texture information and the 3D finger geometry from the 3D representation. Those features are then compared using a CNN to establish the final comparison score after fusing the two separate scores. The authors also established a 3D finger vein database containing 8526 samples. Their experimental results demonstrate the potential of their proposed system. They also compared their approach with classical finger vein recognition approaches and three CNN-based ones and showed that it is superior in terms of recognition accuracy. Despite the promising results, the authors consider their work as a “preliminary exploration of a 3D finger vein verification system” and point out that substantial improvements still need to be made in order to improve the verification accuracy and to reduce the time consumption. An improvement in image quality is essential, which requires an improvement in their capturing device hardware, including the camera performance as well as the light path design and illumination control. Furthermore, the 3D reconstruction and texture mapping procedures introduce some errors, e.g., related to non-rigid transform, uneven exposure and shift transforms, which need to be addressed.
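The idea of building a 3D finger model from one ellipse per cross section can be sketched as follows. This is a simplified illustration, not the reconstruction of Kang et al.: it assumes axis-aligned ellipses whose centres and semi-axes are already known, whereas estimating those parameters from the three calibrated views is the actual difficulty. The function name and argument layout are hypothetical.

```python
import numpy as np

def stack_ellipses(centres, semi_axes, n_angles=72):
    """Build an (X * n_angles, 3) point cloud from one ellipse per x position.

    centres:   (X, 2) array with the (y, z) centre of each cross section
    semi_axes: (X, 2) array with the semi-axes (a, b) along y and z
    The x coordinate is simply the index of the cross section.
    """
    centres = np.asarray(centres, dtype=float)
    semi_axes = np.asarray(semi_axes, dtype=float)
    theta = np.linspace(0.0, 2.0 * np.pi, n_angles, endpoint=False)
    x = np.repeat(np.arange(len(centres), dtype=float), n_angles)
    # Broadcasting (X, 1) against (n_angles,) yields one row per cross section.
    y = (centres[:, :1] + semi_axes[:, :1] * np.cos(theta)).ravel()
    z = (centres[:, 1:] + semi_axes[:, 1:] * np.sin(theta)).ravel()
    return np.column_stack([x, y, z])
```

Texture mapping would then assign each surface point the grey value of the pixel it projects to in the camera that sees it best, a step that is omitted here.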

In [

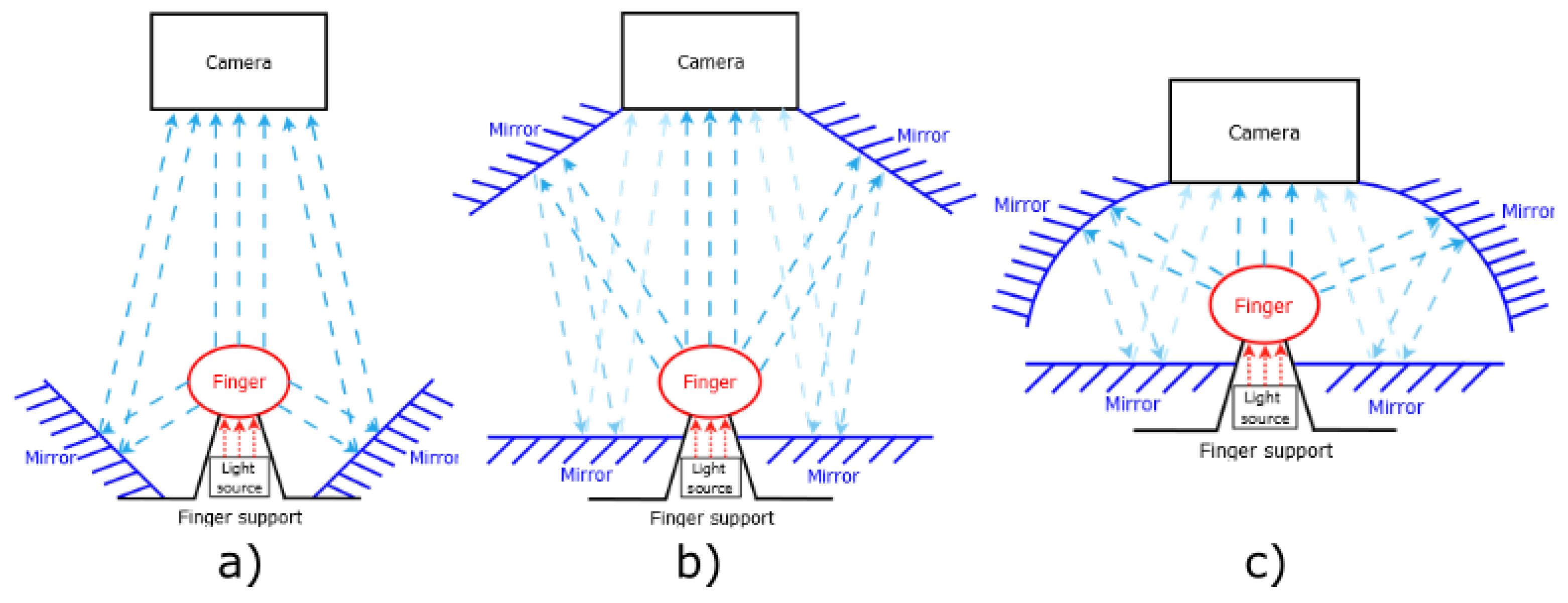

18], Prommegger et al. derived a capturing device design that achieves an optimal trade-off between cost (number of cameras) and recognition performance. Based on the single-view scores of [

19], they carried out several score-level and feature-level fusion experiments involving different fusion strategies in order to determine the best-performing set of views and feature extraction methods. The results showed that by fusing two independent views, in particular, the palmar and dorsal view, a significant performance gain was achieved. By adding a second feature extraction method to the two views, a further considerable performance improvement is possible. Taking the considerations about capturing time and capturing device costs into account, the preferred option is to design a two-perspective capture device capturing the palmar and dorsal view, applying a two-algorithm fusion including Maximum Curvature and SIFT. The second feature extraction method can be included without involving additional hardware costs just by extending the recognition tool chain. The drawback of this capturing device design is that a real 3D reconstruction of the vein structures will not be possible, so if that is taken into account, the capturing device should consist of at least three different cameras and three illumination units as, e.g., proposed by Veldhuis et al. [

14].

Durak et al. [20] designed and constructed a 3D finger vein scanner to implement a biometric authentication mechanism for strong, secure access control on desktop computers. Their system implements privacy-by-design by separating the biometric data from the identity information. Their 3D finger-vein-capturing device was constructed in cooperation with Global ID, IDIAP, HES-SO Valais–Wallis, and EPFL and captures the finger from three different perspectives (the palmar perspective and two further perspectives that are separated by a rotational angle of 60° to the palmar one). It uses three cameras but only one illumination unit on top of the device (opposite to the palmar camera). No further details about their 3D finger-vein-capturing device are available as the rest of the paper is about the implementation of the security protocols. The device seems to be at least similar to the one constructed by the University of Twente [13,14].

The LFMB-3DFB is a large-scale finger multi-biometric database for 3D finger biometrics established by Yang et al. [21]. They designed and constructed a multi-view, multi-spectral 3D finger imaging system that is capable of simultaneously capturing finger veins, finger texture, finger shape and fingerprint information. Their capturing device consists of six camera/illumination modules, which are located at a rotational distance of 60° to each other around the longitudinal axis of the finger (with the first camera being placed at the palmar view). The illumination parts consist of blue and near-infrared light sources, to be able to capture both the vein and the skin texture information. With the help of their proposed capturing device, they established LFMB-3DFB by collecting samples of 695 fingers from 174 volunteers. They also propose a 3D finger reconstruction algorithm which is able to include both finger veins and skin textures. As usual for multi-camera systems, at first an internal and external camera parameter calibration has to be performed. Afterwards they employ a K-Means, Canny edge detection and guided-filter-based finger contour segmentation, which is refined using a CNN in a second stage. The next step is a finger visual hull reconstruction based on the Space Carving algorithm, which is a simple and classical 3D reconstruction algorithm. Next, a template-based mesh deformation algorithm is applied in order to remove sharp edges and artefacts introduced by the visual hull reconstruction. The template used to represent the finger is a cylindrical mesh. Finally, a texture mapping stage is performed, where the skin and vein textures are mapped to the vertices of the 3D finger mesh. The authors performed several recognition performance experiments based on the single-perspective samples, the reconstructed 3D finger vein models, the 2D multi-perspective samples and a fusion (score- as well as feature-level) of the former, using several CNN-based feature extraction and comparison approaches.
Their results suggest that the best recognition performance can be achieved by using the multi-perspective samples in combination with feature-level fusion, but the performance of the 3D end-to-end recognition using their second CNN approach is almost as good as the multi-perspective one. They attribute the inferior performance of their 3D end-to-end approach to problems with the accuracy of their 3D finger reconstruction algorithm as well as problems with the 3D end-to-end recognition CNN, which they want to improve.

Quite recently, in December 2021, Momo et al. patented a “Method and Device for Biometric Vascular Recognition and/or Identification” [22]. From the title of the patent, it is not obvious that this device performs 3D or multi-perspective finger vein recognition. The finger vein capturing device design in the patent is similar to the one presented by [20] as it uses three cameras placed at the palmar view and separated by a rotational angle of

. It also uses only one illumination module, which is placed opposite to the palmar view camera, on top of the device. The patent claims that the device includes anti-spoofing measures and that the recognition is performed based on 3D finger vein templates where the vein information is extrapolated from a disparity map or by triangulation using two images provided by the cameras. For recognition, the patent only suggests that this can be performed based on “(deformable or scale-invariant) template matching, veins feature matching and/or by veins interest point detection and cross-verification”, so it does not give any further details about the feature extraction/comparison process.

The most recent publication on 3D finger vein recognition is the work completed by Xu et al. [23], published on the 4th of February, 2022. The authors propose a 3D finger vein verification system that extracts axial rotation invariant features to address the problem of changes in the finger posture, especially in contactless operation. This work is based on the authors’ previous work [17], i.e., it uses the same capturing device and also utilizes the dataset presented in [17] for performance evaluations. In contrast to their previous work, which had problems with the accuracy of the 3D finger vein model, here, the authors propose a silhouette-based 3D finger vein reconstruction optimization model to obtain a 3D finger point cloud representation with finger vein texture. This method is based on the assumption that the cross-sections of the finger are approximately elliptical and employs finger contours and epipolar constraints to reconstruct the 3D finger vein model. The final 3D model is obtained by stacking up ellipses from all cross-sections. Finally, the vein texture is mapped to the 3D point cloud by establishing the correspondences between the 3D point cloud model and the input 2D finger vein images. The next step is to input the 3D point clouds to a novel end-to-end CNN architecture called 3D Finger Vein and Shape Network (3DFVSNet). The network transforms the initial rotation problem into a permutation problem and finally transforms this to invariance (against rotations) by applying global pooling. These rotation-invariant templates are then compared to achieve the final matching result. The authors also extended their initial dataset (SCUT-3DFV-V1 [17]) to the new SCUT LFMB-3DPVFV dataset, which includes samples of 702 distinct fingers and is available on request. The authors claim that this is so far the largest publicly available 3D finger vein dataset and is three times larger than their initial one. They performed several experiments on both of their datasets (SCUT-3DFV-V1 and SCUT LFMB-3DPVFV) and showed that their proposed 3DFVSNet outperforms other 3D point-cloud-based network approaches (e.g., PointNet). They also compared their results with well-established vein recognition schemes and showed that their 3DFVSNet performs better on all tested benchmarks on the SCUT-3DFV-V1 and LFMB-3DPVFV datasets.
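Why turning rotation into a permutation leads to invariance can be shown with a toy example. The sketch below is not 3DFVSNet; it assumes a hypothetical feature map with one row per angular bin around the finger axis, so that an axial rotation by a whole number of bins becomes a circular shift of the rows. Any pooling that ignores row order (max, mean) then yields the same descriptor.

```python
import numpy as np

def rotate_axially(feature_map, steps):
    """Axial rotation by `steps` angular bins = circular shift of the rows."""
    return np.roll(feature_map, steps, axis=0)

def global_pool(feature_map):
    """Pool over the angular axis; max and mean do not depend on row order."""
    return np.concatenate([feature_map.max(axis=0), feature_map.mean(axis=0)])

rng = np.random.default_rng(0)
features = rng.random((36, 8))      # 36 angular bins x 8 hypothetical channels

original = global_pool(features)
rotated = global_pool(rotate_axially(features, 7))
print(np.allclose(original, rotated))
```

Rotations that are not a multiple of the bin width are only approximately covered by this argument, which is one reason why a learned network is used in practice rather than a fixed binning.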

3.2.3. Other Multi-Perspective Finger-Vein-Capturing Devices and Data Processing

A completely different approach to 3D finger vein authentication was presented by Zhan et al. [24]. Their approach is not based on the widely employed near-infrared light transmission principle but on photoacoustic tomography and is an improvement over their previous work on a photoacoustic palm vessel sensor [25]. They presented a compact photoacoustic tomography setup and a novel recognition algorithm. Compared to optical imaging technologies, photoacoustic tomography (PAT) is able to detect vascular structures that are deeper inside the tissue with a high spatial resolution because the acoustic wave scattering effect is three orders of magnitude less than the optical scattering in the tissue. Optical imaging suffers from light diffusion, while ultrasound imaging suffers from low sensitivity to blood vessels. PAT is able to output images with a higher signal-to-noise ratio (SNR) because non-absorbing tissue components will not generate any PA signals. The authors propose a finger-vein-capturing device that is able to cover four fingers (index, middle, ring and pinky) at a time and allows the data subjects to place their fingers directly on top of the imaging window to enhance the usability. The data subject’s fingers are placed on top of a water tank. The optical fiber output and ultrasound transducer are located inside the water tank. The ultrasound transducer has a 2.25 MHz centre frequency and an 8.6 cm lateral coverage to be able to cover all four fingers, and the device uses a laser with a wavelength of 1064 nm as light source. Both the light delivery and the acoustic detection path are combined using a dichroic cold mirror, which also reflects the photoacoustic signals generated by the finger vessels. To scan the whole finger, the laser is moved along the finger during a single capture. Using this PAT-based finger vein capturing device, the authors collected samples of 36 subjects to establish a test dataset. The software part of their proposed system includes the reconstruction of the 3D image from the 2D images acquired at each laser pulse by stacking all the 2D data based on their position and finally depth-encoding and maximum-intensity-projecting the data.
Afterwards, a vessel structure enhancement is conducted by first de-noising and smoothing the original reconstructed image using Gaussian and bilateral filters. Then, a 3D vessel pattern segmentation technique, which is essentially a binarisation method, including a nearest-neighbor search and vascular structure fine-tuning module (skeleton) is applied. The resulting finger vein templates are binary 3D finger vein representations. For comparison, a SURF key-point-based approach is employed. Note that while the vessel structure is essentially 3D information, the SURF features are intended for the 2D space. The authors conducted some verification experiments to determine the recognition performance of their approach and achieved accuracies of more than 99% or an EER of 0% and 0.13% for the right and left index finger, respectively. However, they did not compare their results to the well-established finger vein recognition schemes. A drawback of their capturing device design is the production costs due to the photoacoustic tomography setup and the parts that are needed to establish this setup.
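The stacking and depth-encoded maximum intensity projection steps can be sketched in a few lines. This is an illustration under assumptions, not the processing chain of Zhan et al.: the axis order (scan position, depth, lateral position) and the function names are hypothetical.

```python
import numpy as np

def stack_slices(slices):
    """Stack 2D slices (depth x lateral), ordered by scan position, into a volume."""
    return np.stack([np.asarray(s, dtype=float) for s in slices], axis=0)

def depth_encoded_mip(volume, depth_axis=1):
    """Return the maximum intensity projection and the depth index of each maximum.

    The depth index can be mapped to a colour to obtain a depth-encoded image.
    For positions where several depths share the maximum, the shallowest wins.
    """
    volume = np.asarray(volume, dtype=float)
    mip = volume.max(axis=depth_axis)
    depth = volume.argmax(axis=depth_axis)
    return mip, depth
```

The projection keeps the strongest vessel response along each depth column, while the depth map retains where along the column that response occurred.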

Liu et al. [26] presented an approach for the efficient representation of 3D finger vein data. Instead of the typical point cloud representation, they propose a so-called liana model. At first, they store the finger vein data in a binary tree, and then they propose some restrictions and techniques on the 3D finger vein data, which are caliber uniformity (represent each vein line with the same thickness), bine classification (veins are classified gradually from root to terminal, palmar to dorsal surface, and counter-clockwise), node partition (usually two smaller veins intersect and merge into a thicker one, which leads to a 3-connectable node, all other nodes should be partitioned into 3-connectable ones) and spread restriction (vein loops can occur if the veins are spread around the finger skeleton, which are not allowed in a binary tree representation; hence, spread restriction removes the loop structures without discarding any vein information) in order to simplify the representation. The authors did not capture any 3D finger vein data, nor did they conduct any empirical (recognition performance) evaluation of their proposed 3D finger vein representation.

Another point-cloud-based 3D finger vein recognition technique was proposed by Ma et al. [15]. They strive to augment the finger vein samples by solving the problem of the lack of depth information in 2D finger vein images. They constructed a finger-vein-capturing device using two cameras that are positioned along the finger (not the longitudinal axis) in a binocular set-up, which is able to perceive the depth information from the dorsal view of the finger, but no full 360° 3D vein information is available as only the dorsal perspective is captured. After applying a camera calibration and stereo rectification for their proposed capturing device set-up, they extract the vein contours and the edges of the finger as features. Based on those features and the pair of stereo images, a 3D point cloud is reconstructed. Two samples are compared by applying the iterative closest point (ICP) algorithm to the two 3D point-cloud-based templates. The authors claim that due to the more discriminative features in their 3D point cloud finger vein representation, the recognition performance (matching accuracy) is enhanced. However, they performed neither a recognition performance evaluation nor a comparison with other finger vein recognition schemes.
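For readers unfamiliar with ICP, the following is a minimal point-to-point variant. It is a generic textbook sketch, not the implementation of Ma et al.: it uses brute-force nearest neighbours (suitable for small clouds only) and the Kabsch solution for the rigid transform, and using the final RMS residual as a dissimilarity score is an assumption made here for illustration.

```python
import numpy as np

def best_rigid_transform(src, dst):
    """Least-squares rotation r and translation t with r @ src_i + t ~ dst_i (Kabsch)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    h = (src - src_c).T @ (dst - dst_c)          # 3 x 3 cross-covariance
    u, _, vt = np.linalg.svd(h)
    d = np.sign(np.linalg.det(vt.T @ u.T))       # guard against reflections
    r = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    t = dst_c - r @ src_c
    return r, t

def nearest_distances(a, b):
    """For each point in a, index of and distance to its nearest point in b."""
    dists = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=2)
    idx = dists.argmin(axis=1)
    return idx, dists[np.arange(len(a)), idx]

def icp(probe, reference, n_iter=30):
    """Align probe (N, 3) to reference (M, 3); return aligned probe and RMS residual."""
    aligned = np.asarray(probe, dtype=float).copy()
    reference = np.asarray(reference, dtype=float)
    for _ in range(n_iter):
        idx, _ = nearest_distances(aligned, reference)
        r, t = best_rigid_transform(aligned, reference[idx])
        aligned = aligned @ r.T + t
    _, dist = nearest_distances(aligned, reference)
    return aligned, float(np.sqrt(np.mean(dist ** 2)))
```

ICP only converges to the correct alignment if the initial pose is already close, so larger posture changes between two captures would require a coarse pre-alignment first.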

In [19], based on the multi-perspective finger vein data acquired in [2], the authors evaluated the effect of longitudinal finger rotation, which is present in most of the commercial off-the-shelf and custom-built finger-vein-capturing devices. This effect describes a rotation of the finger around its longitudinal axis between the capturing of the reference and the probe template. They showed that most of the well-established vein-pattern-based recognition algorithms are only able to handle longitudinal finger rotation to a small extent (

) before their performance degrades noticeably. The key-point-based ones (SIFT and DTFPM [27]) are able to compensate for a higher level of longitudinal finger rotation (

), but for longitudinal finger rotations of more than

, the recognition performance degradation renders the tested finger vein recognition schemes useless.

A natural extension to this work was to analyse the amount of longitudinal finger rotation present in available finger vein datasets (UTFVP [28], FV-USM [29], SDUMLA-HMT [30] and PLUSVein-FV3 [31]) in order to show that longitudinal finger rotation is present in current finger vein datasets. In [32], the authors proposed an approach to estimate the longitudinal finger rotation between two samples based on their rotation correction approach introduced in [33] and found that especially the FV-USM and the SDUMLA-HMT datasets are subject to a great amount of longitudinal finger rotation. A more sophisticated approach to detect the longitudinal finger rotation was presented in [34]: a CNN-based rotation detector that is able to estimate the rotational difference between vein images of the same finger without requiring any additional information. The experimental results showed that the rotation detector delivers accurate results in the range of particular interest (±30°), while for rotation angles >30°, the estimation error rises noticeably.

In [33], the authors suggest several approaches to detect and compensate for the longitudinal finger rotation, e.g., using the known rotation angle, using a geometric shape analysis, applying an elliptic pattern normalisation, or simply using a fixed rotation angle (independent of the real rotation angle).

In [35], the authors propose a method to generate a reference finger vein template that makes the comparison with the probe template during authentication invariant to longitudinal rotation. Their method, called multi-perspective enrolment (MPE), acquires reference samples at different rotation angles and then applies the CPN method proposed in [33]. For authentication, only a single perspective is acquired and the extracted template is compared to all enrolment templates, where the final comparison score is the maximum score of all the comparisons. The second technique for rotation-invariant probe templates proposed in [35] also captures several reference samples of the finger at different rotation angles, which are then normalized using CPN, but then combines the different perspectives by stitching the templates to form a so-called perspective cumulative finger vein template (PCT). This template can be thought of as a “rolled” finger vein template, which contains the vein information from all around the finger. During the authentication, the comparison score is calculated by sliding the probe template over the cumulative reference template. This work is extended in [36] by a method to reduce the number of different perspectives during enrolment of a longitudinal rotation invariant finger vein template, and thus a way to reduce the number of cameras and illumination units required in a finger vein capturing device. This new method is based on the good results we achieved with our multi-perspective enrolment (MPE) method in [35], as well as the insights into the optimal number of different perspectives gained in [18]. The new method is called Perspective Multiplication for Multi-Perspective Enrolment (PM-MPE) and essentially combines the MPE with the fixed angle correction method introduced in [33]. They showed that PM-MPE is able to increase the performance over the previously introduced MPE method. In [37], they extended the work on PM-MPE presented in [36] by applying it to other feature extraction schemes (it was only evaluated using Maximum Curvature in [36]) and by introducing so-called perspective shifts to MPE as well as by adding more than two pseudo perspectives in between each of the cameras for PM-MPE. Their experimental results showed that the perspective shifts on their own are not able to significantly improve the performance, but if the PMx-MPE is employed (additional pseudo perspectives), the well-established vein-pattern-based systems in particular benefit from the insertion of additional pseudo perspectives.
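The two comparison strategies, maximum over all enrolled perspectives (MPE) and sliding the probe over a "rolled" template (PCT), can be sketched with a toy similarity measure. This is not the code of [35]: the pixel-agreement similarity, the binary templates and the convention that axis 0 runs around the finger are assumptions made for illustration.

```python
import numpy as np

def similarity(a, b):
    """Toy similarity of two equally sized binary templates: fraction of equal pixels."""
    return float(np.mean(a == b))

def mpe_score(probe, enrolment_templates):
    """MPE: compare the probe to every enrolled perspective and keep the maximum."""
    return max(similarity(probe, ref) for ref in enrolment_templates)

def pct_score(probe, cumulative):
    """PCT: slide the probe around the rolled template (with wrap-around), keep the best."""
    height, circumference = probe.shape[0], cumulative.shape[0]
    best = 0.0
    for shift in range(circumference):
        rows = np.arange(shift, shift + height) % circumference
        best = max(best, similarity(probe, cumulative[rows]))
    return best
```

MPE needs one comparison per enrolled perspective, whereas PCT needs one comparison per shift position, so the step size of the shift determines its cost.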

In [3], Prommegger et al. presented a novel multi-camera finger vein recognition system that captures the vein pattern from multiple perspectives during enrolment and recognition (as a further extension to our previously proposed PM-MPE approach). In contrast to existing multi-camera solutions using the same capturing device for enrolment and recognition, the proposed approach utilises different camera positioning configurations around the finger during enrolment and recognition in order to achieve an optimal trade-off between the recognition rates (minimising the rotational distance between the closest enrolment and recognition sample) and the number of cameras (perspectives involved) needed. This new approach is called Combined Multi-Perspective Enrolment and Recognition (MPER) and is designed to achieve fully rotation-invariant finger vein recognition. Like the previous PM-MPE approach, the robustness against longitudinal finger rotation is achieved by acquiring multiple perspectives during enrolment and now also during recognition. For every recognition attempt, the acquired probe samples are compared to the corresponding enrolment samples, which leads to an increased number of comparisons in contrast to the PM-MPE approach. The proposed capturing devices for MPER were not actually built, but have been simulated using the PLUSVein-FR dataset [2], which allowed them to easily test a group of different sensor configurations in order to find the best-performing one. Their experimental results confirmed the rotation invariance of the proposed approach and showed that this can be achieved by using as few as three cameras in both devices. This method is solely based on well-established vein recognition schemes and a maximum rule score level fusion and thus does not need any costly 3D reconstruction and matching methods like other proposed multi-camera recognition systems, e.g., [17], do. The computation time of our proposed MPER approach is comparable to that of existing solutions, so it can be used in real-world applications. MPER enables both the enrolment and the recognition-capturing device to be equipped with three cameras. Most of the other proposed multi-perspective finger-vein-capturing devices use three cameras as well [13,17,22]. A comparison with those systems shows that the actual placement of the cameras is important as well. If the same methodology as that proposed for MPER is used for these proposed capturing devices [13,17,22], only the configuration of MPER-120°/3 achieves rotational invariance.
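The effect of camera placement on the rotational distance between the closest enrolment and recognition perspective can be explored with a small simulation. This is only a geometric sketch, not the evaluation methodology of [3]; the function name and the example configurations are hypothetical and were chosen purely to show that two devices with the same number of cameras can differ in their worst case.

```python
import numpy as np

def worst_case_distance(enrol_deg, probe_deg, step=0.5):
    """Largest angular gap (in degrees) between the closest enrolment/probe
    perspective pair, taken over all longitudinal finger rotations."""
    enrol = np.asarray(enrol_deg, dtype=float)[:, None, None]
    probe = np.asarray(probe_deg, dtype=float)[None, :, None]
    rotation = np.arange(0.0, 360.0, step)[None, None, :]
    # Wrap every angular difference into [-180, 180) before taking its magnitude.
    diff = np.abs((enrol - probe - rotation + 180.0) % 360.0 - 180.0)
    return float(diff.min(axis=(0, 1)).max())

# Identical devices: the 120 degree camera spacing leaves gaps of up to 60 degrees.
print(worst_case_distance([0, 120, 240], [0, 120, 240]))
# Hypothetical recognition device with a different spacing closes those gaps.
print(worst_case_distance([0, 120, 240], [0, 40, 80]))
```

A smaller worst-case distance means that, whatever the finger rotation, some enrolment and probe perspective pair lies within the range that the recognition scheme can tolerate.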