Abstract

It is necessary to establish the relative performance of existing optical flow approaches in airborne scenarios with thermal cameras. This study investigated the performance of a dense optical flow algorithm on 14-bit radiometric images of the ground. Sparse techniques that rely on feature matching perform very well with airborne thermal data in high-contrast thermal conditions, but they suffer in low-contrast scenes, where there are fewer detectable and distinct features in the image. On the other hand, some dense optical flow algorithms are much more amenable to parallel processing than those that rely on feature detection and tracking. A Long-Wave Infrared (LWIR) micro-sensor and a PX4Flow optical sensor were mounted looking downwards on a drone. We compared the optical flow signals of a representative dense optical flow technique, the Image Interpolation Algorithm (I2A), with those of the Lucas–Kanade (LK) algorithm in OpenCV and with the visible-light optical flow results from the PX4Flow, in both X and Y displacements. The I2A was found to be generally comparable in performance to the LK and better in cold-soaked environments, while suffering from the aperture problem in some scenes.

1. Introduction

Robust navigation is a desirable capability for Unmanned Aerial Vehicles (UAVs). Many UAVs rely on the Global Positioning System (GPS) to sense their position. However, GPS requires a clear view of the sky to operate reliably, which limits its use in certain working environments, such as high-density urban areas, inside buildings, underground, or in areas where the signal is subject to deliberate or inadvertent interference. Additionally, the vertical error of GPS can be several meters because the layers of the atmosphere refract and delay the signals transmitted between the satellites and the receivers [1]. These issues make GPS-based navigation unreliable in confined spaces and indoors.

Unlike GPS-based navigation systems, vision-based systems do not rely on an unobstructed view between the UAV and the satellites. Instead, they rely on on-board sensors, such as visible-light colour cameras or thermal sensors. Vision-based systems can provide real-time information about the surrounding dynamic environment and are resistant to conventional jamming. Hence, they can be a good solution to help UAVs navigate in GPS-denied areas.

2. Related Work

There have been efforts to develop both active and passive vision-based techniques for navigation, yet only a limited number of techniques have been attempted in darkness, despite the potential to double the operating period. Thermal sensors are one imaging technology that can operate in darkness in some environments, but they have seen limited use for navigation for many reasons, such as the high cost of and difficulty in acquiring thermal sensors, limited access to airspace, and the operational difficulties experienced at night [2].

Early researchers tried to utilise both the visible and infrared spectra to help robots navigate in dark and low-visibility environments.

Brunner et al. [3] combined the visible spectrum with the infrared spectrum to aid Simultaneous Localization And Mapping (SLAM) in low-light and smoke conditions. The results showed a significant increase in SLAM performance in such conditions for unmanned ground vehicles. Papachristos et al. [4] developed a fusion of thermal and inertial sensing for small UAVs navigating through dark, GPS-denied environments. The study relied on a Long-Wave Infrared (LWIR) sensor to detect thermal objects, combined with the Support Vector Machine technique, to accomplish real-time localisation. In another approach, Khattak et al. [5] proposed a multi-modal system that fuses visible-light and infrared imagery with inertial sensors to help a small UAV manoeuvre in a dark tunnel.

However, these approaches only utilised rescaled 8-bit data instead of the full radiometric 14-bit data. The reason is that the vast majority of open-source computer vision libraries, such as OpenCV, are designed with 8-bit processing in mind [6,7].

A rescaled 8-bit thermal frame has lower contrast because 6 bits of information are discarded [8]. Furthermore, the study in [9] shows that, with the same algorithm, utilising the full radiometric input may produce better performance, with fewer accumulated errors over time, than its rescaled counterpart.

Khattak et al. [10] proposed a framework to use full radiometric 14-bit data from an LWIR sensor to navigate in a degraded visual environment. The framework was tested in an underground mine, demonstrating better results compared to a rescaled version. Recently, the same team [6] developed a thermal–inertial system for tracking features to determine a path for a small UAV with full 14-bit radiometric resolution.

These works have shown that thermal sensors can provide valuable information for navigating in low-light situations. However, they are computationally demanding, which limits their use on small UAVs and in real-time applications. In contrast, birds and insects use optical flow to navigate in cluttered environments [11], which has inspired biologically motivated optical flow techniques. Recent studies have shown that honeybees rely on optical flow for most of their navigation tasks, such as collision avoidance [12] and landing [13,14]. This suggests that optical flow is an efficient and effective way to achieve more robust autonomous navigation for small UAVs.

Optical flow is defined as the apparent motion of image intensities or brightness patterns across successive frames [15]. The PX4Flow sensor [16] is one of the most widely used optical flow sensors and has been integrated successfully into many studies [17,18]. Optical flow can be used for active navigation, such as frontal object avoidance [19] or calculating time to impact [20], or passively to collect information about the current state of an aircraft, such as pitch and roll [21], descent angle [22] and lateral drift [23] for fixed-wing aircraft, and to perform altitude control for automatic landing [13,14]. Furthermore, the feasibility of using optical flow with thermal imaging for navigation has been demonstrated in [24,25].

The paper is organised in 10 sections. Section 3 outlines our previous work and the motivation for this study. Section 4 presents the I2A in one and two dimensions. Section 5 and Section 6 outline our hardware and software architectures. Section 7 describes our assessment methodology, including the flying platform, the experimental site and its conditions during the two trials. Section 8 and Section 9 report and analyse the data collected during the flights. Section 10 outlines the lessons learnt and future research directions.

3. Contributions

Our previous studies [26,27] began to systematically explore the concept of airborne thermal flow. These works utilised the LK in OpenCV. Additionally, the characteristics of thermal flow over 24 h were investigated and compared with the output of a visible-light optical flow sensor. One of the lessons learned from [27] was that thermal flow from the LK performed poorly from midnight until just before sunrise, owing to the much lower contrast in thermal data long after sunset.

While the LK technique yields reliable results with relatively low computational demand, it is sensitive to noise because it must compute derivatives, and it needs distinct features between frames even when contrast is low. As a result, our LK-based thermal flow implementation suffered in cold-soaked environments, where thermal images have much less contrast. Considering robustness to noise and suitability for sub-pixel movements in airborne applications, dense techniques such as the I2A [28] might sometimes be a better alternative to the LK for lower-contrast frames, such as those captured in cold-soaked environments.

The I2A was first proposed by Srinivasan [28], who demonstrated its robustness to noise and showed that it requires neither feature detection and tracking between frames nor high-order spatial or temporal derivatives of images. Our version of the I2A was tailored for airborne applications by adding the predicted motion during flight to increase precision. Additionally, we aimed to explore the use of the I2A with low-resolution, low-contrast thermal images.

Furthermore, our I2A implementation utilised full radiometric 14-bit frames from the thermal sensor, while the LK in OpenCV only accepts 8-bit scaled intensity images. This means that the I2A had an initial advantage of 6 more bits of depth in its input, potentially outperforming the LK without necessarily being the superior algorithm for the situation. On the other hand, the LK in OpenCV is well known for its accuracy and has become a “go-to” implementation in real-time applications. It is therefore still valid to evaluate the I2A against the OpenCV implementation of the LK as well as against the PX4Flow.

4. Optical Flow Computation

The I2A has demonstrated its effectiveness in computing optical flow from the image plane at a much lower computational cost [28], and it performs best with small image displacements [29].

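The I2A is used to compute the motion of one image or subimage with respect to another [30]. It estimates the displacement between a reference image $f_0(x)$ and the current image $f(x)$, across an observation window of arbitrary size, shape and spatial weighting, relative to the deformation between two reference images $f_1(x) = f_0(x-k)$ and $f_2(x) = f_0(x+k)$, which are copies of $f_0$ shifted by $\pm k$ pixels. For small displacements of the sensor, it is assumed that the input $f(x)$ is approximated by $\hat{f}(x)$, a weighted linear combination of $f_0$, $f_1$ and $f_2$:

$$\hat{f}(x) = f_0(x) + \frac{s}{2k}\left[f_1(x) - f_2(x)\right] \tag{1}$$

where $s$ is the angular velocity, expressed as the shift in pixels per frame, $k$ is a reference shift in pixels that is small, but larger than any expected motion, and $\alpha = s/k$ specifies the deformation of $\hat{f}$, normalised so that the $2k$-pixel interval between $f_1$ and $f_2$ maps onto $[-1, 1]$. For the constraint of interpolation to apply, the value of $\alpha$ will range between −1.0 and 1.0. Within an observation window $\Psi(x)$, $\alpha$ is solved by minimising the mean squared error between $f$ and $\hat{f}$:

$$E = \sum_{x} \Psi(x)\left[f(x) - f_0(x) - \frac{\alpha}{2}\left(f_1(x) - f_2(x)\right)\right]^2 \tag{2}$$

and setting the derivative of (2) with respect to $\alpha$ to zero yields the expression

$$\alpha = \frac{2\sum_{x}\Psi(x)\left[f(x) - f_0(x)\right]\left[f_1(x) - f_2(x)\right]}{\sum_{x}\Psi(x)\left[f_1(x) - f_2(x)\right]^2} \tag{3}$$

where $\alpha$ is the normalised position of $f$ between $f_1$ and $f_2$, and thus $s = \alpha k$ is the shift between $f_0$ and $f$ in pixels.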
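To make the one-dimensional derivation concrete, the Python sketch below evaluates the closed-form solution for α directly on a pair of intensity profiles and converts it to a shift in pixels. It is a minimal illustration under our own assumptions, not our flight implementation: the function name `i2a_shift_1d` is hypothetical, the window defaults to uniform weights, border samples without both shifted references are skipped, and the predicted-motion term of our airborne version is omitted.

```python
import math


def i2a_shift_1d(f0, f, k, psi=None):
    """Estimate the shift s (pixels) of f relative to f0 with the 1D I2A.

    f0, f : equal-length sequences of intensities (reference and current)
    k     : reference shift in pixels, chosen larger than any expected |s|
    psi   : optional observation-window weights Psi(x); uniform if None
    """
    n = len(f0)
    if psi is None:
        psi = [1.0] * n
    num = 0.0  # sum of Psi * (f - f0) * (f1 - f2)
    den = 0.0  # sum of Psi * (f1 - f2)^2
    # Only samples where f1(x) = f0(x - k) and f2(x) = f0(x + k) both exist.
    for x in range(k, n - k):
        ref_diff = f0[x - k] - f0[x + k]  # f1(x) - f2(x)
        cur_diff = f[x] - f0[x]           # f(x) - f0(x)
        num += psi[x] * cur_diff * ref_diff
        den += psi[x] * ref_diff * ref_diff
    if den == 0.0:
        return None  # featureless window: the shift is undetermined
    alpha = 2.0 * num / den  # normalised position of f between f1 and f2
    return alpha * k         # s = alpha * k


# Usage: a sinusoid moved right by 1.5 pixels, i.e. f(x) = f0(x - 1.5).
period, k, s_true = 80, 4, 1.5
n = 208  # leaves n - 2k = 200 usable samples
f0 = [math.sin(2 * math.pi * x / period) for x in range(n)]
f = [math.sin(2 * math.pi * (x - s_true) / period) for x in range(n)]
print(round(i2a_shift_1d(f0, f, k), 2))  # prints 1.52
```

The small bias (1.52 instead of 1.5) comes from the linear-interpolation assumption behind the approximation of $f$ by $\hat{f}$; for this test signal it grows as $|s|$ approaches $k$, which is one reason $k$ should be only modestly larger than the expected motion.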

To reduce aliasing caused by high-frequency components, the images are passed through a low-pass filter, which can be a Gaussian or a square convolution kernel, before computing optical flow.
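A separable Gaussian low-pass filter of the kind described above can be sketched in pure Python as follows. The kernel size of 9 matches the 9 × 9 Gaussian reported for the flight tests; the standard deviation `sigma=1.5` and the edge-replication border handling are our assumptions, since the text does not state them, and the helper names are hypothetical.

```python
import math


def gaussian_kernel_1d(size=9, sigma=1.5):
    """Normalised 1D Gaussian weights (sigma is an assumed value)."""
    half = size // 2
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(-half, half + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def _filter_rows(img, kernel):
    """Convolve every row with a symmetric kernel, replicating edge pixels."""
    half = len(kernel) // 2
    out = []
    for row in img:
        n = len(row)
        out.append([
            sum(kernel[j] * row[min(max(x + j - half, 0), n - 1)] for j in range(len(kernel)))
            for x in range(n)
        ])
    return out


def gaussian_blur(img, size=9, sigma=1.5):
    """Separable size x size Gaussian low-pass filter; img is a list of rows."""
    kernel = gaussian_kernel_1d(size, sigma)
    rows_done = _filter_rows(img, kernel)
    cols = [list(c) for c in zip(*rows_done)]      # transpose
    cols_done = _filter_rows(cols, kernel)
    return [list(r) for r in zip(*cols_done)]      # transpose back


# Usage: a checkerboard (the highest spatial frequency) is almost removed.
checker = [[(-1.0) ** (x + y) for x in range(20)] for y in range(20)]
smoothed = gaussian_blur(checker)
print(max(abs(v) for row in smoothed[5:15] for v in row[5:15]) < 0.01)  # True
```

Filtering rows and then columns with the same 1D kernel is equivalent to convolving with the full 9 × 9 outer-product kernel, at a lower cost per pixel.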

Extension to Two Dimensions

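The theory can be extended to compute optical flow in two dimensions. Assuming that the motion has two degrees of freedom, with a small shift, the shifted image can be presented as:

$$\hat{f}(x,y) = f_0(x,y) + \frac{\alpha}{2}\left[f_1(x,y) - f_2(x,y)\right] + \frac{\beta}{2}\left[f_3(x,y) - f_4(x,y)\right] \tag{4}$$

where $f_1 = f_0(x-k, y)$, $f_2 = f_0(x+k, y)$, $f_3 = f_0(x, y-k)$ and $f_4 = f_0(x, y+k)$, and $\alpha$ and $\beta$ are the normalised shifts in X and Y.

Similar to Equation (3), the parameters $\alpha$ and $\beta$ can be solved by setting $\partial E/\partial \alpha$ and $\partial E/\partial \beta$ to zero, where $E = \sum_{x,y}\Psi(x,y)\left[f(x,y) - \hat{f}(x,y)\right]^2$.

The resulting simultaneous equations may be expressed in matrix form, as follows:

$$\begin{bmatrix} A_{11} & A_{12} \\ A_{12} & A_{22} \end{bmatrix}\begin{bmatrix} \alpha \\ \beta \end{bmatrix} = \begin{bmatrix} B_1 \\ B_2 \end{bmatrix} \tag{5}$$

where

$$A_{11} = \sum \Psi\,(f_1 - f_2)^2, \quad A_{12} = \sum \Psi\,(f_1 - f_2)(f_3 - f_4), \quad A_{22} = \sum \Psi\,(f_3 - f_4)^2,$$
$$B_1 = 2\sum \Psi\,(f - f_0)(f_1 - f_2), \quad B_2 = 2\sum \Psi\,(f - f_0)(f_3 - f_4).$$

The 2 × 2 system must be inverted at each point in the image, after each of the product images that define the matrix coefficients has been convolved with a two-dimensional kernel $\Psi$, which localises each motion computation. Gaussian kernels were used throughout the flight tests.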
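The two-dimensional case can be sketched in the same way. For brevity, the hypothetical function `i2a_shift_2d` below accumulates the 2 × 2 system for α and β over a single observation window and solves it with Cramer's rule, instead of convolving every product image with Ψ and solving at each pixel as described above. Uniform weights by default and the relative singularity threshold are our assumptions.

```python
import math


def i2a_shift_2d(f0, f, k, psi=None):
    """Estimate (sx, sy) in pixels between f0 and f (lists of rows, f[y][x])
    with the 2D I2A over one observation window (uniform if psi is None)."""
    h, w = len(f0), len(f0[0])
    a11 = a12 = a22 = b1 = b2 = 0.0
    for y in range(k, h - k):
        for x in range(k, w - k):
            wgt = 1.0 if psi is None else psi[y][x]
            gx = f0[y][x - k] - f0[y][x + k]   # f1 - f2 (reference shift along X)
            gy = f0[y - k][x] - f0[y + k][x]   # f3 - f4 (reference shift along Y)
            d = f[y][x] - f0[y][x]             # f - f0
            a11 += wgt * gx * gx
            a12 += wgt * gx * gy
            a22 += wgt * gy * gy
            b1 += 2.0 * wgt * d * gx
            b2 += 2.0 * wgt * d * gy
    det = a11 * a22 - a12 * a12
    if det <= 1e-12 * (a11 + a22) ** 2:
        return None  # (near) singular system, e.g. only one-dimensional structure
    alpha = (b1 * a22 - b2 * a12) / det   # Cramer's rule
    beta = (a11 * b2 - a12 * b1) / det
    return alpha * k, beta * k


def scene(sx, sy, size=88, period=80):
    """Synthetic texture f0(x - sx, y - sy) made of two crossed sinusoids."""
    a = 2 * math.pi / period
    return [[math.sin(a * (x - sx)) + math.sin(a * (y - sy)) for x in range(size)]
            for y in range(size)]


sx, sy = i2a_shift_2d(scene(0.0, 0.0), scene(1.5, -1.0), k=4)
print(round(sx, 2), round(sy, 2))  # prints 1.52 -1.02
```

Solving the 2 × 2 system in closed form keeps the per-pixel work fixed and branch-free apart from the singularity check, which is what makes the method attractive for SIMD hardware.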

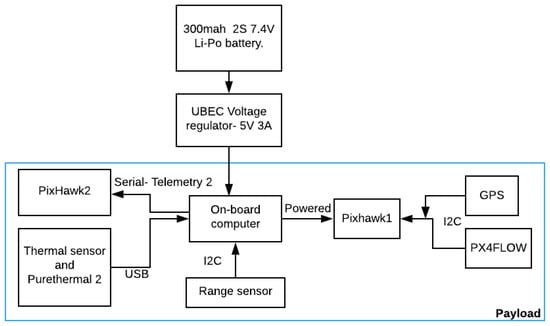

5. Hardware Implementation

This section describes the hardware system used in the experiment. All the components had to satisfy three constraints: low cost, light weight and small size. Figure 1 shows a block diagram of all the components of our system. A lithium-polymer battery powered the system via a 5 V voltage regulator, which maintained a constant supply voltage throughout the experiment.

Figure 1.

Hardware implementation block diagrams of the system.

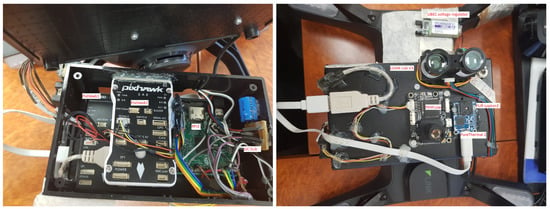

Figure 2 shows our constructed payload in its housing frame, with components labelled in red.

Figure 2.

Inside (on the left) and outside (on the right) of the system.

5.1. Thermal Sensor

The FLIR Lepton 3 (Teledyne FLIR LLC., Wilsonville, OR, USA) was chosen in this study due to its light weight and low cost. The FLIR Lepton 3 is an uncooled LWIR thermal sensor with a 56° field of view [31]. The sensor has a low angular error of 0.03°, which is adequate without any calibration [26]. Additionally, the sensor satisfies both the weight and size constraints, at 0.9 g and 11.8 mm × 12.7 mm × 7.2 mm. The sensor can output 14-bit radiometric thermal images with a resolution of 160 × 120 pixels at 8.7 Hz.

Flat Field Correction

The thermal sensor has a built-in shutter for Flat Field Correction (FFC), which is intended for stationary use. The FFC compensates for errors that build up over time during operation and is essential when the sensor captures the same scene for a prolonged period, to prevent ghosting [31]. During the FFC process, the sensor freezes for a short time depending on the model (0.3–2 s), which is undesirable for navigation applications. Since the Lepton is mounted on a constantly moving aircraft, it is essential to disable the FFC to obtain a continuous stream of frames.

5.2. Interfacing with the Lepton Sensor

The Lepton is mounted on a PureThermal 2 board, which weighs 50 g and measures 30 mm × 18 mm. The board has its own ARM microprocessor, which can execute Lepton commands by itself, thus freeing up processing that would otherwise be done by the main computer, the Raspberry Pi 3. The PureThermal 2 board interfaces with the Raspberry Pi via USB.

Range Sensor

A LIDAR-Lite v3 (Garmin Ltd., Lenexa, KS, USA) [32] was used in this study due to its low weight of only 22 g and its low power consumption. The range sensor was used for post-flight altitude verification.

5.3. Onboard Processor

The Raspberry Pi 3 (Pi 3) (Raspberry Pi Foundation, Cambridge, UK) was used in this study to obtain and save 14-bit raw thermal images from the Lepton 3 for later processing. The Pi 3 satisfies both weight and size constraints for small aircraft applications.

5.4. PixHawk and PX4Flow

The PixHawk was powered by the Pi 3 via the “Telemetry 2” port. The PX4Flow interfaces with the PixHawk via the I2C communication protocol. ULog data files, which captured the optical flow signals from the PX4Flow, were saved on the PixHawk.

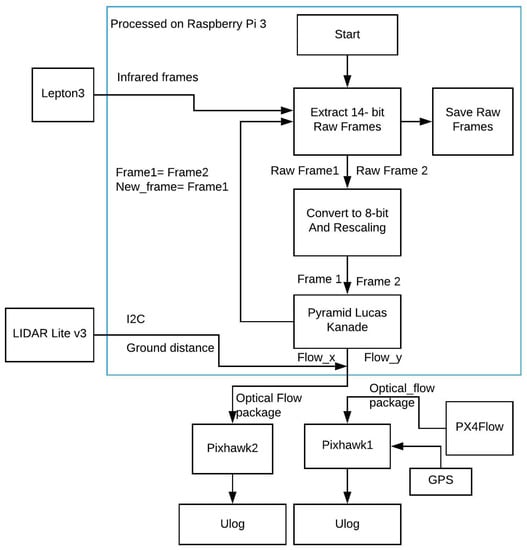

6. Software Implementation

Figure 3 shows the software structure of our payload. The Pi 3 is the main computer of the system and first requests raw 14-bit data from the Lepton 3. The raw 14-bit data were saved for later processing. Each pair of consecutive 14-bit frames was then downscaled to 8 bits with a common scale, using the technique in [27]. The two rescaled frames were processed with the LK in OpenCV to determine the 2D optical flow vector (flow_x, flow_y). The ground distance was also received from the LIDAR and sent along with the optical flow vector to PixHawk2 in a MAVLink “OPTICAL_FLOW_RAD” message, to be saved in ULog format. The PX4Flow data were saved on PixHawk1.

Figure 3.

Software algorithm block diagram.

In this study, the system was built with OpenCV 4.5.5, NumPy 1.19.2 and Python 3.8.

6.1. Lucas–Kanade Algorithm in OpenCV

The LK implementation in OpenCV uses Shi–Tomasi [33] corner feature detection to identify distinguishable features across two images. The LK optical flow technique operates based on three assumptions [15]:

- Brightness constancy: The intensity of a given scene point should not change between two frames.

- Small movements: The displacement between two frames should not be too large.

- Spatial coherence: The neighbouring pixels should move together and have the same motion across two frames.

These three conditions must be met to compute the optical flow field between two frames with both the LK and the I2A.
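To show how these assumptions turn into a computation, the sketch below implements the basic least-squares step of the LK method for a single window. Brightness constancy gives $I_x u + I_y v + I_t = 0$ at each pixel, and spatial coherence lets all pixels in the window share one $(u, v)$. OpenCV's `cv2.calcOpticalFlowPyrLK` is pyramidal and iterative and works on small windows around Shi–Tomasi corners, so this pure-Python sketch, with the hypothetical name `lk_window`, is only an illustration of the principle, not the OpenCV implementation.

```python
import math


def lk_window(f0, f):
    """Single-window, single-iteration Lucas–Kanade estimate (u, v) in pixels.

    Spatial gradients use central differences on f0 and the temporal
    gradient is f - f0; the 2x2 normal equations are solved in closed form.
    """
    h, w = len(f0), len(f0[0])
    sxx = sxy = syy = sxt = syt = 0.0
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            ix = (f0[y][x + 1] - f0[y][x - 1]) / 2.0
            iy = (f0[y + 1][x] - f0[y - 1][x]) / 2.0
            it = f[y][x] - f0[y][x]
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if det <= 1e-12 * (sxx + syy) ** 2:
        return None  # gradient matrix is (near) singular
    u = (-sxt * syy + syt * sxy) / det
    v = (-syt * sxx + sxt * sxy) / det
    return u, v


def scene(sx, sy, size=82, period=80):
    """Synthetic texture f0(x - sx, y - sy) made of two crossed sinusoids."""
    a = 2 * math.pi / period
    return [[math.sin(a * (x - sx)) + math.sin(a * (y - sy)) for x in range(size)]
            for y in range(size)]


u, v = lk_window(scene(0.0, 0.0), scene(0.5, -0.3))
print(round(u, 2), round(v, 2))  # prints 0.5 -0.3
```

The explicit derivatives are where the sensitivity to noise mentioned in Section 3 enters: every noisy pixel contributes directly to `ix`, `iy` and `it`.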

6.2. Automatic Gain Control

In modern thermal sensors such as the FLIR Lepton 3, Automatic Gain Control (AGC) is turned on by default to give the user the most detail when the average temperature of the scene is changing. When the sensor first captures radiometric thermal data, the data are in a raw 14-bit format. However, the raw 14-bit data must be converted down to 8 bits to be visualised on electronic displays. Additionally, the LK implementation in OpenCV only accepts 8-bit input data for optical flow estimation. Hence, it is necessary to convert the data from 14 bits to 8 bits.

By default, the AGC built into the sensor is responsible for this conversion. However, a problem arises when there is a drastic change in the scene temperature, such as when a significantly hotter or cooler object enters or exits the scene. One example, in Figure 4, shows two consecutive 8-bit frames processed with AGC while a hot cup moves out of the scene.

Figure 4.

Automatic Gain Control (AGC) changes the contrast in an image when a hot cup moves into a scene: (1)–(2).

Additionally, Table 1 shows the mean and the 10th and 90th percentiles of the pixel intensity of the images shown in Figure 4. The pixel intensity of an image changes dramatically when a very hot or cold object enters or exits the scene, owing to the AGC.

Table 1.

Pixel intensity values with Automatic Gain Control (AGC) of two images shown in Figure 4.
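The statistics reported in the pixel intensity tables (mean and the 10th and 90th percentiles) can be computed with Python's standard `statistics` module, as in the sketch below. The function name `intensity_stats` is hypothetical, and the choice of the `"inclusive"` quantile method (linear interpolation between order statistics) is our assumption, since the interpolation rule behind the tables is not stated.

```python
import statistics


def intensity_stats(img):
    """Mean, 10th and 90th percentiles of pixel intensity (img: list of rows)."""
    pixels = [float(v) for row in img for v in row]
    deciles = statistics.quantiles(pixels, n=10, method="inclusive")
    return statistics.mean(pixels), deciles[0], deciles[-1]


# Usage: a synthetic 10 x 10 frame with intensities 0..99.
frame = [[x + 10 * y for x in range(10)] for y in range(10)]
print(intensity_stats(frame))  # (49.5, 9.9, 89.1)

# An AGC-like gain and offset change shifts all three statistics at once,
# even though the scene itself has not changed.
regained = [[2 * v + 10 for v in row] for row in frame]
print(intensity_stats(regained))
```

Comparing these three numbers between consecutive frames is a quick check of whether brightness constancy is plausible for a given frame pair.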

The AGC was designed to show the maximum possible dynamic range of the image, which is good for inspection purposes. However, this may cause problems for many feature matching algorithms due to the drastic change in contrast between images. Additionally, rescaling 14-bit images with the AGC also violates the first condition of the LK technique: brightness constancy.

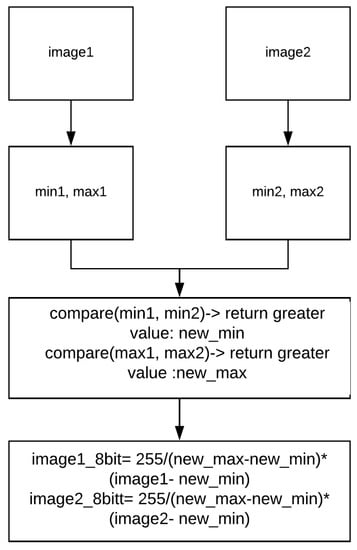

6.2.1. Rescaling Technique

This section summarises our technique from [27] to convert 14-bit raw thermal images to 8-bit images while maintaining the contrast between two images.

Our technique takes two 14-bit images as input, and then rescales these two frames based on their maximum and minimum pixel intensity. Figure 5 shows the conversion of a pair of 14-bit images, image1 and image2, to two 8-bit images.

Figure 5.

A pair of images with the same scaling value.
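The shared-scale idea can be sketched as follows. We assume here that the common scale is taken from the joint minimum and maximum of both 14-bit frames, so that a given raw value maps to the same 8-bit value in both images; the exact rule in [27] may differ, and the function name `rescale_pair_to_8bit` is hypothetical.

```python
def rescale_pair_to_8bit(img1, img2):
    """Rescale two 14-bit frames (lists of rows) to 8 bits with one shared scale.

    Assumption: the scale is derived from the joint min and max of both
    frames, so identical raw values receive identical 8-bit values.
    """
    values = [v for img in (img1, img2) for row in img for v in row]
    lo, hi = min(values), max(values)
    span = max(hi - lo, 1)  # avoid division by zero for perfectly flat frames

    def convert(img):
        # Integer arithmetic keeps every output in the range 0..255.
        return [[(v - lo) * 255 // span for v in row] for row in img]

    return convert(img1), convert(img2)


a = [[8000, 8100]]
b = [[8050, 8200]]
print(rescale_pair_to_8bit(a, b))  # ([[0, 127]], [[63, 255]])
```

By contrast, stretching each frame independently (as an AGC-like per-frame min/max would) maps 8000 in the first frame and 8050 in the second both to 0, so the same 8-bit value would no longer correspond to the same temperature across the pair.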

Figure 6 shows the images from Figure 4 after rescaling with our technique. The brightness does not change, but in this case there are small artefacts in the second image.

Figure 6.

A pair from Figure 4 with our technique. In this extreme case, there are small artefacts, which are circled in red.

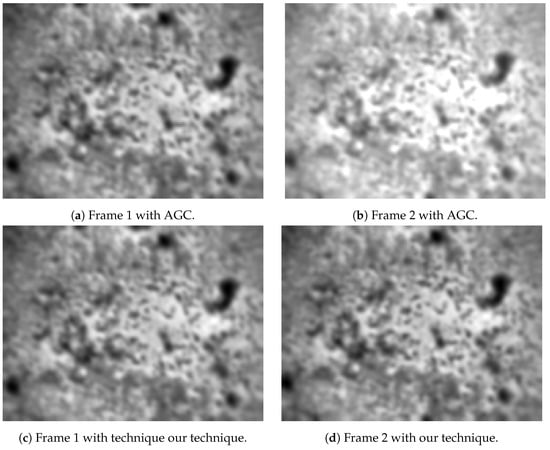

Figure 7 shows a pair of images captured from the flight, processed with AGC and our technique. Visually, a pair with AGC cannot be used for optical flow estimation due to corresponding pixels having different gains applied to their read-out. On the other hand, when applying our technique, the second rescaled image is able to maintain its contrast. Additionally, the undesired artefact effect is too small to be visually detected.

Figure 7.

Two consecutive captured frames with AGC enabled (a,b) and with our technique (c,d).

Table 2 shows the mean and the 10th and 90th percentiles of the pixel intensity of the images shown in Figure 7. With our technique, the same brightness is maintained across the two images for optical flow estimation.

Table 2.

Pixel intensity values of a pair of 8-bit images with AGC (first two rows) and our technique (last two rows).

Good, distinct features were found with the Shi–Tomasi algorithm [33]. The output of the algorithm is a single two-dimensional displacement vector, taken as the median over all good points found. Table 3 shows the parameter settings for the LK and Shi–Tomasi algorithms in OpenCV.

Table 3.

Setting parameters for LK optical flow and Shi–Tomasi corner detection algorithm.
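The step that collapses per-feature tracks into one flow vector can be sketched as below. In an OpenCV pipeline, the point pairs would come from `cv2.goodFeaturesToTrack` and `cv2.calcOpticalFlowPyrLK` (which returns the tracked points, a status array and an error array, reshaped here to plain `(x, y)` pairs); to keep the sketch self-contained it takes plain tuples, and the function name `median_flow` is hypothetical.

```python
from statistics import median


def median_flow(prev_pts, next_pts, status):
    """Collapse per-feature tracks into one (flow_x, flow_y) vector.

    prev_pts, next_pts: sequences of (x, y) feature positions in two frames
    status: sequence of 1/0 flags marking which features were tracked
    Returns None when no feature was tracked.
    """
    tracked = [(p, q) for p, q, ok in zip(prev_pts, next_pts, status) if ok]
    if not tracked:
        return None
    dx = [q[0] - p[0] for p, q in tracked]
    dy = [q[1] - p[1] for p, q in tracked]
    return median(dx), median(dy)


prev_pts = [(0, 0), (10, 10), (20, 5), (3, 3)]
next_pts = [(2, 1), (12, 11), (22, 6), (50, -40)]  # last track is an outlier
print(median_flow(prev_pts, next_pts, [1, 1, 1, 1]))  # (2.0, 1.0)
```

The median makes the single output vector insensitive to a minority of mistracked features, which a mean would not be.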

6.2.2. Benefit of 14-Bit Implementation with the I2A

Table 4 shows the pixel intensity statistics of a pair of 14-bit images, showing that the brightness is consistent across both images. Hence, there is no need to apply the conversion technique in Section 6.2.1 when using full radiometric 14-bit data.

Table 4.

Pixel intensity values of two unprocessed 14-bit images, which shows that they both have approximately the same contrast and overall pixel brightness.

Besides bypassing the problematic AGC and rescaling techniques, utilising full 14-bit radiometric data directly can provide better results than the 8-bit counterpart [9]. Additionally, the team in [6] showed that using full 14-bit radiometric data makes the algorithm more resilient to the occasional absence of data, owing to the additional 6 bits of data that would otherwise be lost during conversion.

In this experiment, the reference shift was 4 pixels in both the X and Y directions, and a 9 × 9 Gaussian kernel was used.

7. Assessment Methodology

This section shows how the tests were conducted, including the site of the experiment, flight plans, flying platform, weather conditions at the site and how the signals were analysed.

7.1. Flying Platform

The payload was mounted underneath a 3DR SOLO (3DR, Berkeley, CA, USA). The now-obsolete SOLO was used because it was available and could carry a payload of up to 500 g with a tolerable flight time of 10 min.

7.2. Field Experiment

The SOLO was programmed to fly one square lap at a constant height of 8 m with a target velocity of 8 m/s. Figure 8 shows the flight path in this experiment. The SOLO took off at point H, flew through points (1), (2), (3), (4) and (5), and then landed at (5).

Figure 8.

Flight plan in mission planner.

We carried out two flight trials in this study. The first trial was during a normal sunny day in autumn, while our second trial was during a cold and foggy day several months later in winter. Both trials were performed at the same field with the same flight plan.

The purpose of the first trial was to compare the performance of the LK, the I2A and the PX4Flow in normal conditions: on a sunny, clear-sky day with high thermal contrast. The second trial aimed to compare them in a cold-soaked, lower-contrast environment.

Table 5 shows the field conditions, including the minimum and maximum temperatures and the temperature and weather conditions at the time of each experiment [34].

Table 5.

Weather conditions at the experimental sites.

It was expected that the PX4Flow and the LK would perform worse in cold-soaked conditions, as learned from our previous study [27]. Additionally, we used the same flight plan at the same experimental site to evaluate LK and I2A performance in the two experiments.

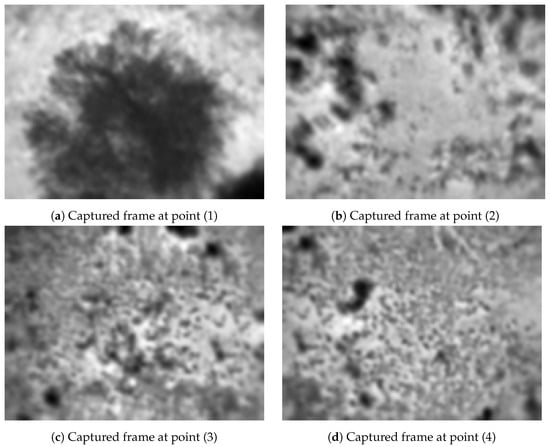

7.2.1. Experiment 1: High Thermal Contrast Condition

The first test was conducted at 1100 h on a clear, sunny autumn day at the site. Figure 9 shows some thermal images of the site taken from the SOLO; all the images are 8-bit and were converted from 14 bits with the same scale, as described in Figure 5.

Figure 9.

Processed 8-bit thermal frames of some interesting points in Experiment 1.

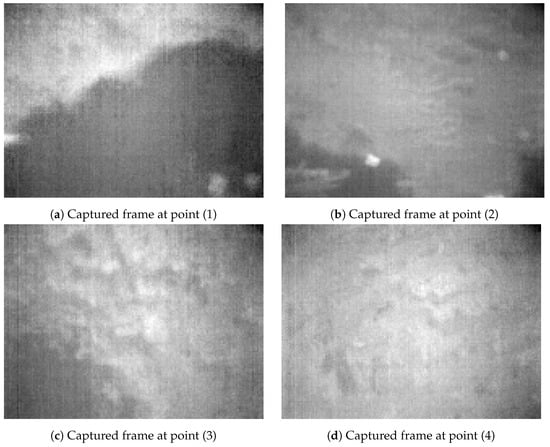

7.2.2. Experiment 2: Lower Contrast Condition

The second trial was conducted in winter at 0900 h at the same location, using the same flight plan. Figure 10 shows the lack of sunlight and the rainy, foggy conditions at the site.

Figure 10.

Field condition in Experiment 2.

Figure 11 shows captured and processed 8-bit thermal frames at four interesting points during Experiment 2, at approximately the same locations as in Experiment 1. The thermal frames in Experiment 2 contained much less contrast, detail and dynamic range than those in Experiment 1.

Figure 11.

Processed 8-bit thermal frames at same locations as in Figure 9, in Experiment 2.

7.3. Signal Analysis

To evaluate the performance of the LK, the I2A and the PX4Flow sensor, the output signals of each technique were compared with each other in both X and Y displacements. Cross-correlation processing was applied to determine how closely each pair of signals matched. A high, positive cross-correlation indicates that the two signals are well matched.
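A normalised cross-correlation of two flow signals can be sketched as follows. The text does not state the normalisation or how lags were handled, so this sketch assumes a Pearson-normalised correlation over the overlapping samples at each lag (which bounds the value to [−1, 1]) and reports the best lag; the function name `normalised_xcorr` is hypothetical. Signals logged at different rates, such as the PX4Flow and the 8.7 Hz thermal stream, would first need resampling onto a common time base.

```python
import math


def normalised_xcorr(a, b, max_lag):
    """Return (best_lag, best_r): the lag at which b best matches a and the
    Pearson correlation of the overlapping samples at that lag.
    A positive lag compares b[i + lag] with a[i]. Returns None if undefined."""
    n = min(len(a), len(b))
    best = None
    for lag in range(-max_lag, max_lag + 1):
        pairs = [(a[i], b[i + lag]) for i in range(n) if 0 <= i + lag < n]
        if len(pairs) < 2:
            continue
        ma = sum(x for x, _ in pairs) / len(pairs)
        mb = sum(y for _, y in pairs) / len(pairs)
        cov = sum((x - ma) * (y - mb) for x, y in pairs)
        va = sum((x - ma) ** 2 for x, _ in pairs)
        vb = sum((y - mb) ** 2 for _, y in pairs)
        if va == 0 or vb == 0:
            continue  # correlation undefined for a constant segment
        r = cov / math.sqrt(va * vb)
        if best is None or r > best[1]:
            best = (lag, r)
    return best


a = [math.sin(0.3 * i) for i in range(100)]
b = [math.sin(0.3 * (i - 3)) for i in range(100)]  # b lags a by 3 samples
lag, r = normalised_xcorr(a, b, max_lag=10)
print(lag, round(r, 3))  # prints 3 1.0
```

Reporting the lag alongside the peak value helps separate genuine disagreement between sensors from a simple timing offset between their logs.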

8. Results

This section shows the optical flow measurements from the two flight tests in both the X and Y axes. We also used data collected in our previous study [27].

8.1. Experiment 1

This section shows the resulting signals from our flight test in X and Y displacements for the I2A, the LK and the PX4Flow.

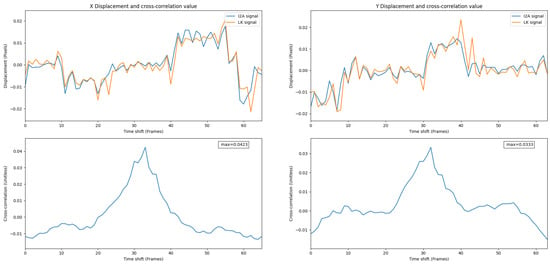

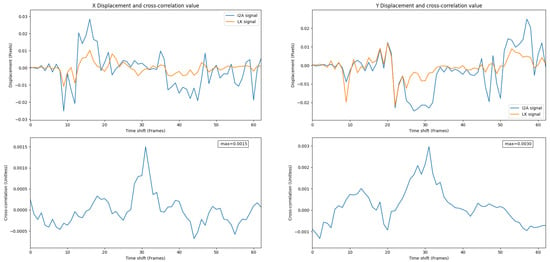

Figure 12 shows the overlaid signals and the cross-correlation value of the LK and the I2A. Given the high cross-correlation, the I2A performed as well as the LK during the test in both the X and Y displacements.

Figure 12.

Overlay of the I2A and the LK signals in Experiment 1. A high positive correlation value shows a strong relationship between these two signals.

Figure 13 shows the overlaid signals and their cross-correlation values for the PX4Flow and the I2A. A high correlation value shows a strong relationship between these two signals.

Figure 13.

Overlay of the PX4Flow and the I2A signals in Experiment 1. A high correlation value shows a strong relationship between these two signals.

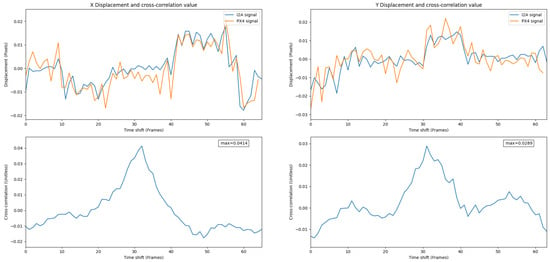

Figure 14 shows overlaid signals and their cross-correlation values for the PX4Flow and the LK. A high correlation value shows a strong relationship between these two signals.

Figure 14.

Overlay of the PX4Flow and the LK signals in Experiment 1. A high correlation value shows a strong relationship between these two signals.

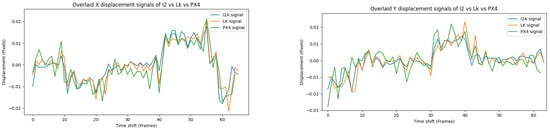

Figure 15 shows the three signals (the I2A, the LK and the PX4Flow) in both X and Y displacements.

Figure 15.

Overlay of the I2A, the LK and the PX4Flow signals in Experiment 1.

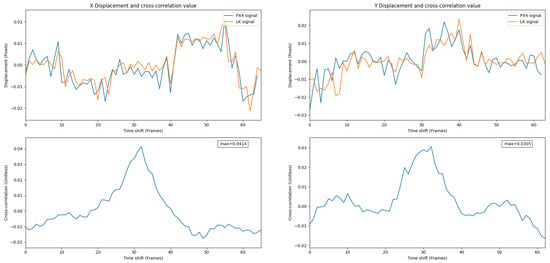

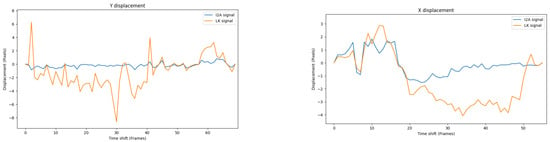

8.2. Experiment 2

In Experiment 2, the PX4Flow did not work, while thermal flow with the LK and the I2A remained functional.

Figure 16 shows thermal flow measurements from the LK and the I2A. The results indicate that the I2A works better than the LK in this trial. While the LK keeps up with the I2A at some points during the flight, at others the I2A still yields flow measurements while the LK yields none. At points (1) and (2) in Figure 11, the I2A and the LK produce comparable results, since the frame contrast is still high. However, where the contrast is low, at points (3) and (4), the LK cannot keep up with the I2A. Hence, the I2A has the advantage in cold-soaked, low-contrast conditions.

Figure 16.

The I2A and the LK signals in cold-soaked conditions (Experiment 2). The weaker correlation value indicates a weaker relationship between the signals than in Experiment 1.

8.3. Aperture Problem

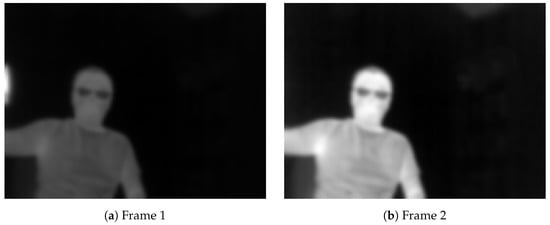

The aperture problem refers to the ambiguity that arises when a one-dimensional spatial structure, such as a bar, line or edge, is viewed through a small aperture: its motion cannot be determined unambiguously, because only the component perpendicular to the structure is observable when the true motion is not known [35].

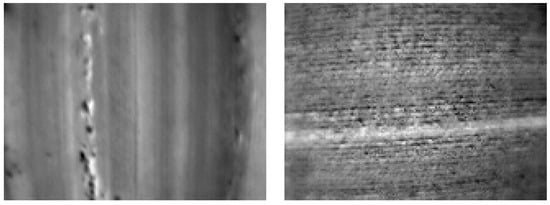

Two parts of our dataset recorded at 1400 h in our previous work [27] were used to test the performance of the I2A in scenes where the aperture problem was prominent. We used sequences of the main road and the wheat field, which contain strong vertical and horizontal linear features, respectively, as shown in Figure 17.

Figure 17.

Thermal images of the main road (left) and the wheat field (right) at 1400 h.

Figure 18 compares the I2A and the LK in the Y displacement over the main road and the X displacement over the wheat field.

Figure 18.

The I2A and the LK signals in Y and X displacements above the main road (left) and the wheat field (right). The I2A fails in these scenarios.

As Figure 18 shows, the I2A suffers from the aperture problem while the LK does not. This potentially limits the use of the I2A in some scenarios.
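The failure can be seen directly in the 2 × 2 I2A system. For an image containing only vertical structure, the Y-shifted reference images are identical to the original, so the Y coefficients vanish and the matrix determinant is exactly zero; β is then undetermined. One plausible reason the LK is unaffected is that OpenCV's Shi–Tomasi corner selection only keeps points where both directions carry texture, whereas the I2A processes every pixel. The sketch below, with hypothetical helper names and synthetic images, illustrates this under a uniform window.

```python
import math


def i2a_normal_matrix(f0, k):
    """Coefficients (A11, A12, A22) of the 2D I2A system for reference image
    f0 (list of rows, f0[y][x]) with a uniform observation window."""
    h, w = len(f0), len(f0[0])
    a11 = a12 = a22 = 0.0
    for y in range(k, h - k):
        for x in range(k, w - k):
            gx = f0[y][x - k] - f0[y][x + k]  # f1 - f2
            gy = f0[y - k][x] - f0[y + k][x]  # f3 - f4
            a11 += gx * gx
            a12 += gx * gy
            a22 += gy * gy
    return a11, a12, a22


def determinant(a11, a12, a22):
    return a11 * a22 - a12 * a12


freq, size, k = 2 * math.pi / 20, 40, 4
stripes = [[math.sin(freq * x) for x in range(size)] for y in range(size)]  # vertical lines only
texture = [[math.sin(freq * x) + math.sin(freq * y) for x in range(size)] for y in range(size)]

print(determinant(*i2a_normal_matrix(stripes, k)))      # 0.0: Y motion is undetermined
print(determinant(*i2a_normal_matrix(texture, k)) > 0)  # True
```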

9. Discussion

The greatest weakness of simple optical flow algorithms based on spatio-temporal gradients is their inability to deal with the aperture problem or to detect information deficiencies in the image. This weakness stems entirely from how these algorithms were originally formulated and is not particularly fundamental, although addressing it does deviate from the ideal of massive Single Instruction Multiple Data (SIMD) processing of the entire image in a single pass. In this sense, the aperture problem seems reasonably manageable, since there is no particular reason that a salience operator could not also be run across the entire image using SIMD instructions, and then used as a gate to determine which data are likely to be valid. This is well-travelled ground in the literature from decades ago [36,37].
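One possible salience gate, sketched below under our own assumptions, is the minimum-eigenvalue (Shi–Tomasi) criterion applied to the symmetric 2 × 2 coefficient matrix at each pixel: if the smaller eigenvalue is close to zero, the local structure is one-dimensional and the flow estimate is discarded. The closed form needs no branching beyond the final comparison, so it would suit SIMD evaluation. Using this criterion on the I2A matrix, the function names and the `threshold` value are all hypothetical choices, not part of the method evaluated here.

```python
import math


def min_eigenvalue(a11, a12, a22):
    """Smaller eigenvalue of the symmetric 2x2 matrix [[a11, a12], [a12, a22]]."""
    mean = 0.5 * (a11 + a22)
    radius = math.sqrt((0.5 * (a11 - a22)) ** 2 + a12 * a12)
    return mean - radius


def gate_flow(flow, a11, a12, a22, threshold):
    """Keep a flow estimate only where both image directions carry texture.
    'threshold' is a hypothetical tuning parameter."""
    return flow if min_eigenvalue(a11, a12, a22) > threshold else None


print(min_eigenvalue(2.0, 1.0, 2.0))                     # 1.0
print(gate_flow((1.2, -0.4), 4.0, 0.0, 0.0, 0.1))        # None (edge-like structure)
print(gate_flow((1.2, -0.4), 4.0, 0.0, 1.0, 0.1))        # (1.2, -0.4)
```

In practice the threshold would need to scale with image contrast, since the matrix coefficients grow with the square of the intensity differences.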

Outdoor environments are difficult to control. However, it seems likely that under cold-soaked conditions, such as very late at night, when noise is more apparent in thermal images [27], the I2A might have some advantages, as shown in Figure 16.

In general, the agreement among the I2A, the LK and the PX4Flow shows that both dense and sparse optical flow techniques can be used reliably with low-resolution thermal data for airborne applications.

10. Conclusions

The results have shown that the I2A is capable of computing optical flow reliably from low-resolution thermal imagery, in comparison with the LK technique and the PX4Flow. Additionally, the I2A performs better than the LK on the lower-contrast, higher-noise imagery of colder conditions.

The I2A can take advantage of new-generation compact systems with more powerful graphics processing units capable of SIMD processing, such as the Nvidia Jetson. Moreover, the I2A is robust to noise, although the “aperture problem” remains a considerable issue. Hence, we expect the I2A to continue to perform comparably to the LK during the day and better during the night, owing to its robustness to noise and its sensitivity. It is the case, however, that the LK has a built-in test of the quality of the result, based on the number of features.

The drone was programmed to fly at a constant height and speed in this study; a fixed “shifting value” worked well in this scenario but might not work well when the altitude or velocity changes over time. Therefore, the shifting value should ideally update itself in response to changes in the height and velocity of the UAV.
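A first-order way to adapt the shifting value is to predict the image motion per frame from height and ground speed and choose a reference shift somewhat larger than it. The sketch below assumes a downward-looking camera over flat ground, uniform angular pixel pitch and no body rotation; it uses the Lepton 3 figures from Section 5.1 (56° field of view, 160 pixels across, 8.7 Hz). The function names, the 1 m/s example speed and the `margin` safety factor are hypothetical.

```python
import math


def expected_shift_px(speed_mps, height_m, frame_rate_hz, fov_deg, width_px):
    """Approximate image motion in pixels per frame for a nadir-looking camera
    translating over flat ground (small-angle approximation, no rotation)."""
    angular_rate = speed_mps / height_m               # rad/s at the image centre
    per_frame = angular_rate / frame_rate_hz          # rad per frame
    pixel_angle = math.radians(fov_deg) / width_px    # rad per pixel
    return per_frame / pixel_angle


def choose_shift(expected_px, margin=1.5):
    """Integer reference shift k larger than the expected motion.
    'margin' is a hypothetical safety factor."""
    return max(1, math.ceil(margin * abs(expected_px)))


s = expected_shift_px(1.0, 8.0, 8.7, 56.0, 160)  # hypothetical 1 m/s at 8 m
print(round(s, 2), choose_shift(s))  # prints 2.35 4
```

Because the expected motion scales with speed divided by height, the same function could be fed with live altitude (for example, from the range sensor) and ground-speed estimates, although rotation rates would also need compensating in real flight.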

Further study will focus on variations of these algorithms that are better tuned to the aerial environment of fixed-wing flight and that deal with the aperture problem. Further study will also investigate deep learning approaches to thermal flow.

Author Contributions

T.X.B.N. designed the payload, conducted the flight trials, analysed the data and wrote the draft manuscript. K.R. and J.C. reviewed the work and contributed valuable advice. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by an Australian Government Research Training Program (RTP) Scholarship.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

This research was supported by an Australian Government Research Training Program (RTP) Scholarship. The authors would like to thank Asanka and Sam at the University of South Australia and Philip from the Defence Science and Technology Group for their assistance during the previous dataset’s collection.

Conflicts of Interest

The authors declare no conflict of interest.

Sample Availability

Not applicable.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| LWIR | Long-Wavelength Infrared |
| AGC | Automatic Gain Control |
| FFC | Flat Field Correction |
| I2A | Image Interpolation Algorithm |
| UAV | Unmanned Aerial Vehicle |
| LK | Lucas–Kanade Algorithm |

References

- Bos, M.; Fernandes, R.; Williams, S.; Bastos, L. Fast error analysis of continuous GPS observations. J. Geod. 2008, 82, 157–166.

- Nguyen, T.X.B.; Rosser, K.; Chahl, J. A Review of Modern Thermal Imaging Sensor Technology and Applications for Autonomous Aerial Navigation. J. Imaging 2021, 7, 217.

- Brunner, C.; Peynot, T.; Vidal-Calleja, T.; Underwood, J. Selective combination of visual and thermal imaging for resilient localization in adverse conditions: Day and night, smoke and fire. J. Field Robot. 2013, 30, 641–666.

- Papachristos, C.; Mascarich, F.; Alexis, K. Thermal-inertial localization for autonomous navigation of aerial robots through obscurants. In Proceedings of the 2018 International Conference on Unmanned Aircraft Systems (ICUAS), Dallas, TX, USA, 12–15 June 2018; pp. 394–399.

- Khattak, S.; Papachristos, C.; Alexis, K. Visual-thermal landmarks and inertial fusion for navigation in degraded visual environments. In Proceedings of the 2019 IEEE Aerospace Conference, Big Sky, MT, USA, 2–9 March 2019; pp. 1–9.

- Khattak, S.; Papachristos, C.; Alexis, K. Keyframe-based thermal–inertial odometry. J. Field Robot. 2020, 37, 552–579.

- Shin, Y.S.; Kim, A. Sparse depth enhanced direct thermal-infrared SLAM beyond the visible spectrum. IEEE Robot. Autom. Lett. 2019, 4, 2918–2925.

- Mouats, T.; Aouf, N.; Chermak, L.; Richardson, M.A. Thermal stereo odometry for UAVs. IEEE Sens. J. 2015, 15, 6335–6347.

- Bloesch, M.; Burri, M.; Omari, S.; Hutter, M.; Siegwart, R. Iterated extended Kalman filter based visual-inertial odometry using direct photometric feedback. Int. J. Robot. Res. 2017, 36, 1053–1072.

- Khattak, S.; Papachristos, C.; Alexis, K. Keyframe-based direct thermal–inertial odometry. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; pp. 3563–3569.

- Srinivasan, M.V.; Chahl, J.S.; Weber, K.; Venkatesh, S.; Nagle, M.G.; Zhang, S.W. Robot navigation inspired by principles of insect vision. Robot. Auton. Syst. 1999, 26, 203–216.

- Srinivasan, M.V. Honey bees as a model for vision, perception, and cognition. Annu. Rev. Entomol. 2010, 55, 267–284.

- Chahl, J.S.; Srinivasan, M.V.; Zhang, S.W. Landing strategies in honeybees and applications to uninhabited airborne vehicles. Int. J. Robot. Res. 2004, 23, 101–110.

- Srinivasan, M.V.; Zhang, S.W.; Chahl, J.S.; Barth, E.; Venkatesh, S. How honeybees make grazing landings on flat surfaces. Biol. Cybern. 2000, 83, 171–183.

- Horn, B.K.; Schunck, B.G. Determining optical flow. Artif. Intell. 1981, 17, 185–203.

- Honegger, D.; Meier, L.; Tanskanen, P.; Pollefeys, M. An open source and open hardware embedded metric optical flow CMOS camera for indoor and outdoor applications. In Proceedings of the 2013 IEEE International Conference on Robotics and Automation, Karlsruhe, Germany, 6–10 May 2013; pp. 1736–1741.

- Chao, H.; Gu, Y.; Napolitano, M. A survey of optical flow techniques for robotics navigation applications. J. Intell. Robot. Syst. 2014, 73, 361–372.

- Jung, S.; Hwang, S.; Shin, H.; Shim, D.H. Perception, guidance, and navigation for indoor autonomous drone racing using deep learning. IEEE Robot. Autom. Lett. 2018, 3, 2539–2544.

- Miller, A.; Miller, B.; Popov, A.; Stepanyan, K. Optical Flow as a navigation means for UAV. In Proceedings of the 2018 Australian & New Zealand Control Conference (ANZCC), Melbourne, Australia, 7–8 December 2018; pp. 302–307.

- Camus, T. Calculating Time-To-Contact Using Real-Time Quantized Optical Flow. Available online: https://www.nist.gov/publications/calculating-time-contact-using-real-time-quantized-optical-flow (accessed on 12 January 2022).

- Chahl, J.; Mizutani, A.; Strens, M.; Wehling, M. Autonomous navigation using passive sensors and small computers. In Proceedings of the Infotech@Aerospace, Arlington, VA, USA, 26–29 September 2005.

- Barrows, G.L.; Chahl, J.S.; Srinivasan, M.V. Biomimetic visual sensing and flight control. In Proceedings of the 17th International Unmanned Air Vehicle Systems Conference, Bristol, UK, 8–10 April 2002; pp. 1–15.

- Rosser, K.; Chahl, J. Reducing the complexity of visual navigation: Optical track controller for long-range unmanned aerial vehicles. J. Field Robot. 2019, 36, 1118–1140.

- Borges, P.V.K.; Vidas, S. Practical infrared visual odometry. IEEE Trans. Intell. Transp. Syst. 2016, 17, 2205–2213.

- Delaune, J.; Hewitt, R.; Lytle, L.; Sorice, C.; Thakker, R.; Matthies, L. Thermal-inertial odometry for autonomous flight throughout the night. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Macau, China, 3–8 November 2019; pp. 1122–1128.

- Rosser, K.; Nguyen, T.X.B.; Moss, P.; Chahl, J. Low complexity visual UAV track navigation using long-wavelength infrared. J. Field Robot. 2021, 38, 882–897.

- Nguyen, T.X.B.; Rosser, K.; Perera, A.; Moss, P.; Teague, S.; Chahl, J. Characteristics of optical flow from aerial thermal imaging, “thermal flow”. J. Field Robot. 2022.

- Srinivasan, M.V. An image-interpolation technique for the computation of optic flow and egomotion. Biol. Cybern. 1994, 71, 401–415.

- Otte, M.; Nagel, H.H. Optical flow estimation: Advances and comparisons. In Proceedings of the European Conference on Computer Vision, Stockholm, Sweden, 2–6 May 1994; Springer: Berlin/Heidelberg, Germany, 1994; pp. 49–60.

- Chahl, J. Optical flow and motion detection for navigation and control of biological and technological systems. J. Mod. Opt. 2016, 1–18.

- FLIR Corp. FLIR Lepton Engineering Data Sheet; FLIR Corp.: Wilsonville, OR, USA, 2014; Available online: https://www.cornestech.co.jp/tech/wp-content/uploads/sites/2/2018/06/500-0659-00-09-Lepton-Engineering-Datasheet-Rev201.pdf (accessed on 12 January 2022).

- Garmin. Lidar Lite v3 Operation Manual and Technical Specifications; Garmin: Olathe, KS, USA, 2016; Available online: https://static.garmin.com/pumac/LIDAR_Lite_v3_Operation_Manual_and_Technical_Specifications.pdf (accessed on 12 January 2022).

- Shi, J.; Tomasi, C. Good features to track. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 21–23 June 1994; pp. 593–600.

- The Bureau of Meteorology. One Tree Hill Weather. Available online: http://www.bom.gov.au/places/sa/one-tree-hill/ (accessed on 12 January 2022).

- Binder, M.D.; Hirokawa, N.; Windhorst, U. (Eds.) Aperture Problem. In Encyclopedia of Neuroscience; Springer: Berlin/Heidelberg, Germany, 2009; p. 159.

- Zeuch, S.; Huber, F.; Freytag, J.C. Adapting tree structures for processing with SIMD instructions. In Proceedings of the 17th International Conference on Extending Database Technology (EDBT), Athens, Greece, 24–28 March 2014.

- Plank, J.S.; Greenan, K.M.; Miller, E.L. Screaming fast Galois field arithmetic using Intel SIMD instructions. In Proceedings of the 11th USENIX Conference on File and Storage Technologies (FAST 2013), San Jose, CA, USA, 12–15 February 2013; pp. 299–306.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).