Abstract

Some color images consist primarily of specific hues; for example, food images predominantly contain warm hues. These hues are therefore essential for giving food images a delicious impression. This paper proposes a color image enhancement method in which the hues to be enhanced can be selected arbitrarily. The method also takes the current chroma into account so that near-achromatic colors are not over-enhanced. The effectiveness of the proposed method was confirmed through experiments using several food images.

1. Introduction

Color images permeate our daily lives by recording various scenes. The popularity of smartphones and other digital devices has created an environment where people can easily capture photographs. Furthermore, they can share photographs using Internet technologies, such as social networking services (SNSs).

For instance, many food images taken in various environments are posted on SNSs. However, their quality may be poor because of the limited performance of the digital device or the lighting environment. Food images appear delicious when their lightness and chroma are satisfactory. In addition, food images mainly contain warm hues, such as red, yellow, and orange. Therefore, food images call for color image enhancement that focuses on warm hues. Furthermore, the hues should not change as a result of the enhancement.

Several image enhancement methods that preserve the hue in the RGB color space have been proposed to improve the quality of acquired images [1,2,3,4,5,6,7,8]. Furthermore, image enhancement methods in other color spaces, such as HSI and CIELAB color spaces, have been proposed [9,10,11,12,13,14]. These color spaces can directly represent hue, chroma, and lightness.

Our previous method [14], which uses the CIELAB color space, was computationally expensive, making real-time processing difficult. The improved method [15,16] solved the computational cost problem; however, its concrete applications in daily life were unclear. In this paper, we focus on the limited hues that often appear in food images and propose an image enhancement method that preserves the hue in the CIELAB color space, which matches human visual characteristics. The proposed method has two novelties. First, a weighting function with hue as its variable is introduced to achieve natural limited-hue image enhancement. Second, unnatural coloring of near-achromatic colors is avoided by considering the magnitude of the current chroma. One limitation of the proposed method is that it does not incorporate local features of the human visual system, such as the Abney effect [17] and the Helmholtz–Kohlrausch effect [18,19]. Experiments were conducted using several digital images to verify the performance of the proposed method.

2. Limited Hue-Focused Chroma Enhancement in CIELAB Color Space

This study employs the CIELAB color space for image enhancement processing because it adequately represents human visual perception.

2.1. Conversion from RGB Color Space to CIELAB Color Space

Digital color images acquired using digital devices are generally represented in the RGB color space. Therefore, the RGB color components must be converted to CIELAB color components. The RGB components of the original image are first inverse gamma corrected and converted to linear RGB components. Here, the RGB color space is assumed to be the sRGB color space. The linear RGB components are denoted as $(R, G, B)$, where R, G, and B denote red, green, and blue, respectively. We assume that each of $R$, $G$, and $B$ is normalized to $[0, 1]$.

Next, $(R, G, B)$ is converted into the color components $(X, Y, Z)$ of the CIEXYZ color space. Finally, $(X, Y, Z)$ is converted to the color components $(L^*, a^*, b^*)$ of the CIELAB color space. $L^*$ is the lightness, which ranges from 0 to 100. $a^*$ and $b^*$ are the chromaticity indices in the red-green and yellow-blue directions, respectively.
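The per-pixel conversion chain described above can be sketched as follows. This is a minimal sketch, not the authors' MATLAB implementation. It assumes the standard sRGB transfer function, the standard sRGB (D65) RGB-to-XYZ matrix, and a D65 reference white. The function names are hypothetical.

```python
import math

# D65 reference white in CIEXYZ with Y normalized to 1 (assumption: sRGB white point)
WHITE_D65 = (0.95047, 1.0, 1.08883)

def srgb_to_linear(c):
    """Inverse sRGB gamma for one gamma-encoded component in [0, 1]."""
    if c <= 0.04045:
        return c / 12.92
    return ((c + 0.055) / 1.055) ** 2.4

def linear_rgb_to_xyz(r, g, b):
    """Linear sRGB (D65) to CIEXYZ using the standard sRGB matrix."""
    x = 0.4124 * r + 0.3576 * g + 0.1805 * b
    y = 0.2126 * r + 0.7152 * g + 0.0722 * b
    z = 0.0193 * r + 0.1192 * g + 0.9505 * b
    return x, y, z

def _f(t):
    """CIELAB companding function (cube root with a linear segment near zero)."""
    delta = 6 / 29
    if t > delta ** 3:
        return t ** (1 / 3)
    return t / (3 * delta ** 2) + 4 / 29

def xyz_to_lab(x, y, z, white=WHITE_D65):
    """CIEXYZ to (L*, a*, b*) relative to the given reference white."""
    fx, fy, fz = (_f(v / w) for v, w in zip((x, y, z), white))
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)

def srgb_to_lab(r, g, b):
    """Convert gamma-encoded sRGB values in [0, 1] to (L*, a*, b*)."""
    lin = [srgb_to_linear(c) for c in (r, g, b)]
    return xyz_to_lab(*linear_rgb_to_xyz(*lin))
```

For example, `srgb_to_lab(1.0, 0.0, 0.0)` gives roughly (53.2, 80.0, 67.2) for pure sRGB red. An 8-bit image would first be divided by 255 to obtain values in $[0, 1]$.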

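Chroma $C^*$ and hue $h$ are given by the following equations using $a^*$ and $b^*$ [20]:

$$C^* = \sqrt{(a^*)^2 + (b^*)^2}, \tag{1}$$

$$h = \operatorname{atan2}(b^*, a^*). \tag{2}$$

Although $h$ is generally given in the range $[0^\circ, 360^\circ)$, in this study it is treated as lying in $(-180^\circ, 180^\circ]$ to simplify the formulation. The minimum value of $C^*$ is zero, and its maximum value varies depending on $L^*$ and $h$. Here, $h$ is set to 0 when $C^* = 0$.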
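Equations (1) and (2) can be computed per pixel as shown below. This is a minimal sketch: `math.atan2` selects the correct quadrant. The only edge case that would fall outside $(-180^\circ, 180^\circ]$ is a result of exactly $-\pi$, which occurs for $b^* = -0.0$ with $a^* < 0$. The function name is hypothetical.

```python
import math

def chroma_hue(a_star, b_star):
    """Return (C*, h) with h in degrees in (-180, 180]; h = 0 when C* = 0."""
    c = math.hypot(a_star, b_star)          # Equation (1)
    if c == 0.0:
        return 0.0, 0.0                     # achromatic pixel: hue defined as 0
    theta = math.atan2(b_star, a_star)      # Equation (2), radians in [-pi, pi]
    if theta == -math.pi:                   # map the excluded endpoint -180 to +180
        theta = math.pi
    return c, math.degrees(theta)
```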

2.2. Hue-Based Weight Function for Chroma Enhancement

The weight function k is introduced to achieve chroma enhancement in a limited range of hues.

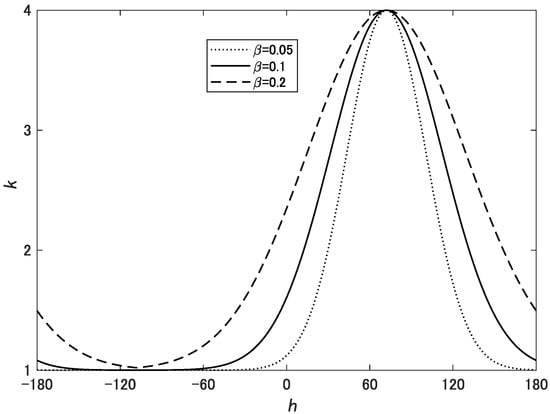

where three parameters determine the shape of the Gaussian function: the target hue, a parameter for the degree of enhancement, and a parameter for the range of enhanced hues. The target hue is the center of the Gaussian function. The second parameter determines the degree of chroma enhancement, and the third determines the range of hues to be enhanced. When the range parameter is small, only hues close to the target hue are enhanced. When it is larger, a wider range of hues is enhanced. Figure 1 shows the weight function k.
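The exact expression of Equation (3) is not reproduced in this text. The sketch below therefore assumes one plausible Gaussian form, $k = 1 + \alpha \exp\!\left(-d^2 / (2\sigma^2)\right)$, where $d$ is the hue difference from the target hue, wrapped to $[-180^\circ, 180^\circ)$ and divided by $180^\circ$. The names `alpha`, `sigma`, and `h_t` and this normalization are hypothetical. The sketch only illustrates how a hue-centered weight can be evaluated without a seam at $\pm 180^\circ$.

```python
import math

def hue_difference(h, h_t):
    """Signed angular difference h - h_t in degrees, wrapped to [-180, 180)."""
    return (h - h_t + 180.0) % 360.0 - 180.0

def weight(h, h_t, alpha, sigma):
    """Hypothetical hue weight: 1 + alpha at the target hue, about 1 far from it.

    The normalization of the hue difference by 180 degrees is an assumption,
    chosen so that sigma is dimensionless.
    """
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    d = hue_difference(h, h_t) / 180.0
    return 1.0 + alpha * math.exp(-d * d / (2.0 * sigma * sigma))
```

Under these assumptions, with `alpha = 3` and `sigma = 0.1`, the weight is 4 at the target hue and falls to $1 + 3e^{-0.5} \approx 2.8$ at $18^\circ$ away. Wrapping the difference matters because hues just on either side of $\pm 180^\circ$ are perceptual neighbors.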

Figure 1.

Weight function k expressed by Equation (3) for example values of its three parameters.

Using the weight function k, chroma enhancement that preserves the hue is performed as follows:

$$a^{*\prime} = k\,a^*, \quad b^{*\prime} = k\,b^*, \quad C^{*\prime} = k\,C^*. \tag{4}$$

When k is close to 1, the enhanced chroma $C^{*\prime}$ is almost the same as the original chroma $C^*$. Let $(L^*, a^{*\prime}, b^{*\prime})$ denote the enhanced CIELAB color components. Because $b^{*\prime}/a^{*\prime} = b^*/a^*$, the hue $h$ is preserved in the CIELAB color space. However, depending on the value of k, the RGB color components obtained by converting the enhanced CIELAB color components may fall outside the color gamut. In this case, the quality of the resulting image degrades.
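The hue-preservation argument can be checked numerically. This is a minimal sketch that assumes $a^*$ and $b^*$ are scaled by the same factor $k$ and that $L^*$ is left unchanged. The function name is hypothetical.

```python
import math

def enhance_chroma(L, a, b, k):
    """Scale a* and b* by k; L* is kept unchanged (assumption)."""
    if k <= 0:
        # A negative k would rotate the hue by 180 degrees instead of preserving it.
        raise ValueError("k must be positive")
    return L, k * a, k * b
```

For instance, `enhance_chroma(60.0, 20.0, -15.0, 1.8)` multiplies the chroma 25 by 1.8 to give 45, while `atan2(b*, a*)` is unchanged up to rounding. This is why the enhancement step alone cannot shift the hue, although it can push the color out of the RGB gamut.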

To address the color gamut problem, the chroma after enhancement is given as follows:

where $C^*_{\max}$ is an approximation of the maximum chroma in the color gamut. It is determined by $L^*$ and $h$ and is obtained from a lookup table using the method in Ref. [15]. Finally, the enhanced CIELAB color components are converted into RGB color components to obtain the resulting image [15]. Figure 2 shows the effect of the gamut correction using Equation (5).
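The sketch below illustrates gamut-limited chroma under two stated assumptions. First, Equation (5) is assumed to clip $k C^*$ at the maximum in-gamut chroma. Second, that maximum is found directly by bisection on $C^*$ at fixed $L^*$ and $h$, in place of the lookup-table approximation of Ref. [15]. The bisection also assumes that in-gamut chromas at fixed $L^*$ and $h$ form a single interval $[0, C^*_{\max}]$. All names are hypothetical.

```python
import math

# D65 reference white, Y normalized to 1 (assumed, matching sRGB)
WHITE_D65 = (0.95047, 1.0, 1.08883)

def _f_inv(t):
    """Inverse of the CIELAB companding function."""
    delta = 6 / 29
    return t ** 3 if t > delta else 3 * delta ** 2 * (t - 4 / 29)

def lab_to_linear_rgb(L, a, b):
    """CIELAB -> CIEXYZ -> linear sRGB, without gamma or clipping."""
    fy = (L + 16) / 116
    fx = fy + a / 500
    fz = fy - b / 200
    x, y, z = (w * _f_inv(f) for w, f in zip(WHITE_D65, (fx, fy, fz)))
    r = 3.2406 * x - 1.5372 * y - 0.4986 * z
    g = -0.9689 * x + 1.8758 * y + 0.0415 * z
    bl = 0.0557 * x - 0.2040 * y + 1.0570 * z
    return r, g, bl

def in_gamut(L, c, h_deg, eps=1e-4):
    """True if (L*, C*, h) maps into [0, 1]^3; eps absorbs matrix rounding."""
    a = c * math.cos(math.radians(h_deg))
    b = c * math.sin(math.radians(h_deg))
    return all(-eps <= v <= 1 + eps for v in lab_to_linear_rgb(L, a, b))

def max_chroma(L, h_deg, c_hi=200.0, iters=40):
    """Largest in-gamut chroma at fixed L* and h, by bisection on C*."""
    if not in_gamut(L, 0.0, h_deg):
        return 0.0
    lo, hi = 0.0, c_hi
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if in_gamut(L, mid, h_deg):
            lo = mid
        else:
            hi = mid
    return lo

def gamut_limited_chroma(c, k, L, h_deg):
    """Assumed form of Equation (5): clip k*C* at the maximum in-gamut chroma."""
    return min(k * c, max_chroma(L, h_deg))
```

Computing the maximum by bisection is slow because it runs dozens of color conversions per pixel. That cost is presumably why a precomputed table indexed by $L^*$ and $h$ is preferable in practice.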

2.3. Adjustment of the Degree of Chroma Enhancement Considering Current Chroma

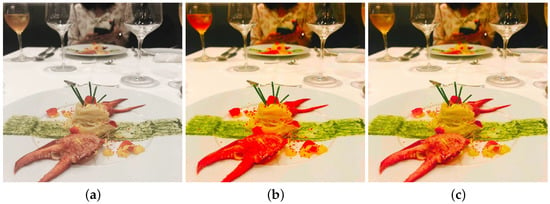

However, as illustrated in Figure 3, unnatural colors are added to colors that are close to achromatic, such as whitish colors.

Figure 3.

Experimental results. (a) Original image. (b) Resulting image using the proposed method with Equation (3).

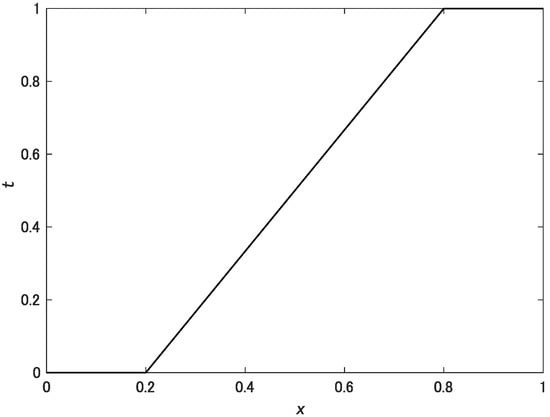

To solve this problem, a function t is introduced into Equation (3) so that the degree of chroma enhancement is adjusted according to the magnitude of the current chroma.

Equation (6) uses the maximum chroma of the original image, and t is a tone-mapping function defined as follows:

Figure 4.

Tone mapping function t expressed by Equation (7) for example values of MIN and MAX.
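Equations (6) and (7) are not reproduced in this text. The sketch below only illustrates the idea of suppressing enhancement for near-achromatic pixels. It makes two assumptions. First, t is a clamped linear ramp from 0 at MIN to 1 at MAX, applied to the current chroma divided by the maximum chroma of the original image. Second, t blends the weight k toward 1. The ramp shape, the blending rule, and the names `tone_map` and `adjusted_weight` are hypothetical.

```python
def tone_map(x, t_min=0.2, t_max=0.8):
    """Hypothetical t: 0 below t_min, 1 above t_max, linear in between."""
    if x <= t_min:
        return 0.0
    if x >= t_max:
        return 1.0
    return (x - t_min) / (t_max - t_min)

def adjusted_weight(k, c, c_max_orig, t_min=0.2, t_max=0.8):
    """Blend the hue weight k toward 1 (no enhancement) for low-chroma pixels."""
    if c_max_orig <= 0:
        return 1.0                  # fully achromatic image: leave chroma unchanged
    t = tone_map(c / c_max_orig, t_min, t_max)
    return 1.0 + (k - 1.0) * t
```

With k = 4, a whitish pixel (normalized chroma below MIN) keeps k = 1 and is left unchanged. A pixel at half the image's maximum chroma receives k = 2.5.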

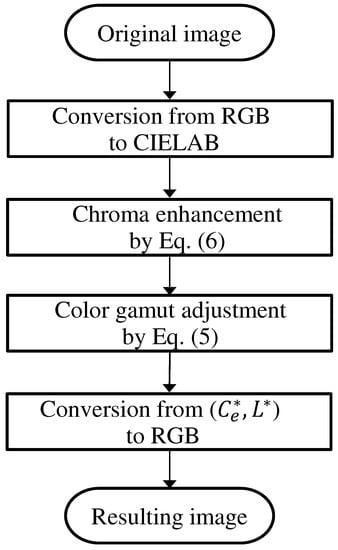

Figure 5.

Flow diagram of the proposed method.

3. Experimental Results

3.1. Food Image Enhancement

Experiments were conducted using digital food images to illustrate the performance of the proposed method. The two shape parameters of Equation (3), which determine the degree of chroma enhancement and the range of enhanced hues, are set to 3 and 0.1, respectively. MIN and MAX are set to 0.2 and 0.8, respectively. The target hue $h_T$ is set to 72°. This is the average value of $h$ over the top 100 ranked photos posted as of April 2021 on “SnapDish”, an SNS site specializing in cooking [21]. A problem in this calculation is that values of $h$ near −180° and 180° differ greatly in value even though they represent almost the same hue. Therefore, $h_T$ is determined using the following equation:

$$h_T = \operatorname{atan2}\left(E[\bar{b}^*],\, E[\bar{a}^*]\right), \tag{8}$$

where $E[\cdot]$ is the average operator over all the images, and $\bar{a}^*$ and $\bar{b}^*$ are the average values of $a^*$ and $b^*$ in each image.
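The wrap-around problem and its remedy can be demonstrated with a minimal sketch. It assumes that the per-image averages of $a^*$ and $b^*$ are already available. The example hues are illustrative, not SnapDish data, and the function names are hypothetical.

```python
import math

def naive_mean_hue(hues):
    """Arithmetic mean of hue angles in degrees (fails near the +/-180 seam)."""
    return sum(hues) / len(hues)

def target_hue(per_image_means):
    """Equation (8): hue of the averaged (a*, b*) vector, in degrees.

    per_image_means is a list of (mean a*, mean b*) pairs, one per image.
    """
    n = len(per_image_means)
    ea = sum(a for a, _ in per_image_means) / n
    eb = sum(b for _, b in per_image_means) / n
    return math.degrees(math.atan2(eb, ea))
```

For two images with hues of 179° and −179°, which are almost identical colors, `naive_mean_hue` returns 0°, the opposite side of the hue circle. `target_hue` applied to their unit $(a^*, b^*)$ vectors returns about ±180°, which is the expected result.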

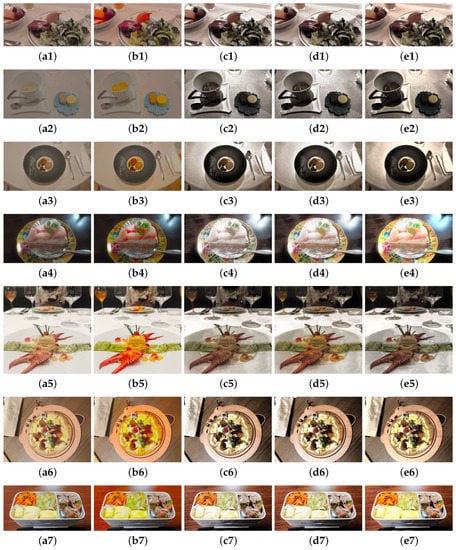

Figure 6 shows the experimental results for seven digital images. These are 24-bit color images with the following sizes: (1) 1015 × 501, (2) 1920 × 1080, (3) 1919 × 1080, (4) 1706 × 960, (5) 1280 × 1280, (6) 1478 × 1108, and (7) 1280 × 664 pixels. The first column shows the original images, and the second column shows the resulting images obtained using the proposed method. The third, fourth, and fifth columns show the resulting images obtained using the methods in Ref. [2], Ref. [6], and Refs. [12,13], respectively. In (b1), the unnatural coloring of whitish colors is reduced compared with Figure 3b. In (b2) and (b3), the chroma of the sweets is appropriately enhanced, whereas the blue and gray dishes remain nearly unchanged. In (b4) and (b5), the cake and crab become vivid, whereas the brown and white tables are largely unaffected. In (b6) and (b7), the yellow areas are effectively brightened. Overall, the proposed method provides sufficient color enhancement compared with the comparison methods. However, (e4) is more vivid than (b4), which was obtained using the proposed method.

The same parameters were used for all images to demonstrate the versatility of the proposed method. However, to further improve its performance, a scheme that adapts the parameters to the statistics of the input image should be considered.

Figure 6.

Experimental results. First column (a1–a7): original images. Second column (b1–b7): resulting images using the proposed method with Equation (6). Third column (c1–c7): resulting images using the method in Ref. [2] using histogram equalization. Fourth column (d1–d7): resulting images using the method in Ref. [6] using histogram equalization. Fifth column (e1–e7): resulting images using the method in Refs. [12,13].

The difference in hue between the resulting and original images is calculated as follows [22]:

$$\Delta H = \left| 2\sqrt{C^*_r\,C^*_o}\,\sin\left(\frac{h_r - h_o}{2}\right) \right|, \tag{9}$$

where $h_r$ and $h_o$ are the hues of corresponding pixels in the resulting and original images, respectively, and $C^*_r$ and $C^*_o$ are the corresponding chromas. $|\cdot|$ denotes the absolute value. In addition, the chroma difference $\Delta C = C^*_r - C^*_o$ is calculated to verify the degree of image enhancement. Table 1 lists the averages and standard deviations of $\Delta H$ and $\Delta C$. For all images, the average $\Delta H$ obtained by the proposed method is smaller than that of the comparison methods, which indicates that the hue is almost preserved. Furthermore, the averages of $\Delta C$ indicate that the proposed method enhances the chroma more than the comparison methods.
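The statistics reported in Table 1 can be computed from paired pixels as sketched below. This sketch assumes that the $(C^*, h)$ values of corresponding pixels are already available, that $\Delta C$ is the signed difference, and that the population standard deviation is used. The paper does not state which standard deviation was used, and the function names are hypothetical.

```python
import math
import statistics

def delta_h(c_r, h_r, c_o, h_o):
    """Equation (9): |2 sqrt(C_r C_o) sin((h_r - h_o)/2)| with hues in degrees."""
    return abs(2.0 * math.sqrt(c_r * c_o) * math.sin(math.radians(h_r - h_o) / 2.0))

def summarize(result_pixels, original_pixels):
    """Each pixel is (C*, h). Returns (mean dH, std dH, mean dC, std dC)."""
    pairs = list(zip(result_pixels, original_pixels))
    dh = [delta_h(cr, hr, co, ho) for (cr, hr), (co, ho) in pairs]
    dc = [cr - co for (cr, _), (co, _) in pairs]
    return (statistics.mean(dh), statistics.pstdev(dh),
            statistics.mean(dc), statistics.pstdev(dc))
```

Taking the absolute value of the half-angle sine means that no explicit hue wrapping is needed. For example, hue pairs of 179° and −179° give the same $\Delta H$ as −1° and 1°.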

Table 1.

Averages and standard deviations of $\Delta H$ and $\Delta C$.

SSIM [23] is used as an objective image quality metric to evaluate the performance of the proposed method. The closer SSIM is to 1, the more structurally similar the enhanced image is to the original image. Table 2 shows the SSIMs between the original images and the resulting images in Figure 6. Each value is the average of the SSIMs calculated for the R, G, and B components. Table 2 shows that the proposed method yields better results than the comparison methods.
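The averaging of per-channel SSIM can be illustrated with a deliberately simplified sketch. It computes a single-window (global) SSIM over each whole channel, using the usual constants $K_1 = 0.01$ and $K_2 = 0.03$. This is not the locally windowed SSIM of Ref. [23], so its values will differ from those in Table 2. For actual measurements, a windowed implementation such as scikit-image's `skimage.metrics.structural_similarity` would be more appropriate. The function names are hypothetical.

```python
import statistics

def global_ssim(x, y, data_range=1.0):
    """Single-window SSIM between two flat channels (simplified, not windowed)."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mx, my = statistics.fmean(x), statistics.fmean(y)
    vx = statistics.fmean((v - mx) ** 2 for v in x)
    vy = statistics.fmean((v - my) ** 2 for v in y)
    cov = statistics.fmean((a - mx) * (b - my) for a, b in zip(x, y))
    return ((2 * mx * my + c1) * (2 * cov + c2)) / ((mx * mx + my * my + c1) * (vx + vy + c2))

def rgb_ssim(img_a, img_b, data_range=1.0):
    """Average of per-channel SSIM; each image is a tuple of (R, G, B) flat lists."""
    return sum(global_ssim(a, b, data_range) for a, b in zip(img_a, img_b)) / 3.0
```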

Table 2.

SSIMs between the original images and the resulting images with respect to Figure 6.

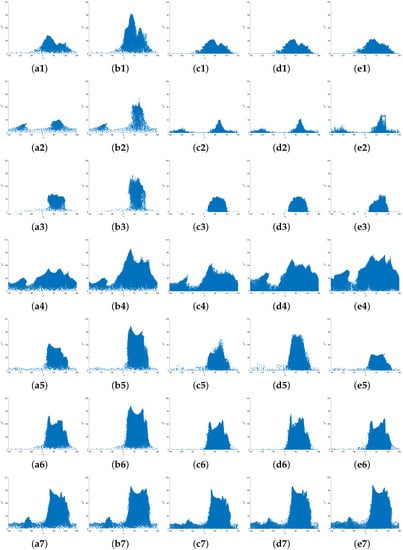

Figure 7 shows scatter plots of hue and chroma corresponding to Figure 6. The proposed method enhances the chroma within a limited range of hues. However, because of the relationship between the RGB and CIELAB color spaces, the range of possible values of $C^*$ varies depending on $h$. Therefore, $C^*$ does not increase uniformly in some areas.

Figure 7.

Scatter plots of hue and chroma corresponding to Figure 6.

Furthermore, Scheffé’s paired comparison test [24,25] was conducted as a subjective evaluation of the resulting images in Figure 6. Randomly selected pairs of images were placed on the left and right. Six examinees (average age 20.5 years; four females and two males) judged which image was preferable as a food image. For each pair, the examinees selected one score on a rating scale, where higher values indicate that the right image is preferable to the left image. The yardstick method was used to obtain the evaluation value of each image. Table 3 shows the evaluation values obtained by Scheffé’s paired comparison test; a higher value indicates a higher evaluation of the image. Table 3 shows that the proposed method is superior to the comparison methods except for image 4.

Table 3.

Evaluation values by Scheffé’s paired comparison test with respect to Figure 6.

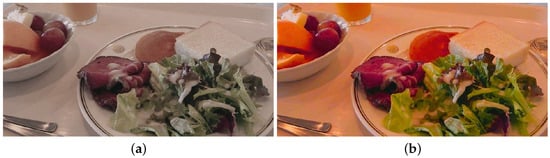

3.2. Other Applications

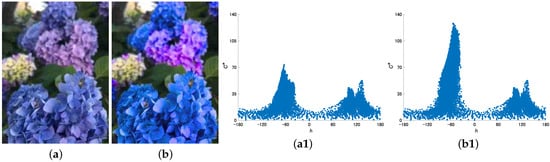

Figure 8 shows the experimental results for a flower image. Among the parameters, only the target hue was changed, to −60°, based on the color information of the original image. The figure shows that the proposed method naturally highlights almost only the color of the blue flowers.

Figure 8.

Experimental results. (a) Original image and its scatter plot of hue and chroma (a1). (b) Resulting image using the proposed method with Equation (6) and its scatter plot of hue and chroma (b1).

3.3. Computational Load

Table 4 shows the execution times required to obtain Figure 6 on an Intel(R) Core(TM) i5-5200U CPU with 8 GB of RAM using MATLAB R2020a. The computational cost of the proposed method is relatively high because of the color space conversions involved. However, the current implementation is still naive, and we expect that substantial further acceleration is possible. The MATLAB code of the proposed method is available at https://github.com/ta850-z/limited_hues_enhancement (accessed on 20 November 2022).

Table 4.

Execution times (s) required to obtain Figure 6.

4. Conclusions

This paper proposed a color image enhancement method that focuses on limited hues. The degree of chroma enhancement is also adjusted according to the magnitude of the current chroma to avoid unnatural coloring of near-achromatic colors. Experiments on actual food images confirmed that the proposed enhancement is effective. Future work will aim to improve the proposed method by utilizing the color information of the original image.

Author Contributions

Conceptualization, T.A. and N.S.; methodology, T.A., N.S., K.K. and C.H.; software, T.A. and N.S.; validation, K.K. and C.H.; formal analysis, T.A. and N.S.; investigation, K.K. and C.H.; data curation, T.A. and C.H.; writing—original draft preparation, T.A.; writing—review and editing, T.A.; funding acquisition, T.A. and N.S. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by JSPS KAKENHI Grant Number 22K12097.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Naik, S.K.; Murthy, C.A. Hue-preserving color image enhancement without gamut problem. IEEE Trans. Image Process. 2003, 12, 1591–1598.

- Yang, S.; Lee, B. Hue-preserving gamut mapping with high saturation. Electron. Lett. 2013, 49, 1221–1222.

- Nikolova, M.; Steidl, G. Fast hue and range preserving histogram specification: Theory and new algorithms for color image enhancement. IEEE Trans. Image Process. 2014, 23, 4087–4100.

- Kamiyama, M.; Taguchi, A. Hue-preserving color image processing with a high arbitrariness in RGB color space. IEICE Trans. Fundam. 2017, 100, 2256–2265.

- Kinoshita, Y.; Kiya, H. Hue-correction scheme based on constant-hue plane for deep-learning-based color-image enhancement. IEEE Access 2020, 8, 9540–9550.

- Inoue, K.; Jiang, M.; Hara, K. Hue-preserving saturation improvement in RGB color cube. J. Imaging 2021, 7, 150.

- Kurokawa, R.; Yamato, K.; Hasegawa, M. Near hue-preserving reversible contrast and saturation enhancement using histogram shifting. IEICE Trans. Inf. Syst. 2022, 105, 54–64.

- Zhou, D.; He, G.; Xu, K.; Liu, C. A two-stage hue-preserving and saturation improvement color image enhancement algorithm without gamut problem. IET Image Process. 2022, 1–8.

- Chien, C.L.; Tseng, D.C. Color image enhancement with exact HSI color model. Int. J. Innov. Comput. Inf. Control. 2011, 7, 6691–6710.

- Taguchi, A.; Hoshi, Y. Color image enhancement in HSI color space without gamut problem. IEICE Trans. Fundam. 2015, 98, 792–795.

- Kinoshita, Y.; Kiya, H. Hue-correction scheme considering CIEDE2000 for color-image enhancement including deep-learning-based algorithms. APSIPA Trans. Signal Inf. Process. 2020, 9, 1–10.

- Li, G.; Rana, M.A.; Sun, J.; Song, Y. Real-time image enhancement with efficient dynamic programming. Multimed. Tools Appl. 2020, 79, 1–21.

- The Code for the Dynamic Programming Approach Developed for the Enhancement of Color Images. Available online: https://github.com/yinglei2020/YingleiSong (accessed on 15 September 2022).

- Azetsu, T.; Suetake, N. Hue-preserving image enhancement in CIELAB color space considering color gamut. Opt. Rev. 2019, 26, 283–294.

- Azetsu, T.; Suetake, N. Chroma enhancement in CIELAB color space using a lookup table. Designs 2021, 5, 32.

- The Code for Chroma Enhancement in CIELAB Color Space Using a Lookup Table. Available online: https://github.com/ta850-z/color_image_enhancement (accessed on 21 May 2021).

- Mizokami, Y.; Werner, J.S.; Crognale, M.A.; Webster, M.A. Nonlinearities in color coding: Compensating color appearance for the eye’s spectral sensitivity. J. Vis. 2006, 6, 283–294.

- Fairchild, M.D.; Pirrotta, E. Predicting the lightness of chromatic object colors using CIELAB. Color Res. Appl. 1991, 16, 385–393.

- Nayatani, Y. A colorimetric explanation of the Helmholtz-Kohlrausch effect. Color Res. Appl. 1998, 23, 374–378.

- Fairchild, M.D. Color Appearance Models, 3rd ed.; Wiley: Chichester, UK, 2013.

- SnapDish. Available online: https://snapdish.co (accessed on 10 May 2021).

- CIE Publication, Colorimetry, no. 15; CIE Central Bureau: Vienna, Austria, 2004.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Engeldrum, P.G. Psychometric Scaling: A Toolkit for Imaging Systems Development; Imcotek Press: Winchester, UK, 2000.

- Takagi, H. Practical statistical tests machine learning-III: Significance tests for human subjective tests. Inst. Syst. Control. Inf. Eng. 2014, 58, 514–520. (In Japanese)

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).