Abstract

A retinal vessel analysis is a procedure that can be used as an assessment of risks to the eye. This work proposes an unsupervised multimodal approach that improves the response of the Frangi filter, enabling automatic vessel segmentation. We propose a filter that computes pixel-level vessel continuity while introducing a local tolerance heuristic to fill in vessel discontinuities produced by the Frangi response. This proposal, called the local-sensitive connectivity filter (LS-CF), is compared against a naive connectivity filter to the baseline thresholded Frangi filter response and to the naive connectivity filter response in combination with the morphological closing and to the current approaches in the literature. The proposal was able to achieve competitive results in a variety of multimodal datasets. It was robust enough to outperform all the state-of-the-art approaches in the literature for the OSIRIX angiographic dataset in terms of accuracy and 4 out of 5 works in the case of the IOSTAR dataset while also outperforming several works in the case of the DRIVE and STARE datasets and 6 out of 10 in the CHASE-DB dataset. For the CHASE-DB, it also outperformed all the state-of-the-art unsupervised methods.

1. Introduction

The retina is a multi-layered tissue of light-sensitive cells that surrounds the posterior cavity of the eye where light rays are converted into electrical neural signals for interpretation by the brain. One of the most important retinal diseases is diabetes, which can result in blindness [1].

A retinal vessel analysis is a medical procedure that can be used as an assessment of risks to the eye and other organs. Retinal vessels contain some of the narrowest vessels of the human body and its analysis can lead to the perception of blood vessel damage caused by early stage metabolic diseases, such as diabetes. It is also possible to estimate the progression of diabetic retinopathy by measuring the rate of dilation of the vessels in response to a flickering light [2].

Fundus imaging is one of the primary methods of screening for retinopathy. Recent advances in digital imaging and image processing resulted in the widespread use of computerized image analysis techniques in all areas of medical sciences, including ophthalmology. Several parameters can be obtained from the retinal vessel structure, such as changes in the thickness of the vessels, the curvature of the vessel structure, and the arteriolar-to-venular ratio (AVR) [1].

These parameters can be computed after an adequate automatic segmentation of the vessel structure [3], which is what we propose in this work. In practice, the vasculature of the eye is still manually segmented or measured by experts, which is a mundane and laborious task. Moreover, segmentation requires expertise and a considerable degree of attention and time. However, even in the case of clinical experts, retinal vessel annotation is prone to human errors and subjectiveness, lacking repeatability and reproducibility. The task becomes even harder in the presence of retinal pathologies, e.g., exudates and hemorrhages [4].

In this work, we improve the popular Frangi filter, a popular vessel segmentation approach, by capitalizing on a simple yet powerful concept called connectivity [4]. The usage of connectivity information mimics the momentum of a brush, instead of evaluating pixels on their own. In a previous work, we associated this technique to a coupled region-growing and machine learning [5,6] approach, creating a unique framework for vessel segmentation called ELEMENT. However, as ELEMENT is based on machine learning, it requires training phases that use segmented vessels annotated by specialists. In the case of a shortage of specialists for a certain image modality, vessels cannot be segmented by ELEMENT.

This work, on the other hand, focuses on improving classical image filters, such as the Frangi filter. We propose two main improvements called the connectivity filter (CF) and the local-sensitive connectivity filter (LS-CF), which are not tied to any machine learning concept, being completely unsupervised. The LS-CF is based on the Frangi filter output and on a heuristic. As it works on top of the Frangi filter, the approach is also multimodal.

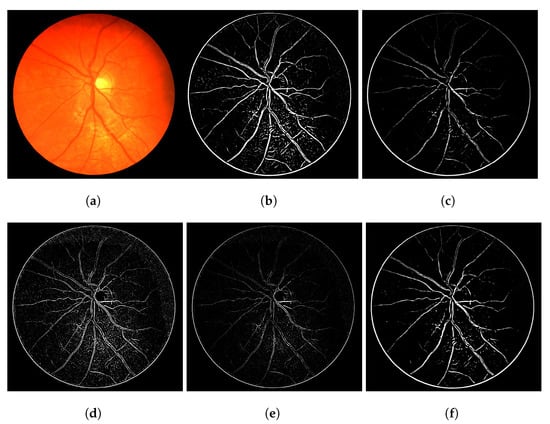

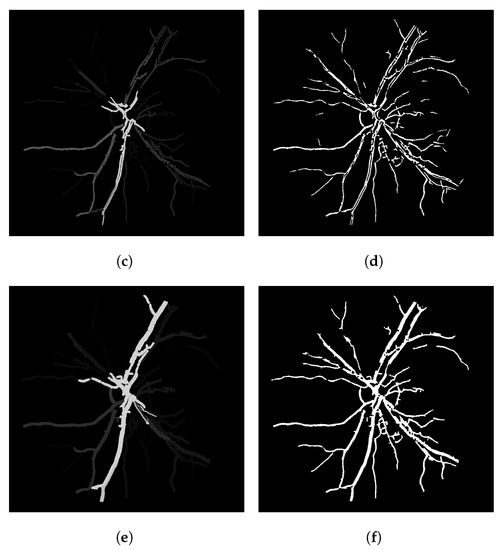

The heuristic for this proposal consists of associating a score of connectivity to each pixel of the image. First, we obtain a binary thresholded response of the Frangi filter (other thresholded binary vessel images can be used). After the threshold, the Frangi response generates either an overly connected segmentation with a lot of noise or a sparse segmentation with disconnected and invisible vessel branches. These variations can be seen in Figure 1. Neither of these scenarios (b and f) nor the results in-between (c, d and e) are desirable in terms of vessel segmentation, which is a binary adequate response.

Figure 1.

Overall variations of the Frangi filter gray-level response. (a) Image 11L from the CHASE-DB dataset. (b) Thicker vessel caliber response, which suits to the reality of the vessel, but contains several artefacts in non-vessel areas. (c) Smoother, less-noisy overall response but erases parts of important vessel branches. (d) Very noisy response that highlights most parts of the vessels but is more responsive to the edges of the vessel branches in contrast to their interior. (e) Less-noisy version of -e- but still with the same vessel caliber issue. (f) Less noisy version of -b- but looses more vessel information.

The CF and LS-CF receive the thresholded binary Frangi response as input and produce a gray-level response based on the assumption that parts that yield a great connectivity score are most probably the correctly segmented parts of existing vessels. The connectivity score is computed by recursively iterating over connected pixels. We start by walking over the vessel pixels, and the longer we travel over these connected pixels, the greater the score of that respective pixel. If a pixel is connected to 100 other pixels, it will receive a brighter intensity compared to a pixel that is connected to just 10. We later use this information to improve the disconnections of vessels. Pixels that are not segmented as vessels that are closer to highly connected vessels’ pixels (brighter regions) are more likely to be vessels and are more likely to have been misclassified as a non-vessel.

The next section covers the medical aspects of the human eye and its retina, later addressing works in the literature that proposed automated vessel segmentation. Section 3 covers the proposed methodology, where we describe the two newly proposed unsupervised algorithms. Section 4 shows the obtained visual and numerical results, and Section 5 addresses the conclusions and future work.

2. Literature Review

A recent study [7] observed that the flicker-induced dilatation of the retinal arterioles is significantly reduced in patients with chronic heart failure compared to patients with cardiovascular risk factors and healthy controls. Computer-based segmentations can also assist with locating and identifying stenoses within the retinal or cardiac vessel structure [8]. Furthermore, the arterial dilation is shown to be weaker in patients with Alzheimer’s disease when compared to a control group. Patients with a mild cognitive impairment also show weaker dilation responses but not as significant as with Alzheimer’s [9].

The retinal microcirculation is earlier affected in the presence of atherosclerosis, and the retinal vessel caliber is an emerging cardiovascular risk factor. Obesity is also associated with vascular dysfunction. The mean arteriolar-to-venular diameter ratio is impaired in obese subjects when compared to lean cases. Overall, cardiovascular evaluations are associated to the response of the retinal vessels [10].

Stergiopulos et al. [11] focused on modeling the blood flow within the vessels to automatically locate and quantify the degree of severity of stenoses. However, prior to this modeling, vessels were manually segmented. All these highlighted health markers justify concentrating efforts toward automatic vessel segmentation approaches.

The Frangi filter is a popular vessel-enhancing technique proposed in [12,13] that works with a broad extension of modalities, such as X-ray and retinal fundus images. The Frangi filter is based on the Hessian matrix [14]. As defined by the authors [12], their approach searches for tubular geometrical structures. The method uses the eigenvalues of the Hessian matrix to locally determine the likelihood of a vessel. Similar filters are also called Hessian filters in the literature [15,16].

Approaches to the vessel segmentation problem can be divided into two general categories: (1) unsupervised, the ones that do not require training data, and in most cases, these are subsequent applications of image filters; and (2) supervised, which require training information and use machine learning techniques. Usually, well-constructed supervised learning methods that use the response of filters, such as the Frangi, as input will more frequently outperform pure image processing techniques [4].

Unsupervised approaches are frequently based on the Hessian matrix or Frangi [17,18]. However, they can also use mathematical morphology [5,19], region growing, Fourier transform [20], etc. Some methods in the literature are based on deep learning approaches [21]. Some also combine classical filters with supervised learning, usually yielding the best results [4,22,23,24].

This work focuses primarily on unsupervised techniques, as they do not require training data and can be used as a feature in machine learning approaches. The state-of-the-art and most recent unsupervised approaches in the literature are the works of Memari et al. [25], Tavakoli et al. [26] and Mahapatra et al. [27].

Memari et al. [25] used a complex combination of several techniques: image pre-processing (median filtering, CLAHE), mathematical morphology, the Gabor filter, Frangi filter, level set and a clustering algorithm. Tavakoli et al. [26] used Radon transform and mathematical morphology. Mahapatra et al. [27] also used the Frangi filter, associated to a clustering algorithm. The Frangi filter is prominent in the state of the art when it comes to unsupervised techniques (and also in supervised cases, such as in [4]).

Although the approach of Memari et al. [25] used the level set as one of their steps, which reminds one of the proposal of this work and iterates over the pixel intensities, none of these three state-of-the-art approaches is based on the connectivity of pixels. The first connectivity image filter in the literature is proposed in this work. Although we do use it with vessels, the connectivity filter can also be applied to other application domains, such as a feature descriptor, by adapting the idea to gray-level and color images.

Furthermore, these three state-of-the-art articles primarily focus on the DRIVE and STARE datasets. In this work, we test our proposal with the DRIVE, STARE, CHASE-DB and IOSTAR public datasets, and we also test the performance of the approach on X-ray angiograms (OSIRIX), which is a completely different imaging technique. One major contribution of this work is the multimodal aspect that is confirmed by our experiments. Our proposal also outperformed the state of the art [25,26] for the CHASE-DB dataset. The proposed approach is also cleaner than the approach of Memari et al. [25] that used a combination of several techniques, which leads to several adjustments of parameters and difficulties in replication.

3. Proposed Methodology

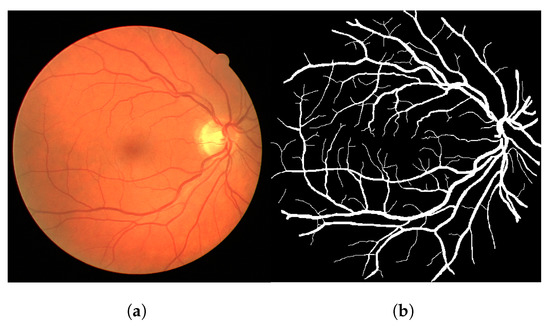

The methodology pipeline consists of converting colored images to gray images using the green channel, which is commonplace in the literature. Later, we apply the Frangi filter to the image using the MATLAB implementation. The chosen input parameters for the Frangi algorithm vary a little bit across different modalities and were adjusted empirically (per modality) to return acceptable responses (average of 5 combinations of parameters per dataset). We did not overly adjust the parameters to avoid overfitting to a certain modality. The used parameters can be found at [28]. Figure 2a shows an example of the input image and its ground-truth pair, extracted from the DRIVE dataset.

Figure 2.

Image 02 from the test folder of the DRIVE dataset (a) and its ground truth (b). (a) Input image. (b) Ground truth.

We subsequently apply a threshold operation using the gray-level 100, which was empirical, chosen by visual subjective observation of 10 samples. This number could be chosen based on an optimization of the accuracy. However, we discarded this idea as this could lead to overfitting to the image data that we have, where the methodology could not generalize well to other images. We used this same threshold for all modalities and images in this work. To sum up, if the pixel value is greater than 100, then it is painted white. Otherwise, it is painted black. The result of this threshold operation is shown in Figure 3b.

Figure 3.

The Frangi filter response (a) and its thresholded version at intensity 100 (b). (a) Frangi filter response. (b) Frangi filter response after threshold.

At last, the proposed connectivity filter is applied to the previous thresholded response. The connectivity filter receives as input a binary image and produces as output a gray-level image containing the connectivity scores for each connected component of the image (Figure 4a). To generate the final segmentation mask, we apply a threshold operation for gray levels greater than 1 (shown in Figure 4b). If we set this threshold to 0, the entire image is painted white. If we set it to 1, it paints white all the pixels that are associated to a connectivity score, irrespective if it is a low or a high connectivity score. A single pixel that is isolated from every other vessel pixel has connectivity score 1, so they are painted white as well. When we select the gray levels greater than 1, we remove these pixels that are noise and are separated from other vessels. This threshold can be adjusted for different segmentation scenarios. Figure 4b represents the final segmentation result for the input image shown in Figure 2.

Figure 4.

The connectivity filter output and its thresholded version. (a) Connectivity filter. (b) Thresholded version () of (a).

Figure 4a clearly shows that the highly connected components appear brighter (with a larger associated connectivity score). Some parts of the vessels shown in Figure 4b cannot be seen in Figure 4a because their gray-level value is close to 0 (black), but these elements are there. Figure 4b shows the main vessel branches but also shows a lot of disconnections. To improve this result further, we propose the more intelligent local-sensitive connectivity filter. At total, we propose two algorithms in this work: CF and its improved version called LS-CF.

The connectivity filter (CF) shown in Figure 4a can be represented as a recursive function that iterates over the neighborhood of a pixel, just like a flood-filling approach, while also keeping and incrementing a connectivity associated to that pixel. To obtain the score for a certain pixel, the function shown in Algorithm 1 is triggered. As the idea is to spread the movement as a flood filling, a binary matrix can be used to mark visited pixels in order to avoid recursive repetitions.

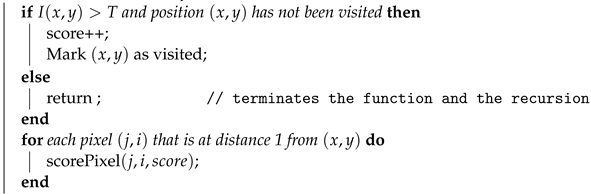

| Algorithm 1 Connectivity Filter (CF): The recursive flood-filling function that calculates the connectivity scores and outputs the gray-level connectivity image response. |

|

|

The CF algorithm, although recursive, was capable of calculating the connectivity scores for all the images used in this work in less than 1 min (38 s in avg for one image of the DRIVE dataset). Algorithm 1 outputs the gray-level image shown in Figure 4a. A threshold must be further applied to the gray-level response to obtain the binary segmentation mask shown in Figure 4b.

The issue with this naive connectivity filter is clear vessel disconnections. Therefore, we modify this naive CF approach, introducing a tolerance score. Let us consider a pixel that has not been marked as vessel; the idea is to iterate around this pixel while incrementing a tolerance score for each visited neighboring pixel. The idea is to tolerate some error and force the neighborhood iteration to continue. If the surrounding pixel is not a vessel, the connectivity score is incremented until a certain pre-defined tolerance.

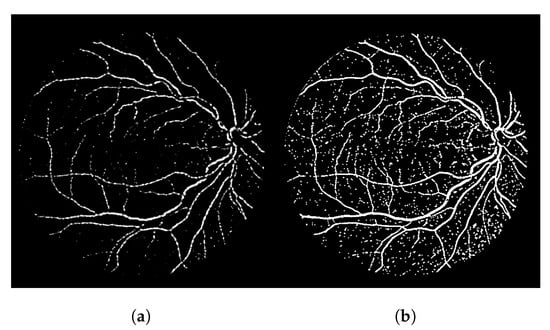

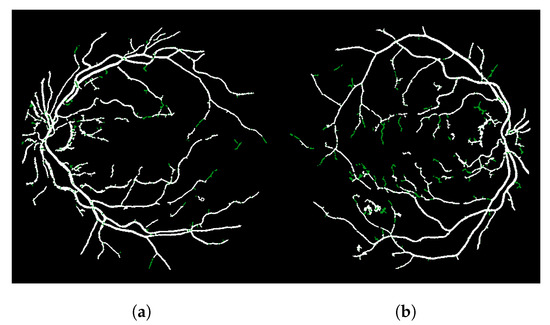

The result of this modified version, called LS-CF, is shown in Figure 5. The pixels in green show what has changed in comparison to the naive CF approach shown in Algorithm 1. This version is sensitive to local connections and is called local-sensitive connectivity filter (LS-CF).

Figure 5.

Improvements obtained with the locally sensitive version of the connectivity filter (LS-CF). (a) Image 01 of the DRIVE dataset. (b) Image 14 of the DRIVE dataset.

The great advantage of this heuristic is that it reconnects the vessel branches in any orientation while preserving the vessel thickness, without losing caliber and shape information. Algorithm 2 shows the LS-CF pseudo-code. The algorithm receives a distance to control the neighborhood iteration when computing the tolerance score. Furthermore, it also receives two constants and . The is not strictly necessary, but its usage can reduce the time required for computation. Changes on both of these constants can alter the final result.

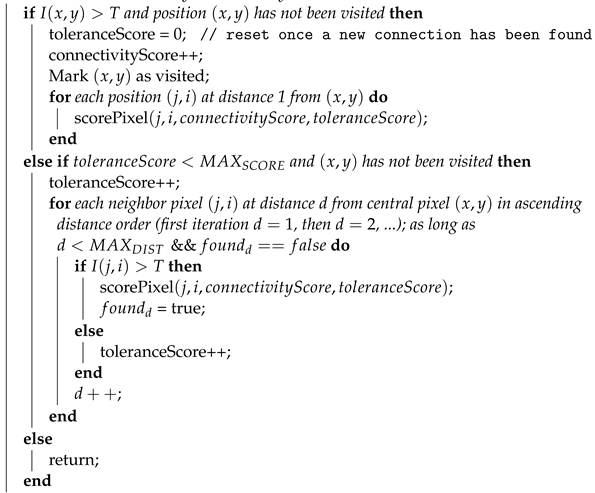

| Algorithm 2 Local-Sensitive Connectivity Filter (LS-CF): The local-sensitive version of the connectivity filter. |

|

|

For the neighborhood iterations in Algorithms 1 and 2, we used the Rodrigues distance [29] shown in Equation (1) (), as it is easy to iterate over the pixel neighborhood iteratively. The algorithm for the discrete iteration can be found in [29]. However, other distances can be used as well, and they will return different segmentation results [29,30].

The core idea of the algorithm is to travel on top of vessel branch. Eventually, when it encounters non-vessel pixels, the tolerance keeps it going, as it may find vessel pixels in subsequent iterations. If they do, all the pixels that are visited when the algorithm is moving its coordinate are painted as vessel.

It is best to keep the same “momentum” of the stroke. If the algorithm is walking on top of a vessel that is going upward, in each recursive call of the function “scorePixel”, we should start visiting pixels that belong to the upward motion. This emulates a brush stroke. The rule is the same for the rest of the orientations.

The main input parameter for this algorithm that can lead to different results are the and variables. These two variables control by how much the filter can “see” future iterations. If we increase both, we tend to have longer run times and more connected pixels. These variables are alike, and they could be written one based on the other, we just separated the concept in two variables to make it more clear, but both control by how much each pixel keeps growing and trying to reconnect to other vessel pixels.

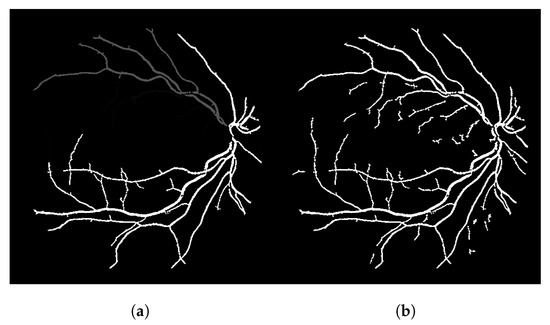

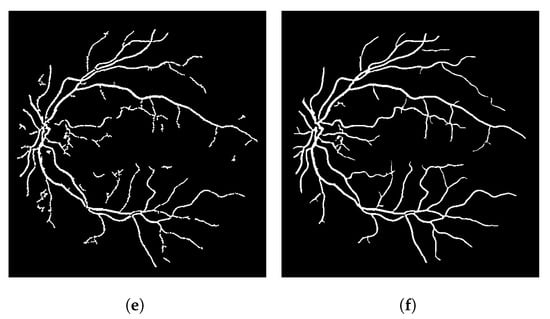

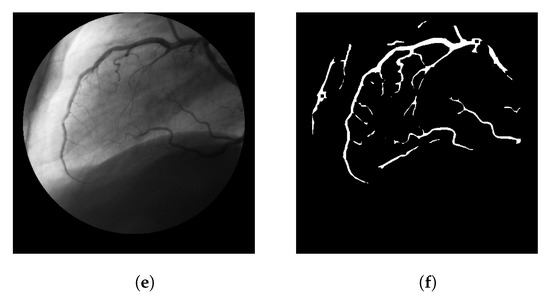

Figure 6 shows the main steps of the LS-CF approach. If we increase the parameter as shown in Figure 6e, we obtain more “packed” connections, but we also lose some vessel information, most probably because we obtain higher connectivity scores in this case and, therefore, the noisy disconnected branches get erased after the threshold operation. Figure 6e, when compared to Figure 6c, shows the longer and brighter main vessel branch, horizontally centered, that starts on the top and stops at the bottom of the image.

Figure 6.

Steps and parameter analysis of the LS-CF algorithm. Image 14L of the CHASE-DB dataset. (a) The Frangi filter response. (b) The thresholded Frangi filter response shown in (a). (c) The LS-CF algorithm ( and ). (d) The thresholded version of the LS-CF response shown (c). (e) The LS-CF algorithm ( and ). (f) The thresholded version of the LS-CF response shown (e).

4. Performed Experiments

The experiments were performed on a total of five datasets that contain distinct image resolutions and a total of three different types of imaging modalities: retinal fundus images, laser ophthalmoscope images and cardiac X-ray angiography. The datasets used in this section are identified by their names, as follows: (1) the DRIVE dataset contains 20 retinal fundus images, (2) STARE also contains 20 retinal fundus images, (3) CHASE-DB contains 28 retinal fundus images, (4) IOSTAR contains 24 retinal scanner laser ophthalmoscope images and (5) OSIRIX contains 7 cardiac X-ray coronary images. More details about these datasets can be found in [4].

The method proposed in this work is unsupervised and therefore no training phase is required. The approach can segment vessels in different modalities without training. In terms of the experiments, for the datasets that have a folder separation (i.e., train and test), we calculated the results using the images in the test folder. For the datasets that do not originally have this separation proposed by their creators, we used the entire dataset.

Along with the CF and LS-CF proposals, we also evaluated a third possibility. This third possibility was created to measure and show that the local-sensitive connectivity filter is indeed more sophisticated than coupling the naive connectivity filter to a morphological closing, aiming to fill in the holes produced by the thresholded Frangi filter response and/or recreate vessel disconnections.

In this coupled approach, we use the morphological closing operation, which is composed of a morphological dilation followed by a morphological erosion [5]. The closing operation, by itself, is an operation used to remove holes from binary images and to reconnect structures. The used structuring element was the standard cross-shaped structuring element (distance of 1 pixel). Although the morphological approach can perform the reconnection of vessels to some extent, actual vessels can also be mistakenly connected to noise or non-vessel pixels.

The steps in this third approach are: (1) extract the green layer, (2) apply the Frangi filter, (3) threshold the result, (4) apply the connectivity filter, (5) threshold the result again and (6) apply the morphological closing. The closing operation does improve the results and disconnections in some cases, but it also increases the false positive rate in other situations, decreasing the overall accuracy. The mathematical morphology approach is not capable of achieving a solution at the same level of the result obtained with the LS-CF algorithm. We report this morphological approach in the tables of this section as “Connectivity Filter + Morphology Closing”.

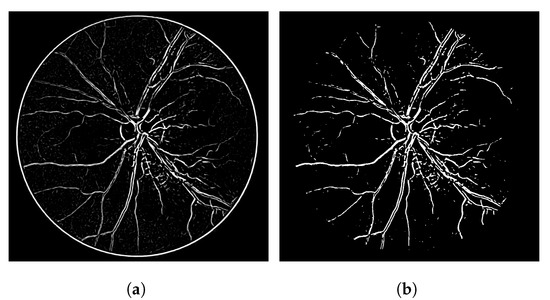

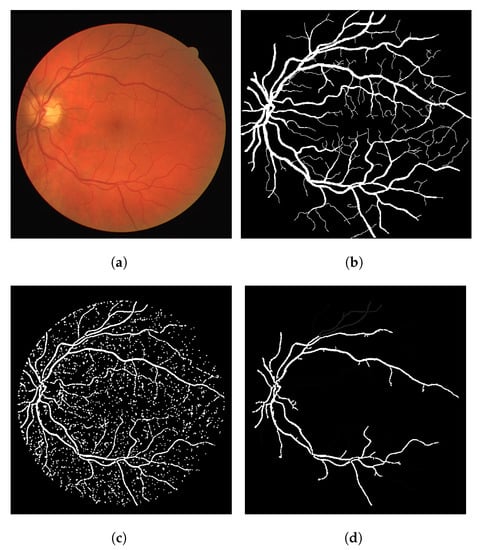

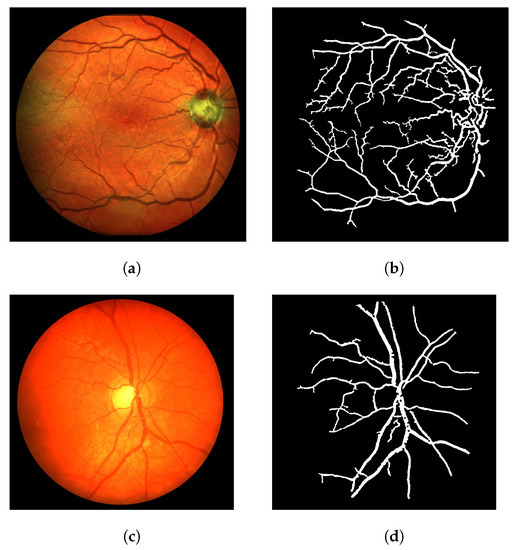

In terms of the visual results, Figure 7 compares the thresholded result from the Frangi filter with the result obtained with the proposed thresholded CF filter. It is clear that the CF removes the noise that originates from the Frangi response. The accuracy obtained with Figure 7 was 92.39% and the one obtained with the CF was 94.97% for this image.

Figure 7.

Results using an image from the DRIVE dataset. (a) Image 05—DRIVE dataset. (b) Ground truth. (c) Thresholded Frangi. (d) Gray-level CF response (proposal). (e) Thresholded CF response (proposal). (f) LS-CF response (proposal).

The gray-level response of the connectivity filter is also interesting due to another property. This response shows the most important/core vessels (usually the thicker ones) of the retina. The response shown in Figure 7d is the response of the Frangi filter shown in Figure 7c after the application of the connectivity filter. The longer the vessel branch, the brighter the pixel color. This image is used to later generate the result in (e). The LS-CF response shown in (f) is an entirely different approach that changes how the connectivity score is computed, as shown in Algorithm 2.

The algorithm is able to achieve good results with the DRIVE dataset in general. No weird effect or strong segmentation error was observed. Image 08 is the only image that resulted in small circular artifacts, as shown in Figure 8 (out of the 20 images). This is one of the few unhealthy retinas in the DRIVE dataset. In this case, the Frangi filter response was able to achieve 92.22% accuracy, while the CF response achieved 92.63% and the LS-CF 92.60%, both improving the Frangi response.

Figure 8.

The worst numerical and visual segmentation result for the DRIVE dataset. (a) Image 08 from the DRIVE dataset. (b) Ground truth of image 08. (c) Thresholded Frangi response of image 08. (d) Gray-level CF response of image 08 (proposal). (e) Thresholded CF response of image 08 (proposal). (f) LS-CF response of image 08 (proposal).

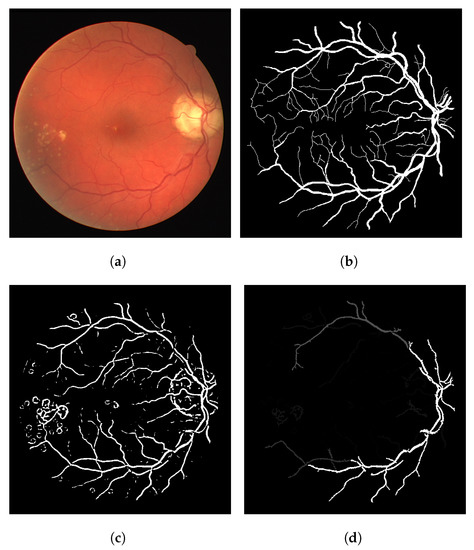

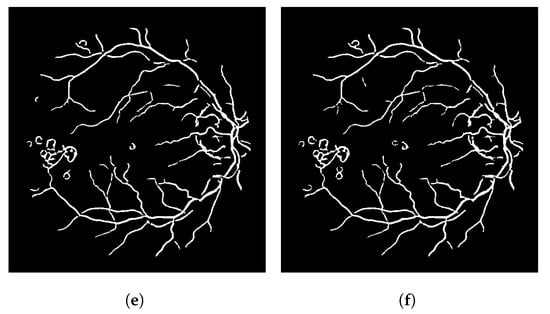

Figure 9 shows the segmentation results of the LS-CF for a variety of datasets used in this work. The parameters should be adjusted when the modality is changed. However, these adjustments mostly relate to the response of the Frangi filter. In some cases, the Frangi should be adjusted to be more or less responsive to thinner or thicker vessels.

Figure 9.

Visual performance of the LS-CF in a variety of datasets. (a) Image 02 from the IOSTAR dataset (retinal scanner laser ophthalmoscope). (b) Segmentation result of image 02 from the IOSTAR dataset using the proposed LS-CF. (c) Image 11R from the CHASE-DB dataset. (d) Segmentation result of image 11R from the CHASE-DB dataset using the proposed LS-CF. (e) Image 4 from the OSIRIX X-ray angiographic dataset. (f) Segmentation result of image 4 from the OSIRIX X-ray angiographic dataset using the proposed LS-CF.

In what follows, we compare the numerical results obtained throughout the literature, a baseline method that consists of a thresholded Frangi filter response and three proposed approaches: (1) the connectivity filter, shown in Algorithm 1, (2) the naive connectivity filter + morphological closing to fill in the holes generated by the thresholded response and (3) the local-sensitive connectivity filter, shown in Algorithm 2.

Table 1 compares the results using the DRIVE dataset. In terms of the accuracy, the proposed LS-CF obtained an average of 95.77% accuracy and was able to outperform 12 out of 18 unsupervised methods. Table 2 shows the results obtained with the STARE dataset. In this case, the proposed approach was able to outperform 13 out of 14 unsupervised approaches.

Table 1.

Comparison using the DRIVE dataset.

Table 2.

Comparison using the STARE dataset.

The results obtained with the CHASE-DB dataset are shown in Table 3. The LS-CF outperformed all the unsupervised methods in the literature and outperformed two out of seven supervised approaches. This is the only case where the naive connectivity filter + morphology (morphological closing) obtained a slightly better accuracy when compared to the proposed LS-CF.

Table 3.

Comparison using the CHASE-DB dataset.

Table 4 compares the results using the IOSTAR dataset. Our approach was still able to outperform all works except our previous work that uses connectivity and machine learning—and is supervised.

Table 4.

Comparison using the IOSTAR dataset.

At last, Table 5 shows the results obtained with the OSIRIX dataset. In contrast to the previous comparisons, this is a comparison that is not performed using the same dataset, as previous articles in the literature did not publicly provide X-ray angiographic datasets. However, we are still able to measure how the LS-CF would most probably perform in these cases. We were also able to outperform all works in the literature.

Table 5.

Comparison using the X-ray angiogram (OSIRIX) dataset.

5. Discussion

As shown in the results, the LS-CF is not equivalent to a CF coupled with a morphological closing. In fact, the LS-CF achieves the highest performance values in nearly all cases (with the only exception of Table 3). This is expected, as the usual mathematical morphology techniques are global techniques, i.e., they see and operate the entire image as a whole. In contrast to the LS-CF approach, this does not locally connect small vessel parts. The contrast here is similar to a local vs a global search [82].

Morphological closing consists of dilation plus erosion. In a practical view, the dilation fills some of the vessel disconnections. Later, however, when the erosion is subsequently applied, the disconnections are introduced again. This is expected as these disconnections are not quite literally “holes” in the image, and the closing operation works best in this case.

This problem of reintroducing the disconnections is only solved when more dilations are applied to the image (e.g., two dilations and one erosion). However, this thickens the vessel caliber and hence produces a lower accuracy and unrealistic visual segmentations. Often, it also introduces a lot of extra false positives to the results, as it begins to merge separated vessels together.

6. Conclusions

Automatic vessel segmentation is a challenging problem, and despite the amount of works in the literature, there is still room for improvement. Annotations provided by specialists are not always available, which denies the reproduction and utilization of supervised approaches. In some cases, they also need to be adjusted, i.e., by removing or including new features in order to provide an adequate response for a new modality. In contrast, unsupervised approaches are more adaptable for a vast amount of image modalities after a few adjustments of parameters and do not require training.

Although powerful and widely used, the Frangi filter still has its weaknesses, such as creating holes in the segmented vessels and discontinuities. In this work, we focus these issues raised by the Frangi filter and provide an enhancement to fill the discontinuities, improving accuracy and visual segmentations. Our approach is simple and just uses two filters (Frangi + LS-CF) to obtain the final result.

The proposed methodology consists of assigning vessel continuity scores to the pixels of a binary image (e.g., a thresholded Frangi). Longer connected components appear brighter. The connectivity score image is then thresholded to obtain the final result. The LS-CF algorithm introduces a tolerance to keep the algorithm traveling over the image pixels, attempting to re-link the vessel branches. Without the tolerance, the CF algorithm does not re-link the branches as expected. At the end, the small vessel components are deleted (usually noise) and we have nice, connected vessel branches.

This idea builds upon the hypothesis of the influence of each pixel to its surrounding pixels. Classical machine learning [83] is not strictly capable of using this connectivity data as a feature. In a previous work [4], we combined this connectivity idea to machine learning algorithms, producing some of the best results in the literature for vessel segmentation. However, in this new work, we create an unsupervised approach based on a very similar idea, showing that connectivity can be powerful enough to provide accurate segmentations.

The experiments were performed using a total of five datasets, where the proposed approaches obtained very competitive results in all cases. For the IOSTAR and OSIRIX datasets, our unsupervised approach was able to outperform all but our previous approach. When we analyze the unsupervised methods, our approach outperforms most of them in all dataset cases. In addition, the enhancements produced with our approach were visually better than the baseline thresholded Frangi response in all cases for all used datasets.

We did not perform an extensive tuning of the parameters in this work. For most cases, we visually tested some combinations and chose the one that seemed to be better for a few selections of images (usually 5 to 10). As a future work, a better optimization of parameters can be performed in order to enhance the obtained results as well as combining the proposed connectivity filters with machine learning, which would probably result in a very competitive methodology for vessel segmentation. A more robust pre-processing after the application of the Frangi and before the application of the CF and/or LS-CF can also improve the segmentation results further.

Author Contributions

Conceptualization, E.O.R., P.L.; methodology, E.O.R.; software, E.O.R., J.H.P.M.; validation, E.O.R., J.H.P.M., L.O.R.; formal analysis, E.O.R., J.H.P.M., M.T., D.C., J.T.O.; investigation, E.O.R., P.L., G.B.; resources, P.L., J.H.P.M., E.O.R.; data curation, E.O.R., J.H.P.M.; writing—original draft preparation, E.O.R., P.L.; writing—review and editing, E.O.R., D.C., M.T., J.T.O., L.O.R., G.B.; visualization, J.H.P.M.; supervision, E.O.R., D.C., M.T., J.T.O.; project administration, E.O.R., P.L.; funding acquisition, E.O.R., P.L., D.C., M.T., J.T.O. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding. The APC was partially funded by Universidade Tecnologica Federal do Parana-Pato Branco.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Miri, M.; Amini, Z.; Rabbani, H.; Kafieh, R. A Comprehensive Study of Retinal Vessel Classification Methods in Fundus Images. J. Med. Signals Sens. 2017, 7, 59–70. [Google Scholar] [PubMed]

- Lim, L.S.; Ling, L.H.; Ong, P.G.; Foulds, W.; Tai, E.S.; Wong, T.Y. Dynamic Responses in Retinal Vessel Caliber With Flicker Light Stimulation and Risk of Diabetic Retinopathy and Its Progression. Investig. Ophthalmol. Vis. Sci. 2017, 58, 2449–2455. [Google Scholar] [CrossRef] [PubMed]

- Lamy, J.; Merveille, O.; Kerautret, B.; Passat, N.; Vacavant, A. Vesselness Filters: A Survey with Benchmarks Applied to Liver Imaging. In Proceedings of the 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021. [Google Scholar]

- Chaudhuri, S.; Chatterjee, S.; Katz, N.; Nelson, M.; Goldbaum, M. ELEMENT: Multi-modal retinal vessel segmentation based on a coupled region growing and machine learning approach. IEEE J. Biomed. Health Inform. 2020, 24, 3507–3519. [Google Scholar]

- Rodrigues, E.O.; Conci, A.; Liatsis, P. Morphological classifiers. Pattern Recognit. 2018, 84, 82–96. [Google Scholar] [CrossRef]

- Porcino, T.; Rodrigues, E.O.; Silva, A.; Clua, E.; Trevisan, D. Using the gameplay and user data to predict and identify causes of cybersickness manifestation in virtual reality games. In Proceedings of the IEEE 8th International Conference on Serious Games and Applications for Health, SeGAH 2020, Vancouver, BC, Canada, 12–14 August 2020. [Google Scholar]

- Nagele, M.P.; Barthelmes, J.; Ludovici, V.; Cantatore, S.; Eckardstein, A.; Enseleit, F.; Lüscher, T.F.; Ruschitzka, F.; Sudano, I.; Flammer, A.J. Retinal microvascular dysfunction in heart failure. Eur. Heart J. 2017, 39, 47–56. [Google Scholar] [CrossRef]

- Arnoldi, E.; Gebregziabher, M.; Schoepf, U.J.; Goldenberg, R.; Ramos-Duran, L.; Zwerner, P.L.; Nikolaou, K.; Reiser, M.F.; Costello, P.; Thilo, C. Automated computer-aided stenosis detection at coronary CT angiography: Initial experience. Eur. Radiol. 2010, 20, 1160–1167. [Google Scholar] [CrossRef]

- Querques, G.; Borrelli, E.; Sacconi, R.; Vitis, L.D.; Leocani, L.; Santangelo, R.; Magnani, G.; Comi, G.; Bandello, F. Functional and morphological changes of the retinal vessels in Alzheimer’s disease and mild cognitive impairment. Sci. Rep. 2019, 9, 63. [Google Scholar] [CrossRef] [PubMed]

- Hanssen, H.; Nickel, T.; Drexel, V.; Hertel, G.; Emslander, I.; Sisic, Z.; Lorang, D.; Schuster, T.; Kotliar, K.E.; Pressler, A.; et al. Exercise-induced alterations of retinal vessel diameters and cardiovascular risk reduction in obesity. Atherosclerosis 2011, 216, 433–439. [Google Scholar] [CrossRef]

- Stergiopulos, N.; Young, D.F.; Rogge, T.R. Computer simulation of arterial flow with applications to arterial and aortic stenoses. J. Biomech. 1992, 25, 1477–1488. [Google Scholar] [CrossRef]

- Frangi, A.F.; Niessen, W.J.; Vincken, K.L.; Viergever, M.A. Multiscale vessel enhancement filtering. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Cambridge, MA, USA, 11–13 October 1998; pp. 130–137. [Google Scholar]

- Moccia, S.; Momi, E.; Hadji, S.; Mattos, L.S. Blood vessel segmentation algorithms—Review of methods, datasets and evaluation metrics. Comput. Methods Programs Biomed. 2018, 158, 71–91. [Google Scholar] [CrossRef] [PubMed]

- Yin, X.; Ng, B.W.H.; He, J.; Zhang, Y.; Abbott, D. Accurate Image Analysis of the Retina Using Hessian Matrix and Binarisation of Thresholded Entropy with Application of Texture Mapping. PLoS ONE 2014, 9, e95943. [Google Scholar] [CrossRef] [PubMed]

- Sato, Y.; Nakajima, S.; Atsumi, H.; Koller, T.; Gerig, G.; Yoshida, S.; Kikinis, R. 3D multi-scale line filter for segmentation and visualization of curvilinear structures in medical images. In Proceedings of the International Conference on Computer Vision, Virtual Reality, and Robotics in Medicine, Genobre, France, 19–22 March 1997; pp. 213–222. [Google Scholar]

- Lorenz, C.; Carlsen, I.C.; Buzug, T.M.; Fassnacht, C.; Weese, J. Multi-scale line segmentation with automatic estimation of width, contrast and tangential direction in 2D and 3D medical images. In Proceedings of the International Conference on Computer Vision, Virtual Reality, and Robotics in Medicine, Genobre, France, 19–22 March 1997; pp. 233–242. [Google Scholar]

- Nugroho, H.A.; Aras, R.A.; Lestari, T.; Ardiyanto, I. Retinal vessel segmentation based on Frangi filter and morphological reconstruction. In Proceedings of the International Conference on Control, Electronics, Renewable Energy and Communications (ICCREC), Yogyakarta, Indonesia, 26–28 September 2017. [Google Scholar]

- Ciecholewski, M.; Kassjański, M. Computational Methods for Liver Vessel Segmentation in Medical Imaging: A Review. Sensors 2021, 21, 2027. [Google Scholar] [CrossRef] [PubMed]

- Odstrcilik, J.; Kolar, R.; Budai, A.; Hornegger, J.; Jan, J.; Gazarek, J.; Kubena, T.; Cernosek, P.; Svoboda, O.; Angelopoulou, E. Retinal vessel segmentation by improved matched filtering: Evaluation on a new high-resolution fundus image database. IEEE Trans. Inf. Technol. Biomed. 2013, 7, 373–383. [Google Scholar] [CrossRef]

- Jiang, H.; He, B.; Fang, D.; Ma, Z.; Yang, B.; Zhang, L. A region growing vessel segmentation algorithm based on spectrum information. Comput. Math. Methods Med. 2013, 2013, 743870. [Google Scholar] [CrossRef] [PubMed]

- Mo, J.; Zhang, L. Multi-level deep supervised networks for retinal vessel segmentation. Int. J. Comput. Assist. Radiol. Surg. 2017, 12, 2181–2193. [Google Scholar] [CrossRef] [PubMed]

- Lupascu, C.A.; Tegolo, D.; Trucco, E. FABC: Retinal Vessel Segmentation Using AdaBoost. IEEE Trans. Inf. Technol. Biomed. 2010, 14, 1267–1274. [Google Scholar] [CrossRef] [PubMed]

- Fu, W.; Breininger, K.; Würfl, T.; Ravikumar, N.; Schaffert, R.; Maier, A. Frangi-Net: A Neural Network Approach to Vessel Segmentation. arXiv 2017, arXiv:1711.03345. [Google Scholar]

- Imran, A.; Li, J.; Pei, Y.; Yang, J.J.; Wang, Q. Comparative Analysis of Vessel Segmentation Techniques in Retinal Images. IEEE Access 2019, 7, 114862–114887. [Google Scholar] [CrossRef]

- Memari, N.; Ramli, A.R.; Saripan, M.I.B.; Mashohor, S.; Moghbel, M. Retinal blood vessel segmentation by using matched filtering and fuzzy c-means clustering with integrated level set method for diabetic retinopathy assessment. J. Med. Biol. Eng. 2019, 39, 713–731. [Google Scholar] [CrossRef]

- Tavakoli, M.; Mehdizadeh, A.; Shahri, R.P.; Dehmeshk, J. Unsupervised automated retinal vessel segmentation based on Radon line detector and morphological reconstruction. IET Image Process. 2021, 15, 1484–1498. [Google Scholar] [CrossRef]

- Mahapatra, S.; Agrawal, S.; Mishro, P.K.; Pachori, R.B. A novel framework for retinal vessel segmentation using optimal improved frangi filter and adaptive weighted spatial FCM. Comput. Biol. Med. 2022, 147, 105770. [Google Scholar] [CrossRef] [PubMed]

- Rodrigues, E.O. Sources and Datasets. 13 October 2022. Available online: https://github.com/Oyatsumi/ConnectivityFilter (accessed on 10 August 2022).

- Rodrigues, E.O. Combining Minkowski and Chebyshev: New distance proposal and survey of distance metrics using k-nearest neighbours classifier. Pattern Recognit. Lett. 2018, 110, 66–71. [Google Scholar] [CrossRef]

- Rodrigues, E.O. An efficient and locality-oriented Hausdorff distance algorithm: Proposal and analysis of paradigms and implementations. Pattern Recognit. 2022, 117, 107989. [Google Scholar] [CrossRef]

- Staal, J.; Abramoff, M.D.; Niemeijer, M.; Viergever, M.A.; Ginneken, B. Ridge-Based Vessel Segmentation in Color Images of the Retina. IEEE Trans. Med. Imaging 2004, 66, 501–509. [Google Scholar] [CrossRef] [PubMed]

- Sheet, D.; Karri, S.P.K.; Conjeti, S.; Ghosh, S.; Chatterjee, J.; Ray, A.K. Detection of retinal vessels in fundus images through transfer learning of tissue specific photon interaction statistical physics. In Proceedings of the IEEE 10th International Symposium on Biomedical Imaging, San Fracisco, CA, USA, 7–11 April 2013. [Google Scholar]

- Al-Rawi, M.; Karajeh, H. Genetic algorithm matched filter optimization for automated detection of blood vessels from digital retinal images. Comput. Methods Programs Biomed. 2007, 87, 248–253. [Google Scholar] [CrossRef]

- Soares, J.V.B.; Leandro, J.J.G.; Cesar, R.M.; Jelinek, H.F.; Cree, M.J. Retinal vessel segmentation using the 2-D Gabor wavelet and supervised classification. IEEE Trans. Med. Imaging 2006, 25, 1214–1222. [Google Scholar] [CrossRef]

- Marin, D.; Aquino, A.; Gegundez-Arias, M.E.; Bravo, J.M. A New Supervised Method for Blood Vessel Segmentation in Retinal Images by Using Gray-Level and Moment Invariants-Based Features. IEEE Trans. Med. Imaging 2011, 30, 146–158. [Google Scholar] [CrossRef]

- Ricci, E.; Perfetti, R. Retinal Blood Vessel Segmentation Using Line Operators and Support Vector Classification. IEEE Trans. Med. Imaging 2007, 26, 1357–1365. [Google Scholar] [CrossRef] [PubMed]

- Maji, D.; Santara, A.; Mitra, P.; Sheet, D. Ensemble of deep convolutional neural networks for learning to detect retinal vessels in fundus images. arXiv 2016, arXiv:1603.04833. [Google Scholar]

- Li, Q.; Feng, B.; Xie, L.; Liang, P.; Zhang, H.; Wang, T. A Cross-Modality Learning Approach for Vessel Segmentation in Retinal Images. IEEE Trans. Med. Imaging 2016, 35, 109–118. [Google Scholar] [CrossRef] [PubMed]

- Fu, H.; Xu, Y.; Wong, D.W.K.; Liu, J. Retinal vessel segmentation via deep learning network and fully-connected conditional random fields. In Proceedings of the IEEE 13th International Symposium on Biomedical Imaging (ISBI), Prague, Czech Republic, 13–16 April 2016. [Google Scholar]

- Liskowski, P.; Krawiec, K. Segmenting Retinal Blood Vessels With Deep Neural Networks. IEEE Trans. Med. Imaging 2016, 35, 2369–2380. [Google Scholar] [CrossRef]

- Fan, Z.; Rong, Y.; Lu, J.; Mo, J.; Li, F.; Cai, X.; Yang, T. Automated blood vessel segmentation in fundus image based on integral channel features and random forests. In Proceedings of the 12th World Congress on Intelligent Control and Automation (WCICA), Guilin, China, 12–15 June 2016. [Google Scholar]

- Yan, Z.; Yang, X.; Cheng, K.T. Pixel-wise losses for deep learning based retinal vessel segmentation. IEEE Trans. Biomed. Eng. 2018, 66, 1912–1923. [Google Scholar] [CrossRef]

- Welikala, R.A.; Foster, P.J.; Whincup, P.H.; Rudnicka, A.R.; Owen, C.G.; Strachan, D.P.; Barman, S.A. Automated arteriole and venule classification using deep learning for retinal images from the UK Biobank cohort. Comput. Biol. Med. 2017, 90, 23–32. [Google Scholar] [CrossRef]

- Jin, Q.; Meng, Z.; Pham, T.D.; Chen, Q.; Wei, L.; Su, R. DUNet: A deformable network for retinal vessel segmentation. Knowl.-Based Syst. 2019, 178, 149–162. [Google Scholar] [CrossRef]

- Orlando, J.I.; Prokofyeva, E.; Blaschko, M.B. A Discriminatively Trained Fully Connected Conditional Random Field Model for Blood Vessel Segmentation in Fundus Images. IEEE Trans. Biomed. Eng. 2017, 64, 16–27. [Google Scholar] [CrossRef] [PubMed]

- Jiang, Y.; Tan, N.; Peng, T.; Zhang, H. Retinal Vessels Segmentation Based on Dilated Multi-Scale Convolutional Neural Network. IEEE Access 2019, 7, 76342–76352. [Google Scholar] [CrossRef]

- Soomro, T.A.; Afifi, A.J.; Gao, J.; Hellwich, O.; Zheng, L.; Paul, M. Strided Fully Convolutional Neural Network for Boosting the Sensitivity of Retinal Blood Vessels Segmentation. Expert Syst. Appl. 2019, 134, 36–52. [Google Scholar] [CrossRef]

- Adeyinka, A.A.; Adebiyi, M.O.; Akande, N.O.; Ogundokun, R.O.; Kayode, A.A.; Oladele, T.O. A Deep Convolutional Encoder-Decoder Architecture for Retinal Blood Vessels Segmentation. In Lecture Notes in Computer Science; Springer: Cham, Switzerland, 2019; pp. 180–189. [Google Scholar]

- Shin, S.Y.; Lee, S.; Yun, I.D.; Lee, K.M. Deep Vessel Segmentation by Learning Graphical Connectivity. Med. Image Anal. 2016, 58, 101556. [Google Scholar] [CrossRef]

- Jebaseeli, T.J.; Durai, C.A.D.; Peter, J.D. Retinal blood vessel segmentation from diabetic retinopathy images using tandem PCNN model and deep learning based SVM. Optik 2019, 199, 163328. [Google Scholar] [CrossRef]

- Lam, B.S.Y.; Gao, Y.; Liew, A.W.C. General Retinal Vessel Segmentation Using Regularization-Based Multiconcavity Modeling. IEEE Trans. Med. Imaging 2010, 29, 1369–1381. [Google Scholar] [CrossRef]

- Zhang, B.; Zhang, L.; Karray, F. Retinal vessel extraction by matched filter with first-order derivative of Gaussian. Comput. Biol. Med. 2010, 40, 438–445. [Google Scholar] [CrossRef] [PubMed]

- Delibasis, K.K.; Kechriniotis, A.I.; Tsonos, C.; Assimakis, N. Automatic model-based tracing algorithm for vessel segmentation and diameter estimation. Comput. Methods Programs Biomed. 2010, 100, 108–122. [Google Scholar] [CrossRef] [PubMed]

- Al-Diri, B.; Hunter, A.; Steel, D. An Active Contour Model for Segmenting and Measuring Retinal Vessels. IEEE Trans. Med. Imaging 2009, 28, 1488–1497. [Google Scholar] [CrossRef]

- Palomera-Perez, M.A.; Martinez-Peres, M.E.; Benitez-Perez, H.; Ortega-Arjona, J.L. Parallel Multiscale Feature Extraction and Region Growing: Application in Retinal Blood Vessel Detection. IEEE Trans. Inf. Technol. Biomed. 2010, 14, 500–506. [Google Scholar] [CrossRef] [PubMed]

- Mendonca, A.M.; Compilho, A. Segmentation of retinal blood vessels by combining the detection of centerlines and morphological reconstruction. IEEE Trans. Med. Imaging 2006, 25, 1200–1213. [Google Scholar] [CrossRef]

- Azzopardi, G.; Strisciuglio, N.; Vento, M.; Petkov, N. Trainable COSFIRE filters for vessel delineation with application to retinal images. Med. Image Anal. 2015, 19, 46–57. [Google Scholar] [CrossRef]

- Zhang, J.; Dashtbozorg, B.; Bekkers, E.; Pluim, J.P.W.; Duits, R.; ter Haar Romeny, B.M. Robust Retinal Vessel Segmentation via Locally Adaptive Derivative Frames in Orientation Scores. IEEE Trans. Med. Imaging 2016, 35, 2631–2644. [Google Scholar] [CrossRef]

- Hossain, N.I.; Reza, S. Blood vessel detection from fundus image using Markov random field based image segmentation. In Proceedings of the 4th International Conference on Advances in Electrical Engineering (ICAEE), Dhaka, Bangladesh, 28–30 September 2017. [Google Scholar]

- Srinidhi, C.L.; Aparna, P.; Rajan, J. A visual attention guided unsupervised feature learning for robust vessel delineation in retinal images. Biomed. Signal Process. Control. 2018, 44, 110–126. [Google Scholar] [CrossRef]

- Samant, P.; Bansal, A.; Agarwal, R. A hybrid filteringbased retinal blood vessel segmentation algorithm. In Computer Vision and Machine Intelligence in Medical Image Analysis; Springer: Singapore, 2019; pp. 73–79. [Google Scholar]

- Karn, P.K.; Biswal, B.; Samantaray, S.R. Robust retinal blood vessel segmentation using hybrid active contour model. IET Image Process. 2018, 12, 440–450. [Google Scholar] [CrossRef]

- Chakraborti, T.; Jha, D.K.; Chowdhury, A.S.; Jiang, X. A self-adaptive matched filter for retinal blood vessel detection. Machine Vision and Applications. Mach. Vis. Appl. 2014, 26, 55–68. [Google Scholar] [CrossRef]

- Asad, A.H.; Hassaanien, A.E. Retinal Blood Vessels Segmentation Based on Bio-Inspired Algorithm. In Applications of Intelligent Optimization in Biology and Medicine; Springer: Cham, Switzerland, 2015; pp. 181–215. [Google Scholar]

- Roychowdhury, S.; Koozekanani, D.D.; Parhi, K.K. Iterative Vessel Segmentation of Fundus Images. IEEE Trans. Biomed. Eng. 2015, 62, 1738–1749. [Google Scholar] [CrossRef]

- Hoover, A.D.; Kouznetsova, V.; Goldbaum, M. Locating blood vessels in retinal images by piecewise threshold probing of a matched filter response. IEEE Trans. Med. Imaging 2000, 19, 203–210. [Google Scholar] [CrossRef]

- Lam, B.S.Y.; Hong, Y. A Novel Vessel Segmentation Algorithm for Pathological Retina Images Based on the Divergence of Vector Fields. IEEE Trans. Med. Imaging 2008, 27, 237–246. [Google Scholar] [CrossRef] [PubMed]

- Annunziata, R.; Garzelli, A.; Ballerini, L.; Mecocci, A.; Trucco, E. Leveraging Multiscale Hessian-Based Enhancement With a Novel Exudate Inpainting Technique for Retinal Vessel Segmentation. IEEE J. Biomed. Health Inform. 2016, 20, 1129–1138. [Google Scholar] [CrossRef]

- Zhao, Y.; Rada, L.; Chen, K.; Harding, S.P.; Zheng, Y. Automated Vessel Segmentation Using Infinite Perimeter Active Contour Model with Hybrid Region Information with Application to Retinal Images. IEEE Trans. Med. Imaging 2015, 34, 1797–1807. [Google Scholar] [CrossRef]

- Zhang, Y.; Hsu, W.; Lee, M.L. Detection of Retinal Blood Vessels Based on Nonlinear Projections. J. Signal Process. Syst. 2008, 55, 103–112. [Google Scholar] [CrossRef]

- Imani, E.; Javidi, M.; Pourreza, H.R. Improvement of retinal blood vessel detection using morphological component analysis. Comput. Methods Programs Biomed. 2015, 118, 263–279. [Google Scholar] [CrossRef]

- Abbasi-Sureshjani, S.; Smit-Ockeloen, I.; Zhang, J.; Romeny, B.T.H. Biologically-Inspired Supervised Vasculature Segmentation in SLO Retinal Fundus Images. In Image Analysis and Recognition; Springer: Cham, Switzerland, 2015; pp. 325–334. [Google Scholar]

- Srinidhi, C.L.; Rath, P.; Sivaswamy, J. A Vessel Keypoint Detector for junction classification. In Proceedings of the IEEE 14th International Symposium on Biomedical Imaging (ISBI), Melbourne, Australia, 18–21 April 2017. [Google Scholar]

- Zhao, H.; Li, H.; Maurer-Stroh, S.; Guo, Y.; Deng, Q.; Cheng, L. Supervised Segmentation of Un-annotated Retinal Fundus Images by Synthesis. IEEE Trans. Med. Imaging 2018, 38, 46–56. [Google Scholar] [CrossRef]

- Mhiri, F.; Duong, L.; Desrosiers, C.; Cheriet, M. Vesselwalker: Coronary arteries segmentation using random walks and hessian-based vesselness filter. In Proceedings of the IEEE 10th International Symposium on Biomedical Imaging, San Francisco, CA, USA, 7–11 April 2013. [Google Scholar]

- Cervantes-Sanchez, F.; Cruz-Aceves, I.; Hernandez-Aguirre, A.; Avina-Cervantes, J.G.; Solorio-Meza, S.; Ornelas-Rodriguez, M.; Torres-Cisneros, M. Segmentation of Coronary Angiograms Using Gabor Filters and Boltzmann Univariate Marginal Distribution Algorithm. Comput. Intell. Neurosci. 2016, 2016, 2420962. [Google Scholar] [CrossRef]

- Fatemi, M.J.R.; Mirhassani, S.M.; Yousefi, B. Vessel segmentation in X-ray angiographic images using Hessian based vesselness filter and wavelet based image fusion. In Proceedings of the 10th IEEE International Conference on Information Technology and Applications in Biomedicine, Corfu, Greece, 3–5 November 2010. [Google Scholar]

- Eiho, S.; Qian, Y. Detection of coronary artery tree using morphological operator. In Computers in Cardiology; IEEE: Lund, Sweden, 1997. [Google Scholar]

- Wang, S.; Li, B.; Zhou, S. A Segmentation Method of Coronary Angiograms Based on Multi-scale Filtering and Region-Growing. In Proceedings of the International Conference on Biomedical Engineering and Biotechnology, Macau, China, 28–30 May 2012. [Google Scholar]

- Kang, W.; Kang, W.; Li, Y.; Wang, Q. The segmentation method of degree-based fusion algorithm for coronary angiograms. In Proceedings of the International Conference on Measurement, Information and Control, Harbin, China, 16–18 August 2013. [Google Scholar]

- Chanwimaluang, T.; Fan, G.; Fransen, S.R. Hybrid Retinal Image Registration. IEEE Trans. Inf. Technol. Biomed. 2006, 10, 129–142. [Google Scholar] [CrossRef]

- Rodrigues, E.O.; Liatsis, P.; Satoru, L.; Conci, A. Fractal triangular search: A metaheuristic for image content search IET Image Processing 2018, 12, 1475–1484. IET Image Processing 2018, 12, 1475–1484. [Google Scholar] [CrossRef]

- Rodrigues, E.O.; Conci, A.; Morais, F.F.C.; Perez, M.G. Towards the automated segmentation of epicardial and mediastinal fats: A multi-manufacturer approach using intersubject registration and random forest. In Proceedings of the IEEE International Conference on Industrial Technology (ICIT), Seville, Spain, 17–19 March 2015; pp. 1779–1785. [Google Scholar]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).