Metaheuristic Algorithms Applied to Color Image Segmentation on HSV Space

Abstract

1. Introduction

2. Materials and Methods

2.1. Color Image Segmentation Method

- Any color can be defined by three values and the combination of the three components is unique;

- Two colors are equivalent after multiplying or dividing the three components by the same number;

- The luminance of a mixture of colors is equal to the sum of the luminance of each color.
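The three properties listed above can be checked numerically. The following is a minimal sketch, not the method used in the paper: it assumes colors are given as linear RGB triples, and it borrows the Rec. 709 luminance weights purely for illustration (the text does not name any weights). The helper names `luminance`, `chromaticity`, `scale`, and `mix` are hypothetical. The sketch also shows why the scaling property matters for HSV: multiplying the three components by the same factor changes only the value channel, while hue and saturation stay the same.

```python
import colorsys
import math

# Rec. 709 weights for linear RGB. This choice is an assumption made for
# illustration; the source text does not specify luminance weights.
LUMA_WEIGHTS = (0.2126, 0.7152, 0.0722)


def luminance(rgb):
    """Luminance as a weighted sum of the three linear RGB components."""
    return sum(w * c for w, c in zip(LUMA_WEIGHTS, rgb))


def chromaticity(rgb):
    """Normalize the three components so that they sum to 1."""
    total = sum(rgb)
    return tuple(c / total for c in rgb)


def scale(rgb, k):
    """Multiply all three components by the same factor k."""
    return tuple(k * c for c in rgb)


def mix(a, b):
    """Additive mixture of two colors, component by component."""
    return tuple(x + y for x, y in zip(a, b))


color_a = (0.40, 0.20, 0.10)
color_b = (0.10, 0.30, 0.25)

# Scaling by a common factor leaves the chromaticity unchanged.
dimmed = scale(color_a, 0.5)
same_chromaticity = all(
    math.isclose(p, q)
    for p, q in zip(chromaticity(color_a), chromaticity(dimmed))
)

# In HSV terms, hue and saturation are unchanged; only value is scaled.
h1, s1, v1 = colorsys.rgb_to_hsv(*color_a)
h2, s2, v2 = colorsys.rgb_to_hsv(*dimmed)
same_hue_and_saturation = math.isclose(h1, h2) and math.isclose(s1, s2)

# The luminance of a mixture equals the sum of the individual luminances.
additive_luminance = math.isclose(
    luminance(mix(color_a, color_b)),
    luminance(color_a) + luminance(color_b),
)

print(same_chromaticity, same_hue_and_saturation, additive_luminance)
```

The additivity check holds for any fixed set of weights, because a weighted sum is linear in the components; the specific Rec. 709 numbers are not essential to the argument.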

2.2. Edge Extraction Applying Artificial Bee Colony Algorithm

3. Results

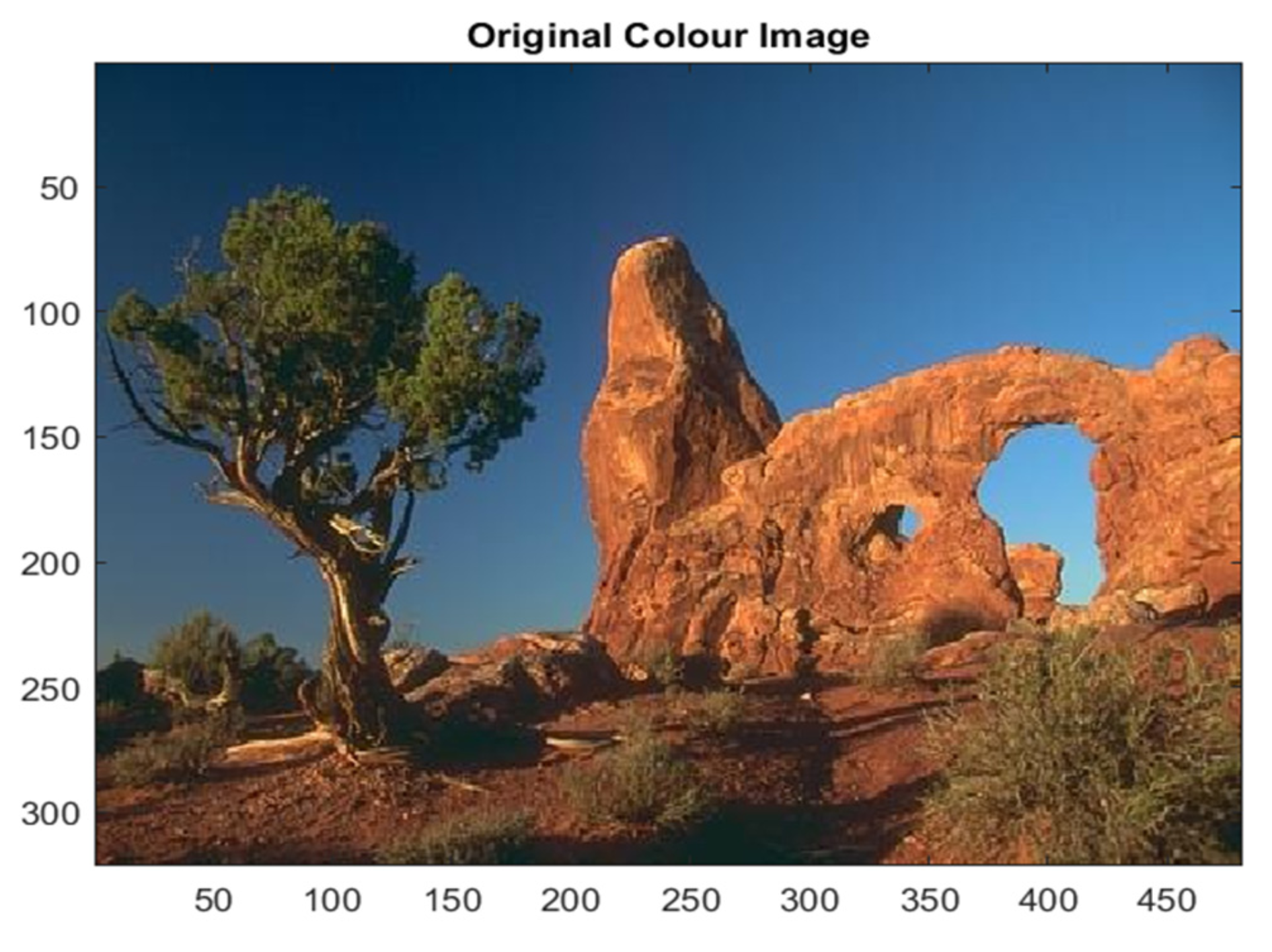

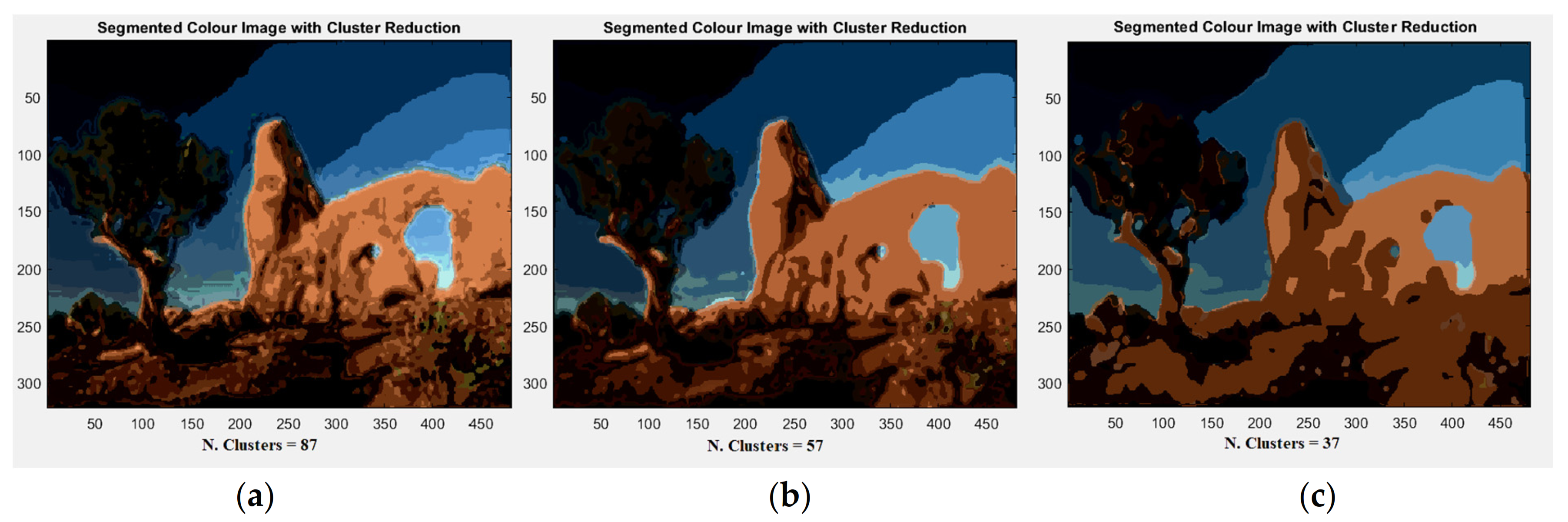

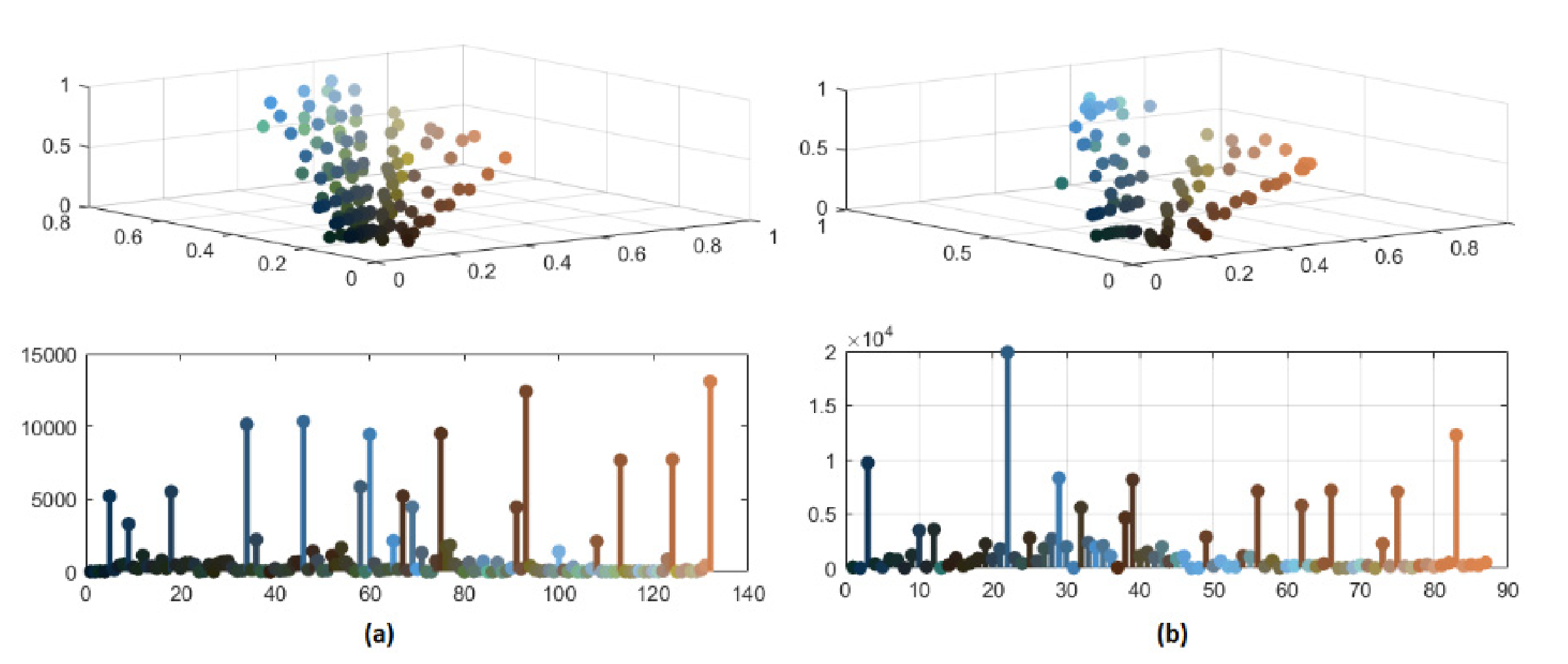

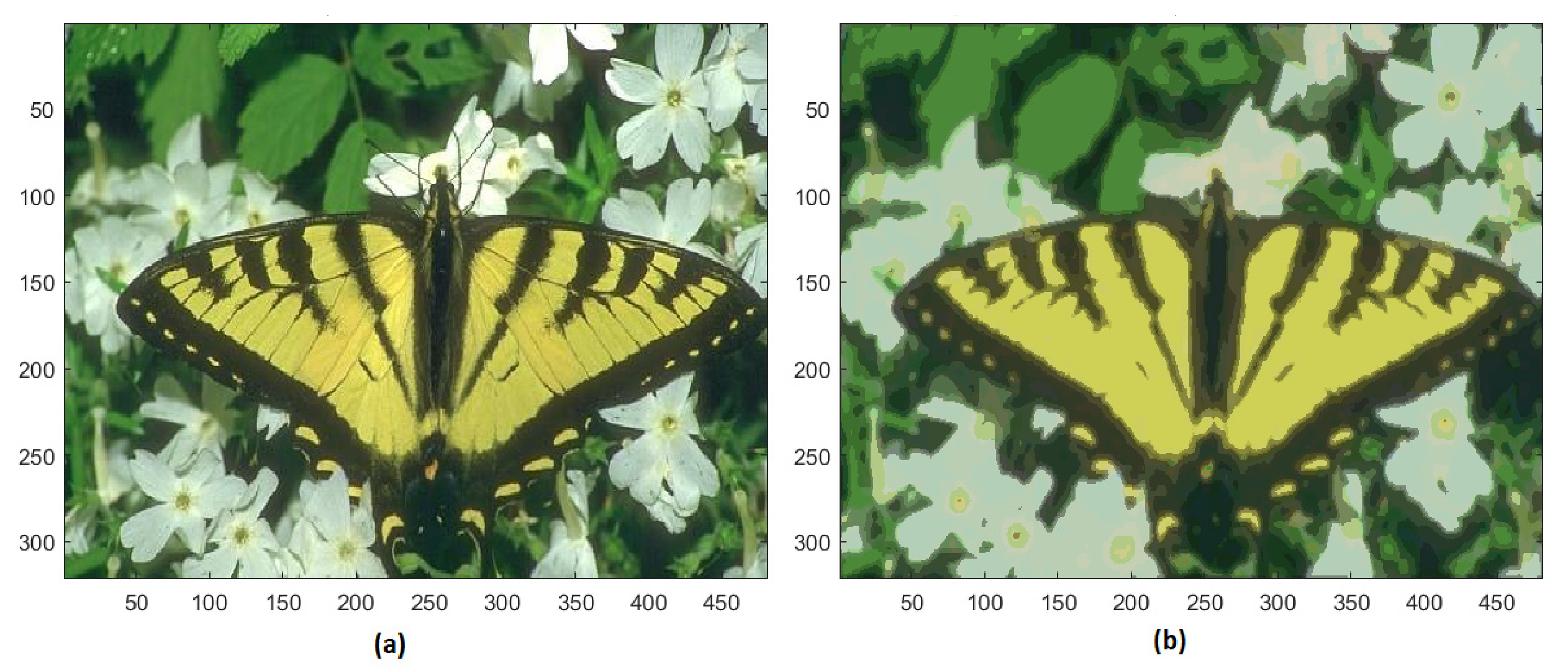

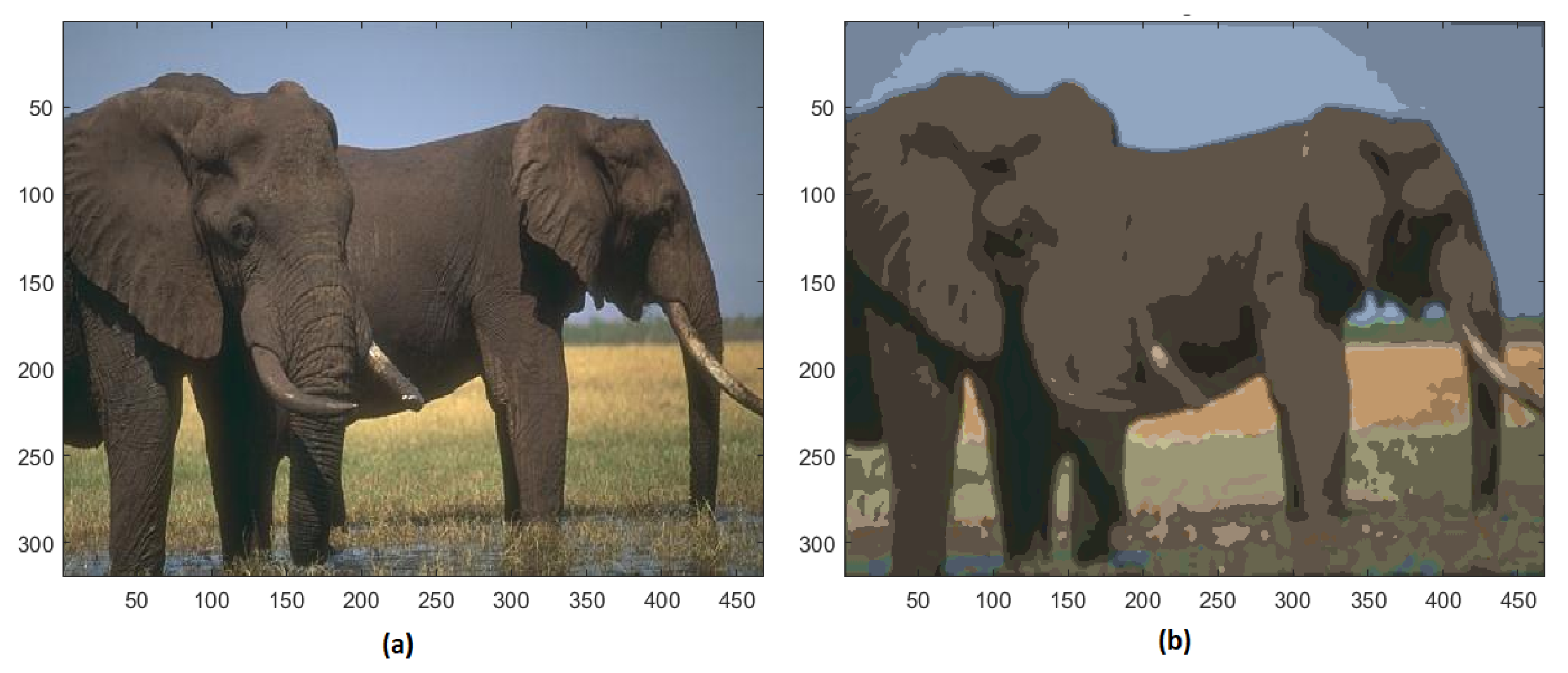

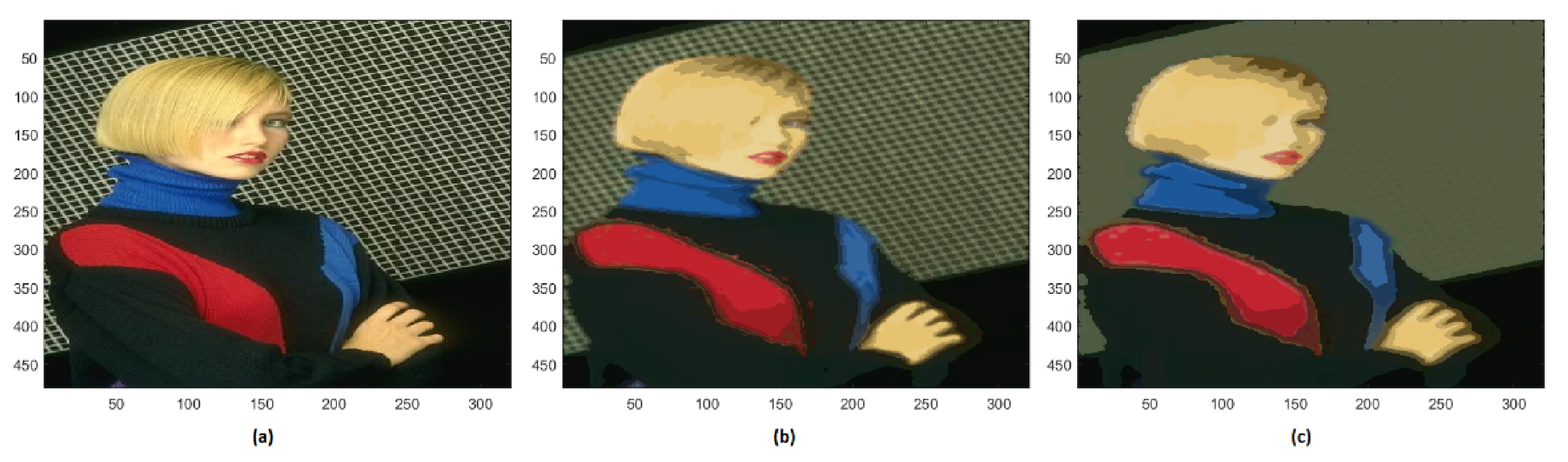

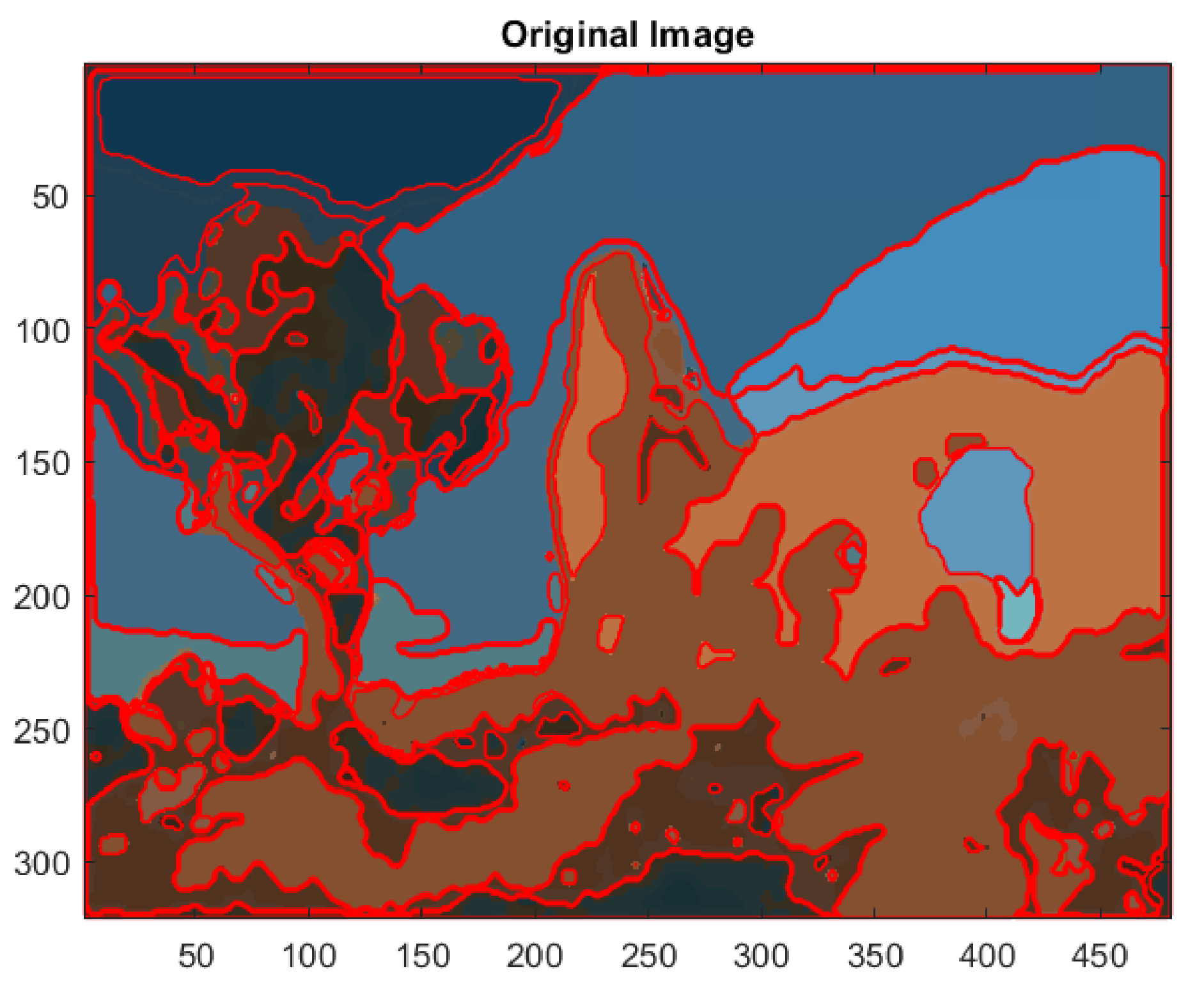

3.1. Results of the Color Image Segmentation Method

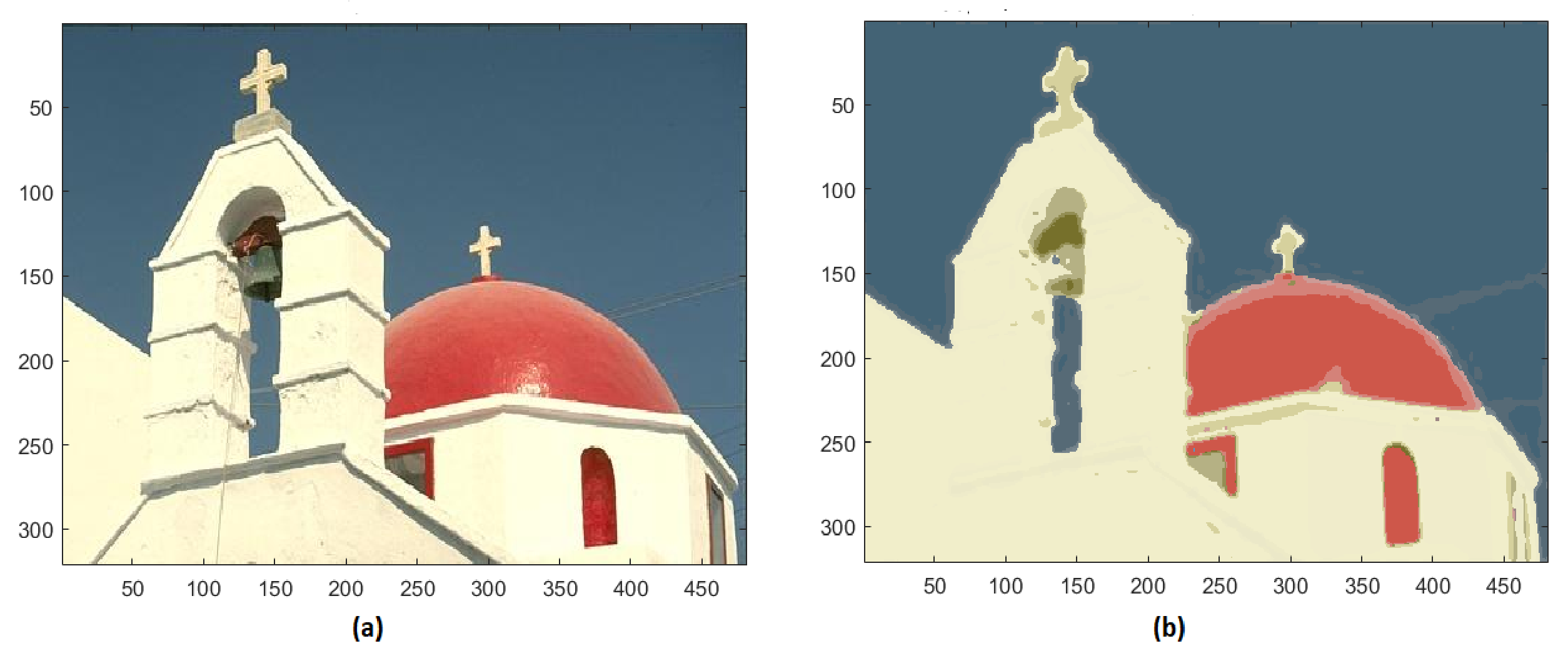

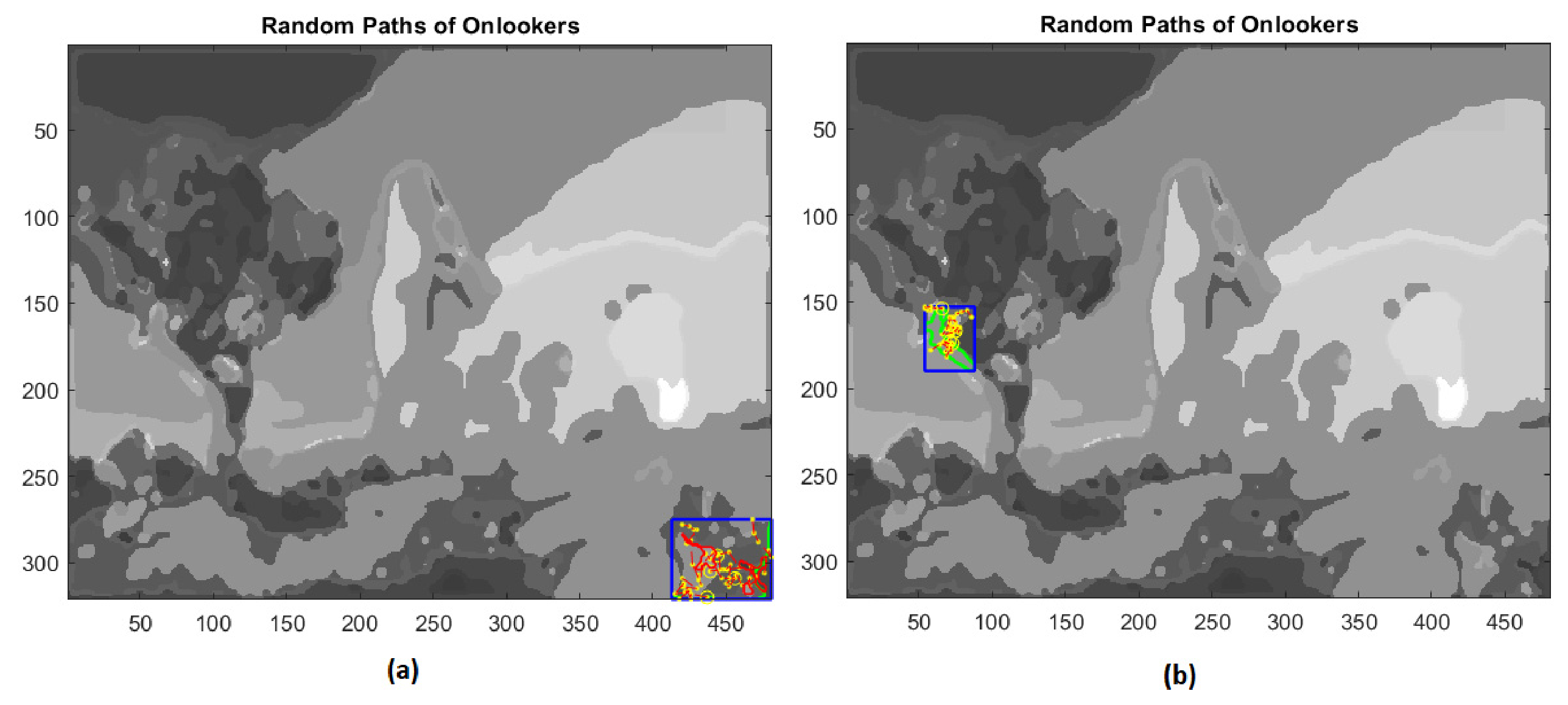

3.2. Results of Edge Extraction Applying Artificial Bee Colony Algorithm

4. Discussion

Funding

Data Availability Statement

Conflicts of Interest

| Number of Clusters | RMSE | RAE |
|---|---|---|
| 132 | 0.0618 | 0.9729 |
| 87 | 0.0690 | 1.0087 |
| 57 | 37.0155 | 0.9960 |
| 37 | 37.2132 | 0.9963 |

© 2022 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Giuliani, D. Metaheuristic Algorithms Applied to Color Image Segmentation on HSV Space. J. Imaging 2022, 8, 6. https://doi.org/10.3390/jimaging8010006