1. Introduction

The question of how we perceive the world around us has been an intriguing topic since ancient times. On the philosophical side, for example, one can consider the debate around the concept of entelechy, which started with the early studies of the Aristotelian school; on the side of phenomenology and its relation to the natural sciences, one can think of the theory initiated by Husserl. A well-known and accepted theory of perception is the one formulated within Gestalt psychology [1,2].

Gestalt psychology is a theory for understanding the principles underlying the configuration of local forms giving rise to a meaningful global perception. The main idea of Gestalt psychology is that the mind constructs the whole by grouping similar fragments rather than simply summing the fragments as if they were all different. In terms of visual perception, such similar fragments correspond to point stimuli with the same (or very close) values of features of the same type. As an illustrative example from vision science, we tend to group objects of the same colour in an image and to perceive them as an ensemble rather than as objects with different colours. Many psychophysical studies have attempted to provide quantitative parameters describing the tendencies of the mind in visual perception based on Gestalt psychology. A particularly important one is the pioneering work of Field et al. [3], where the authors proposed a representation, called the association field, that modelled specific Gestalt principles. They also showed that the brain is more likely to perceive together fragments that are similarly oriented and aligned along a curvilinear path than fragments whose orientations change rapidly.

The presented model for neural activity is a geometrical abstraction of the orientation-sensitive V1 hypercolumnar architecture observed by Hubel and Wiesel [4,5,6]. This abstraction generates a good phenomenological approximation of the V1 neuronal connections existing in the hypercolumnar architecture, as reported by Bosking et al. [7]. In this framework, the projections of the neuronal connections in V1 onto a 2D image plane are considered to be the association fields described above, and the neuronal connections are modelled as the horizontal integral curves generated by the model geometry. The projections of such horizontal integral curves were shown to produce a close approximation of the association fields, see Figure 1. For this reason, the approach considered by Citti, Petitot and Sarti and used in this work is referred to as cortically-inspired.

We remark that the presented model for neural activity is a phenomenological model that provides a mathematical understanding of early perceptual mechanisms at the cortical level by starting from the very structure of receptive profiles. Nevertheless, it has been very useful for many image-processing applications, see, for example, [9,10].

In this work, we follow this approach for a better understanding of the visual perception biases due to visual distortions often referred to as visual illusions. Visual illusions are described as the mismatches between reality and its visual perception. They result either from a neural conditioning introduced by external agents such as drugs, microorganisms and tumours [11,12], or from self-inducing mechanisms evoking visual distortions via proper neural functionality applied to a specific stimulus [13,14]. The latter type of illusion is due to the effects of neurological and biological constraints on the visual system [15].

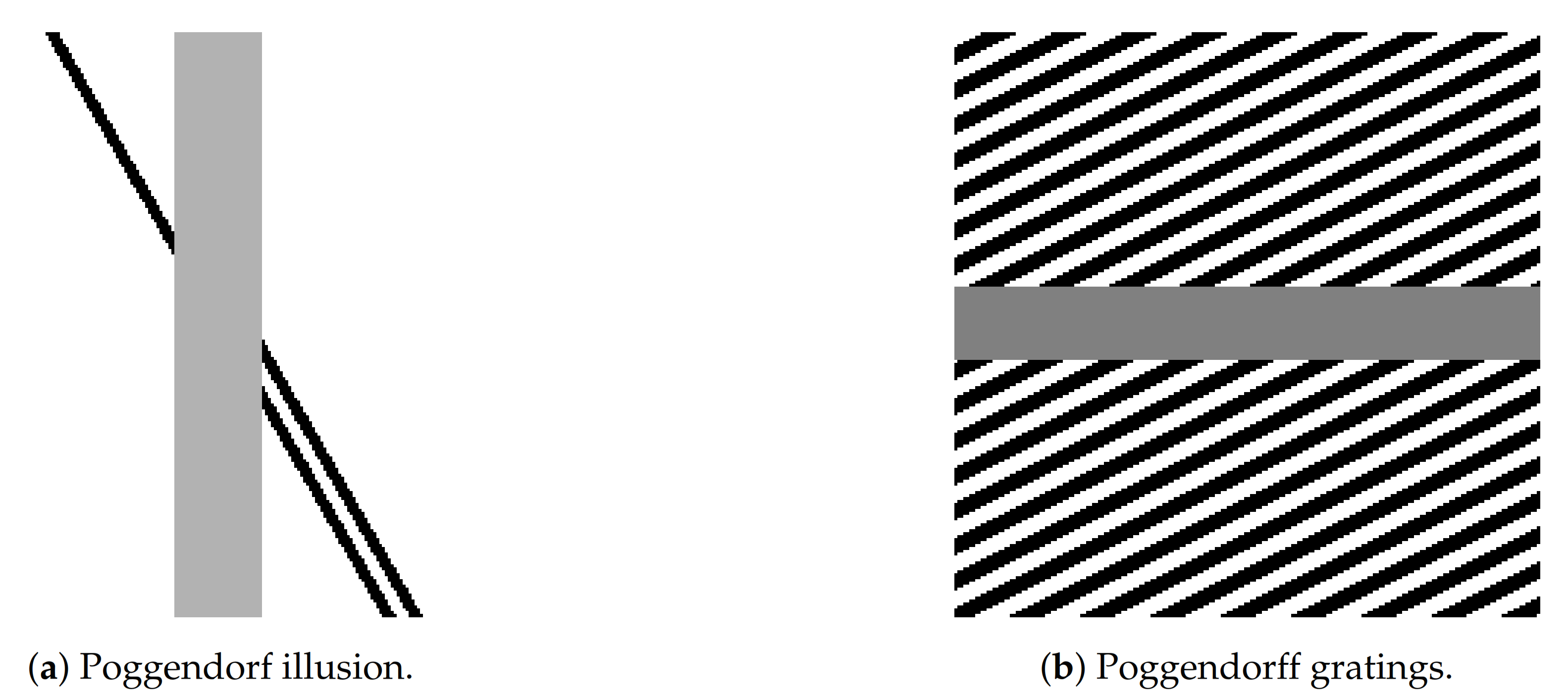

In this work, we focus on illusions induced by contrast induction and orientation misalignments, in particular on the well-known Poggendorff illusion and its variations, see Figure 2. This is a geometrical optical illusion [16,17] in which a misaligned oblique perception is induced by the presence of a central bar [18].

1.1. The Functional Architecture of the Primary Visual Cortex

It has been known since the celebrated experiments of Hubel and Wiesel [4,5,6] that neurons (simple cells) in the primary visual cortex (V1) perform boundary (hence orientation) detection and propagate their activations through cortical connectivity, in accordance with the psychophysical results of Field et al. [3]. Hubel and Wiesel showed that simple cells have a spatial arrangement based on the so-called hypercolumns in V1. In this arrangement, simple cells that are sensitive to different orientations at the same retinal location are found in the same vertical column constructed on the cortical surface. Adjacent columns contain simple cells that are sensitive to nearby positions.

Several models have been proposed to describe the functional architecture of V1 and the neural connectivity within it. Koenderink et al. [19,20] focused on differential geometric approaches to study the visual space, where they modelled the invariance of simple cells with respect to suitable symmetries in terms of a family of Gaussian functions. Hoffman [21,22] provided the basic framework of vision models by interpreting the hypercolumn architecture of V1 as a fibre bundle. Following a similar reasoning, Petitot and Tondut [23] further developed this modelling, providing a new model coherent both with the structure of orientation-sensitive simple cells and with the long-range neural connectivity between V1 simple cells. In their model, they first observed that the simple cell orientation selectivity induces a contact geometry (associated with the first Heisenberg group) rendered by the fibres of orientations. Moreover, they showed that a specific family of curves found via a constrained minimisation approach in the contact geometry fits the aforementioned association fields reported by Field et al. [3]. In [8,24], Citti and Sarti further developed the model of Petitot and Tondut by introducing a group-based approach, which was then refined by Boscain, Gauthier et al. [25,26], see also the monograph [27]. The so-called Citti-Petitot-Sarti (CPS) model exploits the natural sub-Riemannian (sR) structure of the group of rotations and translations $SE(2)$ as the V1 model geometry.

In this framework, simple cells are modelled as points of the three-dimensional group $\mathcal{M} = \mathbb{R}^2 \times \mathbb{P}^1$. Here, $\mathbb{P}^1$ is the projective line, obtained by identifying antipodal points in $\mathbb{S}^1$. The response of simple cells to the two-dimensional visual stimuli is identified by lifting them to $\mathcal{M}$ via a Gabor wavelet transform. Neural connectivity is then modelled in terms of horizontal integral curves given by the natural sub-Riemannian structure of $\mathcal{M}$. Activity propagation along neural connections can further be modelled in terms of diffusion and transport processes along the horizontal integral curves.

In recent years, the CPS model has been exploited as a framework for several cortical-inspired image processing problems by various researchers. We mention the large corpus of literature by Duits et al., see, for example, [28,29,30], and the state-of-the-art image inpainting and image recognition algorithms developed by Boscain, Gauthier, et al. [9,31]. Some extensions of the CPS model geometry and its applications to other image processing problems can be found in [32,33,34,35,36,37,38,39].

1.2. Mean-Field Neural Dynamics & Visual Illusions

Understanding neural behaviours is in general a very challenging task. Reliable responses to stimuli are typically measured at the level of population assemblies comprising a large number of coupled cells. This motivates the reduction, whenever possible, of the dynamics of a neuronal population to a neuronal mean-field model, which describes the large-scale dynamics of the population as the number of neurons goes to infinity. These mean-field models, inspired by the pioneering work of Wilson and Cowan [40,41] and Amari [42], are low dimensional in comparison with the corresponding models based on large-scale population networks. Yet, they capture the same dynamics underlying the population behaviours.

In the framework of the CPS model for V1 discussed above, several mathematical models were proposed to describe the neural activity propagation favouring the creation of visual illusions, including Poggendorff-type illusions. In [37], for instance, illusions are identified with suitable strain tensors, responsible for the perceived displacement from the grey levels of the original image. In [43], illusory patterns are identified by a suitable modulation of the geometry of $SE(2)$ and are computed as the associated geodesics via the fast-marching algorithm.

In [44,45,46], a variant of the Wilson-Cowan (WC) model based on a variational principle and adapted to the geometry of V1 was employed to model the neuronal activity and generate illusory patterns for different illusion types. The modelling considered in these works is strongly inspired by the integro-differential model first studied in [47] for perception-inspired Local Histogram Equalisation (LHE) techniques and later applied in a series of works, see, for example, [48,49], for the study of contrast and assimilation phenomena. By further incorporating a cortical-inspired modelling, the authors showed in [44,45,46] that cortical LHE models are able to replicate visual misperceptions induced not only by local contrast changes, but also by orientation-induced biases similar to the ones in Figure 2. Interestingly, the cortical LHE model [44,45,46] was further shown to outperform both standard and cortical-inspired WC models and was rigorously shown to correspond to the minimisation of a variational energy, which suggests more efficient representation properties [50,51]. One major limitation of the modelling considered in these works is the use of neuronal interaction kernels (essentially, isotropic 3D Gaussians), which are not compatible with the natural sub-Riemannian structure of V1 proposed in the CPS model.

1.3. Main Contributions

In this work, we encode the sub-Riemannian structure of V1 into both WC and LHE models by using a sub-Laplacian procedure associated with the geometry of the space $\mathcal{M} = \mathbb{R}^2 \times \mathbb{P}^1$ of positions and orientations described in Section 1.1. As in [44,45,46], the lifting of a given two-dimensional image to the corresponding neuronal response in $\mathcal{M}$ is performed by means of all-scale cake wavelets, introduced in [52,53]. A suitable gradient-descent algorithm is applied to compute the stationary states of the neural models.

Within this framework, we study the family of Poggendorff visual illusions induced by local contrast and orientation alignment of the objects in the input image. In particular, we aim to reproduce such illusions by the proposed models in a way that is qualitatively consistent with the psychophysical experience.

Our findings show that it is possible to reproduce Poggendorff-type illusions by both the sR cortical-inspired WC and LHE models. In [44,45], by contrast, the cortical WC model endowed with a Riemannian (isotropic) 3D kernel was shown to fail to reproduce Poggendorff-type illusions. This comparison shows that adding the natural sub-Laplacian procedure to the computation of the flows improves the capability of these cortical-inspired models to reproduce orientation-dependent visual illusions.

2. Cortical-Inspired Modelling

In this section we recall the fundamental features of CPS models. The theoretical criterion underpinning the model relies on the so-called neurogeometrical approach introduced in [8,23,54]. According to this model, the functional architecture of V1 is based on the geometrical structure inspired by the neural connectivity in V1.

2.1. Receptive Profiles

A simple cell is characterised by its receptive field, which is defined as the domain of the retina to which the simple cell is sensitive. Once a receptive field is stimulated, the corresponding retinal cells generate spikes which are transmitted to V1 simple cells via retino-geniculo-cortical paths.

The response function of each simple cell to a spike is called the receptive profile (RP), and is denoted by $\psi_\xi : Q \to \mathbb{C}$, where $\xi$ labels the simple cell. It is essentially the impulse response function of a V1 simple cell. Conceptually, it measures the response of the corresponding V1 simple cell to a stimulus at a point $\zeta = (x, y) \in Q$. (Note that we omit the coordinate maps between the image plane and the retinal surface, and the retinocortical map from the retinal surface to the cortical surface. In other words, we assume that the image plane and the retinal surface are identical and denote both by $Q \subset \mathbb{R}^2$.)

In this study, we assume the response of simple cells to be linear. That is, for a given visual stimulus $f : Q \to \mathbb{R}$ we assume the response of the simple cell at V1 coordinates $\xi$, with receptive profile $\psi_\xi$, to be

$$a_0(\xi) = \langle f, \psi_\xi \rangle_{L^2(Q)} = \int_Q \psi_\xi(\zeta)\, f(\zeta)\, d\zeta. \tag{1}$$

This procedure defines the cortical stimulus $a_0$ associated with the image $f$. We note that receptive field models consisting of cascades of linear filters and static non-linearities, although not perfect, may be more adequate to account for responses to stimuli [20,55,56]. Several mechanisms such as, for example, response normalisation, gain controls, cross-orientation suppression or intra-cortical modulation, might intervene to radically change the shape of the profile. Therefore, the above static and linear model for the receptive profiles should be considered as a first approximation of the complex behaviour of a real dynamic receptive profile, which cannot be perfectly described by static wavelet frames.
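As a minimal illustration of the linear response model above, the following sketch computes, at every position, the inner product of an image with a receptive profile centred there. The Gabor-like profile and its parameters (`sigma`, `freq`), the periodic boundary handling and the function names are illustrative assumptions and are not taken from this work.

```python
import numpy as np

def gabor_profile(size, theta, sigma=3.0, freq=0.15):
    """Hypothetical even Gabor-like receptive profile elongated along theta."""
    r = np.arange(size) - size // 2
    x, y = np.meshgrid(r, r, indexing="xy")
    # Coordinate along the normal to the preferred orientation.
    u = -x * np.sin(theta) + y * np.cos(theta)
    envelope = np.exp(-(x**2 + y**2) / (2.0 * sigma**2))
    return envelope * np.cos(2.0 * np.pi * freq * u)

def linear_response(image, profile):
    """Inner product of the image with the profile centred at each pixel.

    Uses periodic boundary conditions and the FFT cross-correlation theorem.
    """
    s = profile.shape[0]
    padded = np.zeros_like(image, dtype=float)
    padded[:s, :s] = profile
    # Move the centre of the profile to index (0, 0).
    padded = np.roll(padded, (-(s // 2), -(s // 2)), axis=(0, 1))
    spectrum = np.fft.fft2(image) * np.conj(np.fft.fft2(padded))
    return np.real(np.fft.ifft2(spectrum))
```

Calling `linear_response(image, gabor_profile(9, theta))` for a set of angles `theta` gives one response map per orientation, which is the discrete analogue of a cortical stimulus defined on positions and orientations.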

Regarding the form of the RP, in [8], a simplified basis of Gabor functions was proposed as a good candidate for modelling the position-orientation sensitive receptive profiles, for neuro-physiological reasons [57,58]. This basis has since been extended to take into account additional features such as scale [54], velocity [33] and frequency-phase [39]. On the other hand, Duits et al. [53] proposed so-called cake kernels as a good alternative to Gabor functions, and showed that cake kernels were adequate for obtaining simple cell output responses, which were used to perform image processing tasks such as image enhancement and completion based on sR diffusion processes.

In this study, we employed cake kernels as the models of position-orientation RPs obtaining the initial simple cell output responses to an input image, and we used the V1 model geometry to represent the output responses. We modelled the activity propagation along the neural connectivity by using the combination of a diffusion process based on the natural sub-Laplacian and a Wilson-Cowan type integro-differential system.

2.2. Horizontal Connectivity and Sub-Riemannian Diffusion

Neurons in V1 present two types of connections: local and lateral. Local connections connect neurons belonging to the same hypercolumn. On the other hand, lateral connections account for the connectivity between neurons belonging to different hypercolumns, but along a specific direction. In the CPS model these are represented by the vector fields

$$X_1 = \cos\theta\,\partial_x + \sin\theta\,\partial_y, \qquad X_2 = \partial_\theta. \tag{2}$$

(This expression does not yield smooth vector fields on $\mathcal{M} = \mathbb{R}^2 \times \mathbb{P}^1$. Indeed, e.g., $X_1(0) = -X_1(\pi)$ despite the fact that $0$ and $\pi$ are identified in $\mathbb{P}^1$. Although in the present application such a difference is inconsequential, since we are only interested in the direction (which is smooth) and not in the orientation, this problem can be solved by defining $X_1$ in an appropriate atlas for $\mathcal{M}$ [25].)

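The above observation leads to modelling the dynamics of the neuronal excitation $\{A_t\}_{t \ge 0}$ starting from a neuron $\xi$ via the following stochastic differential equation

$$dA_t = X_1\,dW_t^1 + \beta X_2\,dW_t^2, \qquad A_0 = \xi, \tag{3}$$

where $X_1 = \cos\theta\,\partial_x + \sin\theta\,\partial_y$ and $X_2 = \partial_\theta$ are the vector fields representing the connectivity, and $W^1$ and $W^2$ are two one-dimensional independent Wiener processes. As a consequence, in [25] the cortical stimulus $a_0$ induced by a visual stimulus $f$ is assumed to evolve according to the Fokker-Planck equation (up to a rescaling of time)

$$\partial_t a = L a, \qquad a|_{t=0} = a_0, \qquad L = X_1^2 + \beta^2 X_2^2. \tag{4}$$

Here, $\beta > 0$ is a constant encoding the unit coherency between the spatial and orientation dimensions.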
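To make the stochastic description more tangible, the sketch below simulates sample paths with a plain Euler-Maruyama scheme. It assumes the standard CPS vector fields $X_1 = \cos\theta\,\partial_x + \sin\theta\,\partial_y$ and $X_2 = \partial_\theta$, with the orientation noise scaled by $\beta$; the function name, the step size and the number of paths are hypothetical choices for illustration only.

```python
import numpy as np

def simulate_paths(x0, y0, theta0, beta=1.0, dt=1e-3,
                   n_steps=1000, n_paths=500, seed=0):
    """Euler-Maruyama paths of
        dx = cos(theta) dW1,  dy = sin(theta) dW1,  dtheta = beta dW2.
    Assumed form of the dynamics; returns final x, y and theta mod pi.
    """
    rng = np.random.default_rng(seed)
    x = np.full(n_paths, float(x0))
    y = np.full(n_paths, float(y0))
    th = np.full(n_paths, float(theta0))
    for _ in range(n_steps):
        dw1 = rng.normal(0.0, np.sqrt(dt), n_paths)
        dw2 = rng.normal(0.0, np.sqrt(dt), n_paths)
        # Spatial motion happens only along the current orientation.
        x += np.cos(th) * dw1
        y += np.sin(th) * dw1
        # The orientation itself diffuses independently.
        th += beta * dw2
    return x, y, np.mod(th, np.pi)
```

For short times and small `beta`, paths started with `theta0 = 0` spread mostly along the x axis, which is the anisotropy that the sub-Riemannian diffusion is meant to encode.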

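The operator $L = X_1^2 + \beta^2 X_2^2$, with $X_1 = \cos\theta\,\partial_x + \sin\theta\,\partial_y$ and $X_2 = \partial_\theta$, is the sub-Laplacian associated with the sub-Riemannian structure on $\mathcal{M}$ with orthonormal frame $\{X_1, \beta X_2\}$, as presented in [8,25]. It is worth mentioning that this operator is not elliptic, since $\{X_1, X_2\}$ is not a basis for the three-dimensional tangent space $T\mathcal{M}$. However, $\operatorname{span}\{X_1, X_2, [X_1, X_2]\} = T\mathcal{M}$. Hence, $\{X_1, X_2\}$ satisfies the Hörmander condition and $L$ is a hypoelliptic operator [59], which models the activity propagation between neurons in V1 as a diffusion concentrated in a neighbourhood along the (horizontal) integral curves of $X_1$ and $X_2$.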
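For concreteness, the bracket computation behind the Hörmander condition can be written out explicitly. The derivation below assumes the standard CPS frame $X_1 = \cos\theta\,\partial_x + \sin\theta\,\partial_y$, $X_2 = \partial_\theta$, which is the usual choice in the literature.

```latex
\begin{align*}
[X_1, X_2]\,g
  &= X_1(\partial_\theta g) - \partial_\theta\big(\cos\theta\,\partial_x g + \sin\theta\,\partial_y g\big) \\
  &= \sin\theta\,\partial_x g - \cos\theta\,\partial_y g, \\[4pt]
\det\begin{pmatrix}
  \cos\theta & \sin\theta & 0 \\
  0 & 0 & 1 \\
  \sin\theta & -\cos\theta & 0
\end{pmatrix}
  &= \cos^2\theta + \sin^2\theta = 1 \neq 0 .
\end{align*}
```

The rows of the matrix are the coefficients of $X_1$, $X_2$ and $[X_1, X_2]$ in the basis $(\partial_x, \partial_y, \partial_\theta)$. Since the determinant never vanishes, the three fields span the tangent space at every point, even though $X_1$ and $X_2$ alone do not.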

A direct consequence of hypoellipticity is the existence of a smooth kernel for (4). That is, there exists a function $(t, \xi, \eta) \mapsto k_t(\xi, \eta)$ such that the solution of (4) with initial datum $a_0$ reads

$$a(\xi, t) = \int_{\mathcal{M}} k_t(\xi, \eta)\, a_0(\eta)\, d\eta. \tag{5}$$

An analytic expression for $k_t$ can be derived in terms of Mathieu functions [10,29]. This expression is however cumbersome to manipulate, and it is usually more efficient to resort to different schemes for the numerical implementation of (4), see, for example, Section 4.

2.3. Reconstruction on the Retinal Plane

Activity propagation evolves the lifted visual stimulus in time. In order to obtain a meaningful result, represented on a two-dimensional image plane, we have to transform the evolved lifted image back to the image plane. We achieve this by using the projection given by

where

and

denote the processed image and the final time of the evolution, respectively. One easily checks that this formula yields

under the assumption

4. Discrete Modelling and Numerical Realisation

In this section, we describe in detail how models (sR-WC) and (sR-LHE) can be formulated in a fully discrete setting, providing, in particular, some insight into how the sub-Riemannian evolution can be realised. We also include a self-contained section on the gradient-descent algorithm used to perform the numerical experiments reported in Section 5; for more details see [44,46].

4.1. Discrete Modelling and Lifting Procedure via Cake Wavelets

First, the sub-Riemannian diffusion

is discretised by a final time

, where

m and

denote the number of iterations and the time-step, respectively. For

and

denoting the spatial sampling size, we then discretise the given grey-scale image function

associated with the retinal stimulus on a uniform square spatial grid

and denote, for each

, the brightness value at point

by

As far as the orientation sampling is concerned, we used a uniform orientation grid with points

and

. We can then define the discrete version of the simple cell response

to the visual stimulus located at

with local orientation

at time

of the evolution as

where

is the lifting operation to be defined.

To do so, we consider in the following the image lifting procedure based on cake kernels introduced in [

53] and used, for example, in [

32,

44,

46]. We write the cake kernel centered at

and rotated by

as

where

. We can then write the lifting operation applied to the initial image

for all

and

as:

Finally, for we consider a time-discretisation of the interval at time nodes with .
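The lifting step of this subsection can be illustrated with a deliberately simplified stand-in for cake wavelets. The sketch below splits the Fourier plane into $\pi$-periodic angular windows that sum to one, so that summing the lifted image over orientations returns the input. The triangular windows, the absence of any radial profile and the names `wedge_lift` and `project` are assumptions made for illustration; they are not the cake kernels of [53].

```python
import numpy as np

def wedge_lift(image, n_orient=16):
    """Lift a 2D image to an array indexed by (orientation, y, x).

    Simplified stand-in for cake wavelets: triangular windows in the
    Fourier angle, pi-periodic and summing to one over all orientations.
    """
    ny, nx = image.shape
    wy = np.fft.fftfreq(ny)[:, None]
    wx = np.fft.fftfreq(nx)[None, :]
    phi = np.arctan2(wy, wx)                      # angle of each frequency
    thetas = np.arange(n_orient) * np.pi / n_orient
    spacing = np.pi / n_orient
    f_hat = np.fft.fft2(image)
    lifted = np.empty((n_orient, ny, nx))
    for k, th in enumerate(thetas):
        # A structure elongated along th has its spectrum at th + pi/2,
        # so the window is centred there (distance taken modulo pi).
        d = np.mod(phi - th, np.pi) - np.pi / 2
        window = np.clip(1.0 - np.abs(d) / spacing, 0.0, None)
        lifted[k] = np.real(np.fft.ifft2(f_hat * window))
    return thetas, lifted

def project(lifted):
    """Project back to the image plane by summing over orientations."""
    return lifted.sum(axis=0)
```

Because the windows form a partition of unity, `project(wedge_lift(f)[1])` reproduces `f` up to floating-point error, mirroring the requirement that projecting the unevolved lifted image gives back the original image.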

The resulting fully-discretised neuronal activation at

,

and

will be thus denoted by:

4.2. Sub-Riemannian Heat Diffusion

Let

be a given cortical stimulus, and denote and set

. In this section we describe how to compute

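The main difficulty here is due to the degeneracy arising from the anisotropy of the sub-Laplacian. Indeed, expanding the sub-Laplacian $L = X_1^2 + \beta^2 X_2^2$ of (4), with $X_1 = \cos\theta\,\partial_x + \sin\theta\,\partial_y$ and $X_2 = \partial_\theta$, we have

$$L = \nabla^{T} A\, \nabla, \qquad A = \begin{pmatrix} \cos^2\theta & \cos\theta\sin\theta & 0 \\ \cos\theta\sin\theta & \sin^2\theta & 0 \\ 0 & 0 & \beta^2 \end{pmatrix}.$$

In particular, it is straightforward to deduce that the eigenvalues of $A$ are $(0, 1, \beta^2)$.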

The discretisation of such anisotropic operators can be done in several ways, see for example [

29,

30,

39,

65]. In our implementation, we follow the method presented in [

26], which is tailored around the group structure of

, the universal cover of

, and based on the non-commutative Fourier transform, see also [

9].

It is convenient to assume for the following discussion

and

. The “semi-discretised” sub-Laplacian

can be defined by

where by

we denote the central difference operator discretising the derivatives along the

direction, that is, the operator

The operator

D is the diagonal operator defined by

The full discretisation is then achieved by discretising the spatial derivatives as

Under the discretisation

of

defined in (

24), we now resort to Fourier methods to compute efficiently the solution of the sub-Riemannian heat equation

In particular, let

be the discrete Fourier transform (DFT) of G w.r.t. the variables

, i.e.,

A straightforward computation shows that

Hence, (

29) is mapped by the discrete Fourier transform (DFT) to the following completely decoupled system of

ordinary linear differential equations on

:

which can be solved efficiently through a variety of standard numerical schemes. We chose the semi-implicit Crank-Nicolson method [66] for its good stability properties. Let us remark that the operators on the r.h.s. of the above equations are periodic tridiagonal matrices, that is, tridiagonal matrices with additional non-zero values at positions $(1, K)$ and $(K, 1)$. Thus, the linear system appearing at each step of the Crank-Nicolson method can be solved in linear time w.r.t. $K$ via a variation of the Thomas algorithm.
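The linear-time solve mentioned above can be sketched as follows, using the Sherman-Morrison formula on top of the classical Thomas algorithm. This is one common variant, not necessarily the one used in the accompanying package. The form of the right-hand side operator in `crank_nicolson_step` (a periodic second difference in the orientation variable minus a non-negative diagonal term `diag_decay`) is an assumption made for the example.

```python
import numpy as np

def thomas(a, b, c, d):
    """Solve a tridiagonal system; a = sub-, b = main, c = super-diagonal."""
    n = len(d)
    cp = np.empty(n, dtype=complex)
    dp = np.empty(n, dtype=complex)
    cp[0], dp[0] = c[0] / b[0], d[0] / b[0]
    for i in range(1, n):
        denom = b[i] - a[i] * cp[i - 1]
        cp[i] = c[i] / denom
        dp[i] = (d[i] - a[i] * dp[i - 1]) / denom
    x = np.empty(n, dtype=complex)
    x[-1] = dp[-1]
    for i in range(n - 2, -1, -1):
        x[i] = dp[i] - cp[i] * x[i + 1]
    return x

def cyclic_thomas(a, b, c, d):
    """Periodic tridiagonal solve via Sherman-Morrison (needs len(d) >= 3).

    Corner entries: a[0] sits at (0, n-1) and c[-1] at (n-1, 0).
    """
    gamma = -b[0]
    bb = np.array(b, dtype=complex)
    bb[0] -= gamma
    bb[-1] -= c[-1] * a[0] / gamma
    u = np.zeros(len(d), dtype=complex)
    u[0], u[-1] = gamma, c[-1]
    y = thomas(a, bb, c, d)
    q = thomas(a, bb, c, u)
    v_dot_y = y[0] + a[0] * y[-1] / gamma
    v_dot_q = q[0] + a[0] * q[-1] / gamma
    return y - q * v_dot_y / (1.0 + v_dot_q)

def crank_nicolson_step(v, diag_decay, beta, dtheta, tau):
    """One Crank-Nicolson step for dv/dt = (beta^2 Lambda - diag(diag_decay)) v,
    where Lambda is the periodic second difference in theta (assumed form)."""
    off = np.full(len(v), beta**2 / dtheta**2, dtype=complex)
    main = -2.0 * off - diag_decay
    rhs = v + 0.5 * tau * (off * np.roll(v, 1) + main * v + off * np.roll(v, -1))
    return cyclic_thomas(-0.5 * tau * off, 1.0 - 0.5 * tau * main,
                         -0.5 * tau * off, rhs)
```

Each call to `cyclic_thomas` performs two tridiagonal sweeps, so the cost per Fourier mode and per time step grows linearly with the number of orientations.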

The desired solution

can then be recovered by applying the inverse DFT to the solution of (

32) at time

.
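The decoupling obtained through the DFT rests on the fact that periodic finite differences become pointwise multiplications in the Fourier domain. The short check below illustrates this for a central difference, assuming (the explicit formula is not visible in the text above) that the spatial derivatives are discretised in this way; the function names are hypothetical.

```python
import numpy as np

def central_difference(f, dx):
    """Periodic central difference (f[j+1] - f[j-1]) / (2 dx) on a 1D grid."""
    return (np.roll(f, -1) - np.roll(f, 1)) / (2.0 * dx)

def central_difference_symbol(n, dx):
    """Fourier multiplier of the periodic central difference."""
    k = np.arange(n)
    return 1j * np.sin(2.0 * np.pi * k / n) / dx
```

Since the multiplier depends only on the frequency index, each spatial frequency evolves independently of the others, and only the coupling in the orientation variable remains to be solved.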

4.3. Discretisation via Gradient Descent

We follow [

44,

45,

47] and discretise both models (sR-WC) and (sR-LHE) via a simple explicit gradient descent scheme. Denoting the discretised version of the local mean average

appearing in the models by

, we have that the time stepping reads for all


where

is defined depending on the model by:

with

being the discretised version of the coefficient

in (

14) at time

.

A sufficient condition on the time-step

guaranteeing the convergence of the numerical scheme (

33) is

(see [

47]).
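A rough sketch of an explicit gradient-descent iteration of this kind is given below. It assumes an update of the form $a \leftarrow a + \Delta t\,(-(1+\lambda)a + \mu + \lambda a_0 + S(a)/(2M))$, as commonly used for WC- and LHE-type models in the related literature; the exact update, the sigmoid and the kernel of this work may differ. The dense `kernel` matrix, the 1D indexing of activations and all function names are hypothetical simplifications, and the time-step check corresponds to the linear part of the assumed update only.

```python
import numpy as np

def sigmoid(r, alpha=5.0):
    """Odd sigmoid clipped to [-1, 1] (illustrative choice)."""
    return np.clip(alpha * r, -1.0, 1.0)

def interaction(a, kernel, mode):
    """Discrete interaction term on a 1D array of n activations.

    kernel[i, j] weights the influence of unit j on unit i.
    mode="wc":  sum_j kernel[i, j] * sigmoid(a[j])
    mode="lhe": sum_j kernel[i, j] * sigmoid(a[i] - a[j])
    """
    if mode == "wc":
        return kernel @ sigmoid(a)
    return np.sum(kernel * sigmoid(a[:, None] - a[None, :]), axis=1)

def gradient_descent(a0, mu, kernel, mode="lhe", lam=0.5,
                     big_m=1.0, dt=0.1, n_iter=200):
    """Explicit iteration
        a <- a + dt * (-(1 + lam) * a + mu + lam * a0 + S(a) / (2 * big_m)).
    Assumed form of the update, for illustration only.
    """
    if dt > 1.0 / (1.0 + lam):
        raise ValueError("time step too large for the assumed linear part")
    a = a0.copy()
    for _ in range(n_iter):
        s = interaction(a, kernel, mode)
        a = a + dt * (-(1.0 + lam) * a + mu + lam * a0 + s / (2.0 * big_m))
    return a
```

In the sub-Riemannian setting, the product with `kernel` would be replaced by an application of the sub-Riemannian heat diffusion, so that no dense interaction matrix has to be stored.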

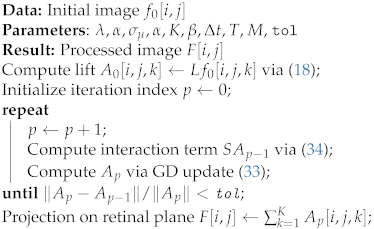

4.4. Pseudocode

Our algorithmic procedure consists of three main numerical sub-steps. The first one is the lifting of the two-dimensional input image to the space of positions and orientations via (20). The second one is the Fourier-based procedure described in Section 4.2 to compute the sub-Riemannian diffusion (22), which can be used as a kernel to describe the neuronal interactions along the horizontal connections. This step is intrinsically linked to the last iterative procedure, based on computing the gradient descent update (33)–(34) describing the evolution of neuronal activity in the cortical framework both for (sR-WC) and (sR-LHE).

We report the simplified pseudo-code in Algorithm 1 below. The detailed Julia package used to produce the following examples is freely available at https://github.com/dprn/srLHE (accessible from 28 December 2020).

Algorithm 1: sR-WC and sR-LHE pseudocode. [Listing available only as an image in the original.]

5. Numerical Experiments

In this section we present the results obtained by applying models (sR-WC) and (sR-LHE) via Algorithm 1 to the two Poggendorff-type illusions reported in Figure 3. Our results are compared to the ones obtained by applying the corresponding WC and LHE 3-dimensional models with a 3D Gaussian kernel as described in [44,45]. The objective of the following experiments is to understand whether the output produced by applying (sR-WC) and (sR-LHE) to the images in Figure 3 agrees with the perceived illusory effects. Since the quantitative assessment of the strength of these effects is a challenging problem, the outputs of Algorithm 1 have to be evaluated by visual inspection. Namely, for each output, we consider whether the continuation of a fixed black stripe on one side of a central bar connects with a segment on the other side. Differently from inpainting-type problems, we stress that for these problems the objective is to replicate the perceived wrong alignments due to contrast and orientation effects rather than their collinear continuation, and/or to investigate when both types of completion can be reproduced.

(a) Testing data: Poggendorff-type illusions. We test the (sR-WC) and (sR-LHE) models on a greyscale version of the Poggendorff illusion in

Figure 2 and on its modification reported in

Figure 3b where the background is constituted by a grating pattern—in this case, the perceived bias also depends on the contrast between the central surface and the background lines.

(b) Parameters. Images in

Figure 3 have size

pixels, with

. The lifting procedure to the space of positions and orientations is obtained by discretising

into

orientations (this is in agreement with the standard range of 12–18 orientations typically considered to be relevant in the literature [

67,

68]). The relevant cake wavelets are then computed following [

32], setting the frequency band

for all experiments. The scaling parameter

appearing in (

4) is set (Such parameter adjusts the different spatial and orientation sampling. A single spatial unit is equal to

pixel edge whereas a single orientation unit is 1 pixel edge.) to

, and the parameter

M appearing in (

13), (sR-LHE) is set to

.

Parameters varying from test to test are: the slope

of the sigmoid functions

in (

9) and

, the fidelity weight

, the variance of the 2D Gaussian filtering

used to compute the local mean average

in (sR-WC) and (

16), the gradient descent time-step

, the time step

and the final time

used to compute the sub-Riemannian heat diffusion

.

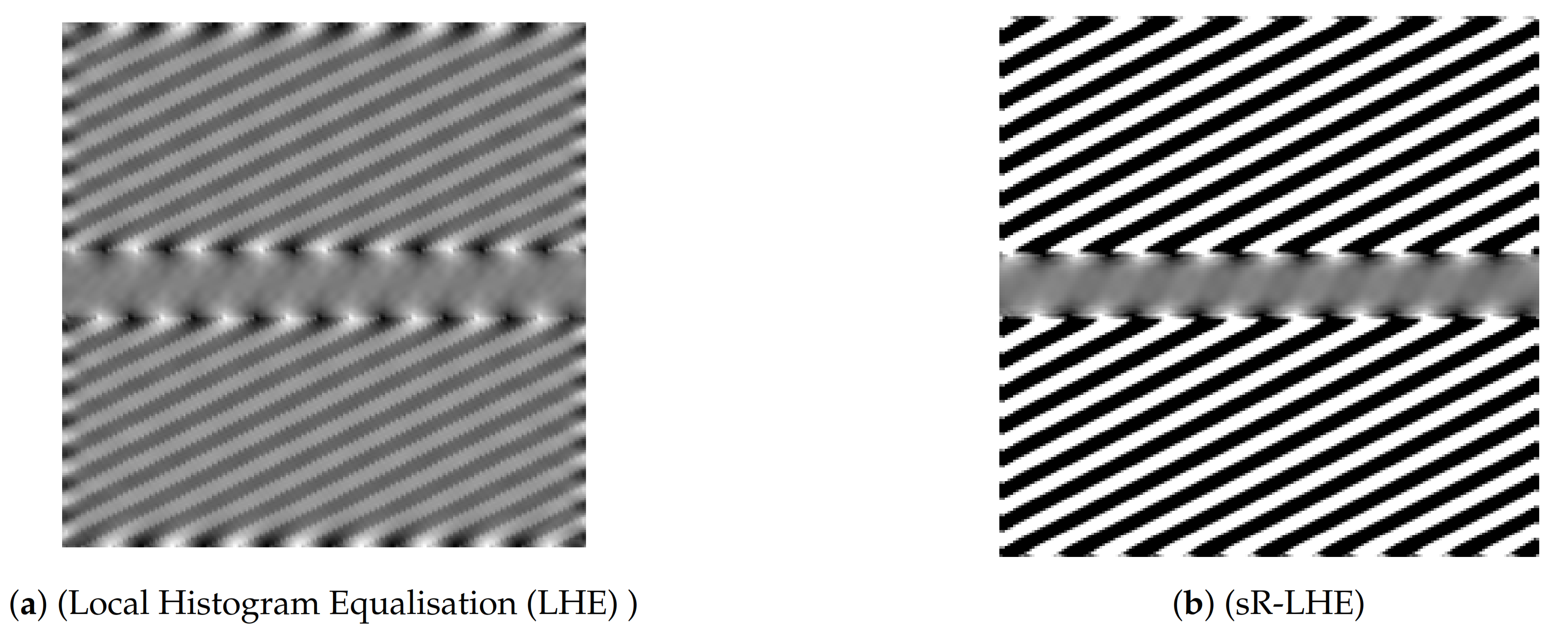

5.1. Poggendorff Gratings

In Figure 4, we report the results obtained by applying (sR-WC) to the Poggendorff grating image in Figure 3b. We compare them with the ones obtained by the cortical-inspired WC model considered in [44,45], where the interaction kernel is an isotropic 3D Gaussian, which are reported in Figure 4a. In Figure 4b, we observe that the sR diffusion encoded in (sR-WC) favours the propagation of the grating throughout the central grey bar, so that the resulting image agrees with our perception of misalignment. We stress that such an illusion could not be reproduced via the cortical-inspired isotropic WC model proposed in [44,45]. The use of the appropriate sub-Laplacian diffusion is thus crucial in this example to replicate the illusion.

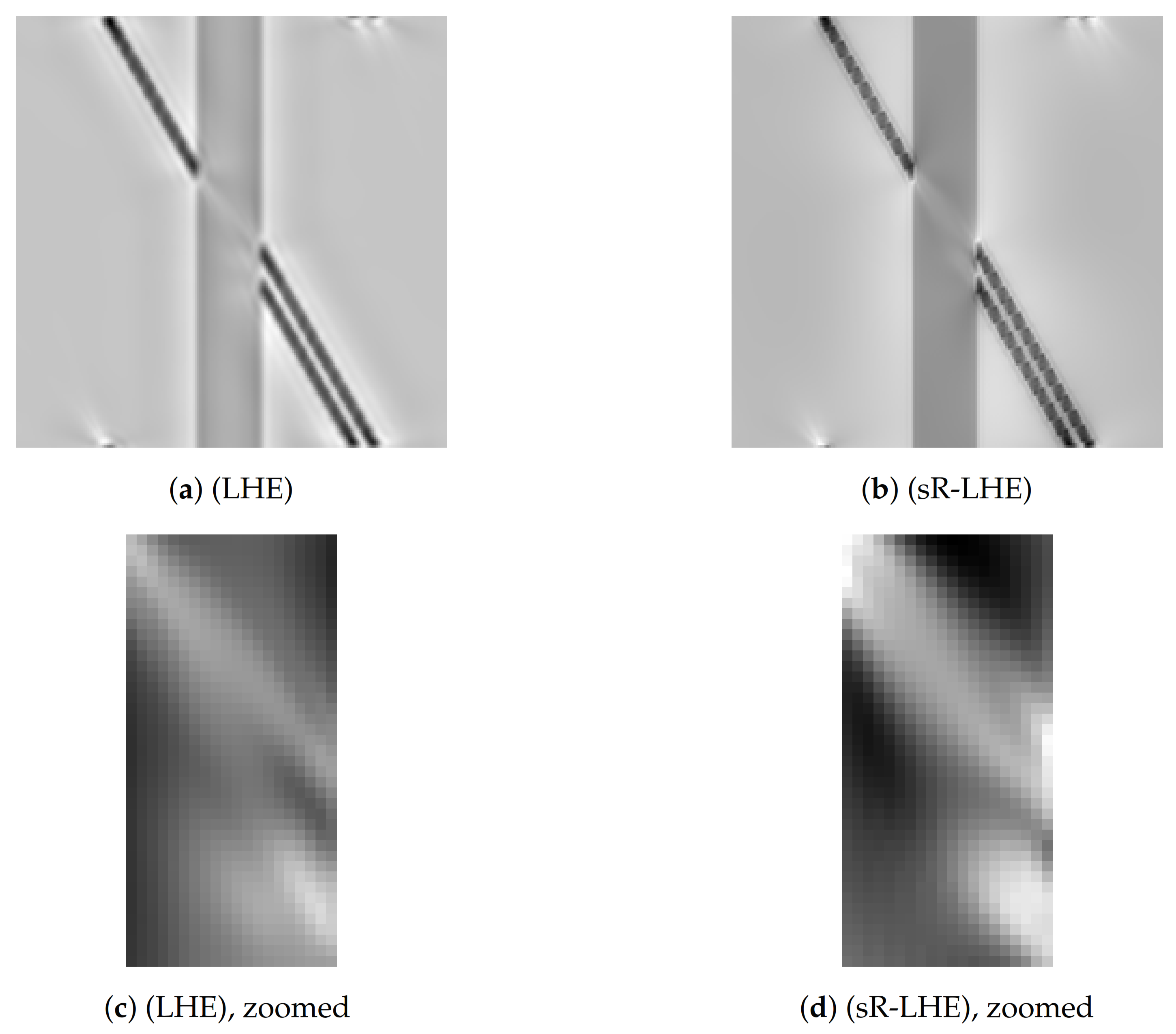

We further report in

Figure 5 the result obtained by applying (sR-LHE) on the same image. We observe that in this case both the (sR-LHE) model and the LHE cortical model introduced in [

44,

45] reproduce the illusion.

Note that both (sR-WC) and (sR-LHE) further preserve fidelity w.r.t. the given image outside the target region, which is not the case in the LHE cortical model presented in [

44,

45].

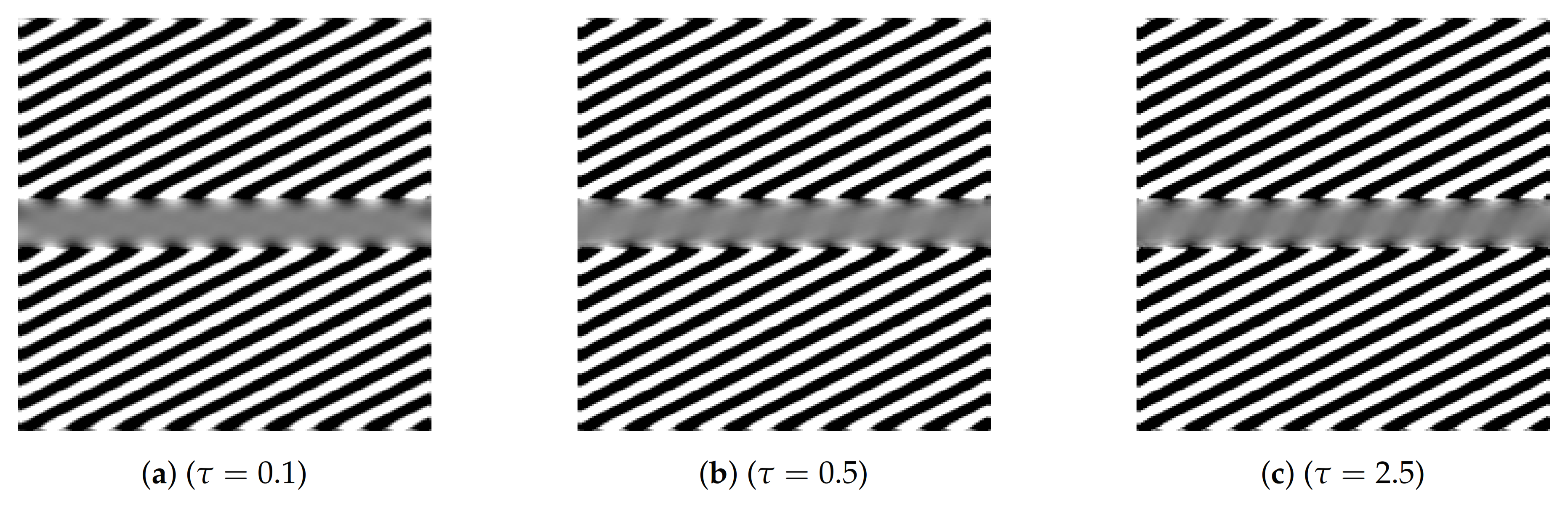

5.2. Dependence on Parameters: Inpainting vs. Perceptual Completion

The capability of the (sR-LHE) model to reproduce visual misperceptions depends on the chosen parameters. This fact was already observed in [45] for the cortical-inspired LHE model proposed therein, endowed with a standard Gaussian filtering. There, LHE was shown to reproduce illusory phenomena only when the standard deviation of the Gaussian filter was large enough (w.r.t. the overall size of the image). On the contrary, the LHE model was shown to perform geometrical completion (inpainting) for small values of the standard deviation. Roughly speaking, this corresponds to the fact that perceptual phenomena, such as geometrical optical illusions, can be modelled only when the interaction kernel is wide enough for the information to cross the central grey line. This is in agreement with the psychophysical experiments in [17], where the width of the central missing part of the Poggendorff illusion is shown to be directly correlated with the intensity of the illusion.

In the case under consideration here, the parameter encoding the width of the interaction kernel is the final time $T$ of the sub-Riemannian diffusion used to model the activity propagation along neural connections. To support this observation, in Figure 6, we show that the completion obtained via (sR-LHE) shifts from a geometrical one (inpainting), where $T$ is small, to a perceptual one, where $T$ is sufficiently large.

As far as the (sR-WC) model is concerned, we observed that, despite the improved capability of replicating the Poggendorff gratings, the transition from perceptual completion to inpainting could not be reproduced. In agreement with the efficient representation principle, this supports the idea that visual perceptual phenomena are better encoded by variational models such as (sR-LHE) than by non-variational ones such as (sR-WC).

5.3. Poggendorff Illusion

In

Figure 7 we report the results obtained by applying LHE methods to the standard Poggendorff illusion in

Figure 3a. In particular, in

Figure 7a we show the result obtained via the LHE method of [

44,

45], while in

Figure 7b we show the result obtained via (sR-LHE), with two close-ups in

Figure 7c,d showing a normalized detail of the central region onto the set of values

. As shown by these preliminary examples, the continuations computed by both LHE models agree with our perception, as the reconstructed connection in the target region links the two misaligned segments while somehow 'stopping' the connection of the collinear one.

A more detailed study of this phenomenon, and of how it is affected by the choice of the parameters used to generate Figure 3a (such as the incidence angle, the width of the central grey bar, the distance between lines), in a similar spirit to [69] where psychophysical experiments were performed on analogous images, is an interesting topic for future research.

6. Conclusions

In this work we presented the sub-Riemannian version (16) of the Local Histogram Equalisation mean-field model previously studied in [44,45], here denoted by (sR-LHE). The model considered is a natural extension of existing ones, where the kernel used to model neural interactions was simply chosen to be a 3D Gaussian kernel, while in (sR-LHE) it is chosen as the sub-Riemannian kernel of the heat equation formulated in the space of positions and orientations given by the primary visual cortex (V1). A numerical procedure based on Fourier expansions is described to compute such evolution efficiently and in a stable way, and a gradient-descent algorithm is used for the numerical discretisation of the model.

We tested the (sR-LHE) model on orientation-dependent Poggendorff-type illusions and showed that (i) in the presence of a sufficiently wide interaction kernel, model (sR-LHE) is capable of reproducing the perceived misalignments, in agreement with previous work (see Figure 5 and Figure 7); (ii) when the interaction kernel is too narrow, (sR-LHE) favours a geometric-type completion (inpainting) of the illusion (see Figure 6) due to the limited amount of diffusion considered.

We also considered the sub-Riemannian version (13) of the standard orientation-dependent Wilson-Cowan equations previously studied in [44,45], denoted here by (sR-WC). We obtained (sR-WC) by using the sub-Riemannian interaction kernel in the standard orientation-dependent Wilson-Cowan equations. We showed that the introduction of such a cortical-based kernel improves the capability of WC-type models to reproduce Poggendorff-type illusions, in comparison to the analogous results reported in [44,45], where the cortical version of WC with a standard 3D Gaussian kernel was shown to fail to replicate the illusion.

Finally, we stress that, in agreement with the standard range of 12–18 orientations typically considered to be relevant in the literature [

67,

68], all the aforementioned results have been obtained by considering

orientations. The LHE and WC models previously proposed were unable to obtain meaningful results with fewer than

orientations.