Abstract

The empirical wavelet transform is an adaptive multi-resolution analysis tool based on the idea of building filters on a data-driven partition of the Fourier domain. However, existing 2D extensions are constrained by the shape of the detected partitioning. In this paper, we provide theoretical results that permits us to build 2D empirical wavelet filters based on an arbitrary partitioning of the frequency domain. We also propose an algorithm to detect such partitioning from an image spectrum by combining a scale-space representation to estimate the position of dominant harmonic modes and a watershed transform to find the boundaries of the different supports making the expected partition. This whole process allows us to define the empirical watershed wavelet transform. We illustrate the effectiveness and the advantages of such adaptive transform, first visually on toy images, and next on both unsupervised texture segmentation and image deconvolution applications.

1. Introduction

Within the field of image processing and computer vision, multi-resolution analysis is a vastly applicable tool. For example, the use of multi-resolution analysis and compressive sensing theory has led to state-of-the-art denoising and deconvolution techniques. In addition, the use of multi-resolution analysis has shown to be quite effective in texture analysis.

Wavelets have been the standard tool for multi-resolution analysis, and their effectiveness is matched by their popularity. However, wavelets are prescriptive in their construction in the sense that the wavelet filters are built independently of the data. Adaptive (i.e., data-driven) methods have shown many promising improvements in applications across all fields of science. Hereafter, we review the most popular adaptive methods developed in the last two decades.

An early adaptive method is the empirical mode decomposition (EMD) [1,2]. The EMD is an iterative algorithmic method that attempts to decompose a signal into its amplitude-modulated frequency-modulated components (also called harmonic modes). Despite its success in analyzing non-stationary signals, the EMD lacks a solid mathematical foundation due to its algorithmic nature, making it difficult to predict its behavior. Furthermore, the bidimensional empirical mode decomposition is computationally inefficient, especially with the presence of noise [3]. An alternative, called variational mode decomposition (VMD), was proposed by Dragomiretskiy et al. [4,5]. It aims at decomposing the spectrum of an image into a mixture of Gaussians with specific constraints, using optimization techniques, while this method has seen success in many applications [6,7,8,9,10]. The assumption that a spectrum can be meaningfully represented as a mixture of Gaussians can lead to a loss of information. However, another approach is using synchrosqueezed wave packets, proposed by Daubechies et al. in [11], extended into 2D by Yang et al. in [12]. Synchrosqueezed wave packets provide more accurate time/space-frequency resolution representations than classic wavelets by using a reassignment procedure. Recently, an arbitrary shape filter bank (ASFB) construction was proposed by Mahde et al. in [13]. Given a boundary line, the ASFB uses optimization techniques to define a low-pass and high-pass filter. However, since the ASFB defines only two filters, it is insufficient for many applications where more modes are present in the image. One more approach, proposed by Gilles et al. in [14,15], is the empirical wavelet transform (EWT). The empirical wavelet transform aims to build wavelet filter banks whose supports in the frequency domain are detected from the information contained in the spectrum of the signal/image. This process is equivalent to building wavelet filters based on an adaptive partitioning of the Fourier domain. The EWT has multiple bidimensional extensions based on Tensor, Littlewood–Paley, and Curvelet wavelets. The EWT has shown promising results in many applications [16,17,18,19,20]. However, in 2D, the detected partitions are made of subsets of constrained shapes limiting the adaptability capability of the EWT.

In this paper, we propose to remove such limitations by defining a new 2D version of the empirical wavelet transform based on fully arbitrary partitions of the frequency domain. This paper is organized as follows: In Section 2, we review the empirical wavelet transform and its existing implementations. We illustrate the limitations in the existing 2D approaches. In Section 3, we investigate the theoretical aspects of building 2D empirical wavelet filters based on arbitrary supports in the Fourier domain. We also propose an algorithm, based on scale-space representations and the watershed transform, to automatically detect a partition corresponding to dominant harmonic modes. Given such a partition, we define the empirical watershed wavelet transform (EWWT). In Section 4, we illustrate the performance of the EWWT as a multi-resolution analysis method, first on a synthetic image with chosen harmonic modes. Next, we illustrate that the EWWT allows improvements on the results obtained in unsupervised texture segmentation and in nonblind deconvolution. Finally, we give our concluding remarks in Section 5.

2. The Empirical Wavelet Transform

The empirical wavelet transform (EWT) is a method first proposed in 1D by Gilles in [14] and extended to 2D in [15]. The EWT is based on the idea of constructing a family of wavelets based on a set of detected (i.e., data-driven) supports in the Fourier domain corresponding to different harmonic modes. Hereafter, we first recall the 1D empirical wavelet transform, then the 2D extensions, as well as the support detection technique based on scale-space representations.

2.1. 1D Empirical Wavelet Transforms

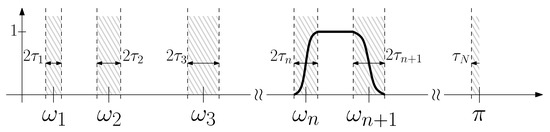

The one-dimensional, empirical wavelet transform constructs a set of wavelets based on an arbitrary partitioning of the 1D Fourier domain, see Figure 1. We consider a normalized Fourier axis, which is periodic, and we denote the Fourier transform of a function as . While we consider real signals here, which have symmetric spectra, the generalization to complex signals is fairly straightforward and can be found in [21].

Figure 1.

Construction of 1D Empirical Wavelet: given a set of boundaries }, we define as a band-pass filter between and with transition regions of width and .

Given a set of detected boundaries defined on the frequency axis and their corresponding transition widths , we define the set of empirical wavelets as the set of band-pass filters with supports and the empirical scaling function as a low-pass filter supported by . Furthermore, if the transition widths are chosen proportionally to , i.e., , where , then the obtained set of empirical wavelets forms a tight frame of bound 1.

With the set of empirical scaling function and empirical wavelets, , we can then define the empirical wavelet transform, denoted . The empirical scaling coefficients are given by the inner product of the signal and , (denoting )

and the detail coefficients are defined similarly,

where ∗ and denote respectively the convolution operator and the inverse Fourier transform. Due to the tight frame condition, the original signal f can be reconstructed from via

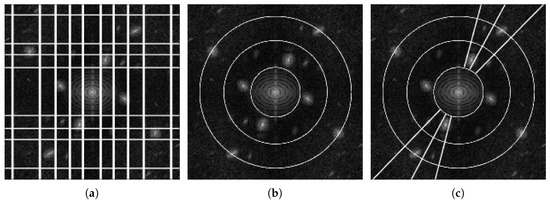

2.2. 2D Empirical Wavelet Transforms

In 2D, the set of detected supports is characterized using regions in the Fourier plane, rather than intervals on the real line. Adding this extra dimension brings an extra degree of liberty in the detection of partitions: geometry. In 1D, intervals correspond to basic geometric objects; however, in 2D, the detected regions can have very different shapes. The 2D extensions proposed in [15] revisit three main families of classic wavelets based on Fourier supports of specific shapes. The first one, the tensor empirical wavelet transform, is a separable approach processing rows and columns independently, based on rectangular supports. The second one aims at building Littlewood–Paley wavelet filters, whose purpose is to separate scales, defined by concentric rings supports. The third one is a curvelet type transform and captures both scale and orientation information by defining supports that are polar wedges shaped supports. The adaptability of these different transforms comes from the fact that the positions and sizes of these different geometric structures are driven by the spectrum of the analyzed image. It is worth noticing that three different strategies were proposed to build the empirical curvelets. The first detects scales and angular sectors independently. The second detects scales first and then detects the angular sectors within each scale ring. The third and final option detects the angular sectors first and then detects scales within each. An example partition of each of the 2D empirical wavelet transforms (only the curvelet first option is depicted here) is illustrated in Figure 2. If each of these transforms allow for adaptive multi-resolution analysis with specific properties, they are still constrained by the restrictions on the shapes of their detected supports, limiting their degree of adaptability. The purpose of this paper is to remove such limitations by considering completely arbitrary partitions, but before we describe this new approach, the next section will review the scale-space approach to detect such data-driven partitions.

Figure 2.

Examples of 2D EWT Implementations: In order from left to right, we see the partitioning of the Fourier spectrum of the (a) Tensor, (b) Littlewood–Paley, and (c) Curvelet (Option 1) empirical wavelet transforms.

2.3. Scale-Space Representations

As described in the previous sections, the ability for the EWT to be adaptive lies in the fact that the method is capable of detecting the “optimal” supports (in the Fourier domain) for each wavelet filter. This leads to the natural question: how can we detect such a partition of the Fourier domain? Since the goal for each filter is to extract meaningful harmonic modes, let us first define a harmonic mode. A harmonic mode will correspond to an amplitude modulated/frequency modulated (AM/FM) signal, denoted , where and are fast decaying (i.e., of almost bounded support) functions. It is also assumed that two consecutive are separated enough to be independently observed. From a geometric point of view, this means that is the superposition of distinct lobes. Detecting the expected partition then corresponds to find the boundaries between successive modes. The original approaches proposed by Gilles et al. in [14,15] either consider the midpoint or the lowest minimum between the highest maxima. The major drawback of such approaches is that they require the knowledge of the expected number of modes, which is, in the general case, not necessarily known.

An automatic detection algorithm (including the number of modes) based on scale-space representations has been proposed in [22] and is recalled hereafter. The scale-space representation [23] of a function g provides the content of g at multiple scales by using a blurring operator to continuously remove components of small scales. In the 1D discrete case, it is given [23] by

where s is a scale parameter and is the 1D discrete Gaussian kernel. Note that is the Bessel function of first order and . A straightforward 2D extension, as will be necessary in Section 3.2.1, is simply obtained by using separable convolutions:

Since we consider the discrete case, we will sample s with a fixed step-size, denoted , which will be discussed in the experimental section. One of the main axioms fulfilled by such scale-space representations is the guarantee that the number of extrema can only decrease when s increases. This reduction of extrema permits us to define how meaningful each original minimum is by simply measuring its lifespan (i.e., the value of s before this minimum disappears). Given the lifespans of all initial minima, each of them are then classified as meaningful/persistent or not.

As such, to detect the expected partition of the Fourier domain, we compute the scale-space representation of the signal magnitude spectrum, i.e., we set , to extract the meaningful minima, which will correspond to the set of expected boundaries . In the 2D extensions described in Section 2.2, detecting 2D partitions resumes in performing multiple 1D detections (by averaging the 2D Fourier spectrum or pseudo-polar Fourier spectrum with respect each dimension) because of their specific structures. We invite the reader to check [14,15] and especially [22] for more details and numerical implementations.

3. A New 2D Empirical Wavelet Transform

In the following sections, we present a general approach to remove the geometric constraints on the detected partitions in the previously described 2D empirical wavelets. First, we give a mathematical construction of wavelet filters is based on arbitrarily shaped supports and shows that these filters permit us to define a general empirical wavelet transform. We prove the existence of dual filters, allowing the definition of the inverse transform. Second, we present a new algorithm to detect 2D partitions of arbitrary geometry, inspired by the 1D scale-space algorithm discussed in Section 2.3.

3.1. 2D Empirical Wavelets of Arbitrary Supports

Let us assume we are given a discrete image where is the image domain (pixels coordinates will be denoted ) and an arbitrary partition, , of the Fourier domain (denoted , frequency coordinates will be denoted ), whose detection will be discussed in Section 3.2. We assume the partition has the following properties: and if , whose boundaries delineate the expected supports. In order to define a transition region similar to the one shown in Figure 1 for the 1D EWT, we must define the distance from any point in the image to these boundaries. As such, for each region , we define a distance transform of the region:

where is the boundary of the region and is the quasi-Euclidean distance, defined by

This is done such that, for each region, we define any point in the image spectrum by its distance to the region’s boundary. From there, we can define a 2D empirical wavelet as

where is the desired transition area width and is an arbitrary function such that

A usual choice for is

Unlike in the 1D case or the existing 2D cases, there are no theoretical results for the appropriate choice of , and therefore it must be chosen experimentally. Nevertheless, we can show that the set forms a frame.

Proposition 1.

Denoting , the set forms a frame.

Proof.

Assuming, for now that (to be shown later) , such that

then, using Parseval’s theorem, we get

which implies that forms a frame of bounds A and B.

It remains to show that such bounds A and B exist such that . We can observe that three situations, as shown in Figure 3, can occur:

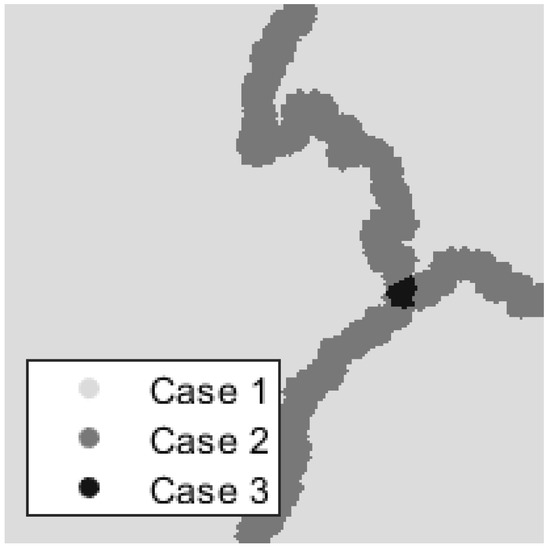

Figure 3.

Three Cases Described In Proof: Depending on whether falls within a transition region, and how many regions are in that transition, we see different bounds for our frame.

- -

- lies outside of a transition area

- -

- lies in a transition area between only two regions

- -

- lies in a transition area between three or more regions

It is easy to see that, if is not within a transition area, is nonzero for only one index n, and, by construction, we have . It remains to show the bounds if lies within a transition area.

The lower bound is decided when the transition area is defined between two regions. In this situation, we have

However, from the definition of the distance transform, we have , hence

Since and ,

and since , this is equivalent to

Therefore, our lower bound is .

In the situation where the transition area is between r regions, we have . Since , we therefore get an upper bound which completes the proof. □

With our set of empirical wavelets in hand, the corresponding transform is then defined by

Since , we can define the corresponding dual frame via

The existence of such dual frame allows us to reconstruct the original signal f from its empirical wavelet transform:

3.2. On the Detection of Partitions of Arbitrary Geometry

In this section, we address the question of how to detect partitions of the Fourier domain corresponding to meaningful harmonic modes of arbitrary geometry. The proposed method uses a 2D scale-space representation to find the “center” of harmonic modes, followed by a watershed transform to find the arbitrary boundaries delimiting the different regions . These two steps are described in detail in the next two sections. From now on, we assume our image f is discrete of size .

3.2.1. Scale-Space Localization of Harmonic Modes

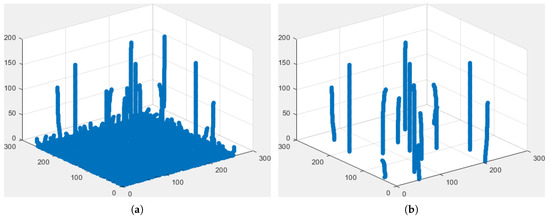

In Section 2.3, we showed that the expected 1D boundaries corresponded to meaningful local minima which can be found using scale-space representations. Unfortunately, 2D boundaries separating the different regions correspond to arbitrary curves and it becomes very hard to characterize what a meaningful curve is. Since our goal is to separate harmonic modes, assuming that these modes are well enough separated lobes in the spectrum, we propose first to detect the potential candidates by selecting meaningful local maxima. To do so, we follow a similar approach as in Section 2.3 but looking for persistent maxima instead of minima. To resume the process, we build a 2D scale-space representation of the image magnitude spectrum, i.e., , detect all local maxima at each scale s, and measure their lifespans (i.e., the largest s before they disappear). From there, we create a histogram of the persistence of the maxima and use Otsu’s method to define a threshold to classify each maxima as persistent or not. The locations of all persistent maxima, denoted , are then extracted; these locations represent “centers” of the expected harmonic modes. Because we are working in a discrete space, the scale step-size is a parameter and we set the maximum scale proportional to and the image size, , where K is the size of the kernel used to create the scale-space representation. Figure 4a illustrates the tracking of maxima through the scale-space, while Figure 4b shows the remaining persistent maxima after thresholding.

Figure 4.

Tracking of Maxima through Scale-Space (the vertical axis corresponds to the scale s): (a) shows all maxima through scale-space. (b) shows the remaining persistent maxima, i.e., of lifespan larger than the threshold defined by Otsu’s method.

3.2.2. Watershed Transforms

Given a set of mode centers, , we wish to find a set of boundaries which will define the mode supports. Taking again the point of view that modes correspond to lobes, then a natural way to find such boundaries is to select the bottom of the valleys between the modes. From a mathematical perspective, such a process corresponds to finding the path of lowest separation (this idea is a direct extension of the principle used in [15], for finding 1D boundaries, where the lowest minimum between meaningful peaks was chosen).

The watershed transform, first proposed by Beucher et al. in [24], is an image segmentation technique that defines a contour based on the path of highest separation between minima. Based on the geographic definition of watersheds and catchment basins, the watershed transform treats an image as a topographic landscape with pixel intensity representing the height at that pixel. Then, the transforms separate the image into its catchment basins, with a watershed contour separating them. This process can be described as follows.

Given an image g and a set of its minima, , we uniformly “flood” the topographic landscape produced from g, with the water collecting at the minima. When one body of water flows into another, we construct a barrier. Once complete, we define the contour along the barrier.

Later, Meyer et al. in [25,26] proposed a method in which one morphologically reconstructs the image in such a way to impose minima at select markers . This is done by first forcing each marker to be a minimum, by setting for all n, and then by filling the catchment basins of each minimum in such a way that the region contains no extrema. This approach reconstructs the image so that the only minima are at the markers and thus reformulates the watershed transform such that the generated contour lays along the path of highest separation between selected markers, rather than all minima.

To find the set of boundaries that will define the expected supports, we first invert the magnitude spectrum, , so that the watershed transform will define the path of lowest separation, rather than highest separation. Then, we impose minima to be at the location of persistent maxima, . Finally, we apply the watershed transform, which defines a boundary on the magnitude spectrum that is along the paths of lowest separation between the locations of persistent maxima . The boundary defines a partition with regions .

Since the watershed transform defines some pixels as part of the boundary, we must assign these pixels to a region. This assignment is not critical, since they belong to the path of lowest separation, but it should be symmetric if f is a real valued image. Furthermore, if f is real valued, we must pair regions symmetrically with respect to the origin. This can be achieved using Algorithm 1.

| Algorithm 1 Boundary pixel assignment and regions symmetrization. |

| Input: A set of unpaired maxima locations and corresponding partition , where n = |

| for do |

| end for |

| Output: Set of paired regions , where |

3.3. The Empirical Watershed Wavelet Transform

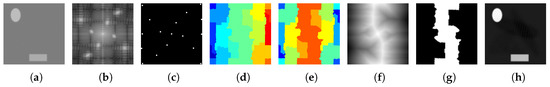

We are now equipped with all tools to define a new 2D arbitrary empirical wavelet transform called the empirical watershed wavelet transform (EWWT). The process is described by Algorithm 2, and the output of the intermediate steps is illustrated in Figure 5.

| Algorithm 2 The Empirical Watershed Wavelet Transform. |

| Input: Image f (Figure 5a), transition thickness and scale-space step-size |

| Use scale-space approach with step-size from Section 3.2.1 to find the location of persistent maxima (Figure 5c) |

| Use watershed transform from Section 3.2.2 to detect the partition (Figure 5d) |

| if f is real valued image then |

| Pair using the pairing algorithm in Section 3.2.2 (Figure 5e) |

| end if |

| Build the wavelet filters of supports using the construction described in Section 3.1 (Figure 5g) |

| Compute the EWWT given by (Figure 5h) |

| Output: Set of empirical watershed wavelet coefficients and its corresponding partition and filter bank . |

Figure 5.

Step by step construction of empirical watershed wavelets: (a) Original image, (b) Magnitude spectrum of image, (c) Detected persistent maxima using method from Section 3.2.1, (d) detected partitioning of the spectrum using method from Section 3.2.2, (e) Paired regions if image is real, (f) Distance transform of central region, (g) Empirical watershed wavelet of central region, (h) Empirical watershed wavelet coefficient for the central region.

4. Experiments

In this section, we first qualitatively explore the performance of the EWWT by decomposing a toy image made of known harmonic modes. Next, we propose to quantitatively test the use of the EWWT on two practical applications: unsupervised texture segmentation and non-blind deconvolution. As stated in Section 3.1 and Section 3.2.1, the transition width and scale-space step-size must be provided. Unless otherwise specified, we choose and for all experiments.

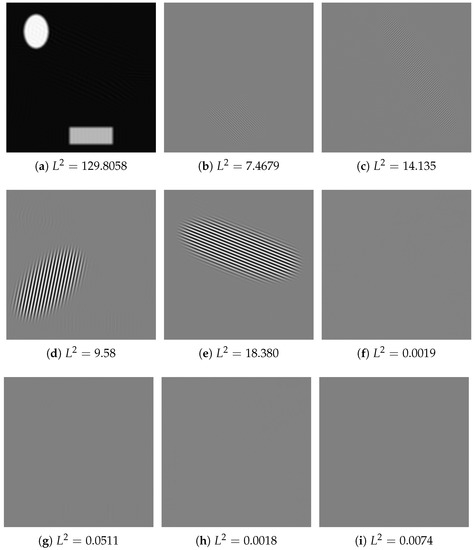

4.1. Harmonic Mode Decomposition

First, we show how the EWWT decomposes a toy image, which is made of four well separated harmonic modes and two slightly blurred piecewise constant components. This toy image is illustrated in Figure 6a along with its magnitude spectrum in Figure 6b. Figure 6c provides the detected partition and Figure 7 shows the empirical watershed wavelet coefficients obtained by performing the transform. As expected, the EWWT is capable of isolating the five harmonic modes present in the original image, which are depicted in Figure 7a–e. As for the other coefficients shown in Figure 7f–i, we see very little information (as per their respective norms), which mostly consists of high frequency information near the piecewise regions. Looking at the Fourier partition, we see that these extra coefficients correspond to regions near the edge of the spectrum where the Fourier coefficients are very small.

Figure 6.

(a) An example image, (b) its magnitude Fourier transform, (c) the detected partition using the EWWT.

4.2. Performance on Unsupervised Texture Segmentation

In this section, we explore the use of the EWWT to extract texture features for the task of unsupervised texture segmentation. Wavelets have been widely used in the literature to characterize textures since they can extract multi-scale as well as directional information. Recently, in [16], Huang et al. showed the effectiveness of the empirical wavelet transform on this problem, particularly that the Curvelet-1 EWT option outperforms all traditional wavelets. As such, we compare the EWWT against the Curvelet-1 option on the Outex dataset [27].

To perform the unsupervised texture segmentation of an image, we go through the following steps, which are outlined in detail in [16]. First, we perform a cartoon–texture decomposition of the image and keep only the textural part. This removes some variability in lighting and other non-textural information. Next, we perform the empirical wavelet feature extraction, which in our case, is either the EWWT or the EWT-Curvelet 1 option. Afterwards, we perform an energy aggregation method by calculating the energy on a window centered at each pixel. The ideal window size is mostly dependent on the dataset, and [16] shows that a window is best for the Outex dataset. The final segmentation is obtained by performing a pixel-wise k-means (the expected number of textures k is provided to the algorithm) clustering with cityblock distance.

To quantitatively evaluate the quality of the segmentation, we perform the segmentation on 100 images and compute the score, R, which averages six different region-based segmentation benchmarks (the closer to 100% the better the segmentation). These metrics are the normalized variation of information [28], the swapped directional hamming distance [29], the van Dongen distance [30], the swapped segmentation covering [31,32], the bipartite graph matching [33,34], and the bidirectional consistency error [35]. Each of these metrics are implemented by the eval_segm function available in the Supervised Evaluation of Image Segmentation Methods (SEISM) toolbox (https://github.com/jponttuset/seism).

4.2.1. Influence of the EWWT Parameters

First, we investigate the choice of the scale-space step-size . When using smaller values for , we automatically reduces the maximum scale, . In these cases, Otsu’s method detects an earlier threshold, providing a larger number of modes. Table 1 shows the results for different values of . We observe that a lower outperforms a larger , meaning that detecting a large number of modes is advantageous. This suggests that the empirical watershed wavelet transform does a successful job of separating relevant modes well that characterize the textural information. Ultimately, a value of is the most successful, and we will use this choice going forward.

Table 1.

Influence of truncating scale-space step-size for texture segmentation (best score in bold).

The next parameter influence we consider is the choice of the transition width . Table 2 shows that a transition width of performs best, which is likely a balance between proper separation of modes and the forming of Gibbs phenomena.

Table 2.

Influence of transition width for texture segmentation (best score in bold).

We also look into the effect of excluding maxima detected at the Fourier domain edge in the mode detection step. If a mode is detected at the edge of the spectrum, it represents a mode with very high frequencies. Unless equally high frequency textures are present in the dataset, it is likely these represent noise rather than meaningful textural information. Therefore, we propose to remove the locations corresponding to maxima within a certain band around the edge of the Fourier domain. Setting and , Table 3 investigates the influence of the width of that exclusion band. We can observe that removing the maxima within a two-pixel distance from the edge of the Fourier domain gives the best accuracy.

Table 3.

Influence of edge exclusion width for texture segmentation (best score in bold).

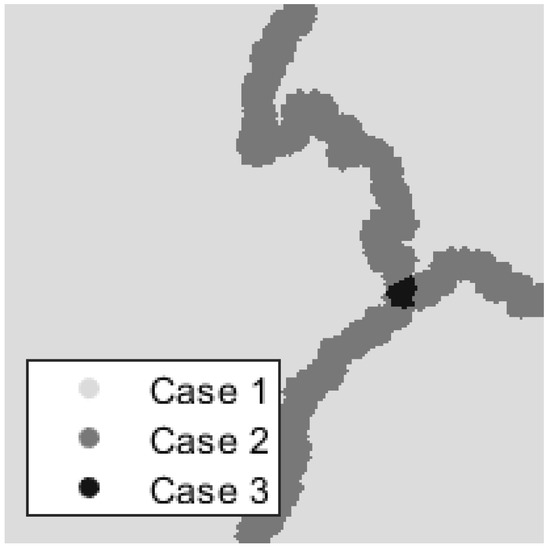

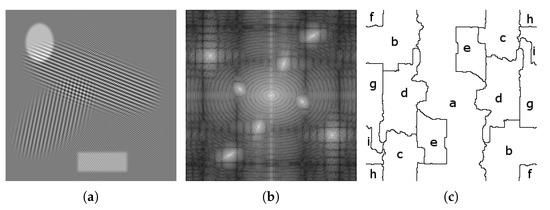

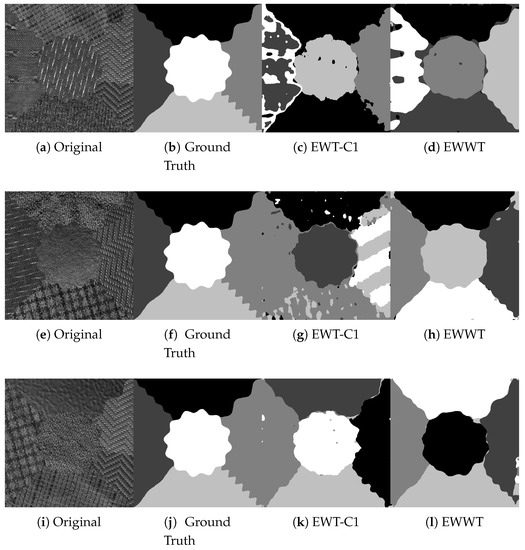

4.2.2. Final Comparison

We now compare the EWWT against the Curvelet-1 EWT (extensive comparisons with other types of wavelets are provided in [16]) on the Outex dataset. We use the best parameters for the EWWT for these tests, i.e., we set , , and we exclude maxima detected within two pixels of the domain edge. The results are shown in Table 4, and we see a significant improvement (almost 2% with similar standard deviation) when using the empirical watershed wavelet transform. Examples of obtained segmentations can be seen in Figure 8.

Table 4.

Final comparative results for texture segmentation (best score in bold).

Figure 8.

Results of Segmentation performed on Outex using empirical curvelets (EWT-C1) and empirical watershed wavelets (EWWT).

Likely, this improvement is due to extra adaptability of the EWWT in both mode detection and boundary detection.

4.3. Performance of EWWT for Non-Blind Deconvolution

Another standard application of traditional wavelets is in deconvolution as a sparsity-promoting operator [36]. Often, an ideal image u is affected by a kernel A and noise , which is observed as the image f accordingly to , where ∗ denotes convolution. As such, deconvolution is an inverse problem for image restoration where the goal is, from the observed image f, to recover the unknown pristine image u. Here, we consider the case of non-blind deconvolution where the convolution kernel A is assumed to be known. We begin by discussing how deconvolution inverse problems are solved using traditional wavelets.

4.3.1. Deconvolution with Traditional Wavelets

When attempting to recover a pristine image u via deconvolution, we are essentially solving . However, this equation is ill-posed, as it lacks a regularization term. Cai et al. [36] claim that real images usually have sparse representations under wavelet transforms. Therefore, by including a regularization term of , where D is a traditional wavelet transform, we ensure that the resulting u will have the characteristics of a real image. The goal is to ultimately turn the image deconvolution problem into a saddle point problem so that we may employ the Primal-Dual method [37]. As such, we also employ a quadratic penalty method, leaving us with

where is a regularization parameter.

For the reader’s convenience, we recall that the Primal-Dual algorithm [37] is a technique to solve saddle point problems of the form

where is a linear operator. The minimizer u is then obtained by Algorithm 3 (where ).

| Algorithm 3 General Primal-Dual algorithm. |

| Input: Choose |

| while Convergence criteria not met do |

| end while |

| Output: Numerical solution u to the saddle point problem. |

Applied to the deconvolution problem given by (16), we have , and .

It is well known that the dual of is and, furthermore, corresponds to a projection of x on to the unit ball [38]. Thus, denoting a pixel location,

and

On the other hand, is the minimization of two squared norms. Its solution has a closed-form formulation obtained by (we will denote to emphasize the operator point of view and where denotes the adjoint of )

This can be easily solved in the Fourier domain by

Furthermore, since we are working in the finite dimensional image space, . We then make the assumption that , and we numerically implement this using the inverse wavelet transform. Finally, the wavelet based deconvolution algorithm is given by Algorithm 4:

| Algorithm 4 Wavelet based deconvolution. |

| Input: Choose |

| while Convergence criteria not met do |

| end while |

| Output: Numerical solution u to the saddle point problem (16). |

4.3.2. Extension to Empirical Wavelets

Here, we naturally aim at promoting sparsity in the empirical wavelets domain, i.e., solve the deconvolution problem (16) by replacing the traditional wavelet operator, D with an empirical wavelet operator, denoted , as was done by Alvarado in [39]. Two strategies are possible: (1) we build the set of empirical wavelets based on the input image f at the beginning, and this set is kept fixed while solving (16); (2) the set of wavelet filters is initialized based on f at the beginning of the algorithm but then it is updated at each iteration. In order to distinguish both options, we will denote the empirical wavelet transform that keeps its set of filters fixed in option (1). It is worth noticing that, in option (2), the operator is nonlinear. Thus, we lose the theoretical guarantee of convergence of the primal-dual algorithm; however, we never observed any convergence issues in our experiments. The algorithms corresponding to each options are given by Algorithms 5 and 6, respectively.

We want to emphasize that updating the set of empirical wavelet filters at each iteration k automatically updates the operator .

| Algorithm 5 Empirical wavelet based deconvolution with a kept fixed set of filters. |

| Input: Choose |

| Build the set of empirical wavelet filters, based on the magnitude spectrum of f. This defines the operator |

| Initialize |

| while Convergence criteria not met do |

| end while |

| Output: Numerical solution u of . |

| Algorithm 6 Empirical wavelet based deconvolution with sets of wavelet filters that are updated at each iteration. |

| Input: Choose |

| Initialize the set of empirical wavelet filters, based on the magnitude spectrum of f. |

| while Convergence criteria not met do |

| Update the set of empirical filter based on the magnitude spectrum of |

| end while |

| Output: Numerical solution u. |

4.3.3. Results

In this section, we compare the deconvolution results obtained by using framelets [40], empirical curvelet option 1 and the empirical watershed wavelets. We use the Outex dataset once again, and simulate a Gaussian blur with variance . To assess the effectiveness of the different restorations, we compute the structural similarity index measure (SSIM) [41] between the pristine image f and the deblurred image u. We remind the reader that the closer the SSIM is to 1, the better the deconvolution. The deconvolution algorithms are run on 50 images, and we present the average SSIM over these 50 images.

We begin by investigating the influence of the parameters of the EWWT in a similar way as we did in Section 4.2.1. We run these tests in the case of the fixed filter bank approach described in Section 4.3.2. We first consider the influence of the scale-space step-size while keeping . The results are presented in Table 5.

Table 5.

Influence of the scale-space step-size for deconvolution for different level of blur (). The best SSIM values are given in bold.

We observe that a scale-space step-size value of or is optimal. While we see small differences between average SSIMs, we notice that smaller step-sizes give higher average results, confirming our observation from Section 4.2.1 that a larger number of modes is advantageous. It is worth noticing that the value also led to the best texture segmentation, thus we favor the value over the value in the following experiments.

Next, we compare the influence of the transition width . From Table 6, we see very small differences between the structural similarity index measures, but overall a small transition width of works best for less blur, while a larger value of works best for larger blurs. Going forward, we use for and for .

Table 6.

Influence of transition width for deconvolution for different level of blur (). The best SSIM values are given in bold.

We now compare the effectiveness of empirical watershed wavelet deconvolution against framelets and empirical curvelet option 1 on the Outex dataset. Framelets have been chosen as the classic wavelets since it has been shown to outperform other traditional wavelet decompositions [36,42,43].

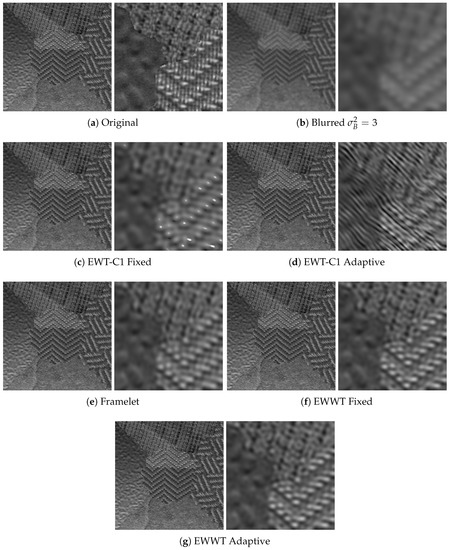

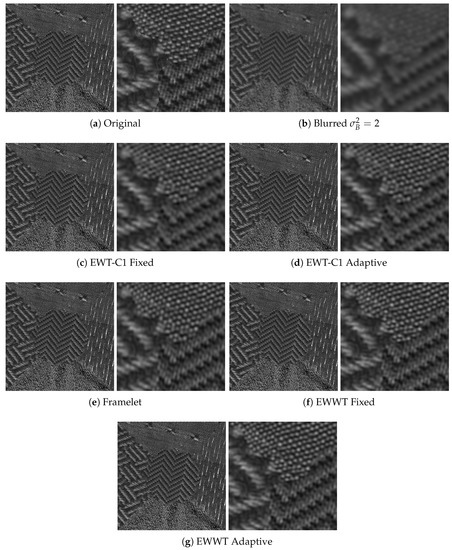

For both empirical wavelet approaches, we look into both the fixed filter bank approach and the adaptive filter bank method described in Section 4.3.2. The primal-dual algorithm has its own parameters set to , , , and [39]. The results (again averaged on 50 images) are shown for all values in Table 7, while visual deblurring examples are shown in Figure 9 and Figure 10.

Table 7.

Final comparisons for deconvolution for different level of blur (). The best SSIM values are given in bold.

Figure 9.

Example of the deconvolution performed on an image with a blur variance of . Note the artifacts present in the empirical curvelet approaches.

Figure 10.

Example of the deconvolution performed on an image with a blur variance of .

We see from these results that, among all tested methods, the empirical watershed wavelet performs the best. Furthermore, between the fixed filter bank and the adaptive filter bank approaches, the adaptive method slightly outperforms the fixed method. We also note that, in some cases, the empirical curvelets led to artifacting, which can be seen in Figure 9, and this phenomenon was not observed when using framelets and empirical watershed wavelets. In Table 8, we compare the average number of iterations needed to reach the desired convergence accuracy (set to ) between the framelet and the empirical watershed wavelet methods. We see that the empirical watershed wavelets converge in comparable or less iterations compared to other methods. This seems to be another advantage of the adaptability, i.e., adaptive representations need less iterations to converge to optimal representations of the images.

Table 8.

Average number of iteration until convergence.

5. Conclusions

In conclusion, we proposed a new construction of the 2D empirical wavelet transform based on an arbitrary partitioning of the Fourier spectrum. This construction includes a trigonometric transition region similar to the 1D EWT, and we showed that the set of empirical wavelets forms a frame. We also proposed an adaptive way to obtain such a partitioning, which is broken up into two steps. The first step involves a mode detection step and uses scale-space representations to define persistent maxima. The second step is a boundary detection step that uses the watershed transform. This defines a boundary that isolates the detected maxima by a path of lowest separation. Using these in conjunction with the 2D EWT of arbitrary shape, we defined the empirical watershed wavelet transform (EWWT).

We then showed that the EWWT performs well when applied to multiple applications. Both as a feature extractor for unsupervised texture segmentation and as a sparsity promoter in deconvolution, we saw improved results over previous wavelet and empirical wavelet transforms. On top of this, we explored the robustness of the empirical watershed wavelet transform parameters on each of these applications and found trends in how these parameters act.

Future work is possible in multiple directions. Firstly, in the construction of the 2D EWT, one could consider an arbitrary shape construction that forms a tight frame, rather than a frame. Future work can also be considered in the extension from 2D to 3D or N-D versions of the EWT of arbitrary shape.

In terms of the mode detection we proposed, many other options exist, such as threholding maxima, using maxima filters, entropy-based methods like in wavelet packets, or PCA based methods for dimension reduction. There is plenty of work to be done in finding which method works best for various applications. The same can be said for the boundary detection, especially if certain degrees of boundary smoothness are desired. While we saw that a certain value of the scale-space step-size worked best for both applications explored, more work is warranted to see if this extends to further applications, as well as further datasets.

Author Contributions

Methodology, B.H. and Z.A.; Supervision, J.G.; Writing—original draft, B.H.; Writing—review & editing, Z.A. All authors have read and agreed to the published version of the manuscript.

Funding

This work was partially supported by the Air Force Office of Scientific Research under Grant No. FA9550-15-1-0065.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Huang, N.E.; Shen, Z.; Long, S.R.; Wu, M.C.; Shih, H.H.; Zheng, Q.; Yen, N.C.; Tung, C.C.; Liu, H. The empirical mode decomposition and the Hilbert spectrum for nonlinear and non-stationary time series analysis. Proc. R. Soc. Lond. A 1998, 454, 903–995. [Google Scholar] [CrossRef]

- Nunes, J.C.; Bouaoune, Y.; Delechelle, E.; Niang, O.; Bunel, P. Image analysis by bidimensional empirical mode decomposition. Image Vis. Comput. 2003, 21, 1019–1026. [Google Scholar] [CrossRef]

- Bhuiyan, S.M.A.; Adhami, R.R.; Khan, J.F. A novel approach of fast and adaptive bidimensional empirical mode decomposition. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Las Vegas, NV, USA, 31 March–4 April 2008; pp. 1313–1316. [Google Scholar]

- Dragomiretskiy, K.; Zosso, D. Variational Mode Decomposition. IEEE Trans. Signal Process. 2014, 62, 531–544. [Google Scholar] [CrossRef]

- Dragomiretskiy, K.; Zosso, D. Two-Dimensional Variational Mode Decomposition. In Energy Minimization Methods in Computer Vision and Pattern Recognition; Lecture Notes in Computer Science; Tai, X.C., Bae, E., Chan, T.F., Lysaker, M., Eds.; Springer: New York, NY, USA, 2015; Volume 8932. [Google Scholar]

- Gao, H.; Ma, L.; Dong, H.; Lu, J.; Li, G. An improved two-dimensional variational mode decomposition algorithm and its application in oil pipeline image. Syst. Sci. Control. Eng. 2020, 8, 297–307. [Google Scholar] [CrossRef]

- Lahmiri, S.; Boukadoum, M. Biomedical image denoising using variational mode decomposition. In Proceedings of the IEEE Biomedical Circuits and Systems Conference (BioCAS) Proceedings, Lausanne, Switzerland, 22–24 October 2014; pp. 340–343. [Google Scholar]

- Raghavendra, U.; Rejedra Acharya, U.; Gudigar, A.; Shetty, R.; Krishnananda, N.; Pai, U.; Samanth, J.; Nayak, C. Automated screening of congestive heart failure using variational mode decomposition and texture features extracted from ultrasound images. Naural Comput. Appl. 2017, 28, 2869–2878. [Google Scholar] [CrossRef]

- Yu, S.; Ma, J. Complex Variational Mode Decomposition for Slop-Preserving Denoising. IEEE Trans. Geosci. Remote Sens. 2018, 56, 586–597. [Google Scholar] [CrossRef]

- Zhang, L.; Wang, Z.; Qu, C.; Yang, F.; Lv, S. Research on hybrid fusion algorithm for multi-feature among heterogeneous image. Infrared Phys. Technol. 2020, 104, 103110. [Google Scholar] [CrossRef]

- Daubechies, I.; Lu, J.; Wu, H.T. Synchrosqueezed wavelet transforms: An empirical mode decomposition-like tool. Appli. Comput. Harmon. Anal. 2011, 30, 243–261. [Google Scholar] [CrossRef]

- Yang, H.; Ying, L. Synchrosqueezed Wave Packet Transform for 2D Mode Decomposition. SIAM J. Imaging Sci. 2013, 6, 1979–2009. [Google Scholar] [CrossRef]

- Madhe, S.P.; Patil, B.D.; Holambe, R.S. Design of a frequency spectrum-based versatile two-dimensional arbitrary shape filter bank: Application to contact lens detection. Pattern Anal. Appl. 2020, 23, 45–58. [Google Scholar] [CrossRef]

- Gilles, J. Empirical Wavelet Transform. IEEE Trans. Signal Process. 2013, 61, 3999–4010. [Google Scholar] [CrossRef]

- Gilles, J.; Tran, G.; Osher, S. 2D Empirical transforms. Wavelets, Ridgelets and Curvelets Revisited. SIAM J. Imaging Sci. 2014, 7, 157–186. [Google Scholar] [CrossRef]

- Huang, Y.; De Bortoli, V.; Zhou, F.; Gilles, J. Review of wavelet-based unsupervised texture segmentation, advantage of adaptive wavelets. IET Image Process. 2018, 12, 1626–1638. [Google Scholar] [CrossRef]

- Huang, Y.; Zhou, F.; Gilles, J. Empirical curvelet based fully convolutional network for supervised texture image segmentation. Neurocomputing 2019, 349, 31–43. [Google Scholar] [CrossRef]

- Liu, W.; Cao, S.; Chen, Y. Seismic Time–Frequency Analysis via Empirical Wavelet Transform. IEEE Geosci. Remote Sens. Lett. 2016, 13, 28–32. [Google Scholar] [CrossRef]

- Polinati, S.; Dhuli, R. Multimodal medical image fusion using empirical wavelet decomposition and local energy maxima. Optik 2020, 205, 163947. [Google Scholar] [CrossRef]

- Zhang, X.; Li, X.; Feng, Y. Image fusion based on simultaneous empirical wavelet transform. Multimedia Tools Appl. 2017, 76, 8175–8193. [Google Scholar] [CrossRef]

- Gilles, J. Continuous empirical wavelets systems. Adv. Data Sci. Adapt. Anal. 2020, 2050006. [Google Scholar] [CrossRef]

- Gilles, J.; Heal, K. A parameterless scale-space approach to find meaningful modes in histograms—Application to image and spectrum segmentation. Int. J. Wavel. Multiresolution Inf. Process. 2014, 12, 1450044-1–1450044-17. [Google Scholar] [CrossRef]

- Lindeburg, T. Scale-Space Theory In Computer Vision; Springer: New York, NY, USA, 1994. [Google Scholar]

- Beucher, S.; Lantuejoul, C. Use of Watersheds in Contour Detection. In Proceedings of the International Workshop on Image Processing: Real-Time Edge and Motion Detection/Estimation, Rennes, France, 17–21 September 1979; Volume 132. [Google Scholar]

- Meyer, F.; Beucher, S. Morphological Segmentation. J. Vis. Commun. Image Represent. 1990, 1, 21–46. [Google Scholar] [CrossRef]

- Meyer, F. Topographic distance and watershed lines. Signal Process. 1994, 38, 113–125. [Google Scholar] [CrossRef]

- Ojala, T.; Maenpaa, T.; Pietikainen, M.; Viertola, J.; Kyllonen, J.; Huovinen, S. Outex—New Framework for Empirical Evaluation of Texture Analysis Algorithms. In Proceedings of the Object Recognition Supported by User Interaction for Service Robots, Quebec City, QC, Canada, 11–15 August 2002; Volume 1, pp. 701–706. [Google Scholar]

- Meila, M. Comparing clusterings—An axiomatic view. In Proceedings of the 22nd International Conference on Machine Learning, Bonn, Germany, 7–11 August 2005; pp. 577–584. [Google Scholar]

- Huang, Q.; Dom, B. Quantitative methods of evaluating image segmentation. Int. Conf. Image Process. 1995, 3, 53–56. [Google Scholar]

- Dongen, S. Performance Criteria for Graph Clustering and Markov Cluster Experiments. In CWI (Centre for Mathematics and Computer Science); Technical Report; National Research Institute for Mathematics and Computer Science: Amsterdam, The Netherlands, 2000. [Google Scholar]

- Malisiewicz, T.; Efros, A. Improving spatial support for objects via multiple segmentations. In Proceedings of the British Machine Vision Conference, Coventry, UK, 10–13 September 2007; pp. 55.1–55.10. [Google Scholar]

- Arbelaez, P.; Maire, M.; Fowlkes, C.; Malik, J. Contour Detection and Hierarchical Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 898–918. [Google Scholar] [CrossRef] [PubMed]

- Jiang, X.; Marti, C.; Irniger, C.; Bunke, H. Distance measures for image segmentation evaluation. EURASIP J. Appl. Signal Process. 2006, 1, 1–10. [Google Scholar] [CrossRef]

- Khuller, S.; Raghavachari, B. Advanced Combinatorial Algorithms. In Algorithms and Theory of Computation Handbook: General Concepts and Techniques; Atallah, M., Blanton, M., Eds.; Chapman and Hall/CRC: New York, NY, USA, 2009. [Google Scholar]

- Martin, D.R. An Empirical Approach to Grouping and Segmentation. Ph.D. Thesis, EECS Department, University of California, Berkeley, CA, USA, 2003. [Google Scholar]

- Cai, J.; Ji, H.; Liu, C.; Shen, Z. Framelet-Based Blind Motion Deblurring From a Single Image. IEEE Trans. Image Process. 2012, 21, 562–572. [Google Scholar]

- Chambolle, A.; Pock, T. A first-order primal-dual algorithm for convex problems with applications to imaging. J. Math. Imaging Vis. 2011, 40, 120–145. [Google Scholar] [CrossRef]

- Gilles, J. Primal Dual Optimization; Unpublished Technical Report; Department of Mathematics & Statistics, San Diego State University: San Diego, CA, USA, 2017. [Google Scholar]

- Alvarado, Z. Image Deconvolution Using The Empirical Wavelet Transform. Master Thesis, Math Department, San Diego State University, San Diego, CA, USA, 2020. [Google Scholar]

- Daubechies, I.; Han, B.; Ron, A.; Shen, Z. Framelets: MRA-based constructions of wavelet frames. Appl. Comput. Harmon. Anal. 2003, 14, 1–46. [Google Scholar] [CrossRef]

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612. [Google Scholar] [CrossRef]

- Cai, J.; Osher, S.; Shen, Z. Linearized Bregman Iterations for Frame-Based Image Deblurring. SIAM J. Imaging Sci. 2009, 2, 226–252. [Google Scholar] [CrossRef]

- Cai, J.; Osher, S.; Shen, Z. Split Bregman Methods and Frame Based Image Restoration. Multiscale Model. Simul. 2009, 8, 337–369. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).