DIRT: The Dacus Image Recognition Toolkit

Abstract

1. Introduction

Motivation and Contribution

- a dataset of images depicting McPhail trap contents,

- manually annotated spatial identification of Dacuses in the dataset,

- programming code samples in MATLAB that allow fast initial experimentation on the dataset or any other Dacus image set,

- a public REST API over HTTPS and a web interface that reply to queries for Dacus identification in user-provided images,

- extensive experimentation on the use of deep learning for Dacus identification on the dataset contents.

2. Related Research

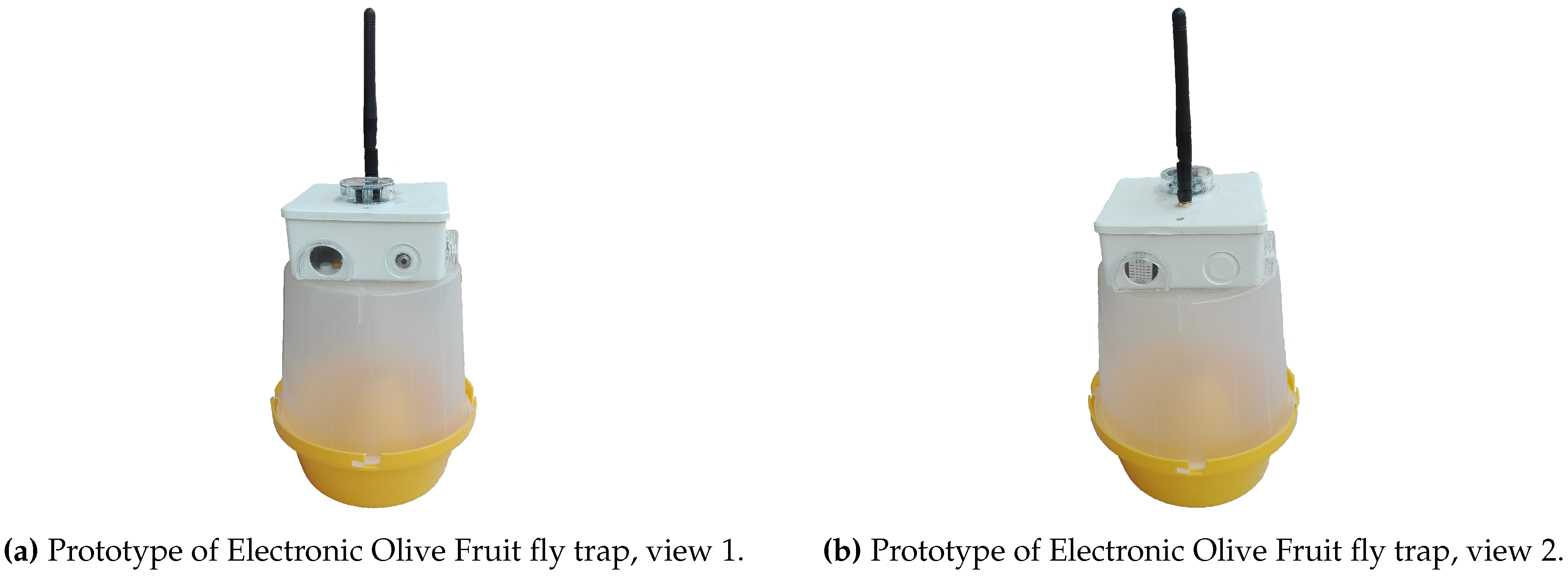

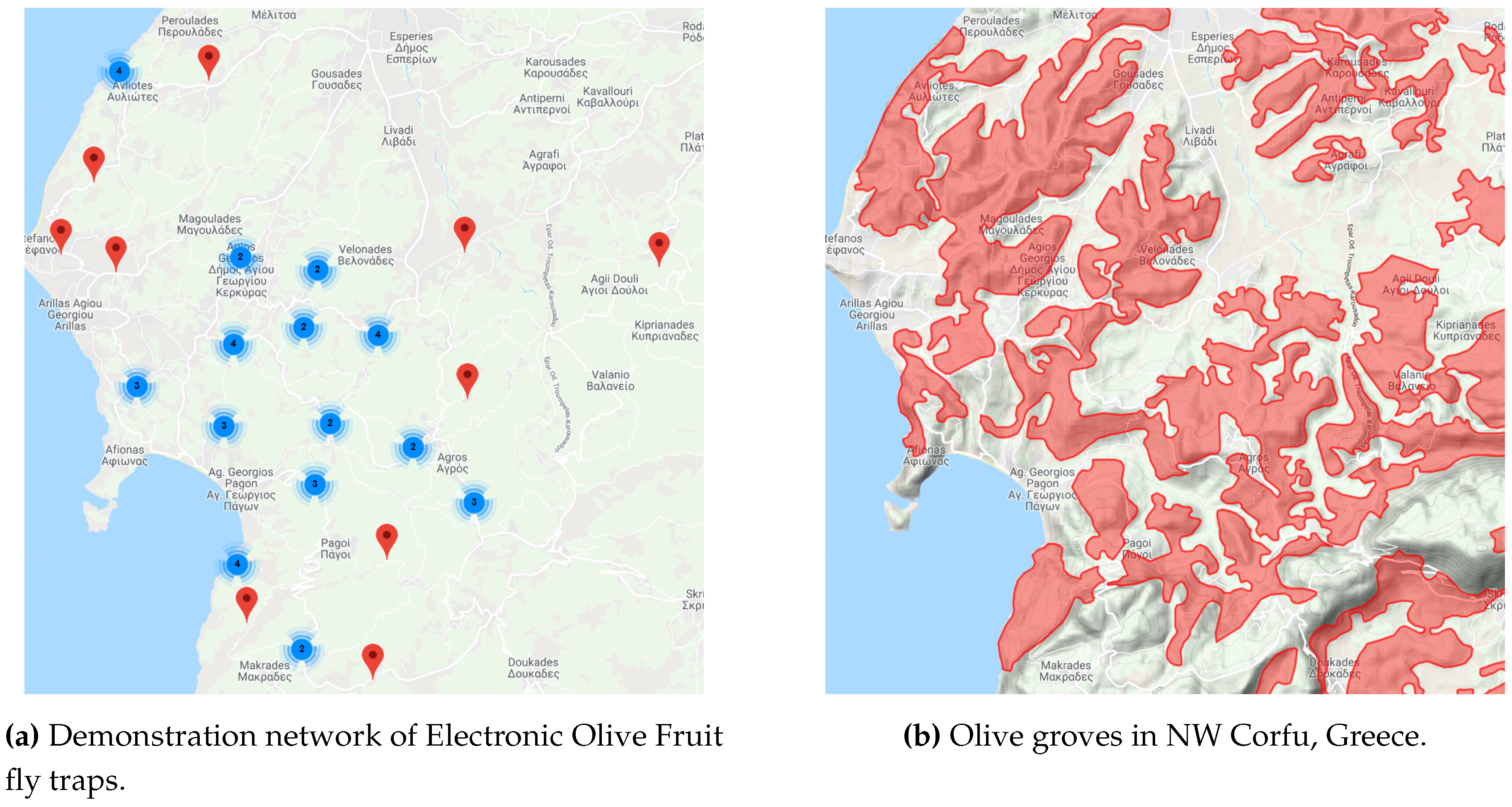

3. Smart Trap

- McPhail-type trap. A McPhail-type trap with an enlarged upper part that is otherwise completely equivalent to a conventional McPhail trap in terms of size (inner trap volume), environmental conditions (temperature, humidity, etc.), and the parameters affecting the attraction of Dacuses, the entrance type for the pests, and the difficulty of exit. The extra height of the upper part is needed to accommodate all the necessary electronic parts described in the sequel, as well as to allow space for proper focus of the camera. The electronics compartment is to be completely isolated from the rest of the trap (e.g., by means of a transparent PVC plate or equivalent methods).

- Wi-Fi equipped microcomputer. A microcomputer that orchestrates all the actions necessary to record the data and dispatch them to a networking module (e.g., a GSM modem), so that they finally reach a server/processing center. The microcomputer is to be selected based on the following criteria:

- low cost,

- computational resources,

- number of open-source programs available for it,

- operational stability,

- availability of integrated Wi-Fi (and/or other protocols’) transceiver, and

- capability for integration of camera with fast interface and adequate resolution.

The key disadvantage of including a microcomputer is its relatively high power consumption, despite the numerous existing techniques for minimizing stand-by consumption. The main alternative, microcontrollers, can also be considered for the task, given that preliminary tests indicate that the computational load is not too great for their limited resources (such as RAM and CPU speed). The proposed microcomputer features a Unix-type operating system for openness, and scripts (e.g., Python) will collect data from the sensors (described in the sequel) at explicitly defined times of the day. The data will then be transmitted over the network to a server/processing center. Both collection and transmission may be synchronized with scripts (e.g., Unix bash).

- Real-time clock. An accurate, battery-equipped real-time clock module.

- Camera. A camera of adequate resolution with an adaptable lens system to achieve focusing and zooming.

- Sensors. A high accuracy humidity and temperature sensor set within (and additionally possibly outside) the enclosure of the trap. Similar remote sensors may also be used to collect ambient readings.

- Power supply. A power supply system based on a battery with adequate capacity to supply the necessary electrical power to the smart-trap for a few days. For the smart-trap to be an autonomous and maintenance-free device, a solar panel and a charger system are to be included and housed in a waterproof box near the trap.

- Networking. Despite the abundance of alternative networking configurations (e.g., star, mesh, ad-hoc, hybrid, etc.), herein we propose the use of a GSM modem that can serve up to 50 smart-traps, which leads to a star topology. The modem should feature external antennas that can be replaced with higher-gain antennas should it be deemed necessary. The GSM modem is to be powered by the same solar panel and battery system that supplies the smart-trap.

- Local data storage. Use of local data storage (e.g., Secure Digital), in addition to the aforementioned operating system, for the temporary storage (and recycling) of collected data ensures that the collected data are not lost in case of communication errors or errors of the server/processing center, at least up to the point of the next recycling. Accordingly, attention should be paid to the expected data volume per sampling of the sensors, as well as to the sampling frequency, in order to select the required retention level.

- Server/processing center. The server/processing center is to be accessed through secure protocols (e.g., SSH) and is to synchronize its data directories with those of the smart-traps at explicitly defined times every day, in order to contain communication costs. To ensure that collected data are not lost should a GSM modem failure prevent them from reaching the server/processing center, data are also to be stored in the smart-trap’s local storage.
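
The sizing and recycling of the local storage described above can be sketched as follows. This is a minimal illustration only and not part of the toolkit: the function names, the 2 MB sample size, the four samples per day, and the 16 GB card are all hypothetical values chosen for the example.

```python
import os
import tempfile


def daily_volume_bytes(bytes_per_sample: int, samples_per_day: int) -> int:
    """Expected data volume produced by one trap per day."""
    return bytes_per_sample * samples_per_day


def retention_days(capacity_bytes: int, bytes_per_sample: int, samples_per_day: int) -> int:
    """Whole days of data that fit in local storage before recycling is needed."""
    return capacity_bytes // daily_volume_bytes(bytes_per_sample, samples_per_day)


def recycle(directory: str, max_bytes: int) -> list:
    """Delete the oldest files until the directory holds at most max_bytes.

    Returns the names of the deleted files, oldest first.
    """
    entries = []
    for name in os.listdir(directory):
        path = os.path.join(directory, name)
        if os.path.isfile(path):
            entries.append((os.path.getmtime(path), name, os.path.getsize(path)))
    entries.sort()  # oldest modification time first
    total = sum(size for _, _, size in entries)
    removed = []
    for _, name, size in entries:
        if total <= max_bytes:
            break
        os.remove(os.path.join(directory, name))
        total -= size
        removed.append(name)
    return removed


if __name__ == "__main__":
    # Hypothetical figures: 2 MB per sample, 4 samples per day, 16 GB card.
    print(retention_days(16_000_000_000, 2_000_000, 4), "days of retention")
```

Under these assumed figures the card would hold 2000 days of samples, so in practice the retention level would more likely be bounded by the recycling policy than by capacity.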

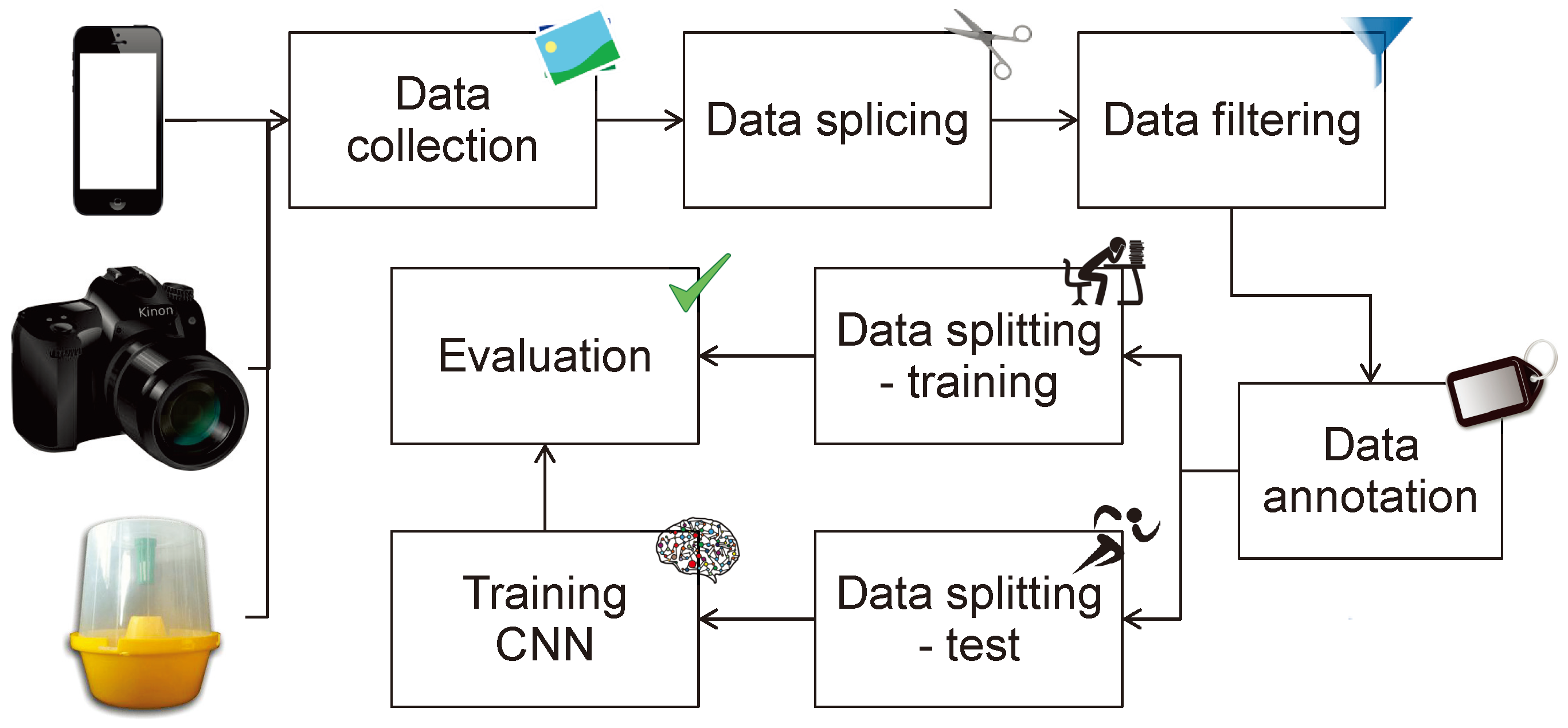

4. The Toolkit

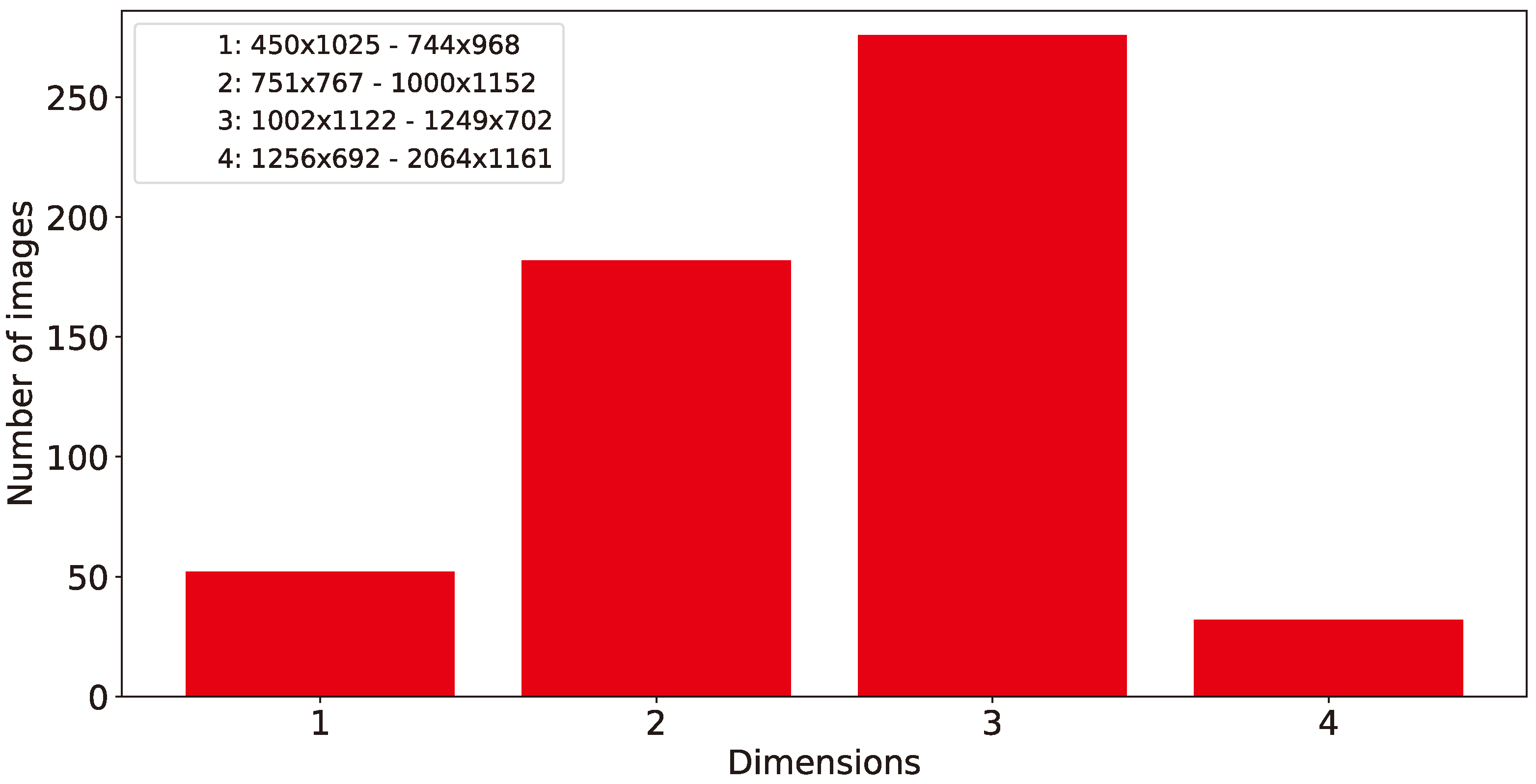

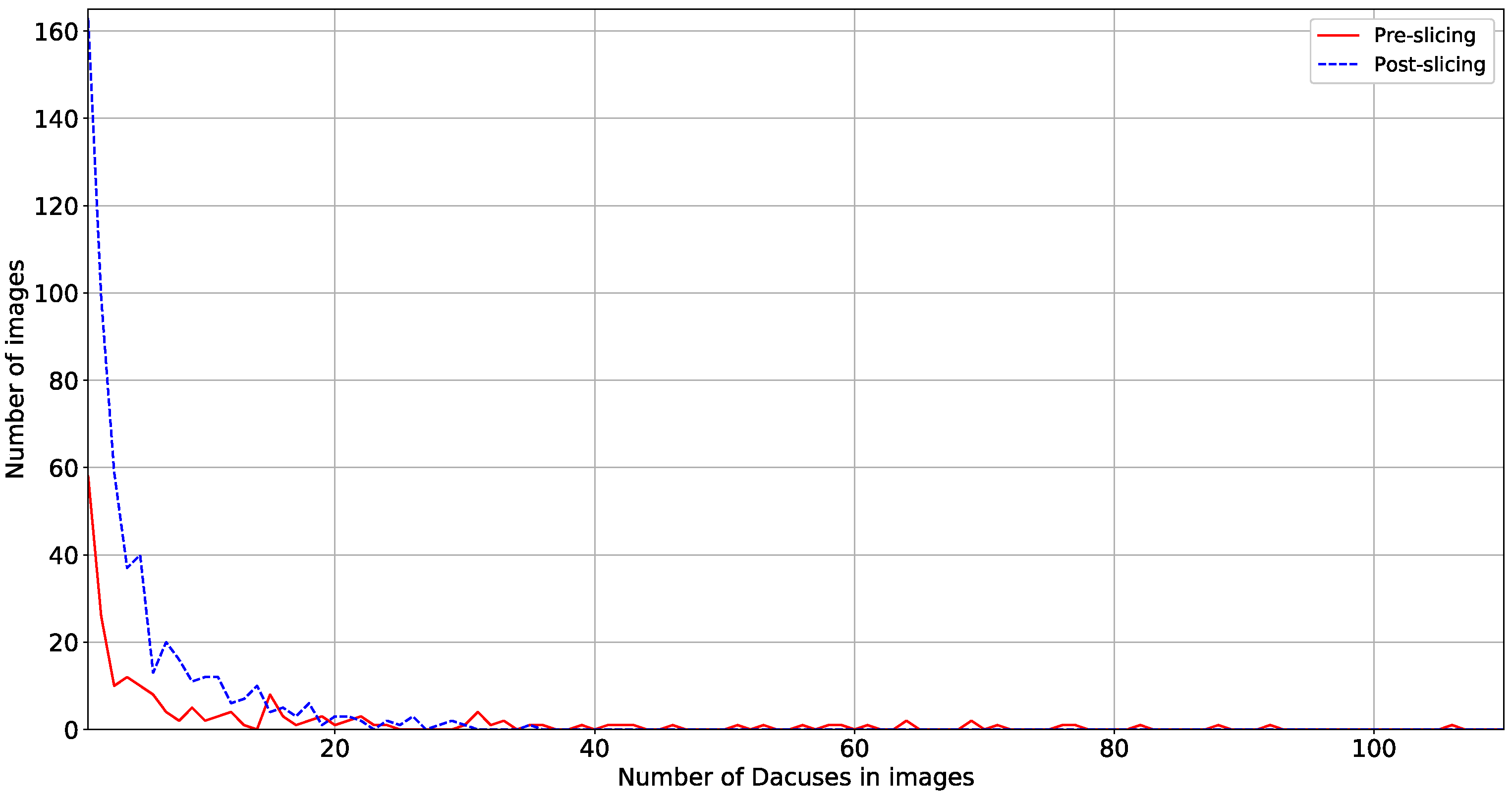

4.1. Dataset

4.2. Programming Code Samples

- Load dataset. Function load_DIRT_dataset parses the files of the dataset as provided in the archive of DIRT and produces a struct array with the associated filenames and folders of both images and (xml) annotations using local relative paths. No input arguments are required, and the function returns the resulting struct array as well as saves it as a file titled DIRT_dataset.mat for future use. The definition of the image and annotation local paths, for content discovery, is clearly noted in lines 5 and 6 of the function.

- Preview image with annotation. Function preview_img_DIRT_dataset shows a random image of the dataset with overlaid annotation(s) of its Dacuses. The first argument is required and refers to the dataset as produced by load_DIRT_dataset function. The second argument is the array id of the image to preview (optional). If no second argument is provided or its value is false, then a random image of the dataset is shown. The third argument is a switch whether to show the image or not: if set to true (default) then it displays the image while if set to false then it does not display the image. The function returns the image including the overlay of the annotation(s).

- Parse annotation. Function parse_DIRT_annotation parses an annotation’s xml file of the DIRT dataset and returns its contents. The input argument is the char array or string containing the full path to the annotation file, including the annotation file’s name and extension. The returned value is a struct containing (a) an aggregated array of size k × 4, where k is the number of Dacuses annotated in the image and the four numbers per annotation describe the lower left x and y coordinates of the annotation’s bounding box and the box’s width and height, as well as (b) an array of the xmin, ymin, xmax, and ymax coordinates of each annotation’s bounding box.

- Driver. Function startFromHere is a driver function for training and testing an R-CNN with the DIRT dataset. No input arguments are required, and the function does not return any information, as the last function called therein, test_r_CNN, presents a graphical comparison of the Dacuses identified by the R-CNN and the manually annotated ground-truth equivalent. The function slices the DIRT_dataset so that the R-CNN is trained with all images but one, selected at random, which is subsequently used by test_r_CNN to test the R-CNN.

- Training the R-CNN. Function train_r_CNN trains an R-CNN object detector for the identification of Dacuses in images. The input argument is the dataset in the format produced by load_DIRT_dataset function, while the output of the function is the trained R-CNN object detector. The network used herein is not based on a pre-trained network but it is simplistically trained from scratch as a demo of the complete process. The resulting network is saved as a file titled rcnn_DIRT_network.mat for future use. The function also includes a switch that allows the training to be completely avoided and a pre-trained network loaded from the file titled rcnn_DIRT_network.mat to be used/returned instead.

- Testing the R-CNN. Function test_r_CNN presents a side-by-side graphical comparison of the Dacuses identified by the R-CNN, based on the trained network, and the manually annotated ground-truth equivalent, as prepared by the preview_img_DIRT_dataset function. The function’s first argument is the trained R-CNN network, as provided by the train_r_CNN function, the second argument is the dataset in the format produced by the load_DIRT_dataset function, and the third argument is the test image’s id from the array of load_DIRT_dataset, as selected by the startFromHere function for the testing procedure.
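
For readers who do not use MATLAB, the annotation parsing and the driver's hold-out split described above can be approximated in Python. The sketch below is not the toolkit's code. It assumes that the xml annotations follow the Pascal VOC layout (object, bndbox, xmin, ymin, xmax, ymax tags), which is an assumption about the files and is not stated in this section. The names parse_annotation and hold_out_one are hypothetical, and the parser takes the xml text rather than a file path to keep the example self-contained.

```python
import random
import xml.etree.ElementTree as ET


def parse_annotation(xml_text: str) -> dict:
    """Parse a Pascal VOC style annotation (tag names are an assumption)."""
    root = ET.fromstring(xml_text)
    boxes = []    # one [x, y, width, height] row per annotated Dacus (k x 4)
    corners = []  # one (xmin, ymin, xmax, ymax) tuple per annotated Dacus
    for obj in root.iter("object"):
        bndbox = obj.find("bndbox")
        xmin, ymin, xmax, ymax = (
            int(float(bndbox.findtext(tag)))
            for tag in ("xmin", "ymin", "xmax", "ymax")
        )
        boxes.append([xmin, ymin, xmax - xmin, ymax - ymin])
        corners.append((xmin, ymin, xmax, ymax))
    return {"boxes": boxes, "corners": corners}


def hold_out_one(n_images: int, rng: random.Random):
    """Pick one random test index; all remaining indices form the training set."""
    test_id = rng.randrange(n_images)
    train_ids = [i for i in range(n_images) if i != test_id]
    return train_ids, test_id


if __name__ == "__main__":
    sample = (
        "<annotation><object><name>dacus</name><bndbox>"
        "<xmin>10</xmin><ymin>20</ymin><xmax>50</xmax><ymax>80</ymax>"
        "</bndbox></object></annotation>"
    )
    print(parse_annotation(sample))
    print(hold_out_one(5, random.Random(0)))
```

Passing an explicit random.Random instance keeps the hold-out selection reproducible, which may be convenient when comparing runs.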

4.3. Dacus Identification API

5. Experimental Evaluation

5.1. Experimental Setup

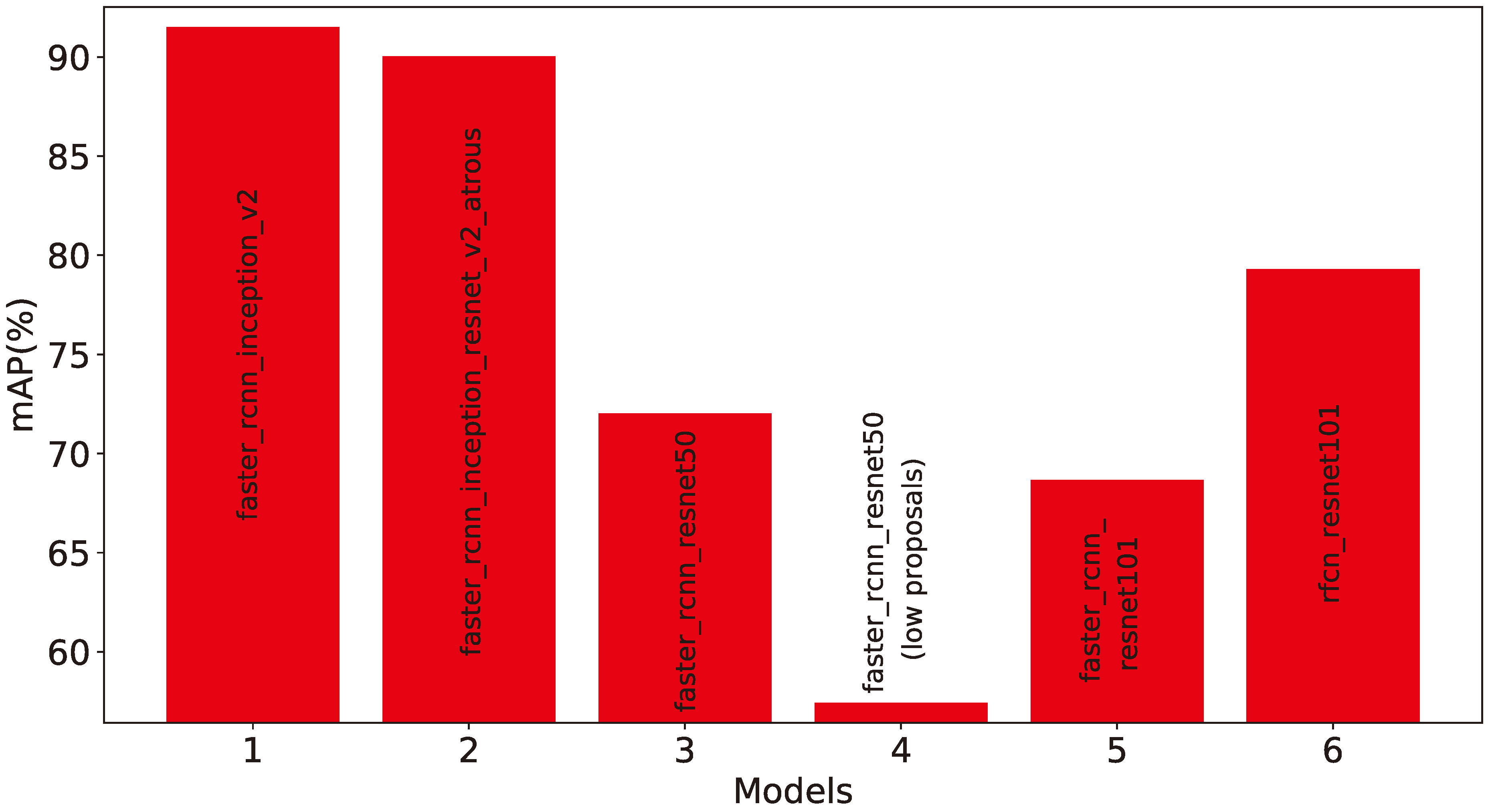

5.2. Experimental Results

- faster_rcnn_inception_v2_coco

- faster_rcnn_resnet50_coco

- faster_rcnn_resnet50_lowproposals_coco

- rfcn_resnet101_coco

- faster_rcnn_resnet101_coco

- faster_rcnn_inception_resnet_v2_atrous_coco

5.3. Results and Discussion

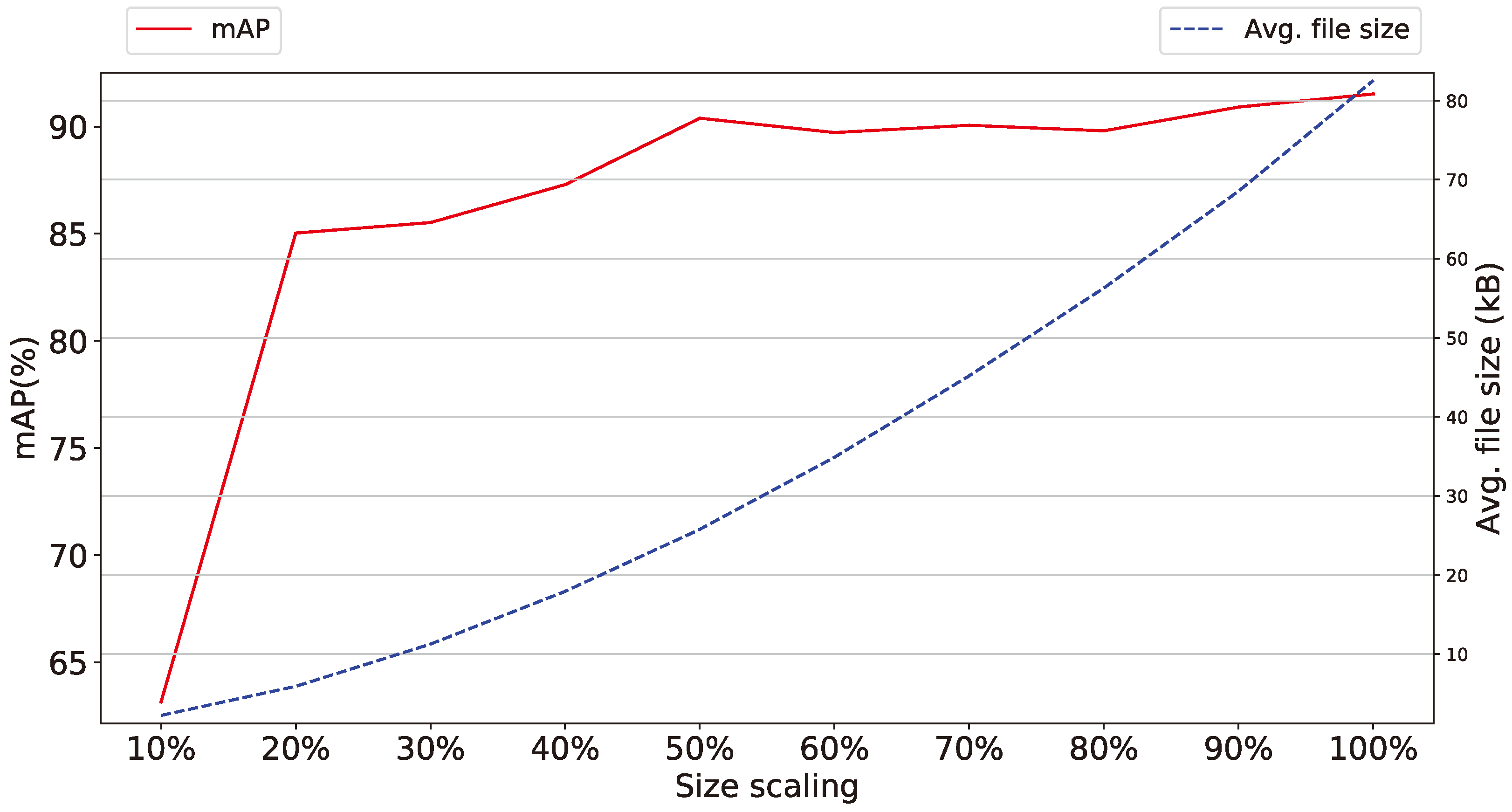

- Size of images. The experimental results on the size of the images taken from the smart-trap, as shown in Figure 9, indicate that the higher detail provided by photos with more pixels does affect the performance of the proposed methodology. However, the performance falls sharply after discarding 80% of the original information, while the difference between discarding 10% and 50% is approx. 1% and thus almost negligible for some applications. Accordingly, although the widespread availability of high-pixel cameras has been shown to increase the effectiveness of the identification of Dacuses, their use becomes a trade-off between marginally higher performance and an increased volume of data that potentially has to be stored locally on limited persistent storage or transported over either metered connections (e.g., GSM modem) or ad-hoc networks, thus affecting the network’s load.

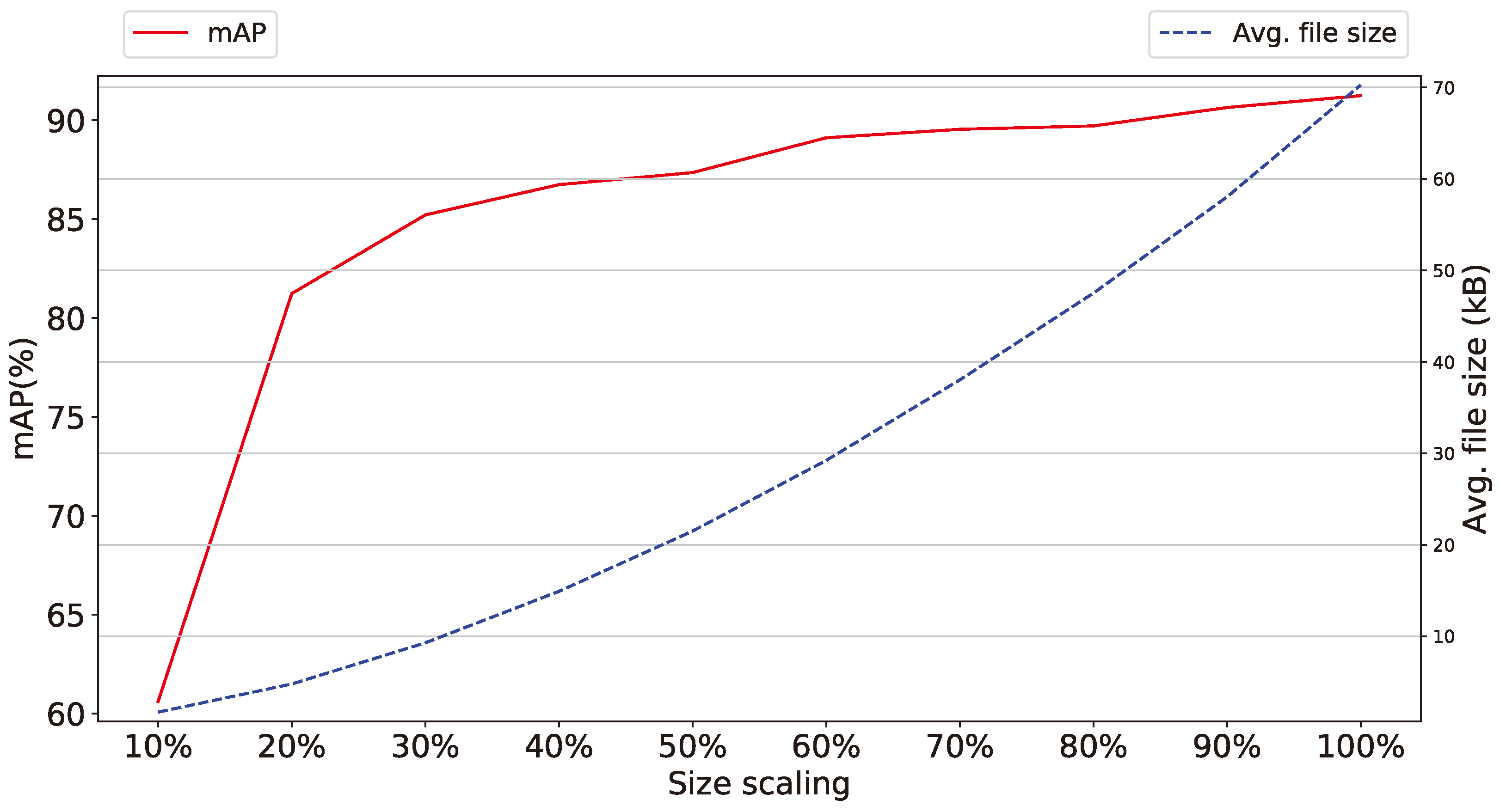

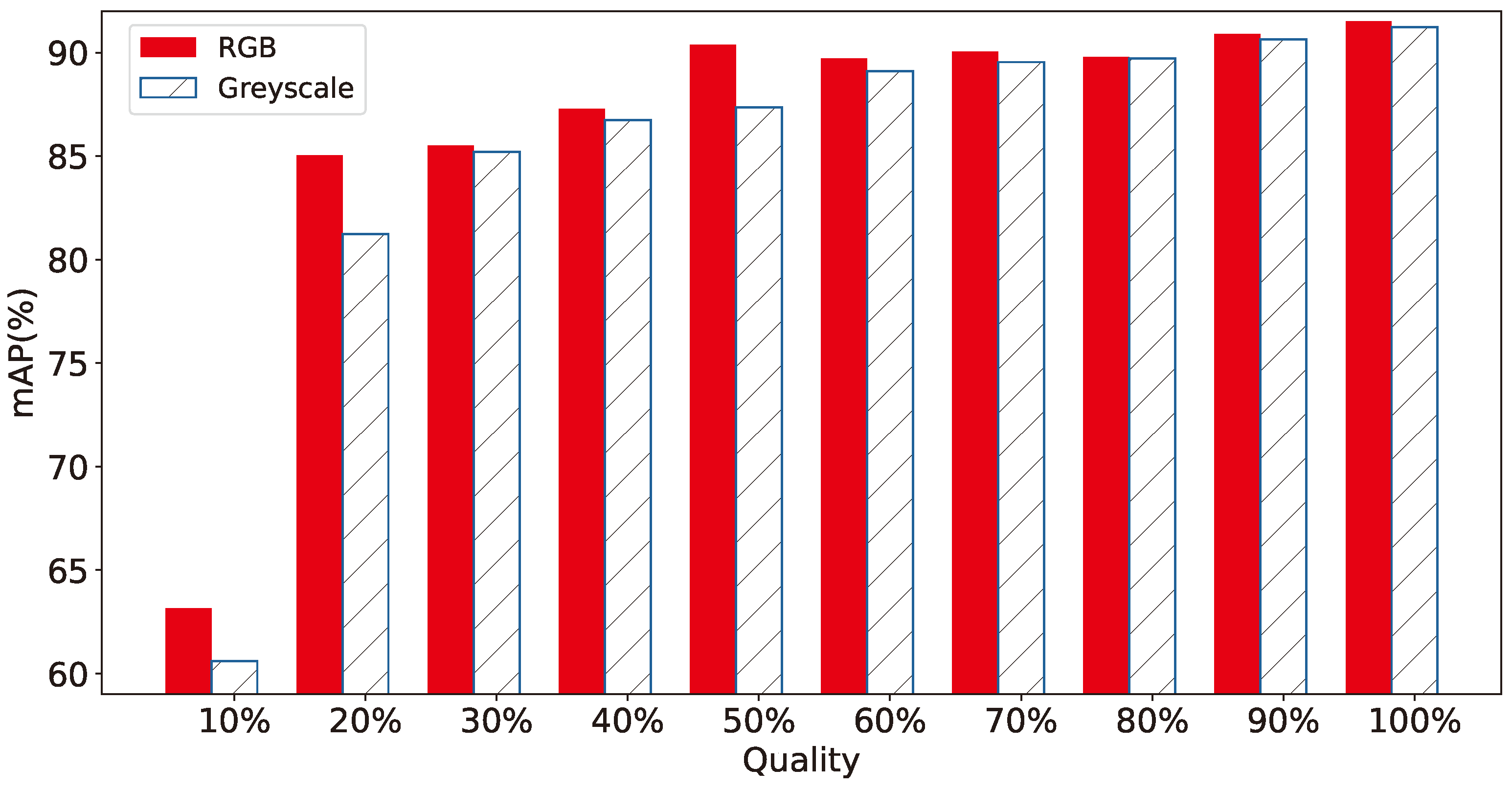

- Color information of images. Similarly to the previous argument, the color information of the images obtained from the smart-trap, as shown in Figure 10 and Figure 11, is shown to be of secondary importance since, both in scaled gray-scale images and in full-scale images after conversion to gray-scale, the effect of RGB color on performance is almost negligible. This further supports the previous argument on the diminished role of high-pixel images, even when these are gray-scale and thus require approx. one third of the data volume of the equivalent RGB images. Thus, the selection of RGB or gray-scale cameras reverts to the aforementioned trade-off between a minor increase in identification performance and the volume of data.

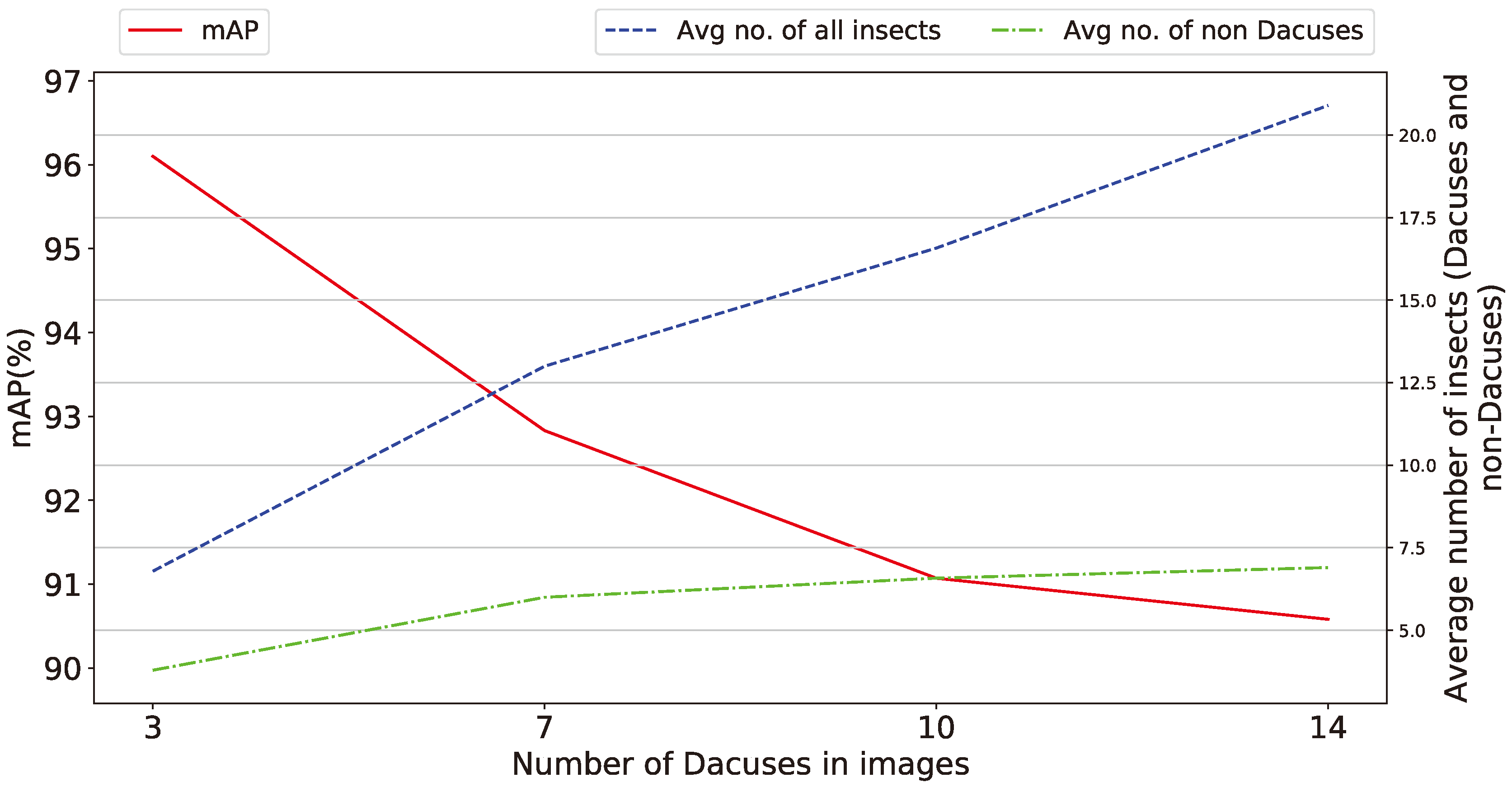

- Number of Dacuses in images. The ability of the proposed method to retain high performance irrespective of the number of Dacuses collected in the trap is very important in order to address a variety of scenarios of trap designs, geo- and weather-characteristics of the olive grove, varieties of olives, etc. The variation shown in Figure 12 is approx. 6.5% and thus requires further examination, even though, for the specific traps used, all counts of Dacuses equal to or greater than seven are similarly considered a significant indication of infestation requiring action. Overall, a general trend is evident for the parameters tested: the number of insects (and by extension Dacuses) inversely affects the detection precision, an effect attributed to the proportionally higher number of insects in the trap when an increased number of Dacuses is measured.
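
The data-volume side of the trade-off discussed above can be made concrete with a small calculation. The sketch below is illustrative only: it assumes uncompressed 8-bit images, and the function name raw_size_bytes and the 2592 × 1944 resolution are hypothetical and not taken from the experiments. Compressed formats such as JPEG would yield different absolute sizes, although the one-third ratio between gray-scale and RGB raw data still holds.

```python
def raw_size_bytes(width: int, height: int, channels: int = 3,
                   keep_fraction: float = 1.0) -> int:
    """Uncompressed size of an 8-bit image after keeping a fraction of its pixels.

    channels is 3 for RGB and 1 for gray-scale; keep_fraction is the share of
    the original pixels retained after down-scaling (1.0 means no scaling).
    """
    if not 0.0 < keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in (0, 1]")
    pixels = round(width * height * keep_fraction)
    return pixels * channels


if __name__ == "__main__":
    # Hypothetical 5-megapixel camera; not the resolution used in the paper.
    width, height = 2592, 1944
    for label, channels, keep in [
        ("RGB, full size", 3, 1.0),
        ("RGB, 50% of pixels kept", 3, 0.5),
        ("gray-scale, full size", 1, 1.0),
    ]:
        print(label, raw_size_bytes(width, height, channels, keep), "bytes")
```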

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Component | Specification |
|---|---|
| CPU | Intel Core i7 920 @ 2.67 GHz |
| GPU | NVIDIA TITAN Xp 11 GB |
| RAM | 16 GB |

| Index | Model | Total Loss | Training Time |
|---|---|---|---|
| 1 | faster_rcnn_inception_v2 | 0.04886 | 5 h 35 m 51 s |
| 2 | faster_rcnn_inception_resnet_v2_atrous | 0.1883 | 23 h 21 m 39 s |
| 3 | faster_rcnn_resnet50 | 0.1029 | 8 h 35 m 53 s |
| 4 | faster_rcnn_resnet50_low_proposals | 0.5773 | 8 h 29 m 54 s |
| 5 | faster_rcnn_resnet101 | 0.1608 | 12 h 57 m 49 s |
| 6 | rfcn_resnet101 | 0.1349 | 13 h 45 m 51 s |
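
To compare the training times in the table above numerically, the durations can be converted to seconds. The following is a small sketch for convenience. The helper name duration_to_seconds is hypothetical, and the dictionary simply copies the values from the table.

```python
import re


def duration_to_seconds(text: str) -> int:
    """Convert a duration such as '5 h 35 m 51 s' to seconds."""
    match = re.fullmatch(r"\s*(\d+)\s*h\s*(\d+)\s*m\s*(\d+)\s*s\s*", text)
    if match is None:
        raise ValueError(f"unrecognised duration: {text!r}")
    hours, minutes, seconds = (int(group) for group in match.groups())
    return hours * 3600 + minutes * 60 + seconds


# Training times copied from the table above.
TRAINING_TIMES = {
    "faster_rcnn_inception_v2": "5 h 35 m 51 s",
    "faster_rcnn_inception_resnet_v2_atrous": "23 h 21 m 39 s",
    "faster_rcnn_resnet50": "8 h 35 m 53 s",
    "faster_rcnn_resnet50_low_proposals": "8 h 29 m 54 s",
    "faster_rcnn_resnet101": "12 h 57 m 49 s",
    "rfcn_resnet101": "13 h 45 m 51 s",
}

seconds = {model: duration_to_seconds(t) for model, t in TRAINING_TIMES.items()}

if __name__ == "__main__":
    # List the models from shortest to longest training time.
    for model in sorted(seconds, key=seconds.get):
        print(f"{model}: {seconds[model]} s")
```

On these figures the model with the lowest total loss, faster_rcnn_inception_v2, is also the one with the shortest training time.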

| Olive Fruit Fly Counts | No. of Training Images | No. of Test Images |
|---|---|---|
| 3 | 483 | 59 |
| 7 | 522 | 20 |
| 10 | 530 | 12 |
| 14 | 532 | 10 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kalamatianos, R.; Karydis, I.; Doukakis, D.; Avlonitis, M. DIRT: The Dacus Image Recognition Toolkit. J. Imaging 2018, 4, 129. https://doi.org/10.3390/jimaging4110129
