Empirical Evaluation of Invariances in Deep Vision Models

Abstract

1. Introduction

- Unified evaluation across tasks—recognition, localization, and segmentation are rarely benchmarked together under identical perturbation protocols.

- Controlled comparison—testing of 30 models under the same invariance transformations, ensuring a fair basis for comparison.

- Empirical confirmation of assumptions—although prior works have suggested such robustness patterns, they were often task-specific or anecdotal. The presented results provide systematic evidence that these assumptions hold across different tasks and perturbations.

2. Historical Review and Related Works

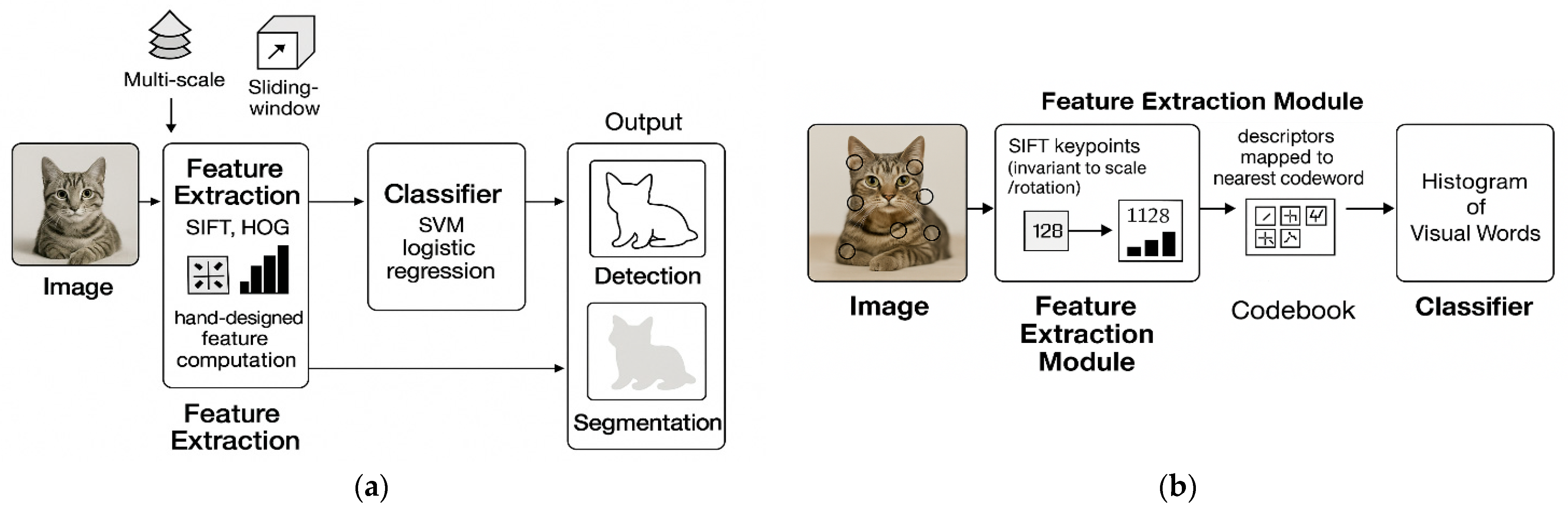

2.1. Historical Review

2.1.1. Historical Approaches to Invariance

2.1.2. The Deep Learning Shift

2.1.3. Emerging Trends in Self-Supervised Learning for Invariance

2.1.4. Domain Invariance as a Path to Robustness

2.2. Related Works

2.2.1. Blur Invariances

2.2.2. Noise Invariances

2.2.3. Rotation Invariances

2.2.4. Scale Invariances

3. Materials and Methods

3.1. Benchmark Datasets

3.1.1. Object Localization Dataset

3.1.2. Object Recognition Dataset

3.1.3. Semantic Segmentation Dataset

3.2. Models’ Selection Criteria

- Documentation and Resource Availability. Priority was given first to models trained on open-source, well-constructed datasets, and second to models with comprehensive documentation, tutorials, and implementation examples, which facilitate integration into our research pipeline. The availability of educational resources for these models supports efficient troubleshooting and optimization.

- Open-Source Availability. All selected models had to be available under open-source licenses, allowing unrestricted academic use and modification. This accessibility is crucial for the reproducibility and extension of our research findings. Open-source models also typically provide pre-trained weights on standard datasets, reducing the computational resources required for implementation. Models with significant adoption within the computer vision community were prioritized. All models were imported from well-known libraries such as PyTorch or Hugging Face.

- Performance-Efficiency Balance. The selected models span a range of architectures that offer different trade-offs between accuracy and computational efficiency. This variety allows us to evaluate which models best suit specific hardware constraints and performance requirements. Many models are released in several variants with different parameter counts, which makes them considerably easier or harder to run. For this research, we chose for each model the variant that the testing hardware could run with the shortest inference time without degrading performance because of too few parameters.

3.3. Generating Degraded Images

3.3.1. Blurred Images

3.3.2. Noised Images

3.3.3. Rotated Images

3.3.4. Scaled Images

3.4. Models’ Performance Evaluation

4. Experimental Setup

5. Results

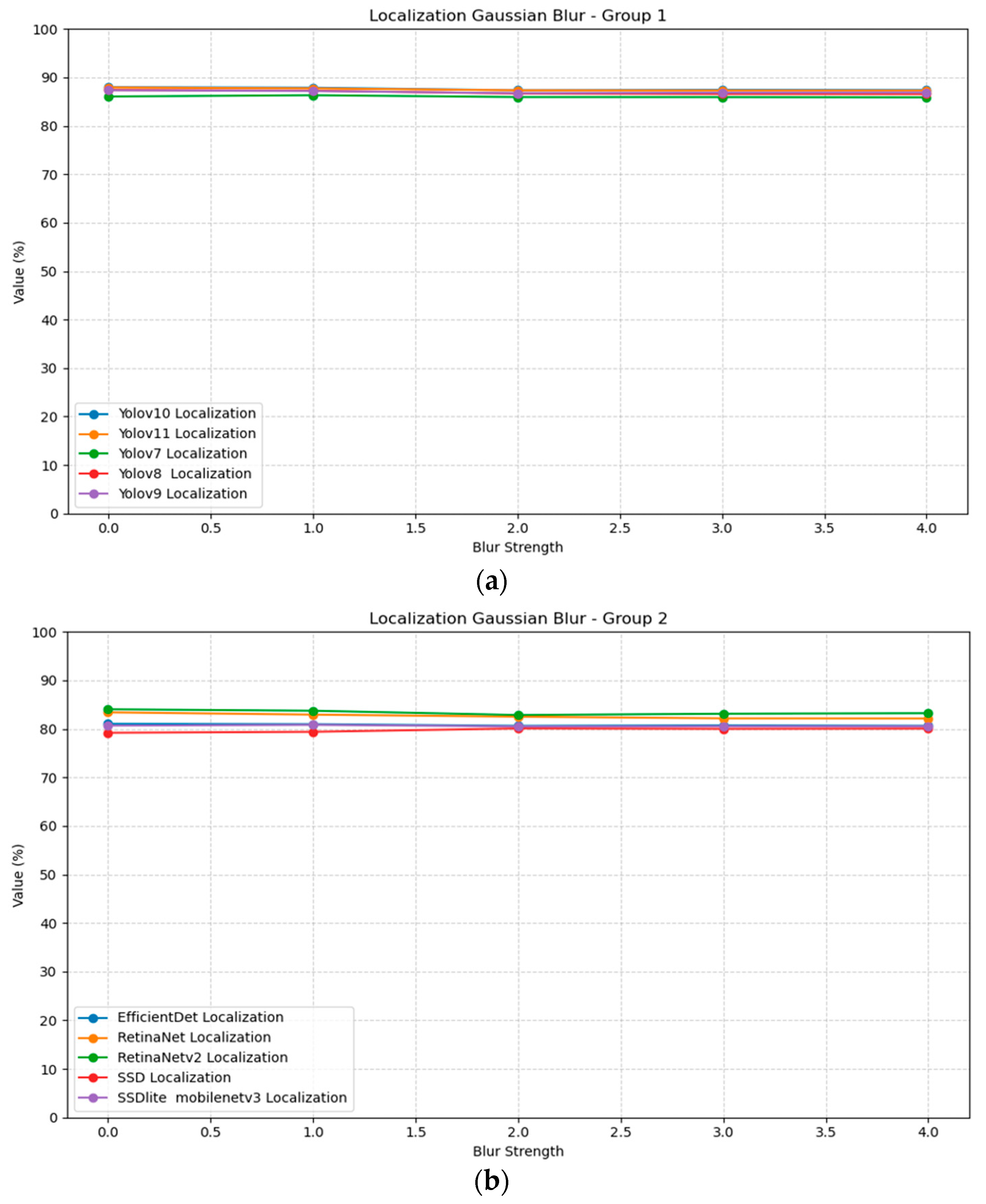

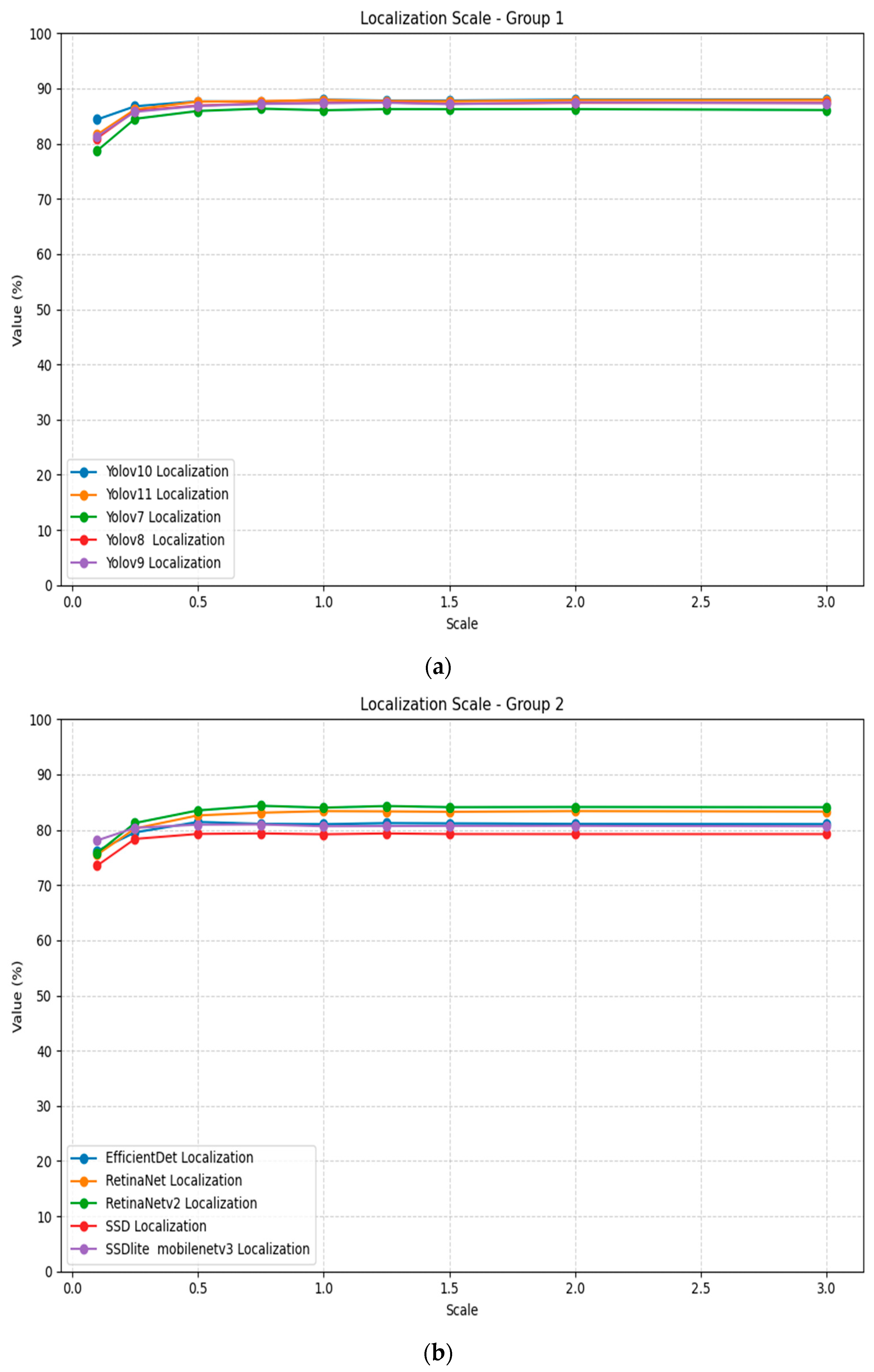

5.1. Object Localization

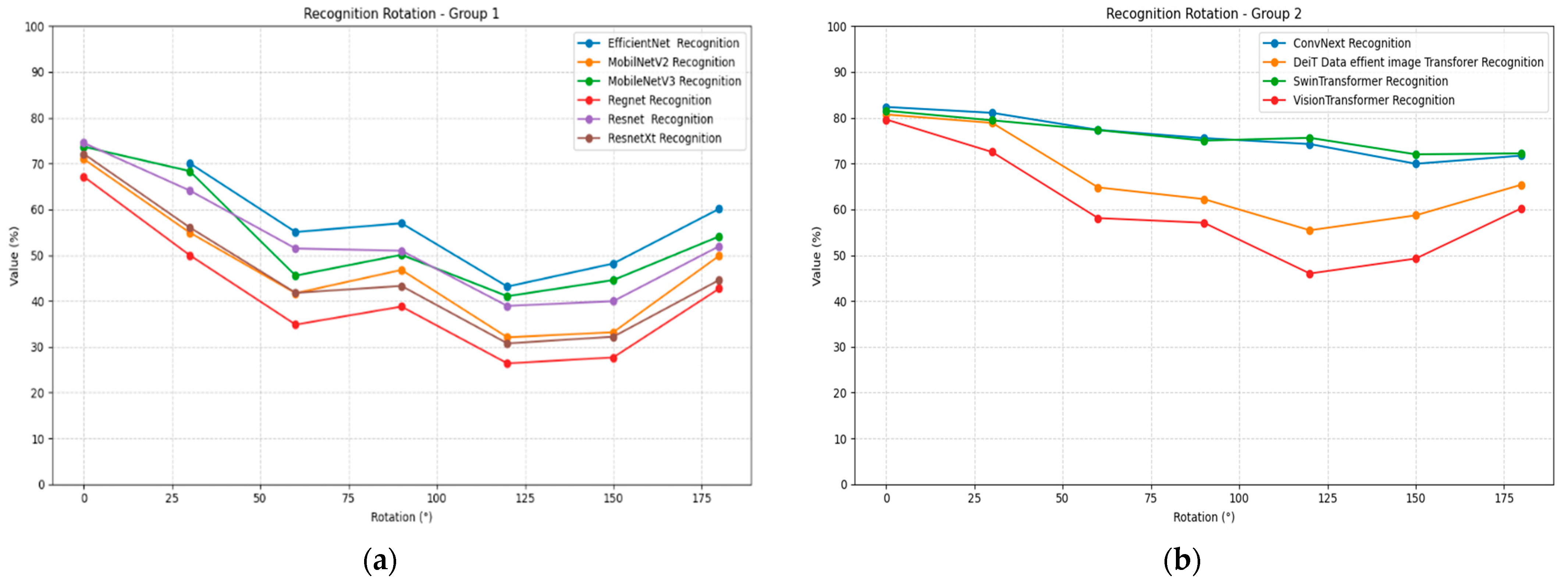

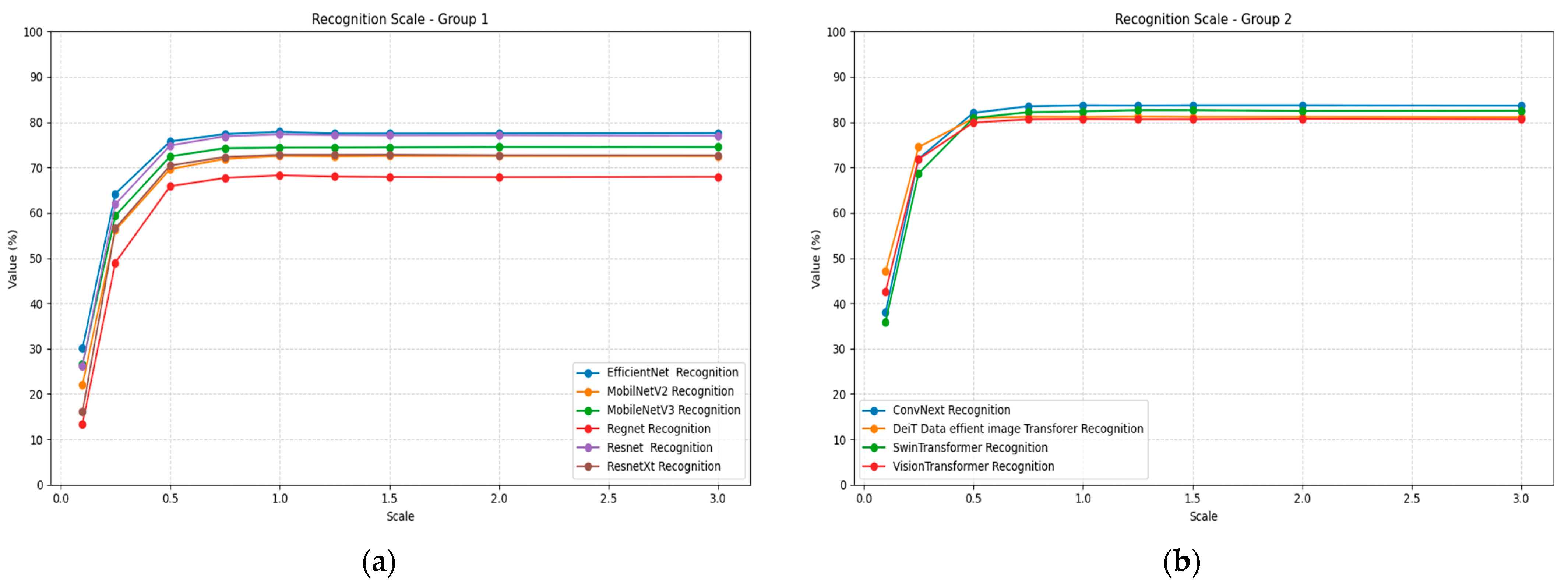

5.2. Object Recognition

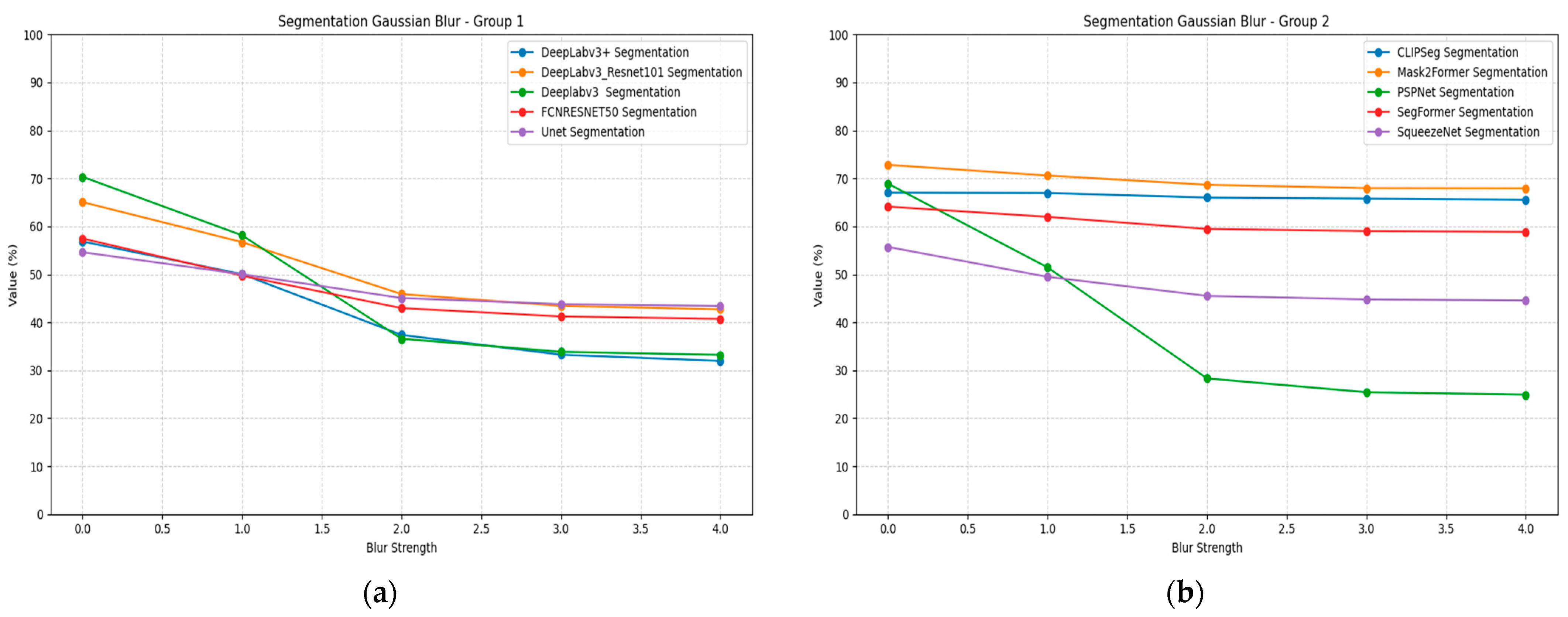

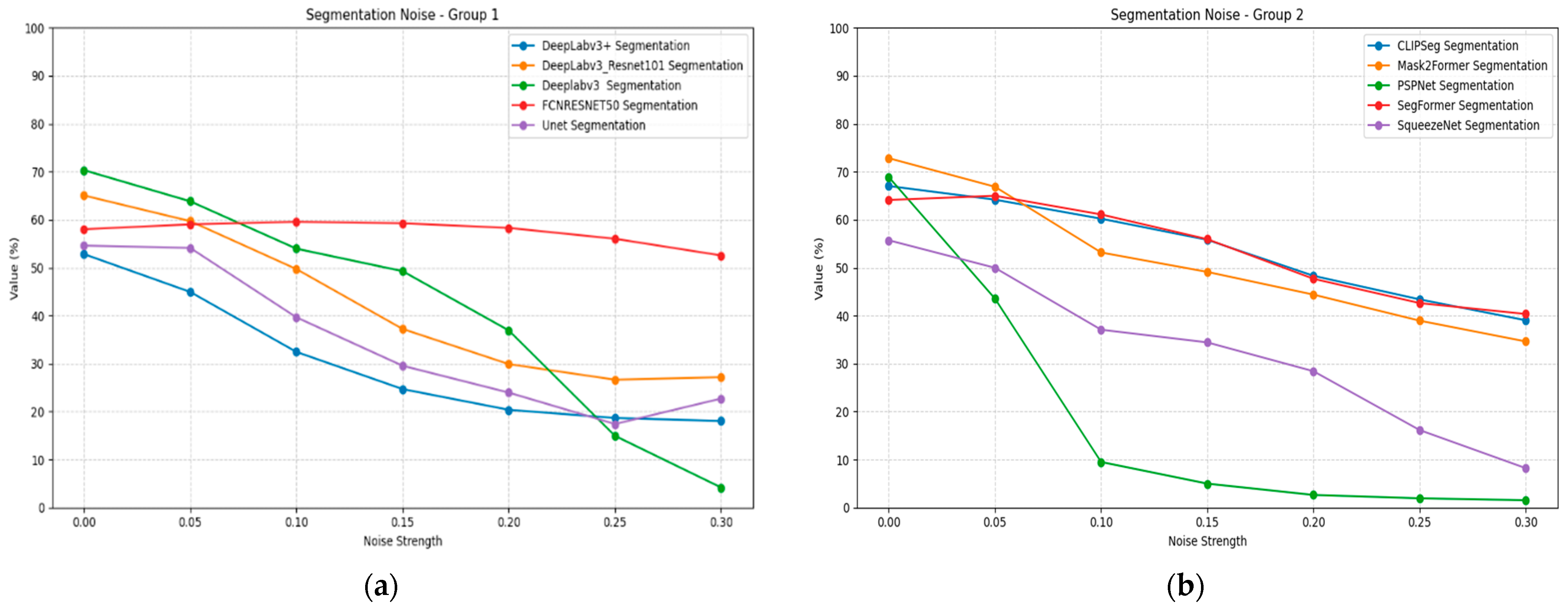

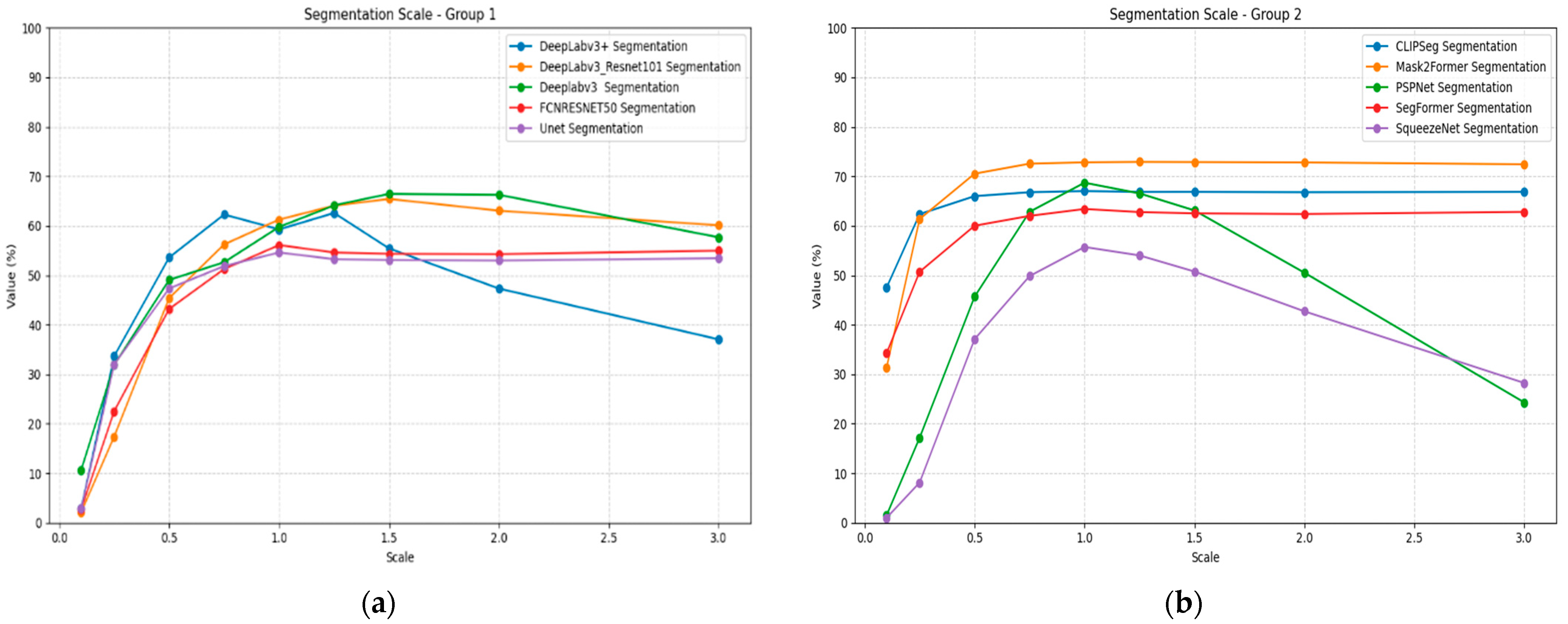

5.3. Semantic Segmentation

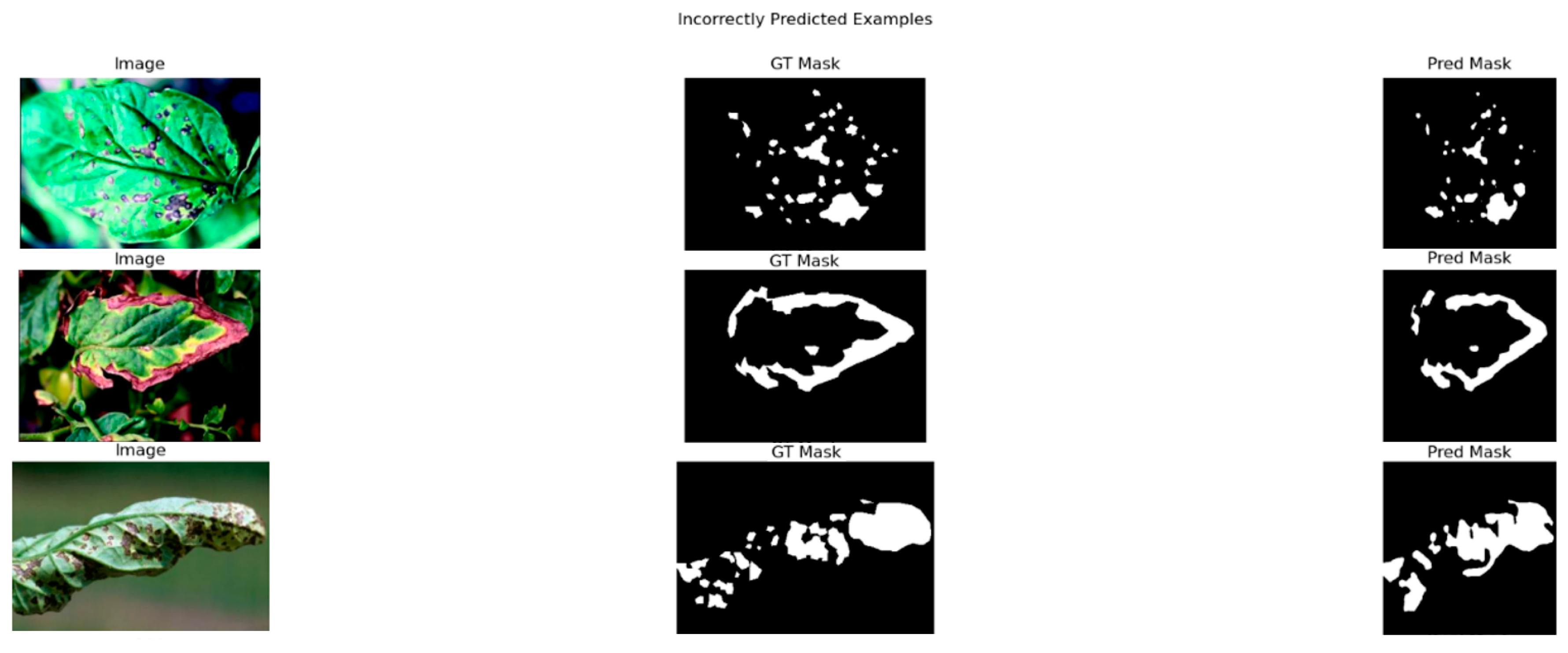

5.4. Case Studies of Misclassifications Across Architectures

6. Discussion

6.1. Observation Points

6.2. Limitations and Future Directions

- Static Transformation Analysis: Real-world invariances often involve combined perturbations, which are absent from our isolated tests.

- Simplicity of Transformations: The applied perturbations (blur, noise, rotation, scale) were simulated independently and represent clean, idealized distortions. In practice, corruptions frequently co-occur (e.g., blur + rotation, scale + occlusion) or affect only part of the image, producing more complex challenges. Evaluating such compounded perturbations remains an important direction for future work. It should be noted, however, that isolating individual transformations allows a more precise evaluation of the models’ sensitivity and robustness to specific types of perturbations. The single transformations in this work are also intended to serve as a clear baseline for a foundational benchmark.

- Dataset Bias: COCO/ImageNet focus limits ecological validity for specialized domains like medical or satellite imaging.

- Black-Box Metrics: Layer-wise invariance analysis could reveal mechanistic insights beyond task performance.

- Given our modest per-class sample size, marginal score differences (e.g., ±0.01 in mAP or mIoU) are not necessarily statistically significant and should not be over-interpreted.

- Limited computational resources: In this work, subsets of the data were used to fine-tune the pre-trained segmentation models, mainly due to limited available resources. Considering recent robustness benchmarks such as ImageNet-C (Hendrycks and Dietterich [41]), future work will aim to increase the size of the datasets used.
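The compounded perturbations mentioned in the list above can be prototyped by chaining simple single-perturbation functions. The following is a minimal sketch in pure Python, not the pipeline used in this study: the function names, the grayscale list-of-rows image representation, and the 3x3 box filter (a crude stand-in for Gaussian blur) are all assumptions made for illustration.

```python
import random


def add_gaussian_noise(img, std, rng):
    """Add zero-mean Gaussian noise to each pixel and clip back to [0, 1]."""
    return [[min(1.0, max(0.0, p + rng.gauss(0.0, std))) for p in row] for row in img]


def rotate90(img, k=1):
    """Rotate clockwise by k * 90 degrees (lossless, no interpolation)."""
    for _ in range(k % 4):
        img = [list(row) for row in zip(*img[::-1])]
    return img


def rescale_nearest(img, factor):
    """Resize by `factor` using nearest-neighbour sampling."""
    h, w = len(img), len(img[0])
    new_h, new_w = max(1, round(h * factor)), max(1, round(w * factor))
    return [
        [img[min(h - 1, int(y / factor))][min(w - 1, int(x / factor))] for x in range(new_w)]
        for y in range(new_h)
    ]


def box_blur(img):
    """3x3 mean filter with edge replication (hypothetical stand-in for Gaussian blur)."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            acc = 0.0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(h - 1, max(0, y + dy))
                    xx = min(w - 1, max(0, x + dx))
                    acc += img[yy][xx]
            row.append(acc / 9.0)
        out.append(row)
    return out


def compose(img, steps):
    """Apply a sequence of perturbation functions in order."""
    for step in steps:
        img = step(img)
    return img


# Example: scale up, blur, rotate by 90 degrees, then add noise.
rng = random.Random(0)
image = [[0.0, 1.0], [0.5, 0.25]]
degraded = compose(
    image,
    [
        lambda im: rescale_nearest(im, 2),
        box_blur,
        lambda im: rotate90(im, 1),
        lambda im: add_gaussian_noise(im, 0.05, rng),
    ],
)
```

Keeping each perturbation as a separate function means the isolated tests used in this work and any compounded variant can share the same building blocks, differing only in the list passed to `compose`.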

7. Conclusions

7.1. Key Empirical Findings

7.2. Task-Specific Invariance Patterns

7.3. Future Research Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CNNs | Convolutional Neural Networks |
| ViTs | Vision Transformers |
| COCO | Common Objects in Context |
| mIoU | Mean Intersection over Union |
| Acc | Accuracy |
| SIFT | Scale-Invariant Feature Transform |
| SVMs | Support Vector Machines |
| ASIFT | Affine-Scale-Invariant Feature Transform |
| SURF | Speeded-Up Robust Features |
| ORB | Oriented FAST and Rotated BRIEF |
| FAST | Features from Accelerated Segment Test |
| BRIEF | Binary Robust Independent Elementary Features |
| HOG | Histogram of Oriented Gradients |
| SPM | Spatial Pyramid Matching |
| DIAL | Domain Invariant Adversarial Learning |
| DAT | Domain-wise Adversarial Training |
| DCT | Discrete Cosine Transform |
| PyramidAT | Pyramid Adversarial Training |
| SP-ViT | Spatial Prior-enhanced Vision Transformers |
| STNs | Spatial Transformer Networks |
| RViT | Rotation Invariant Vision Transformer |
| AMR | Artificial Mental Rotation |
| SPP | Spatial Pyramid Pooling |
| RiT | Rotation Invariance Transformer |
| ILSVRC2012 | ImageNet Large Scale Visual Recognition Challenge 2012 |
| FPNs | Feature Pyramid Networks |

References

- Saremi, S.; Sejnowski, T.J. Hierarchical Model of Natural Images and the Origin of Scale Invariance. Proc. Natl. Acad. Sci. USA 2013, 110, 3071–3076.

- Rodríguez, M.; Delon, J.; Morel, J.-M. Fast Affine Invariant Image Matching. Image Process. Line 2018, 8, 251–281.

- Saha, S.; Gokhale, T. Improving Shift Invariance in Convolutional Neural Networks with Translation Invariant Polyphase Sampling. arXiv 2024, arXiv:2404.07410.

- Kvinge, H.; Emerson, T.H.; Jorgenson, G.; Vasquez, S.; Doster, T.; Lew, J.D. In What Ways Are Deep Neural Networks Invariant and How Should We Measure This? arXiv 2022, arXiv:2210.03773.

- Lee, J.; Yang, J.; Wang, Z. What Does CNN Shift Invariance Look Like? A Visualization Study. In Proceedings of the Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), Online, 23–28 August 2020; pp. 196–210, ISBN 9783030682378.

- Lowe, D.G. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Lee, A. Comparing Deep Neural Networks and Traditional Vision Algorithms in Mobile Robotics. Swart. Univ. 2015, 40, 1–9.

- Bay, H.; Tuytelaars, T.; Van Gool, L. SURF: Speeded Up Robust Features. In Proceedings of the Computer Vision–ECCV 2006, Graz, Austria, 7–13 May 2006; Leonardis, A., Bischof, H., Pinz, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; Volume 3951, pp. 404–417, ISBN 978-3-540-33832-1/978-3-540-33833-8.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An Efficient Alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; IEEE: New York, NY, USA, 2011; pp. 2564–2571.

- Dalal, N.; Triggs, B. Histograms of Oriented Gradients for Human Detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; IEEE: New York, NY, USA, 2005; Volume 1, pp. 886–893.

- Lazebnik, S.; Schmid, C.; Ponce, J. Beyond Bags of Features: Spatial Pyramid Matching for Recognizing Natural Scene Categories. In Proceedings of the 2006 IEEE Computer Society Conference on Computer Vision and Pattern Recognition-Volume 2 (CVPR’06), New York, NY, USA, 17–22 June 2006; IEEE: New York, NY, USA, 2006; Volume 2, pp. 2169–2178.

- Flajolet, P.; Sedgewick, R. Mellin Transforms and Asymptotics: Finite Differences and Rice’s Integrals. Theor. Comput. Sci. 1995, 144, 101–124.

- Chen, B.; Shu, H.; Zhang, H.; Coatrieux, G.; Luo, L.; Coatrieux, J.L. Combined Invariants to Similarity Transformation and to Blur Using Orthogonal Zernike Moments. IEEE Trans. Image Process. 2011, 20, 345–360.

- Huang, Z.; Leng, J. Analysis of Hu’s Moment Invariants on Image Scaling and Rotation. In Proceedings of the 2010 2nd International Conference on Computer Engineering and Technology, Chengdu, China, 16–18 April 2010; IEEE: New York, NY, USA, 2010; pp. V7-476–V7-480.

- Papakostas, G.A.; Boutalis, Y.S.; Karras, D.A.; Mertzios, B.G. Efficient Computation of Zernike and Pseudo-Zernike Moments for Pattern Classification Applications. Pattern Recognit. Image Anal. 2010, 20, 56–64.

- Papakostas, G.A.; Boutalis, Y.S.; Karras, D.A.; Mertzios, B.G. Fast Numerically Stable Computation of Orthogonal Fourier–Mellin Moments. IET Comput. Vis. 2007, 1, 11–16.

- Wang, K.W.K.; Ping, Z.P.Z.; Sheng, Y.S.A.Y. Development of Image Invariant Moments—A Short Overview. Chin. Opt. Lett. 2016, 14, 091001.

- Immer, A.; van der Ouderaa, T.F.A.; Rätsch, G.; Fortuin, V.; van der Wilk, M. Invariance Learning in Deep Neural Networks with Differentiable Laplace Approximations. Adv. Neural Inf. Process. Syst. 2022, 35, 12449–12463.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Commun. ACM 2017, 60, 84–90.

- Deng, W.; Gould, S.; Zheng, L. On the Strong Correlation Between Model Invariance and Generalization. arXiv 2022, arXiv:2207.07065.

- Abdalla, A. A Visual History of Interpretation for Image Recognition. Available online: https://thegradient.pub/a-visual-history-of-interpretation-for-image-recognition/ (accessed on 22 June 2025).

- Chavhan, R.; Gouk, H.; Stuehmer, J.; Heggan, C.; Yaghoobi, M.; Hospedales, T. Amortised Invariance Learning for Contrastive Self-Supervision. arXiv 2023, arXiv:2302.12712.

- Levi, M.; Attias, I.; Kontorovich, A. Domain Invariant Adversarial Learning. arXiv 2022, arXiv:2104.00322.

- Xin, S.; Wang, Y.; Su, J.; Wang, Y. On the Connection between Invariant Learning and Adversarial Training for Out-of-Distribution Generalization. Proc. AAAI Conf. Artif. Intell. 2023, 37, 10519–10527.

- Gu, J.; Tresp, V.; Qin, Y. Are Vision Transformers Robust to Patch Perturbations? In Proceedings of the Computer Vision–ECCV 2022, Tel Aviv, Israel, 23–27 October 2022; pp. 404–421.

- Xu, W.; Hirami, K.; Eguchi, K. Self-Supervised Learning for Neural Topic Models with Variance–Invariance–Covariance Regularization. Knowl. Inf. Syst. 2025, 67, 5057–5075.

- Dvořáček, P. How Image Distortions Affect Inference Accuracy. In Proceedings of the 2024 International Joint Conference on Neural Networks (IJCNN), Yokohama, Japan, 30 June–5 July 2024; IEEE: New York, NY, USA, 2024; pp. 1–7.

- Hossain, M.T.; Teng, S.W.; Sohel, F.; Lu, G. Robust Image Classification Using a Low-Pass Activation Function and DCT Augmentation. IEEE Access 2021, 9, 86460–86474.

- Zhang, R. Making Convolutional Networks Shift-Invariant Again. arXiv 2019, arXiv:1904.11486.

- Hendrycks, D.; Mu, N.; Cubuk, E.D.; Zoph, B.; Gilmer, J.; Lakshminarayanan, B. AugMix: A Simple Data Processing Method to Improve Robustness and Uncertainty. arXiv 2020, arXiv:1912.02781.

- Calian, D.A.; Stimberg, F.; Wiles, O.; Rebuffi, S.-A.; Gyorgy, A.; Mann, T.; Gowal, S. Defending Against Image Corruptions Through Adversarial Augmentations. arXiv 2021, arXiv:2104.01086.

- Xie, Q.; Luong, M.-T.; Hovy, E.; Le, Q.V. Self-Training With Noisy Student Improves ImageNet Classification. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; IEEE: New York, NY, USA, 2020; pp. 10684–10695.

- Usama, M.; Asim, S.A.; Ali, S.B.; Wasim, S.T.; Mansoor, U. Bin Analysing the Robustness of Vision-Language-Models to Common Corruptions. arXiv 2025, arXiv:2504.13690.

- Herrmann, C.; Sargent, K.; Jiang, L.; Zabih, R.; Chang, H.; Liu, C.; Krishnan, D.; Sun, D. Pyramid Adversarial Training Improves ViT Performance. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), New Orleans, LA, USA, 18–24 June 2022; IEEE: New York, NY, USA, 2022; pp. 13409–13419.

- Zhou, Y.; Xiang, W.; Li, C.; Wang, B.; Wei, X.; Zhang, L.; Keuper, M.; Hua, X. SP-ViT: Learning 2D Spatial Priors for Vision Transformers. arXiv 2022, arXiv:2206.07662.

- Zhang, C.; Zhang, C.; Zheng, S.; Zhang, M.; Qamar, M.; Bae, S.-H.; Kweon, I.S. A Survey on Audio Diffusion Models: Text To Speech Synthesis and Enhancement in Generative AI. arXiv 2023, arXiv:2303.13336.

- Zheng, S.; Song, Y.; Leung, T.; Goodfellow, I. Improving the Robustness of Deep Neural Networks via Stability Training. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: New York, NY, USA, 2016; pp. 4480–4488.

- Xie, C.; Wu, Y.; Van Der Maaten, L.; Yuille, A.L.; He, K. Feature Denoising for Improving Adversarial Robustness. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; IEEE: New York, NY, USA, 2019; pp. 501–509.

- Cohen, J.M.; Rosenfeld, E.; Kolter, J.Z. Certified Adversarial Robustness via Randomized Smoothing. arXiv 2019, arXiv:1902.02918.

- Paul, S.; Chen, P.-Y. Vision Transformers Are Robust Learners. Proc. AAAI Conf. Artif. Intell. 2022, 36, 2071–2081.

- Hendrycks, D.; Dietterich, T. Benchmarking Neural Network Robustness to Common Corruptions and Perturbations. arXiv 2019, arXiv:1903.12261.

- Roy, S.; Marathe, A.; Walambe, R.; Kotecha, K. Self Supervised Learning for Classifying the Rotated Images. In Communications in Computer and Information Science; Springer Nature: Cham, Switzerland, 2023; pp. 17–24. ISBN 9783031356438.

- Jaderberg, M.; Simonyan, K.; Zisserman, A.; Kavukcuoglu, K. Spatial Transformer Networks. arXiv 2016, arXiv:1506.02025.

- Cohen, T.S.; Welling, M. Group Equivariant Convolutional Networks. In Proceedings of the 33rd International Conference on Machine Learning, ICML, New York, NY, USA, 20–22 June 2016.

- Worrall, D.E.; Garbin, S.J.; Turmukhambetov, D.; Brostow, G.J. Harmonic Networks: Deep Translation and Rotation Equivariance. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; IEEE: New York, NY, USA, 2017; pp. 7168–7177.

- Zhang, P.; Tang, J.; Zhong, H.; Ning, M.; Fan, Y. Pre-Rotation Only at Inference-Time: A Way to Rotation Invariance. In Proceedings of the Fourteenth International Conference on Digital Image Processing (ICDIP 2022), Wuhan, China, 20–23 May 2022; Xie, Y., Jiang, X., Tao, W., Zeng, D., Eds.; SPIE: Washington, DC, USA, 2022; p. 119.

- Krishnan, P.T.; Krishnadoss, P.; Khandelwal, M.; Gupta, D.; Nihaal, A.; Kumar, T.S. Enhancing Brain Tumor Detection in MRI with a Rotation Invariant Vision Transformer. Front. Neuroinform. 2024, 18, 1414925.

- Sun, X.; Wang, C.; Wang, Y.; Wei, J.; Sun, Z. IrisFormer: A Dedicated Transformer Framework for Iris Recognition. IEEE Signal Process. Lett. 2025, 32, 431–435.

- Tuggener, L.; Stadelmann, T.; Schmidhuber, J. Efficient Rotation Invariance in Deep Neural Networks through Artificial Mental Rotation. arXiv 2023, arXiv:2311.08525.

- Chen, S.; Ye, M.; Du, B. Rotation Invariant Transformer for Recognizing Object in UAVs. In Proceedings of the 30th ACM International Conference on Multimedia, Lisboa, Portugal, 10–14 October 2022; ACM: New York, NY, USA, 2022; pp. 2565–2574.

- Graziani, M.; Lompech, T.; Müller, H.; Depeursinge, A.; Andrearczyk, V. On the Scale Invariance in State of the Art CNNs Trained on ImageNet. Mach. Learn. Knowl. Extr. 2021, 3, 374–391.

- Jansson, Y.; Lindeberg, T. Exploring the Ability of CNNs to Generalise to Previously Unseen Scales over Wide Scale Ranges. In Proceedings of the 2020 25th International Conference on Pattern Recognition (ICPR), Milan, Italy, 10–15 January 2021; IEEE: New York, NY, USA, 2021; pp. 1181–1188.

- Xie, W.; Liu, T. MFP-CNN: Multi-Scale Fusion and Pooling Network for Accurate Scene Classification. In Proceedings of the 2024 2nd International Conference on Computer, Vision and Intelligent Technology (ICCVIT), Huaibei, China, 24–27 November 2024; IEEE: New York, NY, USA, 2024; pp. 1–8.

- Chang, J.-R.; Chen, Y.-S. Pyramid Stereo Matching Network. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; IEEE: New York, NY, USA, 2018; pp. 5410–5418.

- Kumar, D.; Sharma, D. Feature Map Upscaling to Improve Scale Invariance in Convolutional Neural Networks. In Proceedings of the 16th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Virtual Event, 8–10 February 2021; SCITEPRESS-Science and Technology Publications: Setúbal, Portugal, 2021; pp. 113–122.

- Wei, X.-S.; Gao, B.-B.; Wu, J. Deep Spatial Pyramid Ensemble for Cultural Event Recognition. In Proceedings of the 2015 IEEE International Conference on Computer Vision Workshop (ICCVW), Santiago, Chile, 7–13 December 2015; IEEE: New York, NY, USA, 2015; pp. 280–286.

- Kumar, D.; Sharma, D. Feature Map Augmentation to Improve Scale Invariance in Convolutional Neural Networks. J. Artif. Intell. Soft Comput. Res. 2023, 13, 51–74.

- Xu, Y.; Zhang, Q.; Zhang, J.; Tao, D. ViTAE: Vision Transformer Advanced by Exploring Intrinsic Inductive Bias. arXiv 2021, arXiv:2106.03348.

- Ge, J.; Wang, Q.; Tong, J.; Gao, G. RPViT: Vision Transformer Based on Region Proposal. In Proceedings of the 2022 the 5th International Conference on Image and Graphics Processing (ICIGP), Beijing, China, 7–9 January 2022; ACM: New York, NY, USA, 2022; pp. 220–225.

- Qian, Z. ECViT: Efficient Convolutional Vision Transformer with Local-Attention and Multi-Scale Stages. arXiv 2025, arXiv:2504.14825.

- Yu, A.; Niu, Z.-H.; Xie, J.-X.; Zhang, Q.-L.; Yang, Y.-B. EViTIB: Efficient Vision Transformer via Inductive Bias Exploration for Image Super-Resolution. In Proceedings of the 2024 International Joint Conference on Neural Networks (IJCNN), Yokohama, Japan, 30 June–5 July 2024; IEEE: New York, NY, USA, 2024; pp. 1–7.

- Singh, B.; Davis, L.S. An Analysis of Scale Invariance in Object Detection-SNIP. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; IEEE: New York, NY, USA, 2018; pp. 3578–3587.

- Lee, Y.; Lama, B.; Joo, S.; Kwon, J. Enhancing Human Key Point Identification: A Comparative Study of the High-Resolution VICON Dataset and COCO Dataset Using BPNET. Appl. Sci. 2024, 14, 4351.

- Iglovikov, V.; Shvets, A. TernausNet: U-Net with VGG11 Encoder Pre-Trained on ImageNet for Image Segmentation. arXiv 2018, arXiv:1801.05746.

- Sharma, P.; Ding, N.; Goodman, S.; Soricut, R. Conceptual Captions: A Cleaned, Hypernymed, Image Alt-Text Dataset For Automatic Image Captioning. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Melbourne, Australia, 15–20 July 2018; Association for Computational Linguistics: Stroudsburg, PA, USA, 2018; pp. 2556–2565.

- Fakhre, A. Leaf Disease Segmentation Dataset. Available online: https://www.kaggle.com/datasets/fakhrealam9537/leaf-disease-segmentation-dataset (accessed on 22 June 2025).

- Goyal, M.; Yap, M.; Hassanpour, S. Multi-Class Semantic Segmentation of Skin Lesions via Fully Convolutional Networks. In Proceedings of the 13th International Joint Conference on Biomedical Engineering Systems and Technologies, Valletta, Malta, 24–26 February 2020; SCITEPRESS-Science and Technology Publications: Setúbal, Portugal, 2020; pp. 290–295.

- Tian, J.; Jin, Q.; Wang, Y.; Yang, J.; Zhang, S.; Sun, D. Performance Analysis of Deep Learning-Based Object Detection Algorithms on COCO Benchmark: A Comparative Study. J. Eng. Appl. Sci. 2024, 71, 76.

- Danişman, T. Bagging Ensemble for Deep Learning Based Gender Recognition Using Test-Time Augmentation on Large-Scale Datasets. Turk. J. Electr. Eng. Comput. Sci. 2021, 29, 2084–2100.

- Tong, Z.; Chen, Y.; Xu, Z.; Yu, R. Wise-IoU: Bounding Box Regression Loss with Dynamic Focusing Mechanism. arXiv 2023, arXiv:2301.10051.

- Li, R.; Chen, R.; Yue, J.; Liu, L.; Jia, Z. Segmentation and Quantitative Evaluation of Tertiary Lymphoid Structures in Hepatocellular Carcinoma Based on Deep Learning. In Proceedings of the 2024 2nd International Conference on Algorithm, Image Processing and Machine Vision (AIPMV), Zhenjiang, China, 12–14 July 2024; IEEE: New York, NY, USA, 2024; pp. 31–35.

- Shyamala Devi, M.; Eswar, R.; Hibban, R.M.D.; Jai, H.H.; Hari, P.; Hemanth, S. Encrypt Decrypt ReLU Activated UNet Prototype Based Prediction of Leaf Disease Segmentation. In Proceedings of the 2024 International Conference on Signal Processing, Computation, Electronics, Power and Telecommunication (IConSCEPT), Karaikal, India, 4–5 July 2024; IEEE: New York, NY, USA, 2024; pp. 1–6.

- Br, P.; Av, S.H.; Ashok, A. Diseased Leaf Segmentation from Complex Background Using Indices Based Histogram. In Proceedings of the 2021 6th International Conference on Communication and Electronics Systems (ICCES), Coimbatore, India, 8–10 July 2021; IEEE: New York, NY, USA, 2021; pp. 1502–1507.

- Azulay, A.; Weiss, Y. Why Do Deep Convolutional Networks Generalize so Poorly to Small Image Transformations? J. Mach. Learn. Res. 2019, 20, 1–25.

- Cui, Y.; Zhang, C.; Qiao, K.; Wang, L.; Yan, B.; Tong, L. Study on Representation Invariances of CNNs and Human Visual Information Processing Based on Data Augmentation. Brain Sci. 2020, 10, 602.

- Wang, Z.; Bai, Y.; Zhou, Y.; Xie, C. Can CNNs Be More Robust Than Transformers? arXiv 2023, arXiv:2206.03452.

- Pinto, F.; Torr, P.H.S.; Dokania, P.K. An Impartial Take to the CNN vs Transformer Robustness Contest. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Springer: Berlin/Heidelberg, Germany, 2022; pp. 466–480. ISBN 9783031197772.

- Mumuni, A.; Mumuni, F. CNN Architectures for Geometric Transformation-Invariant Feature Representation in Computer Vision: A Review. SN Comput. Sci. 2021, 2, 340.

- Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of Deep Learning: Concepts, CNN Architectures, Challenges, Applications, Future Directions. J. Big Data 2021, 8, 53.

- Zhang, F.X.; Deng, J.; Lieck, R.; Shum, H.P.H. Adaptive Graph Learning from Spatial Information for Surgical Workflow Anticipation. arXiv 2024, arXiv:2412.06454.

- Ge, H.; Sun, P.; Lu, Y. A New Dataset, Model, and Benchmark for Lightweight and Real-Time Underwater Object Detection. Neurocomputing 2025, 651, 130891.

| Model | Short Name | Description | Date Introduced | Architecture | Dataset |
|---|---|---|---|---|---|
| EfficientDet-D0 | EfficientDet | Lightweight detector using EfficientNet backbone and BiFPN | 2019 | CNN | COCO 2017 |
| ResNet-50 backbone + FPN + RetinaNet head (Focal Loss) | RetinaNet | Single-stage detector with a ResNet-50-FPN backbone and Focal Loss to address class imbalance | 2017 | CNN | COCO 2017 |
| ResNet-50 backbone + FPN + RetinaNet head (ATSS) with ResnetV2 | RetinaNetv2 | Improved RetinaNet with a ResNet-50-FPN backbone incorporating Adaptive Training Sample Selection (ATSS) | 2019 | CNN | COCO 2017 |
| SSD300 VGG16 | SSD | Single Shot MultiBox Detector with VGG16 backbone, fast and lightweight | 2016 | CNN | COCO 2017 |
| MobileNetV3-Large backbone + SSD-Lite head | SSDlite | Single-Shot Detector Lite with a MobileNetV3-Large backbone for fast, efficient object detection | 2019 | CNN | COCO 2017 |
| YOLOv10 | Yolov10 | Latest iteration with focus on real-time performance and transformer enhancements | 2024 | CNN | COCO 2017 |
| YOLOv11 | Yolov11 | Introduces advanced feature fusion and sparse attention mechanisms | 2024 | CNN | COCO 2017 |
| YOLOv7 | Yolov7 | Improved accuracy and speed over v5, optimized for diverse tasks, including pose estimation | 2022 | CNN | COCO 2017 |
| YOLOv8 | Yolov8 | Modular and extensible, supports classification, segmentation, and detection | 2023 | CNN | COCO 2017 |
| YOLOv9 | Yolov9 | Introduces faster training and inference with hardware-aware optimization | 2024 | CNN | COCO 2017 |

| Model | Short Name | Description | Date Introduced | Architecture | Dataset |
|---|---|---|---|---|---|
| EfficientNet-B0 | EfficientNet | Compound-scaled CNN with great efficiency and accuracy trade-off | 2019 | CNN | ILSVRC2012 |
| MobileNetV2 | MobilNetV2 | Lightweight CNN optimized for mobile inference | 2018 | CNN | ILSVRC2012 |
| MobileNetV3-Large | MobileNetV3 | Optimized version of MobileNetV2 using NAS and SE blocks | 2019 | CNN | ILSVRC2012 |
| RegNetY-400MF | Regnet | Regularized network design with performance/efficiency trade-off | 2020 | CNN | ILSVRC2012 |
| ResNet-50 | Resnet | 50-layer deep CNN with skip connections, strong baseline | 2015 | CNN | ILSVRC2012 |
| ResNeXt-50 (32x4d) | ResnetXt | Improved ResNet variant with grouped convolutions | 2017 | CNN | ILSVRC2012 |
| ConvNeXt-Base | ConvNext | CNN redesigned with transformer-like properties for SOTA performance | 2022 | CNN | ILSVRC2012 |
| DeiT-Base Patch16/224 | DeiT-Base | Data-efficient vision transformer trained without large datasets | 2021 | Transformer | ILSVRC2012 |
| Swin Transformer Base | SwinTransformer | Shifted window transformer with hierarchical vision transformer design | 2021 | Transformer | ILSVRC2012 |
| Vision Transformer (ViT-B/16) | VisionTransformer | Vision Transformer with 16x16 patch embedding, no CNNs | 2020 | Transformer | ILSVRC2012 |

| Model | Short Name | Description | Date Introduced | Architecture | Dataset |
|---|---|---|---|---|---|
| DeepLabV3+ (ResNet-101) | DeepLabv3+ | Dilated convolution with encoder-decoder refinement using ResNet-101 | 2018 | CNN | Custom Leaf Dataset |
| DeepLabV3 (ResNet-101) | DeepLabv3_Resnet101 | Atrous spatial pyramid pooling with ResNet-101 backbone | 2017 | CNN | Custom Leaf Dataset |
| DeepLabV3 (ResNet-50) | Deeplabv3 | Smaller version of DeepLabV3 using ResNet-50 as encoder | 2017 | CNN | Custom Leaf Dataset |
| FCN (ResNet-50) | FCN | Fully convolutional network using ResNet-50 as backbone | 2015 | CNN | Custom Leaf Dataset |
| UNet (ResNet-34) | Unet | UNet encoder-decoder architecture with ResNet-34 as backbone | 2015 | CNN | Custom Leaf Dataset |
| CLIPSeg | CLIPSeg | CLIP-based model for text-prompted image segmentation | 2022 | Transformer | Custom Leaf Dataset |
| Mask2Former | Mask2Former | Universal transformer-based model for semantic, instance, and panoptic segmentation | 2022 | Transformer | Custom Leaf Dataset |
| PSPNet (ResNet-50) | PSPNet | Pyramid Scene Parsing Network with ResNet-50 encoder backbone | 2017 | CNN | Custom Leaf Dataset |
| SegFormer | SegFormer | Transformer-based semantic segmentation model, efficient and accurate | 2021 | Transformer | Custom Leaf Dataset |
| SqueezeNet 1.1 | SqueezeNet | Tiny CNN for classification, sometimes used in lightweight segmentation | 2016 | CNN | Custom Leaf Dataset |

Gaussian Blur of Different Strengths (Sigma)

| Model | Sigma = 0 | Sigma = 1 | Sigma = 2 | Sigma = 3 | Sigma = 4 |
|---|---|---|---|---|---|
| EfficientDet | 0.81 | 0.81 | 0.81 | 0.81 | 0.81 |
| RetinaNet | 0.83 | 0.83 | 0.83 | 0.82 | 0.82 |
| RetinaNetv2 | 0.84 | 0.84 | 0.83 | 0.83 | 0.83 |
| SSD | 0.80 | 0.79 | 0.80 | 0.80 | 0.80 |
| SSDlite | 0.81 | 0.81 | 0.80 | 0.81 | 0.81 |
| Yolov10 | 0.88 | 0.88 | 0.87 | 0.87 | 0.87 |
| Yolov11 | 0.88 | 0.88 | 0.87 | 0.87 | 0.87 |
| Yolov7 | 0.86 | 0.86 | 0.86 | 0.86 | 0.86 |
| Yolov8 | 0.87 | 0.87 | 0.87 | 0.87 | 0.87 |
| Yolov9 | 0.87 | 0.87 | 0.87 | 0.87 | 0.87 |

Gaussian Noise of Different Strengths (std)

| Model | std = 0 | std = 0.05 | std = 0.1 | std = 0.15 | std = 0.2 | std = 0.25 | std = 0.3 |
|---|---|---|---|---|---|---|---|
| EfficientDet | 0.81 | 0.81 | 0.80 | 0.79 | 0.79 | 0.78 | 0.78 |
| RetinaNet | 0.83 | 0.82 | 0.82 | 0.81 | 0.80 | 0.79 | 0.78 |
| RetinaNetv2 | 0.84 | 0.84 | 0.83 | 0.81 | 0.80 | 0.77 | 0.75 |
| SSD | 0.80 | 0.80 | 0.79 | 0.79 | 0.79 | 0.78 | 0.77 |
| SSDlite | 0.81 | 0.81 | 0.81 | 0.80 | 0.80 | 0.80 | 0.81 |
| Yolov10 | 0.88 | 0.87 | 0.86 | 0.85 | 0.85 | 0.83 | 0.83 |
| Yolov11 | 0.88 | 0.87 | 0.86 | 0.85 | 0.84 | 0.83 | 0.82 |
| Yolov7 | 0.86 | 0.85 | 0.85 | 0.83 | 0.83 | 0.83 | 0.81 |
| Yolov8 | 0.87 | 0.86 | 0.85 | 0.85 | 0.84 | 0.84 | 0.83 |
| Yolov9 | 0.87 | 0.86 | 0.86 | 0.85 | 0.84 | 0.83 | 0.82 |

| Model | Rotation of Different Angles (Angle) | | | | | | |
|---|---|---|---|---|---|---|---|
| | 0° | 30° | 60° | 90° | 120° | 150° | 180° |
| EfficientDet | 0.81 | 0.64 | 0.65 | 0.77 | 0.65 | 0.65 | 0.78 |
| RetinaNet | 0.83 | 0.65 | 0.64 | 0.79 | 0.65 | 0.65 | 0.80 |
| RetinaNetv2 | 0.84 | 0.65 | 0.65 | 0.81 | 0.66 | 0.65 | 0.80 |
| SSD | 0.80 | 0.65 | 0.65 | 0.76 | 0.66 | 0.65 | 0.77 |
| SSDlite | 0.81 | 0.66 | 0.65 | 0.77 | 0.66 | 0.66 | 0.78 |
| Yolov10 | 0.88 | 0.65 | 0.65 | 0.85 | 0.65 | 0.65 | 0.85 |
| Yolov11 | 0.88 | 0.66 | 0.65 | 0.85 | 0.65 | 0.66 | 0.85 |
| Yolov7 | 0.86 | 0.65 | 0.64 | 0.85 | 0.66 | 0.65 | 0.85 |
| Yolov8 | 0.87 | 0.65 | 0.65 | 0.86 | 0.65 | 0.66 | 0.85 |
| Yolov9 | 0.87 | 0.65 | 0.65 | 0.85 | 0.65 | 0.66 | 0.85 |
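
The rotation scores above fall into two groups: values at 90° and 180° stay close to the 0° baseline, while values at 30°, 60°, 120°, and 150° cluster near 0.65. The following is a minimal sketch of how that split could be quantified for a few rows copied from the table. The helper name `split_by_angle` and the grouping into "right-angle" and "oblique" rotations are illustrative assumptions, not part of the original evaluation protocol.

```python
from statistics import mean

# Angles and scores copied from three rows of the rotation table above.
ANGLES = [0, 30, 60, 90, 120, 150, 180]
ROTATION_SCORES = {
    "EfficientDet": [0.81, 0.64, 0.65, 0.77, 0.65, 0.65, 0.78],
    "RetinaNet": [0.83, 0.65, 0.64, 0.79, 0.65, 0.65, 0.80],
    "Yolov8": [0.87, 0.65, 0.65, 0.86, 0.65, 0.66, 0.85],
}


def split_by_angle(scores):
    """Return (mean score at 90/180 degrees, mean score at oblique angles).

    Hypothetical helper: the grouping is an assumption made for illustration.
    """
    right = [s for a, s in zip(ANGLES, scores) if a in (90, 180)]
    oblique = [s for a, s in zip(ANGLES, scores) if a % 90 != 0]
    return mean(right), mean(oblique)


for model, scores in ROTATION_SCORES.items():
    right, oblique = split_by_angle(scores)
    print(
        f"{model}: baseline={scores[0]:.2f} "
        f"right-angle={right:.3f} oblique={oblique:.3f}"
    )
```

Averaging within each group keeps the comparison independent of how many angles were sampled in each group; other summaries, such as the worst-case score, would be equally reasonable.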

| Model | Scaling of Different Factors (Scale) | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| | 0.1 | 0.25 | 0.5 | 0.75 | 1 | 1.25 | 1.5 | 2 | 3 |
| EfficientDet | 0.76 | 0.80 | 0.81 | 0.81 | 0.81 | 0.81 | 0.81 | 0.81 | 0.81 |
| RetinaNet | 0.76 | 0.80 | 0.83 | 0.83 | 0.83 | 0.83 | 0.83 | 0.83 | 0.83 |
| RetinaNetv2 | 0.76 | 0.81 | 0.84 | 0.84 | 0.84 | 0.84 | 0.84 | 0.84 | 0.84 |
| SSD | 0.74 | 0.78 | 0.79 | 0.79 | 0.80 | 0.79 | 0.79 | 0.79 | 0.79 |
| SSDlite | 0.78 | 0.80 | 0.81 | 0.81 | 0.81 | 0.81 | 0.81 | 0.81 | 0.81 |
| Yolov10 | 0.84 | 0.87 | 0.88 | 0.88 | 0.88 | 0.88 | 0.88 | 0.88 | 0.88 |
| Yolov11 | 0.82 | 0.86 | 0.88 | 0.88 | 0.88 | 0.88 | 0.88 | 0.88 | 0.88 |
| Yolov7 | 0.79 | 0.84 | 0.86 | 0.86 | 0.86 | 0.86 | 0.86 | 0.86 | 0.86 |
| Yolov8 | 0.81 | 0.86 | 0.87 | 0.87 | 0.87 | 0.87 | 0.87 | 0.87 | 0.87 |
| Yolov9 | 0.81 | 0.86 | 0.87 | 0.87 | 0.87 | 0.87 | 0.87 | 0.87 | 0.87 |

| Model | Gaussian Blur of Different Strengths (Sigma) | | | | |
|---|---|---|---|---|---|
| | 0 | 1 | 2 | 3 | 4 |
| EfficientNet | 0.78 | 0.76 | 0.69 | 0.59 | 0.46 |
| MobileNetV2 | 0.73 | 0.70 | 0.61 | 0.51 | 0.39 |
| MobileNetV3 | 0.74 | 0.73 | 0.66 | 0.57 | 0.44 |
| Regnet | 0.68 | 0.67 | 0.55 | 0.39 | 0.26 |
| Resnet | 0.77 | 0.75 | 0.68 | 0.60 | 0.49 |
| ResNeXt | 0.73 | 0.71 | 0.63 | 0.51 | 0.36 |
| ConvNext | 0.84 | 0.83 | 0.78 | 0.70 | 0.60 |
| DeiT-Base | 0.81 | 0.81 | 0.79 | 0.74 | 0.66 |
| SwinTransformer | 0.82 | 0.82 | 0.75 | 0.64 | 0.52 |
| VisionTransformer | 0.81 | 0.80 | 0.75 | 0.70 | 0.62 |

| Model | Gaussian Noise of Different Strengths (std) | | | | | | |
|---|---|---|---|---|---|---|---|
| | 0 | 0.05 | 0.1 | 0.15 | 0.2 | 0.25 | 0.3 |
| EfficientNet | 0.78 | 0.73 | 0.65 | 0.51 | 0.32 | 0.16 | 0.08 |
| MobileNetV2 | 0.73 | 0.61 | 0.42 | 0.24 | 0.10 | 0.04 | 0.02 |
| MobileNetV3 | 0.74 | 0.67 | 0.54 | 0.39 | 0.26 | 0.15 | 0.09 |
| Regnet | 0.68 | 0.60 | 0.47 | 0.31 | 0.19 | 0.10 | 0.06 |
| Resnet | 0.77 | 0.69 | 0.57 | 0.41 | 0.26 | 0.14 | 0.07 |
| ResNeXt | 0.73 | 0.67 | 0.55 | 0.44 | 0.32 | 0.21 | 0.14 |
| ConvNext | 0.84 | 0.79 | 0.74 | 0.66 | 0.55 | 0.44 | 0.32 |
| DeiT-Base | 0.81 | 0.81 | 0.78 | 0.75 | 0.71 | 0.65 | 0.58 |
| SwinTransformer | 0.82 | 0.79 | 0.75 | 0.69 | 0.61 | 0.53 | 0.45 |
| VisionTransformer | 0.81 | 0.78 | 0.75 | 0.69 | 0.63 | 0.56 | 0.48 |

| Model | Rotation of Different Angles (Angle) | | | | | | |
|---|---|---|---|---|---|---|---|
| | 0° | 30° | 60° | 90° | 120° | 150° | 180° |
| EfficientNet | 0.78 | 0.70 | 0.55 | 0.57 | 0.43 | 0.48 | 0.60 |
| MobileNetV2 | 0.73 | 0.55 | 0.42 | 0.47 | 0.32 | 0.33 | 0.50 |
| MobileNetV3 | 0.74 | 0.68 | 0.46 | 0.50 | 0.41 | 0.45 | 0.54 |
| Regnet | 0.68 | 0.50 | 0.35 | 0.39 | 0.26 | 0.28 | 0.43 |
| Resnet | 0.77 | 0.64 | 0.51 | 0.51 | 0.39 | 0.40 | 0.52 |
| ResNeXt | 0.73 | 0.56 | 0.42 | 0.43 | 0.31 | 0.32 | 0.45 |
| ConvNext | 0.84 | 0.81 | 0.77 | 0.76 | 0.74 | 0.70 | 0.72 |
| DeiT-Base | 0.81 | 0.79 | 0.65 | 0.62 | 0.55 | 0.59 | 0.65 |
| SwinTransformer | 0.82 | 0.79 | 0.77 | 0.75 | 0.76 | 0.72 | 0.72 |
| VisionTransformer | 0.81 | 0.73 | 0.58 | 0.57 | 0.46 | 0.49 | 0.60 |

| Model | Scaling of Different Factors (Scale) | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| | 0.1 | 0.25 | 0.5 | 0.75 | 1 | 1.25 | 1.5 | 2 | 3 |
| EfficientNet | 0.30 | 0.64 | 0.76 | 0.77 | 0.78 | 0.78 | 0.78 | 0.78 | 0.78 |
| MobileNetV2 | 0.22 | 0.56 | 0.70 | 0.72 | 0.73 | 0.72 | 0.73 | 0.73 | 0.73 |
| MobileNetV3 | 0.27 | 0.59 | 0.72 | 0.74 | 0.74 | 0.74 | 0.74 | 0.75 | 0.75 |
| Regnet | 0.13 | 0.49 | 0.66 | 0.68 | 0.68 | 0.68 | 0.68 | 0.68 | 0.68 |
| Resnet | 0.26 | 0.62 | 0.75 | 0.77 | 0.77 | 0.77 | 0.77 | 0.77 | 0.77 |
| ResNeXt | 0.16 | 0.57 | 0.70 | 0.72 | 0.73 | 0.73 | 0.73 | 0.73 | 0.73 |
| ConvNext | 0.38 | 0.72 | 0.82 | 0.84 | 0.84 | 0.84 | 0.84 | 0.84 | 0.84 |
| DeiT-Base | 0.47 | 0.75 | 0.81 | 0.81 | 0.81 | 0.81 | 0.81 | 0.81 | 0.81 |
| SwinTransformer | 0.36 | 0.69 | 0.81 | 0.82 | 0.82 | 0.83 | 0.83 | 0.82 | 0.83 |
| VisionTransformer | 0.43 | 0.72 | 0.80 | 0.81 | 0.81 | 0.81 | 0.81 | 0.81 | 0.81 |

| Model | Gaussian Blur of Different Strengths (Sigma) | | | | |
|---|---|---|---|---|---|
| | 0 | 1 | 2 | 3 | 4 |
| DeepLabv3+ | 0.57 | 0.50 | 0.37 | 0.33 | 0.32 |
| DeepLabv3_Resnet101 | 0.65 | 0.57 | 0.46 | 0.43 | 0.43 |
| DeepLabv3 | 0.70 | 0.58 | 0.37 | 0.34 | 0.33 |
| FCN | 0.57 | 0.50 | 0.43 | 0.41 | 0.41 |
| Unet | 0.55 | 0.50 | 0.45 | 0.44 | 0.43 |
| CLIPSeg | 0.67 | 0.67 | 0.66 | 0.66 | 0.66 |
| Mask2Former | 0.73 | 0.71 | 0.69 | 0.68 | 0.68 |
| PSPNet | 0.69 | 0.51 | 0.28 | 0.25 | 0.25 |
| SegFormer | 0.64 | 0.62 | 0.59 | 0.59 | 0.59 |
| SqueezeNet | 0.56 | 0.49 | 0.46 | 0.45 | 0.45 |

| Model | Gaussian Noise of Different Strengths (std) | | | | | | |
|---|---|---|---|---|---|---|---|
| | 0 | 0.05 | 0.1 | 0.15 | 0.2 | 0.25 | 0.3 |
| DeepLabv3+ | 0.53 | 0.45 | 0.32 | 0.25 | 0.20 | 0.19 | 0.18 |
| DeepLabv3_Resnet101 | 0.65 | 0.60 | 0.50 | 0.37 | 0.30 | 0.27 | 0.27 |
| DeepLabv3 | 0.70 | 0.64 | 0.54 | 0.49 | 0.37 | 0.15 | 0.04 |
| FCN | 0.58 | 0.59 | 0.60 | 0.59 | 0.58 | 0.56 | 0.53 |
| Unet | 0.55 | 0.54 | 0.40 | 0.30 | 0.24 | 0.17 | 0.23 |
| CLIPSeg | 0.67 | 0.64 | 0.60 | 0.56 | 0.48 | 0.43 | 0.39 |
| Mask2Former | 0.73 | 0.67 | 0.53 | 0.49 | 0.44 | 0.39 | 0.35 |
| PSPNet | 0.69 | 0.44 | 0.09 | 0.05 | 0.03 | 0.02 | 0.02 |
| SegFormer | 0.64 | 0.65 | 0.61 | 0.56 | 0.48 | 0.43 | 0.40 |
| SqueezeNet | 0.56 | 0.50 | 0.37 | 0.34 | 0.28 | 0.16 | 0.08 |

| Model | Rotation of Different Angles (Angle) | | | | | | |
|---|---|---|---|---|---|---|---|
| | 0° | 30° | 60° | 90° | 120° | 150° | 180° |
| DeepLabv3+ | 0.57 | 0.55 | 0.56 | 0.58 | 0.56 | 0.57 | 0.58 |
| DeepLabv3_Resnet101 | 0.65 | 0.64 | 0.65 | 0.66 | 0.62 | 0.64 | 0.66 |
| DeepLabv3 | 0.70 | 0.64 | 0.65 | 0.71 | 0.66 | 0.66 | 0.69 |
| FCN | 0.57 | 0.47 | 0.47 | 0.55 | 0.47 | 0.47 | 0.56 |
| Unet | 0.55 | 0.48 | 0.51 | 0.56 | 0.49 | 0.51 | 0.55 |
| CLIPSeg | 0.67 | 0.60 | 0.60 | 0.67 | 0.59 | 0.59 | 0.67 |
| Mask2Former | 0.73 | 0.60 | 0.61 | 0.72 | 0.62 | 0.64 | 0.73 |
| PSPNet | 0.69 | 0.60 | 0.61 | 0.69 | 0.61 | 0.60 | 0.69 |
| SegFormer | 0.64 | 0.64 | 0.64 | 0.67 | 0.65 | 0.65 | 0.65 |
| SqueezeNet | 0.56 | 0.49 | 0.50 | 0.55 | 0.48 | 0.49 | 0.55 |

| Model | Scaling of Different Factors (Scale) | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|
| | 0.1 | 0.25 | 0.5 | 0.75 | 1 | 1.25 | 1.5 | 2 | 3 |
| DeepLabv3+ | 0.03 | 0.34 | 0.54 | 0.62 | 0.59 | 0.63 | 0.55 | 0.47 | 0.37 |
| DeepLabv3_Resnet101 | 0.02 | 0.17 | 0.45 | 0.56 | 0.61 | 0.64 | 0.65 | 0.63 | 0.60 |
| DeepLabv3 | 0.11 | 0.32 | 0.49 | 0.53 | 0.60 | 0.64 | 0.66 | 0.66 | 0.58 |
| FCN | 0.03 | 0.23 | 0.43 | 0.51 | 0.56 | 0.55 | 0.54 | 0.54 | 0.55 |
| Unet | 0.03 | 0.32 | 0.47 | 0.52 | 0.55 | 0.53 | 0.53 | 0.53 | 0.53 |
| CLIPSeg | 0.48 | 0.62 | 0.66 | 0.67 | 0.67 | 0.67 | 0.67 | 0.67 | 0.67 |
| Mask2Former | 0.31 | 0.61 | 0.71 | 0.73 | 0.73 | 0.73 | 0.73 | 0.73 | 0.72 |
| PSPNet | 0.02 | 0.17 | 0.46 | 0.63 | 0.69 | 0.67 | 0.63 | 0.51 | 0.24 |
| SegFormer | 0.34 | 0.51 | 0.60 | 0.62 | 0.63 | 0.63 | 0.63 | 0.62 | 0.63 |
| SqueezeNet | 0.01 | 0.08 | 0.37 | 0.50 | 0.56 | 0.54 | 0.51 | 0.43 | 0.28 |
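
Because the baseline scores differ between models, absolute drops are hard to compare across the tables above. One way to normalize is to report the fraction of the unperturbed score that survives the strongest perturbation. The sketch below does this for the Gaussian noise tables, using the std = 0 and std = 0.3 columns of a few rows copied from them. The function name `retention` and the choice of these five models are illustrative assumptions; the original study may summarize robustness differently.

```python
# (score at std = 0, score at std = 0.3), copied from the noise tables above.
NOISE_ENDPOINTS = {
    "Yolov8": (0.87, 0.83),
    "DeiT-Base": (0.81, 0.58),
    "MobileNetV2": (0.73, 0.02),
    "PSPNet": (0.69, 0.02),
    "SegFormer": (0.64, 0.40),
}


def retention(baseline, perturbed):
    """Fraction of the unperturbed score that survives the perturbation.

    Hypothetical summary statistic, introduced here for illustration only.
    """
    if baseline <= 0:
        raise ValueError("baseline score must be positive")
    return perturbed / baseline


# Rank models from most to least noise-tolerant under this statistic.
ranked = sorted(
    NOISE_ENDPOINTS.items(),
    key=lambda item: retention(*item[1]),
    reverse=True,
)

for name, (base, noisy) in ranked:
    print(f"{name}: {base:.2f} -> {noisy:.2f} (retains {retention(base, noisy):.0%})")
```

A ratio is used rather than a difference so that a model with a low baseline is not rewarded simply for having less to lose; the scores are rounded to two decimals in the tables, so small differences in retention should not be over-interpreted.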

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Keremis, K.; Vrochidou, E.; Papakostas, G.A. Empirical Evaluation of Invariances in Deep Vision Models. J. Imaging 2025, 11, 322. https://doi.org/10.3390/jimaging11090322
