Non-Invasive Retinal Pathology Assessment Using Haralick-Based Vascular Texture and Global Fundus Color Distribution Analysis

Abstract

1. Introduction

Related Work

2. Materials and Methods

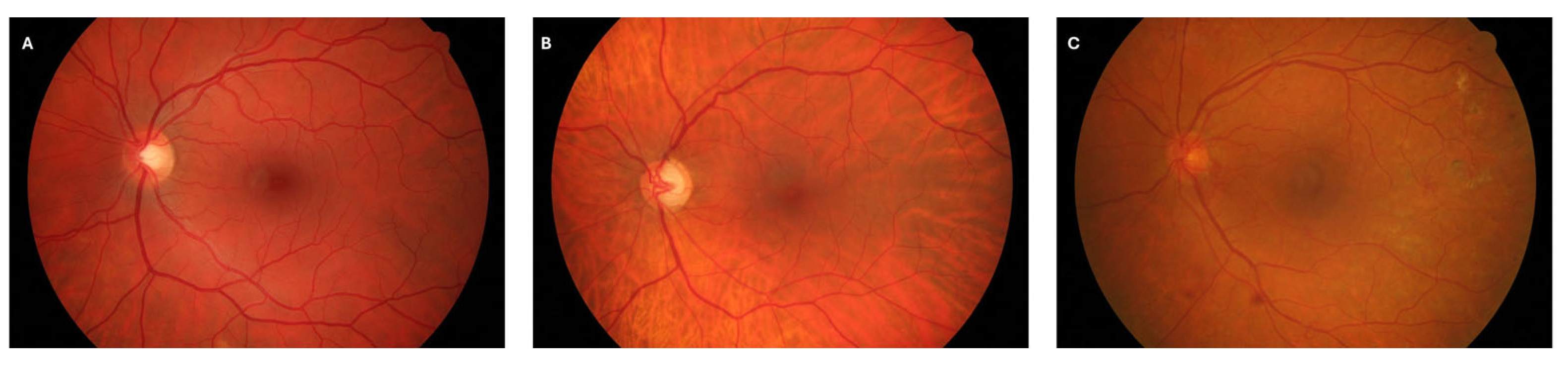

2.1. Dataset

- Healthy group: 15 images from subjects with no clinical signs of retinal disease.

- Diabetic Retinopathy (DR) group: 15 images exhibiting vascular abnormalities consistent with DR, such as microaneurysms and hemorrhages.

- Glaucoma group: 15 images from patients diagnosed with advanced-stage glaucoma, characterized by structural changes in the optic nerve head and retinal vasculature.

2.2. Feature Extraction

2.3. Histogram Analysis

3. Results and Discussion

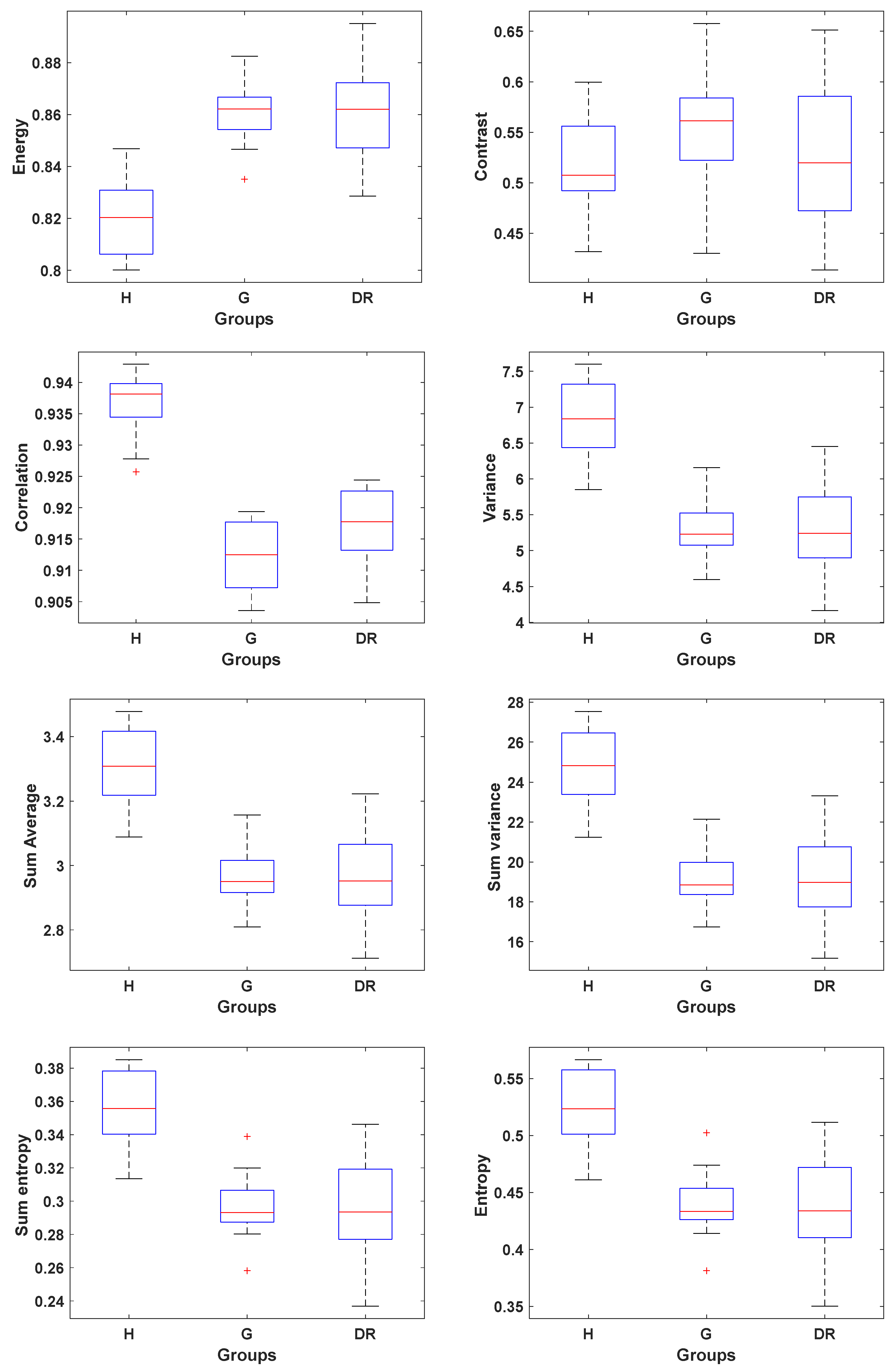

3.1. Texture Analysis

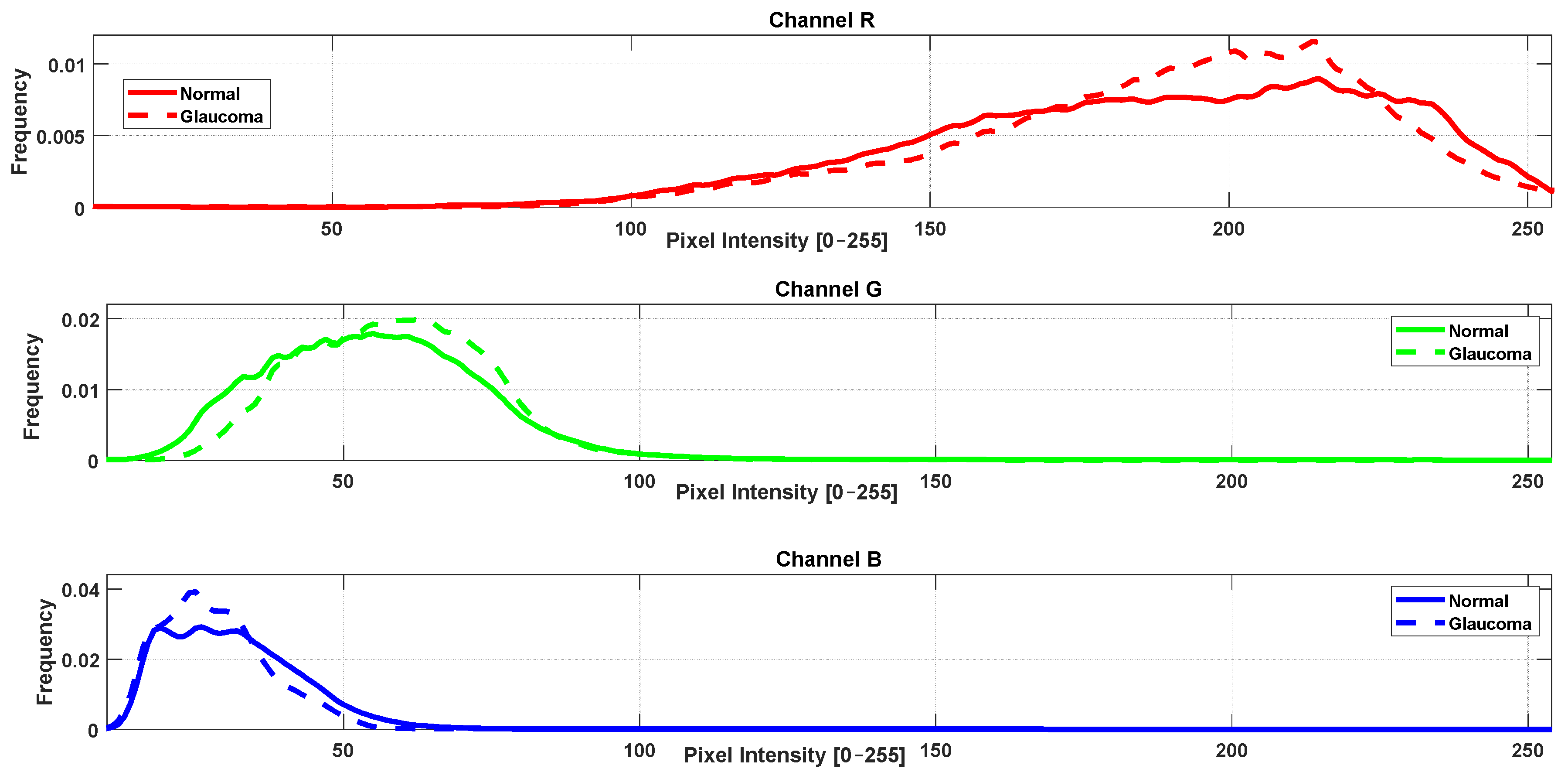

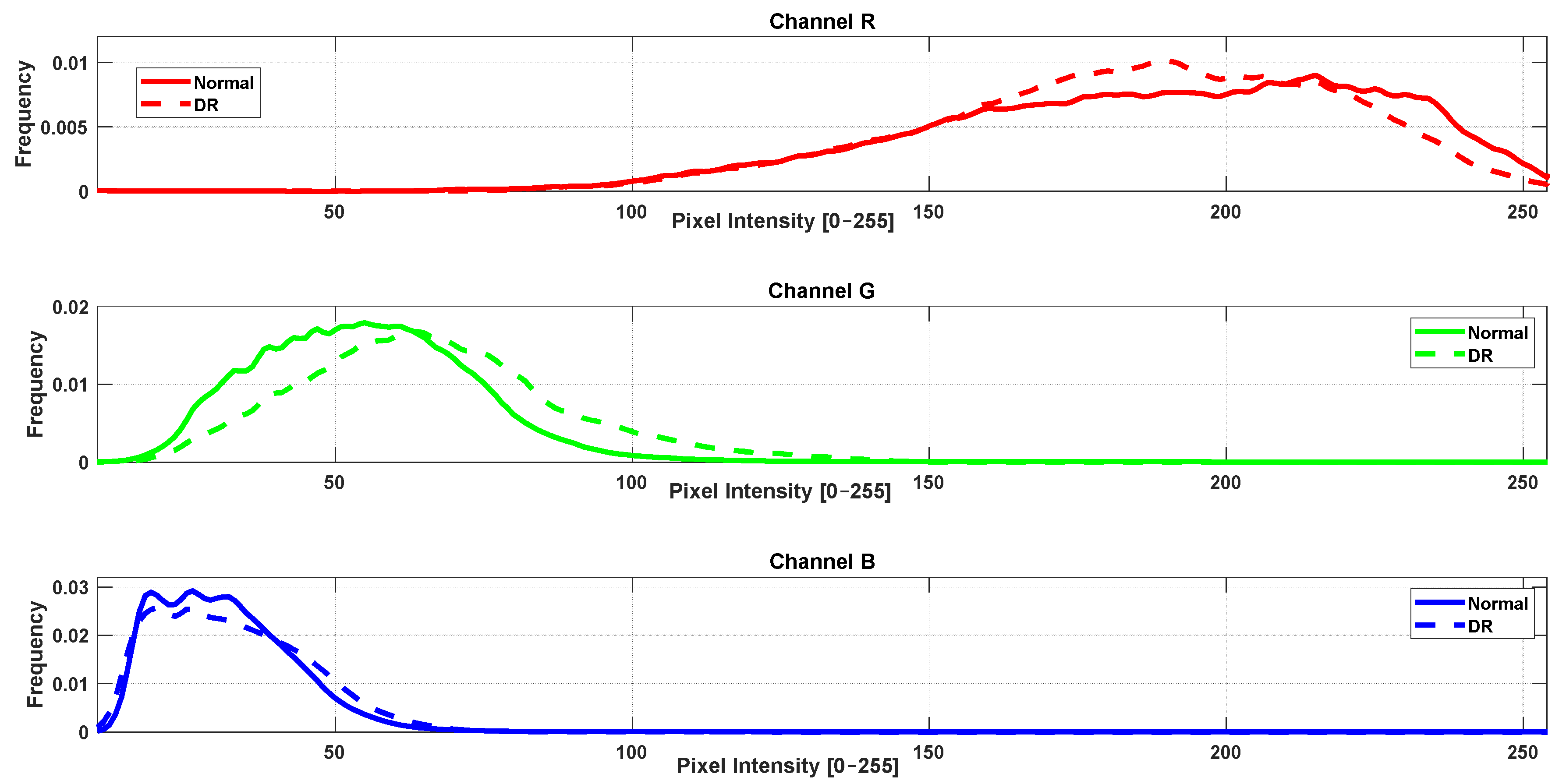

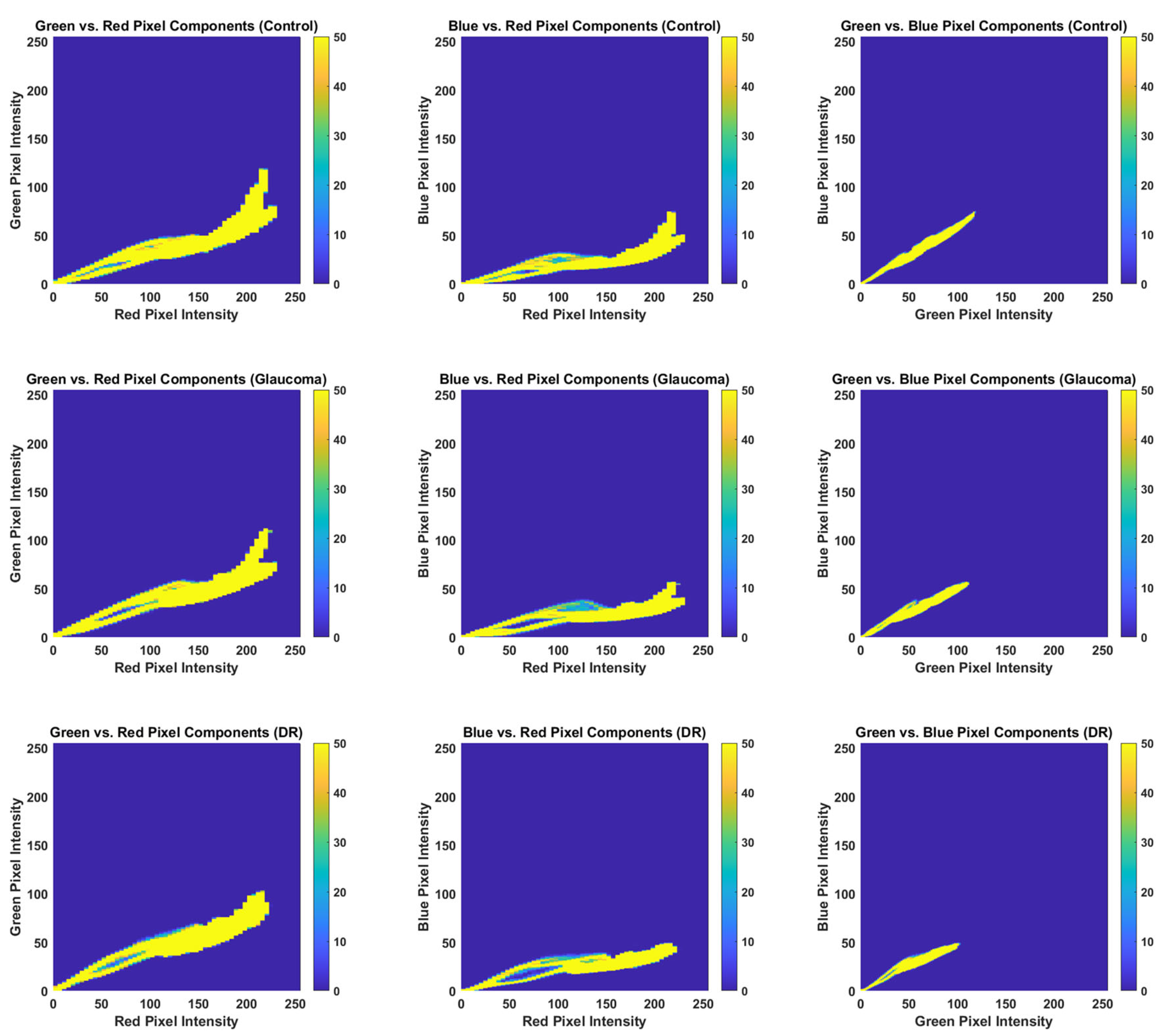

3.2. RGB Histogram and Color Distribution Analysis

- Channelwise computation: Each color channel (R, G, B) was analyzed separately, and the Mahalanobis distance for a channel was calculated from that channel's histogram data only.

- Distributional comparison: For each channel, the Mahalanobis distance quantified how distinct the color distributions of the control group were from those of each pathological group, accounting for both mean and covariance structures.
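
The channelwise comparison described above can be sketched in Python as follows. This is an illustrative sketch rather than the study's implementation: the function names, the 16-bin normalized histograms, the pooled covariance estimate, and the use of a pseudo-inverse (normalized histograms sum to one, so their covariance matrix is singular) are all assumptions, and the images are synthetic.

```python
import numpy as np

def channel_histograms(images, channel, bins=16):
    """Return one normalized intensity histogram per image for a single channel.

    `images` is an iterable of H x W x 3 arrays with 8-bit values (assumption).
    """
    hists = []
    for img in images:
        counts, _ = np.histogram(img[..., channel], bins=bins, range=(0, 256))
        hists.append(counts / counts.sum())
    return np.array(hists)

def mahalanobis_between_groups(a, b):
    """Mahalanobis distance between two group means under a pooled covariance.

    `a` and `b` are (n_images, n_features) arrays, one row per image.
    """
    diff = a.mean(axis=0) - b.mean(axis=0)
    pooled = ((len(a) - 1) * np.cov(a, rowvar=False)
              + (len(b) - 1) * np.cov(b, rowvar=False)) / (len(a) + len(b) - 2)
    # The pseudo-inverse tolerates a singular covariance matrix (assumption).
    d2 = diff @ np.linalg.pinv(pooled) @ diff
    return float(np.sqrt(max(d2, 0.0)))

# Synthetic stand-ins for two groups of 15 fundus images each.
rng = np.random.default_rng(0)
healthy = rng.integers(0, 256, size=(15, 32, 32, 3))
diseased = rng.integers(0, 200, size=(15, 32, 32, 3))

# One distance per channel, each computed from that channel's histograms only.
distances = {
    name: mahalanobis_between_groups(channel_histograms(healthy, c),
                                     channel_histograms(diseased, c))
    for c, name in enumerate("RGB")
}
print(distances)
```

With only 15 images per group, the number of histogram bins has to stay small relative to the sample size for the covariance estimate to be meaningful, which is why the sketch uses coarse bins.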

4. Conclusions

- The sample size (15 images per group), though representative, may limit generalizability to broader populations.

- The study was based on 2D fundus imaging, which does not capture depth information or fine capillary detail.

- While recent advances in retinal image analysis have been dominated by deep learning approaches such as DR-VNet and OCE-Net, this study focuses on classical handcrafted features, specifically vascular texture and color histogram analysis, which offer greater interpretability and computational efficiency. Direct benchmarking against these deep learning models was beyond the current scope due to dataset size and resource constraints. Nevertheless, this method provides a complementary perspective that can be particularly valuable in settings with limited annotated data.

- Integration with deep learning frameworks could enable automated feature extraction and classification at scale.

- Further validation on larger and more diverse datasets from multiple clinical centers is needed to confirm robustness and reproducibility.

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Ahmad, A.; Mansoor, A.B.; Mumtaz, R.; Khan, M.; Mirza, S. Image processing and classification in diabetic retinopathy: A review. In Proceedings of the 2014 5th European Workshop on Visual Information Processing (EUVIP), Paris, France, 10–12 December 2014; pp. 1–6.

- Kumar, B.N.; Chauhan, R.; Dahiya, N. Detection of Glaucoma using image processing techniques: A review. In Proceedings of the 2016 International Conference on Microelectronics, Computing and Communications (MicroCom), Durgapur, India, 23–25 January 2016; pp. 1–6.

- Sarhan, A.; Rokne, J.; Alhajj, R. Glaucoma detection using image processing techniques: A literature review. Comput. Med. Imaging Graph. 2019, 78, 101657.

- Chan, K.K.; Tang, F.; Tham, C.C.; Young, A.L.; Cheung, C.Y. Retinal vasculature in glaucoma: A review. BMJ Open Ophthalmol. 2017, 1, e000032.

- Barber, A.J. A new view of diabetic retinopathy: A neurodegenerative disease of the eye. Prog. Neuropsychopharmacol. Biol. Psychiatry 2003, 27, 283–290.

- Nguyen, T.T.; Wong, T.Y. Retinal vascular changes and diabetic retinopathy. Curr. Diab. Rep. 2009, 9, 277–283.

- Selvam, S.; Kumar, T.; Fruttiger, M. Retinal vasculature development in health and disease. Prog. Retin. Eye Res. 2018, 63, 1–19.

- Ahmad, R.; Mohanty, B.K. Chronic kidney disease stage identification using texture analysis of ultrasound images. Biomed. Signal Process. Control 2021, 69, 102695.

- Tesař, L.; Shimizu, A.; Smutek, D.; Kobatake, H.; Nawano, S. Medical image analysis of 3D CT images based on extension of Haralick texture features. Comput. Med. Imaging Graph. 2008, 32, 513–520.

- Youssef, S.M.; Korany, E.A.; Salem, R.M. Contourlet-based feature extraction for computer aided diagnosis of medical patterns. In Proceedings of the 2011 IEEE 11th International Conference on Computer and Information Technology, Pafos, Cyprus, 31 August–2 September 2011; pp. 481–486.

- Haralick, R.M. Statistical and structural approaches to texture. Proc. IEEE 1979, 67, 786–804.

- Gupta, S.; Sisodia, D.S. Automated detection of diabetic retinopathy from gray-scale fundus images using GLCM and GLRLM-based textural features: A comparative study. In Intelligent Computing Techniques in Biomedical Imaging; Elsevier: Amsterdam, The Netherlands, 2025; pp. 251–259.

- Stanley, R.J.; Moss, R.H.; Van Stoecker, W.; Aggarwal, C. A fuzzy-based histogram analysis technique for skin lesion discrimination in dermatology clinical images. Comput. Med. Imaging Graph. 2003, 27, 387–396.

- Zhou, J.; Zhang, Q.; Zhang, B.; Chen, X. TongueNet: A precise and fast tongue segmentation system using U-Net with a morphological processing layer. Appl. Sci. 2019, 9, 3128.

- Igalla-El Youssfi, A.; López-Alonso, J.M. Fractal and multifractal new metrics for pathological characterization and quantification in diabetic retinopathy and glaucoma. Measurement 2025, 256, 118561.

- Karaali, A.; Dahyot, R.; Sexton, D.J. DR-VNet: Retinal vessel segmentation via dense residual UNet. In Proceedings of the International Conference on Pattern Recognition and Artificial Intelligence, Xiamen, China, 23–25 September 2022; pp. 198–210.

- Wei, X.; Yang, K.; Bzdok, D.; Li, Y. Orientation and context entangled network for retinal vessel segmentation. Expert Syst. Appl. 2023, 217, 119443.

- Li, M.; Zhou, S.; Chen, C.; Zhang, Y.; Liu, D.; Xiong, Z. Retinal vessel segmentation with pixel-wise adaptive filters. In Proceedings of the 2022 IEEE 19th International Symposium on Biomedical Imaging (ISBI), Kolkata, India, 28–31 March 2022; pp. 1–5.

- Shamsan, A.; Senan, E.M.; Shatnawi, H.S.A. Automatic classification of colour fundus images for prediction eye disease types based on hybrid features. Diagnostics 2023, 13, 1706.

- Odstrcilik, J.; Kolar, R.; Budai, A.; Hornegger, J.; Jan, J.; Gazarek, J.; Kubena, T.; Cernosek, P.; Svoboda, O.; Angelopoulou, E. Retinal vessel segmentation by improved matched filtering: Evaluation on a new high-resolution fundus image database. IET Image Process. 2013, 7, 373–383.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I.H. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.

- Shabat, A.M.; Tapamo, J.-R. A comparative study of the use of local directional pattern for texture-based informal settlement classification. J. Appl. Res. Technol. 2017, 15, 250–258.

- Kumar Ram, R. Segmentation of Blood Vessels in Retinal Images. Version 1.0.0. MATLAB Central File Exchange. Available online: https://www.mathworks.com/matlabcentral/fileexchange/102364-segmentation-of-blood-vessels-in-retinal-images (accessed on 30 July 2025).

- Monzel, R. haralickTextureFeatures. Version 1.3.1.0. Available online: https://es.mathworks.com/matlabcentral/fileexchange/58769-haralicktexturefeatures (accessed on 30 July 2025).

- Plataniotis, K.N.; Venetsanopoulos, A.N. Companion image processing software. In Color Image Processing and Applications; Springer: Berlin/Heidelberg, Germany, 2000; pp. 349–352.

- Swain, M.J.; Ballard, D.H. Color indexing. Int. J. Comput. Vis. 1991, 7, 11–32.

- Hu, H. Advanced Man-Machine Interaction-Fundamentals and Implementation. Ind. Robot. Int. J. 2007, 34.

- Gonzalez, R.C. Digital Image Processing; Pearson Education India: Delhi, India, 2009.

- Saleem, A.; Beghdadi, A.; Boashash, B. Image fusion-based contrast enhancement. EURASIP J. Image Video Process. 2012, 2012, 10.

- Janney, J.B.; Roslin, S.E.; Kumar, S.K. Analysis of skin lesions using machine learning techniques. In Computational Intelligence and Its Applications in Healthcare; Elsevier: Amsterdam, The Netherlands, 2020; pp. 73–90.

- Xue, Y.; Mohamed, K.; Van Dyke, M.; Guner, D.; Sherizadeh, T. Quantifying the Texture of Coal Images with Different Lithotypes through Gray-Level Co-Occurrence Matrix. In Proceedings of the MINEXCHANGE SME Annual Conference and Expo, Society of Mining, Metallurgy and Exploration, Phoenix, AZ, USA, 25–28 February 2024.

- Durbin, M.K.; An, L.; Shemonski, N.D.; Soares, M.; Santos, T.; Lopes, M.; Neves, C.; Cunha-Vaz, J. Quantification of retinal microvascular density in optical coherence tomographic angiography images in diabetic retinopathy. JAMA Ophthalmol. 2017, 135, 370–376.

- Romero-Oraá, R.; Jiménez-García, J.; García, M.; López-Gálvez, M.I.; Oraá-Pérez, J.; Hornero, R. Entropy rate superpixel classification for automatic red lesion detection in fundus images. Entropy 2019, 21, 417.

- Lee, J.; Zee, B.C.Y.; Li, Q. Detection of neovascularization based on fractal and texture analysis with interaction effects in diabetic retinopathy. PLoS ONE 2013, 8, e75699.

- Zheng, Y.; Kwong, M.T.; MacCormick, I.J.; Beare, N.A.; Harding, S.P. A comprehensive texture segmentation framework for segmentation of capillary non-perfusion regions in fundus fluorescein angiograms. PLoS ONE 2014, 9, e93624.

- Gayathri, S.; Krishna, A.K.; Gopi, V.P.; Palanisamy, P. Automated binary and multiclass classification of diabetic retinopathy using haralick and multiresolution features. IEEE Access 2020, 8, 57497–57504.

- Patel, R.K.; Kashyap, M. Automated screening of glaucoma stages from retinal fundus images using BPS and LBP based GLCM features. Int. J. Imaging Syst. Technol. 2023, 33, 246–261.

- Gupta, S.; Thakur, S.; Gupta, A. Comparative study of different machine learning models for automatic diabetic retinopathy detection using fundus image. Multimed. Tools Appl. 2024, 83, 34291–34322.

| Texture Feature | Equation |
|---|---|
| Energy or angular second moment | (3) |
| Contrast | (4) |
| Correlation | (5) |
| Variance | (6) |
| Sum Average | (7) |
| Sum variance | (8) |
| Sum entropy | (9) |
| Entropy | (10) |
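
As an illustration of how the eight features listed in this table can be obtained from a gray-level co-occurrence matrix (GLCM), the following Python sketch uses the commonly cited Haralick definitions. It is not the study's code: the function names are hypothetical, and the single pixel offset, the symmetric normalization, the 1-based gray-level indices, the natural logarithm, and the use of the sum average inside the sum variance are assumptions that may differ from Equations (3)–(10).

```python
import numpy as np

def glcm(image, levels, dx=1, dy=0):
    """Symmetric, normalized co-occurrence matrix for one non-negative offset.

    `image` holds integer gray levels in [0, levels).
    """
    img = np.asarray(image, dtype=int)
    h, w = img.shape
    first = img[:h - dy, :w - dx]    # reference pixels
    second = img[dy:, dx:]           # neighbors at offset (dy, dx)
    counts = np.zeros((levels, levels))
    np.add.at(counts, (first.ravel(), second.ravel()), 1)
    counts = counts + counts.T       # count each pair in both directions
    return counts / counts.sum()

def haralick_features(p):
    """Eight Haralick-style texture features from a normalized GLCM `p`."""
    n = p.shape[0]
    i, j = np.indices((n, n)) + 1    # 1-based gray-level indices (assumption)
    mu_x, mu_y = (i * p).sum(), (j * p).sum()
    sd_x = np.sqrt((((i - mu_x) ** 2) * p).sum())
    sd_y = np.sqrt((((j - mu_y) ** 2) * p).sum())
    # p_sum[k] is the probability that the two gray levels sum to k.
    k = np.arange(2 * n + 1)
    p_sum = np.bincount((i + j).ravel(), weights=p.ravel(), minlength=2 * n + 1)
    sum_avg = (k * p_sum).sum()

    def shannon(q):
        q = q[q > 0]                 # skip empty cells to avoid log(0)
        return float(-(q * np.log(q)).sum())

    denom = sd_x * sd_y              # zero for a constant image
    corr = ((i * j * p).sum() - mu_x * mu_y) / denom if denom > 0 else float("nan")
    return {
        "energy": float((p ** 2).sum()),
        "contrast": float((((i - j) ** 2) * p).sum()),
        "correlation": float(corr),
        "variance": float((((i - mu_x) ** 2) * p).sum()),
        "sum_average": float(sum_avg),
        "sum_variance": float((((k - sum_avg) ** 2) * p_sum).sum()),
        "sum_entropy": shannon(p_sum),
        "entropy": shannon(p),
    }

# Tiny checkerboard example: every horizontal neighbor pair differs.
features = haralick_features(glcm([[0, 1], [1, 0]], levels=2))
print(features)
```

In practice, features are often averaged over several offsets and directions; the sketch uses a single horizontal offset to keep the arithmetic easy to follow.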

| Texture Feature | Glaucoma | DR | | Diseased vs. Normal Controls (p-Value) |
|---|---|---|---|---|
| Energy | 0.82 ± 0.02 | 0.86 ± 0.01 | 0.86 ± 0.02 | <0.05 |
| Contrast | 0.52 ± 0.05 | 0.55 ± 0.06 | 0.52 ± 0.07 | >0.05 |
| Correlation | 0.937 ± 0.005 | 0.912 ± 0.006 | 0.917 ± 0.006 | <0.05 |
| Variance | 6.8 ± 0.6 | 5.3 ± 0.4 | 5.3 ± 0.6 | <0.05 |
| Sum Average | 3.3 ± 0.1 | 2.96 ± 0.08 | 3.0 ± 0.1 | <0.05 |
| Sum variance | 27.7 ± 2.0 | 19.1 ± 1.3 | 19.3 ± 2.2 | <0.05 |
| Sum entropy | 0.36 ± 0.02 | 0.30 ± 0.02 | 0.30 ± 0.03 | <0.05 |
| Entropy | 0.52 ± 0.03 | 0.44 ± 0.03 | 0.44 ± 0.05 | <0.05 |

Mahalanobis distance per channel:

| Comparison | R | G | B |
|---|---|---|---|
| Healthy vs. glaucoma | 3.45 | 3.50 | 4.20 |
| Healthy vs. DR | 4.26 | 3.88 | 3.56 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sijilmassi, O. Non-Invasive Retinal Pathology Assessment Using Haralick-Based Vascular Texture and Global Fundus Color Distribution Analysis. J. Imaging 2025, 11, 321. https://doi.org/10.3390/jimaging11090321
