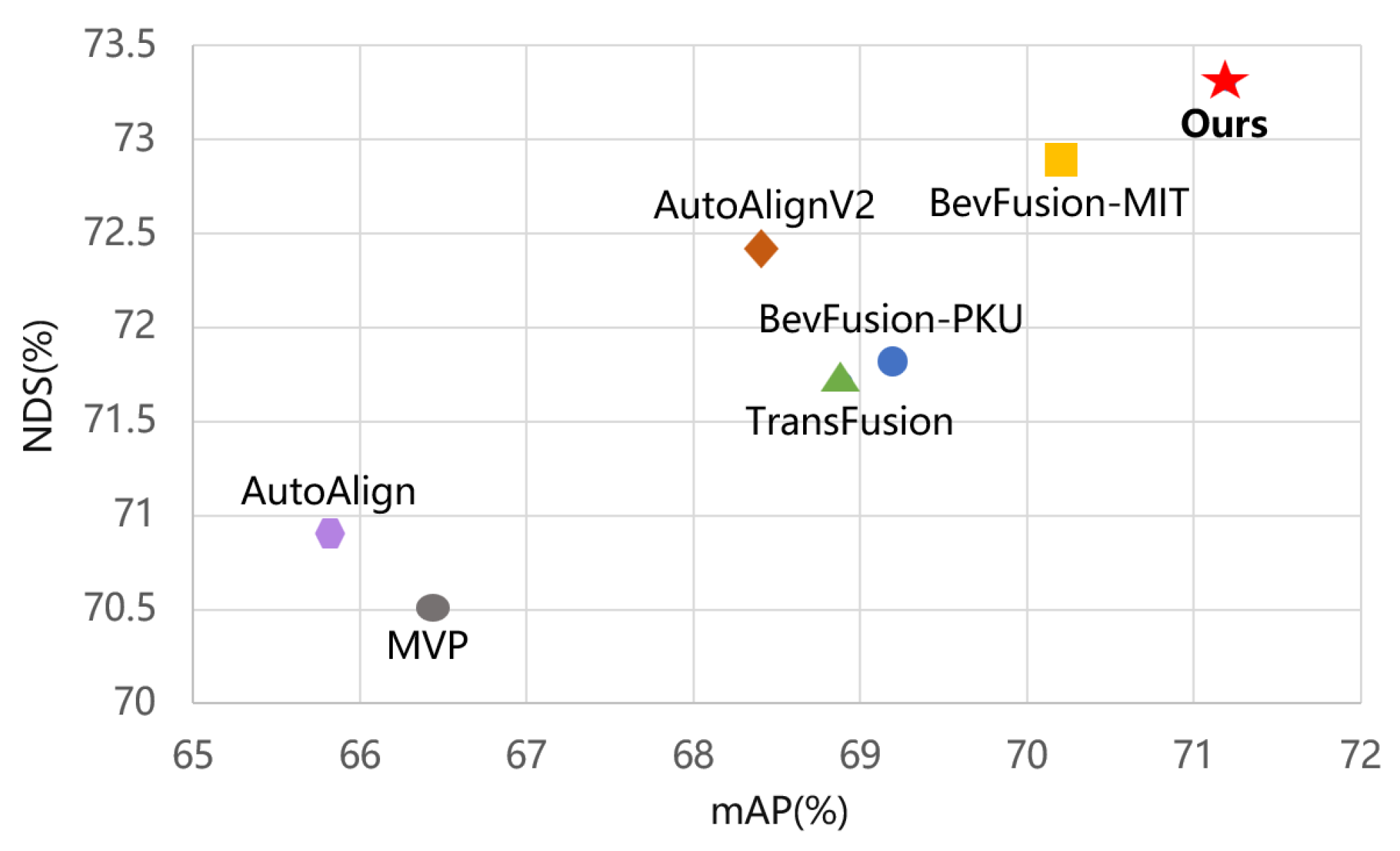

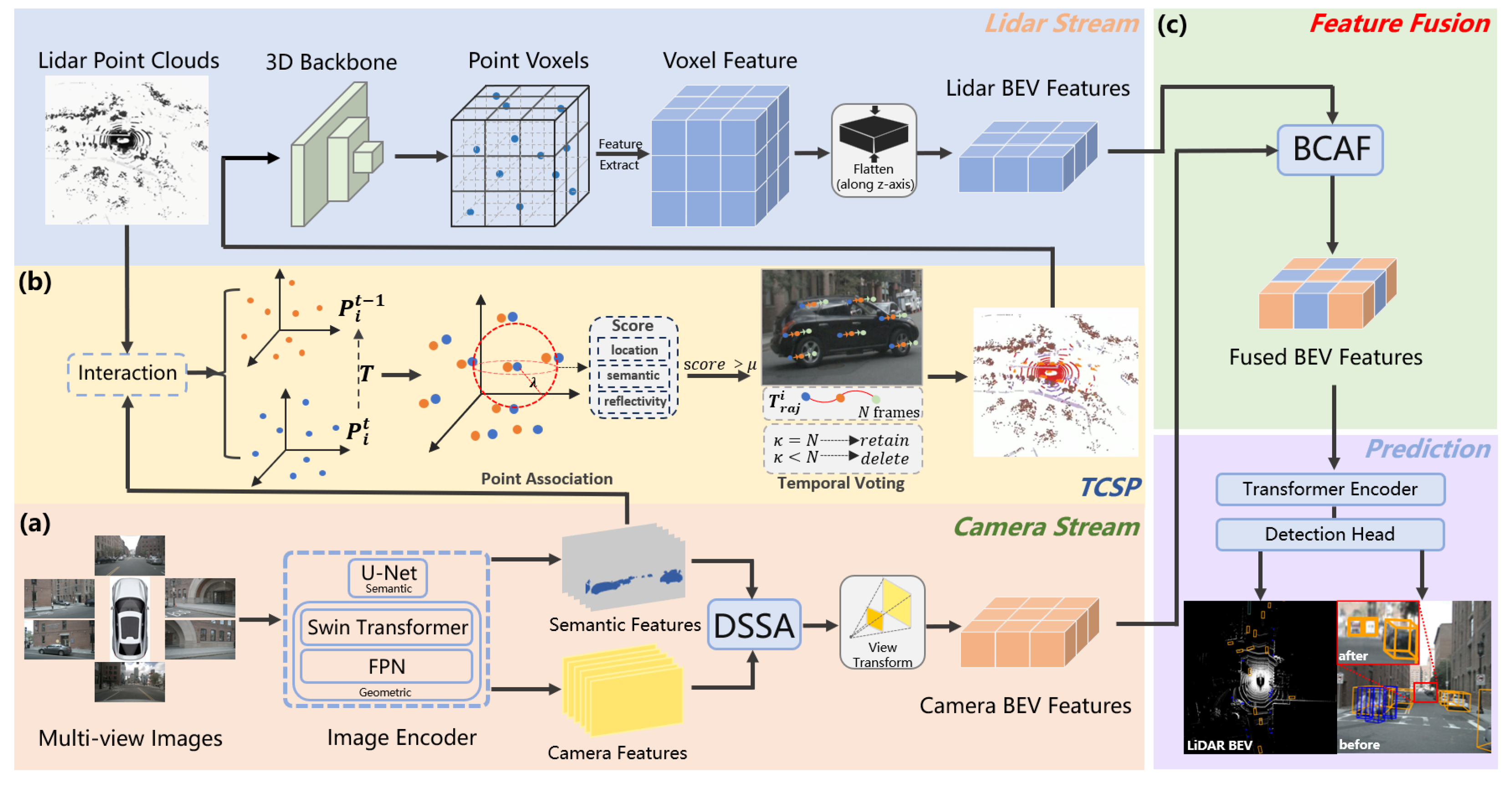

To address LiDAR’s difficulties in fine-grained perception and the shortcomings of existing fusion strategies, we reconsider the significance of image semantic information and point cloud geometric information for detection. Our SETR-Fusion pipeline is shown in Figure 3. The proposed SETR-Fusion framework consists of two complementary processing branches, namely the camera stream and the point cloud stream. In the camera stream, multiview images are first processed by an image encoder to extract both semantic and geometric representations. These features are then refined through the discriminative semantic saliency activation (DSSA) module, which emphasizes salient foreground objects. Following a view transformation step, the enhanced representations are projected into the BEV space, yielding the camera BEV features. In the point cloud branch, the raw LiDAR point clouds are combined with semantic features from the images via the temporally consistent semantic point (TCSP) fusion module, which improves the detection of distant foreground targets. The fused point clouds are then processed by a 3D backbone to generate voxel-level features, which are subsequently projected along the Z-axis to obtain the LiDAR BEV features. Finally, the camera BEV features and LiDAR BEV features are fed into the bilateral cross-attention fusion (BCAF) module to achieve comprehensive multimodal BEV feature integration. The fused BEV features are then passed through a detection encoder and a task-specific detection head to perform 3D object detection.

3.1. Image Feature Extraction

In the image encoder of the SETR-Fusion camera stream, we design a dual-branch encoding architecture that jointly captures geometric and semantic information. Consider a set of input data

, where

is the number of viewpoints. In the semantic branch, a U-Net-based semantic segmentation network [

56] is employed to extract instance-level segmentation features

, where

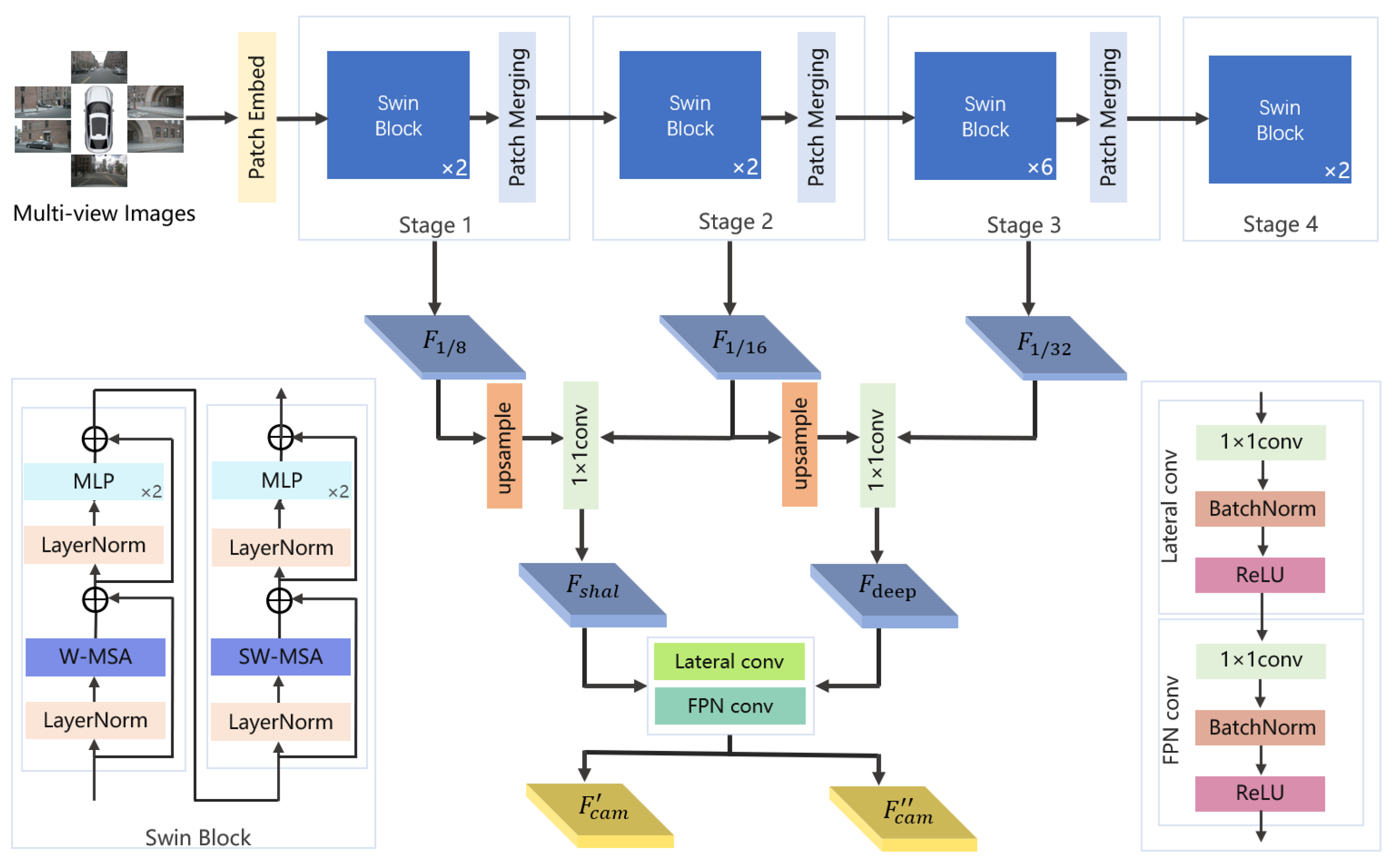

K denotes the number of object categories. In the geometric branch, we adopt a multiview image feature extraction framework based on a hierarchical Transformer architecture to process multiview image inputs in autonomous driving scenarios, as illustrated in

Figure 4. Specifically, the feature extraction pipeline first flattens the multiview images into six independent samples, which are then fed into a Swin Transformer backbone [

57] with shared weights. The core computational unit of this backbone is the Swin block, which incorporates a hierarchical window-based self-attention mechanism to achieve efficient long-range dependency modeling. Each Swin block contains a shifted window multihead self-attention module with layer normalization, which alternates between local attention computation through fixed-window partitioning and shifted-window strategies, thereby enabling cross-window feature interaction. To mitigate overfitting, DropPath regularization is applied. Following the attention module, a feedforward network is employed, consisting of a

channel-expanded MLP with GELU activation, further enhanced by residual connections to improve the information flow. This cascaded “attention + feedforward” design preserves global context awareness while significantly reducing the computational complexity.

The backbone network processes features through four hierarchical stages. The initial patch embedding module employs a convolution to downsample the input, producing the initial feature maps. Subsequently, four sequential processing stages progressively reduce the spatial resolution while increasing the representational capacity, generating a set of multiscale features. These multiscale outputs are fused through a feature recomposition module. The shallow-level features are obtained by upsampling the lower-resolution output of stages 1 and 2 to match the resolution of the other, concatenating the two, and applying a convolution for channel reduction. Similarly, the deep-level features are generated by fusing the outputs of stages 3 and 4 and applying a convolution for dimension reduction. The reorganized features are fed into the neck module, a lightweight variant of the Feature Pyramid Network (FPN) [58]. This module first compresses both shallow and deep features to a uniform channel dimension C via one set of convolutions, followed by a second set of convolutions to enhance spatial context modeling. Finally, it outputs a pair of multiscale feature maps with consistent channel dimensions, where C is the number of feature channels. In this design, high-resolution features preserve fine-grained spatial details, whereas low-resolution features encode high-level semantic cues, giving balanced representations for multiview 3D perception.

Overall, the hierarchical attention mechanism within the Swin blocks facilitates cross-window information interaction, while the feature recomposition strategy balances computational efficiency with multiscale expressiveness, forming an end-to-end feature extraction pipeline.
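The shape bookkeeping behind the four stages and the feature recomposition can be sketched as follows. This is a minimal illustration, not the authors' implementation: the patch size of 4, the embedding width of 96 (Swin-T-like defaults), the 256 × 704 input resolution, and the 256 output channels are all assumptions, and `swin_stage_shapes` and `recompose` are hypothetical helper names.

```python
from typing import List, Tuple

Shape = Tuple[int, int, int]  # (channels, height, width)


def swin_stage_shapes(height: int, width: int,
                      embed_dim: int = 96, patch: int = 4) -> List[Shape]:
    """Output shape of each of the four stages (assumed Swin-T-like settings)."""
    shapes = []
    ch, h, w = embed_dim, height // patch, width // patch
    for _ in range(4):
        shapes.append((ch, h, w))
        # Patch merging between stages: half the resolution, double the channels.
        ch, h, w = ch * 2, h // 2, w // 2
    return shapes


def recompose(high_res: Shape, low_res: Shape, out_channels: int) -> Shape:
    """Shape after upsampling low_res to high_res, concatenating, and reducing channels.

    The concatenated tensor has high_res[0] + low_res[0] channels; the
    channel-reduction convolution maps it to out_channels.
    """
    return (out_channels, high_res[1], high_res[2])


stages = swin_stage_shapes(256, 704)  # hypothetical input resolution
shallow = recompose(stages[0], stages[1], out_channels=256)
deep = recompose(stages[2], stages[3], out_channels=256)
```

Under these assumed settings, the shallow features keep the stage-1 resolution and the deep features keep the stage-3 resolution, both with the same channel count, which matches the "consistent channel dimensions" described above.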

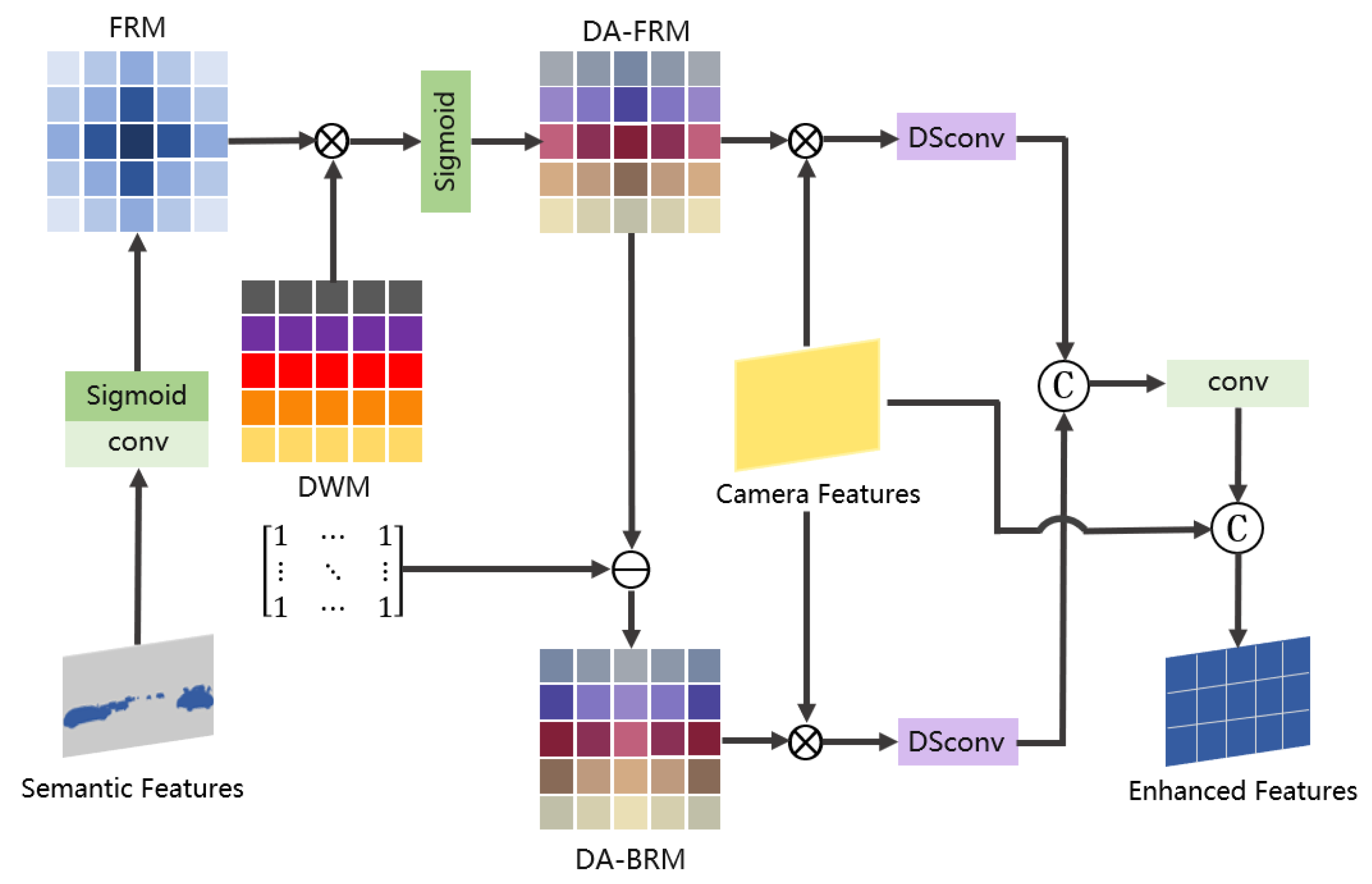

3.2. Discriminative Semantic Saliency Activation

Images provide dense semantics that are critical for contextual understanding and precise foreground–background differentiation. To fully leverage this capability, we enhance the camera branch with the DSSA module, which explicitly fuses semantic and geometric image features. This design maximizes the use of semantic cues and improves the perception performance, especially for distant small targets near the sensor’s range limits. The camera branch pipeline is shown in Figure 3a, and the details of the DSSA module are shown in Figure 5.

The image encoder provides the geometric and semantic image features, where C is the number of feature channels and K is the number of categories. Both are input into the DSSA module. A convolution followed by a Sigmoid generates the foreground response map (FRM) of the emphasized instances; then, following [59], a depth weight map (DWM) is generated from the image. We multiply the FRM with the DWM element by element to obtain the depth-augmented foreground response map (DA-FRM); the depth-augmented background response map (DA-BRM) is then obtained by subtracting the DA-FRM from the all-ones matrix. Next, the DA-FRM and DA-BRM are applied as weights to obtain foreground-weighted and background-weighted features, respectively, integrating fine-grained details to enhance the representation and discriminability of small targets in the feature maps. To enhance the camera’s ability to perceive distant and small instances, as shown in Figure 6, we incorporate a depthwise-separable dilated convolution [60]. This module comprises three sequential steps. First, dilated convolutions are applied independently to each channel. Different dilation rates significantly expand the receptive field while keeping the computational complexity manageable, enabling the capture of contextual information across varying scales. Second, depthwise convolutional kernels perform further spatial feature extraction and enhancement on the per-channel features. Finally, 1 × 1 pointwise convolutions achieve cross-channel linear combination and information fusion, enhancing the overall expressive power of the feature representations. This architecture maintains multiscale receptive field coverage while reducing the computational complexity relative to standard convolutions, and it improves the detection performance for small objects at near, medium, and far distances. It is detailed in the following formula:

Here, one branch consists of a convolution with 256 channels followed by a single additional layer, and the other three branches are depthwise-separable convolutions with dilation rates of 3, 5, and 7, respectively, each with 256 channels. Each depthwise-separable convolution combines a pointwise convolution kernel with a depthwise convolution kernel of a given kernel size that acts independently on each group of channels, and C denotes the input data. Small targets span a wide range of scales, which a single receptive field structure cannot accommodate. By using different dilation rates, the design covers a range of receptive field sizes and thus captures contextual features at near, middle, and far distances. The outputs for the different dilation rates are concatenated, and the concatenated result is then joined in series with a further feature map and passed through a convolution kernel and a ReLU layer to obtain the enhanced image features.

In the corresponding formula, ⊔ denotes the join operation, L denotes the output layer, and a separate operator denotes the tandem operation.
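The response maps described in this section can be sketched in a few lines. This is a minimal illustration under stated assumptions: the foreground logits stand in for the output of the convolution that precedes the Sigmoid, the depth weight map is taken as given, and `response_maps` and `apply_map` are hypothetical helper names rather than the authors' code.

```python
import math
from typing import List, Tuple

Grid = List[List[float]]  # one H x W map


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def response_maps(fg_logits: Grid, depth_weight: Grid) -> Tuple[Grid, Grid]:
    """Return (DA-FRM, DA-BRM) from foreground logits and a depth weight map."""
    # FRM = sigmoid(logits); DA-FRM = FRM * DWM, element by element.
    da_frm = [[sigmoid(l) * w for l, w in zip(l_row, w_row)]
              for l_row, w_row in zip(fg_logits, depth_weight)]
    # DA-BRM = all-ones matrix minus DA-FRM.
    da_brm = [[1.0 - v for v in row] for row in da_frm]
    return da_frm, da_brm


def apply_map(features: List[Grid], response: Grid) -> List[Grid]:
    """Weight every channel of a C x H x W feature tensor by one response map."""
    return [[[f * r for f, r in zip(f_row, r_row)]
             for f_row, r_row in zip(channel, response)]
            for channel in features]


frm, brm = response_maps([[0.0, 10.0]], [[1.0, 0.5]])
fg_feat = apply_map([[[2.0, 2.0]]], frm)  # foreground-weighted features
bg_feat = apply_map([[[2.0, 2.0]]], brm)  # background-weighted features
```

Because the two maps sum to one at every pixel, the foreground-weighted and background-weighted features always add back up to the original features.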

3.3. Camera BEV Feature Construction

We adopt an implicitly supervised approach to construct camera BEV features, with the goal of transforming the image data into spatially consistent feature representations by predicting the depth distribution of each pixel. This strategy projects rich image features into appropriate depth intervals in three-dimensional space, thereby generating BEV representations that are tightly coupled with the object structure and depth cues. Such representations provide high-quality feature inputs for downstream tasks such as localization, navigation, and 3D detection.

Specifically, as shown in Figure 7, the extracted image features are fed into the camera BEV encoder, which integrates the Lift–Splat–Shoot (LSS) [12] method to predict per-pixel depth distributions. This enables a more accurate estimation of each pixel’s position and depth in 3D space, forming a robust foundation for subsequent feature transformation. Each image feature point is then sampled along the corresponding camera ray into multiple discrete depth hypotheses, where the predicted depth distribution defines a probability density function for each pixel. Based on these probabilities, each feature point is rescaled according to its depth value, ensuring a precise spatial arrangement and ultimately forming a feature point cloud in 3D space.

Once the feature point cloud is obtained, it is compressed along the depth axis to aggregate the information into a compact BEV representation with its own BEV feature channel dimension. This compression step removes redundant depth variations while preserving the most informative geometric and spatial structural cues. The resulting BEV feature map encodes both geometric and semantic information from the original images in a 3D spatial context, providing an efficient and discriminative representation for downstream visual perception tasks.
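The lift-and-splat idea described above can be illustrated with a small sketch. It is not the LSS reference implementation: each pixel is assumed to come with a ground-plane ray direction already expressed in the ego frame, the depth bins and grid ranges are made-up values, and pooling is a plain sum per BEV cell. `lift_pixel` and `splat_to_bev` are hypothetical names.

```python
import math
from typing import List, Sequence, Tuple


def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)
    es = [math.exp(x - m) for x in xs]
    s = sum(es)
    return [e / s for e in es]


def lift_pixel(feature: Sequence[float], depth_logits: Sequence[float]) -> List[List[float]]:
    """Outer product of the depth distribution and the feature: D x C."""
    return [[p * f for f in feature] for p in softmax(depth_logits)]


def splat_to_bev(pixels, depth_bins, x_range, y_range, cell):
    """Sum lifted features into BEV cells.

    pixels: list of ((dx, dy), feature, depth_logits), where (dx, dy) is the
    assumed ground-plane ray direction of the pixel in the ego frame.
    """
    nx = int(round((x_range[1] - x_range[0]) / cell))
    ny = int(round((y_range[1] - y_range[0]) / cell))
    channels = len(pixels[0][1])
    bev = [[[0.0] * channels for _ in range(ny)] for _ in range(nx)]
    for (dx, dy), feature, logits in pixels:
        for depth, weighted in zip(depth_bins, lift_pixel(feature, logits)):
            ix = math.floor((depth * dx - x_range[0]) / cell)
            iy = math.floor((depth * dy - y_range[0]) / cell)
            if 0 <= ix < nx and 0 <= iy < ny:
                for c, v in enumerate(weighted):
                    bev[ix][iy][c] += v
    return bev


bev = splat_to_bev(
    pixels=[((1.0, 0.0), [2.0, 4.0], [0.0, 0.0])],  # one pixel, uniform depth
    depth_bins=[1.5, 3.5], x_range=(0.0, 4.0), y_range=(-2.0, 2.0), cell=1.0)
```

With a uniform depth distribution over two bins, the pixel's feature is split evenly between the two BEV cells that its ray passes through.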

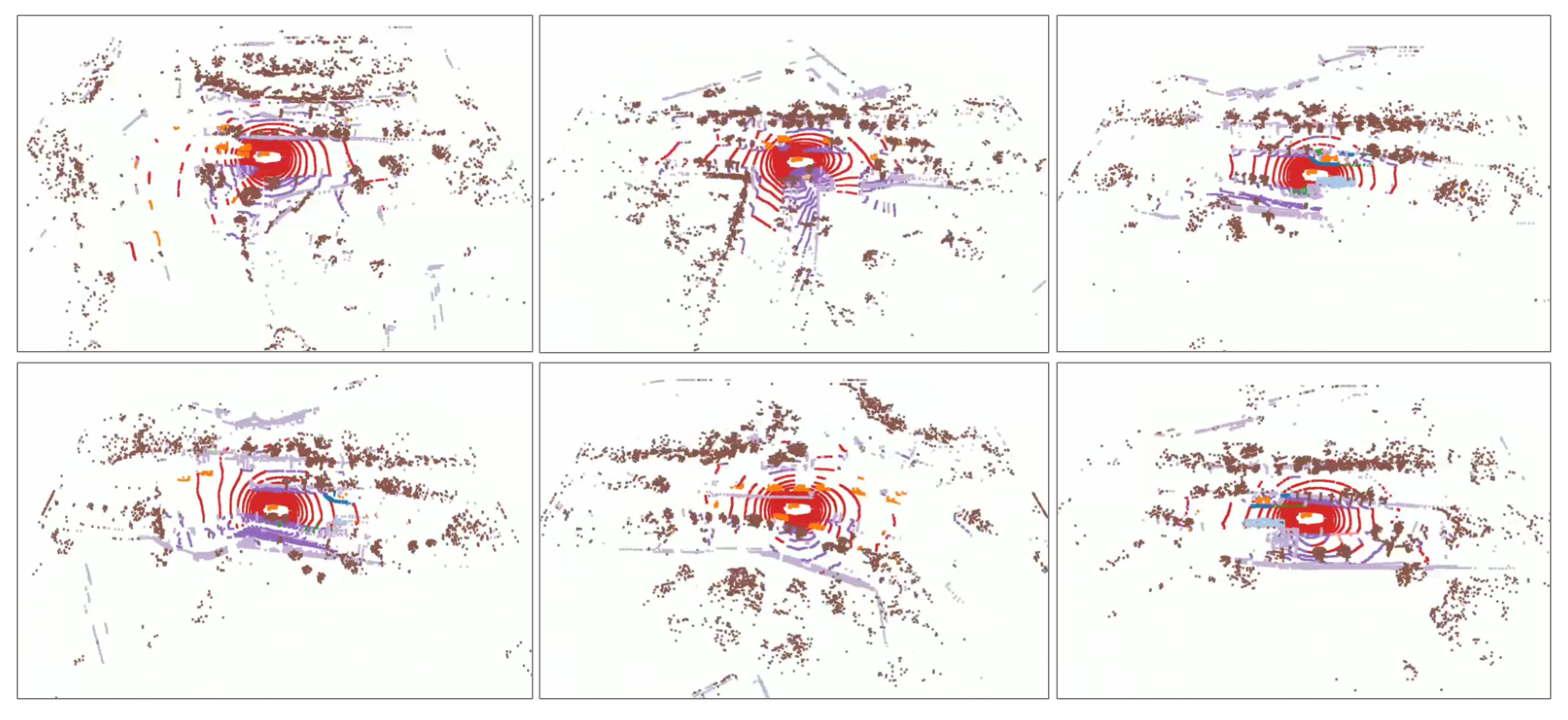

3.4. Temporally Consistent Semantic Point Fusion

To compensate for the shortcomings of LiDAR in distant target detection, we insert a temporally consistent semantic point (TCSP) fusion module before the point cloud enters the LiDAR branch, which enhances the ability of the point cloud to capture foreground objects. Its pipeline is shown in Figure 3b.

Point Association. Following [14, 61], semantic feature points are fused with the point cloud to generate semantic LiDAR points. Each semantic LiDAR point carries four LiDAR values, namely the point coordinates (x, y, z) and the intensity r, together with the category c and the confidence s. Real objects have continuous motion trajectories in the time dimension, whereas noise points appear randomly. To make the fusion results more accurate, the semantic LiDAR points are therefore filtered for temporal consistency. First, we obtain the pose transformation matrix T between neighboring frames and transform the current-frame point cloud into the coordinate system of the previous-frame point cloud. Second, a KD-Tree is used to establish correspondences between the current-frame points and the historical-frame points, and points within a given search radius are taken as candidate points. Finally, according to the distance, semantic confidence, and LiDAR reflectivity, the candidate points are assigned a location similarity score, a semantic similarity score, and a reflectivity similarity score, respectively, which are defined as follows:

Here, the location similarity score is computed from the Euclidean distance between the current point and the candidate point, scaled by a distance scale parameter. The semantic similarity score uses a binary indicator function I, which outputs 1 when the two points share the same semantic category and 0 otherwise. The reflectivity similarity score is computed from the absolute difference between the reflectance value of the current point and that of the candidate point. From these three scores, the overall scoring model is built as a weighted combination, with a spatial distance weight, a category invariance weight, and a material consistency weight.
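A minimal sketch of such a scoring model is given below. The exact functional forms are assumptions made for illustration: an exponential decay in distance for the location score, a pure indicator for the semantic score, and an exponential decay in reflectance difference for the reflectivity score. The parameter names (`sigma`, `w_d`, `w_s`, `w_r`) and their default values are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class SemPoint:
    xyz: Tuple[float, float, float]
    intensity: float   # reflectance r
    category: int      # semantic class c
    confidence: float  # semantic confidence s


def match_score(cur: SemPoint, cand: SemPoint, sigma: float = 0.5,
                w_d: float = 0.5, w_s: float = 0.3, w_r: float = 0.2) -> float:
    """Weighted combination of location, semantic, and reflectivity similarity."""
    d = math.dist(cur.xyz, cand.xyz)
    s_loc = math.exp(-d / sigma)                             # assumed form
    s_sem = 1.0 if cur.category == cand.category else 0.0    # indicator I
    s_ref = math.exp(-abs(cur.intensity - cand.intensity))   # assumed form
    return w_d * s_loc + w_s * s_sem + w_r * s_ref


a = SemPoint((1.0, 2.0, 0.0), 0.4, category=3, confidence=0.9)
same = match_score(a, SemPoint((1.0, 2.0, 0.0), 0.4, 3, 0.8))
other_class = match_score(a, SemPoint((1.0, 2.0, 0.0), 0.4, 5, 0.8))
```

With weights that sum to one, a candidate that coincides with the current point in position, category, and reflectance scores 1, and a category mismatch removes exactly the category invariance weight from the score.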

Temporal Voting. We set a score threshold and maintain a list of temporal point trajectories. For each current-frame point, we use the combined scores of its candidate points to determine whether a best candidate exists among them. If so, the point is stored in the trajectory of that candidate; if not, it is treated as a new point that starts a new trajectory. After N consecutive frames, the trajectory length is compared with the trajectory length threshold: a point whose trajectory spans all N frames is retained, whereas a point whose trajectory is shorter than the threshold is regarded as noise and rejected. In this way, the enhanced point cloud filtered by temporal consistency is obtained. The TCSP module’s algorithmic flow is shown in Algorithm 1.
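The voting procedure can be sketched as a per-frame update. This is a simplified illustration, not the authors' algorithm: a brute-force radius search stands in for the KD-Tree, trajectories that find no match in a frame are simply dropped, and the scoring function is passed in by the caller. `vote_frame`, `Trajectory`, and all parameter names are hypothetical.

```python
import math
from typing import Callable, List, Sequence, Tuple

Point = Tuple[float, float, float]


def transform(T: Sequence[Sequence[float]], p: Point) -> Point:
    """Apply a 4x4 homogeneous transform to a 3D point."""
    x, y, z = p
    return tuple(T[r][0] * x + T[r][1] * y + T[r][2] * z + T[r][3] for r in range(3))


class Trajectory:
    def __init__(self, p: Point):
        self.last = p    # most recent position of the tracked point
        self.length = 1  # number of consecutive frames observed


def vote_frame(points: List[Point], T, tracks: List[Trajectory], radius: float,
               score_thr: float, min_len: int,
               score: Callable[[Point, Point], float]):
    """Match current points to trajectories; keep points on long-enough tracks."""
    kept, next_tracks, used = [], [], set()
    for p in points:
        q = transform(T, p)  # current point in the previous frame
        best, best_s = None, score_thr
        for k, tr in enumerate(tracks):
            if k in used or math.dist(q, tr.last) > radius:
                continue  # outside the search radius or already matched
            s = score(q, tr.last)
            if s > best_s:
                best, best_s = k, s
        if best is None:
            tr = Trajectory(p)  # no acceptable candidate: new trajectory
        else:
            used.add(best)
            tr = tracks[best]
            tr.last, tr.length = p, tr.length + 1
        next_tracks.append(tr)
        if tr.length >= min_len:
            kept.append(p)
    return kept, next_tracks
```

Called once per frame with the trajectories returned by the previous call, a point is only emitted once it has been re-observed in `min_len` consecutive frames, which is what suppresses randomly appearing noise points.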

Next, we input the enhanced point cloud into the LiDAR branch, where it is first divided into regular voxels, and voxel features are extracted using a voxel encoder [3] with 3D sparse convolution. Then, we project the voxel features onto the BEV plane along the z-axis and use multiple 2D convolutional layers to obtain the LiDAR BEV feature map, which has its own number of LiDAR BEV feature channels.
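The voxelization and z-axis projection can be illustrated with a toy sketch. It makes several simplifying assumptions: each point carries a single scalar feature, a voxel feature is the mean of its points rather than the output of a sparse 3D convolution, and the projection takes the maximum over height (practical pipelines often stack the height bins into channels instead). `voxelize` and `project_to_bev` are hypothetical names.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def voxelize(points: Iterable[Tuple[float, float, float, float]],
             voxel_size: float) -> Dict[Tuple[int, int, int], float]:
    """Group (x, y, z, feature) points into voxels and average their features."""
    acc = defaultdict(lambda: [0.0, 0])
    for x, y, z, f in points:
        key = (math.floor(x / voxel_size),
               math.floor(y / voxel_size),
               math.floor(z / voxel_size))
        acc[key][0] += f
        acc[key][1] += 1
    return {k: total / n for k, (total, n) in acc.items()}


def project_to_bev(voxels: Dict[Tuple[int, int, int], float]) -> Dict[Tuple[int, int], float]:
    """Collapse the z index, keeping the maximum feature in each (x, y) column."""
    bev: Dict[Tuple[int, int], float] = {}
    for (ix, iy, _iz), f in voxels.items():
        bev[(ix, iy)] = max(bev.get((ix, iy), f), f)
    return bev


voxels = voxelize([(0.1, 0.1, 0.1, 1.0), (0.2, 0.3, 0.1, 3.0), (0.1, 0.1, 1.5, 5.0)], 1.0)
lidar_bev = project_to_bev(voxels)
```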

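| Algorithm 1 Temporally Consistent Semantic Point Fusion |
| --- |
| Require: current-frame semantic LiDAR points; previous-frame active trajectories; transformation matrix T (current to previous frame); parameters: search radius, distance scale; weights: spatial distance, category invariance, material consistency; thresholds: score, trajectory length; persistent state: trajectory counter |
| Ensure: enhanced point cloud; updated active trajectories; updated trajectory counter |
| 1: initialize the outputs |
| 2: for each current-frame point do |
| 3: compute its coordinates transformed by T |
| 4: end for |
| 5: build the search structure over the previous-frame trajectory points |
| 6: for each current-frame point (with transformed coordinates) do |
| 7: search for candidates within the search radius |
| 8: if a candidate exists then |
| 9: initialize the best candidate and the best score |
| 10: for each candidate trajectory do |
| 11–16: compute the distance, the location similarity score, the semantic similarity score, the reflectance difference, the reflectivity similarity score, and the combined score |
| 17: if the combined score exceeds the best score then |
| 18: update the best candidate and the best score |
| 19: end if |
| 20: end for |
| 21: if the best score passes the score threshold then |
| 22: update the matched trajectory (current frame coordinates) |
| 23: increase the trajectory length |
| 24: add the trajectory to the updated active trajectories |
| 25: if the trajectory length passes the length threshold then |
| 26: add the point to the enhanced point cloud |
| 27: end if |
| 28: continue |
| 29: end if |
| 30: end if |
| 31: create new trajectory: |
| 32–33: set its point and initial length using the trajectory counter |
| 34: add the new trajectory to the updated active trajectories |
| 35: if the length threshold is already met then add the point to the enhanced point cloud |
| 36: end if |
| 37: end for |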

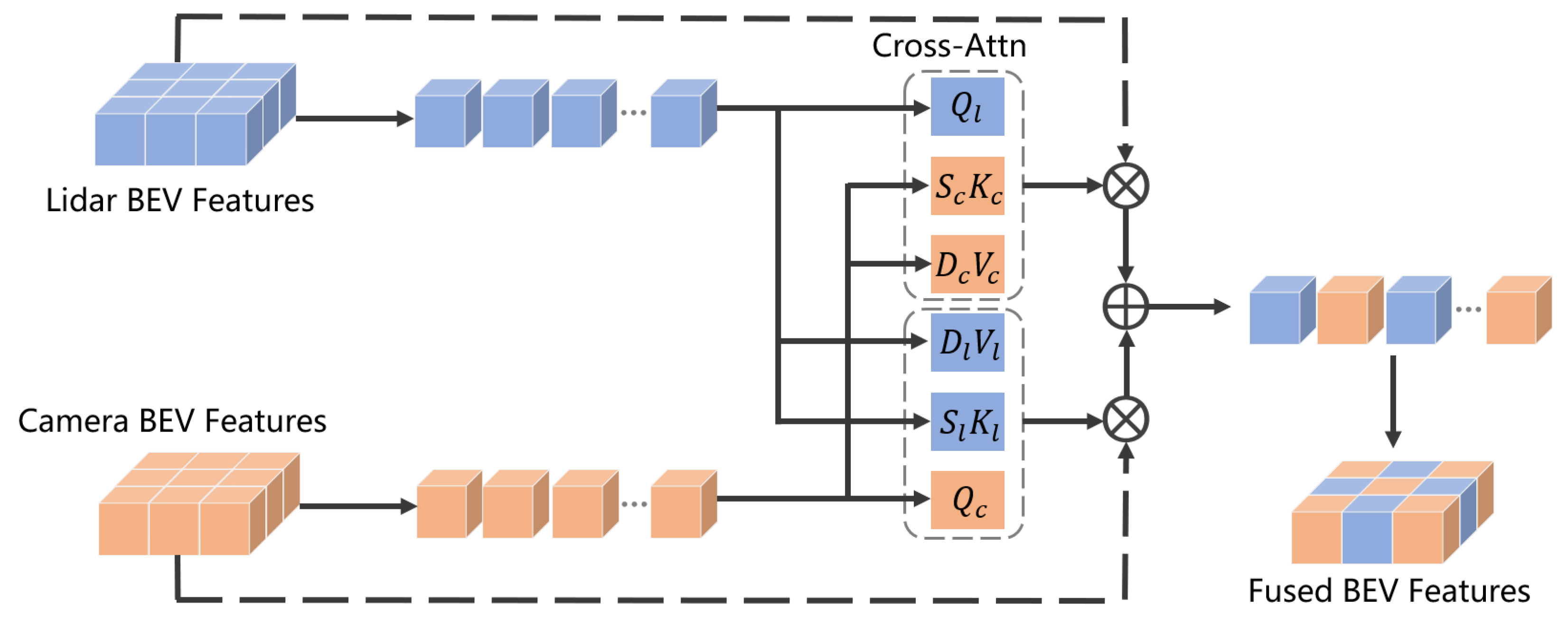

3.5. Bilateral Cross-Attention Fusion

Recent studies [16, 17] have constructed shared BEV representations through simple feature concatenation. However, these approaches suffer from fundamental limitations: on the one hand, no cross-modal interaction mechanism is established, which isolates geometric and semantic information; on the other hand, the lack of global spatial correlation modeling degrades feature fusion to a local operation. Such coarse-grained fusion fails to satisfy the complementary modality requirements of dynamic scenes. For this reason, we propose the BCAF mechanism for the cross-modal perception task, which realizes a strong synergy between the point cloud and image modalities through symmetric feature interaction.

As shown in Figure 8, the framework first adds position encodings to the image BEV feature sequence and the point cloud BEV feature sequence, respectively, to preserve spatial information. Cross-attention is then computed from Q, K, and V, which denote the query, key, and value, respectively. We create low-rank projection matrices for the key and the value in each of the two attention directions, with a small rank. Each dense projection of K and V is replaced with these low-rank projections, which reduces the parameters and floating-point operations of the linear terms and lowers the computational complexity. The core design of the BCAF module consists of two parallel cross-attention branches. In the first branch, the query vector comes from the image modality, and the key and value vectors come from the point cloud modality; its output is the image BEV features enhanced using the point cloud information. In the second branch, the query vector comes from the point cloud modality, and the key and value vectors come from the image modality; its output is the point cloud BEV features enhanced using the image information. Finally, the bilaterally enhanced features are fused into a unified representation.
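The two-branch attention described above can be sketched with plain lists. Several details are assumptions made for illustration: scaled dot-product attention with a softmax is used as the attention function, each low-rank projection is factored as a d × r matrix followed by an r × d matrix, the final fusion is a per-token concatenation, and position encodings are omitted. All function and parameter names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax_rows(a: Matrix) -> Matrix:
    out = []
    for row in a:
        m = max(row)
        e = [math.exp(x - m) for x in row]
        s = sum(e)
        out.append([x / s for x in e])
    return out


def cross_attention(queries: Matrix, context: Matrix, w_q: Matrix,
                    a_k: Matrix, b_k: Matrix, a_v: Matrix, b_v: Matrix) -> Matrix:
    """Queries from one modality attend to keys and values from the other."""
    q = matmul(queries, w_q)
    k = matmul(matmul(context, a_k), b_k)  # low-rank key projection
    v = matmul(matmul(context, a_v), b_v)  # low-rank value projection
    scale = math.sqrt(len(q[0]))
    k_t = [list(col) for col in zip(*k)]
    scores = [[s / scale for s in row] for row in matmul(q, k_t)]
    return matmul(softmax_rows(scores), v)


def bilateral_fusion(cam: Matrix, lidar: Matrix, params_cam, params_lidar) -> Matrix:
    cam_enh = cross_attention(cam, lidar, *params_cam)      # image queries
    lidar_enh = cross_attention(lidar, cam, *params_lidar)  # point cloud queries
    return [c + l for c, l in zip(cam_enh, lidar_enh)]      # assumed: concatenation


def dense_params(d: int) -> int:
    return d * d


def low_rank_params(d: int, r: int) -> int:
    return 2 * d * r
```

For example, with a feature dimension of 256 and a rank of 16 (illustrative values), one dense projection has 65,536 weights, whereas its low-rank replacement has 8,192.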

3.6. Loss Function

DSSA Module Loss. The DSSA module partitions BEV-space features into foreground and background channels to enhance the discriminative capabilities of multimodal fusion features. The module predicts a foreground saliency map and a background saliency map, both of which are supervised by a binary mask. The mask is obtained by projecting the ground truth segmentation labels of instances within 3D bounding boxes onto the BEV space. Specifically, for each annotated object, we rasterize its 3D bounding box onto the BEV grid, marking grid cells within the object region as foreground (1) and those outside as background (0). The resulting binary mask provides explicit supervision signals for both saliency maps. To address class imbalance and place a greater emphasis on hard-to-classify samples, we employ the binary focal loss [62] to supervise the foreground and background predictions separately, each with its own pixel normalization factor. Finally, the loss of the DSSA module combines the foreground and background focal losses using two loss weight hyperparameters.
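A minimal sketch of this supervision is shown below, using the standard binary focal loss. The normalization (number of foreground cells for the foreground term, number of background cells for the background term), the default `alpha` and `gamma`, and the function names are assumptions for illustration only.

```python
import math
from typing import Sequence


def binary_focal_loss(probs: Sequence[float], targets: Sequence[int],
                      alpha: float = 0.25, gamma: float = 2.0,
                      normalizer: float = 1.0, eps: float = 1e-7) -> float:
    """Standard binary focal loss, summed over cells and divided by normalizer."""
    total = 0.0
    for p, y in zip(probs, targets):
        p = min(max(p, eps), 1.0 - eps)  # avoid log(0)
        if y == 1:
            total += -alpha * (1.0 - p) ** gamma * math.log(p)
        else:
            total += -(1.0 - alpha) * p ** gamma * math.log(1.0 - p)
    return total / normalizer


def dssa_loss(fg_probs: Sequence[float], bg_probs: Sequence[float],
              mask: Sequence[int], lam_fg: float = 1.0, lam_bg: float = 1.0) -> float:
    """Weighted sum of foreground and background focal losses on a flattened BEV mask."""
    n_fg = max(sum(mask), 1)              # assumed normalization
    n_bg = max(len(mask) - sum(mask), 1)  # assumed normalization
    bg_target = [1 - m for m in mask]     # background map should fire where mask is 0
    return (lam_fg * binary_focal_loss(fg_probs, mask, normalizer=n_fg)
            + lam_bg * binary_focal_loss(bg_probs, bg_target, normalizer=n_bg))


good = dssa_loss([0.9, 0.1], [0.1, 0.9], mask=[1, 0])
bad = dssa_loss([0.1, 0.9], [0.9, 0.1], mask=[1, 0])
```

The `(1 - p) ** gamma` factor is what down-weights cells that are already classified confidently, so the loss concentrates on hard cells.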

TCSP Module Loss. The TCSP module achieves temporally consistent semantic point fusion by calculating the cross-frame association score between current-frame and historical-frame points. Each pair of a current-frame point and a historical-frame candidate point receives a matching score, which is a weighted sum of the spatial position similarity, semantic feature similarity, and reflectance similarity. To ensure matching quality, we introduce a ranking consistency loss that constrains the margin between positive and negative sample scores. The loss is defined over the set of positive pairs, that is, the point pairs in which the association between a current-frame point and a historical-frame point satisfies both the spatial distance threshold and the semantic category consistency criterion, and it involves the total number of positive matching pairs. For each current-frame point with index i, the loss uses the historical-frame positive sample point matched to point i, the set of negative samples for point i, the number of these negative samples, and the index of each negative matching point. The cross-frame matching score is evaluated between the current-frame point i and a historical-frame point j, and a margin hyperparameter on the matching score is used to widen the gap between positive and negative matching scores.
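One common way to realize such a margin constraint is a hinge-style ranking loss, sketched below. The hinge form, the averaging over negatives and over positive pairs, and the default margin are assumptions; the source only states that a margin separates positive and negative matching scores.

```python
from typing import Sequence


def ranking_loss(pos_scores: Sequence[float],
                 neg_scores: Sequence[Sequence[float]],
                 margin: float = 0.2) -> float:
    """Hinge ranking loss (assumed form).

    pos_scores[i] is the score of the positive match of current-frame point i;
    neg_scores[i] holds the scores of that point's negative candidates.
    """
    total = 0.0
    for s_pos, negs in zip(pos_scores, neg_scores):
        if not negs:
            continue  # no negatives for this point
        # Penalize every negative that comes within `margin` of the positive.
        total += sum(max(0.0, margin - s_pos + s_neg) for s_neg in negs) / len(negs)
    return total / max(len(pos_scores), 1)


loss = ranking_loss([0.9], [[0.5, 0.8]], margin=0.2)
```

In this example only the negative scored 0.8 violates the margin, so it alone contributes to the loss.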

At the same time, to avoid jumps in the trajectory after cross-frame fusion, a trajectory smoothing loss is introduced to constrain the continuity of the matching points in adjacent frames, and a reflectance consistency term is added. This loss is defined over the set of matching points on the same trajectory in consecutive frames and involves the total number of trajectory points, with i as the trajectory point index and t as the current-frame time. It uses the 3D coordinate vectors of the i-th trajectory point in the current frame and the previous frame, the pose transformation matrix from the previous-frame coordinate system to the current-frame coordinate system, and the L1 norm, which calculates the sum of the absolute values of the coordinate differences. It also uses the reflectance values of the i-th trajectory point in the current frame and the previous frame, with β as the weight controlling the reflectance term. Finally, the total loss of the TCSP module combines the ranking consistency loss and the trajectory smoothing loss using two loss weight hyperparameters.
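A sketch of a smoothing term built from these ingredients follows. The exact combination is an assumption: the previous-frame point is warped into the current frame, the L1 coordinate difference is added to β times the absolute reflectance difference, and the result is averaged over the trajectory points.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def warp(T: Sequence[Sequence[float]], p: Point) -> Point:
    """Apply a 4x4 homogeneous transform (previous frame to current frame)."""
    x, y, z = p
    return tuple(T[r][0] * x + T[r][1] * y + T[r][2] * z + T[r][3] for r in range(3))


def smoothing_loss(pairs, T_prev_to_cur, beta: float = 0.1) -> float:
    """pairs: list of (p_cur, r_cur, p_prev, r_prev) for points on one trajectory."""
    total = 0.0
    for p_cur, r_cur, p_prev, r_prev in pairs:
        warped = warp(T_prev_to_cur, p_prev)
        coord_term = sum(abs(a - b) for a, b in zip(p_cur, warped))  # L1 norm
        total += coord_term + beta * abs(r_cur - r_prev)
    return total / max(len(pairs), 1)


shift_x = [[1.0, 0.0, 0.0, 1.0],
           [0.0, 1.0, 0.0, 0.0],
           [0.0, 0.0, 1.0, 0.0],
           [0.0, 0.0, 0.0, 1.0]]
loss = smoothing_loss([((1.5, 0.0, 0.0), 0.4, (0.0, 0.0, 0.0), 0.2)], shift_x, beta=0.5)
```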

BCAF Module Loss. The BCAF module achieves efficient interaction between camera BEV features and LiDAR BEV features through a bidirectional cross-modal attention mechanism. Consider the attention matrix from the camera to the LiDAR branch and the attention matrix from the LiDAR to the camera branch, both of which are normalized. To ensure the symmetry of the cross-modal interaction, an attention consistency loss is introduced, which compares the normalized camera-to-LiDAR attention matrix with the normalized LiDAR-to-camera attention matrix using the L1 norm. The two branches also produce enhanced BEV features for the camera and the LiDAR, respectively. To reduce feature shifts between modalities, a feature consistency loss is introduced. It is defined over the set of BEV grid coordinates and involves the total number of BEV grids, with g as a position index in the grid; at each BEV grid position g, the feature vectors from the two enhanced feature maps are compared using the L1 norm. Finally, the total loss of the BCAF module combines the attention consistency loss and the feature consistency loss using two loss weight hyperparameters.
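The two consistency terms can be sketched as follows. Two assumptions are made here: symmetry is measured by comparing the camera-to-LiDAR matrix with the transpose of the LiDAR-to-camera matrix, and both terms are averaged rather than summed. The function names and default weights are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def attention_consistency(a_c2l: Matrix, a_l2c: Matrix) -> float:
    """Mean absolute difference between A_c2l and the transpose of A_l2c."""
    n_rows, n_cols = len(a_c2l), len(a_c2l[0])
    total = sum(abs(a_c2l[i][j] - a_l2c[j][i])
                for i in range(n_rows) for j in range(n_cols))
    return total / (n_rows * n_cols)


def feature_consistency(cam_feats: Matrix, lidar_feats: Matrix) -> float:
    """Mean over BEV cells of the L1 distance between the two feature vectors."""
    per_cell = [sum(abs(c - l) for c, l in zip(fc, fl))
                for fc, fl in zip(cam_feats, lidar_feats)]
    return sum(per_cell) / max(len(per_cell), 1)


def bcaf_loss(a_c2l, a_l2c, cam_feats, lidar_feats,
              lam_attn: float = 1.0, lam_feat: float = 1.0) -> float:
    return (lam_attn * attention_consistency(a_c2l, a_l2c)
            + lam_feat * feature_consistency(cam_feats, lidar_feats))


attn = attention_consistency([[0.7, 0.3], [0.4, 0.6]], [[0.7, 0.4], [0.3, 0.6]])
feat = feature_consistency([[1.0, 2.0], [3.0, 4.0]], [[1.0, 0.0], [3.0, 5.0]])
```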

Detection Loss. The loss function for 3D detection consists of two parts: an object classification loss and a 3D bounding box regression loss. The classification loss uses the standard cross-entropy to measure the difference between the predicted category probabilities and the true labels. Let N be the total number of training samples and C the total number of categories; each sample i has a predicted category probability vector and a one-hot encoding of its true category label, from which the classification loss is computed. This loss optimizes the network’s category discrimination ability and remains stable even in scenarios with a large number of categories or an uneven sample distribution.

The bounding box regression loss optimizes the consistency between the predicted box and the true box in terms of position and scale. In this paper, the L1 loss is chosen to measure the deviation between the two. It is defined over the positive samples, using the number of positive samples and the predicted and true bounding box parameters of the i-th positive sample, where the bounding box parameters include the center coordinates, 3D dimensions, and orientation. The L1 loss directly minimizes the absolute error between the predicted value and the actual value, thereby improving the accuracy of target localization. The total detection loss combines the two terms using one weight coefficient for the classification loss and one for the regression loss.
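The detection loss can be sketched from these definitions. The averaging over samples, the seven-parameter box layout (center, dimensions, yaw), and the default weight values are assumptions for illustration; only the cross-entropy and L1 forms follow directly from the text.

```python
import math
from typing import Sequence


def cross_entropy(probs: Sequence[Sequence[float]],
                  one_hot: Sequence[Sequence[int]], eps: float = 1e-12) -> float:
    """Cross-entropy between predicted probabilities and one-hot labels, averaged over N."""
    n = len(probs)
    total = 0.0
    for p_i, y_i in zip(probs, one_hot):
        total -= sum(y * math.log(max(p, eps)) for p, y in zip(p_i, y_i))
    return total / n


def l1_box_loss(pred: Sequence[Sequence[float]], true: Sequence[Sequence[float]]) -> float:
    """L1 distance between predicted and true box parameters, averaged over positives."""
    n_pos = max(len(pred), 1)
    return sum(sum(abs(a - b) for a, b in zip(p, t)) for p, t in zip(pred, true)) / n_pos


def detection_loss(probs, one_hot, pred_boxes, true_boxes,
                   lam_cls: float = 1.0, lam_reg: float = 0.25) -> float:
    return (lam_cls * cross_entropy(probs, one_hot)
            + lam_reg * l1_box_loss(pred_boxes, true_boxes))


# Boxes use an assumed layout: (x, y, z, length, width, height, yaw).
cls = cross_entropy([[0.5, 0.5]], [[1, 0]])
reg = l1_box_loss([[0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 0.0]],
                  [[1.0, 0.0, 0.0, 1.0, 1.0, 2.0, 0.0]])
total = detection_loss([[0.5, 0.5]], [[1, 0]],
                       [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 0.0]],
                       [[1.0, 0.0, 0.0, 1.0, 1.0, 2.0, 0.0]])
```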

The final total loss of SETR-Fusion consists of the following four parts: