Hybrid-Recursive-Refinement Network for Camouflaged Object Detection

Abstract

1. Introduction

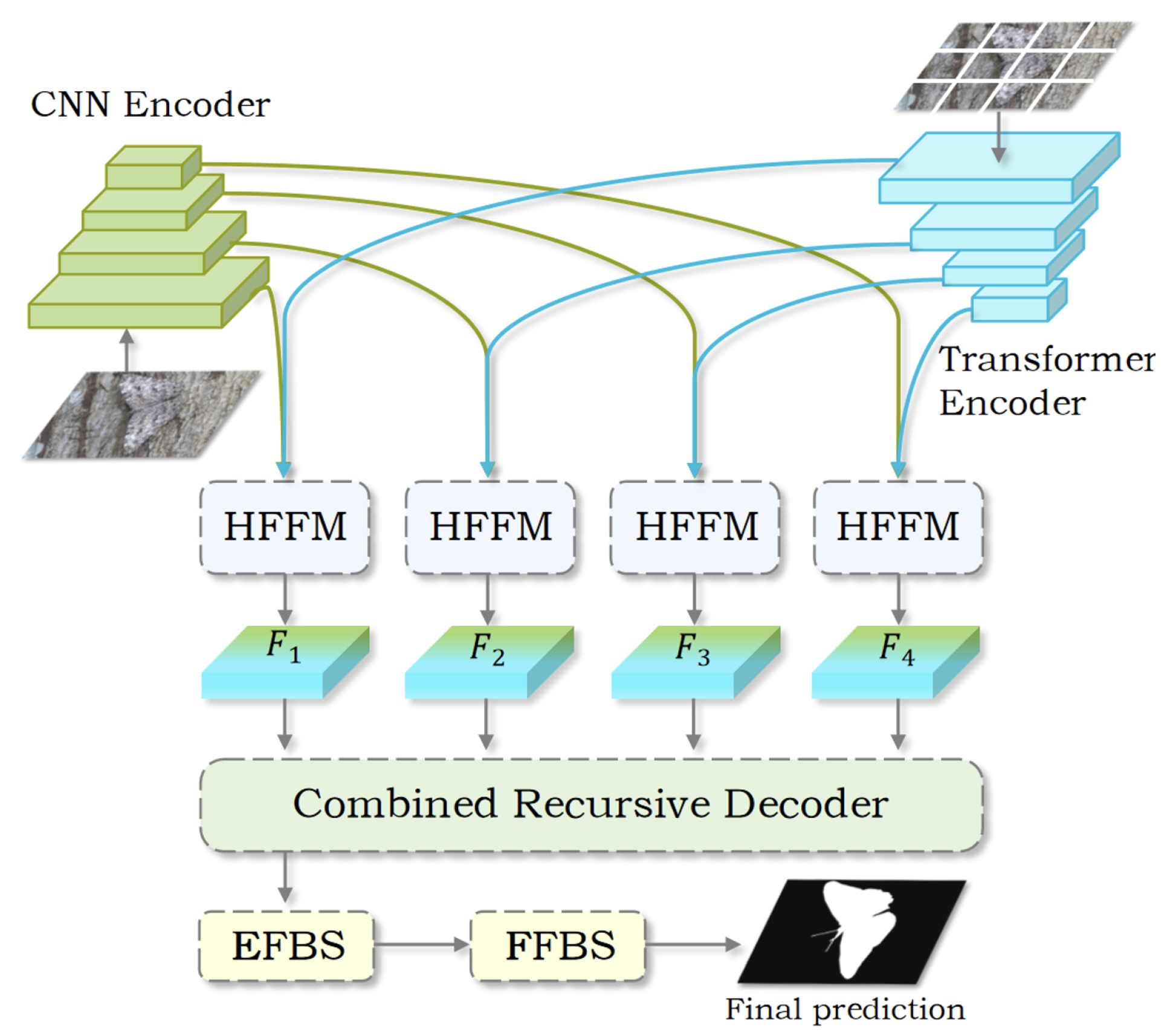

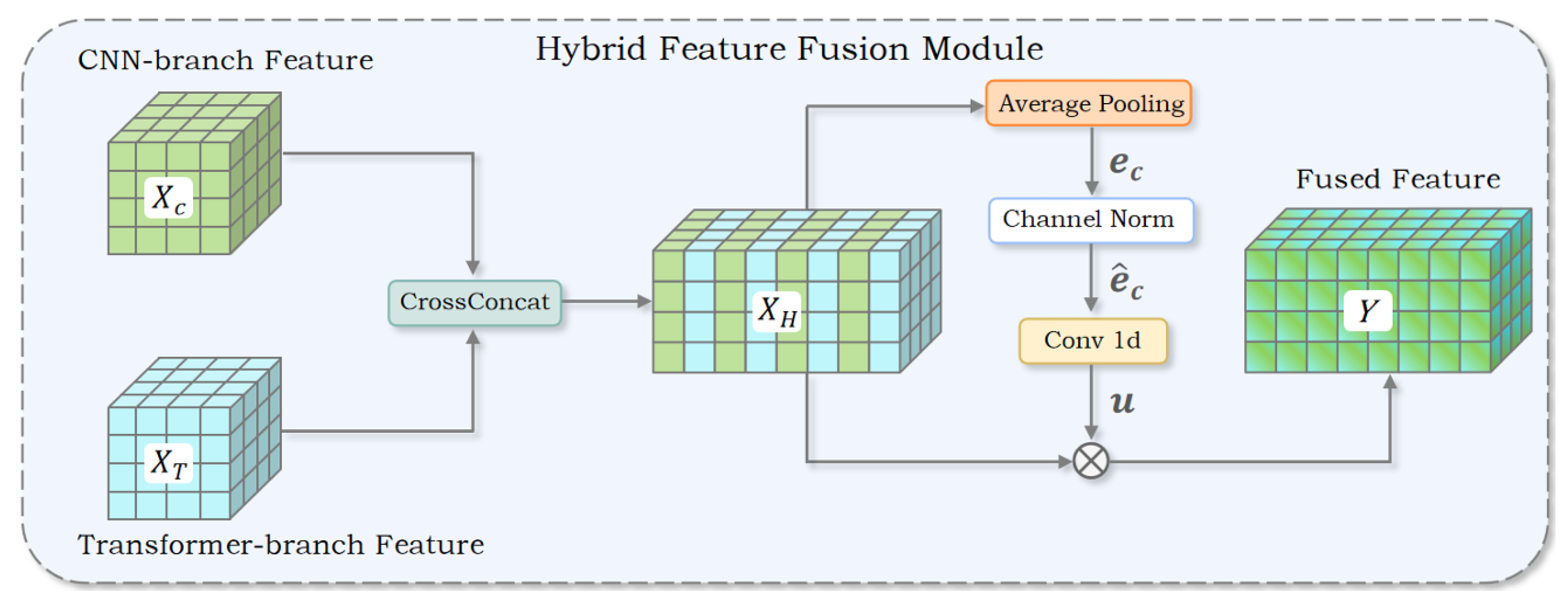

- We introduce a hybrid architecture, HRRNet, that integrates features extracted by CNN and Transformer encoders through a Hybrid Feature Fusion Module (HFFM). The module performs decoupled channel-wise fusion to exploit the complementary strengths of the two feature extractors.

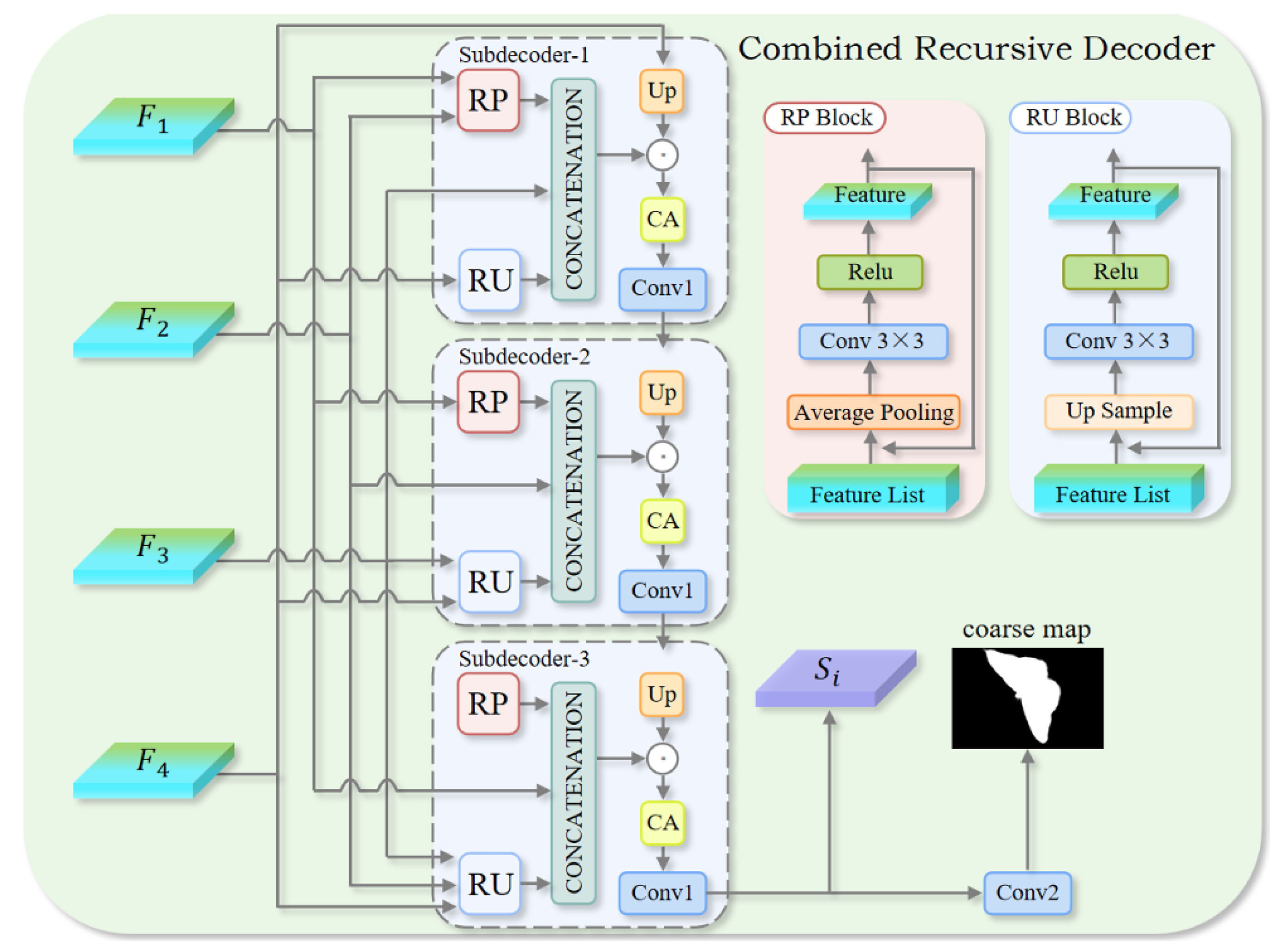

- We design a Combined Recursive Decoder (CRD) that adapts its feature fusion strategy to the level of the feature hierarchy. By fusing low-level detail with high-level semantics, the decoder estimates the probable locations of camouflaged targets.

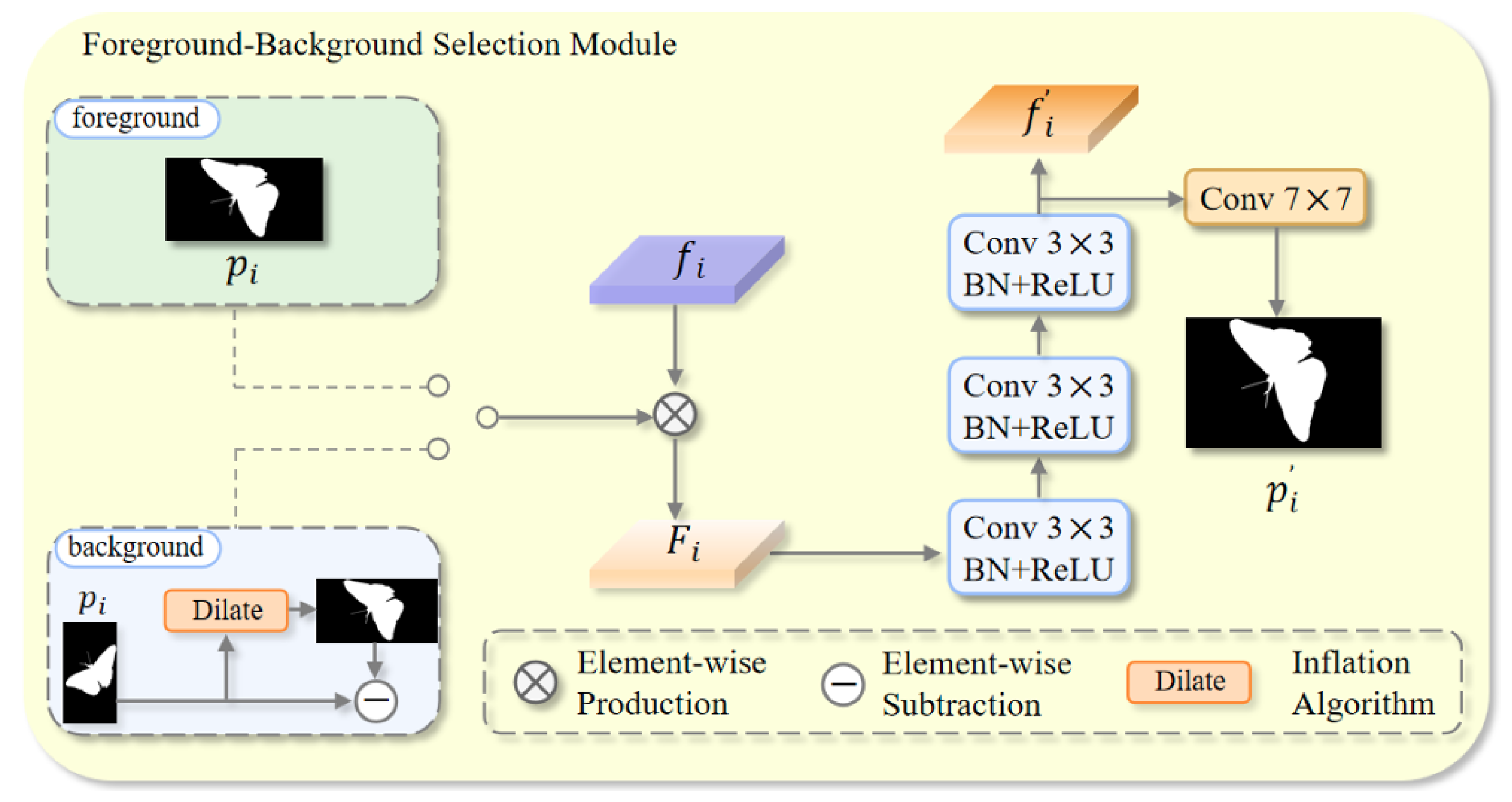

- We present a Foreground–Background Selection (FBS) module that selectively focuses on either foreground or background regions to iteratively refine the segmentation results, enabling accurate identification of camouflaged object boundaries.
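The idea of focusing on either foreground or background regions can be illustrated with a small, generic sketch. It is not the FBS module itself, whose formulation is not given in this section. It assumes a coarse logit map is squashed to probabilities, used directly as foreground weights, and inverted for background weights (a reverse-attention style choice). The names `select_weights` and `apply_weights` are hypothetical.

```python
import math
from typing import List

Grid = List[List[float]]


def sigmoid(x: float) -> float:
    """Map a logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def select_weights(coarse_logits: Grid, focus: str = "foreground") -> Grid:
    """Turn a coarse logit map into per-pixel attention weights.

    Assumption (not from the paper): foreground weights are the
    probabilities themselves, background weights are their complement.
    """
    probs = [[sigmoid(v) for v in row] for row in coarse_logits]
    if focus == "foreground":
        return probs
    if focus == "background":
        return [[1.0 - p for p in row] for row in probs]
    raise ValueError("focus must be 'foreground' or 'background'")


def apply_weights(features: Grid, weights: Grid) -> Grid:
    """Element-wise re-weighting of a single-channel feature map."""
    return [
        [f * w for f, w in zip(f_row, w_row)]
        for f_row, w_row in zip(features, weights)
    ]


if __name__ == "__main__":
    logits = [[2.0, -2.0], [0.0, 4.0]]
    feats = [[1.0, 1.0], [1.0, 1.0]]
    print(apply_weights(feats, select_weights(logits, "foreground")))
    print(apply_weights(feats, select_weights(logits, "background")))
```

In an iterative scheme, the re-weighted features from one pass would feed the prediction of the next coarse map. How HRRNet does this is left to Section 3.4.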

2. Related Work

2.1. Camouflaged Object Detection

2.2. Complementary Information Strategy

3. Method

3.1. Overall Architecture

3.2. Hybrid Feature Fusion Module

3.3. Combined Recursive Decoder

3.4. Foreground–Background Selection Module

3.5. Loss Function

4. Experiments and Results

4.1. Experiment Settings

4.1.1. Datasets

4.1.2. Implementation Details

4.1.3. Evaluation Metrics

4.2. Comparison with State of the Art

4.2.1. Quantitative Evaluation

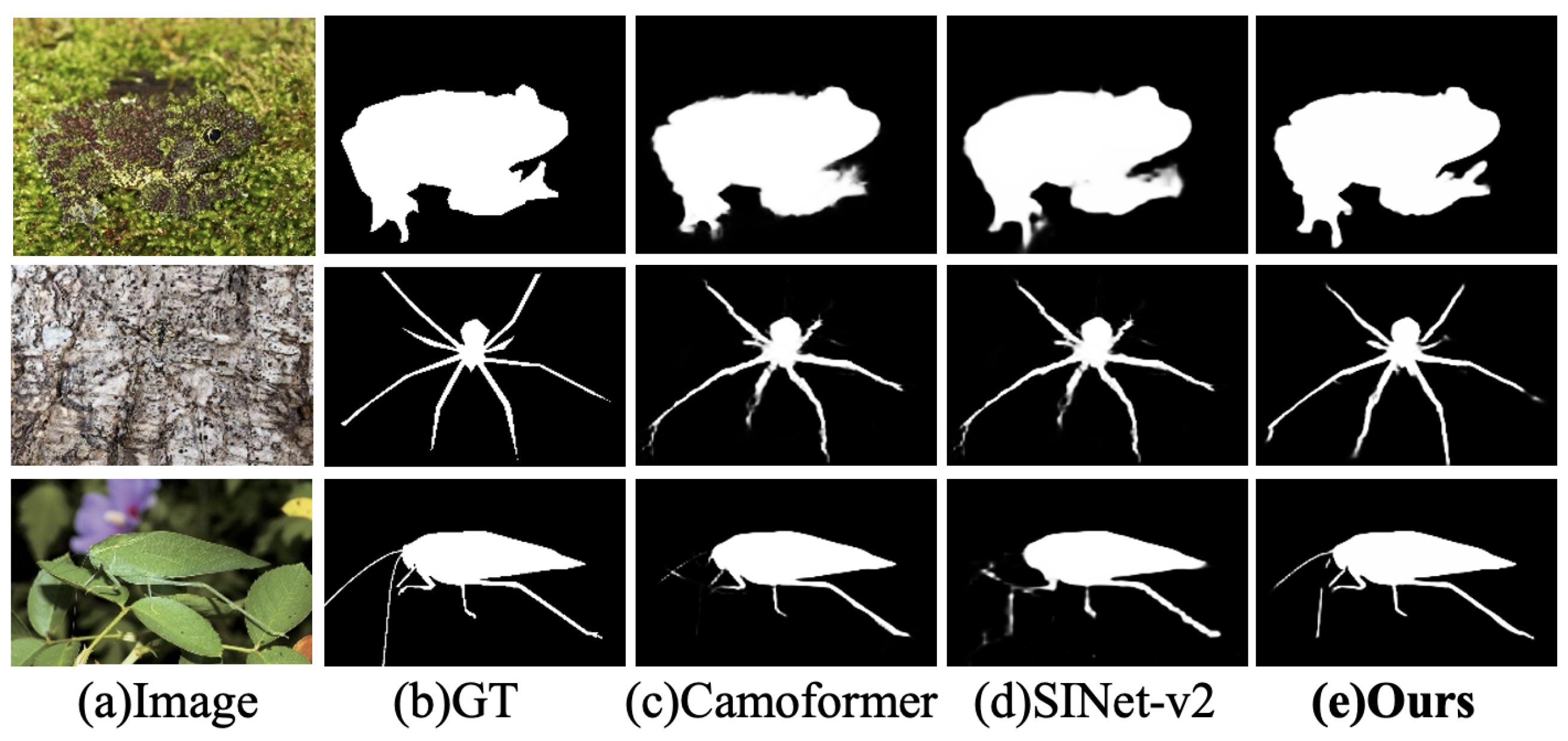

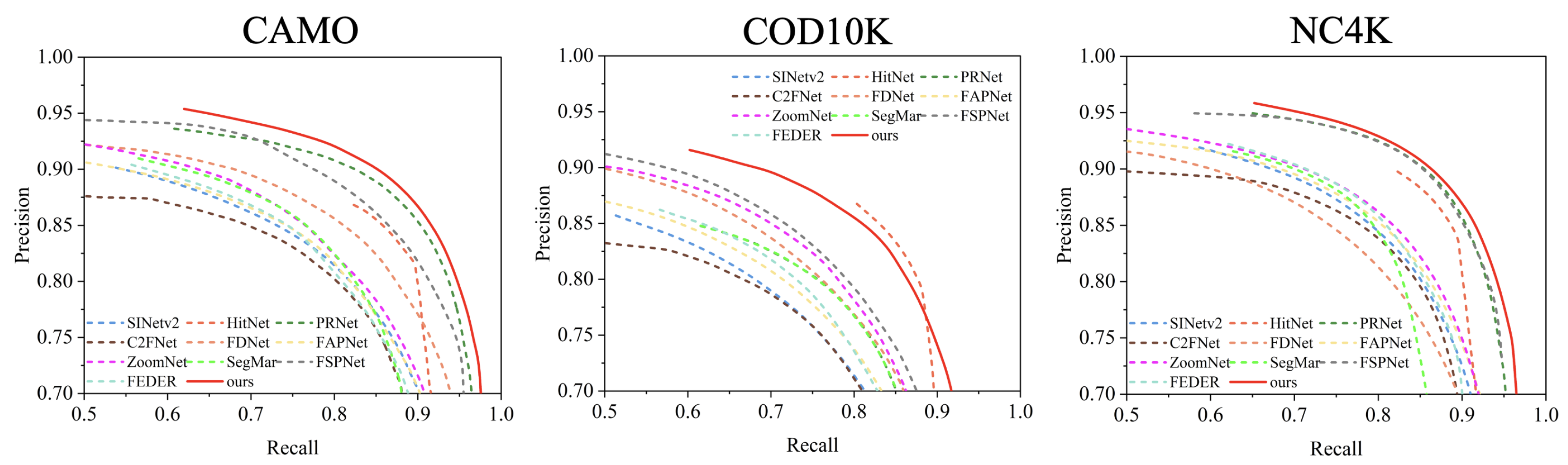

4.2.2. Qualitative Evaluation

4.2.3. Effectiveness Comparison of the HFFM and Dual-Encoder Strategy

4.2.4. Effectiveness Comparison of Combined Recursive Decoder

4.2.5. Effectiveness Comparison of Foreground–Background Selection Module

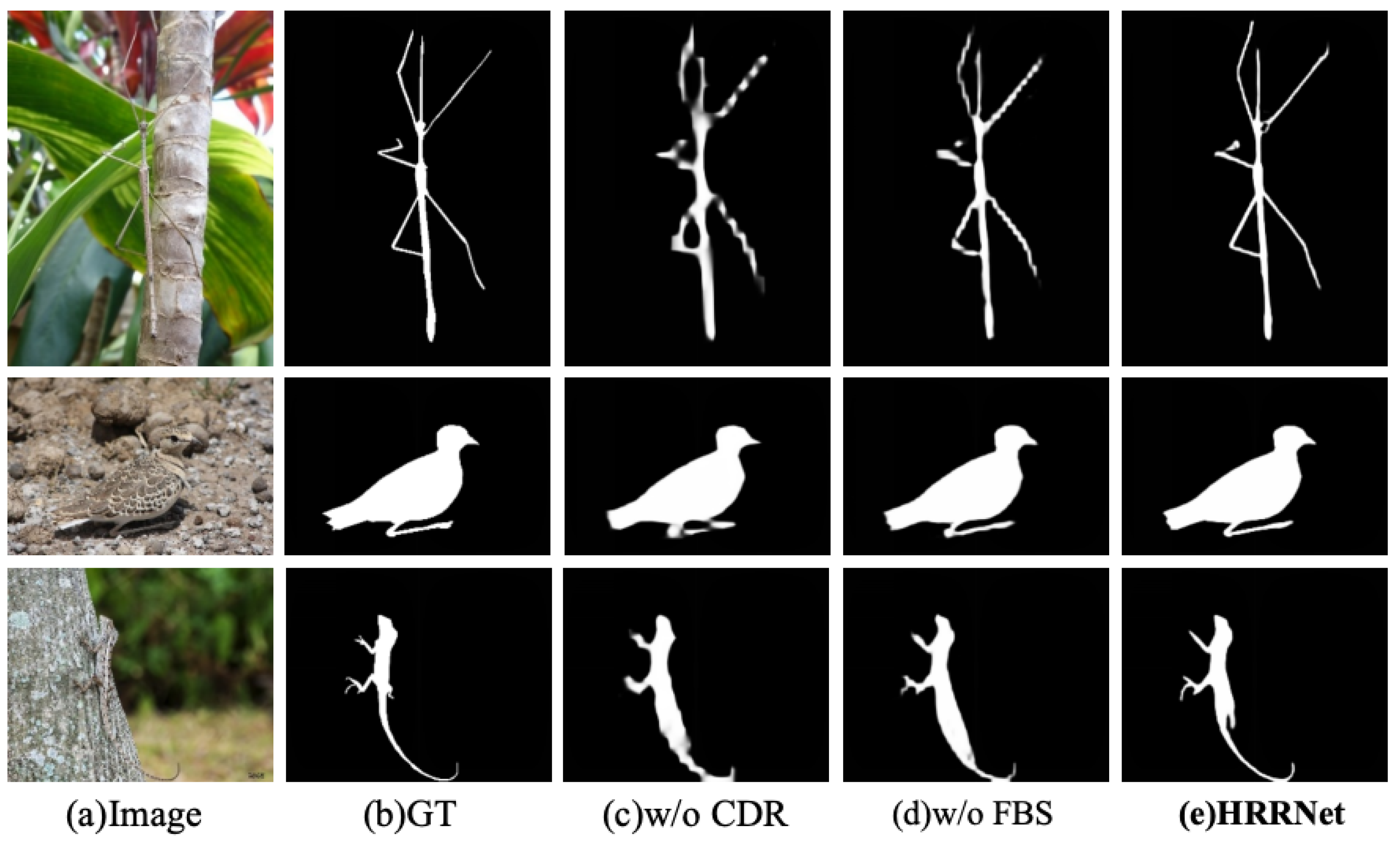

4.2.6. Qualitative Ablation Comparison

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Xiao, F.; Hu, S.; Shen, Y.; Fang, C.; Huang, J.; Tang, L.; Yang, Z.; Li, X.; He, C. A Survey of Camouflaged Object Detection and Beyond. CAAI Artif. Intell. Res. 2024, 3, 9150044.

2. Fan, D.P.; Ji, G.P.; Zhou, T.; Chen, G.; Fu, H.; Shen, J.; Shao, L. Pranet: Parallel reverse attention network for polyp segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Berlin/Heidelberg, Germany, 2020; pp. 263–273.

3. Wu, Y.H.; Gao, S.H.; Mei, J.; Xu, J.; Fan, D.P.; Zhang, R.G.; Cheng, M.M. Jcs: An explainable covid-19 diagnosis system by joint classification and segmentation. IEEE Trans. Image Process. 2021, 30, 3113–3126.

4. Xiong, Y.J.; Gao, Y.B.; Wu, H.; Yao, Y. Attention u-net with feature fusion module for robust defect detection. J. Circuits Syst. Comput. 2021, 30, 2150272.

5. Wang, L.; Yang, J.; Zhang, Y.; Wang, F.; Zheng, F. Depth-aware concealed crop detection in dense agricultural scenes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 17–18 June 2024; pp. 17201–17211.

6. Sengottuvelan, P.; Wahi, A.; Shanmugam, A. Performance of decamouflaging through exploratory image analysis. In Proceedings of the 2008 First International Conference on Emerging Trends in Engineering and Technology, Nagpur, India, 16–18 July 2008; pp. 6–10.

7. Kavitha, C.; Rao, B.P.; Govardhan, A. An efficient content based image retrieval using color and texture of image sub blocks. Int. J. Eng. Sci. Technol. 2011, 3, 1060–1068.

8. He, C.; Li, K.; Zhang, Y.; Tang, L.; Zhang, Y.; Guo, Z.; Li, X. Camouflaged object detection with feature decomposition and edge reconstruction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 22046–22055.

9. He, C.; Li, K.; Zhang, Y.; Zhang, Y.; You, C.; Guo, Z.; Li, X.; Danelljan, M.; Yu, F. Strategic Preys Make Acute Predators: Enhancing Camouflaged Object Detectors by Generating Camouflaged Objects. arXiv 2023, arXiv:2308.03166.

10. Fang, X.; Chen, J.; Wang, Y.; Jiang, M.; Ma, J.; Wang, X. EPFDNet: Camouflaged object detection with edge perception in frequency domain. Image Vis. Comput. 2025, 154, 105358.

11. Yin, C.; Yang, K.; Li, J.; Li, X.; Wu, Y. Camouflaged object detection via complementary information-selected network based on visual and semantic separation. IEEE Trans. Ind. Inform. 2024, 20, 12871–12881.

12. Luo, Z.; Liu, N.; Zhao, W.; Yang, X.; Zhang, D.; Fan, D.P.; Khan, F.; Han, J. Vscode: General visual salient and camouflaged object detection with 2d prompt learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 17169–17180.

13. Zhao, J.; Li, X.; Yang, F.; Zhai, Q.; Luo, A.; Jiao, Z.; Cheng, H. Focusdiffuser: Perceiving local disparities for camouflaged object detection. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2024; pp. 181–198.

14. Wang, Y.; Feng, L.; Sun, W.; Wang, L.; Yang, G.; Chen, B. A lightweight CNN-Transformer network for pixel-based crop mapping using time-series Sentinel-2 imagery. Comput. Electron. Agric. 2024, 226, 109370.

15. Wang, Y.; Zhang, T.; Zhao, L.; Hu, L.; Wang, Z.; Niu, Z.; Cheng, P.; Chen, K.; Zeng, X.; Wang, Z.; et al. Ringmo-lite: A remote sensing lightweight network with cnn-transformer hybrid framework. IEEE Trans. Geosci. Remote. Sens. 2024, 62, 1–20.

16. Sun, F.; Ren, P.; Yin, B.; Wang, F.; Li, H. CATNet: A cascaded and aggregated transformer network for RGB-D salient object detection. IEEE Trans. Multimed. 2023, 26, 2249–2262.

17. Ren, J.; Hu, X.; Zhu, L.; Xu, X.; Xu, Y.; Wang, W.; Deng, Z.; Heng, P.A. Deep texture-aware features for camouflaged object detection. IEEE Trans. Circuits Syst. Video Technol. 2021, 33, 1157–1167.

18. Ji, G.P.; Fan, D.P.; Chou, Y.C.; Dai, D.; Liniger, A.; Van Gool, L. Deep gradient learning for efficient camouflaged object detection. Mach. Intell. Res. 2023, 20, 92–108.

19. Xiao, J.; Chen, T.; Hu, X.; Zhang, G.; Wang, S. Boundary-guided context-aware network for camouflaged object detection. Neural Comput. Appl. 2023, 35, 15075–15093.

20. Liu, Z.; Deng, X.; Jiang, P.; Lv, C.; Min, G.; Wang, X. Edge perception camouflaged object detection under frequency domain reconstruction. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 10194–10207.

21. Fan, D.P.; Ji, G.P.; Cheng, M.M.; Shao, L. Concealed object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 6024–6042.

22. Yin, B.; Zhang, X.; Fan, D.P.; Jiao, S.; Cheng, M.M.; Van Gool, L.; Hou, Q. Camoformer: Masked separable attention for camouflaged object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2024, 46, 10362–10374.

23. Le, T.N.; Nguyen, T.V.; Nie, Z.; Tran, M.T.; Sugimoto, A. Anabranch network for camouflaged object segmentation. Comput. Vis. Image Underst. 2019, 184, 45–56.

24. Fan, D.P.; Ji, G.P.; Sun, G.; Cheng, M.M.; Shen, J.; Shao, L. Camouflaged object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 2777–2787.

25. Lv, Y.; Zhang, J.; Dai, Y.; Li, A.; Liu, B.; Barnes, N.; Fan, D.P. Simultaneously localize, segment and rank the camouflaged objects. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Virtual, 19–25 June 2021; pp. 11591–11601.

26. Pang, Y.; Zhao, X.; Xiang, T.Z.; Zhang, L.; Lu, H. Zoom in and out: A mixed-scale triplet network for camouflaged object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 2160–2170.

27. Sun, Y.; Wang, S.; Chen, C.; Xiang, T.Z. Boundary-guided camouflaged object detection. arXiv 2022, arXiv:2207.00794.

28. He, R.; Dong, Q.; Lin, J.; Lau, R.W. Weakly-supervised camouflaged object detection with scribble annotations. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 781–789.

29. Huang, Z.; Dai, H.; Xiang, T.Z.; Wang, S.; Chen, H.X.; Qin, J.; Xiong, H. Feature shrinkage pyramid for camouflaged object detection with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 5557–5566.

30. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

31. Cong, R.; Sun, M.; Zhang, S.; Zhou, X.; Zhang, W.; Zhao, Y. Frequency perception network for camouflaged object detection. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 1179–1189.

32. Song, Z.; Kang, X.; Wei, X.; Liu, H.; Dian, R.; Li, S. Fsnet: Focus scanning network for camouflaged object detection. IEEE Trans. Image Process. 2023, 32, 2267–2278.

33. Wang, W.; Xie, E.; Li, X.; Fan, D.P.; Song, K.; Liang, D.; Lu, T.; Luo, P.; Shao, L. Pyramid vision transformer: A versatile backbone for dense prediction without convolutions. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 568–578.

34. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin transformer: Hierarchical vision transformer using shifted windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 10012–10022.

35. Wei, J.; Wang, S.; Huang, Q. F3Net: Fusion, feedback and focus for salient object detection. In Proceedings of the AAAI Conference on Artificial Intelligence, New York, NY, USA, 7–12 February 2020; Volume 34, pp. 12321–12328.

36. Wu, Z.; Su, L.; Huang, Q. Cascaded partial decoder for fast and accurate salient object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 3907–3916.

37. Hou, Q.; Cheng, M.M.; Hu, X.; Borji, A.; Tu, Z.; Torr, P.H. Deeply supervised salient object detection with short connections. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3203–3212.

38. Zhang, Z.; Lin, Z.; Xu, J.; Jin, W.D.; Lu, S.P.; Fan, D.P. Bilateral attention network for RGB-D salient object detection. IEEE Trans. Image Process. 2021, 30, 1949–1961.

39. Mei, H.; Ji, G.P.; Wei, Z.; Yang, X.; Wei, X.; Fan, D.P. Camouflaged object segmentation with distraction mining. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 8772–8781.

40. Yang, Z.; Zhu, L.; Wu, Y.; Yang, Y. Gated channel transformation for visual recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 11794–11803.

41. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

42. Yu, J.; Jiang, Y.; Wang, Z.; Cao, Z.; Huang, T. Unitbox: An advanced object detection network. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; pp. 516–520.

43. Skurowski, P.; Abdulameer, H.; Błaszczyk, J.; Depta, T.; Kornacki, A.; Kozieł, P. Animal camouflage analysis: Chameleon database. 2018; 2, 7, unpublished manuscript.

44. Fan, D.P.; Gong, C.; Cao, Y.; Ren, B.; Cheng, M.M.; Borji, A. Enhanced-alignment measure for binary foreground map evaluation. arXiv 2018, arXiv:1805.10421.

45. Margolin, R.; Zelnik-Manor, L.; Tal, A. How to evaluate foreground maps? In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 248–255.

46. Perazzi, F.; Krähenbühl, P.; Pritch, Y.; Hornung, A. Saliency filters: Contrast based filtering for salient region detection. In Proceedings of the 2012 IEEE Conference on Computer Vision and Pattern Recognition, Providence, RI, USA, 16–21 June 2012; pp. 733–740.

47. Zhai, Q.; Li, X.; Yang, F.; Chen, C.; Cheng, H.; Fan, D.P. Mutual graph learning for camouflaged object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 12997–13007.

48. Sun, Y.; Chen, G.; Zhou, T.; Zhang, Y.; Liu, N. Context-aware cross-level fusion network for camouflaged object detection. arXiv 2021, arXiv:2105.12555.

49. Jia, Q.; Yao, S.; Liu, Y.; Fan, X.; Liu, R.; Luo, Z. Segment, magnify and reiterate: Detecting camouflaged objects the hard way. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 4713–4722.

50. Zhou, T.; Zhou, Y.; Gong, C.; Yang, J.; Zhang, Y. Feature aggregation and propagation network for camouflaged object detection. IEEE Trans. Image Process. 2022, 31, 7036–7047.

51. Hao, C.; Yu, Z.; Liu, X.; Xu, J.; Yue, H.; Yang, J. A simple yet effective network based on vision transformer for camouflaged object and salient object detection. IEEE Trans. Image Process. 2025, 34, 608–622.

52. Zhang, D.; Cheng, L.; Liu, Y.; Wang, X.; Han, J. Mamba capsule routing towards part-whole relational camouflaged object detection. Int. J. Comput. Vis. 2025, 1–21.

| Method | Year | Backbone | CAMO-Test | | | | COD10K-Test | | | | NC4K | | | | CHAMELEON | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | | | | | | M↓ | | | | M↓ | | | | M↓ | | | | M↓ |
| MGL [47] | 2021-CVPR | ResNet50 | 0.775 | 0.812 | 0.736 | 0.088 | 0.814 | 0.852 | 0.711 | 0.035 | 0.833 | 0.867 | 0.782 | 0.052 | 0.893 | 0.917 | 0.833 | 0.031 |
| C2FNet [48] | 2021-IJCAI | Res2Net50 | 0.796 | 0.854 | 0.762 | 0.080 | 0.813 | 0.890 | 0.723 | 0.036 | 0.838 | 0.897 | 0.765 | 0.049 | 0.888 | 0.935 | 0.828 | 0.032 |
| SINet-v2 [21] | 2022-TPAMI | Res2Net50 | 0.820 | 0.882 | 0.782 | 0.070 | 0.815 | 0.887 | 0.718 | 0.037 | 0.847 | 0.903 | 0.805 | 0.048 | 0.888 | 0.942 | 0.835 | 0.030 |
| ZoomNet [26] | 2022-CVPR | ResNet50 | 0.820 | 0.877 | 0.794 | 0.066 | 0.838 | 0.888 | 0.766 | 0.029 | 0.853 | 0.896 | 0.818 | 0.043 | 0.902 | 0.943 | 0.864 | 0.023 |
| FEDER [8] | 2023-CVPR | ResNet50 | 0.807 | 0.873 | 0.785 | 0.069 | 0.823 | 0.900 | 0.740 | 0.032 | 0.846 | 0.905 | 0.817 | 0.045 | 0.894 | 0.947 | 0.855 | 0.028 |
| DGNet [18] | 2023-MIR | EfficientNet | 0.839 | 0.901 | 0.806 | 0.057 | 0.822 | 0.896 | 0.728 | 0.033 | 0.857 | 0.911 | 0.814 | 0.042 | 0.890 | 0.938 | 0.834 | 0.029 |
| FSPNet [29] | 2023-CVPR | ViT | 0.857 | 0.899 | 0.830 | 0.050 | 0.851 | 0.895 | 0.769 | 0.026 | 0.879 | 0.915 | 0.843 | 0.035 | 0.908 | 0.943 | 0.867 | 0.023 |
| Camouflageator [9] | 2024-ICLR | ResNet50 | 0.829 | 0.891 | 0.805 | 0.066 | 0.843 | 0.920 | 0.763 | 0.028 | 0.869 | 0.922 | 0.835 | 0.041 | 0.903 | 0.952 | 0.863 | 0.026 |
| VSSNet [11] | 2024-TII | PVTv2 | 0.873 | 0.939 | 0.844 | 0.043 | 0.873 | 0.941 | 0.805 | 0.021 | 0.889 | 0.942 | 0.855 | 0.030 | - | - | - | - |
| VSCode [12] | 2024-CVPR | Swin-S | 0.873 | 0.926 | 0.861 | 0.043 | 0.873 | 0.935 | 0.818 | 0.021 | 0.889 | 0.936 | 0.870 | 0.030 | - | - | - | - |
| FocusDiffuser [13] | 2024-ECCV | ViT | 0.869 | 0.931 | 0.842 | 0.043 | 0.863 | 0.934 | 0.785 | 0.024 | 0.882 | 0.933 | 0.840 | 0.032 | - | - | - | - |
| CamoFormer [22] | 2024-TPAMI | Swin-B | 0.876 | 0.935 | 0.832 | 0.043 | 0.862 | 0.932 | 0.772 | 0.024 | 0.888 | 0.941 | 0.840 | 0.031 | 0.891 | 0.953 | 0.829 | 0.026 |
| EPFDNet [10] | 2025-IVC | Res2Net50 | 0.817 | 0.886 | 0.757 | 0.068 | 0.815 | 0.894 | 0.700 | 0.033 | 0.844 | 0.906 | 0.780 | 0.044 | - | - | - | - |
| SENet [51] | 2025-TIP | ViT | - | - | - | - | 0.865 | 0.925 | 0.780 | 0.024 | 0.889 | 0.933 | 0.843 | 0.032 | - | - | - | - |
| MCRNet [52] | 2025-IJCV | Swin | 0.854 | 0.915 | 0.847 | 0.054 | 0.854 | 0.924 | 0.807 | 0.026 | 0.875 | 0.930 | 0.857 | 0.036 | - | - | - | - |
| HRRNet | Ours | Swin-Res2Net | 0.876 | 0.928 | 0.843 | 0.043 | 0.867 | 0.932 | 0.785 | 0.023 | 0.888 | 0.933 | 0.845 | 0.032 | 0.911 | 0.948 | 0.863 | 0.023 |
| HRRNet | Ours | Swin-ResNet | 0.874 | 0.926 | 0.842 | 0.045 | 0.866 | 0.931 | 0.783 | 0.023 | 0.886 | 0.931 | 0.847 | 0.032 | 0.910 | 0.948 | 0.862 | 0.024 |
| HRRNet | Ours | PVT-Res2Net | 0.883 | 0.935 | 0.853 | 0.041 | 0.869 | 0.941 | 0.797 | 0.021 | 0.891 | 0.942 | 0.855 | 0.030 | 0.918 | 0.959 | 0.869 | 0.021 |
| HRRNet | Ours | PVT-ResNet | 0.882 | 0.932 | 0.849 | 0.042 | 0.863 | 0.937 | 0.795 | 0.021 | 0.889 | 0.941 | 0.857 | 0.031 | 0.914 | 0.956 | 0.867 | 0.023 |
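The M↓ column in the comparison table is read here as mean absolute error (MAE), the lower-is-better metric conventionally attributed to [46]. Under that assumption, a minimal sketch of the computation on a single image follows. The function name `mean_absolute_error` and the nested-list representation are illustrative choices, and both maps are assumed to be normalized to [0, 1] and of equal size.

```python
from typing import List, Sequence


def mean_absolute_error(
    pred: Sequence[Sequence[float]],
    gt: Sequence[Sequence[float]],
) -> float:
    """Average per-pixel absolute difference between two equally sized maps.

    `pred` is a predicted probability map in [0, 1]; `gt` is the binary
    ground-truth mask (0 = background, 1 = camouflaged object).
    """
    if len(pred) != len(gt):
        raise ValueError("maps must have the same number of rows")
    total = 0.0
    count = 0
    for pred_row, gt_row in zip(pred, gt):
        if len(pred_row) != len(gt_row):
            raise ValueError("rows must have the same length")
        for p, g in zip(pred_row, gt_row):
            total += abs(p - g)
            count += 1
    if count == 0:
        raise ValueError("maps must not be empty")
    return total / count


if __name__ == "__main__":
    prediction: List[List[float]] = [[0.0, 1.0], [0.5, 0.5]]
    mask: List[List[float]] = [[0.0, 1.0], [1.0, 0.0]]
    print(mean_absolute_error(prediction, mask))  # 0.25
```

A dataset-level score would average this value over all test images.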

| | MGL [47] | C2FNet [48] | ZoomNet [26] | FEDER [8] | SegMaR [49] | FSPNet [29] | HRRNet (Swin-R2N) | HRRNet (Swin-R50) | HRRNet (PVT-R2N) | HRRNet (PVT-R50) |
|---|---|---|---|---|---|---|---|---|---|---|
| Parameters (M) | 63.60 | 28.41 | 32.38 | 37.37 | 55.62 | 273.79 | 63.16 | 62.53 | 60.24 | 59.62 |
| FLOPs (G) | 553.94 | 26.17 | 203.50 | 23.98 | 33.65 | 288.31 | 36.67 | 35.80 | 35.06 | 34.28 |
| FPS | 5.18 | 109.67 | 14.10 | 119.68 | 85.29 | 10.13 | 78.42 | 80.17 | 82.15 | 83.92 |
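The FPS row of the efficiency table is easier to compare once converted to per-image latency. The short sketch below does that arithmetic for a few entries copied from the table. The dictionary `FPS_FROM_TABLE` and the helper `latency_ms` are illustrative names, and the conversion assumes FPS was measured for single-image inference.

```python
# FPS values copied from the efficiency table (a subset, for illustration).
FPS_FROM_TABLE = {
    "MGL": 5.18,
    "ZoomNet": 14.10,
    "FEDER": 119.68,
    "FSPNet": 10.13,
    "HRRNet (PVT-R2N)": 82.15,
}


def latency_ms(fps: float) -> float:
    """Convert frames per second to milliseconds per frame."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


if __name__ == "__main__":
    for name, fps in FPS_FROM_TABLE.items():
        print(f"{name}: {latency_ms(fps):.2f} ms per image")
```

For example, 82.15 FPS corresponds to about 12.17 ms per image, while 10.13 FPS corresponds to about 98.72 ms.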

| Method | CAMO-Test | | | | COD10K-Test | | | | NC4K | | | | CHAMELEON | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CNN-CNN | 0.825 | 0.897 | 0.798 | 0.056 | 0.823 | 0.907 | 0.760 | 0.030 | 0.861 | 0.913 | 0.825 | 0.041 | 0.901 | 0.938 | 0.855 | 0.028 |
| Trans-Trans | 0.859 | 0.918 | 0.836 | 0.043 | 0.844 | 0.923 | 0.774 | 0.026 | 0.879 | 0.924 | 0.836 | 0.036 | 0.911 | 0.946 | 0.859 | 0.027 |
| w/o HFFM | 0.876 | 0.928 | 0.841 | 0.046 | 0.861 | 0.927 | 0.788 | 0.025 | 0.883 | 0.930 | 0.841 | 0.033 | 0.912 | 0.952 | 0.860 | 0.024 |
| HRRNet | 0.883 | 0.935 | 0.853 | 0.041 | 0.869 | 0.941 | 0.797 | 0.021 | 0.891 | 0.942 | 0.855 | 0.030 | 0.918 | 0.959 | 0.869 | 0.021 |

| Method | CAMO-Test | | | | COD10K-Test | | | | NC4K | | | | CHAMELEON | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| w/o CRD | 0.823 | 0.894 | 0.785 | 0.060 | 0.815 | 0.903 | 0.742 | 0.033 | 0.855 | 0.916 | 0.790 | 0.043 | 0.886 | 0.922 | 0.813 | 0.034 |
| HRRNet | 0.883 | 0.935 | 0.853 | 0.041 | 0.869 | 0.941 | 0.797 | 0.021 | 0.891 | 0.942 | 0.855 | 0.030 | 0.918 | 0.959 | 0.869 | 0.021 |
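To quantify the gap in the CRD ablation, the sketch below computes the relative reduction in the fourth metric of each dataset group. It takes that metric to be M (lower is better), on the assumption that the column order matches the main comparison table. The values are copied from the two rows above; the helper `relative_reduction` is an illustrative name.

```python
DATASETS = ["CAMO-Test", "COD10K-Test", "NC4K", "CHAMELEON"]

# Fourth value of each dataset group, copied from the ablation rows.
M_WITHOUT_CRD = [0.060, 0.033, 0.043, 0.034]
M_FULL_MODEL = [0.041, 0.021, 0.030, 0.021]


def relative_reduction(baseline: float, improved: float) -> float:
    """Percentage by which `improved` is lower than `baseline`."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return 100.0 * (baseline - improved) / baseline


if __name__ == "__main__":
    for name, base, full in zip(DATASETS, M_WITHOUT_CRD, M_FULL_MODEL):
        print(f"{name}: {relative_reduction(base, full):.1f}% lower M with CRD")
```

With these numbers the reductions are roughly 31.7%, 36.4%, 30.2%, and 38.2% on the four datasets.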

| Method | CAMO-Test | | | | COD10K-Test | | | | NC4K | | | | CHAMELEON | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| w/o FFBS | 0.879 | 0.931 | 0.846 | 0.045 | 0.864 | 0.933 | 0.792 | 0.023 | 0.887 | 0.935 | 0.846 | 0.033 | 0.915 | 0.954 | 0.863 | 0.023 |
| w/o EFBS | 0.881 | 0.933 | 0.850 | 0.043 | 0.866 | 0.938 | 0.795 | 0.023 | 0.890 | 0.939 | 0.852 | 0.031 | 0.916 | 0.958 | 0.867 | 0.021 |
| HRRNet | 0.883 | 0.935 | 0.853 | 0.041 | 0.869 | 0.941 | 0.797 | 0.021 | 0.891 | 0.942 | 0.855 | 0.030 | 0.918 | 0.959 | 0.869 | 0.021 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chen, H.; Wang, X.; Jin, H. Hybrid-Recursive-Refinement Network for Camouflaged Object Detection. J. Imaging 2025, 11, 299. https://doi.org/10.3390/jimaging11090299