Part-Wise Graph Fourier Learning for Skeleton-Based Continuous Sign Language Recognition

Abstract

1. Introduction

- We propose a novel Fourier fully connected graph action representation, which uses the Fourier-space features of part-level topological graphs as nodes and inter-node attention as edges, and arranges action sequences in a globally ordered yet locally unordered manner.

- We propose a graph Fourier learning method that employs Fourier graph operators to learn representations from the Fourier fully connected graph and then applies the proposed MLP-based amplitude enhancement module to strengthen the model's sign language representations.

- We design a dual-branch action learning strategy that integrates an action prediction branch to assist the traditional recognition branch. The two branches collaboratively reinforce each other, thereby improving the model’s understanding of sign sequences.

2. Related Work

2.1. Skeleton-Based Action Recognition

2.2. Part-Based Action Recognition

2.3. GNN-Based Action Recognition

3. Methodology

3.1. Problem Definition

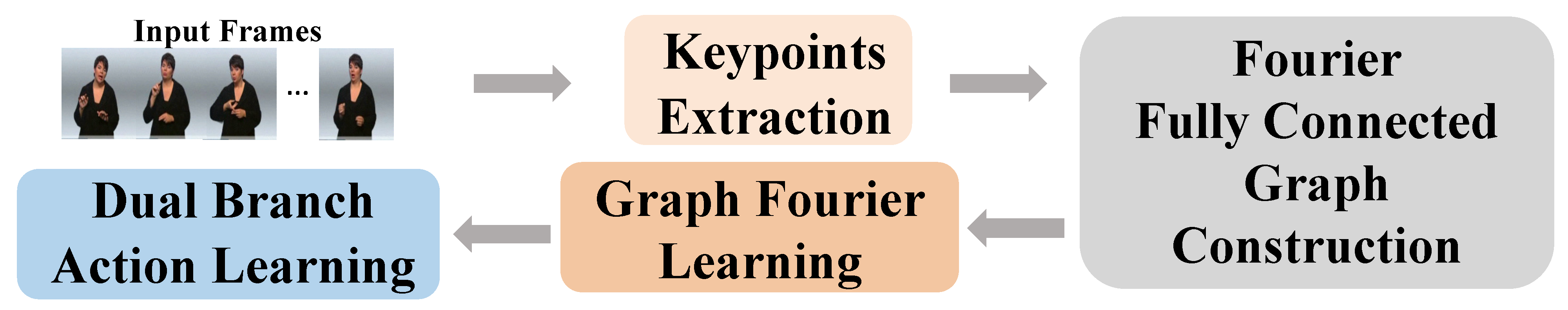

3.2. Model Framework

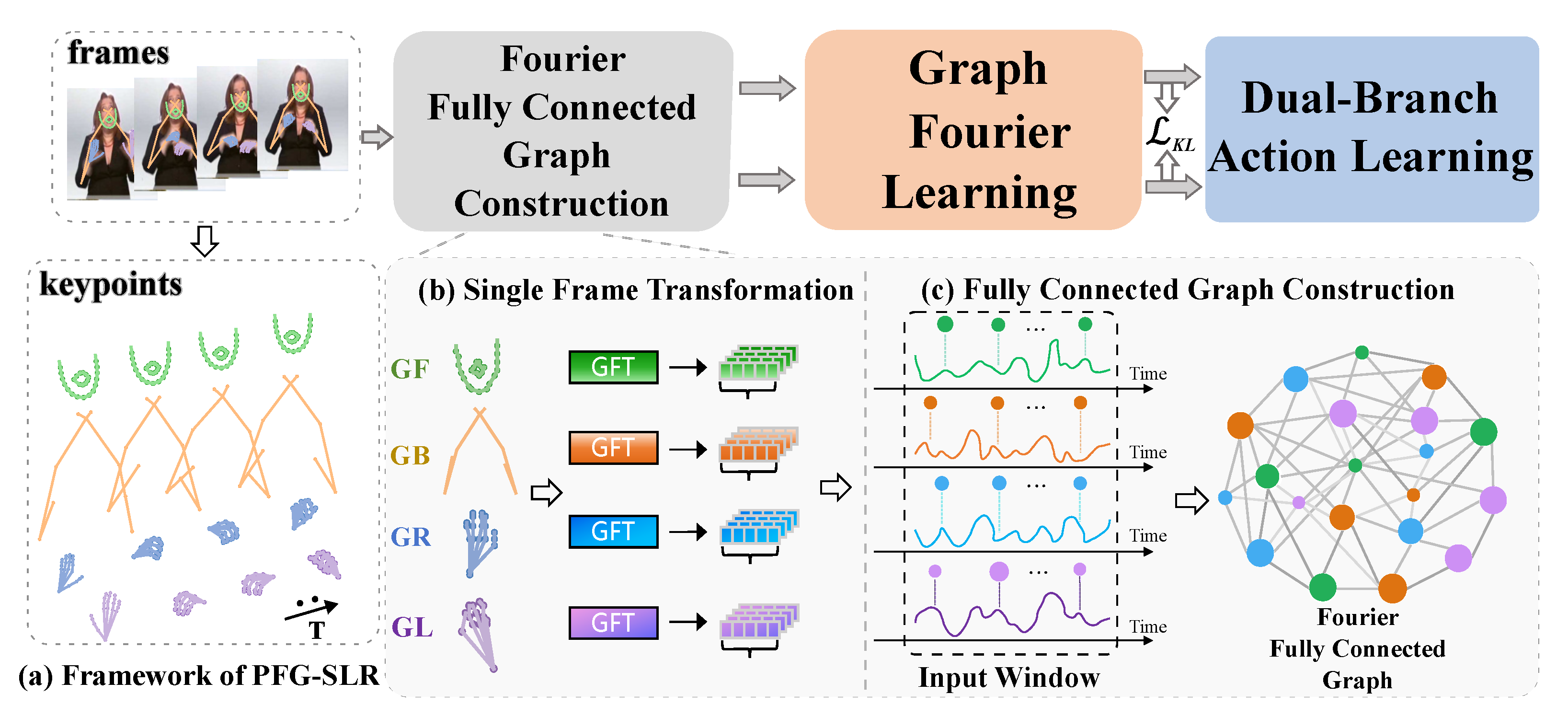

- Fourier fully connected graph construction module. This module first maps part-level topology graphs into Fourier space using the graph Fourier transform (GFT) and then constructs a Fourier fully connected graph, with the part-level Fourier representations as node features and the frequency-domain attention between them as edges.

- Graph Fourier learning module. This module employs stacked Fourier graph operators (FGOs) to learn the spatiotemporal relationships among nodes in the Fourier fully connected graph. An adaptive frequency enhancement module is then applied to enhance the learned action features.

- Dual-branch learning module. This module adds an auxiliary action prediction branch that helps the sign language recognition branch obtain higher-quality sign language action representations.
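The dual-branch objective described in the last item can be illustrated with a short sketch. This copy of the paper does not give the loss itself, so the following is an assumption-laden illustration rather than the authors' implementation: the recognition branch is assumed to use CTC [11], the usual objective in continuous sign language recognition, and the prediction branch is assumed to regress future skeleton features with a mean squared error. The weight `lam` and all function names are hypothetical; the paper studies its loss weights in Section 4.7.1.

```python
import numpy as np

def ctc_loss(log_probs, target, blank=0):
    """CTC negative log-likelihood via the forward algorithm in log space.

    log_probs: (T, C) per-frame log-softmax scores; class `blank` is the CTC blank.
    target: list of gloss ids (no blanks).
    """
    ext = [blank]
    for g in target:
        ext += [g, blank]                      # interleave blanks: b g1 b g2 ... b
    T, S = log_probs.shape[0], len(ext)
    alpha = np.full((T, S), -np.inf)
    alpha[0, 0] = log_probs[0, blank]
    if S > 1:
        alpha[0, 1] = log_probs[0, ext[1]]
    for t in range(1, T):
        for s in range(S):
            prev = [alpha[t - 1, s]]
            if s >= 1:
                prev.append(alpha[t - 1, s - 1])
            if s >= 2 and ext[s] != blank and ext[s] != ext[s - 2]:
                prev.append(alpha[t - 1, s - 2])  # skip the blank between distinct glosses
            alpha[t, s] = np.logaddexp.reduce(prev) + log_probs[t, ext[s]]
    final = alpha[T - 1, 0] if S == 1 else np.logaddexp(alpha[T - 1, S - 1], alpha[T - 1, S - 2])
    return -final

def dual_branch_loss(log_probs, target, pred_frames, true_frames, lam=0.5):
    """Recognition (CTC) loss plus a weighted prediction (MSE) loss; `lam` is hypothetical."""
    l_rec = ctc_loss(log_probs, target)
    l_pred = np.mean((pred_frames - true_frames) ** 2)
    return l_rec + lam * l_pred

rng = np.random.default_rng(0)
T, C = 12, 6                                               # frames, classes (0 = blank)
logits = rng.standard_normal((T, C))
log_probs = logits - np.logaddexp.reduce(logits, axis=1, keepdims=True)  # log-softmax
loss = dual_branch_loss(log_probs, [2, 4, 4], rng.standard_normal((4, 8)), rng.standard_normal((4, 8)))
print(float(loss))
```

Writing the total as a weighted sum keeps the prediction branch purely auxiliary: setting `lam` to zero recovers a recognition-only model.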

3.3. Fourier Fully Connected Graph Construction

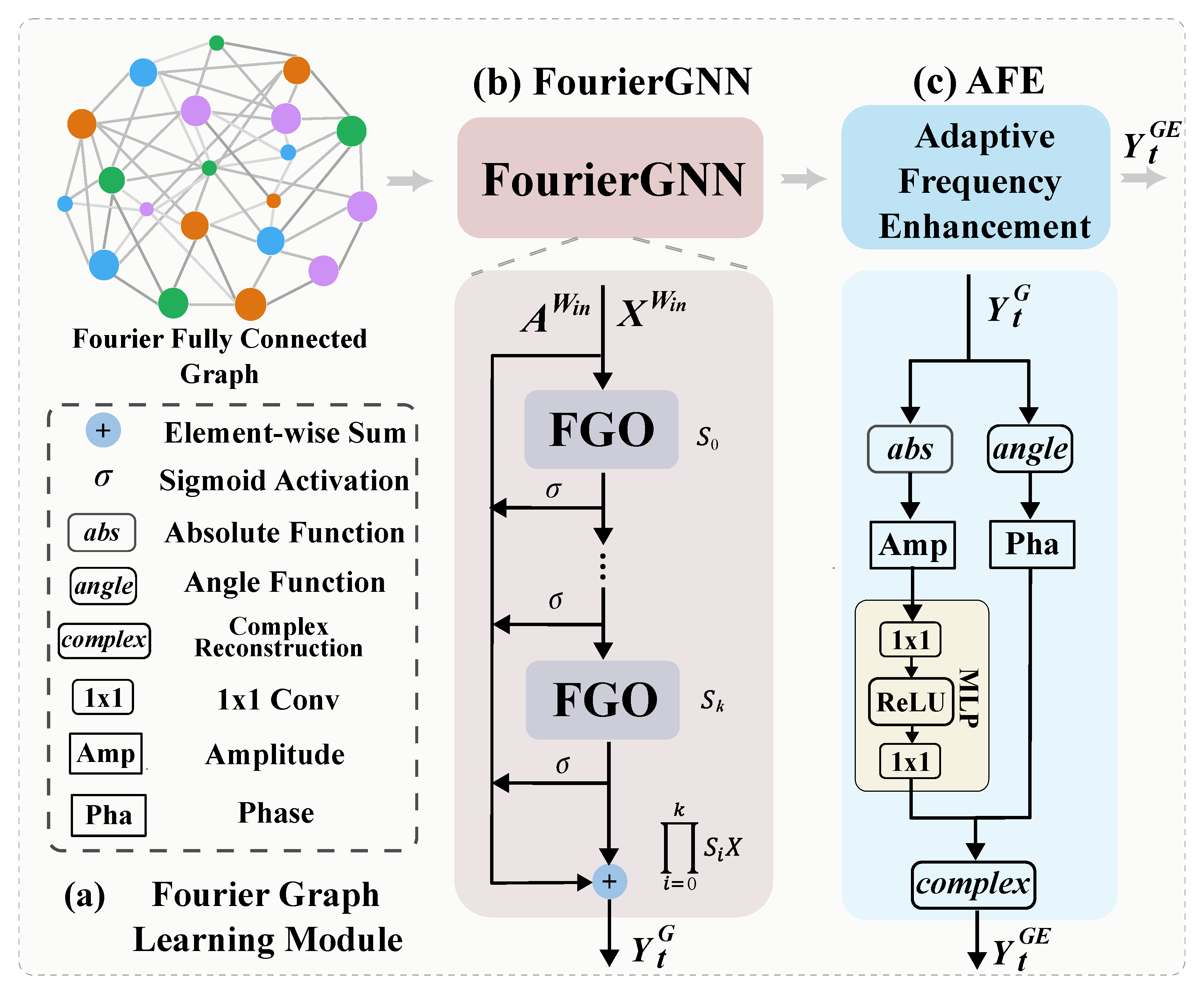
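The construction summarized in the module description of Section 3.2 (GFT of part-level graphs, part-level Fourier features as nodes, attention as edges) can be sketched in NumPy. This is a minimal sketch under stated assumptions, not the authors' code: the GFT basis is taken from the eigenvectors of the combinatorial Laplacian L = D - A, every part is given the same hypothetical 5-joint chain topology so that all nodes have equal feature length, and edge weights come from scaled dot-product attention with random, untrained projections standing in for learned ones. Listing nodes frame by frame while connecting all parts to each other is one possible reading of the "globally ordered yet locally unordered" arrangement.

```python
import numpy as np

def graph_fourier_basis(adj):
    """Eigen-decompose the combinatorial Laplacian L = D - A of a part graph.

    Columns of `basis` are graph Fourier modes sorted by increasing eigenvalue (graph frequency).
    """
    lap = np.diag(adj.sum(axis=1)) - adj
    eigvals, basis = np.linalg.eigh(lap)
    return eigvals, basis

def gft(x, basis):
    """Graph Fourier transform of joint signals x with shape (joints, channels)."""
    return basis.T @ x

def attention_edges(nodes, d_k=16, seed=0):
    """Dense edge weights via scaled dot-product attention (random, untrained projections)."""
    rng = np.random.default_rng(seed)
    d = nodes.shape[1]
    w_q = rng.standard_normal((d, d_k)) / np.sqrt(d)
    w_k = rng.standard_normal((d, d_k)) / np.sqrt(d)
    scores = (nodes @ w_q) @ (nodes @ w_k).T / np.sqrt(d_k)
    scores -= scores.max(axis=1, keepdims=True)  # stabilize the softmax
    weights = np.exp(scores)
    return weights / weights.sum(axis=1, keepdims=True)  # each row sums to 1

def build_fourier_graph(skeleton, parts, adj):
    """skeleton: (T, joints, channels); parts: joint-index arrays that all share topology `adj`."""
    _, basis = graph_fourier_basis(adj)
    nodes = np.stack([
        gft(skeleton[t, idx], basis).ravel()  # one node per (frame, part)
        for t in range(skeleton.shape[0])     # frames kept in temporal order
        for idx in parts                      # parts of the same frame, no fixed order implied
    ])
    return nodes, attention_edges(nodes)

# Hypothetical example: 4 frames, 10 joints with (x, y) coordinates, two 5-joint chain parts.
chain = np.zeros((5, 5))
for i in range(4):
    chain[i, i + 1] = chain[i + 1, i] = 1.0
skeleton = np.random.default_rng(1).standard_normal((4, 10, 2))
parts = [np.arange(0, 5), np.arange(5, 10)]
nodes, edges = build_fourier_graph(skeleton, parts, chain)
print(nodes.shape, edges.shape)  # (8, 10) (8, 8)
```

Because the Laplacian is symmetric, `eigh` returns an orthonormal basis, so the inverse GFT is simply `basis @ x_hat`.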

3.4. Graph Fourier Learning

3.4.1. Fourier Graph Neural Network
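Section 3.4 stacks Fourier graph operators (FGOs). The sketch below is modeled loosely on FourierGNN [47], whose name appears as the backbone in the comparison table: node features are moved to the frequency domain with a DFT along the node axis, each FGO multiplies them by a learnable complex matrix and adds a bias, and the outputs of all k layers are summed before the inverse DFT. The node-axis DFT, the split ReLU, and the summation are assumptions borrowed from the FourierGNN design rather than details confirmed by this copy of the paper; the weights are random placeholders.

```python
import numpy as np

def split_relu(z):
    """ReLU applied to the real and imaginary parts separately."""
    return np.maximum(z.real, 0.0) + 1j * np.maximum(z.imag, 0.0)

def stacked_fgo(x, weights, biases):
    """Apply k stacked Fourier graph operators to real node features x of shape (N, D).

    weights: list of complex (D, D) matrices; biases: list of complex (D,) vectors.
    """
    n = x.shape[0]
    h = np.fft.rfft(x, axis=0, norm="ortho")   # DFT over the node axis
    out = np.zeros_like(h)
    for w, b in zip(weights, biases):
        h = split_relu(h @ w + b)               # one FGO: complex matrix product in frequency space
        out = out + h                           # accumulate every layer's output
    return np.fft.irfft(out, n=n, axis=0, norm="ortho")  # back to the node domain, real-valued

rng = np.random.default_rng(0)
N, D, K = 8, 10, 3                              # nodes, feature size, number of stacked FGOs
x = rng.standard_normal((N, D))
ws = [(rng.standard_normal((D, D)) + 1j * rng.standard_normal((D, D))) / np.sqrt(2 * D) for _ in range(K)]
bs = [np.zeros(D, dtype=complex) for _ in range(K)]
y = stacked_fgo(x, ws, bs)
print(y.shape)  # (8, 10)
```

A product in the frequency domain corresponds to a convolution over the nodes, which is what lets a single dense complex matrix stand in for message passing on a fully connected graph.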

3.4.2. Adaptive Frequency Enhancing Module

Algorithm 1: Graph Fourier learning.

| Step | Operation |
|---|---|
| Input | Fourier fully connected graph |
| Output | Enhanced action representation |
| 1 | for each of the k stacked FGOs do |
| 2 | apply the FGO (k stacked FGOs) |
| 3 | get the amplitude |
| 4 | get the phase |
| 5 | apply the conv-based MLP |
| 6 | compute the enhanced real part |
| 7 | compute the enhanced imaginary coefficient |
| 8 | form the frequency-enhanced representation |
| 9 | return the enhanced action representation |
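Steps 3 to 8 of Algorithm 1 (amplitude, phase, conv-based MLP, enhanced real and imaginary parts) can be sketched as follows. A kernel-size-1 convolution over nodes is the same as a dense layer applied independently at each node, so the conv-based MLP is written as two matrix products with a ReLU. The residual form (the MLP output is added to the original amplitude), the hidden width, and the random parameters are assumptions; the phase is kept unchanged, so only the magnitude of each frequency coefficient is rescaled.

```python
import numpy as np

def conv1x1_mlp(a, w1, b1, w2, b2):
    """Two-layer MLP applied independently at every node, i.e. kernel-size-1 convolutions."""
    hidden = np.maximum(a @ w1 + b1, 0.0)   # ReLU hidden layer
    return hidden @ w2 + b2

def amplitude_enhance(z, params):
    """Enhance the amplitude of a complex spectrum z (N, D) while keeping its phase.

    The numbered comments follow steps 3-8 of Algorithm 1.
    """
    amp = np.abs(z)                               # 3: amplitude
    phase = np.angle(z)                           # 4: phase
    amp_hat = amp + conv1x1_mlp(amp, *params)     # 5: conv-based MLP (residual form assumed)
    real = amp_hat * np.cos(phase)                # 6: enhanced real part
    imag = amp_hat * np.sin(phase)                # 7: enhanced imaginary coefficient
    return real + 1j * imag                       # 8: frequency-enhanced representation

rng = np.random.default_rng(0)
N, D, H = 8, 10, 32                               # nodes, channels, hypothetical hidden width
z = np.fft.fft(rng.standard_normal((N, D)), axis=0)
params = (rng.standard_normal((D, H)) * 0.1, np.zeros(H), rng.standard_normal((H, D)) * 0.1, np.zeros(D))
print(amplitude_enhance(z, params).shape)  # (8, 10)
```

With the second MLP layer set to zero, the module returns its input unchanged, which makes the residual variant easy to initialize as an identity.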

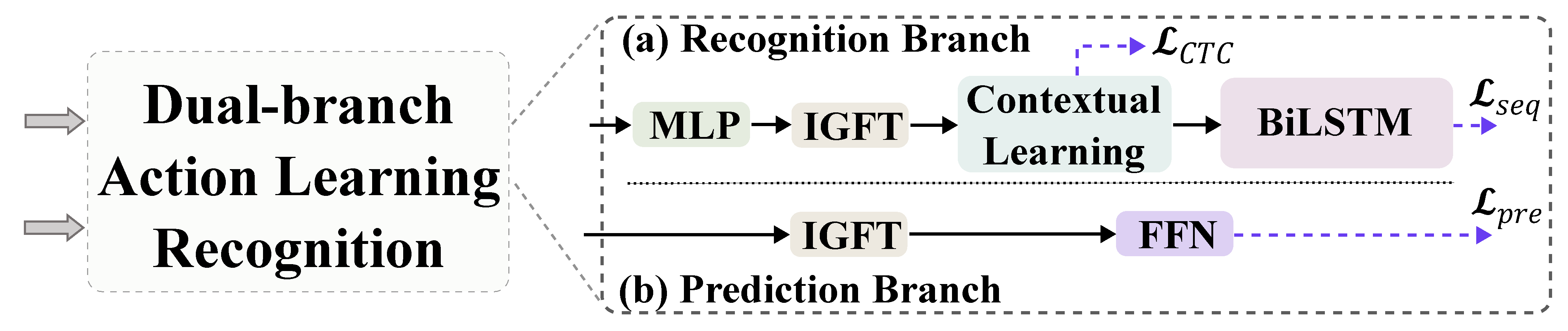

3.5. Dual-Branch Action Learning Module

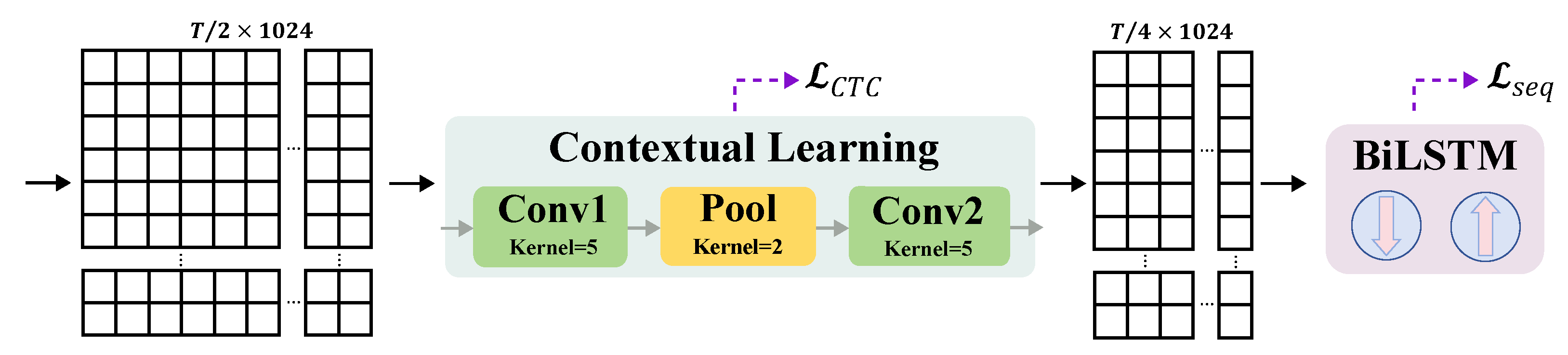

3.5.1. Sign Language Recognition Branch

3.5.2. Action Prediction Branch

3.6. Loss Function

4. Experiments

4.1. Datasets

- PHOENIX14 [52] is a dataset recorded by nine signers and extracted from German weather forecast broadcasts with high-contrast backgrounds. It contains 6841 sentences with a vocabulary of 1295 glosses. The dataset is divided into train/dev/test sets of 5672/540/629 samples, respectively.

- PHOENIX14-T [1] is another dataset extracted from German weather forecasts. It includes 1085 glosses distributed across 8257 sentences, split into train/dev/test sets of 7096/519/642 samples, respectively.

- CSL-Daily [53] is a Chinese Sign Language dataset on daily-life topics, recorded indoors by 10 signers. Compared with the previous two datasets, it has noisier backgrounds. The dataset consists of 20654 sentences, with the train/dev/test sets containing 18401/1077/1176 samples, respectively.
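As a quick arithmetic check of the split sizes listed above, the following computes each dataset's total and the share of samples in each split from the train/dev/test counts alone.

```python
splits = {
    "PHOENIX14": (5672, 540, 629),
    "PHOENIX14-T": (7096, 519, 642),
    "CSL-Daily": (18401, 1077, 1176),
}

def summarize(splits):
    """Map each dataset to (total samples, train/dev/test shares in percent)."""
    summary = {}
    for name, counts in splits.items():
        total = sum(counts)
        summary[name] = (total, tuple(round(100 * n / total, 1) for n in counts))
    return summary

for name, (total, shares) in summarize(splits).items():
    print(f"{name}: {total} samples, train/dev/test = {shares[0]}/{shares[1]}/{shares[2]} %")
```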

4.2. Implementation Details

4.3. Baseline Methods

4.4. Evaluation Metrics

4.5. Comparison with Baseline Methods

4.6. Ablation Study

4.7. Hyperparameter Sensitivity Analysis

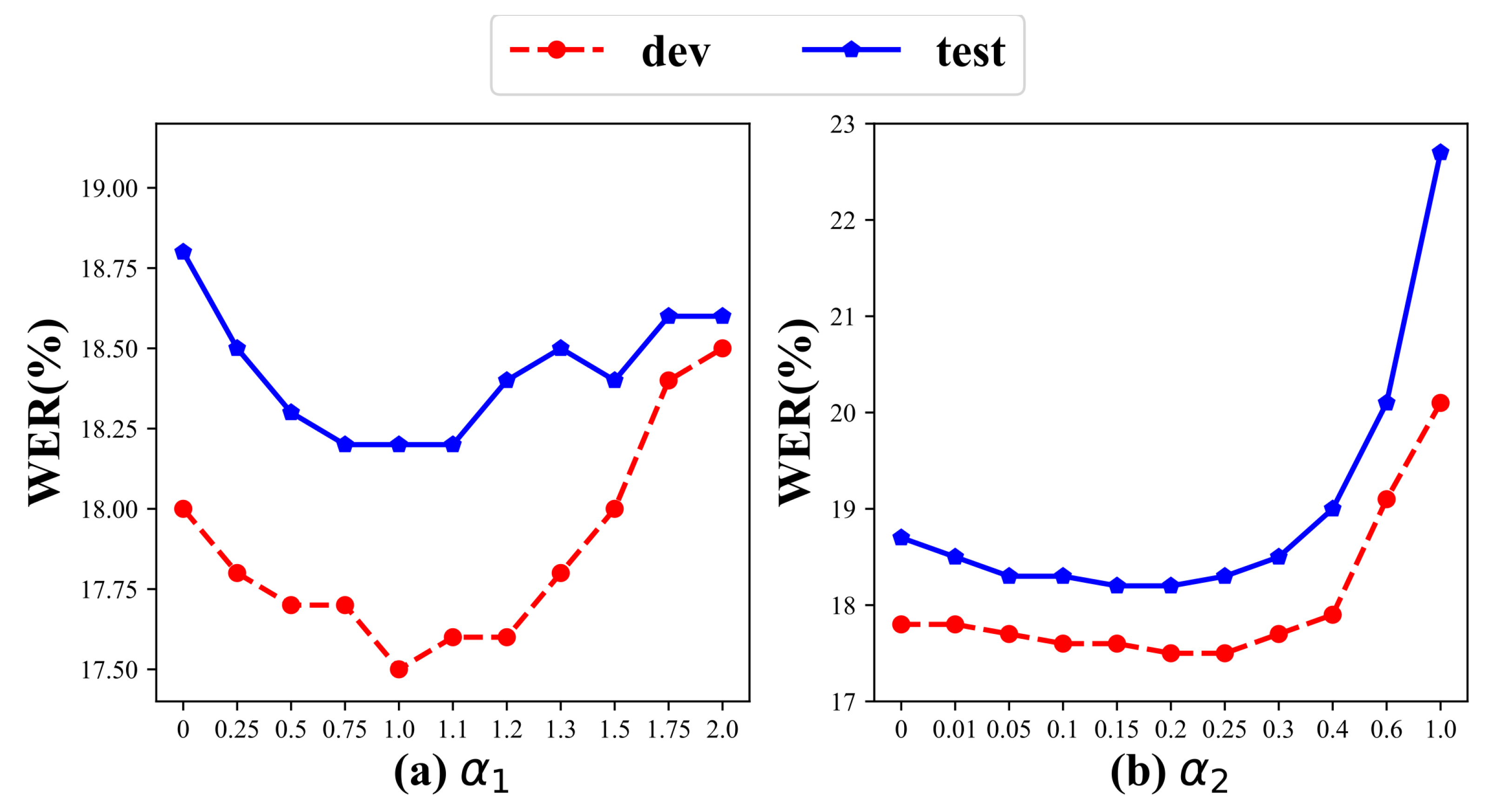

4.7.1. Weights of Loss Function

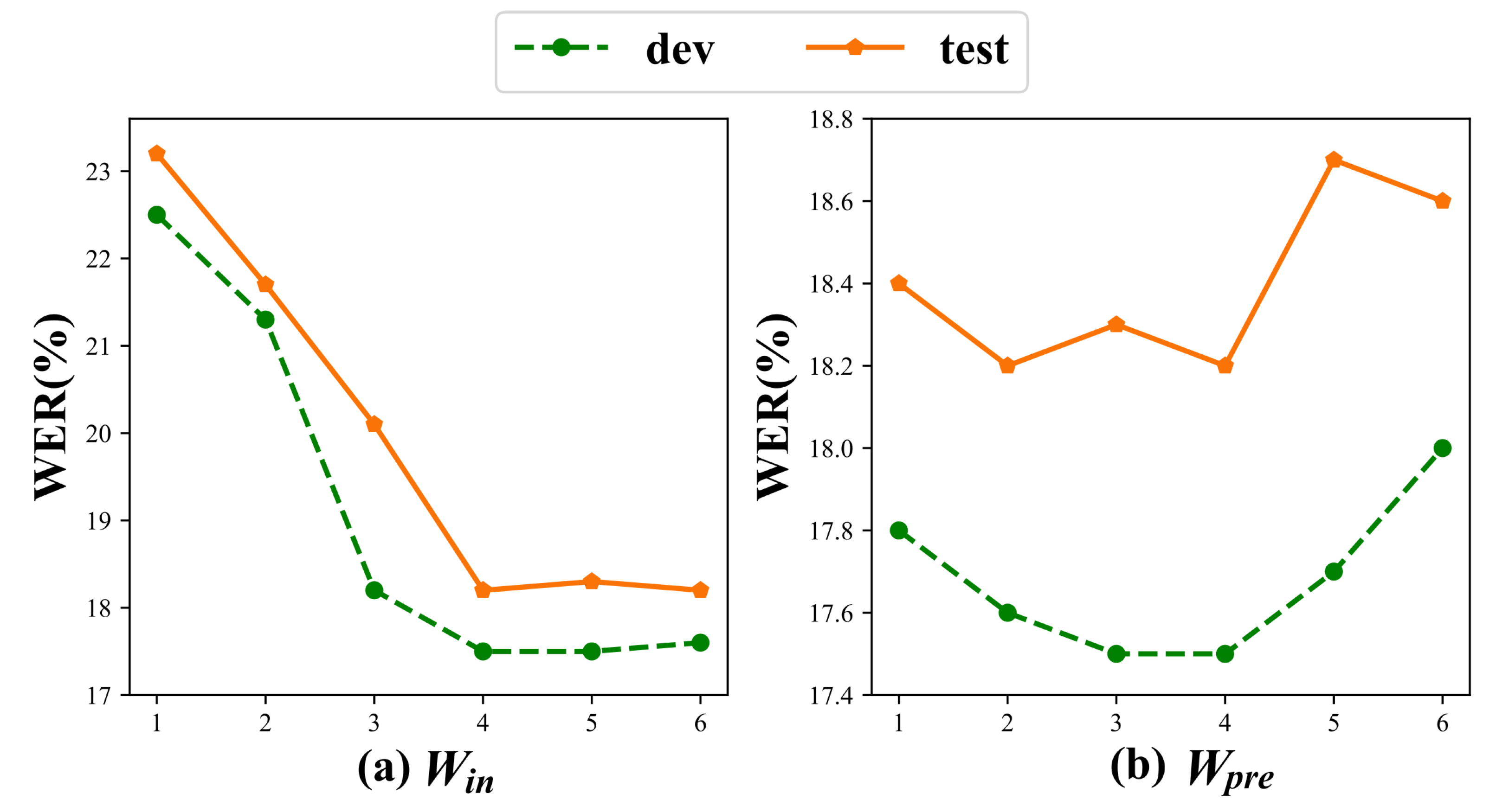

4.7.2. Input Window Size

4.7.3. Prediction Window Size
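Sections 4.7.2 and 4.7.3 study the sizes of an input window and a prediction window, presumably used by the action prediction branch. This copy does not describe how the windows are formed, so the following is a hypothetical sliding-window formulation: each training pair consists of `input_window` observed frames and the `pred_window` frames that immediately follow, with a hypothetical `stride` between consecutive pairs.

```python
def make_prediction_pairs(frames, input_window, pred_window, stride=1):
    """Return (observed, future) pairs; `future` holds the pred_window frames right after `observed`."""
    last_start = len(frames) - input_window - pred_window
    pairs = []
    for start in range(0, last_start + 1, stride):  # empty range if the sequence is too short
        observed = frames[start:start + input_window]
        future = frames[start + input_window:start + input_window + pred_window]
        pairs.append((observed, future))
    return pairs

pairs = make_prediction_pairs(list(range(10)), input_window=4, pred_window=2, stride=2)
for observed, future in pairs:
    print(observed, "->", future)
```

Under this formulation a larger prediction window asks the branch to anticipate more motion, while a larger input window gives it more context; both also reduce the number of pairs a short sentence can supply.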

4.8. Online Inference

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Camgoz, N.C.; Hadfield, S.; Koller, O.; Ney, H.; Bowden, R. Neural sign language translation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7784–7793.

2. Min, Y.; Hao, A.; Chai, X.; Chen, X. Visual alignment constraint for continuous sign language recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 11542–11551.

3. Laines, D.; Gonzalez-Mendoza, M.; Ochoa-Ruiz, G.; Bejarano, G. Isolated sign language recognition based on tree structure skeleton images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 276–284.

4. Rao, Q.; Sun, K.; Wang, X.; Wang, Q.; Zhang, B. Cross-sentence gloss consistency for continuous sign language recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 4650–4658.

5. Hu, H.; Zhao, W.; Zhou, W.; Li, H. SignBERT+: Hand-model-aware self-supervised pre-training for sign language understanding. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 11221–11239.

6. Piergiovanni, A.; Ryoo, M.S. Representation flow for action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 16–20 June 2019; pp. 9945–9953.

7. Jiao, P.; Min, Y.; Li, Y.; Wang, X.; Lei, L.; Chen, X. CoSign: Exploring co-occurrence signals in skeleton-based continuous sign language recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 20676–20686.

8. Koller, O.; Zargaran, S.; Ney, H. Re-Sign: Re-aligned end-to-end sequence modelling with deep recurrent CNN-HMMs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4297–4305.

9. Cheng, K.L.; Yang, Z.; Chen, Q.; Tai, Y.-W. Fully convolutional networks for continuous sign language recognition. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020, Proceedings, Part XXIV; pp. 697–714.

10. Han, X.; Lu, F.; Yin, J.; Tian, G.; Liu, J. Sign language recognition based on R(2+1)D with spatial–temporal–channel attention. IEEE Trans. Hum.-Mach. Syst. 2022, 52, 687–698.

11. Graves, A.; Fernández, S.; Gomez, F.; Schmidhuber, J. Connectionist temporal classification: Labelling unsegmented sequence data with recurrent neural networks. In Proceedings of the 23rd International Conference on Machine Learning, Pittsburgh, PA, USA, 25–29 June 2006; pp. 369–376.

12. Zhang, H.; Guo, Z.; Yang, Y.; Liu, X.; Hu, D. C2ST: Cross-modal contextualized sequence transduction for continuous sign language recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 21053–21062.

13. Yan, S.; Xiong, Y.; Lin, D. Spatial temporal graph convolutional networks for skeleton-based action recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

14. Zhang, S.; Yin, J.; Dang, Y. A generically contrastive spatiotemporal representation enhancement for 3D skeleton action recognition. Pattern Recognit. 2025, 164, 111521.

15. Zhou, Y.; Yan, X.; Cheng, Z.-Q.; Yan, Y.; Dai, Q.; Hua, X.-S. BlockGCN: Redefine topology awareness for skeleton-based action recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 2049–2058.

16. Liu, C.; Liu, S.; Qiu, H.; Li, Z. Adaptive part-level embedding GCN: Towards robust skeleton-based one-shot action recognition. IEEE Trans. Instrum. Meas. 2025, 74, 5024713.

17. Tunga, A.; Nuthalapati, S.V.; Wachs, J. Pose-based sign language recognition using GCN and BERT. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Online, 5–9 January 2021; pp. 31–40.

18. Corso, G.; Stark, H.; Jegelka, S.; Jaakkola, T.; Barzilay, R. Graph neural networks. Nat. Rev. Methods Prim. 2024, 4, 17.

19. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30.

20. Guan, M.; Wang, Y.; Ma, G.; Liu, J.; Sun, M. MSKA: Multi-stream keypoint attention network for sign language recognition and translation. Pattern Recognit. 2025, 165, 111602.

21. Gunasekara, S.R.; Li, W.; Yang, J.; Ogunbona, P. Asynchronous joint-based temporal pooling for skeleton-based action recognition. IEEE Trans. Circuits Syst. Video Technol. 2024, 35, 357–366.

22. Gan, S.; Yin, Y.; Jiang, Z.; Wen, H.; Xie, L.; Lu, S. SignGraph: A sign sequence is worth graphs of nodes. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 13470–13479.

23. Hu, L.; Gao, L.; Liu, Z.; Feng, W. Continuous sign language recognition with correlation network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 2529–2539.

24. Zuo, R.; Mak, B. C2SLR: Consistency-enhanced continuous sign language recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 5131–5140.

25. MMPose Contributors. OpenMMLab Pose Estimation Toolbox and Benchmark. 2020. Available online: https://github.com/open-mmlab/mmpose (accessed on 16 August 2025).

26. Zhang, Z. Microsoft Kinect sensor and its effect. IEEE Multimed. 2012, 19, 4–10.

27. Li, S.; Zheng, L.; Zhu, C.; Gao, Y. Bidirectional independently recurrent neural network for skeleton-based hand gesture recognition. In Proceedings of the 2020 IEEE International Symposium on Circuits and Systems (ISCAS), Sevilla, Spain, 10–21 October 2020; pp. 1–5.

28. Chen, Y.; Zhang, Z.; Yuan, C.; Li, B.; Deng, Y.; Hu, W. Channel-wise topology refinement graph convolution for skeleton-based action recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 13359–13368.

29. Do, J.; Kim, M. SkateFormer: Skeletal-temporal transformer for human action recognition. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2024; pp. 401–420.

30. Shi, L.; Zhang, Y.; Cheng, J.; Lu, H. Decoupled spatial-temporal attention network for skeleton-based action-gesture recognition. In Proceedings of the Asian Conference on Computer Vision, Kyoto, Japan, 30 November–4 December 2020.

31. Wang, Q.; Shi, S.; He, J.; Peng, J.; Liu, T.; Weng, R. IIP-Transformer: Intra-inter-part transformer for skeleton-based action recognition. In Proceedings of the 2023 IEEE International Conference on Big Data (BigData), Sorrento, Italy, 15–18 December 2023; pp. 936–945.

32. Du, Y.; Wang, W.; Wang, L. Hierarchical recurrent neural network for skeleton based action recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1110–1118.

33. Chen, T.; Zhou, D.; Wang, J.; Wang, S.; He, Q.; Hu, C.; Ding, E.; Guan, Y.; He, X. Part-aware prototypical graph network for one-shot skeleton-based action recognition. In Proceedings of the 2023 IEEE 17th International Conference on Automatic Face and Gesture Recognition (FG), Waikoloa Beach, HI, USA, 5–8 January 2023; pp. 1–8.

34. Song, Y.-F.; Zhang, Z.; Shan, C.; Wang, L. Constructing stronger and faster baselines for skeleton-based action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 1474–1488.

35. Qiu, H.; Hou, B. Multi-grained clip focus for skeleton-based action recognition. Pattern Recognit. 2024, 148, 110188.

36. Zhou, L.; Jiao, X. Multi-modal and multi-part with skeletons and texts for action recognition. Expert Syst. Appl. 2025, 272, 126646.

37. Wu, L.; Zhang, C.; Zou, Y. Spatiotemporal focus for skeleton-based action recognition. Pattern Recognit. 2023, 136, 109231.

38. Xiang, W.; Li, C.; Zhou, Y.; Wang, B.; Zhang, L. Generative action description prompts for skeleton-based action recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; pp. 10276–10285.

39. Xiang, X.; Li, X.; Liu, X.; Qiao, Y.; El Saddik, A. A GCN and transformer complementary network for skeleton-based action recognition. Comput. Vis. Image Underst. 2024, 249, 104213.

40. Hang, R.; Li, M. Spatial-temporal adaptive graph convolutional network for skeleton-based action recognition. In Proceedings of the Asian Conference on Computer Vision, Macao, China, 4–8 December 2022; pp. 1265–1281.

41. Xie, J.; Meng, Y.; Zhao, Y.; Nguyen, A.; Yang, X.; Zheng, Y. Dynamic semantic-based spatial graph convolution network for skeleton-based human action recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 6225–6233.

42. Huang, Q.; Geng, Q.; Chen, Z.; Li, X.; Li, Y.; Li, X. Joint-wise distributed perception graph convolutional network for skeleton-based action recognition. In Proceedings of the ICASSP 2025-2025 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Hyderabad, India, 6–11 April 2025; pp. 1–5.

43. Lee, C.; Hasegawa, H.; Gao, S. Complex-valued neural networks: A comprehensive survey. IEEE/CAA J. Autom. Sin. 2022, 9, 1406–1426.

44. Yu, X.; Wu, L.; Lin, Y.; Diao, J.; Liu, J.; Hallmann, J.; Boesenberg, U.; Lu, W.; Möller, J.; Scholz, M.; et al. Ultrafast Bragg coherent diffraction imaging of epitaxial thin films using deep complex-valued neural networks. npj Comput. Mater. 2024, 10, 24.

45. Shuman, D.I.; Ricaud, B.; Vandergheynst, P. Vertex-frequency analysis on graphs. Appl. Comput. Harmon. Anal. 2016, 40, 260–291.

46. Ai, G.; Pang, G.; Qiao, H.; Gao, Y.; Yan, H. GrokFormer: Graph Fourier Kolmogorov-Arnold transformers. In Proceedings of the 42nd International Conference on Machine Learning (ICML), Vancouver, BC, Canada, 13–19 July 2025.

47. Yi, K.; Zhang, Q.; Fan, W.; He, H.; Hu, L.; Wang, P.; An, N.; Cao, L.; Niu, Z. FourierGNN: Rethinking multivariate time series forecasting from a pure graph perspective. Adv. Neural Inf. Process. Syst. 2023, 36, 69638–69660.

48. Katznelson, Y. An Introduction to Harmonic Analysis; Cambridge University Press: Cambridge, UK, 2004.

49. Wei, D.; Yang, X.-H.; Weng, Y.; Lin, X.; Hu, H.; Liu, S. Cross-modal adaptive prototype learning for continuous sign language recognition. IEEE Trans. Circuits Syst. Video Technol. 2025, 35, 7354–7367.

50. Guo, L.; Xue, W.; Guo, Q.; Liu, B.; Zhang, K.; Yuan, T.; Chen, S. Distilling cross-temporal contexts for continuous sign language recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 10771–10780.

51. Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

52. Koller, O.; Forster, J.; Ney, H. Continuous sign language recognition: Towards large vocabulary statistical recognition systems handling multiple signers. Comput. Vis. Image Underst. 2015, 141, 108–125.

53. Zhou, H.; Zhou, W.; Qi, W.; Pu, J.; Li, H. Improving sign language translation with monolingual data by sign back-translation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; pp. 1316–1325.

54. Hao, A.; Min, Y.; Chen, X. Self-mutual distillation learning for continuous sign language recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 11303–11312.

55. Hu, L.; Gao, L.; Liu, Z.; Feng, W. Temporal lift pooling for continuous sign language recognition. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2022; pp. 511–527.

56. Hu, L.; Gao, L.; Liu, Z.; Pun, C.-M.; Feng, W. AdaBrowse: Adaptive video browser for efficient continuous sign language recognition. In Proceedings of the 31st ACM International Conference on Multimedia, Ottawa, ON, Canada, 29 October–3 November 2023; pp. 709–718.

57. Gan, S.; Yin, Y.; Jiang, Z.; Xia, K.; Xie, L.; Lu, S. Contrastive learning for sign language recognition and translation. In Proceedings of the IJCAI, Macao, China, 19–25 August 2023; pp. 763–772.

58. Hu, L.; Gao, L.; Liu, Z. Self-emphasizing network for continuous sign language recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 854–862.

59. Kan, J.; Hu, K.; Hagenbuchner, M.; Tsoi, A.C.; Bennamoun, M.; Wang, Z. Sign language translation with hierarchical spatio-temporal graph neural network. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 3–8 January 2022; pp. 3367–3376.

60. Lu, H.; Salah, A.A.; Poppe, R. TCNet: Continuous sign language recognition from trajectories and correlated regions. In Proceedings of the AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 3891–3899.

61. Zhou, H.; Zhou, W.; Zhou, Y.; Li, H. Spatial-temporal multi-cue network for sign language recognition and translation. IEEE Trans. Multimed. 2021, 24, 768–779.

62. Klakow, D.; Peters, J. Testing the correlation of word error rate and perplexity. Speech Commun. 2002, 38, 19–28.

63. Chen, Y.; Zuo, R.; Wei, F.; Wu, Y.; Liu, S.; Mak, B. Two-stream network for sign language recognition and translation. Adv. Neural Inf. Process. Syst. 2022, 35, 17043–17056.

| Methods | Backbone | PHOENIX14 dev del/ins (%) | PHOENIX14 dev WER (%) | PHOENIX14 test del/ins (%) | PHOENIX14 test WER (%) | PHOENIX14-T dev WER (%) | PHOENIX14-T test WER (%) |
|---|---|---|---|---|---|---|---|
| VAC [2] | ResNet18 | 7.9/2.5 | 21.2 | 8.4/2.6 | 22.3 | - | - |
| SMKD [54] | ResNet18 | 6.8/2.5 | 20.8 | 6.3/2.3 | 21.0 | 20.8 | 22.4 |
| TLP [55] | ResNet18 | 6.3/2.8 | 19.7 | 6.1/2.9 | 20.8 | 19.4 | 21.2 |
| AdaBrowse [56] | ResNet18 | 6.0/2.5 | 19.6 | 5.9/2.6 | 20.7 | 19.5 | 20.6 |
| Contrastive [57] | ResNet18 | 5.8/2.6 | 19.6 | 5.1/2.7 | 19.8 | 19.3 | 20.7 |
| SEN [58] | ResNet18 | 5.8/2.6 | 19.5 | 7.3/4.0 | 21.0 | 19.3 | 20.7 |
| HST-GNN [59] | ST-GCN | - | 19.5 | - | 19.8 | 19.5 | 19.8 |
| SignGraph [22] | Custom (GCN) | 6.0/2.2 | 18.2 | 5.7/2.2 | 19.1 | 17.8 | 19.1 |
| TCNet [60] | ResNet18 | 5.5/2.4 | 18.1 | 5.4/2.0 | 18.9 | 18.3 | 19.4 |
| STMC [61] | VGG11 | 7.7/3.4 | 21.1 | 7.4/2.6 | 20.7 | 19.6 | 21.0 |
| C2SLR [24] | ResNet18 | 6.8/3.0 | 20.5 | 7.1/2.5 | 20.4 | 20.2 | 20.4 |
| CoSign-2s [7] | ST-GCN | - | 19.7 | - | 20.1 | 19.5 | 20.1 |
| TwoStream-SLR [63] | ST-GCN | - | 18.4 | - | 18.8 | 17.7 | 19.3 |
| MSKA [20] | ST-GCN | - | 21.7 | - | 22.1 | 20.1 | 20.5 |
| CoSign-1s [7] | ST-GCN | - | 20.9 | - | 21.2 | 20.4 | 20.6 |
| PGF-SLR | FourierGNN | 4.4/2.7 | 17.5 | 4.2/2.9 | 18.2 | 17.3 | 17.7 |
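The tables report word error rate (WER) together with deletion and insertion rates. WER aligns each predicted gloss sequence with its reference by minimum edit distance and divides the substitutions S, deletions D and insertions I by the number of reference glosses N, i.e. WER = (S + D + I) / N. The sketch below computes these quantities at corpus level (errors summed over all sentences before dividing). It is a generic implementation, not the official evaluation script of any of these datasets, and the example glosses are invented for illustration.

```python
def align_counts(ref, hyp):
    """Minimum edit-distance alignment of two gloss lists; returns (subs, dels, ins)."""
    n, m = len(ref), len(hyp)
    # dp[i][j] = (edits, subs, dels, ins) for aligning ref[:i] with hyp[:j]
    dp = [[(0, 0, 0, 0)] * (m + 1) for _ in range(n + 1)]
    for i in range(1, n + 1):
        dp[i][0] = (i, 0, i, 0)  # every reference gloss deleted
    for j in range(1, m + 1):
        dp[0][j] = (j, 0, 0, j)  # every hypothesis gloss inserted
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            e, s, d, k = dp[i - 1][j - 1]
            miss = int(ref[i - 1] != hyp[j - 1])
            match_or_sub = (e + miss, s + miss, d, k)
            e, s, d, k = dp[i - 1][j]
            deletion = (e + 1, s, d + 1, k)
            e, s, d, k = dp[i][j - 1]
            insertion = (e + 1, s, d, k + 1)
            # fewest edits first; ties prefer fewer substitutions, then fewer deletions
            dp[i][j] = min(match_or_sub, deletion, insertion)
    _, subs, dels, ins = dp[n][m]
    return subs, dels, ins

def wer_report(pairs):
    """Corpus-level WER, deletion rate and insertion rate (percent) over (ref, hyp) pairs."""
    subs = dels = ins = words = 0
    for ref, hyp in pairs:
        s, d, i = align_counts(ref, hyp)
        subs, dels, ins, words = subs + s, dels + d, ins + i, words + len(ref)
    return 100 * (subs + dels + ins) / words, 100 * dels / words, 100 * ins / words

ref = "MORGEN NORD REGEN STARK".split()
hyp = "MORGEN REGEN STARK".split()
print(wer_report([(ref, hyp)]))  # (25.0, 25.0, 0.0)
```

The total edit count is unique, but how it splits into S, D and I can depend on tie-breaking, which is why reported del/ins rates may differ slightly between scoring tools.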

| Methods | CSL-Daily dev WER (%) | CSL-Daily test WER (%) |
|---|---|---|
| SEN [58] | 31.1 | 30.7 |
| TCNet [60] | 29.7 | 29.3 |
| SignGraph [22] | 26.4 | 25.8 |
| CoSign-2s [7] | 28.1 | 27.2 |
| MSKA [20] | 28.2 | 27.8 |
| CoSign-1s [7] | 29.5 | 29.1 |
| PGF-SLR | 27.7 | 28.3 |

| DI | FGL | PRE | PHOENIX14 | PHOENIX14-T | CSL-Daily | ||||

|---|---|---|---|---|---|---|---|---|---|

| dev (%) | test (%) | dev (%) | test (%) | dev (%) | test (%) | ||||

| baseline | 20.9 | 21.2 | 20.4 | 20.6 | 29.5 | 29.1 | |||

| a_1 | ✓ | 20.7 | 21.1 | 20.1 | 20.4 | 29.3 | 29.0 | ||

| a_2 | ✓ | 18.1 | 19.1 | 17.7 | 18.0 | 28.6 | 29.0 | ||

| a_3 | ✓ | 20.9 | 21.0 | 20.5 | 20.6 | 29.3 | 29.1 | ||

| a_4 | ✓ | ✓ | 17.8 | 18.7 | 17.5 | 18.0 | 28.3 | 28.8 | |

| a_5 | ✓ | ✓ | 20.7 | 20.9 | 20.4 | 21.2 | 29.2 | 28.9 | |

| a_6 | ✓ | ✓ | 17.7 | 18.4 | 17.5 | 18.0 | 28.1 | 28.6 | |

| PGF-SLR | ✓ | ✓ | ✓ | 17.5 | 18.2 | 17.3 | 17.7 | 27.7 | 28.3 |

| Methods | Throughput | GFLOPs | WER (%) |
|---|---|---|---|
| Baseline | 15.84 | 175.0 | 20.8 |
| PGF-SLR | 25.72 | 11.5 | 18.5 |
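The relative gains in the efficiency table follow from simple ratios of its entries. The throughput unit is not stated in this copy, but the ratio is unit-free.

```python
baseline = {"throughput": 15.84, "gflops": 175.0, "wer": 20.8}
pgf_slr = {"throughput": 25.72, "gflops": 11.5, "wer": 18.5}

speedup = pgf_slr["throughput"] / baseline["throughput"]   # higher throughput is better
flops_reduction = baseline["gflops"] / pgf_slr["gflops"]   # how many times fewer GFLOPs
wer_drop = baseline["wer"] - pgf_slr["wer"]                # absolute WER reduction (points)
relative_wer_drop = 100 * wer_drop / baseline["wer"]       # relative WER reduction (%)

print(f"throughput x{speedup:.2f}, GFLOPs /{flops_reduction:.1f}, "
      f"WER -{wer_drop:.1f} points ({relative_wer_drop:.1f}% relative)")
# throughput x1.62, GFLOPs /15.2, WER -2.3 points (11.1% relative)
```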

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wei, D.; Hu, H.; Ma, G.-F. Part-Wise Graph Fourier Learning for Skeleton-Based Continuous Sign Language Recognition. J. Imaging 2025, 11, 286. https://doi.org/10.3390/jimaging11080286


Chicago/Turabian Style: Wei, Dong, Hongxiang Hu, and Gang-Feng Ma. 2025. "Part-Wise Graph Fourier Learning for Skeleton-Based Continuous Sign Language Recognition" Journal of Imaging 11, no. 8: 286. https://doi.org/10.3390/jimaging11080286
