Abstract

Breast cancer is a significant cause of cancer-related mortality among women around the globe, underscoring the need for early and accurate diagnosis. Typically, histopathological analysis of biopsy slides is utilized for tumor classification. However, it is labor-intensive, subjective, and often affected by inter-observer variability. Therefore, this study explores a deep learning-based, multi-class classification framework for distinguishing breast cancer subtypes using convolutional neural networks (CNNs). Unlike previous work using the popular BreaKHis dataset, where binary classification models were applied, in this work, we differentiate eight histopathological subtypes: four benign (adenosis, fibroadenoma, phyllodes tumor, and tubular adenoma) and four malignant (ductal carcinoma, lobular carcinoma, mucinous carcinoma, and papillary carcinoma). This work leverages transfer learning with ImageNet-pretrained ResNet architectures (ResNet-18, ResNet-34, and ResNet-50) and extensive data augmentation to enhance classification accuracy and robustness across magnifications. Among the ResNet models, ResNet-50 achieved the best performance, attaining a maximum accuracy of 92.42%, an AUC-ROC of 99.86%, and an average specificity of 98.61%. These findings validate the combined effectiveness of CNNs and transfer learning in capturing fine-grained histopathological features required for accurate breast cancer subtype classification.

1. Introduction

Breast cancer is one of the leading causes of mortality among women across the globe. According to data reported by the WHO in 2022, approximately 2.3 million women around the world were diagnosed with breast cancer, among whom 670,000 lost their lives [1]. One of the major causes behind such a high number is late-stage diagnosis, which means the disease has already progressed to an advanced stage where treatment options are limited. Therefore, early detection is critical to confirm the presence of cancer and determine its specific subtype since the prognosis, treatment response, and progression depend on subtype classification. Hence, there is an urgent need to develop an automated, efficient, cost-effective, and objective diagnostic tool to identify the breast cancer subtype. This is crucial since it enables physicians to provide an effective treatment plan, ultimately improving the patient’s survival rate.

Traditionally, breast cancer is diagnosed through histopathological examination [2,3,4,5], where tissue samples are analyzed by pathologists under a microscope and classified as either benign or malignant. Similarly, other common diagnostic techniques employed include mammography [6,7,8], ultrasound [9,10,11], magnetic resonance imaging (MRI) [12], and biopsy-based histopathology [13,14]. All of these techniques are effective in diagnosing breast cancer. However, each has certain shortcomings. Mammography-based breast cancer screening can result in false positives or negatives for women with dense breast tissue. Ultrasound- and MRI-based diagnostic techniques are very effective; however, their dependence on a skilled operator has made them expensive and inaccessible in resource-limited settings. Although histopathology is considered the gold standard in breast cancer diagnosis, it is labor-intensive and prone to inter-observer variability, which can result in inconsistent diagnoses among pathologists, particularly in high-volume diagnostic workflows. Given the challenges associated with each of these techniques, it is crucial to have a reliable, cost-effective solution that can assist medical professionals, reduce diagnostic workload, and enhance accessibility. This can be achieved with the help of artificial intelligence (AI) and machine learning (ML). By leveraging these advanced techniques together with deep learning on histopathological images, human dependence on diagnosing or classifying cancer types can be reduced while improving diagnostic accuracy. While AI cannot replace the need for invasive biopsy procedures or specialist oversight, it serves as an efficient decision-support system that has the potential to enhance accuracy and consistency in breast cancer subtype classification.

Many studies have applied deep learning models to classify breast cancer using histopathological images, mammograms, and ultrasound scans. For example, convolutional neural networks (CNNs) are widely used in binary classification to distinguish between benign and malignant tumors. In [15], the authors used deep learning-based computer-aided diagnosis (CAD) systems to classify mammographic mass lesions, significantly enhancing diagnostic accuracy to 98.94% and reducing reliance on unnecessary biopsies. Similarly, in another study, CNN-based classification was applied to the mini-MIAS database, consisting of mammographic images, achieving an impressive accuracy of 89.05% and a sensitivity of 90.63% [16]. Transfer learning is another technique where pretrained deep learning architectures, such as VGG16, Inception, and ResNet, have shown remarkable accuracy in automating breast cancer detection in mammographic and histopathological images [17,18,19]. For example, Saber et al. [17] showed that through transfer learning with models such as VGG16 and ResNet-50, breast cancer can be diagnosed using mammographic images, with an impressive accuracy of 98.96%. Another study by Shahidi et al. [19] showed that using preprocessing, data augmentation, and model selection techniques, transfer learning models like ResNeXt and SENet can improve breast cancer classification.

Although advancements using deep learning models have led to significant progress in breast cancer diagnosis, most existing studies mainly focus on binary classification (benign vs. malignant) rather than on differentiating specific histopathological subtypes. Breast cancer is a heterogeneous disease; hence, it has multiple subtypes, each of which requires its own treatment. However, researchers have conducted limited work with deep learning models for multi-class classification of breast cancer subtypes using histopathological images. Due to this research gap, it is essential to study the ability of CNN-based deep learning architectures such as ResNet to classify the various histopathological subtypes of breast cancer. Hence, in this work, we examine various ResNet architectures for multi-class classification of breast cancer subtypes using histopathological images. Moreover, in this work, we employ the ResNet-18, ResNet-34, and ResNet-50 models to evaluate their performance in distinguishing between eight tumor subtypes in the BreaKHis dataset [20] at multiple magnifications (40X, 100X, 200X, and 400X). By analyzing the effectiveness of these deep learning models, this study will advance current efforts to automate histopathological classification, which will eventually reduce diagnostic subjectivity and improve the accuracy of breast cancer subtype identification.

The remainder of this paper is organized as follows. Section 2 discusses relevant studies conducted using the BreaKHis dataset. Section 3 presents the methodology utilized in this work to examine the performance of the various ResNet models. Section 4 presents the results, where various metrics are used to evaluate the performance of the different ResNet models. Then, we conclude by describing the implications of this study and its effects on breast cancer diagnosis, as well as potential directions for future research.

2. Related Works

In this study, we utilize the BreaKHis dataset, which has been widely used as a benchmark for testing AI-based models in breast cancer diagnosis [20]. The dataset consists of 7909 histopathological images from 82 patients, which are categorized into benign and malignant tumors. Each category is further divided into four subtypes. Additionally, the dataset provides images at four different magnification levels, including 40X, 100X, 200X, and 400X, meaning deep learning models are trained on varying image resolutions. Researchers have conducted various studies using the BreaKHis dataset to apply ML and AI models for diagnosing breast cancer. Some of the key techniques are outlined below.

2.1. Machine Learning Techniques

Initial research using the BreaKHis dataset for diagnosing breast cancer included utilizing traditional machine learning techniques to classify histopathological images. For this purpose, handcrafted features were first extracted, followed by applying classifiers such as support vector machine (SVM), k-nearest neighbor (k-NN), decision tree, and random forest. For instance, in [21], Alqudah et al. utilized sliding-window-based feature extraction using Local Binary Pattern (LBP) features, where they divided each image into 25 sliding windows for localized feature extraction. These extracted features were then utilized to train a support vector machine (SVM) classifier, achieving an impressive accuracy of 91.2%. Another study by Ariateja et al. extracted features that included color, Gabor filter, and GLCM descriptors, which were later utilized to train a weighted k-nearest neighbor (weighted k-NN) algorithm to classify histopathological images [22]. The method proposed in [22] achieved classification accuracies of 90% at 40X, 100X, and 200X magnifications, and 89.6% at 400X, demonstrating its potential for supporting breast cancer histopathology analysis. In a similar study by Murtaza et al. [23], the authors trained a decision tree model using the BreaKHis dataset and fine-tuned it on the Bioimaging Challenge 2015 dataset. Using a misclassification reduction algorithm, they achieved a classification accuracy ranging from 87.5% to 100% across four breast tumor subtypes. All these traditional machine learning techniques have shown promise. However, they have limitations due to their overreliance on feature extraction. Hence, conventional machine learning techniques are unable to capture complex patterns inherent in histopathological images.

2.2. Deep Learning Models for Binary Classification

Similarly, earlier work on applying deep learning models to the BreaKHis dataset focused primarily on binary classification using CNNs. These models focused only on differentiating between benign and malignant tumors. Araújo et al. in [24] trained a CNN model using the BreaKHis dataset, achieving an accuracy of 83.3% across all magnifications. Similarly, in another work by Spanhol et al., a patch-based CNN model was utilized for feature extraction and classification, achieving an accuracy of 85.6% at 200X magnification [20]. These works underscore the potential of deep learning models to automatically learn hierarchical features from histopathological images.

2.3. Transfer Learning and Model Optimization

The use of deep learning for the BreaKHis dataset was further advanced by Bayramoglu et al. through the combined use of CNNs with transfer learning, resulting in accuracy improving to 87.3% at 400X magnification [25].

A further advancement in breast cancer classification for benign and malignant tumors was achieved through a hybrid of a CNN and Long Short-Term Memory (LSTM) with federated learning, resulting in an accuracy of 93% [26]. A similar hybrid technique was utilized by Kaddes et al. in [27], achieving an impressive accuracy of 99.90%. Besides hybrid techniques, various deep learning models, including ResNeXt-50, DPN131, and DenseNet-169, have been utilized to classify binary cancer types, achieving an impressive accuracy of 99.5% [28]. This shows the impact and progress made in distinguishing benign from malignant tumors due to advancements in deep learning.

However, even when AI demonstrates its potential in breast cancer diagnostics through binary classification, it is not sufficient to capture the full heterogeneity of breast cancer [29]. Since breast cancer consists of multiple histopathological subtypes, developing a multi-class classification model is essential to ensure that patients can receive the proper treatment plan.

2.4. Deep Learning Models for Multi-Class Classification

Although binary classification in breast cancer research using deep learning shows great promise and potential, it is not sufficient, since subtype classification is essential for personalized treatment. For this purpose, multi-class classification of histopathological subtypes of breast cancer is necessary. In the BreaKHis dataset, there are eight classes into which images are categorized: adenosis (A), ductal carcinoma (DC), fibroadenoma (F), lobular carcinoma (LC), mucinous carcinoma (MC), papillary carcinoma (PC), phyllodes tumor (PT), and tubular adenoma (TA). Research was conducted in this direction by Umer et al., who proposed a six-branch deep convolutional neural network (6B-Net) with feature fusion and selection mechanisms for multi-class breast cancer classification [30]. When this model was applied to the BreaKHis dataset to classify histopathological images into eight breast cancer classes, an accuracy of 90.10% was achieved. Another research work adopted a DenseNet121-based deep learning model that achieved an average accuracy of 92.50% [31].

The BreaKHis dataset enables advancements in AI-driven breast cancer diagnosis, which can result in significant progress in binary and multi-class classification tasks. Traditional machine learning models, although not very accurate, provide the groundwork for integrating advanced deep learning models to further improve classification accuracy. Although the use of deep learning, transfer learning, and model optimization has significantly improved the accuracy of binary classification, much work still needs to be done in multi-class classification. The complexity and heterogeneity of breast cancer make further advancements in multi-class classification necessary to enable precise and personalized treatment planning.

3. Dataset and Preprocessing

3.1. BreaKHis Dataset

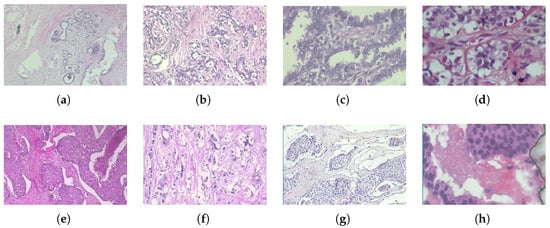

The BreaKHis dataset is publicly available [20] and consists of histopathological breast cancer images widely used for benchmarking machine learning and deep learning models for breast cancer classification. The dataset contains 7909 microscopic images obtained from 82 patients. Each image in the dataset corresponds to a breast tumor specimen extracted through biopsy procedures, as shown in Figure 1. Additionally, each image is available at four different magnification levels (40X, 100X, 200X, and 400X), capturing tissue structures at varying resolutions and enabling multi-scale feature learning, as shown in Table 1. The lower magnifications (40X, 100X) are ideal for broader tissue morphology, whereas the higher magnifications (200X, 400X) show detailed cellular structures, which are crucial for deep learning models in differentiating tumor subtypes.

Figure 1.

Histopathological images of different breast tumor subtypes from the BreaKHis dataset at various magnifications: (a) adenosis (40X), (b) fibroadenoma (100X), (c) phyllodes tumor (200X), (d) tubular adenoma (400X), (e) ductal carcinoma (40X), (f) lobular carcinoma (100X), (g) mucinous carcinoma (200X), and (h) papillary carcinoma (400X).

Table 1.

Four magnification levels in BreaKHis, categorized into benign and malignant tumor subtypes.

Each tumor sample in the dataset is further classified into four subcategories for both benign and malignant cases, as shown in Table 2. In oncology, precise information regarding the varied growth patterns, aggressiveness, and treatment responses of different cancer types is vital, which can only be achieved through multi-class classification. Given that the dataset is imbalanced, with malignant cases significantly outnumbering benign cases, data processing and augmentation are essential to train and build a robust model.

Table 2.

Tumor subtypes in the BreaKHis dataset. The dataset includes both benign and malignant tumors, each further categorized into four distinct subtypes.

3.2. Preprocessing and Augmentation

For applying deep learning models for image classification of histopathological data, it is essential for the model to effectively learn discriminative features while mitigating variations in staining techniques, imaging conditions, and tissue structures. A robust preprocessing pipeline is especially necessary for the BreaKHis dataset since the images in this dataset are of varying resolutions, intensity distributions, and orientations.

3.2.1. Image Resizing

As discussed earlier, the BreaKHis dataset consists of images at different magnification levels (40X, 100X, 200X, and 400X), leading to variations in spatial resolution. Deep learning models such as CNNs require input images to be of uniform size for batch processing. Hence, to ensure uniformity and compatibility with pretrained architectures such as ResNet, all images were resized to a standard resolution of 224 × 224 pixels. The resizing operation maintains spatial consistency across different magnifications, reduces computational overhead, and ensures compatibility with ImageNet-pretrained models. Although image resizing causes a loss of fine-grained cellular details, the use of deep feature extraction layers in the ResNet architecture compensates for this loss by capturing hierarchical spatial information.

3.2.2. Normalization

The next step in preparing the dataset for training and testing was normalization. This step is crucial for deep learning since it standardizes image intensity distributions, stabilizing training and improving model convergence. Among the various techniques, in this work, mean-variance normalization was applied to scale pixel values to a zero-mean, unit-variance distribution:

$$I_{\text{norm}} = \frac{I - \mu}{\sigma} \tag{1}$$

where $I_{\text{norm}}$ is the normalized image, $I$ represents the original pixel intensity, $\mu$ is the dataset-wide mean, and $\sigma$ is the standard deviation. This transformation reduces the variability introduced by staining differences in histopathological slides and ensures a consistent input distribution.
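As a minimal sketch of mean-variance normalization, the snippet below subtracts a dataset-wide mean and divides by the standard deviation. The pixel values and the helper name `normalize` are illustrative assumptions, and a real pipeline would typically compute the statistics per color channel.

```python
from statistics import fmean, pstdev

def normalize(pixels, mean, std):
    """Apply (I - mean) / std to every pixel intensity."""
    return [(p - mean) / std for p in pixels]

# Toy "dataset" of pixel intensities in [0, 1] (illustrative values only).
pixels = [0.2, 0.4, 0.6, 0.8]
mu = fmean(pixels)       # dataset-wide mean
sigma = pstdev(pixels)   # dataset-wide (population) standard deviation

normalized = normalize(pixels, mu, sigma)
```

After the transformation, the values have zero mean and unit variance, which is the property the normalization step relies on.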

3.3. Data Splitting Strategy

To train the ResNet models and later evaluate them, we randomly split the dataset into three subsets that included 80% for training, 10% for validation, and the remaining 10% for testing. The use of a random split ensures that each subset contains a diverse representation of the eight breast cancer subtypes, ensuring that the trained model can generalize well across unseen samples. In this work, stratified sampling was not used due to the multi-class nature of the classification task; instead, random shuffling was performed prior to splitting to ensure variability across sets.
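The 80/10/10 random split can be sketched as follows. This is an illustration under stated assumptions rather than the exact procedure used: the function name `random_split`, the seed, and the use of integer IDs in place of image paths are all hypothetical.

```python
import random

def random_split(items, train_frac=0.8, val_frac=0.1, seed=0):
    """Shuffle a copy of items and cut it into train/val/test subsets."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)   # seeded for reproducibility
    n_train = int(len(shuffled) * train_frac)
    n_val = int(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]       # remainder goes to the test set
    return train, val, test

# 7909 is the image count reported for BreaKHis; the integer IDs are placeholders.
train, val, test = random_split(range(7909), seed=42)
```

Because the split is purely random, the class proportions in each subset match the full dataset only approximately.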

3.4. Data Augmentation

Data augmentation is commonly applied when a dataset contains a limited number of images, as is the case for the histopathological images in BreaKHis. The training dataset was therefore expanded artificially by applying a series of transformations to improve the model’s robustness and generalization. The augmentation process introduces variations in image orientation and appearance while preserving the essential structural patterns required for classification, reducing the risk of overfitting. In this work, augmentation was applied through the PyTorch (version 2.5.1+cu124) transforms pipeline.

3.4.1. Random Horizontal Flipping

Histopathological slides can exhibit variations in tissue orientation due to the preparation process. To prevent potential biases arising from these positional differences, random horizontal flipping was employed: each training image was flipped with a probability of 50%. This ensures that the model is not biased toward a particular tissue orientation.
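A minimal sketch of random horizontal flipping on a 2D image stored as a list of rows is given below. The function name and the toy image are assumptions for illustration; a deep learning framework would apply the same idea to image tensors.

```python
import random

def random_horizontal_flip(image, p=0.5, rng=random):
    """Mirror each row of a 2D image (list of rows) with probability p."""
    if rng.random() < p:
        return [row[::-1] for row in image]
    return image

image = [[1, 2, 3],
         [4, 5, 6]]
flipped = random_horizontal_flip(image, p=1.0)    # p=1.0 forces the flip
unchanged = random_horizontal_flip(image, p=0.0)  # p=0.0 never flips
```

With `p=0.5`, roughly half of the training images are mirrored in each epoch, so the network sees both orientations of the same tissue.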

3.4.2. Random Rotation (±10°)

Random rotation of images within the range of ±10° was applied to account for the possible variation in slide position under the microscope.

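$$\begin{bmatrix} x' \\ y' \end{bmatrix} = \begin{bmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{bmatrix} \begin{bmatrix} x \\ y \end{bmatrix} \tag{2}$$

where $(x, y)$ represent the original pixel coordinates, $(x', y')$ represent the rotated coordinates after transformation, and $\theta$ is the randomly selected rotation angle between −10° and +10°.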
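The coordinate transformation behind a random rotation can be sketched as below. The helper `rotate_point`, the rotation center (112, 112) for a 224 × 224 image, and the seed are assumptions made for illustration; the sign convention assumes a standard y-up coordinate system.

```python
import math
import random

def rotate_point(x, y, theta_deg, cx=0.0, cy=0.0):
    """Rotate (x, y) counter-clockwise by theta_deg around (cx, cy)."""
    t = math.radians(theta_deg)
    dx, dy = x - cx, y - cy
    x_rot = cx + dx * math.cos(t) - dy * math.sin(t)
    y_rot = cy + dx * math.sin(t) + dy * math.cos(t)
    return x_rot, y_rot

# Draw one angle uniformly from [-10, +10] degrees, as in the augmentation step.
theta = random.Random(0).uniform(-10.0, 10.0)
x_rot, y_rot = rotate_point(100.0, 50.0, theta, cx=112.0, cy=112.0)
```

Rotation preserves each pixel's distance from the center, so tissue structures keep their shape and only their orientation changes.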

3.5. Handling Class Imbalance

In the BreaKHis dataset, malignant tumor samples significantly outnumber benign ones, which can result in the deep learning model being biased toward the majority class. In this work, rather than applying popular class-balancing techniques such as oversampling, undersampling, or weighted loss functions, we relied on random shuffling of the data.

4. Methodology

This study proposes an automated deep learning-based classification framework leveraging Residual Networks (ResNet-18, ResNet-34, and ResNet-50) for classifying eight distinct tumor subtypes. The model architecture is designed to efficiently extract and learn hierarchical feature representations, enabling multi-class classification with high accuracy. The methodology employed in this study consists of multiple stages, including data acquisition, model architecture, training strategy, and evaluation.

4.1. Overview of the Proposed Model

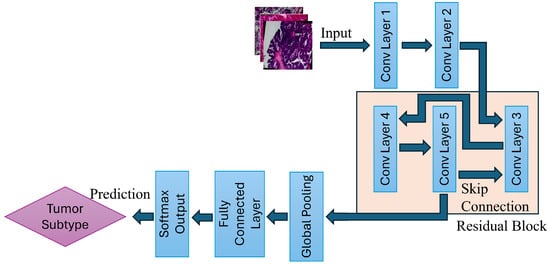

The proposed deep learning framework follows a structured pipeline that begins with the acquisition of images from the BreaKHis dataset, followed by preprocessing and augmentation, including image resizing, normalization, random horizontal flipping, and random rotation. Then, the processed dataset is fed into the ResNet-based CNN architecture for classification. The ResNet model employed in this work then performs convolutional feature extraction and residual learning, and uses fully connected classification layers, which allow for accurate differentiation among eight breast tumor subtypes, as shown in Figure 2.

Figure 2.

Illustration of the ResNet-based multi-class breast tumor classification model. The pipeline consists of convolutional feature extraction, residual block learning, global pooling, and final classification via a fully connected layer.

4.2. Deep Residual Network (ResNet) for Tumor Classification

By addressing the vanishing gradient problem, ResNet has revolutionized deep learning applications in image classification. Traditional CNN models are effective techniques for various applications; however, as the architecture grows in depth, the signal that guides the network’s learning process dwindles to near insignificance during backpropagation. This makes the learning process ineffective, eventually making the network incapable of effectively refining its parameters. ResNet rectifies this issue through the use of a skip connection, as shown in Figure 2.

The output of a residual block is computed as

$$y = F(x, W) + x \tag{3}$$

where $x$ is the input to the residual block, $F(x, W)$ is the residual function learned by the network, and $W$ represents the weight matrices of the convolutional layers.

This can be expanded further into a two-layer residual block. The transformation is defined as

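$$F(x, W) = W_2\,\sigma(W_1 x + b_1) + b_2 \tag{4}$$

where $W_1$ and $W_2$ are the convolutional weight matrices, $b_1$ and $b_2$ are the bias terms, and $\sigma$ denotes the ReLU activation.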
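To make the skip connection concrete, the sketch below evaluates a two-layer residual block on a small vector. It is a simplification under stated assumptions: dense matrix products stand in for convolutions, batch normalization is omitted, and all weights are made-up values.

```python
def relu(v):
    return [max(0.0, a) for a in v]

def linear(W, v, b):
    """Compute W @ v + b for a list-of-rows matrix W."""
    return [sum(w * a for w, a in zip(row, v)) + bi for row, bi in zip(W, b)]

def residual_block(x, W1, b1, W2, b2):
    """y = F(x) + x with F(x) = W2 * relu(W1 * x + b1) + b2."""
    f = linear(W2, relu(linear(W1, x, b1)), b2)
    return [fi + xi for fi, xi in zip(f, x)]

x = [1.0, -2.0]
zeros = [[0.0, 0.0], [0.0, 0.0]]
# With all-zero weights the residual branch vanishes and the block is the identity.
y_identity = residual_block(x, zeros, [0.0, 0.0], zeros, [0.0, 0.0])

W1 = [[1.0, 0.0], [0.0, 1.0]]
W2 = [[0.5, 0.0], [0.0, 0.5]]
y = residual_block(x, W1, [0.0, 0.0], W2, [0.0, 0.0])
```

The zero-weight case shows why identity mappings are easy to learn: the block only has to drive the residual branch toward zero, and the input passes through unchanged.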

4.3. ResNet Architecture

This work implemented three variations of ResNet, including ResNet-18, ResNet-34, and ResNet-50, to evaluate the effectiveness of network depth in classifying the various breast cancer classes. Each of these architectures differs in terms of the number of layers and computational efficiency, as shown in Table 3. ResNet-18 and ResNet-34 use a basic residual block consisting of two 3 × 3 convolutional layers, whereas ResNet-50 incorporates bottleneck residual blocks, where each block consists of three convolutional layers (1 × 1, 3 × 3, 1 × 1 convolutions). The bottleneck residual block reduces the number of computations while maintaining superior feature extraction.
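The saving offered by the bottleneck design can be checked with a quick weight count. The sketch below compares two 3 × 3 convolutions at 256 channels with a 1 × 1, 3 × 3, 1 × 1 bottleneck that reduces to 64 channels; these widths are chosen as an illustrative assumption, and bias and batch-normalization parameters are ignored.

```python
def conv_params(k, c_in, c_out):
    """Weights in a k x k convolution (bias and batch-norm terms ignored)."""
    return k * k * c_in * c_out

channels, reduced = 256, 64   # illustrative widths for one bottleneck block

# Two stacked 3x3 convolutions operating at the full channel width.
two_3x3 = 2 * conv_params(3, channels, channels)

# Bottleneck: 1x1 reduce, 3x3 at reduced width, 1x1 expand.
bottleneck = (conv_params(1, channels, reduced)
              + conv_params(3, reduced, reduced)
              + conv_params(1, reduced, channels))

ratio = two_3x3 / bottleneck
```

Under these assumptions the bottleneck needs 69,632 weights against 1,179,648 for the plain pair, roughly a 17-fold reduction at the same input and output width.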

Table 3.

Comparison of ResNet architectures utilized in this study.

4.4. Computational Setup and Training Time

ResNet training and testing were conducted on a high-performance workstation. This workstation is equipped with an NVIDIA A100 GPU (40 GB of VRAM) (NVIDIA Corporation, Santa Clara, CA, USA), an 8-core CPU, and 32 GB of RAM, and was provided through the National Research Platform (NRP) program [32]. All the histopathological images were locally stored on the workstation where the Python (version 3.12.8) scripts were executed. Simultaneously, the performance of the three ResNet architectures was measured. Among the three selected ResNet models, ResNet-50 took around 30 min to an hour to train over 20 epochs.

4.5. Model Training Process

The training process of the ResNet deep learning model follows a structured pipeline, which ensures efficient feature extraction and residual learning ideal for multi-class breast tumor subtype identification. The training mechanism involves mini-batch processing, backpropagation, and optimization using the Adam optimizer.

Each image first undergoes preprocessing and augmentation, after which it is fed into the ResNet models. All images then pass through multiple convolution processes, where hierarchical features from the images are extracted. The early stage captures low-level features such as edges and textures, while deeper layers learn high-level tumor structures. Then comes the residual learning mechanism in ResNet, which is critical for stable gradient propagation through skip connections and for mitigating the vanishing gradient problem, as shown in Figure 2.

4.5.1. Forward Propagation

Forward propagation involves the following processes:

1. Input Image Processing: Each image undergoes normalization and resizing prior to entering the neural network.

2. Convolutional Feature Extraction: As shown in Figure 2, the first two convolutional layers extract spatial features such as edges, textures, and cell morphology. These are computed using

$$F(i, j) = \sum_{m} \sum_{n} I(i + m, j + n)\, K(m, n) \tag{5}$$

where $F(i, j)$ is the output feature map, $I(i + m, j + n)$ represents the input image pixels, and $K(m, n)$ is the convolution kernel.

3. Residual Learning via Skip Connections: The residual block allows the gradient to flow smoothly and efficiently through the network, which helps prevent the vanishing gradient problem. This is achieved by adding skip connections that bypass one or more layers, enabling the network to learn identity mappings. The skip connection representations are shown in Equation (3) and Equation (4), respectively.
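The convolutional feature extraction step can be illustrated with a small pure-Python sketch. It computes a stride-1, unpadded cross-correlation, which is the operation deep learning libraries conventionally call convolution; the toy image and the edge kernel are assumptions chosen to make the result easy to verify.

```python
def conv2d(image, kernel):
    """Valid cross-correlation of a 2D image with a 2D kernel (stride 1, no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + m][j + n] * kernel[m][n]
                 for m in range(kh) for n in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]

# Toy image with a vertical edge between the second and third columns.
image = [[0, 0, 1, 1],
         [0, 0, 1, 1],
         [0, 0, 1, 1]]
edge_kernel = [[-1, 1]]   # responds to left-to-right intensity increases
feature_map = conv2d(image, edge_kernel)
```

The feature map is non-zero only where the intensity changes, which is the kind of low-level edge response the early layers of a CNN learn.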

4.5.2. ResNet Model Complexity and Efficiency Comparison

Understanding the computational trade-off between the three ResNet architectures is vital since it allows for evaluating the effectiveness of each model in resource-constrained clinical environments or real-time diagnostic workflows. Beyond classification accuracy, factors such as model size, computational overhead (GFLOPs), and inference latency must be carefully considered before determining the most effective and practical architecture for deployment. The architectural characteristics of the three ResNet variants, ResNet-18, ResNet-34, and ResNet-50, are shown in Table 4. Among the three ResNet architectures, ResNet-18 has a shallow architecture comprising 11.18 million parameters and is the lightest-weight model with the smallest model size of 42.65 MB and inference time of 3.67 milliseconds per image. On the other hand, ResNet-34 has double the number of layers compared to ResNet-18. Finally, ResNet-50 is the deepest among the three selected ResNet models, with 23.52 million parameters and a model size of 89.74 MB. All models process input images resized to 224 × 224 pixels and are configured to classify eight distinct breast cancer subtypes. Transfer learning from ImageNet-pretrained weights was employed to enhance feature extraction capabilities. This comparative analysis will enable assessing the trade-off between architectural complexity and accuracy.
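The relationship between parameter count and model size can be checked with simple arithmetic. The sketch below assumes each parameter is stored as a 32-bit float and that 1 MB is taken as 2^20 bytes; both are assumptions, since the text does not state how the sizes were measured.

```python
def model_size_mib(n_params, bytes_per_param=4):
    """Storage needed for n_params parameters, in units of 2**20 bytes."""
    return n_params * bytes_per_param / 2**20

# Parameter counts as reported in the text (rounded to two decimals there).
resnet18_mib = model_size_mib(11.18e6)
resnet50_mib = model_size_mib(23.52e6)
```

Under these assumptions the estimates come out at about 42.65 and 89.72, which agrees with the reported 42.65 MB and 89.74 MB to within the rounding of the parameter counts.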

Table 4.

Summary of ResNet architectures for breast cancer subtype classification.

5. Results and Analysis

In this section, we evaluate three deep learning models, ResNet-18, ResNet-34, and ResNet-50, to determine their performance in classifying breast cancer subtypes. We utilize multiple performance metrics, such as accuracy, precision, recall, and F1-score, for a well-rounded assessment. Graphical tools such as loss curves, confusion matrices, ROC curves, and precision–recall (PR) curves are also employed to provide insight into the models’ training dynamics, predictive behavior, and class-wise performance. Finally, the effects of various data-balancing techniques and magnifications on accuracy and classification performance are evaluated.

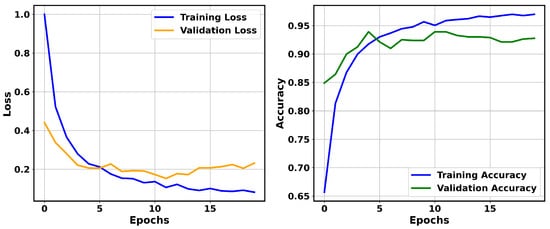

5.1. Model Training Dynamics and Convergence

Among the ResNet models utilized in this study, ResNet-50, when run over 20 epochs, achieved superior performance. The validation accuracy of ResNet-50 improved from 84.90% to a peak of 93.91% at epoch 5, after which it oscillated within the range of 92.64% to 93.91%, suggesting stable generalization to unseen data. Similarly, its training accuracy showed a consistent increase, improving from an initial 65.66% to 96.99% by the final epoch. In contrast, its validation loss steadily declined from 0.9998 to 0.0812, as shown in Figure 3. Except for brief fluctuations around epochs 4 and 6, ResNet-50 exhibited a continuous increase in accuracy. Overall, the accuracy and validation loss show that the model effectively learned to discriminate features from the training data while avoiding overfitting.

Figure 3.

Training and validation loss (left) and training and validation accuracy (right) over 20 epochs for ResNet-50 on the BreaKHis dataset.

It is important to evaluate the performance of each of the ResNet models in terms of variability and potential overfitting. Hence, the ResNet-18, -34, and -50 models were evaluated over five random seeds. Given that the BreaKHis dataset is imbalanced, different training, validation, and test sets were used to ensure that the results were not biased by a specific split during each run. The test accuracies for each of the seeds, along with their mean values and 95% confidence intervals, are shown in Table 5. Among the three architectures, ResNet-50 achieved the highest mean test accuracy of 92.42%, with a narrow confidence interval (91.13–93.72%). This shows that, compared to ResNet-18 and ResNet-34, ResNet-50 offers strong consistency and generalization. Similarly, ResNet-18 and ResNet-34 also achieved respectable mean accuracies of 88.74% and 88.46%, respectively.
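A mean and 95% confidence interval over five seeds can be computed as sketched below. The text does not state which interval formula was used, so the Student-t interval here is an assumption, and the accuracy values are hypothetical placeholders rather than the reported per-seed results.

```python
from math import sqrt
from statistics import fmean, stdev

# Two-sided 95% Student-t critical value for 4 degrees of freedom (n = 5 runs).
T_CRIT_DF4 = 2.776

def mean_ci95(values, t_crit=T_CRIT_DF4):
    """Return (mean, lower, upper); the default t_crit is only valid for 5 values."""
    m = fmean(values)
    half_width = t_crit * stdev(values) / sqrt(len(values))
    return m, m - half_width, m + half_width

# Hypothetical test accuracies (%) for five seeds, NOT the paper's values.
accs = [91.0, 92.0, 93.0, 92.5, 93.5]
mean_acc, lower, upper = mean_ci95(accs)
```

A narrow interval indicates that the accuracy depends little on the particular random split, which is the sense in which consistency across seeds is assessed.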

Table 5.

Performance summary across 5 random seeds with mean accuracy and 95% confidence intervals.

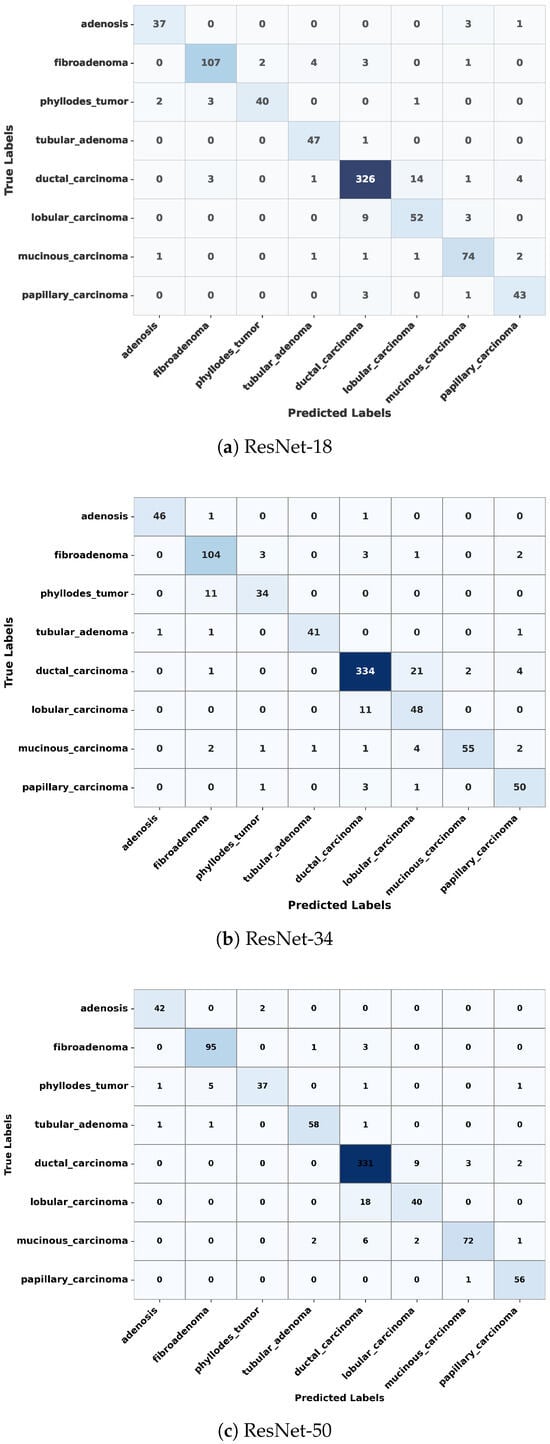

5.2. Confusion Matrix Analysis

In this section, we examine the strengths and residual weaknesses of the trained ResNet models in prediction ability on the test set across subtypes using confusion matrices. The confusion matrices for ResNet-18, ResNet-34, and ResNet-50 are shown in Figure 4. The diagonal dominance indicates high accuracy across most of the classes. The three confusion matrices shown in Figure 4 indicate that the three ResNet models were able to achieve exceptional performance in classifying ductal carcinoma, adenosis, fibroadenoma, and tubular adenoma. However, the models still showed confusion between some of the classes, such as lobular carcinoma and mucinous carcinoma, as well as phyllodes tumor and fibroadenoma, as shown in Figure 4. These misclassifications might have resulted from inherent visual similarities in tissue architecture patterns and morphology among these subtypes. Such confusion can be minimized through the use of a balanced dataset that adequately represents each of the subtypes, thus allowing the model to learn distinctive and discriminative features.

Figure 4.

Confusion matrices of ResNet-18, ResNet-34, and ResNet-50 on the test dataset, showing classification performance across eight breast tumor subtypes. Each matrix illustrates correct predictions on the diagonal and misclassifications on the off-diagonal.

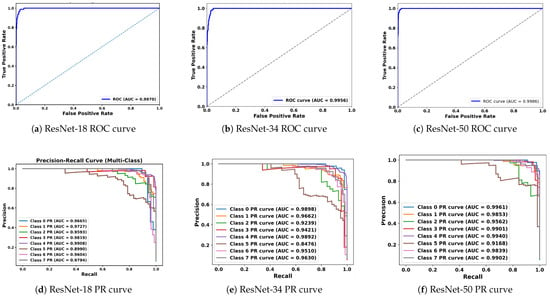

5.3. Analysis of Receiver Operating Characteristic (ROC) and Precision–Recall (PR) Curves

In medical image classification tasks, especially for diseases like cancer, it is essential not only to achieve high overall accuracy but also to rigorously evaluate how well the model distinguishes between different classes. This becomes even more crucial when the dataset is imbalanced or when the cost of false positives and false negatives varies significantly. The ROC curve serves this purpose, as it plots the true positive rate (sensitivity) against the false positive rate (1 − specificity) at various decision thresholds. The primary metric derived from the ROC curve is the area under the curve (AUC), which summarizes the model’s ability to separate positive and negative instances across all thresholds. An AUC score of 1.0 means perfect classification, whereas a value of 0.5 implies no discriminative power, which is equivalent to random guessing. In this study, the ROC curves for ResNet-18, ResNet-34, and ResNet-50 demonstrate that each model maintains a high true positive rate while keeping the false positive rate low across various decision thresholds, as shown in Figure 5. The AUC for ResNet-50 was 0.9979, signifying near-perfect classification performance across the eight breast tumor classes. Similarly, for ResNet-34, the AUC was 0.995, indicating exceptional discriminative performance as well. These high AUC values for both ResNet models highlight the capabilities of each in separating tumor classes with minimal overlap in their predictive probabilities.
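For a single class treated one-vs-rest, the AUC can be computed directly from its ranking interpretation: the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one. The sketch below uses made-up labels and scores; how the per-class values were aggregated into the reported multi-class AUC is not stated in the text.

```python
def roc_auc(labels, scores):
    """AUC as the probability that a random positive outscores a random negative."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    # Count pairwise wins; ties count as half a win.
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Illustrative one-vs-rest labels (1 = class of interest) and predicted scores.
labels = [1, 1, 0, 0, 0]
scores = [0.9, 0.4, 0.5, 0.2, 0.1]
auc = roc_auc(labels, scores)
```

Here five of the six positive-negative pairs are ranked correctly, giving an AUC of 5/6; a perfectly separating model would score 1.0.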

Figure 5.

ROC (top) and precision–recall (bottom) curves for ResNet-18, ResNet-34, and ResNet-50 on the test set. ROC panels show the trade-off between sensitivity and specificity across thresholds. PR panels summarize performance under class imbalance by emphasizing precision–recall behavior.

Although the ROC curve provides valuable insights, it can give an overly optimistic view on imbalanced datasets, because the large number of true negatives keeps the false positive rate low and can mask weak performance on minority classes. In such cases, the precision–recall (PR) curve becomes a more appropriate tool. In a PR curve, precision (positive predictive value) is plotted against recall (sensitivity), explicitly focusing on performance for the positive class while ignoring the true negatives, which dominate in imbalanced scenarios. The summary metric for the PR curve is the area under the precision–recall curve (PR-AUC), which measures the model’s ability to correctly identify positive instances while minimizing false positives. This becomes crucial in cases where detecting rare classes has significant consequences. The PR curves for ResNet-34 and ResNet-50, across each of the eight tumor classes, are shown in Figure 5. ResNet-34 shows strong PR-AUC values for all tumor subtypes, ranging from 0.85 to 0.9898. The lowest PR-AUC is observed for class 5 (lobular carcinoma), possibly due to its lower representation or its high similarity with other classes. For ResNet-50, the PR-AUC values are even higher, exceeding 0.91 for every class and 0.99 for many of them. This shows that ResNet-50 consistently maintains both high precision and recall across tumor classes, even when the dataset is imbalanced.
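
One common estimator of PR-AUC is average precision, which averages the precision obtained at the rank of each positive sample. The sketch below illustrates it on hypothetical scores for one rare class; whether the study used this estimator or a trapezoidal integration of the PR curve is not stated, so this is an assumption.

```python
def average_precision(labels, scores):
    """Mean of the precision values measured at each positive, ranked by score."""
    total_pos = sum(labels)
    if total_pos == 0:
        raise ValueError("need at least one positive sample")
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits = 0
    precision_sum = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            precision_sum += hits / rank  # precision at this recall level
    return precision_sum / total_pos


# Hypothetical one-vs-rest scores: 2 positives among 8 samples (no tied scores).
labels = [1, 0, 1, 0, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2]

ap = average_precision(labels, scores)
```

A single negative ranked above a positive lowers the average precision noticeably here, because true negatives play no role in either precision or recall.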

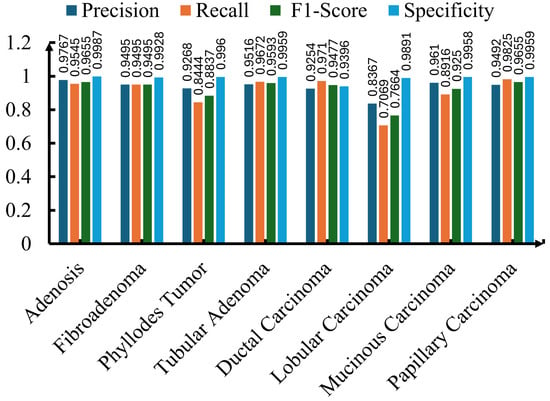

5.4. Class-Wise Performance Metrics and Detailed Analysis

For a comprehensive understanding of the model’s performance beyond overall accuracy, we utilized four metrics: precision, recall, F1-score, and specificity. Together, these metrics allow a thorough assessment of how well the model identifies each of the breast cancer subtypes present in the BreaKHis dataset. Precision is the proportion of samples correctly identified as positive among all those predicted as positive. It is important in medical diagnosis because a high precision score means that few positive predictions are false alarms, which can minimize unnecessary treatments or invasive procedures. Recall measures the proportion of the actual samples of a given class that the model identifies. A high recall score means that few actual cancer cases are missed in a clinical setting. The F1-score is the harmonic mean of precision and recall, so it reflects both the accuracy of positive predictions and the model’s completeness in identifying positive cases, which makes it particularly useful for an imbalanced dataset. Finally, specificity is the proportion of negative cases that are correctly identified as negative, indicating how well the model avoids raising false alarms on non-cancerous or different-subtype cases.
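
The four metrics can be derived for any class from a multi-class confusion matrix by treating that class as positive and all others as negative. The following is a minimal sketch under that one-vs-rest convention; the function name and the toy matrix are hypothetical.

```python
def class_metrics(cm, cls):
    """One-vs-rest metrics for class `cls`; cm rows = true, columns = predicted."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    tp = cm[cls][cls]
    fn = sum(cm[cls]) - tp                         # true cls, predicted otherwise
    fp = sum(cm[r][cls] for r in range(n)) - tp    # other class, predicted cls
    tn = total - tp - fn - fp
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    specificity = tn / (tn + fp) if tn + fp else 0.0
    return {"precision": precision, "recall": recall,
            "f1": f1, "specificity": specificity}


# Hypothetical three-class confusion matrix (30 samples).
toy_cm = [
    [8, 1, 1],
    [2, 6, 2],
    [0, 1, 9],
]

metrics_class_1 = class_metrics(toy_cm, cls=1)
```

In this toy case class 1 has a recall of 0.6 but a specificity of 0.9, which illustrates why specificity alone can look strong in a multi-class setting where most samples are negatives for any given class.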

Although all three ResNet models achieved impressive performance across all four metrics, ResNet-50 exhibited superior performance, and its evaluation is shown in Figure 6. Precision exceeded 93% for every class except lobular carcinoma. For lobular carcinoma, the F1-score and recall were under 78%, likely due to subtle visual differences from other subtypes or to fewer training samples. For the other subtypes, the model performed strongly. For instance, adenosis achieved the highest scores in each category, with a precision of 0.9767, a recall of 0.9545, and an F1-score of 0.9655, showing the trained model’s exceptional ability to identify adenosis with minimal misclassifications. Tubular adenoma, fibroadenoma, and papillary carcinoma also showed high precision, recall, and F1-scores. For ductal carcinoma, one of the most prevalent and clinically significant malignant subtypes, the model achieved a recall of 0.9710 and an F1-score of 0.9477. This indicates that the trained model can detect this critical class with few false negatives. Beyond precision, recall, and F1-score, the model consistently achieved high specificity, with each subtype scoring more than 0.92. For some subtypes, such as adenosis, tubular adenoma, and papillary carcinoma, the specificity was near-perfect. This shows that the trained ResNet-50 model keeps false positive predictions low, which is an essential characteristic in clinical applications.
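
As a quick consistency check, the reported adenosis F1-score can be recomputed from the reported precision and recall using the harmonic-mean definition. The helper name is hypothetical; the three numbers are the ones quoted above.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# Adenosis values reported for ResNet-50 in this section.
adenosis_f1 = f1_score(precision=0.9767, recall=0.9545)
adenosis_f1_rounded = round(adenosis_f1, 4)
```

The recomputed value rounds to the reported 0.9655, so the three adenosis figures are mutually consistent.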

Figure 6.

Precision, recall, F1-score, and specificity for each of the eight breast cancer classes using the ResNet-50 model.

To measure the impact and effectiveness of the proposed ResNet model, it is crucial to compare it with existing published works that also utilized the BreaKHis dataset and applied multi-class classification on breast tumor subtypes. This comparison is presented in Table 6, where the proposed technique is shown to achieve superior accuracy compared to previously reported methods that achieved accuracies ranging from 73.68% to 91.3%. In these methods, various techniques such as traditional CNNs, Inception V3, and attention-based networks like ECSAnet were employed. For most of the methods shown in Table 6, the datasets were split in an 80:20 ratio for the training and test sets. In some cases, they were split into training, test, and validation sets. For uniformity, the validation and test divisions are combined into one category. This comparison clearly shows the robustness and practical potential of the proposed model in automating breast cancer subtype classification for clinical pathology.

Table 6.

Comparison of classification performance with existing methods.

5.5. Impact of Class Imbalance Mitigation Strategies

As shown in Figure 6, among all subtypes, ResNet-50 performed least satisfactorily in classifying lobular carcinoma. A likely contributor is the class imbalance in the dataset, where the number of histopathological images varies significantly across breast cancer subtypes. Therefore, class-balanced oversampling and focal loss were applied to address this severe class imbalance in the BreaKHis dataset. These approaches were evaluated against the baseline model trained with random shuffling, as used elsewhere in this work. Focal loss and balanced oversampling improved ResNet-50’s ability to identify lobular and papillary carcinoma compared to random shuffling, as shown in Figure 7. However, for the other classes, performance with focal loss and balanced oversampling was either similar or worse, which was especially evident for ductal carcinoma. Hence, overall, the two imbalance-mitigation techniques did not yield substantial improvements over the baseline model.
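
For readers unfamiliar with the two mitigation strategies, the sketch below shows the standard per-sample focal loss, which down-weights well-classified samples relative to cross-entropy, and inverse-frequency sampling weights of the kind typically used for class-balanced oversampling. The hyperparameters (gamma = 2.0, alpha = 1.0) and the weighting scheme are assumptions, since the values used in this study are not stated here.

```python
import math


def cross_entropy(probs, target):
    """Standard cross-entropy for one sample given softmax probabilities."""
    return -math.log(probs[target])


def focal_loss(probs, target, gamma=2.0, alpha=1.0):
    """Focal loss for one sample: -alpha * (1 - p_t)**gamma * log(p_t)."""
    p_t = probs[target]
    return -alpha * (1.0 - p_t) ** gamma * math.log(p_t)


def balanced_sample_weights(labels):
    """Sampling weight per sample, inversely proportional to class frequency."""
    counts = {}
    for y in labels:
        counts[y] = counts.get(y, 0) + 1
    return [1.0 / counts[y] for y in labels]


# Hypothetical predictions: an easy sample (p_t = 0.9) and a hard one (p_t = 0.2).
easy = [0.90, 0.05, 0.05]
hard = [0.20, 0.50, 0.30]

easy_ratio = focal_loss(easy, 0) / cross_entropy(easy, 0)   # about 0.01
hard_ratio = focal_loss(hard, 0) / cross_entropy(hard, 0)   # about 0.64

# Hypothetical imbalanced labels: three samples of class 0, one of class 1.
weights = balanced_sample_weights([0, 0, 0, 1])
```

With gamma set to 0 the focal loss reduces to cross-entropy, so gamma controls how strongly training focuses on hard samples. Both techniques redistribute emphasis rather than add information, which is consistent with gains on rare classes coming at some cost to frequent ones.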

Figure 7.

Comparison of confusion matrices for ResNet-50 using baseline (left), balanced oversampling (middle), and focal loss (right) techniques.

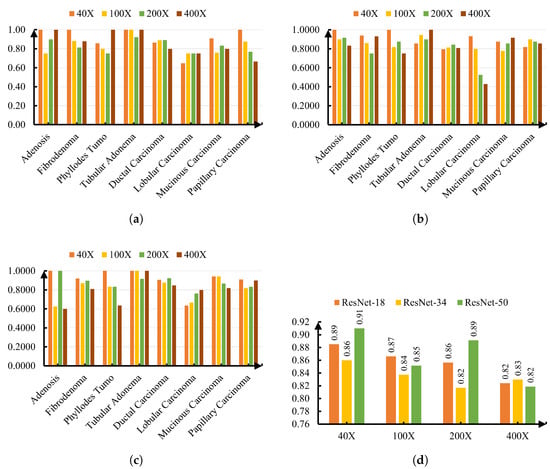

5.6. Class-Wise Performance Across Magnifications

Thus far, the accuracy of each ResNet model and its performance for each of the classes have been measured. However, to evaluate the robustness of the proposed models, it is also important to report performance separately for each magnification level (40×, 100×, 200×, and 400×) rather than collapsing the results into a single aggregated figure. This shows the effect of magnification on the accuracy of each ResNet model in classifying histopathological images. A comparison of the accuracies of ResNet-18, ResNet-34, and ResNet-50 at each magnification level is shown in Figure 8d. ResNet-50 performed better than ResNet-18 and ResNet-34 at 40× and 200× magnification, whereas ResNet-34 and ResNet-18 achieved the highest accuracy at 100× and 400× magnification, respectively.
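
Stratifying results by magnification amounts to grouping test predictions by a metadata field before scoring. The sketch below shows this with hypothetical labels and magnification tags; it is not the evaluation code used in the study.

```python
from collections import defaultdict


def accuracy_by_group(groups, y_true, y_pred):
    """Accuracy computed separately for each group label (e.g., magnification)."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for g, t, p in zip(groups, y_true, y_pred):
        total[g] += 1
        correct[g] += int(t == p)
    return {g: correct[g] / total[g] for g in total}


# Hypothetical test samples tagged with their magnification level.
magnifications = ["40x", "40x", "100x", "100x", "200x", "400x"]
y_true = [0, 1, 2, 3, 4, 5]
y_pred = [0, 1, 2, 0, 4, 4]

per_magnification = accuracy_by_group(magnifications, y_true, y_pred)
pooled = sum(int(t == p) for t, p in zip(y_true, y_pred)) / len(y_true)
```

In this toy case the pooled accuracy of about 0.67 hides the fact that every error falls at two magnification levels, which is the motivation for reporting the stratified results.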

Figure 8.

Performance across magnifications for different ResNet variants. (a) Precision per class for ResNet-18 across magnifications. (b) Precision per class for ResNet-34 across magnifications. (c) Precision per class for ResNet-50 across magnifications. (d) Overall accuracy across magnifications for all ResNet variants.

In addition to accuracy, precision was evaluated to provide a more detailed view of model performance for each tumor subtype across different magnifications. High precision is particularly important in medical diagnosis, as it reflects the likelihood that a positive classification truly corresponds to the target condition, thereby reducing the risk of unnecessary treatments. All three ResNet models showed high precision in classifying tubular adenoma at all four magnification levels. Meanwhile, lobular carcinoma exhibited the most variability, with precision dropping below 0.70 in multiple cases, particularly for ResNet-34 at 200× (0.5263) and 400× (0.4286), suggesting greater sensitivity to magnification changes and possible feature overlap with other subtypes. For phyllodes tumor, on the other hand, the precision of ResNet-18 and ResNet-50 was much more consistent across magnifications than that of ResNet-34. These findings highlight that magnification and network depth affect both overall accuracy and class-wise precision, particularly for certain subtypes.

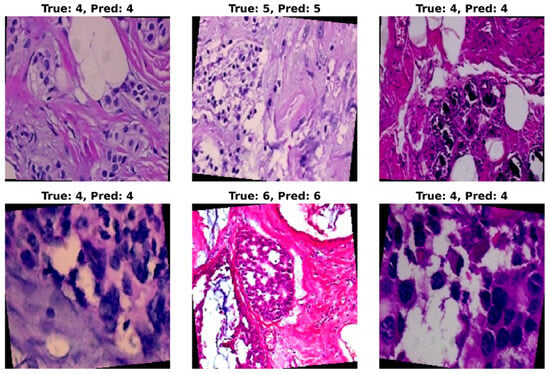

5.7. Visualization of Model Predictions

Through quantitative metrics, we were able to showcase the effectiveness of the ResNet models in classifying breast cancer into eight subtypes. To complement the numerical results, a visualization of the trained model’s prediction ability provides valuable insights into classification performance. For this purpose, randomly selected test set images were applied as input to the model, and the predicted classification was then compared with the original class label. A grid of six histopathological images from the BreaKHis test set, representing various breast cancer subtypes and magnification levels, was provided as input to the trained ResNet-50 model. The model’s predictions (Pred), alongside the true class labels (True), are shown in Figure 9. In Figure 9, True class 4 represents ductal carcinoma, class 5 represents lobular carcinoma, and class 6 corresponds to mucinous carcinoma. In this figure, most of the predictions on the test samples are shown to be correct. However, on some occasions, misclassification may occur when the subtypes exhibit subtle morphological similarities.

Figure 9.

Visualization of ResNet-50 model predictions on randomly selected histopathological images from the BreaKHis test set. Each image displays the corresponding ground-truth class (True) and the predicted class (Pred) assigned by the model. The visualization highlights the model’s strong ability to correctly classify most tumor subtypes at varying magnifications, while also revealing occasional misclassifications in subtypes with subtle morphological differences.

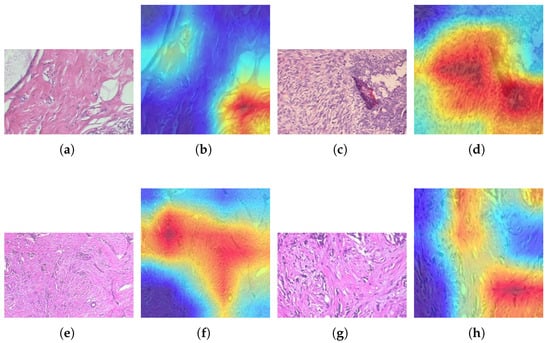

5.8. Model Interpretability and Explanation

Visualizing the predictions of the ResNet models is informative; however, it is also important to identify which regions of a histopathology image drive a prediction, whether correct or incorrect. This can be achieved by using Gradient-weighted Class Activation Mapping (Grad-CAM). Because ResNet-50 achieved the highest accuracy among the ResNet models, a qualitative Grad-CAM visualization of its predictions on histopathology images from several classes of the BreaKHis dataset is shown in Figure 10. Warmer colors indicate a more substantial positive contribution to the selected class, whereas cooler or lighter colors indicate little or no contribution. Figure 10a shows an original adenosis sample at 100× magnification, which the ResNet-50 model classified correctly. Figure 10b shows the Grad-CAM of the same histopathology image, where heat concentrates over crowded glandular/acinar units within the preserved lobular architecture. Similarly, another correctly predicted sample from the phyllodes tumor category at 100× magnification and its Grad-CAM representation are shown in Figure 10c and Figure 10d, respectively. Besides correct predictions, it is vital to see what Grad-CAM highlights when the model makes incorrect predictions in order to better understand the reasons behind them. Hence, Figure 10e,g show original ductal carcinoma samples, selected at a different magnification, that ResNet-50 incorrectly classified as lobular carcinoma, with the corresponding Grad-CAM maps in Figure 10f,h. These visualizations support the successful classifications and help explain the failure modes behind the misclassifications.
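
The core Grad-CAM computation can be sketched in a few lines: each feature map of a chosen convolutional layer is weighted by the spatial average of the class-score gradient with respect to that map, the weighted maps are summed, and a ReLU keeps only positive evidence. The pure-Python version below assumes the activations and gradients have already been extracted (in practice via framework hooks); the choice of layer, the function name, and the toy tensors are hypothetical.

```python
def grad_cam(activations, gradients):
    """Grad-CAM heat map from K feature maps and their gradients (each H x W).

    Returns an H x W map scaled to [0, 1].
    """
    height = len(activations[0])
    width = len(activations[0][0])
    # Channel weights: global average of the gradients over spatial positions.
    weights = [sum(sum(row) for row in g) / (height * width) for g in gradients]
    # Weighted sum over channels followed by ReLU.
    cam = [
        [
            max(0.0, sum(w * a[i][j] for w, a in zip(weights, activations)))
            for j in range(width)
        ]
        for i in range(height)
    ]
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]
    return cam


# Hypothetical layer output: two 2 x 2 feature maps and their gradients.
activations = [
    [[1, 0], [0, 2]],
    [[0, 3], [1, 0]],
]
gradients = [
    [[1, 1], [1, 1]],       # channel supports the selected class
    [[-1, -1], [-1, -1]],   # channel counts against the selected class
]

heatmap = grad_cam(activations, gradients)
```

In a full pipeline the resulting low-resolution map is upsampled to the input size and overlaid on the image, which produces the warm and cool regions described above.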

Figure 10.

Grad-CAM visualizations: (a) original benign adenosis sample, (b) Grad-CAM of benign adenosis, (c) original benign phyllodes tumor sample, (d) Grad-CAM of benign phyllodes tumor sample, (e) original ductal carcinoma sample, (f) Grad-CAM of ductal carcinoma misclassified as lobular carcinoma, (g) original ductal carcinoma sample, (h) Grad-CAM of ductal carcinoma misclassified as lobular carcinoma.

6. Discussion

This work demonstrates the potential of deep learning models, such as the ResNet architecture, for the challenging task of multi-class classification of breast cancer subtypes from histopathological images. Among ResNet-18, ResNet-34, and ResNet-50, the latter outperformed the others across the evaluated metrics, including accuracy, AUC-ROC, precision, recall, F1-score, and specificity. This superior performance can largely be attributed to the deeper architecture of ResNet-50, specifically its bottleneck residual blocks, which enable the extraction of complex, fine-grained histopathological features essential for differentiating among visually similar subtypes. The model performed best on subtypes such as ductal carcinoma, adenosis, fibroadenoma, and tubular adenoma. Even though the model performed better than other published works in classifying lobular carcinoma and mucinous carcinoma, its performance for these subtypes was still not as strong as for others, likely due to the subtle morphological differences among these subtypes and their under-representation within the dataset. Hence, further research is necessary, in which more advanced data augmentation strategies, subtype-specific learning approaches, and more balanced datasets are used to improve the classification of challenging subtypes.

6.1. Practical Deployment Scenarios

A highly accurate, trained AI model is insufficient without an actual plan for evaluating the model’s applicability in real-world clinical workflows. The proposed model can be deployed in two different scenarios, as outlined below.

6.1.1. Workstation-Integrated Microscope

In this approach, a workstation uses a histological microscope to capture images, deploys the trained ResNet model in the same environment, and promptly classifies the breast cancer type [37]. In the preprocessing steps, the captured image is resized to 224 × 224 pixels, and after normalization, it is fed as input to the ResNet model, which classifies the histopathological image into one of the eight categories. In this scenario, pathologists can utilize and interact with an AI model for diagnosis, fostering a “dialogue-mode” workflow that combines human expertise with machine precision.
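
The preprocessing step can be sketched as a resize to 224 × 224 followed by per-channel normalization. The snippet below is a dependency-free illustration under two assumptions that are not stated in this section: nearest-neighbor interpolation (imaging libraries typically default to bilinear) and the commonly used ImageNet channel means and standard deviations.

```python
# Commonly used ImageNet statistics for RGB inputs scaled to [0, 1] (assumed).
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def resize_nearest(image, out_h, out_w):
    """Nearest-neighbor resize of an image stored as rows of (R, G, B) tuples."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[i * in_h // out_h][j * in_w // out_w] for j in range(out_w)]
        for i in range(out_h)
    ]


def normalize(image):
    """Scale 8-bit pixels to [0, 1], then standardize each channel."""
    return [
        [
            tuple((c / 255.0 - m) / s
                  for c, m, s in zip(px, IMAGENET_MEAN, IMAGENET_STD))
            for px in row
        ]
        for row in image
    ]


# Hypothetical 4 x 6 capture with a uniform color, standing in for a microscope image.
raw = [[(255, 0, 128) for _ in range(6)] for _ in range(4)]

resized = resize_nearest(raw, 224, 224)
model_input = normalize(resized)
```

Whatever statistics and interpolation were used during training should be reproduced exactly at deployment, since a mismatch in preprocessing can degrade accuracy even when the model weights are unchanged.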

6.1.2. Remote Processing Workflow

In some scenarios, the trained AI model needs more computational resources than the workstation can provide, making deployment in the workstation-integrated microscope setup impractical. In such cases, histopathological slides are digitized and transferred to a centralized server for analysis. Typically, in this type of setup, images undergo manual or semi-automated preprocessing, including format conversion and spatial resolution adjustments. After preprocessing, the image is fed to the trained model, which classifies it into one of the eight categories. Although this approach provides access to greater computational resources, it suffers from latency due to data transfer and preprocessing overhead.

6.2. Future Work

In a recent work on the BreaKHis dataset, BreaST-Net, an ensemble of Swin Transformers, achieved a higher test accuracy of 96% across eight subtypes (at 40× magnification) [38]. However, some of the metrics used in the model were performance-related. Similarly, Joseph et al. used a hybrid method combining a handcrafted feature extraction technique and a deep neural network (DNN) classifier, achieving an accuracy of 97.89% in multi-class classification of breast cancer subtypes on the same dataset [39]. Likewise, Chikkala et al. proposed a novel bidirectional recurrent neural network (BRNN) framework that integrates a ResNet-50-based transfer learning backbone, Gated Recurrent Units (GRUs), residual collaborative branches, and a feature fusion module for multi-class classification on the BreaKHis dataset, achieving an accuracy of 97.25% [40]. Sharma et al. used a VGG16 pretrained network with a linear SVM classifier on the BreaKHis dataset, achieving an accuracy slightly better than that of the ResNet-50 model proposed in this work [41]. These recent publications highlight that higher accuracy in multi-class classification of breast cancer subtypes can be achieved by incorporating advanced architectures, such as transformers or recurrent neural networks, or by using hybrid learning approaches that integrate handcrafted features. While these models achieved higher accuracies than the ResNet-50 model presented in this study, they often require more complex architectures or additional training resources. Additionally, the integration of explainable AI (XAI) methods could improve the interpretability of model decisions, fostering greater trust in clinical applications. In future work, we will incorporate these advanced methods into the current framework, along with additional techniques such as ensemble learning and domain adaptation, to improve accuracy and generalizability.

Recently, Attention GhostUNet++ achieved better contextual understanding and feature refinement on liver CT images, with efficient computational resource requirements [42]. Hence, comparing the ResNet models presented in this work with Attention GhostUNet++ is pertinent. Moreover, in future work, we will also investigate the effect of using native image resolutions or a patch-based strategy, as discussed in [43], rather than the uniform resizing to 224 × 224 employed in this work. Overall, this study reinforces the capability of deep learning for multi-class classification of histopathological images and lays a strong foundation for continued research in this domain.

7. Conclusions

In this study, we developed and rigorously evaluated a deep learning framework for multi-class classification of eight breast cancer subtypes on the BreaKHis dataset using ResNet-18, ResNet-34, and ResNet-50. Across five random seeds, ResNet-50 delivered the strongest and most stable performance (mean test accuracy of 92.42% ± 1.30%; 95% CI: 91.13–93.72%), with high discrimination on threshold-free metrics (overall AUC-ROC up to 99.57%) and robust class-wise precision–recall behavior (PR-AUC ≥ 0.91 for all classes, with several ≥ 0.99). Confusion-matrix analyses showed consistently correct recognition of adenosis, fibroadenoma, tubular adenoma, and ductal carcinoma, while the model most often confused lobular with mucinous carcinoma and, to a lesser extent, phyllodes tumor with fibroadenoma; these patterns align with known morphological similarity. Grad-CAM visualizations indicated that the network attends to histologically meaningful regions in both correct and error cases, providing qualitative interpretability that can support pathologist review. Beyond histopathology, the proposed approach is potentially suitable for multi-class breast tumor classification based on X-ray mammography, ultrasonography, and magnetic resonance imaging, provided that appropriate modality-specific preprocessing and retraining are applied. Future work will investigate cross-modality transfer learning and domain adaptation to validate this potential on external, multi-institutional cohorts.

Despite these strengths, limitations remain. Performance is partially constrained by class imbalance and dataset size, and our evaluation is limited to a single public cohort; external, multi-institutional validation is necessary to establish generalizability. Additionally, because the task presented in this work is image-level classification, not segmentation, our assessment appropriately focused on classification metrics (accuracy, AUC-ROC, PR-AUC, precision, recall, F1-score, and specificity) rather than surface-distance measures.

Future work will prioritize (i) patient-level or leave-one-patient-out splitting to further mitigate potential leakage, (ii) stain normalization and domain adaptation to reduce site-specific bias, (iii) ensemble and transformer/hybrid backbones for harder subtypes, and (iv) expanded interpretability studies and prospective, pathologist-in-the-loop evaluation. Taken together, our results establish a strong, reproducible baseline for subtype-level histopathology classification and outline a clear path toward clinically reliable, AI-assisted decision support.

Author Contributions

Conceptualization, A.D. and R.M.; methodology, A.D.; software, A.D.; validation, A.D. and R.M.; formal analysis, A.D.; investigation, A.D.; resources, A.D.; data curation, A.D.; writing—original draft preparation, R.M.; writing—review and editing, R.M.; visualization, R.M.; supervision, R.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The BreaKHis dataset used in this study is publicly available at https://www.kaggle.com/datasets/ambarish/breakhis or via the original website at http://web.inf.ufpr.br/vri/databases/breast-cancer-histopathological-database-breakhis/, accessed on 30 October 2024.

Acknowledgments

The authors would like to thank California State University, Fullerton (CSUF), for providing access to Grammarly through its institutional license. During the preparation of this manuscript, the authors used Grammarly for grammar checking and sentence refinement. The authors have reviewed and edited the final manuscript and take full responsibility for its content.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Løyland, B.; Sandbekken, I.H.; Grov, E.K.; Utne, I. Causes and risk factors of breast cancer, what do we know for sure? An evidence synthesis of systematic reviews and meta-analyses. Cancers 2024, 16, 1583.

- Veta, M.; Pluim, J.P.; Diest, P.J.V.; Viergever, M.A. Breast cancer histopathology image analysis: A review. IEEE Trans. Biomed. Eng. 2014, 61, 1400–1411.

- Aswathy, M.A.; Jagannath, M. Detection of breast cancer on digital histopathology images: Present status and future possibilities. Inform. Med. Unlocked 2017, 8, 74–79.

- Li, C.I.; Uribe, D.J.; Daling, J.R. Clinical characteristics of different histologic types of breast cancer. Br. J. Cancer 2005, 93, 1046–1052.

- Rakha, E.A.; Reis-Filho, J.S.; Baehner, F.; Dabbs, D.J.; Decker, T.; Eusebi, V.; Fox, S.B.; Ichihara, S.; Jacquemier, J.; Lakhani, S.R.; et al. Breast cancer prognostic classification in the molecular era: The role of histological grade. Breast Cancer Res. 2010, 12, 207.

- Gøtzsche, P.C.; Olsen, O. Is screening for breast cancer with mammography justifiable? Lancet 2000, 355, 129–134.

- Olsen, O.; Gøtzsche, P.C. Screening for breast cancer with mammography. Cochrane Database Syst. Rev. 2001, 4, CD001877.

- Gøtzsche, P.C.; Jørgensen, K.J. Screening for breast cancer with mammography. Cochrane Database Syst. Rev. 2013.

- Guo, R.; Lu, G.; Qin, B.; Fei, B. Ultrasound imaging technologies for breast cancer detection and management: A review. Ultrasound Med. Biol. 2018, 44, 37–70.

- Gordon, P.B. Ultrasound for breast cancer screening and staging. Radiol. Clin. 2002, 40, 431–441.

- Sood, R.; Rositch, A.F.; Shakoor, D.; Ambinder, E.; Pool, K.L.; Pollack, E.; Mollura, D.J.; Mullen, L.A.; Harvey, S.C. Ultrasound for Breast Cancer Detection Globally: A Systematic Review and Meta-Analysis. JGO 2019, 5, 1–17.

- Bluemke, D.A.; Gatsonis, C.A.; Chen, M.H.; DeAngelis, G.A.; DeBruhl, N.; Harms, S.; Heywang-Köbrunner, S.H.; Hylton, N.; Kuhl, C.K.; Schnall, M.D.; et al. Magnetic resonance imaging of the breast prior to biopsy. JAMA 2004, 292, 2735–2742.

- Kwok, T.C.; Rakha, E.A.; Lee, A.H.S.; Grainge, M.; Green, A.R.; Ellis, I.O.; Powe, D.G. Histological grading of breast cancer on needle core biopsy: The role of immunohistochemical assessment of proliferation. Histopathology 2010, 57, 212–219.

- Ellis, I.O.; Humphreys, S.; Michell, M.; Pinder, S.E.; Wells, C.A.; Zakhour, H. Best Practice No 179: Guidelines for breast needle core biopsy handling and reporting in breast screening assessment. J. Clin. Pathol. 2004, 57, 897–902.

- Chougrad, H.; Zouaki, H.; Alheyane, O. Deep Convolutional Neural Networks for breast cancer screening. Comput. Methods Programs Biomed. 2018, 157, 19–30.

- Li, B.; Ge, Y.; Zhao, Y.; Guan, E.; Yan, W. Benign and malignant mammographic image classification based on Convolutional Neural Networks. In Proceedings of the 2018 10th International Conference on Machine Learning and Computing, Macau, China, 26–28 February 2018; ICMLC ’18. pp. 247–251.

- Saber, A.; Sakr, M.; Abo-Seida, O.M.; Keshk, A.; Chen, H. A novel deep-learning model for automatic detection and classification of breast cancer using the transfer-learning technique. IEEE Access 2021, 9, 71194–71209.

- Saini, M.; Susan, S. Vggin-net: Deep transfer network for imbalanced breast cancer dataset. IEEE/ACM Trans. Comput. Biol. Bioinform. 2022, 20, 752–762.

- Shahidi, F.; Daud, S.M.; Abas, H.; Ahmad, N.A.; Maarop, N. Breast cancer classification using deep learning approaches and histopathology image: A comparison study. IEEE Access 2020, 8, 187531–187552.

- Spanhol, F.A.; Oliveira, L.S.; Petitjean, C.; Heutte, L. A Dataset for Breast Cancer Histopathological Image Classification. IEEE Trans. Biomed. Eng. 2016, 63, 1455–1462.

- Alqudah, A.; Alqudah, A.M. Sliding Window Based Support Vector Machine System for Classification of Breast Cancer Using Histopathological Microscopic Images. IETE J. Res. 2022, 68, 59–67.

- Ariateja, D.; Aprilliyani, R.; Chaidir, M.D. Breast cancer histopathological images classification based on weighted K-nearest neighbor. AIP Conf. Proc. 2024, 3215, 120007.

- Murtaza, G.; Abdul Wahab, A.W.; Raza, G.; Shuib, L. A tree-based multiclassification of breast tumor histopathology images through deep learning. Comput. Med. Imaging Graph. 2021, 89, 101870.

- Araújo, T.; Aresta, G.; Castro, E.; Rouco, J.; Aguiar, P.; Eloy, C.; Polónia, A.; Campilho, A. Classification of breast cancer histopathological images using Convolutional Neural Networks. PLoS ONE 2017, 12, e0177544.

- Bayramoglu, N.; Kannala, J.; Heikkilä, J. Deep learning for magnification independent breast cancer histopathology image classification. In Proceedings of the 2016 23rd International Conference on Pattern Recognition (ICPR), Cancun, Mexico, 4–8 December 2016; pp. 2440–2445.

- Mehta, S.; Khurana, S. Enhanced Breast Tumor Detection with a CNN-LSTM Hybrid Approach: Advancing Accuracy and Precision. In Proceedings of the 2024 2nd International Conference on Recent Trends in Microelectronics, Automation, Computing and Communications Systems (ICMACC), Hyderabad, India, 19–21 December 2024; pp. 14–18.

- Kaddes, M.; Ayid, Y.M.; Elshewey, A.M.; Fouad, Y. Breast cancer classification based on hybrid CNN with LSTM model. Sci. Rep. 2025, 15, 4409.

- Toma, T.A.; Biswas, S.; Miah, M.S.; Alibakhshikenari, M.; Virdee, B.S.; Fernando, S.; Rahman, M.H.; Ali, S.M.; Arpanaei, F.; Hossain, M.A.; et al. Breast Cancer Detection Based on Simplified Deep Learning Technique with Histopathological Image Using BreaKHis Database. Radio Sci. 2023, 58, e2023RS007761.

- Benhammou, Y.; Achchab, B.; Herrera, F.; Tabik, S. BreakHis based breast cancer automatic diagnosis using deep learning: Taxonomy, survey and insights. Neurocomputing 2020, 375, 9–24.

- Umer, M.J.; Sharif, M.; Kadry, S.; Alharbi, A. Multi-Class Classification of Breast Cancer Using 6B-Net with Deep Feature Fusion and Selection Method. J. Pers. Med. 2022, 12, 683.

- Rafiq, A.; Jaffar, A.; Latif, G.; Masood, S.; Abdelhamid, S.E. Enhanced Multi-Class Breast Cancer Classification from Whole-Slide Histopathology Images Using a Proposed Deep Learning Model. Diagnostics 2025, 15, 582.

- Weitzel, D.; Graves, A.; Albin, S.; Zhu, H.; Wuerthwein, F.; Tatineni, M.; Mishin, D.; Khoda, E.; Sada, M.; Smarr, L.; et al. The National Research Platform: Stretched, Multi-Tenant, Scientific Kubernetes Cluster. In Proceedings of the Practice and Experience in Advanced Research Computing 2025: The Power of Collaboration, PEARC ’25, Columbus, OH, USA, 20–24 July 2025.

- Bardou, D.; Zhang, K.; Ahmad, S.M. Classification of Breast Cancer Based on Histology Images Using Convolutional Neural Networks. IEEE Access 2018, 6, 24680–24693.

- Mi, W.; Li, J.; Guo, Y.; Ren, X.; Liang, Z.; Zhang, T.; Zou, H. Deep Learning-Based Multi-Class Classification of Breast Digital Pathology Images. Cancer Manag. Res. 2021, 13, 4605–4617.

- Nguyen, P.T.; Nguyen, T.T.; Nguyen, N.C.; Le, T.T. Multiclass Breast Cancer Classification Using Convolutional Neural Network. In Proceedings of the 2019 International Symposium on Electrical and Electronics Engineering (ISEE), Ho Chi Minh City, Vietnam, 10–12 October 2019; pp. 130–134.

- Aldakhil, L.A.; Alhasson, H.F.; Alharbi, S.S. Attention-Based Deep Learning Approach for Breast Cancer Histopathological Image Multi-Classification. Diagnostics 2024, 14, 1402.

- Abeyrathna, D.; Ashaduzzaman, M.; Malshe, M.; Kalimuthu, J.; Gadhamshetty, V.; Chundi, P.; Subramaniam, M. An AI-based approach for detecting cells and microbial byproducts in low volume scanning electron microscope images of biofilms. Front. Microbiol. 2022, 13, 996400.

- Tummala, S.; Kim, J.; Kadry, S. BreaST-Net: Multi-Class Classification of Breast Cancer from Histopathological Images Using Ensemble of Swin Transformers. Mathematics 2022, 10, 4109.

- Joseph, A.A.; Abdullahi, M.; Junaidu, S.B.; Ibrahim, H.H.; Chiroma, H. Improved multi-classification of breast cancer histopathological images using handcrafted features and deep neural network (dense layer). Intell. Syst. Appl. 2022, 14, 200066.

- Chikkala, R.B.; Anuradha, C.; Murty, P.S.C.; Rajeswari, S.; Rajeswaran, N.; Murugappan, M.; Chowdhury, M.E. Enhancing Breast Cancer Diagnosis With Bidirectional Recurrent Neural Networks: A Novel Approach for Histopathological Image Multi-Classification. IEEE Access 2025, 13, 41682–41707.

- Sharma, S.; Mehra, R. Conventional Machine Learning and Deep Learning Approach for Multi-Classification of Breast Cancer Histopathology Images—A Comparative Insight. J. Digit. Imaging 2020, 33, 632–654.

- Hayat, M.; Aramvith, S.; Bhattacharjee, S.; Ahmad, N. Attention GhostUNet++: Enhanced Segmentation of Adipose Tissue and Liver in CT Images. arXiv 2025, arXiv:2504.11491.

- Hayat, M.; Gupta, M.; Suanpang, P.; Nanthaamornphong, A. Super-Resolution Methods for Endoscopic Imaging: A Review. In Proceedings of the 2024 12th International Conference on Internet of Everything, Microwave, Embedded, Communication and Networks (IEMECON), Jaipur, India, 24–26 October 2024; pp. 1–6.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).