Bangla Speech Emotion Recognition Using Deep Learning-Based Ensemble Learning and Feature Fusion

Abstract

1. Introduction

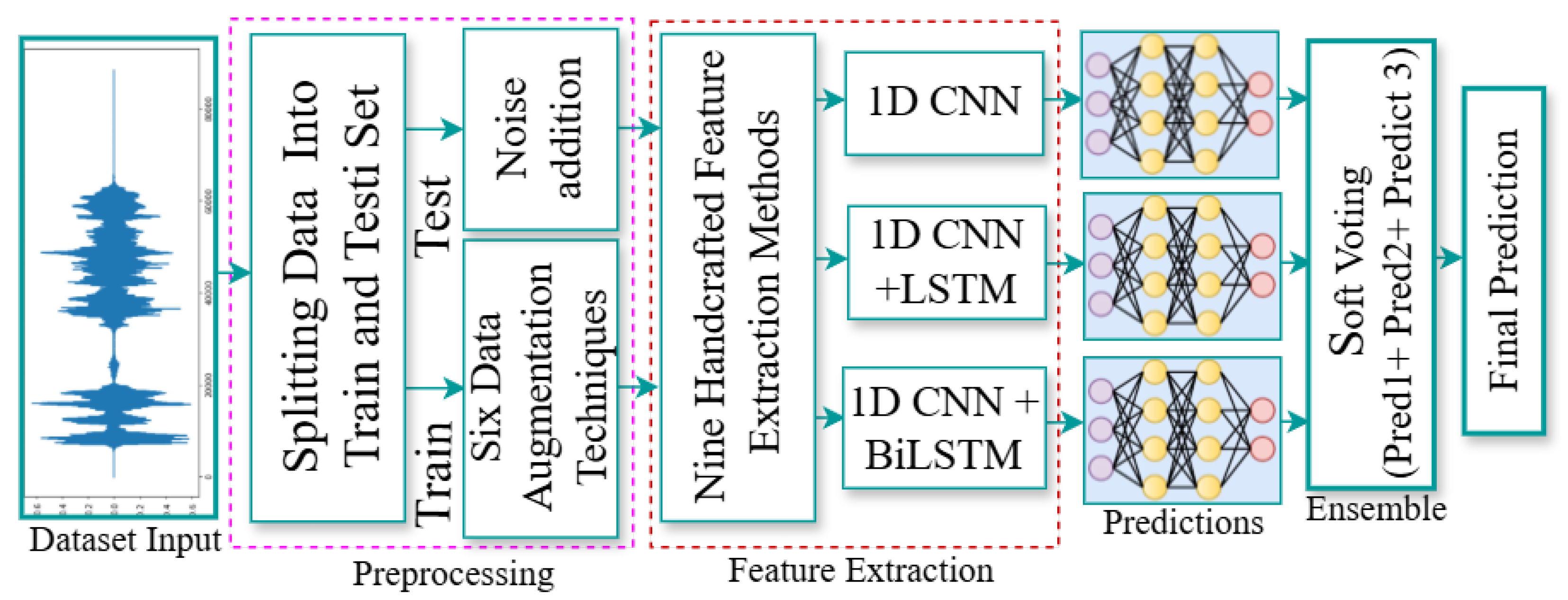

- Fusion of Handcrafted and Deep Learning Features: We combine handcrafted features with deep learning-derived representations to capture both explicit speech characteristics and complex emotional patterns. This fusion enhances the model’s accuracy and robustness, improving generalization across different emotional expressions and speaker variations.

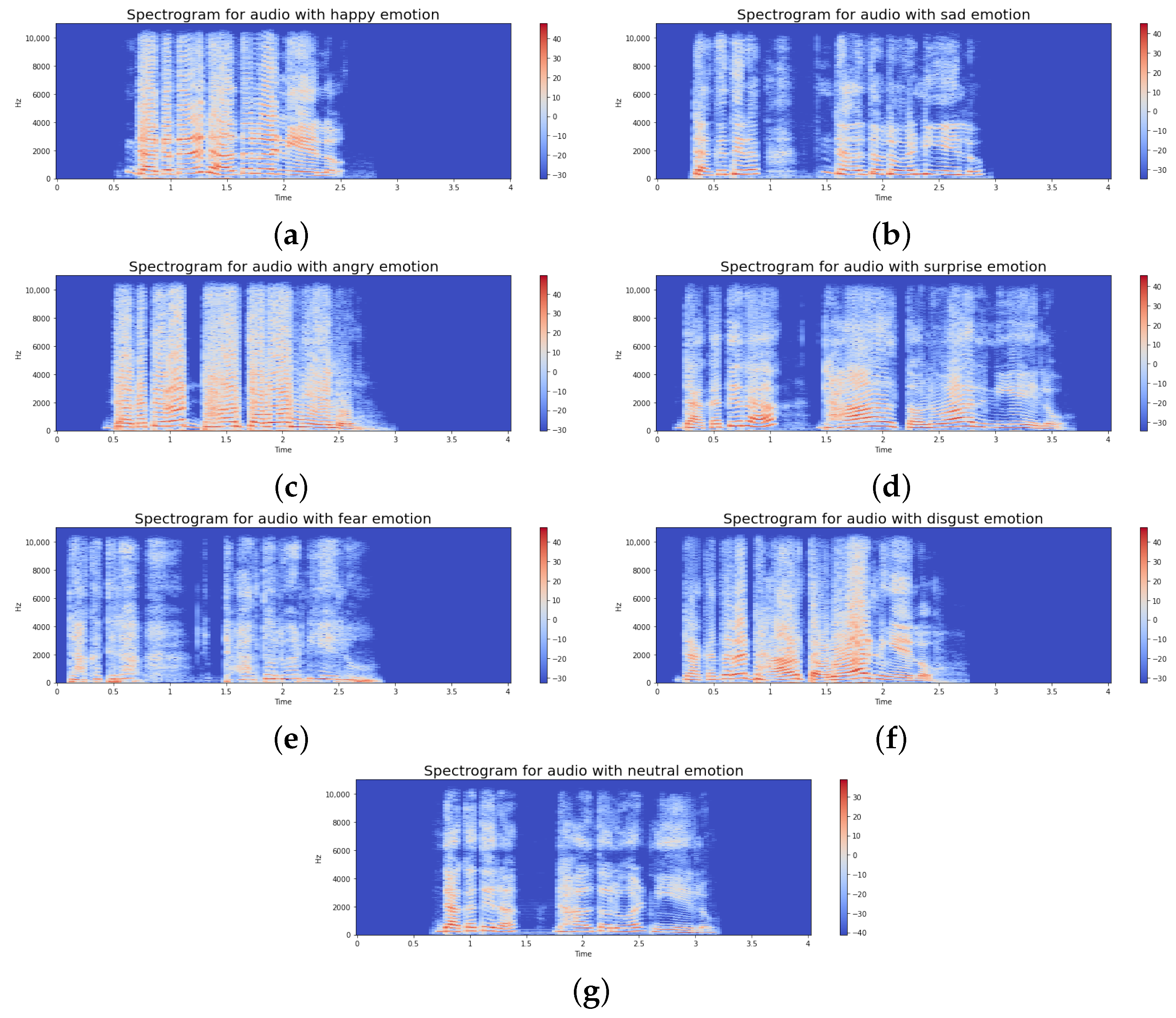

- Handcrafted Features: We extract features such as Zero-Crossing Rate (ZCR), Mel-Frequency Cepstral Coefficients (MFCCs), spectral contrast, and the Mel-spectrogram, which describe key speech characteristics such as pitch, tone, and energy fluctuations. Although the model takes 1D feature vectors as input, several of these features (MFCCs, Mel-spectrograms, and chromagrams) are derived from time–frequency representations of the speech signal that can also be rendered as images. Our approach is therefore conceptually aligned with image-based classification pipelines that process visual patterns extracted from audio signals. These features carry information about emotional content and help the model distinguish subtle emotional variations.

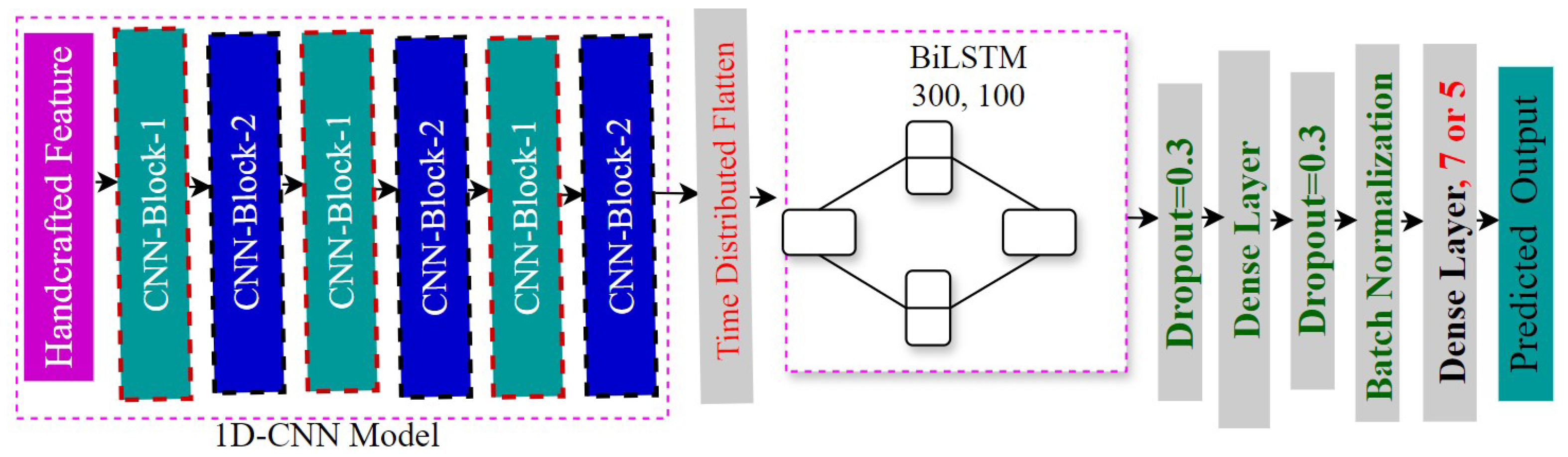

- Multi-Stream Deep Learning Architecture: Our model employs three streams: 1D CNNs, 1D CNNs with Long Short-Term Memory (LSTM), and 1D CNNs with bidirectional LSTM (Bi-LSTM). Together they capture both local and global patterns in speech: the convolutional layers pick up local patterns in the feature vector, while the LSTM and Bi-LSTM layers model longer-range dependencies across the speech sequence.

- Ensemble Learning with Soft Voting: We combine predictions from the three streams using an ensemble learning technique with soft voting, improving emotion classification by leveraging the strengths of each model.

- Improved Performance and Generalization: Data augmentation techniques such as noise addition, pitch modification, and time stretching enhance the model’s robustness and generalization, addressing challenges like speaker dependency and variability in emotional expressions. Our approach achieves accuracies of 92.90%, 85.20%, 90.63%, 67.71%, and 69.25% on the SUBESCO, BanglaSER, merged SUBESCO and BanglaSER, RAVDESS, and EMODB datasets, respectively, with its strongest results on the Bangla corpora.
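The soft-voting step described in the contributions above can be illustrated with a minimal NumPy sketch. It assumes each stream outputs a matrix of class probabilities (for example, softmax outputs); the function name `soft_vote`, the equal default weights, and the probability values are hypothetical and are not taken from the paper.

```python
import numpy as np

def soft_vote(prob_list, weights=None):
    """Average per-model class probabilities and return the winning class per sample."""
    probs = np.stack(prob_list, axis=0)          # (n_models, n_samples, n_classes)
    if weights is None:
        weights = np.ones(len(prob_list))        # assumption: equal weight per stream
    weights = np.asarray(weights, dtype=float)
    weights = weights / weights.sum()
    avg = np.tensordot(weights, probs, axes=1)   # (n_samples, n_classes)
    return avg, avg.argmax(axis=1)

# Hypothetical probabilities from three streams for two utterances and three classes
p_cnn = np.array([[0.6, 0.3, 0.1], [0.2, 0.5, 0.3]])
p_lstm = np.array([[0.4, 0.5, 0.1], [0.1, 0.3, 0.6]])
p_bilstm = np.array([[0.5, 0.4, 0.1], [0.2, 0.2, 0.6]])

avg, labels = soft_vote([p_cnn, p_lstm, p_bilstm])
print(labels)  # [0 2]
```

For the second utterance, the first stream alone would choose class 1, but the averaged probabilities favor class 2. This is the sense in which soft voting lets confident streams outweigh a single dissenting one.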

2. Related Works

3. Datasets

3.1. SUBESCO Dataset

3.2. BanglaSER Dataset

3.3. SUBESCO and BanglaSER Merged Dataset

3.4. RAVDESS Dataset

3.5. EMODB Dataset

4. Materials and Methods

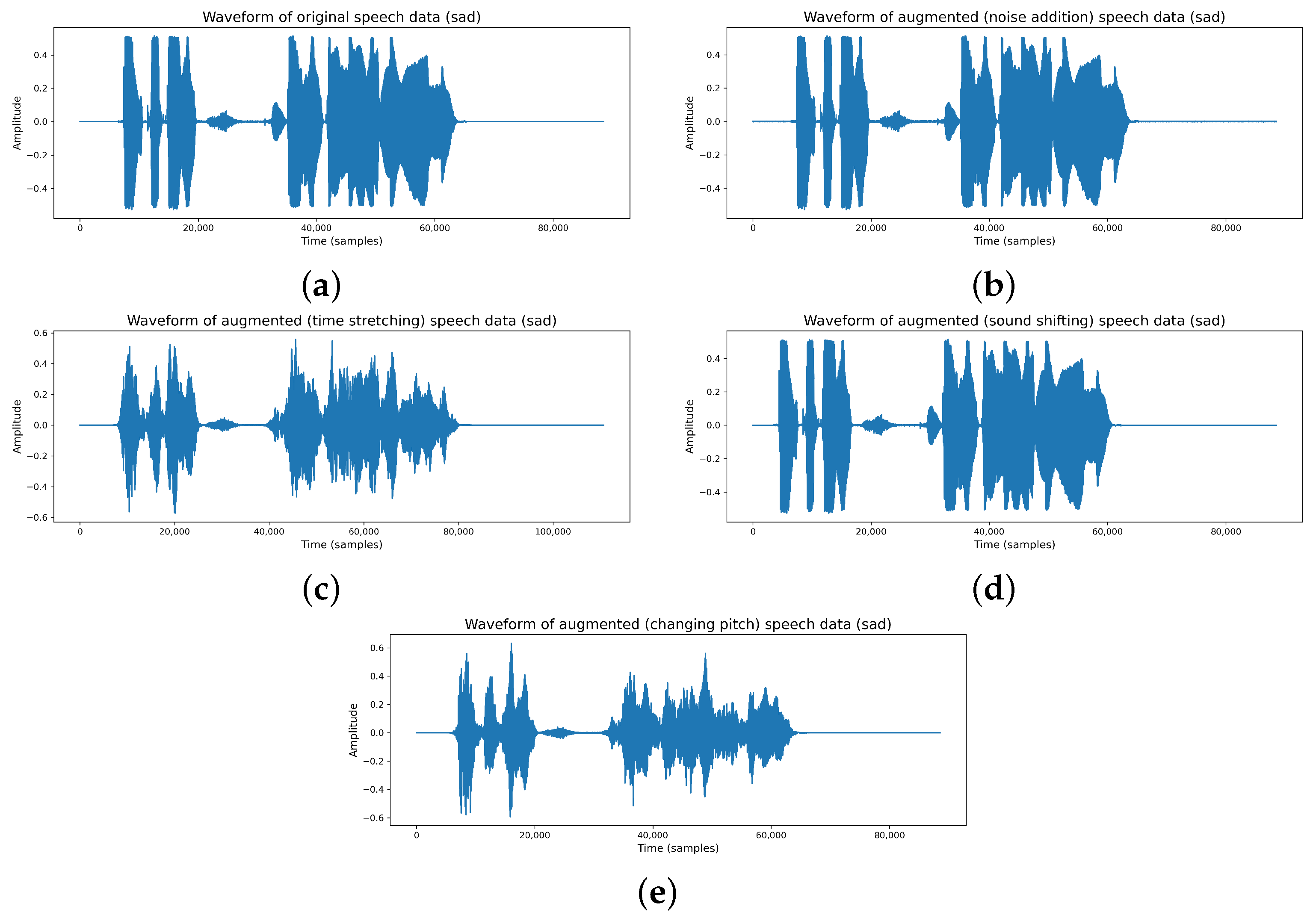

4.1. Data Augmentation

4.2. Feature Extraction

4.3. Deep Learning Model

4.3.1. 1D-CNN Approach

4.3.2. Integration of 1D-CNN and LSTM Approach

4.3.3. Integration of 1D-CNN and Bi-LSTM Approach

4.4. Soft Voting Ensemble Learning

5. Results

5.1. Ablation Study

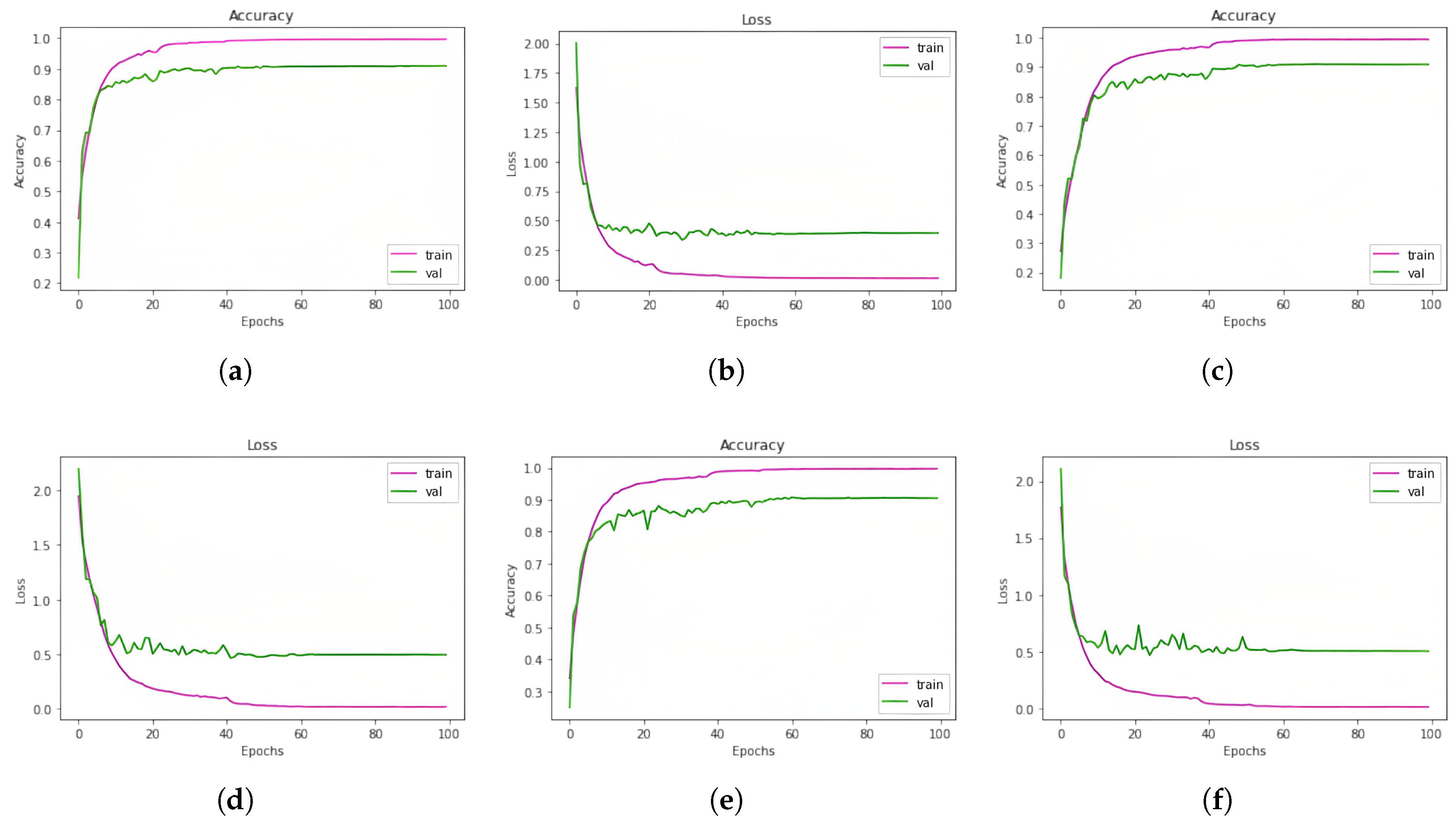

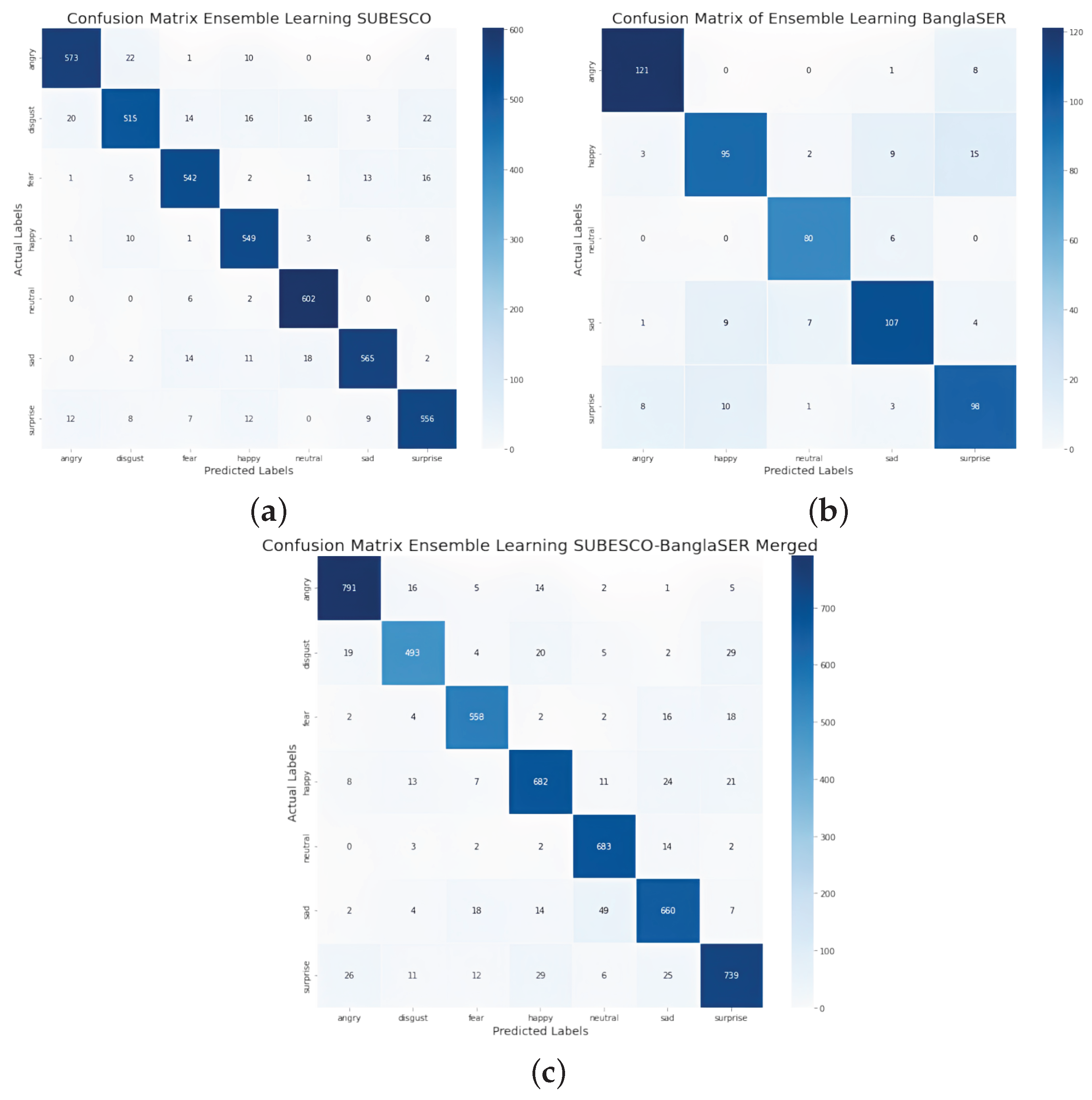

5.2. Outcomes of the Models for SUBESCO Dataset

5.3. Outcomes of the Models for BanglaSER Dataset

5.4. Outcomes of the Models for SUBESCO and BanglaSER Merged Dataset

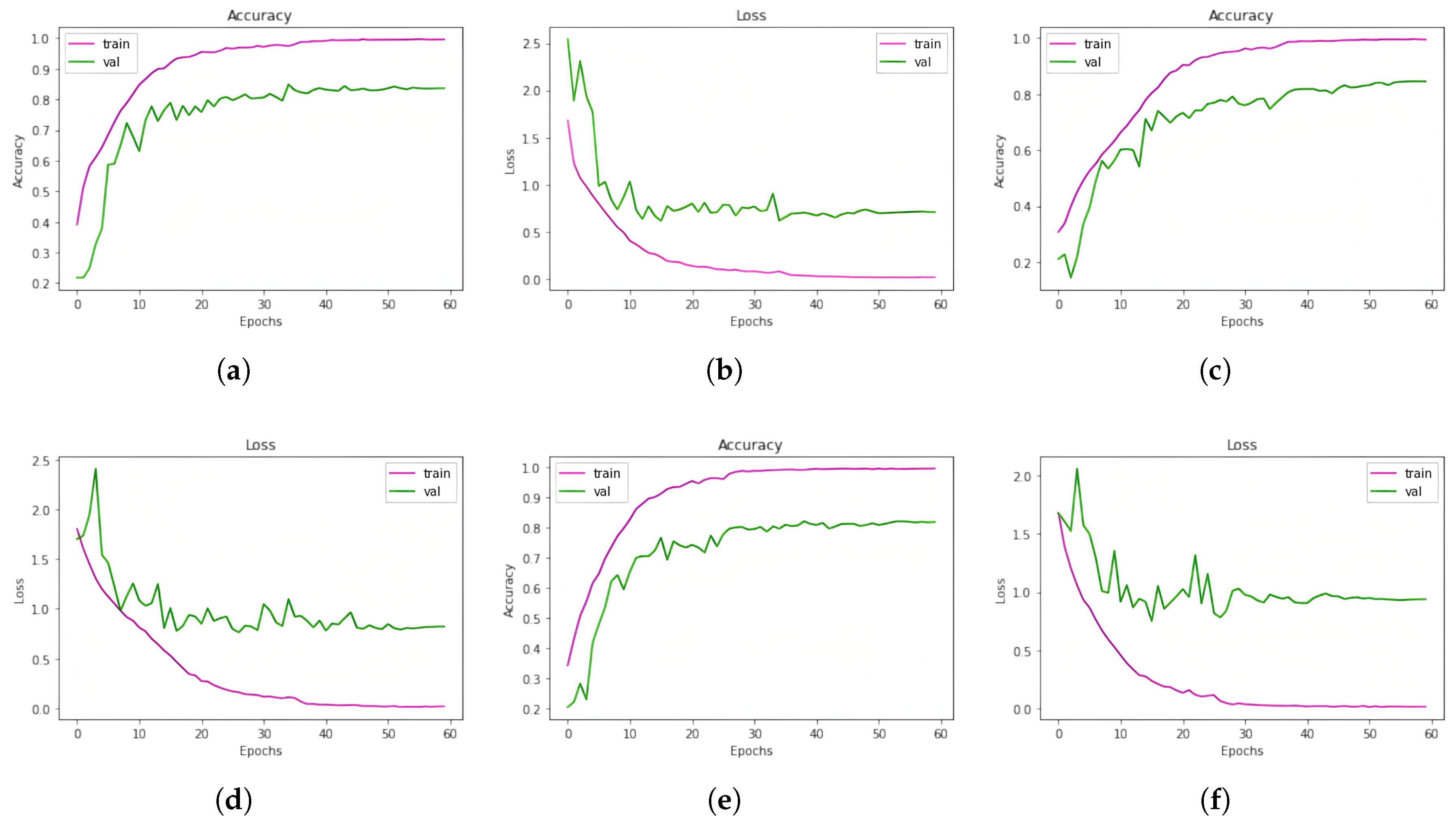

5.5. Outcomes of the Models for RAVDESS and EMODB Datasets

5.6. State of the Art Comparison

5.7. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| PBCC | Phase-Based Cepstral Coefficients |
| DTW | Dynamic Time Warping |
| RMS | Root Mean Square |
| MFCCs | Mel-Frequency Cepstral Coefficients |

References

1. Bashari Rad, B.; Moradhaseli, M. Speech emotion recognition methods: A literature review. AIP Conf. Proc. 2017, 1891, 020105.

2. Ahlam Hashem, M.A.; Alghamdi, M. Speech emotion recognition approaches: A systematic review. Speech Commun. 2023, 154, 102974.

3. Muntaqim, M.Z.; Smrity, T.A.; Miah, A.S.M.; Kafi, H.M.; Tamanna, T.; Farid, F.A.; Rahim, M.A.; Karim, H.A.; Mansor, S. Eye Disease Detection Enhancement Using a Multi-Stage Deep Learning Approach. IEEE Access 2024, 12, 191393–191407.

4. Hossain, M.M.; Chowdhury, Z.R.; Akib, S.M.R.H.; Ahmed, M.S.; Hossain, M.M.; Miah, A.S.M. Crime Text Classification and Drug Modeling from Bengali News Articles: A Transformer Network-Based Deep Learning Approach. In Proceedings of the 2023 26th International Conference on Computer and Information Technology (ICCIT), Cox’s Bazar, Bangladesh, 13–15 December 2023; pp. 1–6.

5. Rahim, M.A.; Farid, F.A.; Miah, A.S.M.; Puza, A.K.; Alam, M.N.; Hossain, M.N.; Karim, H.A. An Enhanced Hybrid Model Based on CNN and BiLSTM for Identifying Individuals via Handwriting Analysis. CMES-Comput. Model. Eng. Sci. 2024, 140, 1690–1710.

6. Khalil, R.A.; Jones, E.; Babar, M.I.; Jan, T.; Zafar, M.H.; Alhussain, T. Speech emotion recognition using deep learning techniques: A review. IEEE Access 2019, 7, 117327–117345.

7. Saad, F.; Mahmud, H.; Shaheen, M.; Hasan, M.K.; Farastu, P. Is Speech Emotion Recognition Language-Independent? Analysis of English and Bangla Languages using Language-Independent Vocal Features. arXiv 2021, arXiv:2111.10776.

8. Chakraborty, C.; Dash, T.K.; Panda, G.; Solanki, S.S. Phase-based Cepstral features for Automatic Speech Emotion Recognition of Low Resource Indian languages. In Transactions on Asian and Low-Resource Language Information Processing; Association for Computing Machinery: New York, NY, USA, 2022.

9. Ma, E. Data Augmentation for Audio. Medium. 2019. Available online: https://medium.com/@makcedward/data-augmentation-for-audio-76912b01fdf6 (accessed on 8 March 2023).

10. Rintala, J. Speech Emotion Recognition from Raw Audio using Deep Learning; School of Electrical Engineering and Computer Science Royal Institute of Technology (KTH): Stockholm, Sweden, 2020.

11. Tusher, M.M.R.; Al Farid, F.; Kafi, H.M.; Miah, A.S.M.; Rinky, S.R.; Islam, M.; Rahim, M.A.; Mansor, S.; Karim, H.A. BanTrafficNet: Bangladeshi Traffic Sign Recognition Using A Lightweight Deep Learning Approach. Comput. Vis. Pattern Recognit. 2024; preprint.

12. Siddiqua, A.; Hasan, R.; Rahman, A.; Miah, A.S.M. Computer-Aided Osteoporosis Diagnosis Using Transfer Learning with Enhanced Features from Stacked Deep Learning Modules. arXiv 2024, arXiv:2412.09330.

13. Tusher, M.M.R.; Farid, F.A.; Al-Hasan, M.; Miah, A.S.M.; Rinky, S.R.; Jim, M.H.; Sarina, M.; Abdur, R.M.; Hezerul Abdul, K. Development of a Lightweight Model for Handwritten Dataset Recognition: Bangladeshi City Names in Bangla Script. Comput. Mater. Contin. 2024, 80, 2633–2656.

14. Sultana, S.; Iqbal, M.Z.; Selim, M.R.; Rashid, M.M.; Rahman, M.S. Bangla speech emotion recognition and cross-lingual study using deep CNN and BLSTM networks. IEEE Access 2021, 10, 564–578.

15. Rahman, M.M.; Dipta, D.R.; Hasan, M.M. Dynamic time warping assisted SVM classifier for Bangla speech recognition. In Proceedings of the 2018 International Conference on Computer, Communication, Chemical, Material and Electronic Engineering (IC4ME2), Rajshahi, Bangladesh, 8–9 February 2018; pp. 1–6.

16. Issa, D.; Demirci, M.F.; Yazici, A. Speech emotion recognition with deep convolutional neural networks. Biomed. Signal Process. Control 2020, 59, 101894.

17. Zhao, J.; Mao, X.; Chen, L. Speech emotion recognition using deep 1D & 2D CNN LSTM networks. Biomed. Signal Process. Control 2019, 47, 312–323.

18. Mustaqeem; Kwon, S. 1D-CNN: Speech emotion recognition system using a stacked network with dilated CNN features. CMC-Comput. Mater. Contin. 2021, 67, 4039–4059.

19. Badshah, A.M.; Ahmad, J.; Rahim, N.; Baik, S.W. Speech emotion recognition from spectrograms with deep convolutional neural network. In Proceedings of the 2017 International Conference on Platform Technology and Service (PlatCon), Busan, Republic of Korea, 13–15 February 2017; pp. 1–5.

20. Etienne, C.; Fidanza, G.; Petrovskii, A.; Devillers, L.; Schmauch, B. CNN+LSTM architecture for speech emotion recognition with data augmentation. arXiv 2018, arXiv:1802.05630.

21. Ai, X.; Sheng, V.S.; Fang, W.; Ling, C.X.; Li, C. Ensemble learning with attention-integrated convolutional recurrent neural network for imbalanced speech emotion recognition. IEEE Access 2020, 8, 199909–199919.

22. Kwon, S. A CNN-assisted enhanced audio signal processing for speech emotion recognition. Sensors 2019, 20, 183.

23. Zheng, W.; Yu, J.; Zou, Y. An experimental study of speech emotion recognition based on deep convolutional neural networks. In Proceedings of the 2015 International Conference on Affective Computing and Intelligent Interaction (ACII), Xi’an, China, 21–24 September 2015; pp. 827–831.

24. Zehra, W.; Javed, A.R.; Jalil, Z.; Khan, H.U.; Gadekallu, T.R. Cross corpus multi-lingual speech emotion recognition using ensemble learning. Complex Intell. Syst. 2021, 7, 1845–1854.

25. Basha, S.A.K.; Vincent, P.D.R.; Mohammad, S.I.; Vasudevan, A.; Soon, E.E.H.; Shambour, Q.; Alshurideh, M.T. Exploring Deep Learning Methods for Audio Speech Emotion Detection: An Ensemble MFCCs, CNNs and LSTM. Appl. Math 2025, 19, 75–85.

26. Zhao, S.; Jia, G.; Yang, J.; Ding, G.; Keutzer, K. Emotion Recognition From Multiple Modalities: Fundamentals and methodologies. IEEE Signal Process. Mag. 2021, 38, 59–73.

27. Fu, B.; Gu, C.; Fu, M.; Xia, Y.; Liu, Y. A novel feature fusion network for multimodal emotion recognition from EEG and eye movement signals. Front. Neurosci. 2023, 17, 1234162.

28. Sultana, S.; Rahman, M.S.; Selim, M.R.; Iqbal, M.Z. SUST Bangla Emotional Speech Corpus (SUBESCO): An audio-only emotional speech corpus for Bangla. PLoS ONE 2021, 16, e0250173.

29. Das, R.K.; Islam, N.; Ahmed, M.R.; Islam, S.; Shatabda, S.; Islam, A.M. BanglaSER: A speech emotion recognition dataset for the Bangla language. Data Brief 2022, 42, 108091.

30. Livingstone, S.R.; Russo, F.A. The Ryerson Audio-Visual Database of Emotional Speech and Song (RAVDESS): A dynamic, multimodal set of facial and vocal expressions in North American English. PLoS ONE 2018, 13, e0196391.

31. Burkhardt, F.; Paeschke, A.; Kienast, M.; Sendlmeier, W.F.; Weiss, B. Berlin EmoDB (1.3.0) [Data Set]. Zenodo. 2022. Available online: https://zenodo.org/records/7447302 (accessed on 5 December 2022).

32. Paiva, L.F.; Alfaro-Espinoza, E.; Almeida, V.M.; Felix, L.B.; Neves, R.V.A. A Survey of Data Augmentation for Audio Classification. In Proceedings of the XXIV Congresso Brasileiro de Automática (CBA), 2022. Available online: https://sba.org.br/open_journal_systems/index.php/cba/article/view/3469 (accessed on 5 December 2022).

33. McFee, B.; Raffel, C.; Liang, D.; Ellis, D.P.; Battenberg, E.; Nieto, O.; Dieleman, S.; Tokunaga, H.; McQuin, P.; NumPy; et al. librosa/librosa: 0.10.1. Available online: https://zenodo.org/records/8252662 (accessed on 4 January 2024).

34. McFee, B.; Raffel, C.; Liang, D.; Ellis, D.P.; McVicar, M.; Battenberg, E.; Nieto, O. librosa: Audio and music signal analysis in python. In Proceedings of the 14th Python in Science Conference, Austin, TX, USA, 6–12 July 2015; Volume 8, pp. 18–25.

35. Harris, C.R.; Millman, K.J.; van der Walt, S.J.; Gommers, R.; Virtanen, P.; Cournapeau, D.; Wieser, E.; Taylor, J.; Berg, S.; Smith, N.J.; et al. Array programming with NumPy. Nature 2020, 585, 357–362.

36. Galic, J.; Grozdić, Đ. Exploring the Impact of Data Augmentation Techniques on Automatic Speech Recognition System Development: A Comparative Study. Adv. Electr. Comput. Eng. 2023, 23, 3–12.

37. Titeux, N. Everything You Need to Know About Pitch Shifting; Nicolas Titeux: Luxembourg, 2023; Available online: https://www.nicolastiteux.com/en/blog/everything-you-need-to-know-about-pitch-shifting/ (accessed on 5 March 2023).

38. Jordal, I. Gain; Gain - Audiomentations Documentation. 2018. Available online: https://iver56.github.io/audiomentations/waveform_transforms/gain/ (accessed on 4 January 2023).

39. Kedem, B. Spectral analysis and discrimination by zero-crossings. Proc. IEEE 1986, 74, 1477–1493.

40. Rezapour Mashhadi, M.M.; Osei-Bonsu, K. Speech Emotion Recognition Using Machine Learning Techniques: Feature Extraction and Comparison of Convolutional Neural Network and Random Forest. PLoS ONE 2023, 18, e0291500.

41. Shah, A.; Kattel, M.; Nepal, A.; Shrestha, D. Chroma Feature Extraction Using Fourier Transform. In Proceedings of the Conference at Kathmandu University, Nepal, January 2019. Available online: https://www.researchgate.net/publication/330796993_Chroma_Feature_Extraction (accessed on 5 January 2025).

42. Sharma, G.; Umapathy, K.; Krishnan, S. Trends in Audio Signal Feature Extraction Methods. Appl. Acoust. 2020, 158, 107020.

43. Kumar, S.; Thiruvenkadam, S. An Analysis of the Impact of Spectral Contrast Feature in Speech Emotion Recognition. Int. J. Recent Contrib. Eng. Sci. IT (iJES) 2021, 9, 87–95.

44. West, K.; Cox, S. Finding An Optimal Segmentation for Audio Genre Classification. In Proceedings of the 6th International Conference on Music Information Retrieval, ISMIR 2005, London, UK, 11–15 September 2005; pp. 680–685.

45. Peeters, G. A Large Set of Audio Features for Sound Description (Similarity and Classification) in the CUIDADO Project; Ircam: Paris, France, 2004.

46. Panda, R.; Malheiro, R.; Paiva, R.P. Audio Features for Music Emotion Recognition: A Survey. IEEE Trans. Affect. Comput. 2023, 14, 68–88.

47. Roberts, L. Understanding the Mel Spectrogram. Medium. March 2020. Available online: https://medium.com/analytics-vidhya/understanding-the-mel-spectrogram-fca2afa2ce53 (accessed on 5 January 2023).

48. Wu, J. Introduction to convolutional neural networks. Natl. Key Lab Nov. Softw. Technol. Nanjing Univ. China 2017, 5, 495.

49. Alzubaidi, L.; Zhang, J.; Humaidi, A.J.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.A.; Al-Amidie, M.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 53.

50. Zafar, A.; Aamir, M.; Mohd Nawi, N.; Arshad, A.; Riaz, S.; Alruban, A.; Dutta, A.; Alaybani, S. A Comparison of Pooling Methods for Convolutional Neural Networks. Appl. Sci. 2022, 12, 8643.

51. Ioffe, S.; Szegedy, C. Batch Normalization: Accelerating deep network training by reducing internal covariate shift. arXiv 2015, arXiv:1502.03167.

52. Srivastava, N.; Hinton, G.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.

53. GeeksforGeeks. What Is a Neural Network Flatten Layer? GeeksforGeeks: Noida, India, 2024; Available online: https://www.geeksforgeeks.org/what-is-a-neural-network-flatten-layer/ (accessed on 10 August 2024).

54. Nwankpa, C.; Ijomah, W.; Gachagan, A.; Marshall, S. Activation Functions: Comparison of trends in Practice and Research for Deep Learning. arXiv 2018, arXiv:1811.03378.

55. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2017, arXiv:1412.6980.

56. Mohammed, A.; Kora, R. A Comprehensive Review on Ensemble Deep Learning: Opportunities and Challenges. J. King Saud Univ. Comput. Inf. Sci. 2023, 35, 757–774.

| Class Name | Total Count | Percentage (%) |
|---|---|---|
| Happy | 1000 | 14.29 |
| Sad | 1000 | 14.29 |
| Angry | 1000 | 14.29 |
| Surprise | 1000 | 14.29 |
| Fear | 1000 | 14.29 |
| Disgust | 1000 | 14.29 |
| Neutral | 1000 | 14.29 |

| Class Name | Total Count | Percentage (%) |
|---|---|---|
| Happy | 306 | 20.87 |
| Sad | 306 | 20.87 |
| Angry | 306 | 20.87 |
| Surprise | 306 | 20.87 |
| Neutral | 243 | 16.53 |

| Class Name | Total Count | Percentage (%) |
|---|---|---|
| Happy | 1306 | 15.41 |
| Sad | 1306 | 15.41 |
| Angry | 1306 | 15.41 |
| Surprise | 1306 | 15.41 |
| Fear | 1000 | 11.80 |
| Disgust | 1000 | 11.80 |
| Neutral | 1243 | 14.67 |

| Class Name | Total Count | Percentage (%) |
|---|---|---|
| Happy | 192 | 13.33 |
| Sad | 192 | 13.33 |
| Angry | 192 | 13.33 |
| Surprise | 192 | 13.33 |
| Fear | 192 | 13.33 |
| Disgust | 192 | 13.33 |
| Neutral | 96 | 6.67 |

| Class Name | Total Count | Percentage (%) |
|---|---|---|
| Happy | 71 | 13.27 |
| Sad | 62 | 11.59 |
| Angry | 127 | 23.74 |
| Boredom | 81 | 15.14 |
| Fear | 69 | 12.90 |
| Disgust | 46 | 8.60 |
| Neutral | 79 | 14.77 |

| Dataset Name | Total Samples | Train/Test Ratio | Train Samples | Test Samples |
|---|---|---|---|---|
| SUBESCO | 7000 | 70/30 | 4900 | 2100 |
| BanglaSER | 1467 | 80/20 | 1173 | 294 |
| SUBESCO and BanglaSER merged | 8467 | 70/30 | 5926 | 2541 |
| RAVDESS (Audio-only) | 1248 | 70/30 | 1008 | 432 |
| EMODB | 535 | 80/20 | 428 | 107 |

| Augmentation Name | Description |
|---|---|
| Polarity Inversion | Reverses the phase of the audio signal, so that combining the inverted signal with the original cancels it and results in silence [36]. It can simulate variations in recordings caused by microphone polarity errors, which may slightly enhance the generalization of the model. We applied polarity inversion by multiplying the signal by −1. |
| Noise Addition | Adds random white noise to the audio data to enhance its variability and robustness [9]. We added white Gaussian noise at 10 dB and 20 dB SNR using numpy.random.normal() with a noise factor of 0.005. |
| Time Stretching | Alters the speed of the audio by stretching or compressing the time series, increasing or decreasing the sound speed [9]. We applied time stretching with a rate (stretch factor) of 0.8 using the librosa.effects.time_stretch() function. |
| Pitch Change | Changes the pitch of the audio signal by adjusting the frequency of sound components, typically by resampling [37]. We shifted our audio data by ±2 semitones, with a pitch factor (steps to shift) of 0.8, using the librosa.effects.pitch_shift() function. |
| Sound Shifting | Randomly shifts the audio by a predefined amount of time, introducing silence at the shifted location if necessary [9]. We shifted the audio by ±5 ms using numpy.roll(), with shifts drawn from random.uniform(−5, 5). |
| Random Gain | Alters the loudness of the audio signal using a volume factor, making it louder or softer [38]. We randomly adjusted the volume between ×2 and ×4 using random.uniform(). |
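Four of the augmentations in the table need only NumPy and can be sketched as follows. This is an illustrative sketch under stated assumptions, not the authors' code: the function names are hypothetical, the noise is scaled to a target SNR (one possible reading of the noise-addition row), and the test tone stands in for a speech recording. Time stretching and pitch change are omitted because they rely on librosa.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed so the sketch is repeatable

def invert_polarity(y):
    """Multiply the waveform by -1."""
    return -y

def add_noise_snr(y, snr_db, rng):
    """Add white Gaussian noise scaled so the result has roughly the requested SNR."""
    signal_power = np.mean(y ** 2)
    noise_power = signal_power / (10 ** (snr_db / 10))
    noise = rng.normal(0.0, np.sqrt(noise_power), size=y.shape)
    return y + noise

def shift_ms(y, sr, max_ms, rng):
    """Circularly shift by a random offset in [-max_ms, max_ms] milliseconds.
    Note: np.roll wraps samples around rather than inserting silence."""
    shift = int(round(rng.uniform(-max_ms, max_ms) * sr / 1000))
    return np.roll(y, shift)

def random_gain(y, low, high, rng):
    """Scale the waveform by a random factor drawn from [low, high)."""
    return y * rng.uniform(low, high)

# Hypothetical input: one second of a 220 Hz tone at 16 kHz
sr = 16000
t = np.arange(sr) / sr
y = 0.5 * np.sin(2 * np.pi * 220 * t)

augmented = {
    "polarity": invert_polarity(y),
    "noise_10db": add_noise_snr(y, 10.0, rng),
    "noise_20db": add_noise_snr(y, 20.0, rng),
    "shift": shift_ms(y, sr, 5.0, rng),
    "gain": random_gain(y, 2.0, 4.0, rng),
}
```

Each function returns a new array of the same length, so the augmented copies can be passed through the same feature-extraction step as the original recording.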

| Feature Name | Description and Advantage |
|---|---|
| Zero-Crossing Rate (ZCR) | Counts the number of times the audio signal crosses the horizontal axis. It helps analyze signal smoothness and is effective for distinguishing voiced from unvoiced speech [39,40]. |
| Chromagram | Represents energy distribution over frequency bands corresponding to pitch classes in music. It captures harmonic and melodic features of the signal, useful for tonal analysis [41]. |
| Spectral Centroid | Indicates the “center of mass” of a sound’s frequencies, providing insights into the brightness of the sound. It is useful for identifying timbral characteristics [42]. |
| Spectral Roll-off | Measures the frequency below which a certain percentage of the spectral energy is contained. This feature helps in distinguishing harmonic from non-harmonic content [42]. |
| Spectral Contrast | Measures the difference in energy between peaks and valleys in the spectrum, capturing timbral texture and distinguishing between different sound sources [43,44]. |
| Spectral Flatness | Quantifies how noise-like a sound is. A high spectral flatness value indicates noise-like sounds, while a low value indicates tonal sounds, useful for identifying the type of sound [45]. |
| Mel-Frequency Cepstral Coefficients (MFCCs) | Captures spectral variations in speech, focusing on features most relevant to human hearing. It is widely used in speech recognition and enhances emotion recognition capabilities [42,45]. |
| Root Mean Square (RMS) Energy | Measures the loudness of the audio signal, offering insights into the energy of the sound, which is crucial for understanding the emotional intensity [46]. |
| Mel-Spectrogram | Converts the frequencies of a spectrogram to the mel scale, representing the energy distribution in a perceptually relevant way, commonly used in speech and audio processing [47]. |
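To make the definitions in the table concrete, the sketch below computes four of the simpler features with plain NumPy and concatenates them into one 1D vector. It is an illustration under assumptions: the paper cites librosa for feature extraction, whereas this sketch computes each feature over the whole signal rather than frame by frame, and all function names are hypothetical.

```python
import numpy as np

def zero_crossing_rate(y):
    """Fraction of adjacent sample pairs whose signs differ."""
    signs = np.signbit(y)
    return np.mean(signs[1:] != signs[:-1])

def rms_energy(y):
    """Root mean square amplitude of the signal."""
    return np.sqrt(np.mean(y ** 2))

def spectral_centroid(y, sr):
    """Magnitude-weighted mean frequency ("center of mass" of the spectrum), in Hz."""
    mag = np.abs(np.fft.rfft(y))
    freqs = np.fft.rfftfreq(len(y), d=1.0 / sr)
    return np.sum(freqs * mag) / (np.sum(mag) + 1e-12)

def spectral_flatness(y):
    """Geometric mean over arithmetic mean of the power spectrum (near 0 = tonal)."""
    power = np.abs(np.fft.rfft(y)) ** 2 + 1e-12
    return np.exp(np.mean(np.log(power))) / np.mean(power)

def feature_vector(y, sr):
    """Concatenate the scalar features into one 1D vector."""
    return np.array([
        zero_crossing_rate(y),
        rms_energy(y),
        spectral_centroid(y, sr),
        spectral_flatness(y),
    ])

# Hypothetical input: one second of a 440 Hz tone at 16 kHz
sr = 16000
t = np.arange(sr) / sr
tone = 0.5 * np.sin(2 * np.pi * 440 * t)
vec = feature_vector(tone, sr)
```

For the pure tone, the centroid sits at about 440 Hz and the flatness is close to zero, which matches the table's description of tonal sounds. A frame-wise version would produce one such vector per frame, which could then be averaged or stacked before being fed to the model.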

| Dataset | 1D CNN | 1D CNN LSTM | 1D CNN BiLSTM | Ensemble Learning |
|---|---|---|---|---|
| SUBESCO | 90.93% | 90.98% | 90.50% | 92.90% |
| BanglaSER | 83.67% | 84.52% | 81.97% | 85.20% |
| SUBESCO + BanglaSER | 88.92% | 88.61% | 87.56% | 90.63% |
| RAVDESS | 65.63% | 64.93% | 60.76% | 67.71% |
| EMODB | 69.57% | 67.39% | 65.84% | 69.25% |

| Model | Accuracy |
|---|---|
| 1D CNN | 90.93% |
| 1D CNN LSTM | 90.98% |
| 1D CNN BiLSTM | 90.50% |
| Ensemble Learning | 92.90% |

| Emotion | Accuracy (%) |
|---|---|
| Angry | 93.93 |
| Disgust | 84.98 |
| Fear | 93.61 |
| Happy | 94.98 |
| Neutral | 98.69 |
| Sad | 92.32 |
| Surprise | 92.05 |

| Class | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|
| Angry | 94.00 | 94.00 | 94.00 |
| Disgust | 92.00 | 85.00 | 88.00 |
| Fear | 93.00 | 93.00 | 93.00 |
| Happy | 91.00 | 95.00 | 93.00 |
| Neutral | 94.00 | 99.00 | 96.00 |
| Sad | 95.00 | 92.00 | 94.00 |
| Surprise | 91.00 | 92.00 | 92.00 |
| Macro Average | 93.00 | 93.00 | 93.00 |
| Weighted Average | 93.00 | 93.00 | 93.00 |
| Accuracy (%) = 93.00 | | | |
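The precision, recall, and F1 columns in a report like the one above are all derived from a confusion matrix. The sketch below shows the arithmetic on a small invented three-class matrix, with rows as true classes and columns as predicted classes; the function name and the counts are hypothetical and are not the paper's data. It assumes every class is both present and predicted at least once, so no division by zero occurs.

```python
import numpy as np

def per_class_report(cm):
    """Return per-class precision, recall, F1, and overall accuracy from a confusion matrix."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)                     # correctly classified samples per class
    precision = tp / cm.sum(axis=0)      # column sums: everything predicted as the class
    recall = tp / cm.sum(axis=1)         # row sums: everything truly in the class
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = tp.sum() / cm.sum()
    return precision, recall, f1, accuracy

# Hypothetical confusion matrix (rows = true class, columns = predicted class)
cm = np.array([
    [8, 2, 0],
    [1, 9, 0],
    [1, 0, 9],
])

precision, recall, f1, accuracy = per_class_report(cm)
macro_f1 = f1.mean()                                                # unweighted mean over classes
weighted_f1 = np.sum(f1 * cm.sum(axis=1)) / cm.sum()                # weighted by class support
```

The per-emotion accuracies listed in the preceding table sit very close to the recall values in this report (for example, 93.93 versus 94.00 for Angry), which suggests they were computed as the per-class recall shown here.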

| Model | Accuracy |
|---|---|
| 1D CNN | 83.67% |
| 1D CNN LSTM | 84.52% |
| 1D CNN BiLSTM | 81.97% |
| Ensemble Learning | 85.20% |

| Emotion | Accuracy (%) |
|---|---|
| Angry | 93.07 |
| Happy | 76.61 |
| Neutral | 93.02 |
| Sad | 83.59 |
| Surprise | 81.67 |

| Class | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|
| Angry | 91.00 | 93.00 | 92.00 |
| Happy | 83.00 | 77.00 | 80.00 |
| Neutral | 89.00 | 93.00 | 91.00 |
| Sad | 85.00 | 84.00 | 84.00 |
| Surprise | 78.00 | 82.00 | 80.00 |
| Macro Average | 85.00 | 85.00 | 85.00 |
| Weighted Average | 85.00 | 85.00 | 85.00 |
| Accuracy (%) = 85.00 | | | |

| Model | Accuracy |
|---|---|
| 1D CNN | 88.92% |
| 1D CNN LSTM | 88.61% |
| 1D CNN BiLSTM | 87.56% |
| Ensemble Learning | 90.63% |

| Emotion | Accuracy (%) |
|---|---|
| Angry | 94.84 |
| Disgust | 86.19 |
| Fear | 92.69 |
| Happy | 89.03 |
| Neutral | 96.74 |
| Sad | 87.53 |
| Surprise | 87.15 |

| Class | Precision (%) | Recall (%) | F1-Score (%) |
|---|---|---|---|
| Angry | 93.00 | 95.00 | 94.00 |
| Disgust | 91.00 | 86.00 | 88.00 |
| Fear | 92.00 | 93.00 | 92.00 |
| Happy | 89.00 | 89.00 | 89.00 |
| Neutral | 90.00 | 97.00 | 93.00 |
| Sad | 89.00 | 88.00 | 88.00 |
| Surprise | 90.00 | 87.00 | 89.00 |
| Macro Average | 91.00 | 91.00 | 91.00 |
| Weighted Average | 91.00 | 91.00 | 91.00 |
| Accuracy (%) = 91.00 | | | |

| Model | Accuracy |
|---|---|
| 1D CNN | 65.63% |
| 1D CNN LSTM | 64.93% |
| 1D CNN BiLSTM | 60.76% |
| Ensemble Learning | 67.71% |

| Model | Accuracy |
|---|---|
| 1D CNN | 69.57% |
| 1D CNN LSTM | 67.39% |
| 1D CNN BiLSTM | 65.84% |
| Ensemble Learning | 69.25% |

| Research | Features Used | Model | SUBESCO (Bangla) accuracy (%) | RAVDESS (American English) accuracy (%) | EMO-DB accuracy (%) | BanglaSER accuracy (%) | SUBESCO + BanglaSER accuracy (%) |
|---|---|---|---|---|---|---|---|
| Sultana et al. [14] | Mel-spectrogram | Deep CNN and BLSTM | 86.9 | 82.7 | | | |
| Rahman et al. (2018) [15] | MFCCs, MFCC derivatives | SVM with RBF, DTW | 86.08 (Bangla, 12 speakers) | - | | | |
| Chakraborty et al. (2022) [8] | PBCC | P Gradient Boosting Machine | 96 | 96 | | | |
| Issa et al. [16] | MFCCs, Mel-spectrogram, chromagram, spectral contrast feature, Tonnetz representation | 1D CNN | 64.3 (IEMOCAP, 4 classes) | 71.61 (8 classes) | 95.71 | | |
| Zhao et al. (2019) [17] | Log-mel spectrogram | 1D CNN LSTM, 2D CNN LSTM | 89.16 (IEMOCAP, dependent) | 52.14 (IEMOCAP, independent) | 95.33 (EMO-DB, dependent) | 95.89 (independent) | |
| Mustaqeem et al. [18] | Spectral analysis | 1D dilated CNN with BiGRU | 72.75 | 78.01 | 91.14 | - | - |
| Badshah et al. (2017) [19] | Spectrograms | CNN (3 convolutional, 3 FC layers) | 56 | | | | |
| Etienne et al. (2018) [20] | High-level features, log-mel spectrogram | CNN-LSTM (4 conv + 1 BLSTM layer) | 61.7 (unweighted), 64.5 (weighted) | | | | |
| Proposed | ZCR, chromagram, RMS, spectral centroid, spectral roll-off, spectral contrast, spectral flatness, Mel-spectrogram, and MFCCs | Ensemble of 1D CNN, 1D CNN LSTM, and 1D CNN BiLSTM | 92.90 | 67.71 | 69.25 | 85.20 | 90.63 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Shakil, M.S.A.; Farid, F.A.; Podder, N.K.; Iqbal, S.M.H.S.; Miah, A.S.M.; Rahim, M.A.; Karim, H.A. Bangla Speech Emotion Recognition Using Deep Learning-Based Ensemble Learning and Feature Fusion. J. Imaging 2025, 11, 273. https://doi.org/10.3390/jimaging11080273
