Enhancing YOLOv5 for Autonomous Driving: Efficient Attention-Based Object Detection on Edge Devices

Abstract

1. Introduction

- Proposition of an optimized lightweight object detection model that balances accuracy, computational efficiency, and deployment feasibility on edge devices.

- Improvement of YOLOv5 by integrating SE and ECA mechanisms into its architecture to enhance feature selection and detection precision without increasing computational overhead.

- Extensive performance evaluation on autonomous driving datasets, including the KITTI dataset for standard conditions and the BDD-100K dataset to assess model robustness in challenging scenarios.

- Benchmark analysis against state-of-the-art lightweight object detection models, demonstrating improved trade-offs between computational efficiency and performance.

2. Related Work

2.1. Lightweight Object Detection Models

2.2. Emerging Generative Approaches

2.3. Edge-Based and Energy-Efficient Object Detection Approaches

2.4. Object Detection Performance in Challenging Driving Environments

2.5. Small-Scale Object Detection in Autonomous Navigation

3. Materials and Methods

3.1. YOLOv5

3.2. Proposed Models

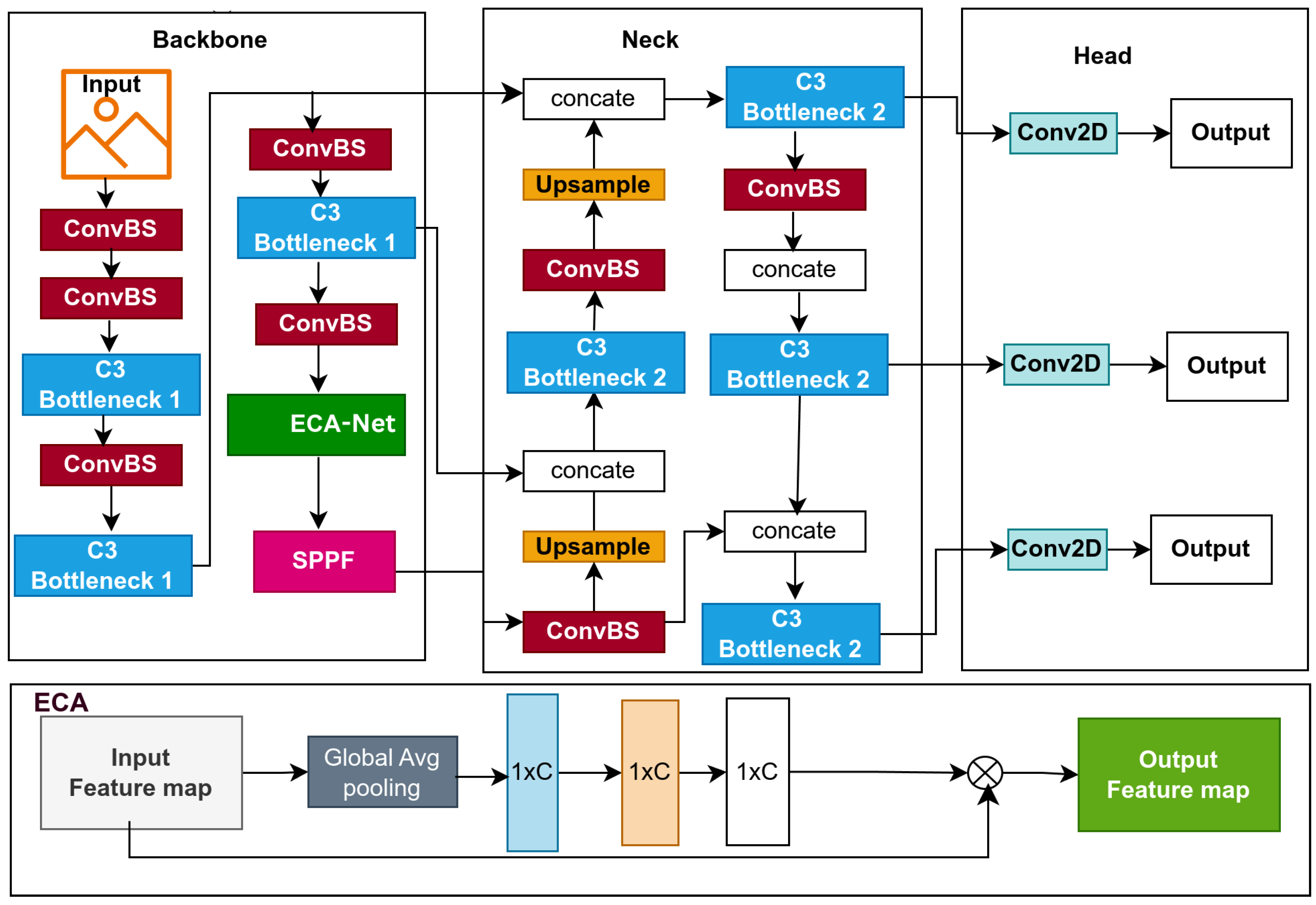

3.2.1. BaseECA Model

- Parameter Complexity in the C3 Module: The C3 module in YOLOv5 performs convolutional operations while utilizing CSPNet’s partial feature reuse mechanism. It consists of two convolutional layers applied to half of the input channels and one applied to all channels, followed by batch normalization and non-linear activation functions (see Figure 2). The C3 component in layer nine introduces over 1.18 million trainable parameters, significantly contributing to the architecture’s computational complexity. The total number of trainable parameters in the C3 module can be estimated from C, the number of input and output channels, and K, the kernel size.

- Parameter Complexity in the ECA Module: The Efficient Channel Attention (ECA) module is designed to optimize feature selection while maintaining computational efficiency. Unlike the C3 module, the ECA module applies a 1D convolution-based attention mechanism to refine channel-wise features. Replacing the C3 module with ECA reduces the parameter count from 1.18 million to just 1, enhancing model efficiency. This replacement lowers memory requirements and reduces computational cost (GFLOPs) while maintaining or improving feature refinement quality, demonstrating the effectiveness of ECA in optimizing YOLOv5’s backbone.
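The parameter gap described in the two items above can be checked with a short count. The sketch below is illustrative only: it assumes the C3 layout of the public YOLOv5 code (bias-free convolutions followed by batch normalization, one bottleneck with a 1 × 1 and a 3 × 3 convolution) and assumes that layer nine has 512 input and output channels. The function names are hypothetical, and the ECA kernel size is passed in as an argument rather than derived.

```python
def conv_bn_params(c_in: int, c_out: int, k: int) -> int:
    """Weights of a bias-free k x k convolution plus BatchNorm scale and shift."""
    return c_in * c_out * k * k + 2 * c_out


def c3_params(c: int, n: int = 1) -> int:
    """Trainable parameters of a C3 block with c input and c output channels.

    Assumed layout: two 1x1 branches that reduce to c/2 channels, n bottlenecks
    (1x1 then 3x3) on one branch, and a 1x1 fusion back to c channels.
    """
    half = c // 2
    branches = 2 * conv_bn_params(c, half, 1)
    bottlenecks = n * (conv_bn_params(half, half, 1) + conv_bn_params(half, half, 3))
    fusion = conv_bn_params(2 * half, c, 1)
    return branches + bottlenecks + fusion


def eca_params(kernel_size: int) -> int:
    """A bias-free 1D convolution over the channel axis has kernel_size weights."""
    return kernel_size


print(c3_params(512))  # 1182720, i.e. just over 1.18 million
print(eca_params(1))   # 1
```

Under these assumptions the 3 × 3 convolution inside the bottleneck accounts for about half of the C3 total, which is why swapping the block for a channel-attention layer removes almost all of its parameters.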

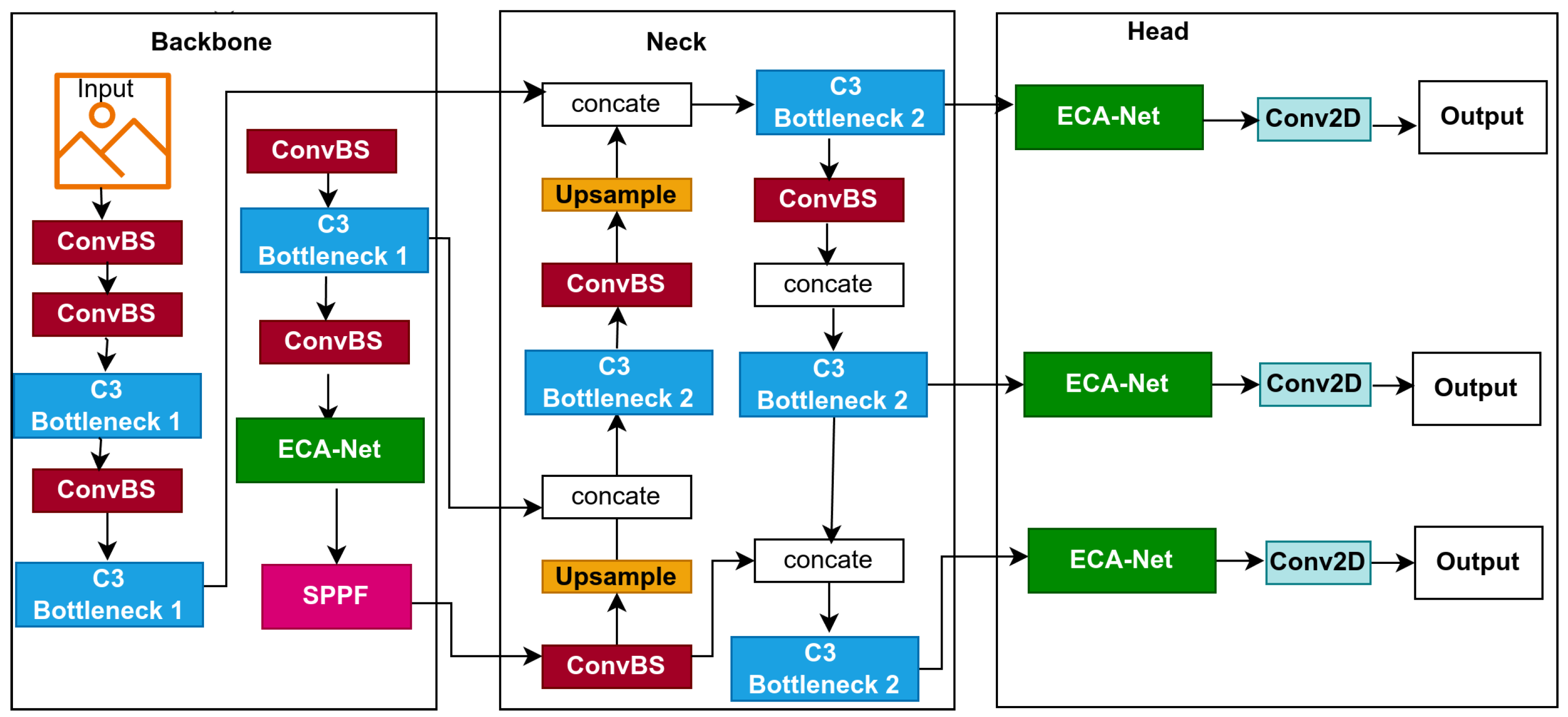

3.2.2. BaseECAx2 Model

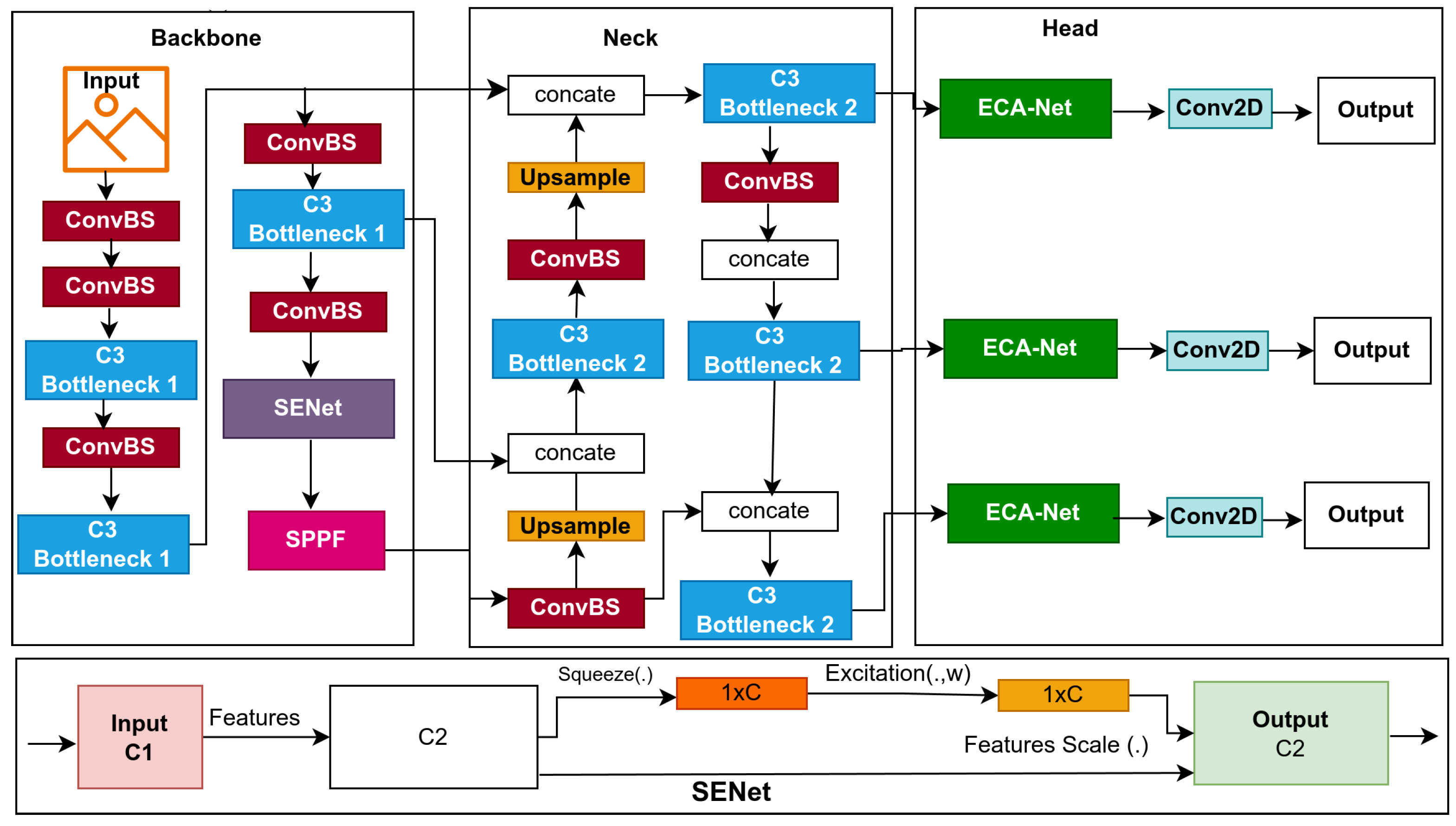

3.2.3. BaseSE-ECA

4. Experiments

4.1. Dataset and Preprocessing

- The KITTI dataset is one of the leading benchmark datasets for evaluating computer vision algorithms in autonomous driving scenarios. It provides diverse real-world driving scenes captured using high-resolution stereo cameras and 3D LiDAR sensors. The dataset includes various levels of occlusion and truncation, making it well-suited for testing the robustness of lightweight object detection models under challenging conditions. The dataset is partitioned into three distinct subsets: 5220 images for training, 1495 images for validation, and 746 images for testing. It includes eight object categories, covering common road participants: car, van, truck, pedestrian, person (sitting), cyclist, tram, and miscellaneous. The dataset contains 40,484 labeled objects, averaging 5.4 annotations per image across these eight classes. Data preprocessing was conducted prior to training to ensure an input structure consistent with the proposed detection models and to improve training efficiency. All images were scaled to a 640 × 640 pixel resolution while maintaining the aspect ratio using stretching techniques. Input pixel values were normalized to a range of [0,1] to ensure stable convergence during training. Data augmentation techniques, including random horizontal flipping, brightness adjustment, and affine transformations, were incorporated to improve generalization and model robustness [46]. The KITTI dataset served as the primary evaluation benchmark in this study due to its well-structured annotations and established reputation for testing object detection models in autonomous driving scenarios. The proposed models achieved strong performance on KITTI, which was used as a reference to measure the models’ effectiveness in standard conditions. Sample detection results are shown in Figure 5.

- The BDD-100K dataset is a comprehensive driving video dataset tailored for autonomous driving research, featuring 100,000 annotated video clips collected from diverse geographic locations and environmental conditions, including urban streets, highways, and residential areas. The dataset covers weather conditions such as clear, cloudy, rainy, and foggy scenarios, as well as daytime and nighttime driving scenes, making it suitable for evaluating model robustness in complex environments [47]. The BDD-100K dataset was used to explore the limitations of the proposed models under more complex conditions. Sample detection results are shown in Figure 6. While the models demonstrated strong performance on KITTI, they exhibited reduced performance on BDD-100K, particularly in scenes with poor lighting, motion blur, and occlusions. This evaluation provided insights into the models’ robustness and highlighted areas for future improvements, such as enhanced temporal feature fusion and improved noise handling techniques.
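The KITTI split sizes and annotation density quoted above can be cross-checked with simple arithmetic. The following sketch uses only the counts given in the text. The variable names are hypothetical, and the pixel normalization helper assumes 8-bit input images, which the text does not state explicitly.

```python
# Image counts per subset, as reported for the KITTI split.
splits = {"train": 5220, "val": 1495, "test": 746}
labeled_objects = 40_484

total_images = sum(splits.values())
fractions = {name: count / total_images for name, count in splits.items()}
objects_per_image = labeled_objects / total_images


def normalize_pixel(value: int) -> float:
    """Map an 8-bit intensity (0..255) to the [0, 1] range (assumed input depth)."""
    return value / 255.0


print(total_images)                 # 7461
print(round(objects_per_image, 1))  # 5.4
for name, fraction in fractions.items():
    print(f"{name}: {fraction:.1%}")
```

The counts correspond to an approximately 70/20/10 split, and the ratio of labeled objects to images reproduces the reported average of 5.4 annotations per image.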

4.2. Implementation and Training

4.3. Performance Metrics

4.3.1. Inference Speed

4.3.2. Detection Accuracy

4.3.3. Detection Quality Assessment

4.4. Ablation Study

4.4.1. Impact on Feature Importance

4.4.2. Computational Cost Considerations

4.4.3. Effect on Accuracy

4.4.4. Effect on Speed

5. Results

5.1. Detection Performance Analysis

- BaseSE-ECA (vehicles) achieves the highest detection precision (96.7%) and recall (96.2%), demonstrating that attention mechanisms significantly enhance vehicle detection performance.

- BaseECA achieves the highest mAP@50 (90.9%) and mAP@95 (68.9%) among the proposed full-class models, remaining close to the baseline (91.4% and 69.5%) while using fewer parameters.

- BaseECAx2, incorporating dual ECA, demonstrates robust detection capabilities with precision (89.1%) and recall (86%), highlighting the effectiveness of multi-layer attention integration.

- Among the full-class models, BaseSE-ECA achieves the highest precision (91.3%, tied with BaseSE), but its lower recall (83.9%) suggests a trade-off between high selectivity and overall detection capability.

- Car detection remains consistently high across all models (≥75%), confirming model robustness for prominent and frequently occurring object classes in driving scenes.

- Motorcycle and rider classes show the sharpest degradation, with mAP@50 ranging between 21.1% and 25.1% for “Motor” and between 31.9% and 35.6% for “Rider”, indicating difficulty in detecting fast-moving, small, or partially occluded objects in real-world conditions.

- Bike and person detection performance is modest across models, with mAP@50 at or below 51%. Detection challenges likely stem from partial visibility, variable human poses, and low-light conditions.

- Bus and truck classes perform relatively well due to their larger sizes and clearer boundaries. BaseSE-ECA achieves the best result on “Bus” (50.1%) and competitive accuracy on “Truck” (53.4%), demonstrating that attention mechanisms help in large-object detection under complex conditions.

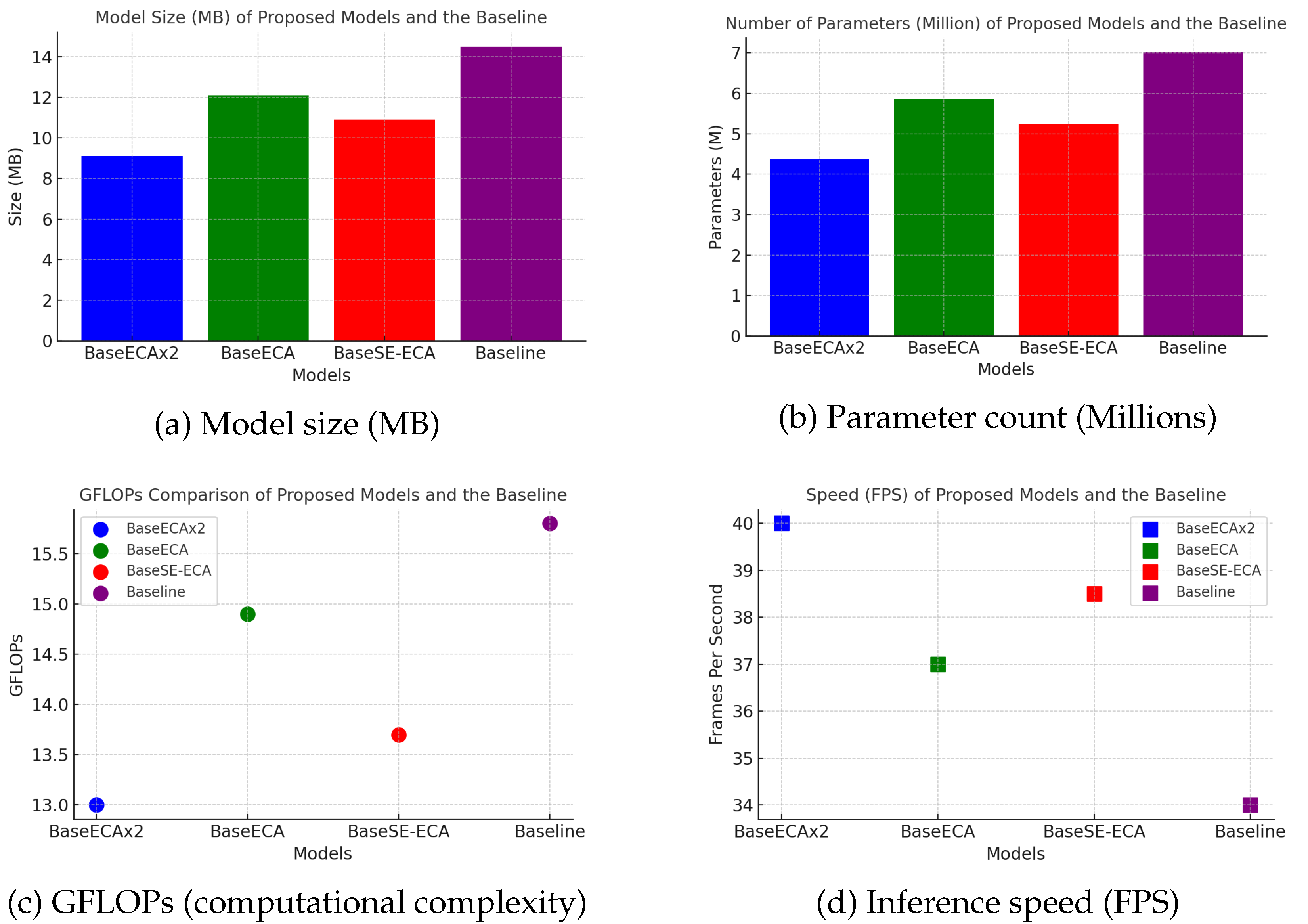

5.2. Computational Efficiency and Model Complexity

- BaseSE-ECA (vehicles) achieves a compact model size (9.1 MB) with a moderate computational complexity of 13.7 GFLOPs, making it highly efficient for edge-device deployment.

- BaseECAx2 exhibits the lowest GFLOPs (13.0) and the fewest parameters (4.37 M) among the YOLOv5-based models, confirming that multi-layer ECA integration reduces computational overhead.

- BaseECA requires 14.9 GFLOPs and a model size of 12.1 MB, slightly more than the other ECA-based variants but still below the baseline (15.8 GFLOPs, 14.5 MB).

- The baseline YOLOv5s model has the highest GFLOPs (15.8) and parameter count (7.03 M) while achieving the lowest FPS (34), reinforcing the efficiency gains of the proposed models.

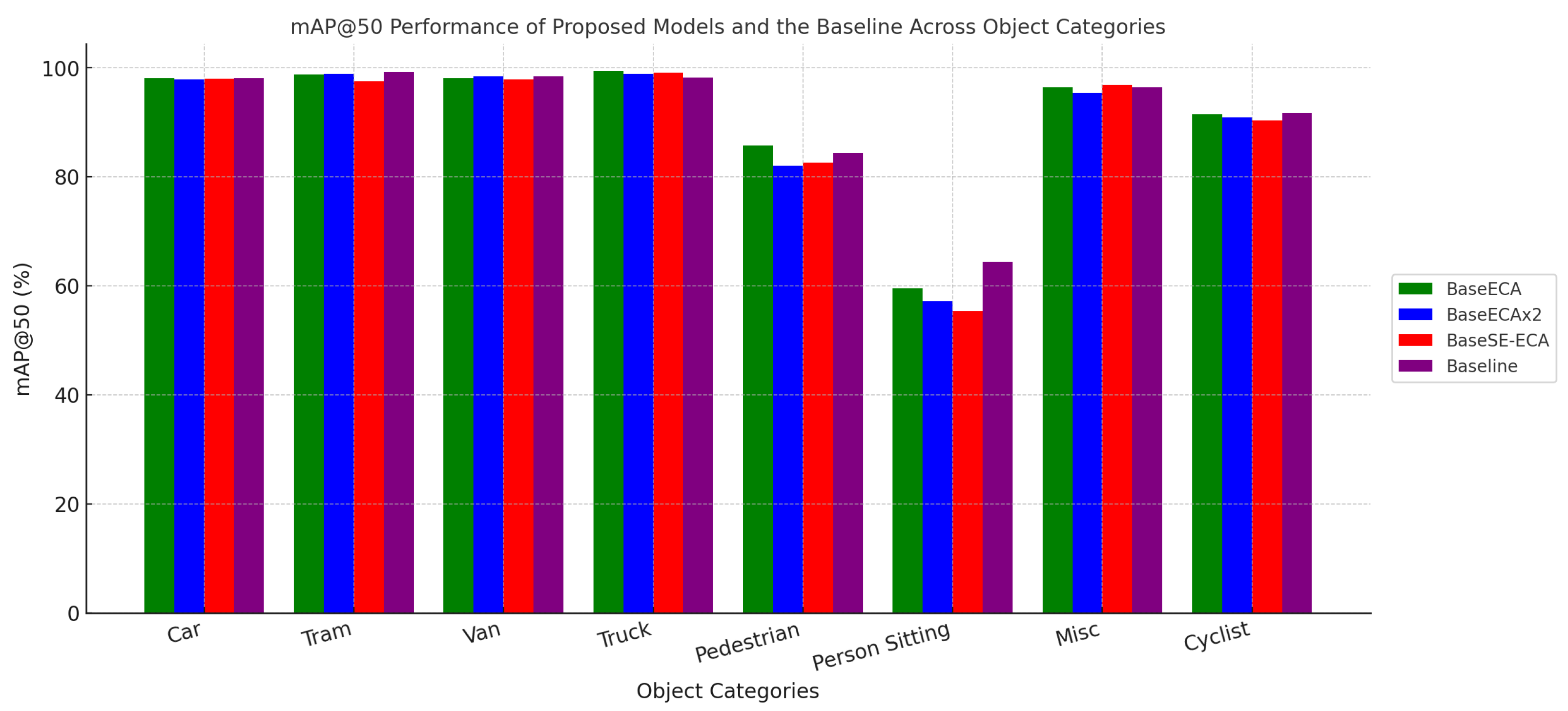

5.3. Class-Wise Detection Performance

- BaseECA achieves the highest pedestrian detection accuracy (85.7%) and performs exceptionally well for cyclists (91.4%), confirming that ECA enhances small-object detection.

- BaseSE-ECA (vehicles only) excels in vehicle detection, achieving 98.8% mAP for vans and 98.4% for trucks, reinforcing SE’s strength in large-object recognition.

- BaseECAx2 maintains strong multi-class performance, demonstrating a balanced detection capability across different object categories.

5.4. Discussion

5.5. Future Work

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AI | Artificial Intelligence |
| CNN | Convolutional Neural Network |
| DNN | Deep Neural Network |
| IoU | Intersection over Union |
| mAP | mean Average Precision |
| FPS | Frames Per Second |
| GFLOPs | Giga Floating Point Operations |
| V2X | Vehicle-to-Everything |
| KITTI | Karlsruhe Institute of Technology and Toyota Technological Institute |
| BDD100K | Berkeley DeepDrive 100K |
| YOLO | You Only Look Once |
| SE | Squeeze-and-Excitation |
| ECA | Efficient Channel Attention |
| CBAM | Convolutional Block Attention Module |
| CA | Coordinate Attention |
| RPN | Region Proposal Network |
| FPN | Feature Pyramid Network |
| NMS | Non-Maximum Suppression |
| UAV | Unmanned Aerial Vehicle |
| COCO | Common Objects in Context |

References

- Sharma, S.; Sharma, A.; Van Chien, T. (Eds.) The Intersection of 6G, AI/Machine Learning, and Embedded Systems: Pioneering Intelligent Wireless Technologies, 1st ed.; CRC Press: Boca Raton, FL, USA, 2025.

- Meng, F.; Hong, A.; Tang, H.; Tong, G. FQDNet: A Fusion-Enhanced Quad-Head Network for RGB-Infrared Object Detection. Remote Sens. 2025, 17, 1095.

- Yang, Z.; Li, J.; Li, H. Real-Time Pedestrian and Vehicle Detection for Autonomous Driving. In Proceedings of the 2018 IEEE Intelligent Vehicles Symposium (IV), Changshu, China, 26–30 June 2018; pp. 179–184.

- Li, F.; Zhao, Y.; Wei, J.; Li, S.; Shan, Y. SNCE-YOLO: An Improved Target Detection Algorithm in Complex Road Scenes. IEEE Access 2024, 12, 152138–152151.

- Wang, H.; Chaw, J.K.; Goh, S.K.; Shi, L.; Tin, T.T.; Huang, N.; Gan, H.-S. Super-Resolution GAN and Global Aware Object Detection System for Vehicle Detection in Complex Traffic Environments. IEEE Access 2024, 12, 113442–113462.

- Farhat, W.; Ben Rhaiem, O.; Faiedh, H.; Souani, C. Optimized Deep Learning for Pedestrian Safety in Autonomous Vehicles. Int. J. Transp. Sci. Technol. 2025, in press.

- Galvao, L.G.; Abbod, M.; Kalganova, T.; Palade, V.; Huda, M.N. Pedestrian and Vehicle Detection in Autonomous Vehicle Perception Systems—A Review. Sensors 2021, 21, 7267.

- Karangwa, J.; Liu, J.; Zeng, Z. Vehicle Detection for Autonomous Driving: A Review of Algorithms and Datasets. IEEE Trans. Intell. Transp. Syst. 2023, 24, 11568–11594.

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.-Y.; Berg, A.C. SSD: Single Shot MultiBox Detector. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 11–14 October 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer: Cham, Switzerland, 2016; pp. 21–37.

- Hussain, M. YOLOv1 to v8: Unveiling Each Variant–A Comprehensive Review of YOLO. IEEE Access 2024, 12, 42816–42833.

- Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. arXiv 2019, arXiv:1910.03151.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. arXiv 2017, arXiv:1709.01507.

- Sarda, A.; Dixit, S.; Bhan, A. Object Detection for Autonomous Driving Using YOLO (You Only Look Once) Algorithm. In Proceedings of the 2021 Third International Conference on Intelligent Communication Technologies and Virtual Mobile Networks (ICICV), Tirunelveli, India, 4–6 February 2021; pp. 1370–1374.

- Zhou, Y.; Wen, S.; Wang, D.; Meng, J.; Mu, J.; Irampaye, R. MobileYOLO: Real-Time Object Detection Algorithm in Autonomous Driving Scenarios. Sensors 2022, 22, 3349.

- Afdhal, A.; Saddami, K.; Arief, M.; Sugiarto, S.; Fuadi, Z.; Nasaruddin, N. MXT-YOLOv7t: An Efficient Real-Time Object Detection for Autonomous Driving in Mixed Traffic Environments. IEEE Access 2024, 12, 178566–178585.

- Cai, Y.; Luan, T.; Gao, H.; Wang, H.; Chen, L.; Li, Y.; Sotelo, M.A.; Li, Z. YOLOv4-5D: An Effective and Efficient Object Detector for Autonomous Driving. IEEE Trans. Instrum. Meas. 2021, 70, 1–13.

- Cao, Y.; Li, C.; Peng, Y.; Ru, H. MCS-YOLO: A Multiscale Object Detection Method for Autonomous Driving Road Environment Recognition. IEEE Access 2023, 11, 22342–22354.

- Liang, S.; Wu, H.; Zhen, L.; Hua, Q.; Garg, S.; Kaddoum, G.; Hassan, M.M.; Yu, K. Edge YOLO: Real-Time Intelligent Object Detection System Based on Edge-Cloud Cooperation in Autonomous Vehicles. IEEE Trans. Intell. Transp. Syst. 2022, 23, 25345–25360.

- He, Q.; Xu, A.; Ye, Z.; Zhou, W.; Cai, T. Object Detection Based on Lightweight YOLOX for Autonomous Driving. Sensors 2023, 23, 7596.

- Yasir, M.; Shanwei, L.; Mingming, X.; Jianhua, W.; Nazir, S.; Islam, Q.U.; Dang, K.B. SwinYOLOv7: Robust Ship Detection in Complex Synthetic Aperture Radar Images. Appl. Soft Comput. 2024, 160, 111704.

- Yang, M.; Fan, X. YOLOv8-Lite: A Lightweight Object Detection Model for Real-Time Autonomous Driving Systems. ICCK Trans. Emerg. Top. Artif. Intell. 2024, 1, 1–16.

- Wei, F.; Wang, W. SCCA-YOLO: A Spatial and Channel Collaborative Attention Enhanced YOLO Network for Highway Autonomous Driving Perception System. Sci. Rep. 2025, 15, 6459.

- Khanam, R.; Hussain, M. Yolov11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

- Tian, Y.; Ye, Q.; Doermann, D. YOLOv12: Attention-Centric Real-Time Object Detectors. arXiv 2025, arXiv:2502.12524.

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-Time Object Detection. arXiv 2024, arXiv:2304.08069.

- Kadiyapu, D.C.M.; Mangali, V.S.; Thummuru, J.R.; Sattula, S.; Annapureddy, S.R.; Arunkumar, M.S. Improving the Autonomous Vehicle Vision with Synthetic Data Using Gen AI. In Proceedings of the 2025 3rd International Conference on Intelligent Data Communication Technologies and Internet of Things (IDCIoT), Tiruchirappalli, India, 6–7 March 2025; pp. 776–782.

- Ghosh, A.; Kumar, G.P.; Prasad, P.; Kumar, D.; Jain, S.; Chopra, J. Synergizing Generative Intelligence: Advancements in Artificial Intelligence for Intelligent Vehicle Systems and Vehicular Networks. Iconic Res. Eng. J. 2023, 7. Available online: https://www.researchgate.net/publication/376406497_Synergizing_Generative_Intelligence_Advancements_in_Artificial_Intelligence_for_Intelligent_Vehicle_Systems_and_Vehicular_Networks (accessed on 6 August 2025).

- Smolin, M. GenCoder: A Generative AI-Based Adaptive Intra-Vehicle Intrusion Detection System. IEEE Access 2024, 12, 150651–150663.

- Lu, J.; Yang, W.; Xiong, Z.; Xing, C.; Tafazolli, R.; Quek, T.Q.S.; Debbah, M. Generative AI-Enhanced Multi-Modal Semantic Communication in Internet of Vehicles: System Design and Methodologies. arXiv 2024, arXiv:2409.15642.

- Zhang, J.; Liu, Z.; Wei, H.; Zhang, S.; Cheng, W.; Yin, H. CAE-YOLOv5: A Vehicle Target Detection Algorithm Based on Attention Mechanism. In Proceedings of the 2023 China Automation Congress (CAC), Wuhan, China, 24–26 November 2023; pp. 6893–6898.

- Wang, J.; Dong, Y.; Zhao, S.; Zhang, Z. A High-Precision Vehicle Detection and Tracking Method Based on the Attention Mechanism. Sensors 2023, 23, 724.

- Wang, Z.; Men, S.; Bai, Y.; Yuan, Y.; Wang, J.; Wang, K.; Zhang, L. Improved Small Object Detection Algorithm CRL-YOLOv5. Sensors 2024, 24, 6437.

- Jia, X.; Tong, Y.; Qiao, H.; Li, M.; Tong, J.; Liang, B. Fast and Accurate Object Detector for Autonomous Driving Based on Improved YOLOv5. Sci. Rep. 2023, 13, 9711.

- Liu, T.; Dongye, C.; Jia, X. The Research on Traffic Sign Recognition Algorithm Based on Improved YOLOv5 Model. In Proceedings of the 2023 3rd International Conference on Consumer Electronics and Computer Engineering (ICCECE), Guangzhou, China, 6–8 January 2023; pp. 92–97.

- Wang, K.; Liu, M. YOLOv3-MT: A YOLOv3 Using Multi-Target Tracking for Vehicle Visual Detection. Appl. Intell. 2022, 52, 2070–2091.

- Li, Z.; Pang, C.; Dong, C.; Zeng, X. R-YOLOv5: A Lightweight Rotational Object Detection Algorithm for Real-Time Detection of Vehicles in Dense Scenes. IEEE Access 2023, 11, 61546–61559.

- Almujally, N.A.; Qureshi, A.M.; Alazeb, A.; Rahman, H.; Sadiq, T.; Alonazi, M.; Algarni, A.; Jalal, A. A Novel Framework for Vehicle Detection and Tracking in Night Ware Surveillance Systems. IEEE Access 2024, 12, 88075–88085.

- Muzammul, M.; Li, X. Comprehensive Review of Deep Learning-Based Tiny Object Detection: Challenges, Strategies, and Future Directions. Knowl. Inf. Syst. 2025, 67, 3825–3913.

- Zhao, C.; Guo, D.; Shao, C.; Zhao, K.; Sun, M.; Shuai, H. SatDetX-YOLO: A More Accurate Method for Vehicle Target Detection in Satellite Remote Sensing Imagery. IEEE Access 2024, 12, 46024–46041.

- Adam, M.A.A.; Tapamo, J.R. Survey on Image-Based Vehicle Detection Methods. World Electr. Veh. J. 2025, 16, 303.

- Jocher, G. Ultralytics YOLOv5, Version 7.0; 2020. Available online: https://github.com/ultralytics/yolov5 (accessed on 10 May 2025).

- Lin, T.-Y.; Maire, M.; Belongie, S.; Bourdev, L.; Girshick, R.; Hays, J.; Perona, P.; Ramanan, D.; Zitnick, C.L.; Dollár, P. Microsoft COCO: Common Objects in Context. arXiv 2015, arXiv:1405.0312.

- Bochkovskiy, A.; Wang, C.-Y.; Liao, H.-Y.M. YOLOv4: Optimal Speed and Accuracy of Object Detection. arXiv 2020, arXiv:2004.10934.

- Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision Meets Robotics: The KITTI Dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

- Yu, F.; Xian, W.; Chen, Y.; Liu, F.; Liao, M.; Madhavan, V.; Darrell, T. BDD100K: A Diverse Driving Video Database with Scalable Annotation Tooling. arXiv 2018, arXiv:1805.04687.

- Liang, T.; Bao, H.; Pan, W.; Pan, F. ALODAD: An Anchor-Free Lightweight Object Detector for Autonomous Driving. IEEE Access 2022, 10, 40701–40714.

- Li, G.; Ji, Z.; Qu, X.; Zhou, R.; Cao, D. Cross-Domain Object Detection for Autonomous Driving: A Stepwise Domain Adaptative YOLO Approach. IEEE Trans. Intell. Veh. 2022, 7, 603–615.

| Authors | Methods | Results | Limitations |
|---|---|---|---|
| Zhou et al. [15] | Combines MobileNetV2 and ECA. | 90.7% accuracy, 80% model size reduction. | Struggles with occlusions and small objects. |
| Cai et al. [17] | Network pruning and feature fusion in YOLOv4. | 31.3% faster inference with high accuracy. | Pruning requires careful tuning. |
| Liang et al. [19] | Edge-cloud offloading scheme. | Reduced latency and CPU load. | Requires a stable network for full functionality. |
| He et al. [20] | Replaces CSPDarkNet53 with ShuffleDet. | 92.2% mAP with reduced complexity. | Weak performance in low-light scenes. |
| Zhang et al. [31] | Integrates ECA and CBAM into YOLOv5. | Achieved 96.3% detection accuracy. | Increased inference time due to added attention layers. |
| Wang et al. [32] | Incorporates NAM into YOLOv5. | +1.6% mAP vs. baseline. | Limited gain in dense traffic. |
| Jia et al. [34] | NAS and structural reparameterization. | 96.1% accuracy, 202 FPS on KITTI. | Dataset-specific tuning limits generalization. |
| Liu and Dongye [35] | Apply dense CSP and enhanced FPN to YOLOv5. | 5.28% boost in detection efficiency. | Lower performance in adverse weather conditions. |
| Wang et al. [36] | Kalman filtering + DIoU-NMS. | Up to 4.65% mAP increase. | Degraded under severe occlusion. |
| Zhao et al. [40] | Uses deformable attention and MPDIoU loss. | +3.5% precision, +3.3% recall in remote sensing. | High computational complexity. |

| Method | Description |
|---|---|
| C3 block | Equal channel processing; more convolution operations; baseline accuracy; slightly slower speed. |
| SE attention | Focuses on key channels; introduces fully connected (FC) layer cost; improves global feature focus; faster than C3. |
| ECA attention | Local channel attention via 1D convolution; lightweight; boosts local feature refinement; fastest option. |
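The "local channel attention via 1D convolution" entry in the table above can be illustrated with a small forward pass. This is a minimal pure-Python sketch of an ECA-style layer, not the implementation used in the paper: the nested-list tensor layout, the function names, and the example kernel are assumptions chosen to keep the example dependency-free.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def eca_forward(feature_map, kernel):
    """Apply ECA-style channel attention.

    feature_map: list of channels, each a list of rows of floats (C x H x W).
    kernel: odd-length list of 1D convolution weights shared by all channels.
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    pooled = [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature_map]

    # Local cross-channel interaction: zero-padded 1D convolution over channels.
    pad = len(kernel) // 2
    padded = [0.0] * pad + pooled + [0.0] * pad
    scores = [
        sum(w * padded[i + j] for j, w in enumerate(kernel))
        for i in range(len(pooled))
    ]

    # Gate each channel with a sigmoid weight in (0, 1).
    gates = [sigmoid(s) for s in scores]
    scaled = [[[g * v for v in row] for row in ch] for g, ch in zip(gates, feature_map)]
    return scaled, gates


# Three 2 x 2 channels and an identity-like kernel (illustrative values only).
fmap = [[[1.0, 1.0], [1.0, 1.0]], [[2.0, 2.0], [2.0, 2.0]], [[0.0, 0.0], [0.0, 0.0]]]
output, gates = eca_forward(fmap, [0.0, 1.0, 0.0])
print([round(g, 3) for g in gates])
```

The only learnable values in this layer are the kernel weights, which is why the table calls ECA lightweight. SE attention would replace the 1D convolution with two fully connected layers over the pooled channel descriptors.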

| Model | Precision (%) | Recall (%) | mAP@50 (%) | mAP@95 (%) |
|---|---|---|---|---|
| BaseECA | 88.0 | 87.5 | 90.9 | 68.9 |
| BaseECAx2 | 89.1 | 86.0 | 90.0 | 65.9 |
| BaseSE | 91.3 | 83.9 | 89.7 | 67.6 |
| BaseSE-ECA | 91.3 | 83.9 | 89.9 | 67.6 |
| BaseSE-ECA (Vehicle) | 96.7 | 96.2 | 98.4 | 84.4 |
| Baseline | 89.1 | 87.9 | 91.4 | 69.5 |
| YOLOv11n | 85.2 | 82.8 | 88.8 | 34.9 |
| YOLOv12n | 87.6 | 89.2 | 91.6 | 71.9 |

| Model | GFLOPs | Params (M) | FPS | Size (MB) | Improvement Summary |
|---|---|---|---|---|---|
| BaseECA | 14.9 | 5.85 | 37.0 | 12.1 | +3.0 FPS, −0.9 GFLOPs, −2.4 MB |
| BaseECAx2 | 13.0 | 4.37 | 40.0 | 9.1 | +6.0 FPS, −2.8 GFLOPs, −5.4 MB |
| BaseSE | 15.6 | 6.71 | 35.0 | 13.8 | +1.0 FPS, −0.2 GFLOPs, −0.7 MB |
| BaseSE-ECA | 13.7 | 5.24 | 38.5 | 10.9 | +4.5 FPS, −2.1 GFLOPs, −3.6 MB |
| BaseSE-ECA (Vehicles) | 13.7 | 5.24 | 38.5 | 9.1 | Same FPS, −2.1 GFLOPs, −5.4 MB |
| Baseline | 15.8 | 7.03 | 34.0 | 14.5 | Reference point |
| YOLOv11n | 6.3 | 2.58 | 53.0 | 5.5 | +19.0 FPS, −9.5 GFLOPs, −9.0 MB |
| YOLOv12n | 6.3 | 2.56 | 38.3 | 5.6 | +3.3 FPS, −9.5 GFLOPs, −8.9 MB |
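The "Improvement Summary" column in the table above is the signed difference between each model and the baseline row. As a minimal sketch, the snippet below recomputes those differences for the four proposed full-class models using the table values. The dictionary layout and function name are hypothetical.

```python
# Baseline YOLOv5s row from the efficiency table.
BASELINE = {"gflops": 15.8, "fps": 34.0, "size_mb": 14.5}

# Proposed full-class models, values copied from the same table.
MODELS = {
    "BaseECA": {"gflops": 14.9, "fps": 37.0, "size_mb": 12.1},
    "BaseECAx2": {"gflops": 13.0, "fps": 40.0, "size_mb": 9.1},
    "BaseSE": {"gflops": 15.6, "fps": 35.0, "size_mb": 13.8},
    "BaseSE-ECA": {"gflops": 13.7, "fps": 38.5, "size_mb": 10.9},
}


def improvement(model):
    """Signed difference from the baseline, rounded to one decimal place."""
    return {key: round(model[key] - BASELINE[key], 1) for key in BASELINE}


for name, metrics in MODELS.items():
    d = improvement(metrics)
    print(f"{name}: {d['fps']:+.1f} FPS, {d['gflops']:+.1f} GFLOPs, {d['size_mb']:+.1f} MB")
```

Positive FPS differences and negative GFLOPs or size differences both indicate an efficiency gain over the baseline.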

| Model | Car | Tram | Van | Truck | Pedestrian | Person Sitting | Misc | Cyclist |
|---|---|---|---|---|---|---|---|---|
| BaseECA | 98.1 | 98.8 | 98.1 | 99.4 | 85.7 | 59.5 | 96.4 | 91.4 |
| BaseECAx2 | 97.8 | 98.9 | 98.4 | 98.9 | 82.0 | 57.2 | 95.4 | 90.9 |
| BaseSE | 98.1 | 99.0 | 98.5 | 99.2 | 84.6 | 67.4 | 96.5 | 91.0 |
| BaseSE-ECA | 98.0 | 97.5 | 97.9 | 99.1 | 82.6 | 55.4 | 96.8 | 90.3 |
| BaseSE-ECA (Vehicles) | 98.0 | N/A | 98.8 | 98.4 | N/A | N/A | N/A | N/A |
| Baseline | 98.1 | 99.2 | 98.4 | 98.2 | 84.4 | 64.4 | 96.4 | 91.7 |
| YOLOv11n | 97.3 | 94.9 | 96.1 | 97.2 | 80.6 | 66.6 | 80.0 | 82.7 |
| YOLOv12n | 98.2 | 98.1 | 98.3 | 98.4 | 85.2 | 68.8 | 94.3 | 91.1 |

| Model | Precision (%) | Recall (%) | mAP@50 (%) |
|---|---|---|---|
| BaseECAx2 | 62.1 | 43.8 | 46.6 |
| BaseSE | 63.8 | 42.7 | 47.2 |
| BaseSE-ECA | 61.4 | 44.1 | 48.0 |
| Baseline | 57.5 | 44.4 | 47.0 |

| Model | Bike | Bus | Car | Motor | Person | Rider | Truck |
|---|---|---|---|---|---|---|---|
| BaseECAx2 | 41.9 | 45.5 | 76.0 | 24.5 | 49.6 | 34.4 | 54.5 |
| BaseSE | 48.8 | 47.5 | 76.2 | 21.1 | 50.1 | 31.9 | 55.1 |
| BaseSE-ECA | 45.9 | 50.1 | 75.9 | 25.1 | 49.6 | 35.6 | 53.4 |
| Baseline | 46.1 | 48.7 | 76.3 | 21.2 | 51.0 | 32.2 | 53.4 |

| Method | mAP (%) | # Parameters (M) | GFLOPs |
|---|---|---|---|
| YOLOX-L [34] | 92.27 | 54.15 | 155.69 |
| ShuffYOLOX [34] | 92.20 | 35.43 | 89.99 |
| SNCE-YOLO [4] | 91.90 | 9.58 | 35.20 |
| MobileYOLO [15] | 90.70 | 12.25 | 46.70 |
| YOLOv8s [4] | 89.40 | 11.13 | 28.40 |
| SSIGAN and GCAFormer [5] | 89.12 | N/A | N/A |
| YOLOv4-5D[P-L] [17] | 87.02 | N/A | 103.66 |
| YOLOv3 [34] | 87.37 | 61.53 | N/A |
| SD-YOLO-AWDNet [12] | 86.00 | 3.70 | 8.30 |
| YOLOv6s [4] | 85.60 | 16.30 | 44.00 |
| YOLOv7s-tiny [12] | 84.12 | 6.20 | 5.80 |
| YOLOv3-MT [36] | 84.03 | N/A | 32.06 |
| RetinaNet [15] | 88.70 | 37.23 | 165.40 |
| YOLOv3 [48] | 87.40 | 61.53 | 234.70 |
| YOLOv8-Lite [22] | 76.62 | 4.80 | 8.95 |
| Faster R-CNN [36] | 71.86 | N/A | 7.04 |
| Edge YOLO [19] | 72.60 | 24.48 | 9.97 |
| MobileNetv3 SSD [19] | 71.80 | 33.11 | 12.52 |
| YOLOv4-5D[P-G] [48] | 69.84 | N/A | 76.90 |
| SSD [36] | 61.42 | N/A | 27.06 |
| S-DAYOLO [49] | 49.30 | 9.35 | 19.70 |
| YOLOv11n | 88.8 | 2.60 | 6.30 |
| YOLOv5s | 91.4 | 7.03 | 15.8 |
| Ours (BaseECA) | 90.90 | 5.85 | 14.90 |
| Ours (BaseECAx2) | 90.00 | 4.37 | 13.00 |
| Ours (BaseSE-ECA) | 89.90 | 5.24 | 13.70 |
| Ours (BaseSE-ECA (Vehicles)) | 98.40 | 5.24 | 13.70 |
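One way to read the accuracy versus cost trade-off in the table above is to ask which methods are not dominated, that is, no other method is both at least as accurate and at least as cheap. The sketch below applies that check to a hand-picked subset of rows. The subset, the function names, and the use of GFLOPs as the only cost axis are assumptions made for illustration, and the mAP values come from different papers and evaluation setups, so the result is indicative rather than a strict ranking.

```python
# (method, mAP %, GFLOPs): a subset of rows from the comparison table.
ROWS = [
    ("SNCE-YOLO", 91.90, 35.20),
    ("MobileYOLO", 90.70, 46.70),
    ("YOLOv8s", 89.40, 28.40),
    ("YOLOv11n", 88.80, 6.30),
    ("YOLOv5s", 91.40, 15.80),
    ("BaseECA", 90.90, 14.90),
    ("BaseECAx2", 90.00, 13.00),
    ("BaseSE-ECA", 89.90, 13.70),
]


def dominates(a, b):
    """True if a is at least as accurate and as cheap as b, and strictly better in one."""
    _, map_a, cost_a = a
    _, map_b, cost_b = b
    return map_a >= map_b and cost_a <= cost_b and (map_a > map_b or cost_a < cost_b)


def pareto_front(rows):
    """Names of the methods that no other listed method dominates."""
    return [r[0] for r in rows if not any(dominates(o, r) for o in rows)]


print(pareto_front(ROWS))
# ['SNCE-YOLO', 'YOLOv11n', 'YOLOv5s', 'BaseECA', 'BaseECAx2']
```

Within this subset, BaseECA and BaseECAx2 sit on the front between YOLOv11n and YOLOv5s, while BaseSE-ECA is narrowly dominated by BaseECAx2 on both axes.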

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Adam, M.A.A.; Tapamo, J.R. Enhancing YOLOv5 for Autonomous Driving: Efficient Attention-Based Object Detection on Edge Devices. J. Imaging 2025, 11, 263. https://doi.org/10.3390/jimaging11080263
