Deep Learning Techniques for Prostate Cancer Analysis and Detection: Survey of the State of the Art

Abstract

1. Introduction

- This survey thoroughly reviews state-of-the-art deep learning techniques applied to prostate lesion analysis and detection, offering insights into the strengths and limitations of various methods.

- It categorises existing techniques based on their applications, such as lesion detection, segmentation, and classification, helping researchers understand the landscape of solutions.

- The study identifies critical challenges in prostate lesion analysis, including imaging modalities, image quality, variation in acquisition protocols, patient-specific factors, data annotation, and ground truth, thereby guiding future research directions.

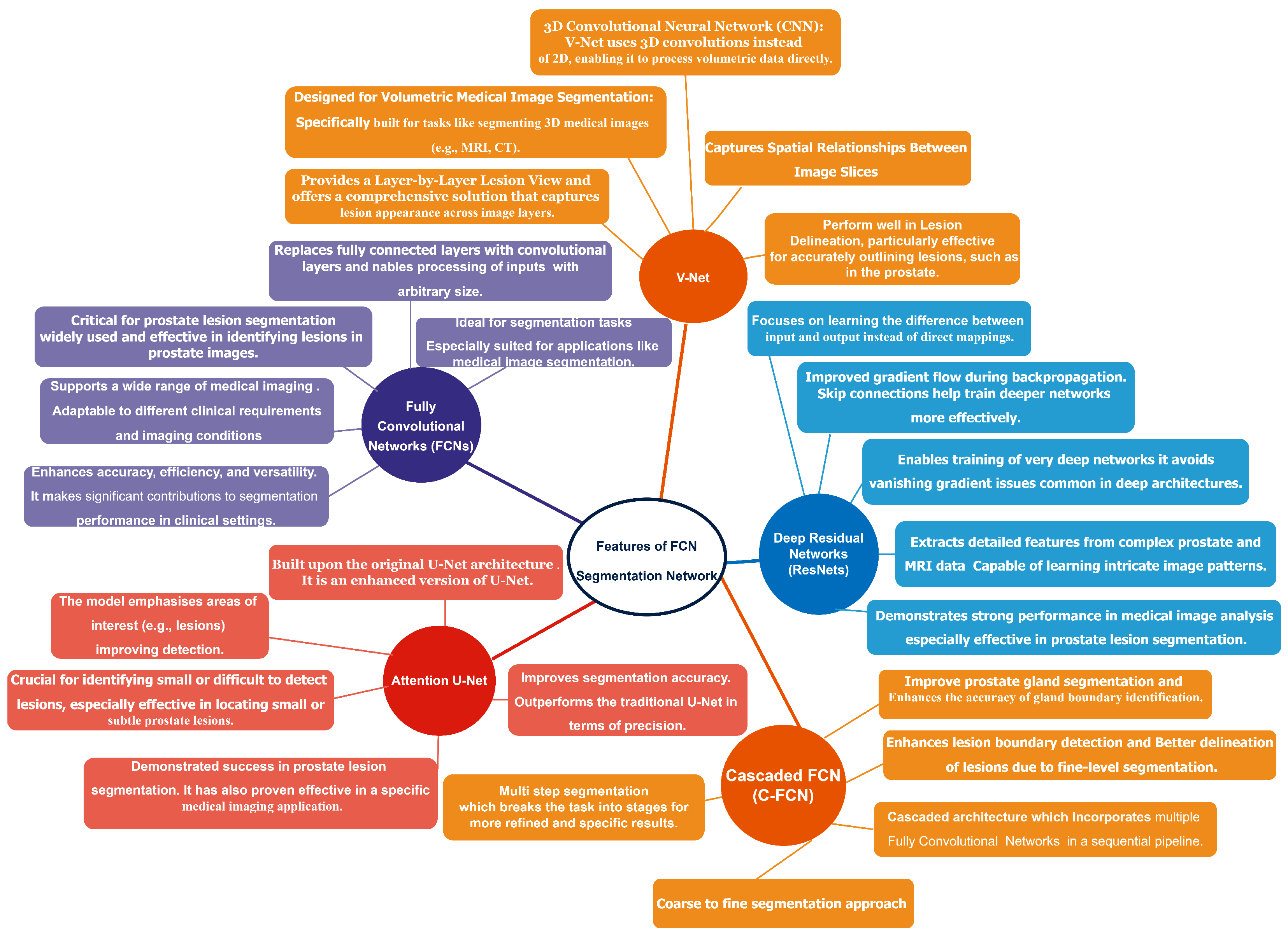

- In this study, we present several deep learning-based models that perform strongly in prostate lesion segmentation. We also comprehensively review various architectures, including V-Net, fully convolutional networks (FCNs), Attention U-Net, cascaded FCNs, and deep fully convolutional residual networks (FCRNs).

- By showcasing real-world implementations, this paper bridges the gap between research and clinical practice, demonstrating the potential impact of deep learning in improving prostate cancer diagnosis and treatment.

- The survey discusses commonly used datasets and evaluation metrics, providing a benchmark for researchers to assess the performance of their models.

Challenges in the Detection of Prostate Cancer

- Imaging Modalities: Several imaging sequences, such as T1-weighted MRI, T2-weighted MRI, dynamic contrast-enhanced (DCE) MRI, and diffusion-weighted imaging (DWI), provide complementary information about prostatic tissue. Each sequence captures different tissue features, which makes it difficult to fuse information across sequences for accurate lesion detection. Although a given sequence may be highly sensitive to particular lesion types, it often lacks specificity, so lesion detectability can differ from one sequence to another.

- Image Quality: Variations in image quality, including differences in resolution, contrast, and noise, can also significantly impact the detectability of lesions. High-quality, high-contrast images can reveal finer details, while low-quality, lower-contrast images can obscure subtle irregularities. Differences in hardware, scanning parameters, or operator procedures may exacerbate variations in image quality, thereby affecting the accuracy of analysis.

- Variation in Acquisition Protocols: MRI acquisition protocols vary between institutions, leading to variability in image appearance and quality. Differences in scanner type (e.g., 1.5 T vs. 3 T magnetic field strength), slice thickness, and imaging parameters all contribute to variability in the acquired data. These inconsistencies make it hard to develop lesion detection models that generalise well across datasets.

- Patient-Specific Factors: Patient-specific variables, such as anatomy, age, prostate volume, and the presence of benign conditions (e.g., benign prostatic hyperplasia or inflammation), contribute to image variability. These factors make it difficult to standardise analysis and to differentiate benign from malignant lesions reliably.

- Data Annotation and Ground Truth: Inconsistencies in image annotation and labelling between datasets can affect the training of artificial intelligence models. For example, non-standardised and subjective annotations by different radiologists can lead to inconsistencies in the ground truth, thus affecting the performance of detection algorithms.
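The annotation inconsistency described above can be quantified by measuring the overlap between the masks drawn by two readers. The following is a minimal sketch, assuming binary lesion masks stored as nested lists; the Dice similarity coefficient is one common choice for this, and the function name `dice_coefficient` and the two toy masks are hypothetical illustrations rather than data from any study.

```python
from itertools import chain


def dice_coefficient(mask_a, mask_b):
    """Dice similarity coefficient between two binary 2D masks.

    Returns a value in [0, 1]; 1.0 means the masks are identical.
    """
    a = [bool(v) for v in chain.from_iterable(mask_a)]
    b = [bool(v) for v in chain.from_iterable(mask_b)]
    if len(a) != len(b):
        raise ValueError("masks must contain the same number of pixels")
    overlap = sum(1 for x, y in zip(a, b) if x and y)
    total = sum(a) + sum(b)
    if total == 0:
        # Assumption: two empty masks count as perfect agreement.
        return 1.0
    return 2.0 * overlap / total


# Hypothetical 4x4 lesion masks drawn by two readers on the same slice.
reader_1 = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
reader_2 = [
    [0, 1, 1, 0],
    [0, 1, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]
agreement = dice_coefficient(reader_1, reader_2)
print(f"Inter-reader Dice: {agreement:.2f}")
```

A low inter-reader Dice on a dataset suggests that the ground truth itself is noisy, which limits the score any model trained on it can be expected to reach.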

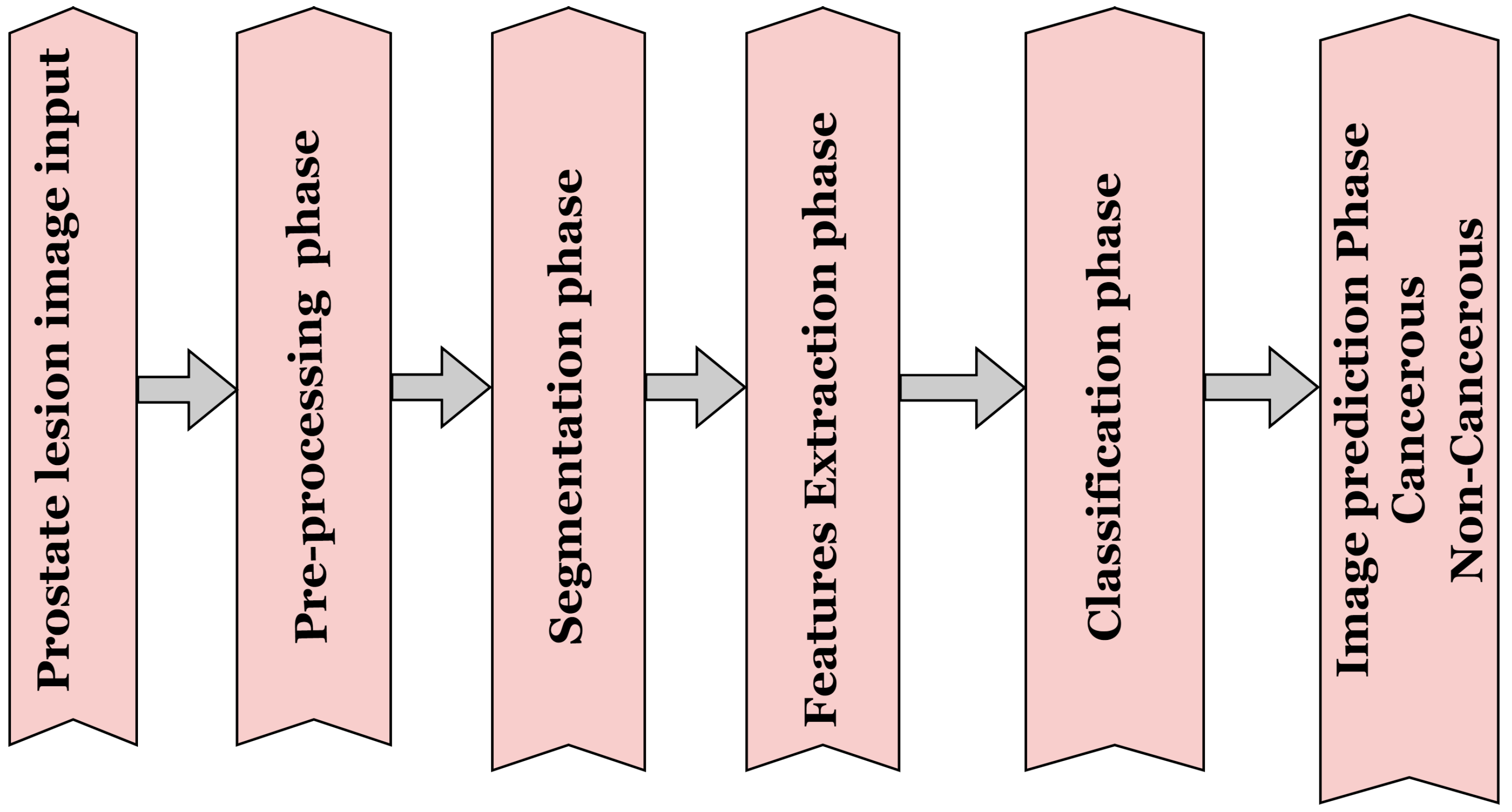

2. Methods for Analysing Prostate Lesion Images

2.1. Pre-Processing Methods for Prostate Lesion

2.2. Segmentation Method for Prostate Lesions

2.3. Traditional Intelligence Method

2.4. Deep Learning-Based Method

3. Classification Techniques for Prostate Lesions

3.1. Traditional Methods of Prostate Lesion Classification

3.2. Deep Neural Networks: A Cutting-Edge Technology

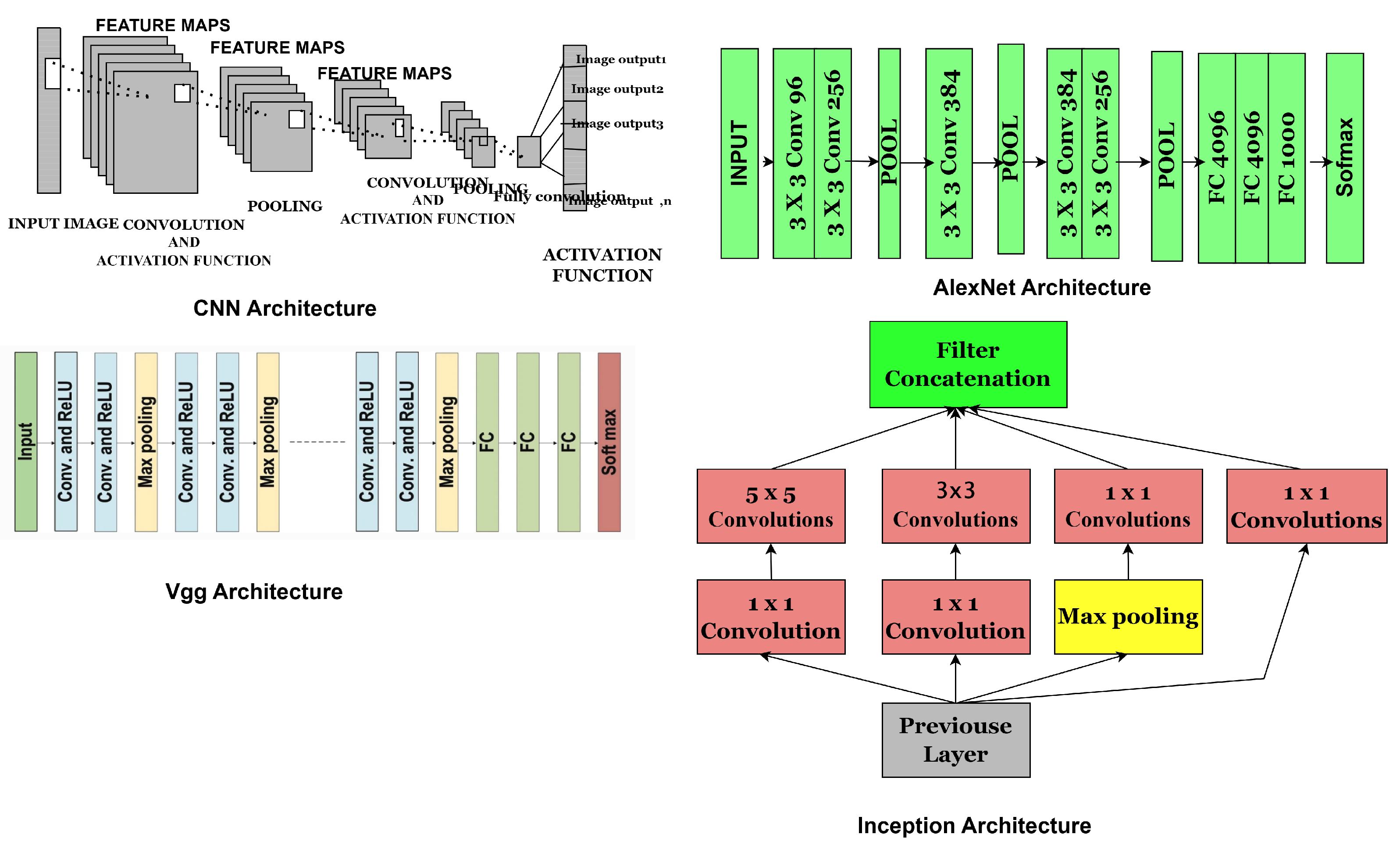

- The exponential growth in computing power anticipated by Moore’s Law (first stated in 1965), together with advances in graphics processing units (GPUs), has driven rapid progress in computer vision, particularly in deep learning (DL) architectures such as convolutional neural networks (CNNs). These advances, along with more efficient architectural formulations, have yielded state-of-the-art performance in image processing and classification, surpassing traditional techniques. However, challenges remain in the use of deep learning-based image analysis.

- Convolutional Neural Network Components: CNNs are artificial neural networks (ANNs) designed primarily for image analysis. These networks consist of neurons that optimise themselves through learning and adaptation. A CNN comprises several layers, each serving a different function, and can process high-dimensional inputs such as images. The general architecture of a CNN is illustrated in Figure 3, highlighting key components, including layers, activation functions, and hyperparameters. CNN layers are typically categorised into convolutional, pooling, and fully connected layers. The activation function transforms input signals into output signals and plays a crucial role in the functioning of neural networks. Standard activation functions include linear activation, the sigmoid function, rectified linear units (ReLUs, a piecewise linear function), exponential linear units (ELUs), and Softmax, which are mathematically represented by the equations below.
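The activation functions named above have simple closed forms. The sketch below writes them out in plain Python using their standard textbook definitions; the function names and the default `alpha=1.0` for ELU are choices made for this illustration, not values taken from the survey.

```python
import math


def linear(x):
    """Linear (identity) activation: f(x) = x."""
    return x


def sigmoid(x):
    """Sigmoid: f(x) = 1 / (1 + exp(-x)), computed in a numerically stable way."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def relu(x):
    """Rectified linear unit: f(x) = max(0, x)."""
    return max(0.0, x)


def elu(x, alpha=1.0):
    """Exponential linear unit: x if x > 0, else alpha * (exp(x) - 1)."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def softmax(logits):
    """Softmax over a list of scores; outputs are positive and sum to 1."""
    shift = max(logits)  # subtracting the maximum avoids overflow in exp
    exps = [math.exp(v - shift) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    print(sigmoid(0.0), relu(-2.0), elu(-1.0))
    print(softmax([1.0, 2.0, 3.0]))
```

Sigmoid and Softmax are typically used at the output of a classifier (binary and multi-class, respectively), while ReLU and ELU are typically used in hidden layers.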

3.3. Exponential Linear Unit (ELU)

3.4. SoftMax Function

4. Convolutional Neural Network Models

4.1. AlexNet

4.2. Visual Geometry Group (VGG) Network

4.3. Inception

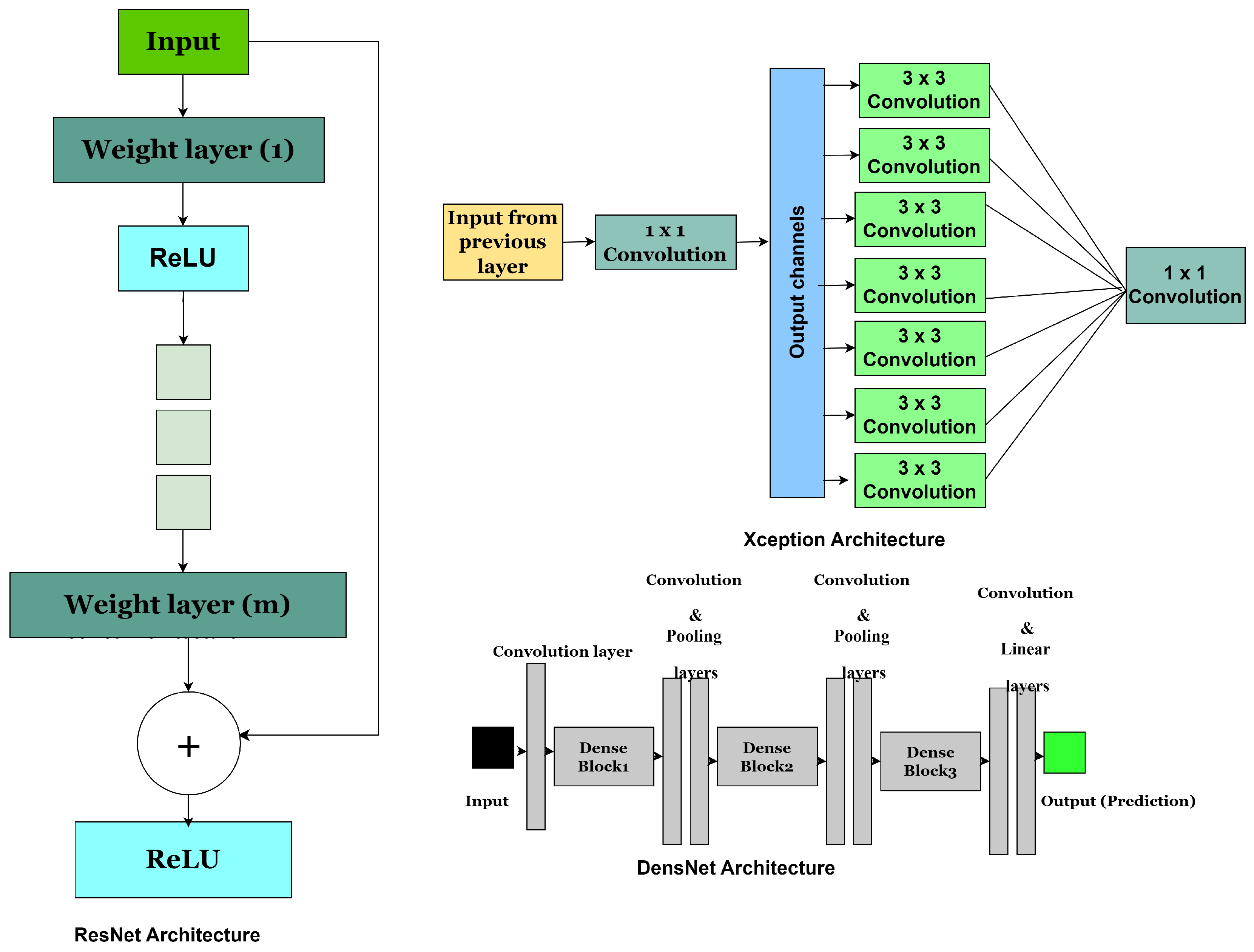

4.4. Residual Neural Network (ResNet)

4.5. DenseNet

4.6. Xception Architecture

4.7. Convolutional Block Attention Module (CBAM)

4.8. Residual Attention Neural Network

- The input feature map is $X$.

- The weights of the residual function are $W_F$.

- Residual mapping is $F(X; W_F)$.

- Attention mask generated from the input $X$ with weights $W_A$ is $A(X; W_A)$.

- The output feature map after the attention mechanism and residual is applied is $Y$.

- Element-wise multiplication between the attention mask $A$ and the residual mapping is $A \odot F$.

- $X_i$ represents the input to the i-th residual attention block.

- $A_i$ and $F_i$ represent the attention mechanism and residual function of the i-th block.
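The definitions above can be combined into a single block update. The sketch below assumes the update takes the form Y = X + A(X) ⊙ F(X), which is one plausible reading of the listed terms and not a formula confirmed by the text; the original Residual Attention Network paper uses the closely related form (1 + M(x)) · F(x). Feature maps are flattened to lists of floats, and `toy_residual` and `toy_attention` are hypothetical stand-ins for learned layers.

```python
import math


def residual_attention_block(x, residual_fn, attention_fn):
    """Apply one block under the assumed form Y = X + A(X) * F(X), element-wise."""
    f = residual_fn(x)   # residual mapping F(X)
    a = attention_fn(x)  # attention mask A(X), values expected in [0, 1]
    return [xi + ai * fi for xi, ai, fi in zip(x, a, f)]


def stack_blocks(x, blocks):
    """Feed the output of the i-th block into block i + 1."""
    for residual_fn, attention_fn in blocks:
        x = residual_attention_block(x, residual_fn, attention_fn)
    return x


def toy_residual(x):
    """Hypothetical residual function: doubles every feature."""
    return [2.0 * v for v in x]


def toy_attention(x):
    """Hypothetical soft mask: a sigmoid of each feature, so values lie in (0, 1)."""
    return [1.0 / (1.0 + math.exp(-v)) for v in x]


if __name__ == "__main__":
    features = [1.0, -2.0, 0.0]
    print(residual_attention_block(features, toy_residual, toy_attention))
    print(stack_blocks(features, [(toy_residual, toy_attention)] * 2))
```

Under this form, a mask of zeros leaves the input unchanged, so the attention branch can only add information to the identity path rather than suppress it.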

4.9. Transformer-Based Networks

4.10. CSWin U-Net

4.11. ProstAttention Net

4.12. Prostate Cancer Detection Model (PCDM)

4.13. EfficientNet-B4 + Efficient Channel Attention (Eff4-Attn)

4.14. Prostate Vision-Based Classification Network (ProViCNet)

4.15. Automated Multi-Modal Transformer Network (AMTNet)

| Architecture | Description | Strengths | Limitations | Performance |
|---|---|---|---|---|
| ResNet [57] | A deep neural network architecture that revolutionised deep learning by enabling extremely deep networks to be trained effectively. It uses “skip connections” or “shortcut connections” to bypass one or more layers. | Prevention of vanishing gradients, increased depth and performance, a modular design for transfer learning, and parameter efficiency. | Computational intensity, complexity in network design, and dimensionality issues are key challenges. | 3.57% top-5 error on the ImageNet test set |
| AlexNet [65] | The model is made up of eight layers, comprising five convolutional layers and three fully connected layers, with ReLU activation functions used for non-linearity. | It offers performance improvement, efficient training with GPUs, the introduction of ReLU activation, and dropout regularisation, though it is not robust for complex structures. | Large parameter count, susceptibility to overfitting, and inflexible architecture. | Top-1 and top-5 error rates of 37.5% and 17.0% |
| VGG [70] | This model is known for its simplicity and depth. The VGG network series (e.g., VGG16 and VGG19) use 16 and 19 weight layers, respectively, consisting of multiple convolutional and fully connected layers. | Simplicity and modularity, deep feature hierarchy, and suitability for transfer learning. | High computational cost and memory usage, redundant parameters, and slow training time are key challenges. | Top-1 and top-5 error rates of 23.7% and 6.8% |
| Inception [78] | This deep learning architecture revolutionised convolutional neural networks by introducing multi-scale processing within a single layer. The first model, Inception V1 (also known as GoogLeNet), uses an “Inception module” that applies multiple convolutions (1 × 1, 3 × 3, and 5 × 5) and pooling operations in parallel within the same layer. | The approach offers parameter efficiency, enables multi-scale feature extraction, adapts well to deep networks, and is effective for transfer learning. | Architecture complexity, computational demands, and higher memory requirements challenge the design of efficient and scalable deep networks. | Top-1 error of 21.2% and top-5 error of 5.6% |
| DenseNet [82] | A deep learning model that addresses some limitations of traditional CNNs by connecting each layer to every other layer within a dense block. In a typical CNN, each layer has its own set of filters and receives input only from the previous layer, whereas DenseNet layers receive inputs from all preceding layers within each dense block. | Efficient parameter use, improved gradient flow, feature reuse and enhanced representation, and reduced overfitting. | Memory-intensive processes, increased computational complexity, and difficulty in fine-tuning. | Error rates of 3.46% on C10+ and 17.18% on C100+ |
| Xception [84] | It builds upon the Inception architecture but takes the concept of depthwise separable convolutions to an extreme. Standard convolutions are replaced with depthwise separable convolutions, where each input channel is processed independently before a pointwise convolution (1 × 1 convolution) is applied to combine the features across channels. | Parameter efficiency and computational speed, enhanced feature extraction, and competitive accuracy. | Memory consumption, limited flexibility for transfer learning, and complexity in implementation are the primary challenges. | Top-1 accuracy of 0.790 and top-5 accuracy of 0.945 |
| Convolutional Block Attention Module [87] | A lightweight attention module that enhances the feature learning ability of convolutional neural networks. It refines feature maps by applying two types of attention sequentially: channel attention and spatial attention. | Enhanced feature representation, lightweight efficiency, modularity, flexibility, and performance gains. | Sequential processing, limited adaptability to diverse data, and potential redundancy in simple architectures. | Top-1 error of 29.27% and top-5 error of 10.09% |
| Residual Attention Neural Network [90] | It combines residual learning (from ResNet) with a self-attention mechanism to enhance feature extraction, which allows the network to focus on important parts of an image while ignoring irrelevant details. The network has a stacked structure of attention modules that perform spatial attention to highlight specific regions in the image and channel attention to emphasise useful features. | It enables selective feature learning, effective gradient flow, and modular attention blocks, while enhancing interpretability and proving useful in applications that require detailed feature focus, such as object detection, medical imaging, and facial recognition. | Increased computational complexity during training and a heightened risk of overfitting on small datasets. | Top-1 accuracy improvement of 0.6% |
| Transformer-Based Networks [93] | They use self-attention mechanisms to capture long-range dependencies and global contextual relationships within data, making them highly effective for complex tasks in both natural language processing and medical imaging. | Transformer-based networks are good at capturing global contextual information and long-range dependencies, leading to improved performance in complex data analysis tasks such as medical imaging and prostate lesion detection. | They require large amounts of data and significant computational resources for training and may struggle with limited datasets or real-time clinical deployment. | It achieved a score of 0.967 |
| CSWin U-Net [98] | CSWin U-Net is a hybrid deep learning model that integrates the U-Net architecture with the cross-shaped window transformer (CSWin transformer). The model leverages both convolutional neural networks (CNNs) and transformer-based self-attention mechanisms to enhance medical image segmentation, especially in complex tasks such as prostate cancer detection, brain tumour segmentation, and other biomedical imaging applications. | It combines the powerful global context modelling of the CSWin transformer with U-Net’s multi-scale feature extraction, enabling efficient long-range dependency capture, fine-grained segmentation accuracy, improved boundary detection, and reduced computational cost compared to full self-attention models, making it highly effective for complex medical imaging tasks. | Despite its strengths, this model remains more computationally intensive than traditional CNN-based models, requires large annotated datasets, is sensitive to hyperparameter tuning, may face generalisation challenges on unseen data, and typically demands longer training times. | 85.4% top-1 accuracy on ImageNet-1K, and 87.5% top-1 accuracy on ImageNet-1K with ImageNet-21K pre-training |
| ProstAttention Net [99] | This model is developed for prostate cancer detection and makes use of attention mechanisms. It selectively focuses on important regions within the prostate, improving its ability to detect small or subtle cancerous lesions. The integration of attention modules enhances both accuracy and interpretability, making it a promising tool to support clinical decision-making. | It leverages attention mechanisms to focus on critical regions in prostate images, improving the detection of small or subtle cancerous lesions. Its attention maps offer some interpretability, making it more transparent for clinical use. The model performs well even with imbalanced datasets and is adaptable across different imaging modalities. | Added attention modules increase complexity, requiring more computational resources. It may risk overfitting, especially with limited labelled data. Despite the use of attention, full explainability remains limited, and its generalisability across different clinical settings may still be a challenge without extensive multicentre validation. | Dice of |
| PCDM model [100] | A hybrid deep learning approach that combines ResNet50 for extracting detailed imaging features and Faster R-CNN for accurately detecting and classifying prostate cancer lesions on MRI scans, achieving high sensitivity and specificity in identifying clinically significant tumours. | The PCDM model achieves highly accurate prostate cancer detection by combining powerful feature extraction from ResNet50 with precise lesion localisation from Faster R-CNN, resulting in excellent sensitivity, specificity, and diagnostic reliability. | Its reliance on bounding-box detection may limit fine-grained lesion boundary delineation, and its performance can be affected by variations in MRI quality and acquisition protocols across institutions. | Accuracy of 95.2%, sensitivity of 97.4%, and specificity of 97.1% |
| Eff4-Attn [101] | A deep learning model that combines EfficientNet-B4 with efficient channel attention (ECA) to achieve high accuracy and speed in image classification. Its balanced architecture enhances feature focus while remaining lightweight, making it well suited to medical imaging tasks such as prostate cancer diagnosis in digital and telepathology settings. | This model delivers high diagnostic accuracy, operates efficiently with fewer parameters, and robustly detects fine tissue features using channel-wise attention. It performs well across different magnifications and enhances interpretability, making it well suited to real-world pathology applications. | Despite its high performance, the model faces limitations such as sensitivity to training data quality, reliance on time-consuming patch-based WSI processing, and the need for clinical validation to ensure real-world applicability and regulatory approval. | Cancer detection accuracy of 96.18% and Gleason grade accuracy of 94.86% |
| ProViCNet [103] | A 3D deep learning framework developed for automated classification of clinically significant prostate cancer (csPCa) using multiparametric MRI (mpMRI). It integrates T2-weighted, ADC, and DWI sequences into a unified 3D feature space, using a dual-branch architecture with modality-specific pathways and an attention mechanism to focus on critical prostate regions. | The model effectively integrates multiple mpMRI modalities with attention-guided feature extraction, leading to high accuracy in detecting clinically significant prostate cancer and reducing inter-reader variability. | Its performance depends on high-quality, complete mpMRI data, and its 3D architecture requires substantial computational resources, which may hinder deployment in low-resource clinical settings. | AUROC of 0.907; sensitivity of 0.880 and specificity of 0.654 |
| AMTNet [104] | A deep learning model designed for 3D medical image segmentation, particularly in prostate cancer detection. It uses a dual-stream encoder to process multiple imaging modalities such as T2-weighted MRI, DWI, and ADC maps and fuses them through a transformer-based attention mechanism. | It effectively captures long-range spatial dependencies and integrates diverse imaging modalities, leading to highly accurate lesion localisation and segmentation in prostate cancer detection. | The model’s transformer-based architecture results in increased computational complexity and longer training times, which may limit its scalability in resource-constrained clinical settings. | Achieves an average DSC of 0.907 and 0.851 |
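The parameter efficiency attributed to Xception in the table comes from replacing a standard convolution with a depthwise convolution followed by a 1 × 1 pointwise convolution. The arithmetic can be sketched as follows, ignoring bias terms; the layer sizes (3 × 3 kernel, 128 input channels, 256 output channels) are hypothetical values chosen only to illustrate the ratio.

```python
def standard_conv_params(kernel_size, in_channels, out_channels):
    """Weights in a standard 2D convolution (bias ignored)."""
    return kernel_size * kernel_size * in_channels * out_channels


def depthwise_separable_params(kernel_size, in_channels, out_channels):
    """Weights in a depthwise convolution plus a 1x1 pointwise convolution (bias ignored)."""
    depthwise = kernel_size * kernel_size * in_channels  # one k x k filter per input channel
    pointwise = in_channels * out_channels               # 1x1 convolution mixes channels
    return depthwise + pointwise


# Hypothetical layer: 3x3 kernel, 128 input channels, 256 output channels.
standard = standard_conv_params(3, 128, 256)
separable = depthwise_separable_params(3, 128, 256)
print(standard, separable, round(standard / separable, 2))
```

For this hypothetical layer the separable version needs roughly one ninth of the weights, which is the kind of saving the table refers to as parameter efficiency.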

5. Deep Learning Applications for Image-Based Prostate Cancer Diagnosis

5.1. Magnetic Resonance Imaging for Prostate Cancer Diagnosis

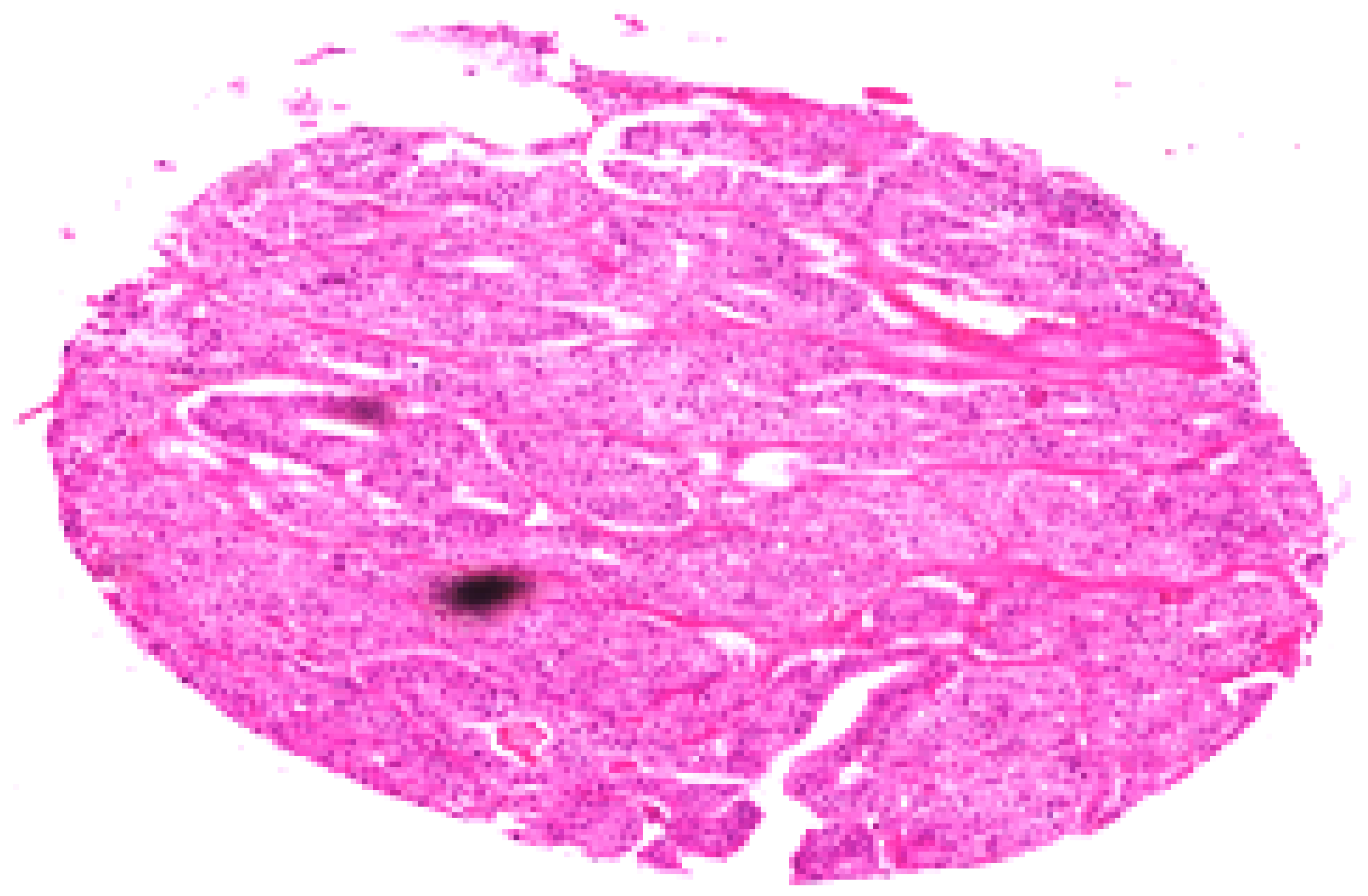

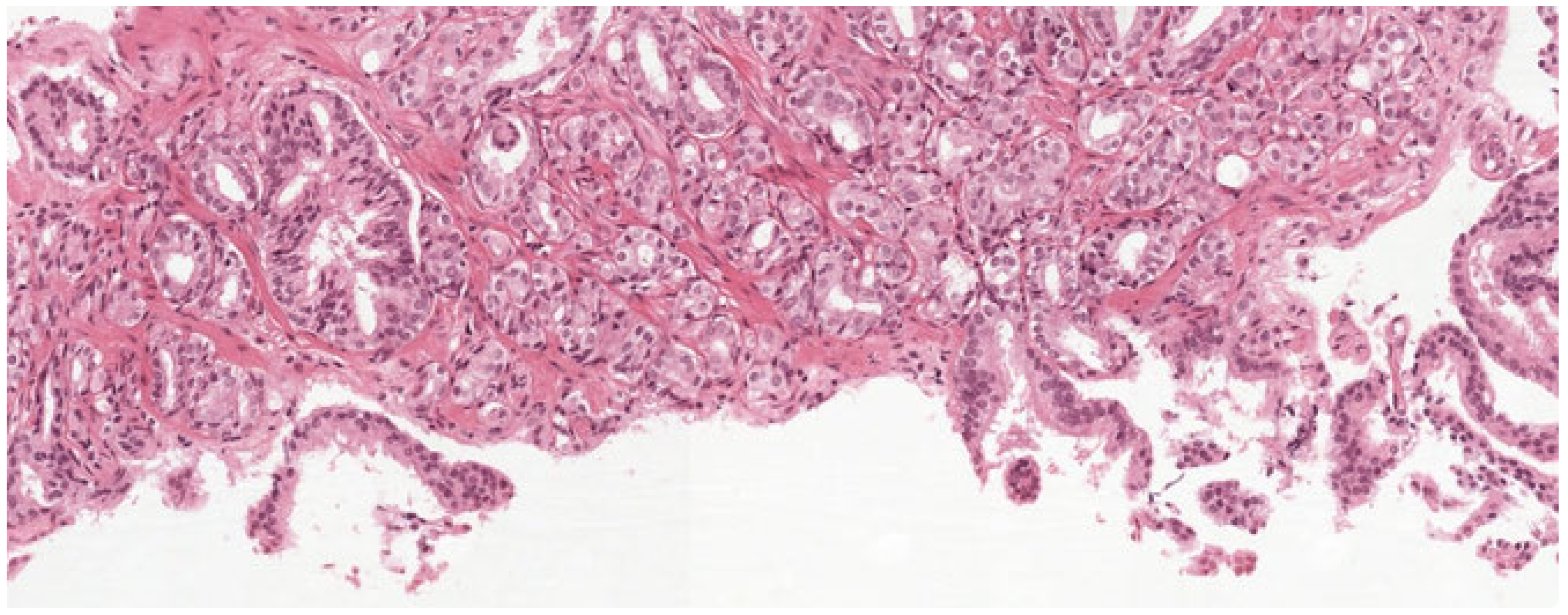

5.2. Deep Learning Model for Histopathological Diagnosis of Prostate Cancer

5.3. MRI-Based Segmentation Techniques for Prostate Cancer Diagnosis

5.4. Detection of Prostate Cancer with Computed Tomography Images

6. Prostate Cancer Dataset

6.1. Digital Pathology Dataset for Prostate Cancer Diagnosis [116]

6.2. Peso Dataset [120]

6.3. TCGA Prostate Adenocarcinoma Dataset (TCGA-PRAD) [121]

6.4. Gleason Challenge [122]

6.5. Prostate Cancer Grade Assessment (PANDA) Challenge [123]

6.6. PROSTATE-MRI [123]

6.7. NCIGT-PROSTATE [124]

6.8. PROMISE12 Challenge [125]

6.9. PROSTATE158 [71]

6.10. QIN-PROSTATE-Repeatability [126]

7. Performance Metrics

7.1. Accuracy

7.2. Recall

7.3. Specificity

7.4. Precision

7.5. F1-Score

7.6. False Positive Rate (FPR)
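The six metrics listed in this section can all be derived from the four confusion-matrix counts: true positives (TP), false positives (FP), true negatives (TN), and false negatives (FN). The sketch below uses the standard definitions; the function name, the example counts, and the convention of returning 0.0 when a denominator is zero are assumptions made for this illustration.

```python
def classification_metrics(tp, fp, tn, fn):
    """Compute standard binary classification metrics from confusion-matrix counts."""

    def ratio(numerator, denominator):
        # Assumption: an undefined ratio (zero denominator) is reported as 0.0.
        return numerator / denominator if denominator else 0.0

    precision = ratio(tp, tp + fp)
    recall = ratio(tp, tp + fn)  # also called sensitivity
    return {
        "accuracy": ratio(tp + tn, tp + fp + tn + fn),
        "recall": recall,
        "specificity": ratio(tn, tn + fp),
        "precision": precision,
        "f1": ratio(2 * precision * recall, precision + recall),
        "fpr": ratio(fp, fp + tn),  # equals 1 - specificity
    }


# Hypothetical counts for a lesion classifier evaluated on 100 cases.
metrics = classification_metrics(tp=40, fp=10, tn=45, fn=5)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

Because prostate datasets are often imbalanced, accuracy alone can be misleading, so recall, specificity, and F1-score are usually reported alongside it.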

8. Discussion

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Stewart, B.W.; Wild, C.P. World Cancer Report 2014; International Agency for Research on Cancer: Lyon, France, 2014.

- American Cancer Society. Key Statistics for Prostate Cancer—Prostate Cancer Facts. American Cancer Society. Available online: https://www.cancer.org/cancer/types/prostate-cancer/about/key-statistics.html (accessed on 16 July 2025).

- Garg, G. Computer-aided Diagnosis Systems for Prostate Cancer: A Comprehensive Study. Curr. Med. Imaging 2024, 20, e220523217205.

- Kumar, G.; Alqahtani, H. Deep Learning-Based Cancer Detection-Recent Developments, Trend and Challenges. CMES-Comput. Model. Eng. Sci. 2022, 130, 1271–1307.

- Zhu, M.; Sali, R.; Baba, F.; Khasawneh, H.; Ryndin, M.; Leveillee, R.J.; Hurwitz, M.D.; Lui, K.; Dixon, C.; Zhang, D.Y. Artificial intelligence in pathologic diagnosis, prognosis and prediction of prostate cancer. Am. J. Clin. Exp. Urol. 2024, 12, 200.

- Ayyad, S.M.; Shehata, M.; Shalaby, A.; Abou El-Ghar, M.; Ghazal, M.; El-Melegy, M.; Abdel-Hamid, N.B.; Labib, L.M.; Ali, H.A.; El-Baz, A. Role of AI and histopathological images in detecting prostate cancer: A survey. Sensors 2021, 21, 2586.

- Rabilloud, N.; Allaume, P.; Acosta, O.; De Crevoisier, R.; Bourgade, R.; Loussouarn, D.; Rioux-Leclercq, N.; Khene, Z.E.; Mathieu, R.; Bensalah, K.; et al. Deep learning methodologies applied to digital pathology in prostate cancer: A systematic review. Diagnostics 2023, 13, 2676.

- Jiang, H.; Diao, Z.; Shi, T.; Zhou, Y.; Wang, F.; Hu, W.; Zhu, X.; Luo, S.; Tong, G.; Yao, Y.D. A review of deep learning-based multiple-lesion recognition from medical images: Classification, detection and segmentation. Comput. Biol. Med. 2023, 157, 106726.

- Kim, H.; Kang, S.W.; Kim, J.H.; Nagar, H.; Sabuncu, M.; Margolis, D.J.; Kim, C.K. The role of AI in prostate MRI quality and interpretation: Opportunities and challenges. Eur. J. Radiol. 2023, 165, 110887.

- Stabile, A.; Giganti, F.; Kasivisvanathan, V.; Giannarini, G.; Moore, C.M.; Padhani, A.R.; Panebianco, V.; Rosenkrantz, A.B.; Salomon, G.; Turkbey, B.; et al. Factors influencing variability in the performance of multiparametric magnetic resonance imaging in detecting clinically significant prostate cancer: A systematic literature review. Eur. Urol. Oncol. 2020, 3, 145–167.

- Chaddad, A.; Kucharczyk, M.J.; Cheddad, A.; Clarke, S.E.; Hassan, L.; Ding, S.; Rathore, S.; Zhang, M.; Katib, Y.; Bahoric, B.; et al. Magnetic resonance imaging based radiomic models of prostate cancer: A narrative review. Cancers 2021, 13, 552.

- Michaely, H.J.; Aringhieri, G.; Cioni, D.; Neri, E. Current value of biparametric prostate MRI with machine-learning or deep-learning in the detection, grading, and characterization of prostate cancer: A systematic review. Diagnostics 2022, 12, 799.

- Cianflone, F.; Maris, B.; Bertolo, R.; Veccia, A.; Artoni, F.; Pettenuzzo, G.; Montanaro, F.; Porcaro, A.B.; Bianchi, A.; Malandra, S.; et al. Development of Artificial Intelligence-based Real-time Automatic Fusion of Multiparametric Magnetic Resonance Imaging and Transrectal Ultrasonography of the Prostate. Urology 2025, 199, 27–34.

- Mata, L.A.; Retamero, J.A.; Gupta, R.T.; García Figueras, R.; Luna, A. Artificial intelligence–assisted prostate cancer diagnosis: Radiologic-pathologic correlation. Radiographics 2021, 41, 1676–1697.

- Rakovic, K.; Colling, R.; Browning, L.; Dolton, M.; Horton, M.R.; Protheroe, A.; Lamb, A.D.; Bryant, R.J.; Scheffer, R.; Crofts, J.; et al. The use of digital pathology and artificial intelligence in histopathological diagnostic assessment of prostate cancer: A survey of prostate cancer UK supporters. Diagnostics 2022, 12, 1225.

- Belkahla, S.; Nahvi, I.; Biswas, S.; Nahvi, I.; Ben Amor, N. Advances and development of prostate cancer, treatment, and strategies: A systemic review. Front. Cell Dev. Biol. 2022, 10, 991330.

- Carter, H.B.; Albertsen, P.C.; Barry, M.J.; Etzioni, R.; Freedland, S.J.; Greene, K.L.; Holmberg, L.; Kantoff, P.; Konety, B.R.; Murad, M.H.; et al. Early detection of prostate cancer: AUA Guideline. J. Urol. 2013, 190, 419–426.

- Khan, Z.; Yahya, N.; Alsaih, K.; Al-Hiyali, M.I.; Meriaudeau, F. Recent automatic segmentation algorithms of MRI prostate regions: A review. IEEE Access 2021, 9, 97878–97905.

- Iqbal, S.; Siddiqui, G.F.; Rehman, A.; Hussain, L.; Saba, T.; Tariq, U.; Abbasi, A.A. Prostate cancer detection using deep learning and traditional techniques. IEEE Access 2021, 9, 27085–27100.

- Seeram, E.; Kanade, V. Image Processing and Analysis. In Artificial Intelligence in Medical Imaging Technology: An Introduction; Springer Nature: Cham, Switzerland, 2024; pp. 83–103.

- Li, X.; Li, C.; Rahaman, M.M.; Sun, H.; Li, X.; Wu, J.; Yao, Y.; Grzegorzek, M. A comprehensive review of computer-aided whole-slide image analysis: From datasets to feature extraction, segmentation, classification and detection approaches. Artif. Intell. Rev. 2022, 55, 4809–4878.

- Juneja, M.; Saini, S.K.; Gupta, J.; Garg, P.; Thakur, N.; Sharma, A.; Mehta, M.; Jindal, P. Survey of denoising, segmentation and classification of magnetic resonance imaging for prostate cancer. Multimed. Tools Appl. 2021, 80, 29199–29249.

- Garg, G.; Juneja, M. A survey of prostate segmentation techniques in different imaging modalities. Curr. Med. Imaging Rev. 2018, 14, 19–46.

- Xu, Y.; Quan, R.; Xu, W.; Huang, Y.; Chen, X.; Liu, F. Advances in medical image segmentation: A comprehensive review of traditional, deep learning and hybrid approaches. Bioengineering 2024, 11, 1034.

- Kaur, S.; Singla, J.; Nkenyereye, L.; Jha, S.; Prashar, D.; Joshi, G.P.; El-Sappagh, S.; Islam, M.S.; Islam, S.R. Medical diagnostic systems using artificial intelligence (ai) algorithms: Principles and perspectives. IEEE Access 2020, 8, 228049–228069.

- Kumar, D.; Pandey, R.C.; Mishra, A.K. A review of image features extraction techniques and their applications in image forensic. Multimed. Tools Appl. 2024, 83, 87801–87902.

- Alam, M.; Tahernezhadi, M.; Vege, H.K.; Rajesh, P. A machine learning classification technique for predicting prostate cancer. In Proceedings of the 2020 IEEE International Conference on Electro Information Technology (EIT), Chicago, IL, USA, 31 July–1 August 2020; pp. 228–232.

- Duran-Lopez, L.; Dominguez-Morales, J.P.; Rios-Navarro, A.; Gutierrez-Galan, D.; Jimenez-Fernandez, A.; Vicente-Diaz, S.; Linares-Barranco, A. Performance evaluation of deep learning-based prostate cancer screening methods in histopathological images: Measuring the impact of the model’s complexity on its processing speed. Sensors 2021, 21, 1122.

- Liu, Y.; Pu, H.; Sun, D.W. Efficient extraction of deep image features using convolutional neural network (CNN) for applications in detecting and analysing complex food matrices. Trends Food Sci. Technol. 2021, 113, 193–204.

- Deep Convolutional Neural Networks. Available online: https://www.run.ai/guides/deep-learning-for-computer-vision/deep-convolutional-neural-networks (accessed on 16 July 2025).

- Hassan, M.R.; Islam, M.F.; Uddin, M.Z.; Ghoshal, G.; Hassan, M.M.; Huda, S.; Fortino, G. Prostate cancer classification from ultrasound and MRI images using deep learning based Explainable Artificial Intelligence. Future Gener. Comput. Syst. 2022, 127, 462–472.

- Huang, X.; Li, Z.; Zhang, M.; Gao, S. Fusing hand-crafted and deep-learning features in a convolutional neural network model to identify prostate cancer in pathology images. Front. Oncol. 2022, 12, 994950.

- Horasan, A.; Güneş, A. Advancing prostate cancer diagnosis: A deep learning approach for enhanced detection in MRI images. Diagnostics 2024, 14, 1871.

- Corradini, D.; Brizi, L.; Gaudiano, C.; Bianchi, L.; Marcelli, E.; Golfieri, R.; Schiavina, R.; Testa, C.; Remondini, D. Challenges in the use of artificial intelligence for prostate cancer diagnosis from multiparametric imaging data. Cancers 2021, 13, 3944.

- Dehbozorgi, P.; Ryabchykov, O.; Bocklitz, T. A systematic investigation of image pre-processing on image classification. IEEE Access 2024, 12, 64913–64926.

- Tharinda, D.P. Image Binarization in a Nutshell. Medium, 6 June 2022. Available online: https://medium.com/@tharindad7/image-binarization-in-a-nutshell-b40b63c0228e (accessed on 16 July 2025).

- Said, K.A.M.; Jambek, A.B. Analysis of image processing using morphological erosion and dilation. J. Phys. Conf. Ser. 2021, 2071, 012033.

- Rodrigues, N.M.; Silva, S.; Vanneschi, L.; Papanikolaou, N. A comparative study of automated deep learning segmentation models for prostate MRI. Cancers 2023, 15, 1467.

- Wang, Z.; Wu, R.; Xu, Y.; Liu, Y.; Chai, R.; Ma, H. A two-stage CNN method for MRI image segmentation of prostate with lesion. Biomed. Signal Process. Control 2023, 82, 104610.

- Liu, X.; Song, L.; Liu, S.; Zhang, Y. A review of deep-learning-based medical image segmentation methods. Sustainability 2021, 13, 1224.

- Muhayimana, O. Automatic Segmentation of Prostate in MRI Based on Deep Learning. Ph.D. Dissertation, Nankai University, Tianjin, China, 2021.

- Comelli, A.; Dahiya, N.; Stefano, A.; Vernuccio, F.; Portoghese, M.; Cutaia, G.; Bruno, A.; Salvaggio, G.; Yezzi, A. Deep learning-based methods for prostate segmentation in magnetic resonance imaging. Appl. Sci. 2021, 11, 782.

- Peruch, F.; Bogo, F.; Bonazza, M.; Cappelleri, V.M.; Peserico, E. Simpler, faster, more accurate melanocytic lesion segmentation through meds. IEEE Trans. Biomed. Eng. 2014, 61, 557–565.

- Turkbey, B.; Haider, M.A. Deep learning-based artificial intelligence applications in prostate MRI: Brief summary. Br. J. Radiol. 2022, 95, 20210563.

- Maini, R.; Aggarwal, H. Study and comparison of various image edge detection techniques. Int. J. Image Process. 2009, 3, 1–11.

- Sharma, N.; Aggarwal, L.M. Automated medical image segmentation techniques. J. Med. Phys. 2010, 35, 3–14.

- Kaganami, H.G.; Beiji, Z. Region-based segmentation versus edge detection. In Proceedings of the 2009 Fifth International Conference on Intelligent Information Hiding and Multimedia Signal Processing, Kyoto, Japan, 12–14 September 2009; pp. 1217–1221.

- Karabağ, C.; Verhoeven, J.; Miller, N.R.; Reyes-Aldasoro, C.C. Texture segmentation: An objective comparison between five traditional algorithms and a deep-learning U-Net architecture. Appl. Sci. 2019, 9, 3900.

- Chouhan, S.S.; Kaul, A.; Singh, U.P. Image segmentation using computational intelligence techniques. Arch. Comput. Methods Eng. 2019, 26, 533–596. [Google Scholar] [CrossRef]

- Punn, N.S.; Agarwal, S. Modality specific U-Net variants for biomedical image segmentation: A survey. Artif. Intell. Rev. 2022, 55, 5845–5889. [Google Scholar] [CrossRef]

- Ozer, S.; Langer, D.L.; Liu, X.; Haider, M.A.; Van der Kwast, T.H.; Evans, A.J.; Yang, Y.; Wernick, M.N.; Yetik, I.S. Supervised and unsupervised methods for prostate cancer segmentation with multispectral MRI. Med. Phys. 2010, 37, 1873–1883. [Google Scholar] [CrossRef] [PubMed]

- Khaleel, B.I. A Review of Clustering Methods Based on Artificial Intelligent Techniques. J. Educ. Sci. 2022, 31, 69–82. [Google Scholar] [CrossRef]

- Armya, R.E.; Abdulazeez, A.M. Medical images segmentation based on unsupervised algorithms: A review. Qubahan Acad. J. 2021, 1, 71–80. [Google Scholar] [CrossRef]

- Jiangtao, W.; Ruhaiyem, N.I.R.; Panpan, F. A Comprehensive Review of U-Net and Its Variants: Advances and Applications in Medical Image Segmentation. IET Image Process. 2025, 19, e70019. [Google Scholar] [CrossRef]

- Zhang, C.; Achuthan, A.; Himel, G.M.S. State-of-the-art and challenges in pancreatic CT segmentation: A systematic review of U-Net and its variants. IEEE Access 2024, 12, 78726–78742. [Google Scholar] [CrossRef]

- Popescu, D.; El-Khatib, M.; El-Khatib, H.; Ichim, L. New trends in melanoma detection using neural networks: A systematic review. Sensors 2022, 22, 496. [Google Scholar] [CrossRef]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Olabanjo, O.; Wusu, A.; Asokere, M.; Afisi, O.; Okugbesan, B.; Olabanjo, O.; Folorunso, O.; Mazzara, M. Application of machine learning and deep learning models in prostate cancer diagnosis using medical images: A systematic review. Analytics 2023, 2, 708–744. [Google Scholar] [CrossRef]

- Al-Karawi, D.; Al-Assam, H.; Du, H.; Sayasneh, A.; Landolfo, C.; Timmerman, D.; Bourne, T.; Jassim, S. An evaluation of the effectiveness of image-based texture features extracted from static B-mode ultrasound images in distinguishing between benign and malignant ovarian masses. Ultrason. Imaging 2021, 43, 124–138. [Google Scholar] [CrossRef]

- Samek, W.; Montavon, G.; Lapuschkin, S.; Anders, C.J.; Müller, K.R. Explaining deep neural networks and beyond: A review of methods and applications. Proc. IEEE 2021, 109, 247–278. [Google Scholar] [CrossRef]

- De Ville, B. Decision trees. Wiley Interdiscip. Rev. Comput. Stat. 2013, 5, 448–455. [Google Scholar] [CrossRef]

- Aha, D.W.; Kibler, D.; Albert, M.K. Instance-based learning algorithms. Mach. Learn. 1991, 6, 37–66. [Google Scholar] [CrossRef]

- Abdullah, D.M.; Abdulazeez, A.M. Machine learning applications based on SVM classification a review. Qubahan Acad. J. 2021, 1, 81–90. [Google Scholar] [CrossRef]

- Khan, A.; Sohail, A.; Zahoora, U.; Qureshi, A.S. A survey of the recent architectures of deep convolutional neural networks. Artif. Intell. Rev. 2020, 53, 5455–5516. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90. [Google Scholar] [CrossRef]

- Tang, W.; Sun, J.; Wang, S.; Zhang, Y. Review of AlexNet for medical image classification. arXiv 2023, arXiv:2311.08655. [Google Scholar] [CrossRef]

- Saha, A.; Bosma, J.S.; Twilt, J.J.; van Ginneken, B.; Bjartell, A.; Padhani, A.R.; Bonekamp, D.; Villeirs, G.; Salomon, G.; Giannarini, G.; et al. Artificial intelligence and radiologists in prostate cancer detection on MRI (PI-CAI): An international, paired, non-inferiority, confirmatory study. Lancet Oncol. 2024, 25, 879–887. [Google Scholar] [CrossRef]

- Gunashekar, D.D.; Bielak, L.; Hägele, L.; Oerther, B.; Benndorf, M.; Grosu, A.L.; Brox, T.; Zamboglou, C.; Bock, M. Explainable AI for CNN-based prostate tumor segmentation in multi-parametric MRI correlated to whole mount histopathology. Radiat. Oncol. 2022, 17, 65. [Google Scholar] [CrossRef]

- Payan, A.; Montana, G. Predicting Alzheimer’s disease: A neuroimaging study with 3D convolutional neural networks. arXiv 2015, arXiv:1502.02506. [Google Scholar]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- Adams, L.C.; Makowski, M.R.; Engel, G.; Rattunde, M.; Busch, F.; Asbach, P.; Niehues, S.M.; Vinayahalingam, S.; van Ginneken, B.; Litjens, G.; et al. Prostate158 - An expert-annotated 3T MRI dataset and algorithm for prostate cancer detection. Comput. Biol. Med. 2022, 148, 105817. [Google Scholar] [CrossRef]

- Chauhan, I.; Kekre, S.; Miglani, A.; Kankar, P.K.; Ratnaparkhe, M.B. CNN-based damage classification of soybean kernels using a high-magnification image dataset. J. Food Meas. Charact. 2025, 19, 3471–3495. [Google Scholar] [CrossRef]

- Verdhan, V.; Verdhan, V. VGGNet and AlexNet networks. In Computer Vision Using Deep Learning: Neural Network Architectures with Python and Keras; Apress: Berkeley, CA, USA, 2021; pp. 103–139. [Google Scholar]

- Ranzato, M.; Huang, F.J.; Boureau, Y.L.; LeCun, Y. Unsupervised learning of invariant feature hierarchies with applications to object recognition. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–8. [Google Scholar]

- Chawla, S.; Gupta, D.; Pippal, S.K. Review on Architectures of Convolutional Neural Network. In Proceedings of the 2025 3rd International Conference on Disruptive Technologies (ICDT), Greater Noida, India, 7–8 March 2025; pp. 442–448. [Google Scholar]

- Peng, B.; Ge, J.; Wei, G.; Wan, A. Research on identification of key brittleness factors in emergency medical resources support system based on complex network. Artif. Intell. Med. 2022, 131, 102350. [Google Scholar] [CrossRef]

- Esteva, A.; Robicquet, A.; Ramsundar, B.; Kuleshov, V.; DePristo, M.; Chou, K.; Cui, C.; Corrado, G.; Thrun, S.; Dean, J. A guide to deep learning in healthcare. Nat. Med. 2019, 25, 24–29. [Google Scholar] [CrossRef] [PubMed]

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826. [Google Scholar]

- Tjoa, E.; Guan, C. A survey on explainable artificial intelligence (XAI): Toward medical XAI. IEEE Trans. Neural Netw. Learn. Syst. 2020, 32, 4793–4813. [Google Scholar] [CrossRef] [PubMed]

- Lundervold, A.S.; Lundervold, A. An overview of deep learning in medical imaging focusing on MRI. Z. Med. Phys. 2019, 29, 102–127. [Google Scholar] [CrossRef] [PubMed]

- Linkon, A.H.M.; Labib, M.M.; Hasan, T.; Hossain, M. Deep learning in prostate cancer diagnosis and Gleason grading in histopathology images: An extensive study. Inform. Med. Unlocked 2021, 24, 100582. [Google Scholar] [CrossRef]

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708. [Google Scholar]

- Nayagam, R.D.; Selvathi, D. A systematic review of deep learning methods for the classification and segmentation of prostate cancer on magnetic resonance images. Int. J. Imaging Syst. Technol. 2024, 34, e23064. [Google Scholar] [CrossRef]

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258. [Google Scholar]

- Albahri, A.S.; Duhaim, A.M.; Fadhel, M.A.; Alnoor, A.; Baqer, N.S.; Alzubaidi, L.; Albahri, O.S.; Alamoodi, A.H.; Bai, J.; Salhi, A.; et al. A systematic review of trustworthy and explainable artificial intelligence in healthcare: Assessment of quality, bias risk, and data fusion. Inf. Fusion 2023, 96, 156–191. [Google Scholar] [CrossRef]

- Rahimzadeh, M.; Attar, A. A modified deep convolutional neural network for detecting COVID-19 and pneumonia from chest X-ray images based on the concatenation of xception and resnet50v2. Inform. Med. Unlocked 2020, 19, 100360. [Google Scholar] [CrossRef] [PubMed]

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19. [Google Scholar]

- Englman, C.; Fütterer, J.J.; Giganti, F.; Moore, C.M. A History of Reporting Standards for Prostate Magnetic Resonance Imaging: PI-RADS, PRECISE, PI-QUAL, PI-RR, and PI-FAB. In Imaging and Focal Therapy of Early Prostate Cancer; Springer Nature: Cham, Switzerland, 2024; pp. 135–154. [Google Scholar]

- Pang, B. Classification of images using EfficientNet CNN model with convolutional block attention module (CBAM) and spatial group-wise enhance module (SGE). In Proceedings of the International Conference on Image, Signal Processing, and Pattern Recognition (ISPP 2022), Guilin, China, 25–27 February 2022; Volume 12247, pp. 34–41. [Google Scholar]

- Wang, F.; Jiang, M.; Qian, C.; Yang, S.; Li, C.; Zhang, H.; Wang, X.; Tang, X. Residual attention network for image classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3156–3164. [Google Scholar]

- Holzinger, A.; Langs, G.; Denk, H.; Zatloukal, K.; Müller, H. Causability and explainability of artificial intelligence in medicine. Wiley Interdiscip. Rev. Data Min. Knowl. Discov. 2019, 9, e1312. [Google Scholar] [CrossRef] [PubMed]

- An, Q.; Chen, W.; Shao, W. A deep convolutional neural network for pneumonia detection in X-ray images with attention ensemble. Diagnostics 2024, 14, 390. [Google Scholar] [CrossRef]

- Chaurasia, A.K.; Harris, H.C.; Toohey, P.W.; Hewitt, A.W. A generalised vision transformer-based self-supervised model for diagnosing and grading prostate cancer using histological images. Prostate Cancer Prostatic Dis. 2025, 1–9. [Google Scholar] [CrossRef] [PubMed]

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929. [Google Scholar]

- Sangeetha, S.K.; Mathivanan, S.K.; Muthukumaran, V.; Cho, J.; Easwaramoorthy, S.V. An Empirical Analysis of Transformer-Based and Convolutional Neural Network Approaches for Early Detection and Diagnosis of Cancer Using Multimodal Imaging and Genomic Data. IEEE Access 2024, 13, 6120–6145. [Google Scholar] [CrossRef]

- Takahashi, S.; Sakaguchi, Y.; Kouno, N.; Takasawa, K.; Ishizu, K.; Akagi, Y.; Aoyama, R.; Teraya, N.; Bolatkan, A.; Shinkai, N.; et al. Comparison of vision transformers and convolutional neural networks in medical image analysis: A systematic review. J. Med. Syst. 2024, 48, 84. [Google Scholar] [CrossRef]

- Abudalou, S.; Qi, J.; Choi, J.; Gage, K.; Pow-Sang, J.; Yilmaz, Y.; Balagurunathan, Y. Improving Prostate Gland Segmenting Using Transformer Based Architectures. 2025. Available online: https://arxiv.org/pdf/2506.14844 (accessed on 16 July 2025).

- Dong, X.; Bao, J.; Chen, D.; Zhang, W.; Yu, N.; Yuan, L.; Chen, D.; Guo, B. CSWin Transformer: A general vision transformer backbone with cross-shaped windows. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 12124–12134. [Google Scholar]

- Xu, P.; Zhu, X.; Clifton, D.A. Multimodal learning with transformers: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 12113–12132. [Google Scholar] [CrossRef]

- Talaat, F.M.; El-Sappagh, S.; Alnowaiser, K.; Hassan, E. Improved prostate cancer diagnosis using a modified ResNet50-based deep learning architecture. BMC Med. Inform. Decis. Mak. 2024, 24, 23. [Google Scholar] [CrossRef]

- Alici-Karaca, D.; Akay, B. An efficient deep learning model for prostate cancer diagnosis. IEEE Access 2024, 12, 150776–150792. [Google Scholar] [CrossRef]

- Battazza, A.; da Silva Brasileiro, F.C.; Tasaka, A.C.; Bulla, C.; Ximenes, P.P.; Hosomi, J.E.; da Silva, P.F.; da Silva, L.F.; de Moura, F.B.C.; Rocha, N.S. Integrating telepathology and digital pathology with artificial intelligence: An inevitable future. Vet. World 2024, 17, 1667. [Google Scholar] [CrossRef] [PubMed]

- Lee, J.H.; Li, C.X.; Jahanandish, H.; Bhattacharya, I.; Vesal, S.; Zhang, L.; Sang, S.; Choi, M.H.; Soerensen, S.J.C.; Zhou, S.R.; et al. Prostate-Specific Foundation Models for Enhanced Detection of Clinically Significant. arXiv 2025, arXiv:2502.00366. [Google Scholar]

- Zheng, S.; Tan, J.; Jiang, C.; Li, L. Automated multi-modal transformer network (amtnet) for 3d medical images segmentation. Phys. Med. Biol. 2023, 68, 025014. [Google Scholar] [CrossRef] [PubMed]

- Puttagunta, M.; Ravi, S. Medical image analysis based on deep learning approach. Multimed. Tools Appl. 2021, 80, 24365–24398. [Google Scholar] [CrossRef]

- Khosravi, P.; Lysandrou, M.; Eljalby, M.; Li, Q.; Kazemi, E.; Zisimopoulos, P.; Sigaras, A.; Brendel, M.; Barnes, J.; Ricketts, C.; et al. A deep learning approach to diagnostic classification of prostate cancer using pathology–radiology fusion. J. Magn. Reson. Imaging 2021, 54, 462–471. [Google Scholar] [CrossRef]

- Hiremath, A.; Shiradkar, R.; Merisaari, H.; Prasanna, P.; Ettala, O.; Taimen, P.; Aronen, H.J.; Boström, P.J.; Jambor, I.; Madabhushi, A. Test-retest repeatability of a deep learning architecture in detecting and segmenting clinically significant prostate cancer on apparent diffusion coefficient (ADC) maps. Eur. Radiol. 2021, 31, 379–391. [Google Scholar] [CrossRef]

- Naik, N.; Tokas, T.; Shetty, D.K.; Hameed, B.Z.; Shastri, S.; Shah, M.J.; Ibrahim, S.; Rai, B.P.; Chłosta, P.; Somani, B.K. Role of deep learning in prostate cancer management: Past, present and future based on a comprehensive literature review. J. Clin. Med. 2022, 11, 3575. [Google Scholar] [CrossRef]

- Li, S.T.; Zhang, L.; Guo, P.; Pan, H.Y.; Chen, P.Z.; Xie, H.F.; Xie, B.K.; Chen, J.; Lai, Q.Q.; Li, Y.Z.; et al. Prostate cancer of magnetic resonance imaging automatic segmentation and detection of based on 3D-Mask RCNN. J. Radiat. Res. Appl. Sci. 2023, 16, 100636. [Google Scholar] [CrossRef]

- Kott, O.; Linsley, D.; Amin, A.; Karagounis, A.; Jeffers, C.; Golijanin, D.; Serre, T.; Gershman, B. Development of a deep learning algorithm for the histopathologic diagnosis and Gleason grading of prostate cancer biopsies: A pilot study. Eur. Urol. Focus 2021, 7, 347–351. [Google Scholar] [CrossRef]

- Schelb, P.; Tavakoli, A.A.; Tubtawee, T.; Hielscher, T.; Radtke, J.P.; Görtz, M.; Schuetz, V.; Kuder, T.A.; Schimmoeller, L.; Stenzinger, A.; et al. Comparison of prostate MRI lesion segmentation agreement between multiple radiologists and a fully automatic deep learning system. In RöFo-Fortschritte auf dem Gebiet der Röntgenstrahlen und der bildgebenden Verfahren; Georg Thieme Verlag KG: Stuttgart, Germany, 2021; Volume 193, pp. 559–573. [Google Scholar]

- Lai, C.C.; Wang, H.K.; Wang, F.N.; Peng, Y.C.; Lin, T.P.; Peng, H.H.; Shen, S.H. Autosegmentation of prostate zones and cancer regions from biparametric magnetic resonance images by using deep-learning-based neural networks. Sensors 2021, 21, 2709. [Google Scholar] [CrossRef]

- Ma, L.; Guo, R.; Zhang, G.; Tade, F.; Schuster, D.M.; Nieh, P.; Master, V.; Fei, B. Automatic segmentation of the prostate on CT images using deep learning and multi-atlas fusion. Proc. SPIE Int. Soc. Opt. Eng. 2017, 10133, 101332O. [Google Scholar] [PubMed]

- Soni, M.; Khan, I.R.; Babu, K.S.; Nasrullah, S.; Madduri, A.; Rahin, S.A. Light weighted healthcare CNN model to detect prostate cancer on multiparametric MRI. Comput. Intell. Neurosci. 2022, 2022, 5497120. [Google Scholar] [CrossRef] [PubMed]

- Hong, S.; Kim, S.H.; Yoo, B.; Kim, J.Y. Deep learning algorithm for tumor segmentation and discrimination of clinically significant cancer in patients with prostate cancer. Curr. Oncol. 2023, 30, 7275–7285. [Google Scholar] [CrossRef] [PubMed]

- Oner, M.U.; Ng, M.Y.; Giron, D.M.; Xi, C.E.C.; Xiang, L.A.Y.; Singh, M.; Yu, W.; Sung, W.K.; Wong, C.F.; Lee, H.K. Digital Pathology Dataset for Prostate Cancer Diagnosis. 2022. Available online: https://zenodo.org/records/5971764 (accessed on 16 July 2025).

- Abbasi, A.A.; Hussain, L.; Awan, I.A.; Abbasi, I.; Majid, A.; Nadeem, M.S.A.; Chaudhary, Q.A. Detecting prostate cancer using deep learning convolution neural network with transfer learning approach. Cogn. Neurodyn. 2020, 14, 523–533. [Google Scholar] [CrossRef]

- Soerensen, S.J.; Fan, R.E.; Seetharaman, A.; Chen, L.; Shao, W.; Bhattacharya, I.; Kim, Y.H.; Sood, R.; Borre, M.; Chung, B.I.; et al. Deep learning improves speed and accuracy of prostate gland segmentations on magnetic resonance imaging for targeted biopsy. J. Urol. 2021, 206, 604–612. [Google Scholar] [CrossRef]

- Reda, I.; Khalil, A.; Elmogy, M.; Abou El-Fetouh, A.; Shalaby, A.; Abou El Ghar, M.; Elmaghraby, A.; Ghazal, M.; El-Baz, A. Deep learning role in early diagnosis of prostate cancer. Technol. Cancer Res. Treat. 2018, 17, 1533034618775530. [Google Scholar] [CrossRef]

- Bulten, W. The Public PESO Dataset: Prostate H&E Whole-Slide Images for Epithelium Segmentation. 29 July 2019. Available online: https://www.wouterbulten.nl/posts/peso-dataset-whole-slide-image-prosate-cancer (accessed on 16 July 2025).

- Gleason2019 Grand Challenge. Available online: https://gleason2019.grand-challenge.org/ (accessed on 16 July 2025).

- Bulten, W.; Litjens, G.; Pinckaers, H.; Ström, P.; Eklund, M.; Kartasalo, K.; Demkin, M.; Dane, S. The PANDA challenge: Prostate cANcer graDe Assessment using the Gleason grading system. In Proceedings of the 23rd International Conference on Medical Image Computing and Computer Assisted Intervention (MICCAI 2020), Lima, Peru, 4–8 October 2020. [Google Scholar]

- PROSTATE-MRI. Available online: https://www.cancerimagingarchive.net/collection/prostate-mri (accessed on 16 July 2025).

- Available online: https://www.brighamandwomens.org/research/amigo/advanced-multimodality-image-guided-operating-suite (accessed on 16 July 2025).

- Litjens, G.; Toth, R.; Van De Ven, W.; Hoeks, C.; Kerkstra, S.; Van Ginneken, B.; Vincent, G.; Guillard, G.; Birbeck, N.; Zhang, J.; et al. Evaluation of prostate segmentation algorithms for MRI: The PROMISE12 challenge. Med. Image Anal. 2014, 18, 359–373. [Google Scholar] [CrossRef]

- National Cancer Institute. The Cancer Imaging Archive: QIN-PROSTATE-Repeatability. 2018. Available online: https://www.cancerimagingarchive.net/collection/qin-prostate-repeatability/ (accessed on 16 July 2025).

- Prostate cANcer graDe Assessment (PANDA) Challenge. Available online: https://panda.grand-challenge.org (accessed on 16 July 2025).

- Muckley, M.J.; Riemenschneider, B.; Radmanesh, A.; Kim, S.; Jeong, G.; Ko, J.; Jun, Y.; Shin, H.; Hwang, D.; Mostapha, M.; et al. Results of the 2020 fastMRI challenge for machine learning MR image reconstruction. IEEE Trans. Med. Imaging 2021, 40, 2306–2317. [Google Scholar] [CrossRef]

- Arunachalam, M.; Chiranjeev, C.; Mondal, B.; Sanjay, T. Generative AI Revolution: Shaping the Future of Healthcare Innovation. In Revolutionizing the Healthcare Sector with AI; IGI Global: Hershey, PA, USA, 2024; pp. 341–364. [Google Scholar]

| Method | Description | Advantages | Disadvantages |
|---|---|---|---|
| Edge detection and contour features [45] | Handcrafted feature-based method. Examples include the Canny edge detector and active contour models (snakes). | Simple and efficient; detects sharp boundaries; extracts features effectively; reduces data complexity; versatile across modalities. | Sensitive to noise; struggles with weak or fuzzy boundaries; produces fragmented contours; tends to over-segment; lacks robustness for complex structures. |
| Intensity-based features [46] | Handcrafted feature-based method relying on pixel intensity values. Examples include thresholding and histogram analysis. | Simple and efficient; fast, with minimal user interaction; effective on high-contrast images; versatile across imaging modalities. | Sensitive to noise and artifacts; struggles with low-contrast images; prone to over- or under-segmentation; limited to simple structures; highly dependent on threshold settings. |
| Region-based features [47] | Divides an image into regions based on pixel similarity. Examples include region growing and the watershed algorithm. | Preserves spatial relationships; effective for homogeneous regions; less sensitive to noise; robust under varying contrast; capable of handling complex structures. | Prone to over-segmentation; sensitive to initialisation and intensity gradients; computationally complex; struggles with heterogeneous regions. |
| Texture-based segmentation [48] | Analyses pixel intensity patterns to differentiate image regions. Examples include grey-level co-occurrence matrix (GLCM) and wavelet-based methods. | Captures fine details; performs well in heterogeneous regions; supports versatile feature extraction; improves segmentation of complex structures; resistant to illumination variations. | High computational complexity; sensitive to noise; difficulty with homogeneous regions; limited interpretability; over-segmentation in high-texture areas. |
| Traditional intelligence-based methods [49] | Includes methods such as artificial neural networks (ANNs), genetic algorithms, fuzzy logic, and SVMs. | Interpretable models with low computational requirements; effective on small datasets; flexible across problem types. | Limited accuracy; poor generalisation; reliance on manual feature engineering; susceptibility to noise; difficulty handling multimodal data. |
| Deep learning-based methods [50] | Uses models such as CNNs and U-Nets for automatic feature learning and segmentation. | Automatic feature learning; high accuracy; adaptability to complex structures; end-to-end learning; robustness to noise and variability. | High data requirements; significant computational cost; risk of overfitting; extensive preprocessing; time-consuming training. |
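The table notes that intensity-based segmentation is highly dependent on threshold settings. As a minimal illustrative sketch (not a method taken from the cited works), the code below picks a global threshold automatically with Otsu's criterion, which maximises the between-class variance of the intensity histogram. The function name `otsu_threshold`, the bin count, and the synthetic two-level image are assumptions made only for this example.

```python
import numpy as np


def otsu_threshold(image, bins=256):
    """Return the intensity threshold that maximises between-class variance."""
    hist, edges = np.histogram(np.asarray(image).ravel(), bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    prob = hist / hist.sum()

    w0 = np.cumsum(prob)                # weight of the "dark" class up to each bin
    w1 = 1.0 - w0                       # weight of the "bright" class
    mu_cum = np.cumsum(prob * centers)  # cumulative (unnormalised) mean intensity
    mu_total = mu_cum[-1]

    denom = w0 * w1
    valid = denom > 1e-12               # skip splits that leave one class empty
    between = np.zeros_like(denom)
    between[valid] = (mu_total * w0[valid] - mu_cum[valid]) ** 2 / denom[valid]

    best_bin = int(np.argmax(between))
    return edges[best_bin + 1]          # upper edge of the last "dark" bin


# Hypothetical two-level "image": dark background (10) with a bright square (200).
image = np.full((8, 8), 10.0)
image[2:6, 2:6] = 200.0

threshold = otsu_threshold(image)
mask = image > threshold                # True inside the bright square
```

On real prostate images the histogram is rarely this cleanly bimodal, which is consistent with the limitations listed in the table (noise sensitivity and poor behaviour on low-contrast images).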

| Methods | Outline | Strengths | Limitations | Applications |
|---|---|---|---|---|
| Traditional intelligence-based methods [19] | Relies on structured rules and algorithms for reasoning and classification. | Low data requirements and interpretability. | Limited flexibility and scalability challenges. | Expert systems and financial analysis. |
| Deep Neural Networks [60] | A neural network model with multiple layers between input and output layers for complex pattern learning. | Automatic feature extraction, high accuracy, scalability, and versatility. | High computational cost, large data requirements, limited interpretability, and susceptibility to overfitting. | Computer vision, natural language processing (NLP), healthcare, and audio processing. |
| Decision Trees [61] | A supervised learning algorithm for classification and regression with intuitive decision paths. | Interpretable, requires no data normalisation, and robust to missing values. | Prone to overfitting and unstable with data variation. | Healthcare, finance, and marketing. |
| Instance-based classifiers [62] | Algorithms that predict using stored training examples. | Flexibility, adaptability, and simple implementation. | Computational complexity and sensitivity to noise. | Image recognition and text classification. |
| Support Vector Machine (SVM) [63] | A supervised algorithm for classification and regression tasks. | Effective for high-dimensional data and robust to overfitting. | Computational complexity and limited interpretability. | Bioinformatics and image classification. |

| Study | Modality | Model | Dataset Size | Key Metrics | Performance |
|---|---|---|---|---|---|
| [107] | MRI | Custom DL | 400 patients | AUC | 0.89 (cancer vs. non-cancer), 0.78 (risk stratification) |
| [108] | DW-MRI | U-Net | 112 patients | ICC, DSC | ICC: 0.80–0.83, DSC: 0.68–0.72 |
| [109] | MRI + PSA | SNCSAE CAD | Not specified | Diagnostic Performance | Robust non-invasive detection |
| [110] | Histopathology | InceptionV3 | 96 biopsies | Accuracy, Sensitivity, Specificity | Acc: 92%, Sens: 90%, Spec: 93% |
| [111] | Histopathology | ResNet | 85 biopsies | Accuracy | Coarse: 91.5%, Fine: 85.4% |
| [112] | MRI | ProGNet | 905 patients | Dice Coefficient | Outperformed U-Net; 35 s segmentation time |
| [114] | MRI | 3D-Mask RCNN | Not specified | Accuracy | High accuracy |
| [115] | MRI | SEMRCNN | Not specified | Dice, Sensitivity, Specificity | Dice: 0.654, Sens: 0.695, Spec: 0.970 |
| [116] | CT | DL + Multi-atlas | 92 patients | Dice Coefficient | 86.80% |
| [117] | MRI | Transfer Learning CNN | Not specified | Comparison with ML | DL superior to SVM, decision tree, Bayes |
| [118] | MRI | U-Net | 165 patients | Dice Coefficient | 0.22 (U-Net), 0.48–0.52 (manual) |
| [119] | MRI | U-Net | 312 patients | Sensitivity, Specificity, Dice | Sens: 96%, Spec: 92%, Dice: 0.89 |
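Several rows above report the Dice coefficient (DSC), sensitivity, and specificity. As a hedged sketch of how these overlap metrics are commonly computed from a pair of binary segmentation masks, the helper below counts true and false positives and negatives per pixel. The function name `segmentation_metrics` and the toy masks are hypothetical and are not drawn from any of the cited studies.

```python
import numpy as np


def segmentation_metrics(pred, truth):
    """Compute Dice, sensitivity, and specificity for two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)

    tp = np.count_nonzero(pred & truth)    # lesion pixels correctly predicted
    fp = np.count_nonzero(pred & ~truth)   # background predicted as lesion
    fn = np.count_nonzero(~pred & truth)   # lesion pixels that were missed
    tn = np.count_nonzero(~pred & ~truth)  # background correctly predicted

    def ratio(num, den):
        # Return NaN instead of raising when a class is absent.
        return num / den if den else float("nan")

    return {
        "dice": ratio(2 * tp, 2 * tp + fp + fn),
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
    }


# Toy 2x3 masks (assumed data): 1 marks lesion pixels, 0 marks background.
truth = [[0, 1, 1],
         [0, 1, 0]]
pred = [[0, 1, 0],
        [1, 1, 0]]

metrics = segmentation_metrics(pred, truth)
```

The function returns values in the range 0 to 1; the table mixes this convention with percentages (for example, 0.89 versus 86.80%), so results should be brought to one scale before comparison.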

| Dataset | Description | Modality | Sample Size | Key Application |
|---|---|---|---|---|
| Prostate158 [71] | High-resolution MRI scans for segmentation research | MRI (T2-weighted) | 158 cases | Algorithm development for segmentation |
| Digital Pathology Dataset [116] | Whole-slide images (WSIs), H&E-stained; Tan Tock Seng Hospital | Histopathology | 99 WSIs | Gland segmentation and classification |
| PESO Dataset [120] | WSIs with annotations; Radboud University Medical Centre | Histopathology | 102 WSIs | Cancer detection and classification |
| TCGA Prostate Adenocarcinoma Dataset (TCGA-PRAD) [121] | Heterogeneous clinical data; multi-center collection | Mixed (Genomics, Imaging) | 500 cases | Multi-omics analysis of prostate cancer |
| Gleason Challenge [122] | Tissue microarrays (TMAs); Vancouver Prostate Center | Histopathology | 244 training + 87 test TMAs | Gleason grading classification |
| PROSTATE-MRI (NCI, USA) [123] | MRI-guided biopsy and radical prostatectomy | MRI | Multiple cases | MRI-guided diagnostics and interventions |
| NCIGT-PROSTATE (NIH) [124] | MRI-guided therapy and biopsy procedures | MRI | Multiple cases | Image-guided therapy and biopsy research |
| PROMISE12 Challenge [125] | Segmentation challenge with ground-truth data | MRI (T2-weighted) | 50 cases | Prostate gland segmentation benchmarking |
| QIN-PROSTATE-Repeatability [126] | Study on imaging biomarker reproducibility | MRI (T2W, DWI) | Multiple repeated scans | Biomarker validation and reproducibility |
| PANDA Challenge [127] | Largest publicly available prostate WSIs; Radboud & Karolinska | Histopathology | ~11,000 WSIs | AI development for Gleason grading |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Olawuyi, O.; Viriri, S. Deep Learning Techniques for Prostate Cancer Analysis and Detection: Survey of the State of the Art. J. Imaging 2025, 11, 254. https://doi.org/10.3390/jimaging11080254
