DFCNet: Dual-Stage Frequency-Domain Calibration Network for Low-Light Image Enhancement

Abstract

1. Introduction

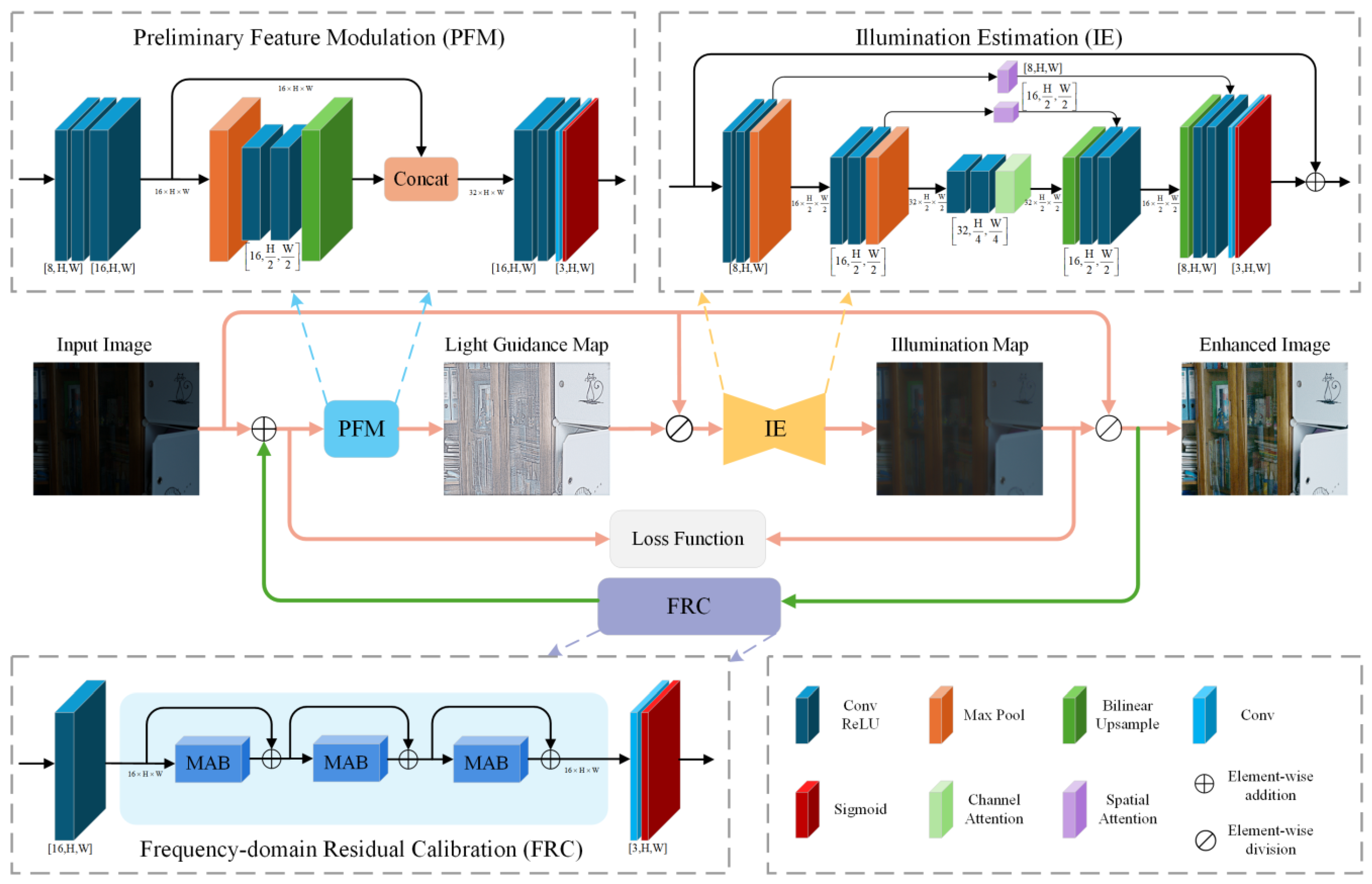

- An unsupervised dual-stage framework is proposed that removes the reliance on paired training data while balancing enhancement quality against real-time efficiency.

- A Frequency-Domain Residual Calibration (FRC) module is designed to enhance structural consistency while suppressing noise and artifacts by exploiting residual information in the frequency domain.

- A lightweight Preliminary Feature Modulation (PFM) module and an Illumination Estimation (IE) module are designed to jointly support accurate illumination estimation through dynamic feature guidance.

2. Related Work

2.1. Conventional Methods

2.2. Supervised Deep Learning-Driven Methods

2.3. Unsupervised Deep Learning-Driven Methods

3. Proposed Method

3.1. Overview of the DFCNet

3.2. Preliminary Feature Modulation

3.3. Illumination Estimation

3.4. Frequency-Domain Residual Calibration

| Algorithm 1 Frequency-Domain Residual Calibration (FRC) | |
|---|---|
| Input | Reflectance map |
| Output | Calibration term |
| 1: Spatial Encoding | |
| 2: for do | |
| 3: FFT | |
| 4: Magnitude & Phase Separation | |
| 5: Magnitude Calibration | |
| 6: Frequency Recombination | |
| 7: IFFT | |
| 8: Residual Fusion with Gating | |
| 9: Update Input | |
| 10: end for | |
| 11: Output Calibration Term | |
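The steps of Algorithm 1 can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the spatial encoder is replaced by an identity, the learned magnitude calibration by a scalar gain (`mag_gain`), and the learned gate by a constant (`gate`). The function name `frc_sketch`, the iteration count `num_iters`, and the choice to return the difference from the input as the calibration term are all hypothetical.

```python
import numpy as np


def frc_sketch(reflectance, num_iters=2, mag_gain=1.1, gate=0.5):
    """Toy frequency-domain residual calibration on a 2-D array.

    Every parameter is a hypothetical stand-in for a learned component.
    """
    x = np.asarray(reflectance, dtype=np.float64)
    # Step 1: spatial encoding (assumed identity in this sketch).
    feat = x.copy()
    for _ in range(num_iters):                      # Step 2: iterate
        spec = np.fft.fft2(feat)                    # Step 3: FFT
        mag = np.abs(spec)                          # Step 4: magnitude
        phase = np.angle(spec)                      # Step 4: phase
        mag_cal = mag * mag_gain                    # Step 5: placeholder calibration
        spec_cal = mag_cal * np.exp(1j * phase)     # Step 6: recombination
        rec = np.fft.ifft2(spec_cal).real           # Step 7: IFFT
        residual = rec - feat
        feat = feat + gate * residual               # Steps 8-9: gated fusion, update
    return feat - x                                 # Step 11: calibration term


if __name__ == "__main__":
    demo = np.arange(12, dtype=np.float64).reshape(3, 4)
    print(frc_sketch(demo).shape)
```

Only the magnitude is modified here while the phase is passed through unchanged, which is the usual reason for separating the two: phase carries most of the structural layout of an image, so leaving it intact tends to preserve edges. With `mag_gain=1.0` the loop is a no-op and the returned term is numerically zero.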

3.5. Loss Function

3.5.1. Illumination Consistency Loss

3.5.2. Structural Smoothness Loss

3.5.3. Total Variation Loss
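As a point of reference for the term named in this heading, a total variation penalty is commonly computed as the mean absolute difference between neighbouring pixels along each spatial axis. The sketch below shows that common anisotropic form only; the weighting, the map it is applied to, and any normalization used in DFCNet are not given here, and the function name `total_variation` is hypothetical.

```python
import numpy as np


def total_variation(img):
    """Anisotropic total variation of an (H, W) or (H, W, C) array.

    Assumes at least two pixels along each spatial axis.
    """
    img = np.asarray(img, dtype=np.float64)
    dh = np.abs(np.diff(img, axis=0))  # differences between vertical neighbours
    dw = np.abs(np.diff(img, axis=1))  # differences between horizontal neighbours
    return dh.mean() + dw.mean()


if __name__ == "__main__":
    ramp = np.array([[0.0, 1.0], [2.0, 3.0]])
    print(total_variation(ramp))
```

A constant image scores zero, and the value grows with local intensity changes, which is why such a term is typically used to encourage smooth maps.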

4. Experiments

4.1. Experiment Settings

4.2. Comparative Methods and Evaluation Metrics

4.3. Results

4.4. Complexity Analysis

4.5. Ablation Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Methods | Venue | LOL PSNR ↑ | LOL SSIM ↑ | LOL NIQE ↓ | LOL BRISQUE ↓ | MIT PSNR ↑ | MIT SSIM ↑ | MIT NIQE ↓ | MIT BRISQUE ↓ |
|---|---|---|---|---|---|---|---|---|---|
| RetinexNet | BMVC’18 | 16.77 ± 2.38 | 0.564 ± 0.077 | 8.39 ± 0.53 | 35.43 ± 7.69 | 14.29 ± 1.89 | 0.698 ± 0.087 | 4.58 ± 0.92 | 29.24 ± 8.59 |
| DSLR | TMM’20 | 14.95 ± 4.16 | 0.600 ± 0.131 | 8.18 ± 0.48 | 33.78 ± 8.75 | 18.17 ± 3.88 | 0.769 ± 0.047 | 3.83 ± 0.79 | 32.66 ± 10.05 |
| RRDNet | ICME’20 | 10.70 ± 3.65 | 0.436 ± 0.148 | 7.82 ± 0.91 | 29.32 ± 8.27 | 16.16 ± 3.69 | 0.664 ± 0.068 | 4.08 ± 0.88 | 30.20 ± 10.22 |
| Zero-DCE | CVPR’20 | 14.86 ± 4.27 | 0.562 ± 0.124 | 7.85 ± 1.03 | 24.98 ± 8.10 | 16.07 ± 1.95 | 0.738 ± 0.061 | 3.65 ± 0.76 | 24.31 ± 8.52 |
| EnlightenGAN | TIP’21 | 17.48 ± 3.53 | 0.651 ± 0.110 | 5.40 ± 0.69 | 23.77 ± 5.89 | 17.95 ± 2.44 | 0.791 ± 0.060 | 3.19 ± 0.70 | 28.14 ± 9.49 |
| RetinexDIP | TCSVT’21 | 11.68 ± 3.86 | 0.487 ± 0.136 | 7.57 ± 1.08 | 22.55 ± 6.89 | 18.41 ± 3.11 | 0.836 ± 0.049 | 3.23 ± 0.67 | 22.86 ± 8.83 |
| LCDPNet | ECCV’22 | 14.51 ± 4.94 | 0.575 ± 0.098 | 7.44 ± 0.79 | 22.63 ± 7.37 | 17.07 ± 2.52 | 0.770 ± 0.065 | 3.24 ± 0.74 | 25.75 ± 10.03 |
| SCI | CVPR’22 | 14.81 ± 4.08 | 0.548 ± 0.137 | 7.81 ± 1.06 | 27.32 ± 6.60 | 18.06 ± 3.10 | 0.815 ± 0.063 | 3.55 ± 0.79 | 25.55 ± 8.26 |
| CLIP-LIT | ICCV’23 | 12.39 ± 3.62 | 0.493 ± 0.121 | 7.90 ± 0.97 | 28.10 ± 8.08 | 17.19 ± 2.94 | 0.773 ± 0.066 | 3.38 ± 0.76 | 23.90 ± 9.27 |
| GDP | CVPR’23 | 15.90 ± 2.38 | 0.542 ± 0.077 | 7.95 ± 0.73 | 30.14 ± 7.69 | 18.26 ± 2.89 | 0.799 ± 0.087 | 3.22 ± 0.92 | 24.76 ± 8.59 |
| Ours | - | 17.04 ± 3.32 | 0.592 ± 0.107 | 7.12 ± 0.79 | 24.06 ± 7.68 | 18.73 ± 2.26 | 0.787 ± 0.069 | 3.05 ± 0.71 | 23.11 ± 8.48 |
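For readers unfamiliar with the PSNR columns above, the metric is conventionally defined as 10·log10(peak² / MSE) between a reference image and an enhanced result, expressed in decibels. The sketch below shows that standard definition; the peak value, colour handling, and averaging protocol used to produce the reported numbers are not stated here, so `peak=1.0` and the function name `psnr` are assumptions for illustration.

```python
import numpy as np


def psnr(reference, estimate, peak=1.0):
    """Peak signal-to-noise ratio in dB for images scaled to [0, peak]."""
    ref = np.asarray(reference, dtype=np.float64)
    est = np.asarray(estimate, dtype=np.float64)
    mse = np.mean((ref - est) ** 2)  # mean squared error over all pixels
    if mse == 0:
        return float("inf")          # identical images
    return 10.0 * np.log10(peak ** 2 / mse)


if __name__ == "__main__":
    ref = np.zeros((2, 2))
    est = np.full((2, 2), 0.1)       # uniform error of 0.1 gives MSE = 0.01
    print(psnr(ref, est))
```

Higher values indicate a smaller pixel-wise error, which matches the ↑ marker in the table header.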

| Methods | Venue | ExDark NIQE ↓ | ExDark BRISQUE ↓ | DarkFace NIQE ↓ | DarkFace BRISQUE ↓ |
|---|---|---|---|---|---|
| RetinexNet | BMVC’18 | 4.44 ± 0.68 | 31.64 ± 9.05 | 4.42 ± 0.22 | 34.67 ± 2.83 |
| DSLR | TMM’20 | 3.90 ± 0.64 | 28.65 ± 8.55 | 3.74 ± 0.38 | 34.90 ± 4.17 |
| RRDNet | ICME’20 | 3.65 ± 0.99 | 20.77 ± 7.45 | 3.97 ± 0.44 | 30.34 ± 3.59 |
| Zero-DCE | CVPR’20 | 3.03 ± 0.58 | 27.72 ± 7.04 | 3.50 ± 0.45 | 28.18 ± 5.16 |
| EnlightenGAN | TIP’21 | 3.12 ± 0.63 | 19.61 ± 6.81 | 3.26 ± 0.33 | 26.61 ± 3.22 |
| RetinexDIP | TCSVT’21 | 3.32 ± 0.63 | 25.76 ± 6.81 | 3.58 ± 0.43 | 24.50 ± 4.27 |
| LCDPNet | ECCV’22 | 3.27 ± 0.81 | 20.22 ± 7.54 | 3.23 ± 0.29 | 28.62 ± 4.92 |
| SCI | CVPR’22 | 2.97 ± 0.66 | 21.65 ± 7.15 | 3.36 ± 0.44 | 24.71 ± 3.26 |
| CLIP-LIT | ICCV’23 | 3.16 ± 0.66 | 23.65 ± 8.42 | 3.73 ± 0.48 | 32.46 ± 6.35 |
| GDP | CVPR’23 | 3.21 ± 0.68 | 25.45 ± 7.05 | 3.68 ± 0.22 | 29.16 ± 3.83 |
| Ours | - | 2.92 ± 0.57 | 20.03 ± 6.98 | 3.13 ± 0.37 | 23.29 ± 2.40 |

| Methods | Venue | Size (M) | LOL (600 × 400) FLOPs (G) | LOL (600 × 400) Time (s) | DarkFace (1080 × 720) FLOPs (G) | DarkFace (1080 × 720) Time (s) |
|---|---|---|---|---|---|---|
| RetinexNet | BMVC’18 | 0.8383 | 136.0151 | 0.1131 | 441.4513 | 0.5533 |
| DSLR | TMM’20 | 14.9313 | 43.0319 | 0.0729 | 141.0071 | 0.0451 |
| RRDNet | ICME’20 | 0.1282 | 30.6500 | 90.4702 | 99.3212 | 272.6918 |
| Zero-DCE | CVPR’20 | 0.0794 | 19.0081 | 0.0061 | 61.5859 | 0.0076 |
| EnlightenGAN | TIP’21 | 8.6370 | 61.0261 | 0.0098 | 196.5670 | 0.1028 |
| RetinexDIP | TCSVT’21 | 0.7072 | 3.4134 | 16.2311 | 11.2549 | 16.8708 |
| LCDPNet | ECCV’22 | 0.2818 | 5.0068 | 0.0382 | 15.9825 | 0.0286 |
| SCI | CVPR’22 | 0.0003 | 0.1282 | 0.0025 | 0.4152 | 0.0033 |
| CLIP-LIT | ICCV’23 | 0.2788 | 66.6701 | 0.0095 | 216.0111 | 0.0165 |
| Ours | - | 0.0428 | 4.0263 | 0.0038 | 13.0454 | 0.0051 |

| Configuration (Baseline / PFM / IE / FRC) | PSNR ↑ | SSIM ↑ | NIQE ↓ | BRISQUE ↓ |
|---|---|---|---|---|
| Baseline1 | 10.049 | 0.381 | 8.556 | 31.650 |
| Baseline2 | 12.414 | 0.460 | 7.374 | 26.561 |
| √ | 12.348 | 0.456 | 8.139 | 28.632 |
| √ | 13.496 | 0.467 | 7.935 | 28.247 |
| √ | 12.919 | 0.475 | 7.442 | 26.354 |
| √ √ | 15.985 | 0.574 | 7.644 | 27.468 |
| √ √ | 15.455 | 0.569 | 7.309 | 25.919 |
| √ √ √ | 17.039 | 0.592 | 7.115 | 24.060 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhou, H.; Li, J.; Mao, Y.; Liu, L.; Lu, Y. DFCNet: Dual-Stage Frequency-Domain Calibration Network for Low-Light Image Enhancement. J. Imaging 2025, 11, 253. https://doi.org/10.3390/jimaging11080253
