1. Introduction

In the textile production process, accurate color matching between incoming samples and final products critically depends on the precision of yarn color measurement. Human color perception of yarn is influenced by several factors. Individual physiological differences mean color perception varies due to cone cell sensitivity [1]. Also, environmental factors like lighting and viewing angles alter how yarn colors appear [2]. Moreover, yarn’s physical properties, such as uneven dyeing and fiber blends, add to the complexity of color differentiation. Thus, human visual perception of yarn colors is inconsistent and limited. Currently, the industry-standard method primarily relies on spectrophotometers [3], which compute chromaticity values by measuring spectral reflectance within a defined aperture. However, individual yarns are too small to fully cover the spectrophotometer’s aperture. Consequently, manual yarn winding techniques are commonly employed to expand the measurement area [4,5,6]. This approach, however, presents three technical limitations. First, the measurement results are highly sensitive to process parameters such as the number of winding layers and density: excessive layering tends to overestimate brightness and saturation, while insufficient layering can lead to background interference and reduced accuracy. Second, the directional nature of fiber arrangement and surface texture on yarns results in anisotropic reflection and scattering behaviors, which cannot be effectively captured by the optical system of spectrophotometers, as it is designed based on isotropic assumptions. Third, human visual perception of brightness and color is inherently nonlinear, whereas instrument-based measurements rely on linear models. This fundamental discrepancy hinders accurate representation of human visual experiences.

Vision-consistent imaging-based methods offer a potential solution to the aforementioned limitations. These methods mainly include photographic colorimetry and multispectral imaging. Photographic colorimetry achieves micron-scale chromaticity measurement through high-resolution image acquisition combined with color space transformation algorithms, such as the TDColor system [7], region extraction methods, normal distribution approaches, and kernel density estimation [8], with a reported standard deviation of 1.88 in CIEDE2000 color difference. Multispectral imaging breaks through the RGB channel limitations using spectral decoupling techniques, including Tang’s color matching approach [9], Luo’s average pixel method [10], and Wang’s polynomial model [11]. Notably, Zhang et al. [12] developed the R-Model calibration system using Fréchet distance spectral matching, achieving an average improvement of 54.99% in CIEDE2000 color difference. Kong et al. [13] introduced a feedback-based nonlinear training sample selection strategy, resulting in reconstructed spectral reflectance more closely aligned with actual measurements. Luo et al. [14] proposed four color measurement methods using an MSI system, with the central region averaging method exhibiting the highest brightness stability.

Li et al. [15] advanced beyond traditional unidimensional chromatic analysis by constructing a Spectral Pan-Similarity Metric (SPSM) model to map color features across the full process from dyed polyester fiber to yarn and woven fabric. This model innovatively introduced a chromaticity index weighting algorithm to analyze spectral difference mechanisms between melange yarns and satin fabrics, revealing nonlinear correlations between textile structural parameters and optical responses. Lu et al. [16] developed a bivariate coupling model of pigment mass fraction and fiber linear density to elucidate chromatic evolution from solution-dyed polyester fibers to yarn, providing quantitative benchmarks for process optimization.

Despite improvements in measurement accuracy and stability, two major issues remain. First, the combined effects of multiple light scattering from microstructures on yarn surfaces, complex texture backgrounds [17], and yarn edge effects result in significant brightness deviations, with no effective compensation model currently available. Second, existing chromaticity measurement methods lack a precise mapping to human visual perception. The Weber–Fechner Law [18] and Stevens’ Law [19] collectively demonstrate that human brightness perception follows a piecewise nonlinear pattern: it complies with the De Vries–Rose Law in low-brightness regions, a logarithmic relationship in medium-brightness regions, and a power function saturation in high-brightness regions. Because of this nonlinearity, existing methods struggle to capture both the heightened human sensitivity at low brightness and the perceptual saturation at high brightness [20], resulting in considerable discrepancies between measurement results and actual visual perception.
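The three regimes named above can be illustrated with a minimal Python sketch. The functional forms are the textbook statements of each law; the constants `k` and `i0`, the exponent of 1/3, and the function names are illustrative assumptions and are not taken from this study.

```python
import math


def de_vries_rose_threshold(intensity, k=1.0):
    """Low-brightness regime: the just-detectable increment grows with sqrt(I)."""
    return k * math.sqrt(intensity)


def weber_fechner_sensation(intensity, k=1.0, i0=1.0):
    """Medium-brightness regime: sensation grows with log(I / I0)."""
    return k * math.log(intensity / i0)


def stevens_sensation(intensity, k=1.0, exponent=1.0 / 3.0):
    """High-brightness regime: compressive power law (exponent < 1, assumed 1/3)."""
    return k * intensity ** exponent


if __name__ == "__main__":
    # Equal intensity ratios give equal sensation steps under Weber-Fechner.
    step_low = weber_fechner_sensation(10.0) - weber_fechner_sensation(1.0)
    step_high = weber_fechner_sensation(100.0) - weber_fechner_sensation(10.0)
    print(step_low, step_high)
    # An eightfold intensity increase only doubles the Stevens response.
    print(stevens_sensation(1.0), stevens_sensation(8.0))
```

The sketch keeps the three laws as separate functions because the brightness levels at which one regime hands over to the next are not specified in this section.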

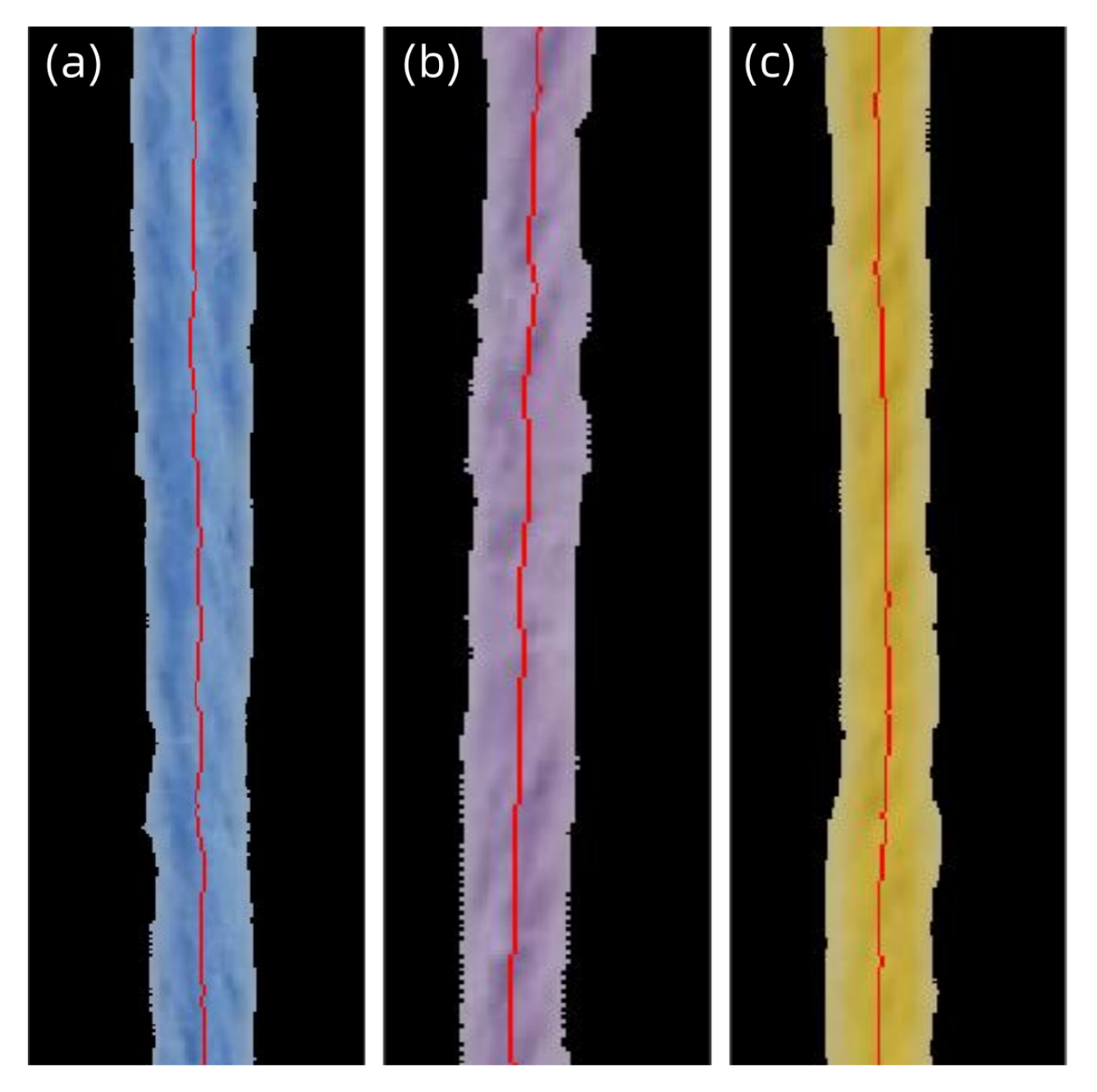

To address these technical bottlenecks in yarn color measurement, this study proposes a centerline-based nonlinear texture-weighted color measurement method, implemented using a self-developed photographic colorimetry system. The method aims to construct a measurement model that aligns with human visual perception. It employs K-means clustering to segment yarn regions and a skeletonization algorithm to extract the yarn centerline, thereby reducing interference from edge texture variations. Spectral reconstruction is then performed along the centerline, and standard chromaticity values are established based on colorimetric theory. Finally, a nonlinear texture-weighted correction based on brightness features is introduced, enhancing the alignment of measurement outcomes with actual visual appearances of yarns. Psychophysical experiments confirm that this method significantly reduces the discrepancy between subjective color perception and objective measurement, greatly improving measurement accuracy and offering a novel approach and technical foundation for the advancement of yarn color measurement technologies.
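The segmentation and centerline steps can be sketched as follows. This is a simplified illustration under stated assumptions, not the implementation used in this study: it clusters grey levels rather than full color vectors, it assumes the yarn is brighter than the background and runs roughly horizontally, and it replaces a true skeletonization algorithm with the middle foreground row of each image column. All function names are hypothetical.

```python
def kmeans_1d(values, k=2, iterations=20):
    """Plain k-means on scalar values, with centers initialised across the range."""
    lo, hi = min(values), max(values)
    centers = [lo + (hi - lo) * (i + 0.5) / k for i in range(k)]
    for _ in range(iterations):
        groups = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda j: abs(v - centers[j]))
            groups[nearest].append(v)
        # Keep the old center if a cluster ends up empty.
        centers = [sum(g) / len(g) if g else c for g, c in zip(groups, centers)]
    return centers


def segment_yarn(image):
    """Return a boolean mask; True marks pixels closer to the brighter cluster."""
    flat = [v for row in image for v in row]
    background, yarn = sorted(kmeans_1d(flat, k=2))  # assumption: yarn is brighter
    return [[abs(v - yarn) < abs(v - background) for v in row] for row in image]


def centerline(mask):
    """Crude stand-in for skeletonization: middle foreground row per column."""
    points = []
    for x in range(len(mask[0])):
        rows = [y for y in range(len(mask)) if mask[y][x]]
        if rows:
            points.append((rows[len(rows) // 2], x))
    return points


# Hypothetical 7 x 5 grey-level image: a bright horizontal yarn on a dark background.
image = ([[10] * 5 for _ in range(2)]
         + [[200] * 5 for _ in range(3)]
         + [[10] * 5 for _ in range(2)])

if __name__ == "__main__":
    print(centerline(segment_yarn(image)))
```

Sampling color only along the returned centerline keeps edge pixels, where shadows and scattering are strongest, out of the measurement.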

3. Experimental Results and Analysis

3.1. Spectrophotometry Results

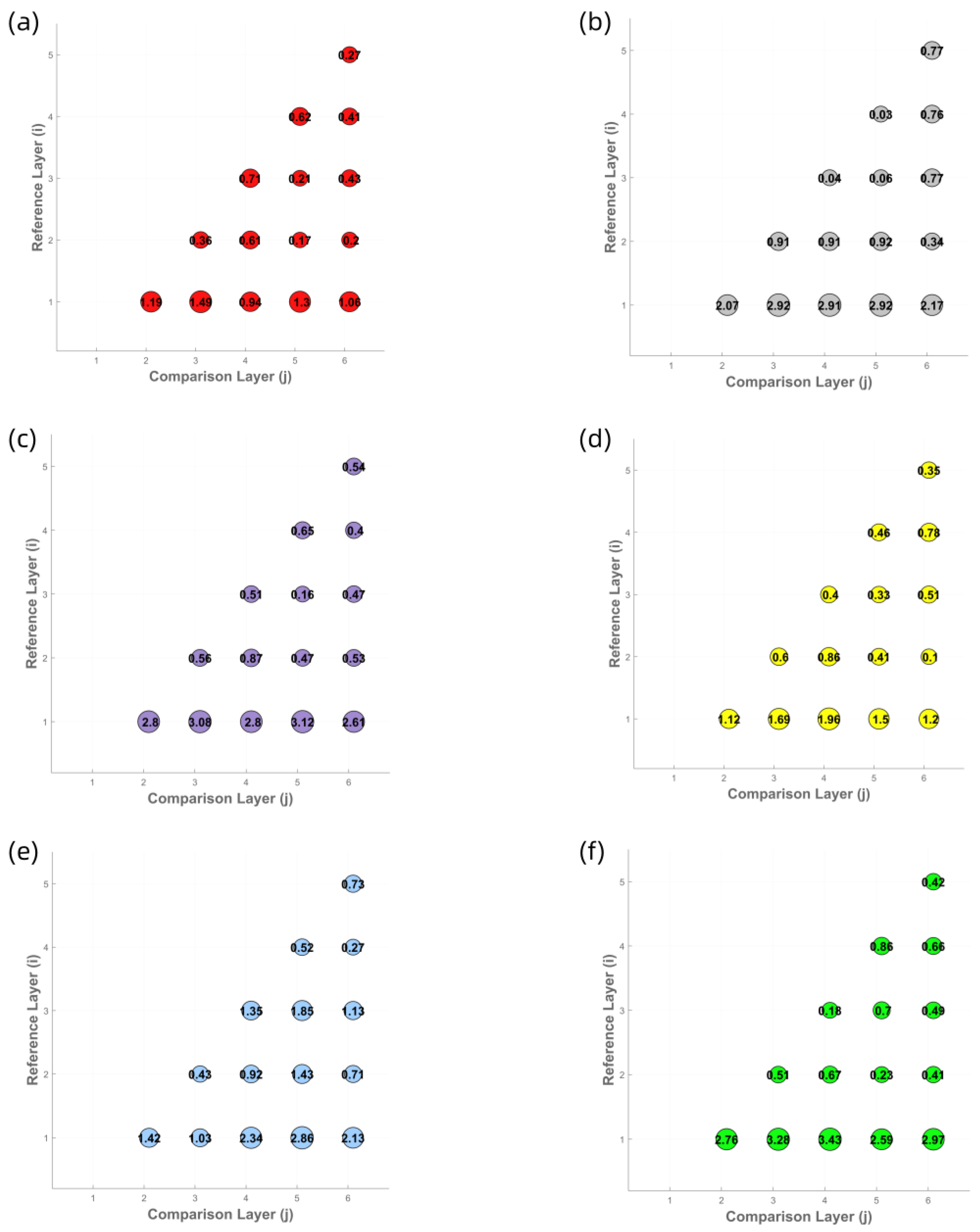

To determine the optimal number of yarn winding layers for the M0 method, six yarns of different colors (all cotton, with a count of 32) were used to measure the spectral reflectance and CIELab chromaticity values of samples with one to six layers. Corresponding spectral reflectance curves (Figure 4), CIELab chromaticity distribution maps (Figure 5), and color difference comparison charts (Figure 6) were generated to evaluate the influence of winding layer count on the stability of color measurements.
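For readers unfamiliar with how CIELab values are derived, the standard conversion from CIE XYZ tristimulus values to CIELab is sketched below. The D65 reference white used as the default is an assumption; the illuminant and observer used in this study are not stated in this section.

```python
def xyz_to_lab(x, y, z, white=(95.047, 100.0, 108.883)):
    """Convert CIE XYZ to CIELab; `white` defaults to D65 (assumed here)."""
    delta = 6.0 / 29.0

    def f(t):
        # Cube root above the threshold, linear segment below it.
        if t > delta ** 3:
            return t ** (1.0 / 3.0)
        return t / (3.0 * delta ** 2) + 4.0 / 29.0

    fx, fy, fz = (f(v / w) for v, w in zip((x, y, z), white))
    lightness = 116.0 * fy - 16.0
    a = 500.0 * (fx - fy)
    b = 200.0 * (fy - fz)
    return lightness, a, b


if __name__ == "__main__":
    # The reference white itself maps to L* = 100 with zero chroma.
    print(xyz_to_lab(95.047, 100.0, 108.883))
```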

The experimental results indicate that the spectral reflectance curves become progressively more consistent as the number of winding layers increases. For samples with four or more layers, the spectral reflectance curves across all colors converge to a stable state, suggesting that the spectral characteristics of the yarn colors have stabilized. This trend is further confirmed by the CIE chromaticity distribution maps, where the chromaticity coordinate points of samples with four or more layers are closely clustered. Moreover, the color difference values between the four-, five-, and six-layer samples for all six yarn colors are below 1.0, which complies with the commercial-grade acceptability threshold in the textile industry. In contrast, samples with fewer than four layers exhibit significantly higher variation, indicating that their color measurements are still influenced by surface texture and background interference. Based on the analysis of the spectral reflectance curves and chromaticity distributions, it is concluded that the four-layer winding samples can accurately reflect both the spectral and visual chromatic characteristics of the yarns. Therefore, four-layer winding samples are selected as the benchmark data for the M0 method in subsequent experiments.
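The selection rule described above (take the smallest layer count from which all pairwise color differences stay below 1.0) can be written as a short check. The sketch below is illustrative only: the CIELab values are hypothetical, the function names are assumptions, and the Euclidean CIE76 difference is used as a simple stand-in for the color difference formula applied in the study.

```python
import math
from itertools import combinations


def delta_e_76(lab1, lab2):
    """Euclidean distance in CIELab (CIE76), used here as a simple stand-in."""
    return math.dist(lab1, lab2)


def first_stable_layer(lab_by_layer, threshold=1.0, diff=delta_e_76):
    """Smallest layer count n such that all samples with >= n layers agree.

    Agreement means every pairwise difference is below `threshold`.
    Returns None if no such layer count exists.
    """
    layers = sorted(lab_by_layer)
    for start in layers[:-1]:
        tail = [lab_by_layer[n] for n in layers if n >= start]
        if all(diff(p, q) < threshold for p, q in combinations(tail, 2)):
            return start
    return None


# Hypothetical CIELab values for one yarn color wound in 1 to 6 layers.
example = {
    1: (40.0, 20.0, 10.0),
    2: (45.0, 20.0, 10.0),
    3: (48.0, 20.0, 10.0),
    4: (50.0, 20.0, 10.0),
    5: (50.3, 20.0, 10.0),
    6: (50.5, 20.0, 10.0),
}

if __name__ == "__main__":
    print(first_stable_layer(example))
```

Passing a different `diff` function lets the same check run with another color difference formula.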

3.2. Photographic Colorimetry Results

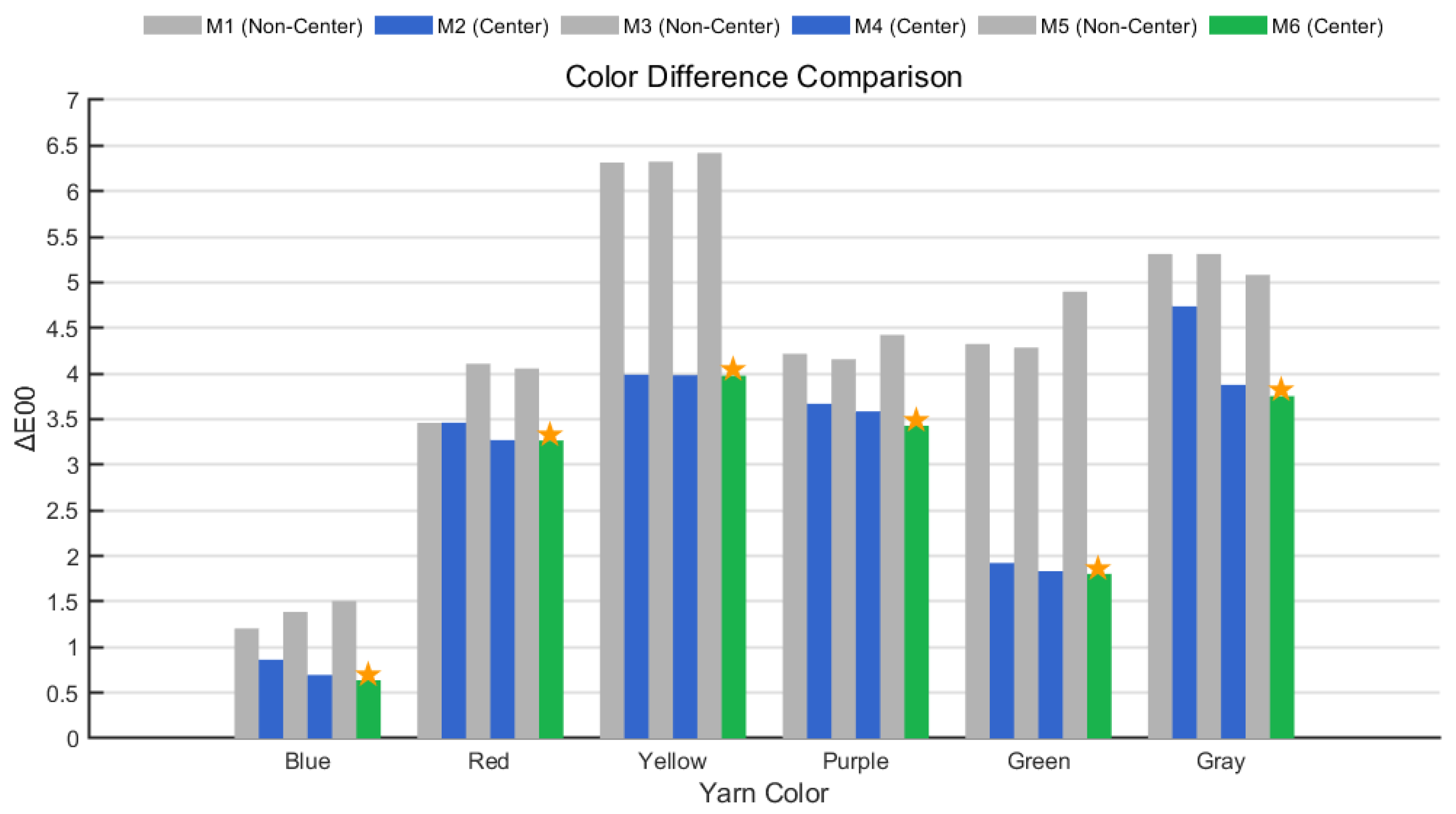

In this experiment, six yarn samples with different colors (the same samples used in the M0 method) were evaluated using six photographic colorimetry methods (M1 through M6). The CIELab values obtained from each method were compared with the corresponding M0 results to calculate the CIEDE2000 color differences (ΔE00), thereby providing a preliminary assessment of the deviation between photographic and spectrophotometric color measurement methods. The specific results are presented in Table 2, Table 3, and Table 4 and in Figure 7.
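For reference, the CIEDE2000 color difference between two CIELab triplets can be computed as sketched below. The code follows the published CIEDE2000 formula with unit parametric factors (`k_l = k_c = k_h = 1`, an assumption about the settings used here); the function name is hypothetical.

```python
import math


def ciede2000(lab1, lab2, k_l=1.0, k_c=1.0, k_h=1.0):
    """CIEDE2000 color difference between two CIELab triplets."""
    l1, a1, b1 = lab1
    l2, a2, b2 = lab2
    # Step 1: chroma-dependent rescaling of a*, then modified chroma and hue.
    c_bar = (math.hypot(a1, b1) + math.hypot(a2, b2)) / 2.0
    g = 0.5 * (1.0 - math.sqrt(c_bar ** 7 / (c_bar ** 7 + 25.0 ** 7)))
    a1p, a2p = (1.0 + g) * a1, (1.0 + g) * a2
    c1p, c2p = math.hypot(a1p, b1), math.hypot(a2p, b2)
    h1p = math.degrees(math.atan2(b1, a1p)) % 360.0
    h2p = math.degrees(math.atan2(b2, a2p)) % 360.0
    # Step 2: differences in lightness, chroma, and hue.
    d_l, d_c = l2 - l1, c2p - c1p
    if c1p * c2p == 0.0:
        d_h_angle = 0.0
    else:
        d_h_angle = h2p - h1p
        if d_h_angle > 180.0:
            d_h_angle -= 360.0
        elif d_h_angle < -180.0:
            d_h_angle += 360.0
    d_h = 2.0 * math.sqrt(c1p * c2p) * math.sin(math.radians(d_h_angle / 2.0))
    # Step 3: means, weighting functions, and the rotation term.
    l_bar, c_bar_p = (l1 + l2) / 2.0, (c1p + c2p) / 2.0
    if c1p * c2p == 0.0:
        h_bar = h1p + h2p
    elif abs(h1p - h2p) <= 180.0:
        h_bar = (h1p + h2p) / 2.0
    elif h1p + h2p < 360.0:
        h_bar = (h1p + h2p + 360.0) / 2.0
    else:
        h_bar = (h1p + h2p - 360.0) / 2.0
    t = (1.0 - 0.17 * math.cos(math.radians(h_bar - 30.0))
         + 0.24 * math.cos(math.radians(2.0 * h_bar))
         + 0.32 * math.cos(math.radians(3.0 * h_bar + 6.0))
         - 0.20 * math.cos(math.radians(4.0 * h_bar - 63.0)))
    s_l = 1.0 + 0.015 * (l_bar - 50.0) ** 2 / math.sqrt(20.0 + (l_bar - 50.0) ** 2)
    s_c = 1.0 + 0.045 * c_bar_p
    s_h = 1.0 + 0.015 * c_bar_p * t
    d_theta = 30.0 * math.exp(-(((h_bar - 275.0) / 25.0) ** 2))
    r_c = 2.0 * math.sqrt(c_bar_p ** 7 / (c_bar_p ** 7 + 25.0 ** 7))
    r_t = -math.sin(math.radians(2.0 * d_theta)) * r_c
    term_l = d_l / (k_l * s_l)
    term_c = d_c / (k_c * s_c)
    term_h = d_h / (k_h * s_h)
    return math.sqrt(term_l ** 2 + term_c ** 2 + term_h ** 2 + r_t * term_c * term_h)


if __name__ == "__main__":
    print(ciede2000((50.0, 2.6772, -79.7751), (50.0, 0.0, -82.7485)))
```

Unlike the Euclidean CIE76 difference, this formula weights lightness, chroma, and hue differences separately, which is why it is preferred for comparisons against perceptual tolerances.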

Systematic analysis of the experimental data reveals that the skeletonization algorithm (centerline region) effectively suppresses surface texture interference and edge light scattering. Compared with global regions, it significantly reduces the average color differences between the photographic methods and the spectrophotometric reference across all six yarn colors. Under the average pixel model, transitioning from M1 to M2 reduces the overall ΔE00 from 4.1354 to 3.0864. Notably, the ΔE00 for the green yarn decreases from 4.3249 to 1.9239.

In the luminance-weighted model, moving from M3 to M4 lowers the average color difference from 4.26 to 2.87. Specifically, the ΔE00 for the red yarn drops from 4.1082 to 3.271, while the blue yarn, representing a cool tone, shows a decline from 1.3878 to 0.6962, closely approaching the M0 results. The gray yarn also benefits from the centerline algorithm, which eliminates edge shadow interference and enhances luminance values, thus demonstrating the model’s effectiveness in improving chromaticity accuracy for neutral tones.

In the texture-weighted model, a comparison between M5 and M6 indicates that M6 further suppresses overexposure in high-luminance areas via an inverse-proportional weighting function. This adjustment reduces the average color difference across all six yarn colors from 4.3989 (M5) to 2.8104 (M6). For the blue yarn, the ΔE00 drops from 1.5077 (M5) to 0.6373 (M6), nearly matching the spectrophotometric result.
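The relative reductions implied by the color difference values quoted above can be recomputed directly from the before and after figures. The short sketch below does only that arithmetic; the dictionary labels and the function name are chosen here for illustration.

```python
def relative_reduction(before, after):
    """Percentage decrease from `before` to `after`."""
    return (before - after) / before * 100.0


# (before, after) color differences as quoted in the text.
reported = {
    "M1 -> M2, average": (4.1354, 3.0864),
    "M1 -> M2, green": (4.3249, 1.9239),
    "M3 -> M4, average": (4.26, 2.87),
    "M3 -> M4, red": (4.1082, 3.271),
    "M3 -> M4, blue": (1.3878, 0.6962),
    "M5 -> M6, average": (4.3989, 2.8104),
    "M5 -> M6, blue": (1.5077, 0.6373),
}

if __name__ == "__main__":
    for label, (before, after) in reported.items():
        print(f"{label}: {relative_reduction(before, after):.1f}% lower")
```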

From the perspective of model progression, the ΔE00 values demonstrate a stepwise reduction across the M2 → M4 → M6 sequence (with global means decreasing from 2.31 to 2.13), confirming the effectiveness of the nonlinear texture-weighted correction method for addressing the nonlinear response characteristics of yarn surface texture. The ΔE00 for the blue yarn (0.6373) indicates a result almost identical to M0, while the green yarn’s ΔE00 of 1.803 approximates the reference. Although the ΔE00 for warm-toned yarns remains slightly higher, the M6 method still produces results closer to the spectrophotometric measurements than the other five methods. This demonstrates that the proposed approach can consistently obtain chromaticity data comparable to spectrophotometric results without requiring the yarn winding process, thereby offering objective support for developing color measurement techniques aligned with human visual perception.
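To make the idea of inverse-proportional weighting concrete, the sketch below averages CIELab pixels with weights proportional to 1 / L*, so that bright, possibly overexposed pixels contribute less. This is a minimal illustration under assumptions: the exact weighting function used by M6 is not given in this section, and the function name, the `eps` guard, and the pixel values are hypothetical.

```python
def inverse_luminance_weighted_mean(pixels, eps=1e-6):
    """Average (L, a, b) pixels with weights 1 / (L + eps).

    `eps` only guards against division by zero for black pixels.
    """
    weights = [1.0 / (lightness + eps) for lightness, _, _ in pixels]
    total = sum(weights)
    return tuple(
        sum(w * p[channel] for w, p in zip(weights, pixels)) / total
        for channel in range(3)
    )


# Hypothetical centerline pixels: one dark and one bright sample of the same hue.
pixels = [(20.0, 10.0, 5.0), (80.0, 10.0, 5.0)]

if __name__ == "__main__":
    plain_l = sum(p[0] for p in pixels) / len(pixels)
    weighted_l = inverse_luminance_weighted_mean(pixels)[0]
    # The weighted lightness is pulled toward the darker pixel.
    print(plain_l, weighted_l)
```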

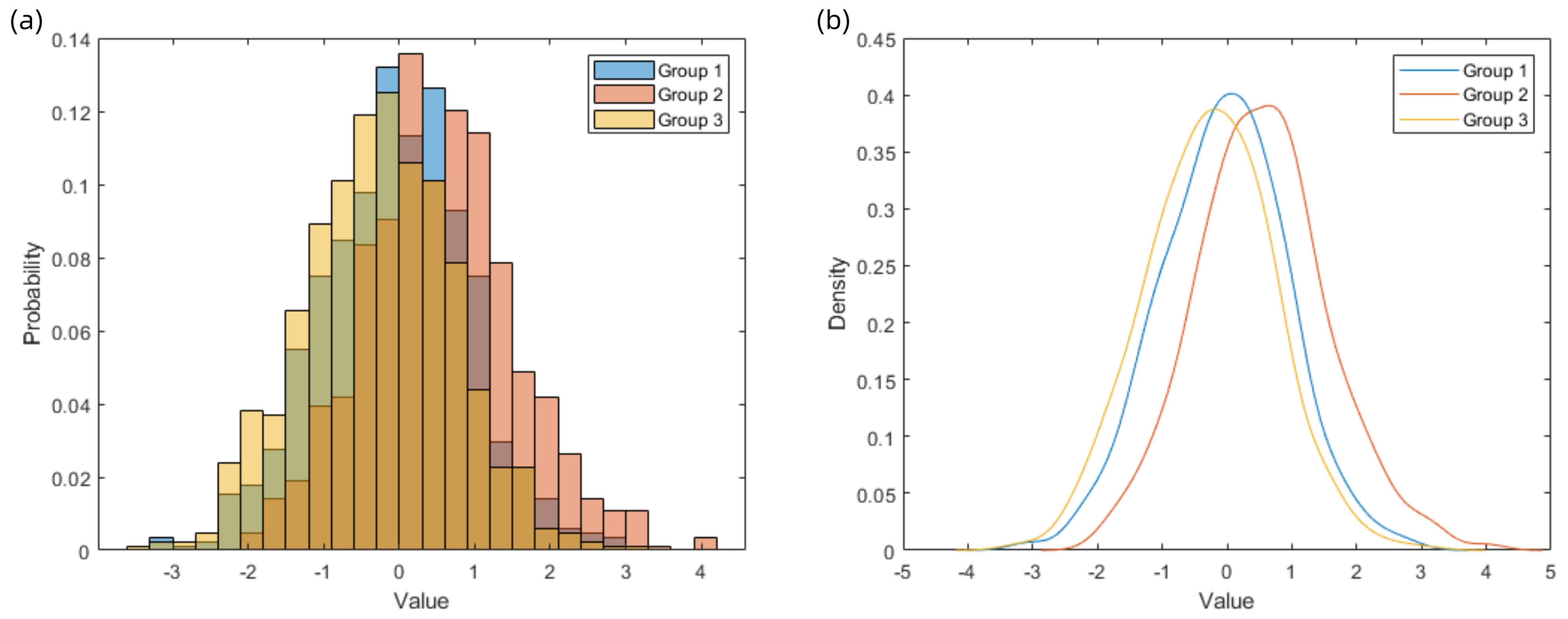

3.3. Subjective Evaluation of Measurement Results

To validate the visual perception consistency of the yarn color measurement methods, a psychophysical experiment was designed and conducted under strictly controlled environmental conditions using standardized display equipment and a viewing booth. The display presented the yarn colors as measured by different methods, while the viewing booth contained the six yarn samples and their corresponding four-layer winding samples, as illustrated in Figure 8. After calibration, the display device achieved an average CIEDE2000 color difference of 1.0 for 24 standard color patches, ensuring color accuracy during the experiment.

A total of 20 observers, all having passed a color vision screening, participated in the evaluation to ensure normal color discrimination capabilities. Prior to the formal test, each observer underwent an adaptation phase in a darkroom environment to stabilize their visual perception. During the evaluation, observers compared the yarn color displayed on the screen with the actual samples placed in the booth and rated the perceived similarity using a 7-point Likert scale, where 1 indicated the least similarity and 7 indicated the highest. Each observer completed three rounds of scoring to ensure the reliability and consistency of the results.

Upon completion of the experiment, the data from all three rounds were collected and subjected to analysis of variance (ANOVA). The results revealed a statistically significant difference among the three rounds (p = 0.0014), with the third round yielding significantly higher mean scores than the first two. However, no significant variation was found within each round across different observers. After standardization, the analysis of variance no longer indicated significant differences between groups, and subsequent evaluations focused on the first two rounds to better reflect the participants’ scoring trends. The average scores of the 20 participants are shown in Table 5, Figure 9, and Figure 10.
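The effect of standardization on the between-round comparison can be illustrated with a small one-way ANOVA. The sketch below rests on several assumptions: it treats the rounds as independent groups (a simplification of the repeated-measures design), it interprets standardization as z-scoring within each round, and the scores are hypothetical rather than the study's data. It returns only the F statistic, since the standard library has no F distribution for a p-value.

```python
from statistics import mean, pstdev


def one_way_anova_f(groups):
    """F statistic of a one-way ANOVA over a list of score lists."""
    all_values = [v for g in groups for v in g]
    grand_mean = mean(all_values)
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)


def standardize(scores):
    """Z-score a list of scores using its own mean and standard deviation."""
    m, s = mean(scores), pstdev(scores)
    return [(v - m) / s for v in scores]


# Hypothetical Likert scores for three rounds; the third round is scored higher.
rounds = [[4, 5, 5, 6], [4, 5, 6, 5], [6, 6, 7, 7]]

if __name__ == "__main__":
    print(one_way_anova_f(rounds))
    print(one_way_anova_f([standardize(r) for r in rounds]))
```

Because z-scoring gives every round a mean of zero, the between-round sum of squares vanishes, which matches the report that group differences are no longer significant after standardization.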

Visual perception assessments based on the psychophysical data indicate that for the evaluation of single yarn samples, the M0 method received an average score of 4.667. Although it slightly outperformed the traditional global region-based methods for red (5.2) and gray (4.7) yarns (compared to M1 = 4.754, M3 = 4.738, M5 = 4.775), it was significantly outscored by all centerline-based methods (M2 = 5.442, M4 = 5.542, M6 = 5.704). The M6 method achieved the highest overall average score of 5.704, strongly validating the close alignment between the centerline texture-weighted measurement method and human visual perception.

For the evaluation of winding samples, M0 showed slight advantages in red (5.375) and green (5.175) samples, but its performance was comparable to the traditional global methods (M1 = 4.938, M3 = 4.988, M5 = 5.054). In contrast, all centerline-based methods (M2 = 5.458, M4 = 5.588, M6 = 5.788) exhibited superior perceptual consistency. Notably, M6 again achieved the highest score, and its score for the winding samples even surpassed that for single yarn samples, indicating the robustness of this method in handling complex textile structures.

Overall, the subjective evaluation data demonstrate that the linear measurement model of spectrophotometry cannot fully reflect human visual perception. In contrast, photographic colorimetry aligns more closely with the nonlinear characteristics of human color and luminance perception. Specifically, the use of centerline regions effectively reduces surface texture and edge scattering interference, resulting in more concentrated and stable measurement areas. Furthermore, the texture-weighted strategy, by simulating the S-shaped response curve of the human visual system—sensitive to low luminance and saturated in high luminance—achieves a more accurate perceptual correction.