IEWNet: Multi-Scale Robust Watermarking Network Against Infrared Image Enhancement Attacks

Abstract

1. Introduction

- We employ a multi-scale neural network structure that embeds image watermarks of different scales into the original IR image at the corresponding scale. The proposed model can select appropriate watermark embedding locations among features at different scales.

- We add a noise layer between the encoder and decoder to cope with traditional IRE attacks. The noise layer simulates the principles of eight traditional IRE attacks and stands in for them in the flow of the algorithm, which makes the proposed algorithm more robust against such attacks.

- For machine learning-based IRE attacks whose specific structure is known, we train an enhancement sub-network to improve robustness. The enhancement sub-network takes the place of four machine learning-based IRE algorithms in IEWNet, and its structure is modeled on four classical IRE neural networks.

2. Background

3. Related Work

3.1. Multi-Scale-Based Methods

3.2. Infrared Enhancement-Based Networks

4. Materials and Methods

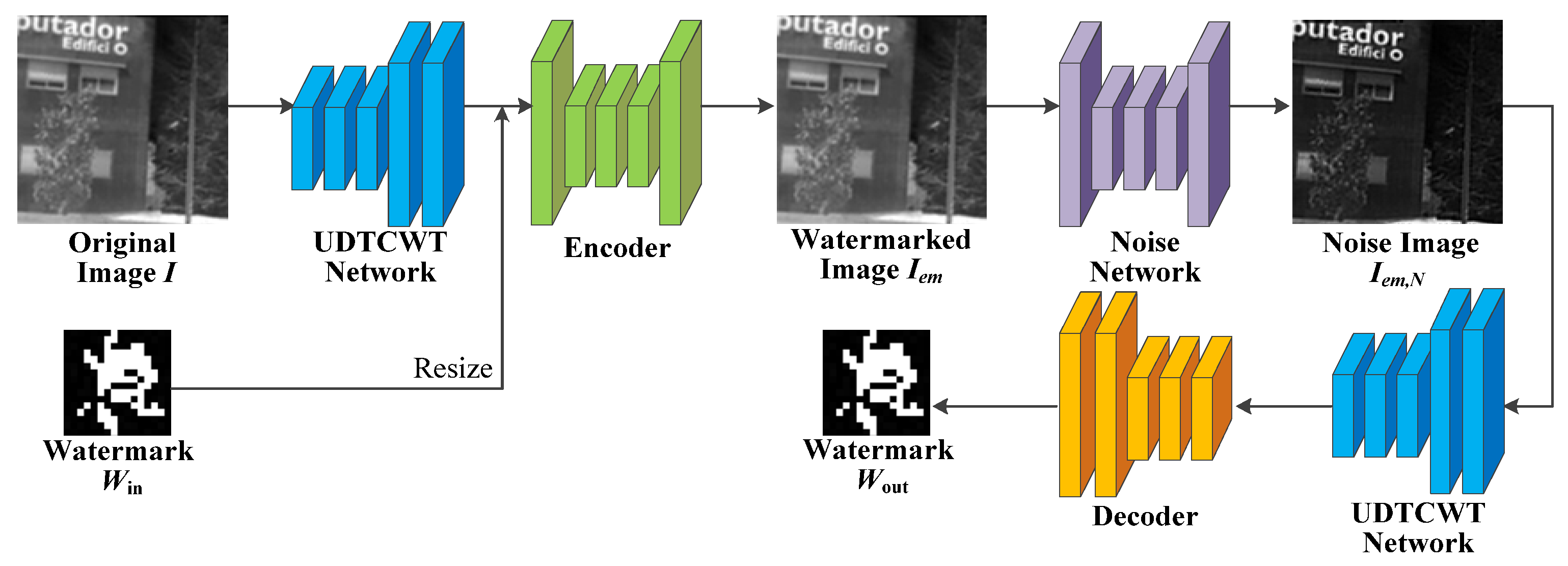

4.1. Network Architecture


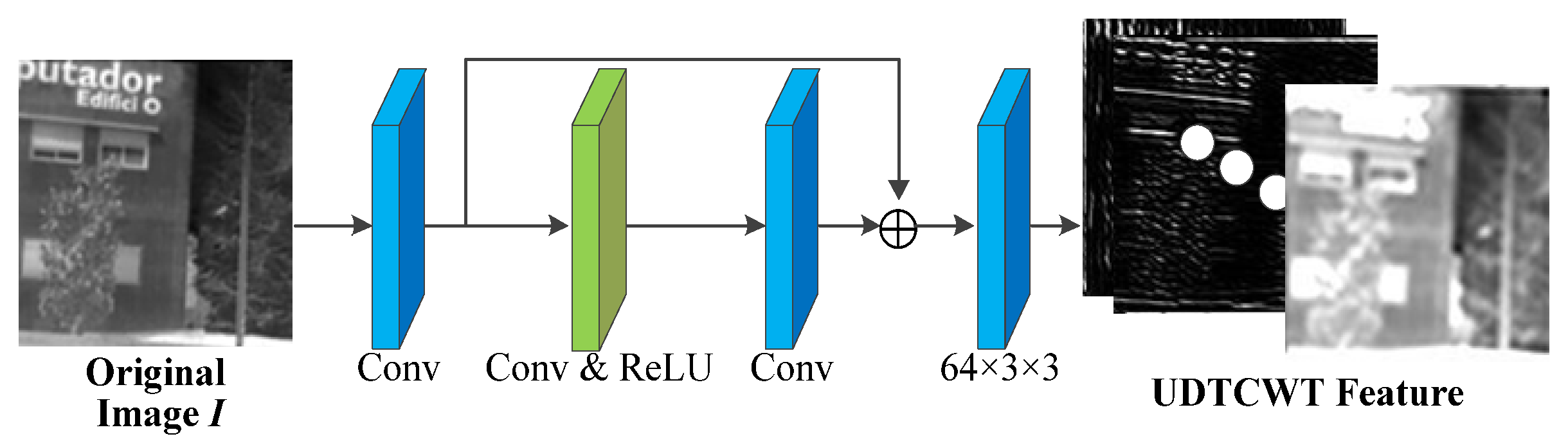

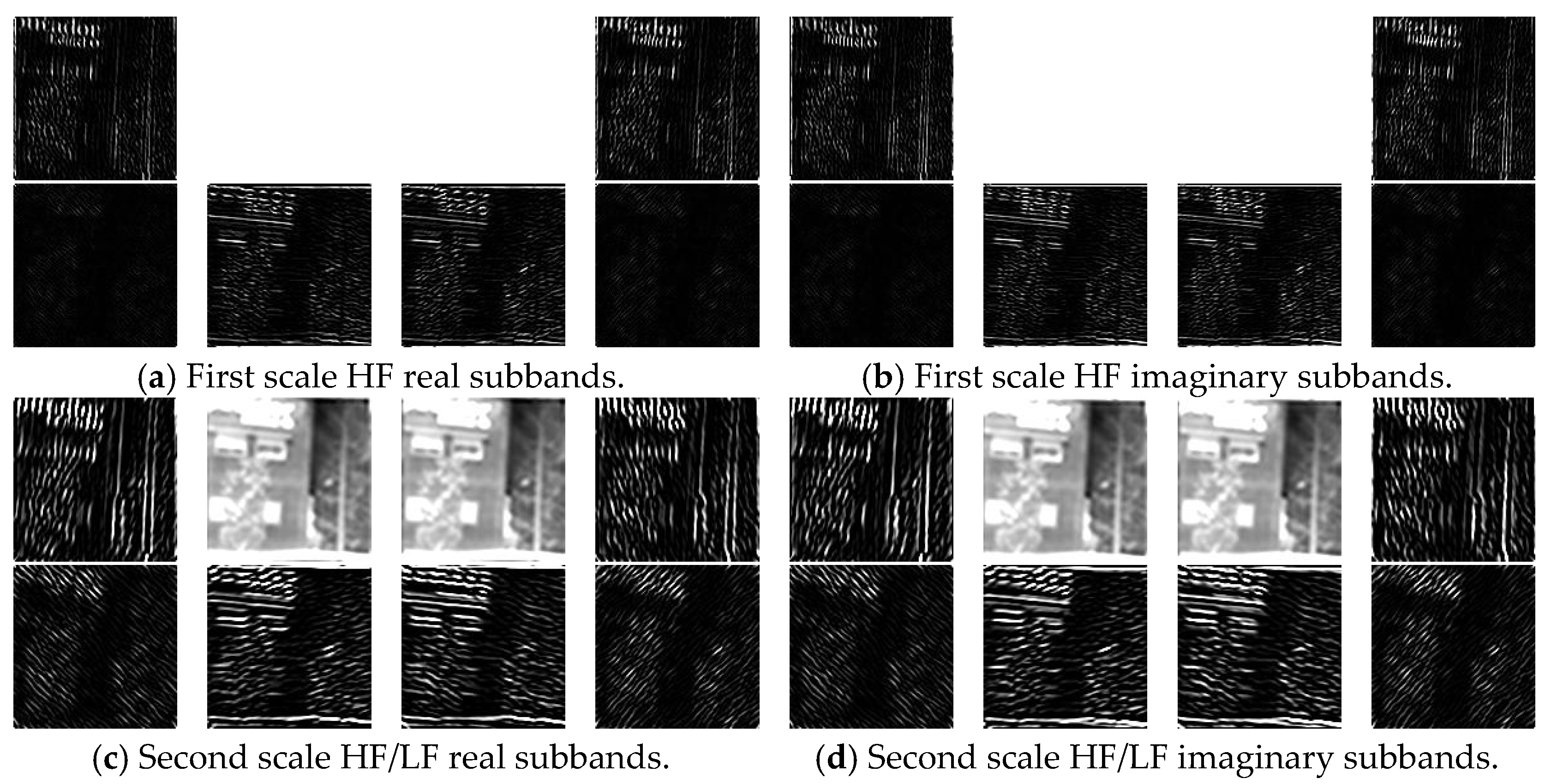

- UDTCWT [16] Network: To make the frequency-domain transform easier to incorporate into the proposed IEWNet, we train a small CNN with the subbands of the UDTCWT [16] decomposition as targets. The main structure of the UDTCWT [16] network is shown in Figure 2. The network starts with a convolutional layer, followed by a residual structure consisting of a convolutional layer and a ReLU activation function. A final convolutional layer outputs a 28-channel tensor. The convolutional kernels are 3 × 3 with 64 channels.
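The layer sequence described above can be sketched in NumPy as a shape-level illustration. This is a minimal sketch, not the authors' implementation: the single residual block, zero padding, random weights, single-channel input, and the names `conv3x3`, `init_conv`, and `udtcwt_net` are all assumptions made for the example.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def conv3x3(x, weight, bias):
    """Zero-padded 3x3 convolution. x: (C_in, H, W); weight: (C_out, C_in, 3, 3)."""
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)), mode="constant")
    # windows: (C_in, H, W, 3, 3), one 3x3 patch per channel and pixel.
    windows = sliding_window_view(padded, (3, 3), axis=(1, 2))
    return np.einsum("chwij,ocij->ohw", windows, weight) + bias[:, None, None]


def init_conv(rng, c_out, c_in):
    """Random weights for illustration only (hypothetical initialization)."""
    scale = np.sqrt(2.0 / (c_in * 9))
    return rng.normal(0.0, scale, (c_out, c_in, 3, 3)), np.zeros(c_out)


def udtcwt_net(ir_image, params):
    """Conv -> residual (conv + ReLU) -> conv with 28 output channels."""
    x = conv3x3(ir_image[None], *params["head"])             # 1 -> 64 channels
    x = x + np.maximum(conv3x3(x, *params["res"]), 0.0)      # residual conv + ReLU
    return conv3x3(x, *params["tail"])                       # 64 -> 28 channels


rng = np.random.default_rng(0)
params = {
    "head": init_conv(rng, 64, 1),
    "res": init_conv(rng, 64, 64),
    "tail": init_conv(rng, 28, 64),
}
image = rng.random((32, 32))          # hypothetical 32 x 32 IR patch
subbands = udtcwt_net(image, params)
print(subbands.shape)                 # (28, 32, 32)
```

In training, the 28 output channels would be regressed against the UDTCWT subbands of the same image; the loss and optimizer are outside the scope of this sketch.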


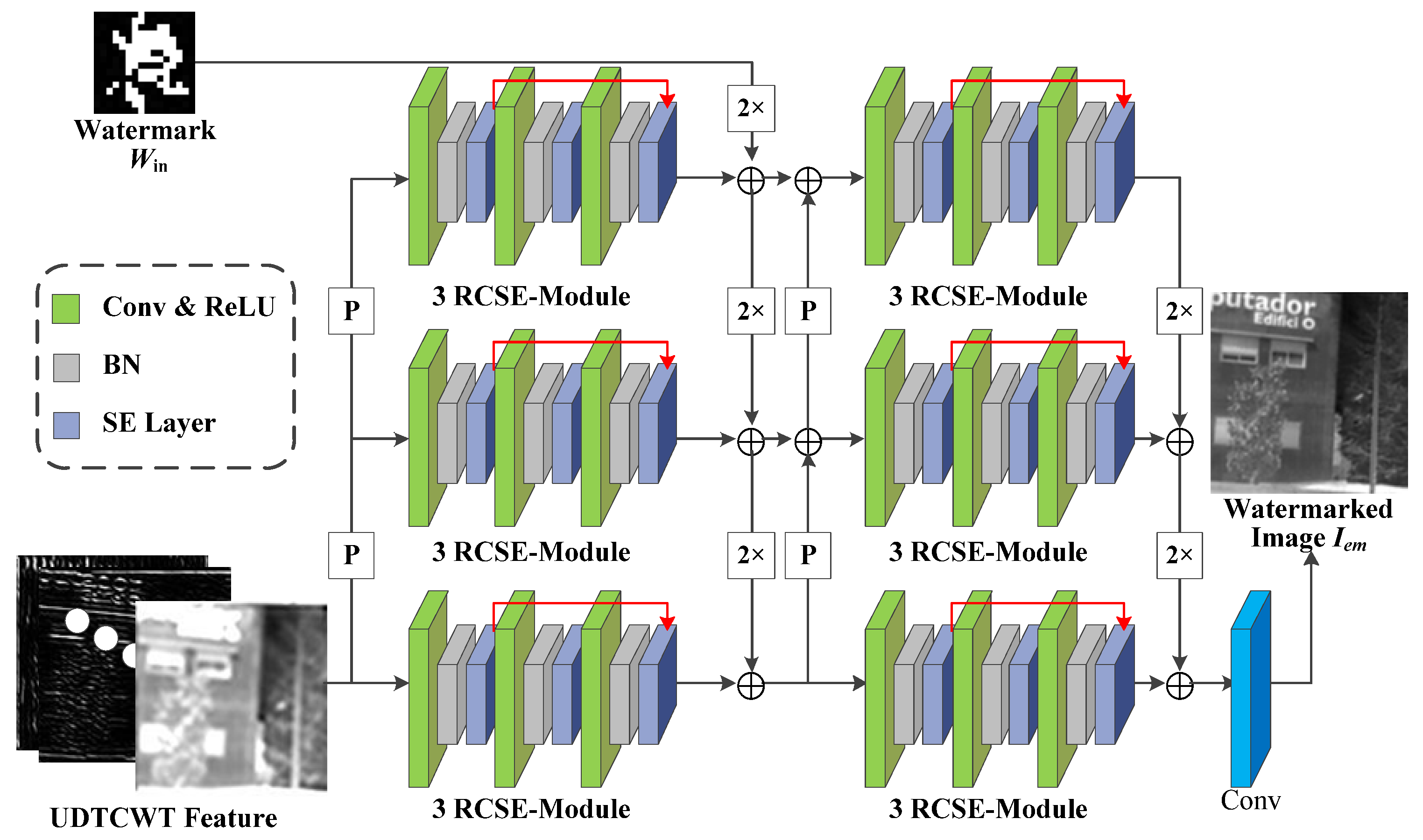

- Encoder: Figure 3 shows the network structure of the encoder, where “P” stands for a 2 × 2 average pooling operation, “2×” stands for a 64 × 3 × 3 doubly expanded convolutional layer, and (+) indicates the addition used to sum the features at each scale. The encoder encodes the input UDTCWT [16] features together with the original IR image into a fixed-dimensional tensor and extracts multi-scale image features while reducing the dimensionality of the input. Its structure is divided into three scales. On the one hand, the UDTCWT [16] subnet extracts features after zero, one, or two 1/2 downsampling steps to form three features of decreasing size. On the other hand, a doubly expanded convolutional layer scales the watermark image to twice or four times its size. The sizes at the three scales then match, which makes it possible to combine features at different scales through the downsampling and upsampling operations illustrated in Figure 3. This inter-scale feature fusion preserves more features of the original image during training, which improves the imperceptibility of the encoder. The Residual Convolutional SE (RCSE) module is a residual network structure consisting of three SE-Conv sub-blocks, whose structure is based on the channel attention module proposed by Cao et al. [46]. In each sub-block, the ReLU activation function introduces nonlinearity and the BN operation reduces internal covariate shift. After the tensor has passed through the 3 × 3 convolutional layer, the ReLU activation function, and the BN operation in sequence, the channel attention module adjusts the weights of the watermark features in different channels according to the relative importance of the channels. The attention module first reduces the tensor to a one-dimensional vector using global average pooling. Then, two Fully Connected (FC) layers with activation functions adjust this vector. Finally, the output of the attention module is multiplied by the input tensor to give the final result. After the encoder obtains the sum of the features at each scale, it outputs the watermarked IR image through a 64-dimensional 3 × 3 convolutional layer.
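The three steps of the channel attention module (global average pooling, two FC layers, channel-wise multiplication) can be illustrated with a short NumPy sketch. The text does not state which activations or reduction ratio are used, so ReLU followed by a sigmoid and a reduction ratio of 16 are assumptions borrowed from the common squeeze-and-excitation design; the function name `channel_attention` and the random weights are likewise hypothetical.

```python
import numpy as np


def channel_attention(features, w1, b1, w2, b2):
    """Rescale each channel of a (C, H, W) tensor by a learned gate."""
    squeezed = features.mean(axis=(1, 2))                 # global average pooling -> (C,)
    hidden = np.maximum(w1 @ squeezed + b1, 0.0)          # first FC layer + ReLU (assumed)
    gates = 1.0 / (1.0 + np.exp(-(w2 @ hidden + b2)))     # second FC layer + sigmoid (assumed)
    return features * gates[:, None, None], gates         # multiply gates with the input


rng = np.random.default_rng(0)
channels, reduction = 64, 16                              # reduction ratio is hypothetical
features = rng.normal(size=(channels, 16, 16))
w1 = rng.normal(scale=0.1, size=(channels // reduction, channels))
b1 = np.zeros(channels // reduction)
w2 = rng.normal(scale=0.1, size=(channels, channels // reduction))
b2 = np.zeros(channels)

scaled, gates = channel_attention(features, w1, b1, w2, b2)
print(scaled.shape, gates.shape)                          # (64, 16, 16) (64,)
```

Each gate lies strictly between 0 and 1, so the module can only attenuate channels relative to one another; the residual connection around the SE-Conv sub-blocks keeps the unscaled signal available.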


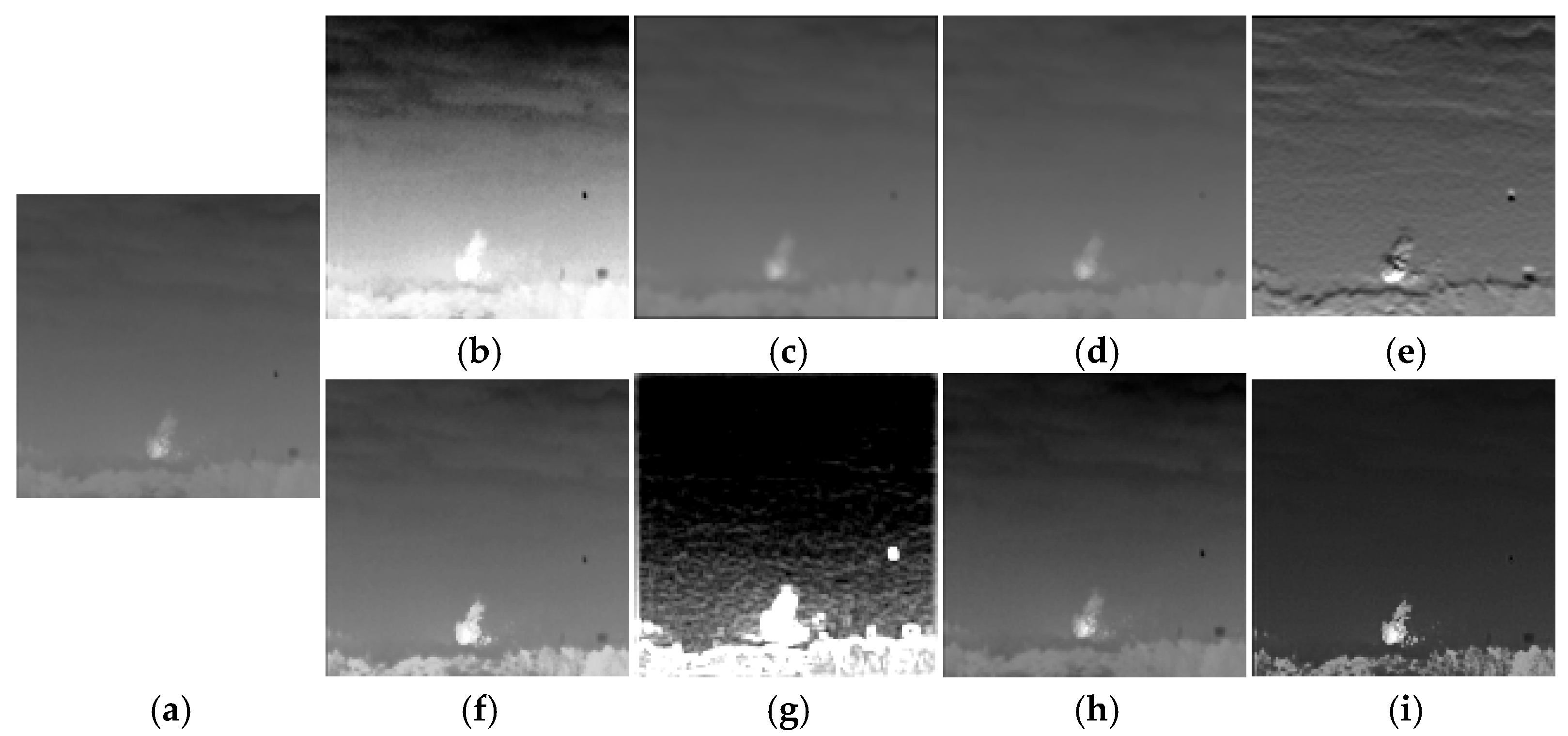

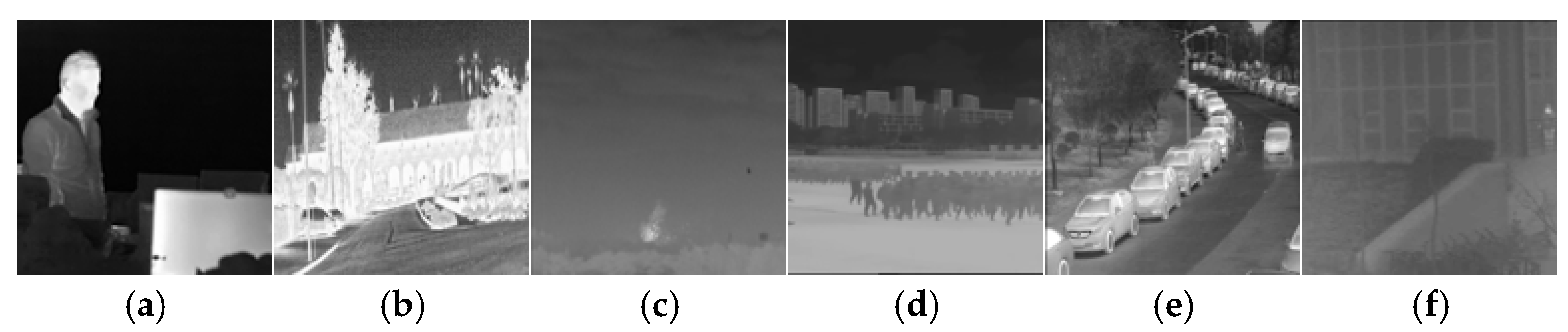

- Noisy Network: To learn the patterns of IRE attacks, we place the noise network between the encoder and decoder to simulate these attack methods. IRE attacks are categorized into traditional methods and machine learning-based methods. For the traditional methods, we choose eight IRE attacks as targets for model training. These are HE, the mean filter, the median filter, and the Sobel operator, together with four IRE attacks available from public GitHub repositories: Adaptive Histogram Partition and Brightness Correction (AHPBC) [47], Discrete Wavelet Transform and Event triggered Particle Swarm Optimization (DWT-EPSO), DeepVIF [48], and Adaptive Non-local Filter and Local Contrast (ANFLC) [49]. The code for these algorithms is written in MATLAB. Figure 4 illustrates the results of the eight traditional IRE algorithms.
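For reference, the four classical operations named above (HE, mean filter, median filter, Sobel operator) can be written in a few lines of NumPy. This is a sketch of the textbook operations only, assuming 8-bit grayscale input, 3 × 3 windows, and edge padding; it is not the noise layer itself, which simulates these attacks inside the training pipeline, and the function names are illustrative.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def _windows3x3(img):
    """Return all 3x3 neighborhoods of a 2-D image, shape (H, W, 3, 3)."""
    padded = np.pad(img, 1, mode="edge")
    return sliding_window_view(padded, (3, 3))


def histogram_equalization(img):
    """Map uint8 gray levels through the normalized cumulative histogram."""
    cdf = np.bincount(img.ravel(), minlength=256).cumsum()
    cdf_min = cdf[cdf > 0][0]                     # count of the darkest level present
    denom = max(cdf[-1] - cdf_min, 1)             # guard against constant images
    lut = np.round((cdf - cdf_min) / denom * 255.0).clip(0, 255).astype(np.uint8)
    return lut[img]


def mean_filter(img):
    return _windows3x3(img.astype(np.float64)).mean(axis=(-2, -1))


def median_filter(img):
    return np.median(_windows3x3(img.astype(np.float64)), axis=(-2, -1))


def sobel_magnitude(img):
    kx = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=np.float64)
    win = _windows3x3(img.astype(np.float64))
    gx = (win * kx).sum(axis=(-2, -1))            # horizontal gradient
    gy = (win * kx.T).sum(axis=(-2, -1))          # vertical gradient
    return np.hypot(gx, gy)


rng = np.random.default_rng(0)
ir = rng.integers(60, 120, size=(32, 32), dtype=np.uint8)   # low-contrast test image
attacked = {
    "HE": histogram_equalization(ir),
    "Mean Filter": mean_filter(ir),
    "Median Filter": median_filter(ir),
    "Sobel Operator": sobel_magnitude(ir),
}
for name, out in attacked.items():
    print(name, out.shape)
```

The median filter and the histogram lookup are not differentiable as written, so a trainable noise layer would need smooth approximations or a learned surrogate for these steps.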


- Decoder: Figure 6 illustrates the structure of the decoder. The decoder decodes the noisy image and maps the features flowing through the other sub-networks to the watermark information. Unlike the encoder, the decoder has only one residual convolution module per scale; the residual convolution modules themselves have exactly the same structure in the encoder and the decoder. The first scale undergoes two 2 × 2 average pooling operations with SE-Conv blocks to match the features of the other two scales in turn. To map the features to the watermark image, the decoder applies an FC layer at the end. We choose the scale with the smallest size to output the watermark image, because the FC layer connects to every element of the feature tensor. If the feature tensor has too many elements, the difficulty of model training increases substantially.
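The argument for attaching the FC layer to the smallest scale can be made concrete by counting weights. The sketch below applies 2 × 2 average pooling twice and compares the size of a dense layer at each scale. The feature size (64 × 128 × 128) and the watermark length (32 × 32 bits) are hypothetical values chosen for the arithmetic, and the helper names are illustrative.

```python
import numpy as np


def avg_pool2x2(features):
    """2x2 average pooling on a (C, H, W) tensor with even H and W."""
    c, h, w = features.shape
    return features.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))


def fc_weight_count(feature_shape, watermark_bits):
    """Weights of a dense layer from a flattened feature tensor to the watermark."""
    return int(np.prod(feature_shape)) * watermark_bits


channels, size = 64, 128              # hypothetical first-scale feature size
watermark_bits = 32 * 32              # hypothetical watermark length

scale1 = np.zeros((channels, size, size))
scale2 = avg_pool2x2(scale1)          # 1/2 resolution
scale3 = avg_pool2x2(scale2)          # 1/4 resolution

for name, feat in [("scale 1", scale1), ("scale 2", scale2), ("scale 3", scale3)]:
    print(name, feat.shape, fc_weight_count(feat.shape, watermark_bits))
```

Under these assumed sizes, the FC layer at the smallest scale needs 16 times fewer weights than one attached to the first scale, since each pooling step divides the number of feature elements by four.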

4.2. Loss Function

4.3. Training Process

5. Experimental Results

5.1. Datasets and Experimental Configuration

5.2. UDTCWT

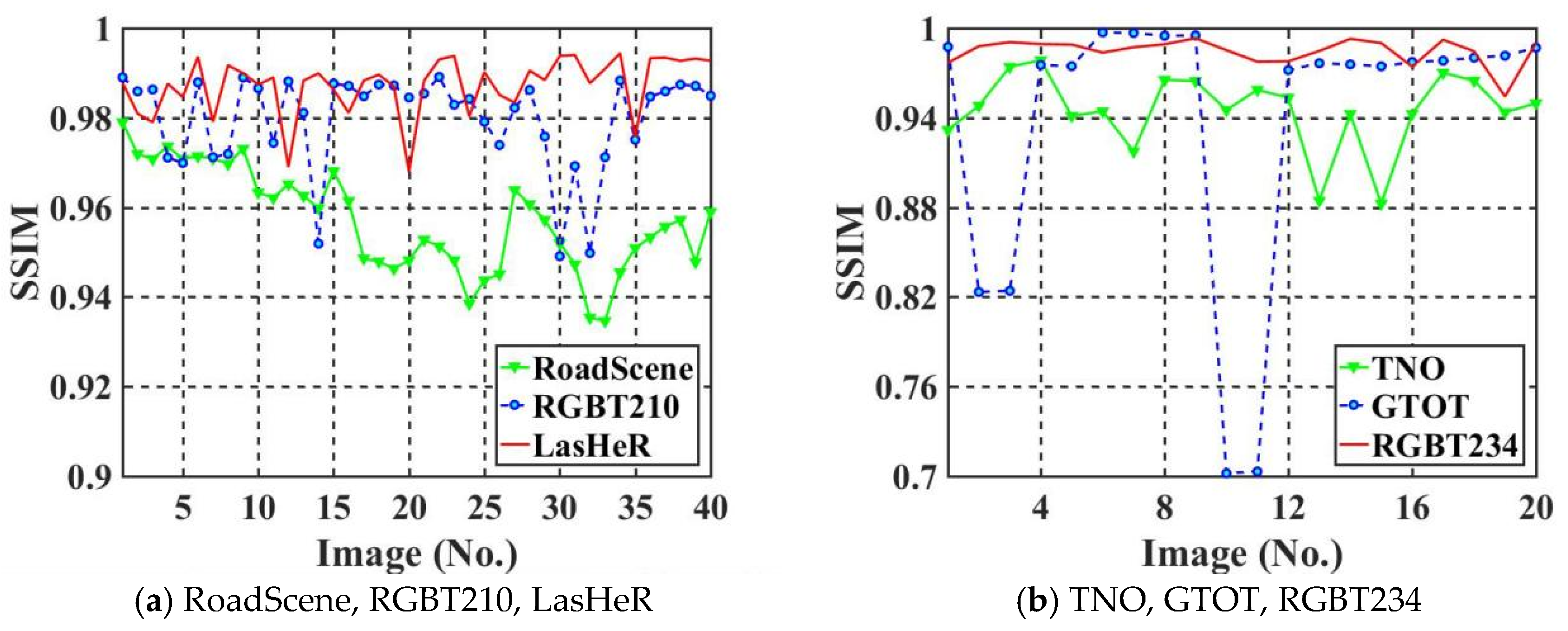

5.3. Imperceptibility Comparison

5.4. Robustness Comparison

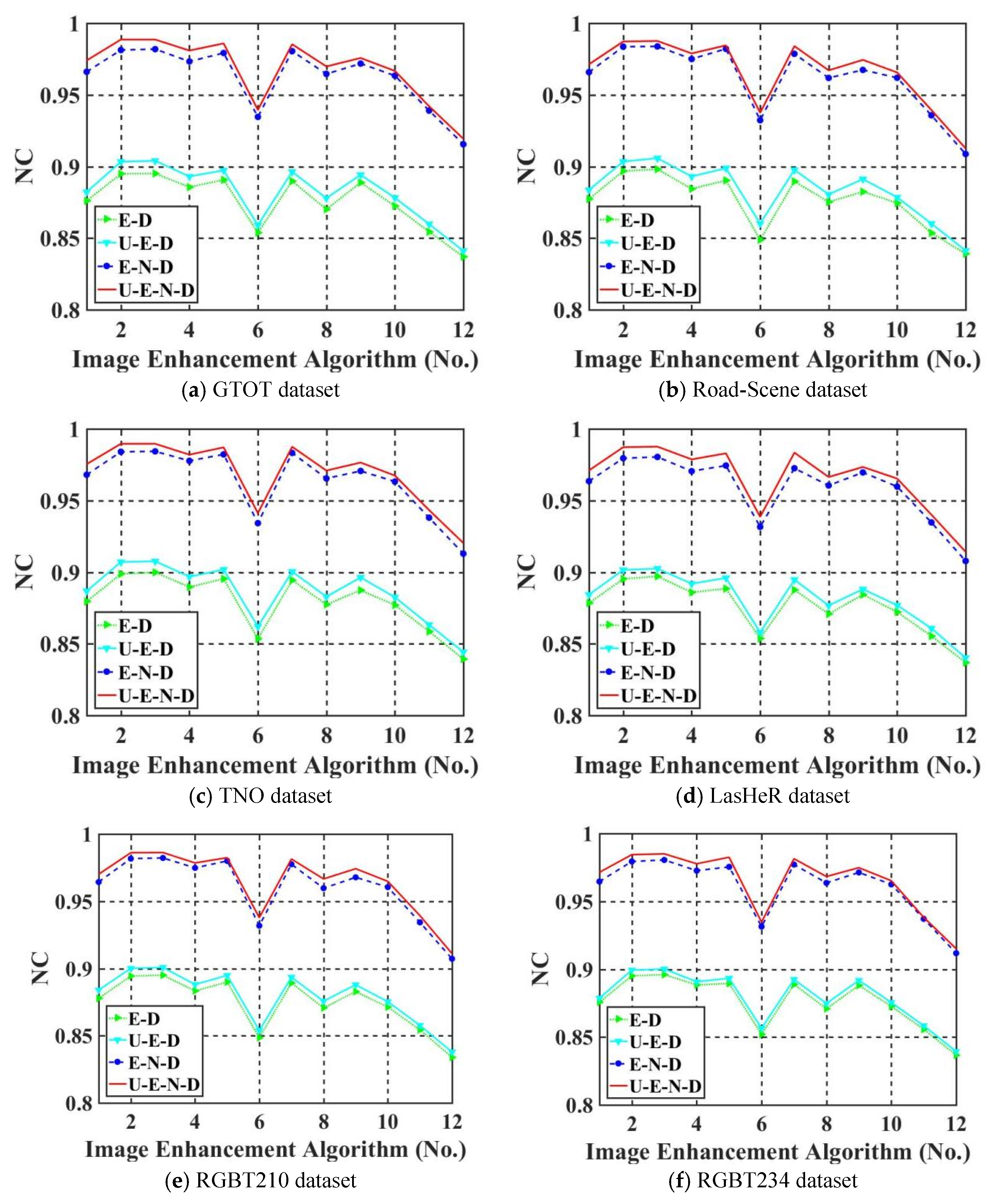

5.4.1. Ablation Study

5.4.2. Comparative Study

5.5. Watermark Capacity Test

6. Discussion

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| IR | Infrared |
| IRE | Infrared Enhancement |
| UDTCWT | Undecimated Dual Tree Complex Wavelet Transform |
| PSNR | Peak Signal to Noise Ratio |
| HE | Histogram Equalization |
| CNN | Convolutional Neural Network |
| ReLU | Rectified Linear Unit |
| GAN | Generative Adversarial Network |
| FFT | Fast Fourier Transform |
| DWT | Discrete Wavelet Transform |
| DNN | Deep Neural Network |
| DNST | Discrete Non-separable Shearlet Transform |
| PZM | Pseudo-Zernike Moments |
| DCT | Discrete Cosine Transform |
| WHT | Walsh Hadamard Transform |
| SVD | Singular Value Decomposition |
| GBT | Graph Based Transform |
| NCWT | Nondownsampled Contour Wave Transform |
| TEN | Thermal Image Enhancement using CNN |
| BCNN | Brightness-Based CNN |
| ONRDCNN | Optical Noise Removal Deep CNN |
| ACNN | Auto-Driving CNN |
| AHPBC | Adaptive Histogram Partition and Brightness Correction |
| DWT-EPSO | Discrete Wavelet Transform and Event triggered Particle Swarm Optimization |
| ANFLC | Adaptive Non-local Filter and Local Contrast |
| SGD | Stochastic Gradient Descent |
| HF | High Frequency |
| LF | Low Frequency |
| NC | Normalized Correlation |
| SSIM | Structural Similarity Index Measure |

References

1. Hildebrandt, C.; Raschner, C.; Ammer, K. An Overview of Recent Application of Medical Infrared Thermography in Sports Medicine in Austria. Sensors 2010, 10, 4700–4715.

2. Gong, J.; Fan, G.; Yu, L.; Havlicek, J.P.; Chen, D.; Fan, N. Joint View-identity Manifold for Infrared Target Tracking and Recognition. Comput. Vis. Image Underst. 2014, 118, 211–224.

3. Hwang, S.; Park, J.; Kim, N.; Choi, Y.; Kweon, I.S. Multispectral Pedestrian Detection: Benchmark Dataset and Baseline. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Boston, MA, USA, 7–12 June 2015; pp. 1037–1045.

4. Arrue, B.C.; Ollero, A.; de Dios, J.R.M. An Intelligent System for False Alarm Reduction in Infrared Forest-fire Detection. IEEE Intell. Syst. 2000, 15, 64–73.

5. Goldberg, A.C.; Fischer, T.; Derzko, Z.I. Application of Dual-band Infrared Focal Plane Arrays to Tactical and Strategic Military Problems. In Proceedings of the International Symposium on Optical Science and Technology, Seattle, WA, USA, 7–11 July 2002; Society of Photo-Optical Instrumentation Engineers: Bellingham, WA, USA, 2003; Volume 4820, pp. 500–514.

6. Janani, V.; Dinakaran, M. Infrared Image Enhancement Techniques—A Review. In Proceedings of the Second International Conference on Current Trends in Engineering and Technology-ICCTET, Coimbatore, India, 8 July 2014; pp. 167–173.

7. Paul, A.; Sutradhar, T.; Bhattacharya, P.; Maity, S.P. Infrared Images Enhancement Using Fuzzy Dissimilarity Histogram Equalization. Optik 2021, 247, 167887.

8. Wan, M.; Gu, G.; Maldague, X.; Qian, W.; Ren, K.; Chen, Q. Particle Swarm Optimization-based Local Entropy Weighted Histogram Equalization for Infrared Image Enhancement. Infrared Phys. Technol. 2018, 91, 164–181.

9. Paul, A.; Sutradhar, T.; Bhattacharya, P.; Maity, S.P. Adaptive Clip-limit-based Bi-histogram Equalization Algorithm for Infrared Image Enhancement. Appl. Opt. 2020, 59, 9032–9041.

10. Bhattacharya, P.; Riechen, J.; Zolzer, U. Infrared Image Enhancement in Maritime Environment with Convolutional Neural Networks. In Proceedings of the 13th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Funchal, Portugal, 27–29 January 2018; Volume 4, pp. 37–46.

11. Kuang, X.; Sui, X.; Liu, Y.; Chen, Q.; Gu, G. Single Infrared Image Enhancement Using a Deep Convolutional Neural Network. Neurocomputing 2019, 332, 119–128.

12. Qi, Y.; He, R.; Lin, H. Novel Infrared Image Enhancement Technology Based on The Frequency Compensation Approach. Infrared Phys. Technol. 2016, 76, 521–529.

13. Zhao, J.; Chen, Y.; Feng, H.; Xu, Z.; Li, Q. Infrared Image Enhancement Through Saliency Feature Analysis Based on Multi-scale Decomposition. Infrared Phys. Technol. 2014, 62, 86–93.

14. Wu, W.; Yang, X.; Li, H.; Liu, K.; Jian, L.; Zhou, Z. A Novel Scheme for Infrared Image Enhancement by Using Weighted Least Squares Filter and Fuzzy Plateau Histogram Equalization. Multimed. Tools Appl. 2017, 76, 24789–24817.

15. Ein-shoka, A.A.; Faragallah, O.S. Quality Enhancement of Infrared Images Using Dynamic Fuzzy Histogram Equalization and High Pass Adaptation in DWT. Optik 2018, 160, 146–158.

16. Hill, P.R.; Anantrasirichai, N.; Achim, A.; Al-Mualla, M.E.; Bull, D.R. Undecimated Dual-Tree Complex Wavelet Transforms. Signal Process. Image Commun. 2015, 35, 61–70.

17. Sharma, S.; Zou, J.; Fang, G.; Shukla, P.; Cai, W. A Review of Image Watermarking for Identity Protection and Verification. Multimed. Tools Appl. 2024, 83, 31829–31891.

18. Wang, G.; Ma, Z.; Liu, C.; Yang, X.; Fang, H.; Zhang, W.; Yu, N. MuST: Robust Image Watermarking for Multi-Source Tracing. In Proceedings of the 38th AAAI Conference on Artificial Intelligence, Vancouver, BC, Canada, 20–27 February 2024; Volume 38, pp. 5364–5371.

19. Singh, H.K.; Singh, A.K. Digital Image Watermarking Using Deep Learning. Multimed. Tools Appl. 2024, 83, 2979–2994.

20. Boujerfaoui, S.; Douzi, H.; Harba, R.; Ros, F. Cam-Unet: Print-Cam Image Correction for Zero-Bit Fourier Image Watermarking. Sensors 2024, 24, 3400.

21. Xiao, X.; Zhang, Y.; Hua, Z.; Xia, Z.; Weng, J. Client-Side Embedding of Screen-Shooting Resilient Image Watermarking. IEEE Trans. Inf. Forensics Secur. 2024, 19, 5357–5372.

22. Hasan, M.K.; Kamil, S.; Shafiq, M.; Yuvaraj, S.; Kumar, E.S.; Vincent, R.; Nafi, N.S. An Improved Watermarking Algorithm for Robustness and Imperceptibility of Data Protection in the Perception Layer of Internet of Things. Pattern Recognit. Lett. 2021, 152, 283–294.

23. Peng, F.; Wang, X.; Li, Y.; Niu, P. Statistical Learning Based Blind Image Watermarking Approach. Knowl.-Based Syst. 2024, 297, 111971.

24. Zeng, C.; Liu, J.; Li, J.; Cheng, J.; Zhou, J.; Nawaz, S.A.; Xiao, X.; Bhatti, U.A. Multi-watermarking Algorithm for Medical Image Based on KAZE-DCT. J. Ambient. Intell. Humaniz. Comput. 2024, 15, 1735–1743.

25. Kumar, C. Hybrid Optimization for Secure and Robust Digital Image Watermarking with DWT, DCT and SPIHT. Multimed. Tools Appl. 2024, 83, 31911–31932.

26. Kumar, S.; Verma, S.; Singh, B.K.; Kumar, V.; Chandra, S.; Barde, C. Entropy Based Adaptive Color Image Watermarking Technique in YCbCr Color Space. Multimed. Tools Appl. 2024, 83, 13725–13751.

27. Devi, K.J.; Singh, P.; Bilal, M.; Nayyar, A. Enabling Secure Image Transmission in Unmanned Aerial Vehicle Using Digital Image Watermarking with H-Grey Optimization. Expert Syst. Appl. 2024, 236, 121190.

28. Su, Q.; Hu, F.; Tian, X.; Su, L.; Cao, S. A Fusion-domain Intelligent Blind Color Image Watermarking Scheme Using Graph-based Transform. Opt. Laser Technol. 2024, 177, 111191.

29. Zhang, L.; Liu, X.; Martin, A.V.; Bearfield, C.X.; Brun, Y.; Guan, H. Robust Image Watermarking using Stable Diffusion. arXiv 2024, arXiv:2401.04247.

30. Gorbal, M.; Shelke, R.D.; Joshi, M. An Image Watermarking Scheme: Combining the Transform Domain and Deep Learning Modality with an Improved Scrambling Process. Int. J. Comput. Appl. 2024, 46, 310–323.

31. Olkkonen, H.; Pesola, P. Gaussian Pyramid Wavelet Transform for Multiresolution Analysis of Images. Graph. Models Image Process. 1996, 58, 394–398.

32. Yan, L.; Hao, Q.; Cao, J.; Saad, R.; Li, K.; Yan, Z.; Wu, Z. Infrared and Visible Image Fusion Via Octave Gaussian Pyramid Framework. Sci. Rep. 2021, 11, 1235.

33. Lai, W.S.; Huang, J.B.; Ahuja, N.; Yang, M.H. Deep Laplacian Pyramid Networks for Fast and Accurate Super-Resolution. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 624–632.

34. Qin, C.; Li, X.; Zhang, Z.; Li, F.; Zhang, X.; Feng, G. Print-Camera Resistant Image Watermarking with Deep Noise Simulation and Constrained Learning. IEEE Trans. Multimed. 2024, 26, 2164–2177.

35. Rai, M.; Goyal, S.; Pawar, M. An Optimized Deep Fusion Convolutional Neural Network-Based Digital Color Image Watermarking Scheme for Copyright Protection. Circuits Syst. Signal Process. 2023, 42, 4019–4050.

36. Choi, Y.; Kim, N.; Hwang, S.; Kweon, I.S. Thermal Image Enhancement using Convolutional Neural Network. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Republic of Korea, 9–14 October 2016; pp. 223–230.

37. Lee, K.; Lee, J.; Lee, J.; Hwang, S.; Lee, S. Brightness-Based Convolutional Neural Network for Thermal Image Enhancement. IEEE Access 2017, 5, 26867–26879.

38. Kuang, X.; Sui, X.; Liu, Y.; Chen, Q.; Gu, G. Single Infrared Image Optical Noise Removal Using a Deep Convolutional Neural Network. IEEE Photonics J. 2017, 10, 7800615.

39. Zhong, S.; Fu, L.; Zhang, F. Infrared Image Enhancement Using Convolutional Neural Networks for Auto-Driving. Appl. Sci. 2023, 13, 12581.

40. Li, C.; Cheng, H.; Hu, S.; Liu, X.; Tang, J.; Lin, L. Learning Collaborative Sparse Representation for Grayscale-thermal Tracking. IEEE Trans. Image Process. 2016, 25, 5743–5756.

41. Xu, H.; Wang, X.; Ma, J. DRF: Disentangled Representation for Visible and Infrared Image Fusion. IEEE Trans. Instrum. Meas. 2021, 70, 5006713.

42. Toet, A. The TNO Multiband Image Data Collection. Data Brief 2017, 15, 249–251.

43. Li, C.; Xue, W.; Jia, Y.; Qu, Z.; Luo, B.; Tang, J.; Sun, D. LasHeR: A Large-scale High-diversity Benchmark for RGBT Tracking. IEEE Trans. Image Process. 2021, 31, 392–404.

44. Li, C.; Zhao, N.; Lu, Y.; Zhu, C.; Tang, J. Weighted Sparse Representation Regularized Graph Learning for RGB-T Object Tracking. In Proceedings of the 25th ACM International Conference on Multimedia, Mountain View, CA, USA, 23–27 October 2017; pp. 1856–1864.

45. Li, C.; Liang, X.; Lu, Y.; Zhao, N.; Tang, J. RGB-T Object Tracking: Benchmark and Baseline. Pattern Recognit. 2019, 96, 106977.

46. Cao, F.; Guo, D.; Wang, T.; Yao, H.; Li, J.; Qin, C. Universal Screen-shooting Robust Image Watermarking with Channel-attention in DCT Domain. Expert Syst. Appl. 2024, 238, 122062.

47. Wan, M.; Gu, G.; Qian, W.; Ren, K.; Chen, Q.; Maldague, X. Infrared Image Enhancement Using Adaptive Histogram Partition and Brightness Correction. Remote Sens. 2018, 10, 682.

48. Qi, J.; Abera, D.E.; Fanose, M.N.; Wang, L.; Cheng, J. A Deep Learning and Image Enhancement Based Pipeline for Infrared and Visible Image Fusion. Neurocomputing 2024, 578, 127353.

49. Zhang, F.; Hu, H.; Wang, Y. Infrared Image Enhancement Based on Adaptive Non-local Filter and Local Contrast. Optik 2023, 292, 171407.

50. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention MICCAI International Conference, Munich, Germany, 5–9 October 2015; pp. 234–241.

51. Anand, A. A Dimensionality Reduction-based Approach for Secured Color Image Watermarking. Soft Comput. 2024, 28, 5137–5154.

52. Niu, P.; Wang, F.; Wang, X. SVD-UDWT Difference Domain Statistical Image Watermarking Using Vector Alpha Skew Gaussian Distribution. Circuits Syst. Signal Process. 2024, 43, 224–263.

(a) GTOT dataset, BER (%)

| Attack | Niu et al. [52] | Anand [51] | Singh et al. [19] | Cao et al. [46] | Proposed |
|---|---|---|---|---|---|
| HE | 2.6171 | 2.7343 | 2.5390 | 2.3632 | 1.6601 |
| Mean Filter | 1.7382 | 1.5625 | 1.0742 | 0.7812 | 0.7031 |
| Median Filter | 1.6601 | 1.5039 | 1.0546 | 0.7421 | 0.7031 |
| Sobel Operator | 1.9140 | 1.9531 | 1.8164 | 1.3085 | 1.2109 |
| AHPBC | 1.8554 | 1.7773 | 1.5429 | 1.1718 | 0.8789 |
| DWT-EPSO | 6.4257 | 6.2890 | 5.8007 | 4.7265 | 4.0625 |
| DeepVIF | 1.9726 | 1.7578 | 1.5625 | 1.2109 | 0.9179 |
| ANFLC | 2.9492 | 2.6953 | 2.6367 | 2.1875 | 1.9335 |

(b) Road-Scene dataset, BER (%)

| Attack | Niu et al. [52] | Anand [51] | Singh et al. [19] | Cao et al. [46] | Proposed |
|---|---|---|---|---|---|
| HE | 2.7734 | 2.8320 | 2.5683 | 2.5140 | 1.8359 |
| Mean Filter | 1.9140 | 1.6992 | 1.1816 | 0.8886 | 0.7910 |
| Median Filter | 1.8847 | 1.6503 | 1.1718 | 0.8789 | 0.7715 |
| Sobel Operator | 2.0996 | 1.9531 | 1.8945 | 1.3476 | 1.3281 |
| AHPBC | 2.0507 | 1.9238 | 1.7187 | 1.2792 | 0.9668 |
| DWT-EPSO | 6.7382 | 6.4843 | 5.8496 | 5.0195 | 4.1601 |
| DeepVIF | 2.1191 | 1.8847 | 1.8066 | 1.2988 | 0.9961 |
| ANFLC | 3.1347 | 3.0273 | 2.9394 | 2.4414 | 2.1191 |

(c) TNO dataset, BER (%)

| Attack | Niu et al. [52] | Anand [51] | Singh et al. [19] | Cao et al. [46] | Proposed |
|---|---|---|---|---|---|
| HE | 2.5585 | 2.5976 | 2.3828 | 2.3242 | 1.5625 |
| Mean Filter | 1.7187 | 1.5234 | 1.0156 | 0.7031 | 0.6445 |
| Median Filter | 1.6601 | 1.4843 | 0.9960 | 0.7031 | 0.6445 |
| Sobel Operator | 1.8750 | 1.8554 | 1.7578 | 1.1914 | 1.1328 |
| AHPBC | 1.8164 | 1.7187 | 1.4843 | 1.1328 | 0.8007 |
| DWT-EPSO | 6.3671 | 6.1914 | 5.6250 | 4.6484 | 3.9257 |
| DeepVIF | 1.9140 | 1.6796 | 1.5625 | 1.0937 | 0.7812 |
| ANFLC | 2.8515 | 2.7148 | 2.5195 | 2.0507 | 1.8554 |

(d) LasHeR dataset, BER (%)

| Attack | Niu et al. [52] | Anand [51] | Singh et al. [19] | Cao et al. [46] | Proposed |
|---|---|---|---|---|---|
| HE | 2.7929 | 2.8515 | 2.6074 | 2.5976 | 1.8652 |
| Mean Filter | 1.9335 | 1.6894 | 1.2304 | 0.8984 | 0.8007 |
| Median Filter | 1.9042 | 1.6601 | 1.2109 | 0.8984 | 0.7715 |
| Sobel Operator | 2.1093 | 1.9824 | 1.9042 | 1.3867 | 1.3476 |
| AHPBC | 2.0703 | 1.9335 | 1.7578 | 1.2792 | 1.0742 |
| DWT-EPSO | 6.7089 | 6.5234 | 5.8105 | 5.1074 | 4.1406 |
| DeepVIF | 2.1582 | 1.9628 | 1.8652 | 1.2890 | 1.1035 |
| ANFLC | 3.1738 | 3.1054 | 2.9004 | 2.4707 | 2.1582 |

(e) RGBT210 dataset, BER (%)

| Attack | Niu et al. [52] | Anand [51] | Singh et al. [19] | Cao et al. [46] | Proposed |
|---|---|---|---|---|---|
| HE | 2.8320 | 2.8613 | 2.6367 | 2.6074 | 1.9140 |
| Mean Filter | 1.9531 | 1.7187 | 1.2597 | 0.9082 | 0.8691 |
| Median Filter | 1.9043 | 1.6992 | 1.2207 | 0.8886 | 0.8593 |
| Sobel Operator | 2.1386 | 2.0019 | 1.9140 | 1.3574 | 1.3574 |
| AHPBC | 2.0800 | 1.9824 | 1.7285 | 1.2988 | 1.1035 |
| DWT-EPSO | 6.7871 | 6.5430 | 5.8691 | 5.0292 | 4.1992 |
| DeepVIF | 2.1875 | 2.0117 | 1.9531 | 1.4062 | 1.1719 |
| ANFLC | 3.2031 | 3.1152 | 3.0761 | 2.4902 | 2.1484 |

(f) RGBT234 dataset, BER (%)

| Attack | Niu et al. [52] | Anand [51] | Singh et al. [19] | Cao et al. [46] | Proposed |
|---|---|---|---|---|---|
| HE | 2.7148 | 2.8125 | 2.6757 | 2.4609 | 1.8554 |
| Mean Filter | 1.7773 | 1.6015 | 1.1718 | 1.0351 | 0.9765 |
| Median Filter | 1.7187 | 1.5625 | 1.1132 | 1.0156 | 0.9375 |
| Sobel Operator | 1.9921 | 2.0312 | 1.9335 | 1.5234 | 1.4062 |
| AHPBC | 1.8750 | 1.8164 | 1.6015 | 1.2695 | 1.0937 |
| DWT-EPSO | 6.5039 | 6.4062 | 5.9375 | 4.9218 | 4.4140 |
| DeepVIF | 2.0507 | 1.8164 | 1.6992 | 1.4062 | 1.1718 |
| ANFLC | 3.1250 | 2.9687 | 2.7929 | 2.3437 | 2.0312 |

(a) GTOT dataset, BER (%)

| Attack | Niu et al. [52] | Anand [51] | Singh et al. [19] | Cao et al. [46] | Proposed |
|---|---|---|---|---|---|
| TEN | 3.2812 | 3.1250 | 2.4804 | 1.8554 | 1.5429 |
| BCNN | 3.8085 | 3.7695 | 2.7539 | 2.3046 | 2.1484 |
| ONRDCNN | 7.4609 | 7.0898 | 5.9765 | 4.9804 | 3.8671 |
| ACNN | 7.7929 | 7.5781 | 6.3671 | 6.1718 | 5.5859 |

(b) Road-Scene dataset, BER (%)

| Attack | Niu et al. [52] | Anand [51] | Singh et al. [19] | Cao et al. [46] | Proposed |
|---|---|---|---|---|---|
| TEN | 3.3789 | 3.2324 | 2.5488 | 2.0117 | 1.6211 |
| BCNN | 4.1210 | 4.0722 | 3.0175 | 2.4707 | 2.2168 |
| ONRDCNN | 7.6171 | 7.4902 | 6.3574 | 5.3515 | 4.0136 |
| ACNN | 8.5058 | 8.2128 | 6.8847 | 6.5527 | 5.9667 |

(c) TNO dataset, BER (%)

| Attack | Niu et al. [52] | Anand [51] | Singh et al. [19] | Cao et al. [46] | Proposed |
|---|---|---|---|---|---|
| TEN | 3.1640 | 3.0468 | 2.3046 | 1.7382 | 1.4843 |
| BCNN | 3.7304 | 3.5546 | 2.7148 | 2.2460 | 2.0898 |
| ONRDCNN | 7.3828 | 7.0117 | 5.8203 | 4.9218 | 3.7695 |
| ACNN | 7.6367 | 7.4804 | 6.2890 | 6.1328 | 5.4687 |

(d) LasHeR dataset, BER (%)

| Attack | Niu et al. [52] | Anand [51] | Singh et al. [19] | Cao et al. [46] | Proposed |
|---|---|---|---|---|---|
| TEN | 3.4375 | 3.2714 | 2.5683 | 2.0312 | 1.6992 |
| BCNN | 4.2188 | 4.2089 | 3.0273 | 2.4218 | 2.2363 |
| ONRDCNN | 7.6660 | 7.5488 | 6.3964 | 5.3710 | 4.0234 |
| ACNN | 8.7402 | 8.2910 | 6.9140 | 6.6992 | 6.0059 |

(e) RGBT210 dataset, BER (%)

| Attack | Niu et al. [52] | Anand [51] | Singh et al. [19] | Cao et al. [46] | Proposed |
|---|---|---|---|---|---|
| TEN | 3.5449 | 3.5058 | 2.6269 | 2.0605 | 1.6406 |
| BCNN | 4.2578 | 4.2383 | 3.0273 | 2.5097 | 2.2656 |
| ONRDCNN | 7.8417 | 7.6563 | 6.4746 | 5.4395 | 4.0820 |
| ACNN | 8.9063 | 8.6621 | 6.9727 | 6.8945 | 6.0937 |

(f) RGBT234 dataset, BER (%)

| Attack | Niu et al. [52] | Anand [51] | Singh et al. [19] | Cao et al. [46] | Proposed |
|---|---|---|---|---|---|
| TEN | 3.6328 | 3.4375 | 2.7343 | 2.0507 | 1.6015 |
| BCNN | 3.9453 | 4.0429 | 2.8125 | 2.4414 | 2.2460 |
| ONRDCNN | 7.5976 | 7.1875 | 6.1523 | 5.1757 | 4.1601 |
| ACNN | 7.9492 | 7.8125 | 6.5039 | 6.3085 | 5.9179 |

(a) Proposed

| Attack | GTOT | Road-Scene | TNO | LasHeR | RGBT210 | RGBT234 |
|---|---|---|---|---|---|---|
| HE | 3.9746 | 4.3383 | 4.0673 | 6.5429 | 5.9521 | 7.4560 |
| Mean Filter | 1.9677 | 2.2363 | 1.8554 | 3.5986 | 3.4350 | 3.8769 |
| Median Filter | 1.7431 | 2.0263 | 1.7675 | 3.2812 | 3.0078 | 3.6279 |
| Sobel Operator | 3.6425 | 3.2275 | 3.0371 | 5.1416 | 4.4653 | 5.7421 |
| AHPBC | 2.0166 | 2.2949 | 2.1240 | 4.5507 | 3.7304 | 4.4677 |
| DWT-EPSO | 9.1650 | 10.4370 | 8.9648 | 13.3447 | 12.3046 | 13.8769 |
| DeepVIF | 2.3730 | 2.4291 | 2.3437 | 4.8754 | 3.9111 | 5.0830 |
| ANFLC | 4.9609 | 5.1513 | 5.6396 | 8.7329 | 7.6708 | 9.1308 |
| TEN | 4.1162 | 3.9868 | 3.4423 | 5.5664 | 5.0122 | 6.0302 |
| BCNN | 4.5605 | 5.1293 | 4.4238 | 8.2104 | 7.0922 | 7.9589 |
| ONRDCNN | 8.3789 | 10.1391 | 8.7060 | 12.4682 | 11.8334 | 13.1689 |
| ACNN | 13.1152 | 14.1577 | 12.5732 | 18.0249 | 17.2924 | 18.9550 |

(b) Cao et al. [46]

| Attack | GTOT | Road-Scene | TNO | LasHeR | RGBT210 | RGBT234 |
|---|---|---|---|---|---|---|
| HE | 7.7881 | 8.3105 | 7.1533 | 8.7305 | 9.0430 | 10.3418 |
| Mean Filter | 2.6074 | 3.1982 | 2.1826 | 3.5742 | 3.5547 | 4.0967 |
| Median Filter | 2.3438 | 3.0615 | 2.1387 | 3.3447 | 3.2178 | 3.6719 |
| Sobel Operator | 4.9756 | 5.3223 | 4.1406 | 6.0986 | 5.3223 | 6.8115 |
| AHPBC | 3.8672 | 4.9121 | 3.3789 | 5.3711 | 4.3994 | 4.6045 |
| DWT-EPSO | 12.4951 | 15.8545 | 12.0947 | 17.0898 | 16.4453 | 17.5684 |
| DeepVIF | 3.5498 | 4.4824 | 3.1689 | 4.1504 | 5.5273 | 6.4893 |
| ANFLC | 7.1104 | 8.9756 | 6.1094 | 9.2588 | 9.6494 | 10.1172 |
| TEN | 4.8438 | 6.0156 | 4.4678 | 6.7676 | 7.6074 | 7.2705 |
| BCNN | 5.9570 | 9.5068 | 5.5762 | 8.9404 | 9.9121 | 9.6191 |
| ONRDCNN | 11.5918 | 14.1064 | 11.0791 | 14.7363 | 15.4785 | 15.6934 |
| ACNN | 14.8730 | 20.1660 | 14.5117 | 20.6299 | 21.0352 | 20.6582 |

(c) Singh et al. [19]

| Attack | GTOT | Road-Scene | TNO | LasHeR | RGBT210 | RGBT234 |
|---|---|---|---|---|---|---|
| HE | 8.2617 | 8.9697 | 7.6221 | 9.3896 | 9.8926 | 11.7188 |
| Mean Filter | 2.9053 | 3.3643 | 2.2852 | 3.9307 | 4.1406 | 4.5264 |
| Median Filter | 2.8711 | 3.2373 | 2.1045 | 3.4229 | 3.7939 | 4.0967 |
| Sobel Operator | 5.7031 | 5.4492 | 4.9365 | 7.2266 | 7.6758 | 8.5791 |
| AHPBC | 5.3320 | 5.8691 | 4.6289 | 6.9287 | 6.3623 | 7.4707 |
| DWT-EPSO | 14.5654 | 15.7275 | 13.6816 | 17.1680 | 16.0156 | 17.4365 |
| DeepVIF | 4.6924 | 5.7715 | 3.8428 | 6.2012 | 6.9043 | 8.0859 |
| ANFLC | 8.7842 | 9.5850 | 8.1445 | 9.1650 | 10.1807 | 9.8145 |
| TEN | 5.9326 | 6.8945 | 5.0439 | 7.6367 | 8.3301 | 9.0869 |
| BCNN | 7.6465 | 9.1455 | 6.1963 | 9.9756 | 9.6289 | 10.5811 |
| ONRDCNN | 12.8418 | 14.2139 | 11.9092 | 16.1621 | 16.6406 | 17.2705 |
| ACNN | 16.7725 | 18.4180 | 15.6787 | 20.9619 | 21.6162 | 22.3291 |

(d) Anand [51]

| Attack | GTOT | Road-Scene | TNO | LasHeR | RGBT210 | RGBT234 |
|---|---|---|---|---|---|---|
| HE | 12.6709 | 13.9355 | 11.7627 | 14.3652 | 15.2979 | 16.1328 |
| Mean Filter | 5.1465 | 6.8652 | 4.8291 | 6.3965 | 7.9541 | 8.4277 |
| Median Filter | 4.4092 | 5.9229 | 3.7598 | 6.0547 | 6.7139 | 7.5879 |
| Sobel Operator | 9.4824 | 9.7852 | 8.5742 | 10.5859 | 11.2061 | 11.9336 |
| AHPBC | 7.5781 | 8.1348 | 7.2852 | 8.8916 | 9.3262 | 9.9268 |
| DWT-EPSO | 19.4482 | 21.8506 | 18.3740 | 22.6855 | 23.6523 | 25.0928 |
| DeepVIF | 7.9639 | 8.7012 | 7.2559 | 9.5361 | 10.0928 | 10.4150 |
| ANFLC | 11.6260 | 12.9199 | 12.2412 | 13.8770 | 14.2041 | 15.6934 |
| TEN | 9.7803 | 11.6260 | 8.7549 | 11.9385 | 13.3105 | 14.3408 |
| BCNN | 14.5898 | 15.3809 | 13.6670 | 16.1621 | 16.7432 | 17.7295 |
| ONRDCNN | 18.0664 | 20.1807 | 17.4023 | 21.0107 | 21.9629 | 23.3105 |
| ACNN | 21.3574 | 24.4092 | 19.7949 | 25.2051 | 26.7627 | 29.0918 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bai, Y.; Li, L.; Zhang, S.; Lu, J.; Luo, T. IEWNet: Multi-Scale Robust Watermarking Network Against Infrared Image Enhancement Attacks. J. Imaging 2025, 11, 171. https://doi.org/10.3390/jimaging11050171
