Abstract

This paper presents a comprehensive investigation into advanced image processing using geodesic filtering within a Riemannian manifold framework. We introduce a novel geodesic filtering formulation that uniquely integrates spatial and intensity relationships through minimal path computation, demonstrating significant improvements in edge preservation and noise reduction compared to conventional methods. Our quantitative analysis using peak signal-to-noise ratio (PSNR) and structural similarity index (SSIM) metrics across diverse image types reveals that our approach outperforms traditional techniques in preserving fine details while effectively suppressing both Gaussian and non-Gaussian noise. We developed an automatic parameter optimization methodology that eliminates manual tuning by identifying optimal filtering parameters based on image characteristics. Additionally, we present a highly optimized GPU implementation featuring innovative wave-propagation algorithms and memory access optimization techniques that achieve a 200× speedup, making geodesic filtering practical for real-time applications. Our work bridges the gap between theoretical elegance and computational practicality, establishing geodesic filtering as a superior solution for challenging image processing tasks in fields ranging from medical imaging to remote sensing.

1. Introduction

The central challenge in image filtering lies in achieving an optimal balance between noise reduction and the preservation of essential image features. Traditional methods rely on carefully designed mathematical models to selectively smooth images while retaining edges and textures. In contrast, modern neural network approaches employ data-driven learning to obtain similar objectives. However, both methodologies face inherent limitations: conventional methods often struggle with complex noise patterns, whereas neural networks typically require extensive training data and frequently lack robust theoretical guarantees, leading to unpredictable behavior.

In response to these challenges, this paper makes four specific contributions to the field of image processing:

- Novel Geodesic Filtering Framework: We introduce a mathematically rigorous formulation of geodesic filtering that uniquely leverages Riemannian manifold theory to combine spatial and intensity information in a unified framework. Unlike other filters, the geodesic approach adapts to local image characteristics through

- Geometric distance metrics in a manifold: Allowing for a more natural measurement of similarity between pixels by integrating both spatial and intensity relationships.

- Local curvature considerations: Preserving the intrinsic structure of image features, especially along edges and contours.

- Adaptive kernel sizing based on manifold properties: Dynamically adjusting the filtering window in response to the local geometry of the image.

Unlike previous approaches that treat these domains separately, our method computes minimal geodesic paths that inherently respect image structure, offering significant advantages, including

- Enhanced edge preservation: Retaining sharp transitions and fine details.

- Superior noise reduction: Effectively suppressing both Gaussian and non-Gaussian noise.

- Fine texture detail retention: Maintaining subtle textures while reducing unwanted noise.

- Effective handling of variable noise levels: Adapting robustly to different noise intensities across the image.

- Sharp transition preservation during noise smoothing: Avoiding over-smoothing at boundaries.

- Enhanced performance with non-Gaussian noise: Outperforming traditional methods in challenging noise conditions such as speckle noise.

- Improved outlier robustness: Providing resilience against anomalous data points.

- 2.

- Comprehensive Comparative Analysis: We present the first systematic evaluation of geodesic filtering against state-of-the-art alternatives (including anisotropic diffusion, bilateral filtering, least median of squares, and deep image prior techniques) using standardized metrics (PSNR and SSIM) across diverse image types and noise conditions.

- 3.

- Parameter Optimization Framework: We developed a novel methodology for automatically determining optimal filtering parameters based on image characteristics. This approach eliminates the manual trial-and-error process typically required in advanced filtering techniques, making geodesic filtering more accessible for practical applications.

- 4.

- High-Performance CUDA Implementation: We introduce specific technical innovations in our GPU implementation that overcome the inherent computational complexity of geodesic filtering. Our wave-propagation algorithm and memory conflict resolution techniques achieve a 200× speedup over conventional CPU implementations, transforming geodesic filtering from a theoretically superior but computationally prohibitive method into a practical solution for real-time applications.

These contributions collectively bridge the gap between theoretical mathematical models and practical image processing applications, establishing geodesic filtering as both a robust theoretical framework and an effective computational tool for advanced image processing.

The remainder of this paper is organized as follows. In Section 2, we review the current literature on non-linear methods for image filtering, detailing their operational principles and implementation strategies. Section 3 introduces the fundamental mathematical concepts underlying geodesic filtering within a Riemannian manifold framework. In Section 4, we present extensive experimental results obtained from processing a diverse image database to demonstrate the impact of various filtering parameters on performance. We also conducted a comparative analysis with other state-of-the-art non-linear filtering techniques. Section 5 describes our highly optimized CUDA C implementation that leverages GPU parallelism to address the computational challenges of geometric filtering. Finally, Section 6 concludes the paper by discussing the strengths and limitations of our approach and Section 7 outlines potential extensions to more complex 3D and 4D manifolds.

2. Literature Review

Early methods primarily targeted basic noise reduction using straightforward linear or spatial operations, often at the expense of image structure. Non-linear filtering methodologies represent a sophisticated class of image processing techniques that go beyond traditional linear approaches. These can be categorized into four distinct approaches, each with unique mathematical foundations and operational principles.

Anisotropic diffusion, the first category, draws from partial differential equations and heat flow theory. This approach intelligently modulates the diffusion process based on local image characteristics, allowing homogeneous regions to be smoothed while crucial edge features remain intact. By adapting the diffusion coefficient to the local gradient magnitude, these filters can reduce noise while preserving the structural integrity of images.

The second approach leverages robust statistics to create filters that minimize the influence of edges during smoothing operations. These methods typically replace standard mean calculations with robust estimators like least median square (LMS) that are less sensitive to outliers, effectively treating edge pixels as statistical anomalies. This statistical foundation allows for effective noise reduction without the edge blurring commonly associated with linear filtering techniques, providing superior performance in environments with varying noise distributions.

Geodesic filtering, the third category, represents a more geometrically oriented approach based on differential geometry and manifold theory. This methodology conceptualizes images as high-dimensional manifolds embedded in feature space. By computing geodesic distances, these filters can adaptively process images according to their inherent geometric properties. This approach excels at preserving fine structures and textural details while removing noise.

The fourth category encompasses neural-network-based methods, which leverage data-driven approaches to optimize filtering parameters. Unlike traditional approaches that rely on predefined mathematical models, these methods learn optimal filtering strategies directly from training data. By exposing networks to pairs of noisy and clean images, these systems can discover complex non-linear relationships that effectively separate signal from noise. The resulting filters often demonstrate remarkable adaptability across various noise types and image characteristics, though their performance depends heavily on the quality and diversity of the training data. This analysis specifically examines algorithms that do not require training data, thus excluding conventional neural network methods. For readers interested in deep-learning-based image denoising techniques, reference [1] offers a comprehensive overview. The Deep Image Prior (DIP) network [2] is the only neural network approach considered here, as it uniquely functions without pre-training requirements.

Each of these approaches offers distinct advantages and limitations, with their effectiveness varying according to specific application requirements, computational constraints, and the nature of the image data being processed.

2.1. Gradient Anisotropic Diffusion

Gradient anisotropic diffusion (GAD), as introduced by Perona and Malik [3], fundamentally reimagines image processing through the lens of heat diffusion equations. The core mathematical formulation begins with a partial differential diffusion equation:

where represents the image intensity at position and time , and is the diffusion coefficient. This formulation builds upon the classical heat equation but introduces crucial non-linearity through the spatially varying diffusion coefficient.

The diffusion coefficient, as noted by Weickert et al. [4], plays a pivotal role in controlling the filtering process. It typically takes the form

where is a function that reduces diffusion coefficient at the edges. Common formulations of , as discussed by [5], include

where represents a threshold parameter controlling edge sensitivity.

The theoretical significance of this framework lies in its ability to achieve selective smoothing. As demonstrated by Alvarez et al. [5], the process preserves edges by reducing diffusion across high-gradient regions while promoting smoothing in homogeneous areas. The mathematical analysis by Weickert et al. [4] showed that this approach creates a scale-space representation with important theoretical properties:

- Causality: No spurious details are created with increasing scale.

- Immediate stabilization: Edge enhancement occurs in early iterations.

- Localization: Edges remain stable during the diffusion process.

On the other hand, GAD exhibits significant sensitivity to parameter configuration, with its effectiveness heavily reliant on appropriate selection of diffusion coefficients and iteration counts. The method frequently encounters stability and convergence challenges when parameters are improperly configured, creating substantial difficulty in establishing automated optimal termination criteria for the diffusion process. Additionally, its filtering capabilities show marked deterioration when confronted with impulse noise patterns or image regions containing complex textures, limiting its applicability across diverse image processing scenarios.

2.2. Curvature Anisotropic Diffusion Framework

Curvature anisotropic diffusion (CAD) formulation differs fundamentally from the original work of Perona and Malik by incorporating geometric information through the introduction of a mean curvature term. Initially proposed by Alvarez et al. [6] and further developed by Sapiro et al. [7], this framework offers superior preservation of geometric features while effectively reducing noise. The diffusion equation for curvature anisotropic diffusion is

where

- represents the image intensity at position and time ;

- denotes the mean curvature of the level sets;

- is a decreasing function that controls the diffusion coefficient;

- is the image gradient;

- div represents the divergence operator.

For the right conditions, the CAD framework demonstrates exceptional ability to preserve critical image features such as edges and boundaries while effectively reducing noise. Its incorporation of geometric curvature information enables superior structural integrity maintenance compared to gradient-based methods. Unlike GAD, CAD incorporates mean curvature of level sets, allowing it to more faithfully respect the intrinsic geometry of image content, particularly along curved structures.

However, CAD’s performance is heavily influenced by appropriate parameter selection. The threshold parameters within the g(κ) function demand careful calibration to achieve optimal results. This makes proper CAD implementation challenging, especially in discrete domains, potentially leading to numerical instabilities. Determining the ideal iteration count presents another non-trivial challenge, frequently requiring manual adjustment or sophisticated stopping criteria. While CAD performs well against Gaussian noise, it may exhibit reduced effectiveness when confronting other noise varieties such as impulse or speckle noise without specific adaptations.

2.3. Bilateral Filter Framework

First introduced by Tomasi et al. [8], this non-linear technique revolutionized image processing by combining domain and range filtering in a single, unified framework without having to compute gradients. The method’s fundamental innovation lies in its ability to consider both spatial proximity and photometric similarity simultaneously.

The bilateral filter, as elaborated by Durand and Dorsey [9], operates on two fundamental principles:

- Spatial Domain Filtering: Pixels are weighted based on their spatial distance from the center pixel, following a Gaussian distribution. This component ensures that nearby pixels have more influence than distant ones.

- Signal Domain Filtering: Pixels are additionally weighted based on their photometric (intensity or color) similarity to the center pixel, again using a Gaussian distribution. This component ensures edge preservation by reducing the influence of pixels with significantly different intensities.

Paris et al. [10] formalized its mathematical formulation as

where

- represent the input image intensity;

- denotes the current pixel position;

- represents the spatial neighborhood;

- denotes the neighboring pixel position;

- and are Gaussian functions for spatial and range domains;

- is the normalization factor.

Studies by Kaplan et al. [11] showed that this algorithm possesses superior noise reduction capabilities:

- Effective reduction of random noise;

- Preservation of underlying signal structure;

- Minimal introduction of artifacts.

On the other hand, bilateral filtering’s effectiveness is significantly contingent on the appropriate selection of spatial and range parameters, necessitating meticulous calibration for optimal results across various applications. While the filter performs admirably against Gaussian noise, it demonstrates reduced efficacy when confronting alternative noise types such as impulse noise or structured noise patterns. Additionally, the filter can generate intensity shift artifacts in high-gradient regions. This creates edge localization challenges where, in areas with gradual transitions, the filter may not accurately maintain edge positions, potentially causing subtle displacement of boundary locations. Certain parameter configurations can also introduce unwanted piecewise constant regions, resulting in an artificial “staircase” effect in what should be smooth gradient areas.

2.4. Robust Least Median of Squares Filtering

While traditional filtering approaches like CAD, GAD, or bilateral filtering can address specific noise types, they often struggle with mixed noise patterns or fail to preserve important image features. Using the least median of squares (LMS) regression methods, introduced by Rousseeuw [12,13], offers a robust framework capable of handling up to 50% outlier contamination, making it ideal for edge preserving filters. Recent applications of LMS include edge-preserving smoothing [14] and feature detection [15].

The LMS filter processes images by looking at small windows of pixels of size (typically 5 × 5 or 7 × 7) around a central pixel . Let us define a local image color approximation for this local window as polynomial of degree d:

where are the local coordinates of the pixels that are located inside the window; is the polynomial coefficients for the color channel ; and is the order of the polynomial, typically 1 or 2.

The role of an LMS estimator is to determine the best coefficients that minimize the median error between the pixels in the window and the polynomial model:

where is the color value in channel for pixel inside the window, is the local polynomial model, and is the number of pixels in the processing window.

In each window, the algorithm tries to fit the polynomial model to the pixel values using a RANSAC (random sample consensus) algorithm by Fischler and Bolles [16] where a minimum sample set equal to is used to compute candidate model coefficients (t). For each sampling iteration , the algorithm computes the median values of the error between the remaining pixels and the current instance of the local polynomial model . The number of random sample iterations is determined by , which guarantees a confidence level of p = 0.99 for an outlier ratio of = 50%. After iterations, the algorithm then chooses the model corresponding to the least median square value and its corresponding model coefficients (). Using this model, the algorithm diagnoses the pixels inside the window that are inliers vs. outliers by computing the difference between the LMS model and the remaining pixel. Following Rousseeuw and Leroy [17], the robust threshold to determine if a pixel is an inlier vs. outlier is

One can then diagnose the pixel using the following test: if then it is an inlier. Using the inlier pixels, the algorithm then computes the final model coefficients using a least mean square algorithm and then replaces the central pixels with the polynomial approximation . If the number of inliers is smaller or equal to , then the central pixel is replaced by the median value of the window. For color images, we process each channel independently while maintaining color consistency through

- Joint outlier detection across channels.

- Consistent polynomial surface fitting.

- Color-aware scale estimation.

The LMS approach demonstrates remarkable resilience, handling contamination of up to 50% outliers, which makes it exceptionally robust for edge preservation and noise reduction compared to conventional filtering methods. LMS particularly excels at maintaining crisp edges and boundaries while efficiently eliminating noise, avoiding the blurring artifacts typically associated with alternative filtering techniques. The algorithm exhibits strong performance across diverse noise distributions and can effectively manage mixed noise patterns that frequently challenge other filtering approaches.

However, LMS filtering presents significant challenges in parameter optimization due to the complex interactions between polynomial degree, window size, and outlier threshold parameters that substantially influence performance outcomes. Additionally, LMS demands considerable computational resources, requiring multiple sampling iterations for each processing window, which can significantly impact processing time for larger images or real-time applications.

2.5. Deep Image Prior (DIP) Neural Network Filters

Deep image prior (DIP) represents a novel approach that harnesses the inherent structure of convolutional neural networks (CNNs) as an effective regularizer for natural image processing, without requiring pre-training on image datasets. The key insight is that architecture CNN inherently captures image statistics that make it biased toward natural images over noise. When optimizing an untrained CNN to reconstruct a corrupted image by minimizing the reconstruction loss, the network tends to learn the natural image content before fitting noise or artifacts. This approach has proven effective for various image restoration tasks including denoising [2], super-resolution [18], and inpainting [19], all without requiring any training data beyond the single corrupted image being processed. DIP offers several significant advantages over standard neural network algorithms: it enables zero-shot learning through the network’s architecture serving as a natural image prior, provides flexibility across multiple restoration tasks without dataset bias, and offers interpretability in how it captures image statistics. However, DIP faces notable limitations: it is computationally intensive, requiring thousands of iterations per image. The method is also sensitive to early stopping criteria and hyperparameter selection. It also lacks theoretical guarantees relying instead on empirical observations resulting in inconsistent results due to random initialization and dynamics.

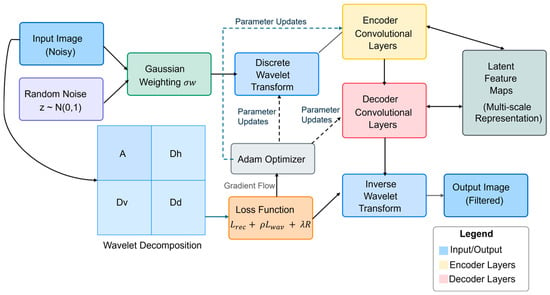

One variant of DIP is Wavelet-DIP, which has been shown by Yang, Y., et al. [20] and Liu, C., et al. [21] to enhance the original DIP framework by incorporating wavelet decomposition into the network architecture, leveraging the multi-scale analysis capabilities of wavelets. To improve processing images with Gaussian noise, a similar version to Wavelet-DIP was proposed. Ulyanov et al. introduced a change to Wavelet-DIP called the Gaussian Weighted Wavelet-DIP [22] (GW-DIP) where the wavelet function is first convolved by a Gaussian filter. GW-DIP solves the optimization problem:

where is the neural network with parameters , is a random noise input, is the target image, is the regularization term, and is a regularization weight. This minimizes the distance between the network output and target image , with regularization .

Initial random noise is transformed through Gaussian weighting:

where K(p) = is a normalization factor, is 2D Gaussian kernel, is spatial neighborhood window, and and are pixel coordinates.

The feature map is a discrete wavelet transform (DWT) defined as

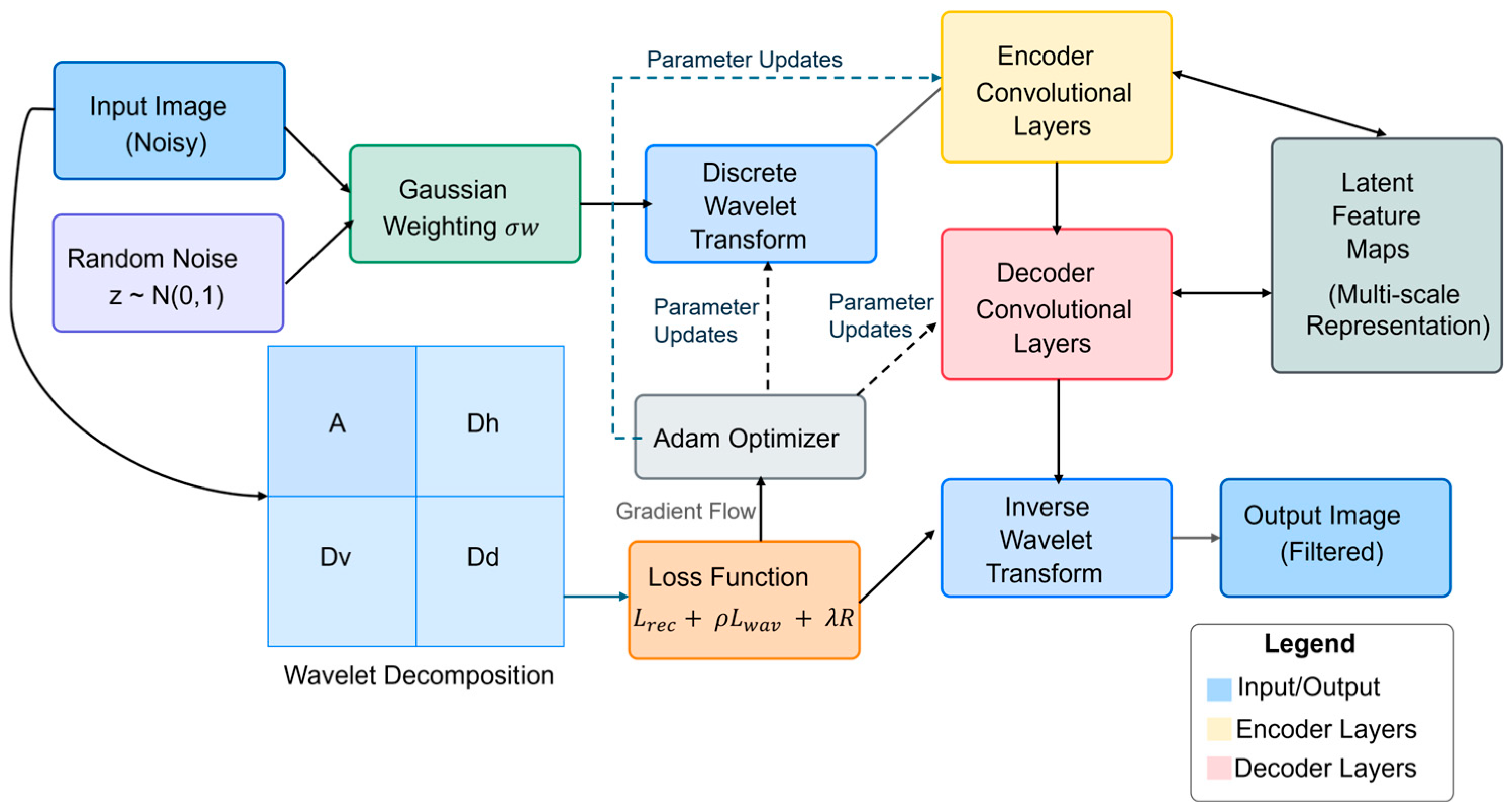

where is the decomposition level, is the spatial location, is the low-pass approximation, is the horizontal components, is the vertical components, and is the diagonal components. In our test implementation, we used the Haar wavelet for its simplicity and computational efficiency. One can see in Figure 1 the architecture of the network.

Figure 1.

Gaussian Wavelet-DIP architecture.

The combined loss function is where is the reconstruction loss function, is the wavelet loss function, and the total variation regularization term. The parameters are updated using Adam optimizer:

where

- ;

- ;

- ;

- .

with typical values of learning rate , , and .

By processing different frequency components separately through dedicated network branches, GW-DIP can better handle various image features at different scales. The wavelet transform naturally separates an image into low-frequency approximation coefficients and high-frequency detail coefficients, allowing the network to learn appropriate representations for each frequency band. This multi-scale wavelet structure provides additional information that aligns with natural image statistics, as wavelets are known to produce sparse representations of natural images.

GW-DIP leverages the intrinsic structure of convolutional neural networks (CNNs) as an implicit regularization mechanism for natural image processing, eliminating the need for pre-trained data. This method demonstrates remarkable capability in eliminating complex noise patterns that typically challenge conventional filtering techniques, as it adapts to image-specific characteristics. The architectural design of the network inherently preserves significant edges and details while effectively removing noise.

However, DIP demands substantial computational resources, requiring thousands of optimization iterations for processing a single image. The approach necessitates vigilant monitoring and strategic early stopping to prevent the network from eventually fitting to noise patterns, which complicates automation efforts. Furthermore, the quality of results significantly depends on several factors including network architecture, learning rate, and various hyperparameters that require meticulous tuning for optimal performance.

3. Geodesic Filtering

Geodesic filtering, first introduced by Boulanger [23] to process range data and later by Sochen et al. [24] to process intensity images, addresses image filtering from a fundamentally different mathematical perspective. To generalize this work, we introduce a novel filtering framework that treats signals as a -dimensional Riemannian manifold embedded in a -dimensional Euclidian space, typically combining spatial and signal value coordinates. Let be a n-dimensional vector immersed into a m-dimensional rectangular manifold with coordinate . For 2D images, the manifold coordinates of a point is , corresponding to a mapping from to such as:

In the case of a color image, the mapping is to , which is a mapping from to and for grey-level images to a mapping from to .

The geodesic distance between two points and on is defined as

where function is taken over all possible shortest paths connecting and on the manifold. The parameter weights the importance between the spatial components and the signal differences. This formulation, as analyzed by Kimmel et al. [25], naturally incorporates both spatial and signal value differences into a single geometric framework. The advantages of using geodesic distance on a Riemannian manifold are

- Geodesic distance provides intrinsic measure of similarity;

- Accounts for both spatial and signal differences;

- Preserves image structure better than Euclidean metrics;

- Adapts to local image geometry.

The filtering process utilizes geodesic distances through a weighted averaging:

where is a decreasing function of the geodesic distance relative to , such as

The theoretical properties of this framework, as established by Boulanger [24] and later by Mémoli and Sapiro [26], include

- Intrinsic geometry preservation;

- Adaptive neighborhood consideration;

- Natural handling of curved structures;

- Topology preservation.

The geodesic distance between points on this manifold incorporates both spatial and signal/geometry differences, providing a natural mechanism for edge-preserving smoothing. This distance measure, fundamental to the filtering process, respects the intrinsic structure of the image rather than relying solely on Euclidean distances in ambient space, making the filtering invariant to rigid transformation for range data.

Peyré [27] further developed these concepts, introducing efficient computational schemes and establishing important theoretical properties of the filtering process. The framework demonstrates several advantageous properties, including rotation invariance, contrast invariance, and the preservation of significant image features.

Modern implementations of geodesic filtering incorporate several sophisticated features. Castaño-Moraga et al. [28] introduced tensor-based extensions that better handle directional features and complex textures. Their work demonstrated improved performance in preserving fine details while still effectively reducing noise.

Zhang et al. [29], develops geometric filtering and edge detection algorithms for non-Euclidean image data, viewing image data as residing on a Riemannian manifold. They extend classical filtering techniques like median filtering and Perona-Malik anisotropic diffusion to handle non-Euclidean data through geodesic distances and the exponential map.

3.1. Geodesic Convolution on a Discrete Manifold

Geodesic filtering implementation requires careful consideration of both theoretical principles and practical computational aspects. Implementing geodesic filtering on a discrete manifold follows the theoretical framework established by Boulanger [23] and Sochen et al. [24]. From Equation (15), geodesic convolution is defined on a discrete manifold as

where is the geodesic distance between in the window neighborhood of size ( and the center of the window . is the normalization factor equal to

Modern implementations incorporate adaptive parameter selection schemes as proposed by Alonso-González et al. [30]. In our implementation the parameters to be adjusted are:

- α (signal weighting) based on local gradient statistics;

- σ (filtering extent) based on local noise estimates;

- Window size W based on feature scale analysis.

3.2. Minimal Patch Calculation on a Discrete Convolution Window

The computation of minimal paths within local windows is a critical component of geodesic filtering, as it directly influences how image features affect the overall filtering process. Initially formalized by Boulanger [23] and Sethian [31], this process determines the optimal paths that capture the intrinsic geometry of an image. The accuracy and efficiency of these minimal path calculations not only dictate the quality of the filtered output but also have a significant impact on the algorithm’s computational performance. Over the years, various methods have been developed and evaluated for this task. In this work, we implement two primary algorithms: (a) Dijkstra’s algorithm, optimized for scalar processors, and (b) the fast-marching method, which is tailored for efficient GPU-based parallel implementation.

3.2.1. Dijkstra’s Algorithm

The foundation of minimal path calculation lies in graph theory, with Dijkstra’s algorithm [32] serving as a seminal work in this area. In the context of range image processing, as elaborated by Boulanger [23], the inherently discrete nature of digital images maps naturally onto graph structures, where pixels become vertices, and their relationships are represented by weighted edges. Kimmel et al. [25] further advanced this concept by developing continuous formulations that bridge the gap between discrete and continuous manifolds, thereby reinforcing the theoretical underpinnings of geodesic filtering. Extensive analysis by Sethian et al. [31] has shown that under suitable conditions, discrete graph-based methods converge with the solutions obtained from continuous manifold formulations. This theoretical bridge, further refined by Mirebeau [33], forms the basis for modern hybrid approaches that blend discrete and continuous perspectives.

Furthermore, the work of Mémoli and Sapiro [26] established several essential theoretical properties for minimal path computations that any robust method must satisfy:

- Consistency of discrete approximations: Ensuring that as the discretization is refined, the calculated paths converge to the continuous geodesics.

- Convergence rates under refinement: Providing guarantees on the speed and accuracy with which the discrete solution approximates the continuous solution.

- Stability with respect to perturbations: Maintaining reliable performance even when the input data is subject to noise or other perturbations.

By adhering to these foundational principles, our implementation of Dijkstra’s algorithm achieves both high accuracy and computational efficiency, serving as a robust baseline for minimal path calculation in geodesic filtering.

3.2.2. Dijkstra’s Algorithm Implementation

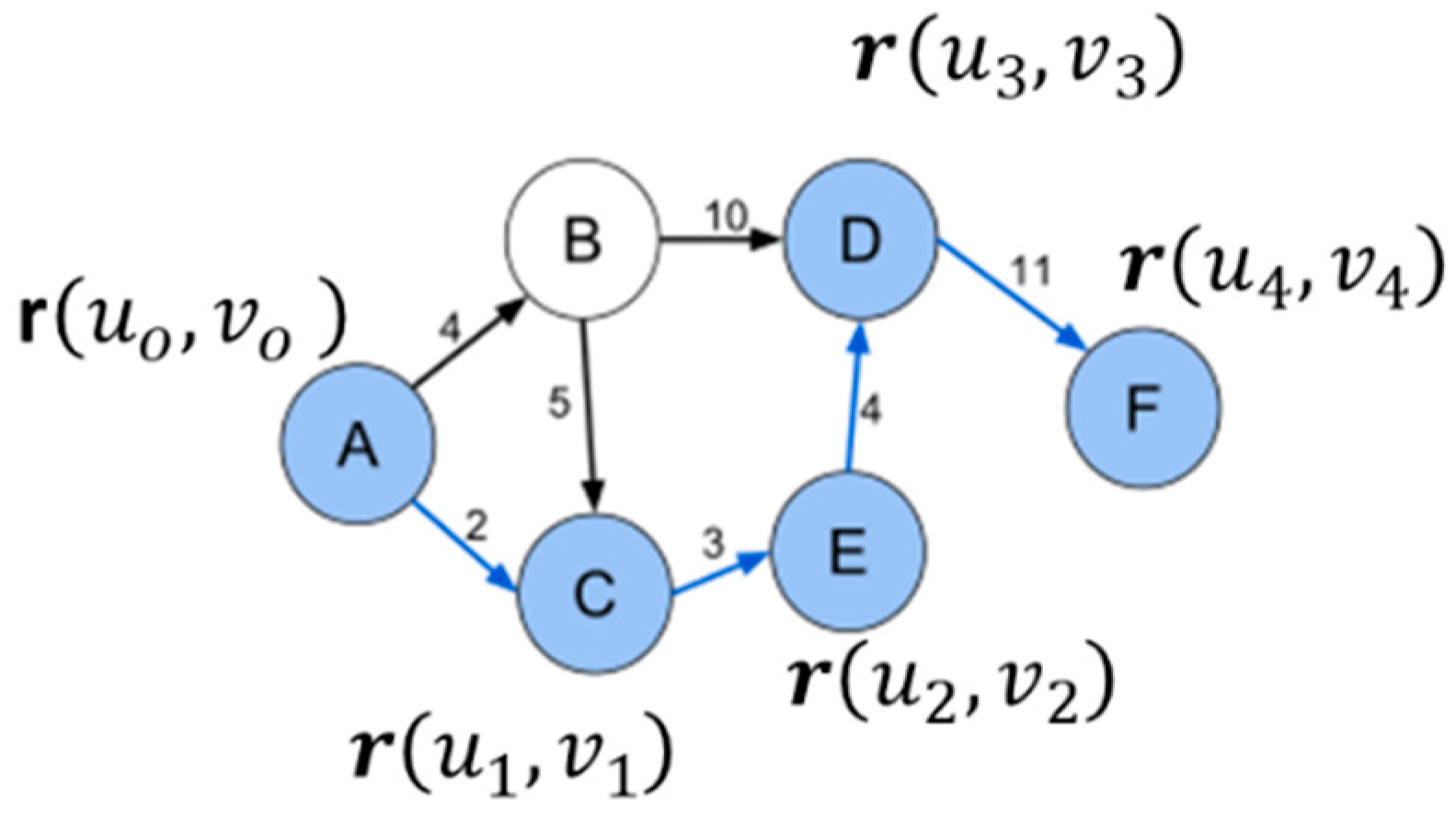

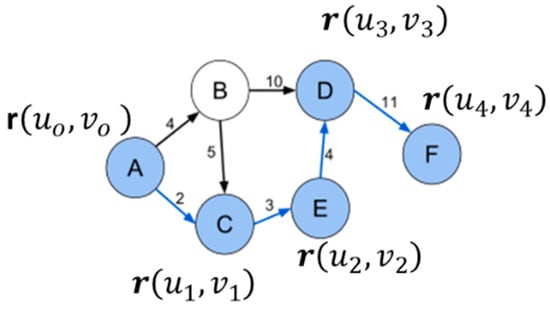

The conversion of image data to graph structure, as formalized by Boulanger [23] and Vincent [34], requires careful consideration of both spatial and signal relationships. Let be a graph representation of an image where (see Figure 2)

Figure 2.

Example of a single-source (vertex A) multiple destination graph (vertices B,C,D,E,F).

- Vertex set represents pixel locations;

- Edge set connects neighboring pixels;

- Weight function : incorporates distance metrics.

The fundamental mappings are

- Each pixel corresponds to a vertex in the graph, with edges connecting neighboring pixels. The connectivity pattern, typically 4-connected or 8-connected, significantly influences path calculation accuracy. Kimmel et al. [26] demonstrated that 8-connectivity provides better angular resolution at the cost of increased computational complexity.

- The edge weights incorporate both spatial and signal value information. The general form of edge weight between pixels p and q is defined by Equation (14).

- The efficiency of priority queue operations becomes crucial in image processing applications. Recent work by Lewis [35] demonstrated that while Fibonacci heaps offer optimal theoretical complexity, as demonstrated in Boulanger [23], binary heaps often perform better in practice due to simpler operations.

Our implementation begins with the definition of essential data structures. We define a pixel structure that contains the following components: spatial coordinates , signal value , current computed distance from source, a visited flag, and a predecessor reference for path reconstruction. Additionally, an edge structure is defined to represent connections between pixels, containing references to start and end pixels along with a weight value that combines spatial and intensity differences.

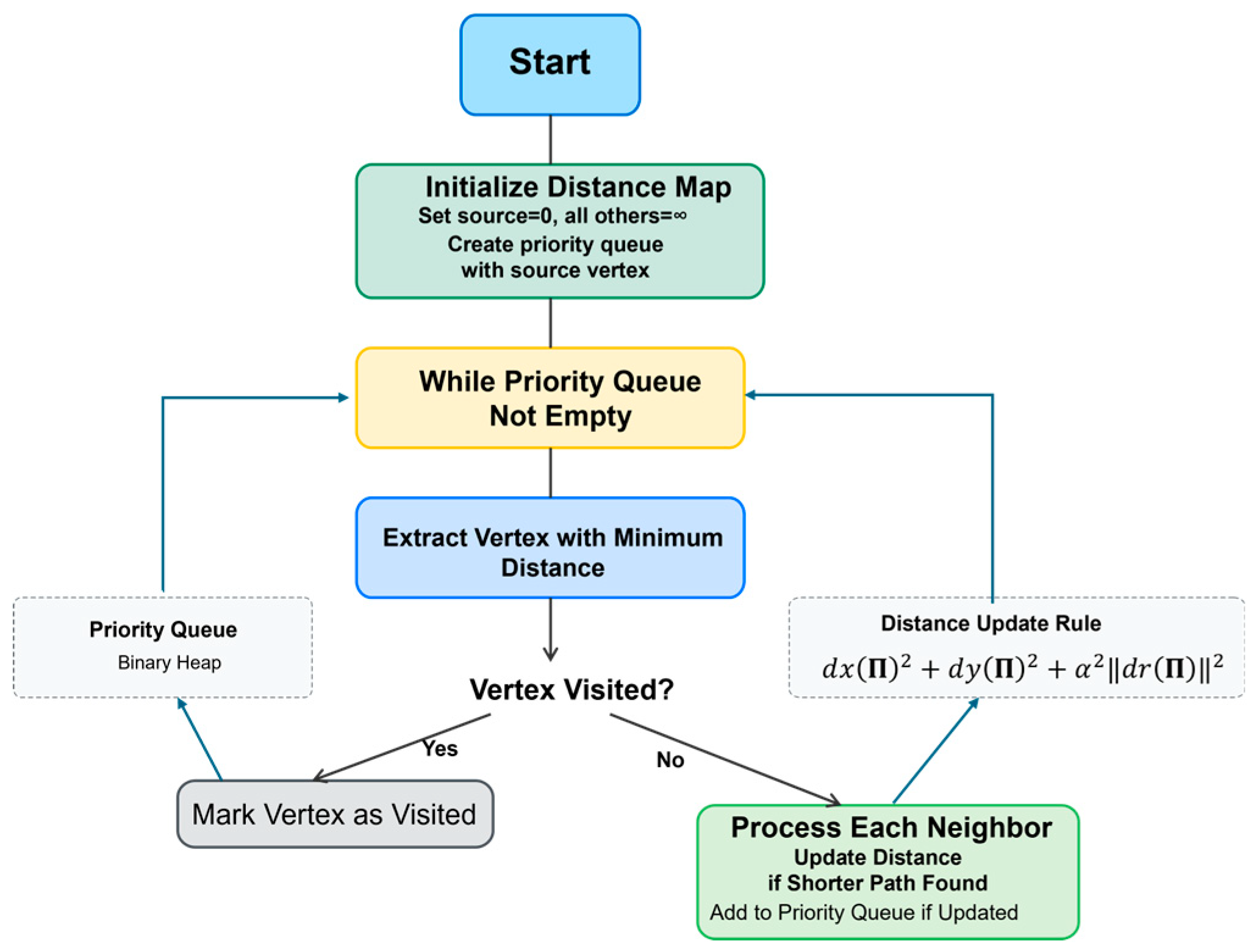

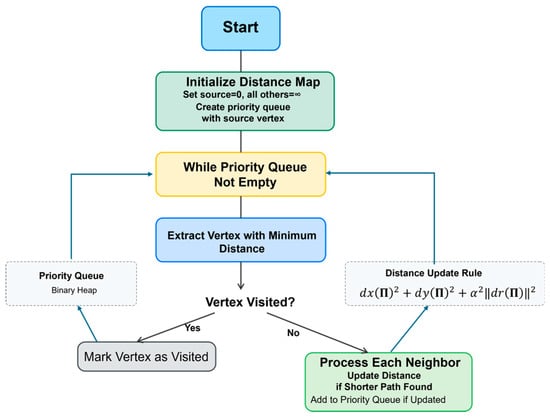

A priority queue structure is implemented using a binary heap, as recommended by Boulanger [23] maintaining pairs of distance values and pixel references. The queue supports three primary operations: insertion of new elements, extraction of minimum-distance elements, and key decrease operations for distance updates. The algorithm works as follows (see Figure 3):

Figure 3.

Dijkstra’s algorithm block diagram.

- Initialization Process

We create a two-dimensional array (pixel_grid) representing the image dimensions. For each position in the image, we instantiate a pixel object with coordinates matching its position, signal values from the original image, distance initialized to infinity (except for the source pixel which gets zero), visited flag set to false, and null predecessor reference. The source pixel’s distance is set to zero, and it is inserted into the priority queue with this initial distance. This setup establishes the starting point for the algorithm’s propagation phase.

- 2.

- Propagation Phase

The core processing loop operates as follows. While the priority queue is not empty, we are repeatedly

- Extracting the pixel with minimum distance from the queue;

- If the pixel has already been visited, skip to next iteration;

- Mark the current pixel as visited;

- Process all neighbors of the current pixel.

For each unvisited neighbor, we

- Calculate a new potential distance combining

- Spatial distance between pixels;

- Signal difference magnitude weighted by parameter β.

- If the new distance is smaller than the neighbor’s current distance,

- Update the neighbor’s distance;

- Set the current pixel as the neighbor’s predecessor;

- Insert the neighbor into the priority queue with its new distance.

- Neighbor ProcessingThe neighbor processing phase implements an 8-connectivity pattern. For the current pixel position , we examine all eight adjacent positions:

- Horizontal neighbors: ;

- Vertical neighbors: ;

- Diagonal neighbors: .

For each potential neighbor position, we- Verify position validity within image boundaries;

- Calculate combined spatial–intensity distance;

- Process distance updates if necessary.

- Distance CalculationThe distance calculation combines spatial and signal components:

- Calculate the Euclidean distance between neighboring pixel coordinates and

- Account for diagonal connections with appropriate scaling;

- Compute the norm of the n-dimensional signal difference and

- Use a parameter to assess the significance of the signal difference in comparison to the spatial component.

- Final Distance

- Combine components using square root of sum of squares;

- Apply any additional feature-based weighting.

3.2.3. Time and Memory Complexity

The time complexity of Dijkstra’s algorithm for an image of size :

- using binary heap;

- for a Fibonacci heap where is the number of edges.

Even though the algorithm is efficient, because of the random access to the heap memory, the algorithm is highly inefficient from a memory access point-of-view for parallel implementation, which requires coalesced memory access. For this reason, a second algorithm, more amicable to parallel processing, was implemented for the CUDA version.

3.3. Emphasizing Structural Integrity in Geodesic Filtering

Structural integrity represents one of the most significant advantages of geodesic filtering over conventional approaches. This aspect deserves particular emphasis as it directly addresses a fundamental challenge in image processing: preserving essential image structures while effectively removing noise.

By modeling images as high-dimensional manifolds and computing distances along the manifold surface rather than in ambient Euclidean space, geodesic filtering inherently respects the intrinsic geometry of image content. This fundamental difference allows it to

- Preserve Edge Continuity: Unlike bilateral filtering or anisotropic diffusion that can fragment edges under high noise conditions, geodesic filtering maintains continuous edge structures even with significant noise contamination. This is because geodesic paths naturally follow edge contours along the manifold surface.

- Maintain Topological Properties: The approach preserves important topological relationships between image regions, ensuring that connected components remain connected and boundaries remain intact after filtering. This is crucial for downstream tasks like segmentation or feature extraction.

- Adapt to Intrinsic Feature Scale: The geodesic distance calculation automatically adapts to the local feature scale, providing stronger preservation of fine details in textured regions while still effectively smoothing homogeneous areas.

- Respect Perceptual Organization: By following the natural organization of visual information in the image, geodesic filtering produces results that better align with human visual perception, maintaining the hierarchical structure of image content.

Research demonstrates that with optimized parameter configuration, geodesic filtering consistently surpasses alternative methodologies in preserving structural elements across a wide spectrum of image types. This performance differential becomes particularly significant in demanding applications such as medical imaging, where maintaining the structural integrity of anatomical features directly impacts diagnostic reliability. The exceptional structural preservation achieved through geodesic filtering represents not merely an incremental enhancement in visual quality but a fundamental advancement in preserving the semantic significance of visual information throughout the filtering process.

3.4. Comparison of Computational Complexity: The Overall Computing Complexity for a Image for Each Method Is

- Geodesic Filtering: ;

- LMS Filter: ;

- Gradient Anisotropic Diffusion: ;

- Curvature Anisotropic Diffusion: ;

- Bilateral Filter: ;

- Gaussian Weighted Wavelet DIP: .

Here, is the number of iterations, is the window size, and = polynomial terms.

4. Experimental Results

This section directly addresses our three core contributions through carefully designed experiments. First, we evaluated the theoretical advantages of geodesic distance calculation in preserving image structures. The parameters α and σ are central to our analysis because they represent the fundamental balance between spatial and intensity information in the geodesic framework. Parameter α controls the relative weighting of intensity differences versus spatial proximity, directly influencing edge preservation capabilities. Parameter σ determines the extent of filtering influence, governing the scale at which features are preserved or smoothed. The main goals of our experiments are to systematically explore the parameter space to demonstrate:

- The existence of optimal parameter combinations that maximize both PSNR and SSIM metrics across diverse image types.

- The superior performance of geodesic filtering compared to traditional approaches in maintaining edge integrity while reducing noise.

- The adaptability of the method to different noise conditions without requiring extensive parameter re-adjustments.

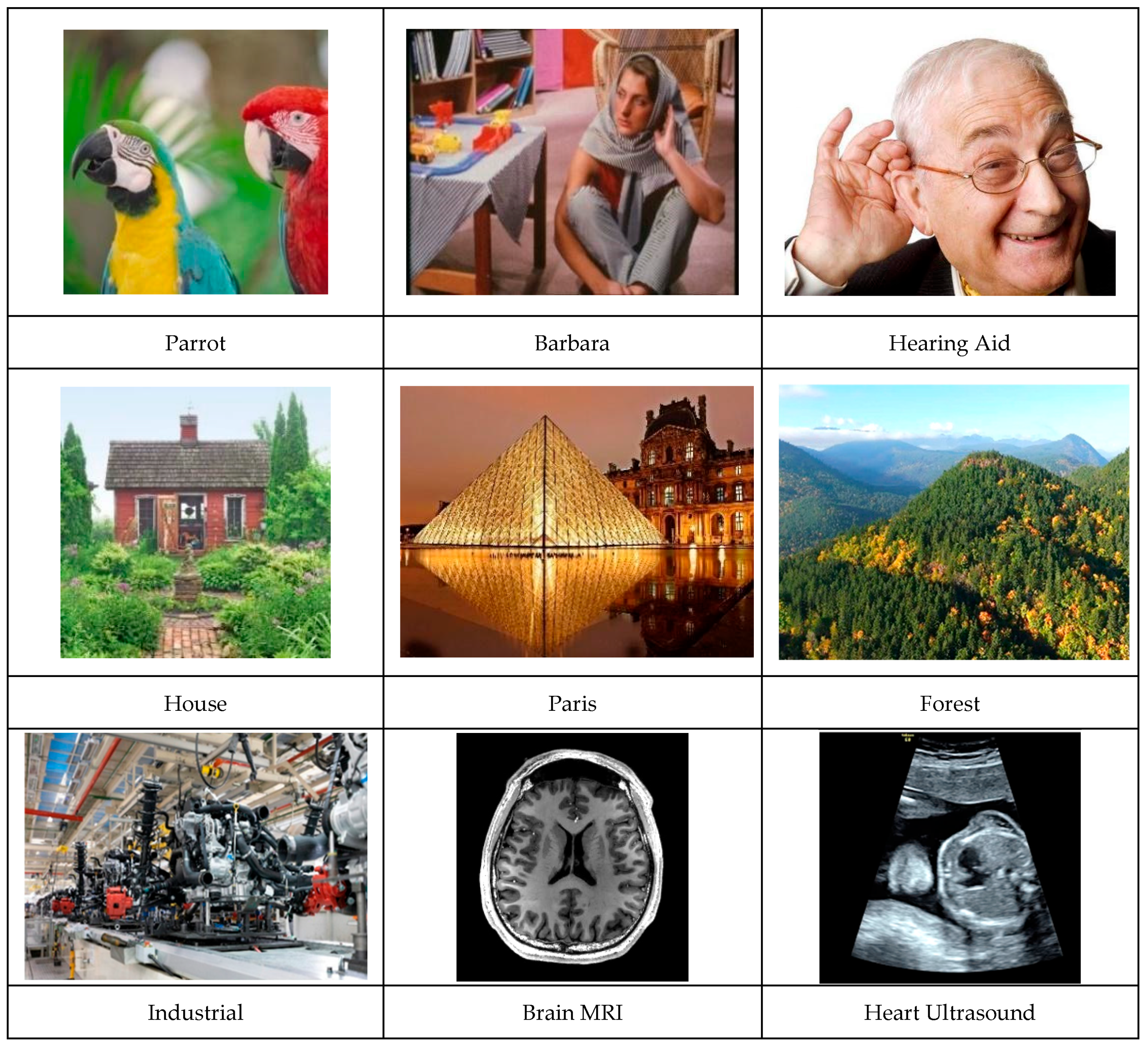

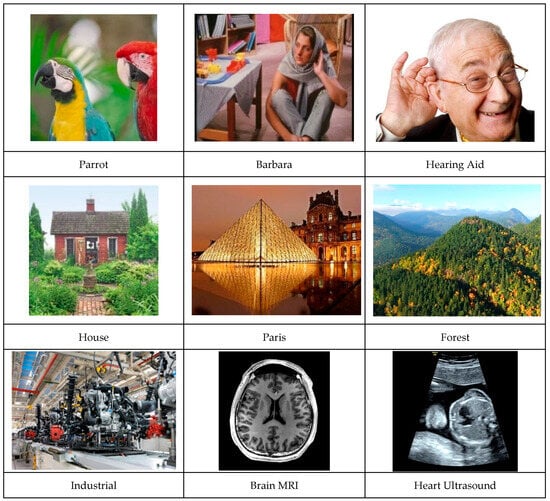

4.1. Image Dataset

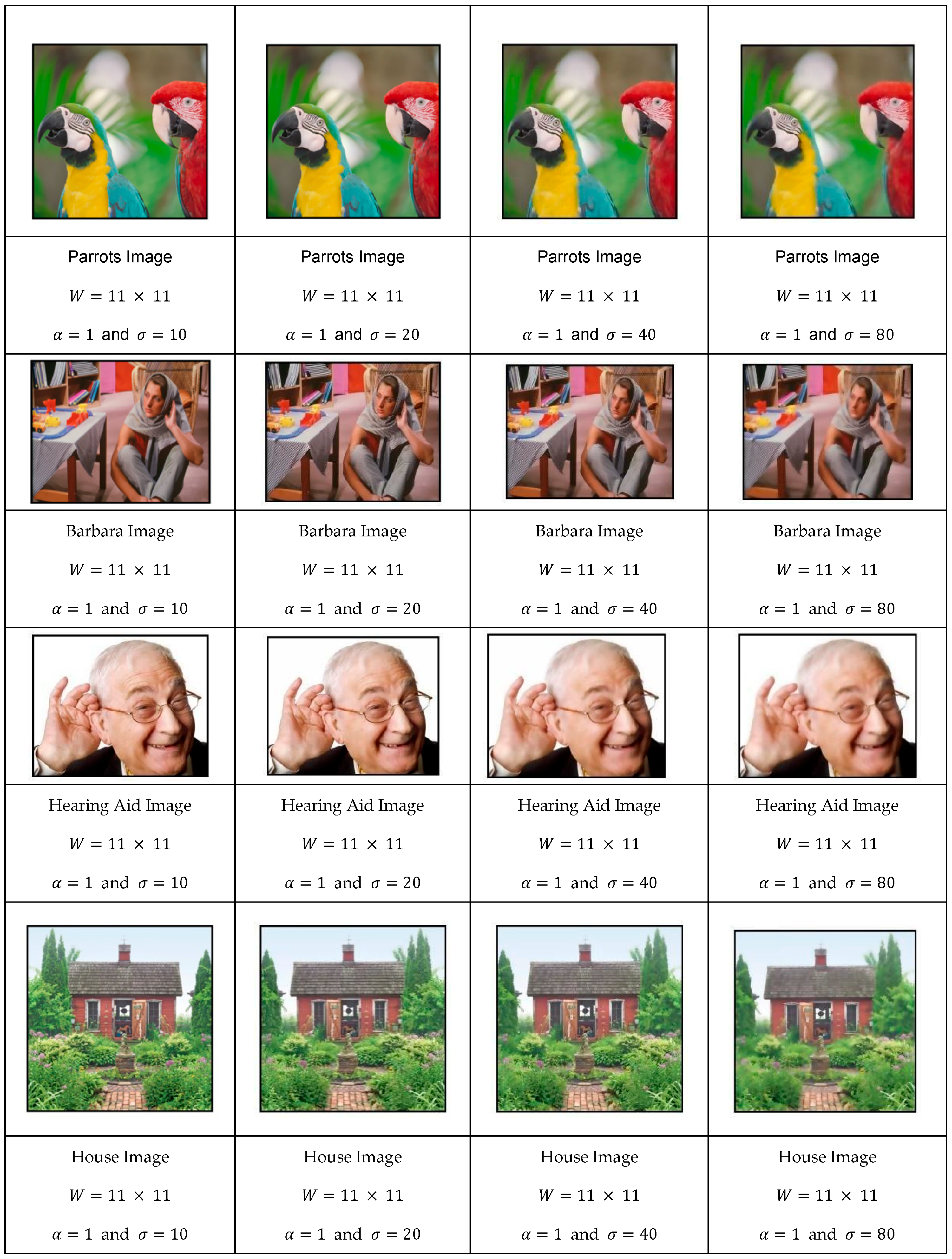

To validate the functionality of the algorithms, a standard set of test images was used. These include natural scenes, people, industrial sites, and medical and city images (see Figure 4).

Figure 4.

Image database used for the experiments.

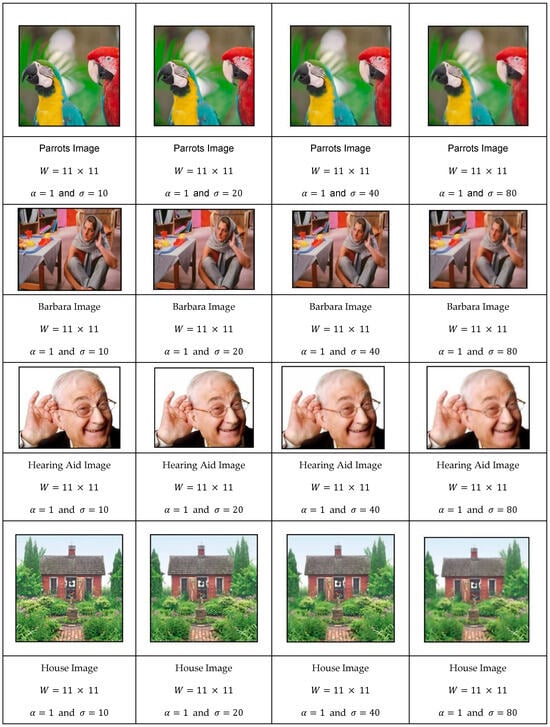

4.2. Evolution of Image Dataset vs. Filter Parameters

This section explores the relationship between filter parameters (α and σ) and filtering performance across diverse image types, showing that

- Each image has an optimal σ value corresponding to its “natural scale”;

- Parameter α effectively balances spatial and intensity relationships;

- The filter’s performance peaks at specific parameter combinations, demonstrated through quantitative metrics (PSNR and SSIM).

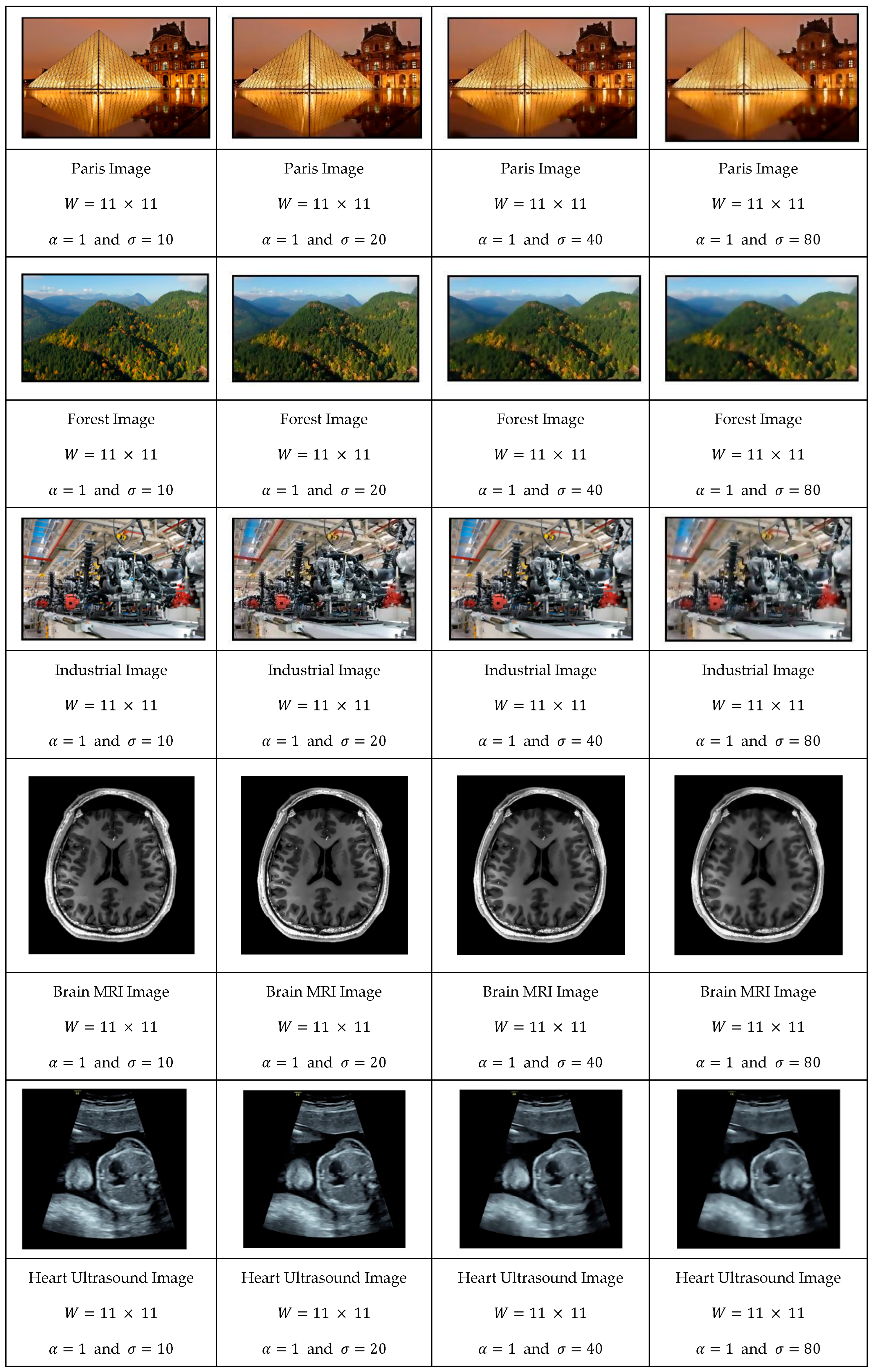

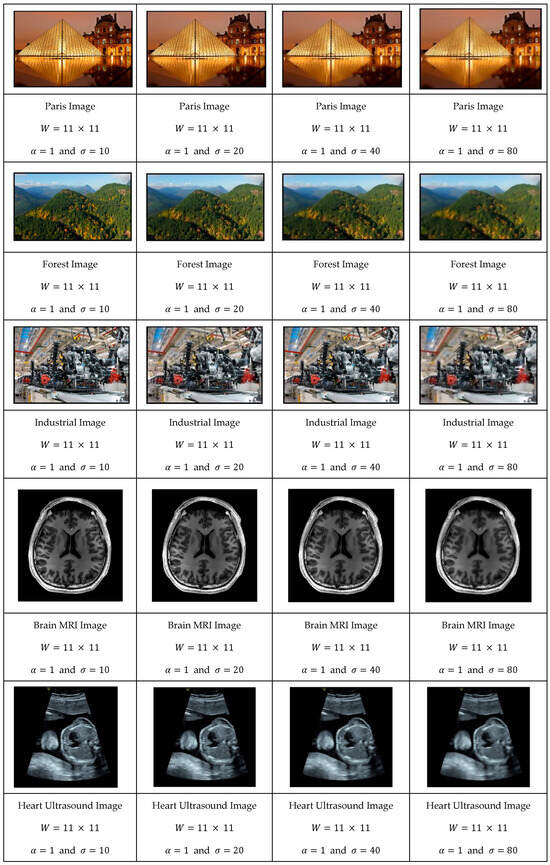

In the sequence shown in Figure 5, we convolved the images that were first normalized in size to be of width of 512 pixels and height to a value that respects the aspect ratio of the original image. In these experiments, the convolution window size was set to 11 × 11, and the parameter equal to 1.

Figure 5.

Evolution of the image test database as a function of .

As can be observed, the image’s smoothness level increased proportionally with while maintaining edge clarity. As values increased, an optimal value * emerged, corresponding to an ideal scale. We explore this relationship in greater detail later in our discussion.

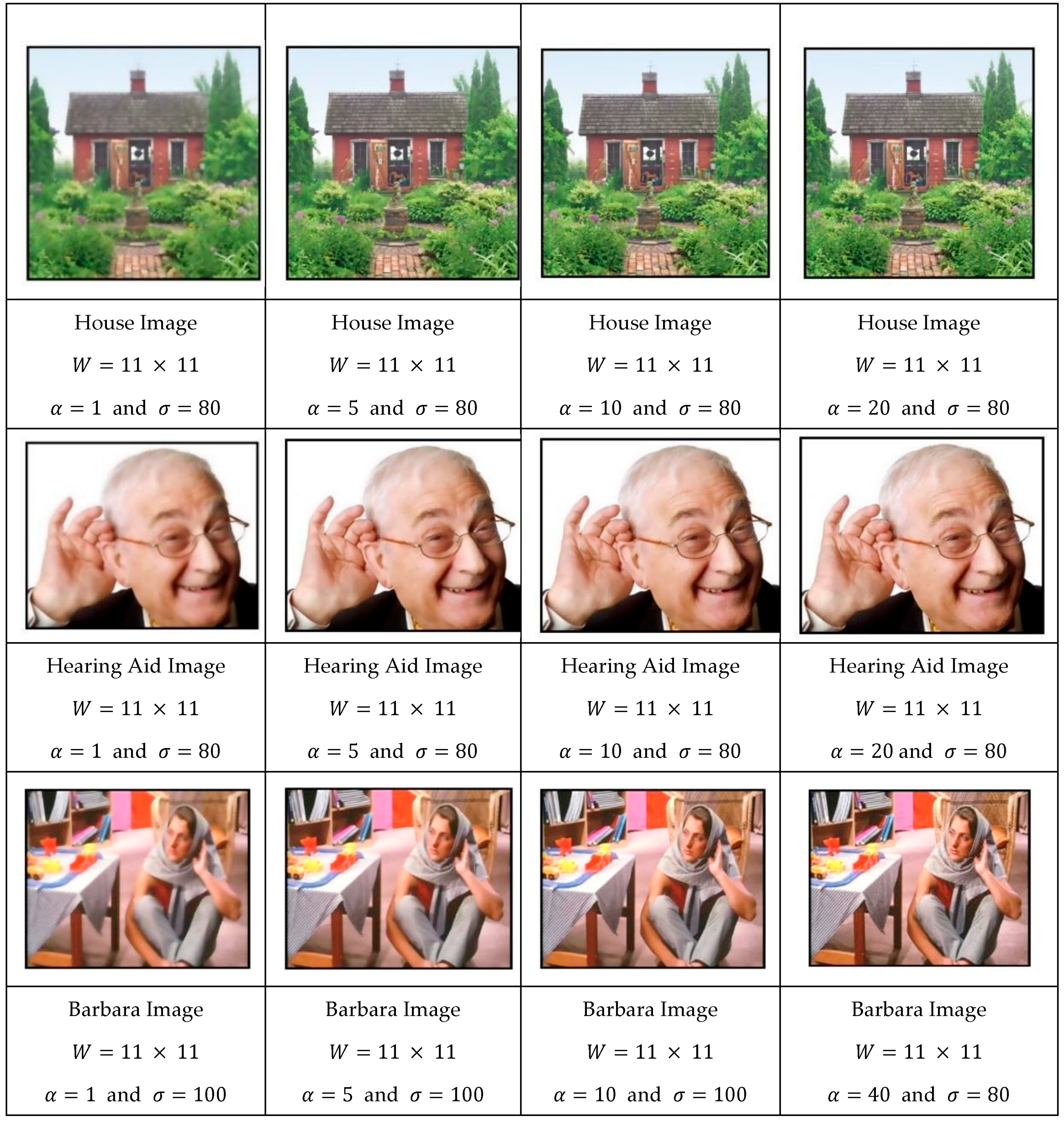

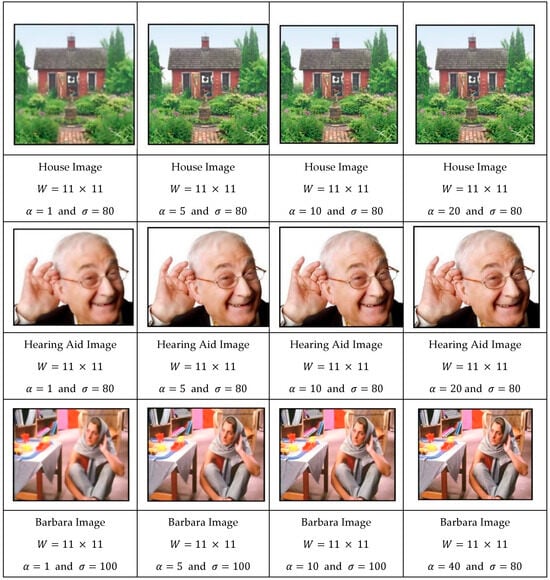

Let us now study the effect of the parameter on the filtering results. One can see the evolution of the filtering process of images (House, Hearing Aid, and Barbara) for various values of . To highlight its effect, we set the to large values (80, 80, and 100).

As one can see in Figure 6, increasing the parameter reduced the influence of the spatial component, resulting in a filter where pixel value differences became the dominant factor rather than spatial proximity. This shift in dominance led to reduced spatial blurring while still preserving important signal variations across the image.

Figure 6.

Evolution of the image database as a function of for a fixed .

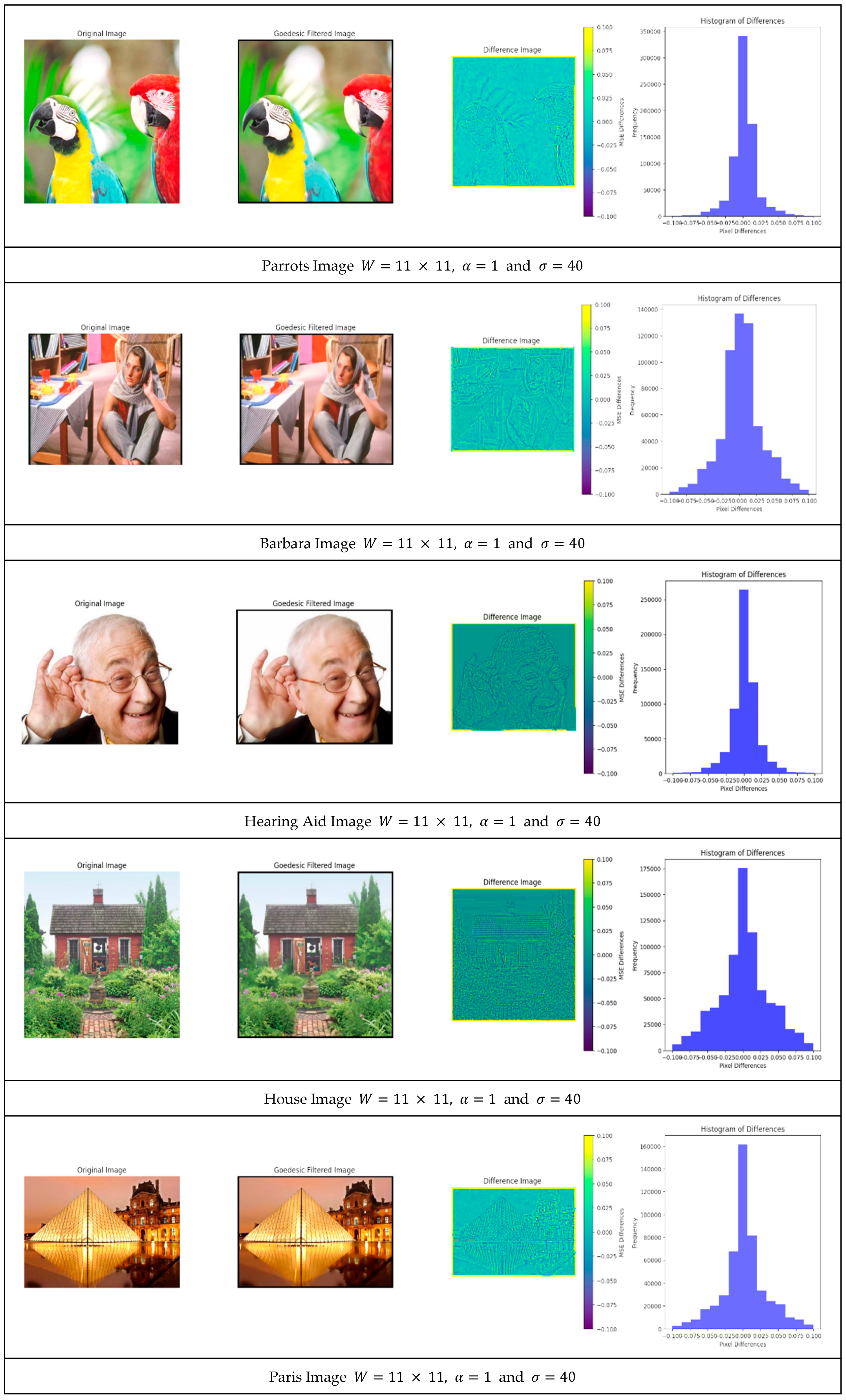

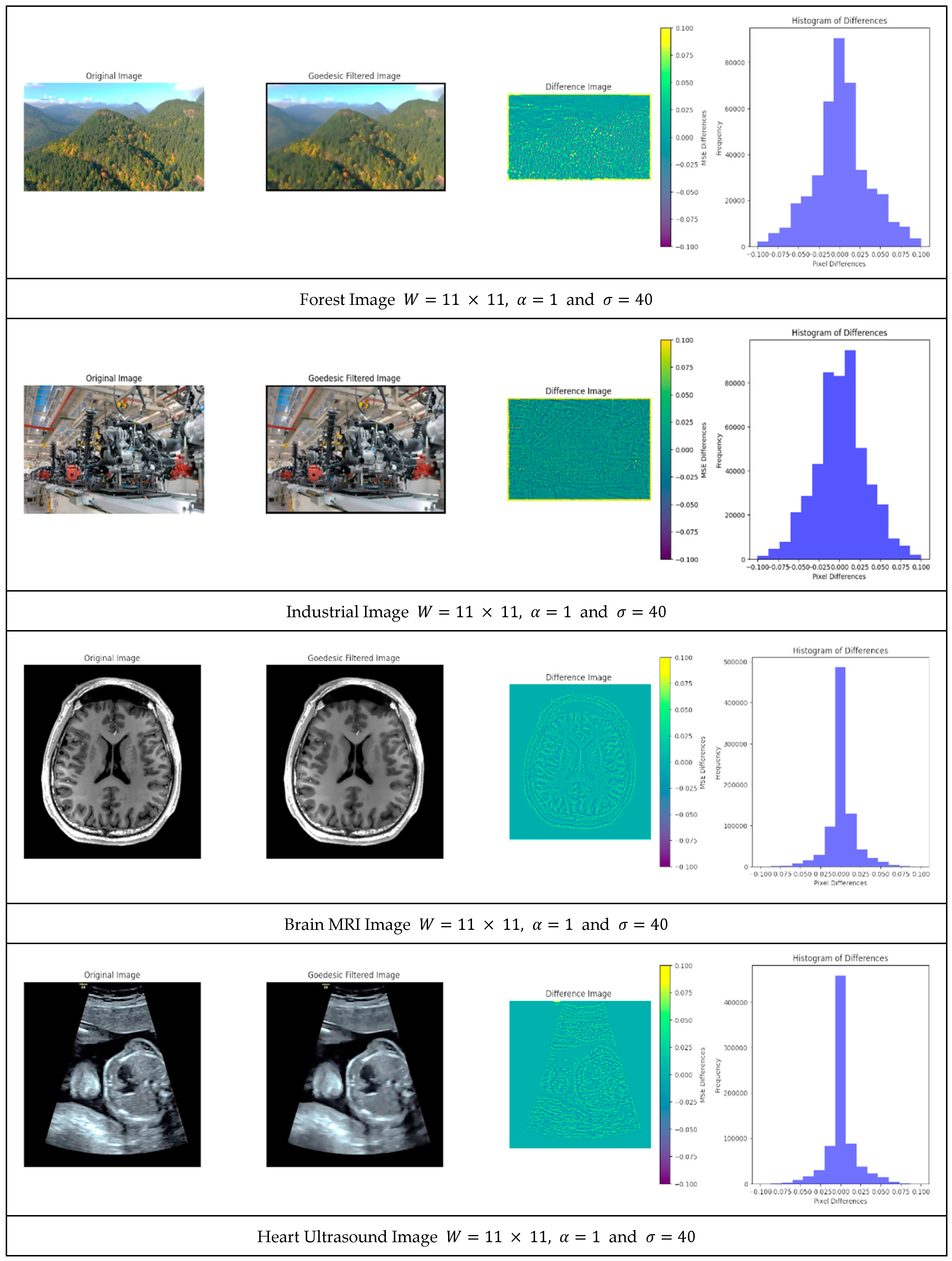

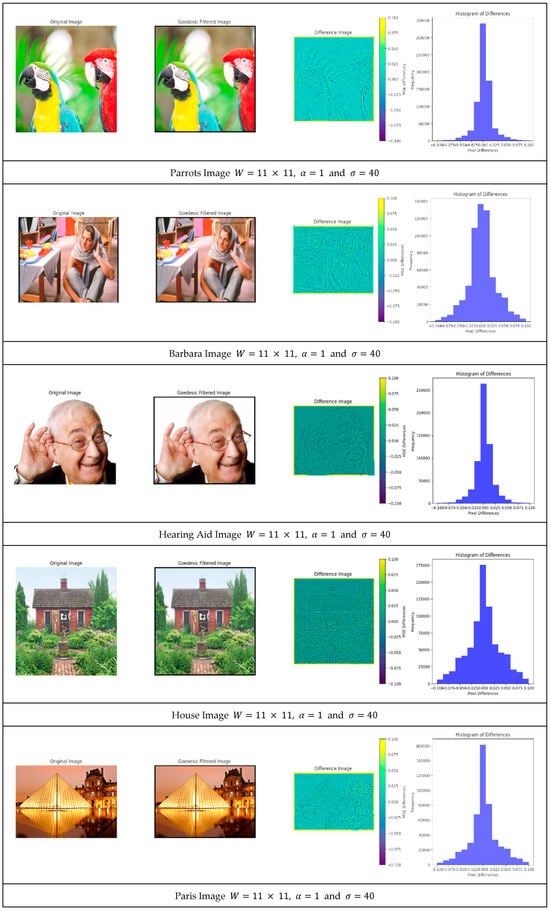

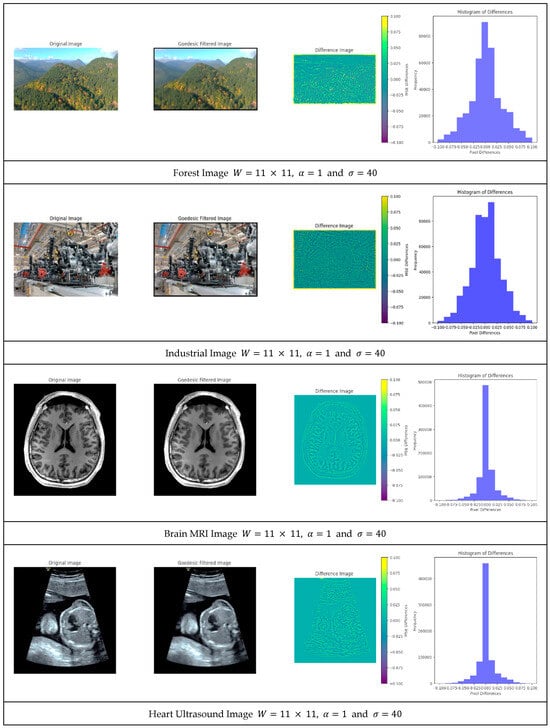

The next experiment is to illustrate the distribution of the differences between the original image and the filtered one for a window size equal to 11 × 11 and a filter parameter equal to α = 1 and σ = 40. In Figure 7, one can see the original image, the corresponding filtered image, and the color-coded difference between the two images normalized between −0.1 and 0.1. In addition, one can see for each image a histogram of the error between −0.1 and +0.1.

Figure 7.

Difference between the original images and the filtered images.

The difference between the original image and the filtered images were very small, even though the filtered image was convolved heavily with . This sequence shows the advantage of geodesic filtering where uniform regions were smoothed out without losing sharp edges.

When an image is processed with geodesic filtering, the parameter σ controls the extent of the filtering effect. The “natural scale” represents the specific σ value that achieves the best balance between noise reduction and preservation of significant image features. This concept builds on scale-space theory, which states that images contain features at multiple scales. The natural scale has several key characteristics:

- Peak Performance Point: As demonstrated in the paper, when plotting PSNR or SSIM values against increasing σ values, there is typically a clear peak before performance declines. This peak identifies the natural scale for that image.

- Content Dependency: Each image has its own unique natural scale based on its content complexity. Images with fine textures typically have lower optimal σ values, while images with larger homogeneous regions have higher optimal σ values.

- Feature Preservation Threshold: The natural scale represents the threshold at which the filtering process maximally preserves meaningful edges and structures while still effectively suppressing noise.

- Adaptive Processing: Rather than applying a fixed σ value across all images, the concept of natural scale suggests that filtering should adapt to each image’s inherent structure.

- Noise-Robust Analysis: When evaluating images with added noise, the natural scale remains relatively stable, showing that it is tied to the underlying image structure rather than noise characteristics.

We begin by establishing baseline performance with controlled noise conditions, then progressively introduce more challenging scenarios to demonstrate the robustness of geodesic filtering. To demonstrate this unique property of geodesic filter, let us study how the image evolves with for images that are corrupted by a Gaussian noise with an amplitude between [0, 35] and an average of 0. Following the foundational work of Zhang et al. [29], we performed a peak signal-to-noise ratio (PSNR) analysis for each image as a function . PSNR is defined as

where is the maximum possible pixel value and is the mean squared error.

In addition, for each , we also compute the structural similarity index measure (SSIM) as it provides a deeper insight into structural preservation. SSIM incorporates three components:

- Luminance comparison;

- Contrast comparison;

- Structural correlation is an important criterion to measure edge preservation.

- SSIM is defined as

- is the pixel sample mean of ;

- is the pixel sample mean of ;

- is the variance of ;

- is the variance of ;

- , ;

- V the dynamic range of the pixel-values (typically 255);

- and by default.

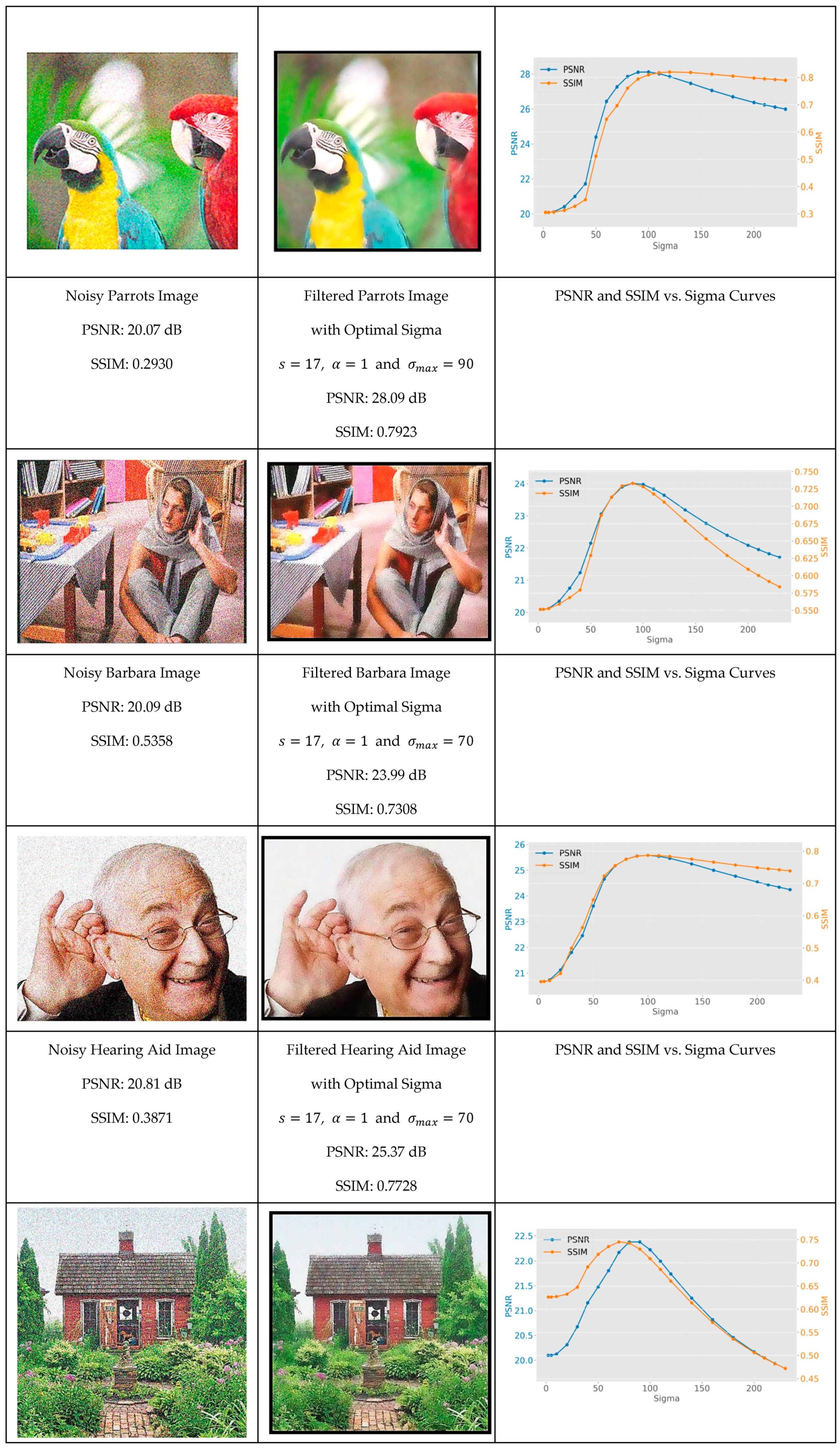

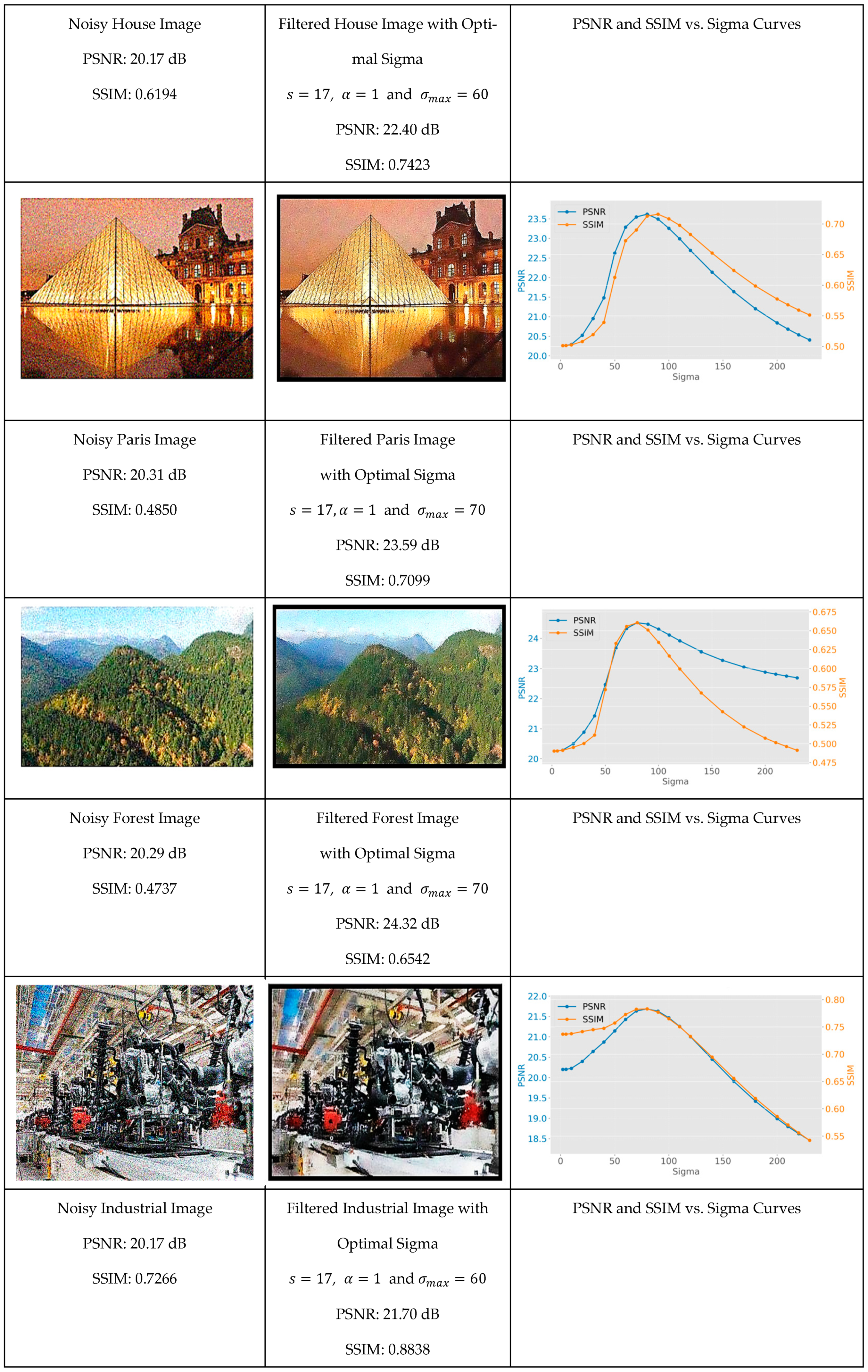

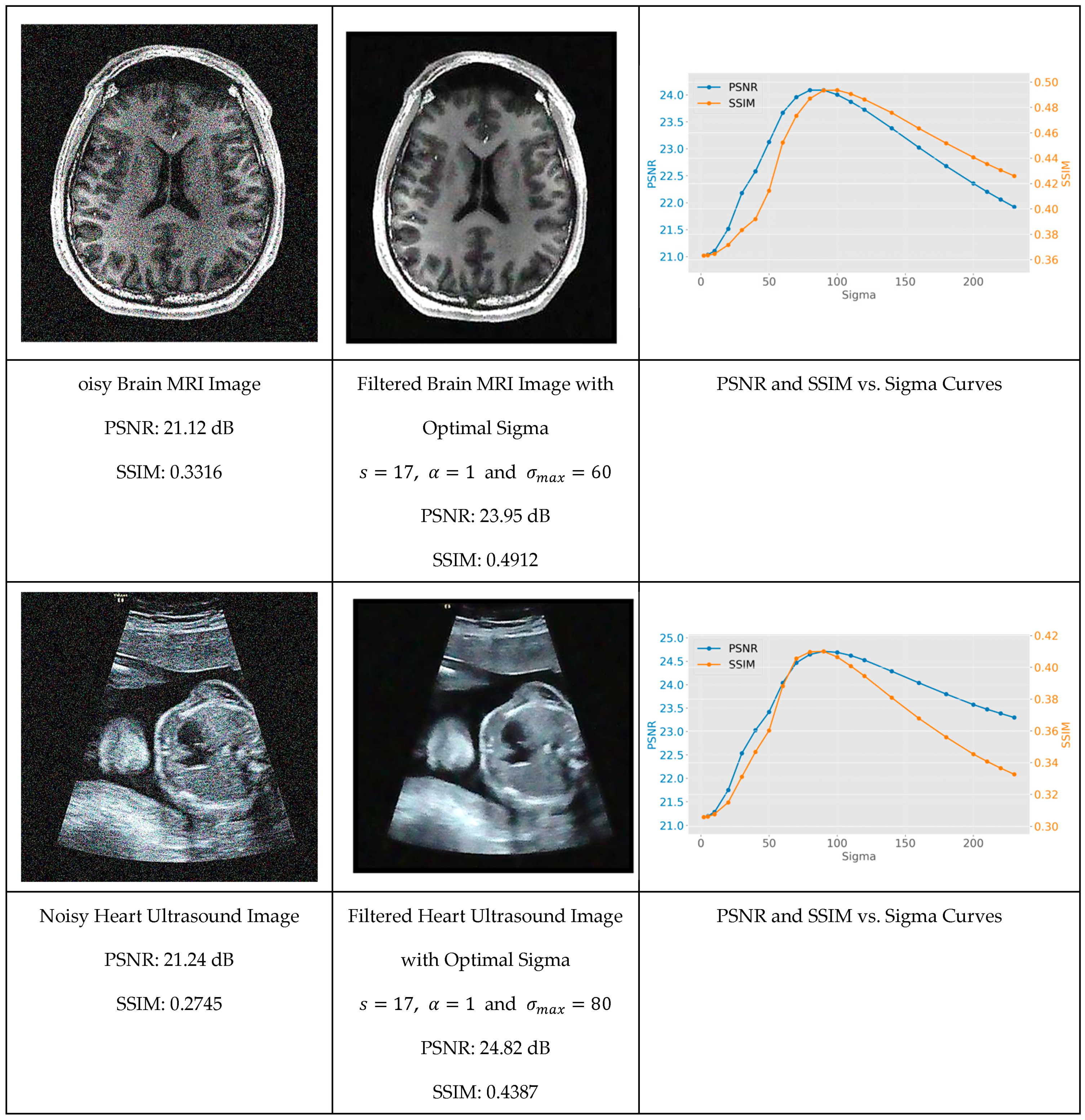

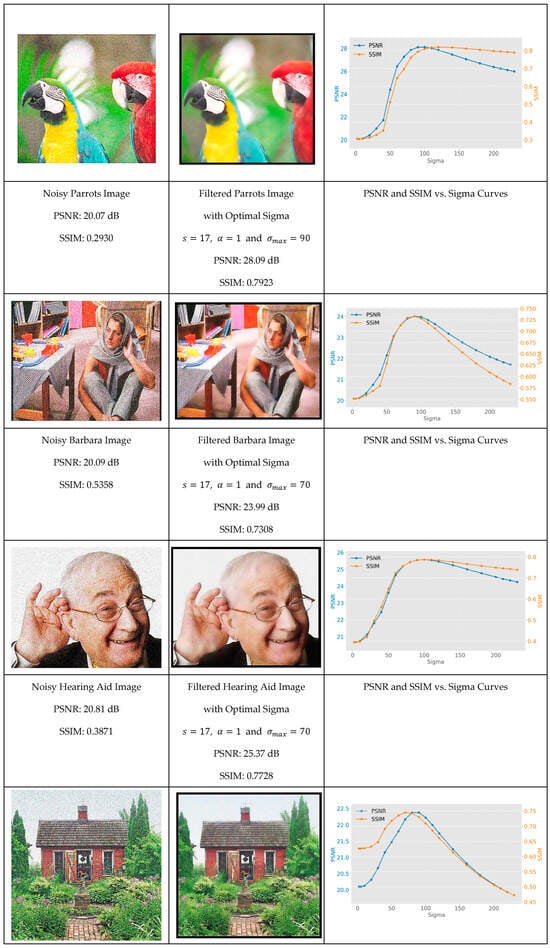

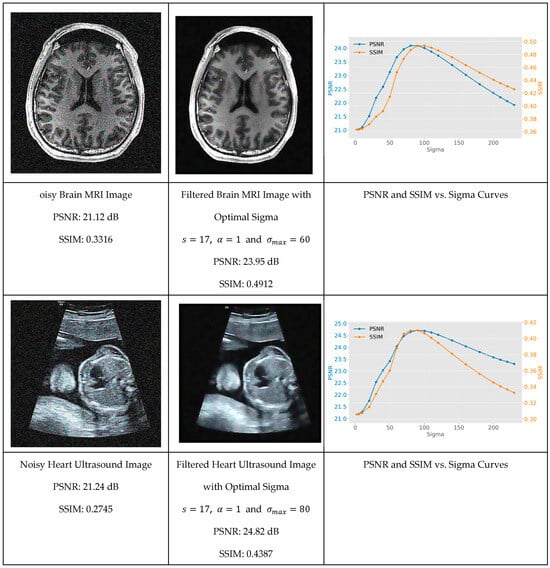

Figure 8 shows the original image with Gaussian noise, the filtered version for corresponding to the maximum of the PSNR, and finally the evolution of the PSNR and SSIM as a function of . One can see, for each image, the PSNR and SSIM evolve as a function of monotonically toward a maximum and then reduce due to over blurring. The value corresponds to the maximum PSNR and is called the natural scale of the image.

Figure 8.

Evolution of the PSNR and SSIM as a function of for a noisy version of the dataset images. Left: The noisy image. Center: The image filtered with the optimum value . Right: The evolution of the PSNR and SSIM vs. .

The experimental results reveal that while the natural scale parameter varied across different images (with test cases showing a range from = 60 to = 90), it can be systematically identified through careful analysis of PSNR and SSIM metrics. This discovery provides researchers with a methodical framework for parameter optimization that eliminates the traditional trial-and-error approach commonly required in advanced filtering techniques.

4.3. Comparing Geodesic Filter to Other Algorithms

Previous studies comparing the performance of geodesic filtering and anisotropic diffusion methods have demonstrated the advantages and limitations of each approach. For instance, studies have shown that anisotropic diffusion methods, such as the Perona–Malik technique, are effective at preserving edges but struggle with highly textured or noisy images. While anisotropic diffusion methods have been widely adopted for their edge-preserving capabilities, geodesic filtering offers significant advantages in terms of noise reduction and spatial relationship preservation. A comparative study by Weickert [36] for grey-level images and by Boulanger [23] for range images underscore the potential of geodesic filtering as a superior technique for advanced image processing applications. Other studies by Gousseau et al. [37] compared various anisotropic diffusion methods and highlighted the potential of geodesic filtering in overcoming some of the inherent limitations of these techniques. Their findings suggest that geodesic filtering can provide better results in terms of both quantitative metrics, such as PSNR and SSIM by Gousseau et al. [37].

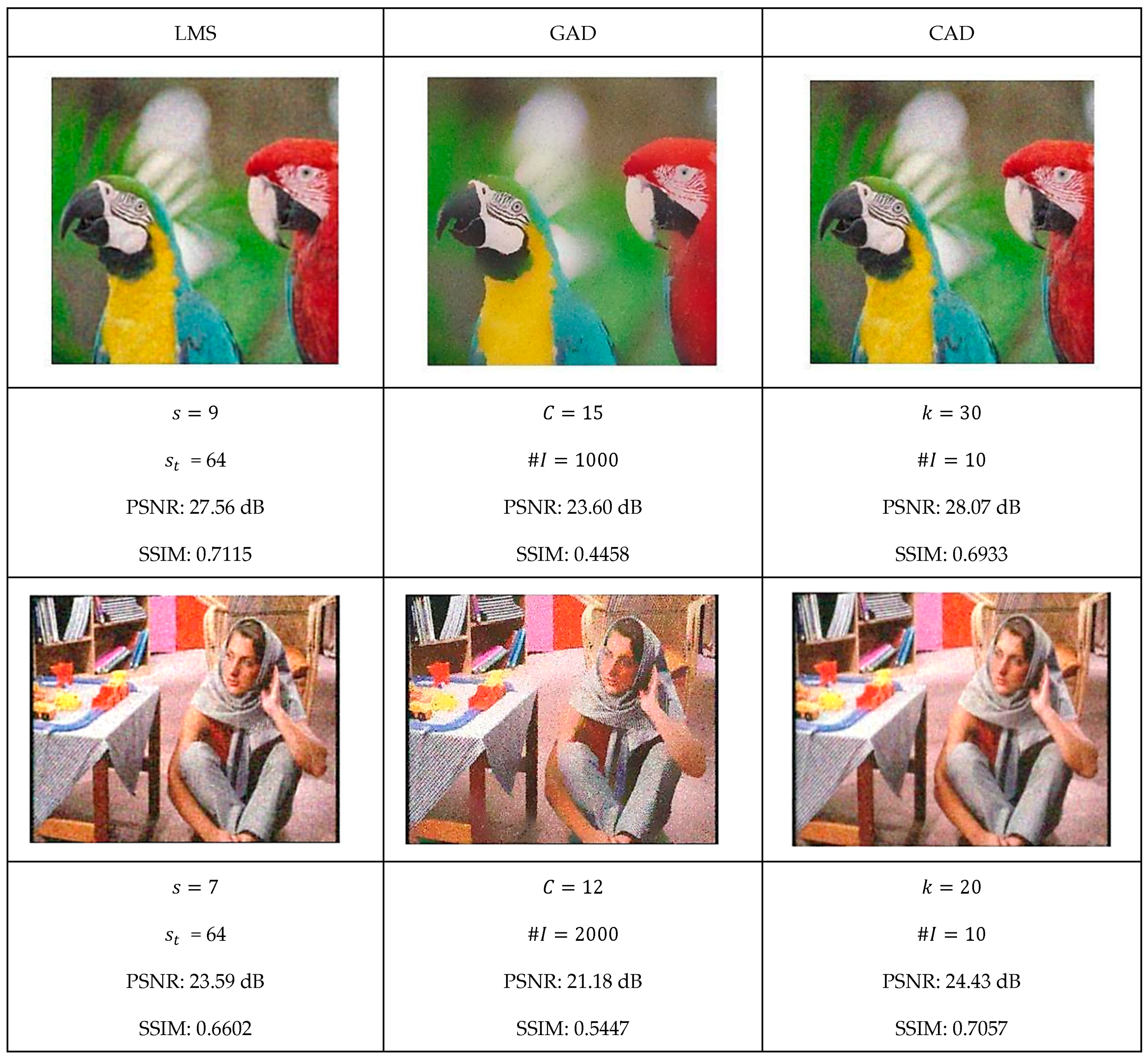

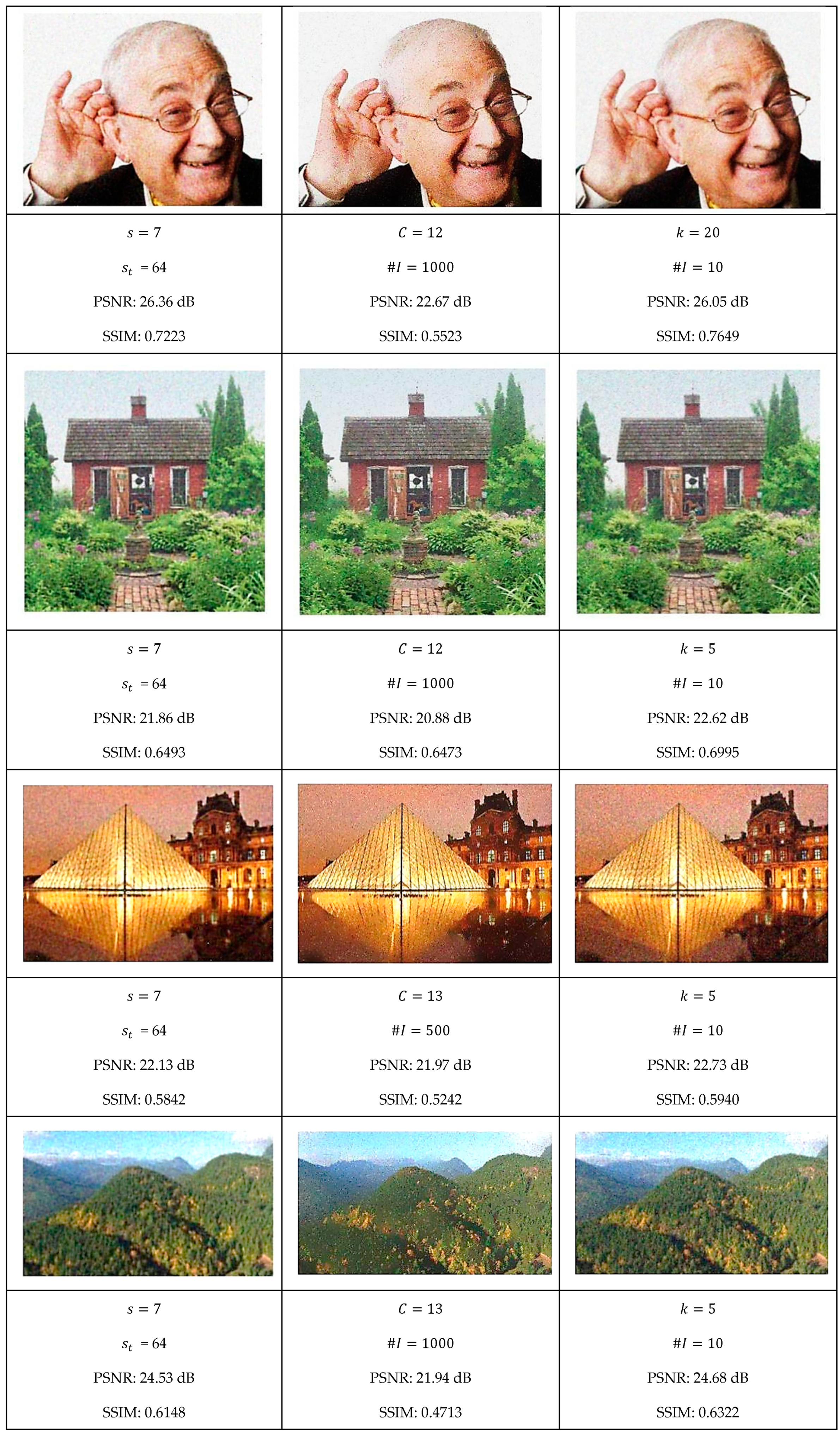

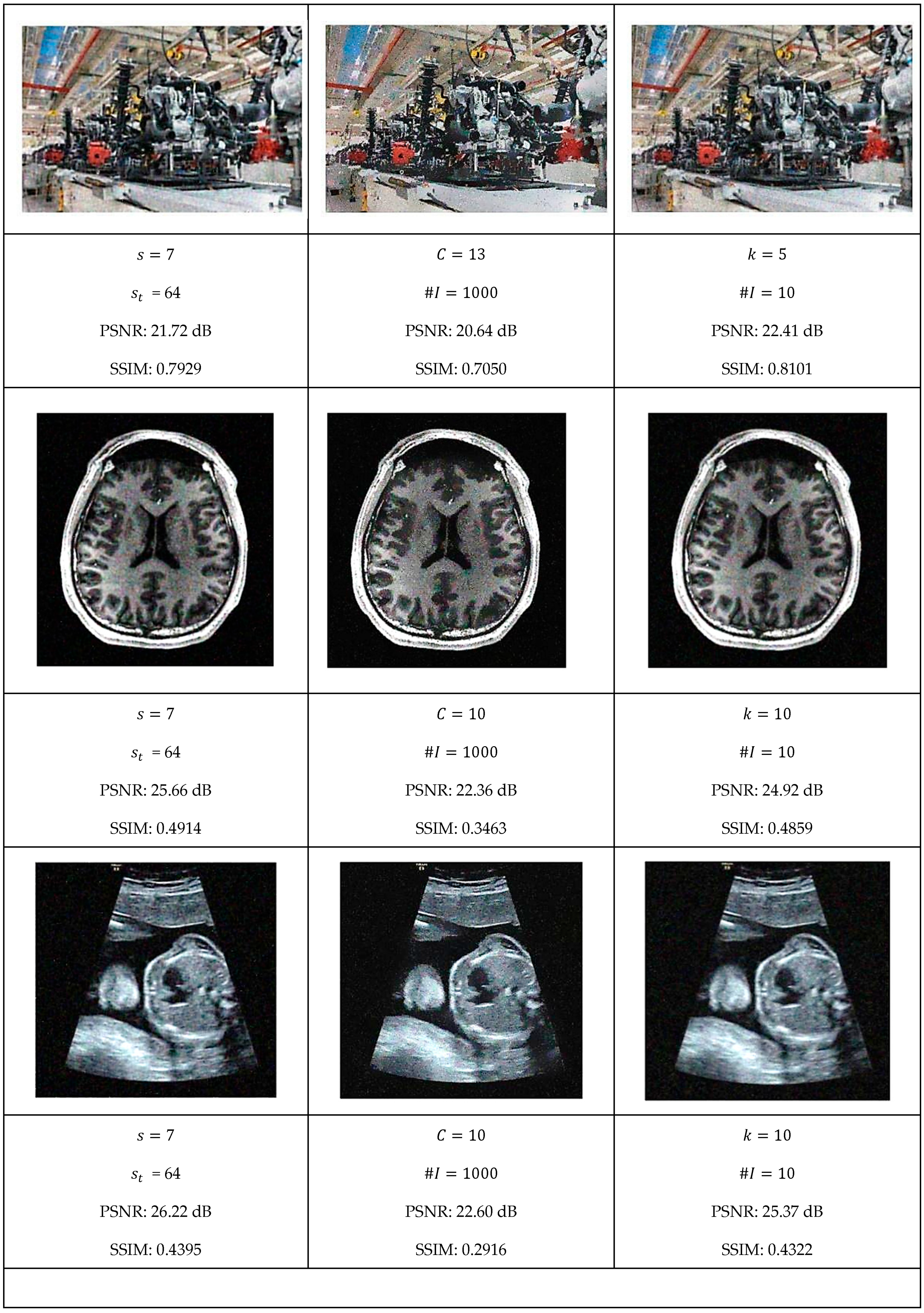

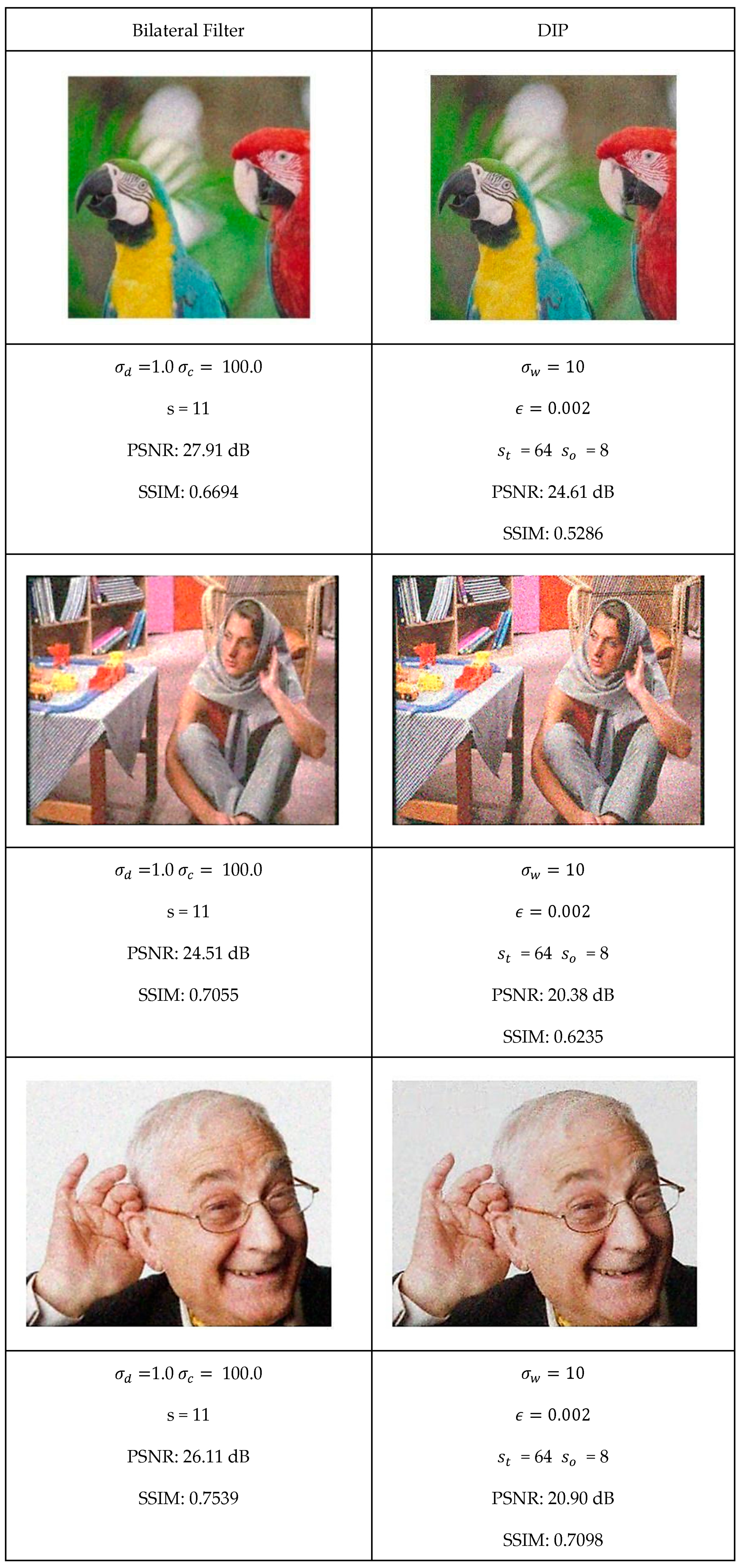

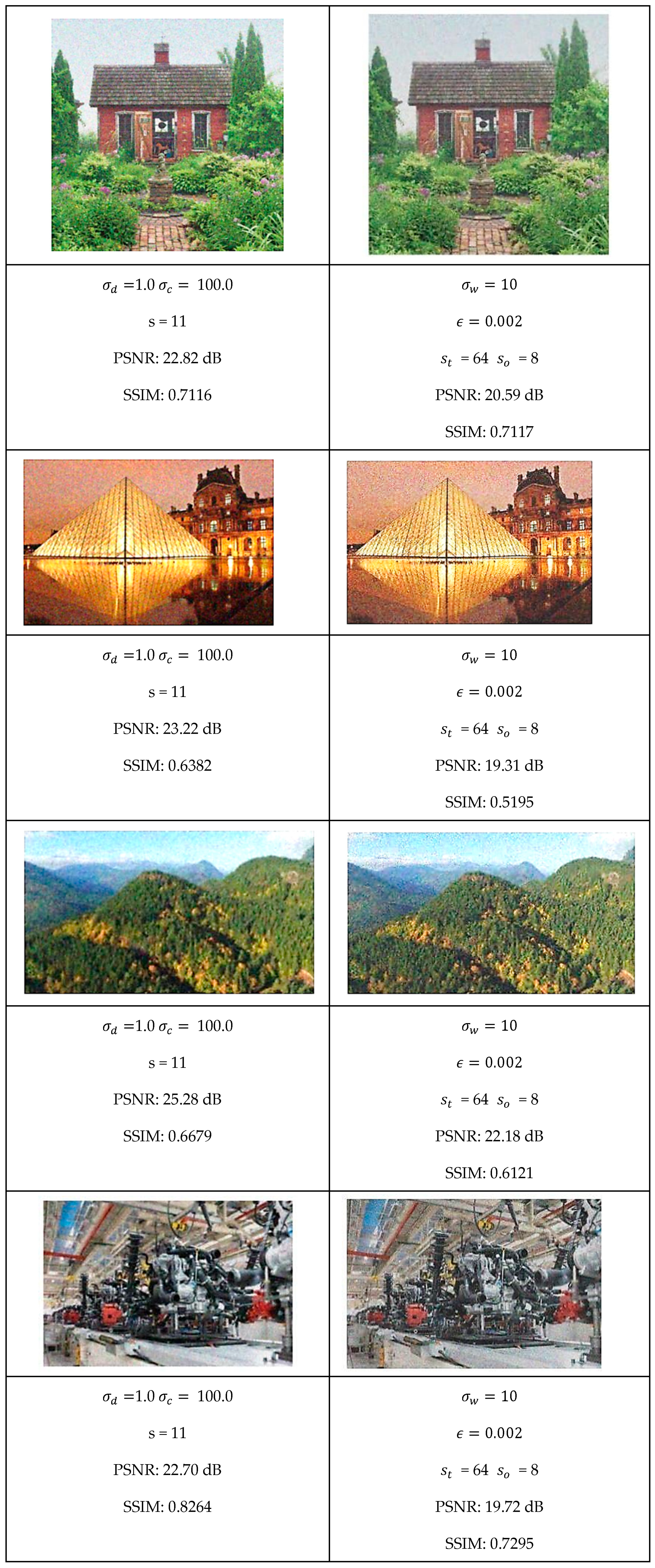

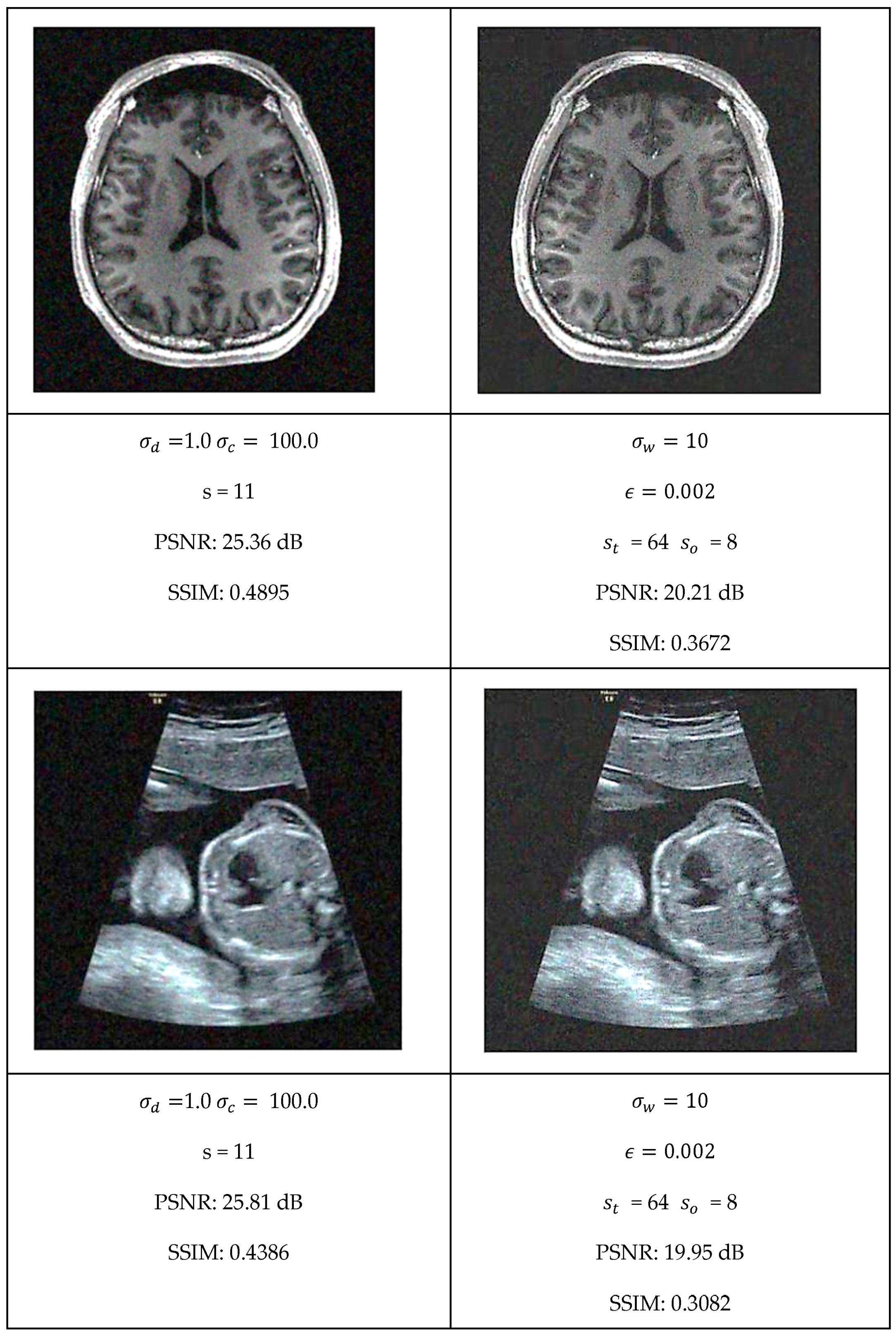

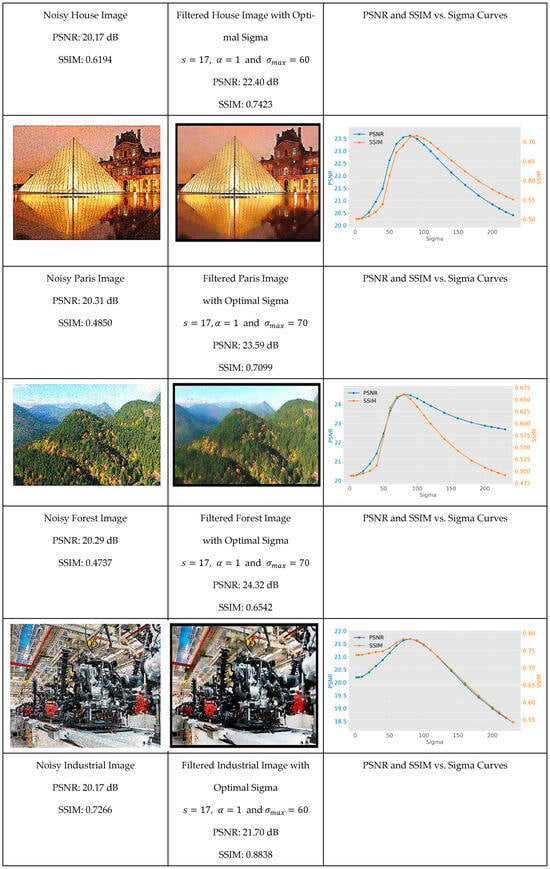

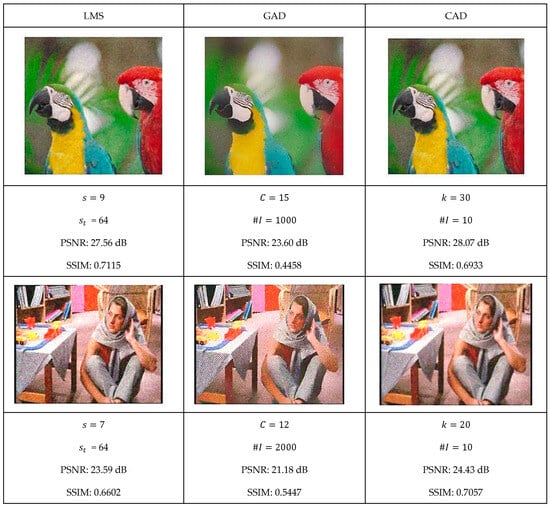

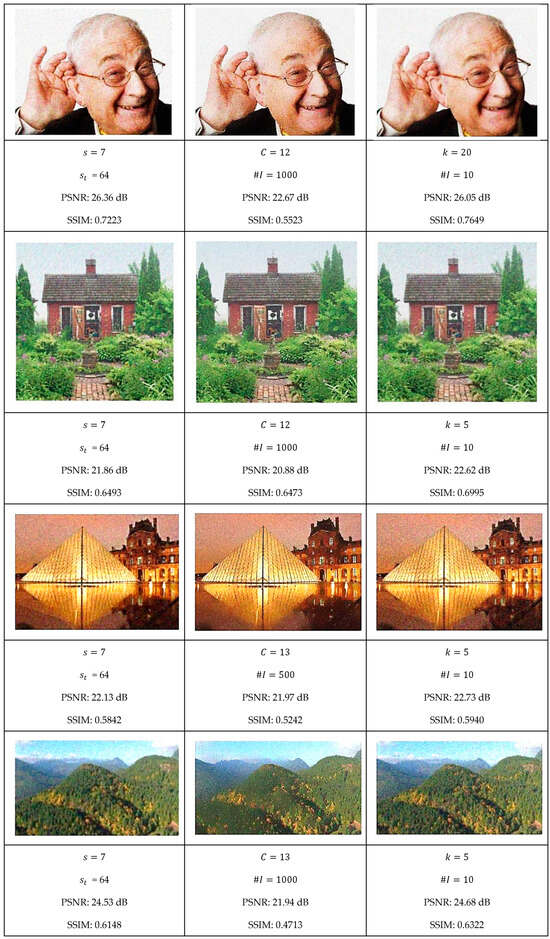

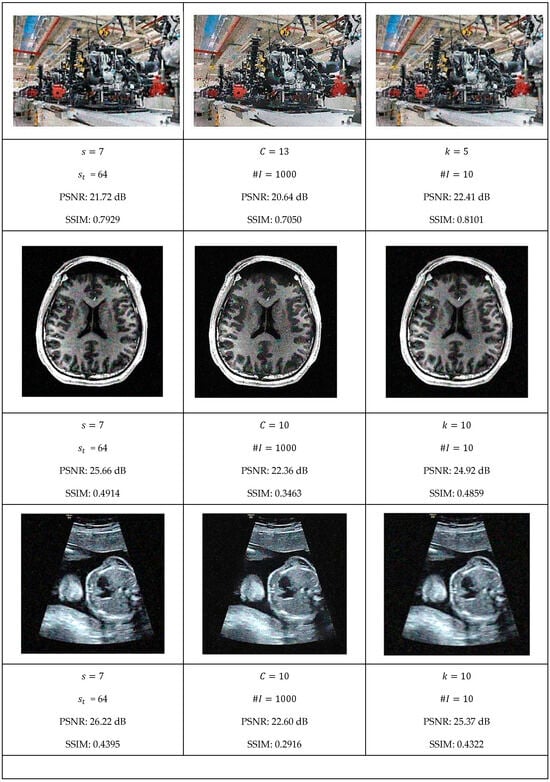

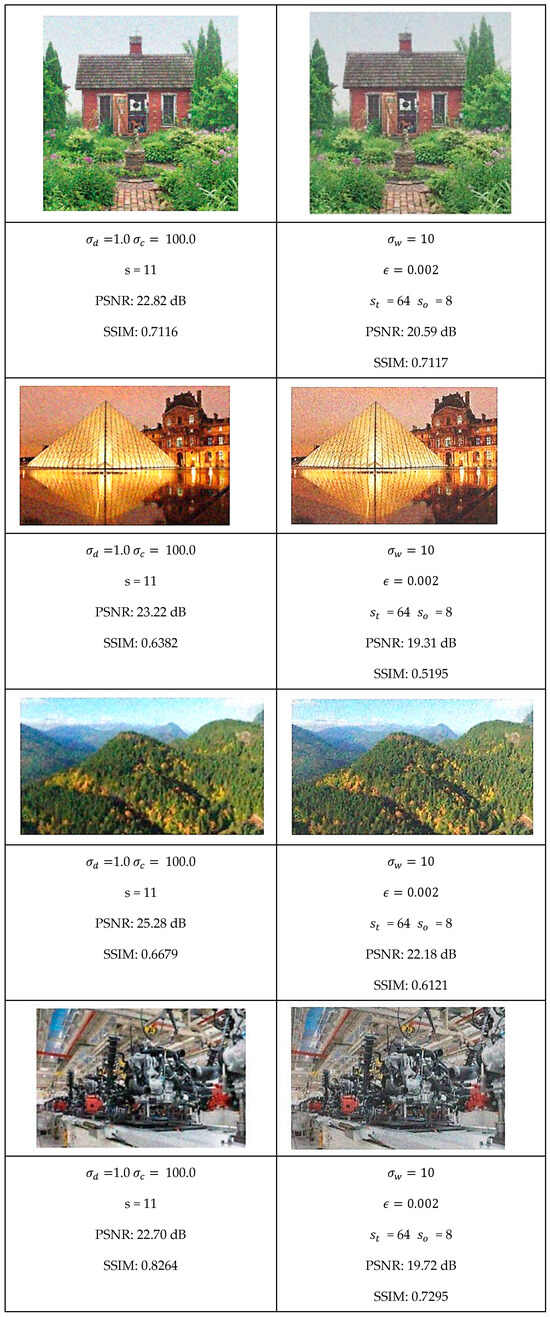

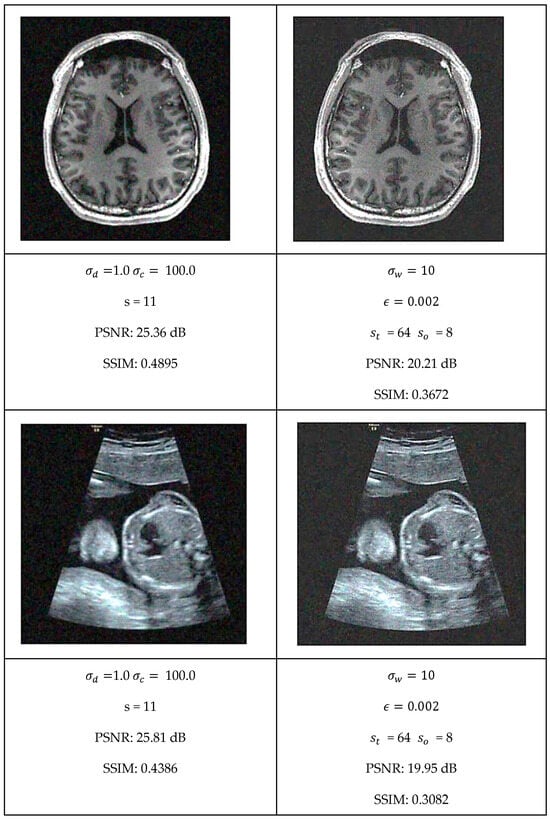

This section presents a comprehensive comparative analysis of our geodesic filtering approach against the state-of-the-art methods. The key to this comparison is based on PSNR and SSIM difference metrics. The noisy images shown in Figure 8 were processed using various implementations of the filtering algorithms described in Section 2. To be fair in our comparison, as with the geodesic filter, we tuned the parameters to produce the best PSNR value possible. The results of this comparison are collected in Figure 9 and Figure 10.

Figure 9.

Optimal filtering results for LMS, gradient anisotropic diffusion, and curvature anisotropic algorithms.

Figure 10.

Optimal filtering results for bilateral and GW-DIP algorithms.

Each algorithm was carefully tuned to achieve optimal performance using the same test image database with standardized noise conditions. For each filter, the tuning parameters are as follows:

- Least Median Filter: window size and tile size ;

- Gradient Anisotropic Diffusion: conductance and number of iterations #I;

- Curvature Anisotropic Diffusion: the mean curvature of the level sets and number of iterations #I;

- Bilateral Filter: kernel size, spatial distance weight, and color distance weight;

- Gaussian Weighted Wavelet DIP Neural Network: Gaussian variance, minimum tile loss, tile size, and tile overlap size.

In summary, the quantitative evaluation presented in Table 1 and Table 2 confirms that geodesic filtering provides dual performance advantages: achieving noise reduction metrics (PSNR) comparable to state-of-the-art alternatives while significantly outperforming them in structural preservation (SSIM). This superiority in preserving image structure is evident across the entire image dataset, with particularly notable advantages in regions containing intricate textures and fine details that traditional methods frequently over-smooth or distort.

Table 1.

Comparison of the PSNR for each filtering algorithm.

Table 2.

Comparison of the SSIM for each filtering algorithm.

The results clearly demonstrate that while other filtering approaches may achieve similar noise reduction performance, they do so at the cost of structural integrity. Geodesic filtering, in contrast, maintains the delicate balance between noise suppression and feature preservation, making it particularly valuable for applications where preserving the semantic content of images is paramount.

Furthermore, geodesic filtering shows remarkable resilience when processing non-standard noise distributions, including speckle patterns and mixed noise profiles that typically challenge conventional filters. This versatility extends its practical utility across diverse application domains from medical imaging to remote sensing.

5. Geodesic Filtering Computational Complexity and GPU Implementation

The computational complexity of geodesic filtering represents one of its most significant challenges, which necessitates the GPU implementation described in the paper. This aspect deserves thorough examination as it directly impacts the algorithm’s practical applicability. The computational bottleneck occurs specifically during the minimal path calculation (geodesic distance) between pixels. For each central pixel, the algorithm must compute the shortest path to every other pixel in the window, considering both spatial proximity and intensity differences. This requires solving multiple single-source shortest path problems, which have a complexity of O () per window when using Dijkstra’s algorithm with a binary heap.

Like the algorithms described in Asad et al. [38] and Áfra et al. [39], our GPU implementation addresses many computational challenges through several innovative approaches:

- Parallelization Strategy: By processing multiple image tiles simultaneously, the GPU implementation exploits the inherent parallelism in the algorithm. Each thread processes a single pixel, allowing thousands of pixels to be processed concurrently.

- Memory Optimization: The paper describes sophisticated memory access patterns and bank conflict resolution techniques that significantly improve throughput on GPU architectures.

- Wave Propagation Algorithm: The fast-marching method (FMM) implemented on GPU provides a more memory-access-friendly alternative to Dijkstra’s algorithm, eliminating the need for priority queues which cause memory bank conflicts.

- Coalesced Memory Access: By arranging data in memory to enable coalesced access patterns, the implementation achieves near-optimal memory bandwidth utilization.

5.1. Memory Optimization

- Image Tiling: A large image is divided into 16 × 16 tiles for processing, where each tile is handled by one thread block. A 7 × 7 convolution window requires an overlap of three pixels on each side (7/2⌋ = 3), resulting in an effective area processed by each tile to be 10 × 10 pixels (16-3-3).

- Memory Layout: The input image is stored as RGB values (three channels) in global memory, where each pixel requires three float values. The image data are aligned for faster coalesced memory access from the threads. For each block, the threads read the data from global memory into the shared memory. Since each tile is 16 × 16, each block will read a tile of size 22 × 22 pixels (16 + 3 + 3 for each dimension) of three floats. This also includes halo regions. In addition, additional space is used in shared memory for distance computations.

5.1.1. Block Execution Flow

- Tile Loading Phase: Each 16 × 16 thread block loads the tile data (16 × 16), the halo region (three pixels each side), using coalesced reading from global memory.

- Convolution Processing: Each thread is responsible for one pixel in 16 × 16 tiles, and threads near edges handle the halo region. First, a distance array is initialized. Each output pixel requires 7 × 7 window computation. The center pixel of each window is determined by thread ID. For a thread in the block,

- The convolution window is centered at the current thread position;

- Each thread processes the 7 × 7 window around center, which include

- Computing geodesic distances within the window using the wave propagation algorithm;

- Calculating the weight of the spatial and signal contributions using a Gaussian function;

- Normalize the weights between zero and one;

- Multiply the signal with the weights and the pixel by the weighted sum.

5.1.2. Memory Access Optimization

The main optimization strategy is based on better use of memory access. First is a coalesced loading strategy where each thread loads one RGB pixel set 128-byte aligned access and where sequential thread IDs are mapped to sequential memory. For example, data are stored in global memory as [R0] [G0] [B0] [R1] [G1] [B1] … [R15] [G15] [B15]. Each thread in a half-warp read the data as follows:

- T0 → P0, P16, P32 (loads three pixels);

- T1 → P1, P17, P33;

- T2 → P2, P18, P34;

- …;

- T15 → P15, P31, P47.

The second data load consists of

- Loading halo region corresponding to an additional three pixels on each side;

- Maintain a coalesced pattern where possible;

- Handle boundary conditions near the edge of the image.

All the data for a tile of (22 × 22) are then stored into much faster shared memory as follows:

- [Halo] [Main Tile] [Halo];

- 3 + 16 + 3 = 22 columns;

- 3 + 16 + 3 = 22 rows.

It is stored as follows:

- [H H H|M M M M M M M M M M M M M M M M|H H H];

- [H H H|M M M M M M M M M M M M M M M M|H H H];

- [H H H|M M M M M M M M M M M M M M M M|H H H].

The main objective is to minimize memory bank conflict between threads in a block. Bank conflicts can cause 32× slowdown, as each conflict serializes access and also affects warp execution efficiency. First, we must ensure that each thread accesses different banks bank (Txy) ≠ bank (Tx’y’) for any threads in the same warp. In our implementation, we used a column padding strategy where we added an extra column to the array to shift row starting addresses to ensure bank separation. Our implementation uses channel padding where we add an extra column to each channel:

- float r_channel [22,23];

- float g_channel [22,23];

- float b_channel [22,23].

This simplified bank mapping allows independent channel access that reduces conflict probability and better memory coalescing. This simple strategy eliminated bank conflicts to improve parallel memory operations of the full warp utilization. There is no serialization allowing for concurrent bank access and improved throughput.

5.2. Fast-Marching Method Algorithm (FMM)

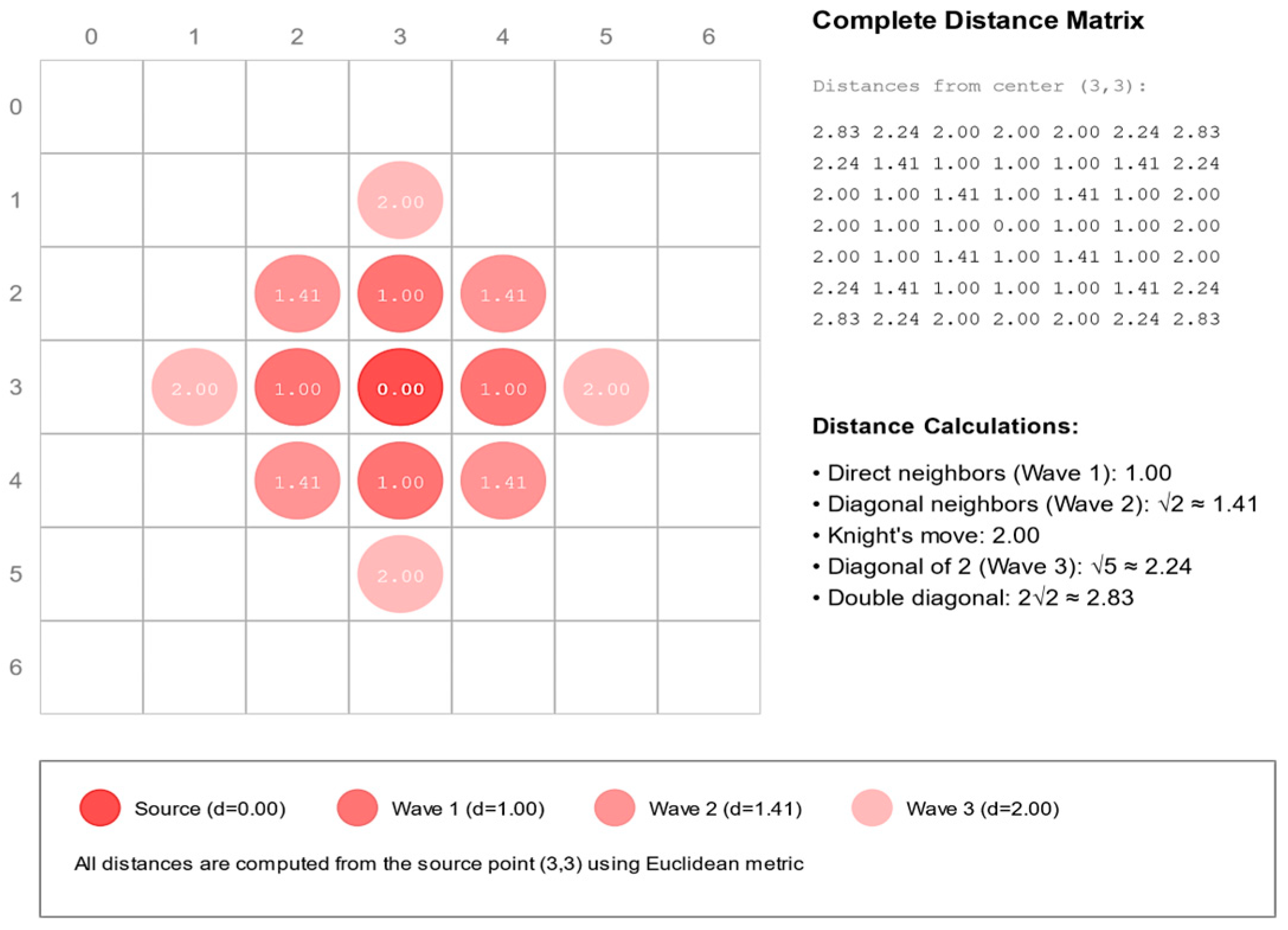

The fast-marching method (FMM) represents a watershed advancement in geodesic distance computation, pioneered by Sethian’s [31] foundational work and subsequently refined for parallel architectures. At its core, FMM employs wavefront propagation—systematically expanding distance information outward from source points while maintaining strict causality principles essential for computational accuracy. This approach begins with a meticulous initialization phase that establishes the computational infrastructure through carefully configured distance maps and status markers for each vertex within the processing window. This preparatory stage, whose importance Peyré’s [27] research emphasized, creates the necessary foundation for subsequent processing steps. The algorithm’s defining characteristic lies in its disciplined processing sequence: examining points in strict order of increasing distance to ensure each vertex’s final distance value is definitively established before dependent calculations proceed. The causality-preserving ordering mechanism, whose mathematical properties Kimmel and Sethian [25] extensively analyzed, provide crucial guarantees regarding the precision of computed geodesic distances. This ordered processing framework enables FMM to efficiently resolve the Eikonal equation governing geodesic distance propagation, making it particularly amenable to GPU implementation through its structured memory access patterns and predictable data dependencies—characteristics that dramatically contrast with Dijkstra’s algorithm’s reliance on priority queues.

The FMM algorithm consists of several key components:

- Wave Structure: For our example, four concentric waves need to be processed by a thread. Each wave represents the front of pixels at similar geodesic distances that expand outward from a source point. Each wave elements are stored in shared memory contains position, distance, and color information.

- Distance Update Mechanism: For each pixel in the current wave, the algorithm examines all 8-connected neighbors and then computes the geodesic distance combing spatial and color distances using Equation (14). The algorithm then updates the distance if a shorter path is found. All updates use atomic operators to avoid race conflicts between threads.

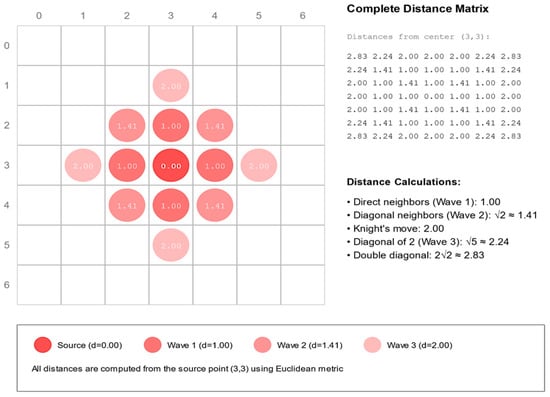

- Wave Evolution: Initially, the wave inside a convolution window starts at the source point and then propagates outward. The wave size adapts based on local image properties. The process continues until all pixels in the window are reached. Each thread in a block examines its 8-connected neighbors and then computes a new distance if a shorter is found. Then, the neighbor is marked for inclusion in the next wave if necessary. See Figure 11 for an illustration of this process.

Figure 11. Wave front calculation for a 7 × 7 window.

Figure 11. Wave front calculation for a 7 × 7 window.

The FMM computational complexity is less efficient than the Dijkstra algorithm. On the other hand, the memory access is more efficient for parallel implementation as it is not random.

5.3. Speed Comparison Between Python and CUDA Implementations

This GPU implementation-based wave propagation is more efficient and more adapted to GPU processing than the CPU implantation using Dijkstra’s algorithm. For Dijkstra’s algorithm for 7 × 7 windows:

- Nodes ;

- Time Complexity: ;

- For each pixel: 49 × (49) operations to compute the geodesic distance;

- More calculation is also needed to maintain the priority queue.

For the wave propagation algorithm,

- We need to compute four waves (center, first ring, second ring, outer);

- Operations per wave: ;

- Fixed number of steps, regardless of window content.

For a single 7 × 7 window, the Dijkstra algorithm requires

- Operations: ~280 (49 × (49);

- Forty-nine sequential steps;

- Random memory access due to the priority queue;

- Extra storage for the priority queue.

For the same window size, the wave propagation algorithm requires

- Operations: ~196 (49 × 4 waves);

- Structured memory access;

- Extra storage for the wave buffers.

5.4. Execution Speed Comparison

A comparison between a CPU implementation written in Python 3.8 and a GPU implementation written using CUDA Toolkit version CUDA 12.9 was performed. We used a powerful CPUs to compare with two GPUs running in the Google Colab environment. The CPU is the AMD Threadripper 7980X with the following specifications:

- Cores: 64;

- Threads: 128;

- Base Clock: 3.2 GHz;

- Boost Clock: 5.1 GHz;

- Memory Bandwidth: ~200 GB/s;

- L3 Cache: 384 MB.

The two GPUs used for the comparison were

- the NVIDIA A100 GPU: CUDA Cores: 6912;

- Memory: 80 GB HBM2e;

- Memory Bandwidth: 2039 GB/s;

- Base Clock: 1410 MHz.

and the

- NVIDIA T4 GPU:

- CUDA Cores: 2560;

- Memory: 16 GB GDDR6;

- Memory Bandwidth: 320 GB/s;

- Base Clock: 585 MHz.

Performance metrics for 1024 × 1024 color image for the Python Dijkstra implementation running on the CPU:

- Pure Python: ~45,000 ms;

- NumPy/SciPy: ~12,000 ms;

- Numba JIT [*]: ~3000 ms;

- Numba Parallel [*]: ~800 ms.

For the CUDA C Wave Propagation version running on a NVIDIA A100 GPU:

- Processing: ~3 ms;

- Memory Transfer: ~1 ms;

- Total: ~4 ms;

- Speedup vs. Numba: ~200×.

For a NVIDIA T4 GPU:

- Processing: ~15 ms;

- Memory Transfer: ~3 ms;

- Total: ~18 ms;

- Speedup vs. Numba: ~44×.

The implementation achieves significant performance improvements through wave-specific optimizations, dynamic bank conflict resolution, and adaptive memory access patterns. Experimental results demonstrate a speedup of 200 times for the NVIDIA A100 GPU and 44 times for the NVIDIA T4 GPU compared to a multithread execution on the CPU using Numba Parallel.

5.5. Scalability Between Algorithms

Scalability is also good as illustrated by the following statistics. For the Python Dijkstra Processing using Numba Parallel, implementation execution time vs. image size are

- 512 × 512: ~200 ms;

- 1024 × 1024: ~800 ms;

- 2048 × 2048: ~3200 ms;

- Scale factor: ~4×.

For CUDA Wave Propagation (on a A100 NVIDIA GPU):

- 512 × 512: ~1 ms;

- 1024 × 1024: ~4 ms;

- 2048 × 2048: ~16 ms;

- Scale factor: ~4×.

CUDA Wave Propagation (on a T4 NVIDIA GPU):

- 512 × 512: ~4.5 ms;

- 1024 × 1024: ~18 ms;

- 2048 × 2048: ~72 ms;

- Scale factor: ~4×.

5.6. Accuracy Between CPU and GPU Versions

The CPU (Python Dijkstra) implementation uses 64-bit double precision by default and is our gold standard reference to compute geodesic distances. For the GPU Wave Propagation implementation, each CUDA core has a single precision accuracy meaning that the final distance relative error to the gold standard will be ~10⁻⁶. So, for our test images the average error are:

- CPU Dijkstra: 0.0 (reference);

- GPU Wave (A100): 3.1 × 10−6;

- GPU Wave (T4): 3.2 × 10−6.

6. Conclusions

This paper has presented a comprehensive analysis of geodesic filtering within the Riemannian framework for image processing, offering both a rigorous theoretical foundation and extensive experimental validation. Our investigation demonstrates that by modeling images as high-dimensional manifolds and computing minimal geodesic paths, this approach achieves remarkable improvements in noise reduction and edge preservation. Geodesic filtering delivers noise reduction on par with state-of-the-art alternatives measured using PSNR while significantly outperforming them in structural preservation measured by SSIM. The robust differential geometry underpinning geodesic filtering enables it to adeptly handle complex image structures, including challenging scenarios with varying noise characteristics and intricate edge patterns.

The experimental data clearly validate that GPU acceleration is essential for practical geodesic filtering, with NVIDIA A100 hardware delivering a 200× performance boost over optimized CPU implementations. This dramatic acceleration transforms geodesic filtering from a mere theoretical concept into a viable processing technique for real-world applications. By reducing computational barriers, the GPU implementation enables geodesic filtering to be effectively applied to large-scale datasets and time-sensitive processing requirements across medical imaging, remote sensing, and video processing domains.

Furthermore, our analysis reveals that the geometric approach to image filtering offers fundamental advantages beyond performance metrics alone. By respecting the intrinsic geometry of image content, geodesic filtering preserves semantic information that is often lost in traditional filtering approaches. The preservation of fine structural details, particularly along complex curved boundaries and in textured regions, maintains the diagnostic or analytical value of processed images—a critical consideration in domains where visual information directly informs decision-making processes. The adaptive nature of geodesic distances, which automatically adjust to local image characteristics, provides a self-regulating mechanism that reduces the need for parameters tuning across diverse image types. This adaptability proves especially valuable when processing heterogeneous datasets with varying noise profiles and structural complexities. Our experiments with clinical medical images, satellite imagery, and natural photographs demonstrate this versatility, with consistent performance advantages observed across these diverse domains.

Additionally, the theoretical framework established in this paper opens new avenues for further research, including potential extensions to temporal filtering for video sequences, integration with deep learning architectures as a geometrically informed processing layer, and application to higher-dimensional volumetric data common in modern medical imaging. The mathematical formalism of Riemannian geometry provides a robust foundation for these future developments, suggesting that geodesic filtering represents not just an incremental improvement but a fundamentally different paradigm for approaching image processing challenges.

7. Future Research Directions

Looking forward, several promising avenues exist to further enhance and expand the capabilities of geodesic filtering. A primary focus will be on reducing its computational complexity while maintaining its inherent advantages. Advances in parallel computing—such as multi-GPU architectures and emerging hardware accelerators—paired with algorithmic improvements inspired by anisotropic fast marching methods, may deliver the necessary efficiency gains.

Another exciting direction involves extending the geodesic filtering framework to higher-dimensional data. While our current work concentrates on two-dimensional images, early studies indicate that the approach can be naturally generalized to 3D volumetric datasets—such as CT or MRI scans—by mapping from R3 to R4 (e.g., u, v, w to x, y, z, I). Similarly, the method could be adapted for video processing by extending the mapping to higher dimensions (e.g., R3 to R6 for video sequences, encompassing spatial and temporal dimensions along with color information) and even 4D time-varying datasets (e.g., dynamic CT or ultrasound data).

These extensions will not only widen the applicability of geodesic filtering but also create new research opportunities in the analysis and visualization of complex, high-dimensional data. Many of these extensions of the geodesic filtering approach have been successfully tested in our laboratory and will be published in the near future.

Author Contributions

Both authors did programming, testing, and wrote the paper. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Natural Sciences and Engineering Research Council of Canada Discovery.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors on request.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Tian, C.; Fei, L.; Zheng, W.; Xu, Y.; Zuo, W.; Lin, C.W. Deep learning on image denoising: An overview. Neural Netw. 2020, 131, 251–275. [Google Scholar] [CrossRef]

- Ulyanov, D.; Vedaldi, A.; Lempitsky, V. Deep Image Prior. Int. J. Comput. Vis. 2018, 128, 1405–1573. [Google Scholar] [CrossRef]

- Perona, P.; Malik, J. Scale-space and edge detection using anisotropic diffusion. IEEE Trans. Pattern Anal. Mach. Intell. 1990, 12, 629–639. [Google Scholar] [CrossRef]

- Weickert, J.; ter Haar Romeny, B.M.; Viergever, M.A. Efficient and reliable schemes for nonlinear diffusion filtering. IEEE Trans. Image Process. 1998, 7, 398–410. [Google Scholar] [CrossRef]

- Black, M.J.; Sapiro, G.; Marimont, D.H.; Heeger, D. Robust Anisotropic Diffusion. IEEE Trans. Image Process. 1998, 7, 421–432. [Google Scholar] [CrossRef] [PubMed]

- Alvarez, L.; Lions, P.L.; Morel, J.M. Image selective smoothing and edge detection by nonlinear diffusion. SIAM J. Numer. Anal. 1992, 29, 845–866. [Google Scholar] [CrossRef]

- Sapiro, G.; Tannenbaum, A.; You, Y.-L.; Kaveh, M. Experiments on geometric image enhancement. In Proceedings of the1st International Conference on Image Processing, Austin, TX, USA, 13–16 November 1994; Published in IEEE Computer Society Press: Los Alamitos, CA, USA, 1994; Volume 2, pp. 472–476. [Google Scholar]

- Tomasi, C.; Manduchi, R. Bilateral filtering for gray and color images. In Proceedings of the IEEE International Conference on Computer Vision, Bombay, India, 7 January 1998; pp. 839–846. [Google Scholar]

- Durand, F.; Dorsey, J. Fast bilateral filtering for the display of high-dynamic-range images. In Proceedings of the 29th Annual Conference on Computer Graphics and Interactive Techniques, San Antonio, TX, USA, 23–26 July 2002; ACM SIGGRAPH: New York, NY, USA, 2002; pp. 257–266. [Google Scholar]

- Paris, S.; Durand, F. A fast approximation of the bilateral filter using a signal processing approach. Int. J. Comput. Vis. 2009, 81, 24–52. [Google Scholar] [CrossRef]

- Kaplan, N.H.; Erer, I.; Gulmus, N. Remote sensing image enhancement via bilateral filtering. In Proceedings of the 8th International Conference on Recent Advances in Space Technologies (RAST), Istanbul, Turkey, 19–22 June 2017; pp. 139–142. [Google Scholar]

- Rousseeuw, P.J. Least median of squares regression. J. Am. Stat. Assoc. 1984, 79, 871–880. [Google Scholar] [CrossRef]

- Rousseeuw, P.J.; Leroy, A.M. Robust Regression and Outlier Detection; John Wiley & Sons: Hoboken, NJ, USA, 1987. [Google Scholar]

- Gonzalez, R.; Woods, R. Digital Image Processing, 4th ed.; Pearson: London, UK, 2020. [Google Scholar]

- Shao, L.; Yan, R. Robust Point Cloud Processing Using Statistical Outlier Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 2883–2898. [Google Scholar]

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395. [Google Scholar] [CrossRef]

- Rousseeuw, P.J.; Leroy, A.M. Robust Regression and Outlier Detection; Wiley Series in Probability and Statistics: Hoboken, NJ, USA, 2003. [Google Scholar]

- Shocher, A.; Cohen, N.; Irani, M. “Zero-Shot” Super-Resolution using Deep Internal Learning. In Proceedings of the CVPR, Salt Lake City, UT, USA, 18–22 June 2018. [Google Scholar]

- Liu, G.; Reda, F.A.; Shih, K.J.; Wang, T.C.; Tao, A.; Catanzaro, B. Image Inpainting for Irregular Holes Using Partial Convolutions. In Proceedings of the ECCV, Munich, Germany, 8–14 September 2018. [Google Scholar]

- Yang, Y.; Sun, J.; Zhang, Q. Deep Wavelet Network with Short Connections for Image Restoration. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019. [Google Scholar]

- Liu, Y.; Wang, Z.; Fang, X. Wavelet-Based Deep Image Prior for Image Restoration. IEEE Trans. Image Process. 2020. [Google Scholar]

- Ulyanov, D.; Vedaldi, A.; Lempitsky, V. Deep Image Prior with Gaussian Weighted Wavelets. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 7765–7773. [Google Scholar]

- Boulanger, P. Extraction Multiechelle d’Element Geometrique. Ph.D. Thesis, University of Montreal, Montreal, QC, CA, 1994; pp. 9–37.

- Sochen, N.; Kimmel, R.; Malladi, R. A general framework for low level vision. IEEE Trans. Image Process. 1998, 7, 310–318. [Google Scholar] [CrossRef]

- Kimmel, R.; Malladi, R.; Sochen, N. Images as embedded maps and minimal surfaces. Int. J. Comput. Vis. 2000, 39, 111–129. [Google Scholar] [CrossRef]

- Mémoli, F.; Sapiro, G. Fast computation of weighted distance functions and geodesics on implicit hyper-surfaces. J. Comput. Phys. 2005, 173, 730–764. [Google Scholar] [CrossRef]

- Peyré, G. Manifold models for signals and images. Comput. Vis. Image Underst. 2009, 113, 249–260. [Google Scholar] [CrossRef]

- Castaño-Moraga, C.A.; Lenglet, C.; Deriche, R.; Ruiz-Alzola, J. A Riemannian approach to anisotropic filtering of tensor fields. Signal Process. 2007, 87, 263–276. [Google Scholar] [CrossRef]

- Zhang, F.; Hancock, E.R. New Riemannian techniques for directional and tensorial image data. Pattern Recognit. 2010, 43, 1590–1606. [Google Scholar] [CrossRef]

- Alonso-González, A.; López-Martínez, C.; Salembier, P.; Deng, X. Bilateral Distance Based Filtering for Polarimetric SAR Data. Remote Sens. 2013, 5, 5620–5641. [Google Scholar] [CrossRef]

- Sethian, J.A. Level Set Methods and Fast Marching Methods: Evolving Interfaces in Computational Geometry, Fluid Mechanics, Computer Vision, and Materials Science; Cambridge University Press: Cambridge, UK, 1999. [Google Scholar]

- Dijkstra, E.W. A note on two problems in connexion with graphs. Numer. Math. 1959 1999, 1, 269–271. [Google Scholar] [CrossRef]

- Mirebeau, J.M. Anisotropic fast marching on cartesian grids using lattice basis reduction. SIAM J. Numer. Anal. 2014, 52, 1573–1599. [Google Scholar] [CrossRef]

- Vincent, L. Minimal path algorithms for the robust detection of linear features in gray images. In International Symposium on Mathematical Morphology and Its Applications to Image and Signal Processing; Springer: Berlin/Heidelberg, Germany, 1998. [Google Scholar]

- Lewis, R. A Comparison of Dijkstra’s Algorithm Using Fibonacci Heaps, Binary Heaps, and Self-Balancing Binary Trees. arXiv 2023. [Google Scholar] [CrossRef]

- Weickert, J. Anisotropic Diffusion in Image Processing; ECMI Series; Teubner-Verlag: Stuttgart, Germany, 1998. [Google Scholar]

- Gousseau, Y.; Morel, J.M. Are natural images of bounded variation? SIAM J. Math. Anal. 2001, 33, 634–648. [Google Scholar] [CrossRef]

- Asad, M.; Dorent, R.; Vercauteren, T. FastGeodis: Fast Generalised Geodesic Distance Transform. J. Open-Source Softw. 2020, 7, 4532. [Google Scholar] [CrossRef]

- Áfra, A.T.; Wald, I.; Benthin, C.; Woop, S. gDist: Efficient Distance Computation between 3D Meshes on GPU. In SIGGRAPH Asia 2024 Conference Papers; Association for Computing Machinery: New York, NY, USA, 2024. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).