LIM: Lightweight Image Local Feature Matching

Abstract

1. Introduction

2. Related Work

2.1. Feature Extraction

2.1.1. Deep Learning-Based Image Matching Algorithms

2.1.2. Transformer-Based Image Matching Approaches

2.2. Feature Matching

3. LIM: Lightweight Image Local Feature Matching

3.1. Lightweight Network Backbone

- At the early stage of the network, we employ standard convolutional operations while incorporating residual connections inspired by ResNet.

- This design facilitates comprehensive extraction of low-level image features while mitigating the vanishing-gradient problem during training.

- Given the high resolution of the input image, we deliberately reduce the number of channels in the initial convolutional layers to control computational complexity.

- As the spatial resolution decreases, we gradually increase the number of channels until it reaches 128.

- Beyond this point, we replace standard convolution with depthwise separable convolution, leveraging its efficiency while retaining the extracted hierarchical representations.

- The dense parameter matrix of standard convolution, combined with residual connections, effectively captures local textures and fine details in early layers.

- Retaining a higher resolution at this stage ensures rich structural information extraction, improving the model’s overall expressiveness.

- The computational overhead remains manageable due to the lower number of channels in the early layers.

- As the network depth increases, feature maps transition from pixel-level details to high-level semantic abstractions.

- At this stage, depthwise separable convolution efficiently extracts abstract semantic features while reducing redundant computation.

- This approach substantially decreases the computational burden of processing high-resolution images and reduces the overall parameter count.
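The savings described in the list above can be estimated with a short calculation. The following is a minimal sketch, not the authors' code: it counts weights and multiply-accumulate operations (MACs) for a standard convolution and for a depthwise convolution followed by a pointwise convolution, assuming stride 1, "same" padding, and no bias. The function names and the 128-channel, 60 × 80 feature-map example are illustrative assumptions.

```python
def standard_conv_cost(c_in, c_out, k, h, w):
    """Weights and MACs of a k x k standard convolution (no bias, stride 1)."""
    params = c_in * c_out * k * k
    return params, params * h * w


def depthwise_separable_cost(c_in, c_out, k, h, w):
    """Weights and MACs of a k x k depthwise conv plus a 1 x 1 pointwise conv."""
    params = c_in * k * k + c_in * c_out
    return params, params * h * w


if __name__ == "__main__":
    # Hypothetical stage: 128 -> 128 channels on a 60 x 80 feature map.
    std_params, std_macs = standard_conv_cost(128, 128, 3, 60, 80)
    sep_params, sep_macs = depthwise_separable_cost(128, 128, 3, 60, 80)
    print("standard : params=%d MACs=%d" % (std_params, std_macs))
    print("separable: params=%d MACs=%d" % (sep_params, sep_macs))
    print("reduction factor: %.2f" % (std_params / sep_params))
```

Under these assumptions the separable layer needs roughly 8.4 times fewer weights and MACs at 128 channels. In the low-channel early layers the absolute cost of a standard convolution is already small, which is consistent with keeping standard convolutions there.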

3.2. Local Feature Extraction

- BasicBlock: A standard 2D convolutional module with kernel sizes of 1 or 3, combined with BatchNorm + ReLU activation.

- DeepSeparationBlock: A depthwise separable convolutional module structured as pointwise convolution + depthwise convolution (kernel size 3) + pointwise convolution, paired with BatchNorm + HardSwish activation.
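To make the two block definitions more concrete, the sketch below implements the two activations on scalars and counts the convolution weights of a pointwise + depthwise + pointwise stack. It is an illustration only: `hard_swish` uses the standard definition x · ReLU6(x + 3) / 6, while `deep_separation_block_params` and its `c_mid` (intermediate width) argument are hypothetical names, and BatchNorm parameters are ignored.

```python
def relu(x):
    """ReLU, the activation used in BasicBlock."""
    return max(x, 0.0)


def hard_swish(x):
    """HardSwish: x * ReLU6(x + 3) / 6, a piecewise approximation of Swish."""
    return x * min(max(x + 3.0, 0.0), 6.0) / 6.0


def deep_separation_block_params(c_in, c_mid, c_out, k=3):
    """Convolution weights of pointwise -> depthwise (k x k) -> pointwise."""
    pointwise_in = c_in * c_mid      # 1 x 1 convolution, c_in -> c_mid
    depthwise = c_mid * k * k        # one k x k filter per channel
    pointwise_out = c_mid * c_out    # 1 x 1 convolution, c_mid -> c_out
    return pointwise_in + depthwise + pointwise_out


if __name__ == "__main__":
    for x in (-4.0, -1.0, 0.0, 1.0, 4.0):
        print("x=%5.1f relu=%.3f hard_swish=%.3f" % (x, relu(x), hard_swish(x)))
    print("block weights:", deep_separation_block_params(128, 128, 128))
```

Unlike ReLU, HardSwish passes small negative values for inputs between -3 and 0, and it avoids the exponential needed by the exact Swish function.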

3.2.1. Description

3.2.2. Keypoints

3.3. Rotation

4. Network Training

4.1. Descriptor Loss

- Forward matching, where similarity is computed using and .

- Reverse matching, where similarity is recomputed using and to obtain a maximum dual-softmax loss.
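The forward and reverse matching steps can be illustrated with a common formulation of dual-softmax matching. This is a sketch under assumptions, not the paper's exact loss: `sim` is a hypothetical similarity matrix between the descriptors of two images, `matches` is a hypothetical list of ground-truth index pairs, no temperature is applied, and the loss is taken as the mean negative log-likelihood of the ground-truth pairs.

```python
import math


def softmax(values):
    """Numerically stable softmax over a list of floats."""
    m = max(values)
    exps = [math.exp(v - m) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def dual_softmax(sim):
    """Multiply the forward (row-wise) and reverse (column-wise) softmax."""
    forward = [softmax(row) for row in sim]              # image A -> image B
    reverse = [softmax(list(col)) for col in zip(*sim)]  # image B -> image A
    return [
        [forward[i][j] * reverse[j][i] for j in range(len(sim[0]))]
        for i in range(len(sim))
    ]


def dual_softmax_loss(sim, matches):
    """Mean negative log-probability of the ground-truth matches."""
    probs = dual_softmax(sim)
    return -sum(math.log(probs[i][j]) for i, j in matches) / len(matches)


if __name__ == "__main__":
    sim = [[5.0, 0.0], [0.0, 5.0]]  # toy similarities, diagonal pairs agree
    print("correct pairs:", dual_softmax_loss(sim, [(0, 0), (1, 1)]))
    print("swapped pairs:", dual_softmax_loss(sim, [(0, 1), (1, 0)]))
```

A pair receives a high probability only when each descriptor is the other's best match in both directions, so the loss is small for mutually consistent matches and large for swapped ones.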

4.2. Reliable Loss

4.3. Keypoints Loss

Repeatability Loss

5. Experiments

5.1. Experimental Setup

5.1.1. Dataset

- MegaDepth is a large-scale outdoor dataset containing extensive depth maps and corresponding images, making it well suited for feature-matching model training.

- The COCO synthetic dataset consists of thousands of images generated via various transformations, improving the generalization capability of our model.

5.1.2. Training Parameters

- Batch size: 8;

- Learning rate: ;

- Total training steps: 160,000;

- Training duration: 48 h;

- VRAM consumption: 14 GB.
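As a quick sanity check on the figures listed above, the training budget can be derived arithmetically. The sketch below uses only the listed batch size, step count, and duration; the function name `training_budget` is an illustrative assumption, and a "sample" here means one batch element, whatever that is in the authors' pipeline.

```python
def training_budget(batch_size, total_steps, hours):
    """Return (samples processed, average seconds per training step)."""
    samples_seen = batch_size * total_steps
    seconds_per_step = hours * 3600 / total_steps
    return samples_seen, seconds_per_step


if __name__ == "__main__":
    samples, sec_per_step = training_budget(batch_size=8, total_steps=160_000, hours=48)
    print("samples processed:", samples)
    print("average seconds per step: %.2f" % sec_per_step)
```

With the listed settings this gives 1,280,000 samples processed at an average of about 1.08 s per step.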

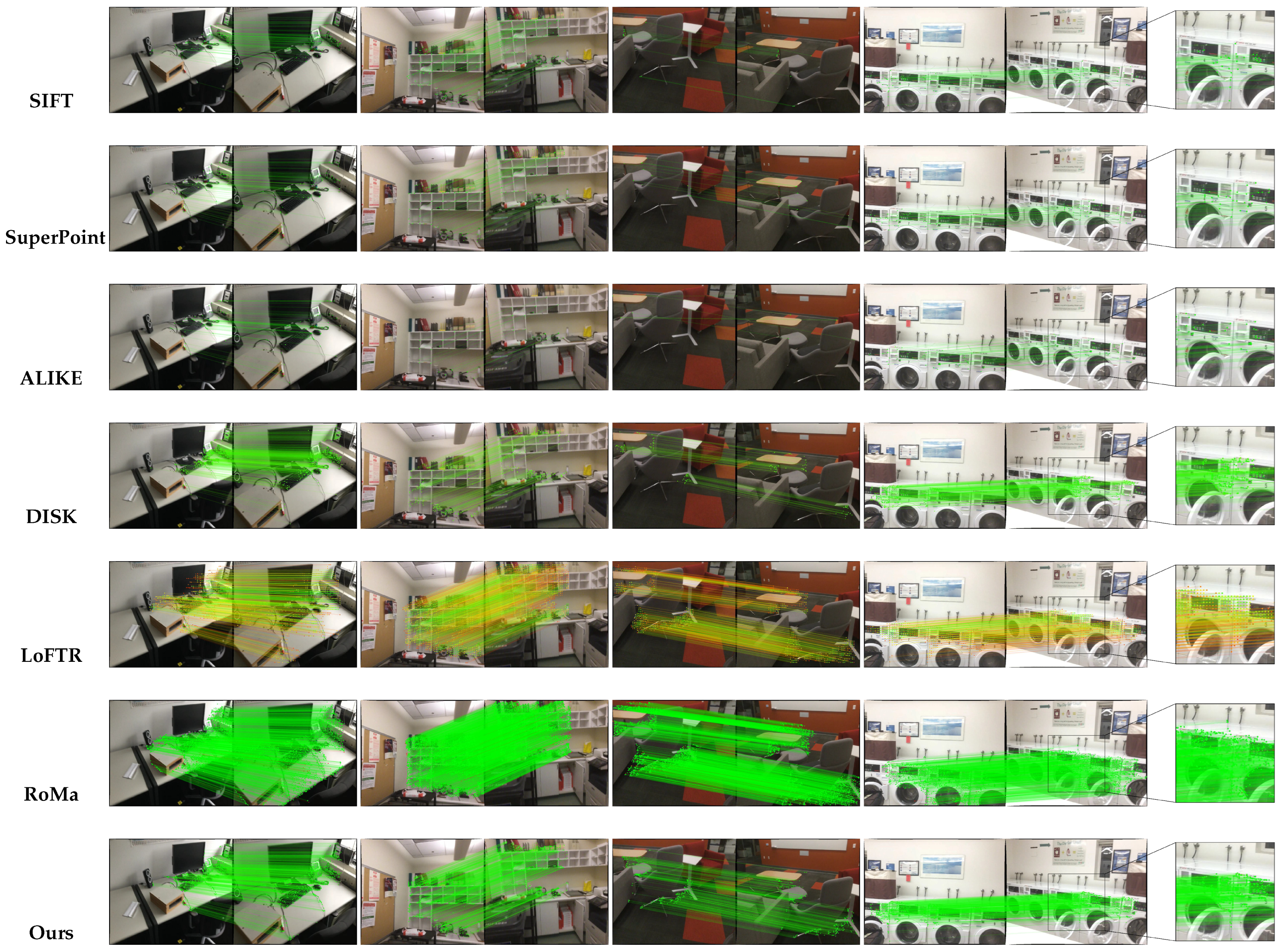

5.2. Relative Pose Estimation

5.3. Homography Estimation

5.4. Visual Localization

5.5. Benchmark Analysis

5.6. Ablation Experiment

- Default configuration.

- Eliminate the SE channel attention.

- Reduce the dimensions to 32.

- Modify the keypoint detection branch.

- Replace all convolutions with standard convolutions.

- Replace all convolutions with depthwise separable convolutions.

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Edstedt, J.; Athanasiadis, I.; Wadenbäck, M.; Felsberg, M. DKM: Dense kernelized feature matching for geometry estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 17765–17775.

- Edstedt, J.; Sun, Q.; Bökman, G.; Wadenbäck, M.; Felsberg, M. RoMa: Robust dense feature matching. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 19790–19800.

- Sun, J.; Shen, Z.; Wang, Y.; Bao, H.; Zhou, X. LoFTR: Detector-free local feature matching with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 8922–8931.

- Truong, P.; Danelljan, M.; Timofte, R.; Van Gool, L. PDC-Net+: Enhanced probabilistic dense correspondence network. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 10247–10266.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Viswanathan, D.G. Features from accelerated segment test (FAST). In Proceedings of the 10th Workshop on Image Analysis for Multimedia Interactive Services, London, UK, 6–8 May 2009; pp. 6–8.

- Bay, H.; Tuytelaars, T.; Van Gool, L. SURF: Speeded up robust features. In Proceedings of the Computer Vision–ECCV 2006: 9th European Conference on Computer Vision, Proceedings, Part I 9, Graz, Austria, 7–13 May 2006; pp. 404–417.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; pp. 2564–2571.

- Yi, K.M.; Trulls, E.; Lepetit, V.; Fua, P. LIFT: Learned invariant feature transform. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Proceedings, Part VI 14, Amsterdam, The Netherlands, 11–14 October 2016; pp. 467–483.

- Tyszkiewicz, M.; Fua, P.; Trulls, E. DISK: Learning local features with policy gradient. Adv. Neural Inf. Process. Syst. 2020, 33, 14254–14265.

- DeTone, D.; Malisiewicz, T.; Rabinovich, A. SuperPoint: Self-supervised interest point detection and description. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Salt Lake City, UT, USA, 18–23 June 2018; pp. 224–236.

- Gleize, P.; Wang, W.; Feiszli, M. SiLK: Simple learned keypoints. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 22499–22508.

- Zhao, X.M.; Wu, X.M.; Miao, J.Y.; Chen, W.H.; Chen, P.C.Y.; Li, Z.G. ALIKE: Accurate and Lightweight Keypoint Detection and Descriptor Extraction. IEEE Trans. Multimed. 2023, 25, 3101–3112.

- Zhao, X.; Wu, X.; Chen, W.; Chen, P.C.; Xu, Q.; Li, Z. ALIKED: A lighter keypoint and descriptor extraction network via deformable transformation. IEEE Trans. Instrum. Meas. 2023, 72, 1–16.

- Edstedt, J.; Bökman, G.; Wadenbäck, M.; Felsberg, M. DeDoDe: Detect, don’t describe—Describe, don’t detect for local feature matching. In Proceedings of the 2024 International Conference on 3D Vision (3DV), Davos, Switzerland, 18–21 March 2024; pp. 148–157.

- Ullman, S. The interpretation of structure from motion. Proc. R. Soc. Lond. Ser. B Biol. Sci. 1979, 203, 405–426.

- Eppstein, D.; Erickson, J. Raising roofs, crashing cycles, and playing pool: Applications of a data structure for finding pairwise interactions. In Proceedings of the Fourteenth Annual Symposium on Computational Geometry, Minneapolis, MN, USA, 7–10 June 1998; pp. 58–67.

- Juan, J. Programme de classification hiérarchique par l’algorithme de la recherche en chaîne des voisins réciproques. Les Cah. L’Analyse Des Données 1982, 7, 219–225.

- Moore, A.W. An Introductory Tutorial on Kd-Trees; Technical Report; Computer Laboratory, University of Cambridge: Cambridge, UK, 1991; p. 209.

- Gionis, A.; Indyk, P.; Motwani, R. Similarity search in high dimensions via hashing. In Proceedings of the VLDB, Edinburgh, Scotland, UK, 7–10 September 1999; pp. 518–529.

- Sarlin, P.-E.; DeTone, D.; Malisiewicz, T.; Rabinovich, A. SuperGlue: Learning feature matching with graph neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 4938–4947.

- Lindenberger, P.; Sarlin, P.-E.; Pollefeys, M. LightGlue: Local feature matching at light speed. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 2–3 October 2023; pp. 17627–17638.

- Wang, Y.; He, X.; Peng, S.; Tan, D.; Zhou, X. Efficient LoFTR: Semi-dense local feature matching with sparse-like speed. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 21666–21675.

- Jiang, H.; Karpur, A.; Cao, B.; Huang, Q.; Araujo, A. OmniGlue: Generalizable feature matching with foundation model guidance. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 19865–19875.

- Oquab, M.; Darcet, T.; Moutakanni, T.; Vo, H.; Szafraniec, M.; Khalidov, V.; Fernandez, P.; Haziza, D.; Massa, F.; El-Nouby, A. DINOv2: Learning robust visual features without supervision. arXiv 2023, arXiv:2304.07193.

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Sifre, L.; Mallat, S. Rigid-motion scattering for texture classification. arXiv 2014, arXiv:1403.1687.

- Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

- Kanakis, M.; Maurer, S.; Spallanzani, M.; Chhatkuli, A.; Van Gool, L. ZippyPoint: Fast interest point detection, description, and matching through mixed precision discretization. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 6114–6123.

- Cohen, T.S.; Welling, M. Group equivariant convolutional networks. In Proceedings of the 33rd International Conference on Machine Learning—Volume 48, New York, NY, USA, 20–22 June 2016; pp. 2990–2999.

- Li, Z.; Snavely, N. MegaDepth: Learning single-view depth prediction from internet photos. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2041–2050.

- Balntas, V.; Lenc, K.; Vedaldi, A.; Mikolajczyk, K. HPatches: A benchmark and evaluation of handcrafted and learned local descriptors. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5173–5182.

- Sattler, T.; Maddern, W.; Toft, C.; Torii, A.; Hammarstrand, L.; Stenborg, E.; Safari, D.; Okutomi, M.; Pollefeys, M.; Sivic, J. Benchmarking 6DOF outdoor visual localization in changing conditions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8601–8610.

- Barath, D.; Noskova, J.; Ivashechkin, M.; Matas, J. MAGSAC++, a fast, reliable and accurate robust estimator. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1304–1312.

- Fischler, M.A.; Bolles, R.C. Random sample consensus: A paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 1981, 24, 381–395.

| Method | AUC@5° | AUC@10° | AUC@20° | ACC@5° | ACC@10° | ACC@20° |
|---|---|---|---|---|---|---|
| SuperPoint | 36.7 | 49.3 | 60.3 | 57.4 | 68.5 | 83.2 |
| DISK | 52.4 | 64.2 | 75.2 | 68.4 | 81.2 | 89.1 |
| ALIKE | 47.3 | 60.9 | 73.2 | 69.1 | 77.9 | 87.1 |
| LoFTR | 49.8 | 65.7 | 78.5 | 70.3 | 83.2 | 90.2 |
| Ours | 47.9 | 61.8 | 73.7 | 69.5 | 81.3 | 88.7 |
| SuperPoint+LightGlue | 42.4 | 54.5 | 68.5 | 63.7 | 72.1 | 87.3 |
| DISK+LightGlue | 53.3 | 67.5 | 77.5 | 70.1 | 82.5 | 90.1 |
| Ours+LightGlue | 50.5 | 64.3 | 76.2 | 71.2 | 82.3 | 91.1 |

| Method | FPS (480p) | FPS (720p) | FPS (1080p) | Mem. (GB) |
|---|---|---|---|---|
| SuperPoint | | | | 1.3 |
| DISK | | | | 1.6 |
| ALIKE | | | | 0.6 |
| LoFTR | | | | 4.5 |
| Ours | | | | 0.4 |
| SuperPoint+LightGlue | | | | 1.5 |
| DISK+LightGlue | | | | 2.9 |
| Ours+LightGlue | | | | 1.0 |

| Method | AUC@5° | AUC@10° | AUC@20° |
|---|---|---|---|
| SuperPoint | 12.2 | 23.2 | 34.2 |
| DISK | 10.4 | 20.8 | 32.1 |
| ALIKE | 8.2 | 16.2 | 25.8 |
| LoFTR | 16.6 | 33.8 | 50.6 |
| Ours | 16.7 | 32.6 | 47.8 |
| SuperPoint+LightGlue | 13.2 | 25.2 | 40.2 |
| DISK+LightGlue | 11.2 | 24.4 | 38.4 |
| Ours+LightGlue | 16.8 | 33.2 | 48.3 |

| Method | Illumination MHA@1 | Illumination MHA@3 | Illumination MHA@5 | Viewpoint MHA@1 | Viewpoint MHA@3 | Viewpoint MHA@5 |
|---|---|---|---|---|---|---|
| SuperPoint | 49.23 | 88.85 | 96.92 | 21.79 | 52.86 | 70.07 |
| DISK | 50.02 | 89.23 | 97.31 | 19.29 | 53.21 | 70.32 |
| ALIKE | 51.19 | 90.15 | 96.92 | 20.86 | 52.14 | 67.52 |
| LoFTR | 53.24 | 92.23 | 98.32 | 22.25 | 52.03 | 71.21 |
| Ours | 51.32 | 89.54 | 97.35 | 21.57 | 52.86 | 69.17 |
| SuperPoint+LightGlue | 49.45 | 89.15 | 97.35 | 22.11 | 53.02 | 71.03 |
| DISK+LightGlue | 51.06 | 89.75 | 97.55 | 20.11 | 54.02 | 71.05 |
| Ours+LightGlue | 52.45 | 90.35 | 97.65 | 21.71 | 53.22 | 70.23 |

| Method | Day (0.25 m) | Day (0.5 m) | Day (5 m) | Night (0.25 m) | Night (0.5 m) | Night (5 m) |
|---|---|---|---|---|---|---|
| SuperPoint | 74.2 | 79.5 | 84.1 | 37.8 | 43.9 | 53.1 |
| DISK | 81.9 | 89.8 | 93.1 | 66.3 | 72.4 | 85.7 |
| ALIKE | 72.7 | 79.7 | 84.3 | 38.8 | 43.9 | 59.2 |
| LoFTR | 88.5 | 95.5 | 98.8 | 75.4 | 90.6 | 97.9 |
| Ours | 79.4 | 86.0 | 90.5 | 70.4 | 75.5 | 89.8 |
| SuperPoint+LightGlue | 88.6 | 95.4 | 98.3 | 85.7 | 90.8 | 100 |
| DISK+LightGlue | 86.2 | 94.8 | 98.7 | 81.6 | 90.8 | 100 |
| Ours+LightGlue | 88.9 | 95.8 | 98.9 | 86.6 | 92.9 | 100 |

| Strategy | AUC@5° |
|---|---|
| Default | 47.9 |
| (i) No SE attention | 42.5 |
| (ii) Smaller model | 36.8 |
| (iii) Modify keypoint extraction | 39.9 |
| (iv) All standard convolutions | 41.6 |
| (v) All depthwise separable convolutions | 40.6 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ying, S.; Zhao, J.; Li, G.; Dai, J. LIM: Lightweight Image Local Feature Matching. J. Imaging 2025, 11, 164. https://doi.org/10.3390/jimaging11050164
