Abstract

Computer vision aims to enable machines to understand the visual world. It encompasses numerous tasks, such as action recognition, object detection and image classification. Much research has focused on solving these tasks, but one area that remains relatively uncharted is light enhancement (LE). Low-light enhancement (LLE) is crucial because computer vision tasks fail in the absence of sufficient lighting and otherwise have to rely on additional peripherals such as sensors. This review sheds light on this subfield of computer vision, with a focus on video enhancement, along with the aforementioned computer vision tasks. The review analyzes both traditional and deep learning-based enhancers and provides a comparative analysis of recent models in the field. It also analyzes how popular computer vision tasks are improved and made more robust when coupled with light enhancement algorithms. Results show that deep learners outperform traditional enhancers, with supervised learners obtaining the best results, followed by zero-shot learners, and that computer vision tasks improve when coupled with light enhancement. The review concludes by highlighting major findings, such as that although supervised learners obtain the best results, a shift to zero-shot learners is required because of the lack of real-world data and the limited robustness of supervised learners to new data.

1. Introduction

Light enhancement (LE) networks aim to enhance low-light and poorly lit visual data so that more information can be extracted from them for further processing. Simply brightening an image does not lead to the desired results. Firstly, in low-light conditions, not all objects in an image are equally deprived of light; thus, objects must be enhanced with consideration of local luminosity. Secondly, images captured in low light often contain noise, and a major challenge is enhancing the lighting without also amplifying this unwanted noise. Light enhancement is therefore a non-trivial task. LE methods can be divided into two groups: traditional learning and deep learning. Traditional learning is further subdivided into histogram equalization, Retinex, dehazing and statistical techniques, and deep learning into supervised, unsupervised, semi-supervised and zero-shot learning [1]. Traditional learning methods such as those employed in [2,3,4,5] were commonly used before the rise of deep learning. Some, like histogram equalization, are still widely employed alongside deep learning methods because they handle specific tasks well; histogram equalization, for example, maintains contrast amongst neighboring pixels and expands the dynamic range of an image. Deep learning methods have gained popularity in the LE domain because improvements in neural networks over the years have made them more accurate and faster. Although deep learning methods offer these crucial benefits, one major drawback is their need for large, diverse, real-world training data.

Within the domain of LE, image enhancement receives the bulk of research attention over video enhancement. Many algorithms that aim to enhance videos simply extend image enhancement networks to videos by processing the videos frame by frame. This causes temporal inconsistencies in the processed video, which lead to various artefacts such as blur, poor color grading and flickering. Deblurring is a technique that aims to recover sharp images from blurry ones. Mathematically, a blurred image is the result of convolving the sharp image with a blurring kernel (also termed the point spread function, PSF). Blur can be caused by object motion or camera shake, out-of-focus objects or a slow camera shutter speed, to name a few. Blur may be categorized as uniform or non-uniform, and deblurring as blind or non-blind. With uniform blur, the kernel that caused the blur is constant throughout the frame; with non-uniform blur (also termed local blur), it varies across the frame. In blind deblurring, the kernel that caused the blur is unknown, which makes the image harder to deblur, while in non-blind deblurring, the blur-causing kernel is known. Real-world blur is often non-uniform and must be removed blindly. Deblurring networks are commonly built from the following basic layers: convolutional, recurrent, residual, dense and attention layers. The most common architectures include deep auto-encoders, generative adversarial networks (GANs), cascaded networks, multi-scale networks and reblurring networks.
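To make the convolution model of blur concrete, the following is a minimal NumPy sketch, assuming a greyscale image stored as a 2D float array. `blur` and `box_psf` are hypothetical helper names; the same kernel is applied at every pixel, so the sketch models uniform blur only, and borders are handled by edge replication (an arbitrary choice).

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def blur(image: np.ndarray, psf: np.ndarray) -> np.ndarray:
    """Uniform blur: convolve a 2D image with a point spread function (PSF).

    Borders are handled by replicating edge pixels so the output keeps
    the input's shape.
    """
    kh, kw = psf.shape
    padded = np.pad(
        image,
        ((kh // 2, kh - 1 - kh // 2), (kw // 2, kw - 1 - kw // 2)),
        mode="edge",
    )
    windows = sliding_window_view(padded, psf.shape)  # shape (H, W, kh, kw)
    # Convolution flips the kernel (correlation would use psf as-is).
    return np.einsum("ijkl,kl->ij", windows, psf[::-1, ::-1])


def box_psf(size: int) -> np.ndarray:
    """Normalized box kernel, a crude stand-in for defocus blur."""
    return np.full((size, size), 1.0 / (size * size))
```

Blurring a single bright pixel reproduces the kernel itself, which is why the kernel is called the point spread function. Non-blind deblurring tries to invert this operation with a known `psf`, whereas blind deblurring must also estimate `psf`.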

Poor color grading (poor color transfer) occurs when an algorithm poorly adjusts the color and tonal balance of an image or video that is greyscale or poorly colored (as in the case of low-light images or videos). This poor colorization manifests as either chromatic flickering or incorrect colorization of objects in the processed visual data, along with a loss of color contrast. Flickering in processed videos is often caused by processing techniques that destroy the temporal coherence between neighboring video frames, causing artefacts in the processed video. Removing video flicker requires exploiting “the temporal information between neighboring frames” [6]. Methods for maintaining temporal information from frame to frame include 3D convolutional networks (ConvNets), which capture both spatial and temporal information, as well as LSTMs and GRUs. There is a trade-off: 3D ConvNets capture both temporal and spatial information, but only over a short time span, whereas LSTMs and GRUs can capture longer sequences but are weaker than ConvNets at capturing spatial information.

Action recognition (AR) is the ability of machines to recognize human activities in videos. “Actions are ‘meaningful interactions’ between humans and the environment” [7]. Actions can be short-term or long-term; for long-term actions, recurrence is required so that the network can “remember” actions that happened at the start of the sequence. Recurrence also helps the model understand the order in which actions occur. For this reason, many action recognition models use some form of recurrent network, such as LSTMs or GRUs.

The models covered in this review were chosen either because they are among the latest popular models or because they are popular models whose robustness and effectiveness have stood the test of time. Examples include recent, popular models such as Zero DCE (proposed in 2020) [8] and much older models such as the YOLO algorithms; YOLOv1 [9] was proposed in 2015, but because of its faster-than-real-time performance, it continues to be used in many real-time detection tools to this day.

The contributions of this review are as follows:

- Provides a survey of recent and highly impactful LE models, video artefact removal networks, and action recognition and object detection algorithms. In doing so, this review provides a framework that researchers can use to build nighttime-capable computer vision systems such as autonomous criminal activity detectors. Similar systems have been attempted using traditional methods, with little success; this review instead studies deep learning methods, which have been shown to outperform traditional learning methods.

- Recent and popular computer vision models are compared with each other, both qualitatively and quantitatively, providing a holistic evaluation of each model.

- The survey summarizes the most popular datasets used by the networks mentioned and gives an overview of their data formats and related information. The review also describes how the datasets can be modified to better represent real-world data, which improves model performance.

- Finally, this review lays out the challenges facing the highlighted computer vision tasks and possible means of tackling them.

The rest of this review is organized as follows:

Section 2 discusses different models in LE and the learning techniques they use. Artefact removal is reviewed in Section 3, and artefact removal coupled with light enhancement is analyzed in Section 4. Section 5 delves into action recognition and object detection, and Section 6 focuses on how action recognition and object detection can be improved in low-light conditions when coupled with LE models. Section 7 covers the datasets used for training and testing LE models, Section 8 outlines the challenges that remain to be tackled in LE, and Section 9 summarizes the findings of this paper.

2. LE Techniques

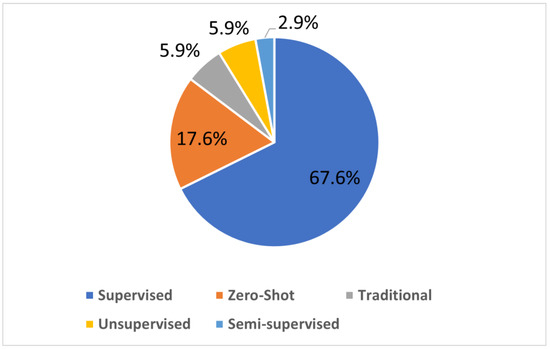

Light enhancement methods can be divided into two main categories: traditional learning and deep learning. Most enhancers employ deep learning techniques because of their superior processing speed and accuracy; therefore, this paper focuses on deep learning-based enhancers. The enhancers discussed are classified into four learning strategies: supervised, zero-shot, unsupervised and semi-supervised. The popularity of these learning strategies is visualized in Figure 1.

Figure 1.

Popularity of various LE learning strategies [1].

2.1. Traditional Learning-Based Enhancers

Traditional learning-based enhancers use techniques such as histogram equalization, statistical techniques, gray-level transformation and Retinex theory. Traditional enhancers require little or no training data and use fewer computational resources.

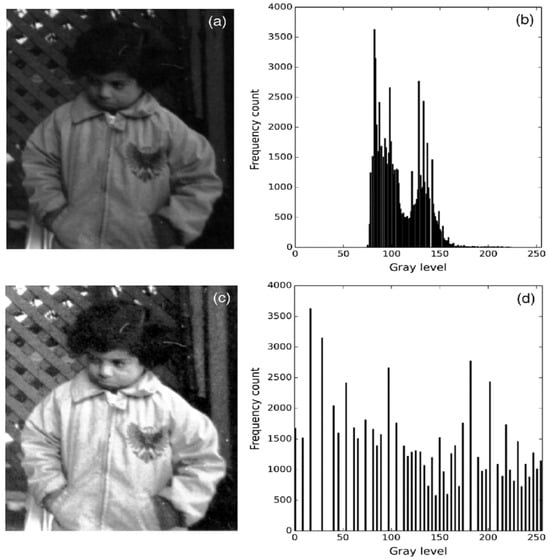

Histogram equalization (HE) techniques such as those employed in [10,11,12] are used to improve the contrast of an image. This is achieved by spreading the pixel intensities out over the full intensity range, as demonstrated pictorially in Figure 2 [13].

Figure 2.

Histogram equalization illustration [13]. (b) The histogram of (a), which shows a highly condensed histogram. (d) The histogram of (c), which shows the equalized version of the histogram in (b). (Reprinted from [13]).

The other popular traditional learning technique for light enhancement, Retinex theory, takes inspiration from the biology of the human eye and was first introduced in [3]. The human eye is able to detect object color under changing illumination conditions, and Retinex theory aims to imitate this. Retinex theory decomposes an image into two parts, illumination and reflectance. The reflectance mapping represents the colors of objects in the image, while illumination represents the intensity of light in the image. To enhance the image, the reflectance is enhanced while ensuring that illumination is adjusted such that the image is bright enough to perceive objects in the image (not too dim, not too bright). Some enhancers that have employed Retinex theory in recent years have been discussed in [14,15,16].

The pitfall of these traditional methods is their limited ability to enhance images with complex lighting conditions, such as back-lit images, front-lit images and any images in which the lighting is not uniform. Traditional enhancers treat an image as though the poor lighting is shared equally by all of its pixels and thus often apply global transformations. Figure 3 illustrates this major issue. The input image is back-lit: the sky and clouds in the background are clearly visible and thus require little, if any, enhancement, while the cathedral is poorly lit and requires enhancement. HE enhances the cathedral walls and keeps the sky reasonably close to the original, but in doing so fails to enhance the darker regions, marked in red. Retinex enhances these darker regions, but in doing so over-enhances the already well-lit parts of the image; contrast is lost in these parts, making it harder to tell where objects begin and end. Adaptive variants of traditional enhancers exist that consider local differences in an image [17]. These variants, such as Contrast-Limited Adaptive Histogram Equalization (CLAHE), a variant of HE, are better at capturing local contrast and preserving edges, but at the cost of longer runtimes. Although these variants improve overall performance, traditional enhancers still fall behind deep learners: they are not very robust (they adapt poorly to a wide range of lighting conditions), they preserve detail poorly, and they rely on basic noise-reduction techniques, which often amplify noise in the final image along with the desired signal.
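The local, clip-limited idea behind CLAHE can be sketched as follows. This is a simplified illustration rather than the full algorithm: each tile of an 8-bit greyscale image is equalized independently after clipping its histogram, whereas real CLAHE implementations also interpolate between neighboring tile mappings to avoid visible tile borders. The function names, the 4 × 4 tile grid and the clip limit of 2.0 (relative to the mean bin count) are illustrative assumptions.

```python
import numpy as np


def clipped_equalize(tile, clip_limit, levels=256):
    """Equalize one uint8 tile after clipping its histogram.

    Bin counts above clip_limit times the mean bin count are cut off and the
    excess is spread evenly over all bins, which limits how steep the
    intensity mapping (and hence contrast and noise amplification) can get.
    """
    hist = np.bincount(tile.ravel(), minlength=levels).astype(float)
    limit = clip_limit * tile.size / levels
    excess = np.clip(hist - limit, 0.0, None).sum()
    hist = np.minimum(hist, limit) + excess / levels
    cdf = np.cumsum(hist) / hist.sum()
    lut = np.round((levels - 1) * cdf).astype(np.uint8)
    return lut[tile]


def tiled_clahe(image, tiles=4, clip_limit=2.0):
    """Simplified CLAHE: equalize each tile of a tiles x tiles grid independently.

    Unlike full CLAHE, no interpolation between neighboring tile mappings is done.
    """
    out = np.empty_like(image)
    r_edges = np.linspace(0, image.shape[0], tiles + 1).astype(int)
    c_edges = np.linspace(0, image.shape[1], tiles + 1).astype(int)
    for i in range(tiles):
        for j in range(tiles):
            block = (slice(r_edges[i], r_edges[i + 1]), slice(c_edges[j], c_edges[j + 1]))
            out[block] = clipped_equalize(image[block], clip_limit)
    return out
```

Because each tile gets its own mapping, a dark region is stretched based on its own histogram rather than the histogram of the whole image. Lowering `clip_limit` restrains that stretching; with a very large limit, each tile receives ordinary histogram equalization.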

Figure 3.

Pitfalls of traditional learning enhancers [15]. The input image requires enhancement, specifically of the building, while preserving (with minimal enhancement) the contrast of the clouds. The histogram-equalized image slightly enhances parts of the building while preserving the contrast in the clouds, seen in the black square, but fails to enhance the darker parts of the image highlighted in the red square. The Retinex-enhanced image is over-enhanced, and as a result, contrast in the clouds (black square) is lost even though the darker parts of the building are now visible. The Retinex-enhanced image also inadvertently amplifies noise. (Reprinted from [15]).

2.2. Supervised Learning-Based Enhancers

Supervised learning in LE requires labeled, paired data: images of the same scene under both low-light and optimal lighting conditions. For this reason, supervised learning-based models often suffer from a lack of large, diverse datasets, which leads to the use of synthetic data. Synthetic data fail to capture the natural variations in lighting within a scene (in a real scene, some objects may appear dark while others appear over-illuminated). Despite the demanding requirement for diverse, paired datasets of the same scene, supervised enhancers remain the dominant choice because they continue to outperform other enhancers on benchmark tests.

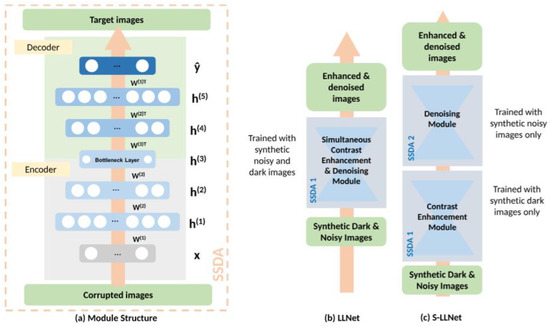

One of the first supervised LE models employed deep autoencoders to adaptively enhance and denoise images. LLNet [18] enhances contrast such that improvements are made relative to local neighbors. This helps prevent the model from enhancing already bright regions, a challenge that plagues many enhancers. The network is also trained to recognize stable image features even in the presence of noise, which equips the model with denoising capabilities. LLNet takes its inspiration from Sparse Stacked Denoising Autoencoders (SSDAs) [19] and their denoising capabilities. The SSDA is derived from research performed by [19] (illustrated in Figure 4a), which showed that stacking denoising autoencoders (DAs) allows a model to find a better region of parameter space during back-propagation. Let $y_i$ be the uncorrupted desired image and $x_i$ the corrupted input version of $y_i$, where $i \in \mathbb{Z}^{+}$; a DA is then defined as follows:

$$h(x_i) = \sigma(W x_i + b) \tag{1}$$

$$\hat{y}(x_i) = \sigma'(W' h(x_i) + b') \tag{2}$$

where $\sigma(\cdot)$ and $\sigma'(\cdot)$ are element-wise sigmoid activation functions, $h(x_i)$ is the hidden layer, and $y_i$ is approximated by $\hat{y}(x_i)$. The weights and biases are collected in $\theta = \{W, b, W', b'\}$.

Figure 4.

LLNet architecture. In (a), x and x̂ are the corrupted and reconstructed images, respectively, and h(i) are the hidden layers [18]. (b) The LLNet with simultaneous enhancement and denoising. (c) The LLNet with sequential enhancement followed by denoising. (Reprinted with permission from [18] 2017, Elsevier, Amsterdam, The Netherlands).
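Equations (1) and (2) can be sketched as a single, untrained denoising autoencoder layer in NumPy. This is an illustration under stated assumptions, not LLNet's implementation: the class name, the 256-value input (a flattened 16 × 16 patch), the 64 hidden units and the weight initialization are all hypothetical, and training details used for SSDAs, such as sparsity and weight-decay penalties, are omitted.

```python
import numpy as np


def sigmoid(z):
    """Element-wise logistic sigmoid."""
    return 1.0 / (1.0 + np.exp(-z))


class DenoisingAutoencoder:
    """One DA layer: h = sigmoid(W x + b), y_hat = sigmoid(W' h + b')."""

    def __init__(self, n_in: int, n_hidden: int, seed: int = 0):
        rng = np.random.default_rng(seed)
        self.W = rng.normal(0.0, 0.01, (n_hidden, n_in))
        self.b = np.zeros(n_hidden)
        self.W_prime = rng.normal(0.0, 0.01, (n_in, n_hidden))
        self.b_prime = np.zeros(n_in)

    def encode(self, x):
        return sigmoid(self.W @ x + self.b)  # Eq. (1)

    def decode(self, h):
        return sigmoid(self.W_prime @ h + self.b_prime)  # Eq. (2)

    def reconstruction_loss(self, x_corrupted, y_clean):
        """Mean squared error between the clean target and the reconstruction."""
        y_hat = self.decode(self.encode(x_corrupted))
        return float(np.mean((y_clean - y_hat) ** 2))
```

Training would minimize `reconstruction_loss` with the dark, noisy patch as `x_corrupted` and the clean patch as `y_clean`; in an SSDA, several such layers are trained and stacked, each consuming the previous layer's hidden representation.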

The authors developed two models: vanilla LLNet, which enhances and denoises simultaneously, and staged LLNet (S-LLNet), which first enhances and then denoises, as illustrated in Figure 4b and 4c, respectively. Comparisons of the two models show that vanilla LLNet outperforms staged LLNet on numerous metrics, which supports the idea that simultaneous enhancement and denoising yields more desirable results than sequential enhancement and denoising. This is a key observation because most low-light visual data are heavily corrupted by noise, and enhancers have the unintended tendency to amplify that noise. This observation is also supported by the coupled enhancers discussed later in this paper.

Table 1 compares the performance of LLNet with several non-deep learning techniques on synthetic and real dark images. Table 2 compares LLNet with the same traditional techniques on dark and noisy synthetic data. In both cases, vanilla LLNet and S-LLNet outperform the traditional LE techniques (with LLNet outperforming S-LLNet), while histogram equalization performs the worst. The models are evaluated using the Peak Signal-to-Noise Ratio (PSNR) and the Structural Similarity Index Measure (SSIM). PSNR is given by (3) and (4), where in (3) $n$ is the number of bits per pixel (generally eight) and MSE is the mean square error between the $M \times N$ reference image $I$ and the processed image $K$:

$$\mathrm{PSNR} = 10 \log_{10}\left(\frac{(2^{n} - 1)^{2}}{\mathrm{MSE}}\right) \tag{3}$$

$$\mathrm{MSE} = \frac{1}{MN}\sum_{i=1}^{M}\sum_{j=1}^{N}\big(I(i,j) - K(i,j)\big)^{2} \tag{4}$$

The SSIM, first formulated by [20], is computed locally around each pixel as in (5) and is used to explore the structural information in an image. Structures, as defined by [20], are “those attributes that represent the structure of objects in the scene, independent of the average luminance and contrast” [20].

$$\mathrm{SSIM}(x, y) = [l(x,y)]^{\alpha}\,[c(x,y)]^{\beta}\,[s(x,y)]^{\gamma} \tag{5}$$

In (5), x and y are corresponding windows from the reference and processed images, respectively. $l(x,y)$ is the luminance component, $c(x,y)$ the contrast component and $s(x,y)$ the structure component. These components are weighted by the exponents $\alpha$, $\beta$ and $\gamma$, respectively.
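Equations (3)–(5) can be sketched in NumPy as follows, assuming 8-bit images. For brevity, `global_ssim` evaluates (5) over a single window covering the whole image, with α = β = γ = 1 and the common choice C3 = C2/2 (which merges the contrast and structure terms), and uses the constants K1 = 0.01 and K2 = 0.03 from the usual SSIM formulation. Standard implementations instead average SSIM over local, typically Gaussian-weighted, windows, so their values will differ from this sketch.

```python
import numpy as np


def psnr(reference, processed, bits=8):
    """Eqs. (3)-(4): PSNR in dB; infinite when the images are identical."""
    mse = np.mean((reference.astype(float) - processed.astype(float)) ** 2)
    if mse == 0:
        return float("inf")
    peak = 2 ** bits - 1
    return float(10 * np.log10(peak ** 2 / mse))


def global_ssim(x, y, bits=8):
    """Eq. (5) over one whole-image window (alpha = beta = gamma = 1, C3 = C2 / 2)."""
    x = x.astype(float)
    y = y.astype(float)
    peak = 2 ** bits - 1
    c1 = (0.01 * peak) ** 2
    c2 = (0.03 * peak) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov = np.mean((x - mu_x) * (y - mu_y))
    luminance = (2 * mu_x * mu_y + c1) / (mu_x ** 2 + mu_y ** 2 + c1)
    contrast_structure = (2 * cov + c2) / (var_x + var_y + c2)
    return float(luminance * contrast_structure)
```

An error of one intensity level at every pixel of an 8-bit image gives an MSE of 1 and therefore a PSNR of about 48.13 dB, which shows how quickly PSNR saturates for near-identical images.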

Table 1.

Both LLNet and S-LLNet are compared with non-deep learning enhancement techniques. Dark is the synthetically darkened input data. HE is histogram equalization. CLAHE is contrast-limited adaptive histogram equalization. GA is gamma adjustment. HE + BM3D is histogram equalization combined with block-matching and 3D filtering (BM3D) denoising. The models were tested with 90 synthetic and 6 natural images. The best-performing model is highlighted in bold, and the number in parentheses is the number of winning instances across the entire dataset; thus, LLNet has the most wins, and HE the fewest [18]. (↑) indicates higher values are desirable. (Reprinted from [18].)

Table 2.

“Bird” denotes the original, optimally lit and noiseless image. “Bird-D” is the darkened version of the original image, while “Bird-D + GNx” is the darkened and noisy image with Gaussian noise of σ = x. The same convention applies to the remaining images [18]. The best-performing model is highlighted in bold in the “average” row. (↑) indicates higher values are desirable. (Reprinted from [18].)

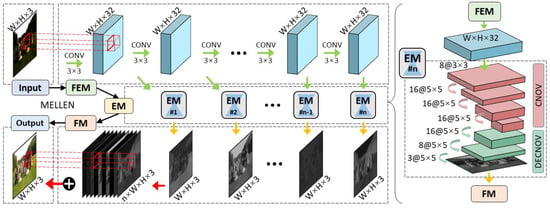

Lv et al. [21] proposed a multi-branch low-light enhancement network (MBLLEN) that extracts features at different levels and applies enhancement via multiple subnets. The network comprises a feature extraction module (FEM), which extracts image features and feeds its outputs to the enhancement module (EM); the EM enhances the images, and its outputs are concatenated in the fusion module (FM) via multi-branch fusion, as illustrated in Figure 5.

Figure 5.

MBLLEN Model pipeline [21]. (Reprinted from [21].)

For training and testing, the model used a synthesized version of the VOC dataset [22] to which Poisson noise was added. The model also used the e-Lab Video Data Set (e-VDS) [23] to train and test its low-light video enhancement (LLVE) variant. Both datasets were altered with random gamma adjustment to synthesize low-light data. Because its training data are synthetic, the model is poorly suited to real-world scenarios, as seen in its poor performance on extremely low-light videos, where the processed videos flicker [6]. The model also did not employ color-correcting error metrics, which caused color inconsistencies in the processed videos. Another limitation is its 3 s runtime, which makes it unsuitable for real-world applications. Table 3a–c show the self-reported results from different evaluations of MBLLEN. Table 3a,b compare MBLLEN on dark images and on dark and noisy images, respectively. Table 3c shows the video enhancement version of the model (MBLLVEN), which uses 3D convolutions instead of 2D convolutions. VIF [24] is the Visual Information Fidelity, used to determine whether image quality has improved after processing; it is formulated in (6)–(8). In (6), the numerator is the mutual information between the reference image ($C$) and the distorted image ($F$) given the subband statistics ($s$), summed over the subbands $j$; the denominator is the corresponding information for the reference image as perceived by the viewer ($E$).

$$\mathrm{VIF} = \frac{\sum_{j} I\left(C^{N,j}; F^{N,j} \mid s^{N,j}\right)}{\sum_{j} I\left(C^{N,j}; E^{N,j} \mid s^{N,j}\right)} \tag{6}$$

$$I\left(C^{N}; E^{N} \mid s^{N}\right) = \frac{1}{2}\sum_{i=1}^{N}\log_{2}\left(1 + \frac{s_i^{2}\,\sigma_U^{2}}{\sigma_N^{2}}\right) \tag{7}$$

$$I\left(C^{N}; F^{N} \mid s^{N}\right) = \frac{1}{2}\sum_{i=1}^{N}\log_{2}\left(1 + \frac{g_i^{2}\,s_i^{2}\,\sigma_U^{2}}{\sigma_V^{2} + \sigma_N^{2}}\right) \tag{8}$$

In (7) and (8):

- $C$: reference image;

- $F$: distorted image;

- $g_i$: gain;

- $\sigma_U^{2}$: variance in reference subband coefficients;

- $s_i^{2}$: variance in the wavelet coefficients for spatial location $i$;

- $\sigma_N^{2}$: variance in visual noise;

- $\sigma_V^{2}$: variance in additive distortion noise.
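The data synthesis described above for MBLLEN (random gamma darkening plus Poisson noise) can be sketched as follows. This is a minimal sketch rather than MBLLEN's actual procedure: the gamma range, the `peak_photons` scale that sets the noise strength and the function name are assumptions, and images are taken to be floats in [0, 1].

```python
import numpy as np


def synthesize_low_light(image, rng, gamma_range=(2.0, 3.5), peak_photons=200.0):
    """Darken an image in [0, 1] with a random gamma and add Poisson (shot) noise.

    Returns the synthetic low-light image and the gamma that was drawn.
    """
    gamma = rng.uniform(*gamma_range)
    dark = np.clip(image, 0.0, 1.0) ** gamma  # global gamma darkening
    # Shot noise: photon counts are Poisson-distributed around the signal.
    noisy = rng.poisson(dark * peak_photons) / peak_photons
    return np.clip(noisy, 0.0, 1.0), gamma
```

Each returned dark image is paired with its original to form a supervised training pair. Because the darkening applies one global curve to the whole image, such pairs lack the spatially varying lighting of real scenes, which is the limitation noted above.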

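The Lightness Order Error (LOE) [25] measures the distortion of lightness in enhanced images and is defined in (9). RD(x) is the relative order difference of lightness between the original image P and its enhanced version P′ for pixel x, defined in (10). The number of pixels is m, and L(x) and L′(x) are the lightness components of pixel x before and after enhancement, respectively. U(p, q) is the unit step function, which returns 1 if p ≥ q and 0 otherwise, and ⊕ is the exclusive-or operator.

$$\mathrm{LOE} = \frac{1}{m}\sum_{x=1}^{m} RD(x) \tag{9}$$

$$RD(x) = \sum_{y=1}^{m} U\big(L(x), L(y)\big) \oplus U\big(L'(x), L'(y)\big) \tag{10}$$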
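Equations (9) and (10) translate directly into NumPy broadcasting, as in the sketch below. It assumes RGB float images and takes lightness as the per-pixel maximum over the color channels. Because (10) compares every pair of pixels, the images are subsampled first; the subsampling size is an assumption that affects the absolute LOE value.

```python
import numpy as np


def lightness_order_error(original, enhanced, size=50):
    """LOE between an RGB image and its enhanced version (lower is better).

    Images are subsampled to roughly size x size pixels because RD(x)
    compares every pair of pixels, which costs O(m^2) memory and time.
    """
    light = original.max(axis=2)
    light_enh = enhanced.max(axis=2)
    step_r = max(1, light.shape[0] // size)
    step_c = max(1, light.shape[1] // size)
    l = light[::step_r, ::step_c].ravel()
    l_enh = light_enh[::step_r, ::step_c].ravel()
    # U(p, q) = 1 if p >= q, evaluated for every pixel pair at once.
    order = l[:, None] >= l[None, :]
    order_enh = l_enh[:, None] >= l_enh[None, :]
    rd = np.logical_xor(order, order_enh).sum(axis=1)  # RD(x), Eq. (10)
    return float(rd.mean())  # Eq. (9)
```

Any strictly increasing tone curve, such as a gamma brightening, leaves the lightness order untouched and scores an LOE of zero, whereas an enhancer that swaps the relative brightness of regions is penalized.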

TMQI [26], the Tone-Mapped Image Quality Index, combines a multi-scale structural fidelity measure $S$ and a statistical naturalness measure $N$ to assess the quality of tone-mapped images. In (11), the TMQI is denoted $Q$, $a \in [0, 1]$ adjusts the relative importance of the two components, and the exponents $\alpha$ and $\beta$ determine their sensitivities.

$$Q = a\,S^{\alpha} + (1 - a)\,N^{\beta} \tag{11}$$

Table 3.

(a) Quantitative comparison of MBLLEN with other LLIE networks on dark images only. (b) Quantitative comparison of MBLLEN with other LLIE networks on dark and noisy images. (c) Quantitative comparison of MBLLEN and MBLLVEN with other enhancers on low-light video enhancement. The best-performing model is highlighted in bold. (↑) indicates higher values are desirable; (↓) indicates lower values are desirable. (Reprinted from [21].)


(a)

| Method | PSNR (↑) | SSIM (↑) | VIF (↑) | LOE (↓) | TMQI (↑) |
|---|---|---|---|---|---|
| Input | 12.80 | 0.43 | 0.38 | 606.85 | 0.79 |
| SRIE [27] | 15.84 | 0.59 | 0.43 | 788.53 | 0.82 |
| BPDHE [28] | 15.01 | 0.59 | 0.39 | 607.43 | 0.81 |
| LIME [28] | 15.16 | 0.60 | 0.44 | 1215.58 | 0.82 |
| MF [29] | 18.48 | 0.67 | 0.45 | 882.24 | 0.84 |
| Dong [30] | 17.80 | 0.64 | 0.37 | 1398.35 | 0.82 |
| NPE [31] | 17.65 | 0.68 | 0.43 | 1051.15 | 0.84 |
| DHECI [32] | 18.18 | 0.68 | 0.43 | 606.98 | 0.87 |
| WAHE [33] | 17.64 | 0.67 | 0.48 | 648.29 | 0.84 |
| Ying [5] | 19.66 | 0.73 | 0.47 | 892.56 | 0.86 |
| BIMEF [25] | 19.80 | 0.74 | 0.48 | 675.15 | 0.85 |
| MBLLEN | 26.56 | 0.89 | 0.55 | 478.02 | 0.91 |

(b)

| Method | PSNR (↑) | SSIM (↑) | VIF (↑) | LOE (↓) | TMQI (↑) |
|---|---|---|---|---|---|
| WAHE | 17.91 | 0.62 | 0.40 | 771.34 | 0.83 |
| MF | 19.37 | 0.67 | 0.39 | 896.67 | 0.84 |
| DHECI | 18.03 | 0.67 | 0.36 | 687.60 | 0.86 |
| Ying | 18.61 | 0.70 | 0.40 | 928.13 | 0.86 |
| BIMEF | 20.27 | 0.73 | 0.41 | 725.72 | 0.85 |
| MBLLEN | 25.97 | 0.87 | 0.49 | 573.14 | 0.90 |

(c)

| Metric | LIME | Ying | BIMEF | MBLLEN | MBLLVEN |
|---|---|---|---|---|---|
| PSNR (↑) | 14.26 | 22.36 | 19.80 | 19.71 | 24.98 |
| SSIM (↑) | 0.59 | 0.78 | 0.76 | 0.88 | 0.83 |

KinD and KinD++ [34] take inspiration from Retinex theory [35] and decompose an input image into two components: the illumination map, used for light adjustment, and the reflectance, used for degradation removal. The KinD architecture consists of three subnetworks: the Layer Decomposition Network, the Reflectance Restoration Network and the Illumination Adjustment Network.

Layer Decomposition Net: This network decomposes the image into its components, the reflectance and illumination maps. Because no ground truth exists for these maps, the network instead relies on loss functions and on paired images captured under different lighting configurations. To enforce reflectance similarity, the model uses (12), where $R_l$ and $R_h$ are the reflectance maps of a low-light and normal-light image pair. In (12), $\mathcal{L}$ denotes a loss; the reflectance similarity (rs) loss in the layer decomposition (LD) is therefore written $\mathcal{L}^{LD}_{rs}$. Similar notation is used in (13) to (18). In (12) to (18), I denotes the image, R the reflectance map and L the illumination map, with subscripts l and h distinguishing the low-light and normal-light images. $\lVert \cdot \rVert_1$ denotes the ℓ1 norm.

$$\mathcal{L}^{LD}_{rs} = \lVert R_l - R_h \rVert_1 \tag{12}$$

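To ensure that the illumination maps ($L_l$, $L_h$) of the paired images are piecewise smooth, (13) is used. In (13), ∇ denotes the image gradient, and ε is a small positive constant that prevents division by zero.

$$\mathcal{L}^{LD}_{is} = \left\lVert \frac{\nabla L_l}{\max\left(\lvert \nabla I_l \rvert, \varepsilon\right)} \right\rVert_1 + \left\lVert \frac{\nabla L_h}{\max\left(\lvert \nabla I_h \rvert, \varepsilon\right)} \right\rVert_1 \tag{13}$$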

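Mutual consistency is enforced by (14), which ensures that strong edges in the two illumination maps are aligned while weak ones are suppressed. Here, $M = \lvert \nabla L_l \rvert + \lvert \nabla L_h \rvert$, and c is a constant that controls the shape of the penalty.

$$\mathcal{L}^{LD}_{mc} = \left\lVert M \cdot \exp(-c \cdot M) \right\rVert_1 \tag{14}$$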

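To reconstruct the original image, the illumination and reflectance layers are recombined (· denotes element-wise multiplication). Reconstruction consistency is enforced by (15), and the total loss is defined by (16), where the λ terms are weighting coefficients.

$$\mathcal{L}^{LD}_{rec} = \lVert I_l - R_l \cdot L_l \rVert_1 + \lVert I_h - R_h \cdot L_h \rVert_1 \tag{15}$$

$$\mathcal{L}^{LD} = \mathcal{L}^{LD}_{rec} + \lambda_{rs}\,\mathcal{L}^{LD}_{rs} + \lambda_{is}\,\mathcal{L}^{LD}_{is} + \lambda_{mc}\,\mathcal{L}^{LD}_{mc} \tag{16}$$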
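The layer decomposition losses (12)–(16) can be sketched for greyscale images as follows. Several choices here are assumptions rather than KinD's implementation: forward differences approximate ∇, the ℓ1 norm is replaced by the mean absolute value, and the values of c, ε and the λ weights are placeholders, not the authors' settings.

```python
import numpy as np


def gradients(img):
    """Forward-difference gradients along rows and columns (zero at the far edge)."""
    gy = np.zeros_like(img)
    gx = np.zeros_like(img)
    gy[:-1, :] = img[1:, :] - img[:-1, :]
    gx[:, :-1] = img[:, 1:] - img[:, :-1]
    return gy, gx


def l1(a):
    """Mean absolute value, used here as a size-normalized l1 norm."""
    return float(np.abs(a).mean())


def decomposition_losses(I_l, I_h, R_l, R_h, L_l, L_h, c=10.0, eps=0.01,
                         lam_rs=0.01, lam_is=0.1, lam_mc=0.1):
    """Eqs. (12)-(16) for 2D greyscale arrays; c, eps and lambdas are placeholders."""
    rs = l1(R_l - R_h)  # Eq. (12): reflectance similarity
    smooth = 0.0
    for illum, img in ((L_l, I_l), (L_h, I_h)):  # Eq. (13): illumination smoothness
        for g_illum, g_img in zip(gradients(illum), gradients(img)):
            smooth += l1(g_illum / np.maximum(np.abs(g_img), eps))
    mc = 0.0
    for g_low, g_high in zip(gradients(L_l), gradients(L_h)):  # Eq. (14)
        M = np.abs(g_low) + np.abs(g_high)
        mc += l1(M * np.exp(-c * M))
    rec = l1(I_l - R_l * L_l) + l1(I_h - R_h * L_h)  # Eq. (15)
    total = rec + lam_rs * rs + lam_is * smooth + lam_mc * mc  # Eq. (16)
    return {"rs": rs, "is": smooth, "mc": mc, "rec": rec, "total": total}
```

For a perfect decomposition (identical reflectance in both images, constant illumination and exact reconstruction), every term is zero; any mismatch in reflectance, noisy illumination or reconstruction error raises the total.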

Reflectance Restoration Net: Brighter images are usually less degraded than darker ones. The reflectance restoration net exploits this observation by using the reflectance maps of the brighter images as references. To restore the degraded reflectance, the module uses (17), where $\hat{R}$ denotes the restored reflectance and $R_h$ the reference reflectance from the brighter image.

$$\mathcal{L}^{RR} = \lVert \hat{R} - R_h \rVert_2^{2} - \mathrm{SSIM}(\hat{R}, R_h) + \lVert \nabla \hat{R} - \nabla R_h \rVert_2^{2} \tag{17}$$

Illumination Adjustment Net: The illumination adjustment net uses paired illumination maps and a scaling factor (α) to adjust illumination while preserving edges and the naturalness of the image. Similarity between the target illumination ($L_t$) and the adjusted illumination ($\hat{L}$) is enforced by the loss function in (18).

$$\mathcal{L}^{IA} = \lVert \hat{L} - L_t \rVert_2^{2} + \big\lVert \lvert \nabla \hat{L} \rvert - \lvert \nabla L_t \rvert \big\rVert_2^{2} \tag{18}$$

2.3. Zero-Shot Learning-Based Enhancers

Supervised learning requires paired, labeled data of the same scene (dark and light), which are often hard to acquire and therefore frequently lead to the use of synthetic datasets in which darkness is created artificially. ExCNet [36] and Zero-DCE [8] pioneered a new paradigm in light enhancement: zero-reference learning. Zero-reference learning takes its name from the fact that the training data are neither paired nor labeled; instead, the model relies on carefully selected non-reference loss functions and parameters to achieve the desired results. These low-light image enhancement (LLIE) methods use light enhancement curves, which map each dark input pixel value to an enhanced output pixel value.

ExCNet (Exposure Correction Network) [36], one of the earliest zero-shot light enhancement models, uses S-curve estimation to enhance back-lit images. Its greatest advantage over models of its time is its zero-shot learning strategy, which requires no prior training data: the network is optimized directly on the back-lit image being restored. The authors designed a block-based loss function that maximizes the visibility of global features while maintaining local relative differences between features. To reduce flicker and computational cost when processing videos, the model uses the parameters estimated for previous frames to guide the enhancement of the next frame.
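Why reusing previous-frame parameters reduces flicker can be shown with a toy sketch. It assumes a hypothetical per-frame parameter, a gain that scales each frame's brightest pixel to a target level, and blends it with the previous frame's gain using an exponential moving average. This is not ExCNet's update rule (ExCNet works with S-curve parameters); all names and values are illustrative.

```python
import numpy as np


def estimate_gain(frame, target=0.9):
    """Hypothetical per-frame parameter: scale the brightest pixel to `target`."""
    return target / max(float(frame.max()), 1e-6)


def enhance_video(frames, momentum=0.0):
    """Apply a gain to each frame; momentum > 0 reuses the previous frame's gain."""
    enhanced, gain = [], None
    for frame in frames:
        g = estimate_gain(frame)
        # Blend the fresh estimate with the previous frame's parameter (EMA).
        gain = g if gain is None else momentum * gain + (1.0 - momentum) * g
        enhanced.append(np.clip(frame * gain, 0.0, 1.0))
    return enhanced


def flicker(frames):
    """Mean absolute change in average brightness between consecutive frames."""
    means = np.array([f.mean() for f in frames])
    return float(np.abs(np.diff(means)).mean())
```

On a static but noisy scene, the per-frame estimate jitters from frame to frame and the output brightness flickers; with `momentum=0.9`, the gain changes slowly and the measured flicker drops. The trade-off is a slower response to genuine lighting changes.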

The S-curve is parameterized by $\phi_s$ and $\phi_h$, the shadow and highlight parameters, which adjust underexposed and overexposed regions, respectively. The curve is given in (19), where x and y are the input and output luminance values, respectively, and $f_\Delta(x) \in [0, 0.5]$ is the incremental function.

$$y = f(x) = x + \phi_s\, f_\Delta(x) - \phi_h\, f_\Delta(1 - x) \tag{19}$$
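Equation (19) can be sketched as follows. The exact form of the incremental function $f_\Delta$ is not given above, so the form κ1·x·exp(−κ2·x^κ3) with κ1 = 5, κ2 = 14 and κ3 = 1.6 is an assumption, chosen because it stays within [0, 0.5] on [0, 1]; the output is also clipped to [0, 1].

```python
import numpy as np


def f_delta(x, k1=5.0, k2=14.0, k3=1.6):
    """Assumed incremental function k1 * x * exp(-k2 * x**k3); stays in [0, 0.5] on [0, 1]."""
    x = np.asarray(x, dtype=float)
    return k1 * x * np.exp(-k2 * np.power(x, k3))


def s_curve(x, phi_s, phi_h):
    """Eq. (19): lift shadows by phi_s, pull down highlights by phi_h, clip to [0, 1]."""
    x = np.asarray(x, dtype=float)
    return np.clip(x + phi_s * f_delta(x) - phi_h * f_delta(1.0 - x), 0.0, 1.0)
```

Raising `phi_s` brightens dark (shadow) luminance values, and raising `phi_h` darkens bright (highlight) values, which is how a single curve can correct both the underexposed foreground and the overexposed background of a back-lit image.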

ExCNet aims to find the optimal parameter pair $[\phi_s, \phi_h]$ that restores the back-lit image I. The model proceeds in two stages: adjustment of the luminance channel $I_l$ using intermediate S-curves, and derivation of the loss in (20), where $E_i$ is the unary data term for block i, $E_{ij}$ is the pairwise term for neighboring blocks i and j, and λ is a predefined constant.

$$\mathcal{L} = \sum_{i} E_i + \lambda \sum_{(i,j)} E_{ij} \tag{20}$$

The model’s main drawbacks are its 23.28 s runtime, which makes it a very poor candidate for real-time applications, and its narrow domain: it only works for back-lit images.

Zero DCE [37] and its successor Zero DCE++ [38] are popular zero-shot low-light enhancement models that estimate the best LE curve for the low-light enhancement (LLE) of each input image. These curves are designed to achieve three goals:

- To avoid information loss, each enhanced pixel should be normalized within a value range of [0–1].

- For the sake of contrast preservation amongst neighboring pixels, the curves must be unvarying.

- The curves must be basic, and differentiable during back-propagation.

- These goals are achieved through (21) [37]

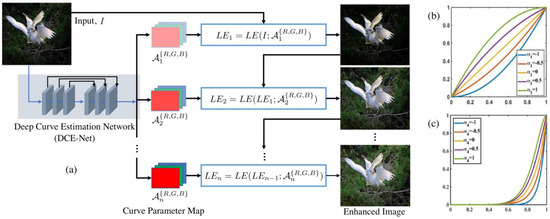

where x represents pixel coordinates, the input is denoted by , whose enhanced output is and [37]. As seen in Figure 6, the model repeatedly enhances an image, and the enhancement occurs on each color channel (RGB) rather than on the entire image. Figure 6 also shows the Deep Curve Estimation Network (DCE-Net), which is responsible for estimating the enhancement curves, which are then applied to each color channel. The models can enhance low-light images but fail at transferring these results onto LLVE and some real-world low-light images. Both models fail at retaining semantic information and may often lead to unintended results such as over enhancement.

Figure 6.

Zero DCE pipeline. (Reprinted from [37]). (a) DCE-Net, which estimates the enhancement curves used to iteratively enhance the low-light input image. (b,c) Light enhancement curves, with the input and output pixel values on the x-axis and y-axis, respectively. In (b), the number of iterations is 1 for all curves, with the adjustment parameter α varying over [−1, 1] inclusive in steps of 0.5. In (c), the number of iterations for all curves is 4, with the adjustment parameter varying as in (b).
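The curve in (21), its iterative application and one kind of non-reference loss can be sketched as follows. The exposure-control loss shown is modeled on the one described for Zero-DCE, which compares the mean intensity of local patches with a well-exposedness level; the level of 0.6, the 16 × 16 patch size and all function names are assumptions here. In Zero-DCE, the α values are predicted by DCE-Net as per-pixel, per-channel maps; in this sketch they are supplied by hand.

```python
import numpy as np


def le_curve(x, alpha):
    """Eq. (21): LE(x) = x + alpha * x * (1 - x); maps [0, 1] into [0, 1] for |alpha| <= 1."""
    return x + alpha * x * (1.0 - x)


def iterative_enhance(image, alphas):
    """Apply the curve once per entry of `alphas` (each a scalar or a per-pixel map)."""
    out = image
    for alpha in alphas:
        out = le_curve(out, alpha)
    return out


def exposure_loss(image, well_exposed=0.6, patch=16):
    """Mean distance between local patch brightness and a target exposure level."""
    gray = image.mean(axis=2)  # average over the RGB channels
    h = (gray.shape[0] // patch) * patch
    w = (gray.shape[1] // patch) * patch
    means = gray[:h, :w].reshape(h // patch, patch, w // patch, patch).mean(axis=(1, 3))
    return float(np.abs(means - well_exposed).mean())
```

Because the derivative of (21), 1 + α(1 − 2x), is non-negative for α in [−1, 1] and x in [0, 1], each iteration is monotonic and stays in range; repeating it lets a simple quadratic curve bend far enough to brighten very dark inputs, which a loss such as `exposure_loss` rewards without needing a reference image.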

To tackle the issue of semantics, [39] proposed Semantic-Guided Zero-Shot Learning (SGZ) for low-light image/video enhancement. The model introduces an enhancement factor extraction network (EFEN) to estimate light deficiency at the pixel level, as illustrated in Figure 7. It also introduces a recurrent image enhancer for progressive enhancement of the image and, to preserve semantics, an unsupervised semantic segmentation network. The model introduced the semantic loss function in (22) to maintain semantics, where H and W are the height and width of the image, respectively, $p_{i,j}$ is the segmentation network’s estimated class probability for pixel (i, j), and β and γ are the focal coefficients.

$$\mathcal{L}_{sem} = -\frac{1}{HW}\sum_{i=1}^{H}\sum_{j=1}^{W} \beta\,(1 - p_{i,j})^{\gamma}\log(p_{i,j}) \tag{22}$$

Although the EFEN is a critical contribution that future research should use to guide other models towards better pixel-wise light enhancement, the model still faces challenges. It performs poorly on video enhancement, producing flickering videos, because it relies too heavily on image-based datasets and lacks a network that exploits the relations between neighboring frames.

Figure 7.

EFEN. Input images (left), pixel-wise light deficiency (right). (Reprinted from [39]).
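The semantic loss in (22) is a focal loss and can be sketched as follows. The defaults β = 1 and γ = 2 are assumptions, `probs` is assumed to hold, for each pixel, the probability that the segmentation network assigns to its predicted class, and a small ε guards against log(0).

```python
import numpy as np


def semantic_focal_loss(probs, beta=1.0, gamma=2.0, eps=1e-8):
    """Eq. (22): focal loss averaged over an H x W map of per-pixel class probabilities."""
    p = np.clip(probs, eps, 1.0)
    h, w = p.shape
    return float(-(beta * (1.0 - p) ** gamma * np.log(p)).sum() / (h * w))
```

Confidently segmented pixels (p close to 1) contribute almost nothing because of the (1 − p)^γ factor, so the loss concentrates on pixels where the enhanced image leaves the segmentation network uncertain.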

Table 4 [40] compares various zero-shot enhancers on several popular test datasets using the Naturalness Image Quality Evaluator (NIQE), a no-reference image quality assessment first formulated by [41].

Table 4.

Comparison of various zero-shot enhancers on various datasets using the NIQE (↓) metric. The best-performing model is highlighted in bold. (↓) indicates that lower values are desirable. (Reprinted from [40].)

2.4. Traditional Learning-Based Enhancers, a Deeper Dive

Traditional techniques dominated before the advent of deep learning models and relied on classical digital image processing and mathematical approaches, such as histogram equalization, gamma correction and Retinex theory.

Histogram equalization aims to improve image quality by redistributing pixel intensities so that they are more uniformly distributed [42]. The method works well for global enhancement or suppression of light but does not preserve the contrast relationships between local pixels. Given a greyscale image I = i(x,y) with L discrete intensity levels, where i(x,y) ∈ [0, L − 1] is the intensity of the pixel at coordinates (x,y), histogram equalization first computes the probability distribution function, i.e., the normalized histogram of pixel intensities. Next, the cumulative distribution function (cdf) is obtained, and a transformation function is defined from this cdf, as expressed mathematically in (23).

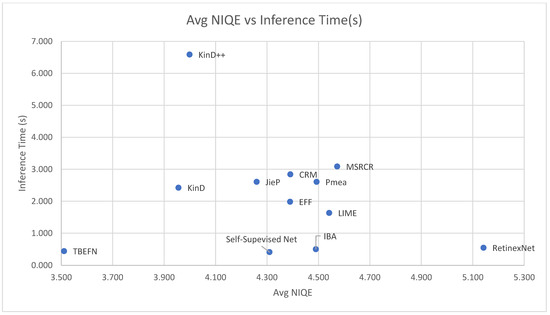
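A minimal sketch of global histogram equalization for an 8-bit greyscale image is shown below. It uses the common mapping T(k) = round((L − 1)·cdf(k)); the exact normalization in (23) may differ, since some variants first subtract the minimum of the cdf.

```python
import numpy as np

def equalize_hist(img, levels=256):
    """Global histogram equalization of an integer greyscale image."""
    img = np.asarray(img)
    hist = np.bincount(img.ravel(), minlength=levels)   # count of each intensity
    pdf = hist / img.size                                # probability of each intensity
    cdf = np.cumsum(pdf)                                 # cumulative distribution function
    mapping = np.round((levels - 1) * cdf).astype(img.dtype)
    return mapping[img]                                  # apply T(k) to every pixel

# A dark image that only uses intensities 0..63 is stretched over the full range.
dark = np.arange(64, dtype=np.uint8).reshape(8, 8)
equalized = equalize_hist(dark)
```

Because the mapping depends only on the global histogram, every pixel with the same intensity receives the same output, which is why local contrast relationships are not taken into account.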

Retinex theory [3] decomposes an image into two components: a reflectance map and an illumination map. The reflectance component remains the same regardless of lighting conditions and is thus considered an intrinsic property, while the illumination map depends on the light intensity in the original image. The objective, therefore, is to enhance the image by enhancing the illumination map and recombining it with the reflectance map. The relationship between the image and its two components is given in (24), where · denotes element-wise multiplication. Many deep learning methods [31,32,33,34,35,43,44] still borrow ideas from traditional techniques such as Retinex theory. Table 5, from [45], provides a quantitative comparison of such deep learning models and of deep learning models that do not adopt techniques from Retinex theory. Figure 8 compares the average NIQE score and inference time of some of the enhancement networks in Table 5; TBEFN achieves both a low inference time and a low NIQE score, which is the desired combination.
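The decomposition in (24) suggests a simple enhancement recipe: estimate the illumination L, recover the reflectance R = I / L, brighten only L, and recombine. The sketch below assumes, for illustration, that the illumination is the per-pixel maximum over the RGB channels and that it is brightened with a gamma curve; practical Retinex methods estimate and refine L far more carefully.

```python
import numpy as np

def retinex_enhance(img, gamma=0.5, eps=1e-6):
    """Enhance a float RGB image in [0, 1] by brightening only its illumination.

    Illustrative assumptions: illumination = per-pixel max over RGB channels;
    enhancement = gamma curve (gamma < 1) applied to the illumination.
    """
    img = np.asarray(img, dtype=float)
    illum = img.max(axis=2, keepdims=True) + eps    # L: estimated illumination map
    reflect = img / illum                           # R = I / L, from I = R . L
    illum_enh = illum ** gamma                      # brighten L only
    return np.clip(reflect * illum_enh, 0.0, 1.0)   # recombine R . L'

rng = np.random.default_rng(0)
dark = rng.uniform(0.01, 0.2, (8, 8, 3))
bright = retinex_enhance(dark)
```

Since every channel of a pixel is scaled by the same factor, the reflectance (and hence the color ratios) is preserved while brightness increases.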

Table 5.

Quantitative comparison of deep learning LE models which employ some form of Retinex theory. Comparison performed using the NIQE (↓) metric. The best-performing model is highlighted in bold. (↓) indicates that lower values are desirable. (Reprinted from [45].)

Figure 8.

Average NIQE vs. inference time of models discussed in Table 5.

2.5. Unsupervised Learning-Based Enhancers

Unsupervised LE models do not require paired data; instead, low-light and optimally lit images of different scenes can be “paired”. Such models have an edge over supervised models because less time is spent collecting data (unpaired data are easier to acquire), while they still benefit from pseudo-paired data; reference data of this kind are what allow supervised models to outperform zero-shot learners.

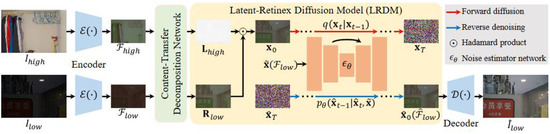

LightenDiffusion [56] is an unsupervised light enhancer based on diffusion that also incorporates Retinex theory. To improve the visual quality of the enhanced image, [56] performs Retinex decomposition in the latent space instead of the image space. This allows high-level features, such as structural, context and content features, to be captured. Because raw pixels are sensitive to noise, working in the latent space also avoids amplifying this noise, which, as previously stated, is a problem most enhancers have yet to solve. Figure 9 illustrates the model. The unpaired low-light and normal-light images are first fed into an encoder, which converts them into their latent representations. The encoder outputs are then fed to a content-transfer decomposition network, which decomposes each latent representation into illumination and reflectance maps. Next, the reflectance map of the poorly lit image and the illumination map of the optimally lit image are fed into the diffusion model, which performs the forward diffusion process. Reverse denoising is then performed to produce the restored features, which are sent to the decoder to restore the low-light image to the target image.

Figure 9.

LightenDiffusion pipeline. (Reprinted from [56].) The encoders transform the input images (both low-light and normal-light) into their latent representations. These representations are then sent to the Content-Transfer Decomposition Network to generate content-rich reflectance maps and content-free illumination maps. The reflectance map of the low-light image and the illumination map of the normal-light image are multiplied element-wise (the Hadamard product), and the result is sent to the forward diffusion and reverse denoising networks.
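The Hadamard product in Figure 9 and the subsequent forward diffusion can be sketched with the standard closed-form forward process z_t = sqrt(ᾱ_t)·z_0 + sqrt(1 − ᾱ_t)·ε. The linear noise schedule, the array shapes and the random latent maps below are illustrative assumptions, not details of LightenDiffusion.

```python
import numpy as np

def linear_alpha_bar(T=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative product of (1 - beta_t) for a linear noise schedule (assumed)."""
    betas = np.linspace(beta_start, beta_end, T)
    return np.cumprod(1.0 - betas)

def forward_diffuse(z0, t, alpha_bar, rng):
    """Sample z_t ~ q(z_t | z_0) in closed form; also return the noise used."""
    noise = rng.standard_normal(z0.shape)
    a = alpha_bar[t]
    return np.sqrt(a) * z0 + np.sqrt(1.0 - a) * noise, noise

rng = np.random.default_rng(0)
reflect_low = rng.uniform(0.0, 1.0, (4, 16, 16))    # placeholder reflectance of the low-light latent
illum_normal = rng.uniform(0.5, 1.0, (1, 16, 16))   # placeholder illumination of the normal-light latent
z0 = reflect_low * illum_normal                     # Hadamard product, broadcast over channels
alpha_bar = linear_alpha_bar()
z_t, eps = forward_diffuse(z0, t=500, alpha_bar=alpha_bar, rng=rng)
```

During training, a denoising network would learn to predict `eps` from `z_t`; reversing this process step by step yields the restored features passed to the decoder.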

Table 6 presents a quantitative comparison of unsupervised models, with results taken from [56]. The models are evaluated on three previously introduced metrics (PSNR, SSIM and NIQE) and on the Perception Index (PI) [57], which has not yet been discussed in this paper. The PI combines two no-reference metrics: the NIQE and the Perceptual Quality Score [58].

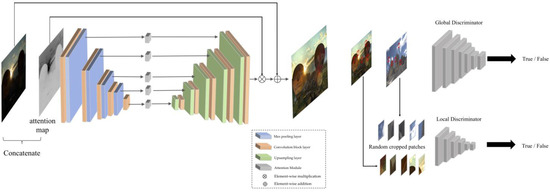
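For reference, PSNR and the PI can be computed as below. The PI definition assumed here is the one commonly used since the PIRM 2018 super-resolution challenge, PI = ½((10 − Ma) + NIQE), where Ma is the no-reference score of Ma et al. (taken to be the Perceptual Quality Score [58]); both no-reference scores are treated as precomputed inputs.

```python
import math
import numpy as np

def psnr(pred, target, max_val=1.0):
    """Peak signal-to-noise ratio in dB; higher is better."""
    mse = np.mean((np.asarray(pred, float) - np.asarray(target, float)) ** 2)
    return math.inf if mse == 0 else 10.0 * math.log10(max_val ** 2 / mse)

def perception_index(ma_score, niqe_score):
    """PI = 0.5 * ((10 - Ma) + NIQE); lower is better. Inputs are precomputed scores."""
    return 0.5 * ((10.0 - ma_score) + niqe_score)
```

A high Ma score (better perceived quality) and a low NIQE both push the PI down, which is why lower PI values are desirable in Table 6.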

Another popular unsupervised model is EnlightenGAN [59], a generative adversarial network (GAN) in which the generator enhances images while the discriminator aims to distinguish between target and enhanced images. EnlightenGAN also adopts global-local discriminators to enhance not only global features but also local areas, such as a small bright spot in a scene, as illustrated in Figure 10. Although the model is advantageous in that it does not require paired data, adapts to varying light conditions and enhances both local and global features, a major hindrance of GANs, as noted by [60], is their training instability and need for careful tuning. GANs are nonetheless particularly useful because they prioritize perceptual quality, generally producing results optimized for human perception.

Figure 10.

Architecture of EnlightenGAN. (Reprinted from [59].)
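The local discriminator in Figure 10 judges small image patches rather than whole images. A minimal sketch of how such patches could be sampled from an enhanced image (and, in the same way, from a real normal-light image) is shown below; the number of patches and the patch size are hypothetical defaults.

```python
import numpy as np

def random_patches(img, n_patches=5, size=32, rng=None):
    """Crop n_patches random size x size patches from an (H, W, C) image.

    Returns the stacked patches and their top-left (y, x) coordinates.
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w = img.shape[:2]
    ys = rng.integers(0, h - size + 1, n_patches)
    xs = rng.integers(0, w - size + 1, n_patches)
    patches = np.stack([img[y:y + size, x:x + size] for y, x in zip(ys, xs)])
    return patches, list(zip(ys, xs))

# The global discriminator sees the full image; the local one sees these crops.
rng = np.random.default_rng(0)
enhanced = rng.uniform(0.0, 1.0, (64, 64, 3))
local_batch, coords = random_patches(enhanced, rng=rng)
```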

Table 6.

Quantitative comparison of unsupervised models as reported by [56], where higher PSNR and SSIM values and lower NIQE and PI values are desirable. Performance was measured on the LOL [35], LSRW [61], DICM [62], NPE [31] and VV [63] datasets. The best scores are in bold. (Reprinted from [56].)

| Model | LOL PSNR (↑) | LOL SSIM (↑) | LSRW PSNR (↑) | LSRW SSIM (↑) | DICM NIQE (↓) | DICM PI (↓) | NPE NIQE (↓) | NPE PI (↓) | VV NIQE (↓) | VV PI (↓) |
|---|---|---|---|---|---|---|---|---|---|---|
| EnlightenGAN [59] | 17.606 | 0.653 | 17.11 | 0.463 | 3.832 | 3.256 | 3.775 | 2.953 | 3.689 | 2.749 |
| RUAS [64] | 16.405 | 0.503 | 14.27 | 0.461 | 7.306 | 5.7 | 7.198 | 5.651 | 4.987 | 4.329 |
| SCI [65] | 14.784 | 0.525 | 15.24 | 0.419 | 4.519 | 3.7 | 4.124 | 3.534 | 5.312 | 3.648 |
| GDP [66] | 15.896 | 0.542 | 12.89 | 0.362 | 4.358 | 3.552 | 4.032 | 3.097 | 4.683 | 3.431 |
| PairLIE [67] | 19.514 | 0.731 | 17.6 | 0.501 | 4.282 | 3.469 | 4.661 | 3.543 | 3.373 | 2.734 |
| NeRCo [68] | 19.738 | 0.74 | 17.84 | 0.535 | 4.107 | 3.345 | 3.902 | 3.037 | 3.765 | 3.094 |
| LightenDiffusion [56] | **20.453** | **0.803** | **18.56** | **0.539** | **3.724** | **3.144** | **3.618** | **2.879** | **2.941** | **2.558** |

2.6. Semi-Supervised Learning-Based Enhancers

Semi-supervised enhancers make use of both paired and unpaired data, although the data distribution is often skewed: they typically use a small amount of paired data and a large amount of unpaired data.

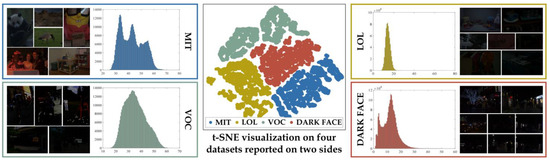

Ref. [69] proposed an enhancer that tackles the inability of previous enhancers to generalize to the real world: such models perform well on scenes similar to those seen during training but struggle on new scenes with never-before-seen lighting conditions and noise patterns. Ref. [69] identified that the training data used for enhancers were not diverse enough in lighting conditions and noise patterns; previous enhancers were trained on different scenes, but the lighting conditions varied too little from one pair to the next. This lack of diversity is shown in Figure 11 [69], where the t-SNE distributions of popular training datasets are visualized. The visualization shows how the common practice of training a model on only one of these datasets limits its ability to generalize to other data and, potentially, to the real world.

Bilevel Fast Scene Adaptation [69] learns generalizable representations from various diverse datasets and uses this knowledge to train a scene-independent encoder. To further adapt to new scenes while reducing computational requirements, the encoder is frozen when new data are encountered, and only the decoder is fine-tuned.
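The adaptation step described above (freeze the encoder, fine-tune only the decoder) can be illustrated with a toy example. This is not the method of [69]: the linear encoder and decoder, the MSE loss and the synthetic "new scene" are all simplifying assumptions chosen so the gradient can be written by hand.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical linear encoder/decoder. W_enc stands in for the scene-independent
# encoder learned beforehand; it is frozen and never updated below.
W_enc = rng.standard_normal((8, 16))
W_dec = 0.1 * rng.standard_normal((16, 8))

def reconstruction_mse(x, y, W_enc, W_dec):
    """Mean squared error of the encoder-decoder output against the target."""
    return float(np.mean((x @ W_enc @ W_dec - y) ** 2))

def finetune_decoder(x, y, W_enc, W_dec, lr=5e-3, steps=300):
    """Plain gradient descent on the decoder weights only."""
    h = x @ W_enc                      # frozen encoder features, computed once
    W = W_dec.copy()
    for _ in range(steps):
        err = h @ W - y
        # Gradient of 0.5 * (sum of squared errors) / (number of samples) w.r.t. W.
        W -= lr * (h.T @ err) / len(x)
    return W

# A new scene, modeled here as a different (hypothetical) input-to-target mapping.
x_new = rng.standard_normal((64, 8))
y_new = x_new @ rng.standard_normal((8, 8))

W_enc_before = W_enc.copy()
W_dec_new = finetune_decoder(x_new, y_new, W_enc, W_dec)
loss_before = reconstruction_mse(x_new, y_new, W_enc, W_dec)
loss_after = reconstruction_mse(x_new, y_new, W_enc, W_dec_new)
```

Only the decoder's parameters are optimized, so each adaptation step is cheaper than retraining the full network, which is the computational benefit the text attributes to freezing the encoder.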

In the LE domain, semi-supervised learning is the least utilized strategy, so few models are available for comparison. Table 7 compares Bilevel Fast Scene Adaptation (BL) with a popular semi-supervised LE model, the Deep Recursive Band Network (DRBN) [70].

Table 7.

Quantitative comparisons between DRBN and BL as reported by [70]. Best results are in bold. PSNR, SSIM and LPIPS are computed on paired data, while DE, LOE and NIQE are computed on unpaired data. (Reprinted from [70].)

Figure 11.

t-SNE [71] of common training datasets in the LLE domain, highlighting the distribution discrepancy between the datasets. (Reprinted from [69].)

2.7. Mixed Approach

Ref. [72] proposed a mixed algorithm, DiffLight, which leverages the advantages of the techniques already discussed: the local feature extraction capabilities of CNNs, the global dependency modeling of transformers and the ability of diffusion models to generate new data. DiffLight also combines multiple loss functions to balance perceptual quality and accuracy. To assess image quality from the perspective of human perception, it uses the Learned Perceptual Image Patch Similarity (LPIPS), which measures the perceptual similarity between two images. First proposed in [73], LPIPS is a distance between two images, given in (25), so lower values indicate that the produced image is closer to the desired image. Table 8, as reported by [72], compares [72] with some of the models it builds on, using LPIPS, SSIM and PSNR on the LOLv1 dataset.

$$
d(x, y) = \sum_{l} \frac{1}{H_l W_l} \sum_{h,w} \left\| w_l \cdot \left( \hat{\phi}^{l}_{hw}(x) - \hat{\phi}^{l}_{hw}(y) \right) \right\|_2^2 \qquad (25)
$$

where

- $x$ is the reference image, and $y$ is the distorted image.

- $\hat{\phi}^{l}_{hw}(\cdot)$ is the feature map extracted from the $l$-th layer (normalized along the channel dimension) at spatial position $(h, w)$, and $H_l$ and $W_l$ are the height and width of that feature map.

- $w_l$ is the weight of the $l$-th layer.

- $\|\cdot\|_2$ denotes the Euclidean norm.

- $\cdot$ denotes element-wise multiplication.

Table 8.

Quantitative comparison of various light enhancement techniques versus a combined approach, evaluated on the LOLv1 dataset. The best-performing model for each metric is bold. (Reprinted from [72].)
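Given per-layer feature maps, the LPIPS distance reduces to a few array operations. In the sketch below, the feature maps and per-channel weights are assumed to be provided; LPIPS itself obtains the features from a pretrained network (such as AlexNet or VGG) and learns the weights from human perceptual judgments. Random arrays stand in for both here.

```python
import numpy as np

def lpips_distance(feats_x, feats_y, weights, eps=1e-10):
    """LPIPS-style distance from precomputed per-layer features.

    feats_x, feats_y: lists of arrays of shape (C_l, H_l, W_l), one per layer.
    weights: list of arrays of shape (C_l,), the per-layer channel weights w_l.
    """
    total = 0.0
    for fx, fy, w in zip(feats_x, feats_y, weights):
        # Unit-normalize each spatial feature vector along the channel axis.
        fx = fx / (np.linalg.norm(fx, axis=0, keepdims=True) + eps)
        fy = fy / (np.linalg.norm(fy, axis=0, keepdims=True) + eps)
        diff = w[:, None, None] * (fx - fy)             # channel-wise weighting
        total += np.mean(np.sum(diff ** 2, axis=0))     # squared L2 over channels, averaged over H_l * W_l
    return float(total)

rng = np.random.default_rng(0)
shapes = [(8, 16, 16), (16, 8, 8)]                      # two hypothetical layers
feats_ref = [rng.standard_normal(s) for s in shapes]
feats_out = [f + 0.3 * rng.standard_normal(f.shape) for f in feats_ref]
weights = [np.abs(rng.standard_normal(s[0])) for s in shapes]
```

The channel normalization makes the distance insensitive to a uniform rescaling of the features, so it reflects differences in feature patterns rather than raw activation magnitude.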

Another algorithm that adopts a mixed approach to low-light image enhancement is [81], which combines the strengths of wavelet transforms and diffusion-based generative modeling. The wavelet-based framework enhances image illumination and preserves detail, while the diffusion process improves image quality through noise filtering. Using wavelets also makes the model more resource-efficient than vanilla diffusion models.
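One common reason wavelets reduce cost is that a one-level 2D discrete wavelet transform produces a low-frequency subband with a quarter of the original pixels, so a generative model running on that subband processes far less data. The sketch below is a generic orthonormal Haar transform and its inverse; whether [81] uses exactly this transform or this split between subbands is an assumption.

```python
import numpy as np

def haar_dwt2(img):
    """One-level orthonormal 2D Haar transform of an (H, W) array with even H and W."""
    a, b = img[0::2, 0::2], img[0::2, 1::2]
    c, d = img[1::2, 0::2], img[1::2, 1::2]
    ll = (a + b + c + d) / 2     # low-frequency (approximation) subband
    lh = (a - b + c - d) / 2     # detail subbands
    hl = (a + b - c - d) / 2
    hh = (a - b - c + d) / 2
    return ll, (lh, hl, hh)

def haar_idwt2(ll, highs):
    """Exact inverse of haar_dwt2."""
    lh, hl, hh = highs
    h, w = ll.shape
    out = np.empty((2 * h, 2 * w), dtype=ll.dtype)
    out[0::2, 0::2] = (ll + lh + hl + hh) / 2
    out[0::2, 1::2] = (ll - lh + hl - hh) / 2
    out[1::2, 0::2] = (ll + lh - hl - hh) / 2
    out[1::2, 1::2] = (ll - lh - hl + hh) / 2
    return out

img = np.random.default_rng(0).uniform(size=(8, 8))
ll, highs = haar_dwt2(img)
```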

2.8. Loss Functions

2.8.1. Pixel-Level Loss Functions

The Mean Squared Error (MSE) loss and the Mean Absolute Error (MAE) loss both operate at the pixel level. These losses are simple to implement and compare the pixel values of the predicted output with those of the target output; as a result, they are commonly used in supervised enhancers. The MSE strongly penalizes large errors but is sensitive to outliers and, as noted in [82], may result in noise amplification in light enhancement. The MAE is more robust to outliers than the MSE but, in light enhancement, may lead to loss of detail and perceptual quality.
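The different sensitivity to outliers can be seen with two predictions that have the same total absolute error: one with many small errors and one with a single large error. The arrays below are illustrative.

```python
import numpy as np

def mse_loss(pred, target):
    """Mean squared error over all pixels."""
    return float(np.mean((pred - target) ** 2))

def mae_loss(pred, target):
    """Mean absolute error over all pixels."""
    return float(np.mean(np.abs(pred - target)))

target = np.zeros(100)
spread = np.full(100, 0.1)       # many small errors
outlier = np.zeros(100)
outlier[0] = 10.0                # one large error, same total absolute error
```

Both predictions have an MAE of 0.1, but the single outlier yields an MSE of 1.0 versus 0.01, a hundredfold difference caused entirely by squaring.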

2.8.2. Perceptual Loss Functions

The Perceptual Loss preserves high-level features that pixel-level losses are unable to capture. It focuses on ensuring that the image appears natural to the human eye and is thus widely used in the light enhancement domain.

The Naturalness Image Quality Evaluator (NIQE) is another perceptual measure, distinguished by being a no-reference function, which makes it particularly valuable for unsupervised enhancers. Instead of comparing the output against a reference image, NIQE relies on statistical regularities present in natural images.
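The final step of NIQE compares two multivariate Gaussian (MVG) models of natural-scene-statistics features: one fitted to a corpus of pristine natural images and one fitted to the test image. The sketch below implements only that distance, D = sqrt((ν₁ − ν₂)ᵀ((Σ₁ + Σ₂)/2)⁻¹(ν₁ − ν₂)); feature extraction and model fitting are omitted, and the toy means and covariances are placeholders.

```python
import numpy as np

def niqe_distance(mu_pristine, cov_pristine, mu_test, cov_test):
    """Distance between two MVG models, as used in the last step of NIQE."""
    diff = np.asarray(mu_pristine, float) - np.asarray(mu_test, float)
    pooled = (np.asarray(cov_pristine, float) + np.asarray(cov_test, float)) / 2.0
    # The pseudo-inverse guards against near-singular covariances (a common choice).
    return float(np.sqrt(diff @ np.linalg.pinv(pooled) @ diff))
```

A test image whose statistics match the pristine model scores 0; the further its statistics drift from those of natural images, the larger (worse) the score.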

2.8.3. Color Loss Function

The Color Constancy Loss maintains RGB channel ratios to ensure that color constancy is maintained regardless of changing illumination, making the loss function very popular with light enhancement algorithms.
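One widely used formulation, from Zero-DCE [37], follows the Gray-World assumption and penalizes differences between the mean intensities of each pair of RGB channels: L_col = Σ (J^p − J^q)² over (p,q) ∈ {(R,G), (R,B), (G,B)}, where J^p is the mean of channel p. A minimal sketch:

```python
import numpy as np
from itertools import combinations

def color_constancy_loss(img):
    """img: (H, W, 3) array. Sum of squared differences between channel means."""
    means = np.asarray(img, float).mean(axis=(0, 1))   # J^R, J^G, J^B
    # combinations(range(3), 2) gives the pairs (R, G), (R, B), (G, B).
    return float(sum((means[p] - means[q]) ** 2 for p, q in combinations(range(3), 2)))

gray = np.full((4, 4, 3), 0.3)
tinted = np.stack([np.full((4, 4), 0.6), np.full((4, 4), 0.4), np.full((4, 4), 0.4)], axis=2)
```

A neutral (gray) result incurs no penalty, while a red color cast is penalized, which discourages the enhancer from shifting colors as it brightens the image.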

2.8.4. Smoothness Function

Because low-light data contain noise, and light enhancement algorithms often amplify this noise during enhancement, the Total Variation (TV) loss has become a staple of such algorithms. The TV loss enforces spatial smoothness between neighboring pixels, thus reducing noise in the output image.
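A minimal sketch of the anisotropic (L1) form of the TV loss is given below; some enhancers use squared differences instead, so the exact variant is an assumption.

```python
import numpy as np

def tv_loss(img):
    """Anisotropic total variation: mean absolute difference between vertically
    and horizontally neighboring pixels of a 2D (or H x W x C) array."""
    img = np.asarray(img, float)
    dv = np.abs(img[1:, :] - img[:-1, :]).mean()    # vertical neighbors
    dh = np.abs(img[:, 1:] - img[:, :-1]).mean()    # horizontal neighbors
    return float(dv + dh)

flat = np.full((4, 4), 0.5)
checker = (np.indices((4, 4)).sum(axis=0) % 2).astype(float)   # maximal pixel-to-pixel variation
rng = np.random.default_rng(0)
smooth = np.linspace(0.0, 1.0, 64).reshape(8, 8)
noisy = smooth + 0.2 * rng.standard_normal((8, 8))
```

Noise raises the TV value, so minimizing it pushes the enhancer toward outputs without pixel-level fluctuations; weighting it too strongly, however, also smooths away genuine edges.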

3. Artefact Removal Networks

3.1. Deblurring Algorithms

The blurring of an image/video frame can be formulated as in (26) [83], where the blurred frame is produced by applying a blurring function, controlled by a parameter vector, to the desired sharp frame. Deblurring therefore amounts to finding the inverse of (26).

3.1.1. Non-Blind Deblurring

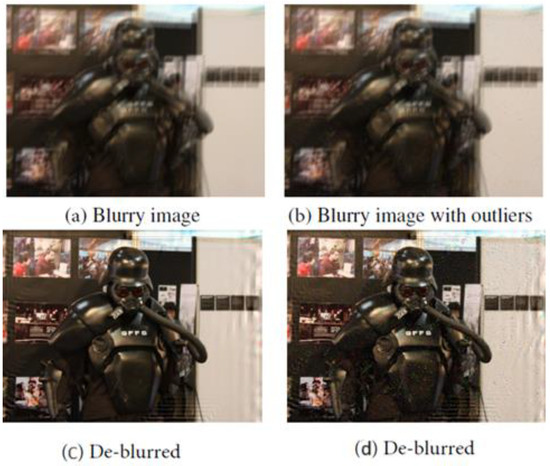

Since many non-blind deblurring methods are sensitive to noise in the blur kernel (filter), [84] introduces two error terms to prevent ringing artefacts in the deblurred image. The first, termed “Kernel Error”, approximates the error in the kernel produced by the deblurring method. The second, termed “Residual Error”, handles noise in blurry images; without it, the deblurred image contains ringing artefacts around noisy locations, as observed in Figure 12. Finally, a denoiser prior is adopted to recover the desired image. The algorithm is modeled by (27), which combines the kernel returned by the deblurring method, the kernel error term and the residual error term, weighted by adjustable parameters, together with an implicit denoiser prior.

Figure 12.

(a–d) Ringing artefact in noisy deblurred image. (Reprinted with permission from [84], 2022, IEEE., 3 Park Avenue, 17th Floor, New York, NY 10016).

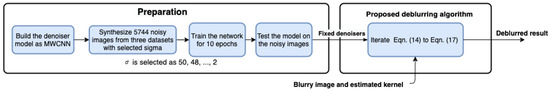

Figure 13 shows the model’s flowchart for training and testing, where the step labeled “iterate Equations (14)–(17)” in the reprinted figure denotes iteratively solving the model formulated in (27). A major drawback is that the Multi-level Wavelet-CNN (MWCNN) is trained for a specific level of Gaussian noise, so handling different noise levels requires a series of MWCNNs. This inability to generalize across noise levels makes the model unsuitable for real-world applications.

Figure 13.

Model’s training and testing flowchart. (Reprinted from [84]).

3.1.2. Blind Deblurring

DeblurGAN [85] leverages conditional generative adversarial networks (cGANs) to recover sharp images from motion-blurred ones. The model uses a CNN generator and a Wasserstein GAN with a gradient penalty. To achieve state-of-the-art sharpness, its loss function is the sum of an adversarial loss and a content loss, where a Perceptual Loss is adopted as the content loss instead of the classical L1 or L2 losses. The model highlighted the benefits of training on both synthetic and real data, as results improved when a combination was used rather than either alone. The model is, however, hindered by its non-real-time inference time of 0.85 s. Furthermore, in a benchmark study [86], DeblurGAN performed poorly compared with other models in real-world tests. Its successor, DeblurGAN-V2 [87], showed great improvement and was amongst the best models in the same study [86], but [88] showed that DeblurGAN-V2 has much slower inference times. The DeblurGAN family is therefore plagued by non-real-time inference and poor performance on real-world data.

Xiang et al. [89] performed a comprehensive survey of blind deblurring methods, focusing on four types of deep learning networks: CNNs, RNNs, GANs and transformers. They found transformer-based methods to perform best, with FFTformer and Uformer being the best-performing models on four image deblurring datasets: GoPro [90], HIDE [91], RealBlur-J [86] and RealBlur-R [86]. However, transformers are still relatively new, and more work is needed to make them less resource-intensive and more efficient. According to [89], CNN- and RNN-based algorithms are the next best methods, but each comes with drawbacks. CNN-based methods struggle to capture long-range dependencies, such as those in videos and dynamic frames, so RNNs are used in such cases. RNNs, in turn, are known to suffer from exploding and vanishing gradients during training, which leads to poor performance at test time. These gradient problems can be mitigated in several ways, such as Gated Recurrent Units (GRUs), gradient clipping or residual connections (as in ResNets).
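Of the remedies listed, gradient clipping is the simplest to show. The sketch below rescales all gradients jointly when their combined L2 norm exceeds a threshold, which bounds the update size when gradients explode; the function name and threshold are illustrative (deep learning frameworks provide built-in equivalents).

```python
import numpy as np

def clip_by_global_norm(grads, max_norm):
    """Rescale a list of gradient arrays so their joint L2 norm is at most max_norm.

    Returns the (possibly rescaled) gradients and the norm before clipping.
    """
    total_norm = float(np.sqrt(sum(np.sum(g ** 2) for g in grads)))
    if total_norm <= max_norm:
        return grads, total_norm
    scale = max_norm / total_norm
    return [g * scale for g in grads], total_norm

grads = [np.array([3.0]), np.array([4.0])]        # joint norm 5
clipped, norm = clip_by_global_norm(grads, max_norm=1.0)
```

Because all gradients are scaled by the same factor, the update direction is preserved; only its magnitude is limited. Clipping addresses exploding gradients but not vanishing ones, which is why gated units or residual connections are still needed.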

3.1.3. Non-Uniform Deblurring (Local Deblur)

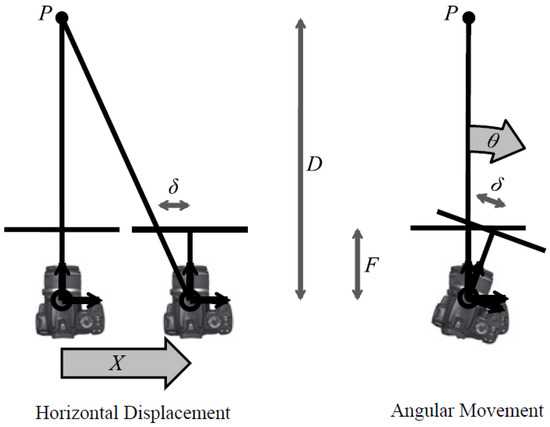

To capture non-uniform blur caused by camera shake, [92] proposed a parametrized geometric model. The work argues that camera shake is, in most cases, the result of the camera’s 3D rotation. The model’s advantages are that it can be integrated into existing deblurring networks with minor modifications and that it employs a single global descriptor. The 3D movement of the camera is modeled by (28), which represents the camera’s horizontal displacement, and (29), which represents the camera’s angular movement; both are illustrated in Figure 14.

Figure 14.

Camera shake. (Reprinted from [93]).
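Under a rotation-only camera model, a pixel with homogeneous coordinates x moves to x′ ∝ K R K⁻¹ x, where K is the intrinsic matrix and R the rotation. This is a standard projective-geometry result, and the parametrization used in (28) and (29) may differ. The sketch, with hypothetical intrinsics, shows that even a 1° rotation displaces the image center by about f·tan(1°) pixels, which helps explain why small hand tremors produce visible, spatially varying blur.

```python
import numpy as np

def intrinsics(f, cx, cy):
    """Pinhole intrinsic matrix with focal length f (pixels) and principal point (cx, cy)."""
    return np.array([[f, 0.0, cx], [0.0, f, cy], [0.0, 0.0, 1.0]])

def rotation(rx, ry, rz):
    """Rotation from angles (radians) about the x, y and z axes, applied as Rz @ Ry @ Rx."""
    cxr, sxr = np.cos(rx), np.sin(rx)
    cyr, syr = np.cos(ry), np.sin(ry)
    czr, szr = np.cos(rz), np.sin(rz)
    Rx = np.array([[1.0, 0.0, 0.0], [0.0, cxr, -sxr], [0.0, sxr, cxr]])
    Ry = np.array([[cyr, 0.0, syr], [0.0, 1.0, 0.0], [-syr, 0.0, cyr]])
    Rz = np.array([[czr, -szr, 0.0], [szr, czr, 0.0], [0.0, 0.0, 1.0]])
    return Rz @ Ry @ Rx

def warp_point(u, v, K, R):
    """Map pixel (u, v) through the rotation-induced homography K R K^-1."""
    p = K @ R @ np.linalg.inv(K) @ np.array([u, v, 1.0])
    return p[0] / p[2], p[1] / p[2]

K = intrinsics(1000.0, 320.0, 240.0)                       # hypothetical camera
u, v = warp_point(320.0, 240.0, K, rotation(0.0, np.radians(1.0), 0.0))
```

With f = 1000 pixels, a 1° rotation about the vertical axis shifts the image center by roughly 17.5 pixels, and the shift differs across the image, which is what makes the resulting blur non-uniform.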

The algorithm is hindered by its long runtime: a single image can take several hours to deblur, making it impractical for real-time applications. The model is also limited in the angular movement it can handle, being able to deblur only rotations of 3° to 5° inclusive.

3.1.4. Uniform Deblurring

Uniform deblurring is often applied when the blur has a single constant source, usually the camera. Since cameras capture images by measuring photon intensities over a period of time, capturing a sharp image requires this period to be short; longer periods, known as long exposures, lead to blurry images [83]. Since a uniform blur is simply the convolution of a (known or unknown) kernel with a sharp image, deconvolution methods, such as FFT-based methods, are used to recover the sharp image. Kruse et al. [94] introduced generalized, efficient FFT-based deconvolution methods that employ CNN-based regularization. The model generalizes shrinkage fields by eliminating redundant parameter sharing and replacing pixel-wise functions with CNNs that operate globally. The corrupted image can be modeled as the convolution of the kernel with the sharp image, as illustrated in (30). Kruse et al. [94] modeled (30) using CNNs in place of shrinkage functions; their formulation involves the inverse FFT (IFFT), a CNN-based learned term, linear filters and a learned scalar function that acts as a noise-specific regularization weight. The algorithm proposed in [94] does not achieve real-time inference: its inference time depends heavily on the size of the image being processed, in some instances reaching 0.75 s.
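The classical FFT-based baseline that such methods build on can be sketched as a Tikhonov-regularized inverse filter, i.e., a Wiener filter with a constant noise-to-signal ratio. In the sketch, the fixed regularization weight `reg` stands in for the learned, noise-specific regularization of [94]; the kernel and image are illustrative.

```python
import numpy as np

def blur_fft(img, kernel):
    """Circular convolution of img with kernel via the FFT (kernel zero-padded to image size)."""
    K = np.fft.fft2(kernel, s=img.shape)
    return np.real(np.fft.ifft2(np.fft.fft2(img) * K))

def wiener_deconv(blurred, kernel, reg=1e-2):
    """Regularized inverse filter: X = conj(K) * B / (|K|^2 + reg)."""
    K = np.fft.fft2(kernel, s=blurred.shape)
    B = np.fft.fft2(blurred)
    X = np.conj(K) * B / (np.abs(K) ** 2 + reg)
    return np.real(np.fft.ifft2(X))

rng = np.random.default_rng(0)
sharp = rng.uniform(0.0, 1.0, (32, 32))
kernel = np.full((1, 5), 1.0 / 5.0)            # hypothetical 5-pixel horizontal motion blur
blurred = blur_fft(sharp, kernel)
restored = wiener_deconv(blurred, kernel, reg=1e-3)
```

Deconvolution in the Fourier domain is a per-frequency division, which is why FFT-based methods are fast; the regularization term prevents division by near-zero kernel frequencies from amplifying noise.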

Table 9a–c summarize the models discussed in this section. A fair comparison of performance requires applying the same blurring kernel to all models, and since not all models handle the same kind of blur (i.e., uniform vs. non-uniform), only algorithms that operate on the same type of blur should be compared with each other. Refs. [88,95] provide comparisons covering additional algorithms.

Table 9.

(a) Deblurring algorithms’ dataset information. (Reprinted from [88,95]). (b) Deblurring algorithms’ summaries and limitations. (Reprinted from [88,95]). (c) Self-reported quantitative comparison amongst artefact removal models. (↑) Indicates higher values are desirable. (Reprinted from [88,95]).

Table 9c presents the self-reported results of the artefact removal models. Ref. [84] reports average results on Levin’s dataset [98]. Two models were investigated in [94]: a greedily trained model and a fine-tuned model initialized from the greedy one; Table 9c presents the fine-tuned model, tested on Levin’s dataset [98]. The results of [85] were obtained on the GoPro dataset [90]. Ref. [87] used the Inception-ResNet-v2 backbone and was tested on GoPro [90], as were [96] and [97].

3.2. Color Grading and Flicker Removal Algorithms

Poor colorization and flickering in videos are related, since video flicker appears as brightness changes between neighboring video frames; both can therefore be tackled with the same algorithms. Traditionally, flickering can be tackled simply by using a non-degraded neighboring frame in the video to reconstruct other frames [99]. As noted in [99], this solution fails in general-purpose cases, such as when a scene change occurs between two neighboring frames.

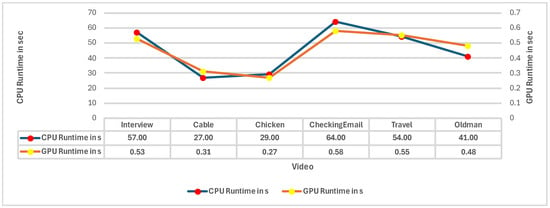

Hence, [99] proposed a multi-frame method for video flicker removal, in which the frames used for reconstruction are first evaluated for temporal coherence. The benefit of this method is that it uses multiple spatiotemporally coherent frames for reconstruction, so scene changes are accounted for. The model uses simple linear iterative clustering (SLIC) to divide a frame into superpixels (large clusters of pixels with similar brightness and color), after which dense correspondence between frames is obtained using SIFT Flow (analogous to optical flow). The model is limited by numerous factors, one of which is its runtime, which makes it non-real-time. Figure 15 compares the model’s runtime on a CPU and on a GPU: the GPU runtime is two orders of magnitude lower, implying that, for such algorithms, a focus on hardware to reduce inference time is just as vital as a focus on the algorithms themselves. Although the model uses multiple spatiotemporally coherent frames to handle objects that appear or disappear between neighboring frames, it still fails to capture spatiotemporal coherence in extremely dynamic frames and is limited to videos with few, slow-moving objects. Finally, the model requires the original non-flickering video as part of its input, so it cannot be applied to real-world blind deflickering.

Figure 15.

Runtimes on CPU and GPU. (Reprinted from [99]).

Color grading can be used for LLE, but it has limiting factors that make it more suited to post-LLE applications than to LLE directly. A major limiting factor is that color transfer requires a reference stage, in which the input image is enhanced using a reference image with the same color scheme as the target image, as exemplified in Figure 16. If the color schemes of the source and reference images do not match, the target will not be obtained (Figure 17).

Figure 16.

Example of good color transfer. (Reprinted from [100]).

Figure 17.

Poor color transfer. (Reprinted from [100]).
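The dependence on the reference image is easy to see in a simple statistics-matching color transfer, a classical baseline that shifts and scales each channel of the source so its mean and standard deviation match those of the reference. The sketch works directly in RGB for brevity (classical variants use a decorrelated color space) and is not the method of [100]; the images are placeholders.

```python
import numpy as np

def transfer_color(source, reference, eps=1e-8):
    """Match per-channel mean and standard deviation of source to reference.

    source, reference: float (H, W, 3) arrays in [0, 1].
    """
    src_mean, src_std = source.mean(axis=(0, 1)), source.std(axis=(0, 1))
    ref_mean, ref_std = reference.mean(axis=(0, 1)), reference.std(axis=(0, 1))
    out = (source - src_mean) / (src_std + eps) * ref_std + ref_mean
    return np.clip(out, 0.0, 1.0)

rng = np.random.default_rng(0)
source = rng.uniform(0.0, 0.3, (32, 32, 3))       # dark, low-contrast image
reference = rng.uniform(0.4, 0.6, (32, 32, 3))    # reference with the desired color scheme
graded = transfer_color(source, reference)
```

The output inherits whatever statistics the reference has, so a reference with an unsuitable color scheme produces an unsuitable result, which is the failure case shown in Figure 17.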

Since the user may not know which reference image to provide, [100] introduced concept transfer, in which a concept is supplied as input alongside the image to be graded. Lv et al. [100] defined concepts as terms such as “romantic”, “earthy” and “luscious”. The model is useful when the user does not know the correct reference image but knows the “concept” they wish to transfer to the enhanced image. The model is not dissimilar to modern image filters, which operate by letting the user pick a name associated with a certain color palette rather than choosing individual colors to transfer to the image.

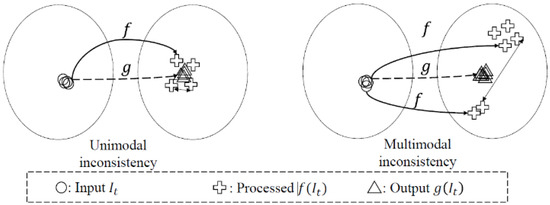

3.3. Deep Video Prior

Lei et al. [101] proposed deep video prior (DVP) to enforce temporal consistency in videos made inconsistent by frame-by-frame processing. Many video processing techniques process videos frame by frame, treating them as a series of disconnected images and ignoring their temporal co-dependency. DVP builds upon Deep Image Prior (DIP) [102], which performs image denoising, image inpainting and super-resolution; DIP takes random noise as input and is trained to reconstruct a sharp image. DVP hypothesizes that flickering in the temporal domain is equivalent to noise. It also has the benefit of requiring only a single training video. An inconsistent video is formed when a temporally consistent input is mapped to an inconsistent output, causing artefacts. This inconsistency can be unimodal or multimodal; in the multimodal case, multiple solutions exist for an object in the processed frames (e.g., an object that should be blue changes to orange and yellow). As illustrated in Figure 18, f is a function that maps the input to the output inconsistently, while g maps the input to the output consistently. DVP [101] proposes a fully convolutional network, g, that mimics the operation of the inconsistent function f while maintaining consistency. During training, the loss function measures the distance between the outputs of g and f, and training is stopped early, before the distance reaches the minimum at which g would overfit to f and reproduce the same artefacts observed in f. The CNN (g) adopts a U-Net and a Perceptual Loss, although DVP is not limited to these choices. DVP is hindered by its non-real-time inference, averaging about 2 s per frame.

Figure 18.

Illustration of unimodal and multimodal inconsistency. (Reprinted from [101].)
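The intuition behind DVP, that a single function g fitted to all of f’s outputs reproduces the common mapping but not the frame-to-frame fluctuations, can be illustrated with a deliberately simplified toy. This is not the DVP implementation: the U-Net is replaced by one affine map shared by all frames, f is a hypothetical brightening with a random per-frame gain, and g is fitted by least squares. In DVP the network has far more capacity, which is why early stopping is needed before it overfits to the flicker.

```python
import numpy as np

rng = np.random.default_rng(0)

# A temporally consistent input video: 10 frames of 16 x 16 greyscale values.
frames = [rng.uniform(0.1, 0.5, (16, 16)) for _ in range(10)]

# "f": hypothetical per-frame processing whose brightening gain changes randomly
# from frame to frame, producing flicker.
gains = rng.uniform(1.5, 2.5, len(frames))
f_out = [g * x for g, x in zip(gains, frames)]

# "g": ONE affine map (a * x + b) shared by all frames, fitted to f's outputs
# by least squares over the whole video.
X = np.concatenate([x.ravel() for x in frames])
Y = np.concatenate([y.ravel() for y in f_out])
A = np.stack([X, np.ones_like(X)], axis=1)
(a, b), *_ = np.linalg.lstsq(A, Y, rcond=None)
g_out = [a * x + b for x in frames]

def brightness_ratios(outputs, inputs):
    """Per-frame ratio of output mean to input mean; its spread measures flicker."""
    return np.array([o.mean() / i.mean() for o, i in zip(outputs, inputs)])
```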

4. Artefact Removal Coupled with Light Enhancement

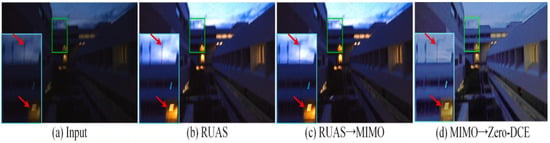

As highlighted by [103], “Images captured under low-light conditions usually co-exist with low light and blur degradation”. This is a consequence of how a camera captures data: under poor lighting conditions, the exposure time is lengthened to let more light into the camera, and longer exposures make blur more likely. This implies that the artefact removal techniques discussed in the previous section are limited to images and videos captured in daytime lighting conditions. Thus, to design a computer vision network robust enough to function in a variety of lighting conditions, joint low-light enhancement and deblurring is required. To support this statement, coupled and uncoupled artefact removal techniques are compared with each other to evaluate how they perform under various conditions.

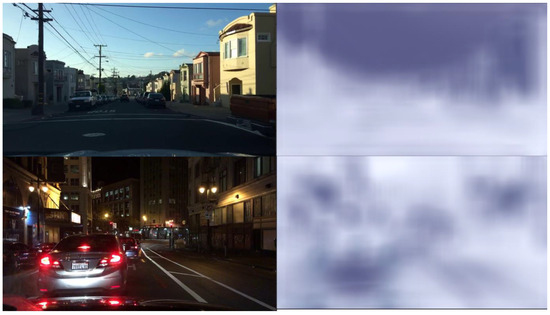

Ref. [103] proposed “joint low-light enhancement and deblurring with structural prior guidance”. The paper noted two common cascaded approaches to low-light enhancement and deblurring: light enhancement followed by deblurring, and deblurring followed by enhancement. The first approach, enhancing the image before deblurring, often overexposes saturated areas of the image; once overexposed, the details of these areas are hidden, so the deblurring network cannot deblur them and in some cases makes them worse. This is seen in Figure 19, where the input is a low-light blurred image and (b) is the image enhanced using RUAS [64]. The output of the cascaded model (c), in which RUAS [64] is cascaded with MIMO [104], is comparable to the input, with some areas incurring more blur.

Figure 19.

Effects of light enhancement cascaded with deblurring. The differences between the input blurry images and the output images of each model (b–d) can be seen in the blue and green squares. In (d) the poles as indicated by the red arrow are clearer as compared to (b,c). (Reprinted with permission from [103], 2024, Elsevier., Radarweg 29, 1043 NX Amsterdam, The Netherlands).

The alternative approach (deblurring followed by enhancement) often employs deblurring algorithms that are not trained on low-light data and therefore poorly interpret motion information and features in low-light conditions. Figure 19d shows the deblurring algorithm [40] cascaded with [8]. In both Figure 19c,d, the only major improvement over the input is the light enhancement, implying that designing a robust deblurring algorithm for nighttime operation is a non-trivial problem.

The solution proposed by [103] employs structural priors and a transformer backbone for capturing global features of the image. To compensate for the transformer’s weakness at capturing local features, the images are also processed in smaller, variable-sized blocks. This helps the model handle the ambiguity of blurs, more specifically non-uniform blurs. Through the use of statistical methods, priors and parallel attention, the model attempts to recover a sharp (deblurred) and enhanced image. The proposed network is akin to a U-Net, incorporating an encoder–decoder architecture and a feature reconstruction subnet. Shallow image features are extracted by the encoder, while feature refinement is handled by the decoder. Because [105] showed that the Pyramid Pooling Module (PPM) can suppress noise in low-light enhancement, multiple layers of the proposed model are equipped with PPMs. Deep image features are captured in the feature reconstruction subnet and then sent to the SNR transformer [106]. Non-uniform blurs are handled by the multi-patch perception (MPP) pyramid block, while a proposed prior-guided reconstruction (PR) block adaptively reconstructs the enhanced sharp image.
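A Pyramid Pooling Module averages features over grids of several sizes, upsamples the pooled maps back to the input resolution and concatenates them with the input, giving every position a summary of its wider context. The sketch below follows the common PSPNet-style configuration with bin sizes (1, 2, 3, 6); it omits the 1×1 convolutions and bilinear upsampling of a full PPM, and whether [103] uses these exact bin sizes is an assumption.

```python
import numpy as np

def adaptive_avg_pool(x, bins):
    """Average-pool a (C, H, W) array into a bins x bins grid (H, W divisible by bins)."""
    c, h, w = x.shape
    return x.reshape(c, bins, h // bins, bins, w // bins).mean(axis=(2, 4))

def pyramid_pooling(x, bin_sizes=(1, 2, 3, 6)):
    """Concatenate x with its pooled-and-upsampled versions along the channel axis."""
    c, h, w = x.shape
    outs = [x]
    for b in bin_sizes:
        pooled = adaptive_avg_pool(x, b)
        # Nearest-neighbor upsampling back to (H, W).
        up = np.repeat(np.repeat(pooled, h // b, axis=1), w // b, axis=2)
        outs.append(up)
    return np.concatenate(outs, axis=0)

x = np.random.default_rng(0).standard_normal((4, 12, 12))   # hypothetical feature map
y = pyramid_pooling(x)
```

Averaging over large regions cancels much of the pixel-level noise, which offers one plausible reading of why PPMs help suppress noise in low-light features.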

The model algorithm is summarized in Algorithm 1.

| Algorithm 1 Joint low-light enhancement and deblurring algorithm. (Reprinted from [103].) |
|---|
| Input: low-light blurry image I_in |
| Output: normal-light sharp image I_out |
| 1: Extract shallow image features e with the encoder |
| 2: Enhance image features e using the feature reconstruction subnet, where the SNR Transformer strengthens global features i and the MPP block generates structural features ih, ie |
| 3: Fuse image features i and structural features ih, ie through the PR block |
| 4: Decoder refines and restores the normal-light sharp image I_out |
| 5: return I_out |

Table 10, compiled from [103], quantitatively compares the model proposed in [103] with cascaded deblurring and light enhancement models, as well as with uncascaded light enhancement and deblurring models retrained on the LOL-Blur dataset [76]. The results obtained by the authors confirm that simply cascading light enhancement and deblurring is not advisable. The uncascaded, retrained light enhancement and deblurring networks perform better than the cascaded models, but the best-performing models are algorithms that perform joint light enhancement and deblurring. The best-performing model is highlighted in red and the next best in blue. Although the model in [103] is able to enhance light, it is unable to fully recover the sharp images. The model is also large, with 40.1 M parameters and a runtime of 10 s [103], restricting it to offline use.

Table 10.

Quantitative comparison of deblurring models. (↑) indicates that higher values are desirable, while (↓) indicates that lower values are desirable. The best model is highlighted in red and the next best in blue. (Reprinted from [103].)

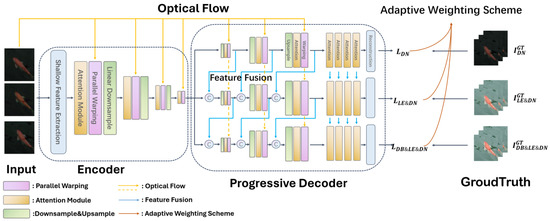
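Arrows such as (↑) mark metrics for which higher values are better. Peak signal-to-noise ratio (PSNR), commonly reported in light enhancement and deblurring comparisons, is one such metric. The following minimal sketch computes it for flattened images with intensities in [0, 1]; it illustrates the metric in general and is not code from [103].

```python
import math


def psnr(pred, target, max_val=1.0):
    """PSNR in dB between two equally sized, flattened images."""
    if len(pred) != len(target) or not pred:
        raise ValueError("inputs must be non-empty and the same length")
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_val ** 2 / mse)


print(psnr([0.0, 0.0], [0.1, 0.1]))  # about 20 dB, since the MSE is 0.01
```

Because PSNR is a logarithm of the inverse mean squared error, halving the error raises the score by roughly 3 dB.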

Video Joint Task (VJT) [109] tackles the combined challenge of deblurring, low-light enhancement and denoising with a three-tiered decoder structure that progressively fits different levels of the desired results. A feature fusion module is designed to facilitate the transition from shallow to deep features. The input to the model, shown in Figure 20, is the degraded video sequence, which is fed into the encoder. In the encoder, shallow features are extracted, frames are aligned through warping, and an attention module focuses on the key parts of the video (noisy areas, moving objects). The spatial dimensions of the video features are then reduced through downsampling, and these downsampled features form the input to the progressive decoder. Feature fusion in the decoder ensures that features from different layers are present in the final output. An adaptive weighting scheme, building on research by [110], assigns different weights to the various outputs depending on the task (restoration, enhancement, etc.). It addresses the challenges of multi-task loss by dynamically balancing the contribution of each loss. The three dynamically balanced losses are the denoising loss, the combined low-light enhancement and denoising loss, and a loss for integrated deblurring, enhancement and artefact removal. The model is trained on the authors' own dataset, the Multi-scenes Lowlight-Blur-Noise (MLBN) dataset [109], which contains indoor, nighttime outdoor and daytime outdoor scenes. The dataset mixes real and synthetic data: motion blur, low illumination and noise are added synthetically.
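The exact weighting rule of [110] is not reproduced here. As an illustration of how three losses can be balanced dynamically, the sketch below swaps in one well-known scheme, Dynamic Weight Averaging (DWA, Liu et al.), which gives more weight to tasks whose loss is falling more slowly. The choice of DWA, the temperature of 2 and the loss values are assumptions for illustration, not VJT's method.

```python
import math


def dwa_weights(history, temperature=2.0):
    """Dynamic Weight Averaging: one weight per task, summing to the task count.

    history: per-epoch average losses, oldest first, e.g. [[l1, l2, l3], ...].
    Weights for the next epoch depend on the last two entries.
    """
    num_tasks = len(history[-1])
    if len(history) < 2:
        return [1.0] * num_tasks  # not enough history yet: equal weights
    prev, prev2 = history[-1], history[-2]
    # Ratio close to 1 means the task's loss is barely improving.
    rates = [p / q for p, q in zip(prev, prev2)]
    exps = [math.exp(r / temperature) for r in rates]
    total = sum(exps)
    return [num_tasks * x / total for x in exps]


def weighted_total(losses, weights):
    """Combine the per-task losses into one scalar training objective."""
    return sum(w * l for w, l in zip(weights, losses))


# Hypothetical losses for: denoising, LLE + denoising, deblur + enhance + artefacts.
history = [[1.0, 1.0, 1.0], [0.5, 0.9, 1.0]]
weights = dwa_weights(history)
print([round(w, 3) for w in weights])
print(weighted_total(history[-1], weights))
```

Here the denoising loss halved between epochs, so its weight drops below 1, while the stalled third task receives the largest weight.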

Figure 20.

VJT illustration with encoder and multi-tier decoder. (Reprinted from [109]).

The researchers quantitatively compared the proposed method against other deblurring methods. The results in Table 11 [109] show that, just as in image processing, a joint approach (light enhancement and deblurring) is beneficial in video processing for robust restoration systems. VJT (a joint approach) is compared with purely deblurring models, and the best-performing model is indicated in bold.

Table 11.

Quantitative comparison between the VJT model and deblurring models. The best model is indicated in bold text. (Reprinted from [109].)

5. Action Recognition and Object Detection

5.1. Action Recognition

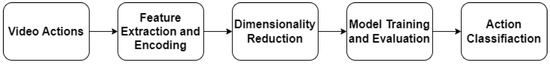

Action recognition (AR) is explained simply by [115] as an attempt “to understand human behavior and assign a label to each action”, and Figure 21 maps out the standard pipeline for AR.

Figure 21.

AR pipeline.

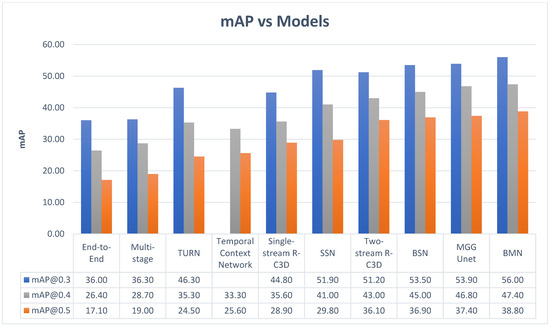

Pareek and Thakkar [116] conducted a comprehensive review of current and future trends in action recognition. According to [116], the major challenges facing traditional methods are feature extraction and training time. Most traditional AR methods rely on supervised learning with hand-crafted features, so both feature extraction and data labeling are manual. For video actions this takes a large amount of time, and the time spent grows with the dataset. The solution to this problem may lie in zero-shot learning, which, unlike other learning methods, can classify new classes without prior examples of those classes, relying purely on semantics. Although deep learning (DL) methods remove the need for manual feature extraction and generally perform better than traditional methods, ref. [116] noted that some DL methods, such as RNNs, which are useful for processing sequential data like videos, can suffer from exploding or vanishing gradients. LSTMs, which belong to the RNN family, mitigate this gradient issue but are unable to capture spatial information, which is important for action, actor and object associations. Table 12 summarizes other models in the action recognition domain, and Figure 22 numerically compares the performance of the models discussed in Table 12. In Figure 22, the mean average precisions at different thresholds are obtained from [117], using the THUMOS’14 [118] dataset.
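The gradient problem noted in [116] comes from repeatedly multiplying by the same recurrent factor during backpropagation through time. The toy scalar RNN below, h_t = tanh(w * h_{t-1} + x_t), is an illustrative assumption rather than any model from [116]; it accumulates the chain-rule product d h_T / d h_0 over 50 steps.

```python
import math


def grad_through_time(w, inputs, h0=0.0):
    """d h_T / d h_0 for the scalar RNN h_t = tanh(w * h_{t-1} + x_t)."""
    h, grad = h0, 1.0
    for x in inputs:
        h = math.tanh(w * h + x)
        # Chain rule: d h_t / d h_{t-1} = w * tanh'(pre-activation) = w * (1 - h_t^2)
        grad *= w * (1.0 - h * h)
    return grad


steps = [0.0] * 50
print(grad_through_time(0.5, steps))  # tiny: the gradient vanishes
print(grad_through_time(1.5, steps))  # huge: the gradient explodes
```

With zero inputs the hidden state stays at 0, so each step multiplies the gradient by exactly w. LSTMs ease the vanishing case through their additive cell-state path, which avoids this repeated multiplication.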

Table 12.

Summary of other action recognition models.

Figure 22.

Mean average precision of various AR models, obtained from [117], using the THUMOS’14 [118] dataset.
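In temporal action detection, "mAP at a threshold" counts a predicted segment as correct only if its temporal intersection-over-union (tIoU) with a ground-truth segment meets that threshold; THUMOS’14 results are commonly reported at tIoU thresholds from 0.3 to 0.7. This sketch shows only the matching criterion, not the full AP computation (ranking by confidence, one-to-one matching and precision–recall integration); the function names are illustrative.

```python
def temporal_iou(a, b):
    """IoU of two time segments given as (start, end) in seconds."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - inter
    return inter / union if union > 0 else 0.0


def is_match(pred, gt, threshold):
    """Whether a predicted segment counts as a true positive at this threshold."""
    return temporal_iou(pred, gt) >= threshold


pred, gt = (0.0, 10.0), (5.0, 15.0)  # tIoU = 5 / 15
for t in (0.3, 0.4, 0.5, 0.6, 0.7):
    print(t, is_match(pred, gt, t))
```

The same prediction is a hit at 0.3 but a miss at every stricter threshold, which is why mAP typically drops as the threshold rises.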

5.2. Object Detection

“Object detection involves detecting instances of objects from one or several classes in an image” [119]. Object detection algorithms are divided into one-stage and two-stage detectors; one-stage detectors are generally faster, while two-stage detectors are generally more accurate. Because one-stage detectors prioritize speed over accuracy, they are usually deployed in real-time applications, while two-stage detectors are usually used for offline medical or crime analysis, where accuracy matters more than time. The difference between the two is that a one-stage detector moves directly from extracting features of the input image to outputting bounding boxes and classifications. A two-stage detector adds a region proposal stage, which outputs the regions most likely to contain objects; the model then classifies objects within these proposed regions instead of across the entire image.

A. One-Stage Detectors

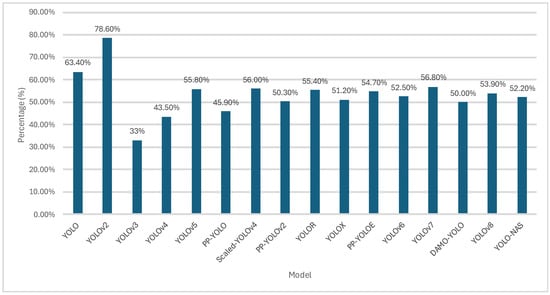

Table 13 summarizes the various YOLO models over the years. The benchmark dataset for YOLO and YOLOv2 was VOC2007 [22], while the later versions were evaluated on COCO2017 [120]. The original YOLO algorithm is limited because each grid cell makes only a single class prediction, so the model struggles with small, closely packed objects. Dividing the image into a finer grid would address this but slow down the model; thus, there is a trade-off between detecting small, closely packed objects and speed. Figure 23 [120] shows the average precision achieved by various YOLO models on the aforementioned datasets.
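The grid limitation can be illustrated directly. In the original YOLO, the grid cell containing an object's centre is responsible for that object, and the model uses a 7 × 7 grid on 448 × 448 inputs. The sketch below (hypothetical helper names, made-up object centres) finds cells that would have to represent more than one object.

```python
def responsible_cell(cx, cy, img_w, img_h, s):
    """Grid cell (row, col) containing an object's centre on an S x S grid."""
    col = min(int(cx / img_w * s), s - 1)
    row = min(int(cy / img_h * s), s - 1)
    return row, col


def crowded_cells(centres, img_w, img_h, s):
    """Cells that would have to represent more than one object centre."""
    cells = {}
    for cx, cy in centres:
        cells.setdefault(responsible_cell(cx, cy, img_w, img_h, s), []).append((cx, cy))
    return {cell: objs for cell, objs in cells.items() if len(objs) > 1}


centres = [(70, 100), (110, 100)]  # two small objects 40 px apart
print(crowded_cells(centres, 448, 448, 7))   # both centres fall in cell (1, 1)
print(crowded_cells(centres, 448, 448, 14))  # a finer grid separates them: {}
```

Doubling S to 14 separates the two objects but quadruples the number of cells to predict, which is the speed trade-off described above.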

Table 13.

YOLO versions over the years. (Reprinted from [120].)

Figure 23.

Average precision of various YOLO models. (Reprinted from [120].)

B. Two-Stage Detectors

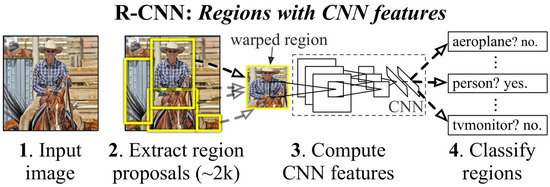

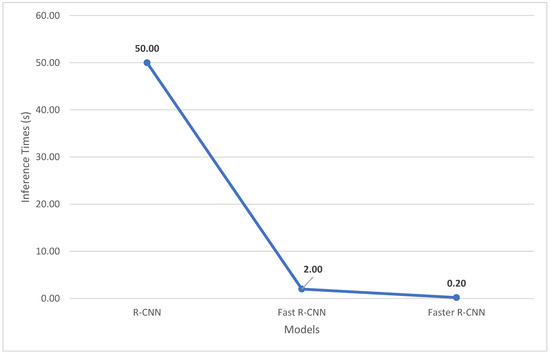

R-CNNs [121] remain amongst the most popular two-stage detectors, with the “R” standing for “Region”, after the region proposals that distinguish two-stage from one-stage detectors. The algorithm contains three modules. The first module generates category-independent region proposals, the regions the rest of the model uses for object detection while ignoring the rest of the image. The next module is a feature-extracting CNN, which extracts a fixed-length feature vector from each region for the final classification layer. The final module is a set of class-specific linear Support Vector Machines (SVMs); each SVM is trained to detect one object class and outputs its confidence that this class is present in each proposed region, as illustrated in Figure 24 [121]. Although the model achieved state-of-the-art detection accuracy, R-CNNs have an inference time of 40–50 s, illustrated in Figure 25, which limits their application to offline use.
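The final R-CNN module can be sketched as a set of linear scoring functions, one per class, applied to each region's feature vector. The three-dimensional features, weights and class names below are made-up toy values (R-CNN itself uses much longer CNN features), and the per-class non-maximum suppression that follows scoring in R-CNN is omitted.

```python
def svm_scores(region_features, svms):
    """Score every proposal with every class-specific linear SVM.

    region_features: one feature vector per proposed region.
    svms: dict mapping class name -> (weights, bias).
    """
    return [
        {cls: sum(wi * fi for wi, fi in zip(w, f)) + b for cls, (w, b) in svms.items()}
        for f in region_features
    ]


def detections(region_features, svms, threshold=0.0):
    """Keep (region index, class, score) triples whose SVM score exceeds the threshold."""
    out = []
    for idx, scores in enumerate(svm_scores(region_features, svms)):
        for cls, score in scores.items():
            if score > threshold:
                out.append((idx, cls, score))
    return out


features = [[1, 0, 0], [0, 1, 0]]  # toy features for two proposals
svms = {"cat": ([1, 0, 0], -0.5), "dog": ([0, 1, 0], -0.5)}
print(detections(features, svms))  # region 0 -> cat, region 1 -> dog
```

Because every proposal passes through the CNN separately before this step, the cost grows with the number of proposals, which helps explain R-CNN's long inference time.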

Figure 24.

R-CNN structure. (Reprinted from [121].)

Fast R-CNN [122] and Faster R-CNN [123] were proposed as improvements on R-CNN. Fast R-CNN is named for its fast training and testing times, and it offers the following advantages over R-CNN:

- Higher mAP than R-CNN;

- Single-stage training that combines multiple objectives into a single “multi-task loss”;

- Training can update all network layers, so features are learned and refined at every level.
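The multi-task loss of Fast R-CNN adds a classification term, the negative log probability of the true class u, to a box-regression term that applies only to non-background regions (u ≥ 1) and uses the smooth L1 function. A minimal sketch for a single region follows; the function names and example values are illustrative, and λ = 1 is the balancing value used in the Fast R-CNN paper.

```python
import math


def smooth_l1(x):
    """Quadratic near zero, linear for |x| >= 1, so outliers have bounded gradients."""
    return 0.5 * x * x if abs(x) < 1.0 else abs(x) - 0.5


def fast_rcnn_loss(class_probs, true_class, pred_box, target_box, lam=1.0):
    """Multi-task loss for one region; class index 0 is background.

    pred_box: predicted box offsets for the true class; target_box: regression targets.
    """
    l_cls = -math.log(class_probs[true_class])
    l_loc = 0.0
    if true_class >= 1:  # no box-regression loss for background regions
        l_loc = sum(smooth_l1(t - v) for t, v in zip(pred_box, target_box))
    return l_cls + lam * l_loc


probs = [0.5, 0.5]  # softmax output: background, object
print(fast_rcnn_loss(probs, 1, [0.0] * 4, [0.5, 0.0, 0.0, 2.0]))  # ln 2 + 1.625
print(fast_rcnn_loss(probs, 0, [0.0] * 4, [0.5, 0.0, 0.0, 2.0]))  # ln 2 only
```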

Figure 25.

Inference times in seconds of various RCNNs. (Reprinted from [124]).

6. Recognition and Detection Coupled with Light Enhancement

In order to evaluate their light enhancement model, ref. [8] tested a face detector on images enhanced by their model. The face detector chosen was the Dual Shot Face Detector (DSFD), and the dataset chosen was DARKFACE [125], which contains 10,000 low-light images. The purpose of the test is to show whether light has been enhanced enough for a face detector to extract more useful information after enhancement. The test was repeated with other low-light enhancers while the detector remained unchanged, and the raw, unenhanced data served as the baseline input. As Table 14 [8] shows, detection on all enhanced images (regardless of the enhancement model) outperformed detection on raw images, which emphasizes the need for an enhancer to allow detection models to function even under extreme conditions.

Table 14.

Performance evaluation of light-enhanced detectors. The best performing model is highlighted in bold. (Reprinted from [8]).

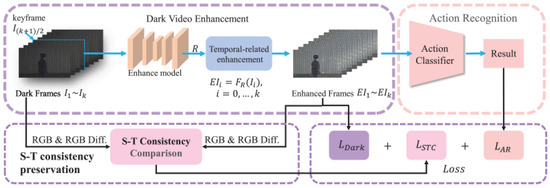

The Dark Temporal Consistency Model (DTCM) [129] (framework shown in Figure 26) proposes a joint approach to human action detection in dark videos with video enhancement. DTCM explores a cascaded approach in which the video data are enhanced first and human actions are then deduced from the enhanced video. To ensure frame-by-frame spatiotemporal consistency, the model compares RGB differences before and after video enhancement. The model uses three losses.

Figure 26.

DTCM structure. (Reprinted with permission from [129], 2023, IEEE, 3 Park Avenue, 17th Floor, New York, NY 10016).

The first loss, (31), is the spatiotemporal consistency loss, a combination of the spatial consistency loss, formulated in (32), and the temporal consistency loss, formulated in (33). The goal is to minimize (31), as its value represents the inconsistency in the enhanced video. The temporal consistency loss (33) ensures temporal consistency by comparing the RGB differences of the video before and after processing. In (33), T denotes the duration of the input video clip, and DY and DI are the enhanced frame and input frame, respectively; the loss compares the RGB differences of the enhanced frames with those of the dark input frames.
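The exact forms of (31) to (33) are given in [129]. As a hedged illustration of the idea behind the temporal term only, the sketch below compares the frame-to-frame RGB differences of the enhanced clip with those of the dark input clip and averages the squared gaps over the clip. The squared-error form, the averaging and the function names are assumptions, not DTCM's formulation.

```python
def frame_differences(frames):
    """Differences between consecutive frames (each a flat list of RGB values)."""
    return [[b - a for a, b in zip(f0, f1)] for f0, f1 in zip(frames, frames[1:])]


def temporal_consistency_loss(enhanced, dark):
    """Mean squared gap between the frame-to-frame changes of the two clips."""
    if len(enhanced) != len(dark) or len(enhanced) < 2:
        raise ValueError("need two clips of equal length with at least 2 frames")
    gaps = [
        (dy - di) ** 2
        for fy, fi in zip(frame_differences(enhanced), frame_differences(dark))
        for dy, di in zip(fy, fi)
    ]
    return sum(gaps) / len(gaps)


dark = [[0.0, 0.0, 0.0], [0.1, 0.1, 0.1]]
steady = [[0.5, 0.5, 0.5], [0.6, 0.6, 0.6]]   # uniformly brightened
flicker = [[0.5, 0.5, 0.5], [0.9, 0.9, 0.9]]  # brightness jumps between frames
print(temporal_consistency_loss(steady, dark))   # close to 0
print(temporal_consistency_loss(flicker, dark))  # about 0.09
```

Under this formulation a uniform brightening is not penalized, while flicker introduced by the enhancer is.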

The second loss is the dark enhancement loss, which takes inspiration from Zero-DCE [8] and uses the loss functions defined in [8]. The dark enhancement loss (34) is the sum of the exposure control loss, the illumination smoothness loss and the color constancy loss. The color constancy and illumination smoothness losses have their own weights, while the exposure loss is unweighted.
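Two of the Zero-DCE terms are simple enough to sketch without a framework. The exposure control loss averages |Y_k − E| over non-overlapping local regions, where Y_k is a region's mean intensity and E = 0.6 with 16 × 16 regions in Zero-DCE. The color constancy loss sums the squared differences between the mean R, G and B values (gray-world assumption). This plain-Python sketch works on lists of RGB tuples; the function names are illustrative, the illumination smoothness term is omitted, and no loss weights are shown.

```python
def exposure_control_loss(image, patch=16, well_exposed=0.6):
    """Mean |Y_k - E| over non-overlapping patch x patch regions.

    image: H x W list of (r, g, b) tuples with values in [0, 1].
    """
    h, w = len(image), len(image[0])
    if h < patch or w < patch:
        raise ValueError("image must be at least one patch in size")
    errors = []
    for y0 in range(0, h - patch + 1, patch):
        for x0 in range(0, w - patch + 1, patch):
            # Y_k: average intensity (mean over RGB) of the k-th region
            region = [sum(image[y][x]) / 3.0
                      for y in range(y0, y0 + patch)
                      for x in range(x0, x0 + patch)]
            errors.append(abs(sum(region) / len(region) - well_exposed))
    return sum(errors) / len(errors)


def color_constancy_loss(image):
    """Sum of squared differences between the mean R, G and B values."""
    n = len(image) * len(image[0])
    r, g, b = (sum(px[c] for row in image for px in row) / n for c in range(3))
    return (r - g) ** 2 + (r - b) ** 2 + (g - b) ** 2


dark = [[(0.2, 0.2, 0.2)] * 32 for _ in range(32)]
tinted = [[(0.5, 0.3, 0.3)] * 16 for _ in range(16)]
print(exposure_control_loss(dark))   # about 0.4: far from the 0.6 target
print(color_constancy_loss(tinted))  # about 0.08: red cast is penalized
```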

For action recognition, the model uses the vanilla cross-entropy loss.

The model was tested on the UAVHuman-Fisheye Dataset, which contains distorted dark and well-lit human action videos. As seen in Table 15 [129], the model outperforms other state-of-the-art action detectors, which suggests that it is suited not only to low-light action detection but also to general action detection.

Table 15.

DTCM (LE action detector) vs. vanilla detectors. Best performing model highlighted in bold. (Reprinted from [129].)

7. Datasets

7.1. LE Datasets

Zheng et al. [1] performed a comprehensive breakdown of the datasets available in the LE domain and their frequency of use. A summary of the most popular datasets is provided in Table 16, where “size” is the number of datapoints in the dataset and “paired” states whether each datapoint has a corresponding optimally lit pair. It was highlighted in [1] that numerous popular LE datasets fail to capture real-world conditions, in which some objects in the image may be optimally lit while others are over- or under-illuminated. These lighting conditions can also change gradually within a single image rather than in discrete luminosity steps. To address this, the authors proposed a dataset with gradual luminosity transitions within a single datapoint, along with another dataset with random transitions; the datasets were termed SICE_Grad [1] and SICE_Mix [1], respectively. Models trained on these datasets were observed to perform better under real-world conditions [1]. These observations reaffirm that, for any DL algorithm, dataset quality is just as important for good results as model design and configuration.

Table 16.

LE popular datasets.

7.2. Artefact Removal Datasets

In Table 17, “blur type” states what type of blur the dataset contains (uniform or non-uniform), and “domain” states what is present in the data (scenes, human faces, objects), where “scene” means that a whole scene is blurred and no single object is the focus of the datapoint. Most of the datasets in Table 17 synthetically generate realistic blurs using a recently popular method in which blurs are created by averaging successive video frames.
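The frame-averaging method can be sketched in a few lines: consecutive sharp frames of a moving scene are averaged pixel by pixel, so motion during the window becomes a smear. This is a simplified sketch on grayscale grids with an illustrative function name; real dataset pipelines may also account for the camera response before averaging, which is ignored here.

```python
def synthesize_blur(frames):
    """Average consecutive sharp frames (each an H x W grid) into one blurry frame."""
    if not frames:
        raise ValueError("need at least one frame")
    n = len(frames)
    h, w = len(frames[0]), len(frames[0][0])
    return [[sum(f[y][x] for f in frames) / n for x in range(w)] for y in range(h)]


# A bright point moving one pixel to the right per frame (1 x 5 images).
frames = [[[1, 0, 0, 0, 0]], [[0, 1, 0, 0, 0]], [[0, 0, 1, 0, 0]]]
print(synthesize_blur(frames))  # the point is smeared across three pixels
```

Because each region of the scene moves differently between frames, averaging naturally produces the non-uniform blurs that many of these datasets target, while the middle sharp frame can serve as the paired ground truth.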

Table 17.

Artefact removal datasets.

7.3. Action Recognition and Object Detection Datasets

There exists a myriad of action recognition and object detection datasets; Table 18, on the other hand, summarizes the much harder-to-find criminal activity and weapon detection datasets. Many datasets pertaining to violent action recognition rely on synthetic data such as fights from movies or from sporting events. In Table 18, “Type” describes the type of crime (robbery, contact crimes, etc.), and “weapons” describes whether traditional weapons are used in any of the crimes (guns and knives are traditional weapons, whereas sticks, bats and rocks are not). The synthetic crime videos may contain either crimes acted out by researchers or criminal activities from movie scenes.

Table 18.

Criminal actions and weapons datasets.

8. Challenges

Having surveyed research papers in the domain of LE, artefact removal, action recognition and object detection, three major problems have been identified that hinder progress in these fields: