Classification of Parotid Tumors with Robust Radiomic Features from DCE- and DW-MRI

Abstract

1. Introduction

2. Materials and Methods

2.1. Patient Population

2.2. MR Imaging

2.3. Segmentation

2.4. Artificial ROI Generation for Robustness Evaluation

- (1) Axial rotation of 10°, 20°, and 30°, clockwise and counterclockwise, around the barycenter of the original ROI (six ROIs);

- (2) Dilation using a 3D structuring element with a connectivity of 1 or 2 pixels (two ROIs);

- (3) Erosion using a structuring element of 1 pixel (one ROI);

- (4) Translation of 1 pixel along each of the 3 orthogonal directions, both forward and backward (six ROIs).
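As an illustration only, the four perturbation families listed above could be generated from a binary mask roughly as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: it assumes the mask is a NumPy array ordered (z, y, x), uses `scipy.ndimage` (the text does not say which library was used), takes the 1-pixel erosion element to be the connectivity-1 element, and treats positive angles as one rotation direction and negative angles as the other (which one appears clockwise depends on display orientation). The function names and dictionary keys are hypothetical.

```python
import numpy as np
from scipy import ndimage


def rotate_axial(mask, angle_deg):
    """Rotate a (z, y, x) binary mask in the axial plane about its barycenter."""
    theta = np.deg2rad(angle_deg)
    c, s = np.cos(theta), np.sin(theta)
    # Rotation acts on (y, x) only; the slice index z is left unchanged.
    rot = np.array([[1.0, 0.0, 0.0],
                    [0.0, c, -s],
                    [0.0, s, c]])
    center = np.argwhere(mask).mean(axis=0)  # barycenter of the ROI voxels
    # affine_transform maps output coordinate o to input coordinate rot @ o + offset,
    # so this offset keeps the barycenter fixed.
    offset = center - rot @ center
    out = ndimage.affine_transform(mask.astype(np.uint8), rot, offset=offset, order=0)
    return out > 0


def generate_perturbed_rois(mask):
    """Return a dict of 15 perturbed ROIs (6 rotations, 2 dilations, 1 erosion, 6 shifts)."""
    mask = np.asarray(mask, dtype=bool)
    rois = {}

    # (1) Axial rotations of 10, 20 and 30 degrees in both directions.
    for angle in (10, 20, 30):
        for sign in (1, -1):
            rois[f"rot_{sign * angle:+d}"] = rotate_axial(mask, sign * angle)

    # (2) Dilations with 3D structuring elements of connectivity 1 and 2.
    for conn in (1, 2):
        struct = ndimage.generate_binary_structure(3, conn)
        rois[f"dil_conn{conn}"] = ndimage.binary_dilation(mask, structure=struct)

    # (3) Erosion; the connectivity-1 element is an assumption of this sketch.
    struct = ndimage.generate_binary_structure(3, 1)
    rois["ero"] = ndimage.binary_erosion(mask, structure=struct)

    # (4) One-voxel translations, forward and backward, along each axis.
    for axis in range(3):
        for step in (1, -1):
            vec = [0, 0, 0]
            vec[axis] = step
            moved = ndimage.shift(mask.astype(np.uint8), vec, order=0,
                                  mode="constant", cval=0)
            rois[f"shift_ax{axis}_{step:+d}"] = moved > 0

    return rois


# Synthetic box-shaped ROI used only to exercise the functions.
demo_mask = np.zeros((10, 32, 32), dtype=bool)
demo_mask[3:7, 10:20, 12:24] = True
demo_rois = generate_perturbed_rois(demo_mask)
print(len(demo_rois), "perturbed ROIs generated")
```

Nearest-neighbour interpolation (`order=0`) is used for the rotations and translations so that the perturbed masks stay binary; the trade-off is that a rotated ROI can gain or lose a few voxels along its boundary.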

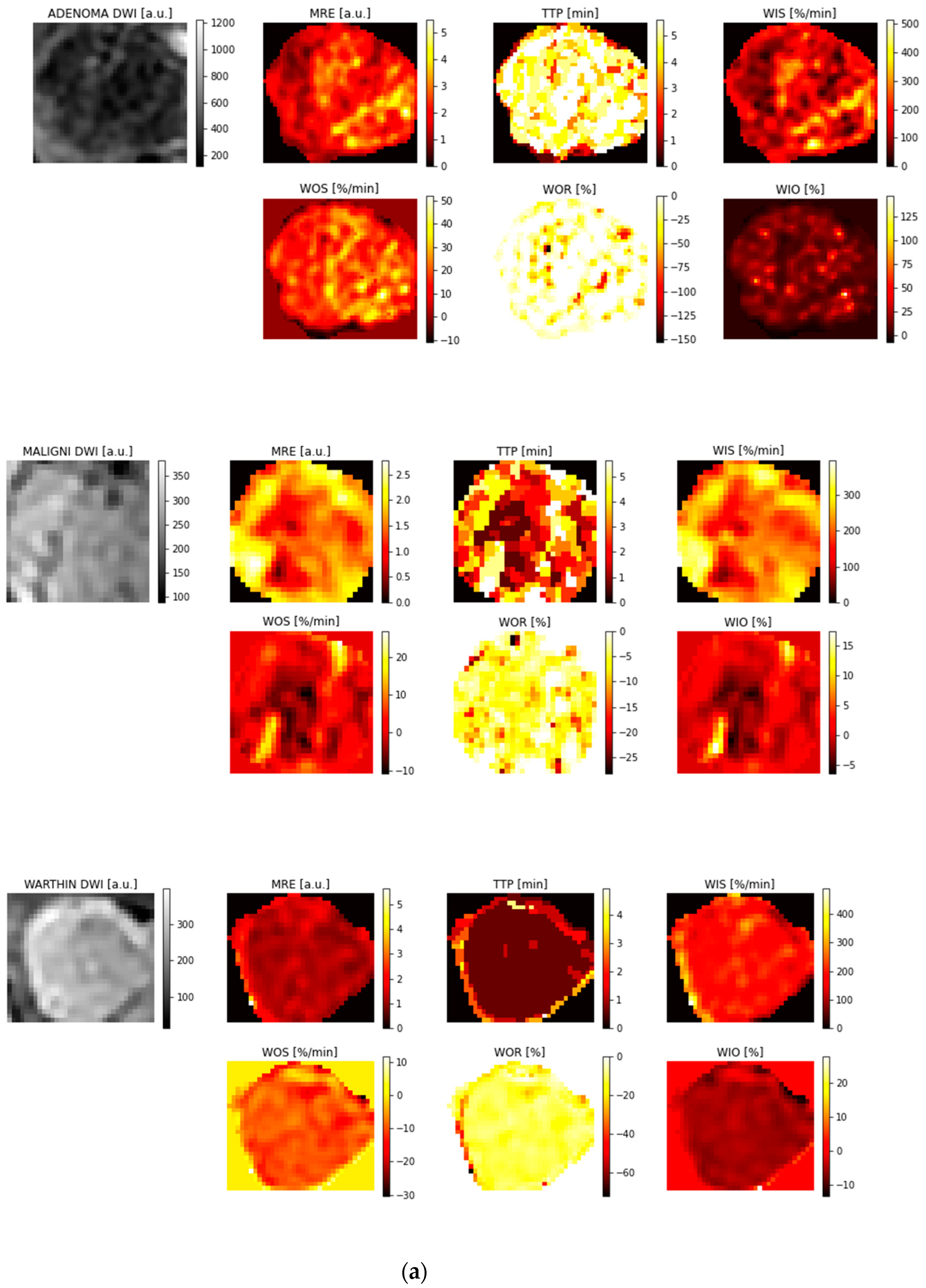

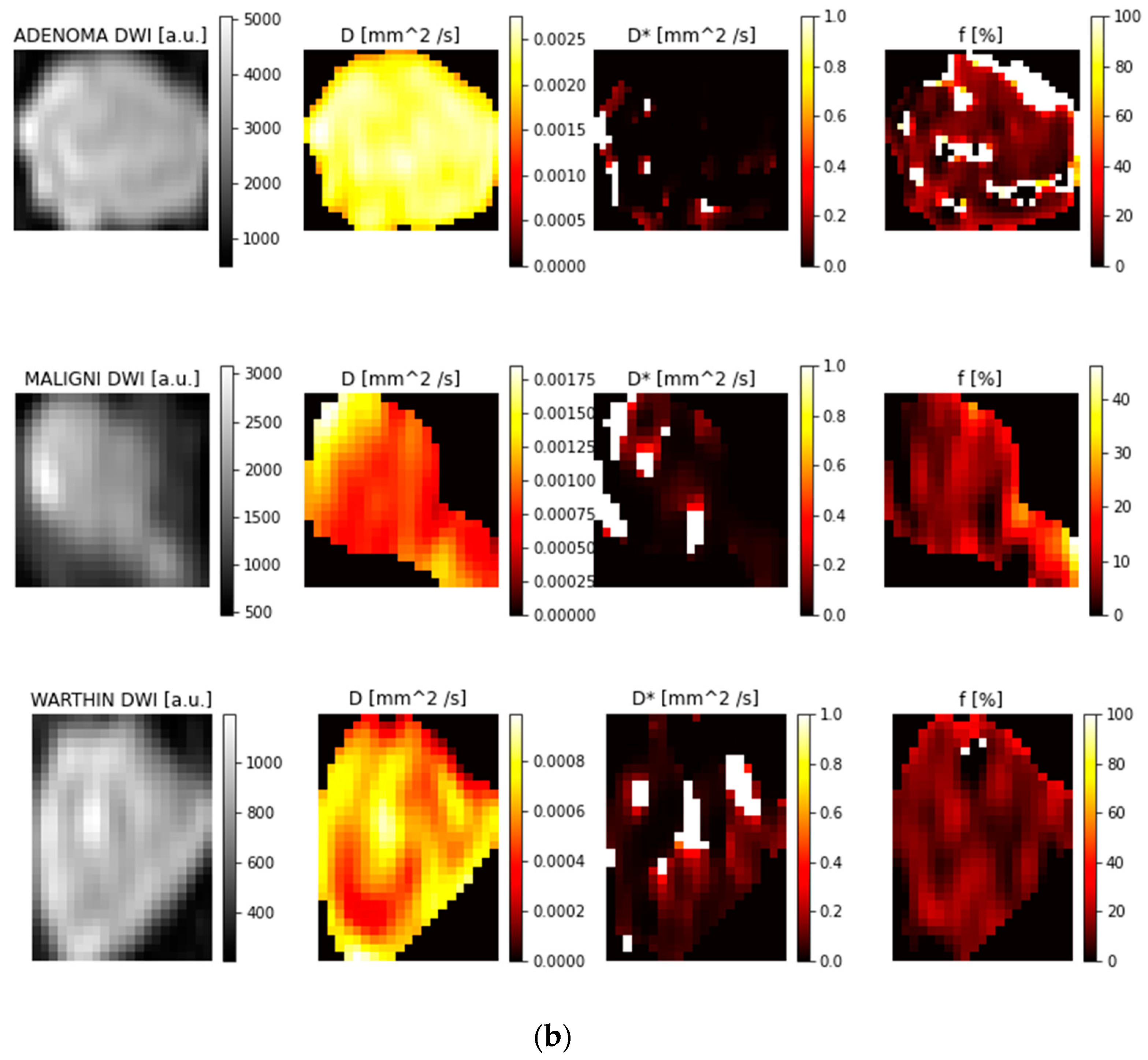

2.5. Parametric Maps

2.6. Radiomic Features

2.7. Evaluation of Feature Reproducibility

2.8. Feature Selection

2.9. Classification and Accuracy Evaluation

3. Results

3.1. ROI Selection and Parametric Maps

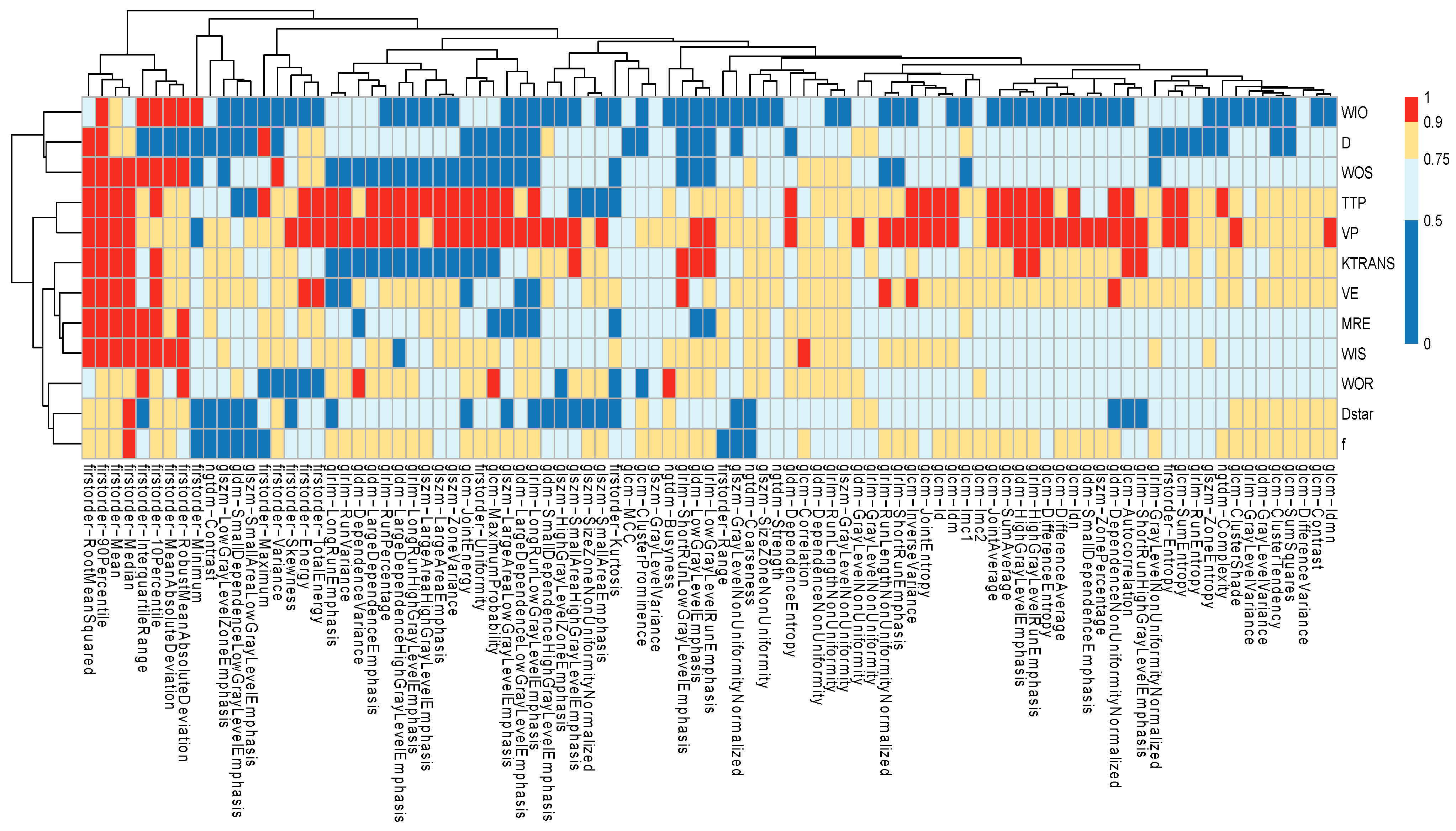

3.2. Feature Reproducibility

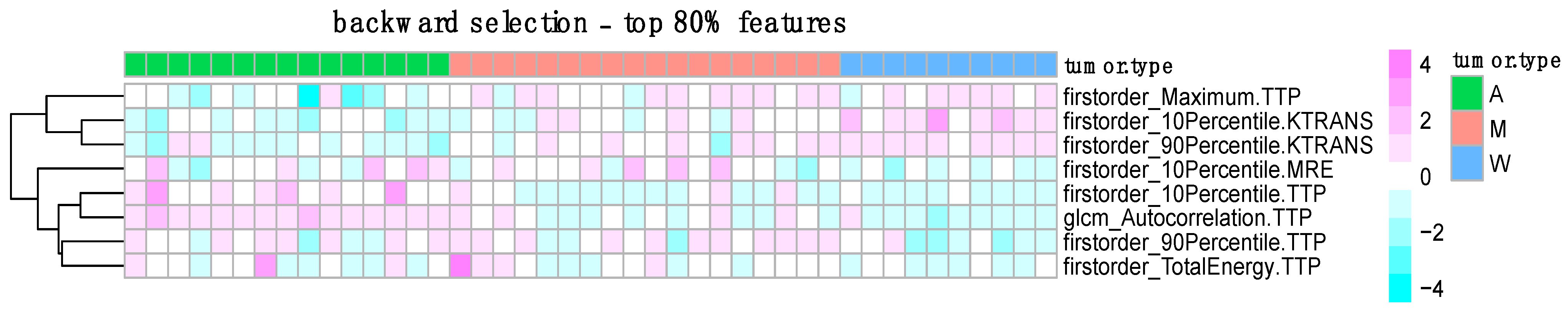

3.3. Feature Selection Results

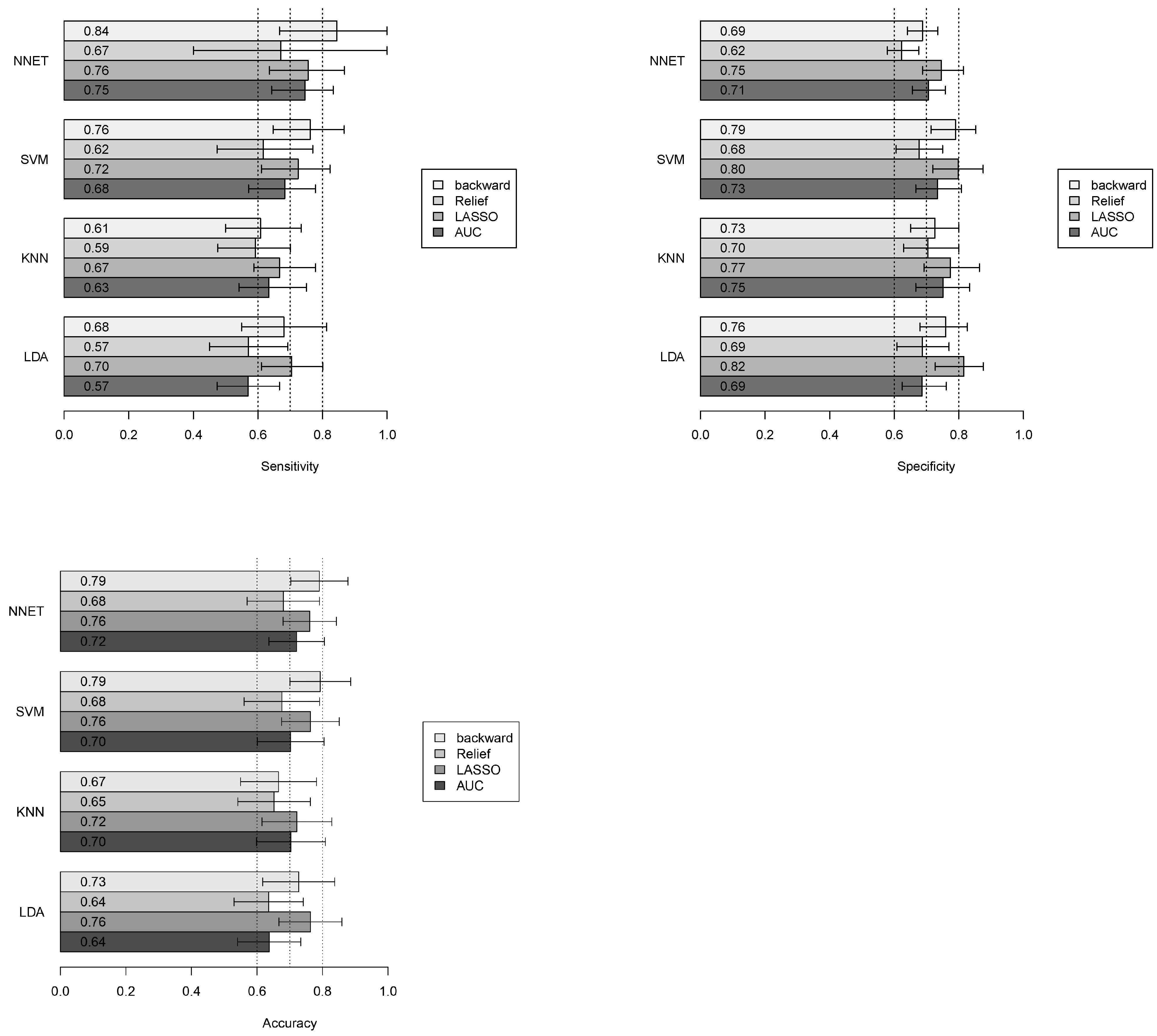

3.4. Classification Performance

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Correction Statement


| Sequence | Orientation | TR/TE (ms) | FA (deg.) | Image Size (mm × mm) | Acquisition Matrix | ST/Gap (mm/mm) |
|---|---|---|---|---|---|---|
| T2 2D SE | Axial | 7963/129 | 160 | 512 × 512 | 512 × 204 | 3/0.4 |
| T2 2D SE | Coronal | 7963/129 | 160 | 512 × 512 | 384 × 384 | 3/0.4 |
| T1 2D SE | Axial | 500/15 | 160 | 512 × 512 | 512 × 204 | 3/0.4 |
| DWI EPI | Axial | 5290/77 | 90 | 256 × 256 | 140 × 70 | 3/3 |
| T1 3D GRE | Axial | 6/3 | 12 | 512 × 512 | 256 × 256 | 3/0.8 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Angelone, F.; Tortora, S.; Patella, F.; Bonanno, M.C.; Contaldo, M.T.; Sansone, M.; Carrafiello, G.; Amato, F.; Ponsiglione, A.M. Classification of Parotid Tumors with Robust Radiomic Features from DCE- and DW-MRI. J. Imaging 2025, 11, 122. https://doi.org/10.3390/jimaging11040122
