Automatic Segmentation of Plants and Weeds in Wide-Band Multispectral Imaging (WMI)

Abstract

1. Introduction

2. Materials and Methods

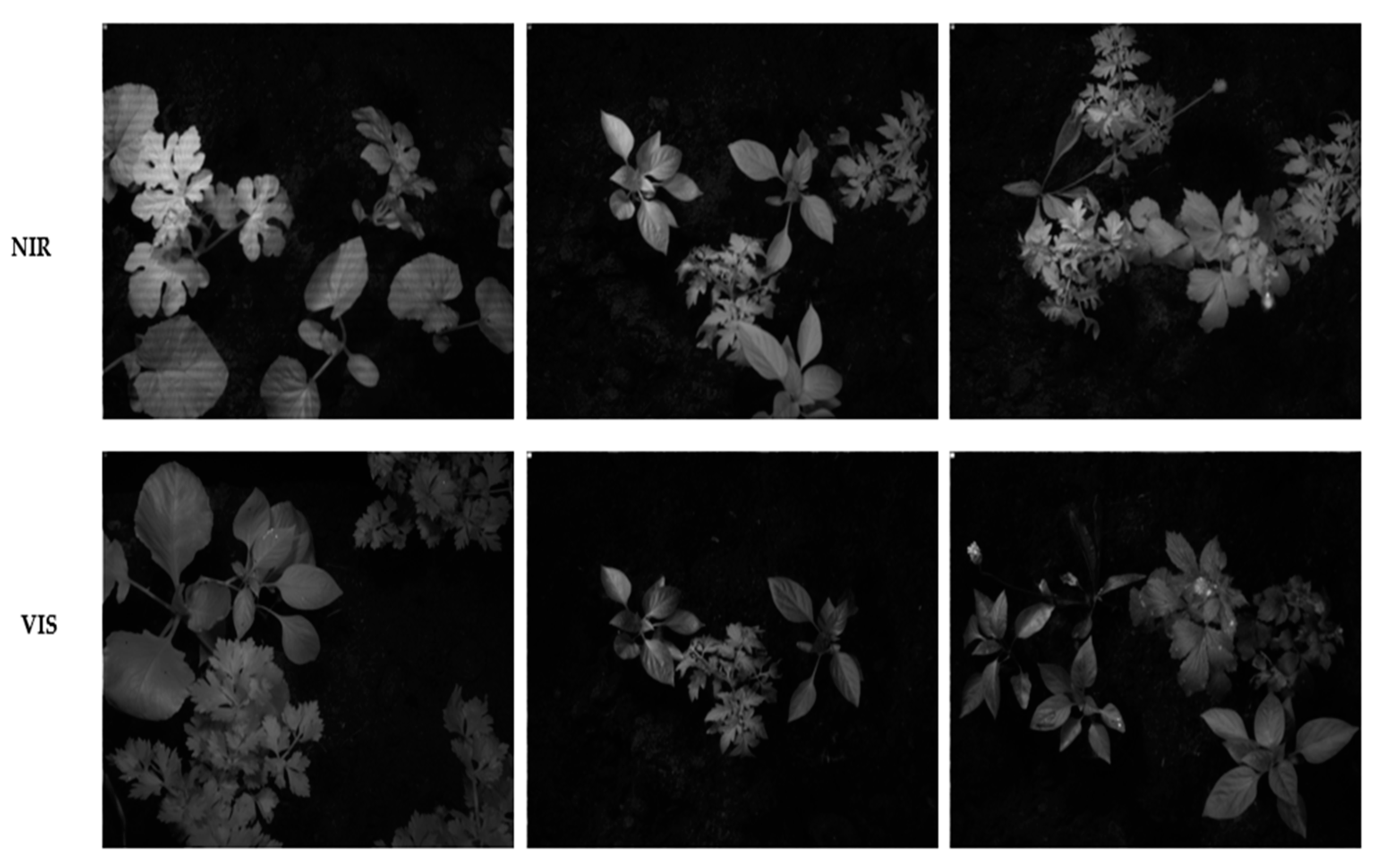

2.1. Images

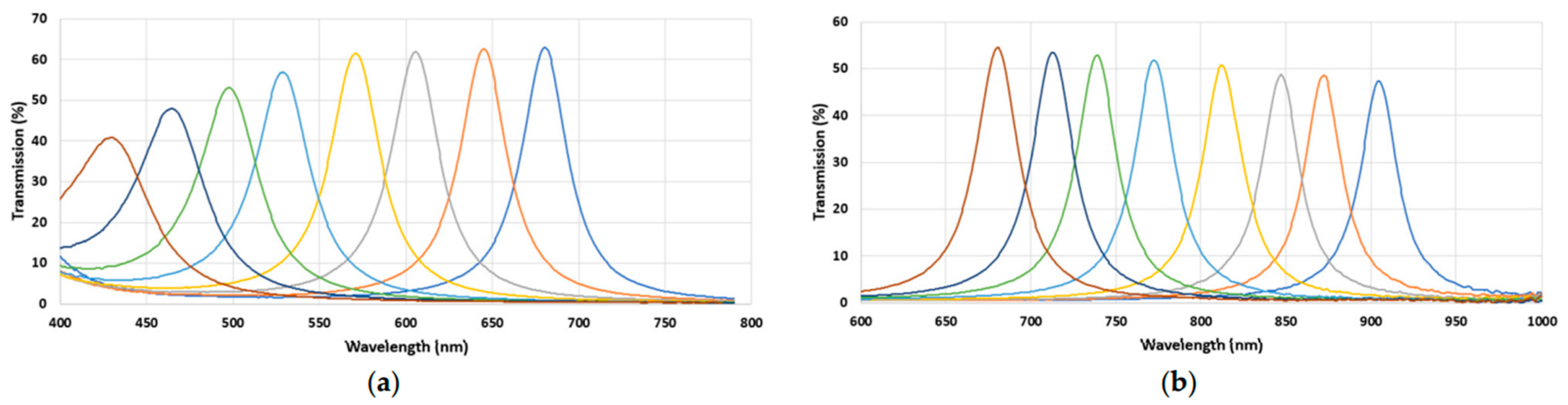

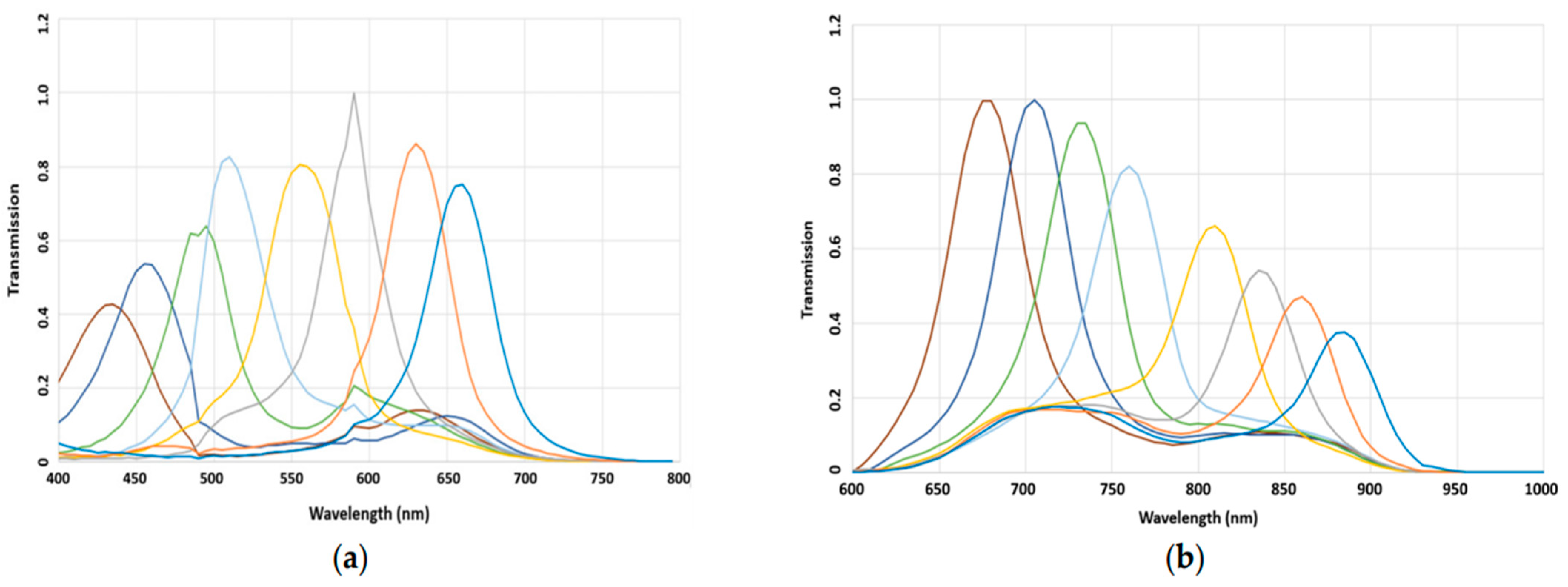

2.2. Spectral Bands

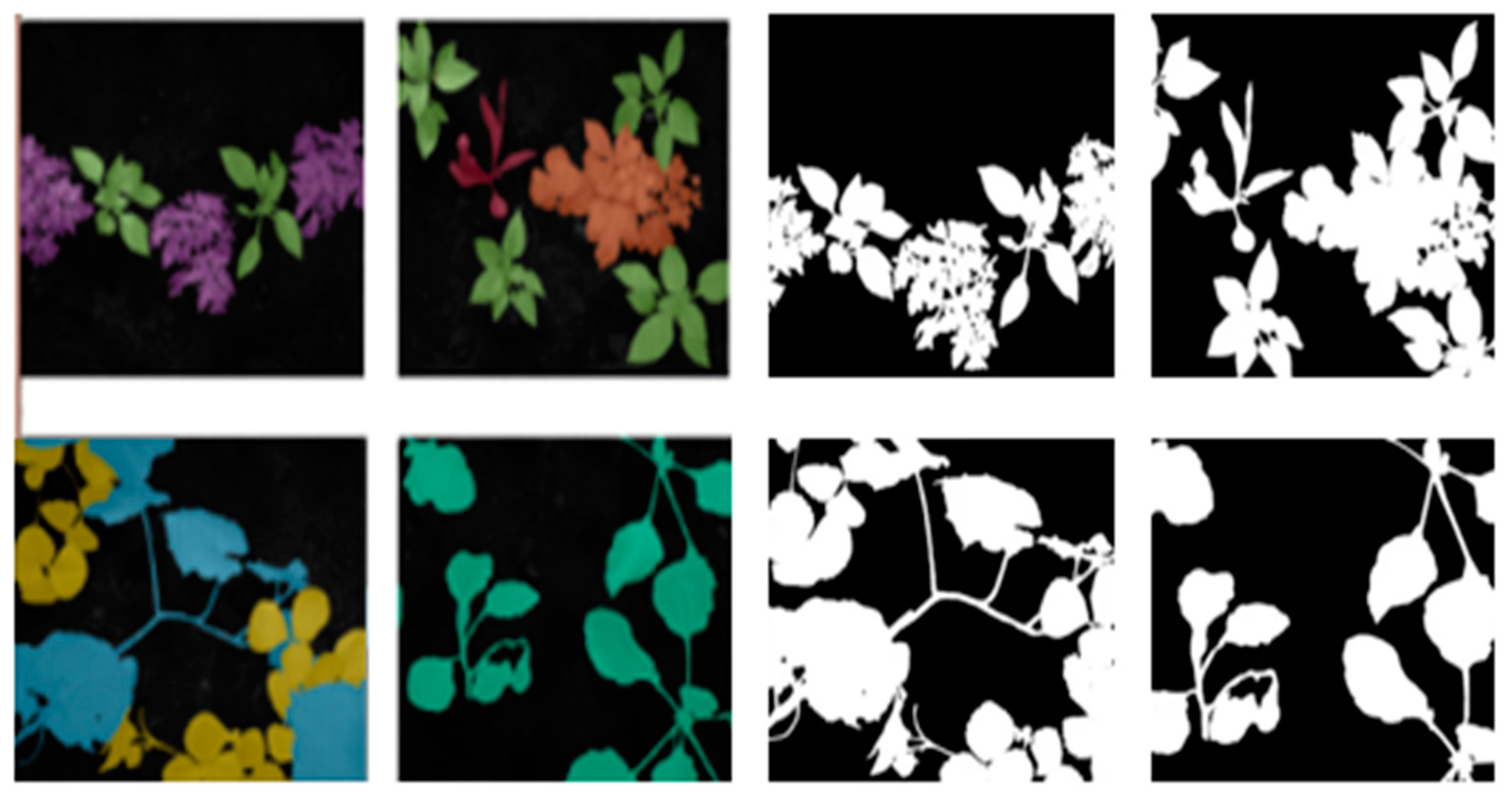

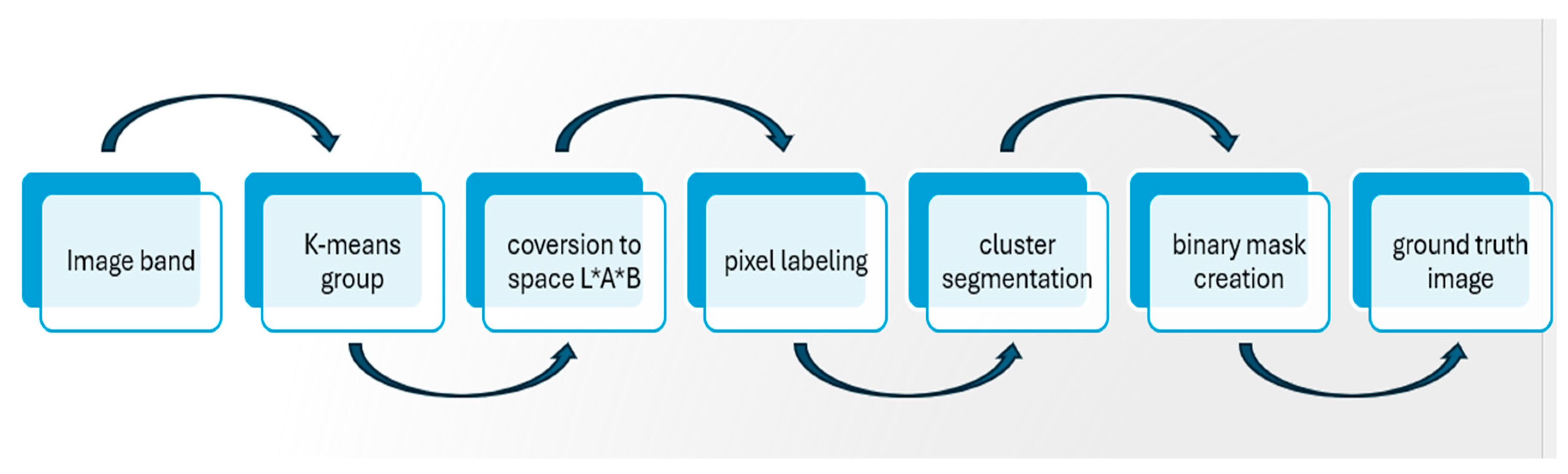

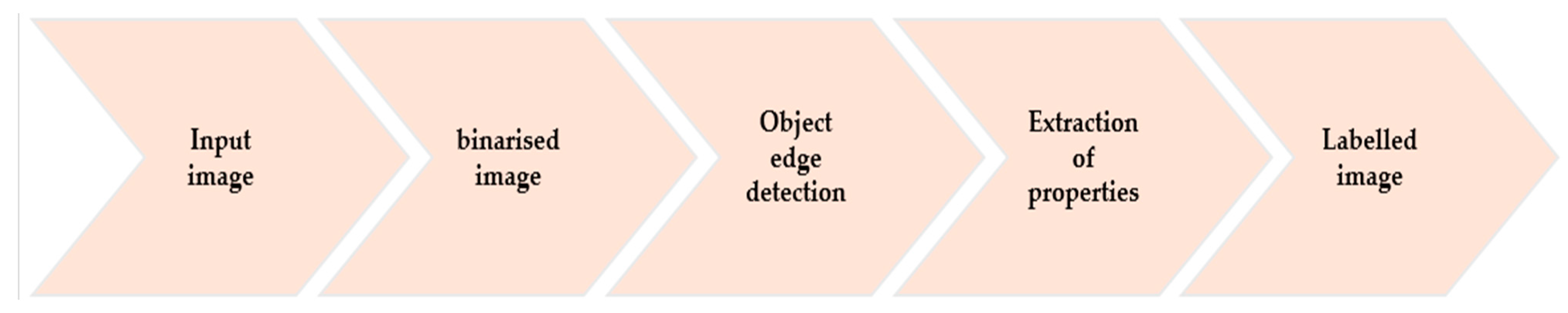

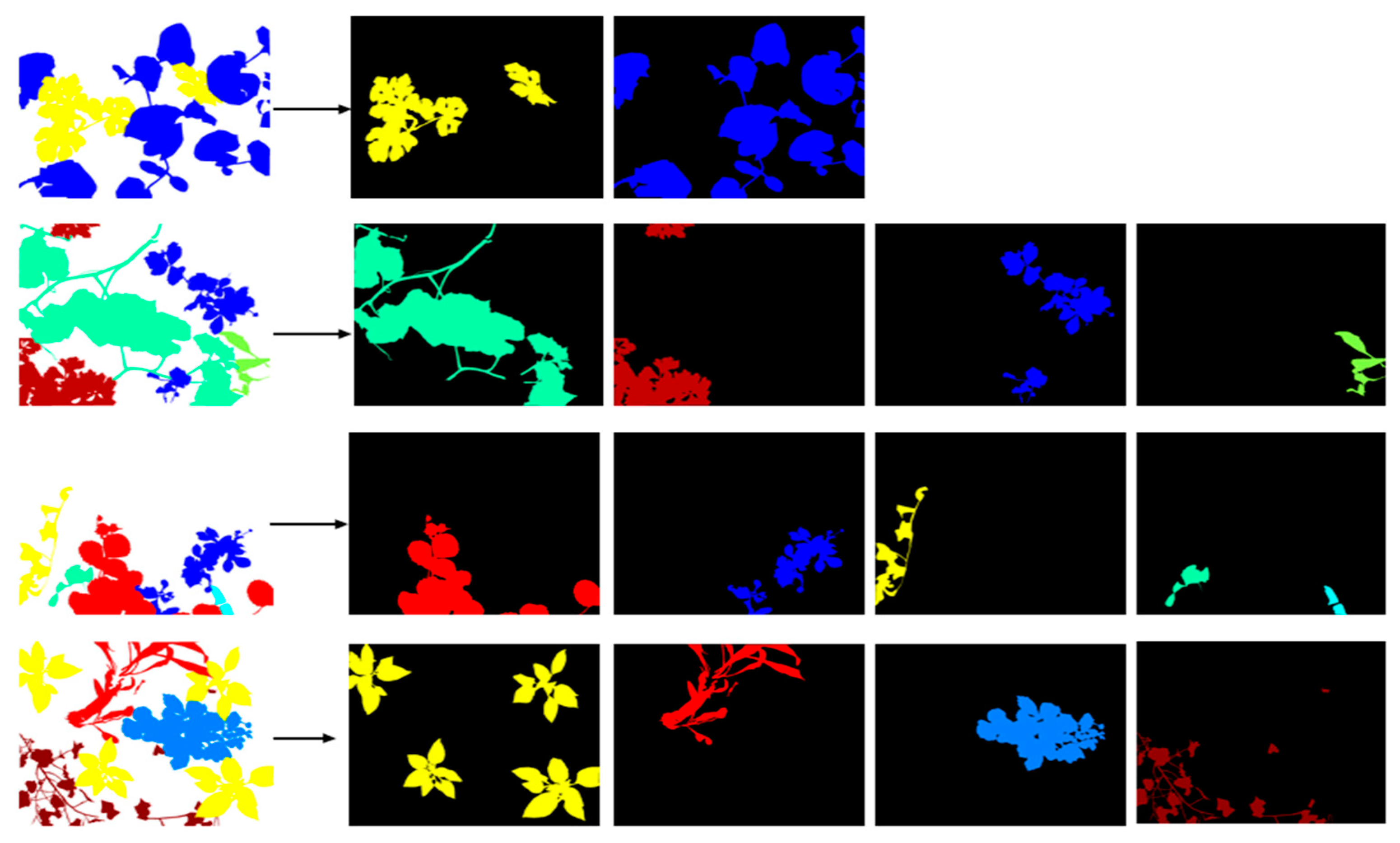

2.3. Refinement of Reference Images and Their Masks

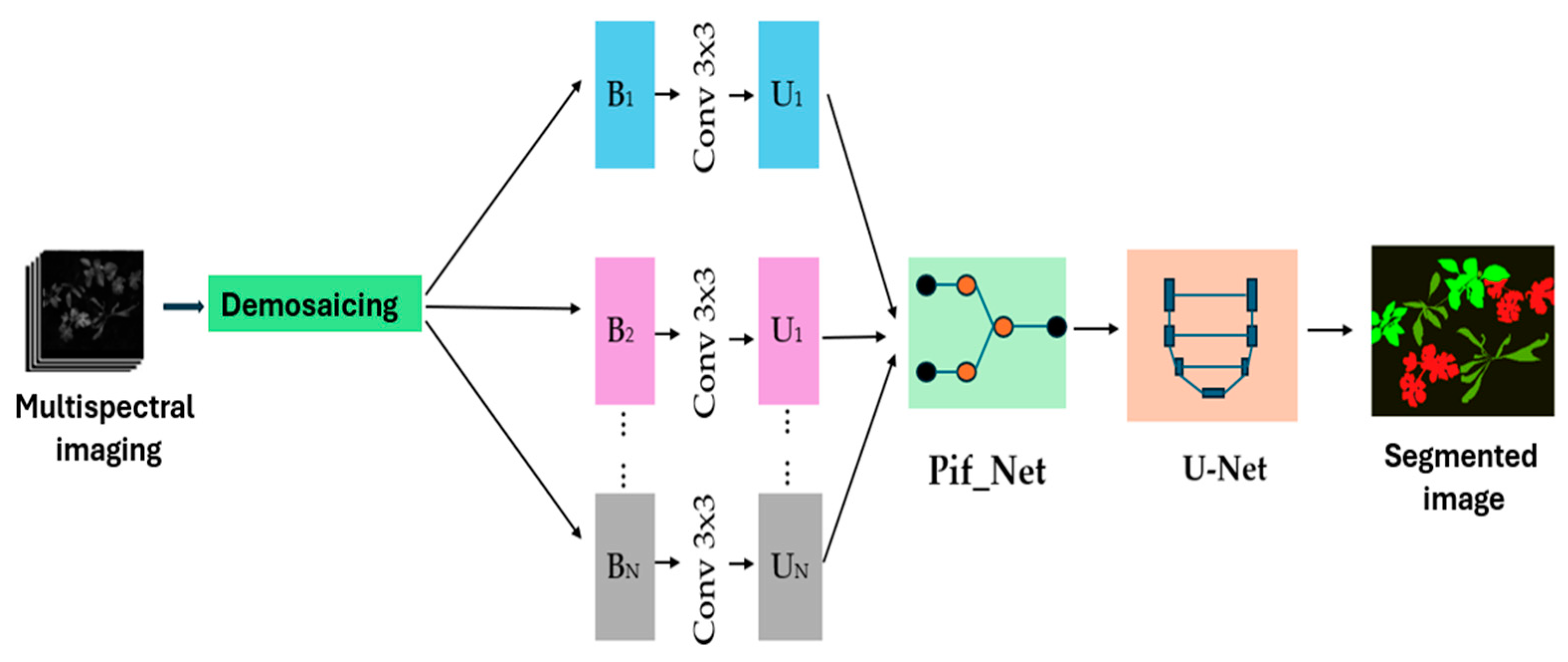

2.4. Proposed PIF-Net and U-Net for Instance-Based Semantic Segmentation with Noise Reduction

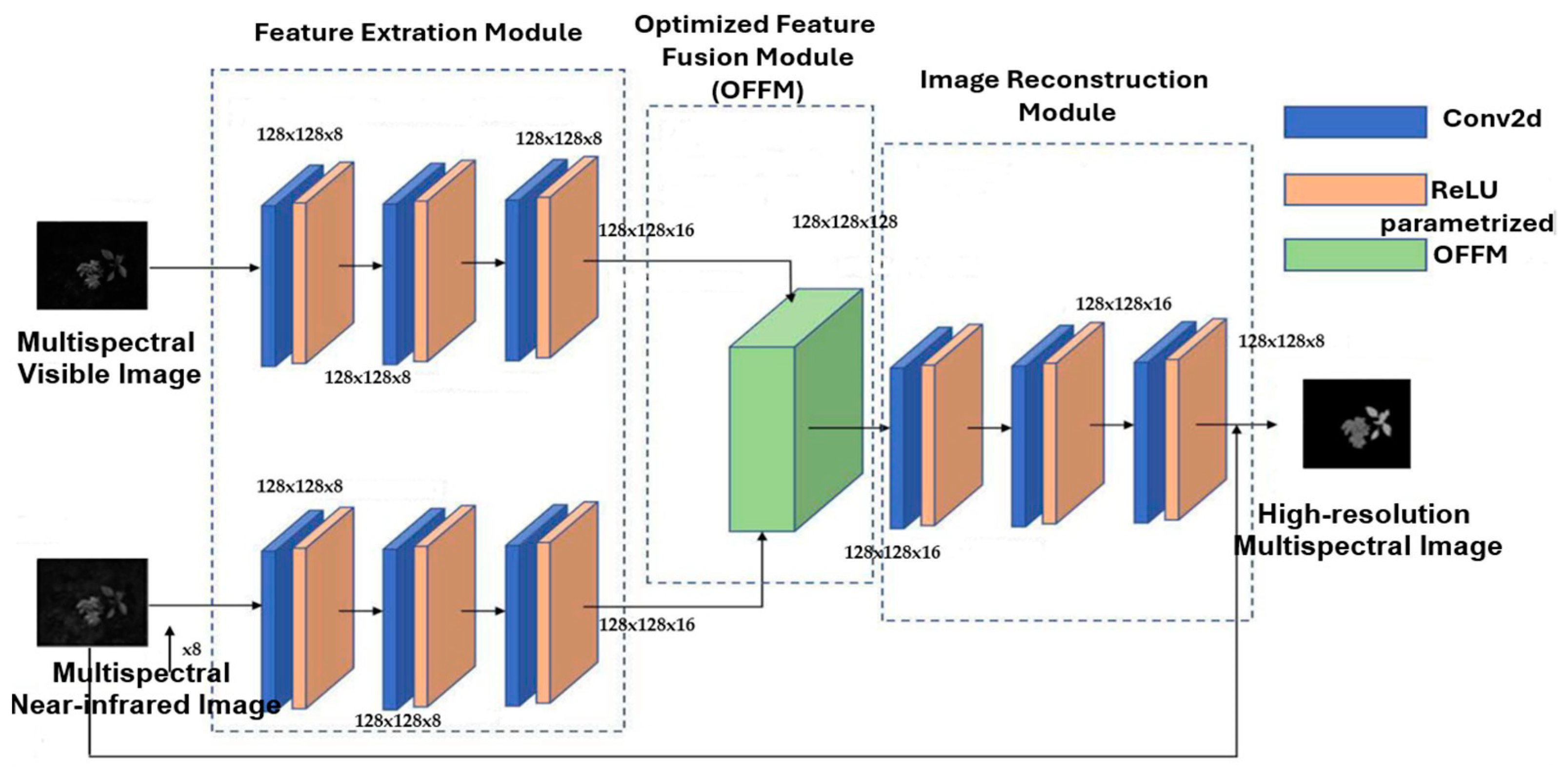

2.5. The PIF-Net Network

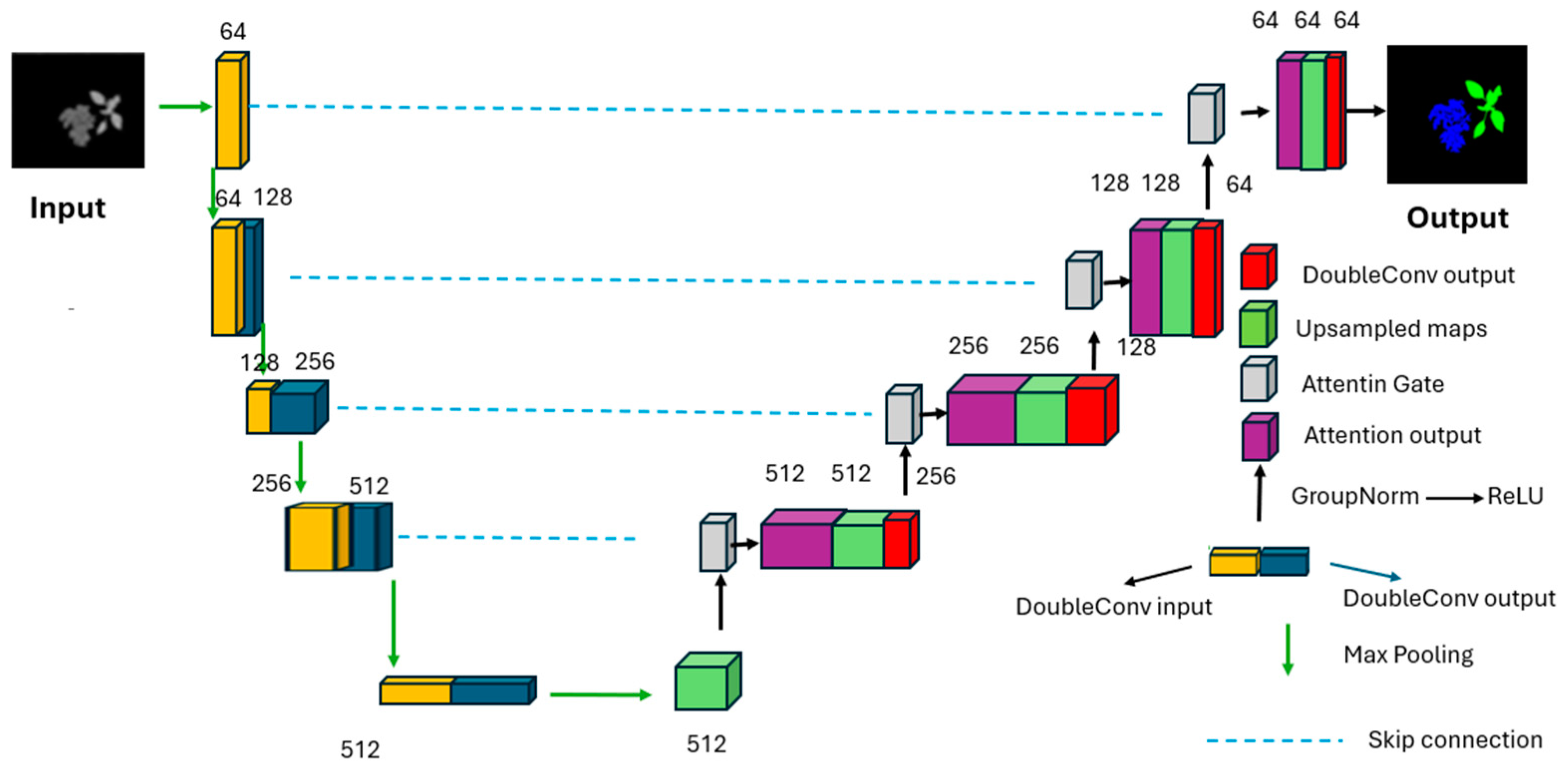

2.6. Proposed U-Net Architecture

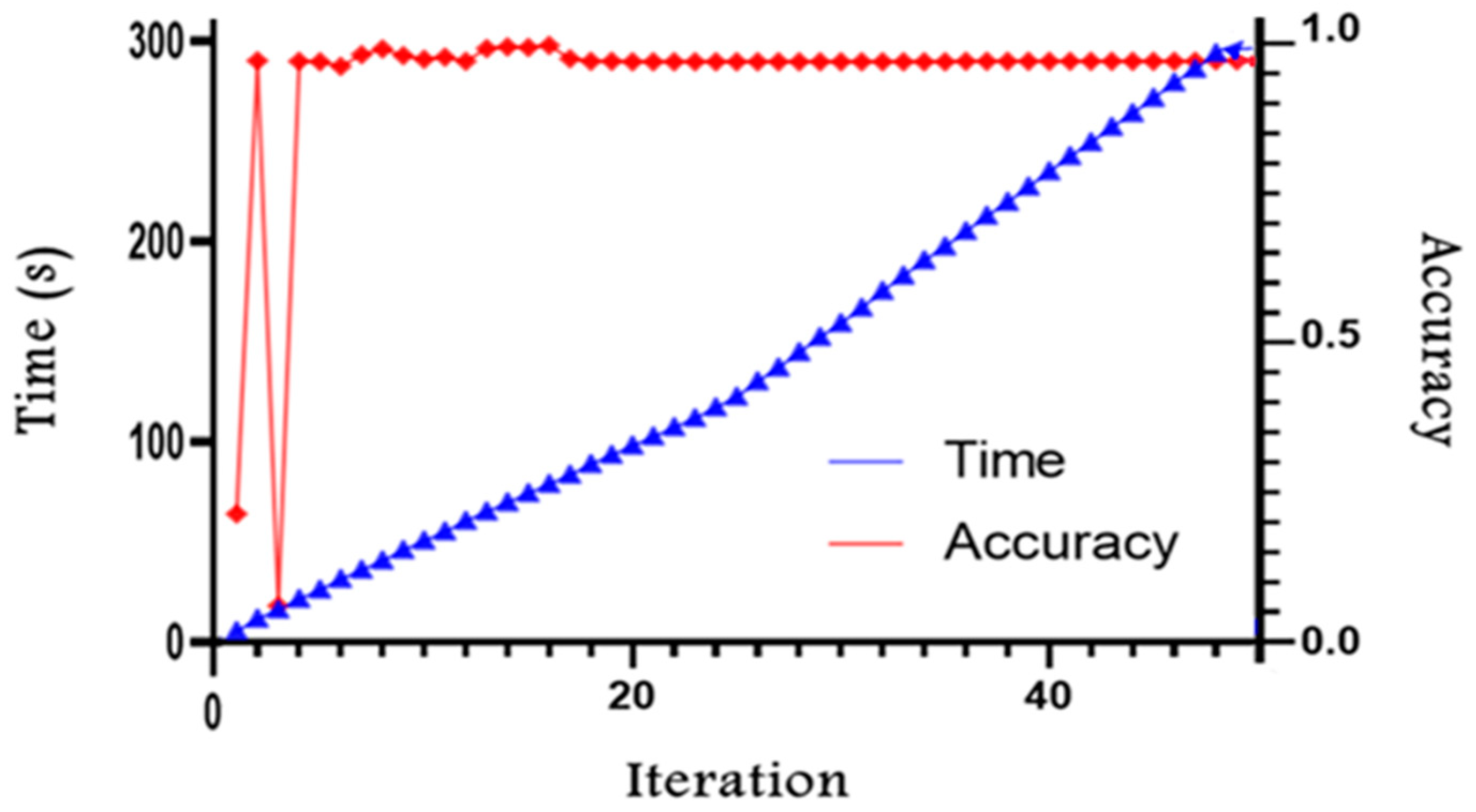

3. Results

3.1. Segmentation Evaluation Metrics

3.1.1. Dice Loss
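
The Dice coefficient between a predicted mask A and a reference mask B is 2|A ∩ B| / (|A| + |B|), and the Dice loss is 1 minus that value. The sketch below is a minimal NumPy version, assuming masks (or soft probability maps) stored as NumPy arrays. The smoothing constant `eps` is an illustrative assumption, not a value taken from the paper.

```python
import numpy as np


def dice_coefficient(pred, target, eps=1e-7):
    """Soft Dice coefficient: 2*|A∩B| / (|A| + |B|).

    `pred` may be a binary mask or a probability map in [0, 1];
    `target` is the binary reference mask. `eps` (an assumed value)
    avoids division by zero when both masks are empty.
    """
    p = np.asarray(pred, dtype=np.float64).ravel()
    t = np.asarray(target, dtype=np.float64).ravel()
    intersection = np.sum(p * t)
    return (2.0 * intersection + eps) / (np.sum(p) + np.sum(t) + eps)


def dice_loss(pred, target, eps=1e-7):
    """Dice loss used as a training objective: 1 - Dice coefficient."""
    return 1.0 - dice_coefficient(pred, target, eps)


# Usage: two 2x2 masks that share one foreground pixel.
a = np.array([[1, 1], [0, 0]])
b = np.array([[1, 0], [0, 0]])
print(round(dice_coefficient(a, b), 4))  # 2*1 / (2 + 1) ≈ 0.6667
```

Using the soft (product-based) intersection keeps the loss differentiable when `pred` holds network probabilities rather than thresholded labels.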

3.1.2. Mean IoU
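
For each class c, the intersection over union is TP / (TP + FP + FN), with the counts taken pixel-wise, and the mean IoU averages this ratio over the classes. A minimal sketch, assuming integer label maps (for example 0 = soil, 1 = crop, 2 = weed, a hypothetical labelling) and skipping classes that appear in neither map:

```python
import numpy as np


def confusion_matrix(pred, target, num_classes):
    """Pixel counts: rows are reference classes, columns are predicted classes."""
    p = np.asarray(pred, dtype=np.int64).ravel()
    t = np.asarray(target, dtype=np.int64).ravel()
    counts = np.bincount(t * num_classes + p, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)


def mean_iou(pred, target, num_classes):
    """Mean of per-class IoU = TP / (TP + FP + FN), skipping classes absent from both maps."""
    cm = confusion_matrix(pred, target, num_classes)
    tp = np.diag(cm).astype(np.float64)
    fp = cm.sum(axis=0) - tp   # predicted as class c, reference says otherwise
    fn = cm.sum(axis=1) - tp   # reference class c, predicted as something else
    union = tp + fp + fn
    valid = union > 0
    return float(np.mean(tp[valid] / union[valid]))


target = np.array([[0, 0], [1, 1]])
pred = np.array([[0, 1], [1, 1]])
print(mean_iou(pred, target, num_classes=2))  # (1/2 + 2/3) / 2 ≈ 0.583
```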

3.1.3. Mean Boundary F1 Score
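
The boundary F1 (BF) score compares contours rather than areas: precision is the fraction of predicted boundary pixels lying within a distance tolerance of the reference boundary, recall is the converse, and BF is their harmonic mean. The sketch below is a minimal NumPy version under stated assumptions: boundaries are 4-connected, and the tolerance `tol` is a hypothetical pixel radius measured with a square (Chebyshev) neighbourhood. Other implementations measure the tolerance as a Euclidean distance.

```python
import numpy as np


def boundary(mask):
    """Foreground pixels with at least one 4-connected neighbour outside the mask."""
    m = np.asarray(mask, dtype=bool)
    p = np.pad(m, 1, mode="constant", constant_values=False)
    interior = p[:-2, 1:-1] & p[2:, 1:-1] & p[1:-1, :-2] & p[1:-1, 2:] & m
    return m & ~interior


def dilate(mask, radius):
    """Binary dilation with a (2r+1) x (2r+1) square (Chebyshev distance <= r)."""
    m = np.asarray(mask, dtype=bool)
    h, w = m.shape
    p = np.pad(m, radius, mode="constant", constant_values=False)
    out = np.zeros_like(m)
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            out |= p[dy:dy + h, dx:dx + w]
    return out


def bf_score(pred, target, tol=2):
    """Boundary F1 score for one class; `tol` is an assumed pixel tolerance."""
    pb, tb = boundary(pred), boundary(target)
    if not pb.any() and not tb.any():
        return 1.0                      # both masks empty: perfect agreement
    if not pb.any() or not tb.any():
        return 0.0                      # one boundary missing entirely
    precision = np.sum(pb & dilate(tb, tol)) / np.sum(pb)
    recall = np.sum(tb & dilate(pb, tol)) / np.sum(tb)
    if precision + recall == 0:
        return 0.0
    return float(2 * precision * recall / (precision + recall))


def mean_bf_score(pred_labels, target_labels, num_classes, tol=2):
    """Average the per-class BF scores over all classes of integer label maps."""
    pred_labels = np.asarray(pred_labels)
    target_labels = np.asarray(target_labels)
    scores = [bf_score(pred_labels == c, target_labels == c, tol) for c in range(num_classes)]
    return float(np.mean(scores))


a = np.zeros((10, 10), dtype=bool)
a[2:8, 2:8] = True
b = np.zeros((10, 10), dtype=bool)
b[3:9, 3:9] = True  # same square shifted by one pixel
print(bf_score(a, b, tol=1))  # 1.0: every boundary pixel is within 1 px of the other contour
```

Because it only looks at contours, the BF score penalizes ragged or displaced leaf edges that an area-based metric such as IoU can largely absorb.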

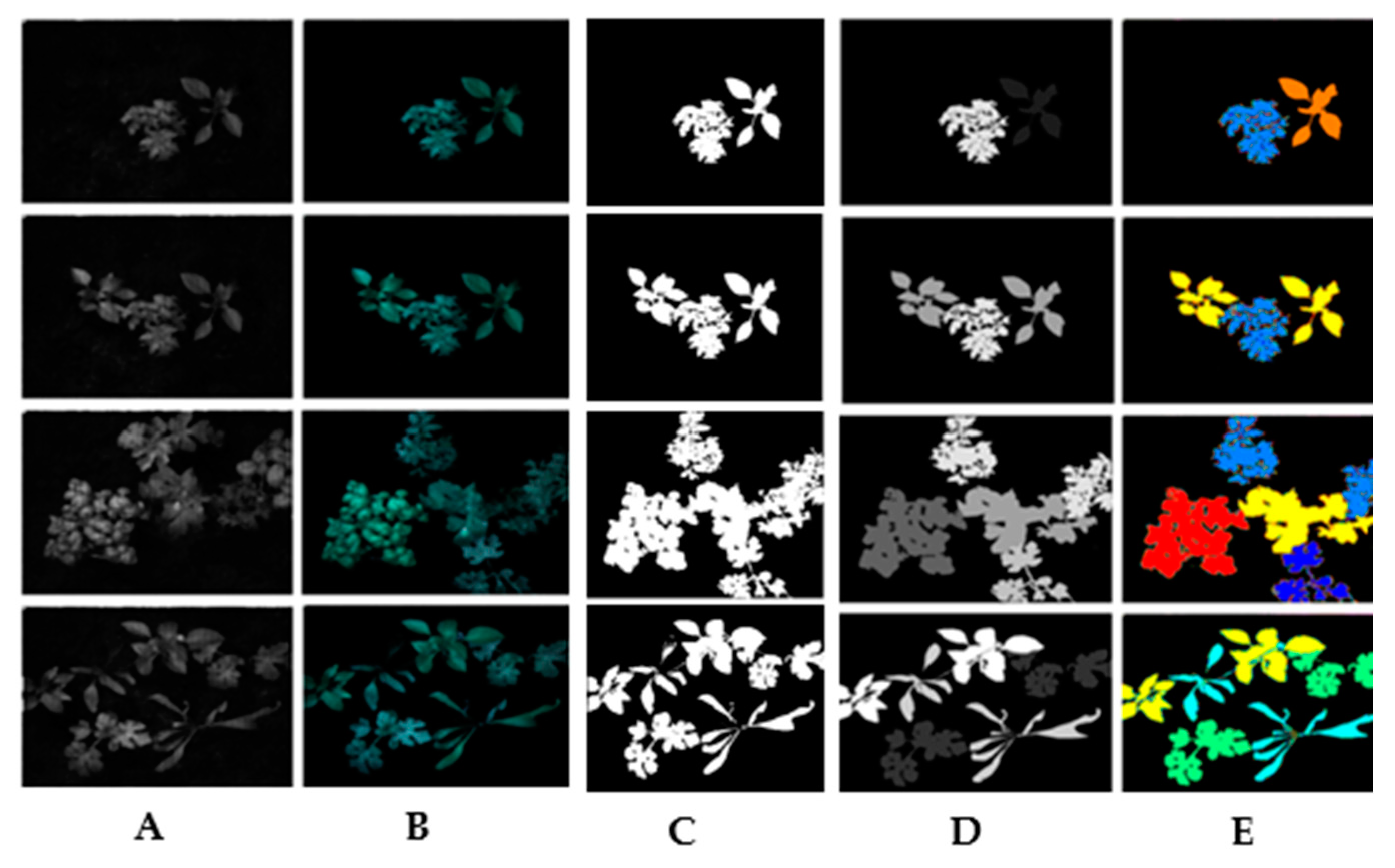

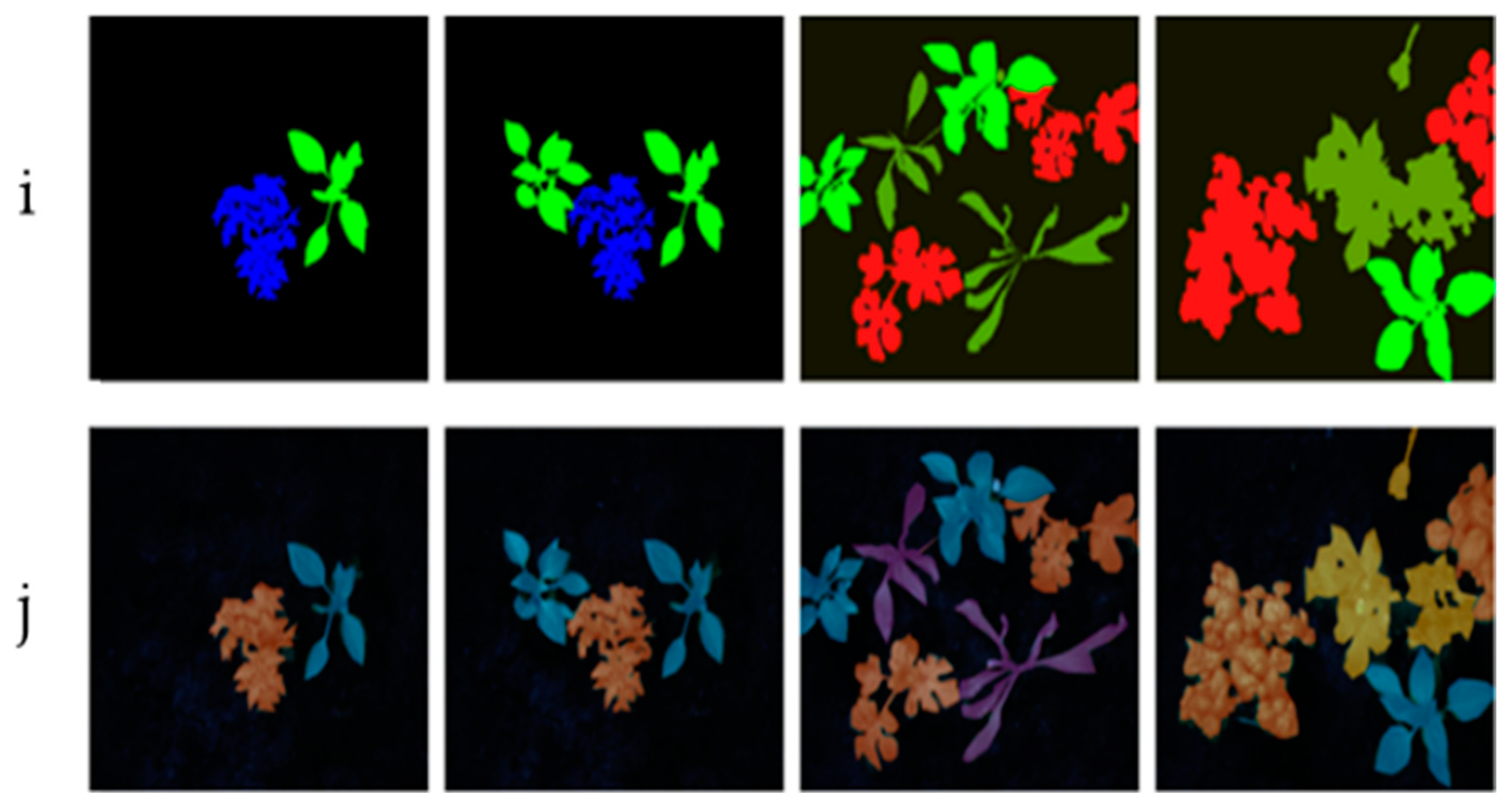

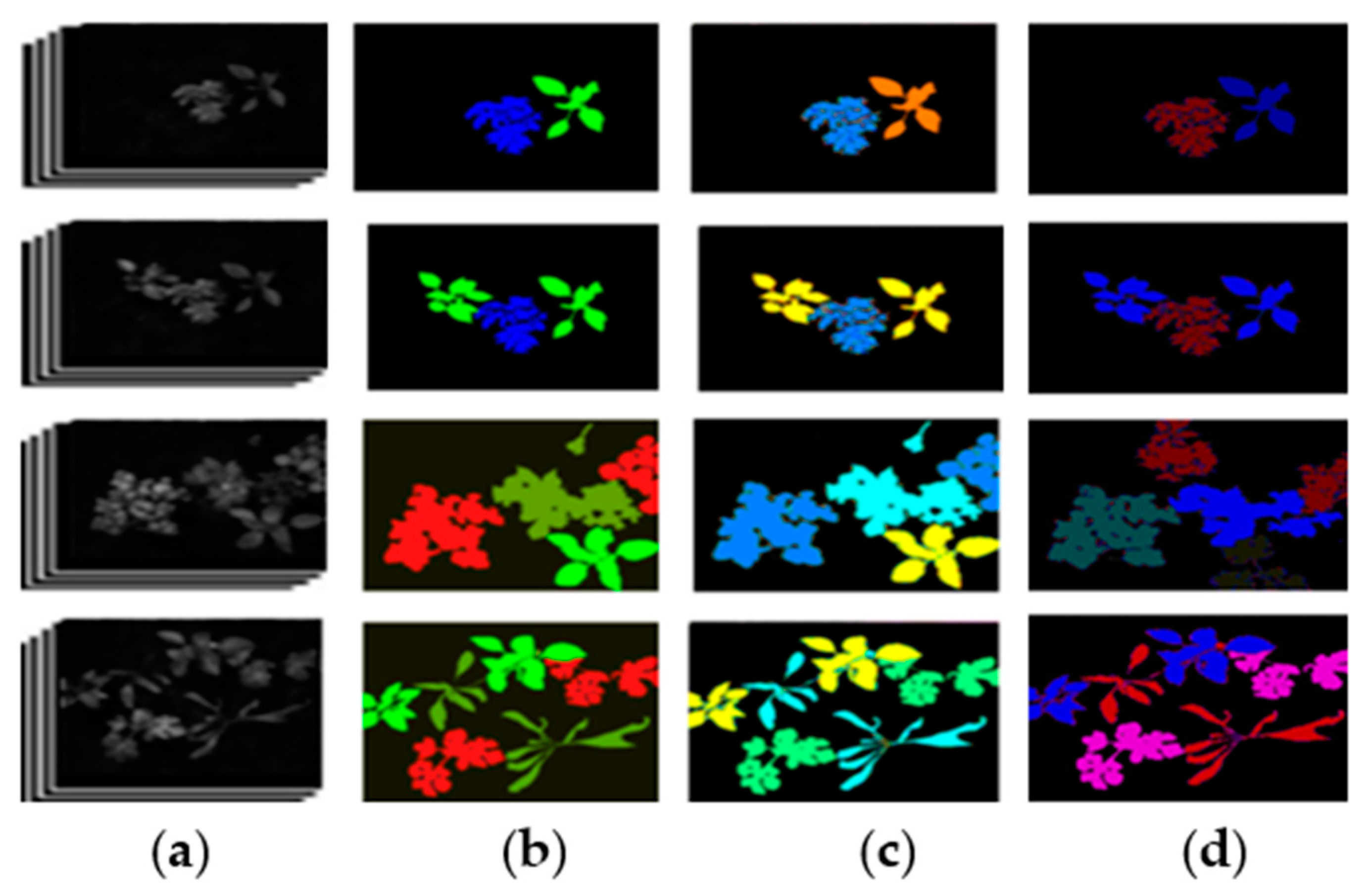

3.2. Instance Segmentation Results Using PCA Combined with U-Net
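
The section title indicates that PCA is combined with U-Net. One common way to do this, shown here only as a hypothetical sketch, is to project each pixel's multispectral vector onto the first few principal components so that a B-band cube becomes a 3-channel image that a standard U-Net input layer can accept. The choice of three components and the per-component min-max rescaling are assumptions, not details taken from the paper.

```python
import numpy as np


def pca_reduce(cube, n_components=3):
    """Project an (H, W, B) multispectral cube onto its first principal components.

    Returns an (H, W, n_components) array of component scores and the
    fraction of total variance explained by each retained component.
    """
    h, w, b = cube.shape
    x = cube.reshape(-1, b).astype(np.float64)   # one row per pixel, one column per band
    x -= x.mean(axis=0)                          # centre each band
    cov = x.T @ x / (x.shape[0] - 1)             # B x B band covariance
    eigvals, eigvecs = np.linalg.eigh(cov)       # eigh returns ascending eigenvalues
    order = np.argsort(eigvals)[::-1]            # sort components by decreasing variance
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    scores = x @ eigvecs[:, :n_components]
    explained = eigvals[:n_components] / eigvals.sum()
    return scores.reshape(h, w, n_components), explained


def to_unit_range(img):
    """Min-max rescale each channel to [0, 1] (hypothetical preprocessing step)."""
    lo = img.min(axis=(0, 1), keepdims=True)
    hi = img.max(axis=(0, 1), keepdims=True)
    return (img - lo) / np.maximum(hi - lo, 1e-12)


# Usage with a synthetic 8-band cube (random data, for illustration only).
rng = np.random.default_rng(0)
cube = rng.random((64, 64, 8))
pcs, ratio = pca_reduce(cube)
net_input = to_unit_range(pcs)
print(net_input.shape, ratio.round(3))
```

Eigen-decomposing the small B x B band covariance, rather than running an SVD on the full pixel matrix, keeps memory proportional to the number of bands instead of the number of pixels.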

3.3. Broadband Instance Segmentation

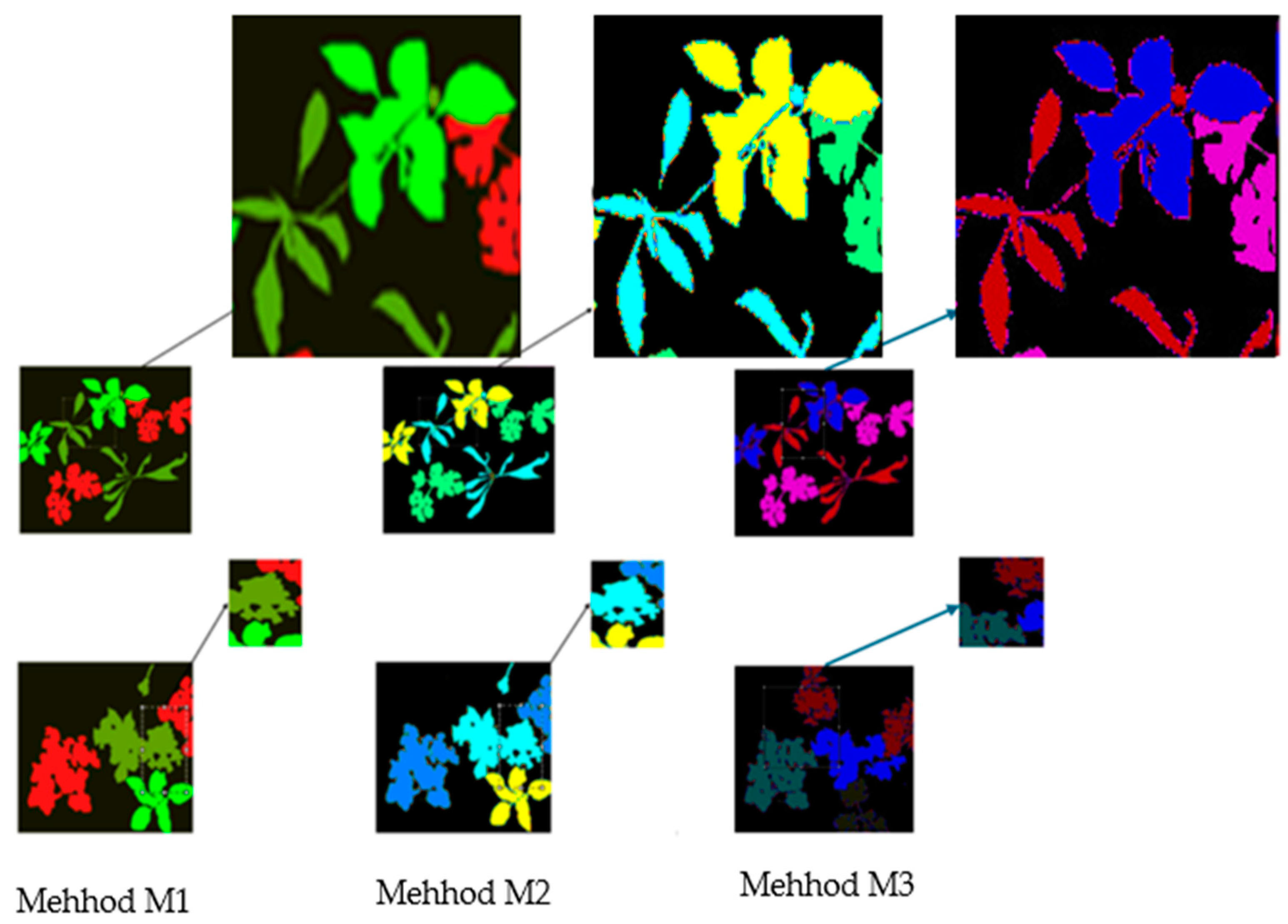

4. Discussion


- M2 exhibits high variability, which could compromise its reliability in certain configurations.


- M3 is the least efficient method, with lower accuracy, reduced precision, and larger errors; its values on the other metrics are also poor.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| WMI | Wide-band multispectral imaging |
| LCTF | Liquid Crystal Tunable Filter |
| AOTF | Acousto-Optical Tunable Filter |
| CNNs | Convolutional neural networks |
| NIR | Near-infrared |
| MS | Multispectral |
| VIS | Visible |
| PCA | Principal Component Analysis |
| PIF-Net | Part-based Instance Feature Network |
| PReLU | Parametric Rectified Linear Unit |
| HRMSI | High-resolution multispectral images |
| MSFA | Multispectral filter arrays |
| RMSE | Root Mean Square Error |
| sRGB | Standard RGB |


| Parameter | VIS | NIR |
|---|---|---|
| Min Delta E 2000 | 0.0047 | 0.0051 |
| Max Delta E 2000 | 0.3500 | 2.2575 |
| Mean Delta E 2000 | 0.0562 | 0.0937 |
| Median Delta E 2000 | 0.0465 | 0.0658 |
| STD Delta E 2000 | 0.0402 | 0.1320 |
| Min RMS | 0.0020 | 0.0021 |
| Max RMS | 0.0740 | 0.0986 |
| Mean RMS | 0.0123 | 0.0108 |
| GFC > 0.99 | 99% | 98% |
| GFC > 0.95 | 100% | 100% |
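
The VIS/NIR table above reports RMS error and the goodness-of-fit coefficient (GFC), assumed here to compare reference and estimated spectra stored one spectrum per row. For a reference spectrum R and an estimate R' sampled at wavelengths λj, GFC = |Σj R(λj) R'(λj)| / (sqrt(Σj R(λj)²) · sqrt(Σj R'(λj)²)), so it equals 1 for a perfect match in spectral shape regardless of scale. The minimal sketch below computes per-spectrum RMS and GFC and the summary rows of the table. The Delta E 2000 rows are not reproduced because they require a full colorimetric conversion, and the `summarize` helper is hypothetical.

```python
import numpy as np


def rms_error(ref, est):
    """Per-spectrum root-mean-square error; spectra are stored one per row."""
    ref = np.asarray(ref, dtype=np.float64)
    est = np.asarray(est, dtype=np.float64)
    return np.sqrt(np.mean((ref - est) ** 2, axis=-1))


def gfc(ref, est):
    """Goodness-of-fit coefficient: 1.0 means identical spectral shape."""
    ref = np.asarray(ref, dtype=np.float64)
    est = np.asarray(est, dtype=np.float64)
    num = np.abs(np.sum(ref * est, axis=-1))
    den = np.sqrt(np.sum(ref ** 2, axis=-1)) * np.sqrt(np.sum(est ** 2, axis=-1))
    return num / den


def summarize(ref, est):
    """Summary statistics in the style of the VIS/NIR table (hypothetical helper)."""
    rms = rms_error(ref, est)
    g = gfc(ref, est)
    return {
        "min_rms": float(rms.min()),
        "max_rms": float(rms.max()),
        "mean_rms": float(rms.mean()),
        "gfc_gt_0.99_pct": 100.0 * float(np.mean(g > 0.99)),
        "gfc_gt_0.95_pct": 100.0 * float(np.mean(g > 0.95)),
    }


ref = np.array([[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]])
est = np.array([[0.1, 0.2, 0.3], [0.8, 1.0, 1.2]])  # second row: same shape, doubled
print(summarize(ref, est))
```

The second example row shows why the two metrics complement each other: a uniformly scaled estimate keeps a GFC of 1 while its RMS error is clearly non-zero.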

| Criteria | Method M1 | Method M3 | Method M2 |
|---|---|---|---|
| Accuracy | 98.2% | 97.2% | 97.1% |
| Dice Coefficient | 0.8279 ± 0.16% | 0.8249 ± 5.17% | 0.8233 ± 3.19% |
| RMSE | 0.0007 | 0.0011 | 0.0022 |

| Criteria | M1 | M3 | M2 |
|---|---|---|---|
| Accuracy | 98.2% | 97.2% | 97.1% |
| Recall | 0.89017 | 0.82643 | 0.78801 |
| Mean IoU | 0.92011 | 0.89711 | 0.88011 |
| Mean BF Score | 0.71082 | 0.48082 | 0.40082 |
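
The accuracy and recall rows above, and the precision mentioned in the Discussion, follow from pixel-wise counts on binary masks: accuracy = (TP + TN) / N, precision = TP / (TP + FP) and recall = TP / (TP + FN). A minimal sketch, assuming masks in which 1 marks the positive (plant or weed) class. Which class the evaluation treats as positive is an assumption here.

```python
import numpy as np


def pixel_metrics(pred, target):
    """Pixel accuracy, precision and recall for binary masks (1 = positive class)."""
    p = np.asarray(pred, dtype=bool).ravel()
    t = np.asarray(target, dtype=bool).ravel()
    tp = int(np.sum(p & t))     # positive pixels correctly predicted
    fp = int(np.sum(p & ~t))    # background predicted as positive
    fn = int(np.sum(~p & t))    # positive pixels missed
    accuracy = float(np.mean(p == t))
    precision = tp / (tp + fp) if tp + fp else float("nan")
    recall = tp / (tp + fn) if tp + fn else float("nan")
    return {"accuracy": accuracy, "precision": precision, "recall": recall}


print(pixel_metrics([1, 1, 0, 0], [1, 0, 1, 0]))
# {'accuracy': 0.5, 'precision': 0.5, 'recall': 0.5}
```

Because background pixels usually dominate field images, accuracy can stay high even when recall on the small plant and weed regions drops, which is why the table reports both.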

| Epochs | Time Elapsed (s) | Mini-Batch Accuracy (%) | Mini-Batch Loss | Base Learning Rate |
|---|---|---|---|---|
| 1 | 6 | 55.52 | 6.45 | 0.001 |
| 10 | 61 | 96.91 | 0.65 | 0.001 |
| 20 | 121 | 97.66 | 0.32 | 0.001 |
| 30 | 182 | 97.97 | 0.19 | 0.001 |
| 40 | 246 | 99.45 | 0.12 | 0.001 |
| 50 | 300 | 99.49 | 0.10 | 0.001 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Sodjinou, S.G.; Mahama, A.T.S.; Gouton, P. Automatic Segmentation of Plants and Weeds in Wide-Band Multispectral Imaging (WMI). J. Imaging 2025, 11, 85. https://doi.org/10.3390/jimaging11030085


Chicago/Turabian style: Sodjinou, Sovi Guillaume, Amadou Tidjani Sanda Mahama, and Pierre Gouton. 2025. "Automatic Segmentation of Plants and Weeds in Wide-Band Multispectral Imaging (WMI)" Journal of Imaging 11, no. 3: 85. https://doi.org/10.3390/jimaging11030085
