Optimizing Deep Learning Models for Climate-Related Natural Disaster Detection from UAV Images and Remote Sensing Data

Abstract

1. Introduction

1.1. Introduction on Climate Change

1.2. Current Methods on Impact of Climate Change

1.3. Related AI Research Studies

1.4. AI in Aerial Imagery

1.5. AI Research Utilizing Our Data Source

1.6. Purpose of Our Research Study

2. Materials and Methods

2.1. Compiling the Climate Change Dataset

- The Louisiana Flood 2016 [44] dataset:
  - contained aerial images from the historic flooding that occurred in Southern Louisiana in 2016. For each image taken during the flood, there was a corresponding image before/after the flood.
  - image size: 512 × 360 pixels.

- The FDL_UAV_flood areas [45] dataset:
  - contained aerial images of Houston, TX from Hurricane Harvey. The dataset contained both flooded and unflooded images.
  - image size: approximately 3K × 4K pixels.

- The Cyclone, Wildfire, Flood, Earthquake Database [46]:
  - contained videos and images from various natural disasters. We selected images from the Flood folder. These images were obtained from a Google search on each natural disaster included in the dataset.
  - image size: variable.

- The Satellite Image Classification [47] dataset:
  - was created from sensors and Google Maps snapshots.
  - image size: 256 × 256 pixels.

- The Disasters Dataset [48]:
  - contained images from numerous natural disasters.
  - the images were resized to 224 × 224 pixels.

- Aerial Landscape Images [49]:

- Aerial Images of Cities [50]:

- Forest Aerial Images for Segmentation [51]:
  - contained satellite images of forest land cover. The dataset was obtained from the Land Cover Classification Track of the DeepGlobe Challenge [51].
  - the images were resized to 256 × 256 pixels.

2.2. Preprocessing and Model Initiation

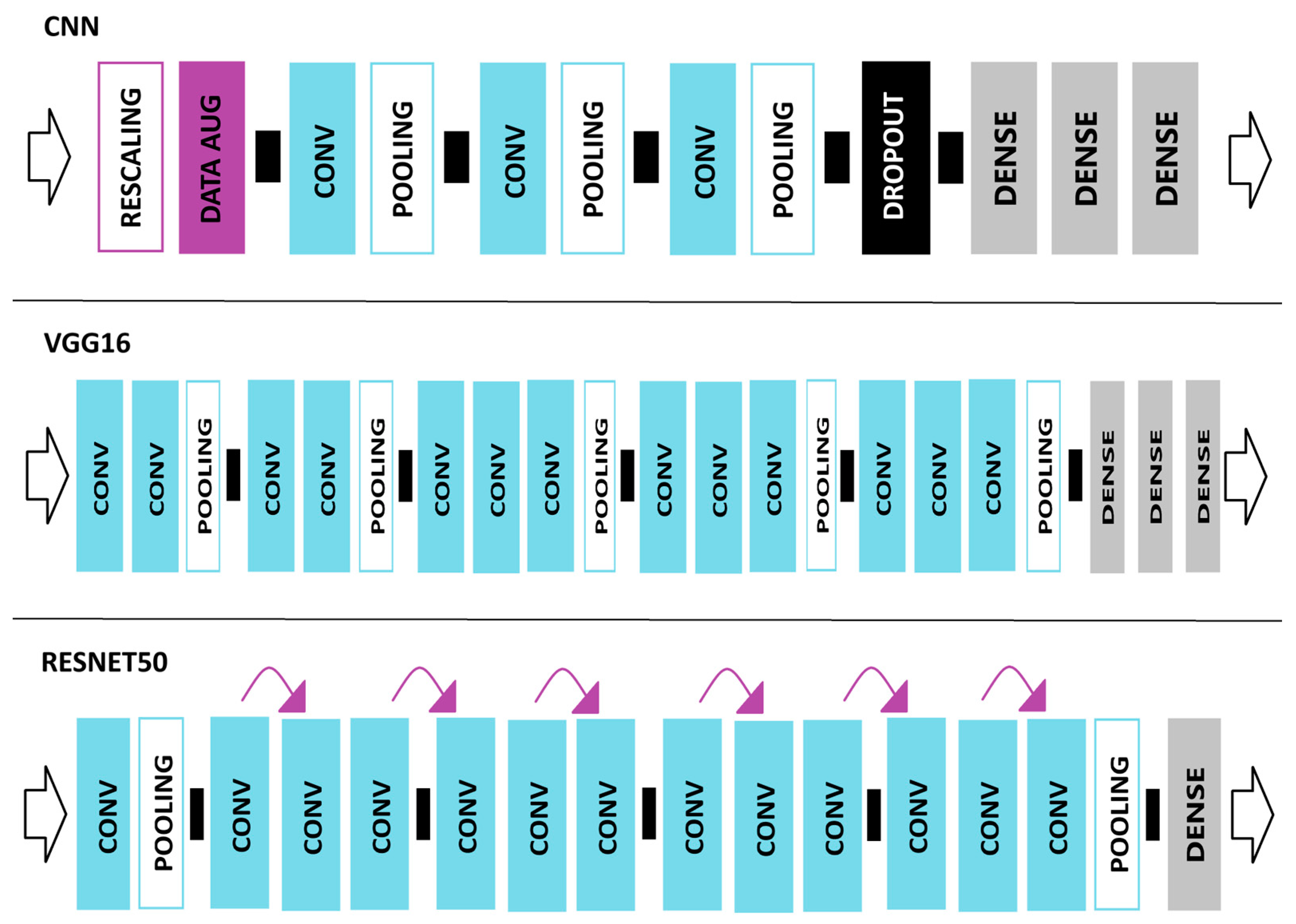

2.3. VGG16 Network Model

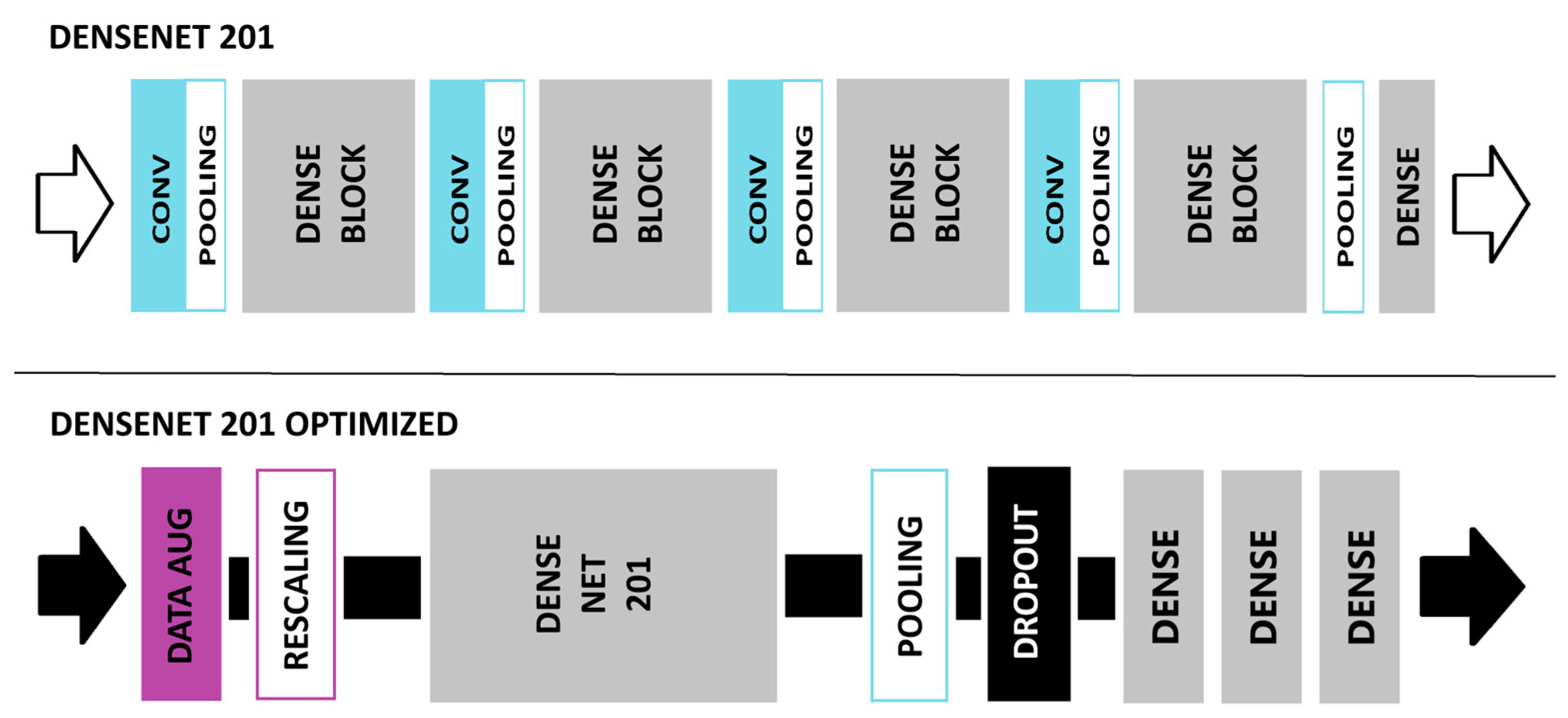

2.4. DenseNet201 Network Model

2.4.1. Data Augmentation Layer

2.4.2. Rescaling Layer

2.4.3. Global Average Pooling Layer

2.4.4. Dropout Layer

2.4.5. Fully Connected Layer and Classifier

2.5. ResNet50 Network Model

2.6. Transfer Learning Framework

2.7. Convolutional Neural Network (CNN) Model

2.7.1. CNN Layers

- 1 rescaling layer;

- 1 data augmentation layer;

- 3 convolutional layers;

- 3 pooling layers;

- 1 drop-out layer;

- 3 fully connected (FC) layers.
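The layer counts listed above can be sketched as an ordered stack and traced for feature-map shapes. This is only an illustration under stated assumptions: the input size (224 × 224 × 3), the filter counts (16/32/64), the 3 × 3 "same"-padded convolutions, the 2 × 2 pooling, the dropout rate, and the FC widths (128/64/3) are all hypothetical values chosen for the example, not settings reported by the authors. Only the number of layers of each type and the three output categories (Desert, Flooded, Neither) come from the text.

```python
from collections import Counter

# Hypothetical layer stack that matches the listed counts.
# Every numeric setting below is an assumption made for illustration.
LAYERS = [
    ("rescaling", {}),
    ("augmentation", {}),
    ("conv", {"filters": 16}),
    ("pool", {}),
    ("conv", {"filters": 32}),
    ("pool", {}),
    ("conv", {"filters": 64}),
    ("pool", {}),
    ("dropout", {"rate": 0.2}),
    ("fc", {"units": 128}),
    ("fc", {"units": 64}),
    ("fc", {"units": 3}),  # one unit per category: Desert, Flooded, Neither
]


def trace_shapes(input_shape, layers):
    """Return a list of (layer_kind, output_shape) for each layer.

    Assumed behaviour per layer kind:
      conv -> 3x3 kernel with 'same' padding: height/width unchanged,
              channel count becomes the number of filters
      pool -> 2x2 window with stride 2: height/width are floor-halved
      fc   -> output is a vector of `units` values (input is flattened)
      rescaling, augmentation, dropout -> shape unchanged
    """
    shape = tuple(input_shape)
    traced = []
    for kind, cfg in layers:
        if kind == "conv":
            height, width, _ = shape
            shape = (height, width, cfg["filters"])
        elif kind == "pool":
            height, width, channels = shape
            shape = (height // 2, width // 2, channels)
        elif kind == "fc":
            shape = (cfg["units"],)
        traced.append((kind, shape))
    return traced


layer_counts = Counter(kind for kind, _ in LAYERS)
shapes = trace_shapes((224, 224, 3), LAYERS)

for kind, shape in shapes:
    print(f"{kind:<13}{shape}")
```

Under these assumed settings, the three pooling steps reduce a 224 × 224 input to 28 × 28 before the fully connected layers, which is why pooling has a large effect on the size of the first FC layer.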

2.7.2. Pooling Layers

2.8. Experimental Setup

- Collaborate online with code/feedback;

- Accelerate our ML workload with Google GPUs/TPUs;

- Utilize Google’s cloud computing resources.

2.9. Evaluation Metrics

2.10. Cross-Validation Methods

3. Results

3.1. Individual Model Performance

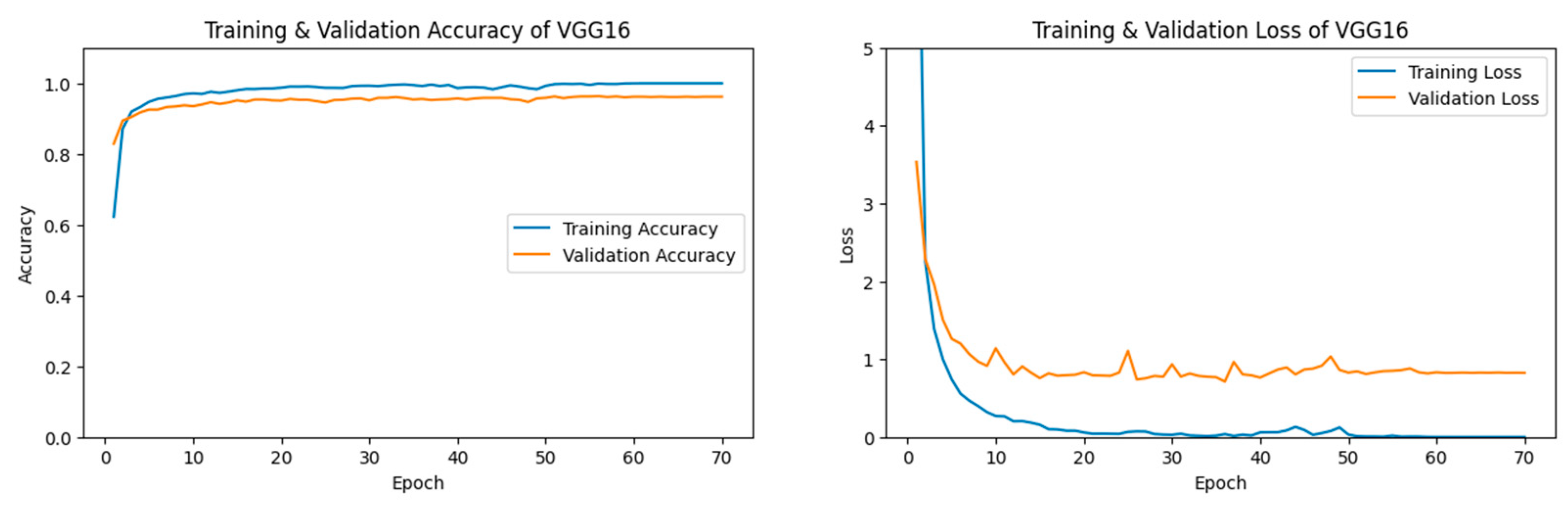

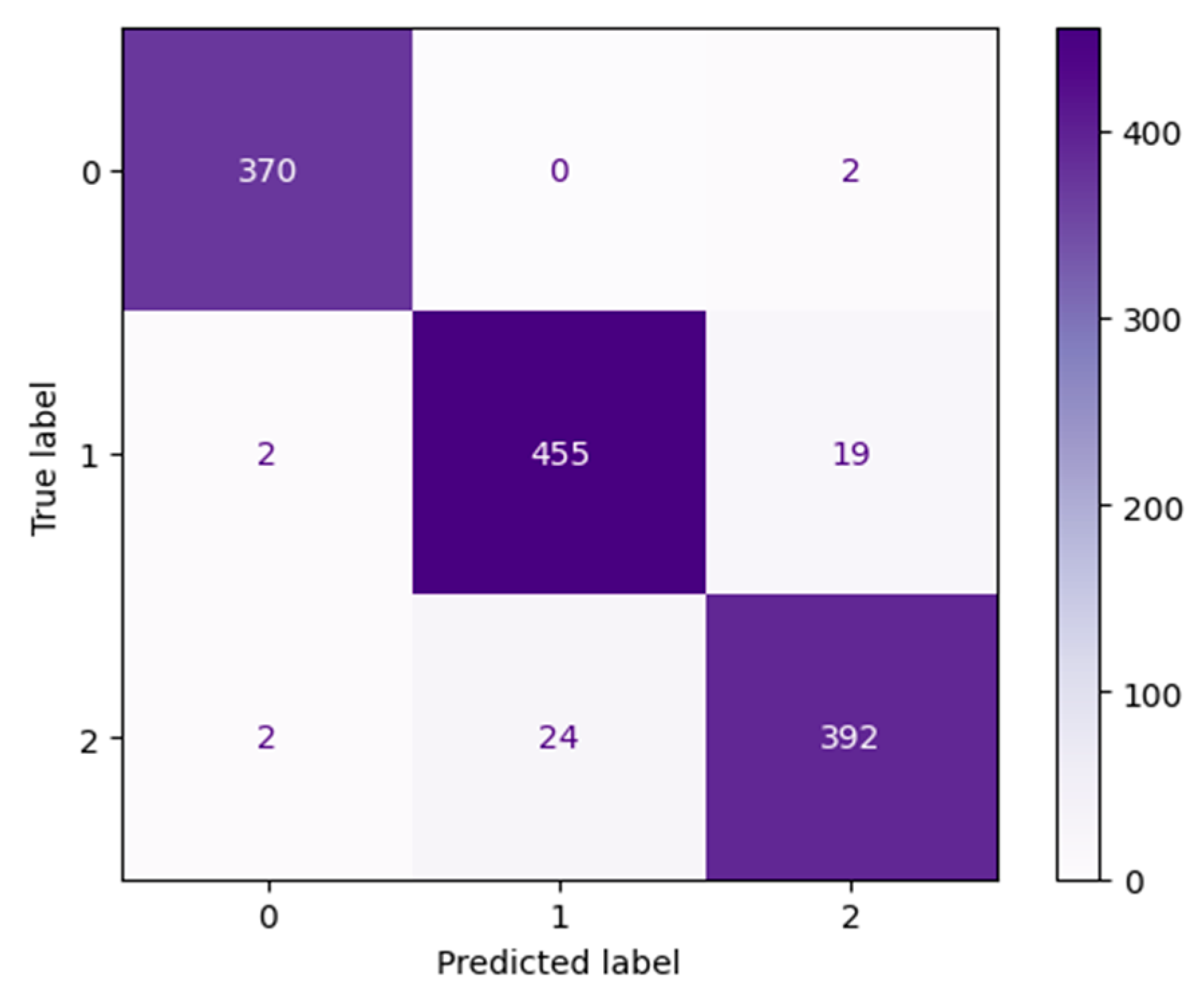

3.1.1. VGG16 Performance

3.1.2. DenseNet Performance

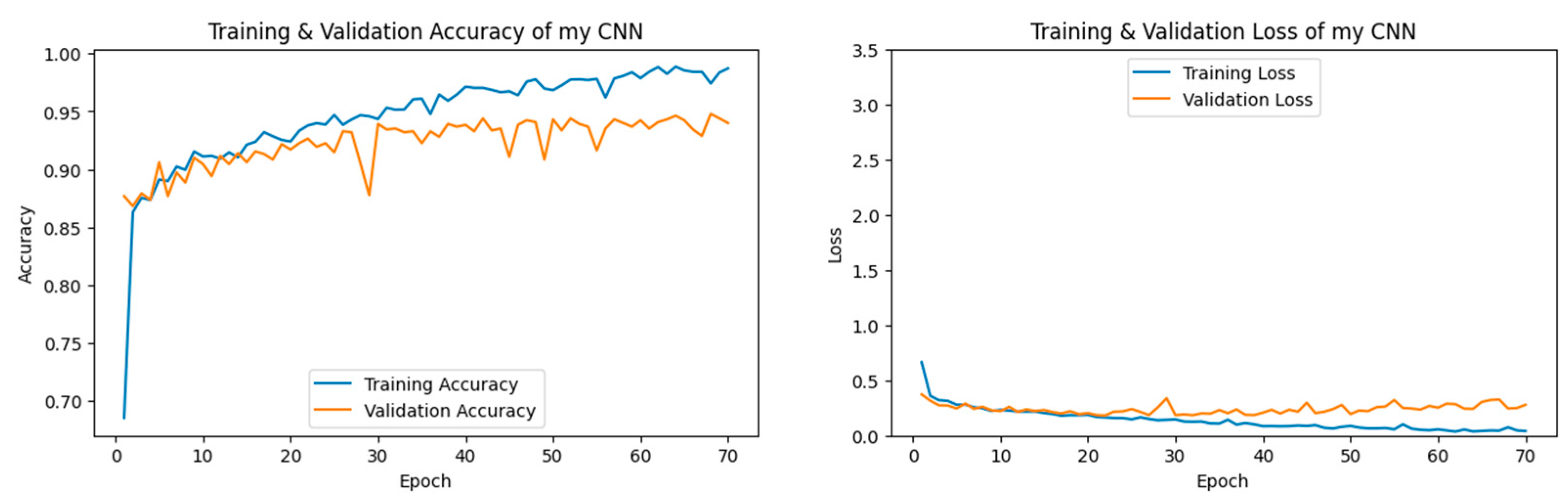

3.1.3. CNN Performance

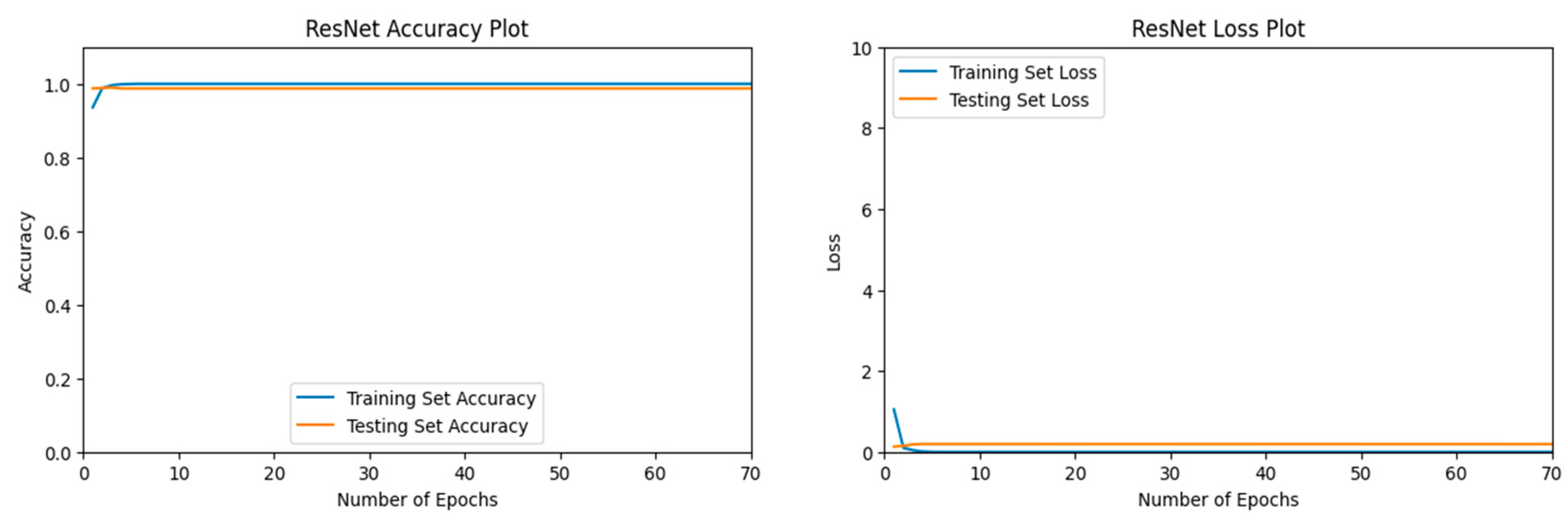

3.1.4. ResNet Performance

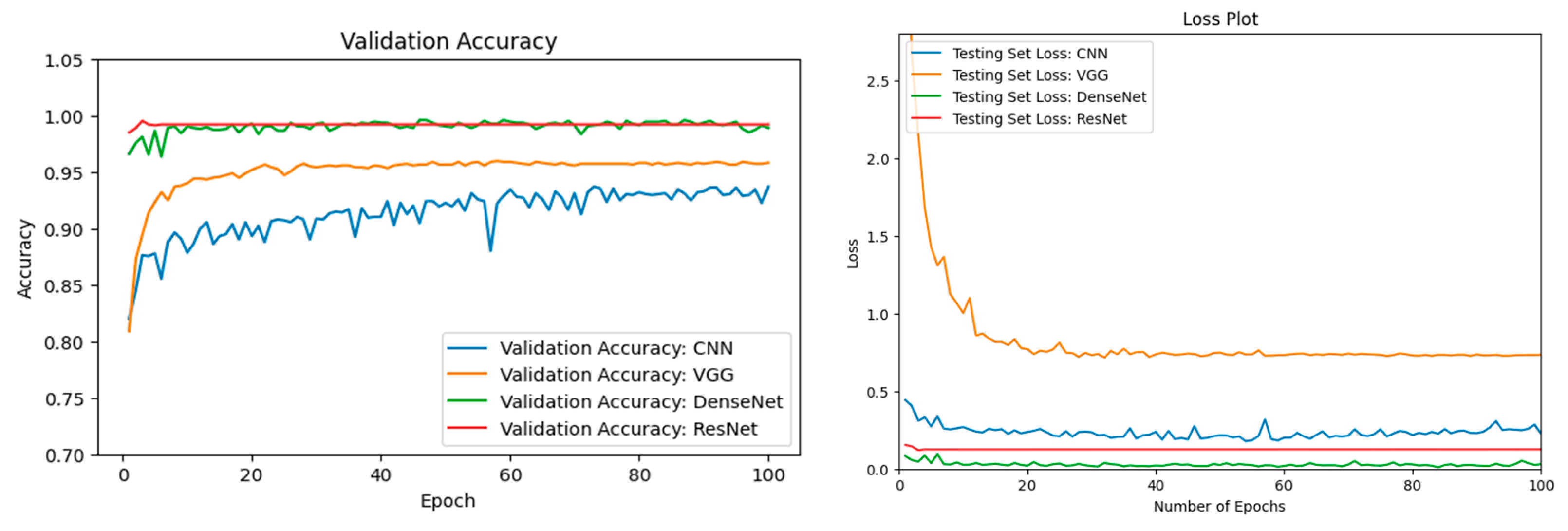

3.2. ML Model Comparison

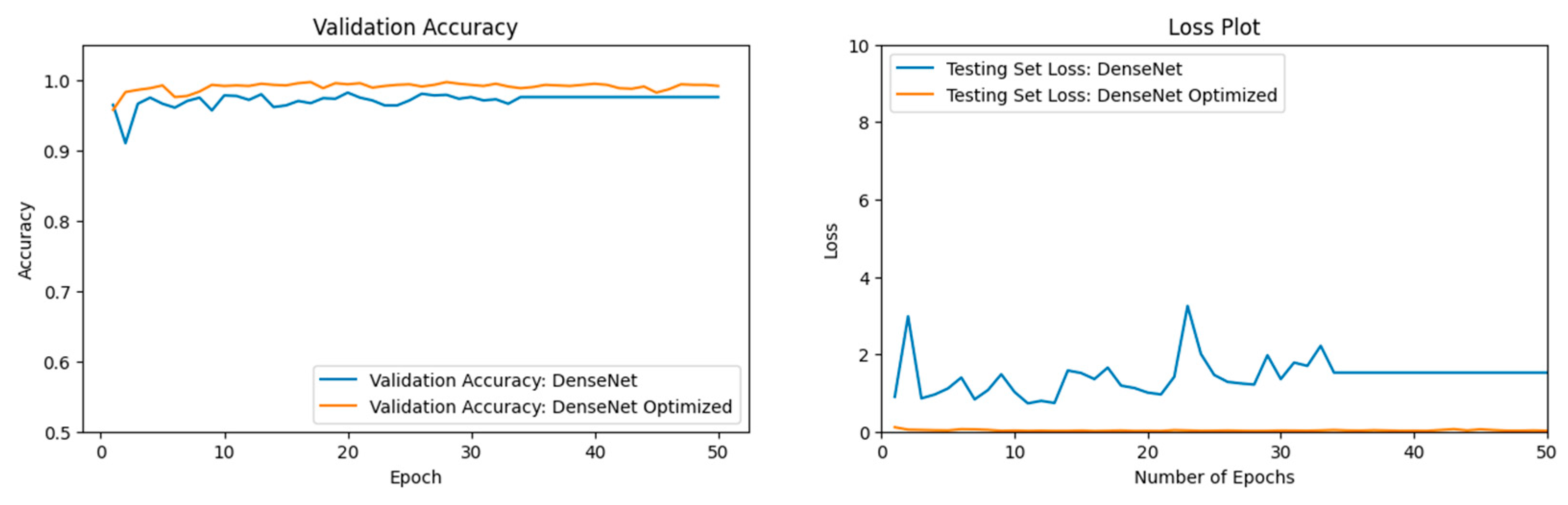

3.3. Optimization of DenseNet

3.4. ResNet vs. DenseNet Optimized

3.5. Cross-Validation

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Clarke, B.; Otto, F.; Stuart-Smith, R.; Harrington, L. Extreme weather impacts of climate change: An attribution perspective. Environ. Res. Clim. 2022, 1, 012001.
2. Intergovernmental Panel on Climate Change (IPCC). Climate Change 2021 – The Physical Science Basis: Working Group I Contribution to the Sixth Assessment Report of the Intergovernmental Panel on Climate Change; Cambridge University Press: Cambridge, UK, 2023.
3. Raymond, C.; Matthews, T.; Horton, R.M. The emergence of heat and humidity too severe for human tolerance. Sci. Adv. 2020, 6, eaaw1838.
4. Delforge, D.; Wathelet, V.; Below, R.; Sofia, C.L.; Tonnelier, M.; van Loenhout, J.; Speybroeck, N. EM-DAT: The Emergency Events Database. Res. Sq. 2023, preprint.
5. Cho, C.; Li, R.; Wang, S.Y.; Yoon, J.H.; Gillies, R.R. Anthropogenic Footprint of Climate Change in the June 2013 Northern India Flood. Clim. Dyn. 2015, 46, 797–805.
6. Pall, P.; Patricola, C.M.; Wehner, M.F.; Stone, D.A.; Paciorek, C.J.; Collins, W.D. Diagnosing conditional anthropogenic contributions to heavy Colorado rainfall in September 2013. Weather Clim. Extrem. 2017, 17, 1–6.
7. van der Wiel, K.; Kapnick, S.B.; van Oldenborgh, G.J.; Whan, K.; Philip, S.; Vecchi, G.A.; Singh, R.K.; Arrighi, J.; Cullen, H. Rapid attribution of the August 2016 flood-inducing extreme precipitation in south Louisiana to climate change. Hydrol. Earth Syst. Sci. 2017, 21, 897–921.
8. Philip, S.; Kew, S.F.; van Oldenborgh, G.J.; Aalbers, E.; Vautard, R.; Otto, F.; Haustein, K.; Habets, F.; Singh, R. Validation of a rapid attribution of the May/June 2016 flood-inducing precipitation in France to climate change. J. Hydrometeorol. 2018, 19, 1881–1898.
9. Teufel, B.; Sushama, L.; Huziy, O.; Diro, G.T.; Jeong, D.I.; Winger, K.; Garnaud, C.; de Elia, R.; Zwiers, F.W.; Matthews, H.D.; et al. Investigation of the mechanisms leading to the 2017 Montreal flood. Clim. Dyn. 2018, 52, 4193–4206.
10. Huang, J.; Zhang, G.; Zhang, Y.; Guan, X.; Wei, Y.; Guo, R. Global desertification vulnerability to climate change and human activities. Land Degrad. Dev. 2020, 31, 1380–1391.
11. UNCCD. United Nations Convention to Combat Desertification in Countries Experiencing Serious Drought and/or Desertification, Particularly in Africa. Paris. 1994. Available online: https://www.researchgate.net/profile/Salah-Tahoun/publication/2870529_Scientific_aspects_of_the_United_Nations_convention_to_combat_desertification/links/558008b908aeea18b77a835d/Scientific-aspects-of-the-United-Nations-convention-to-combat-desertification.pdf (accessed on 1 October 2024).
12. Nicholson, S.E.; Tucker, C.J.; Ba, M.B. Desertification, Drought, and Surface Vegetation: An Example from the West African Sahel. Bull. Am. Meteorol. Soc. 1998, 79, 815–829.
13. Sivakumar, M.V.K. Interactions between climate and desertification. Agric. For. Meteorol. 2007, 142, 143–155.
14. Millennium Ecosystem Assessment (MEA). Ecosystems and Human Well-Being: Desertification Synthesis; World Resources Institute: Washington, DC, USA, 2005.
15. Hernandez, D.; Cano, J.-C.; Silla, F.; Calafate, C.T.; Cecilia, J.M. AI-Enabled Autonomous Drones for Fast Climate Change Crisis Assessment. IEEE Internet Things J. 2021, 9, 7286–7297.
16. Alsumayt, A.; El-Haggar, N.; Amouri, L.; Alfawaer, Z.M.; Aljameel, S.S. Smart Flood Detection with AI and Blockchain Integration in Saudi Arabia Using Drones. Sensors 2023, 23, 5148.
17. Intizhami, N.S.; Nuranti, E.Q.; Bahar, N.I. Dataset for flood area recognition with semantic segmentation. Data Brief 2023, 51, 109768.
18. Alrayes, F.S.; Alotaibi, S.S.; Alissa, K.A.; Maashi, M.; Alhogail, A.; Alotaibi, N.; Mohsen, H.; Motwakel, A. Artificial Intelligence-Based Secure Communication and Classification for Drone-Enabled Emergency Monitoring Systems. Drones 2022, 6, 222.
19. Karanjit, R.; Pally, R.; Samadi, S. FloodIMG: Flood image DataBase system. Data Brief 2023, 48, 109164.
20. Malik, I.; Ahmed, M.; Gulzar, Y.; Baba, S.H.; Mir, M.S.; Soomro, A.B.; Sultan, A.; Elwasila, O. Estimation of the Extent of the Vulnerability of Agriculture to Climate Change Using Analytical and Deep-Learning Methods: A Case Study in Jammu, Kashmir, and Ladakh. Sustainability 2023, 15, 11465.
21. Seelwal, P.; Dhiman, P.; Gulzar, Y.; Kaur, A.; Wadhwa, S.; Onn, C.W. A systematic review of deep learning applications for rice disease diagnosis: Current trends and future directions. Front. Comput. Sci. 2024, 6, 1452961.
22. Alkanan, M.; Gulzar, Y. Enhanced corn seed disease classification: Leveraging MobileNetV2 with feature augmentation and transfer learning. Front. Appl. Math. Stat. 2024, 9, 1320177.
23. Nordhaus, W.D.; Mendelsohn, R.; Shaw, D. The Impact of Global Warming on Agriculture: A Ricardian Analysis. Am. Econ. Rev. 1994, 84, 753–771.
24. Hamlington, B.D.; Tripathi, A.; Rounce, D.R.; Weathers, M.; Adams, K.H.; Blackwood, C.; Carter, J.; Collini, R.C.; Engeman, L.; Haasnoot, M.; et al. Satellite monitoring for coastal dynamic adaptation policy pathways. Clim. Risk Manag. 2023, 42, 100555.
25. Dash, J.P.; Pearse, G.D.; Watt, M.S. UAV Multispectral Imagery Can Complement Satellite Data for Monitoring Forest Health. Remote Sens. 2018, 10, 1216.
26. Dilmurat, K.; Sagan, V.; Moose, S. AI-driven maize yield forecasting using unmanned aerial vehicle-based hyperspectral and lidar data fusion. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, V-3-2022, 193–199.
27. Raniga, D.; Amarasingam, N.; Sandino, J.; Doshi, A.; Barthelemy, J.; Randall, K.; Robinson, S.A.; Gonzalez, F.; Bollard, B. Monitoring of Antarctica’s Fragile Vegetation Using Drone-Based Remote Sensing, Multispectral Imagery and AI. Sensors 2024, 24, 1063.
28. Santangeli, A.; Chen, Y.; Kluen, E.; Chirumamilla, R.; Tiainen, J.; Loehr, J. Integrating drone-borne thermal imaging with artificial intelligence to locate bird nests on agricultural land. Sci. Rep. 2020, 10, 10993.
29. Malamiri, H.R.G.; Aliabad, F.A.; Shojaei, S.; Morad, M.; Band, S.S. A study on the use of UAV images to improve the separation accuracy of agricultural land areas. Comput. Electron. Agric. 2021, 184, 106079.
30. Alvarez-Vanhard, E.; Corpetti, T.; Houet, T. UAV & satellite synergies for optical remote sensing applications: A literature review. Sci. Remote Sens. 2021, 3, 100019.
31. Marx, A.; McFarlane, D.; Alzahrani, A. UAV data for multi-temporal Landsat analysis of historic reforestation: A case study in Costa Rica. Int. J. Remote Sens. 2017, 38, 2331–2348.
32. Hassan, N.; Miah, A.S.M.; Shin, J. Residual-Based Multi-Stage Deep Learning Framework for Computer-Aided Alzheimer’s Disease Detection. J. Imaging 2024, 10, 141.
33. Ibrahim, A.M.; Elbasheir, M.; Badawi, S.; Mohammed, A.; Alalmin, A.F.M. Skin Cancer Classification Using Transfer Learning by VGG16 Architecture (Case Study on Kaggle Dataset). J. Intell. Learn. Syst. Appl. 2023, 15, 67–75.
34. Abu Sultan, A.B.; Abu-Naser, S.S. Predictive Modeling of Breast Cancer Diagnosis Using Neural Networks: A Kaggle Dataset Analysis. Int. J. Acad. Eng. Res. 2023, 7, 1–9.
35. Bojer, C.S.; Meldgaard, J.P. Kaggle forecasting competitions: An overlooked learning opportunity. Int. J. Forecast. 2020, 37, 587–603.
36. Ker, J.; Wang, L.; Rao, J.; Lim, T. Deep Learning Applications in Medical Image Analysis. IEEE Access 2017, 6, 9375–9389.
37. Ghnemat, R.; Alodibat, S.; Abu Al-Haija, Q. Explainable Artificial Intelligence (XAI) for Deep Learning Based Medical Imaging Classification. J. Imaging 2023, 9, 177.
38. Kwenda, C.; Gwetu, M.; Fonou-Dombeu, J.V. Hybridizing Deep Neural Networks and Machine Learning Models for Aerial Satellite Forest Image Segmentation. J. Imaging 2024, 10, 132.
39. Boston, T.; Van Dijk, A.; Thackway, R. U-Net Convolutional Neural Network for Mapping Natural Vegetation and Forest Types from Landsat Imagery in Southeastern Australia. J. Imaging 2024, 10, 143.
40. Kumar, A.; Jaiswal, A.; Garg, S.; Verma, S.; Kumar, S. Sentiment Analysis Using Cuckoo Search for Optimized Feature Selection on Kaggle Tweets. Int. J. Inf. Retr. Res. 2019, 9, 1–15.
41. Ahmed, A.A.; Shaahid, A.; Alnasser, F.; Alfaddagh, S.; Binagag, S.; Alqahtani, D. Android Ransomware Detection Using Supervised Machine Learning Techniques Based on Traffic Analysis. Sensors 2023, 24, 189.
42. Taieb, S.B.; Hyndman, R.J. A gradient boosting approach to the Kaggle load forecasting competition. Int. J. Forecast. 2014, 30, 382–394.
43. Kaggle. Available online: https://www.kaggle.com/ (accessed on 1 October 2024).
44. RahulTP. Louisiana Flood 2016. Kaggle. Available online: www.kaggle.com/datasets/rahultp97/louisiana-flood-2016 (accessed on 1 October 2024).
45. Wang, M. FDL_UAV_flooded Areas. Kaggle. Available online: www.kaggle.com/datasets/a1996tomousyang/fdl-uav-flooded-areas (accessed on 1 October 2024).
46. Rupak, R. Cyclone, Wildfire, Flood, Earthquake Database. Kaggle. Available online: www.kaggle.com/datasets/rupakroy/cyclone-wildfire-flood-earthquake-database (accessed on 1 October 2024).
47. Reda, M. Satellite Image Classification. Kaggle. Available online: www.kaggle.com/datasets/mahmoudreda55/satellite-image-classification (accessed on 1 October 2024).
48. Mystriotis, G. Disasters Dataset. Kaggle. Available online: https://www.kaggle.com/datasets/georgemystriotis/disasters-dataset (accessed on 1 October 2024).
49. Bhardwaj, A.; Tuteja, Y. Aerial Landscape Images. Kaggle. Available online: https://www.kaggle.com/datasets/ankit1743/skyview-an-aerial-landscape-dataset (accessed on 1 October 2024).
50. Tuteja, Y.; Bhardwaj, A. Aerial Images of Cities. Kaggle. Available online: https://www.kaggle.com/datasets/yessicatuteja/skycity-the-city-landscape-dataset (accessed on 1 October 2024).
51. Demir, I.; Koperski, K.; Lindenbaum, D.; Pang, G.; Huang, J.; Basu, S.; Hughes, F.; Tuia, D.; Raskar, R. DeepGlobe 2018: A Challenge to Parse the Earth through Satellite Images. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018.
52. Xia, G.-S.; Hu, J.; Hu, F.; Shi, B.; Bai, X.; Zhong, Y.; Zhang, L. AID: A Benchmark Dataset for Performance Evaluation of Aerial Scene Classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3965–3981.
53. Cheng, G.; Han, J.; Lu, X. Remote Sensing Image Classification: Benchmark and State of the Art. Proc. IEEE 2017, 105, 1865–1883.
54. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2015.
55. Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Fei-Fei, L. ImageNet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.
56. Zan, X.; Zhang, X.; Xing, Z.; Liu, W.; Zhang, X.; Su, W.; Liu, Z.; Zhao, Y.; Li, S. Automatic Detection of Maize Tassels from UAV Images by Combining Random Forest Classifier and VGG16. Remote Sens. 2020, 12, 3049.
57. Huang, G.; Liu, Z.; van der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. arXiv 2018.
58. Mumuni, A.; Mumuni, F. Data augmentation: A comprehensive survey of modern approaches. Array 2022, 16, 100258.
59. Pei, X.; Zhao, Y.H.; Chen, L.; Guo, Q.; Duan, Z.; Pan, Y.; Hou, H. Robustness of machine learning to color, size change, normalization, and image enhancement on micrograph datasets with large sample differences. Mater. Des. 2023, 232, 112086.
60. Habib, G.; Qureshi, S. GAPCNN with HyPar: Global Average Pooling convolutional neural network with novel NNLU activation function and HYBRID parallelism. Front. Comput. Neurosci. 2022, 16, 1004988.
61. Srivastava, N.; Hinton, G.E.; Krizhevsky, A.; Sutskever, I.; Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. J. Mach. Learn. Res. 2014, 15, 1929–1958.
62. Basha, S.S.; Dubey, S.R.; Pulabaigari, V.; Mukherjee, S. Impact of fully connected layers on performance of convolutional neural networks for image classification. Neurocomputing 2020, 378, 112–119.
63. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. arXiv 2015.
64. Alsabhan, W.; Alotaiby, T. Automatic Building Extraction on Satellite Images Using Unet and ResNet50. Comput. Intell. Neurosci. 2022, 2022, 5008854.
65. Abu, M.; Zahri, N.A.H.; Amir, A.; Ismail, M.I.; Yaakub, A.; Anwar, S.A.; Ahmad, M.I. A Comprehensive Performance Analysis of Transfer Learning Optimization in Visual Field Defect Classification. Diagnostics 2022, 12, 1258.
66. Yang, Y.; Zhang, L.; Du, M.; Bo, J.; Liu, H.; Ren, L.; Li, X.; Deen, M.J. A comparative analysis of eleven neural networks architectures for small datasets of lung images of COVID-19 patients toward improved clinical decisions. Comput. Biol. Med. 2021, 139, 104887.
67. Zafar, A.; Aamir, M.; Nawi, N.M.; Arshad, A.; Riaz, S.; Alruban, A.; Dutta, A.K.; Almotairi, S. A Comparison of Pooling Methods for Convolutional Neural Networks. Appl. Sci. 2022, 12, 8643.
68. Devnath, L.; Luo, S.; Summons, P.; Wang, D.; Shaukat, K.; Hameed, I.A.; Alrayes, F.S. Deep Ensemble Learning for the Automatic Detection of Pneumoconiosis in Coal Worker’s Chest X-ray Radiography. J. Clin. Med. 2022, 11, 5342.
69. Ajayi, O.G.; Ashi, J. Effect of varying training epochs of a Faster Region-Based Convolutional Neural Network on the Accuracy of an Automatic Weed Classification Scheme. Smart Agric. Technol. 2022, 3, 100128.
70. Rahaman, M.M.; Li, C.; Yao, Y. Identification of COVID-19 samples from chest X-Ray images using deep learning: A comparison of transfer learning approaches. J. X-Ray Sci. Technol. 2020, 28, 821–839.

| Name of Dataset | Total Image Count | Flooded | Desert | Neither |
|---|---|---|---|---|
| Louisiana Flood 2016 [44] | 263 | 102 | 0 | 161 |
| FDL_UAV_flood areas [45] | 297 | 130 | 0 | 167 |
| Cyclone, Wildfire, Flood, Earthquake Database [46] | 613 | 613 | 0 | 0 |
| Satellite Image Classification [47] | 1131 | 0 | 1131 | 0 |
| Disasters Dataset [48] | 1630 | 1493 | 0 | 137 |
| Aerial Landscape Images [49] | 800 | 0 | 800 | 0 |
| Aerial Images of Cities [50] | 600 | 0 | 0 | 600 |
| Forest Aerial Images for Segmentation [51] | 1000 | 0 | 0 | 1000 |
| Totals | 6334 | 2338 | 1931 | 2065 |
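As a quick consistency check on the table above, the following sketch sums the per-dataset class counts and reports each class's share of the compiled dataset. The counts are copied from the table; the dictionary layout and the function name `class_totals` are illustrative and are not taken from the authors' code.

```python
# Per-dataset image counts copied from the table: (flooded, desert, neither).
COUNTS = {
    "Louisiana Flood 2016 [44]": (102, 0, 161),
    "FDL_UAV_flood areas [45]": (130, 0, 167),
    "Cyclone, Wildfire, Flood, Earthquake Database [46]": (613, 0, 0),
    "Satellite Image Classification [47]": (0, 1131, 0),
    "Disasters Dataset [48]": (1493, 0, 137),
    "Aerial Landscape Images [49]": (0, 800, 0),
    "Aerial Images of Cities [50]": (0, 0, 600),
    "Forest Aerial Images for Segmentation [51]": (0, 0, 1000),
}

CLASSES = ("Flooded", "Desert", "Neither")


def class_totals(counts):
    """Sum the per-dataset counts column by column, one total per class."""
    return {
        name: sum(row[i] for row in counts.values())
        for i, name in enumerate(CLASSES)
    }


totals = class_totals(COUNTS)
grand_total = sum(totals.values())

for name in CLASSES:
    share = totals[name] / grand_total
    print(f"{name:<8}{totals[name]:>6}  {share:.1%}")
print(f"{'Total':<8}{grand_total:>6}")
```

The sums reproduce the Totals row (2338 flooded, 1931 desert, 2065 neither, 6334 overall), which corresponds to roughly 37%, 30%, and 33% of the images per class.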

| Category | Precision | Recall | F1-Score |
|---|---|---|---|
| Desert | 0.99 | 0.99 | 0.99 |
| Flooded | 0.95 | 0.96 | 0.95 |
| Neither | 0.95 | 0.94 | 0.94 |

| Category | Precision | Recall | F1-Score |
|---|---|---|---|
| Desert | 0.99 | 1.00 | 1.00 |
| Flooded | 1.00 | 0.99 | 0.99 |
| Neither | 0.99 | 0.99 | 0.99 |

| Category | Precision | Recall | F1-Score |
|---|---|---|---|
| Desert | 0.99 | 0.99 | 0.99 |
| Flooded | 0.88 | 0.96 | 0.92 |
| Neither | 0.96 | 0.88 | 0.92 |

| Category | Precision | Recall | F1-Score |
|---|---|---|---|
| Desert | 1.00 | 1.00 | 1.00 |
| Flooded | 0.98 | 0.99 | 0.98 |
| Neither | 0.98 | 0.98 | 0.98 |
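The per-class tables above report precision, recall, and F1-score. As a reminder of how the three columns relate, the sketch below computes F1 as the harmonic mean of precision and recall, $F_1 = 2PR/(P+R)$, and applies it to one row taken from the tables (Flooded: precision 0.88, recall 0.96). The function name `f1_score` is illustrative; this is the standard definition rather than the authors' evaluation code, and because the tabulated inputs are already rounded, recomputed values can differ from a reported F1 in the last digit.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Row taken from one of the per-class tables: Flooded, P = 0.88, R = 0.96.
flooded_f1 = f1_score(0.88, 0.96)
print(round(flooded_f1, 2))  # 0.92, matching the tabulated F1-score
```

Swapping precision and recall gives the same F1, which is why the Flooded (0.88, 0.96) and Neither (0.96, 0.88) rows of that table share an F1-score of 0.92.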

| ML Model | Validation Accuracy |
|---|---|
| CNN | 0.9368 |
| VGG16 [54] | 0.9581 |
| DenseNet201 [57] Optimized | 0.9889 |
| ResNet50 [63] | 0.9921 |

| ML Model | Validation Accuracy | Validation Loss |
|---|---|---|
| DenseNet201 [57] | 0.9755 | 1.5234 |
| DenseNet201 [57] Optimized | 0.9913 | 0.0196 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


VanExel, K.; Sherchan, S.; Liu, S. Optimizing Deep Learning Models for Climate-Related Natural Disaster Detection from UAV Images and Remote Sensing Data. J. Imaging 2025, 11, 32. https://doi.org/10.3390/jimaging11020032
