1. Introduction

A key challenge for all-sky imagers (ASIs) used in solar energy meteorology is the extreme dynamic range of hemispheric scenes, with orders-of-magnitude differences between the diffuse sky and the circumsolar region. The circumsolar region, defined here as the near-Sun scattered field outside the solar disk (circumsolar aureole), is critical for two purposes. It resolves cloud transits across the solar disk, which affect direct normal irradiance (DNI), and it characterizes near-Sun scattering in the surrounding aureole. Both mechanisms strongly modulate surface solar irradiance (SSI), so even small radiance errors in this area propagate into SSI and its short-term variability. Preserving circumsolar structure with adequate saturation headroom in a radiance-linear sensor domain is therefore important, since this region is highly susceptible to sensor clipping, ISP compression, and halo artifacts [1,2,3,4].

Single-exposure imaging faces a fundamental trade-off. The shortest shutter preserves circumsolar structure but underexposes the sky dome. Longer shutters recover the diffuse sky at the cost of larger saturated areas and weaker circumsolar gradients. Highlight headroom is bounded by the pixel full-well capacity and, once analog gain is applied, by the converter's white level. Without optical attenuation (e.g., neutral-density or solar filters), the radiance of the solar disk typically exceeds the single-exposure dynamic range of visible-range CMOS sensors. As a result, the disk cannot be resolved, and its core saturates in any single capture. In practice, the objective is therefore to minimize the angular footprint of saturation and to preserve circumsolar radial gradients while maintaining adequate signal over the diffuse hemisphere. These constraints motivate exposure bracketing and HDR fusion [5,6,7].

In many operational ASI systems, the dynamic-range gap is addressed with high-dynamic-range (HDR) bracketing inside the image signal processor (ISP), i.e., after demosaicing and color processing in a nonlinear 8-bit RGB domain. Near the Sun, such ISP-domain HDR can lose information through two mechanisms:

(i) exposure-series weighting that down-weights the darkest (shortest) frame and can admit clipped or near-clipped samples from longer frames, biasing highlights; and

(ii) global or local tone mapping that compresses highlight contrast and can introduce halos, attenuating circumsolar gradients [4,5,6,7].

These mechanisms directly affect the circumsolar region and thus the information content available for irradiance-related and atmospheric applications.

In this context, a radiance-linear HDR fusion in the sensor/RAW domain (RAW–HDR) is contrasted with the vendor ISP-based HDR mode (ISP–HDR) for an all-sky camera system. Solar-based geometric calibration provides a mapping between image coordinates and sky directions and enables Sun-centered analysis. RAW exposure series are merged in a radiance-linear sensor domain by strict per-pixel censoring of saturated, near-saturated, and sub-noise samples, followed by a bounded linear estimate from the usable exposures. A monotone, saturation-count-informed post-merge scaling is used for visualization only; all quantitative analyses operate on the radiance-linear HDR result.

The main contribution of this paper is a quantitative, Sun-centered evaluation of ISP-based HDR versus radiance-linear RAW-based HDR in the circumsolar region of all-sky images. The evaluation is based on an interleaved acquisition scheme that provides paired ISP–HDR frames and RAW exposure series under clear-sky and broken-cloud conditions for a representative all-sky camera system. The RAW series are merged in a documented radiance-linear HDR configuration with fixed analog gain and strict per-pixel censoring of saturated and sub-noise samples. Using a geometric calibration derived from solar observations, a Sun-centered evaluation framework is employed. Within this framework, two geometry-aware circumsolar performance metrics are defined: (M1) the saturated-area fraction in concentric rings and (M2) a median-based radial gradient in defined arcs. These metrics are used to quantify differences between RAW–HDR and ISP–HDR. In this framework, ISP–HDR exhibits approximately – higher near-saturation within 0–4° and – weaker circumsolar radial gradients within 0–6° relative to RAW–HDR.

2. All-Sky Camera and Data Acquisition

This study uses data from a single node of the Wematics PyranoVision network installed at the Technical University of Denmark (DTU). Denmark has a temperate oceanic climate (Köppen Cfb) with mild summers, high humidity, frequent cloud cover, and persistent winds from the North Sea and Baltic. This section summarizes the capture stack and the acquisition protocol used for the paired HDR comparison.

2.1. System Overview

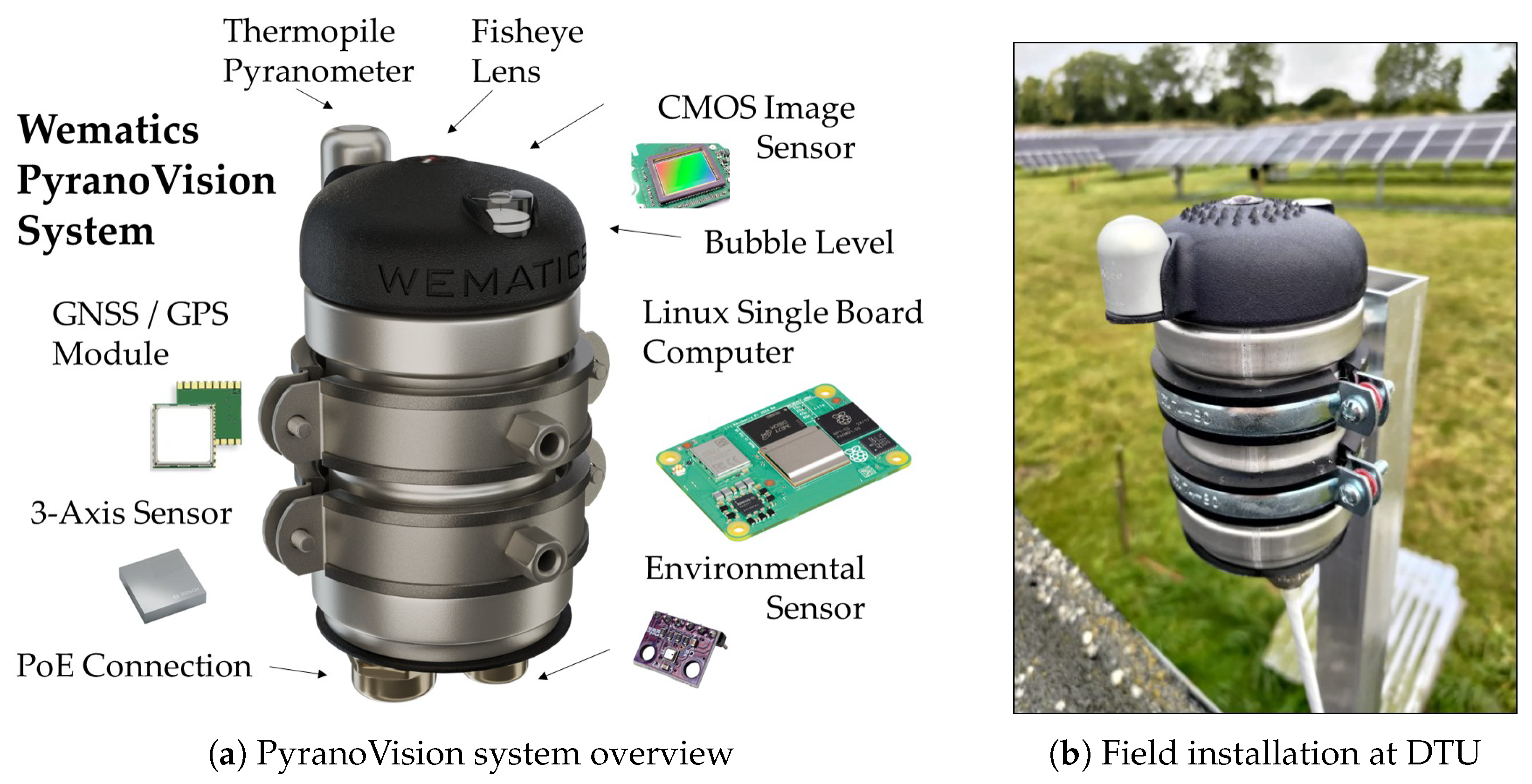

The PyranoVision system integrates a weather-sealed fisheye sky camera (12 MP, IR-cut filter) and a thermopile pyranometer for global horizontal irradiance (GHI) on a shared single-board computer (SBC). The SBC performs continuous acquisition, lightweight background compression, and metadata logging. The pyranometer is an ISO 9060:2018 Class C instrument [8]. It is calibrated against an international standard with traceability to the World Radiometric Reference (WRR) via an ISO/IEC 17025-accredited laboratory [9]. Both sensors are controlled by the same acquisition process and synchronized to a common system clock [10], which yields co-registered image brackets and irradiance measurements with identical timestamps.

Figure 1 situates the hardware: panel (a) sketches the major components; panel (b) shows the DTU field installation.

For completeness, key hardware characteristics are summarized in Table 1.

2.2. Capture Stack and Modes

Data acquisition was implemented in Python 3.11. A single control script provides two operating modes:

RAW–HDR mode (sensor/RAW domain): multi-exposure brackets of 12-bit Bayer frames are captured via Raspberry Pi's Picamera2 library (v0.3.13) [11], which is built on libcamera (v0.2.0) [12,13]. Each bracket follows predefined exposure times; eight frames are saved losslessly with per-frame metadata.

ISP–HDR mode (camera/ISP domain): a single 8-bit, post-demosaicing HDR image is produced by invoking the vendor pipeline from Python via libcamera-still (v1.4) [14]. Multi-frame combination and tone mapping follow the vendor defaults.

Both modes are configured from the same Python script. For RAW–HDR, exposure time and analog gain are set through Picamera2/libcamera controls [11,13]. For ISP–HDR, parameters are passed to libcamera-still [14]. All frames carry request start/end timestamps and metadata (exposure, analog gain, mode flags).
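The sketch below illustrates how a fixed-gain RAW bracket could be driven through Picamera2. It is an illustration under stated assumptions, not the acquisition script used in this study. The helper names `bracket_controls` and `capture_raw_bracket`, the settle loop, and the relative exposure tolerance are hypothetical. The control names (`AeEnable`, `AwbEnable`, `ExposureTime`, `AnalogueGain`) and the calls `create_still_configuration`, `set_controls`, `capture_request`, `get_metadata`, `make_array`, and `release` are standard Picamera2/libcamera interfaces. The measured exposure time is read back from the frame metadata rather than assumed equal to the commanded value.

```python
def bracket_controls(exposures_us, analogue_gain):
    """Build one fixed-gain, AE/AWB-disabled control set per bracket exposure."""
    return [
        {
            "AeEnable": False,                    # no automatic exposure
            "AwbEnable": False,                   # no automatic white balance
            "ExposureTime": int(t),               # commanded exposure in microseconds
            "AnalogueGain": float(analogue_gain), # fixed analog gain for the whole bracket
        }
        for t in exposures_us
    ]


def capture_raw_bracket(exposures_us, analogue_gain, rel_tol=0.05, max_tries=10):
    """Capture one RAW frame per exposure (hypothetical helper; needs a Pi camera).

    Frames are re-captured until the reported ExposureTime lies within rel_tol
    of the commanded value, because new controls take a few frames to apply.
    """
    from picamera2 import Picamera2  # imported lazily: only available on Raspberry Pi OS

    picam2 = Picamera2()
    picam2.configure(picam2.create_still_configuration(raw={}))
    picam2.start()
    frames = []
    try:
        for controls in bracket_controls(exposures_us, analogue_gain):
            picam2.set_controls(controls)
            for _ in range(max_tries):
                request = picam2.capture_request()
                try:
                    meta = request.get_metadata()
                    target = controls["ExposureTime"]
                    if abs(meta["ExposureTime"] - target) <= rel_tol * target:
                        # make_array copies the raw buffer; keep it with its metadata
                        frames.append((request.make_array("raw"), meta))
                        break
                finally:
                    request.release()
            else:
                raise RuntimeError(f"exposure {controls['ExposureTime']} us not reached")
    finally:
        picam2.stop()
        picam2.close()
    return frames
```

How the returned byte array is unpacked into 12-bit Bayer values depends on the configured raw format. That step is deliberately left out of this sketch.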

2.3. Field Campaign

The field campaign spanned June–July 2025 at the DTU site. Acquisition proceeded at four HDR events per minute with a fixed 15 s alternation to enable paired comparisons: ISP–HDR at {0, 30} s via libcamera-still, and RAW–HDR at {15, 45} s via Picamera2. For RAW–HDR, automatic exposure and automatic white balance were disabled, analog gain was fixed, and a predetermined exposure series was executed for each bracket (see Section 4.2). Timestamps were recorded in ISO 8601 format with an explicit UTC offset and stored alongside per-frame metadata, consistent with solar-resource data guidelines [15].
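The following sketch shows how the 15 s alternation could be encoded and how each RAW–HDR timestamp could be paired with the nearest ISP–HDR timestamp. It assumes ISO 8601 strings with explicit offsets, as described above. The function names and the 15 s pairing tolerance are hypothetical choices for illustration. When a RAW event is equidistant from two ISP events, the tie goes to the first one in the list.

```python
from datetime import datetime, timedelta


def mode_for_second(second):
    """Return the capture mode scheduled at a given second of the minute."""
    if second in (0, 30):
        return "ISP-HDR"
    if second in (15, 45):
        return "RAW-HDR"
    return None


def pair_frames(raw_stamps, isp_stamps, tol=timedelta(seconds=15)):
    """Pair each RAW-HDR timestamp with the nearest ISP-HDR timestamp within tol."""
    isp = [datetime.fromisoformat(s) for s in isp_stamps]  # offset-aware datetimes
    pairs = []
    for s in raw_stamps:
        t = datetime.fromisoformat(s)
        nearest = min(isp, key=lambda u: abs(u - t), default=None)
        if nearest is not None and abs(nearest - t) <= tol:
            pairs.append((t, nearest))
    return pairs
```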

3. Geometric Calibration

Geometric calibration defines a mapping from sky directions to image pixels by combining a fisheye projection model with the camera's external orientation relative to the horizontal plane and true north. Each pixel is assigned an azimuth (clockwise from true north) and a zenith angle (from the local vertical). Calibration can be derived from multiple celestial observations (cf. [16]), but solar-only methods have also been demonstrated [17]. Clear-sky images from 13 to 14 June 2025 were processed with the Sun-based mode of SuMo [18].

Model parameters (intrinsics: projection and principal point; extrinsics: three-axis orientation) were estimated by minimizing the discrepancy between detected solar centroids and solar ephemeris directions across time, using a robust objective. Physical calibration targets (e.g., checkerboards) were not required. For downstream analyses, the Sun center at each timestamp was obtained by forward-projecting the ephemeris direction through the calibrated model. This provides a precise, temporally continuous reference that is independent of image-based detection (cf. [19]) and ensures a consistent definition of circumsolar regions for Sun-crop extraction.

3.1. Calibration Procedure

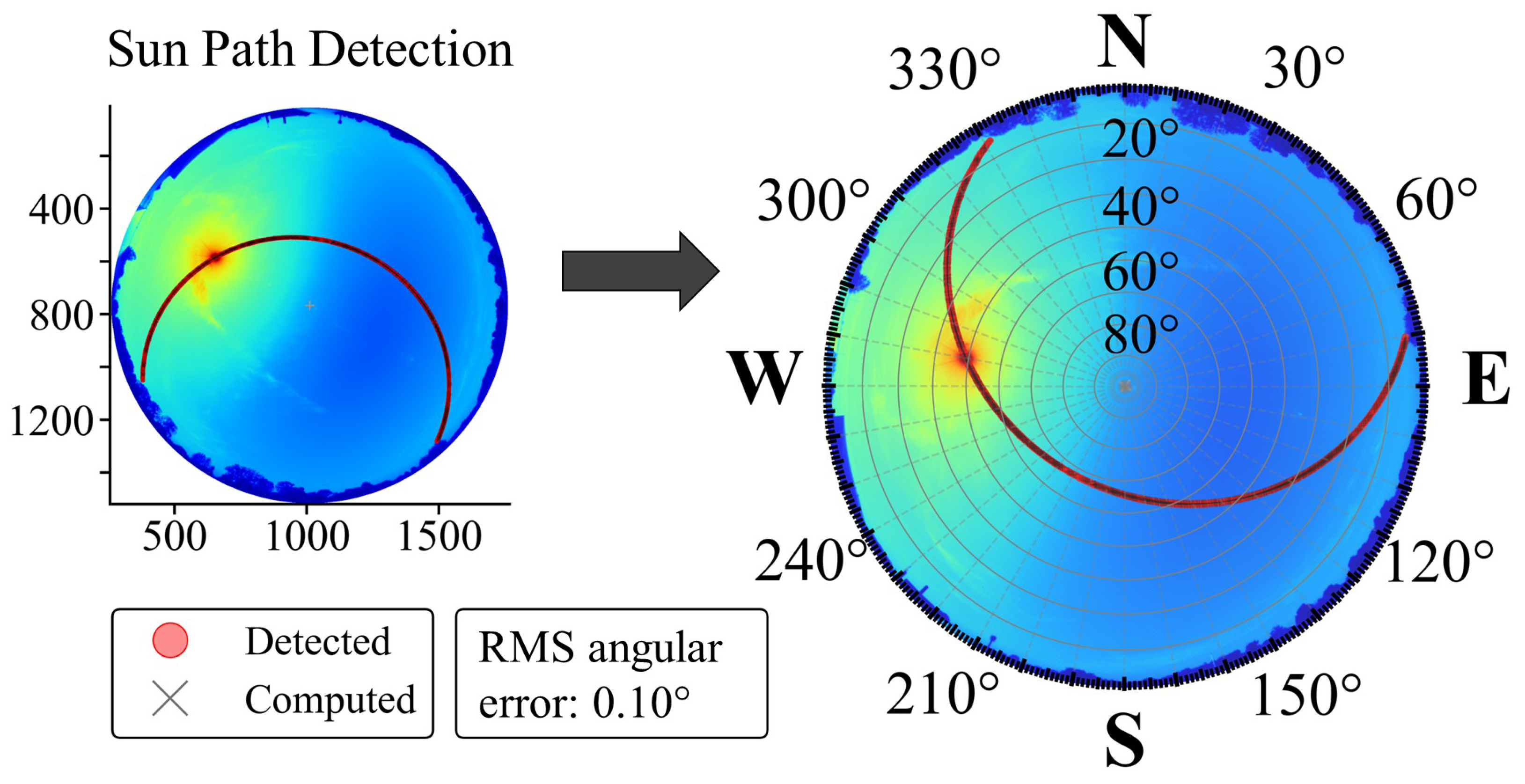

Solar centroids were extracted from clear-sky daytime images on 13–14 June 2025 (Figure 2). A fixed sky mask was applied, and the lowest-exposure frame at each timestamp was used to identify the Sun. Within a local window around the predicted Sun position, intensity thresholding with minor morphological cleanup was performed. Candidates were screened by a circularity criterion C and a minimum-area criterion to ensure a well-defined Sun-disk region. Images in which the Sun disk intersected the mask boundary were rejected, and the intensity-weighted centroid of the best candidate was retained. Temporal consistency was enforced by fitting an elliptical Sun path in image coordinates and discarding points whose orthogonal deviation from this path exceeded a fixed pixel threshold. The accepted timestamps were paired with ephemeris directions (Section 3.2).
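The detection step can be sketched in NumPy. The function below thresholds a window around a predicted position, applies a minimum-area screen, and returns the intensity-weighted centroid. It is a simplified stand-in, not the SuMo implementation. Several simplifications are assumptions of this sketch:

- The threshold fraction, window size, and limits are hypothetical.
- Circularity is approximated by a second-moment roundness (eigenvalue ratio of the pixel covariance, 1 for a disk) instead of a perimeter-based C.
- Morphological cleanup, connected-component selection, and the mask-boundary test are omitted.

```python
import numpy as np


def sun_centroid(img, guess_rc, half_window=40, thresh_frac=0.9,
                 min_area=20, min_roundness=0.7):
    """Intensity-weighted Sun centroid (row, col) near guess_rc, or None if rejected."""
    r0, c0 = (int(round(v)) for v in guess_rc)
    r_lo, c_lo = max(r0 - half_window, 0), max(c0 - half_window, 0)
    win = img[r_lo:r0 + half_window + 1, c_lo:c0 + half_window + 1].astype(float)
    if win.max() <= 0:
        return None                                  # nothing bright in the window
    mask = win >= thresh_frac * win.max()            # relative intensity threshold
    if mask.sum() < min_area:                        # minimum-area screen
        return None
    rows, cols = np.nonzero(mask)
    w = win[rows, cols]
    # Moment-based roundness as a stand-in for circularity: ~1 for a disk, ~0 for a line.
    cov = np.cov(np.vstack([rows, cols]), aweights=w)
    evals = np.linalg.eigvalsh(cov)                  # ascending eigenvalues
    if evals[1] <= 0 or evals[0] / evals[1] < min_roundness:
        return None
    return (r_lo + np.average(rows, weights=w), c_lo + np.average(cols, weights=w))
```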

Table 2 summarizes the symbols used in geometric calibration.

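For observation $i$, the model maps the detected centroid to a unit view vector $\hat{\mathbf{v}}_i(\mathbf{p})$, where $\mathbf{p}$ collects the intrinsic and extrinsic parameters. The ephemerides provide the solar unit vector $\mathbf{s}_i$. The loss minimized the great-circle misalignment via the squared Euclidean norm

$$\mathcal{L}(\mathbf{p}) = \sum_i \left\lVert \hat{\mathbf{v}}_i(\mathbf{p}) - \mathbf{s}_i \right\rVert^2, \tag{1}$$

with an optional Huber penalty and per-sample quality weights. For unit vectors separated by the great-circle angle $\gamma_i$, $\lVert \hat{\mathbf{v}}_i - \mathbf{s}_i \rVert^2 = 2(1 - \cos\gamma_i)$. Minimizing (1) therefore minimizes the angular misalignment. Following Blum et al. [18], a coarse-to-fine scheme was adopted. The principal point and the global orientation were initialized first, after which the fisheye projection (intrinsics) and the orientation (extrinsics) were jointly refined.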
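The objective in (1) can be evaluated in a few lines of NumPy. In the sketch below, `robust_sq` is a Huber-type penalty on the chord length, scaled so that it equals the squared norm for small residuals. The threshold `delta` and the weights are illustrative assumptions. The optimizer that minimizes this loss over the projection and orientation parameters (e.g., a nonlinear least-squares solver) is not shown. `angular_residual_deg` converts the same chord back to the great-circle angle used when reporting residuals in degrees.

```python
import numpy as np


def robust_sq(r, delta):
    """Huber-type penalty on residual norm r; equals r**2 for |r| <= delta, linear beyond."""
    a = np.abs(r)
    return np.where(a <= delta, a**2, 2.0 * delta * a - delta**2)


def calibration_loss(v_model, s_ephem, weights=None, delta=None):
    """Sum of (weighted, optionally robustified) squared chord distances between unit vectors."""
    v = v_model / np.linalg.norm(v_model, axis=1, keepdims=True)
    s = s_ephem / np.linalg.norm(s_ephem, axis=1, keepdims=True)
    chord = np.linalg.norm(v - s, axis=1)            # chord = 2 sin(gamma / 2)
    per_obs = chord**2 if delta is None else robust_sq(chord, delta)
    w = np.ones_like(chord) if weights is None else np.asarray(weights, dtype=float)
    return float(np.sum(w * per_obs))


def angular_residual_deg(v_model, s_ephem):
    """Great-circle angle between model and ephemeris directions, in degrees."""
    v = v_model / np.linalg.norm(v_model, axis=1, keepdims=True)
    s = s_ephem / np.linalg.norm(s_ephem, axis=1, keepdims=True)
    chord = np.linalg.norm(v - s, axis=1)
    return np.degrees(2.0 * np.arcsin(np.clip(chord / 2.0, 0.0, 1.0)))
```

The robust variant down-weights gross outliers (e.g., a misdetected reflection) linearly instead of quadratically, which is the usual motivation for a Huber penalty.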

The June dataset spans a broad range of azimuth and zenith angles. As shown in Figure 2, the solar track covers azimuths of approximately 80– (sunrise near the east, sunset toward the northwest) and zenith angles of roughly 7–.

Residuals are reported in degrees and are summarized by distribution quantiles and polar residual maps. For detections, the observed–ephemeris angular residuals are small, with a root-mean-square angular error of .

3.2. Solar Position Mapping and Calibration Outputs

Solar unit vectors were computed from standard ephemerides using the image timestamps; no refraction correction was applied. The calibrated inverse map assigns each pixel a zenith angle and an azimuth (clockwise from north). It enables zenith-angle binning and reproducible, geometry-defined circumsolar regions around the ephemeris Sun direction.

The archived calibration products comprise the image center, the sky-circle radius R, the intrinsic fisheye projection, and the extrinsic orientation with respect to the local horizon and north. These suffice to project the ephemeris Sun direction into image space and to map pixels to zenith–azimuth coordinates for all subsequent analyses.
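To make the forward and inverse mappings concrete, the sketch below uses an idealized model built from the archived quantities, with several simplifying assumptions that are not the calibrated SuMo model:

- The projection is assumed to be equidistant, with image radius proportional to zenith angle and the horizon at radius R.
- The camera is assumed to be perfectly level, so the only extrinsic parameter kept is a rotation `north_offset_deg` about the vertical.
- East is assumed to appear on the image left, as for a sky viewed from below with north at the top.

A real calibration would replace the linear radius law with the fitted projection and apply the full three-axis rotation.

```python
import numpy as np


def direction_to_pixel(zenith_deg, azimuth_deg, cx, cy, R, north_offset_deg=0.0):
    """Project (zenith, azimuth clockwise from north) to pixel (x, y) in an ideal equidistant fisheye."""
    r = R * np.asarray(zenith_deg, dtype=float) / 90.0          # radius grows linearly with zenith
    a = np.radians(np.asarray(azimuth_deg, dtype=float) - north_offset_deg)
    return cx - r * np.sin(a), cy - r * np.cos(a)               # north up, east left


def pixel_to_direction(x, y, cx, cy, R, north_offset_deg=0.0):
    """Inverse of direction_to_pixel: return (zenith_deg, azimuth_deg)."""
    dx = np.asarray(x, dtype=float) - cx
    dy = np.asarray(y, dtype=float) - cy
    zenith = 90.0 * np.hypot(dx, dy) / R
    azimuth = (np.degrees(np.arctan2(-dx, -dy)) + north_offset_deg) % 360.0
    return zenith, azimuth
```

A round trip through both functions should return the input direction. This is a cheap consistency check whenever the projection model is swapped for a fitted one.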

4. HDR Merging of RAW Exposure Series

This section describes the HDR processing used in this study. A radiance-linear HDR fusion in the sensor/RAW domain is compared with an operational ISP-domain HDR mode. Three radiometric quantities are used:

- radiance: a per-pixel directional quantity, in W/m²/sr;
- irradiance: the pyranometer plane-integrated flux, in W/m², used only as an external reference;
- digital numbers (DNs): raw sensor counts.

For fixed gain and exposure time, non-saturated DNs are treated as proportional to radiance, so HDR fusion operates on DNs as a radiance-linear proxy. The RAW-domain pipeline follows established HDR approaches [5,6,7] and previous HDR applications to all-sky imaging [20].

4.1. ISP–HDR Pipeline

The ISP-based HDR reference was obtained via the libcamera command-line utility with the vendor HDR option enabled. Processing is performed after Bayer demosaicing and color processing in a nonlinear 8-bit RGB domain. Multiple frames acquired at the same exposure are internally accumulated mainly for noise suppression; the minimum exposure time within the bracket is configured to limit highlight clipping. A global tone-mapping stage and a mild local-contrast enhancement determine the displayed appearance. This ISP–HDR mode represents the operational configuration in Wematics all-sky cameras; the RAW-domain fusion described below is used for quantitative evaluation.

4.2. RAW–HDR Pipeline

The RAW–HDR pipeline merges exposure series directly in the 12-bit Bayer domain under fixed analog gain, with auto exposure (AE) and auto white balance (AWB) disabled. At each timestamp, $K = 8$ RAW frames (RGGB mosaic) are captured with measured exposure times $t_k$, $k = 1, \dots, K$. These deviate only marginally from the commanded values. The series spans from the shortest achievable exposure to clearly saturated images, which provides overlap for HDR fusion. The sensor operates with a fixed global black level $B$ and a 12-bit clip level $W$ (both in DN).

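For each exposure $k$ and pixel $\mathbf{x}$, the raw counts $r_k(\mathbf{x})$ are corrected for the global black level $B$ and clipped at the clip level $W$ to obtain

$$y_k(\mathbf{x}) = \min\left\{\max\left[r_k(\mathbf{x}) - B,\, 0\right],\; W - B\right\}. \tag{2}$$

In the non-saturated regime and for fixed gain, these measurements are assumed to follow a simple exposure–response relation

$$y_k(\mathbf{x}) \approx L(\mathbf{x})\, t_k, \tag{3}$$

where $t_k$ is the measured exposure time and $L(\mathbf{x})$ denotes the radiometric slope (DN per unit exposure time) at pixel $\mathbf{x}$.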

To suppress unusable samples, each exposure $k$ at pixel $\mathbf{x}$ is classified by its DN:

- Values below a noise threshold $\tau_{\mathrm{low}}$ (DN) are discarded as too dark.
- Values above a near-saturation threshold $\tau_{\mathrm{high}}$ (DN) are discarded as near-saturated.
- The remaining exposures at that pixel are treated as usable.

If at least two usable samples exist, the radiance rate is estimated as the slope of $y_k$ versus $t_k$ using a least-squares fit constrained by the observed range. The fitted slope is restricted to lie between two bounds. The lower bound is the smallest slope that would have driven the near-saturated exposures to near-saturation. The upper bound is the largest slope still consistent with the usable DN values.

If no exposure passes the usability criteria at a given pixel, a simple fallback based on the brightest frame is applied. When all values remain below $\tau_{\mathrm{low}}$, the slope is set to the value implied by the longest exposure. Otherwise, the pixel is treated as saturated and assigned a conservative minimum slope derived from the near-saturated samples at the longest exposures. In all other cases, the bounded least-squares slope is taken as the HDR estimate $\hat{L}(\mathbf{x})$.
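The per-pixel rule above can be sketched as follows. This is one reading of the described censoring and bounding, not the authors' code.

- The fit is a least-squares line through the origin, consistent with (3).
- Both bounds are expressed through $\tau_{\mathrm{high}}$ and the relevant exposure times.
- Accepting a single usable sample (slope $y/t$) is an assumption, because the text only specifies the case of two or more usable samples.
- The thresholds are caller-supplied.

Because the objective is a one-dimensional convex quadratic, clipping the unconstrained slope to the bounds yields the constrained optimum.

```python
import numpy as np


def merge_pixel(y, t, tau_low, tau_high):
    """Censored, bounded least-squares slope L_hat (DN per unit exposure time) for one pixel.

    y: black-level-corrected DNs of the K exposures; t: measured exposure times.
    """
    y = np.asarray(y, dtype=float)
    t = np.asarray(t, dtype=float)
    dark = y < tau_low
    near_sat = y > tau_high
    usable = ~dark & ~near_sat

    if usable.any():
        # The text requires >= 2 usable samples; a single sample (slope y/t) is an assumption here.
        tu, yu = t[usable], y[usable]
        slope = np.dot(tu, yu) / np.dot(tu, tu)      # least squares for y = L * t (no intercept)
        # Upper bound: largest slope keeping the longest usable exposure below tau_high.
        hi = tau_high / tu.max()
        # Lower bound: smallest slope driving the shortest near-saturated exposure to tau_high.
        lo = tau_high / t[near_sat].min() if near_sat.any() else 0.0
        return float(min(max(slope, lo), hi))

    if dark.all():
        # Every sample below the noise floor: slope implied by the longest exposure.
        k = int(np.argmax(t))
        return float(y[k] / t[k])
    # No usable sample and not all dark: treat as saturated and assign a conservative
    # minimum slope from the longest near-saturated exposure.
    return float(tau_high / t[near_sat].max())
```

For a stack `Y` of shape `(K, H, W)`, `np.apply_along_axis(merge_pixel, 0, Y, t, tau_low, tau_high)` applies the rule pixel by pixel. A production pipeline would vectorize the same logic.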

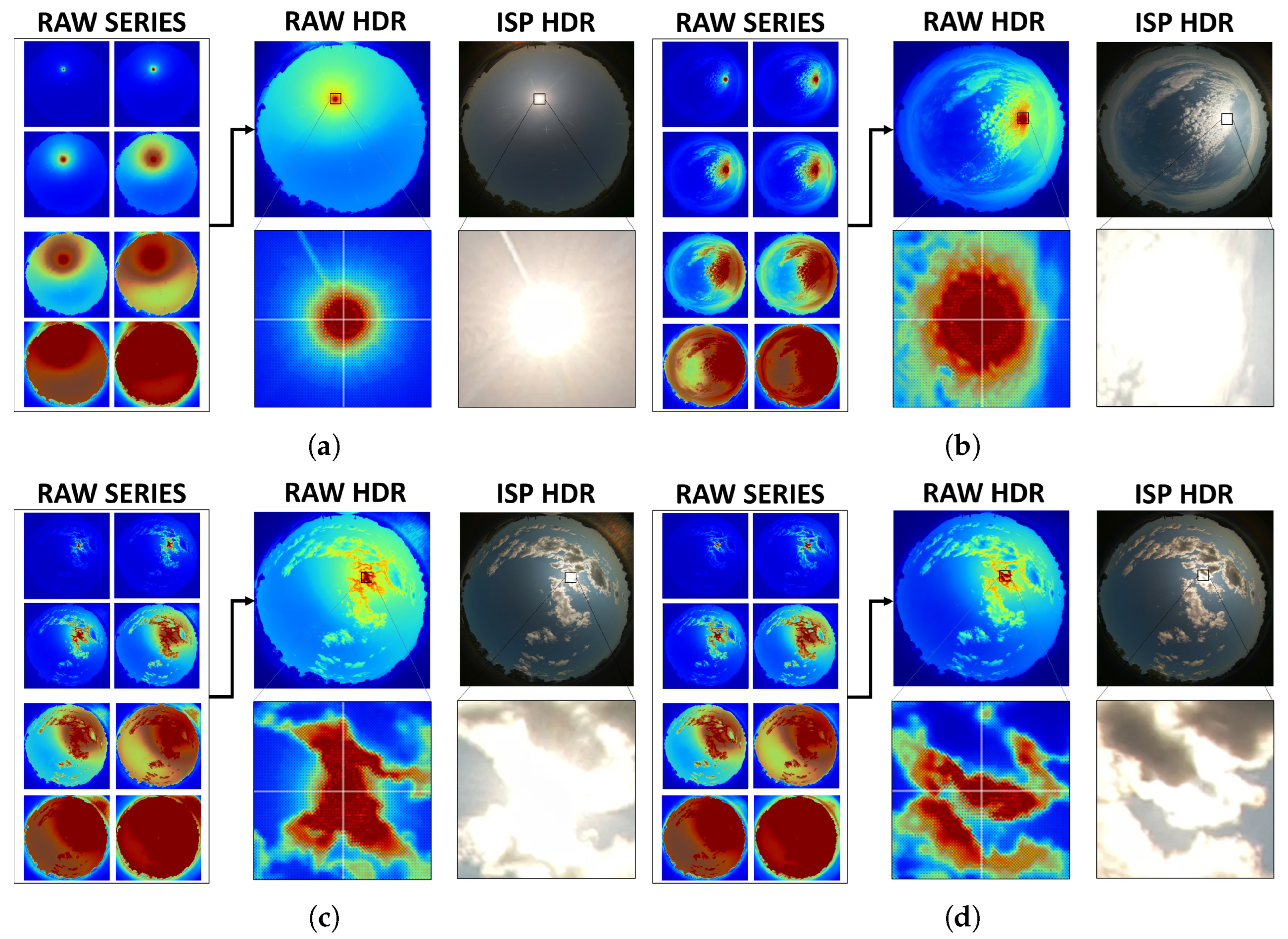

For visualization, the radiance-linear HDR field $\hat{L}$ is converted to display RGB using a monotone tone curve with a mild banded rescaling. This rescaling preserves headroom in the circumsolar region while enhancing contrast in the diffuse dome. All quantitative evaluations in Section 5 operate on the radiance-linear HDR estimate $\hat{L}$. Representative clear-sky and broken-cloud scenes are shown in Figure 3.
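As a generic illustration of a display-only, monotone mapping, the sketch below normalizes the radiance-linear field by a high percentile and applies a logarithmic curve before quantizing to 8 bits. It is not the banded, saturation-count-informed rescaling used for the figures. The percentile, the curve strength, and the function name are hypothetical. Because the mapping is monotone and applied only after fusion, it does not alter the ordering of radiance values, and quantitative metrics continue to use $\hat{L}$ itself.

```python
import numpy as np


def display_tone_map(L_hat, white_percentile=99.9, strength=50.0):
    """Map a radiance-linear HDR field to 8-bit display values with a monotone log curve."""
    L = np.maximum(np.asarray(L_hat, dtype=float), 0.0)
    white = np.percentile(L, white_percentile)           # display white point
    x = L / white if white > 0 else L
    v = np.log1p(strength * x) / np.log1p(strength)      # v = 1 at the white point
    return np.round(255.0 * np.clip(v, 0.0, 1.0)).astype(np.uint8)
```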

Qualitatively, Figure 3 already suggests that RAW–HDR preserves finer circumsolar gradients and exhibits a smaller saturated footprint than ISP–HDR. Section 5 introduces Sun-centered metrics that are used to quantify these differences.

5. Circumsolar Metrics

The circumsolar region is characterized by two ring-based, rotation-symmetric metrics: (i) the saturated-area fraction (highlight headroom) and (ii) the median-based radial gradient (aureole fall-off). Rings are defined by the solar elongation $\gamma$ (deg), i.e., the great-circle angle between a pixel's view direction and the Sun. Throughout this study, rings are spaced by $\Delta\gamma$ with edges $\gamma_j = j\,\Delta\gamma$, $j = 0, 1, 2, \dots$

Table 3 summarizes the notation used for these circumsolar metrics.

For a given timestamp, channel, and processing stage, each pixel $\mathbf{x}$ is assigned a solar elongation $\gamma(\mathbf{x})$ from the geometric calibration (Section 3). Rings $\mathcal{R}_j$ are then defined by selecting all valid pixels with $\gamma_j \le \gamma(\mathbf{x}) < \gamma_{j+1}$. An adaptive near-white threshold $T_j$ is estimated per timestamp, ring, and channel, and the same procedure is applied to RAW and ISP.

5.1. Saturated-Area Fraction

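The saturated-area fraction $S_j$ quantifies the fraction of pixels in a ring that are clipped or near-clipped. For a ring $\mathcal{R}_j$, it is defined as

$$S_j = \frac{N_j^{\mathrm{sat}}}{N_j}, \tag{4}$$

In this definition, $N_j^{\mathrm{sat}}$ denotes the number of pixels in the ring whose value $I(\mathbf{x})$ satisfies $I(\mathbf{x}) \ge T_j$, where $T_j$ is the near-white threshold. $N_j$ denotes the total number of valid pixels in that ring (after masking). Larger values of $S_j$ indicate a larger angular footprint of saturation and reduced highlight headroom. In the subsequent analysis, $S_j$ is summarized over 0–4°.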
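Given per-pixel values, ring indices, and one near-white threshold per ring, (4) reduces to a count per ring. In the sketch below, the adaptive estimation of the thresholds is not specified in the text, so they are passed in by the caller. Rings without valid pixels return NaN so that later aggregation can skip them.

```python
import numpy as np


def saturated_fraction(values, ring_idx, thresholds, valid=None):
    """Eq. (4): S_j = N_j^sat / N_j per ring j, with one near-white threshold T_j per ring."""
    values = np.asarray(values, dtype=float)
    ring_idx = np.asarray(ring_idx)
    valid = np.ones(values.shape, dtype=bool) if valid is None else np.asarray(valid, dtype=bool)
    S = np.full(len(thresholds), np.nan)
    for j, T in enumerate(thresholds):
        in_ring = valid & (ring_idx == j)
        n = int(in_ring.sum())
        if n > 0:
            S[j] = np.count_nonzero(values[in_ring] >= T) / n   # at or above threshold counts as saturated
    return S
```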

5.2. Median-Based Radial Gradient

To characterize the aureole fall-off, a median-based radial profile is formed from non-saturated pixels and then differentiated with respect to $\gamma$. First, ring medians $m_j$ are defined by

$$m_j = \operatorname{median}\left\{ I(\mathbf{x}) : \mathbf{x} \in \mathcal{R}_j,\; I(\mathbf{x}) < T_j \right\}. \tag{5}$$

Thus, pixels at or above the near-saturation threshold contribute to $N_j^{\mathrm{sat}}$ in (4) but are excluded from the medians $m_j$. The radial gradient is then approximated by a central finite difference of these medians, with $\Delta\gamma$ the ring spacing:

$$G_j = \frac{m_{j+1} - m_{j-1}}{2\,\Delta\gamma}. \tag{6}$$

Negative values correspond to decreasing brightness with increasing solar elongation, and more negative values indicate a steeper circumsolar aureole. In the subsequent analysis, this gradient is evaluated over 0–6° using the radiance-linear HDR estimates as input.
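Equations (5) and (6) can be sketched as a masked median per ring followed by a central difference. The implementation below is hypothetical. The first and last rings have no central difference and are returned as NaN. Rings with no non-saturated pixels also yield NaN medians, which then propagate to the neighboring gradients.

```python
import numpy as np


def ring_medians(values, ring_idx, thresholds, valid=None):
    """Eq. (5): m_j = median of valid pixels in ring j whose value lies below T_j."""
    values = np.asarray(values, dtype=float)
    ring_idx = np.asarray(ring_idx)
    valid = np.ones(values.shape, dtype=bool) if valid is None else np.asarray(valid, dtype=bool)
    m = np.full(len(thresholds), np.nan)
    for j, T in enumerate(thresholds):
        sel = values[valid & (ring_idx == j) & (values < T)]   # saturated pixels excluded
        if sel.size:
            m[j] = np.median(sel)
    return m


def radial_gradient(m, delta_deg):
    """Eq. (6): G_j = (m_{j+1} - m_{j-1}) / (2 * delta); NaN for the first and last ring."""
    m = np.asarray(m, dtype=float)
    G = np.full(m.shape, np.nan)
    G[1:-1] = (m[2:] - m[:-2]) / (2.0 * delta_deg)
    return G
```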

In physical terms, the saturated-area fraction $S_j$ quantifies how much of the inner circumsolar region is effectively unusable because it is clipped or near-clipped. It therefore measures the available highlight headroom around the solar disk. The radial gradient $G_j$ describes how fast brightness decays with solar elongation and thus captures the shape of the circumsolar aureole. Together, these two metrics describe complementary aspects of circumsolar radiometry: where information is lost to saturation and how well the remaining aureole structure is preserved.

6. Results: Circumsolar Metric Comparison

In this section, results derived from the metrics defined in Section 5 are presented. RAW frames are split into R, G, and B planes at half resolution. Throughout, the saturated-area fraction $S_j$ is evaluated over 0–4° and the radial gradient $G_j$ over 0–6°. Scenes below a minimum solar elevation are excluded. Aggregation uses ring-wise daily medians followed by means across days.
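The two-stage aggregation can be sketched as follows. Per-timestamp ring vectors are grouped by day, reduced to a ring-wise daily median, and then averaged across days. The record layout is a hypothetical choice for illustration. Filtering by solar elevation is assumed to happen before this step. NaN entries, such as undefined end-ring gradients, are ignored by both reductions.

```python
from collections import defaultdict

import numpy as np


def aggregate_days(records):
    """Ring-wise daily medians, then the mean across days.

    records: iterable of (day, per_ring_values), one 1-D array per timestamp
    (one entry per ring, NaN where undefined).
    """
    by_day = defaultdict(list)
    for day, vals in records:
        by_day[day].append(np.asarray(vals, dtype=float))
    daily_medians = [np.nanmedian(np.vstack(v), axis=0) for v in by_day.values()]
    return np.nanmean(np.vstack(daily_medians), axis=0)
```

Taking medians within a day before averaging across days keeps a single day with many timestamps from dominating the result.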

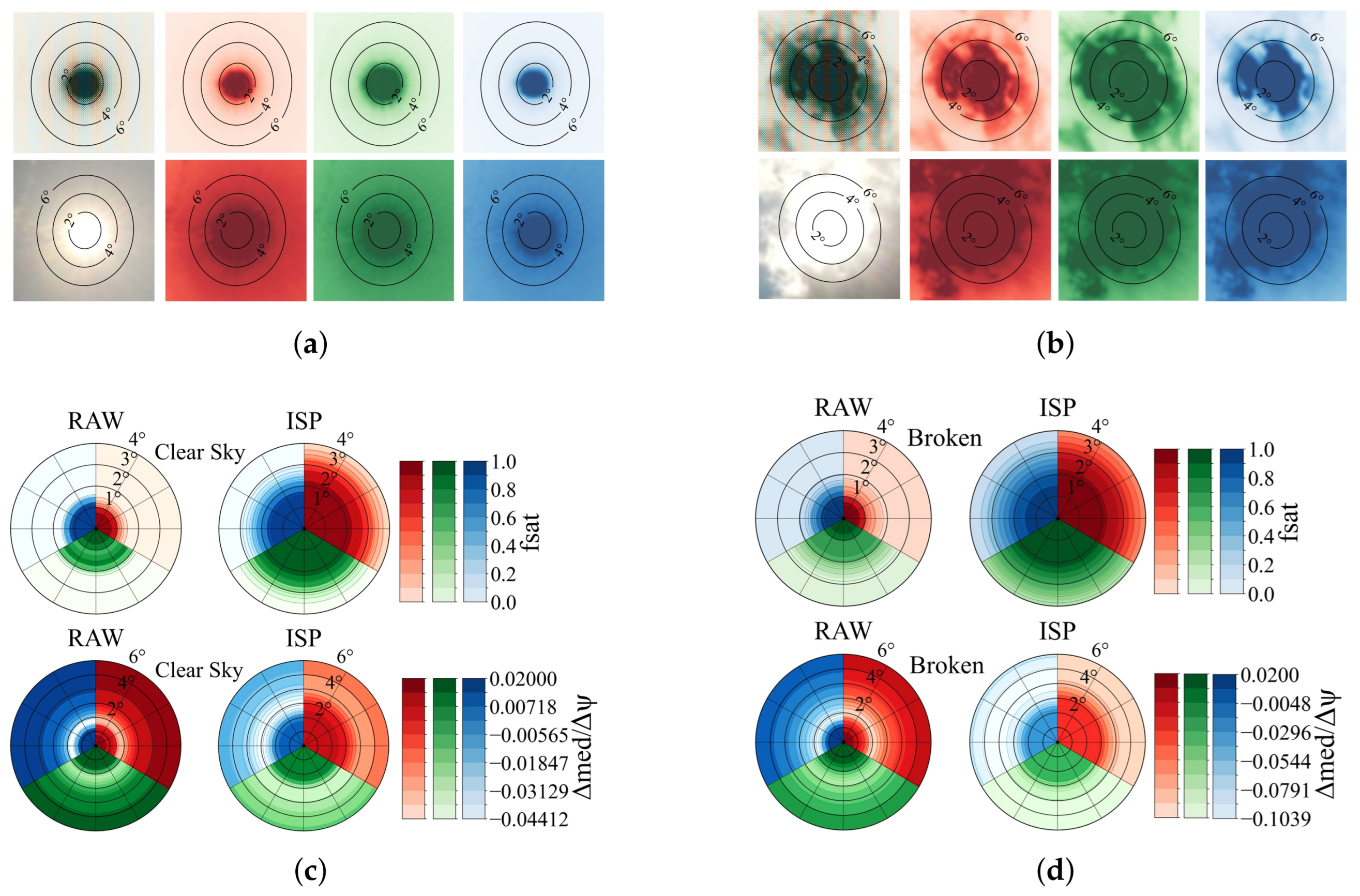

Figure 4 summarizes the results.

Panels (a) and (b) show representative Sun-centered crops (6°) contrasting RAW–HDR and ISP–HDR across the R/G/B channels. Panels (c) and (d) show polar aggregates (saturated-area fraction over 0–4° and radial gradient over 0–6°) with common per-metric color scales. The datasets comprise four clear-sky days (89,712 timestamps) and twelve broken-cloud days (158,472 timestamps).

In the RAW domain, inner-ring saturation follows a consistent per-channel ordering. After ISP processing, this ordering changes to $S^{R} > S^{G} > S^{B}$: red becomes most prone to near-clipping, green is intermediate, and blue remains least affected. Broken-cloud conditions increase saturation in all channels but do not change these orderings.

For the radial fall-off ($G_j$), RAW is steeper (more negative) in every channel, whereas ISP uniformly flattens the profile. Blue tends to retain the steepest residual decline and red the flattest, with green in between. The corresponding RGB-mean effects are summarized in Table 4.

Overall, RAW–HDR shows about a factor of two lower inner-ring saturation in 0–4° than ISP–HDR, with the reduction slightly stronger under broken cloud (Table 4). Over 0–6°, RAW–HDR also preserves stronger circumsolar gradients: the gradient magnitude $|G_j|$ is roughly three-quarters larger than in ISP–HDR. Equivalently, the ISP gradient magnitude is roughly 40% smaller, since 1/1.75 ≈ 0.57. In summary, for the system studied here, RAW–HDR maintains circumsolar headroom and gradient fidelity better than the operational ISP mode.

7. Discussion

The results show that, for the system studied here, radiance-linear RAW–HDR consistently reduces inner-ring saturation and preserves steeper circumsolar gradients compared with ISP–HDR. In the Sun-centered framework of Section 5, ISP–HDR exhibits a higher saturated-area fraction $S_j$ (0–4°) and a flatter median-based radial profile (0–6°) under both clear-sky and broken-cloud conditions.

7.1. Interpretation of the Circumsolar Metrics

The saturated-area fraction in the inner rings quantifies highlight headroom around the solar disk. Larger values of $S_j$ indicate that a greater angular region is clipped or near-clipped and therefore cannot encode radiance variations. Information on cloud edges and aureole structure is then lost in this region.

The median-based radial gradient characterizes the steepness of the aureole fall-off as a function of solar elongation. Steeper (more negative) gradients correspond to a better-resolved circumsolar aureole, whereas flatter gradients indicate loss of contrast due to clipping or nonlinear compression. Together, the saturated-area fraction $S_j$ and the radial gradient $G_j$ quantify complementary aspects of circumsolar radiometry (headroom and shape), and both have a direct physical interpretation.

7.2. Mechanisms Behind RAW–HDR and ISP–HDR Differences

In the RAW domain, the relative responses of the red, green, and blue channels are governed by the sensor’s spectral quantum efficiency, the Bayer color-filter array, and the IR-cut filter. For the camera used here, this combination yields the highest effective response in green, a similar or slightly lower response in blue, and the lowest response in red. With fixed analog gain and disabled automatic exposure and white balance, this leads to the observed saturation ordering for RAW–HDR in the inner rings.

In the ISP domain, processing is optimized for perceptual appearance. After demosaicing, white balance applies per-channel gains to achieve a neutral gray axis, and a color-correction matrix maps camera RGB to the output space. Because RAW responses are typically green-dominant, daylight scenes often require stronger scaling of red than of green and blue, which reduces headroom in the red channel. Subsequent tone mapping then operates on these up-gained channels. In the circumsolar region, where radiance is high and the spectrum is relatively warm, the red channel reaches the tone-curve knee or clip first. This explains the shift in saturation ordering to $S^{R} > S^{G} > S^{B}$ for ISP–HDR and the increased red contribution to $S_j$, as well as the flatter aureole gradients.

RAW–HDR, by contrast, merges exposure-normalized frames in a linear sensor domain and applies any compression as an explicit monotone tone curve after fusion. This avoids combining per-channel white-balance scaling and tone mapping with multi-exposure fusion, and thereby preserves more circumsolar headroom and gradient information for the system studied here.

7.3. Implications and Limitations

The observed differences are directly relevant for several ASI-based applications. A smaller saturated footprint and steeper circumsolar gradients increase the number of usable pixels near the solar disk, which is favorable for cloud–edge detection and segmentation. Methods for image-based irradiance retrieval (GHI, DHI, DNI, POA/GTI) rely on the structure of the circumsolar aureole when partitioning direct and diffuse components; a better-resolved aureole is expected to stabilize DNI estimates and partial-occlusion states. Likewise, aerosol-related inversions that exploit near-Sun radiance can benefit from preserved gradients within a few degrees of the solar disk. The present work does not implement new retrieval algorithms but provides a quantified characterization of the circumsolar radiance field that can be used in such task-level comparisons.

Several limitations should be noted. Temporal pairing between RAW–HDR and ISP–HDR frames is imperfect because brackets were alternated at 15 s intervals and paired within a fixed time tolerance. Aggregation via ring-wise daily medians and across-day means reduces, but does not eliminate, the influence of sub-minute cloud evolution. The analysis is based on a single all-sky system (sensor, optics, ISP tuning). Quantitative values of $S_j$ and the radial gradient $G_j$ will therefore differ for other hardware, filters, and ISP parameters, even though the evaluation framework itself is generic. Computational cost and storage requirements are higher for RAW–HDR than for ISP–HDR, and these practical trade-offs were not quantified here. Finally, radiance linearity is understood in a sensor-domain sense. Pixel codes are treated as proportional to scene radiance within the in-band response of the silicon CMOS sensor under fixed analog gain; this does not imply absolute spectroradiometry or cross-device equivalence.

8. Conclusions

This work compared exposure-series HDR in a radiance-linear sensor domain (RAW–HDR) with an operational ISP-based HDR mode (ISP–HDR) for an all-sky camera in the circumsolar region, using a Sun-centered, geometry-aware framework based on inner-ring saturation and aureole gradients.

For the investigated system, RAW–HDR provided systematically lower inner-ring saturation and steeper circumsolar gradients than ISP–HDR across both clear-sky and broken-cloud conditions. The circumsolar neighborhood around the solar disk remained usable over a larger angular range and retained a sharper aureole fall-off when exposure fusion was performed in the radiance-linear RAW domain.

A large share of existing all-sky imaging studies relies on embedded vendor HDR pipelines in the ISP domain, for example in widely used commercial all-sky cameras such as Mobotix-based systems. The results presented here show that, for the configuration studied, such ISP-domain processing can substantially reduce circumsolar headroom compared with radiance-linear RAW merging. The intention of this work is to make this loss of circumsolar detail explicit and to highlight the potential gains that can be obtained by operating in a radiance-linear RAW domain when circumsolar radiometry is a priority.

The Sun-centered, ring-based metrics introduced in this study provide a compact and reproducible way to characterize circumsolar headroom and gradient preservation across different cameras and HDR configurations, independently of any specific downstream algorithm. In this sense, the study quantifies the differences between RAW–HDR and ISP–HDR for a specific all-sky system under realistic sky conditions and introduces a geometry-aware evaluation protocol that can be reused when documenting HDR strategies in future all-sky imaging deployments.

9. Outlook

The present results suggest that it is worthwhile to test radiance-linear RAW–HDR inputs explicitly in downstream applications rather than simply accepting circumsolar information loss.

A first line of work is irradiance retrieval from all-sky radiance maps, including global horizontal (GHI), diffuse horizontal (DHI), direct normal (DNI), and plane-of-array/global tilted irradiance (POA/GTI). Methods such as the PyranoCam approach [21] and related vision-based models [22,23,24,25,26,27,28,29] provide a framework in which RAW–HDR and ISP–HDR inputs can be compared in terms of bias and error for different sky conditions and solar geometries.

A second line of work is short-horizon nowcasting of ramp events under broken cloud [30,31,32,33,34,35,36,37,38,39,40]. Here, the impact of RAW-domain HDR on the timing and magnitude of Sun–cloud occlusions can be quantified explicitly by re-running existing nowcasting models with alternative HDR configurations and evaluating changes in forecast skill.

Third, cloud detection, segmentation, and classification [20,41,42] can be revisited by training or evaluating algorithms on RAW–HDR versus ISP–HDR inputs, with a focus on performance in the circumsolar neighborhood. Finally, aerosol-related inversions that exploit the near-Sun aureole [43,44,45] provide a setting in which the preserved radial gradients from RAW–HDR can be linked directly to retrieval accuracy.

These task-level comparisons would extend the present, system-specific radiometric assessment by quantifying how circumsolar headroom and gradient preservation translate into changes in downstream performance for representative all-sky imager applications.

Author Contributions

Conceptualization, P.M., J.K.T., J.G., and M.A.; methodology, P.M., J.G., and M.A.; software, P.M. and J.G.; validation, P.M., M.A., and J.G.; writing—original draft, P.M.; writing—review and editing, all authors; supervision, S.H. and S.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was co-financed by the Austrian Research Promotion Agency (FFG), Project AUTOSKY (No. 923640), and Wematics FlexCo, with additional institutional support (APC) from the University of Applied Sciences Bonn–Rhein–Sieg (H-BRS).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available from the corresponding author upon reasonable request. There are no legal or ethical restrictions on data access. A public repository with a DOI will be linked in the final published version.

Acknowledgments

The authors thank DTU researcher Jacob K. Thorning for installing and maintaining the PyranoVision system.

Conflicts of Interest

Authors Paul Matteschk, Max Aragon, and Jose Gomez are employed by the company Wematics FlexCo. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Abbreviations

| Abbreviation | Definition |
|---|---|
| AE | Auto exposure |
| AOD | Aerosol optical depth |
| ASI | All-sky imager |
| AWB | Auto white balance |
| CMOS | Complementary metal–oxide–semiconductor |
| DHI | Diffuse horizontal irradiance |
| DN | Digital number (sensor counts) |
| DNI | Direct normal irradiance |
| DTU | Technical University of Denmark |
| FOV | Field of view |
| GHI | Global horizontal irradiance |
| GTI | Global tilted irradiance |
| HDR | High dynamic range |
| ISP | Image signal processor |
| ISP–HDR | ISP-domain HDR image (vendor pipeline) |
| LDR | Low dynamic range (single exposure) |
| ND | Neutral density (filter) |
| POA | Plane of array |
| RAW–HDR | Radiance-linear HDR merge in RAW domain |
| RGB | Red–green–blue color space |
| RGGB | Bayer mosaic pattern (R–G–G–B) |
| SBC | Single-board computer |
| SSI | Surface solar irradiance |
| UTC | Coordinated Universal Time |
| WB | White balance |
| WRR | World Radiometric Reference |

References

- Stumpfel, J.; Jones, A.; Wenger, A.; Tchou, C.; Hawkins, T.; Debevec, P. Direct HDR Capture of the Sun and Sky. In Proceedings of the AFRIGRAPH 2004: 3rd International Conference on Computer Graphics, Virtual Reality, Visualisation and Interaction in Africa, Stellenbosch, South Africa, 3–5 November 2004; ACM: New York, NY, USA, 2004; pp. 145–149.

- Cho, Y.; Poletto, A.L.; Kim, D.H.; Karmann, C.; Andersen, M. Comparative analysis of LDR vs. HDR imaging: Quantifying luminosity variability and sky dynamics through complementary image processing techniques. Build. Environ. 2025, 269, 112431.

- Alonso-Montesinos, J.; Batlles, F.J.; Villarroel, C.; Ayala, R.; Burgaleta, J.I. Determination of the sun area in sky camera images using radiometric data. Energy Convers. Manag. 2014, 78, 24–31.

- Antuña-Sánchez, J.C.; Román, R.; Cachorro, V.E.; Toledano, C.; López, C.; González, R.; Mateos, D.; Calle, A.; de Frutos, Á.M. Relative sky radiance from multi-exposure all-sky camera images. Atmos. Meas. Tech. 2021, 14, 2201–2217.

- Debevec, P.E.; Malik, J. Recovering High Dynamic Range Radiance Maps from Photographs. In Proceedings of the SIGGRAPH 97, Los Angeles, CA, USA, 3–8 August 1997; pp. 369–378.

- Robertson, M.A.; Borman, S.; Stevenson, R.L. Dynamic Range Improvement Through Multiple Exposures. In Proceedings of the IEEE International Conference on Image Processing (ICIP), Kobe, Japan, 24–28 October 1999; Volume 3, pp. 159–163. Available online: https://dblp.org/rec/conf/icip/RobertsonBS99 (accessed on 19 October 2025).

- Mertens, T.; Kautz, J.; Van Reeth, F. Exposure Fusion: A Simple and Practical Alternative to High Dynamic Range Photography. Comput. Graph. Forum 2009, 28, 161–171.

- ISO 9060:2018; Solar Energy—Specification and Classification of Instruments for Measuring Hemispherical Solar and Direct Solar Radiation. 2018. Available online: https://www.iso.org/standard/67375.html (accessed on 1 December 2025).

- ISO/IEC 17025:2017; General Requirements for the Competence of Testing and Calibration Laboratories. 2017. Available online: https://www.iso.org/standard/66912.html (accessed on 1 December 2025).

- Mills, D.; Martin, J.; Burbank, J.; Kasch, W. Network Time Protocol Version 4: Protocol and Algorithms Specification. RFC 5905, Internet Engineering Task Force (IETF), 2010. Available online: https://www.rfc-editor.org/info/rfc5905 (accessed on 1 December 2025).

- The Picamera2 Library. 2023. Available online: https://datasheets.raspberrypi.com/camera/picamera2-manual.pdf (accessed on 1 December 2025).

- libcamera—A Complex Camera Support Library for Linux. Available online: https://libcamera.org/ (accessed on 1 December 2025).

- libcamera API Documentation. 2025. Available online: https://libcamera.org/api-html/ (accessed on 1 December 2025).

- Camera Software (libcamera/rpicam-apps). 2024. Available online: https://www.raspberrypi.com/documentation/computers/camera_software.html (accessed on 1 December 2025).

- Sengupta, M.; Habte, A.; Wilbert, S.; Gueymard, C.A.; Remund, J.; Lorenz, E.; van Sark, W.; Jensen, A.R. Best Practices Handbook for the Collection and Use of Solar Resource Data for Solar Energy Applications, 4th ed.; Technical Report NREL/TP-5D00-88300; Prepared with IEA PVPS Task 16 Experts; National Renewable Energy Laboratory (NREL): Golden, CO, USA, 2024.

- Antuña-Sánchez, J.C.; Román, R.; Bosch, J.L.; Toledano, C.; Mateos, D.; González, R.; Cachorro, V.; de Frutos, Á. ORION software tool for the geometrical calibration of all-sky cameras. PLoS ONE 2022, 17, e0265959.

- Urquhart, B.; Kurtz, B.; Kleissl, J. Sky camera geometric calibration using solar observations. Atmos. Meas. Tech. 2016, 9, 4279–4294.

- Blum, N.; Matteschk, P.; Fabel, Y.; Nouri, B.; Román, R.; Zarzalejo, L.F.; Antuña-Sánchez, J.C.; Wilbert, S. Geometric calibration of all-sky cameras using sun and moon positions: A comprehensive analysis. Sol. Energy 2025, 295, 113476.

- Paletta, Q.; Lasenby, J. A Temporally Consistent Image-based Sun Tracking Algorithm for Solar Energy Forecasting Applications. arXiv 2022, arXiv:2012.01059.

- Dev, S.; Savoy, F.M.; Lee, Y.H.; Winkler, S. High-dynamic-range imaging for cloud segmentation. Atmos. Meas. Tech. 2018, 11, 2041–2049.

- Blum, N.; Wilbert, S.; Nouri, B.; Lezaca, J.; Huckebrink, D.; Kazantzidis, A.; Heinemann, D.; Zarzalejo, L.F.; Jiménez, M.J.; Pitz-Paal, R. Measurement of diffuse and plane of array irradiance by a combination of a pyranometer and an all-sky imager. Sol. Energy 2022, 232, 232–247.

- Paletta, Q.; Terrén-Serrano, G.; Nie, Y.; Li, B.; Bieker, J.; Zhang, W.; Dubus, L.; Dev, S.; Feng, C. Advances in Solar Forecasting: Computer Vision with Deep Learning. Adv. Appl. Energy 2023, 11, 100150.

- Dev, S.; Savoy, F.M.; Lee, Y.H.; Winkler, S. Estimating Solar Irradiance Using Sky Imagers. Atmos. Meas. Tech. 2019, 12, 5417–5429.

- Scolari, E.; Sossan, F.; Haure-Touzé, M.; Paolone, M. Local estimation of the global horizontal irradiance using an all-sky camera. Sol. Energy 2018, 173, 1225–1235.

- Sánchez-Segura, C.D.; Valentín-Coronado, L.; Peña-Cruz, M.I.; Díaz-Ponce, A.; Moctezuma, D.; Flores, G.; Riveros-Rosas, D. Solar irradiance components estimation based on a low-cost sky-imager. Sol. Energy 2021, 220, 269–281.

- Chu, T.P.; Guo, J.H.; Leu, Y.G.; Chou, L.F. Estimation of solar irradiance and solar power based on all-sky images. Sol. Energy 2023, 249, 495–506.

- Maciel, J.N.; Gimenez Ledesma, J.J.; Ando Junior, O.H. Hybrid prediction method of solar irradiance applied to short-term photovoltaic energy generation. Renew. Sustain. Energy Rev. 2024, 192, 114185.

- Mercier, T.M.; Sabet, A.; Rahman, T. Vision transformer models to measure solar irradiance using sky images in temperate climates. Appl. Energy 2024, 362, 122967.

- Rajagukguk, R.A.; Lee, H. Sky-image-based sun-blocking index and PredRNN++ for accurate short-term solar irradiance forecasting. Build. Environ. 2025, 274, 112429.

- Schmidt, T.; Kalisch, J.; Lorenz, E.; Heinemann, D. Evaluating the spatio-temporal performance of sky-imager-based solar irradiance analysis and forecasts. Atmos. Chem. Phys. 2016, 16, 3399–3412.

- Caldas, M.; Alonso-Suárez, R. Very short-term solar irradiance forecast using all-sky imaging and real-time irradiance measurements. Renew. Energy 2019, 143, 1643–1658.

- Terrén-Serrano, G.; Martínez-Ramón, M. Processing of global solar irradiance and ground-based infrared sky images for solar nowcasting and intra-hour forecasting applications. Sol. Energy 2023, 264, 111968.

- Nouri, B.; Fabel, Y.; Wilbert, S.; Blum, N.; Schnaus, D.; Zarzalejo, L.F.; Ugedo, E.; Triebel, R.; Kowalski, J.; Pitz-Paal, R. Uncertainty quantification for deep learning-based solar nowcasting from all-sky imager data. Sol. RRL 2024, 8, 2400468.

- Fabel, Y.; Nouri, B.; Wilbert, S.; Blum, N.; Schnaus, D.; Triebel, R.; Zarzalejo, L.F.; Ugedo, E.; Kowalski, J.; Pitz-Paal, R. Combining Deep Learning and Physical Models: A Benchmark Study on All-Sky Imager-Based Solar Nowcasting Systems. Sol. RRL 2024, 8, 2300808.

- Hendrikx, N.Y.; Barhmi, K.; Visser, L.R.; de Bruin, T.A.; Pó, M.; Salah, A.A.; van Sark, W.G.J.H.M. All sky imaging-based short-term solar irradiance forecasting with Long Short-Term Memory networks. Sol. Energy 2024, 272, 112463.

- Ansong, M.; Huang, G.; Nyang’onda, T.N.; Musembi, R.J.; Richards, B.S. Very short-term solar irradiance forecasting based on open-source low-cost sky imager and hybrid deep-learning techniques. Sol. Energy 2025, 294, 113516.

- Bayasgalan, O.; Akisawa, A. Nowcasting Solar Irradiance Components Using a Vision Transformer and Multimodal Data from All-Sky Images and Meteorological Observations. Energies 2025, 18, 2300.

- Gregor, P.; Zinner, T.; Jakub, F.; Mayer, B. Validation of a camera-based intra-hour irradiance nowcasting model using synthetic cloud data. Atmos. Meas. Tech. 2023, 16, 3257–3271.

- Hategan, S.; Stefu, N.; Paulescu, M. An Ensemble Approach for Intra-Hour Forecasting of Solar Resource. Energies 2023, 16, 6608.

- Nouri, B.; Blum, N.; Wilbert, S.; Zarzalejo, L.F. A Hybrid Solar Irradiance Nowcasting Approach: Combining All Sky Imager Systems and Persistence Irradiance Models for Increased Accuracy. Sol. RRL 2022, 6, 2100442.

- Magiera, D.; Fabel, Y.; Nouri, B.; Blum, N.; Schnaus, D.; Zarzalejo, L.F. Advancing semantic cloud segmentation in all-sky images: A semi-supervised learning approach with ceilometer-driven weak labels. Sol. Energy 2025, 300, 113822.

- Li, X.; Wang, B.; Qiu, B.; Wu, C. An all-sky camera image classification method using cloud cover features. Atmos. Meas. Tech. 2022, 15, 3629–3639.

- Román, R.; Antuña-Sánchez, J.C.; Cachorro, V.E.; Toledano, C.; Torres, B.; Mateos, D.; Fuertes, D.; López, C.; González, R.; Lapionok, T.; et al. Retrieval of aerosol properties using relative radiance measurements from an all-sky camera. Atmos. Meas. Tech. 2022, 15, 407–433.

- Logothetis, S.A.; Giannaklis, C.P.; Salamalikis, V.; Tzoumanikas, P.; Raptis, P.I.; Amiridis, V.; Eleftheratos, K.; Kazantzidis, A. Aerosol Optical Properties and Type Retrieval via Machine Learning and an All-Sky Imager. Atmosphere 2023, 14, 1266.

- Dubovik, O.; King, M.D. A flexible inversion algorithm for retrieval of aerosol optical properties from Sun and sky radiance measurements. J. Geophys. Res. Atmos. 2000, 105, 20673–20696.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).