An Improved HRNetV2-Based Semantic Segmentation Algorithm for Pipe Corrosion Detection in Smart City Drainage Networks

Abstract

1. Introduction

- An improved HRNetV2 architecture is proposed for precise segmentation of corrosion regions in complex drainage pipe environments. For the first time, this framework systematically integrates the Convolutional Block Attention Module (CBAM) and a Lightweight Pyramid Pooling Module (LitePPM), jointly improving the network’s ability to perceive multi-scale defects and resist background interference.

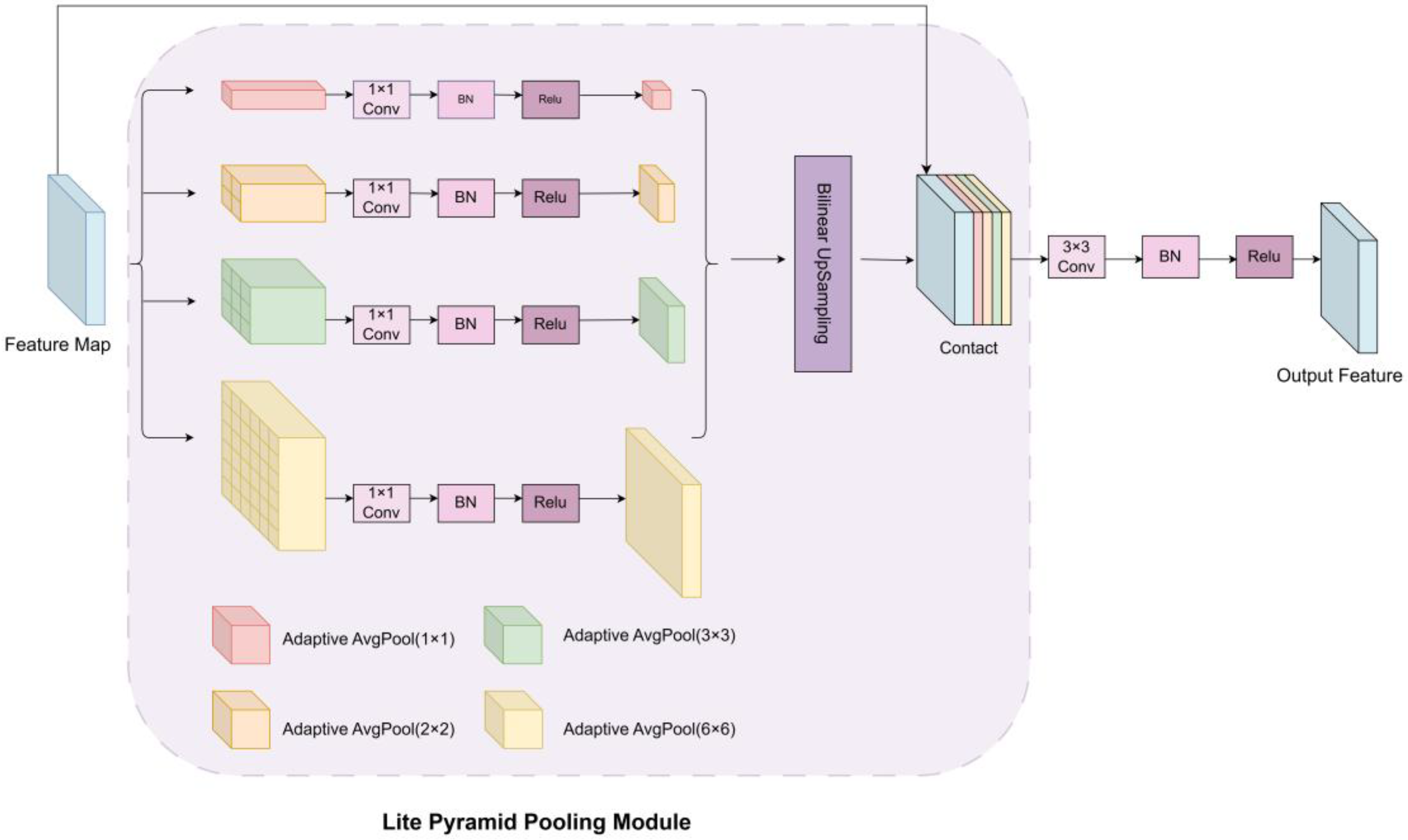

- Inspired by the Pyramid Scene Parsing Network (PSPNet), a LitePPM is designed. This module uses adaptive average pooling at multiple scales, followed by convolution and feature concatenation to extract and fuse contextual information, expanding the network’s receptive field while controlling parameter growth.

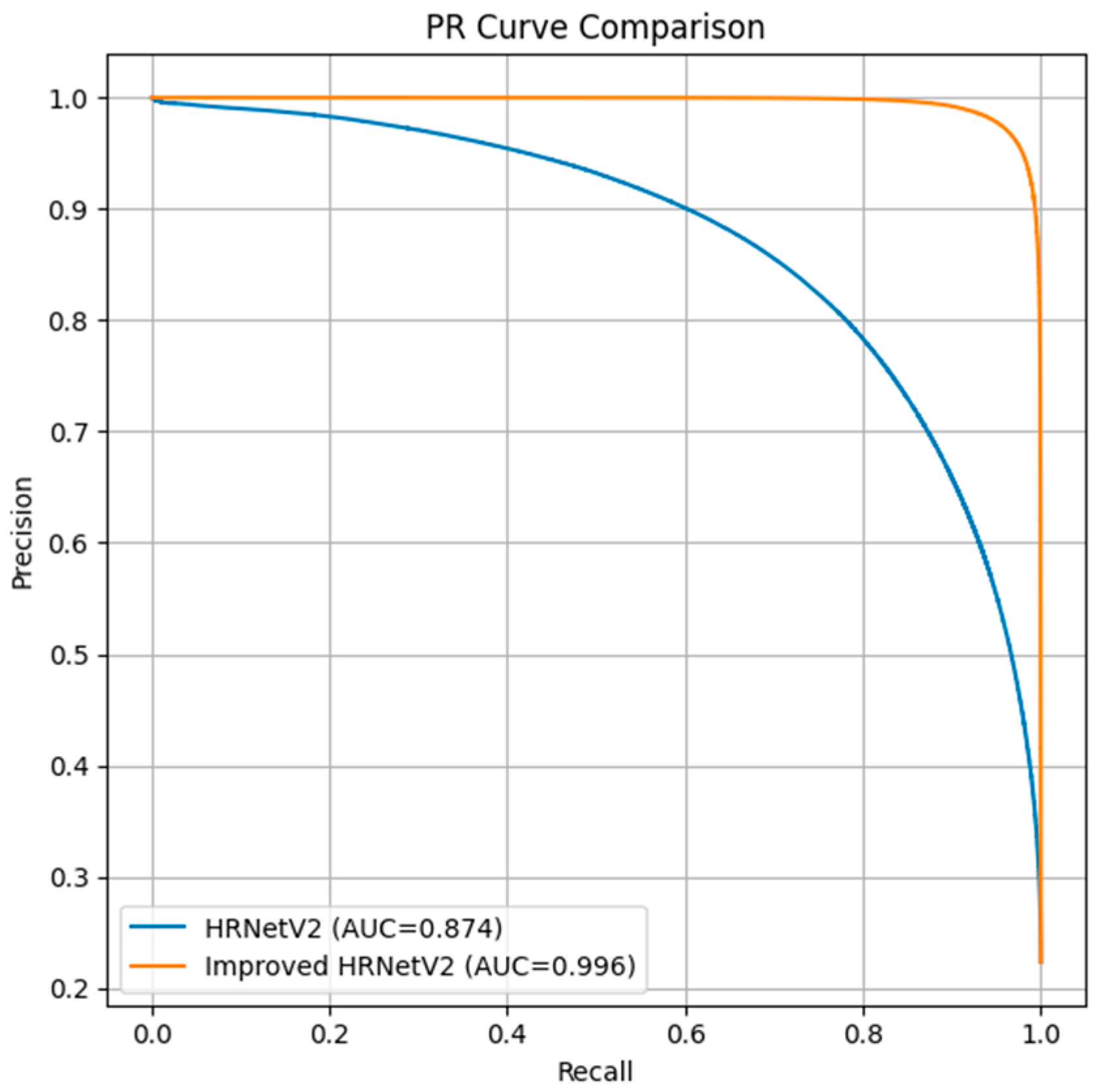

- Comprehensive experiments on a self-built drainage pipe defect dataset validate the effectiveness of the proposed approach. Results show that the model significantly outperforms mainstream U-Net variants and the original HRNetV2 in terms of segmentation accuracy, mIoU and Recall.
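
The LitePPM data flow named in the contributions above (multi-scale adaptive average pooling, convolution, concatenation) can be illustrated with a small framework-free NumPy sketch. This is not the authors' implementation. The pooling scales `(1, 2, 3, 6)` are borrowed from PSPNet, and the channel reduction to 16, the nearest-neighbour upsampling, the random weights, and all function names are assumptions made only for illustration.

```python
import numpy as np


def _ceil_div(a, b):
    """Integer ceiling division."""
    return -(-a // b)


def adaptive_avg_pool2d(x, out_size):
    """Average-pool a (C, H, W) array down to (C, out_size, out_size)."""
    c, h, w = x.shape
    out = np.empty((c, out_size, out_size), dtype=x.dtype)
    for i in range(out_size):
        # Floor/ceil bin edges so that the bins cover the whole feature map.
        r0, r1 = (i * h) // out_size, _ceil_div((i + 1) * h, out_size)
        for j in range(out_size):
            c0, c1 = (j * w) // out_size, _ceil_div((j + 1) * w, out_size)
            out[:, i, j] = x[:, r0:r1, c0:c1].mean(axis=(1, 2))
    return out


def conv1x1(x, weight):
    """1x1 convolution: mix channels per pixel; weight is (C_out, C_in)."""
    return np.einsum("oc,chw->ohw", weight, x)


def upsample_nearest(x, h, w):
    """Resize a (C, h0, w0) array to (C, h, w) by nearest-neighbour indexing."""
    rows = np.arange(h) * x.shape[1] // h
    cols = np.arange(w) * x.shape[2] // w
    return x[:, rows][:, :, cols]


def lite_ppm(x, weights, scales=(1, 2, 3, 6)):
    """Pool at each scale, reduce channels, upsample, and concatenate."""
    _, h, w = x.shape
    branches = [x]  # keep the input features alongside the pooled context
    for scale, weight in zip(scales, weights):
        pooled = adaptive_avg_pool2d(x, scale)
        reduced = conv1x1(pooled, weight)
        branches.append(upsample_nearest(reduced, h, w))
    return np.concatenate(branches, axis=0)


rng = np.random.default_rng(0)
features = rng.standard_normal((64, 30, 30))  # hypothetical feature map
scales = (1, 2, 3, 6)                          # assumed, as in PSPNet
weights = [rng.standard_normal((16, 64)) * 0.1 for _ in scales]
fused = lite_ppm(features, weights, scales)
print(fused.shape)  # (128, 30, 30)
```

Reducing each pooled branch to a small number of channels before concatenation is one way to widen the receptive field while keeping parameter growth modest, which is the trade-off the contribution describes.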

2. Materials and Methods

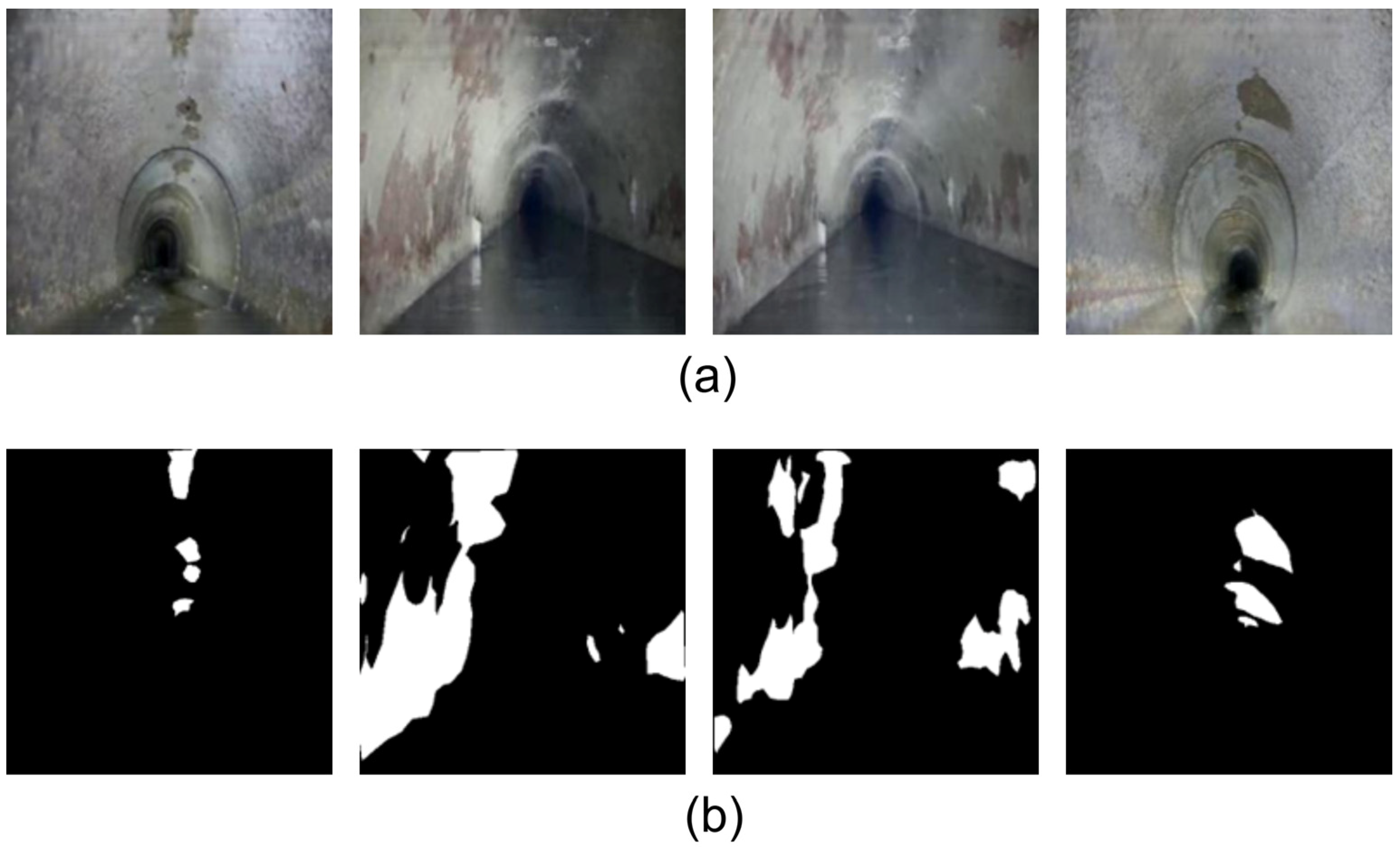

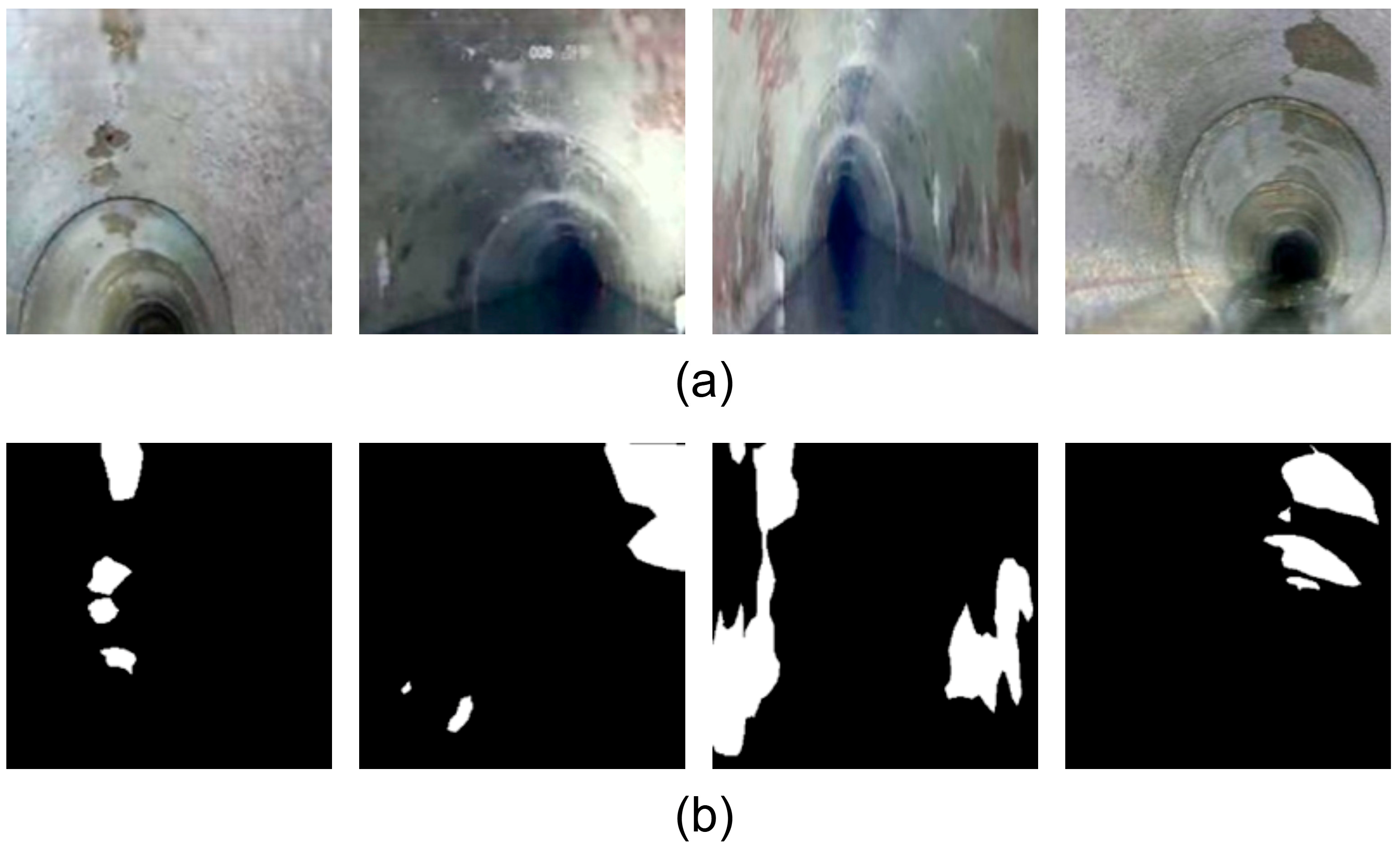

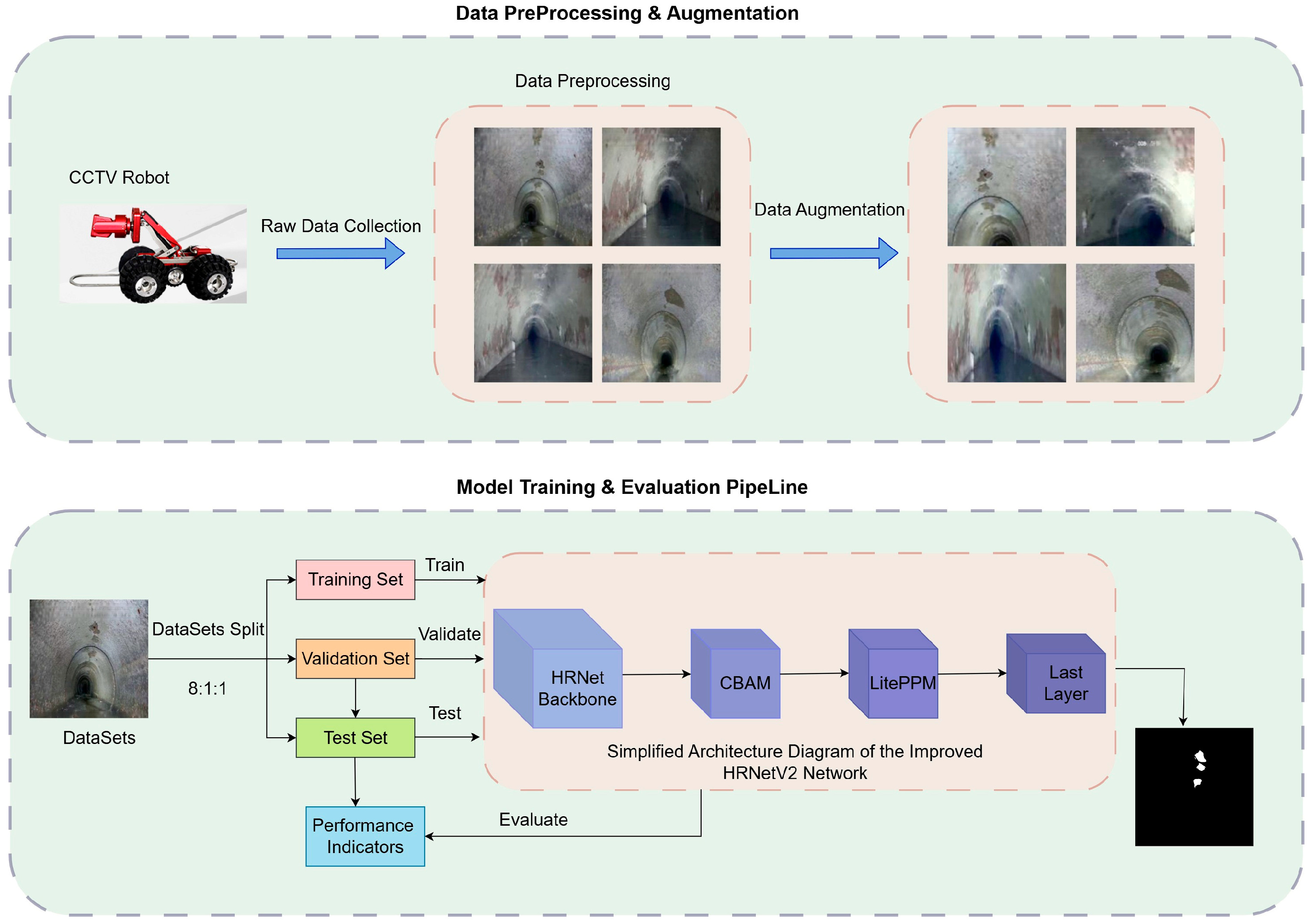

2.1. Drainage Pipeline Data Acquisition

2.2. Data Augmentation

2.3. Semantic Segmentation Network for Corrosion Areas in Drainage Pipelines

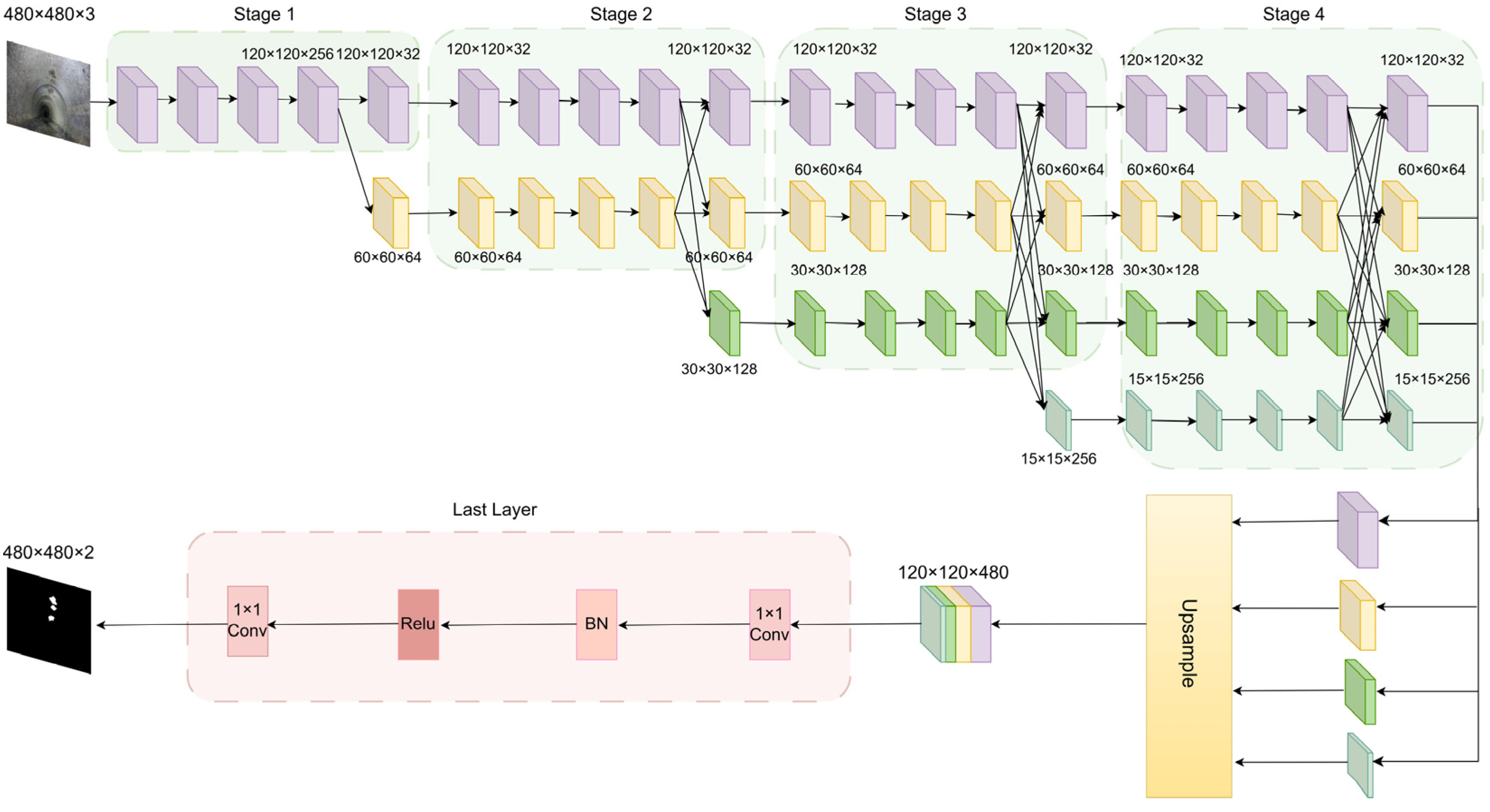

2.3.1. HRNetV2 Semantic Segmentation Model

2.3.2. Optimization of HRNetV2 Semantic Segmentation Model

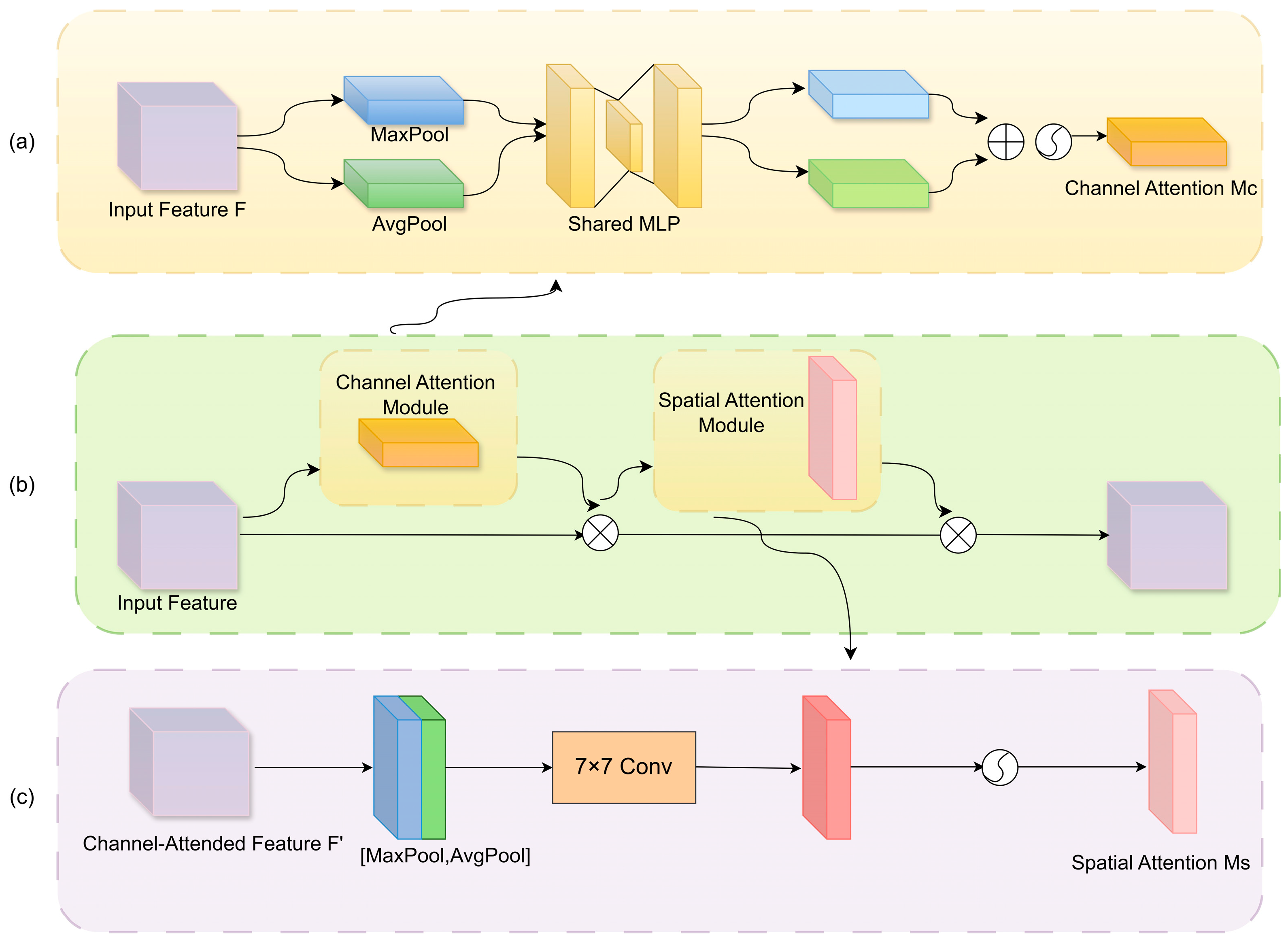

2.3.3. Integration of the CBAM Attention Mechanism

2.3.4. Introduction of the LitePPM Module

3. Experiments and Analysis

3.1. Experimental Platform and Parameters

3.2. Loss Function

3.3. Evaluation Metrics

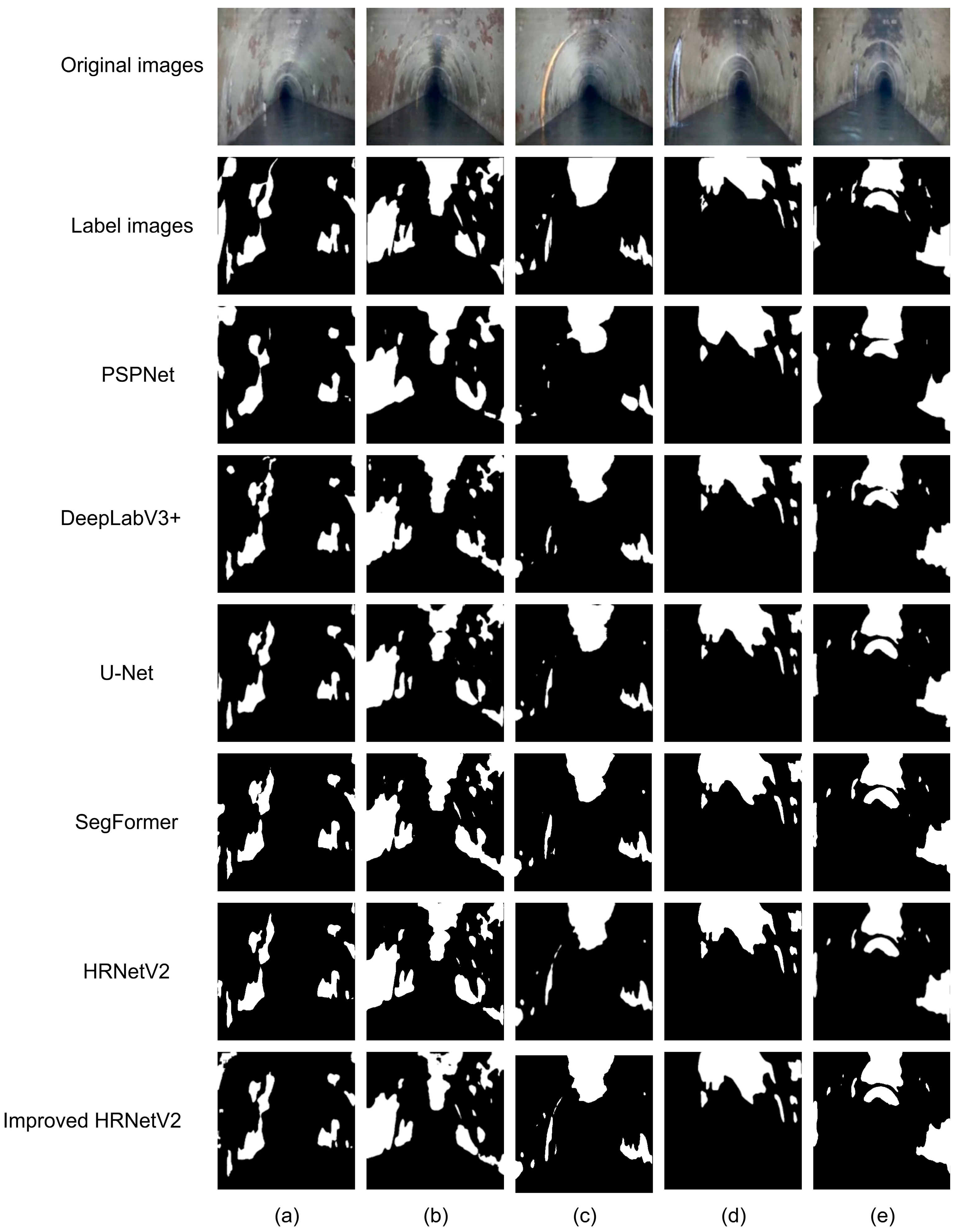

3.4. Experimental Results and Analysis

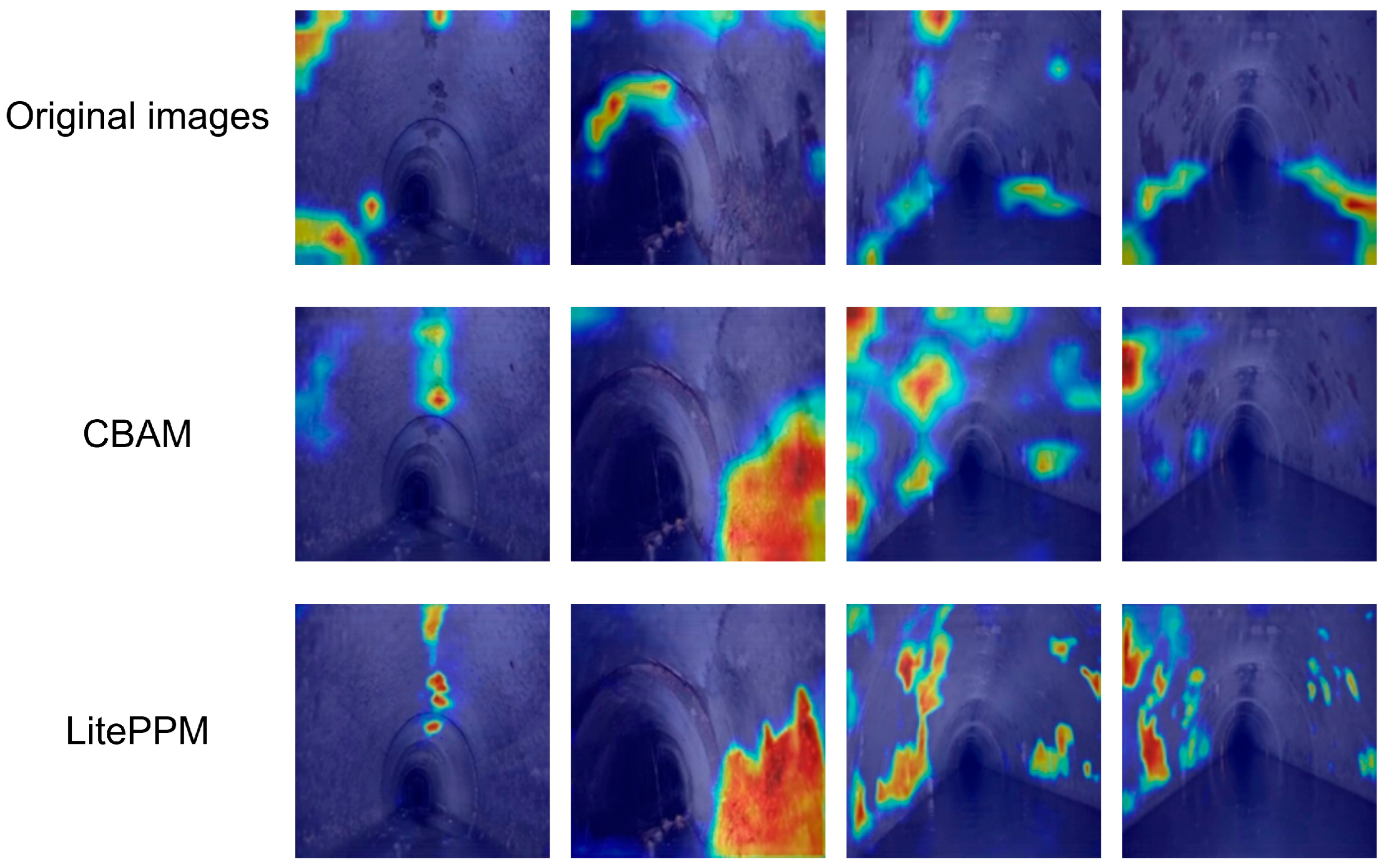

3.5. Ablation, Efficiency, and Visualization Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Full name |
|---|---|
| HRNetV2 | High-Resolution Network Version 2 |
| CBAM | Convolutional Block Attention Module |
| LitePPM | Lightweight Pyramid Pooling Module |
| mIoU | mean Intersection over Union |
| CCTV | Closed-Circuit Television |
| CNNs | Convolutional Neural Networks |
| U-Net | U-shaped convolutional networks |
| CA | Coordinate Attention |
| SE | Squeeze-and-Excitation |
| ASPP | Atrous Spatial Pyramid Pooling |
| ECA | Efficient Channel Attention |
| CRF | Conditional Random Field |
| UAV | Unmanned Aerial Vehicle |
| StyleGAN3 | Style-Based Generative Adversarial Network 3 |
| ResNet | Residual Network |
| PSPNet | Pyramid Scene Parsing Network |
| HRNet | High-Resolution Network |
| HRNetV1 | High-Resolution Network Version 1 |
| HRNetV2P | High-Resolution Network Version 2 Plus |
| SAM | Spatial Attention Module |
| CAM | Channel Attention Module |
| MLP | Multi-Layer Perceptron |
| Dice Loss | Dice Similarity Coefficient Loss |


| Item | Description |
|---|---|
| Total images | 1360 images |
| Image resolution | 480 × 480 pixels |
| Classes defined | Background (0), Corrosion (FS, 1) |
| Annotation tool | LabelMe (pixel-level annotation) |
| Data split | Training (80%, 1088), Validation (10%, 136), Testing (10%, 136) |
| Viewpoints covered | Front views, side views, partial views of pipelines |
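
The split sizes in the dataset table follow directly from the 80/10/10 percentages applied to 1360 images. The sketch below reproduces that arithmetic and shows one possible way to draw such a split. The source does not say how images were assigned to subsets, so the shuffling, the seed, and the function name `split_indices` are assumptions.

```python
import random


def split_indices(n_images, percents=(80, 10, 10), seed=0):
    """Shuffle image indices and cut them into train/val/test lists."""
    if sum(percents) != 100:
        raise ValueError("percents must sum to 100")
    indices = list(range(n_images))
    random.Random(seed).shuffle(indices)  # assumed: a seeded random split
    n_train = n_images * percents[0] // 100
    n_val = n_images * percents[1] // 100
    train = indices[:n_train]
    val = indices[n_train:n_train + n_val]
    test = indices[n_train + n_val:]  # the remainder goes to the test set
    return train, val, test


train, val, test = split_indices(1360)
print(len(train), len(val), len(test))  # 1088 136 136
```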

| Hyperparameter | Value |
|---|---|
| Epoch | 221 |
| Batch Size | 5 |
| Optimizer | SGD |
| Momentum | 0.9 |
| Init Learning Rate | 4 × 10⁻³ |
| Min Learning Rate | 4 × 10⁻⁵ |
| Learning Rate Decay Type | COS |
| Weight Decay | 1 × 10⁻⁴ |
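
To make the learning-rate settings concrete, the sketch below evaluates a cosine decay between the initial and minimum rates listed in the table. It assumes that "COS" denotes plain cosine annealing without warm-up and that "Epoch" is the total number of training epochs. Neither is confirmed by the table, and `TRAIN_CONFIG` and `cosine_lr` are hypothetical names.

```python
import math

# Values copied from the hyperparameter table; the dict layout is illustrative.
TRAIN_CONFIG = {
    "epochs": 221,
    "batch_size": 5,
    "optimizer": "SGD",
    "momentum": 0.9,
    "lr_init": 4e-3,
    "lr_min": 4e-5,
    "weight_decay": 1e-4,
}


def cosine_lr(epoch, total_epochs=221, lr_init=4e-3, lr_min=4e-5):
    """Learning rate at a given epoch under plain cosine annealing (assumed)."""
    progress = epoch / (total_epochs - 1)  # 0.0 at the start, 1.0 at the end
    return lr_min + 0.5 * (lr_init - lr_min) * (1 + math.cos(math.pi * progress))


for epoch in (0, 110, 220):
    print(epoch, f"{cosine_lr(epoch):.2e}")
```

Under these assumptions the rate starts at 4 × 10⁻³, passes through the midpoint of the two bounds halfway through training, and ends at 4 × 10⁻⁵.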

| Network | Backbone | IoU | mIoU | Recall | Accuracy | Precision | F1 |
|---|---|---|---|---|---|---|---|
| DeepLabv3+ | MobileNetV2 | 0.03 | 0.03 | 0.03 | 0.02 | 0.03 | 0.02 |
| PSPNet | MobileNetV2 | 0.02 | 0.02 | 0.02 | 0.01 | 0.02 | 0.02 |
| U-Net | ResNet50 | 0.03 | 0.03 | 0.04 | 98.49 | 0.03 | 0.03 |
| U-Net | VGG16 | 0.02 | 0.02 | 0.03 | 98.48 | 0.03 | 0.03 |
| SegFormer | B2 | 0.03 | 0.03 | 0.03 | 0.02 | 0.03 | 0.02 |
| HRNetV2 | HRNetV2_W18 | 0.01 | 0.02 | 0.03 | 98.41 | 0.04 | 0.03 |
| HRNetV2 | HRNetV2_W32 | 0.02 | 0.03 | 0.02 | 98.51 | 0.02 | 0.02 |
| Improved HRNetV2 | HRNetV2_W32 | 0.02 | 0.03 | 0.02 | 98.54 | 0.03 | 0.03 |

| HRNetV2 | CBAM | LitePPM | IoU | mIoU | Recall | Accuracy | Precision | F1 |
|---|---|---|---|---|---|---|---|---|
| √ | × | × | 0.02 | 0.03 | 0.02 | 98.51 | 0.02 | 0.02 |
| √ | √ | × | 0.02 | 0.03 | 0.03 | 98.52 | 0.03 | 0.03 |
| √ | × | √ | 0.02 | 0.02 | 0.03 | 0.01 | 0.04 | 0.03 |
| √ | √ | √ | 0.02 | 0.03 | 0.02 | 98.54 | 0.03 | 0.03 |

| HRNetV2 | CBAM | LitePPM | Params (M) | FLOPs (G) | GPU Memory (MB) | FPS (Images/s) |
|---|---|---|---|---|---|---|
| √ | × | × | 29.53 | 39.96 | 1112.77 | 5.83 |
| √ | √ | × | 29.55 | 39.60 | 1115.97 | 5.89 |
| √ | × | √ | 30.35 | 37.67 | 1117.82 | 6.31 |
| √ | √ | √ | 31.41 | 35.92 | 1119.57 | 7.21 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gao, L.; Huang, X.; Si, W.; Yang, F.; Qiao, X.; Zhu, Y.; Fu, T.; Zhao, J. An Improved HRNetV2-Based Semantic Segmentation Algorithm for Pipe Corrosion Detection in Smart City Drainage Networks. J. Imaging 2025, 11, 325. https://doi.org/10.3390/jimaging11100325
