Abstract

Image-based 3D reconstruction enables laparoscopic applications such as image-guided navigation and (autonomous) robot-assisted interventions, which require high accuracy. The purpose of this review is to present the accuracy of different techniques and to identify the most promising ones. A systematic literature search with PubMed and Google Scholar covering 2015 to 2023 was conducted following the framework of “Review articles: purpose, process, and structure”. Articles were considered if they presented a quantitative evaluation (root mean squared error and mean absolute error) of the reconstruction error (Euclidean distance between real and reconstructed surface). The search returned 995 articles, which were reduced to 48 articles after applying exclusion criteria. From these, a reconstruction error data set could be generated for the techniques of stereo vision, Shape-from-Motion, Simultaneous Localization and Mapping, deep-learning, and structured light. The reconstruction error varies from below one millimeter to more than ten millimeters, with deep-learning and Simultaneous Localization and Mapping delivering the best results under intraoperative conditions. The high variance results from different experimental conditions. In conclusion, submillimeter accuracy is challenging, but promising image-based 3D reconstruction techniques could be identified. For future research, we recommend computing the reconstruction error for comparison purposes and using ex/in vivo organs as reference objects for realistic experiments.

1. Introduction

Laparoscopy enables minimally invasive surgery (MIS), which reduces patients’ healing time and surgical trauma [1]. It is mostly used therapeutically for resecting tumors and organs, e.g., parts of the liver or the gall bladder, or diagnostically to detect pathological tissue, e.g., peritoneal metastases [2] or endometriosis [3]. Future laparoscopic applications cover image-guided surgery (augmented reality (AR)) [4] or (autonomous) robot-assisted interventions [5]. Image-based 3D reconstruction, which requires accurate depth measurement (distance from camera to object) as well as camera localization, enables these applications by creating a virtual 3D model of the abdomen. A robotic laparoscopic system with 3D measurement ability is offered by Asensus Surgical US, Inc. (Durham, North Carolina, USA) [6], which is based on stereo camera reconstruction.

To provide a recommendation for future research regarding high-accuracy 3D reconstruction techniques, literature from 2015 to 2023 was reviewed [7]. Only articles that measure the reconstruction error, in particular the root mean squared error (RMSE) and the mean absolute error (MAE), were considered. The purpose of this work is to identify the image-based 3D reconstruction technique with the highest accuracy.

Section 2 explains the literature search and gives an overview of the quantitative evaluation. Section 3 presents the review’s results, which are discussed in Section 4, while Section 5 concludes the paper. Before continuing with Section 2, the image-based 3D reconstruction techniques and related work are introduced.

1.1. Image-Based 3D Reconstruction Techniques Used in Laparoscopy

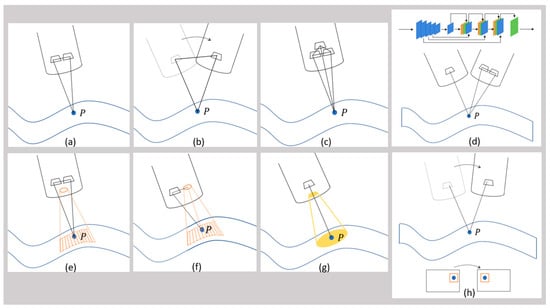

Image-based 3D reconstruction techniques can be divided into passive methods (only the image is used) and active methods (external light is additionally brought onto the object’s surface) [8]. Most of them make use of triangulation to estimate depth (distance from camera to object). Shape-from-Motion (SfM, also known as Structure-from-Motion) [9] (Figure 1b) and Shape-from-Shading (SfS) [10] (Figure 1g) belong to the category Shape-from-X (SfX) and are passive methods. Stereo vision [4] (Figure 1a), trinocular [11] (Figure 1c) or multicamera systems [12], as well as Simultaneous Localization and Mapping (SLAM) [13] (Figure 1h) are passive methods as well. Deep-learning (DL) approaches (Figure 1d) are trained with either mono- or stereoscopic images and can also be assigned to passive methods [14,15,16,17,18,19,20,21,22,23,24]. Active methods are Time-of-Flight (ToF) cameras [25] and Structured Light (SL) [26] (Figure 1e (SL stereo) and Figure 1f (SL mono)). ToF will not be presented in this paper because we could not find any paper from the last nine years that uses ToF for 3D reconstruction in laparoscopy. Finally, Smart Trocar® and Light Field Technology should be mentioned. The first is a trocar equipped with cameras to record the positions of the laparoscope and the instruments [27]. Light Field Technology is another passive method but is barely used in laparoscopy [28].

Figure 1.

Schematic overview of different image-based 3D reconstruction techniques. (a) Stereo vision, the point P is captured by both cameras; (b) Structure-from-Motion (SfM), the point P is captured by the mono camera from two different perspectives; (c) trinocular vision, the point P is captured by the three cameras; (d) deep-learning-based approaches (DL), the point P is captured by either a mono or stereo camera, the blue, green and orange rectangles represent different layers of a DL network; (e) active stereo vision with structured light (SL stereo), the point P is captured by the stereo camera while a pattern (represented by the orange dots) is projected onto the surface; (f) structured light with monocular vision (SL mono), the point P is captured by the mono camera while a pattern (represented by the orange dots) is projected onto the surface; (g) Shape-from-Shading (SfS), the point P is captured by the mono camera and the white light is represented by the yellow circle; (h) Simultaneous Localization and Mapping (SLAM), the point P is captured by the mono camera from two different perspectives, the point P in the image is matched to the point P in the neighboring image.

The category SfX contains algorithms that operate on monocular images. The most common technique within this category is SfM. Here, the motion of the camera yields image pairs, which can be triangulated. Through motion, the system can capture an object from different perspectives. The distance between two images (stereo base or base distance) is variable and depends on the motion [8]. The SfS method analyzes the pixel intensity of monocular images to estimate depth: the more intense a pixel, the closer the object [8,29].

Stereo vision describes a system with two cameras and a fixed stereo base. Stereo matching algorithms search for corresponding points in the image pair, which can be used to calculate the disparity; by applying triangulation, depth can then be determined [8,30,31]. While stereo vision estimates depth, SLAM tracks the camera positions. It originates from the research area of mobile robots and requires feature detection and loop closing. Through camera movement, features change their pixel position on the image sensor, which correlates with the change in camera position [8,32,33].
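The disparity-to-depth step described above can be sketched as follows, assuming a rectified pinhole stereo pair in which depth follows Z = f · B / d (focal length f in pixels, stereo base B, disparity d in pixels). The function name, the parameter names, and the numbers in the usage lines (800 px focal length, 4 mm stereo base) are illustrative assumptions and are not taken from any of the reviewed systems.

```python
import numpy as np


def depth_from_disparity(disparity_px, focal_length_px, baseline_mm):
    """Return depth in mm for a rectified stereo pair: Z = f * B / d.

    Pixels with zero or negative disparity have no valid match and are
    reported as infinitely far away.
    """
    d = np.asarray(disparity_px, dtype=float)
    depth = np.full(d.shape, np.inf)
    valid = d > 0
    depth[valid] = focal_length_px * baseline_mm / d[valid]
    return depth


# Illustrative values only (assumed, not from the reviewed articles).
depths = depth_from_disparity([40.0, 20.0, 0.0],
                              focal_length_px=800.0,
                              baseline_mm=4.0)
print(depths)
```

Because depth is inversely proportional to disparity, a fixed disparity error of one pixel causes a larger depth error at larger working distances, which is one reason why a short stereo base limits the achievable accuracy.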

Trinocular systems belong to the category of multicamera systems and operate comparably to stereo cameras, but with additional cameras [11]. In contrast, ToF cameras (active technique) are based on emitting light impulses, which return after reflection from the object’s surface. The time from emission to return correlates with the object distance (depth) [8,25]. The SL technique requires a projector that creates a pattern on the surface, which is captured by either a mono or stereo camera. Patterns could be dots, grids, lines, individual or random dot patterns. Instead of the white light image, the matching algorithms analyze the pattern, and triangulation is calculated between two cameras (SL stereo) or between the mono camera and the projector (SL mono) [8,34].
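The time-of-flight relation mentioned above can be written out explicitly. The following is a sketch for a pulsed ToF measurement, in which the light travels to the surface and back; the symbols (d for depth, c for the speed of light, Δt for the round-trip time) and the working distance of 100 mm are assumptions chosen for illustration.

```latex
\begin{align}
d &= \frac{c \, \Delta t}{2}
  &&\Longleftrightarrow&
\Delta t &= \frac{2d}{c} \\[4pt]
% Worked example with c \approx 3 \times 10^{8}\,\mathrm{m/s}
d &= 100\,\mathrm{mm}:
  &&&
\Delta t &= \frac{2 \cdot 0.1\,\mathrm{m}}{3 \times 10^{8}\,\mathrm{m/s}} \approx 0.67\,\mathrm{ns} \\
\delta d &= 1\,\mathrm{mm}:
  &&&
\delta t &= \frac{2 \cdot 0.001\,\mathrm{m}}{3 \times 10^{8}\,\mathrm{m/s}} \approx 6.7\,\mathrm{ps}
\end{align}
```

Under these assumptions, resolving depth differences of one millimeter corresponds to timing differences of only a few picoseconds.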

AI approaches contain depth networks (neural networks), which are trained with datasets of either monocular or binocular images. The models are often based on self-supervised or unsupervised convolutional neural networks. SLAM algorithms can run in parallel to provide localization [14,15,16,17,18,19,20,21,22,23,24,35,36,37,38,39].

1.2. Related Work

Several reviews of laparoscopic image-based 3D reconstruction exist, some without the reconstruction error [8,40,41,42,43] and some with it. Those that include the reconstruction error are presented in the following.

Ref. [44] generated a surface reconstruction of organ phantoms (liver, kidney, and stomach) by using a ToF camera and by applying stereo vision to compare it with CT data. Stereo vision outperformed the ToF camera with an accuracy of 1.1–4.1 mm (RMSE) vs. 3.7–8.7 mm (RMSE). SfM was part of the comparison as well, which resulted in 3.5–5.8 mm (RMSE). Ref. [45] presented SfM, SLAM, SfS, DSfM, binocular, trinocular, and multi-ocular systems, SL and ToF. They report that SfM, refined by SfS, could deliver an accuracy of 0.1–0.4 mm (RMSE) on simulated objects, stereo vision could generate an accuracy of 0.45 mm (RMSE) when scanning a skull, a trinocular approach could reconstruct a piece of meat with an accuracy of −0.5 ± 0.57 mm (MAE), a multi-ocular approach resulted in near millimeter accuracy, and an SL approach could reconstruct the inside of a hollow object with <0.1 mm accuracy (RMSE), whereas a ToF endoscope could deliver a 4.5 mm accuracy (RMSE) when reconstructing a lung surface compared to 3.0 mm by stereo vision. Ref. [46] compared four stereo vision approaches using laparoscopic stereo video sequences.

However, it is unclear which approach delivers the highest accuracy because there are not enough data, and these references did not consider deep-learning approaches. Due to those gaps, this review collects more data and considers deep-learning approaches.

1.3. Research of Image-Based 3D Reconstruction in Laparoscopy since 2015

The data presented in this article are an extension of the IFAC conference paper [7]. Compared to Ref. [7], data from 2023 are also listed, and the collected data are classified by reference object, ground truth acquisition, deep-learning network, and stereo or mono camera.

Before going into the details of the quantitative evaluation, the distribution of 3D reconstruction techniques since 2015 is presented. For this purpose, all 72 articles (with and without quantitative evaluation) were sorted by 3D reconstruction technique and publication date (see Table 1; some articles are listed multiple times, which explains the difference between 76 entries and 72 articles) [7]. Stereo vision was very present in the years 2015–2018 and declined afterwards, whereas AI approaches became increasingly popular, with the highest share in 2021, 2022, and 2023. The distribution is as follows: Stereo vision 26.3%, AI 26.3%, SL 14.5%, SfM 13.2%, SLAM 13.2%, SfS 1.3%, Smart Trocar 1.3%, Trinocular 1.3%, Light Field Technology 1.3%, Multicamera 1.3%, and ToF 0%.

Table 1.

Overview of 3D reconstruction techniques in laparoscopy since 2015.

2. Methods

This section presents the search strategy and the metrics that can be used for the quantitative evaluation of the reconstruction error.

2.1. Search Strategy

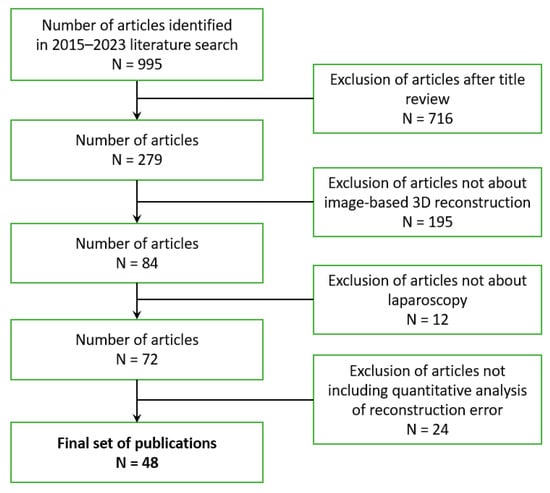

For the literature search, the engines “PubMed” and “Google Scholar” were used with the keywords “3D reconstruction laparoscopy”, “three-dimensional reconstruction laparoscopy”, and “surface reconstruction laparoscopy”, and the year of publication was restricted to 2015–2023. Articles were excluded if they were not about image-based 3D reconstruction techniques, if they were not about laparoscopy, or if a quantitative analysis was missing (see Figure 2) [7]. In total, 995 articles were found, which were filtered by reading the title, the abstract, and the keywords. Of those, 716 articles were excluded after title review, 195 articles were excluded because they did not address image-based 3D reconstruction, and 12 articles were removed because they did not address laparoscopy. These exclusion criteria left 72 articles. The last criterion, the presence of a quantitative evaluation of the reconstruction error (especially the RMSE and the MAE), excluded a further 24 articles. Thus, 48 articles were considered in this review, comprising studies of image-based 3D reconstruction in laparoscopic applications from full-text articles, conference proceedings, and abstracts.

Figure 2.

Paper exclusion tree.
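The exclusion steps can be checked with a few lines of arithmetic. The sketch below only replays the counts stated in the text; the function name `screening_funnel` and the short step labels are chosen here for illustration.

```python
def screening_funnel(total, steps):
    """Apply exclusion steps in order and return (reason, remaining) pairs."""
    remaining = total
    trail = []
    for reason, excluded in steps:
        remaining -= excluded
        trail.append((reason, remaining))
    return trail


# Counts as stated in the search strategy.
found = 995
exclusions = [
    ("excluded after title review", 716),
    ("not about image-based 3D reconstruction", 195),
    ("not about laparoscopy", 12),
    ("no quantitative evaluation of the reconstruction error", 24),
]

trail = screening_funnel(found, exclusions)
for reason, remaining in trail:
    print(f"{remaining:4d} articles left after: {reason}")
```

The third step leaves the 72 articles used for the overview of techniques, and the last step leaves the 48 articles of the quantitative review.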

For the review process of the literature, we followed the guidelines of Refs. [96,97] and considered the steps of topic formulation, study design, data collection, data analysis, and reporting. This includes the objectives of the review, the creation of a protocol, which describes procedures and methods to evaluate the published work, as well as a standardized template in Excel for the data analysis of the published quantitative evaluation criteria and values. Reporting requires the presentation, interpretation, and discussion of the results as well as the description of implications for future research. The data collection includes the following items: 3D reconstruction technique, reference object, acquisition of ground truth, number of cameras (mainly mono and stereo camera), method of camera localization, metric of quantitative evaluation, number of shots (single vs. multi), number of image sequences/frames/datasets, and image resolution.
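The data collection items listed above can be thought of as one record per reviewed article. The following is a minimal sketch of such a record; the authors used an Excel template, so the class name, the field names, and the types are assumptions made for illustration. The example entry uses only values stated in this review and leaves everything else empty.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class ReviewRecord:
    """One row of a (hypothetical) data collection template."""
    reference: str
    technique: str
    reference_object: str
    ground_truth_acquisition: Optional[str] = None
    number_of_cameras: Optional[int] = None        # mainly 1 (mono) or 2 (stereo)
    camera_localization: Optional[str] = None
    shots: Optional[str] = None                    # "single" or "multi"
    number_of_frames: Optional[int] = None
    image_resolution: Optional[Tuple[int, int]] = None
    mae_mm: Optional[float] = None
    rmse_mm: Optional[float] = None

    def reported_metrics(self):
        """Names of the error metrics that this article reports."""
        pairs = (("MAE", self.mae_mm), ("RMSE", self.rmse_mm))
        return [name for name, value in pairs if value is not None]


# Values taken from this review; unknown items stay None.
record = ReviewRecord(
    reference="[79]",
    technique="SLAM",
    reference_object="in vivo porcine abdominal cavity",
    rmse_mm=1.1,
)
print(record.reported_metrics())
```

Keeping missing items as empty values rather than guessing them makes it visible which influence factors an article did not document.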

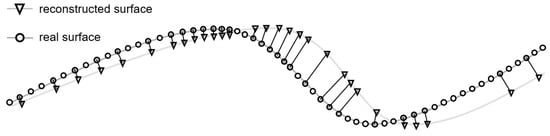

2.2. Metrics for Quantitative Evaluation of 3D Reconstructions

The quantitative evaluation of a reconstructed surface requires the ground truth of the reference model. Most authors measure the accuracy by computing the reconstruction error, which is described as the Euclidean distance (see Figure 3: black lines) between each reconstructed surface point (see Figure 3: triangles) and its corresponding closest point on the real surface (“corresponding point pair”, see Figure 3: grey colored circles). The following metrics could be applied to evaluate the distance: mean absolute error (MAE), magnitude of relative error (MRE), standard deviation (STD), root mean squared error (RMSE), root mean squared logarithmic error (RMSE log), absolute relative error (AbsRel), and squared relative error (SqRel) (see Table 2). The MAE and the RMSE can be found in most articles, which is why we focus on these metrics [7].
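The reconstruction error and the two metrics in focus can be sketched in a few lines. The example assumes that the ground truth is available as a dense point cloud, so that the closest ground truth point approximates the closest point on the real surface, and it uses a brute-force nearest-neighbor search that is only suitable for small point sets. Function names and the toy coordinates are illustrative assumptions.

```python
import numpy as np


def reconstruction_errors(reconstructed, ground_truth):
    """Euclidean distance from each reconstructed point to its closest
    ground truth point (brute force, O(n * m) memory)."""
    rec = np.asarray(reconstructed, dtype=float)
    gt = np.asarray(ground_truth, dtype=float)
    diff = rec[:, None, :] - gt[None, :, :]       # shape (n_rec, n_gt, 3)
    dist = np.linalg.norm(diff, axis=2)           # shape (n_rec, n_gt)
    return dist.min(axis=1)


def mae(errors):
    """Mean absolute error."""
    return float(np.mean(np.abs(errors)))


def rmse(errors):
    """Root mean squared error."""
    return float(np.sqrt(np.mean(np.square(errors))))


# Toy data in mm (made up for illustration): a flat patch and a noisy scan.
ground_truth = [[0, 0, 0], [1, 0, 0], [0, 1, 0], [1, 1, 0]]
reconstructed = [[0, 0, 0.3], [1, 0, 0.4], [0, 1, 0.0], [1, 1, 0.5]]

errors = reconstruction_errors(reconstructed, ground_truth)
print(errors, mae(errors), rmse(errors))
```

For larger point clouds, a spatial index such as a k-d tree would replace the brute-force distance matrix; the metric definitions stay the same.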

Figure 3.

Visualization of criteria for quantitative evaluation.

Table 2.

Overview of metrics for quantitative evaluation with the reconstructed point, the corresponding closest point on the real surface, the number of scanned points, and the mean value [16].
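For orientation, the common textbook forms of the MAE, the RMSE, and the STD are written out below. The symbols are assumptions introduced here (p_i for a reconstructed point, q_i for its corresponding closest point on the real surface, n for the number of points, and ē for the mean error), and the exact notation in Table 2 may differ.

```latex
\begin{align}
e_i &= \lVert p_i - q_i \rVert_2, \qquad i = 1, \dots, n \\
\bar{e} &= \frac{1}{n} \sum_{i=1}^{n} e_i \\
\mathrm{MAE} &= \frac{1}{n} \sum_{i=1}^{n} \lvert e_i \rvert \\
\mathrm{RMSE} &= \sqrt{\frac{1}{n} \sum_{i=1}^{n} e_i^{2}} \\
\mathrm{STD} &= \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left( e_i - \bar{e} \right)^{2}}
\end{align}
```

Since the distances e_i are non-negative, the MAE equals the mean error ē, and with these definitions RMSE² = MAE² + STD², so the RMSE is never smaller than the MAE.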

In addition to accuracy, there are further criteria for the quantitative evaluation. Ref. [42] names point density, surface coverage, and robustness. The point density can be described as the total number of reconstructed points within the region of interest (see Figure 3: number of triangles). The surface coverage describes the portion of the object covered by reconstructed points (see Figure 3: ratio of triangles to circles). It is calculated as the ratio of ground truth points with a corresponding neighbor on the reconstructed surface to the total number of ground truth points. For the robustness evaluation, the performance is measured when object distance and viewing angle vary, and when smoke and blood are present. The precision is defined as the distance between the reconstructed points and the corresponding points on the fitted surface.
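Point density and surface coverage as described above can be sketched as follows. The text does not state when a ground truth point counts as having a corresponding neighbor, so the distance threshold `max_dist_mm` is an assumption, as are the axis-aligned region of interest, the function names, and the toy coordinates.

```python
import numpy as np


def point_density(reconstructed, roi_min, roi_max):
    """Number of reconstructed points inside an axis-aligned region of interest."""
    rec = np.asarray(reconstructed, dtype=float)
    inside = np.all((rec >= roi_min) & (rec <= roi_max), axis=1)
    return int(inside.sum())


def surface_coverage(reconstructed, ground_truth, max_dist_mm):
    """Share of ground truth points that have a reconstructed point
    within max_dist_mm (assumed criterion for a corresponding neighbor)."""
    rec = np.asarray(reconstructed, dtype=float)
    gt = np.asarray(ground_truth, dtype=float)
    if rec.shape[0] == 0:
        return 0.0
    dist = np.linalg.norm(gt[:, None, :] - rec[None, :, :], axis=2)
    nearest = dist.min(axis=1)                    # one distance per ground truth point
    return float(np.mean(nearest <= max_dist_mm))


# Toy data in mm (made up): only half of the surface was reconstructed.
ground_truth = [[0, 0, 0], [1, 0, 0], [2, 0, 0], [3, 0, 0]]
reconstructed = [[0, 0, 0.1], [1, 0, 0.1]]

coverage = surface_coverage(reconstructed, ground_truth, max_dist_mm=0.5)
density = point_density(reconstructed, roi_min=[-0.5, -0.5, -0.5], roi_max=[1.5, 0.5, 0.5])
print(coverage, density)
```

Note that a reconstruction can have a low error and still a low coverage, which is why these criteria complement the MAE and the RMSE.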

3. Results

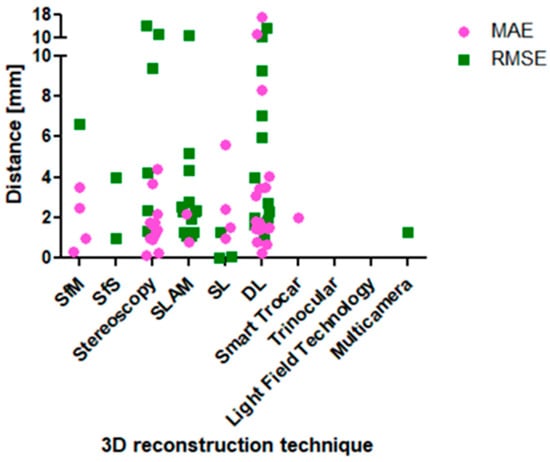

This review collects the reconstruction error of different image-based 3D reconstruction techniques to point out the technique with the highest accuracy. Table 3 lists the results, and Figure 4 visualizes these, from which three key findings can be stated. Some articles used publicly available datasets for evaluation, which are called Hamlyn centre endoscopic/laparoscopic dataset (in vivo patient datasets and validation datasets) [98], KITTI (Karlsruhe Institute of Technology and Toyota Technological Institute) (traffic scenarios) [99], and SCARED (stereo correspondence and reconstruction of endoscopic data) (datasets of fresh porcine cadaver abdominal anatomy using a da Vinci Xi endoscope and a projector) [15]. KITTI is most popular in the research of mobile robots and autonomous driving. The other two datasets are popular for endoscopic and laparoscopic research.

Table 3.

Collection of the reconstruction error of the 48 reviewed articles, including the 3D reconstruction technique, the reference object, the ground truth, the mean absolute error (MAE), the root mean squared error (RMSE), and the author. Data marked with * were provided by the authors via mail.

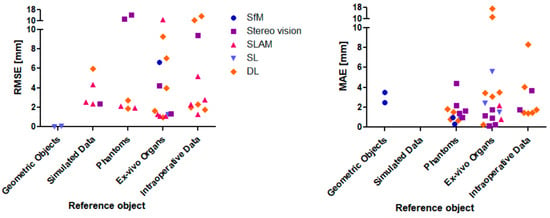

Figure 4.

Visualization of the RMSE and the MAE values in relation to the image-based 3D reconstruction technique.

(1) Based on the RMSE and MAE data set, two SL, one SfM, one stereo vision, and one DL approach deliver an accuracy below one millimeter. Refs. [26,88] delivered RMSE values below one millimeter. The SL approach in Ref. [88] is based on stereo images and achieved the lowest reconstruction error of 0.0078 mm (plate and cylinder as reference object), while Ref. [26] reports 0.07 mm RMSE (cylinder). Based on the MAE data set, an SfM approach (0.15 mm, ex vivo bovine liver), a stereo vision approach (0.23 mm, in vitro porcine heart images), and a DL approach (0.26 mm, Hamlyn centre dataset) delivered the highest accuracy, below one millimeter [17,55,74]. When focusing only on intraoperative organ data as reference objects and on RMSE values, the best result is achieved by a SLAM approach with a value of 1.1 mm [79] (in vivo porcine abdominal cavity).

(2) Even within similar 3D reconstruction techniques, the RMSE and the MAE vary strongly. The RMSE values of the techniques stereo vision, SLAM and DL differ in a range between one millimeter and more than ten millimeters. The stereo vision approaches of Refs. [56,58,63,73] generated results between 1.31 mm and 4.21 mm, whereas Ref. [61] achieved 9.35 mm. The SLAM approaches delivered values in the range of 1.1 mm to 4.32 mm [73,76,77,79], whereas Ref. [54] results in 10.78 mm. The DL approaches generated RMSE values between 1.62 mm and 5.95 mm [16,17,19,20,22,23,73], whereas two articles present 9.27 mm and 13.18 mm [18,24].

(3) Submillimeter accuracy could only be reached by 6.5% of the articles (RMSE). As can be seen in Figure 4 and Table 3, only two articles achieved RMSE values below one millimeter [26,88], twelve articles achieved RMSE values between one and two millimeters [12,13,16,17,19,22,23,63,79,81,82,86], six articles between two and three millimeters [22,23,73,77,81,82], four articles between three and five millimeters [10,20,58,76], six articles between five and ten millimeters [15,18,54,61,73,82], and four articles above ten millimeters [23,24,54,75]. Thus, most articles delivered values between one and three millimeters.

4. Discussion

This section discusses the three key findings of the previous section and three further aspects.

(1) Based on the RMSE and MAE data set, two SL, one SfM, one stereo vision, and one DL approach deliver an accuracy below one millimeter. This statement is valid if the validation scenario, which differs between the reviewed articles, is disregarded. The validation scenario must be considered to decide whether it reflects the real application. The reference object is part of the validation scenario and should be considered when stating realistic performance expectations. In the end, it is of little interest for an application in laparoscopy to know the performance of an algorithm on simple objects like cylinders and planes. This does not reveal much about how it performs in the actual application, which is a much more complex situation. The 3D reconstruction algorithms must also perform when there is non-rigid movement in the relevant scene, which is indeed the case in laparoscopy. Although an algorithm may have excellent performance ex vivo for a non-moving organ, it may become very inaccurate when non-rigid movement is present in the scene. An image-based 3D reconstruction technique in laparoscopy must cope with non-rigid motion. A technique that operates on single images might not be badly affected by it, whereas a technique that uses multiple images, such as SfM, may be. Any multi-view-based model that assumes a rigid scene may suffer.

However, it is a necessary criterion for an algorithm to perform on simple objects. The performance test on in vivo organs must follow (sufficient criterion). For the sake of completeness, this article includes the results of all different reference objects.

Figure 5 visualizes the RMSE and MAE as a function of the 3D reconstruction method and the reference object. (Simple) geometric objects, simulated environments, phantom organs, ex vivo organs, and intraoperative data could be found in the literature. In this order, the intraoperative data are the most complex and challenging to reconstruct. Thus, the expectation is that the reconstruction error increases with increasing complexity of the reference object. Due to the high variation of the reconstruction error, this tendency can only be observed slightly in Figure 5. The two SL approaches achieving RMSE values of 0.07 mm [26] and 0.0078 mm [88] can be found in the category of geometric objects in the left diagram of Figure 5 (plate and cylinder as reference object). It would be helpful to validate these results on in vivo organs. In contrast, the SL approach of Ref. [86] results in 1.28 mm (RMSE, category ex vivo organ in the left diagram of Figure 5). The three mentioned articles did not measure the MAE, but Ref. [87] did. There, a monocular SL system was built and reached 1.0 ± 0.4 mm MAE (phantom as reference object, right diagram in Figure 5), which is close to submillimeter accuracy. Here, it would be helpful to measure the RMSE as well because it weights outliers more strongly and is the more critical and representative metric. In summary, the performance of SL approaches cannot be judged when they are only tested on simple geometric objects, phantoms, or ex vivo organs; intraoperative data are additionally necessary.
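The stronger weighting of outliers by the RMSE can be illustrated with a small worked example. The numbers are made up for illustration and are not taken from the reviewed articles: nine points with an error of 1 mm and one outlier with an error of 10 mm.

```latex
\begin{align}
\mathrm{MAE} &= \frac{9 \cdot 1\,\mathrm{mm} + 10\,\mathrm{mm}}{10} = 1.9\,\mathrm{mm} \\
\mathrm{RMSE} &= \sqrt{\frac{9 \cdot (1\,\mathrm{mm})^{2} + (10\,\mathrm{mm})^{2}}{10}}
              = \sqrt{10.9}\,\mathrm{mm} \approx 3.30\,\mathrm{mm}
\end{align}
```

Without the outlier, both metrics would equal 1 mm. A single outlier therefore raises the MAE by 0.9 mm but the RMSE by about 2.3 mm, so a reported MAE alone can hide a small number of large deviations.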

Figure 5.

Visualization of the RMSE values in relation to the 3D reconstruction technique and the reference object (left); visualization of the MAE values in relation to the 3D reconstruction technique and the reference object (right).

Ref. [55] applied an SfM approach and Ref. [74] applied a stereo vision approach. Both measured only the MAE (0.15 mm and 0.23 mm), but not the RMSE. Neither evaluated their algorithms on intraoperative data, but on ex vivo organs. Confirming the high performance requires in vivo data and RMSE computation.

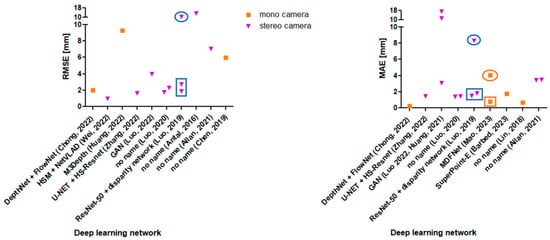

Ref. [17] applied a DL approach and measured 1.98 mm RMSE, which is the highest accuracy compared to the other DL approaches (see left diagram in Figure 5). Moreover, it was validated on intraoperative data, which makes this approach promising. The authors used the networks DepthNet and FlowNet with monocular images as input (see Figure 6).

Figure 6.

Visualization of the RMSE values from Refs. [15,16,17,18,19,20,22,23,24,73] in relation to the deep learning network and the number of cameras (left); visualization of the MAE values from Refs. [14,15,17,19,20,21,22,23,92,93] in relation to the deep learning network and the number of cameras (right); the rectangles mark the data from phantom organs and the circles mark the data from in vivo images.

Finally, based on the dataset presented above, the SLAM approach by Ref. [79] can be regarded as the most promising approach because its RMSE value is close to submillimeter accuracy (1.1 mm) and the authors used intraoperative laparoscopic data. However, more data are needed for comparison purposes to either confirm or refute the good performance of the SL and DL approaches.

(2) Even within similar 3D reconstruction techniques, the RMSE and the MAE values vary strongly. In some articles, stereo vision, SLAM, and DL approaches achieve higher accuracies than in other articles. The results show a high variation. The influence of the reference object can be seen by taking a closer look at the DL approaches (see Figure 6 left and right). Refs. [23,92] validated their algorithms by using both phantom organs (see data points in rectangles in Figure 6 left and right) and intraoperative images (see ovals in Figure 6 left and right). There, the reconstruction results of the intraoperative images are worse than those of the phantom organs (by a factor of ~5). If there were a factor to estimate the accuracy for intraoperative data based on simple objects, this would be helpful for future researchers, as they could start their algorithm evaluation on simple objects. If the performance already fails at that stage, they can save the effort of further in vivo evaluations.

Figure 6 shows a diagram in which DL approaches are separated into either stereoscopic or monocular input images. The expectation was that stereoscopic input images deliver higher accuracies due to the second camera perspective. This statement cannot be confirmed, as there are too few data. Moreover, the performance of the different DL networks might have an influence on the results as well.

(3) Submillimeter accuracy could only be reached by 6.5% of the articles (RMSE).

In a laparoscopic intervention, movement (heartbeat, breathing, intestinal peristalsis), organ specularity, and occlusions (instruments, blood, smoke) make it challenging for 3D reconstruction algorithms to reach submillimeter accuracy [7]. It can be achieved when using geometric objects as a reference [26,88]. Some applications probably do not require submillimeter accuracy. For example, the superimposition of preoperative 3D models onto intraoperative laparoscopic images for image-guided navigation needs less accuracy if the laparoscope is guided manually [7]. Ref. [100] reports that a maximum error of 1.5–2 mm is acceptable. Autonomous robot-assisted interventions require a higher accuracy. Ref. [101] reports that the doctor’s hand can achieve an accuracy of 0.1 mm, which should also be met by the robot.

(4) Accuracy might depend on several influence factors. Because the high variation of the reconstruction error cannot be explained directly, we assume that the different validation scenarios are responsible for it. The following are possible influence factors:

- Reference object (geometric objects vs. simulated data vs. ex/in vivo data).

- Method of ground truth acquisition (CT data vs. laser scanner vs. manual labelling).

- Method of camera localization (known from external sensor vs. image-based estimation).

- Number of frames (single shot vs. multiple frames/multi view).

- Image resolution (the higher the resolution, the more 3D points).

- Number of training data (relevant for DL approaches).

- Implemented algorithms (e.g., SIFT vs. SURF, ORB-SLAM vs. VISLAM).

To verify the above-mentioned influence factors, more data are required as well as a comparison of different image-based 3D reconstruction techniques under the same validation scenarios.

(5) Additional aspects for validation. Quantitative metrics are not the only aspect to consider for validation. When choosing a 3D reconstruction method, the computation time, the need for additional hardware, the investment costs, and the compatibility with the standard equipment in clinics should be evaluated [7]. Regarding computation time, a few articles (DL and stereo vision) claim to achieve real time with around 20–30 fps or close to real time with 11–17 fps [16,19,59,60,74]. The need for additional hardware, the compatibility with the standard equipment of clinics, and the investment costs are correlated. Stereo vision requires a second camera, which makes stereo laparoscopes more expensive than monocular ones, and thus not every clinic has them. SL requires additional hardware as well (a projecting light source), and standard laparoscopes do not contain SL. In this respect, SfM, SfS, SLAM, and DL (with monocular images as input) would be the better choice.

(6) Recommendations. Future research should follow a standard evaluation procedure. First, the evaluation should be made either with a MIS data set that includes ground truth (e.g., the Hamlyn centre data set or SCARED) or with other intraoperative image data. Second, if authors acquire their own images, including ground truth, these data should be published so that other researchers have access. Third, the MAE and the RMSE of the reconstruction error must be measured. Fourth, the ground truth must be as close as possible to the real shape, which is best achieved with CAD data, CT or MRI data, or reliable 3D scanners. Fifth, the influence factors should be documented. Refs. [54,57,73,85] included a comparison of different approaches and are good examples for future research articles.

5. Conclusions

This review (2015–2023) presented laparoscopic image-based 3D reconstruction techniques and compared their accuracy to name the most promising one. SLAM, DL, and SL achieved the lowest reconstruction errors (RMSE), in the submillimeter and near-submillimeter range. The SLAM approach reached 1.1 mm RMSE (in vivo porcine abdominal cavity), the DL approach reached 1.98 mm RMSE (Hamlyn centre dataset), and the SL approach reached 0.0078 mm RMSE (plate and cylinder). For the evaluation of the reconstruction techniques, it must be assessed whether the reference object is representative of laparoscopy, the actual application. Any viable approach should have a low reconstruction error for simple objects. However, simple models, or even more complex ones if they are at rest or rigid, can be deceptive as a basis for comparison. Even if one approach performs on par with or better than another, the former can perform significantly worse in a realistic situation if it cannot cope with non-rigid motion, whereas the latter is not affected. Consequently, a plate, a cylinder, and even ex vivo organs are not sufficient for the evaluation. Intraoperative data are required to confirm an approach’s performance.

Finally, future researchers are advised to always report both the MAE and the RMSE of the reconstruction error, to apply their algorithms to in vivo organs or publicly available MIS datasets or to publish their own datasets, and to compare their own results with other approaches.

Author Contributions

Conceptualization, B.G., A.R. and K.M.; methodology, B.G.; validation, B.G.; formal analysis, B.G.; investigation, B.G.; data curation, B.G.; writing—original draft preparation, B.G.; writing—review and editing, B.G.; visualization, B.G.; supervision, A.R. and K.M.; project administration, B.G., A.R. and K.M. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partially funded by Furtwangen University (Villingen-Schwenningen, Germany) and KARL STORZ SE & Co. KG (Tuttlingen, Germany).

Data Availability Statement

The data that support the findings of this work are available from the corresponding author.

Conflicts of Interest

Birthe Göbel is part-time employed at KARL STORZ SE & Co. KG. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

1. Jaffray, B. Minimally Invasive Surgery. Arch. Dis. Child. 2005, 90, 537–542.

2. Sugarbaker, P.H. Laparoscopy in the Diagnosis and Treatment of Peritoneal Metastases. Ann. Laparosc. Endosc. Surg. 2019, 4, 42.

3. Agarwal, S.K.; Chapron, C.; Giudice, L.C.; Laufer, M.R.; Leyland, N.; Missmer, S.A.; Singh, S.S.; Taylor, H.S. Clinical Diagnosis of Endometriosis: A Call to Action. Am. J. Obstet. Gynecol. 2019, 220, 354.e1–354.e12.

4. Andrea, T.; Congcong, W.; Rafael, P.; Faouzi, A.C.; Azeddine, B.; Bjorn, E.; Jakob, E.O. Validation of Stereo Vision Based Liver Surface Reconstruction for Image Guided Surgery. In Proceedings of the 2018 Colour and Visual Computing Symposium (CVCS), Gjovik, Norway, 19–20 September 2018; IEEE: New York City, NY, USA, 2018; pp. 1–6.

5. Saeidi, H.; Opfermann, J.D.; Kam, M.; Wei, S.; Leonard, S.; Hsieh, M.H.; Kang, J.U.; Krieger, A. Autonomous Robotic Laparoscopic Surgery for Intestinal Anastomosis. Sci. Robot. 2022, 7, eabj2908.

6. Armstrong, K.; Spalazzi, J.; Lazzaretti, S.; Cook, M.; Trivedi, A. Clinical Utility of Senhance AI Inguinal Hernia. Available online: https://www.asensus.com/documents/clinical-utility-senhance-ai-inguinal-hernia (accessed on 17 July 2023).

7. Göbel, B.; Möller, K. Quantitative Evaluation of Camera-Based 3D Reconstruction in Laparoscopy: A Review. In Proceedings of the 12th IFAC Symposium on Biological and Medical Systems, Villingen-Schwenningen, Germany, 11–13 September 2024; forthcoming.

8. Maier-Hein, L.; Mountney, P.; Bartoli, A.; Elhawary, H.; Elson, D.; Groch, A.; Kolb, A.; Rodrigues, M.; Sorger, J.; Speidel, S.; et al. Optical Techniques for 3D Surface Reconstruction in Computer-Assisted Laparoscopic Surgery. Med. Image Anal. 2013, 17, 974–996.

9. Marcinczak, J.M.; Painer, S.; Grigat, R.-R. Sparse Reconstruction of Liver Cirrhosis from Monocular Mini-Laparoscopic Sequences. In Proceedings of the Medical Imaging 2015: Image-Guided Procedures, Robotic Interventions, and Modeling, Orlando, FL, USA, 21–26 February 2015; SPIE: Bellingham, WA, USA, 2015; pp. 470–475.

10. Kumar, A.; Wang, Y.-Y.; Liu, K.-C.; Hung, W.-C.; Huang, S.-W.; Lie, W.-N.; Huang, C.-C. Surface Reconstruction from Endoscopic Image Sequence. In Proceedings of the 2015 IEEE International Conference on Consumer Electronics-Taiwan, Taipei, Taiwan, 6–8 June 2015; IEEE: New York City, NY, USA, 2015; pp. 404–405.

11. Conen, N.; Luhmann, T.; Maas, H.-G. Development and Evaluation of a Miniature Trinocular Camera System for Surgical Measurement Applications. PFG–J. Photogramm. Remote Sens. Geoinf. Sci. 2017, 85, 127–138.

12. Su, Y.-H.; Huang, K.; Hannaford, B. Multicamera 3D Reconstruction of Dynamic Surgical Cavities: Camera Grouping and Pair Sequencing. In Proceedings of the 2019 International Symposium on Medical Robotics (ISMR), Atlanta, GA, USA, 3–5 April 2019; IEEE: New York City, NY, USA, 2019; pp. 1–7.

- Zhou, H.; Jayender, J. EMDQ-SLAM: Real-Time High-Resolution Reconstruction of Soft Tissue Surface from Stereo Laparoscopy Videos. In Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2021: 24th International Conference, Strasbourg, France, 27 September–1 October 2021; Proceedings, Part IV 24. Springer Nature: Cham, Switzerland, 2021; Volume 12904, pp. 331–340. [Google Scholar]

- Lin, J.; Clancy, N.T.; Qi, J.; Hu, Y.; Tatla, T.; Stoyanov, D.; Maier-Hein, L.; Elson, D.S. Dual-Modality Endoscopic Probe for Tissue Surface Shape Reconstruction and Hyperspectral Imaging Enabled by Deep Neural Networks. Med. Image Anal. 2018, 48, 162–176. [Google Scholar] [CrossRef] [PubMed]

- Allan, M.; Mcleod, J.; Wang, C.; Rosenthal, J.C.; Hu, Z.; Gard, N.; Eisert, P.; Fu, K.X.; Zeffiro, T.; Xia, W.; et al. Stereo Correspondence and Reconstruction of Endoscopic Data Challenge 2021. arXiv 2021, arXiv:2101.01133. [Google Scholar]

- Wei, R.; Li, B.; Mo, H.; Lu, B.; Long, Y.; Yang, B.; Dou, Q.; Liu, Y.; Sun, D. Stereo Dense Scene Reconstruction and Accurate Localization for Learning-Based Navigation of Laparoscope in Minimally Invasive Surgery. IEEE Trans. Biomed. Eng. 2022, 70, 488–500. [Google Scholar] [CrossRef]

- Chong, N. 3D Reconstruction of Laparoscope Images with Contrastive Learning Methods. IEEE Access 2022, 10, 4456–4470. [Google Scholar] [CrossRef]

- Huang, B.; Zheng, J.-Q.; Nguyen, A.; Xu, C.; Gkouzionis, I.; Vyas, K.; Tuch, D.; Giannarou, S.; Elson, D.S. Self-Supervised Depth Estimation in Laparoscopic Image Using 3D Geometric Consistency. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Singapore, 18–22 September 2022; Springer Nature: Cham, Switzerland, 2022; pp. 13–22. [Google Scholar]

- Zhang, G.; Huang, Z.; Lin, J.; Li, Z.; Cao, E.; Pang, Y. A 3D Reconstruction Based on an Unsupervised Domain Adaptive for Binocular Endoscopy. Front. Physiol. 2022, 13, 1734. [Google Scholar] [CrossRef] [PubMed]

- Luo, H.; Wang, C.; Duan, X.; Liu, H.; Wang, P.; Hu, Q.; Jia, F. Unsupervised Learning of Depth Estimation from Imperfect Rectified Stereo Laparoscopic Images. Comput. Biol. Med. 2022, 140, 105109. [Google Scholar] [CrossRef] [PubMed]

- Huang, B.; Zheng, J.; Nguyen, A.; Tuch, D.; Vyas, K.; Giannarou, S.; Elson, D.S. Self-Supervised Generative Adversarial Network for Depth Estimation in Laparoscopic Images. In Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2021: 24th International Conference, Strasbourg, France, 27 September–1 October 2021; Proceedings, Part IV 24. Springer Nature: Cham, Switzerland, 2021; pp. 227–237. [Google Scholar]

- Luo, H.; Yin, D.; Zhang, S.; Xiao, D.; He, B.; Meng, F.; Zhang, Y.; Cai, W.; He, S.; Zhang, W.; et al. Augmented Reality Navigation for Liver Resection with a Stereoscopic Laparoscope. Comput. Methods Programs Biomed. 2020, 187, 105099. [Google Scholar] [CrossRef] [PubMed]

- Luo, H.; Hu, Q.; Jia, F. Details Preserved Unsupervised Depth Estimation by Fusing Traditional Stereo Knowledge from Laparoscopic Images. Healthc. Technol. Lett. 2019, 6, 154–158. [Google Scholar] [CrossRef] [PubMed]

- Antal, B. Automatic 3D Point Set Reconstruction from Stereo Laparoscopic Images Using Deep Neural Networks. arXiv 2016, arXiv:1608.00203. [Google Scholar]

- Penne, J.; Höller, K.; Stürmer, M.; Schrauder, T.; Schneider, A.; Engelbrecht, R.; Feußner, H.; Schmauss, B.; Hornegger, J. Time-of-Flight 3-D Endoscopy. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, London, UK, 20–24 September 2009; Springer: Berlin/Heidelberg, Germany, 2009; pp. 467–474. [Google Scholar]

- Sui, C.; Wang, Z.; Liu, Y. A 3D Laparoscopic Imaging System Based on Stereo-Photogrammetry with Random Patterns. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; IEEE: New York City, NY, USA, 2018; pp. 1276–1282. [Google Scholar]

- Garbey, M.; Nguyen, T.B.; Huang, A.Y.; Fikfak, V.; Dunkin, B.J. A Method for Going from 2D Laparoscope to 3D Acquisition of Surface Landmarks by a Novel Computer Vision Approach. Int. J. CARS 2018, 13, 267–280. [Google Scholar] [CrossRef] [PubMed]

- Kwan, E.; Qin, Y.; Hua, H. Development of a Light Field Laparoscope for Depth Reconstruction. In Proceedings of the Imaging and Applied Optics 2017 (3D, AIO, COSI, IS, MATH, pcAOP), San Francisco, CA, USA, 26–29 June 2017; Optica Publishing Group: Washington, DC, USA, 2017; paper DW1F.2. [Google Scholar]

- Collins, T.; Bartoli, A. Towards Live Monocular 3D Laparoscopy Using Shading and Specularity Information. In Proceedings of the International Conference on Information Processing in Computer-Assisted Interventions, Pisa, Italy, 27 June 2012; Springer: Berlin/Heidelberg, Germany, 2012; pp. 11–21. [Google Scholar]

- Devernay, F.; Mourgues, F.; Coste-Maniere, E. Towards Endoscopic Augmented Reality for Robotically Assisted Minimally Invasive Cardiac Surgery. In Proceedings of the International Workshop on Medical Imaging and Augmented Reality, Hong Kong, China, 10–12 June 2001; pp. 16–20. [Google Scholar]

- Scharstein, D.; Szeliski, R. A Taxonomy and Evaluation of Dense Two-Frame Stereo Correspondence Algorithms. Int. J. Comput. Vis. 2002, 47, 7–42. [Google Scholar] [CrossRef]

- Durrant-Whyte, H.; Bailey, T. Simultaneous Localisation and Mapping: Part I. IEEE Robot. Autom. Mag. 2006, 13, 99–110. [Google Scholar] [CrossRef]

- Mountney, P.; Stoyanov, D.; Davison, A.; Yang, G.-Z. Simultaneous Stereoscope Localization and Soft-Tissue Mapping for Minimal Invasive Surgery. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Copenhagen, Denmark, 1–6 October 2006; Springer: Berlin/Heidelberg, Germany, 2006; Volume 9, pp. 347–354. [Google Scholar]

- Robinson, A.; Alboul, L.; Rodrigues, M. Methods for Indexing Stripes in Uncoded Structured Light Scanning Systems. J. WSCG 2004, 12, 371–378. [Google Scholar]

- Bardozzo, F.; Collins, T.; Forgione, A.; Hostettler, A.; Tagliaferri, R. StaSiS-Net: A Stacked and Siamese Disparity Estimation Network for Depth Reconstruction in Modern 3D Laparoscopy. Med. Image Anal. 2022, 77, 102380. [Google Scholar] [CrossRef]

- Cao, Z.; Huang, W.; Liao, X.; Deng, X.; Wang, Q. Self-Supervised Dense Depth Prediction in Monocular Endoscope Video for 3D Liver Surface Reconstruction. J. Phys. Conf. Ser. 2021, 1883, 012050. [Google Scholar] [CrossRef]

- Maekawa, R.; Shishido, H.; Kameda, Y.; Kitahara, I. Dense 3D Organ Modeling from a Laparoscopic Video. In Proceedings of the International Forum on Medical Imaging in Asia, Taipei, Taiwan, 24–27 January 2021; SPIE: Bellingham, WA, USA, 2021; Volume 11792, pp. 115–120. [Google Scholar]

- Su, Y.-H.; Huang, K.; Hannaford, B. Multicamera 3D Viewpoint Adjustment for Robotic Surgery via Deep Reinforcement Learning. J. Med. Robot. Res. 2021, 6, 2140003. [Google Scholar] [CrossRef]

- Xu, K.; Chen, Z.; Jia, F. Unsupervised Binocular Depth Prediction Network for Laparoscopic Surgery. Comput. Assist. Surg. 2019, 24 (Suppl. S1), 30–35. [Google Scholar] [CrossRef]

- Lin, B.; Goldgof, D.; Gitlin, R.; You, Y.; Sun, Y.; Qian, X. Video Based 3D Reconstruction, Laparoscope Localization, and Deformation Recovery for Abdominal Minimally Invasive Surgery: A Survey. Int. J. Med. Robot. Comput. Assist. Surg. 2016, 12, 158–178. [Google Scholar] [CrossRef] [PubMed]

- Bergen, T.; Wittenberg, T. Stitching and Surface Reconstruction from Endoscopic Image Sequences: A Review of Applications and Methods. IEEE J. Biomed. Health Inform. 2014, 20, 304–321. [Google Scholar] [CrossRef]

- Maier-Hein, L.; Groch, A.; Bartoli, A.; Bodenstedt, S.; Boissonnat, G.; Haase, S.; Heim, E.; Hornegger, J.; Jannin, P.; Kenngott, H.; et al. Comparative Validation of Single-Shot Optical Techniques for Laparoscopic 3D Surface Reconstruction. IEEE Trans. Med. Imaging 2014, 33, 1913–1930. [Google Scholar] [CrossRef] [PubMed]

- Schneider, C.; Allam, M.; Stoyanov, D.; Hawkes, D.J.; Gurusamy, K.; Davidson, B.R. Performance of Image Guided Navigation in Laparoscopic Liver Surgery—A Systematic Review. Surg. Oncol. 2021, 38, 101637. [Google Scholar] [CrossRef] [PubMed]

- Groch, A.; Seitel, A.; Hempel, S.; Speidel, S.; Engelbrecht, R.; Penne, J.; Holler, K.; Rohl, S.; Yung, K.; Bodenstedt, S.; et al. 3D Surface Reconstruction for Laparoscopic Computer-Assisted Interventions: Comparison of State-of-the-Art Methods. In Proceedings of the Medical Imaging 2011: Visualization, Image-Guided Procedures, and Modeling, Lake Buena Vista, FL, USA, 13–15 February 2011; SPIE: Bellingham, WA, USA, 2011; Volume 7964, pp. 351–359. [Google Scholar]

- Conen, N.; Luhmann, T. Overview of Photogrammetric Measurement Techniques in Minimally Invasive Surgery Using Endoscopes. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2017, 42, 33–40. [Google Scholar] [CrossRef]

- Parchami, M.; Cadeddu, J.; Mariottini, G.-L. Endoscopic Stereo Reconstruction: A Comparative Study. In Proceedings of the 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Chicago, IL, USA, 26–30 August 2014; IEEE: New York City, NY, USA, 2014; pp. 2440–2443. [Google Scholar]

- Cheema, M.N.; Nazir, A.; Sheng, B.; Li, P.; Qin, J.; Kim, J.; Feng, D.D. Image-Aligned Dynamic Liver Reconstruction Using Intra-Operative Field of Views for Minimal Invasive Surgery. IEEE Trans. Biomed. Eng. 2019, 66, 2163–2173. [Google Scholar] [CrossRef]

- Puig, L.; Daniilidis, K. Monocular 3D Tracking of Deformable Surfaces. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; IEEE: New York City, NY, USA, 2016; pp. 580–586. [Google Scholar]

- Bourdel, N.; Collins, T.; Pizarro, D.; Debize, C.; Grémeau, A.; Bartoli, A.; Canis, M. Use of Augmented Reality in Laparoscopic Gynecology to Visualize Myomas. Fertil. Steril. 2017, 107, 737–739. [Google Scholar] [CrossRef]

- Francois, T.; Debize, C.; Calvet, L.; Collins, T.; Pizarro, D.; Bartoli, A. Uteraug: Augmented Reality in Laparoscopic Surgery of the Uterus. Demonstration presented at the ISMAR Conference, Nantes, France, 9–13 October 2017. [Google Scholar]

- Modrzejewski, R.; Collins, T.; Bartoli, A.; Hostettler, A.; Soler, L.; Marescaux, J. Automatic Verification of Laparoscopic 3d Reconstructions with Stereo Cross-Validation. In Proceedings of the Surgetica, Strasbourg, France, 20–22 November 2017. [Google Scholar]

- Wang, R.; Price, T.; Zhao, Q.; Frahm, J.M.; Rosenman, J.; Pizer, S. Improving 3D Surface Reconstruction from Endoscopic Video via Fusion and Refined Reflectance Modeling. In Proceedings of the Medical Imaging 2017: Image Processing, Orlando, FL, USA, 12–14 February 2017; SPIE: Bellingham, WA, USA, 2017; Volume 10133, pp. 80–86. [Google Scholar]

- Oh, J.; Kim, K. Accurate 3D Reconstruction for Less Overlapped Laparoscopic Sequential Images. In Proceedings of the 2017 2nd International Conference on Bio-engineering for Smart Technologies (BioSMART), Paris, France, 30 August–1 September 2017; IEEE: New York City, NY, USA, 2017; pp. 1–4. [Google Scholar]

- Su, Y.-H.; Huang, I.; Huang, K.; Hannaford, B. Comparison of 3D Surgical Tool Segmentation Procedures with Robot Kinematics Prior. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; IEEE: New York City, NY, USA, 2018; pp. 4411–4418. [Google Scholar]

- Modrzejewski, R.; Collins, T.; Hostettler, A.; Marescaux, J.; Bartoli, A. Light Modelling and Calibration in Laparoscopy. Int. J. Comput. Assist. Radiol. Surg. 2020, 15, 859–866. [Google Scholar] [CrossRef] [PubMed]

- Allan, M.; Kapoor, A.; Mewes, P.; Mountney, P. Non Rigid Registration of 3D Images to Laparoscopic Video for Image Guided Surgery. In Proceedings of the Computer-Assisted and Robotic Endoscopy: Second International Workshop, CARE 2015, Held in Conjunction with MICCAI 2015, Munich, Germany, 5 October 2015; Revised Selected Papers 2. Springer International Publishing: Cham, Switzerland, 2016; Volume 9515, pp. 109–116. [Google Scholar]

- Lin, B. Visual SLAM and Surface Reconstruction for Abdominal Minimally Invasive Surgery; University of South Florida: Tampa, FL, USA, 2015. [Google Scholar]

- Reichard, D.; Bodenstedt, S.; Suwelack, S.; Mayer, B.; Preukschas, A.; Wagner, M.; Kenngott, H.; Müller-Stich, B.; Dillmann, R.; Speidel, S. Intraoperative On-the-Fly Organ-Mosaicking for Laparoscopic Surgery. J. Med. Imaging 2015, 2, 045001. [Google Scholar] [CrossRef] [PubMed]

- Penza, V.; Ortiz, J.; Mattos, L.S.; Forgione, A.; De Momi, E. Dense Soft Tissue 3D Reconstruction Refined with Super-Pixel Segmentation for Robotic Abdominal Surgery. Int. J. Comput. Assist. Radiol. Surg. 2016, 11, 197–206. [Google Scholar] [CrossRef] [PubMed]

- Kim, D.T.; Cheng, C.-H.; Liu, D.-G.; Liu, J.; Huang, W.S.W. Designing a New Endoscope for Panoramic-View with Focus-Area 3D-Vision in Minimally Invasive Surgery. J. Med. Biol. Eng. 2020, 40, 204–219. [Google Scholar] [CrossRef]

- Teatini, A.; Brunet, J.-N.; Nikolaev, S.; Edwin, B.; Cotin, S.; Elle, O.J. Use of Stereo-Laparoscopic Liver Surface Reconstruction to Compensate for Pneumoperitoneum Deformation through Biomechanical Modeling. In Proceedings of the VPH2020-Virtual Physiological Human, Paris, France, 26–28 August 2020. [Google Scholar]

- Shibata, M.; Hayashi, Y.; Oda, M.; Misawa, K.; Mori, K. Quantitative Evaluation of Organ Surface Reconstruction from Stereo Laparoscopic Images. IEICE Tech. Rep. 2018, 117, 117–122. [Google Scholar]

- Zhang, L.; Ye, M.; Giataganas, P.; Hughes, M.; Yang, G.-Z. Autonomous Scanning for Endomicroscopic Mosaicing and 3D Fusion. In Proceedings of the 2017 IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; IEEE: New York City, NY, USA, 2017; pp. 3587–3593. [Google Scholar]

- Luo, X.; McLeod, A.J.; Jayarathne, U.L.; Pautler, S.E.; Schlachta, C.M.; Peters, T.M. Towards Disparity Joint Upsampling for Robust Stereoscopic Endoscopic Scene Reconstruction in Robotic Prostatectomy. In Proceedings of the Medical Imaging 2016: Image-Guided Procedures, Robotic Interventions, and Modeling, San Diego, CA, USA, 28 February–1 March 2016; SPIE: Bellingham, WA, USA, 2016; Volume 9786, pp. 232–241. [Google Scholar]

- Thompson, S.; Totz, J.; Song, Y.; Johnsen, S.; Stoyanov, D.; Gurusamy, K.; Schneider, C.; Davidson, B.; Hawkes, D.; Clarkson, M.J. Accuracy Validation of an Image Guided Laparoscopy System for Liver Resection. In Proceedings of the Medical Imaging 2015: Image-Guided Procedures, Robotic Interventions, and Modeling, Orlando, FL, USA, 21–26 February 2015; SPIE: Bellingham, WA, USA, 2015; Volume 941509, pp. 52–63. [Google Scholar]

- Wittenberg, T.; Eigl, B.; Bergen, T.; Nowack, S.; Lemke, N.; Erpenbeck, D. Panorama-Endoscopy of the Abdomen: From 2D to 3D. In Proceedings of the Computer und Roboterassistierte Chirurgie (CURAC 2017), Hannover, Germany, 5–7 October 2017. Tech. Rep. [Google Scholar]

- Reichard, D.; Häntsch, D.; Bodenstedt, S.; Suwelack, S.; Wagner, M.; Kenngott, H.G.; Müller, B.P.; Maier-Hein, L.; Dillmann, R.; Speidel, S. Projective Biomechanical Depth Matching for Soft Tissue Registration in Laparoscopic Surgery. Int. J. Comput. Assist. Radiol. Surg. 2017, 12, 1101–1110. [Google Scholar] [CrossRef] [PubMed]

- Wang, C.; Cheikh, F.A.; Kaaniche, M.; Elle, O.J. Liver Surface Reconstruction for Image Guided Surgery. In Proceedings of the Medical Imaging 2018: Image-Guided Procedures, Robotic Interventions, and Modeling, Houston, TX, USA, 12–15 February 2018; SPIE: Bellingham, WA, USA, 2018; Volume 10576, pp. 576–583. [Google Scholar]

- Speers, A.D.; Ma, B.; Jarnagin, W.R.; Himidan, S.; Simpson, A.L.; Wildes, R.P. Fast and Accurate Vision-Based Stereo Reconstruction and Motion Estimation for Image-Guided Liver Surgery. Healthc. Technol. Lett. 2018, 5, 208–214. [Google Scholar] [CrossRef] [PubMed]

- Kolagunda, A.; Sorensen, S.; Mehralivand, S.; Saponaro, P.; Treible, W.; Turkbey, B.; Pinto, P.; Choyke, P.; Kambhamettu, C. A Mixed Reality Guidance System for Robot Assisted Laparoscopic Radical Prostatectomy. In Proceedings of the OR 2.0 Context-Aware Operating Theaters, Computer Assisted Robotic Endoscopy, Clinical Image-Based Procedures, and Skin Image Analysis, Granada, Spain, 16–20 September 2018; Springer: Berlin/Heidelberg, Germany, 2018; pp. 164–174. [Google Scholar]

- Zhou, H.; Jagadeesan, J. Real-Time Dense Reconstruction of Tissue Surface from Stereo Optical Video. IEEE Trans. Med. Imaging 2020, 39, 400–412. [Google Scholar] [CrossRef] [PubMed]

- Xia, W.; Chen, E.C.S.; Pautler, S.; Peters, T.M. A Robust Edge-Preserving Stereo Matching Method for Laparoscopic Images. IEEE Trans. Med. Imaging 2022, 41, 1651–1664. [Google Scholar] [CrossRef]

- Chen, L. On-the-Fly Dense 3D Surface Reconstruction for Geometry-Aware Augmented Reality. Ph.D. Thesis, Bournemouth University, Poole, UK, 2019. [Google Scholar]

- Zhang, X.; Ji, X.; Wang, J.; Fan, Y.; Tao, C. Renal Surface Reconstruction and Segmentation for Image-Guided Surgical Navigation of Laparoscopic Partial Nephrectomy. Biomed. Eng. Lett. 2023, 13, 165–174. [Google Scholar] [CrossRef]

- Su, Y.-H.; Lindgren, K.; Huang, K.; Hannaford, B. A Comparison of Surgical Cavity 3D Reconstruction Methods. In Proceedings of the 2020 IEEE/SICE International Symposium on System Integration (SII), Honolulu, HI, USA, 12–15 January 2020; IEEE: New York City, NY, USA, 2020; pp. 329–336. [Google Scholar]

- Chen, L.; Tang, W.; John, N.W.; Wan, T.R.; Zhang, J.J. Augmented Reality for Depth Cues in Monocular Minimally Invasive Surgery. arXiv 2017, arXiv:1703.01243. [Google Scholar]

- Mahmoud, N.; Hostettler, A.; Collins, T.; Soler, L.; Doignon, C.; Montiel, J.M.M. SLAM Based Quasi Dense Reconstruction for Minimally Invasive Surgery Scenes. arXiv 2017, arXiv:1705.09107. [Google Scholar]

- Wei, G.; Feng, G.; Li, H.; Chen, T.; Shi, W.; Jiang, Z. A Novel SLAM Method for Laparoscopic Scene Reconstruction with Feature Patch Tracking. In Proceedings of the 2020 International Conference on Virtual Reality and Visualization (ICVRV), Recife, Brazil, 13–14 November 2020; IEEE: New York City, NY, USA, 2020; pp. 287–291. [Google Scholar]

- Mahmoud, N.; Collins, T.; Hostettler, A.; Soler, L.; Doignon, C.; Montiel, J.M.M. Live Tracking and Dense Reconstruction for Handheld Monocular Endoscopy. IEEE Trans. Med. Imaging 2019, 38, 79–89. [Google Scholar] [CrossRef] [PubMed]

- Chen, W.; Liao, X.; Sun, Y.; Wang, Q. Improved ORB-SLAM Based 3D Dense Reconstruction for Monocular Endoscopic Image. In Proceedings of the 2020 International Conference on Virtual Reality and Visualization (ICVRV), Recife, Brazil, 13–14 November 2020; IEEE: New York City, NY, USA, 2020; pp. 101–106. [Google Scholar]

- Yu, X.; Zhao, J.; Wu, H.; Wang, A. A Novel Evaluation Method for SLAM-Based 3D Reconstruction of Lumen Panoramas. Sensors 2023, 23, 7188. [Google Scholar] [CrossRef] [PubMed]

- Wei, G.; Shi, W.; Feng, G.; Ao, Y.; Miao, Y.; He, W.; Chen, T.; Wang, Y.; Ji, B.; Jiang, Z. An Automatic and Robust Visual SLAM Method for Intra-Abdominal Environment Reconstruction. J. Adv. Comput. Intell. Intell. Inform. 2023, 27, 1216–1229. [Google Scholar] [CrossRef]

- Lin, J.; Clancy, N.T.; Hu, Y.; Qi, J.; Tatla, T.; Stoyanov, D.; Maier-Hein, L.; Elson, D.S. Endoscopic Depth Measurement and Super-Spectral-Resolution Imaging. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2017: 20th International Conference, Quebec City, QC, Canada, 11–13 September 2017. [Google Scholar]

- Le, H.N.D.; Opfermann, J.D.; Kam, M.; Raghunathan, S.; Saeidi, H.; Leonard, S.; Kang, J.U.; Krieger, A. Semi-Autonomous Laparoscopic Robotic Electro-Surgery with a Novel 3D Endoscope. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; IEEE: New York City, NY, USA, 2018; pp. 6637–6644. [Google Scholar]

- Edgcumbe, P.; Pratt, P.; Yang, G.-Z.; Nguan, C.; Rohling, R. Pico Lantern: Surface Reconstruction and Augmented Reality in Laparoscopic Surgery Using a Pick-up Laser Projector. Med. Image Anal. 2015, 25, 95–102. [Google Scholar] [CrossRef] [PubMed]

- Geurten, J.; Xia, W.; Jayarathne, U.L.; Peters, T.M.; Chen, E.C.S. Endoscopic Laser Surface Scanner for Minimally Invasive Abdominal Surgeries. In Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2018: 21st International Conference, Granada, Spain, 16–20 September 2018; Proceedings, Part IV 11. Springer International Publishing: Cham, Switzerland, 2018; pp. 143–150. [Google Scholar]

- Fusaglia, M.; Hess, H.; Schwalbe, M.; Peterhans, M.; Tinguely, P.; Weber, S.; Lu, H. A Clinically Applicable Laser-Based Image-Guided System for Laparoscopic Liver Procedures. Int. J. Comput. Assist. Radiol. Surg. 2016, 11, 1499–1513. [Google Scholar] [CrossRef] [PubMed]

- Sui, C.; He, K.; Lyu, C.; Wang, Z.; Liu, Y.-H. 3D Surface Reconstruction Using a Two-Step Stereo Matching Method Assisted with Five Projected Patterns. In Proceedings of the 2019 International Conference on Robotics and Automation (ICRA), Montreal, QC, Canada, 20–24 May 2019; IEEE: New York City, NY, USA, 2019; pp. 6080–6086. [Google Scholar]

- Clancy, N.T.; Lin, J.; Arya, S.; Hanna, G.B.; Elson, D.S. Dual Multispectral and 3D Structured Light Laparoscope. In Proceedings of the Multimodal Biomedical Imaging X, San Francisco, CA, USA, 7 February 2015; SPIE: Bellingham, WA, USA, 2015; Volume 9316, pp. 60–64. [Google Scholar]

- Sugawara, M.; Kiyomitsu, K.; Namae, T.; Nakaguchi, T.; Tsumura, N. An Optical Projection System with Mirrors for Laparoscopy. Artif. Life Robot. 2017, 22, 51–57. [Google Scholar] [CrossRef]

- Sui, C.; Wu, J.; Wang, Z.; Ma, G.; Liu, Y.-H. A Real-Time 3D Laparoscopic Imaging System: Design, Method, and Validation. IEEE Trans. Biomed. Eng. 2020, 67, 2683–2695. [Google Scholar] [CrossRef]

- Mao, F.; Huang, T.; Ma, L.; Zhang, X.; Liao, H. A Monocular Variable Magnifications 3D Laparoscope System Using Double Liquid Lenses. IEEE J. Transl. Eng. Health Med. 2023, 12, 32–42. [Google Scholar] [CrossRef]

- Barbed, O.L.; Montiel, J.M.M.; Fua, P.; Murillo, A.C. Tracking Adaptation to Improve SuperPoint for 3D Reconstruction in Endoscopy. In Proceedings of the Medical Image Computing and Computer Assisted Intervention—MICCAI 2023; Greenspan, H., Madabhushi, A., Mousavi, P., Salcudean, S., Duncan, J., Syeda-Mahmood, T., Taylor, R., Eds.; Springer Nature: Cham, Switzerland, 2023; pp. 583–593. [Google Scholar]

- Cruciani, L.; Chen, Z.; Fontana, M.; Musi, G.; De Cobelli, O.; De Momi, E. 3D Reconstruction and Segmentation in Laparoscopic Robotic Surgery. In Proceedings of the 5th Italian Conference on Robotics and Intelligent Machines (IRIM), Rome, Italy, 20–22 October 2023. [Google Scholar]

- Nguyen, K.T.; Tozzi, F.; Rashidian, N.; Willaert, W.; Vankerschaver, J.; De Neve, W. Towards Abdominal 3-D Scene Rendering from Laparoscopy Surgical Videos Using NeRFs. In International Workshop on Machine Learning in Medical Imaging; Springer Nature: Cham, Switzerland, 2023; pp. 83–93. [Google Scholar]

- Palmatier, R.W.; Houston, M.B.; Hulland, J. Review Articles: Purpose, Process, and Structure. J. Acad. Mark. Sci. 2018, 46, 1–5. [Google Scholar] [CrossRef]

- Carnwell, R.; Daly, W. Strategies for the Construction of a Critical Review of the Literature. Nurse Educ. Pract. 2001, 1, 57–63. [Google Scholar] [CrossRef] [PubMed]

- Imperial College London. Hamlyn Centre Laparoscopic/Endoscopic Video Datasets. Available online: http://hamlyn.doc.ic.ac.uk/vision/ (accessed on 30 January 2024).

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision Meets Robotics: The KITTI Dataset. Int. J. Robot. Res. 2013, 32, 1231–1237. [Google Scholar] [CrossRef]

- Lin, Z.; Lei, C.; Yang, L. Modern Image-Guided Surgery: A Narrative Review of Medical Image Processing and Visualization. Sensors 2023, 23, 9872. [Google Scholar] [CrossRef] [PubMed]

- Duval, C.; Jones, J. Assessment of the Amplitude of Oscillations Associated with High-Frequency Components of Physiological Tremor: Impact of Loading and Signal Differentiation. Exp. Brain Res. 2005, 163, 261–266. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).