The accurate estimation of human skin tone from colour images is important in diverse applications that rely on precise colour representation in visual data. This research topic is closely intertwined with the pursuit of colour accuracy and colour constancy, affecting technologies such as facial recognition [1,2,3], medical imaging [4,5,6], and the cosmetic and beauty industry [7]. In these domains, the quality and correctness of outcomes are intrinsically tied to the faithful recovery of an individual’s skin tone. Failing to recover it leads to sub-optimal performance, erroneous results, and sometimes a complete breakdown in system functionality.

However, the perceived colour and tone of skin reflect not only its pigmentation and other physical properties but also the light source illuminating it. Within the scope of this study, we investigate some of the underlying causes of inaccurate colours in images captured under various light sources, focusing on the following factors:

Approaches based on computer vision tend to use machine learning to address these challenges. In deep learning methods such as Convolutional Neural Networks (CNNs), effective data processing is crucial. CNNs have the potential to address data-related challenges in skin tone estimation by learning patterns in the data that are not apparent on inspection. However, the efficacy of CNN models in this context relies heavily on the quality and diversity of the training data. Biased or inaccurate datasets can impede a model’s ability to generalise across skin tones and conditions, reducing the accuracy of skin tone estimation. The goal of this work is to contribute to advances in fields that rely on accurate colour representation, enabling improved applications in facial recognition, medical diagnostics, security systems, and cosmetics [18,19].

The challenge in developing such approaches lies not only in the method and the CNN model but also, to a large extent, in the dataset used to train and evaluate them.

1.1. Related Works

Various works from the literature have tried to address this problem. We highlight some of their objectives, methods, and datasets.

Kips et al. [20] developed a skin tone classifier for online shopping of makeup products. Sobham et al. [4] developed a method to assess the progress of wound healing, a measurement that has a substantial impact on treatment decisions.

Borza et al., Lin, and Newton et al. used standard CNN architectures in their studies, achieving notable accuracy without reporting detailed training parameters. Borza et al. employed the VGG-19 architecture, achieving accuracy rates of 94.10%, 98.68%, and 82.89% for dark, light, and medium skin tones, respectively. Lin experimented with VGG-16, VGG-19, ResNet18, ResNet34, and ResNet50, reporting accuracy rates ranging from 71.29% to 83.96%. Newton et al. used a DenseNet201 model pre-trained on the ImageNet dataset for skin lesion classification, achieving accuracy rates of 98.10% on the ISIC2018 dataset and 91.20% on the SD-198 dataset.
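Per-class accuracy can be read as the fraction of samples of each class that the model labels correctly (per-class recall). The sketch below assumes that definition; the studies above may have computed their figures differently, and the function name and labels are hypothetical toy data. It also shows why per-class rates should not be averaged naively: the unweighted mean of Borza et al.'s three rates is about 91.89%, which equals overall accuracy only if the three classes are equally sized.

```python
from collections import Counter


def per_class_accuracy(y_true, y_pred):
    """Return {class: fraction of that class's samples predicted correctly}."""
    totals = Counter(y_true)
    # Count only the samples whose prediction matches the true label.
    correct = Counter(t for t, p in zip(y_true, y_pred) if t == p)
    return {c: correct[c] / n for c, n in totals.items()}


# Hypothetical toy labels using Borza et al.'s three categories.
y_true = ["dark", "dark", "light", "medium", "medium", "light"]
y_pred = ["dark", "medium", "light", "medium", "light", "light"]
print(per_class_accuracy(y_true, y_pred))
```

Reporting the per-class values alongside the overall figure makes weaker performance on any one skin tone group visible instead of hiding it in an average.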

In contrast, Kips et al. and Sobham et al. developed custom CNN models. Kips et al. adapted the LeNet model, drawing on the ResNet architecture, to estimate skin tone as LAB colour values, and reported specific hyperparameters, such as an AdaMax optimiser with a learning rate of and a value of 0.9. They applied data augmentation by randomly flipping images horizontally and trained on both colour-corrected and non-colour-corrected images, reporting errors of 3.91 ± 2.53 and 4.23 ± 2.72, respectively. Sobham et al.’s custom CNN model featured four convolutional layers and a fully connected layer, with hyperparameters fine-tuned using optimisation techniques from the scipy library. Bayesian optimisation yielded the best performance, reaching an accuracy of 84.50%. The optimal hyperparameters included 128, 256, 512, and 512 feature maps for Conv-1 to Conv-4, a dropout probability of 0%, Sigmoid activation functions, a learning rate of 0.0001, and a weight decay of 0.001.
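To make the reported Sobham et al. configuration more concrete, the sketch below traces a 28 × 28 × 3 skin patch through four convolutional blocks with 128, 256, 512, and 512 feature maps and counts the parameters of each convolution. The helper `conv_stack_summary` is hypothetical, and the 3 × 3 kernels, "same" padding, and 2 × 2 max pooling after every block are assumptions, not details given in the text. Under these assumptions the spatial size shrinks 28 → 14 → 7 → 3 → 1, leaving 512 features for the fully connected layer.

```python
def conv_stack_summary(in_size, in_channels, feature_maps, kernel=3, pool=2):
    """Trace spatial size and parameter count through conv -> pool blocks.

    Assumes 'same' padding (each convolution preserves the spatial size)
    followed by non-overlapping max pooling with floor division.
    """
    size, channels, rows = in_size, in_channels, []
    for i, maps in enumerate(feature_maps, start=1):
        params = channels * maps * kernel * kernel + maps  # weights + biases
        size //= pool  # pooling halves the spatial size (rounded down)
        rows.append((f"Conv-{i}", maps, size, params))
        channels = maps
    return rows, channels * size * size  # flattened input to the FC layer


rows, flat = conv_stack_summary(28, 3, [128, 256, 512, 512])
for name, maps, size, params in rows:
    print(f"{name}: {maps} maps, {size}x{size} after pooling, {params} params")
print("Flattened features:", flat)
```

Most of the parameters sit in Conv-3 and Conv-4, which is one reason weight decay is a natural tuning target for such a model.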

1.1.1. Skin Tone Scales

Kips et al., Newton et al., and Sobham et al. employed the standard Fitzpatrick scale to classify their image data. In contrast, Borza et al. manually labelled their facial images with three categories (dark, medium, and light) for their eyeglass recommendation project. Lin used a non-standard four-point skin tone scale: sallow, dark, pallid, and red.

1.1.2. Datasets

Both Kips et al. and Sobham et al. collected their datasets manually. Kips et al. recruited a diverse group of participants in the United States, representing various skin tones, and measured skin colour in three facial regions. These measurements were averaged to establish a ground-truth skin colour. Participants took makeup-free photos while holding a colour calibration target, using their personal smartphones to introduce variation across devices. Images were captured under different lighting conditions, such as tungsten, fluorescent, outdoor, and indoor. The positions of the colour calibration targets were annotated manually to ensure accuracy, and 2795 images from 655 participants were used for model training.
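A calibration target allows the colour cast of the illuminant to be measured and undone. The text does not describe how Kips et al. corrected colour, so the sketch below uses a deliberately simpler, swapped-in technique: a von Kries-style diagonal (white-patch) correction, which scales each RGB channel so that a neutral patch on the target matches its reference value. The helper names and all pixel values are hypothetical, and for simplicity the gains are applied directly to 8-bit values rather than to linearised RGB, as a more careful implementation would do.

```python
def diagonal_gains(measured_white, reference_white):
    """Per-channel gains that map a measured neutral patch to its reference."""
    return tuple(r / m for m, r in zip(measured_white, reference_white))


def apply_gains(pixel, gains):
    """Scale each channel by its gain and clip to the 8-bit range [0, 255]."""
    return tuple(min(255.0, max(0.0, c * g)) for c, g in zip(pixel, gains))


# Hypothetical neutral patch photographed under warm (tungsten-like) light.
measured = (220, 180, 120)
reference = (240, 240, 240)
gains = diagonal_gains(measured, reference)
print(apply_gains((200, 150, 100), gains))  # hypothetical skin pixel
```

A diagonal model only rescales channels independently; richer corrections fit a full 3 × 3 matrix across all patches of the target.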

Sobham et al., in contrast, captured colour images from 65 video frames at four locations on each subject under three lighting conditions: yellow, white, and ambient light. The dataset included images of five subjects, and each image was labelled with its Fitzpatrick Skin Type (FST) as a reference for skin characterisation.

Borza et al. developed a method to aid the selection of eyeglasses, motivated by recent fashion trends in which choosing the right frame is crucial. The selection depends on various factors, such as face shape, skin tone, and eye colour, and assessing these can be challenging and costly when done by human analysts [7]. The training dataset was created by combining facial images from several face databases, including the Caltech dataset [21], the Chicago Face dataset [22], the Minear–Park database, and the Brazilian face database, and the images were categorised according to the subjects’ skin colours.

Lin [5] developed an approach to accurately classify Chinese skin tones for disease diagnosis. The author did not specify the source of the data.

Newton et al. [6] proposed a method to detect skin lesions and examined the impact of varying skin tones on detection accuracy. They used the ISIC2018 and SD-198 datasets, both of which are key resources for skin disease analysis. ISIC2018 contains 10,015 dermoscopic images across seven classes, while SD-198 comprises 6584 clinical images representing 198 skin diseases with 17 classes.

1.1.3. Data Pre-Processing Methods

All of the reviewed studies applied pre-processing, with cropping and scaling being common steps. Kips et al. cropped images to isolate the face and then scaled them to 128 × 128 × 3 for their non-standard LeNet model. Lin, on the other hand, trained both VGG and ResNet models: he began by cropping faces and scaling the images to 224 × 224 × 3 and 227 × 227 × 3, and then applied gamma correction to produce images of varying shades. Sobham et al. also applied cropping; however, they used images captured from different body parts rather than facial images, so skin patches were extracted and scaled to 28 × 28 × 3.
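Gamma correction of the kind Lin used to vary image shades can be sketched as a 256-entry lookup table that maps each 8-bit value v to 255 · (v / 255)^γ. The helper names are hypothetical, and the exponent convention (here γ < 1 brightens and γ > 1 darkens) and the example values are assumptions; the text does not state which gamma values were used. Images are represented as nested lists of (R, G, B) tuples to keep the sketch dependency-free.

```python
def gamma_lut(gamma):
    """256-entry table mapping an 8-bit value v to 255 * (v / 255) ** gamma."""
    return [round(255 * (v / 255) ** gamma) for v in range(256)]


def apply_lut(image, lut):
    """Apply a lookup table to every channel of a nested-list RGB image."""
    return [[tuple(lut[c] for c in px) for px in row] for row in image]


image = [[(64, 128, 192)]]  # hypothetical one-pixel image
for g in (0.5, 1.0, 2.0):   # hypothetical gamma values
    print(g, apply_lut(image, gamma_lut(g)))
```

A lookup table computes the power function once per intensity level instead of once per pixel, which is the usual way to apply gamma to 8-bit images.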

Borza et al., by contrast, compared two methods for skin tone classification. The first used an SVM classifier on skin patches extracted from the face: each patch was converted to the RGB, HSV, LAB, and YCrCb colour spaces, these representations were concatenated into a long feature vector, and its dimensionality was then reduced using Principal Component Analysis (PCA). The second was a CNN: faces were cropped from the images, the crops were scaled by 40% horizontally and vertically, and the final images were of size 224 × 224 × 3.
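The first stage of Borza et al.'s SVM pipeline, building one feature vector from several colour spaces, can be sketched with the Python standard library. This version assumes each patch is summarised by its mean colour (the paper may have used more detailed statistics), converts it to HSV with `colorsys`, to L*a*b* with the standard sRGB/D65 formulas, and to YCrCb with the 8-bit formula given in OpenCV's colour-conversion documentation, and then concatenates the four 3-value representations. The helper names are hypothetical, and the PCA step that follows is omitted; in practice it could be done with, for example, scikit-learn's `PCA`.

```python
import colorsys


def srgb_to_lab(r, g, b):
    """Convert 8-bit sRGB to CIE L*a*b* using the D65 white point."""
    def linearise(c):
        c /= 255.0  # undo the sRGB transfer curve
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = linearise(r), linearise(g), linearise(b)
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl

    def f(t):
        d = 6 / 29
        return t ** (1 / 3) if t > d ** 3 else t / (3 * d * d) + 4 / 29

    fx, fy, fz = f(x / 0.95047), f(y / 1.0), f(z / 1.08883)
    return 116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz)


def rgb_to_ycrcb(r, g, b):
    """8-bit full-range Y, Cr, Cb with a chroma offset of 128."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    return y, (r - y) * 0.713 + 128, (b - y) * 0.564 + 128


def patch_features(pixels):
    """Mean patch colour in RGB, HSV, L*a*b* and YCrCb, concatenated (12 values)."""
    n = len(pixels)
    r, g, b = (sum(p[i] for p in pixels) / n for i in range(3))
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return [r, g, b, h, s, v, *srgb_to_lab(r, g, b), *rgb_to_ycrcb(r, g, b)]


features = patch_features([(200, 150, 120), (210, 160, 130)])  # hypothetical patch
print(len(features), [round(x, 2) for x in features])
```

Because the four spaces have very different numeric ranges, the features would normally be standardised before PCA so that no single space dominates the principal components.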

Approaches in the literature vary with factors such as data type, pre-processing methods, skin tone scale, and choice of CNN model. Since no single method is universally correct, outcomes depend on best practices and experimental effort within the CNN domain, which has led to varied insights.

Public datasets are valuable for CNN model training because they ease the challenge of data collection. However, certain prior studies [5,7] predominantly featured images under diverse lighting conditions but with limited variation in skin tones. This limitation prompted the authors of this project to explore data collection methods, using the image acquisition procedure of [20] as a foundational framework.

Key aspects identified from the literature include the absence of a universally favoured scale for skin tone classification, the choice of which depends on the desired granularity, and the importance, during pre-processing, of cropping the facial region and scaling it to the input size required by the model. This project aims to incorporate these techniques and to employ well-defined accuracy metrics suited to each type of model developed. Inspired by past research [6], standard architectures such as VGG and ResNet, pre-trained models, and hyperparameter tuning following [4] will be integral components of this project’s investigation.

1.2. Research Gaps and Contributions

Previous research on skin tone estimation has often overlooked whether performance is consistent across diverse skin tones, leaving biases such as the under-representation of darker skin tones unaddressed. Additionally, widely used datasets such as ISIC2018 and SD-198 were not designed to capture skin tone information, which limits their applicability. Similarly, Lin’s exclusive focus on Chinese skin tones restricts the generalisability of that work to the global diversity of skin tones.

This paper addresses these limitations by curating a more balanced dataset that spans the entire spectrum of skin tones. Another significant gap is the absence of standardised protocols for data structuring, CNN model optimisation, and effective training for skin tone estimation. This paper contributes to the existing body of work by comprehensively evaluating various model setups, aiming to establish best practices and identify areas for improvement in future research on this critical topic.

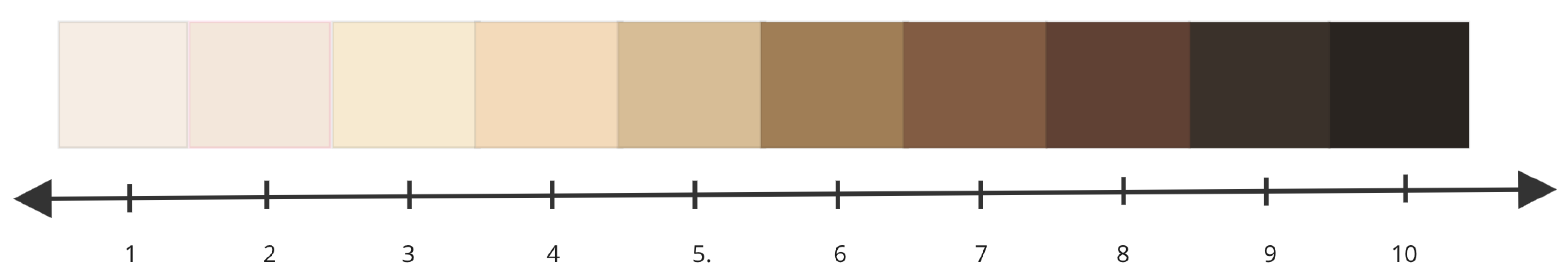

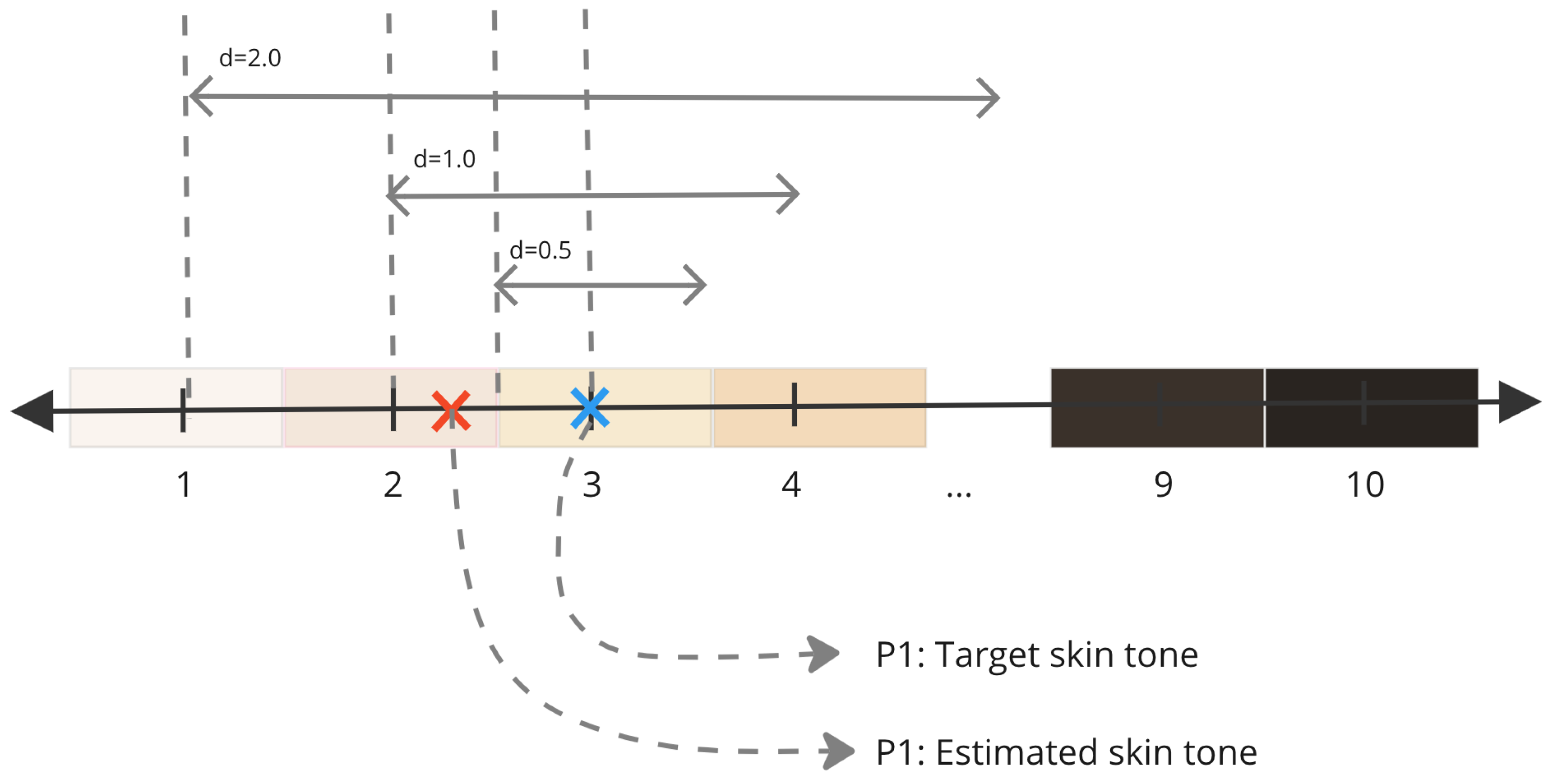

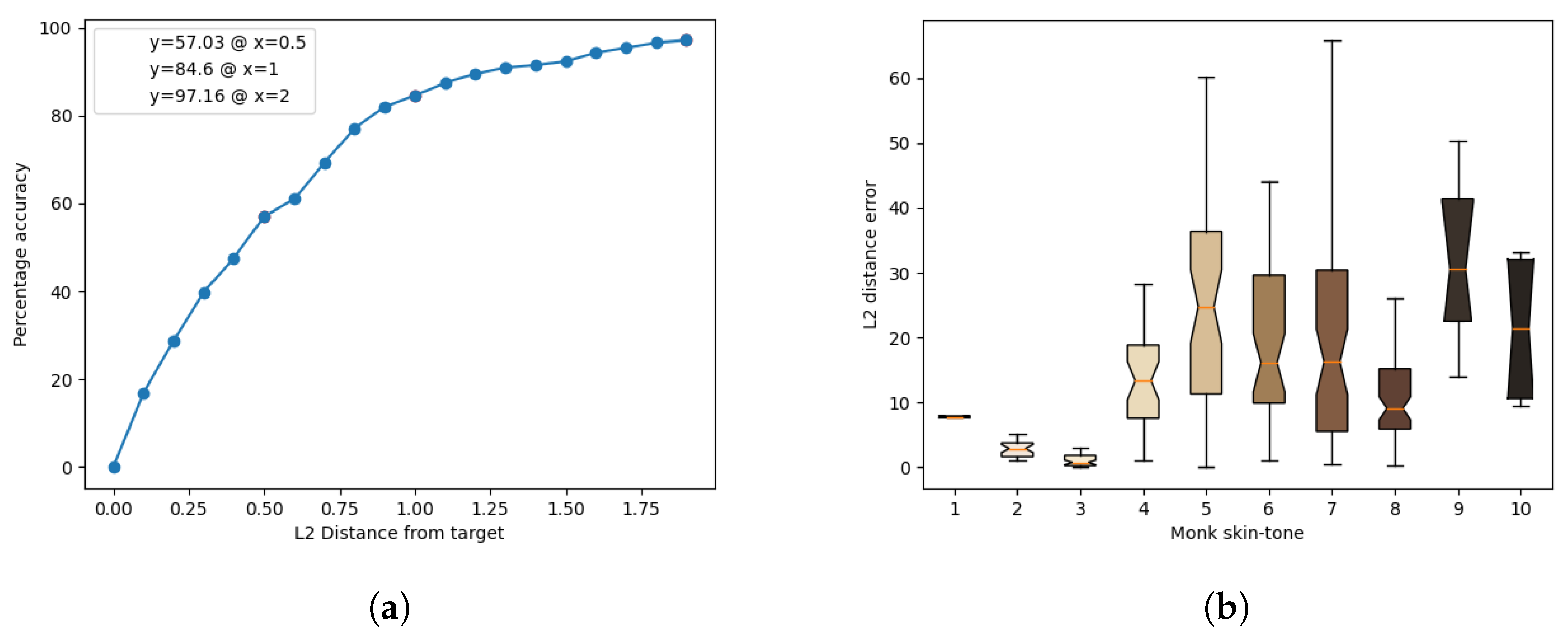

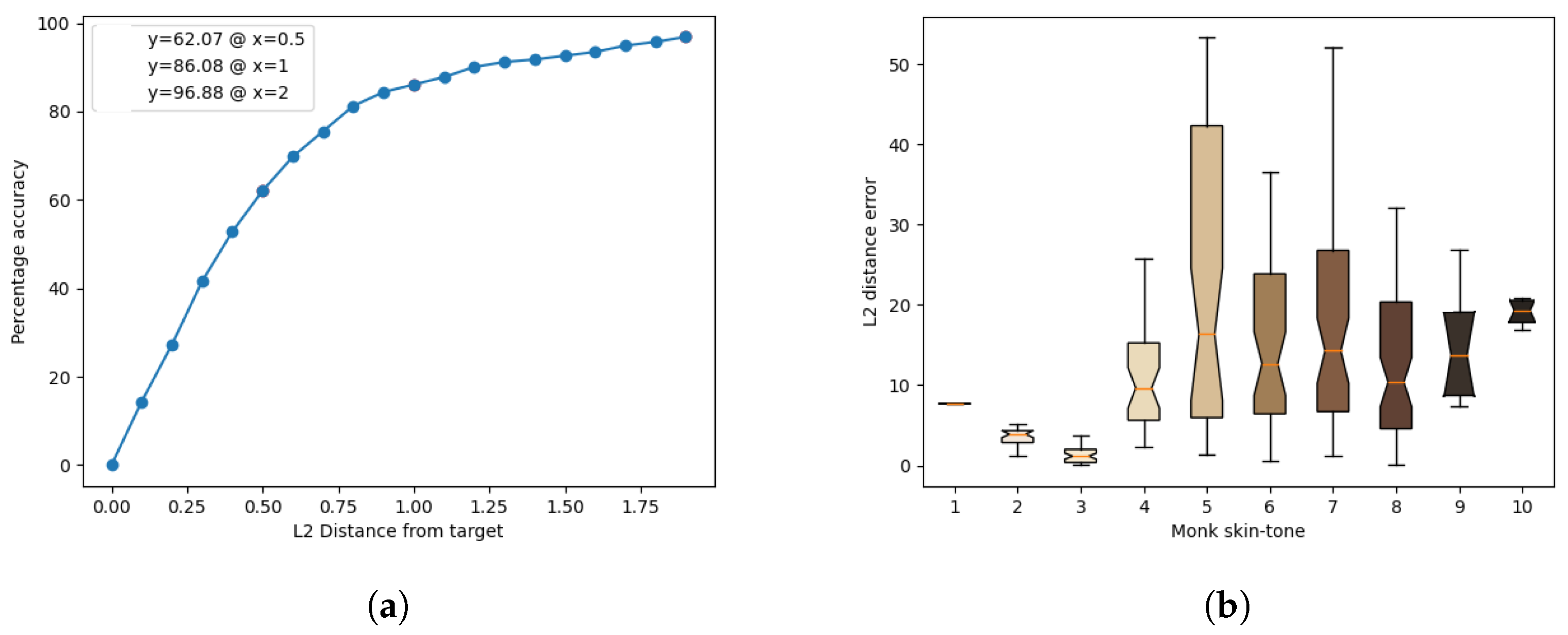

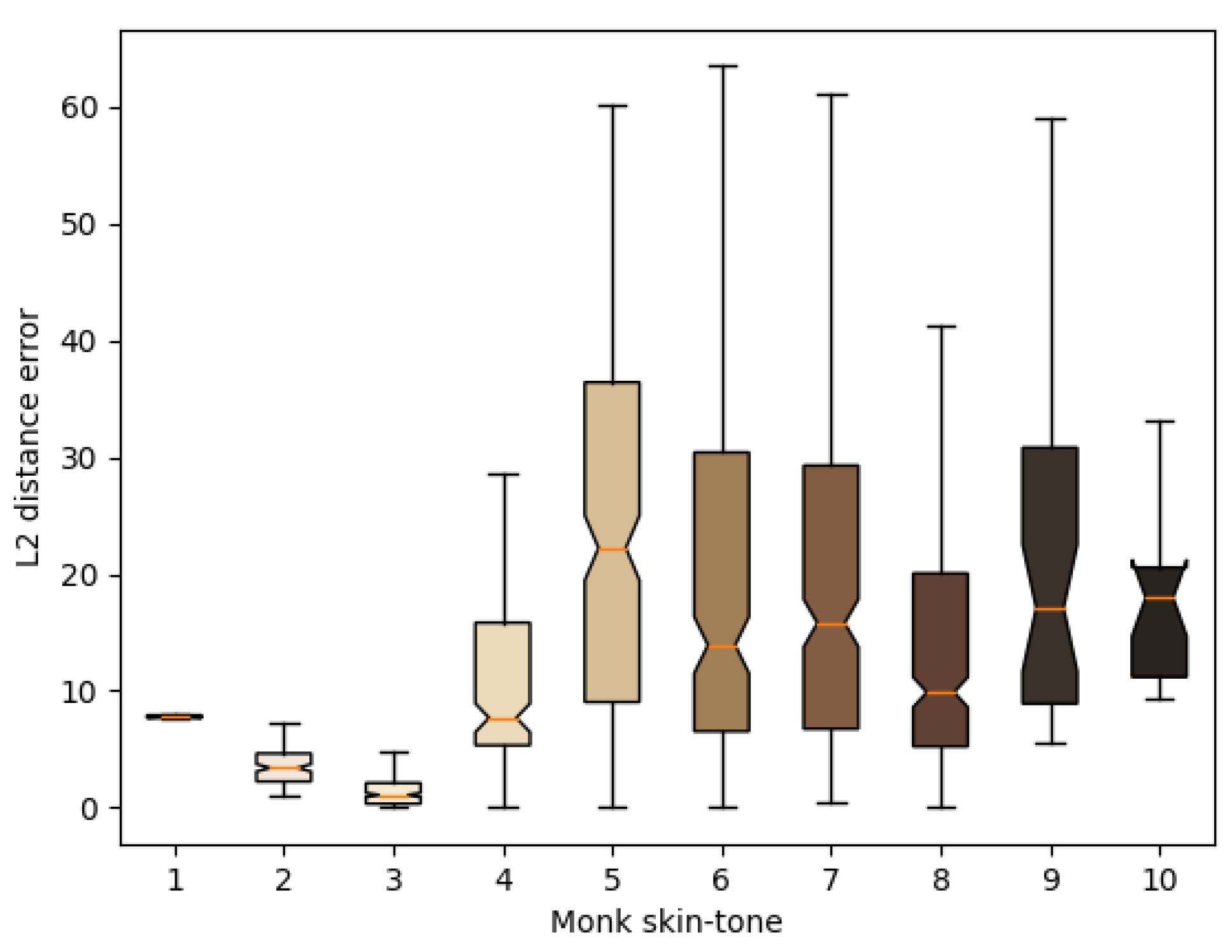

In our model, the output is a single decimal value representing a Monk skin tone colour index within the range of 1 to 10, with a margin of error of ±0.5 units.
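The ±0.5 margin means a prediction counts as correct when it lies within half an index of the true Monk value, which, apart from predictions lying exactly half-way between two indices, is equivalent to rounding to the nearest index. The sketch below expresses this evaluation; the function names are hypothetical, and rounding halves upwards and counting predictions exactly 0.5 away as hits are boundary conventions assumed here.

```python
import math


def to_monk_index(prediction):
    """Round half up to the nearest integer Monk index and clamp it to 1..10."""
    return min(10, max(1, math.floor(prediction + 0.5)))


def within_margin_accuracy(y_true, y_pred, margin=0.5):
    """Fraction of predictions whose distance to the true index is at most margin."""
    hits = sum(abs(t - p) <= margin for t, p in zip(y_true, y_pred))
    return hits / len(y_true)


y_true = [3, 5, 8]        # hypothetical ground-truth Monk indices
y_pred = [3.4, 5.6, 7.5]  # hypothetical model outputs
print([to_monk_index(p) for p in y_pred])
print(within_margin_accuracy(y_true, y_pred))
```

Keeping the raw decimal output and applying the margin only at evaluation time preserves information about how far off a wrong prediction is, which a hard class label would discard.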

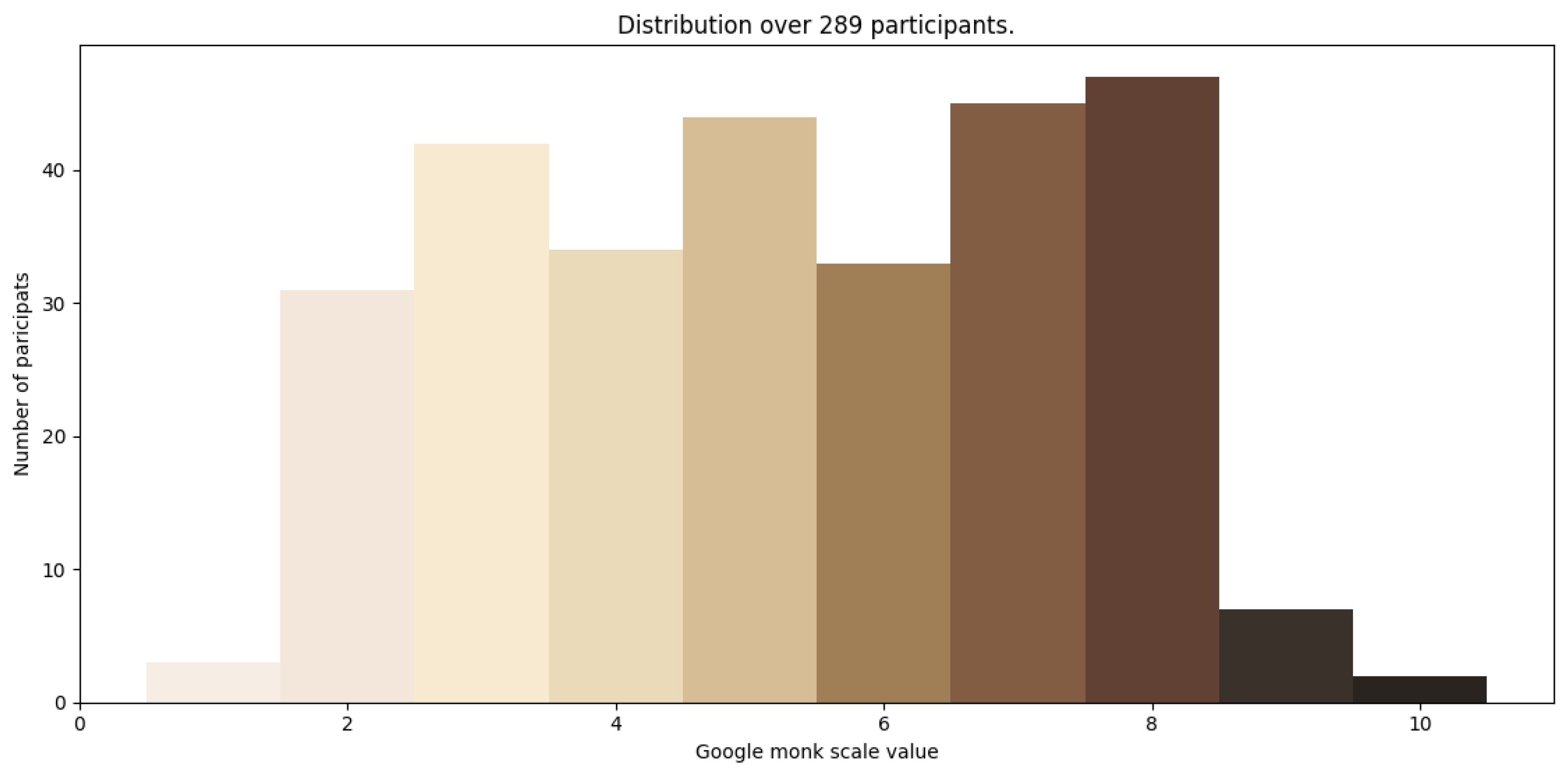

Figure 1 shows the mapping of these indices to the Monk skin tone colours. The distances between the Monk skin tone colours in RGB colour space are non-uniform, averaging 41.25 units; nevertheless, a linear relationship between indices was assumed for simplicity during data collection.
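The tension between the linear index assumption and the non-uniform colour spacing can be illustrated with two small helpers: one computes the mean Euclidean RGB distance between consecutive swatches, and the other linearly interpolates a colour for a fractional index. The helper names are hypothetical, whether the reported 41.25-unit average refers to consecutive swatches is an assumption, and the swatches in the usage example are toy values, not the actual Monk colours.

```python
import math


def mean_adjacent_distance(swatches):
    """Mean Euclidean RGB distance between consecutive swatches."""
    dists = [math.dist(a, b) for a, b in zip(swatches, swatches[1:])]
    return sum(dists) / len(dists)


def colour_at(index, swatches):
    """Linearly interpolate an RGB colour for a fractional 1-based index."""
    pos = min(max(index, 1), len(swatches)) - 1  # clamp, then make zero-based
    lo = min(int(pos), len(swatches) - 2)        # left swatch of the segment
    t = pos - lo                                 # fraction along the segment
    return tuple(a + t * (b - a) for a, b in zip(swatches[lo], swatches[lo + 1]))


toy = [(0, 0, 0), (3, 4, 0), (3, 4, 12)]  # hypothetical swatches
print(mean_adjacent_distance(toy))  # 8.5 (segments of length 5 and 12)
print(colour_at(1.5, toy))          # (1.5, 2.0, 0.0)
```

With unequal segment lengths, a half-index step moves 2.5 RGB units in the first segment but 6 units in the second, which is exactly the non-uniformity that the linear assumption ignores.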