Abstract

Chest X-ray (CXR) imaging plays a pivotal role in diagnosing pulmonary diseases, which the World Health Organization (WHO) recognizes as accounting for a significant portion of global mortality. Medical practitioners routinely rely on CXR images to identify anomalies and make critical clinical decisions. Deep learning has brought dramatic improvements to super-resolution (SR). However, many SR methods are difficult to apply when the low-resolution inputs and features contain abundant low-frequency information, as is the case for X-ray image super-resolution. In this paper, we introduce a deep learning-based SR approach built on the residual-in-residual (RIR) structure to augment the diagnostic potential of CXR imaging. Specifically, we propose a lightweight network of residual groups built from residual blocks, in which multiple skip connections let the abundant low-frequency information bypass the main network efficiently. This allows the main network to concentrate on learning high-frequency information. In addition, we adopt dense feature fusion within the residual groups and design highly parallel residual blocks for better feature extraction. Our results show that the proposed method outperforms existing state-of-the-art (SOTA) SR methods, delivering higher accuracy and notable visual improvements.

1. Introduction

X-ray imaging captures internal body structures and renders them in grayscale, where the tissue's absorption of radiation determines the shade; calcium-rich bones absorb X-rays most strongly and therefore appear bright white [1,2,3,4]. Enhancing the pixel resolution of chest X-ray images is vital for sharpening image clarity, optimizing diagnostic precision, and identifying subtle abnormalities [5]. In recent years, super-resolution (SR) has taken this enhancement further: it refines image details and can reveal nuanced features essential for precise medical assessment, offering a way to improve the pixel resolution of medical images, including chest X-rays (CXRs), Magnetic Resonance Imaging (MRI), and Computerized Tomography (CT) [6]. SR aims to estimate high-resolution (HR) images from one or more low-resolution (LR) images, yielding enhanced detail and a finer representation of image structures [5,6,7]. Furthermore, recent studies [5,6,8,9] show that SR can also help deep learning models improve their segmentation performance. The technique has diverse applications, ranging from surveillance to medical imaging, and offers potential advantages in medical image analysis [5,6,8,10,11].

In the field of SR, there are two primary approaches: Single Image Super-Resolution (SISR) and Multiple Image Super-Resolution (MISR) [5,10,12]. MISR enhances the resolution and quality of an image by fusing information from multiple LR input images, whereas SISR reconstructs the HR output image from a single LR input image. Although both have advantages, MISR is more challenging because it is difficult to obtain several LR images of the same object. SISR techniques have gained acclaim for their simplicity and their efficacy in reconstructing HR images from a single LR input [13,14], and CNN-based techniques have gained considerable traction in the SR field. Previous studies [5,8,14,15,16,17], encompassing GAN-based and residual-group models, underscore the benefits of applying SISR to LR images to improve the effectiveness of deep learning models. However, GAN-based SR methods pose significant computational challenges, because Generative Adversarial Networks (GANs) have intensive training requirements, especially for high-resolution images. Additionally, GAN-based methods that use batch normalization behave differently during training and inference: they rely on batch statistics during training and on population statistics during inference. These factors necessitate powerful hardware and substantial computational resources.

In our approach, we adopt an enhanced residual network (a modified version of the RIR structure proposed by RCAN [8]) without a channel attention mechanism. Omitting channel attention reduces the computational load, particularly for HR images, making the process less computationally intensive and less time-consuming. We also draw on the naive Inception architecture [18] to design parts of the proposed network. (Originally proposed for classification, the naive Inception architecture stacks multiple parallel convolutional pathways with different filter sizes, together with pooling operations, to capture features at various scales within a single layer.) In addition, we adopt dense feature fusion within our model for multi-stage information fusion.

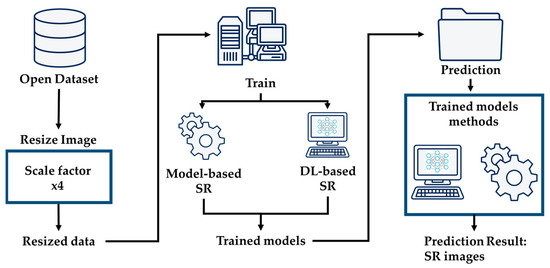

This research focuses on super-resolving chest X-ray images to enhance diagnostic precision, providing physicians with detailed imagery for more precise analysis. Additionally, we explore cutting-edge super-resolution techniques, elucidating the overarching architectural framework depicted in Figure 1. We employ an advanced deep learning-based approach that uses residual learning to increase the pixel resolution of CXR images, as depicted in Figure 2. To create the LR inputs, we downsample the HR images by a scale factor of ×4 with bicubic interpolation, using the MATLAB function imresize. Subsequently, we add salt-and-pepper noise with noise levels of 0.005, 0.01, and 0.02 to each dataset.
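The LR inputs above are generated with MATLAB's imresize. As a rough illustration for readers working outside MATLAB, the following pure-Python sketch implements antialiased bicubic downsampling in the style imresize uses when shrinking (the Keys cubic kernel with a = −0.5, stretched by the scale factor, with symmetric boundary handling). It is our approximation, not the authors' code: we have not checked it for bit-exact agreement with MATLAB, it returns floating-point values instead of rounding to 8-bit integers, and the function names are hypothetical.

```python
import math


def cubic(x):
    """Keys cubic convolution kernel with a = -0.5."""
    ax = abs(x)
    if ax <= 1:
        return 1.5 * ax**3 - 2.5 * ax**2 + 1
    if ax <= 2:
        return -0.5 * ax**3 + 2.5 * ax**2 - 4 * ax + 2
    return 0.0


def downsample_1d(signal, factor=4):
    """Antialiased bicubic downsampling of a 1-D sequence by an integer factor."""
    n_in = len(signal)
    n_out = math.ceil(n_in / factor)
    scale = 1.0 / factor
    width = 4.0 / scale  # support of the stretched (antialiasing) kernel
    # Symmetric boundary handling: indices 0..n-1 followed by n-1..0.
    mirror = list(range(n_in)) + list(range(n_in - 1, -1, -1))
    out = []
    for i in range(1, n_out + 1):  # 1-based output index, as in MATLAB
        u = i / scale + 0.5 * (1 - 1 / scale)  # 1-based input coordinate of the centre
        left = math.floor(u - width / 2)
        taps = [left + k for k in range(math.ceil(width) + 2)]
        weights = [scale * cubic(scale * (u - j)) for j in taps]
        total = sum(weights)  # normalise so that a constant signal stays constant
        value = 0.0
        for j, w in zip(taps, weights):
            value += (w / total) * signal[mirror[(j - 1) % len(mirror)]]
        out.append(value)
    return out


def downsample_2d(image, factor=4):
    """Separable 2-D version: resize every row, then every column."""
    rows = [downsample_1d(row, factor) for row in image]
    cols = [downsample_1d(list(col), factor) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]
```

For a 1024 × 1024 CXR image, downsample_2d(image, 4) yields a 256 × 256 LR image; pure Python is slow at that size, so the sketch is meant for understanding rather than for data preparation.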

Figure 1.

Structure of the general system architecture.

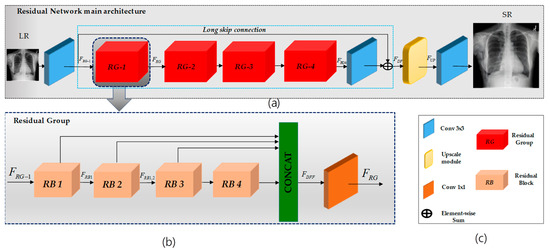

Figure 2.

Overview of the proposed SR model. (a) The LR-to-HR transformation using convolutional layers within a residual-in-residual network with a long skip connection; the output is processed by an upsampling layer to generate the HR output image. (b) Each residual group comprises four residual blocks that use convolutions with kernel sizes of 1 × 1, 3 × 3, and 5 × 5, as depicted in the figure. Following these blocks, there is a 1 × 1 convolution. This process repeats for each residual group, and the outputs are concatenated before passing through a final 1 × 1 convolution layer. (c) Explanation of the internal architecture of the RN and RG.

The main contributions of this work can be summarized as follows:

- We harness the power of residual learning in medical CXR image SR, offering significant advancements in diagnostic precision and image quality.

- We adopt the RIR structure with dense feature fusion and highly parallel residual blocks comprising different kernel sizes, which enhances the diagnostic potential of CXR images. Our architecture incorporates four residual groups, each containing four residual blocks, to extract and amplify spatial details. This facilitates the synthesis of HR CXR images, thereby advancing diagnostic imaging quality.

- Comprehensive experiments show that our proposed model yields SR results superior to those of SOTA approaches.

- We conduct experiments involving salt-and-pepper noise, further demonstrating the robustness and effectiveness of our proposed approach in challenging imaging conditions.

2. Related Work

Recently, deep learning (DL)-based approaches have dramatically outperformed traditional approaches in computer vision. Known SR techniques can be grouped into two broad categories: single image super-resolution (SISR) and multiple image super-resolution (MISR) [19,20]. This paper focuses primarily on SISR for medical X-ray images. By leveraging image priors, SISR algorithms aim to produce high-resolution (HR) images from low-resolution (LR) inputs.

2.1. Model-Based Super-Resolution Approaches

SISR algorithms can be categorized based on image priors. These algorithms include model-based methods, such as edge-based [21,22] models and image statistical models [20,23,24], patch-based methods [18,24,25], and learning-based approaches. Model-based approaches for super-resolution in medical imaging focus on incorporating prior knowledge or constraints of the image formation process to reconstruct high-resolution (HR) images from low-resolution (LR) inputs.

One common model-based technique is the Maximum Likelihood Estimation (MLE) framework [26]. MLE aims to maximize the likelihood of observing the LR image given the HR image and the degradation process. It models the degradation process, such as blurring and noise, to estimate the HR image that best explains the observed LR image.
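A common way to make the MLE formulation concrete is shown below (our notation, assuming a linear degradation model with i.i.d. Gaussian noise, which MLE itself does not require), where $\mathbf{x}$ is the HR image, $\mathbf{y}$ the observed LR image, $\mathbf{H}$ a blur operator, and $\mathbf{D}$ a decimation operator:

$$
\mathbf{y} = \mathbf{D}\mathbf{H}\mathbf{x} + \mathbf{n}, \qquad \mathbf{n} \sim \mathcal{N}(\mathbf{0}, \sigma^2 \mathbf{I})
$$

$$
\hat{\mathbf{x}}_{\mathrm{MLE}} = \arg\max_{\mathbf{x}} \; p(\mathbf{y} \mid \mathbf{x}) = \arg\min_{\mathbf{x}} \; \lVert \mathbf{y} - \mathbf{D}\mathbf{H}\mathbf{x} \rVert_2^2
$$

Under this noise model, $-\log p(\mathbf{y} \mid \mathbf{x}) = \lVert \mathbf{y} - \mathbf{D}\mathbf{H}\mathbf{x} \rVert_2^2 / (2\sigma^2) + \text{const}$, so maximizing the likelihood is equivalent to least squares. Because $\mathbf{D}\mathbf{H}$ maps many different HR images to the same LR image, the problem is ill-posed on its own, which is why prior information is usually added.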

Another widely used approach is the maximum a posteriori (MAP) estimation [22]. MAP incorporates prior information about the HR image, such as smoothness or sparsity, into the reconstruction process. MAP produces more accurate HR images by balancing data fidelity and prior information.
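Under the same illustrative assumptions ($\mathbf{y} = \mathbf{D}\mathbf{H}\mathbf{x} + \mathbf{n}$ with Gaussian noise of variance $\sigma^2$; notation ours), MAP adds a log-prior term to the data term:

$$
\hat{\mathbf{x}}_{\mathrm{MAP}} = \arg\max_{\mathbf{x}} \; p(\mathbf{y} \mid \mathbf{x})\, p(\mathbf{x}) = \arg\min_{\mathbf{x}} \left[ \frac{1}{2\sigma^2} \lVert \mathbf{y} - \mathbf{D}\mathbf{H}\mathbf{x} \rVert_2^2 - \log p(\mathbf{x}) \right]
$$

For example, a Gaussian smoothness prior $p(\mathbf{x}) \propto \exp\!\big(-\tfrac{\gamma}{2} \lVert \boldsymbol{\Gamma}\mathbf{x} \rVert_2^2\big)$, with $\boldsymbol{\Gamma}$ a finite-difference operator and $\gamma > 0$ (both hypothetical choices), turns MAP estimation into regularized least squares after multiplying the objective by $2\sigma^2$:

$$
\hat{\mathbf{x}}_{\mathrm{MAP}} = \arg\min_{\mathbf{x}} \; \lVert \mathbf{y} - \mathbf{D}\mathbf{H}\mathbf{x} \rVert_2^2 + \lambda \lVert \boldsymbol{\Gamma}\mathbf{x} \rVert_2^2, \qquad \lambda = \gamma\sigma^2
$$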

Regularization-based methods are also popular in model-based super-resolution [27]. These methods add a regularization term to the optimization problem to control the smoothness of the reconstructed HR image. The regularization term introduces constraints to achieve more plausible HR solutions.
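As a toy illustration of a regularization-based method, the sketch below super-resolves a 1-D signal by plain gradient descent on ||Ax − y||² + λ||Dx||². The forward operator A (block averaging, standing in for blur plus decimation), the first-difference operator D, the step size, and all function names are our illustrative choices, not operators taken from the cited works.

```python
def degrade(x, factor=2):
    """Forward model A: average non-overlapping blocks (blur + decimation)."""
    return [sum(x[i:i + factor]) / factor for i in range(0, len(x), factor)]


def degrade_adjoint(r, factor=2):
    """Adjoint A^T: spread each low-resolution value back over its block."""
    return [v / factor for v in r for _ in range(factor)]


def smoothness_grad(x):
    """Gradient of sum_i (x[i+1] - x[i])**2, i.e. 2 * D^T D x."""
    g = [0.0] * len(x)
    for i in range(len(x) - 1):
        d = x[i + 1] - x[i]
        g[i + 1] += 2 * d
        g[i] -= 2 * d
    return g


def objective(x, y, factor, lam):
    """Data fidelity ||A x - y||^2 plus lam times the smoothness penalty."""
    data = sum((a - b) ** 2 for a, b in zip(degrade(x, factor), y))
    smooth = sum((x[i + 1] - x[i]) ** 2 for i in range(len(x) - 1))
    return data + lam * smooth


def regularized_sr(y, factor=2, lam=0.1, step=0.5, iters=500):
    """Minimize ||A x - y||^2 + lam * ||D x||^2 by gradient descent."""
    x = [v for v in y for _ in range(factor)]  # nearest-neighbour initial guess
    for _ in range(iters):
        residual = [a - b for a, b in zip(degrade(x, factor), y)]
        g_data = [2 * v for v in degrade_adjoint(residual, factor)]
        g_smooth = smoothness_grad(x)
        x = [xi - step * (gd + lam * gs) for xi, gd, gs in zip(x, g_data, g_smooth)]
    return x
```

A larger lam yields smoother reconstructions at the cost of data fidelity; the step size of 0.5 is small enough for this particular A and D that every iteration decreases the objective.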

2.2. Deep Learning-Based Super-Resolution Approaches

Deep learning-based SR methods employ neural networks to learn complex, nonlinear mappings that enhance image details effectively. These neural networks, often convolutional neural networks (CNNs), are trained using large datasets to grasp intricate relationships between LR and HR image patches, allowing for superior restoration of image details [8,14,16,17,24].

Various super-resolution methods have made remarkable advances. EDSR (Enhanced Deep Super-Resolution) [16] produces highly accurate outcomes and excellent reconstruction quality, attributed to its streamlined residual architecture, which removes batch normalization from the residual blocks. However, EDSR demands significant computational resources, especially for high up-scaling factors and large images, which can hinder real-time use on low-resource devices. VDSR (Very Deep Super-Resolution) [14] stands out for its deep architecture, which captures intricate image details and is less susceptible to overfitting, aiding generalization to unseen data. Nevertheless, training deep models like VDSR poses challenges such as vanishing gradients, necessitating careful initialization and training strategies. Residual Dense Networks (RDN) [17] enhance information flow through skip connections and dense connectivity, but at the cost of increased model complexity and memory usage, which can limit deployment on memory-constrained devices. These considerations highlight the trade-offs between efficiency and complexity in the pursuit of better super-resolution. Residual Channel Attention Networks (RCAN) [8] integrate an attention mechanism that lets the model prioritize critical features, significantly enhancing reconstruction quality. RCAN introduced the residual-in-residual (RIR) structure, which incorporates residual groups (RGs) and long skip connections (LSCs); each RG comprises Residual Channel Attention Blocks (RCABs) with short skip connections (SSCs).
This residual-in-residual architecture enables the training of very deep convolutional neural networks (CNNs) with over 400 layers (RCAN uses 10 residual groups of 20 RCABs each), significantly improving image super-resolution (SR) performance.

GAN-based super-resolution methods, such as SNSRGAN (Spectral-Normalizing Super-Resolution Generative Adversarial Network) [5], which uses the same dataset and scale factor as our proposed model, and SRGAN (Super-Resolution Generative Adversarial Network) [28], show great potential for image super-resolution, aiming to generate highly realistic high-resolution images. However, these methods face two prominent challenges, training instability and difficulty in evaluation, as well as the notable impact of batch normalization (BN). BN uses batch statistics during training and population statistics during inference, which can cause inconsistencies and suboptimal results when moving from training to inference and thus degrade the quality of the generated high-resolution images. Training GANs for super-resolution is prone to instability, including mode collapse, which hampers effective training and fine-tuning. Furthermore, accurately evaluating GAN-based SR methods remains difficult because no well-defined objective metric exists, which makes it hard to quantify improvements precisely.
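The train/inference mismatch of BN described above can be seen in a few lines. The sketch below uses hypothetical mini-batches and a hypothetical momentum of 0.9 (real frameworks differ in their defaults and in details such as the variance estimator) and normalizes the same input once with its own batch statistics, as during training, and once with running averages, as during inference.

```python
import statistics


def batch_norm(xs, mean, var, eps=1e-5):
    """Normalize with the given statistics (learned scale/shift omitted)."""
    return [(x - mean) / (var + eps) ** 0.5 for x in xs]


def update_running(running_mean, running_var, batch, momentum=0.9):
    """Exponential moving averages kept during training for use at inference."""
    m = statistics.fmean(batch)
    v = statistics.pvariance(batch)
    return (momentum * running_mean + (1 - momentum) * m,
            momentum * running_var + (1 - momentum) * v)


# Hypothetical mini-batches of one activation channel.
batches = [[0.0, 2.0, 4.0, 6.0], [1.0, 1.5, 2.0, 2.5], [5.0, 7.0, 9.0, 11.0]]
running_mean, running_var = 0.0, 1.0
for b in batches:
    running_mean, running_var = update_running(running_mean, running_var, b)

x = batches[-1]
# Training mode: statistics of the current mini-batch.
train_out = batch_norm(x, statistics.fmean(x), statistics.pvariance(x))
# Inference mode: accumulated population estimates.
infer_out = batch_norm(x, running_mean, running_var)
print([round(v, 3) for v in train_out])
print([round(v, 3) for v in infer_out])
```

The two outputs differ noticeably because the running averages still reflect earlier, differently distributed batches.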

This paper introduces a residual network, tailored for X-ray image super-resolution, that excludes batch normalization and channel attention. Super-resolution of X-ray images is more complex than that of natural images, because restoring fine details in X-ray images is particularly challenging. This study addresses this complexity with a deep learning-based super-resolution method built on a residual network (RN) designed specifically for chest X-ray images, which are our target data.

3. Methodology

This section introduces the deep architecture and formulation of the proposed convolutional residual network, which is shown in Figure 2.

3.1. Network Overview

Our proposed network comprises three functional components, as illustrated in Figure 2. The first component is a single convolutional layer that extracts shallow features from the low-resolution (LR) input.

These shallow features are then passed to the main network for deep feature extraction. The final functional component is the upscaling part.

Deep feature extraction: Following RCAN [8], we adopt the residual-in-residual (RIR) structure, consisting of four residual groups (RGs) and a long skip connection (LSC). Each RG in turn comprises four residual blocks (RBs) (see Figure 3), each of which concatenates the outputs of three convolutions with different kernel sizes and includes a short skip connection (SSC).
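For reference, the overall pipeline can be summarized in RCAN-style notation. The symbol names below are ours and purely illustrative, and the long skip connection is written as a plain addition (RCAN itself applies a convolution to the last group's output before adding it):

$$
F_0 = H_{\mathrm{SF}}(I_{\mathrm{LR}}), \qquad F_g = H_{\mathrm{RG},g}(F_{g-1}), \quad g = 1, \dots, 4
$$

$$
F_{\mathrm{DF}} = F_0 + F_4, \qquad I_{\mathrm{SR}} = H_{\mathrm{REC}}\big(H_{\mathrm{PS}}(F_{\mathrm{DF}})\big)
$$

Here $H_{\mathrm{SF}}$ is the shallow-feature convolution, $H_{\mathrm{RG},g}$ the $g$-th residual group, $H_{\mathrm{PS}}$ the pixel shuffler, and $H_{\mathrm{REC}}$ the final 3 × 3 reconstruction convolution.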

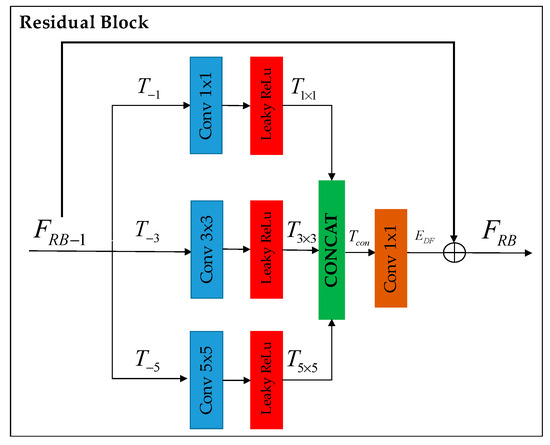

Figure 3.

Residual block enhances deep feature extraction through parallel processing with different kernel sizes (i.e., 1 × 1, 3 × 3, and 5 × 5). The extracted features are then concatenated and fused to generate the residual block output. A short skip connection is used to concentrate more on extracting high-frequency details.

This residual-in-residual structure facilitates training a deep, high-performing CNN for image SR. Our approach is also less time-consuming than RCAN and, with the same number of blocks, outperforms it.

Residual Group (RG): Following RDN [17], we adopt dense feature fusion (DFF) within each RG to make global, hierarchical use of the features extracted by its RBs. Specifically, the features generated by the four RBs are concatenated with the RG input and fused using a 1 × 1 convolution layer.
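The dense feature fusion step can be illustrated with a toy pure-Python sketch in which each feature map is an H × W nested list and a 1 × 1 convolution is a per-pixel linear mix of channels. The channel counts, weights, and function names are hypothetical; with f channels per feature, the concatenation has 5f channels (the RG input plus four RB outputs), and the 1 × 1 fusion maps them back to f, so the RG output keeps the width of its input.

```python
def conv1x1(channels, weights, biases):
    """1x1 convolution: a per-pixel linear mix of the input channels.

    channels: list of C_in feature maps, each an H x W nested list
    weights:  C_out rows of C_in coefficients; biases: C_out values
    """
    height, width = len(channels[0]), len(channels[0][0])
    return [
        [[b + sum(wk * ch[i][j] for wk, ch in zip(w_row, channels))
          for j in range(width)]
         for i in range(height)]
        for w_row, b in zip(weights, biases)
    ]


def dense_feature_fusion(rg_input, rb_outputs, weights, biases):
    """Concatenate the RG input with all RB outputs, then fuse with a 1x1 conv."""
    stacked = list(rg_input)          # channels of the RG input
    for feats in rb_outputs:
        stacked.extend(feats)         # channel-wise concatenation
    if any(len(w_row) != len(stacked) for w_row in weights):
        raise ValueError("each weight row must cover all concatenated channels")
    return conv1x1(stacked, weights, biases)


# Toy example with f = 1 channel per feature and 2 x 2 maps (values hypothetical).
rg_in = [[[1.0, 1.0], [1.0, 1.0]]]
rb_outs = [[[[float(k)] * 2 for _ in range(2)]] for k in range(2, 6)]
fused = dense_feature_fusion(rg_in, rb_outs, weights=[[0.2] * 5], biases=[0.0])
```

With equal weights of 0.2, the fusion simply averages the RG input and the four RB outputs; in a trained network the 1 × 1 weights learn how much each hierarchical level contributes.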

Residual Block (RB): Following Inception [18], we adopt the design of the naive version without max pooling, as shown in Figure 3. Deep feature extraction within the residual block is improved by processing the input in parallel with several kernel sizes (1 × 1, 3 × 3, and 5 × 5), each followed by a LeakyReLU activation.

The features obtained from this process are then merged and blended to create the output of the residual block.

In other words, the RB applies the three branches in parallel, concatenates their outputs, and fuses the result. Additionally, a skip connection adds the block input to this output, so that the block can focus on capturing high-frequency details.
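A single-channel toy version of the residual block can be written in pure Python as follows. It assumes that the concatenated branch outputs are fused by a 1 × 1 convolution (three weights and a bias here) and uses a LeakyReLU slope of 0.2; the slope, all weights, and the function names are illustrative, and the real network operates on multi-channel feature maps with f filters per layer.

```python
def conv2d_same(img, kernel):
    """Zero-padded 'same' 2-D cross-correlation, as computed by CNN conv layers."""
    h, w, k = len(img), len(img[0]), len(kernel)
    r = k // 2  # odd kernel sizes (1, 3, 5) keep the output the same size
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(k):
                for dj in range(k):
                    y, x = i + di - r, j + dj - r
                    if 0 <= y < h and 0 <= x < w:
                        acc += kernel[di][dj] * img[y][x]
            out[i][j] = acc
    return out


def leaky_relu(img, slope=0.2):
    """Pass non-negative values, scale negative ones by `slope`."""
    return [[v if v >= 0 else slope * v for v in row] for row in img]


def residual_block(img, kernels, fuse_weights, fuse_bias=0.0):
    """Parallel k x k branches -> LeakyReLU -> concat -> 1x1 fusion -> + skip."""
    branches = [leaky_relu(conv2d_same(img, k)) for k in kernels]
    h, w = len(img), len(img[0])
    # With one channel per branch, the 1x1 fusion is a weighted sum of branches.
    fused = [[fuse_bias + sum(wt * br[i][j] for wt, br in zip(fuse_weights, branches))
              for j in range(w)] for i in range(h)]
    # Short skip connection: the branches only have to model the residual.
    return [[img[i][j] + fused[i][j] for j in range(w)] for i in range(h)]
```

With all fusion weights set to zero the block reduces to the identity, which is why residual blocks are easy to stack: each block starts from passing its input through and only learns a correction on top of it.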

Upscaling Layer: The final extracted features (the output of the RIR part) are fed to a pixel shuffler layer to increase the spatial size [22]. For an input of size (H, W, C), the pixel shuffler produces an output of size (αH, αW, C/α²), where C is the number of input channels and α is the super-resolution factor (α = 4 in this article). Finally, the output of the pixel shuffler is passed through a convolutional layer with a 3 × 3 kernel to produce the final HR image.
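The pixel shuffler is a pure rearrangement: every group of α² channels at one LR position becomes an α × α block of output pixels, so (H, W, C) becomes (αH, αW, C/α²). TensorFlow exposes this operation as tf.nn.depth_to_space; the pure-Python sketch below follows what we understand to be its channel ordering for (H, W, C) tensors, but it is an illustration rather than a drop-in replacement, and the function name is hypothetical.

```python
def pixel_shuffle(x, r):
    """Rearrange an (H, W, C*r*r) nested list into (H*r, W*r, C)."""
    h, w, c_in = len(x), len(x[0]), len(x[0][0])
    if c_in % (r * r):
        raise ValueError("channel count must be divisible by r * r")
    c_out = c_in // (r * r)
    out = [[[0.0] * c_out for _ in range(w * r)] for _ in range(h * r)]
    for y in range(h):
        for x_pos in range(w):
            for i in range(r):          # row offset inside the r x r block
                for j in range(r):      # column offset inside the block
                    for c in range(c_out):
                        # Channel (i * r + j) * c_out + c lands at pixel (i, j) of the block.
                        out[y * r + i][x_pos * r + j][c] = x[y][x_pos][(i * r + j) * c_out + c]
    return out
```

With α = 4, a feature map with 16·C channels yields C output channels at four times the height and width. Note that PyTorch's PixelShuffle uses a channel-first layout with a different channel ordering, so weights are not directly interchangeable between the two conventions.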

4. Experiment

4.1. Datasets

This paper uses two chest X-ray datasets: Chest X-ray 14 [29] and Chest X-ray 2017 [30]. Chest X-ray 2017 comprises 5856 pediatric images, of which 4273 are labeled as pneumonia (referred to as CXR 2) and 1583 as normal (referred to as CXR 3). We used the same data as SNSRGAN [5]. The dataset is split into training and testing sets, with 550 normal and 320 pneumonia images in the training set and 32 in the test set. The dataset characteristics and distribution are further illustrated in Table 1.

Table 1.

Dataset characteristics and distribution.

The other dataset, Chest X-ray 14, referred to as CXR1 in this paper, comprises 112,120 frontal-view chest X-ray images from 30,805 unique patients, released by the NIH Clinical Center (NIHCC), a U.S. research hospital [29]. Each image has a size of 1024 × 1024 pixels with 8-bit grayscale values [5]. Board-certified radiologists have annotated 880 bounding boxes for eight pathologies. For our analysis, we use the 32 annotated images as the testing set [5] and randomly select 250 images as the training set.

4.2. Implementation Details

All experiments were conducted on the same hardware, a 16-thread Intel(R) Core(TM) i7-11700K processor with NVIDIA TITAN Xp 32 GB GPUs, to ensure consistency. For training, we extracted patch pairs of 16 × 16 pixels (input) and 64 × 64 pixels (ground truth) with a stride of one, yielding 102,400 input and corresponding ground-truth patches for robust training. We used a batch size of 32, and our implementation is based on Python and TensorFlow.
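The patch-pair extraction can be sketched as follows, assuming (our reading) that the stride of one is measured on the LR grid, so that the 16 × 16 LR patch at (i, j) pairs with the 64 × 64 HR patch at (4i, 4j). Images are nested lists, and the function name is hypothetical.

```python
def aligned_patches(lr, hr, lr_size=16, scale=4, stride=1):
    """Yield (lr_patch, hr_patch) pairs that cover the same image region."""
    h, w = len(lr), len(lr[0])
    hr_size = lr_size * scale
    for i in range(0, h - lr_size + 1, stride):
        for j in range(0, w - lr_size + 1, stride):
            lr_patch = [row[j:j + lr_size] for row in lr[i:i + lr_size]]
            # The HR window starts at the LR offset multiplied by the scale factor.
            hr_patch = [row[j * scale:j * scale + hr_size]
                        for row in hr[i * scale:i * scale + hr_size]]
            yield lr_patch, hr_patch
```

With a stride of one, an H × W LR image yields (H − 15)(W − 15) overlapping pairs, so in practice a subset is typically sampled to reach a fixed training-set size.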

4.3. Training Settings

We use bicubic kernel-based downsampling with a downsampling factor of α (α = 4 in our main experiments) to transform HR images into LR images, following the methodology of SNSRGAN [5]. The model is optimized using the Adam optimizer [31] with β1 = 0.9, β2 = 0.999, and ε = 10⁻⁸. The learning rate is initialized to 2 × 10⁻⁴ and reduced by a factor of 0.1 every 120 epochs. Various loss functions can be used to train convolutional neural networks (CNNs), including L2 (sum of squared differences) and L1 (sum of absolute differences). As observed by Zhao et al. [5], L1 loss often outperforms L2 loss when image quality is assessed with metrics such as Peak Signal-to-Noise Ratio (PSNR) and Structural Similarity Index (SSIM). In our study, we trained the proposed network to minimize the L1 distance between its super-resolved outputs and the corresponding ground-truth HR images [32].
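The optimization settings above can be written out as small pure-Python helpers: a staircase schedule that multiplies the initial rate of 2 × 10⁻⁴ by 0.1 every 120 epochs (our reading of the decay rule), a mean absolute error (L1) loss, and a single Adam update with the stated β1, β2, and ε. These are illustrative helpers with hypothetical names, not the authors' TensorFlow code.

```python
def learning_rate(epoch, initial=2e-4, factor=0.1, every=120):
    """Staircase decay: multiply by `factor` once every `every` epochs."""
    return initial * factor ** (epoch // every)


def l1_loss(prediction, target):
    """Mean absolute error between a flattened SR output and its ground truth."""
    return sum(abs(p - t) for p, t in zip(prediction, target)) / len(target)


def adam_step(theta, grad, m, v, t, lr, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update for a scalar parameter (t is the 1-based step count)."""
    m = beta1 * m + (1 - beta1) * grad
    v = beta2 * v + (1 - beta2) * grad * grad
    m_hat = m / (1 - beta1 ** t)   # bias correction of the first moment
    v_hat = v / (1 - beta2 ** t)   # bias correction of the second moment
    return theta - lr * m_hat / (v_hat ** 0.5 + eps), m, v
```

The mean absolute error differs from the "sum of absolute differences" only by a constant factor, which rescales the effective learning rate but not the minimizer. In TensorFlow/Keras, a similar staircase schedule can be expressed with tf.keras.optimizers.schedules.ExponentialDecay using decay_rate=0.1 and staircase=True, with decay_steps set to 120 epochs' worth of optimizer steps.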

In this paper, we compare the proposed method with traditional interpolation methods, namely nearest-neighbor (NN) [1] and bicubic interpolation [2], and with several SOTA approaches: SRCNN [15], VDSR [14], EDSR [16], RDN [17], SNSRGAN [5], and RCAN [8]. For a fair comparison, we retrained all compared models on the same datasets used to train our proposed model [29,30].

4.4. Evaluation Metrics

In image processing, quality assessment metrics are essential for evaluating the fidelity of reconstructed content. PSNR assesses quality by comparing the original and reconstructed signals, treating the reconstruction error as noise. It is formulated as Equation (9) and is computed from the mean squared error (MSE) between the original and processed images together with the maximum possible pixel value (MAX), e.g., 255 for an 8-bit image. Higher PSNR values indicate better image quality [33].
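Using the standard definition PSNR = 10 · log10(MAX² / MSE), which we assume Equation (9) follows, a minimal pure-Python sketch (function name hypothetical) is:

```python
import math


def psnr(reference, test, max_value=255.0):
    """PSNR in dB between two equally sized images given as nested lists."""
    diffs = [(a - b) ** 2 for ra, rb in zip(reference, test) for a, b in zip(ra, rb)]
    mse = sum(diffs) / len(diffs)
    if mse == 0:
        return math.inf  # identical images: no reconstruction error
    return 10 * math.log10(max_value ** 2 / mse)
```

For images scaled to [0, 1], pass max_value=1.0; PSNR values are only comparable when MAX is the same.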

SSIM considers luminance, contrast, and structural information, measuring structural similarity rather than only pixel-wise error, which makes it a more perceptually meaningful metric. As formulated in Equation (10), the SSIM index is computed from the means (μx, μy), variances (σx², σy²), and covariance (σxy) of images x and y. Constants C1 and C2 avoid instability when the denominator is close to zero. The SSIM value ranges from −1 to 1, where 1 indicates perfect similarity [34].
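The standard SSIM expression is SSIM(x, y) = ((2μxμy + C1)(2σxy + C2)) / ((μx² + μy² + C1)(σx² + σy² + C2)), with C1 = (K1·L)², C2 = (K2·L)², the common defaults K1 = 0.01 and K2 = 0.03, and L the dynamic range. The sketch below evaluates it once over the whole image; common implementations instead compute it in local Gaussian-weighted windows and average the resulting map, so this global version (function name hypothetical) is a simplification for illustration.

```python
def global_ssim(x, y, data_range=255.0, k1=0.01, k2=0.03):
    """SSIM computed once over the whole image (no sliding window)."""
    xs = [v for row in x for v in row]
    ys = [v for row in y for v in row]
    n = len(xs)
    mu_x, mu_y = sum(xs) / n, sum(ys) / n
    var_x = sum((v - mu_x) ** 2 for v in xs) / n
    var_y = sum((v - mu_y) ** 2 for v in ys) / n
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(xs, ys)) / n
    c1, c2 = (k1 * data_range) ** 2, (k2 * data_range) ** 2
    luminance = (2 * mu_x * mu_y + c1) / (mu_x ** 2 + mu_y ** 2 + c1)
    contrast_structure = (2 * cov + c2) / (var_x + var_y + c2)
    return luminance * contrast_structure
```

A uniform brightness shift lowers only the luminance term, whereas inverting the image structure makes the covariance, and hence SSIM, negative.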

Though less common, the multi-scale structural similarity index (MSIM) is valuable because it extends SSIM by comparing images at multiple resolutions, capturing both fine and coarse details; it is formulated as Equation (11) [34].

Higher MSIM values (closer to 1) indicate more faithful retention of structural information. The MSIM formula takes the product of the SSIM values at each scale, each raised to the power of its corresponding weight, and raises this weighted product to the power of the reciprocal of the number of scales (N), yielding a multi-scale evaluation of structural similarity across image detail levels. Together, these metrics enable a robust evaluation of image processing methods. This paper complements qualitative assessments with quantitative measurements using PSNR, SSIM, and MSIM.

5. Results and Discussion

5.1. Comparisons with SOTA Methods

This section reports extensive experiments comparing our proposed model with established deep learning-based SR methods. The objective was to enhance the visual quality and resolution of chest X-ray images from three datasets: CXR 1, CXR 2, and CXR 3. To evaluate performance, we used three quality metrics, PSNR, SSIM, and MSIM, which measure the fidelity and similarity between the super-resolved and ground-truth images and together provide a robust quantitative assessment. First, we used bicubic interpolation as a baseline: our model improves PSNR over it by 2.36 dB, 2.86 dB, and 4.47 dB on CXR 1, CXR 2, and CXR 3, respectively. In addition, the visual results show that traditional interpolation methods excessively smooth out details, producing noticeably blurred results. In other words, these methods sacrifice fine details, which makes them unsuitable for medical images.
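Because PSNR is logarithmic in the MSE, a PSNR gain of Δ dB at the same MAX corresponds to an MSE that is 10^(Δ/10) times smaller, since PSNR_ours − PSNR_bicubic = 10 · log10(MSE_bicubic / MSE_ours). The short sketch below (function name hypothetical) applies this to the reported gains over bicubic interpolation.

```python
def mse_reduction_factor(psnr_gain_db):
    """How many times smaller the MSE is for a given PSNR gain (same MAX)."""
    return 10 ** (psnr_gain_db / 10)


for name, gain in [("CXR 1", 2.36), ("CXR 2", 2.86), ("CXR 3", 4.47)]:
    print(f"{name}: +{gain} dB -> MSE about {mse_reduction_factor(gain):.2f}x lower")
```

Reading gains this way makes it easier to compare improvements across datasets whose absolute PSNR levels differ.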

Subsequently, we compared our proposed model with SOTA deep learning-based SR techniques, including SRCNN, VDSR, EDSR, RDN, SNSRGAN, and RCAN (Table 2).

Table 2.

Numerical comparison of super-resolved chest X-ray images, up-scaled by factors of 2×, 4×, and 8×. The PSNR, SSIM, and MSIM are presented with bolded values to indicate the best performance.

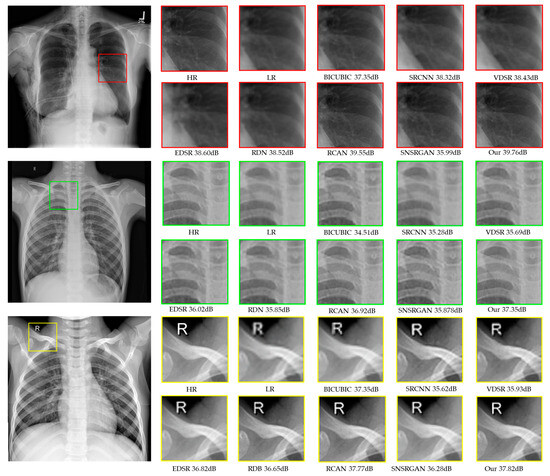

Table 2 presents quantitative comparisons at different scale factors, including ×4 SR. Our proposed model consistently outperforms all previous methods across all datasets. GAN-based SR methods produce more visually compelling results than the other SOTA approaches; however, they lose information when areas of concern are selectively enlarged, as illustrated in Figure 4, which could impact diagnostic accuracy. Among the compared methods, RCAN performs best after our proposed model. We attribute our model's improvement to its additions and modifications, such as dense feature fusion and highly parallel residual blocks.

Figure 4.

Visual comparison of super-resolved (up-scaled by a factor of 4×) chest X-ray images from the CXR1 (red squares), CXR2 (green squares), and CXR3 (yellow squares) datasets. The HR images generated through traditional interpolation methods, other SOTA methods, and our proposed model are presented.

Our study thoroughly explored diverse SR techniques and analyzed their performance on several chest X-ray datasets. The comprehensive evaluation with PSNR, SSIM, and MSIM highlights the potential of our proposed model as a robust solution for improving chest X-ray image quality. Additionally, we introduced noise at scale factors of ×2 and ×8 to assess the model's performance under different conditions, further demonstrating its versatility and efficacy in enhancing chest X-ray images across various scenarios (Table 2).

Furthermore, on datasets downscaled by a factor of ×4, we compared our proposed model against a GAN-based super-resolution model, SNSRGAN [5]. Although SNSRGAN performs well on grayscale images, our proposed model surpasses it, as shown in Table 3.

Table 3.

Numerical comparison of super-resolved chest X-ray images, up-scaled by a factor of 4×. The PSNR, SSIM, and MSIM are presented with bolded values to indicate the best performance.

5.2. Comparisons with SOTA Methods on Noisy Images

In this section, we examine how well our proposed super-resolution model handles noisy images, particularly those corrupted by salt-and-pepper noise, i.e., randomly scattered pixels of extreme brightness or darkness that distort the image [35]. We use ×4-downscaled chest X-ray (CXR) datasets deliberately corrupted with three noise levels (0.005, 0.01, and 0.02) to simulate different degrees of image distortion (Table 4). For noisy LR images, we compare our proposed model against bicubic interpolation and several state-of-the-art super-resolution algorithms: SRCNN, VDSR, EDSR, RDN, RCAN, and SNSRGAN.
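The salt-and-pepper corruption can be sketched in pure Python as below. We assume that a noise level d means each pixel is independently replaced with probability d, half of the time by the minimum value (pepper) and half of the time by the maximum (salt), which to our understanding is how MATLAB's imnoise interprets its density parameter; the function name and the 8-bit range of 0 to 255 are assumptions.

```python
import random


def salt_and_pepper(image, density, low=0, high=255, seed=None):
    """Copy `image`, replacing each pixel with probability `density`
    by `low` (pepper) or `high` (salt), each with equal probability."""
    rng = random.Random(seed)  # seeded generator for reproducible corruption
    noisy = []
    for row in image:
        new_row = []
        for v in row:
            r = rng.random()
            if r < density / 2:
                new_row.append(low)
            elif r < density:
                new_row.append(high)
            else:
                new_row.append(v)
        noisy.append(new_row)
    return noisy
```

At the levels used here, roughly 0.5%, 1%, and 2% of the pixels of each LR image are replaced; because these outliers survive linear interpolation, they are a demanding test for an SR model.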

Table 4.

Numerical noisy image comparison of super-resolved chest X-ray images, up-scaled by a factor of 4×. The PSNR, SSIM, and MSIM are presented with bolded values to indicate the best performance.

Our experimental findings, outlined in Table 4, show the superior performance of our proposed model across noise levels and CXR datasets (CXR1, CXR2, CXR3) at the ×4 scaling factor. At a noise level of 0.005, our model consistently surpasses the baseline methods, with PSNR improvements of 14.29% (CXR1), 12.5% (CXR2), and 12.5% (CXR3), and SSIM values of 0.806, 0.818, and 0.801, respectively.

Noise levels from 0.01 to 0.02 pose greater challenges, as traditional methods struggle to recover details from heavily corrupted LR images. Our proposed RN model handles these conditions well, suppressing noise and recovering additional image details in most scenarios. The comparison in Table 4 highlights the advantage of our RN model over existing methods in handling diverse noise levels and enhancing image quality. It also suggests that our approach could be combined with complementary techniques such as dedicated image denoising.

5.3. Ablation Study

We evaluate six distinct architectures to illustrate the impact of the model's components, as presented in Table 5. All models in Table 5 have the same number of residual groups and blocks, where RG, RB, and f denote the number of residual groups, residual blocks, and filters per convolutional layer, respectively. Because our model is built on the residual-in-residual structure, we compare it with RCAN. We assess the impact of the modified residual block (the highly parallel residual block with varying kernel sizes) and examine the effect of adding the channel attention (CA) mechanism, which our model does not use. The first four rows show our model's results for different combinations of CA, concatenation, and skip connections, as marked in Table 5, while the fifth and sixth rows present the results of the RCAN model with and without CA.

Table 5.

Numerical evaluation (PSNR/SSIM) of four distinct models, all featuring an identical number of residual groups and blocks. The outcomes of the proposed model are bolded. ✓ indicates that the component (CA, concatenation, or skip connection) is included in each RB; ✗ indicates that it is not.

The model performs better without CA, contrary to what RCAN suggests. This discrepancy is plausible given the distinct nature of chest X-ray images. Furthermore, comparing the second and fifth rows shows that our proposed model surpasses RCAN in PSNR across all datasets.

Furthermore, our study emphasizes the use of skip connections and concatenation: each residual block of our model combines concatenation with a short skip connection.

6. Conclusions

In this work, we introduced a learning-based super-resolution approach specifically tailored to enhance chest X-ray images. Building on the residual-in-residual structure, we designed our network to extract deep features effectively. Dense feature fusion and highly parallel residual blocks further strengthen the network's capacity to model intricate relationships within the images, restoring finer texture details and enhancing overall quality. Moreover, a comparative analysis on noisy images with various super-resolution models indicates that our proposed model has significant denoising capabilities for low-resolution images. Across diverse datasets, our proposed model consistently outperformed existing methods in terms of peak signal-to-noise ratio (PSNR). This highlights our model's capability to enhance the quality of chest X-ray images, positioning it as a robust solution for image enhancement in medical imaging applications.

Author Contributions

Conceptualization, A.K. and A.S.; methodology, A.K. and A.S.; software, A.K. and A.S.; formal analysis, A.K. and A.S.; investigation, H.-S.K.; resources, H.-S.K.; data curation, A.K. and A.S.; writing—original draft preparation, A.K.; writing—review and editing, A.S. and H.-S.K.; validation, A.K., A.S., and H.-S.K.; visualization, H.-S.K.; supervision, H.-S.K.; project administration, H.-S.K.; funding acquisition, H.-S.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Korea Institute of Marine Science & Technology Promotion (KIMST), funded by the Ministry of Oceans and Fisheries (20200611), and by the MSIT (Ministry of Science and ICT), Korea, under the Grand Information Technology Research Center support program (IITP-2024-2020-0-01462, 50%) supervised by the IITP (Institute for Information & Communications Technology Planning & Evaluation).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets used in this paper are publicly available.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Rukundo, O.; Cao, H. Nearest neighbor value interpolation. arXiv 2012, arXiv:1211.1768.

- Gao, S.; Gruev, V. Bilinear and bicubic interpolation methods for division of focal plane polarimeters. Opt. Express 2011, 19, 26161–26173.

- Wildenschild, D.; Sheppard, A.P. X-ray imaging and analysis techniques for quantifying pore-scale structure and processes in subsurface porous medium systems. Adv. Water Resour. 2013, 51, 217–246.

- World Health Organization. Lung Cancer. Available online: https://www.who.int/news-room/fact-sheets/detail/lung-cancer (accessed on 5 September 2023).

- Xu, L.; Zeng, X.; Huang, Z.; Li, W.; Zhang, H. Low-dose chest X-ray image super-resolution using generative adversarial nets with spectral normalization. Biomed. Signal Process. Control 2020, 55, 101600.

- Lyu, Q.; Shan, H.; Steber, C.; Helis, C.; Whitlow, C.; Chan, M.; Wang, G. Multi-contrast super-resolution MRI through a progressive network. IEEE Trans. Med. Imaging 2020, 39, 2738–2749.

- Ebner, M.; Wang, G.; Li, W.; Aertsen, M.; Patel, P.A.; Aughwane, R.; Melbourne, A.; Doel, T.; Dymarkowski, S.; De Coppi, P. An automated framework for localization, segmentation and super-resolution reconstruction of fetal brain MRI. NeuroImage 2020, 206, 116324.

- Zhang, Y.; Li, K.; Li, K.; Wang, L.; Zhong, B.; Fu, Y. Image super-resolution using very deep residual channel attention networks. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 286–301.

- Sert, E.; Özyurt, F.; Doğantekin, A. A new approach for brain tumor diagnosis system: Single image super resolution based maximum fuzzy entropy segmentation and convolutional neural network. Med. Hypotheses 2019, 133, 109413.

- Shakeel, P.M.; Burhanuddin, M.A.; Desa, M.I. Lung cancer detection from CT image using improved profuse clustering and deep learning instantaneously trained neural networks. Measurement 2019, 145, 702–712.

- Wang, Z.; Liu, C.; Bai, X.; Yang, X. DeepCADx: Automated prostate cancer detection and diagnosis in mp-MRI based on multimodal convolutional neural networks. In Proceedings of the 25th ACM International Conference on Multimedia, Mountain View, CA, USA, 23–27 October 2017; pp. 1229–1230.

- Feng, C.-M.; Fu, H.; Yuan, S.; Xu, Y. Multi-contrast MRI super-resolution via a multi-stage integration network. In Proceedings of the Medical Image Computing and Computer Assisted Intervention–MICCAI 2021: 24th International Conference, Strasbourg, France, 27 September–1 October 2021; Proceedings, Part VI; pp. 140–149.

- Yang, C.-Y.; Ma, C.; Yang, M.-H. Single-image super-resolution: A benchmark. In Proceedings of the Computer Vision–ECCV 2014: 13th European Conference, Zurich, Switzerland, 6–12 September 2014; Proceedings, Part IV; pp. 372–386.

- Kim, J.; Lee, J.K.; Lee, K.M. Accurate image super-resolution using very deep convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 1646–1654.

- Dong, C.; Loy, C.C.; He, K.; Tang, X. Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 2015, 38, 295–307.

- Lim, B.; Son, S.; Kim, H.; Nah, S.; Mu Lee, K. Enhanced deep residual networks for single image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 136–144.

- Zhang, Y.; Tian, Y.; Kong, Y.; Zhong, B.; Fu, Y. Residual dense network for image super-resolution. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2472–2481.

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9.

- Wen, D.; Jia, P.; Lian, Q.; Zhou, Y.; Lu, C. Review of sparse representation-based classification methods on EEG signal processing for epilepsy detection, brain-computer interface and cognitive impairment. Front. Aging Neurosci. 2016, 8, 172.

- Li, K.; Wu, Z.; Peng, K.-C.; Ernst, J.; Fu, Y. Tell me where to look: Guided attention inference network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 9215–9223.

- Zhao, C.-Y.; Jia, R.-S.; Liu, Q.-M.; Liu, X.-Y.; Sun, H.-M.; Zhang, X.-L. Chest X-ray images super-resolution reconstruction via recursive neural network. Multimed. Tools Appl. 2021, 80, 263–277.

- Sparacino, G.; Tombolato, C.; Cobelli, C. Maximum-likelihood versus maximum a posteriori parameter estimation of physiological system models: The C-peptide impulse response case study. IEEE Trans. Biomed. Eng. 2000, 47, 801–811.

- Shi, W.; Caballero, J.; Huszár, F.; Totz, J.; Aitken, A.P.; Bishop, R.; Rueckert, D.; Wang, Z. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1874–1883.

- Yamanaka, J.; Kuwashima, S.; Kurita, T. Fast and accurate image super resolution by deep CNN with skip connection and network in network. In Proceedings of the Neural Information Processing: 24th International Conference, ICONIP 2017, Guangzhou, China, 14–18 November 2017; Proceedings, Part II; pp. 217–225.

- Yin, J.; Liu, Z.; Jin, Z.; Yang, W. Kernel sparse representation based classification. Neurocomputing 2012, 77, 120–128.

- Levitan, E.; Herman, G.T. A maximum a posteriori probability expectation maximization algorithm for image reconstruction in emission tomography. IEEE Trans. Med. Imaging 1987, 6, 185–192.

- Lam, B.S.; Gao, Y.; Liew, A.W.-C. General retinal vessel segmentation using regularization-based multiconcavity modeling. IEEE Trans. Med. Imaging 2010, 29, 1369–1381.

- Ledig, C.; Theis, L.; Huszár, F.; Caballero, J.; Cunningham, A.; Acosta, A.; Aitken, A.; Tejani, A.; Totz, J.; Wang, Z. Photo-realistic single image super-resolution using a generative adversarial network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4681–4690.

- Wang, X.; Peng, Y.; Lu, L.; Lu, Z.; Bagheri, M.; Summers, R.M. ChestX-ray8: Hospital-scale chest X-ray database and benchmarks on weakly-supervised classification and localization of common thorax diseases. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2097–2106.

- Kermany, D.S.; Goldbaum, M.; Cai, W.; Valentim, C.C.; Liang, H.; Baxter, S.L.; McKeown, A.; Yang, G.; Wu, X.; Yan, F. Identifying medical diagnoses and treatable diseases by image-based deep learning. Cell 2018, 172, 1122–1131.e9.

- Kingma, D.; Ba, J. Adam: A method for stochastic optimization. In Proceedings of the 3rd International Conference on Learning Representations (ICLR’15), San Diego, CA, USA, 7–9 May 2015.

- Salem, A.; Ibrahem, H.; Kang, H.-S. Light Field Reconstruction Using Residual Networks on Raw Images. Sensors 2022, 22, 1956.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Sara, U.; Akter, M.; Uddin, M.S. Image quality assessment through FSIM, SSIM, MSE and PSNR—A comparative study. J. Comput. Commun. 2019, 7, 8–18.

- Chan, R.H.; Ho, C.-W.; Nikolova, M. Salt-and-pepper noise removal by median-type noise detectors and detail-preserving regularization. IEEE Trans. Image Process. 2005, 14, 1479–1485.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).