Abstract

Phase Retrieval is defined as the recovery of a signal when only the intensity of its Fourier Transform is known. It is a non-linear and non-convex optimization problem with a multitude of applications including X-ray crystallography, microscopy and blind deconvolution. In this study, we address the problem of Phase Retrieval from the perspective of variable splitting and alternating minimization for real signals and seek to develop algorithms with improved convergence properties. An exploration of the underlying geometric relations led to the conceptualization of an algorithmic step aiming to refine the estimate at each iteration via recombination of the separated variables. Following this, a theoretical analysis to study the convergence properties of the proposed method and justify the inclusion of the recombination step was developed. Our experiments showed that the proposed method converges substantially faster compared to other state-of-the-art analytical methods while demonstrating equivalent or superior performance in terms of quality of reconstruction and ability to converge under various setups.

1. Introduction

Phase Retrieval (PhR) is the inverse problem of reconstructing a signal when only the magnitude of its Fourier transform is available. A typical example is ptychography, where a set of interference patterns is generated by illuminating a specimen at a series of positions separated by preset intervals.

The obtained measurements contain only magnitude information and computational imaging methods can produce an image of the specimen by combining the information of each pattern at different positions. Examples of phase retrieval applications include microscopy [1], X-ray crystallography [2,3,4], coherent diffraction imaging [5], array imaging [6], blind deconvolution [7], acoustics [8], interferometry [9] and astronomy [10]. The problem can be stated more generally as retrieving from measurements of the type

where is the i-th sampling vector and is an additive noise term. Phase Retrieval belongs to the class of non-linear non-convex optimization problems. Theoretical advances in random linear operators enabled the application of the solution uniqueness principles of random underdetermined linear systems (Compressive Sensing) to the quadratic measurements problem of Phase Retrieval.

In detail, the original signal can be recovered when the sampling vectors are drawn randomly from a probability distribution such as a Gaussian [11]. In practice, this can be achieved by masking the sample with binary masks or optical gratings [12]. Additionally, in the general case the uniqueness of the solution cannot be guaranteed unless a sufficient number of samples, exceeding the signal size, is available.

1.1. Related Work

The most widely accepted reconstruction methods before the invention of modern techniques were the Gerchberg–Saxton [13] and Fienup [10] algorithms. Both methods are based on iterative nonlinear projections for the refinement of their estimates. There was no guarantee for the convergence of these algorithms and solutions could typically be obtained under special conditions for the input signals or special initializations relying on prior information. Subsequent research produced nonconvex iterative methods such as the extended Ptychographic Iterative Engine [14] and Difference Map [15].

The advent of Compressive Sensing [16] and its theoretical connection to the problem of Matrix Completion [17] led to the development of new theoretical results on Phase Retrieval. Specifically, in [11,12], Candès et al. approached the problem via the “Lifting” technique, where a convex relaxation allows the solution to be sought in the space of positive semidefinite matrices through trace norm minimization, with the problem being recast as one of matrix completion. This formulation, in conjunction with the randomness of the transform operators, made it possible to guarantee convergence to a unique solution, given the availability of sufficient samples [8,18,19,20].

The matrix completion formulation of Phase Retrieval and related semidefinite programming methods [21] are computationally prohibitive for any sizeable signal, for example, a high-resolution image, since they involve the manipulation of very high-dimensional variables. In response to this, a number of efficient non-convex optimization methods based on Stochastic Gradient Descent (SGD) were developed, namely Wirtinger Flow and Amplitude Flow [19,22,23], where random operator properties and a good initial estimate of the solution are used to guarantee convergence. The success of SGD methods led to the development of numerous modifications aiming to improve their convergence and noise resilience; see [24,25,26,27,28] among others.

Beyond Wirtinger Flow-related studies, research on Phase Retrieval produced algorithms based on non-linear optimization [29], alternating minimization [30,31] and ADMM methods [32]. Furthermore, the problem was addressed from the perspective of Basis Pursuit convex optimization [33,34], low rank matrix completion [35,36] and Total Variation minimization [37]. Ref. [38] explored insights on the geometry of the problem of Phase Retrieval. In [39] the Generalized Approximate Message Passing framework was applied to Phase Retrieval.

The ongoing developments in Deep Learning have resulted in a plethora of Neural Network methods for Phase Retrieval. Deep Learning methods include direct network approaches, in which Neural Networks are trained with specific datasets in order to learn the function mapping input to output [40,41]. Beyond dataset-based approaches, a number of physics-oriented methods were developed. Physics-based methods utilize a model of the dynamics of the sensing system as a prior driving the training or inference process of Neural Networks. In such methods, the Neural Network can act as a regularizer in an iterative estimation process, confining the estimates to certain spaces [42,43,44]. Alternatively, the iterations of an analytical process for Phase Retrieval can be mapped on the layers of a Neural Network [45,46].

Neural Networks have also been combined with numerical methods in order to refine imperfect estimates into high-quality reconstructions [47,48]. Untrained neural network physics-based methods have been used to alleviate various problems that stem from the lack of adequate or good-quality training data as well as imperfect modeling of the signal propagation [49,50,51]. Deep learning has also been utilized to optimize the design of coded diffraction patterns [52].

Alternating optimization algorithms have also utilized Neural Networks as regularizers [53,54] in order to improve noise stability and achieve better performance. The ADMM has also been used as a physical model for untrained network methods [55,56]. An overview of Deep Learning techniques for Phase Retrieval related to a wide variety of applications and sensing configurations is provided in [57].

1.2. Our Contribution

We introduce a solver for the non-convex optimization problem of Equation (1), concerning real signals, which belongs to the category of alternating minimization algorithms. Our method differs from traditional alternating estimation algorithms since it does not involve the type of updates used in the Gerchberg–Saxton and Fienup methods, where successive nonlinear projections to desirable sets are used to refine the solution. Our study follows the line of theoretical results on Phase Retrieval with random sensing operators, specifically the interpretation of Phase Retrieval as a Matrix Completion problem and uses established results on the uniqueness of the solution in the space of rank-1 Hermitian matrices [11,22] in order to derive a nonconvex split variables formulation (see Equation (3)).

Our formulation differs from other alternating minimization methods such as [30,31], which estimate a phase and a solution vector. Instead, it reformulates the optimization problem by using two vectors for the estimated solution, expanding the search space to all rank-1 matrices (Equation (4)). The paper [32] shares the same optimization problem formulation as the one presented here (Equation (7)). However, the main theoretical and algorithmic innovation of our study lies in that it unveils and utilizes an implicit geometric relation of the split variables at optimal points in order to enforce their equality, by calculating a recombination of them at each iteration (Equation (13)), effectively restricting the estimated solution space to rank-1 Hermitian matrices. This is achieved without the need for additional regularization terms in the objective function, such as the ones used in various versions of the Alternating Direction Method of Multipliers.

As a result, the proposed update equations correspond to a fundamentally different formulation of the Alternating Direction Method compared to the one presented in [32] (also see Section S5 in Supplementary File). To the best of our knowledge, the update equations of the proposed method are not equivalent to any existing iterative method for Phase Retrieval. Empirical results show that the inclusion of the recombination step is necessary for the algorithm to converge (see Section S6 of the Supplementary File). Furthermore, since we are not aware of any results that correspond to the recombination step in the literature, we provide a theoretical analysis exploring the convergence properties of the proposed non-linear optimization method, in order to justify its general applicability.

An experimental comparison shows that our method demonstrates superior convergence properties compared to state-of-the-art analytical methods, in terms of its ability to converge for various numbers of available samples, processing time and accuracy under the presence of noise.

1.3. Paper Structure

The rest of the paper is organized as follows: In Section 2, the problem formulation and the proposed optimization method are introduced, along with a theoretical analysis of its convergence. Section 3 contains experimental results, where the proposed method is evaluated and compared against other analytical Phase Retrieval solvers.

2. Method

2.1. Problem Formulation

In our experiments and analysis, we consider the case of observations, which are acquired according to the following forward model

that is a matrix with entries , observed by applying a set of K masks (diagonal matrices) to the original signal and measuring the squared magnitude of each element of the Discrete Fourier Transform (DFT) of the masked signal, where the DFT matrix is represented by with rows . Finally, a noise term is added to the observation.

This observation model corresponds to a sample acquisition system where the sampled image is first modulated before it is acquired by the sensor. Examples of such systems are masking [58] and ptychography [59]. In this study the mask elements are sampled randomly from the set , where i represents the imaginary unit. A modulation of this kind could be achieved with a phase-shift mask, without precluding the use of other coded patterns achievable with a modulated illumination beam or ptychography.
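As an illustration of this acquisition model, the following NumPy sketch simulates masked Fourier intensity measurements for a one-dimensional real signal. The function names, the mask alphabet {1, -1, i, -i} and the Gaussian noise parameter are assumptions made for the example and are not taken from the authors' implementation.

```python
import numpy as np

def random_phase_masks(K, n, rng):
    """Draw K masks of length n with entries from {1, -1, i, -i} (assumed alphabet)."""
    alphabet = np.array([1, -1, 1j, -1j])
    return rng.choice(alphabet, size=(K, n))

def coded_diffraction_measurements(x, masks, noise_std=0.0, rng=None):
    """Return a K x n array whose k-th row is |DFT(d_k * x)|^2 plus optional noise."""
    # Broadcasting applies every mask to x; the FFT acts along the last axis.
    spectrum = np.fft.fft(masks * x[None, :], axis=-1)
    y = np.abs(spectrum) ** 2
    if noise_std > 0.0:
        rng = np.random.default_rng() if rng is None else rng
        y = y + noise_std * rng.standard_normal(y.shape)
    return y

rng = np.random.default_rng(0)
x = rng.uniform(0.0, 1.0, size=16)            # real, non-negative test signal
masks = random_phase_masks(K=4, n=16, rng=rng)
y = coded_diffraction_measurements(x, masks)  # noiseless observations
```

Because the measurements of a signal and of its negative coincide, a real signal can only be recovered up to its sign from such data.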

According to [12], the Phase Retrieval problem can be transformed into the following matrix completion problem

where is a matrix, and the operator is the Frobenius inner product. According to the same work [12], given random sampling vectors and an adequate number of samples, the optimal matrix is unique and can be used to identify the vector up to a global phase. Since it holds that at the global minimum, the search space for the matrix is the positive semidefinite cone.

Against this backdrop, we use a split variable formulation to solve the problem in Equation (3), and to enforce the rank-1 constraint in Equation (3) (i), by factorizing the matrix as

where and are vectors in . This formulation allows the observation constraints, in Equation (3) (iii) to be written as

since

Splitting the variable into and is the reason why the real part in the definition of the sampling operator is used. For a sampling vector , and denoting and its real and imaginary parts, respectively, = . For the split variables = + + i − i. When the imaginary terms do not cancel out and since the value must be real, only the real part of the sampling vector times its transpose is retained.
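The role of the real part can be checked numerically. The sketch below uses assumed names (`a` for a sampling vector, `u` and `v` for the split variables). It verifies that the real part of the outer product of the sampling vector with itself is the sum of the outer products of its real and imaginary parts, and that the resulting bilinear form reduces to the measured intensity when the two split variables coincide.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 6
a = rng.standard_normal(n) + 1j * rng.standard_normal(n)  # complex sampling vector
u = rng.standard_normal(n)                                 # real split variables
v = rng.standard_normal(n)

outer = np.outer(a, np.conj(a))      # a a^H, a Hermitian rank-1 matrix
a_r, a_i = a.real, a.imag

# Real part of a a^H written with the real and imaginary parts of a.
real_part = np.outer(a_r, a_r) + np.outer(a_i, a_i)

full_form = u @ outer @ v            # complex in general when u != v
real_form = u @ real_part @ v        # always real

# With u = v = x the form equals the intensity |a^H x|^2.
x = u
intensity = np.abs(np.vdot(a, x)) ** 2
```

Here `full_form` generally carries a non-zero imaginary part, while `real_form` matches its real part, which is why only the real part of the sampling operator is retained for the split variables.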

Similar approaches can be found in the literature on the matrix completion problem. For instance, in [60], matrix is factorized as , where and are matrices of size and its nuclear norm is minimized through alternating estimation of the values of and . The main difference between this approach and our method is that in the case of PhR, we can enforce the rank-1 constraint and equality of and .

Thus, the PhR problem can be interpreted as one of matrix completion with split variables. Solving for and does not necessarily lead to positive-semidefinite (PSD) solutions for , as is required in Equation (3) (ii). To avoid this problem, we enforce a stronger constraint, , which implies that the only non-zero eigenvalue of , , is positive, and therefore, .
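The effect of the equality constraint on positive semidefiniteness can be seen in a small numerical check. In this sketch (function and variable names are illustrative), the only non-zero eigenvalue of a rank-1 matrix formed as the outer product of two vectors equals their inner product, which may be negative when the vectors differ but equals the squared norm when they coincide.

```python
import numpy as np

def nonzero_eigenvalue(u, v):
    """Return the eigenvalue of largest magnitude of the rank-1 matrix u v^T."""
    eigvals = np.linalg.eigvals(np.outer(u, v))
    return eigvals[np.argmax(np.abs(eigvals))].real

u = np.array([1.0, 2.0, -1.0])
v = np.array([-1.0, 0.5, 3.0])         # v^T u = -1 + 1 - 3 = -3

lam_split = nonzero_eigenvalue(u, v)   # negative, so u v^T is not PSD
lam_equal = nonzero_eigenvalue(u, u)   # equals ||u||^2 = 6
```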

Finally, the PhR problem can be formulated as the following optimization problem

2.2. Proposed Optimization Algorithm

The optimization problem of Equation (7) can be recast as

where denotes the Frobenius norm of a vector and is a matrix whose rows are defined as

and denoting the vector obtained by stacking the columns of matrix .

We propose a method that alternates between the estimations of and . At iteration n, the vectors and are calculated by solving

Alternately solving Equations (10) and (11) does not result in convergence. An empirical investigation (see Section S6 in the Supplementary File) reveals the geometric properties of the local minima: the vectors and tend to attain resting positions that lie on opposing sides of the solution vector.

Consequently, to enforce , we introduce a refinement step in the process which replaces the vectors and with their mean

The convergence analysis of the algorithm presented in Section 2.3 shows that the second estimation step can be skipped, as calculating the local minimum of only one of the variables suffices for the recombination step to provide an estimate that is closer to the solution.

The recombination step then becomes

The final form of the proposed optimization method is summarized in Algorithm 1.

Algorithm 1. Proposed Algorithm for Phase Retrieval.
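The alternating update described in this subsection (a least-squares estimate of one split variable followed by recombination with the other through their mean) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, the sensing matrix is formed explicitly (only practical for small signals), the mask alphabet {1, -1, i, -i} is assumed, and `numpy.linalg.lstsq` replaces the conjugate gradient solver used in the paper.

```python
import numpy as np

def sensing_matrix(masks):
    """Stack F @ diag(d_k) for every mask d_k; rows c_i satisfy y_i = |c_i^T x|^2."""
    n = masks.shape[1]
    F = np.fft.fft(np.eye(n))                  # n x n DFT matrix
    return np.vstack([F * d[None, :] for d in masks])

def bilinear_matrix(C, v):
    """Rows are (Re(c_i c_i^H) v)^T, so that (A @ u)_i = u^T Re(c_i c_i^H) v."""
    return np.real(np.conj(C) * (C @ v)[:, None])

def recombination_solver(y, C, x0, n_iter=200, tol=1e-10):
    """Alternate a least-squares estimate of u with the recombination (u + v) / 2."""
    v = x0.astype(float).copy()
    for _ in range(n_iter):
        A = bilinear_matrix(C, v)
        u, *_ = np.linalg.lstsq(A, y, rcond=None)   # estimate u with v fixed
        v_new = 0.5 * (u + v)                       # recombination step
        if np.linalg.norm(v_new - v) < tol:         # stop when the estimate stalls
            return v_new
        v = v_new
    return v

rng = np.random.default_rng(0)
n, K = 8, 4
x_true = rng.uniform(0.0, 1.0, size=n)
masks = rng.choice(np.array([1, -1, 1j, -1j]), size=(K, n))
C = sensing_matrix(masks)
y = np.abs(C @ x_true) ** 2                         # noiseless observations
x_hat = recombination_solver(y, C, x0=np.ones(n))   # all-ones start, for illustration
```

In the noiseless case the true signal satisfies the linear system solved in each iteration exactly, so an estimate equal to the true signal is left consistent with the observations by the update. For an estimate that is a scaled copy of the signal, the recombination reduces to averaging a scale factor with its reciprocal, which drives the factor towards one.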

The estimation of involves the application of a conjugate gradient solver. If p is the maximum number of iterations allowed for the Conjugate Gradient solver [61] and q is the maximum number of iterations allowed for Algorithm 1, the total computational complexity is (see Section S3 in the Supplementary Material) basic operations.
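A minimal sketch of a conjugate gradient solver for a linear least-squares subproblem is given below. It applies the textbook conjugate gradient iteration to the normal equations and caps the number of iterations at p. The function name, the zero starting point and the tolerance are assumptions, and the solver of [61] may differ in its details.

```python
import numpy as np

def cg_least_squares(A, y, p=50, tol=1e-12):
    """Approximately solve min_u ||A u - y||^2 with at most p CG iterations."""
    u = np.zeros(A.shape[1])
    r = A.T @ y                  # residual of the normal equations at u = 0
    d = r.copy()                 # initial search direction
    rs_old = r @ r
    for _ in range(p):
        if np.sqrt(rs_old) < tol:
            break
        Ad = A.T @ (A @ d)       # apply A^T A without forming it
        alpha = rs_old / (d @ Ad)
        u = u + alpha * d
        r = r - alpha * Ad
        rs_new = r @ r
        d = r + (rs_new / rs_old) * d
        rs_old = rs_new
    return u
```

Each iteration costs two matrix-vector products, so the cap p directly bounds the work spent on each outer iteration of the alternating scheme.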

2.3. Convergence Analysis

In this subsection, theoretical results on the convergence of Algorithm 1 are presented. This analysis concerns a real signal and variable vectors .

2.3.1. Equations at Local Minima

Let and be the error vectors, such that

At each iteration, the vector is computed via a Linear Least Squares system solution

At this optimal point, it holds that

By using the definition of the error vectors of Equation (14), multiplying the left sides of Equation (16) with the transpose of the vector and after some algebra (please see Section S1 of the Supplementary File), we obtain

The algorithm will converge, if at each iteration it holds that

Assuming that only (or equivalently ) is optimized, the value of is left unchanged. Thus, if at each iteration

the algorithm will converge.

2.3.2. Norm of

We proceed to examine the relation between the norms of the vectors and . From the triangle inequality and Equation (17), it follows that

We establish closed-form expressions for the expectations of, and concentration inequalities for, the quantities and (see Section S2 of the Supplementary Material).

Combining inequality (20) with Equations (S41), (S43) and inequalities (S93), (S108), leads to

which holds with very high probability.

Since , in the limit cases inequality (21) after some algebra becomes

2.3.3. Convergence

To declutter the equations, we define

for which it holds that , and .

To find the conditions that satisfy inequality (23), we rewrite it as

Since is upper bounded by 1,

A larger value for corresponds to a smaller distance tolerance for the error vector .

Assuming a pessimistic scenario and minimum value of such a distance, the bound of is

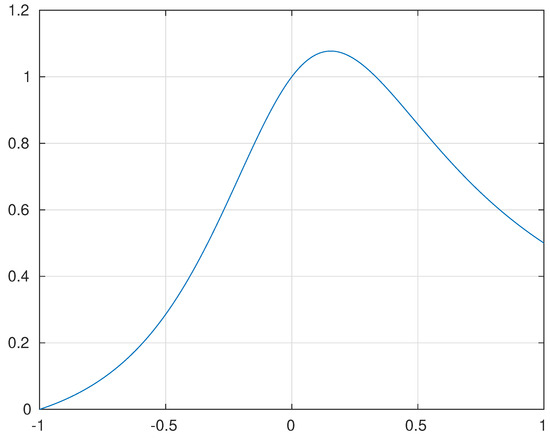

An upper bound for is numerically computed (see Figure 1), thus in the worst case

Figure 1.

Plot of the function against the variable .

Reinstating the original variables

From the non-negativity constraint, the denominators must be positive.

After some algebra, the geometric constraint becomes

or

where since the right term of the inequality is an upper bound, only the term with the minus sign was retained.

Solving the quadratic equation and after some algebra, the condition of convergence becomes

It remains to express the constraint as a relation between the norm of the error and solution vectors.

From the definition of the error vector we have

The minimal value for is attained, when , i.e., when , and are collinear.

At this geometric point, it holds that

or

From inequality (38) it follows that an initialization which results in a value will always lead to convergence.

The above result assumes the worst case scenario for the value of the variable , as well as the geometric relations of the vectors , and .

Assuming that the uncertainty term relying on is equal to zero for ease of exposition, the best possible scenario is the case where for which , or stated otherwise, any initial value of results in a smaller error norm.

In a case where , which implies that the vectors , and are not aligned, a value for the error vector where , will also lead to a lower error.

The convergence analysis presented in this section relies mainly on the terms of the right side of inequality (17) attaining small values or equivalently that for various instantiations of the masks, the quantities and are highly concentrated around their expected value. The type of random masks examined endows the forward operator with special statistical properties that promote the high concentration. The convergence analysis presented in this study assumes that for each mask element, it is true that

and

Masks with elements that belong to or will also lead to convergence. Empirical tests show that for the proposed algorithm to function well the zero expectation property must be maintained. Beyond the aforementioned, other families of masks that enhance the concentration of measure of the sensing matrices, such as Designed Coded Diffraction [62], can be utilized.

3. Results

This section contains experiments showing the performance of the proposed algorithm in terms of numerical error and execution time. The proposed method is compared with other analytical methods such as the Wirtinger Flow Phase Retrieval (WF) [63], Truncated Wirtinger Flow (TWF) [23], Truncated Amplitude Flow (TAF) [19], the Momentum median reweighted Truncated Amplitude Flow (MRTAF) [28] and the PhaseSplit [32] method, which shares the same formulation as the method proposed in this study.

Notice that the forward model of TAF and MRTAF uses the non-squared magnitudes as input, whereas the proposed method, WF, TWF and PhaseSplit consider the squared magnitudes. Therefore, noisy measurements generated for one category of algorithms do not correspond to the same SNR for the other and cannot be used directly. Instead, we compare the methods by adding a level of noise that results in the same SNR for the magnitude and squared-magnitude observations, respectively.

Each method has parameters that control its performance. The standard parameters were used, as provided by their authors.

In the experiments three initialization methods were considered, one based on the Truncated Wirtinger Flow spectral initialization (TWF) introduced in [23], one based on the Truncated Amplitude Flow spectral initialization (TAF) introduced in [19] and a proposed positive random numbers initialization method (see Section S4 of the Supplementary File).

The proposed method was implemented in MATLAB, and MATLAB implementations of WF, TAF and TWF were downloaded from the respective websites (see Table 1). All test images shown were obtained from the USC-SIPI Image Database (available at https://sipi.usc.edu/database/ (accessed on 20 July 2024)). Implementations of PhaseSplit and MRTAF were not readily available and were implemented by us, since they correspond to minor modifications of the proposed and TAF methods, respectively.

Table 1.

URLs of the websites where we downloaded the code for the state-of-the-art methods compared in this work.

In the simulations, three different images were used: “Lena” of sizes and , “Cameraman” of size , and “Man” of sizes and .

In the experiments, the proposed algorithm stops executing when the distance between the last two estimated output values becomes lower than a given threshold, set to .

To evaluate the performance of the proposed method we use the following metric, which is the square root of the metric proposed in [11]

This metric takes into account the fact that the original signal and any other signal obtained by a global phase delay of always produce the same observation.

For the case where is real, this is
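For real signals the only remaining ambiguity is the global sign, so the metric can be evaluated by comparing the estimate with both the signal and its negative. A minimal sketch, assuming that the error is normalized by the norm of the ground truth (the function name is illustrative):

```python
import numpy as np

def normalized_error(x_true, x_est):
    """Relative distance between x_true and x_est up to a global sign."""
    dist = min(np.linalg.norm(x_true - x_est), np.linalg.norm(x_true + x_est))
    return dist / np.linalg.norm(x_true)

x = np.array([3.0, 4.0])
err_flipped = normalized_error(x, -x)            # 0.0, a sign flip is not penalized
err_zero = normalized_error(x, np.zeros(2))      # 1.0, an all-zero estimate
```

Under this normalization an all-zero estimate has an error of one, which gives a reference point for judging whether an initialization carries any information about the signal.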

We generate simulated observations according to the acquisition model introduced in Equation (2).

We consider a different number of masks in our simulations, that is .

The elements of each mask , , are uniformly drawn from the Coded Diffraction Patterns dictionary (see [22]). These diffraction patterns correspond to physically realizable acquisition systems, where only a phase delay is introduced using appropriate masking.

Different levels of AWGN noise were considered in our simulations, with SNRs equal to ∞, 30, 24, 20 and 10 dB.
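One way to generate noisy observations at a prescribed SNR is sketched below. It assumes that the SNR is defined as the ratio of the mean squared observation to the mean squared noise, and it rescales the Gaussian noise realization so that the target is met exactly. Both the definition and the function names are assumptions and are not taken from the paper.

```python
import numpy as np

def add_noise_at_snr(y, snr_db, rng):
    """Add zero-mean Gaussian noise to y so that the empirical SNR equals snr_db."""
    if np.isinf(snr_db):
        return y.copy()                       # infinite SNR: noiseless case
    noise = rng.standard_normal(y.shape)
    signal_power = np.mean(y ** 2)
    target_noise_power = signal_power / 10.0 ** (snr_db / 10.0)
    # Rescale the realization so that its power matches the target exactly.
    noise *= np.sqrt(target_noise_power / np.mean(noise ** 2))
    return y + noise

def empirical_snr_db(y_clean, y_noisy):
    """SNR in dB measured from a clean/noisy pair of observations."""
    noise_power = np.mean((y_noisy - y_clean) ** 2)
    return 10.0 * np.log10(np.mean(y_clean ** 2) / noise_power)
```

The same routine can be applied either to the magnitudes or to the squared magnitudes, so that solvers working with different observation types are tested at the same SNR.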

3.1. Initialization Quality

The performance of the initialization method proposed in Section S4 of the Supplementary File was evaluated first.

Table 2 shows the normalized error obtained by the proposed random initialization method, TWF initialization method and TAF initialization method, for the noiseless case with .

Table 2.

Comparison of the initialization methods in the noiseless case.

The proposed random initialization method produces estimates of similar quality for all cases. The error of the proposed initialization method increases with the size of the input image but is similar for different numbers of masks with each size.

We observe that TWF and TAF need at least K = 8 and K = 4 masks, respectively, to obtain a normalized error smaller than one, but the proposed initialization method can obtain normalized errors smaller than one, even with K = 2.

Table 3 shows the normalized error obtained by the proposed initialization method, TAF initialization method and TWF initialization method, for SNR = 20 dB and . The proposed method performs similarly to the noiseless case.

Table 3.

Comparison of the initialization methods for SNR = 20 dB.

The TWF method needs more than K = 8 masks to obtain a normalized error less than 1 while the TAF initialization begins to do so with K = 4 masks. The quality of the estimates of the proposed initialization method does not change substantially by the presence of noise compared to the noiseless case.

Table 4 shows the time required for the initialization routine to return for the WF, TWF and TAF methods. The proposed method is omitted since it has effectively zero execution time (for example, it is 0.01 s for and images, the slowest case in the experiments conducted in this work). The results are reported for various image sizes in the noise-free case.

Table 4.

Execution times of the comparison initialization methods in the noiseless case.

The noisy cases are not shown but would have the same return times, since the presence of noise does not affect the execution time of the iterations and the number of iterations is predefined. We observe that the times required grow with the number of masks and the image size, which is expected due to the higher computational complexity. The WF method is the fastest, followed by TWF, with TAF being the slowest.

This pattern reflects the higher complexity of the truncation calculations in each iteration. Generally, the WF, TWF and TAF initializations can consume substantial computational resources, with execution times equivalent to 40% of the reconstruction time in the cases where noise is present.

3.2. Reconstructions with Noise-Free Observations

Figure 2 shows the reconstructions of 10 test images for K = 2 masks in the noiseless case, using the proposed method. In all cases the images were perfectly reconstructed.

Figure 2.

Reconstructions of images for K = 2 using the proposed method.

In all noise-free cases, the proposed method is able to recover the original signal. Regarding the compared methods, TWF, TAF, and MRTAF also recover the exact solution in all cases. However, WF only recovers the exact solution when . For , WF converges to an inexact reconstruction of the image.

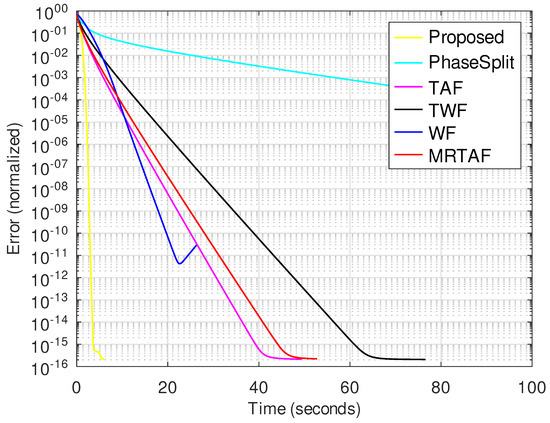

Figure 3 shows the evolution of the reconstruction error with time for all methods tested. The proposed method converges to a solution substantially faster than the compared methods. Among the remaining methods, WF, TAF and MRTAF converge the fastest. The PhaseSplit method converges more slowly than the proposed and SGD-based methods and its convergence behavior is very sensitive to changes in its parameters (also see Section S5 of Supplementary Material).

Figure 3.

Normalized error vs. time for image size with K = 8 in the noiseless case.

Table 5 shows the execution time taken for all methods to achieve exact reconstruction for various input sizes and for both the proposed and TAF initializations, with the latter being considered the best available spectral initializer. In some of the experiments, the WF method failed to reach an exact solution and terminated early with a solution of normalized error typically close to 0.03.

Table 5.

Comparison of execution times (in seconds) in the noiseless case. The asterisks (*) denote cases where the reconstruction failed to converge or reach an exact solution.

In all experiments, the proposed method is faster than all the compared methods. More specifically, we observe that the proposed method is approximately four times faster than WF, the second fastest method.

In the next experiment, the convergence success rate is measured. We generate 100 random signals of size and apply the observation model in Equation (2) to generate 100 noise-free observations. Then, we apply the compared methods and calculate the percentage of signals that have been successfully recovered.

The experiment is repeated for two different ways of generating the signal. More specifically, we use a uniform distribution on the interval [0, 1], and a standard Gaussian distribution with mean 0 and covariance the identity matrix. The results of this experiment are shown in Table 6.

Table 6.

Success rates for the proposed algorithm for real positives and real signals.

For signals generated with the Gaussian distribution, the proposed method converges with higher probability when the TAF initialization is used, since the quality of the proposed initialization is worse, as was seen in the previous experiment.

The failures in convergence were associated with poor initialization quality up to . Beyond this threshold there is enough information for the spectral initialization to be of good quality. More than six masks also suffice for the method to converge, regardless of the initialization, which can be deduced by the success rate for a general real signal when the initialization only contains positive numbers. When the TAF initialization was used, all methods had progressively better success rates with higher K.

The WF method follows the same convergence patterns regardless of initialization and signal type, with only the number of masks determining the rate of success. TAF and TWF always failed when the proposed random initialization was used for general real signals due to the poor quality of the initialization.

The proposed method and PhaseSplit had higher success rates for lower K compared to all other methods.

Generally, the proposed method produces reconstructions for the noise-free case comparable to those obtained by the state-of-the-art methods, with the advantage of being much faster.

The proposed random initialization method also leads to acceptable initializations in practice.

The proposed method works with the theoretical lower bound of K = 2 masks, provided that a good-quality initialization is available. Reconstructions with K = 2 masks have only been reported in the TAF paper [19], for real-valued sampling vectors. PhaseSplit could also produce a similar level of performance to the proposed method when its parameters were finely tuned.

The proposed method also converges with K = 2 masks in the case of complex sampling vectors.

3.3. Reconstructions with Noisy Observations

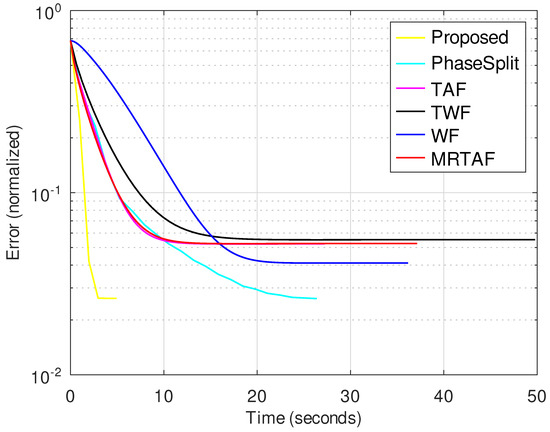

In this subsection, we evaluate the performance of the compared methods for noisy observations. Figure 4 and Figure 5 show the evolution of the normalized error for all compared methods for SNR = 24 dB and K = 8, when the image size is and , respectively.

Figure 4.

Normalized error vs. time for image size with SNR = 24 dB and K = 8.

Figure 5.

Normalized error vs. time for image size with SNR = 24 dB and K = 8.

Table 7 shows the results obtained for all the compared methods, for image sizes and SNR = 24 dB. In addition to Normalized error, we also show the PSNR and SSIM of the reconstructed images.

Table 7.

Figures of merit for the three compared methods, for image size and SNR = 24 dB.

The proposed method obtains better reconstructions in terms of Normalized error, PSNR and SSIM. We also observe that when the number of masks K increases, the three methods obtain better reconstructions.

For K = 4, the difference in PSNR is approximately 2 dB with respect to WF, which is the nearest competitor among the SGD-based methods. However, when K increases, this difference decreases, and when K = 8 the difference with the nearest competitor, WF, is about 0.8 dB. Regarding running times, the proposed method needs about 1 s when the number of masks is K = 8. For the same case, WF and TWF need more than 6 s and TAF and MRTAF more than 3.5 s.

Table 8 and Table 9 show the results obtained for all the compared methods, for image size with SNR = 24 dB and SNR = 30 dB, respectively. The proposed method reconstructions have a better Normalized error, PSNR and SSIM with these metrics improving with a higher number of masks.

Table 8.

Comparison of solvers performance, for different K and initialization method. The image size is and the input SNR is 24 dB.

Table 9.

Comparison of solvers performance, for different K and initialization method. The image size is and the input SNR is 30 dB.

PhaseSplit and the proposed method result in the same level of reconstruction quality for . The proposed method converges 3.5 to 6 times faster than PhaseSplit. For K = 4 and SNR = 24 dB there is a 3.5 dB difference with the next best SGD method, TAF. For K = 8 there is a 0.85 dB difference with the next best SGD method, WF. The execution time of the proposed method is 2.5 times lower than that of the next best one.

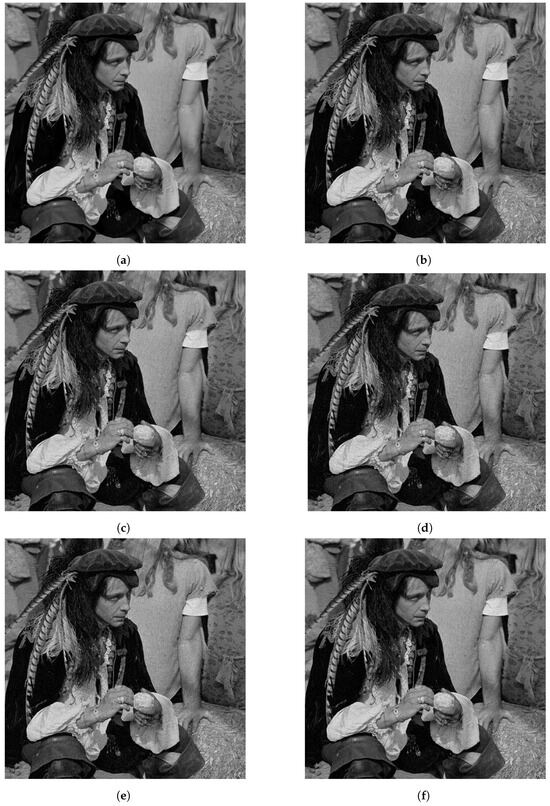

Figure 6 shows an example of the reconstructed images by the compared methods, for image size , K = 4 masks and SNR = 20 dB. Figure 6a shows the ground truth image that we used to generate the observation. Figure 6b shows the reconstruction obtained for the proposed method.

Figure 6.

Reconstructions of image with SNR = 20 dB for K = 4. (a) Original Image. (b) Reconstruction with Proposed method. (c) Reconstruction with WF. (d) Reconstruction with TWF. (e) Reconstruction with MRTAF. (f) Reconstruction with PhaseSplit.

The proposed method recovered most of the details in the image. For instance, see the high-frequency information in the straw at the bottom right of the image and the feathers hanging from the man’s hat. Figure 6c–f show the reconstructions obtained by WF, TWF, MRTAF and PhaseSplit, respectively. These reconstructions are very similar to the one obtained with the proposed method; however, the man’s face in Figure 6c–e looks noisier than in Figure 6b. The PhaseSplit reconstruction in Figure 6f is very similar to the one produced by the proposed method.

3.4. Robustness to Number of Masks and Noise Level

The performance of the proposed method for different noise levels and numbers of masks is examined next. Figure 7 shows the effect of noise on the convergence of the proposed method. As expected, the error is larger for higher noise levels. However, we also observe that the proposed method needs less time to converge at higher noise levels.

Figure 7.

Semilogarithmic plot of normalized error vs. iteration for image size and K = 8.
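For readers who wish to reproduce this kind of noise sweep, the sketch below adds white Gaussian noise to a set of measurements at a prescribed SNR. It is an illustration under stated assumptions rather than the experimental code: the SNR is assumed to be the ratio of measurement energy to noise energy in dB, the noise is rescaled so that the realized (not only the expected) SNR matches the target, and the names `add_awgn` and `measured_snr_db` are hypothetical.

```python
import numpy as np

def add_awgn(y, snr_db, rng):
    """Return y plus white Gaussian noise scaled to the requested SNR (in dB)."""
    noise = rng.standard_normal(y.shape)
    # Noise energy required so that 10*log10(||y||^2 / ||noise||^2) == snr_db.
    target_energy = np.sum(y ** 2) / 10.0 ** (snr_db / 10.0)
    noise *= np.sqrt(target_energy / np.sum(noise ** 2))
    return y + noise

def measured_snr_db(y_clean, y_noisy):
    """Empirical SNR in dB between clean and noisy measurements."""
    noise_energy = np.sum((y_noisy - y_clean) ** 2)
    return 10.0 * np.log10(np.sum(y_clean ** 2) / noise_energy)

rng = np.random.default_rng(0)
y_clean = rng.standard_normal(1024) ** 2   # stand-in for intensity measurements
y_noisy = add_awgn(y_clean, snr_db=24.0, rng=rng)
snr_check = measured_snr_db(y_clean, y_noisy)
```

Repeating the call with `snr_db` set to 10, 20 and 30 would give inputs comparable in spirit to the noise levels discussed in this section.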

To visualize the effect of various noise levels on the reconstruction, the results for an image reconstructed with the proposed method are presented in Figure 8. Figure 8a is the noiseless image. Figure 8b is the reconstructed image for SNR = 10 dB. In this case, the noise has an obvious effect on image quality that can be seen throughout the whole image. Figure 8c,d show the reconstructed images for SNR = 20 dB and SNR = 30 dB, respectively.

Figure 8.

From left to right and top to bottom, original and reconstructions of image with K = 8 for various noise levels. (a) Original Image. (b) SNR = 10 dB. (c) SNR = 20 dB. (d) SNR = 30 dB.

In these cases, the noise effect is less apparent but can be seen as changes in texture, especially on large patches of the same color or texture in the image.

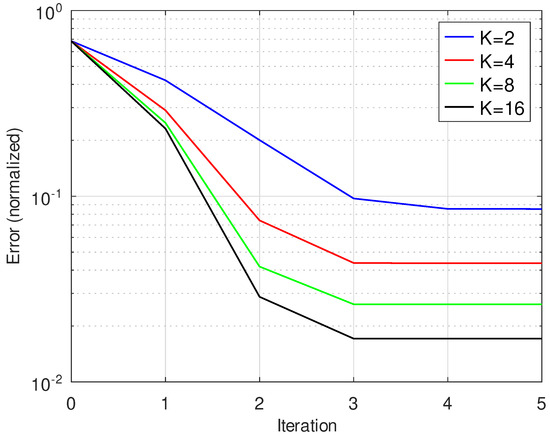

Figure 9 shows the performance of the proposed algorithm for different values of K. A lower number of masks leads to faster convergence, owing to the lower computational complexity, but also to a higher normalized error.

Figure 9.

Iteration vs. normalized error for SNR = 24 dB for image size and K = 2, 4, 8, 16.

The images in Figure 10 present the corresponding reconstructions for K = 2, 4, 8, 16 for a image and 20 dB SNR. Figure 10a is the case with K = 2 where the effect of noise is most obvious. Figure 10b–d are the cases with K = 4, 8 and 16, respectively. In these cases, the reconstruction is better and the effect of noise becomes progressively less apparent with the growing number of masks.

Figure 10.

Reconstructions of image with SNR = 24 dB for various numbers of masks K. (a) K = 2. (b) K = 4. (c) K = 8. (d) K = 16.

4. Discussion

We have presented an analytical method, which outperforms state-of-the-art algorithms for the solution of non-linear quadratic optimization problems associated with Phase Retrieval, when real signals are involved.

Following established results in the literature on the connection between Matrix Completion and Phase Retrieval as well as the uniqueness of the solution under random forward operator conditions [11,22], we have reformulated the original problem into one of alternating optimization with split variables.

While various alternating optimization approaches for Phase Retrieval exist in the literature [30,31] and the formulation considered in this study has also been used in [32], our method differs from other Phase Retrieval solvers in that it introduces an algorithmic step that recombines the split variables and confines the estimated solution to the desired space. This was made possible by a close examination of the relations between the variables involved, which allowed us to identify implicit regularizations.
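The role of the recombination step, described in this paper as replacing the split variables with their average after the two convex estimations, can be illustrated with a small dense example. The sketch below is not Algorithm 1: it assumes real Gaussian sampling vectors instead of coded diffraction masks, noiseless measurements y_i = (a_i^T x)^2, a split objective ||y - (Au) * (Av)||^2, and a direct least-squares solver in place of Conjugate Gradient. All function names are hypothetical.

```python
import numpy as np

def update_split_variable(A, y, fixed):
    """Least-squares update of one split variable with the other held fixed.

    With `fixed` frozen, y_i ~ (a_i^T fixed) * (a_i^T w) is linear in w.
    """
    B = (A @ fixed)[:, None] * A          # each row a_i^T scaled by a_i^T fixed
    w, *_ = np.linalg.lstsq(B, y, rcond=None)
    return w

def split_and_average(A, y, x0, n_iter=10):
    """Alternate the two convex updates, then recombine by averaging."""
    u = x0.copy()
    v = x0.copy()
    for _ in range(n_iter):
        u = update_split_variable(A, y, v)
        v = update_split_variable(A, y, u)
        u = v = 0.5 * (u + v)             # recombination step (assumed form)
    return u

rng = np.random.default_rng(0)
n, m = 16, 128                            # signal length, number of measurements
A = rng.standard_normal((m, n))           # assumed Gaussian sampling vectors
x_true = rng.standard_normal(n)
y = (A @ x_true) ** 2                     # noiseless quadratic measurements

# Initialization close to the truth (5% relative perturbation), an assumption.
e = rng.standard_normal(n)
x0 = x_true + 0.05 * np.linalg.norm(x_true) * e / np.linalg.norm(e)

x_hat = split_and_average(A, y, x0)
rel_err = min(np.linalg.norm(x_hat - x_true),
              np.linalg.norm(x_hat + x_true)) / np.linalg.norm(x_true)
```

In the scalar case this sketch reduces to the classical Babylonian square-root iteration, v <- (v + y/v)/2. To first order, the errors of the two split variables have opposite signs, so averaging cancels them; this gives some intuition for why a recombination step can speed up convergence in this toy setting.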

The convergence properties of the algorithm were examined theoretically in order to establish its applicability to any real signal. The analysis showed that the algorithm converges under some mild initialization conditions.

The presence of noise in the observations is implicitly factored into the statistical uncertainty terms of the theoretical analysis; however, the noise terms were not specifically modeled. An experimental exploration of the effect of AWGN on the method (see Figure 7) showed that the method converges and that the output is corrupted according to the noise level. The proposed method performed better than state-of-the-art analytical methods when tested at the same noise level (see Section 3.3).

Since the algorithm does not use any explicit regularization terms, the only controllable algorithmic parameters are the tolerance and maximum number of iterations of the Conjugate Gradient (CG) solver and the maximum number of iterations of Algorithm 1. A higher noise level can allow for the use of a higher tolerance or maximum iterations for the CG solver. The proposed algorithm can be implemented on any platform that supports the solution of linear systems via Conjugate Gradient. Since the forward model is based on the Fast Fourier Transform, the only storage requirements are for the masks and the split variables, which allows large images to be handled by standard desktop computers with limited memory.
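The storage argument can be made concrete with a matrix-free sketch in which the forward model is applied through FFTs and only the masks are kept in memory. The code below is one plausible way to set up a single split-variable update with a hand-written Conjugate Gradient loop, not the authors' implementation: the 1D signal, the Gaussian real-valued masks, the linearized operator `B` and all function names are assumptions made for illustration. The parameters `tol` and `max_iter` play the role of the two CG controls mentioned above.

```python
import numpy as np

def forward(x, masks):
    """A x: orthonormal FFT of each masked copy of x (output shape K x n)."""
    return np.fft.fft(masks * x, axis=-1, norm="ortho")

def adjoint(z, masks):
    """A^H z: inverse FFT of each row, conjugate masks re-applied, summed over K."""
    return np.sum(np.conj(masks) * np.fft.ifft(z, axis=-1, norm="ortho"), axis=0)

def make_split_operators(v, masks):
    """Linear map u -> Re(conj(A v) * A u) and its transpose, for fixed v."""
    c = forward(v, masks)
    B = lambda u: np.real(np.conj(c) * forward(u, masks))
    Bt = lambda r: np.real(adjoint(c * r, masks))
    return B, Bt

def conjugate_gradient(apply_M, b, tol=1e-10, max_iter=500):
    """Solve M x = b for symmetric positive definite M given only x -> M x."""
    x = np.zeros_like(b)
    r = b.copy()
    p = r.copy()
    rs = r @ r
    b_norm = np.linalg.norm(b)
    n_iter = 0
    while n_iter < max_iter and np.sqrt(rs) > tol * b_norm:
        Mp = apply_M(p)
        alpha = rs / (p @ Mp)
        x += alpha * p
        r -= alpha * Mp
        rs_new = r @ r
        p = r + (rs_new / rs) * p
        rs = rs_new
        n_iter += 1
    return x, n_iter

rng = np.random.default_rng(0)
n, K = 64, 4
x_true = rng.standard_normal(n)
masks = rng.standard_normal((K, n))        # assumed real Gaussian masks
y = np.abs(forward(x_true, masks)) ** 2    # noiseless intensity measurements

# One update with the other split variable fixed at the true signal.
B, Bt = make_split_operators(x_true, masks)
u, n_iter = conjugate_gradient(lambda p: Bt(B(p)), Bt(y))
rel_err = np.linalg.norm(u - x_true) / np.linalg.norm(x_true)
```

Nothing of size n x n is ever formed: each CG iteration costs a handful of FFTs per mask, and the memory footprint is dominated by `masks` and a few length-n vectors. Raising `tol` or lowering `max_iter` trades accuracy of the inner solve for speed.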

Our experiments show that given an adequate number of observations, appropriate types of masks and a good initialization, the proposed algorithm can reconstruct any real signal.

However, it must be highlighted that the analysis and implementation of the presented algorithm concern real signals only. Both the algorithmic implementation and the theoretical analysis would be fundamentally different in the case of complex signals. This precludes the use of the method, in the form presented in this paper, in most practical Phase Retrieval applications.

The expansion of this method to its complex signal equivalent and the mapping of the iterations of Algorithm 1, or of its complex equivalent, onto a Neural Network architecture based on deep unrolling remain future research directions.

5. Conclusions

In this study, an analytical method to address the problem of Phase Retrieval was developed. The method, which belongs to the family of alternating minimization methods, employs an algorithm that iteratively estimates two separate variables via convex optimizations to reach a solution. The discovery of special geometric relations between the split variables led to the introduction of a new algorithmic step, which amounts to replacing the variables with their average after the convex estimations at each iteration. A theoretical exploration of the convergence properties of the algorithm in the presence of the recombination step was conducted, showing that the algorithm converges under some mild initialization assumptions. Experiments show that the inclusion of the recombination step leads to significantly faster convergence than existing analytical methods, with equivalent or improved accuracy, resilience to noise and ability to converge under varying conditions. The presented analysis involves real signals only. The expansion to complex signals and the development of a deep unrolling version of the proposed algorithm are directions of future research.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/jimaging10100249/s1. Table S1: Example of evolution of variables after alternatingly estimating a and b. Table S2: Example of evolution of variables at each iteration of the algorithm. The values shown are before averaging the vectors. Figure S1: Values of objective function and normalized norms of vectors d and e after each step of the algorithm. The error norm decreases faster as the algorithm progresses.

Author Contributions

Conceptualization, P.N., P.R.M., H.N., G.T. and A.K.K.; methodology, P.N., P.R.M., H.N., G.T. and A.K.K.; software, P.N.; validation, P.N., P.R.M., H.N., G.T. and A.K.K.; formal analysis, P.N., P.R.M., H.N., G.T. and A.K.K.; writing—original draft preparation, P.N., P.R.M.; writing—review and editing, P.N., P.R.M., H.N., G.T. and A.K.K.; supervision, H.N., G.T. and A.K.K. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data and software can be provided by the authors upon request.

Conflicts of Interest

Author Pablo Ruiz Mataran was employed by the company Charboost. Author Petros Nyfantis was employed by the company PCCWGlobal. The remaining authors declare no conflicts of interest.

References

1. Tian, L.; Waller, L. 3D intensity and phase imaging from light field measurements in an LED array microscope. Optica 2015, 2, 104–111.

2. Harrison, R.W. Phase problem in crystallography. J. Opt. Soc. Am. A 1993, 10, 1046–1055.

3. Pfeiffer, F.; Weitkamp, T.; Bunk, O.; David, C. Phase retrieval and differential phase-contrast imaging with low-brilliance X-ray sources. Nat. Phys. 2006, 2, 258.

4. Miao, J.; Ishikawa, T.; Johnson, B.; Anderson, E.H.; Lai, B.; Hodgson, K.O. High Resolution 3D X-Ray Diffraction Microscopy. Phys. Rev. Lett. 2002, 89, 088303.

5. Miao, J.; Ishikawa, T.; Shen, Q.; Earnest, T. Extending X-ray Crystallography to Allow the Imaging of Noncrystalline Materials, Cells, and Single Protein Complexes. Annu. Rev. Phys. Chem. 2008, 59, 387–410.

6. Chai, A.; Moscoso, M.; Papanicolaou, G. Array imaging using intensity-only measurements. Inverse Probl. 2011, 27, 015005.

7. Ahmed, A.; Recht, B.; Romberg, J.K. Blind Deconvolution Using Convex Programming. IEEE Trans. Inf. Theory 2014, 60, 1711–1732.

8. Balan, R.; Casazza, P.; Edidin, D. On signal reconstruction without phase. Appl. Comput. Harmon. Anal. 2006, 20, 345–356.

9. Demanet, L.; Jugnon, V. Convex Recovery From Interferometric Measurements. IEEE Trans. Comput. Imaging 2017, 3, 282–295.

10. Fienup, C.; Dainty, J. Phase retrieval and image reconstruction for astronomy. Image Recover. Theory Appl. 1987, 231, 275.

11. Candès, E.J.; Strohmer, T.; Voroninski, V. PhaseLift: Exact and Stable Signal Recovery from Magnitude Measurements via Convex Programming. arXiv 2011, arXiv:1109.4499.

12. Candès, E.J.; Eldar, Y.C.; Strohmer, T.; Voroninski, V. Phase Retrieval via Matrix Completion. SIAM Rev. 2015, 57, 225–251.

13. Gerchberg, R.; Saxton, W.O. A practical algorithm for the determination of phase from image and diffraction plane pictures. SPIE Milest. Ser. MS 1971, 35, 237–250.

14. Maiden, A.; Rodenburg, J.M. An improved ptychographical phase retrieval algorithm for diffractive imaging. Ultramicroscopy 2009, 109, 1256–1262.

15. Thibault, P.; Dierolf, M.; Menzel, A.; Bunk, O.; David, C.; Pfeiffer, F. High-Resolution Scanning X-ray Diffraction Microscopy. Science 2008, 321, 379–382.

16. Candès, E.J.; Wakin, M.B. An Introduction to Compressive Sampling. IEEE Signal Process. Mag. 2008, 25, 21–30.

17. Candès, E.J.; Recht, B. Exact Matrix Completion via Convex Optimization. Found. Comput. Math. 2009, 9, 717–772.

18. Eldar, Y.C.; Mendelson, S. Phase retrieval: Stability and recovery guarantees. Appl. Comput. Harmon. Anal. 2014, 36, 473–494.

19. Wang, G.; Zhang, L.; Giannakis, G.B.; Akçakaya, M.; Chen, J. Sparse Phase Retrieval via Truncated Amplitude Flow. IEEE Trans. Signal Process. 2018, 66, 479–491.

20. Bandeira, A.S.; Cahill, J.; Mixon, D.G.; Nelson, A.A. Saving phase: Injectivity and stability for phase retrieval. Appl. Comput. Harmon. Anal. 2014, 37, 106–125.

21. Waldspurger, I.; d’Aspremont, A.; Mallat, S. Phase Recovery, MaxCut and Complex Semidefinite Programming. Math. Program. 2012, 149, 47–81.

22. Candès, E.J.; Li, X.; Soltanolkotabi, M. Phase retrieval from coded diffraction patterns. Appl. Comput. Harmon. Anal. 2015, 39, 277–299.

23. Chen, Y.; Candès, E.J. Solving Random Quadratic Systems of Equations is Nearly as Easy as Solving Linear Systems. In Proceedings of the 28th International Conference on Neural Information Processing Systems - Volume 1, Montreal, QC, Canada, 7–12 December 2015; MIT Press: Cambridge, MA, USA, 2015. NIPS’15. pp. 739–747.

24. Bostan, E.; Soltanolkotabi, M.; Ren, D.; Waller, L. Accelerated Wirtinger Flow for Multiplexed Fourier Ptychographic Microscopy. In Proceedings of the 2018 25th IEEE International Conference on Image Processing (ICIP), Athens, Greece, 7–10 October 2018; pp. 3823–3827.

25. Zhang, H.; Chi, Y.; Liang, Y. Provable Non-convex Phase Retrieval with Outliers: Median Truncated Wirtinger Flow. In Proceedings of the International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016.

26. Cai, T.; Li, X.; Ma, Z. Optimal Rates of Convergence for Noisy Sparse Phase Retrieval via Thresholded Wirtinger Flow. Ann. Stat. 2015, 44, 2221–2251.

27. Kong, L.; Yan, A. Robust amplitude method with L1/2-regularization for compressive phase retrieval. J. Ind. Manag. Optim. 2023, 19, 7686–7702.

28. Zhang, Q.; Liu, D.; Hu, F.; Li, A.; Cheng, H. Median momentum reweighted amplitude flow for phase retrieval with arbitrary corruption. J. Mod. Opt. 2021, 68, 374–381.

29. Shechtman, Y.; Beck, A.; Eldar, Y.C. GESPAR: Efficient phase retrieval of sparse signals. IEEE Trans. Signal Process. 2014, 62, 928–938.

30. Netrapalli, P.; Jain, P.; Sanghavi, S. Phase retrieval using alternating minimization. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 5–8 December 2013; pp. 2796–2804.

31. Waldspurger, I. Phase Retrieval With Random Gaussian Sensing Vectors by Alternating Projections. IEEE Trans. Inf. Theory 2016, 64, 3301–3312.

32. Mukherjee, S.; Shit, S.; Seelamantula, C.S. Phasesplit: A Variable Splitting Framework for Phase Retrieval. In Proceedings of the 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 4709–4713.

33. Bahmani, S.; Romberg, J. Phase retrieval meets statistical learning theory: A flexible convex relaxation. arXiv 2016, arXiv:1610.04210.

34. Goldstein, T.; Studer, C. PhaseMax: Convex Phase Retrieval via Basis Pursuit. arXiv 2016.

35. Vaswani, N.; Nayer, S.; Eldar, Y.C. Low-Rank Phase Retrieval. IEEE Trans. Signal Process. 2017, 65, 4059–4074.

36. Horstmeyer, R.; Chen, R.Y.; Ou, X.; Ames, B.; Tropp, J.A.; Yang, C. Solving ptychography with a convex relaxation. New J. Phys. 2015, 17, 053044.

37. Wu, T.T.; Huang, C.; Gu, X.; Niu, J.; Zeng, T. Finding robust minimizer for non-convex phase retrieval. Inverse Probl. Imaging 2023, 18, 286–310.

38. Sun, J.; Qu, Q.; Wright, J. A geometric analysis of phase retrieval. In Proceedings of the 2016 IEEE International Symposium on Information Theory (ISIT), Barcelona, Spain, 10–15 July 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 2379–2383.

39. Schniter, P.; Rangan, S. Compressive Phase Retrieval via Generalized Approximate Message Passing. IEEE Trans. Signal Process. 2015, 63, 1043–1055.

40. Sinha, A.; Lee, J.; Li, S.; Barbastathis, G. Lensless computational imaging through deep learning. Optica 2017, 4, 1117–1125.

41. Deng, M.; Li, S.; Zhang, Z.; Kang, I.; Fang, N.X.; Barbastathis, G. On the interplay between physical and content priors in deep learning for computational imaging. Opt. Express 2020, 28, 24152–24170.

42. Metzler, C.A.; Schniter, P.; Veeraraghavan, A.; Baraniuk, R.G. prDeep: Robust Phase Retrieval with Flexible Deep Neural Networks. arXiv 2018, arXiv:1803.00212.

43. Jagatap, G.; Hegde, C. Algorithmic guarantees for inverse imaging with untrained network priors. In Proceedings of the 33rd International Conference on Neural Information Processing Systems; Curran Associates Inc.: Red Hook, NY, USA, 2019.

44. Chen, Q.; Huang, D.; Chen, R. Fourier ptychographic microscopy with untrained deep neural network priors. Opt. Express 2022, 30, 39597–39612.

45. Wu, X.; Wu, Z.; Shanmugavel, S.C.; Yu, H.Z.; Zhu, Y. Physics-informed neural network for phase imaging based on transport of intensity equation. Opt. Express 2022, 30, 43398–43416.

46. Yang, Y.; Lian, Q.; Zhang, X.; Zhang, D.; Zhang, H. HIONet: Deep priors based deep unfolded network for phase retrieval. Digit. Signal Process. 2023, 132, 103797.

47. Zhang, J.; Xu, T.; Shen, Z.; Qiao, Y.; Zhang, Y. Fourier ptychographic microscopy reconstruction with multiscale deep residual network. Opt. Express 2019, 27, 8612–8625.

48. Rivenson, Y.; Zhang, Y.; Günaydın, H.; Teng, D.; Ozcan, A. Phase recovery and holographic image reconstruction using deep learning in neural networks. Light. Sci. Appl. 2018, 7, 17141.

49. Zhang, Y.; Noack, M.A.; Vagovic, P.; Fezzaa, K.; Garcia-Moreno, F.; Ritschel, T.; Villanueva-Perez, P. PhaseGAN: A deep-learning phase-retrieval approach for unpaired datasets. Opt. Express 2021, 29, 19593–19604.

50. Huang, L.; Chen, H.; Liu, T.; Ozcan, A. Self-supervised learning of hologram reconstruction using physics consistency. Nat. Mach. Intell. 2023, 8, 895–907.

51. Wang, Y.; Sun, X.; Fleischer, J.W. When deep denoising meets iterative phase retrieval. In Proceedings of the 37th International Conference on Machine Learning, Virtual, 13–18 July 2020; Volume 28.

52. Hyder, R.; Cai, Z.; Asif, M.S. Data-driven illumination patterns for coded diffraction imaging. Sensors 2022, 24, 9964.

53. Wang, F.; Bian, Y.; Wang, H.; Lyu, M.; Pedrini, G.; Osten, W.; Barbastathis, G.; Situ, G. Phase imaging with an untrained neural network. Light. Sci. Appl. 2020, 9, 77.

54. Song, L.; Lam, E.Y. Phase retrieval with a dual recursive scheme. Opt. Express 2023, 31, 10386–10400.

55. Wang, Z.; Zheng, S.; Ding, Z.; Guo, C. Dual-constrained physics-enhanced untrained neural network for lensless imaging. J. Opt. Soc. Am. A 2024, 41, 165–173.

56. Zhang, J.; Xu, T.; Shen, Z.; Qiao, Y.; Zhang, Y. ADMM based Fourier phase retrieval with untrained generative prior. J. Comput. Appl. Math. 2024, 444, 115786.

57. Wang, K.; Song, L.; Wang, C.; Ren, Z.; Zhao, G.; Dou, J.; Di, J.; Barbastathis, G.; Zhou, R.; Zhao, J.; et al. On the use of deep learning for phase recovery. arXiv 2023, arXiv:2308.00942.

58. Liu, Y.; Chen, B.; Li, E.; Wang, J.; Marcelli, A.; Wilkins, S.; Ming, H.; Tian, Y.; Nugent, K.; Zhu, P.; et al. Phase retrieval in X-ray imaging based on using structured illumination. Phys. Rev. A 2008, 78, 023817.

59. Rodenburg, J.M. Ptychography and related diffractive imaging methods. Adv. Imaging Electron. Phys. 2008, 150, 87–184.

60. Recht, B.; Fazel, M.; Parrilo, P.A. Guaranteed Minimum-Rank Solutions of Linear Matrix Equations via Nuclear Norm Minimization. SIAM Rev. 2010, 52, 471–501.

61. Barrett, R.; Berry, M.; Chan, T.F.; Demmel, J.; Donato, J.; Dongarra, J.; Eijkhout, V.; Pozo, R.; Romine, C.; Van der Vorst, H. Templates for the solution of linear systems: Building blocks for iterative methods. Math. Comput. 1996, 64, 9.

62. Guerrero, A.; Pinilla, S.; Arguello, H. Phase Recovery Guarantees From Designed Coded Diffraction Patterns in Optical Imaging. IEEE Trans. Image Process. 2020, 29, 5687–5697.

63. Candès, E.J.; Li, X.; Soltanolkotabi, M. Phase Retrieval via Wirtinger Flow: Theory and Algorithms. IEEE Trans. Inf. Theory 2015, 61, 1985–2007.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).