Artificial Feature Extraction for Estimating State-of-Temperature in Lithium-Ion-Cells Using Various Long Short-Term Memory Architectures

Abstract

1. Introduction

Motivation

2. Theoretical Foundations

2.1. Temperature Behavior in Lithium-Ion-Battery-Cells

2.1.1. Heat Generation

2.1.2. Heat Dissipation

2.2. Time Series Forecast

3. Experimental

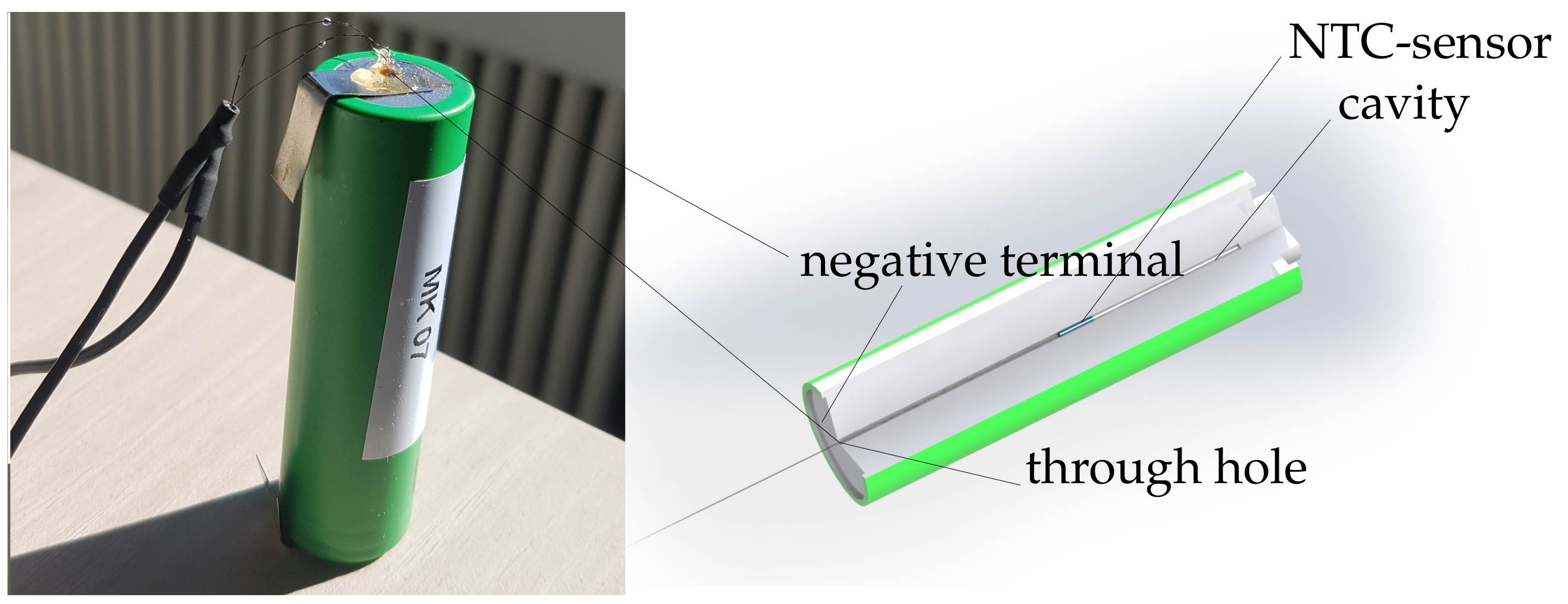

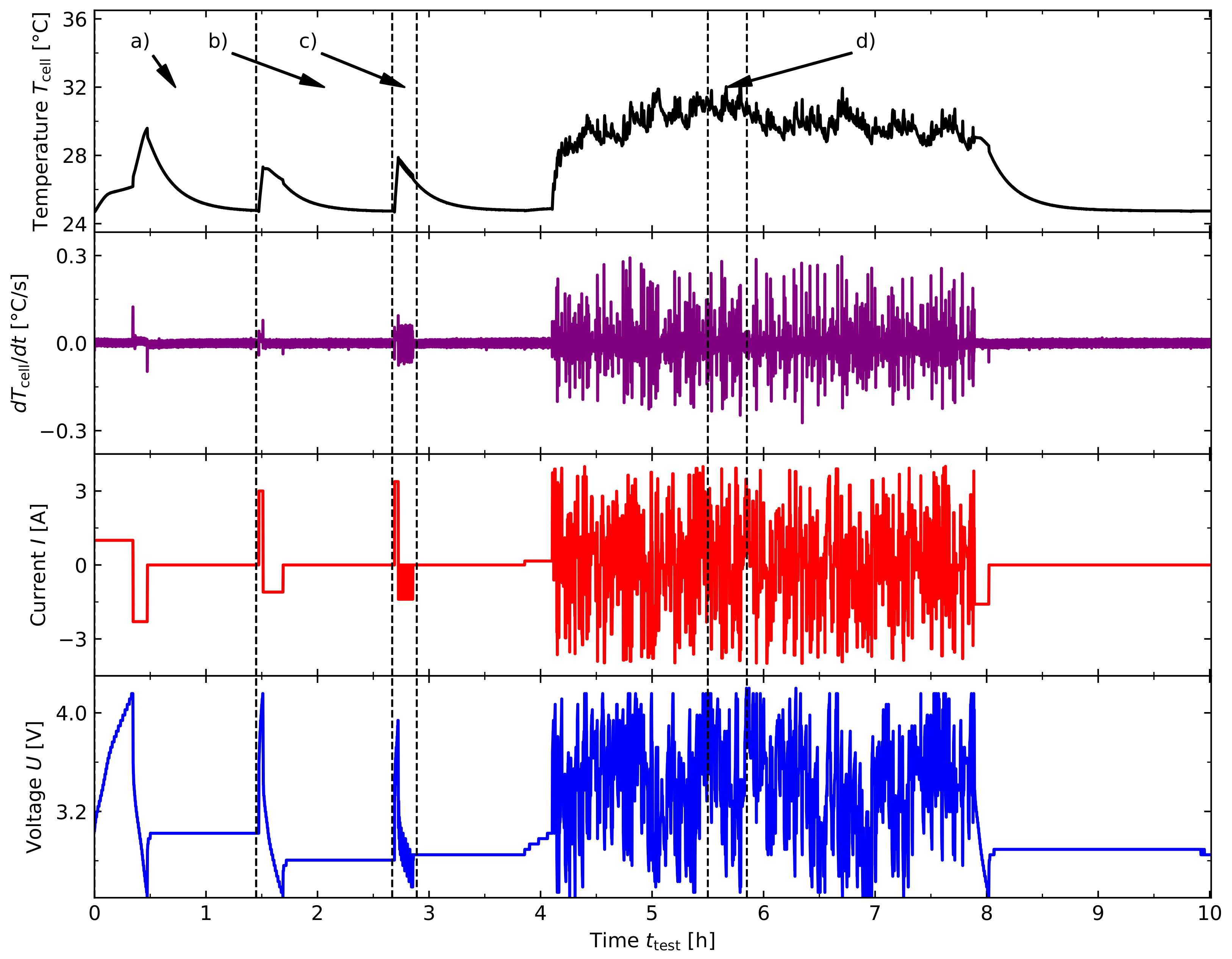

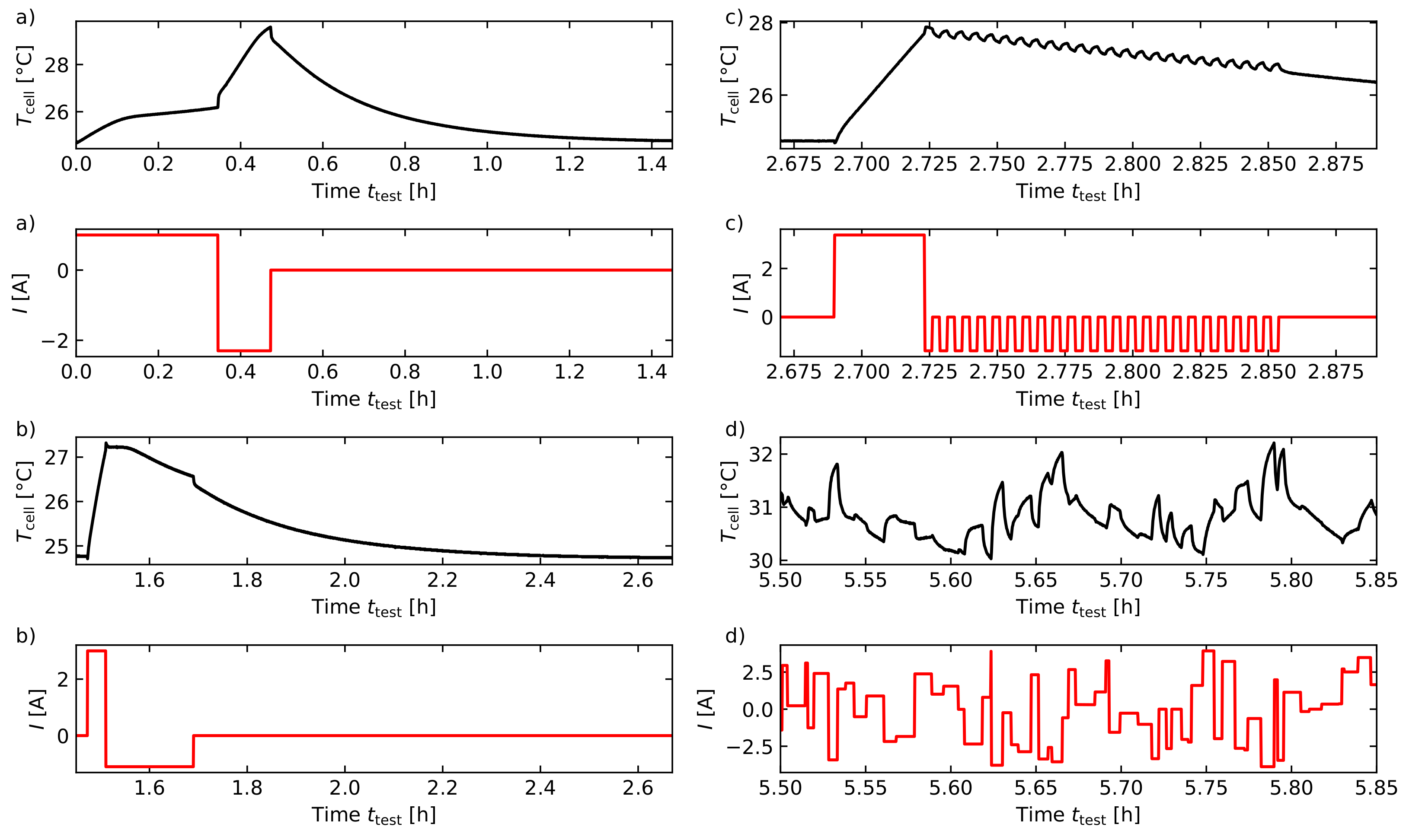

3.1. Method of Measurement

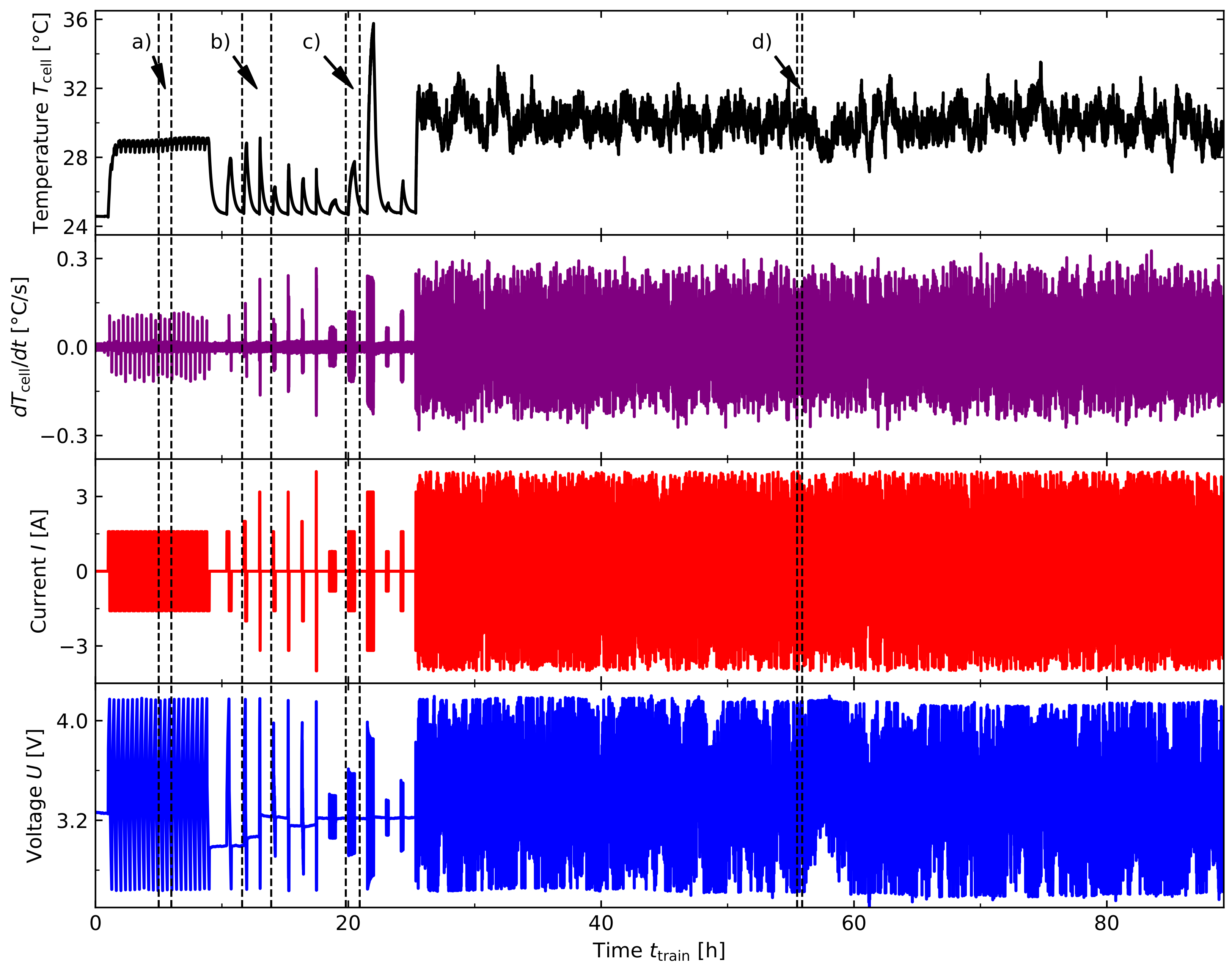

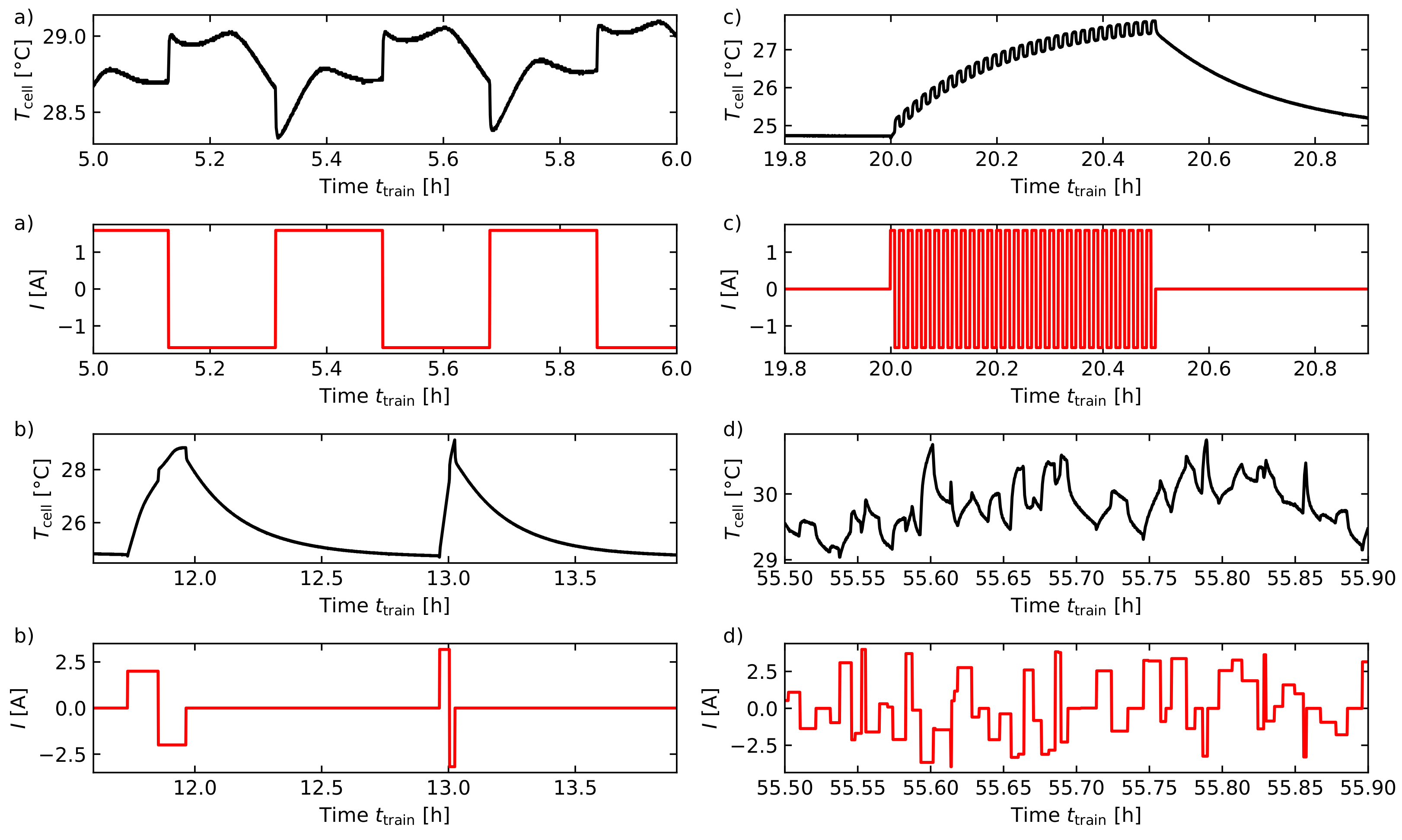

3.2. Current Profile

3.3. Data Preparation

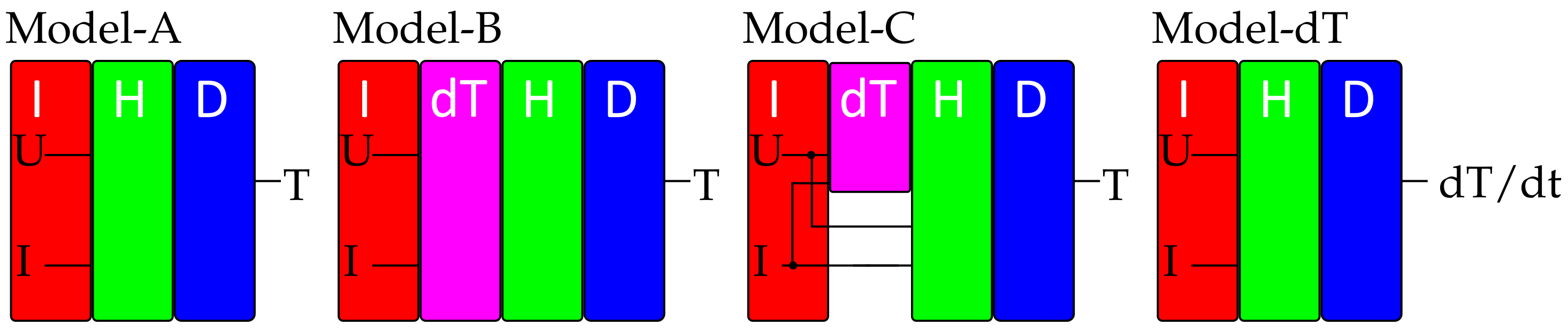

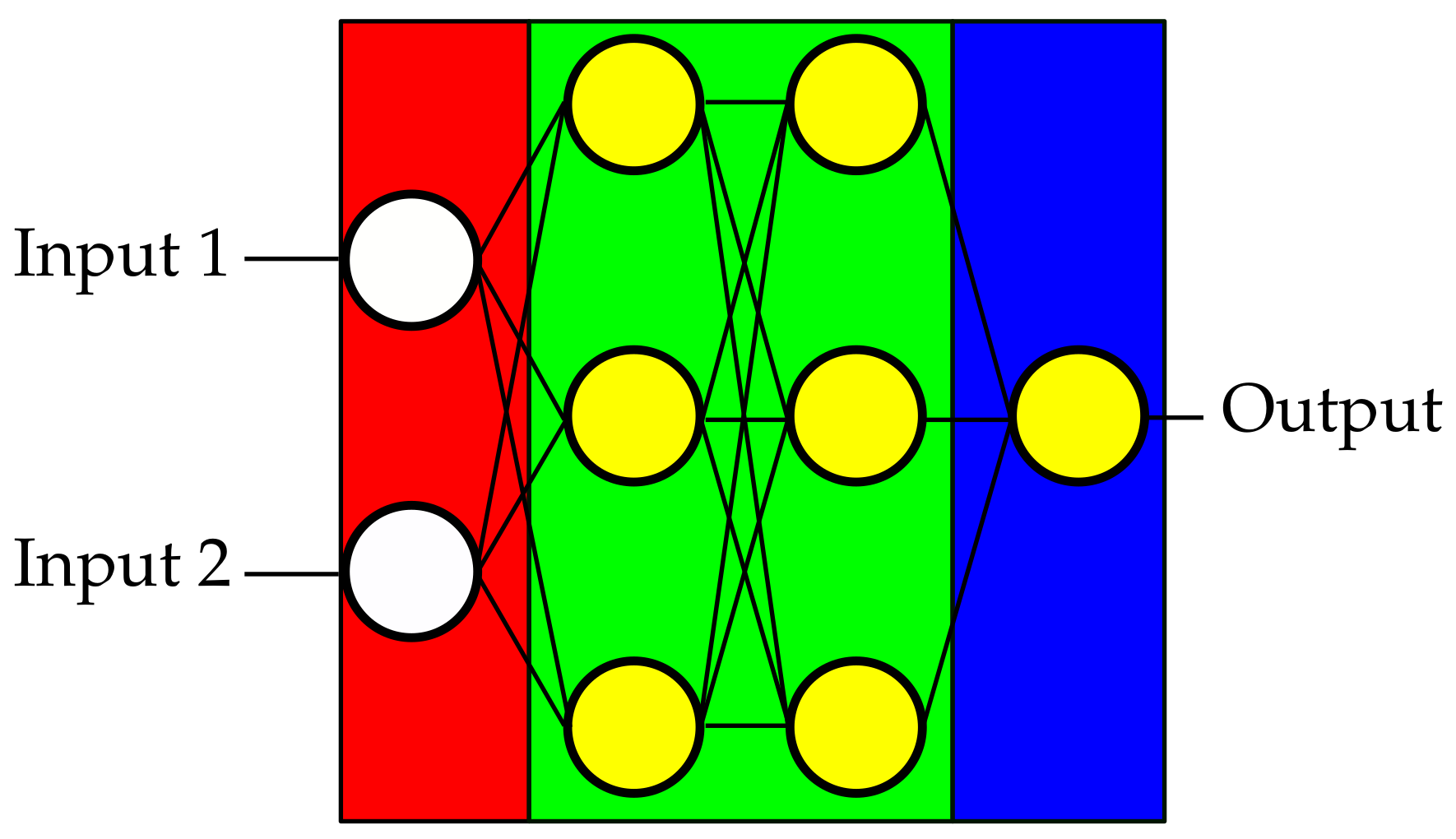

4. ANN-Architectures

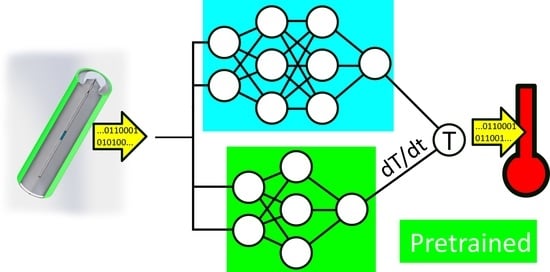

- Hidden block (green) is a function of the number of hidden layers and the number of neurons. Each neuron is an LSTM neuron, and each layer is fully connected as described in Section 2.2.

- dT block (purple) is a fully trained LSTM model built as shown in Figure 7 (Model-dT). It is structured like Model-A; however, it was trained to predict the linear approximation calculated in Section 3.2.

- Output block (blue) is a single LSTM neuron that bundles all the information coming from the hidden block.
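The hidden and output blocks are built from LSTM neurons. To illustrate what a single LSTM unit computes at one time step, the following minimal sketch implements the widely used LSTM formulation with input, forget and output gates for one scalar unit in plain Python. The function names `lstm_step` and `run_sequence`, the parameter layout and any weights passed in are illustrative assumptions; this is not the trained model or implementation used in this study.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic sigmoid used for the gate activations."""
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, params):
    """One time step of a single scalar LSTM unit (illustrative sketch).

    x      -- list of input features at this time step (e.g. current I and voltage U)
    h_prev -- previous hidden state (float)
    c_prev -- previous cell state (float)
    params -- hypothetical dict: gate name -> (input weights, recurrent weight, bias)
    """
    def pre_activation(gate):
        w_x, w_h, b = params[gate]
        return sum(w * xi for w, xi in zip(w_x, x)) + w_h * h_prev + b

    i = sigmoid(pre_activation("input"))         # how much new information to write
    f = sigmoid(pre_activation("forget"))        # how much of the old cell state to keep
    o = sigmoid(pre_activation("output"))        # how much of the cell state to expose
    g = math.tanh(pre_activation("candidate"))   # candidate cell update

    c = f * c_prev + i * g      # new cell state (long-term memory)
    h = o * math.tanh(c)        # new hidden state (output of the unit)
    return h, c


def run_sequence(sequence, params):
    """Feed a sequence of feature vectors through the unit; return the last hidden state."""
    h, c = 0.0, 0.0
    for x in sequence:
        h, c = lstm_step(x, h, c, params)
    return h
```

With all weights set to zero, every gate outputs 0.5 and the candidate update is 0, so the cell state simply halves at each step. Stacking several such units per layer, each receiving all outputs of the previous layer, gives a fully connected LSTM layer.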

- Window size: This is the number of data points the ANN sees at each time step. To estimate the temperature at a given time step, the ANN is fed the data points within the window that ends at that time step. To keep all architectures comparable, the window size is varied only for the base model (Model-A); for Model-B, Model-C and Model-dT, it is kept fixed. If a value must be estimated at a time step for which fewer past data points are available than the window size, the data padding approach is used [34]: the missing values are filled in automatically to create an array with the appropriate dimensions.

- Number of neurons: This value represents the total number of neurons in the hidden block.

- Number of hidden layers: This is the number of hidden layers in the hidden block. The number of neurons in each individual layer l is calculated from the total number of neurons and the number of hidden layers.

- Learning rate: During backpropagation, the weights are adjusted as described in Section 2.2. The learning rate determines the step size by which the weights are adjusted. A large learning rate is unlikely to find the optimal solution, while a small one makes it difficult to converge within a reasonable time frame. This trade-off is especially important when an early stopping approach is used. In addition, this study uses the Adam (adaptive moment estimation) optimization algorithm to adapt the learning rate dynamically during the training process [35].

- Drop rate: This parameter counteracts overfitting by stochastically removing neurons from the computation during training. For example, a drop rate of 0.1 means that every neuron has a 10% chance of being bypassed.
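The window-size and padding behavior described in the list above can be sketched as follows. This is a minimal illustration, assuming zero pre-padding (missing rows are added at the start of the window) and a window that ends at the time step being estimated; the function name `make_window` and the zero fill value are assumptions, not necessarily the exact scheme used in this study [34].

```python
from typing import List, Sequence


def make_window(series: Sequence[Sequence[float]], t: int, window: int,
                pad_value: float = 0.0) -> List[List[float]]:
    """Return the `window` feature vectors ending at time step t (inclusive).

    If fewer than `window` data points exist up to t (i.e. near the start of
    the measurement), the missing leading rows are filled with `pad_value` so
    that every input has the same shape (window x n_features).
    """
    if window < 1:
        raise ValueError("window must be >= 1")
    if not 0 <= t < len(series):
        raise IndexError("t is outside the series")
    n_features = len(series[0])
    start = t - window + 1
    # Real data points available inside the window.
    rows = [list(series[k]) for k in range(max(start, 0), t + 1)]
    # Pre-pad with constant rows until the window is full.
    n_missing = window - len(rows)
    padding = [[pad_value] * n_features for _ in range(n_missing)]
    return padding + rows
```

For example, with three hypothetical (I, U) samples `series = [[1.0, 3.7], [2.0, 3.6], [3.0, 3.5]]`, `make_window(series, 0, 3)` returns two rows of zeros followed by the first sample. Zero pre-padding is only one option; post-padding or another fill value would also yield fixed-size arrays, and [34] compares pre- and post-padding for LSTMs.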
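To make the role of the learning rate and of Adam [35] more concrete, the sketch below applies the Adam update rule to a single scalar weight. The default values β1 = 0.9, β2 = 0.999 and ε = 1e-8 are those proposed in [35]; the function name `adam_minimize`, the toy quadratic loss and the step counts are illustrative assumptions and are unrelated to the LSTM training in this study.

```python
import math


def adam_minimize(grad, w0, lr=0.001, beta1=0.9, beta2=0.999, eps=1e-8, steps=1000):
    """Minimize a 1-D function with Adam, given its gradient function `grad`."""
    w = w0
    m = 0.0  # exponential moving average of the gradient (first moment)
    v = 0.0  # exponential moving average of the squared gradient (second moment)
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        # Bias correction compensates for initializing m and v at zero.
        m_hat = m / (1 - beta1 ** t)
        v_hat = v / (1 - beta2 ** t)
        # Step magnitude is on the order of lr, scaled per parameter by
        # m_hat / sqrt(v_hat); this is how Adam adapts the effective step size.
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w
```

For the toy loss (w − 3)², with gradient `lambda w: 2 * (w - 3.0)`, the very first update from w = 0 with `lr=0.1` moves w by almost exactly 0.1, regardless of how large the gradient is. This illustrates why the learning rate directly bounds the step size, while the moment estimates decide its direction and relative scale.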
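The drop-rate example above (a 10% chance of bypassing each neuron) can be sketched as follows. This minimal version uses the common "inverted dropout" variant, which rescales the surviving activations during training so that their expected value is unchanged; whether the framework used in this study rescales in exactly this way is an assumption here, as are the function name `dropout` and the seeded random generator.

```python
import random
from typing import List, Optional


def dropout(activations: List[float], rate: float, training: bool = True,
            rng: Optional[random.Random] = None) -> List[float]:
    """Inverted dropout: zero each activation with probability `rate` during training.

    Surviving activations are scaled by 1 / (1 - rate) so that the expected value
    of each output matches the input; at inference time the input is returned unchanged.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return list(activations)
    rng = rng or random.Random()
    keep = 1.0 - rate
    # rng.random() is uniform in [0, 1), so "< rate" happens with probability `rate`.
    return [0.0 if rng.random() < rate else a / keep for a in activations]
```

With `rate=0.1`, roughly one in ten activations is set to zero in each training pass, and a different random subset is dropped every time, which prevents the network from relying on any single neuron.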

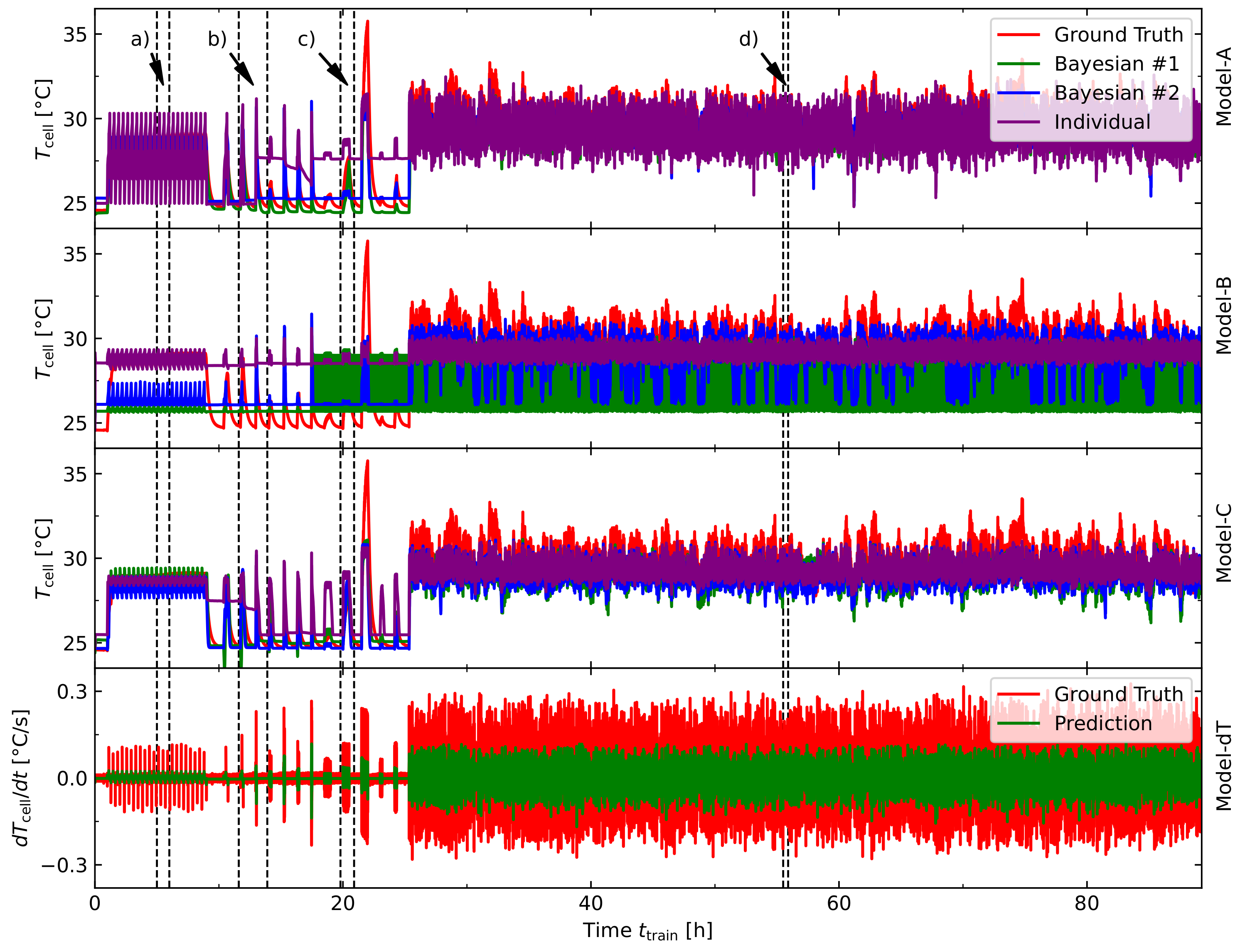

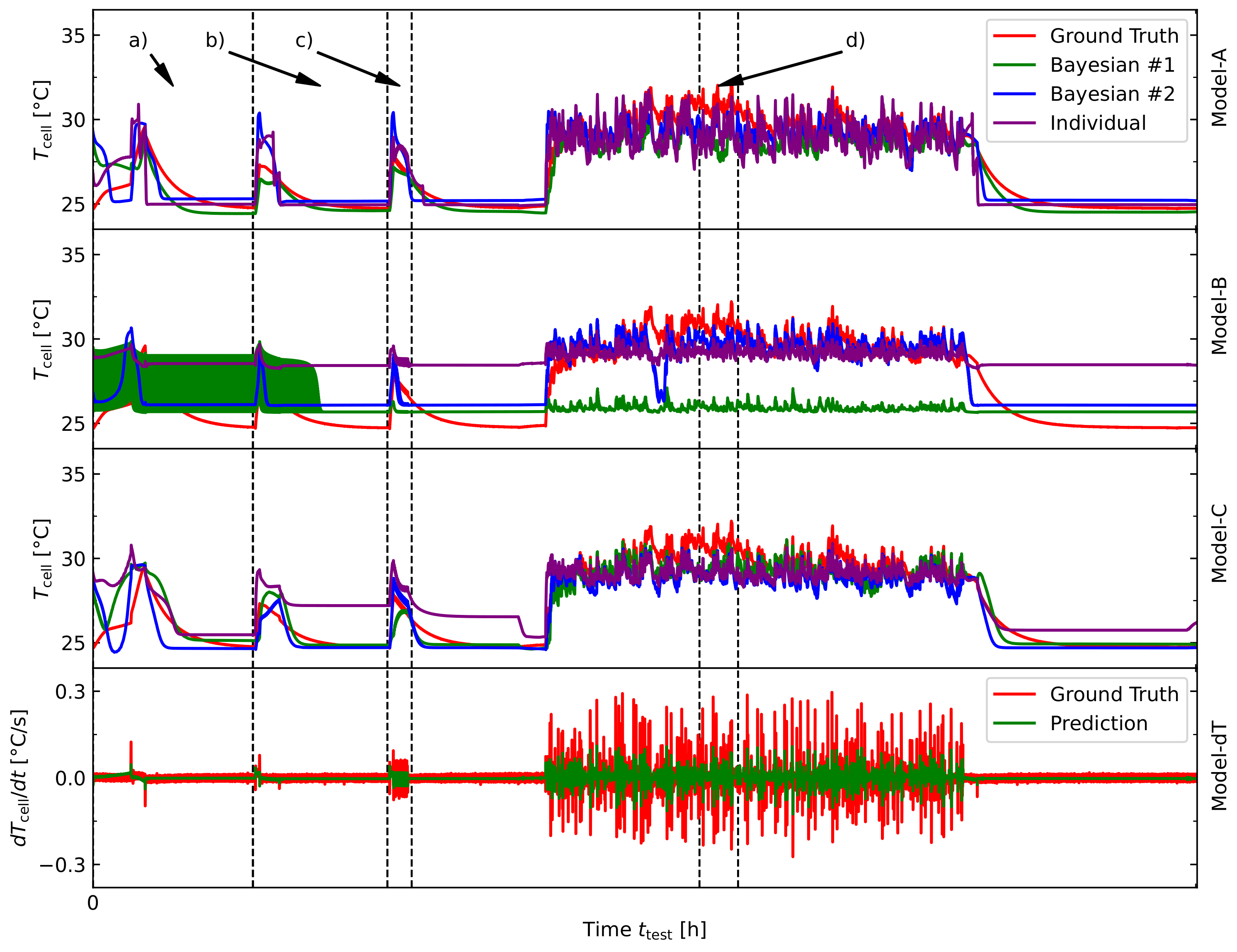

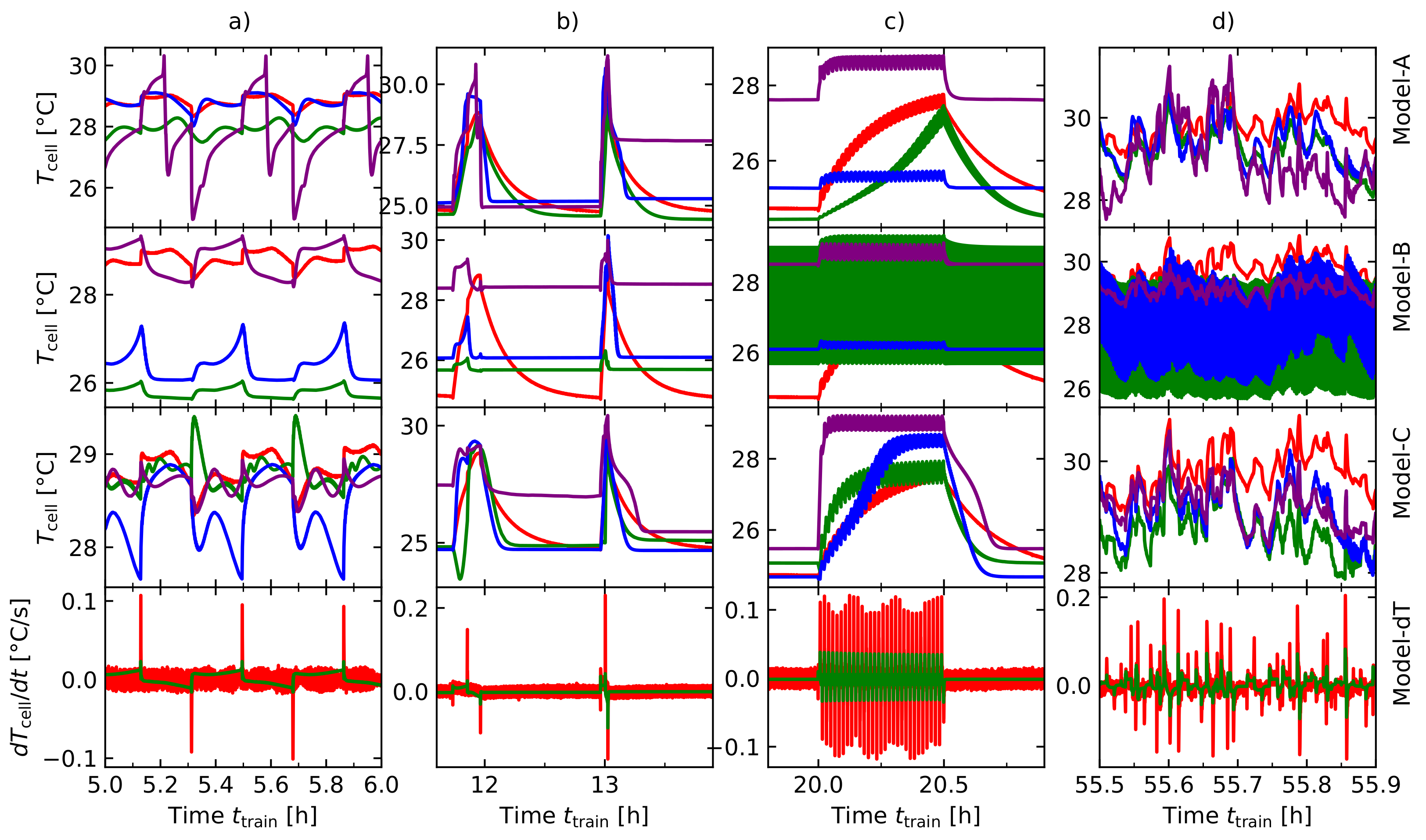

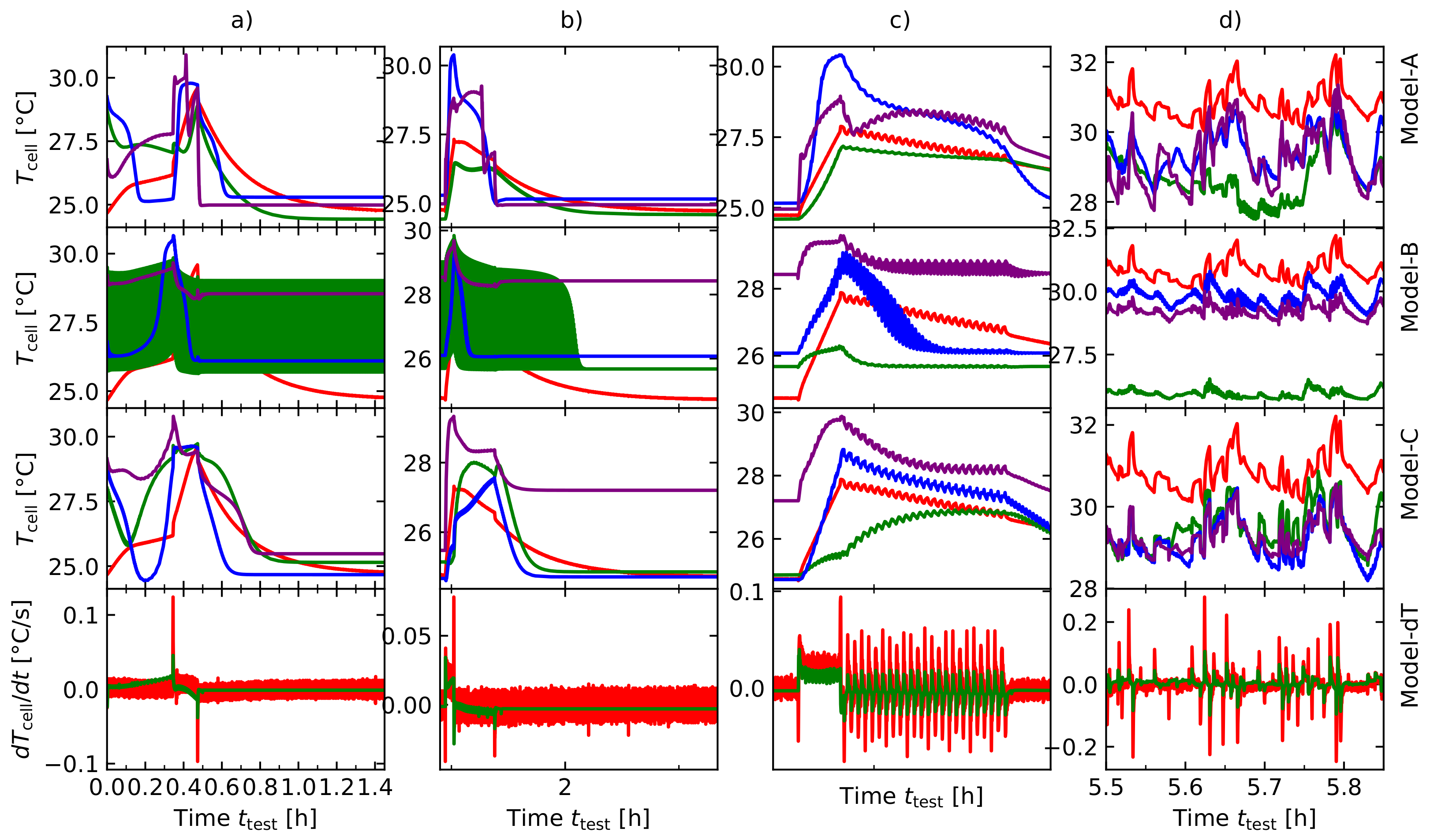

5. Results and Discussion

6. Conclusions and Future Work

- A lithium-ion battery cell (LIBC) was prepared with an internal NTC temperature sensor, with the aim of avoiding the time delay between heat generation and heat dissipation.

- A custom measuring system was designed to track the temperature and to synchronize the temperature data with the data of the battery system.

- Using this approach, a training dataset and a validation dataset were created to investigate three LSTM architectures. A hyperparameter analysis was carried out for each model to find the optimal structure of each sub-model. Model-A is the base model; Model-B uses an additional dT layer that was trained separately to forecast the linear approximation; and the third model, Model-C, benefits from both approaches (Model-A and Model-B). Model-C outperformed Model-A and Model-B, which shows that artificial feature extraction is a useful method for improving model accuracy in the non-linear state-of-temperature prediction of LIBCs. Compared with the base model, which uses only the current profile I and its corresponding voltage response U, this method increased the accuracy on both the training data and the validation data.

- Broader temperature range;

- Variable ambient temperature;

- Implementing real life drive cycles.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Lyu, Y.; Siddique, A.; Hasibul Majid, S.; Biglarbegian, M.; Gadsden, S.; Mahmud, S. Electric vehicle battery thermal management system with thermoelectric cooling. Energy Rep. 2019, 5, 822–827.

- Li, W.; Rentemeister, M.; Badeda, J.; Jöst, D.; Schulte, D.; Sauer, D.U. Digital twin for battery systems: Cloud battery management system with online state-of-charge and state-of-health estimation. J. Energy Storage 2020, 30, 101557.

- Ma, S.; Jiang, M.; Tao, P.; Song, C.; Wu, J.; Wang, J.; Deng, T.; Shang, W. Temperature effect and thermal impact in lithium-ion batteries: A review. Prog. Nat. Sci. Mater. Int. 2018, 28, 653–666.

- Yang, Y.; Huang, X.; Cao, Z.; Chen, G. Thermally conductive separator with hierarchical nano/microstructures for improving thermal management of batteries. Nano Energy 2016, 22, 301–309.

- Cavalheiro, G.; Iriyama, T.; Nelson, G.; Huang, S.; Zhang, G. Effects of Non-Uniform Temperature Distribution on Degradation of Lithium-Ion Batteries. J. Electrochem. Energy Convers. Storage 2019, 17, 021101.

- Fill, A.; Avdyli, A.; Birke, K.P. Online Data-based Cell State Estimation of a Lithium-Ion Battery. In Proceedings of the 2020 2nd IEEE International Conference on Industrial Electronics for Sustainable Energy Systems (IESES), Cagliari, Italy, 1–3 September 2020; Volume 1, pp. 351–356.

- Guo, G.; Long, B.; Cheng, B.; Zhou, S.; Xu, P.; Cao, B. Three-dimensional thermal finite element modeling of lithium-ion battery in thermal abuse application. J. Power Sources 2010, 195, 2393–2398.

- Spinner, N.S.; Love, C.T.; Rose-Pehrsson, S.L.; Tuttle, S.G. Expanding the Operational Limits of the Single-Point Impedance Diagnostic for Internal Temperature Monitoring of Lithium-ion Batteries. Electrochim. Acta 2015, 174, 488–493.

- Raijmakers, L.; Danilov, D.; Lammeren, J.; Lammers, M.; Notten, P. Sensorless Battery Temperature Measurements Based on Electrochemical Impedance Spectroscopy. J. Power Sources 2013, 247, 539–544.

- Wang, S.; Fernandez, C.; Yu, C.; Fan, Y.; Cao, W.; Stroe, D.I. A novel charged state prediction method of the lithium ion battery packs based on the composite equivalent modeling and improved splice Kalman filtering algorithm. J. Power Sources 2020, 471, 228450.

- Thomas, K.E.; Newman, J. Thermal Modeling of Porous Insertion Electrodes. J. Electrochem. Soc. 2003, 150, A176.

- Zhang, J.; Huang, J.; Li, Z.; Wu, B.; Nie, Z.; Sun, Y.; An, F.; Wu, N. Comparison and validation of methods for estimating heat generation rate of large-format lithium-ion batteries. J. Therm. Anal. Calorim. 2014, 117, 447–461.

- Severson, K.A.; Attia, P.M.; Jin, N.; Perkins, N.; Jiang, B.; Yang, Z.; Chen, M.H.; Aykol, M.; Herring, P.K.; Fraggedakis, D.; et al. Data-driven prediction of battery cycle life before capacity degradation. Nat. Energy 2019, 4, 383–391.

- Yang, F.; Li, W.; Li, C.; Miao, Q. State-of-charge estimation of lithium-ion batteries based on gated recurrent neural network. Energy 2019, 175, 66–75.

- Li, P.; Zhang, Z.; Xiong, Q.; Ding, B.; Hou, J.; Luo, D.; Rong, Y.; Li, S. State-of-health estimation and remaining useful life prediction for the lithium-ion battery based on a variant long short term memory neural network. J. Power Sources 2020, 459, 228069.

- Kleiner, J.; Stuckenberger, M.; Komsiyska, L.; Endisch, C. Real-time core temperature prediction of prismatic automotive lithium-ion battery cells based on artificial neural networks. J. Energy Storage 2021, 39, 102588.

- Feng, F.; Teng, S.; Liu, K.; Xie, J.; Xie, Y.; Liu, B.; Li, K. Co-estimation of lithium-ion battery state of charge and state of temperature based on a hybrid electrochemical-thermal-neural-network model. J. Power Sources 2020, 455, 227935.

- Zhu, S.; He, C.; Zhao, N.; Sha, J. Data-driven analysis on thermal effects and temperature changes of lithium-ion battery. J. Power Sources 2021, 482, 228983.

- Wu, B.; Han, S.; Shin, K.G.; Lu, W. Application of artificial neural networks in design of lithium-ion batteries. J. Power Sources 2018, 395, 128–136.

- Zhu, C.; Li, X.; Song, L.; Xiang, L. Development of a theoretically based thermal model for lithium ion battery pack. J. Power Sources 2013, 223, 155–164.

- Rao, L.; Newman, J. Heat-Generation Rate and General Energy Balance for Insertion Battery Systems. J. Electrochem. Soc. 1997, 144, 2697–2704.

- Abdul-Quadir, Y.; Laurila, T.; Karppinen, J.; Jalkanen, K.; Vuorilehto, K.; Skogström, L.; Paulasto-Kröckel, M. Heat generation in high power prismatic Li-ion battery cell with LiMnNiCoO2 cathode material. Int. J. Energy Res. 2014, 38, 1424–1437.

- Bandhauer, T.; Garimella, S.; Fuller, T. A Critical Review of Thermal Issues in Lithium-Ion Batteries. J. Electrochem. Soc. 2011, 158, R1.

- Thomas, K.E.; Newman, J. Heats of mixing and of entropy in porous insertion electrodes. J. Power Sources 2003, 119–121, 844–849.

- Chen, S.; Wan, C.; Wang, Y. Thermal analysis of lithium-ion batteries. J. Power Sources 2005, 140, 111–124.

- Keil, P.; Jossen, A. Thermal FDM Battery Model. In Proceedings of the 2020 Advanced Battery Power–Kraftwerk Batterie, Münster, Germany, 23–25 March 2012.

- Dongare, A.; Kharde, R.R.; Kachare, A.D. Introduction to Artificial Neural Network. Int. J. Eng. Innov. Technol. (IJEIT) 2012, 2, 189–194.

- Lipton, Z. A Critical Review of Recurrent Neural Networks for Sequence Learning. arXiv 2015, arXiv:1506.00019.

- Bird, J.; Ekart, A.; Buckingham, C.; Faria, D. Evolutionary Optimisation of Fully Connected Artificial Neural Network Topology. In Proceedings of the SAI Computing Conference 2019, Las Vegas, NV, USA, 25–26 April 2019.

- Rumelhart, D.E.; McClelland, J.L. Learning Internal Representations by Error Propagation. In Parallel Distributed Processing: Explorations in the Microstructure of Cognition; Foundations MIT Press: Cambridge, MA, USA, 1987; pp. 992–997.

- Yu, Y.; Si, X.; Hu, C.; Zhang, J. A Review of Recurrent Neural Networks: LSTM Cells and Network Architectures. Neural Comput. 2019, 31, 1235–1270.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Ruan, H.; Sun, B.; Jiang, J.; Zhang, W.; He, X.; Su, X.; Bian, J.; Gao, W. A modified-electrochemical impedance spectroscopy-based multi-time-scale fractional-order model for lithium-ion batteries. Electrochim. Acta 2021, 394, 139066.

- Dwarampudi, M.; Reddy, N.V.S. Effects of padding on LSTMs and CNNs. arXiv 2019, arXiv:1903.07288.

- Kingma, D.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

- Nogueira, F. Bayesian Optimization: Open Source Constrained Global Optimization Tool for Python. Available online: https://github.com/fmfn/BayesianOptimization (accessed on 9 June 2021).

| Optimization | Model | | | | | | |
|---|---|---|---|---|---|---|---|
| Bayesian | A | 8 | 2 | 5 | | | |
| Bayesian | B | 8 | 1 | 5 | | | |
| Bayesian | C | 8 | 1 | 5 | | | |
| Individual | A | 10 | 1 | 28 | | | |
| Individual | B | 8 | 1 | 24 | | | |
| Individual | C | 8 | 1 | 82 | | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kopp, M.; Ströbel, M.; Fill, A.; Pross-Brakhage, J.; Birke, K.P. Artificial Feature Extraction for Estimating State-of-Temperature in Lithium-Ion-Cells Using Various Long Short-Term Memory Architectures. Batteries 2022, 8, 36. https://doi.org/10.3390/batteries8040036

Kopp M, Ströbel M, Fill A, Pross-Brakhage J, Birke KP. Artificial Feature Extraction for Estimating State-of-Temperature in Lithium-Ion-Cells Using Various Long Short-Term Memory Architectures. Batteries. 2022; 8(4):36. https://doi.org/10.3390/batteries8040036

Chicago/Turabian Style: Kopp, Mike, Marco Ströbel, Alexander Fill, Julia Pross-Brakhage, and Kai Peter Birke. 2022. "Artificial Feature Extraction for Estimating State-of-Temperature in Lithium-Ion-Cells Using Various Long Short-Term Memory Architectures" Batteries 8, no. 4: 36. https://doi.org/10.3390/batteries8040036

APA Style: Kopp, M., Ströbel, M., Fill, A., Pross-Brakhage, J., & Birke, K. P. (2022). Artificial Feature Extraction for Estimating State-of-Temperature in Lithium-Ion-Cells Using Various Long Short-Term Memory Architectures. Batteries, 8(4), 36. https://doi.org/10.3390/batteries8040036