BGWL-YOLO: A Lightweight and Efficient Object Detection Model for Apple Maturity Classification Based on the YOLOv11n Improvement

Abstract

1. Introduction

2. Materials and Methods

2.1. Construction of the Datasets

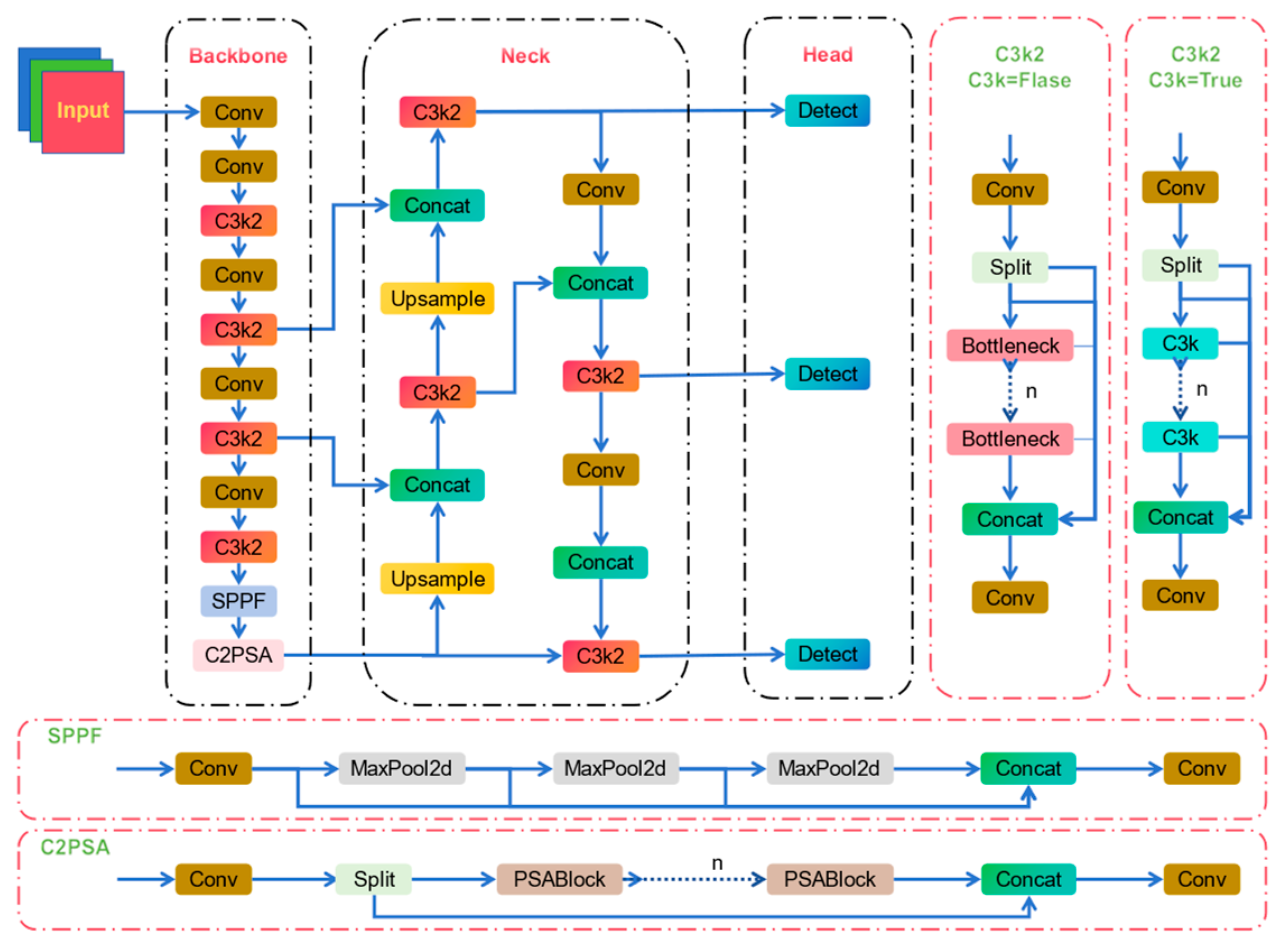

2.2. YOLOv11 Algorithm

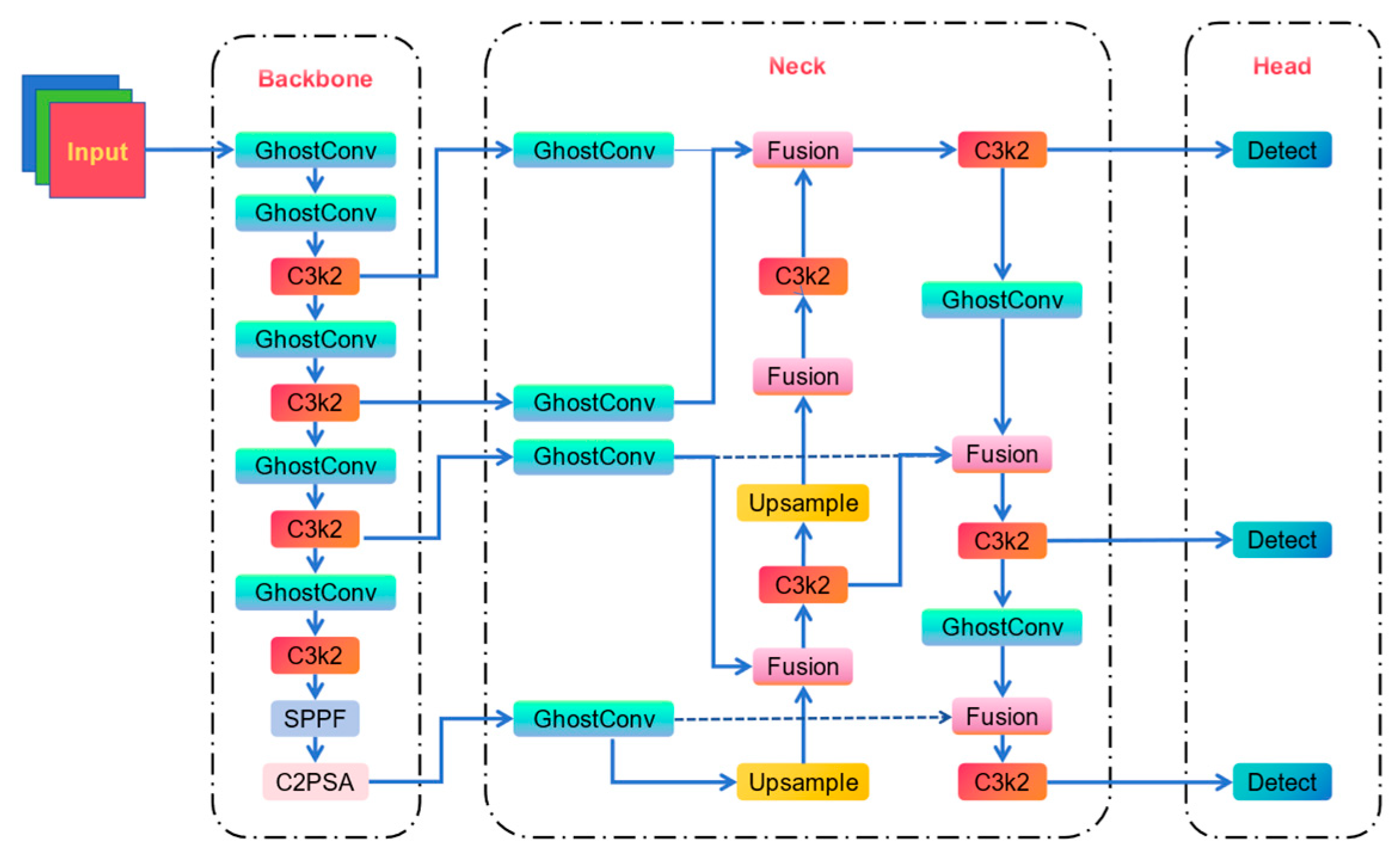

2.3. Lightweight Apple Maturity Detection Model (BGWL-YOLO)

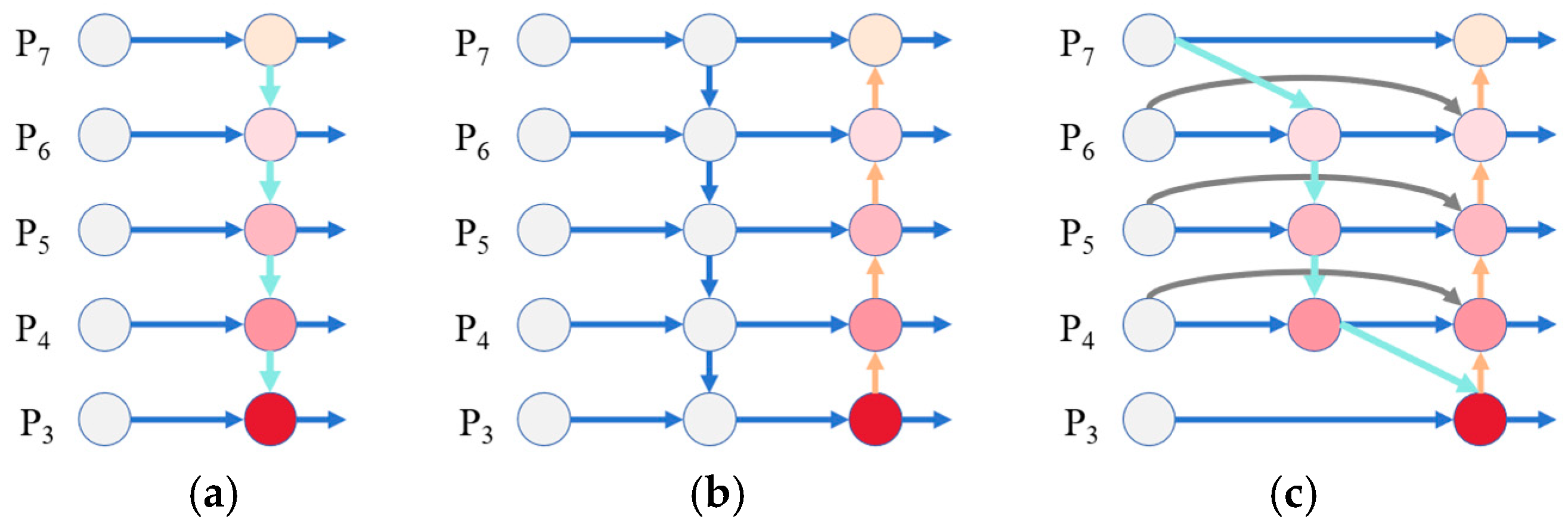

2.3.1. BiFPN Feature Fusion Network

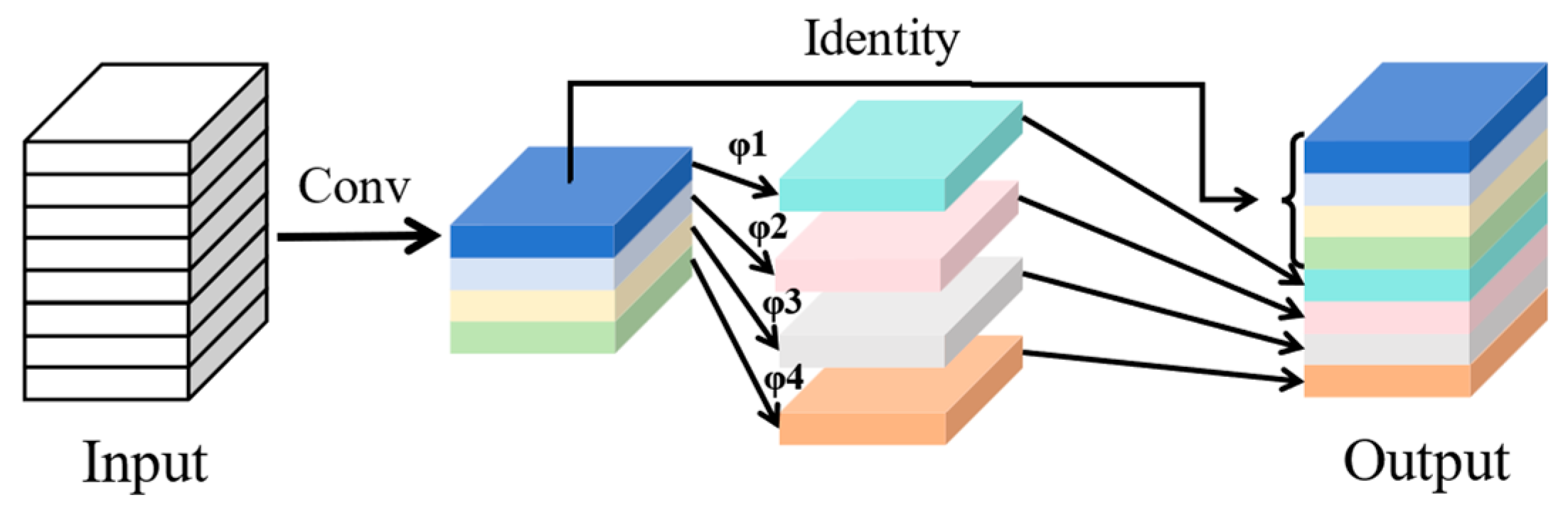
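BiFPN, as introduced for EfficientDet, merges same-shaped feature maps using learnable non-negative weights divided by their sum, a scheme called "fast normalized fusion": O = Σ_i (w_i / (ε + Σ_j w_j)) · I_i. The minimal sketch below applies that weighting to plain Python lists that stand in for flattened feature maps. The function name, ε = 1e-4, and the ReLU clamp on the raw weights come from the EfficientDet formulation. They are assumptions about how the BiFPN in BGWL-YOLO is configured, not details confirmed by this paper.

```python
from typing import List, Sequence

def fast_normalized_fusion(inputs: Sequence[Sequence[float]],
                           raw_weights: Sequence[float],
                           eps: float = 1e-4) -> List[float]:
    """BiFPN-style weighted fusion of same-shaped (flattened) feature maps."""
    if len(inputs) != len(raw_weights):
        raise ValueError("one weight per input feature map is required")
    # ReLU keeps every learnable fusion weight non-negative
    w = [max(0.0, x) for x in raw_weights]
    norm = eps + sum(w)
    length = len(inputs[0])
    fused = [0.0] * length
    for weight, fmap in zip(w, inputs):
        if len(fmap) != length:
            raise ValueError("resize feature maps to the same shape before fusion")
        for k, v in enumerate(fmap):
            fused[k] += (weight / norm) * v
    return fused

# Hypothetical top-down node: the second input gets three times the weight.
p4_td = fast_normalized_fusion([[1.0, 1.0, 1.0, 1.0], [2.0, 2.0, 2.0, 2.0]], [1.0, 3.0])
print([round(v, 3) for v in p4_td])  # → [1.75, 1.75, 1.75, 1.75]
```

The weights are normalized by a sum rather than a softmax. EfficientDet chose this for speed, and the result still stays in the range of the inputs.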

2.3.2. GhostConv Module
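GhostConv, from GhostNet, computes half of its output channels with an ordinary convolution. It then derives the other half from those channels with a cheap depthwise convolution and concatenates the two halves. The sketch below counts only convolution weights (BatchNorm and bias are ignored) to show where the saving comes from. It assumes the Ultralytics-style layout, where the cheap operation is a 5 × 5 depthwise convolution over c2/2 channels. The helper names are hypothetical.

```python
def conv_params(c_in: int, c_out: int, k: int, groups: int = 1) -> int:
    """Weight count of a k x k convolution without bias."""
    return (c_in // groups) * c_out * k * k

def ghostconv_params(c_in: int, c_out: int, k: int, cheap_k: int = 5) -> int:
    """Primary conv to c_out // 2 channels plus a depthwise cheap_k x cheap_k conv."""
    hidden = c_out // 2
    primary = conv_params(c_in, hidden, k)
    cheap = conv_params(hidden, hidden, cheap_k, groups=hidden)  # depthwise
    return primary + cheap

std = conv_params(64, 128, 3)
ghost = ghostconv_params(64, 128, 3)
print(std, ghost, round(ghost / std, 3))  # → 73728 38464 0.522
```

The depthwise term grows only linearly with the channel count, so for wide layers the ratio approaches one half.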

2.3.3. Wise-Inner-MPDIoU (WIMIoU) Loss Function
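The WIMIoU name combines three published ideas. Inner-IoU computes IoU on auxiliary boxes that share the original centres but are scaled by a ratio. MPDIoU adds the squared distances between matching top-left and bottom-right corners, normalized by the input image size (w² + h²). Wise-IoU multiplies the loss by a distance-based attention factor. The sketch below takes one plausible way to compose them, loss = R_WIoU × (1 − IoU_inner + d1²/(w² + h²) + d2²/(w² + h²)), using the Wise-IoU v1 factor. The paper may instead use the v3 dynamic focusing coefficient. The composition, the ratio of 0.75, and the function names are all assumptions.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)

def _iou(a: Box, b: Box) -> float:
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def _inner_box(box: Box, ratio: float) -> Box:
    """Auxiliary box with the same centre; width and height scaled by ratio."""
    cx, cy = (box[0] + box[2]) / 2, (box[1] + box[3]) / 2
    hw, hh = (box[2] - box[0]) * ratio / 2, (box[3] - box[1]) * ratio / 2
    return (cx - hw, cy - hh, cx + hw, cy + hh)

def wise_inner_mpdiou_loss(pred: Box, gt: Box, img_w: float, img_h: float,
                           ratio: float = 0.75) -> float:
    # Inner-IoU: IoU of the scaled auxiliary boxes
    iou_inner = _iou(_inner_box(pred, ratio), _inner_box(gt, ratio))
    # MPDIoU terms: corner distances normalised by the input image size
    norm = img_w ** 2 + img_h ** 2
    d1 = (pred[0] - gt[0]) ** 2 + (pred[1] - gt[1]) ** 2  # top-left corners
    d2 = (pred[2] - gt[2]) ** 2 + (pred[3] - gt[3]) ** 2  # bottom-right corners
    l_inner_mpdiou = 1.0 - iou_inner + (d1 + d2) / norm
    # Wise-IoU v1 factor: centre distance over the enclosing-box diagonal
    # (a training framework would detach this denominator from the graph)
    wg = max(pred[2], gt[2]) - min(pred[0], gt[0])
    hg = max(pred[3], gt[3]) - min(pred[1], gt[1])
    dcx = (pred[0] + pred[2] - gt[0] - gt[2]) / 2
    dcy = (pred[1] + pred[3] - gt[1] - gt[3]) / 2
    r_wiou = math.exp((dcx ** 2 + dcy ** 2) / (wg ** 2 + hg ** 2))
    return r_wiou * l_inner_mpdiou

gt = (100.0, 100.0, 200.0, 200.0)
for shift in (0.0, 5.0, 20.0):
    pred = (100.0 + shift, 100.0, 200.0 + shift, 200.0)
    print(shift, round(wise_inner_mpdiou_loss(pred, gt, 640, 640), 4))
```

A ratio below 1 shrinks the auxiliary boxes. The IoU term therefore reacts more strongly to small offsets, which the Inner-IoU authors associate with faster convergence on high-IoU samples.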

2.3.4. Experiment of LAMP Pruning
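LAMP (Layer-Adaptive Magnitude-based Pruning) divides each weight's squared magnitude by the sum of squared magnitudes of all weights in the same layer that are at least as large. This makes scores comparable across layers, so a single global threshold can be applied. The sketch below implements that score for unstructured pruning on plain lists. It does not show how BGWL-YOLO applies LAMP to channels (for example, through a structured-pruning library), and the function names are hypothetical.

```python
from typing import List, Sequence

def lamp_scores(weights: Sequence[float]) -> List[float]:
    """LAMP score of each weight within one layer, returned in input order."""
    order = sorted(range(len(weights)), key=lambda i: weights[i] ** 2)
    sq = [weights[i] ** 2 for i in order]   # ascending squared magnitudes
    tail = [0.0] * (len(sq) + 1)             # tail[k] = sum(sq[k:])
    for k in range(len(sq) - 1, -1, -1):
        tail[k] = tail[k + 1] + sq[k]
    scores = [0.0] * len(weights)
    for k, i in enumerate(order):
        scores[i] = sq[k] / tail[k] if tail[k] > 0 else 0.0
    return scores

def global_lamp_masks(layers: Sequence[Sequence[float]],
                      sparsity: float) -> List[List[bool]]:
    """Prune the globally lowest-scoring fraction of weights (True = keep)."""
    per_layer = [lamp_scores(w) for w in layers]
    ranked = sorted((s, li, wi) for li, layer in enumerate(per_layer)
                    for wi, s in enumerate(layer))
    masks = [[True] * len(layer) for layer in per_layer]
    for _, li, wi in ranked[: int(len(ranked) * sparsity)]:
        masks[li][wi] = False
    return masks

layers = [[3.0, 1.0, 2.0], [0.1, 0.2]]
print(global_lamp_masks(layers, sparsity=0.4))
# → [[True, False, True], [False, True]]
```

The largest weight in every layer always scores 1.0. In the example, the second layer therefore keeps its largest weight, whereas plain global magnitude pruning at the same sparsity would remove that layer entirely.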

2.4. Experimental Environment

2.5. Evaluation Indicators
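mAP50 is the mean over classes (here, low-maturity and mature apples) of the average precision (AP) computed at an IoU threshold of 0.5. FPS is the reciprocal of per-image latency. The sketch below computes AP from detections that are already sorted by confidence and marked as true or false positives at IoU ≥ 0.5, using all-point interpolation of the precision-recall curve. Toolkits differ in interpolation (Ultralytics, for instance, samples 101 recall points), so this is an illustration rather than the paper's exact evaluation code.

```python
from typing import Sequence

def average_precision(tp_flags: Sequence[int], n_gt: int) -> float:
    """All-point interpolated AP; tp_flags sorted by descending confidence."""
    if n_gt <= 0:
        raise ValueError("n_gt must be positive")
    recall, precision = [], []
    tp = fp = 0
    for flag in tp_flags:
        if flag:
            tp += 1
        else:
            fp += 1
        recall.append(tp / n_gt)
        precision.append(tp / (tp + fp))
    # Sentinel points at recall 0 and 1
    mrec = [0.0] + recall + [1.0]
    mpre = [0.0] + precision + [0.0]
    # Precision envelope: make precision non-increasing in recall
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Sum rectangle areas wherever recall changes
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1]
               for i in range(len(mrec) - 1) if mrec[i + 1] != mrec[i])

def mean_ap(per_class_ap: Sequence[float]) -> float:
    return sum(per_class_ap) / len(per_class_ap)

def fps_from_latency(ms_per_image: float) -> float:
    return 1000.0 / ms_per_image

print(average_precision([1, 1, 0, 1], n_gt=4))  # → 0.6875
```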

3. Experiments and Results Analysis

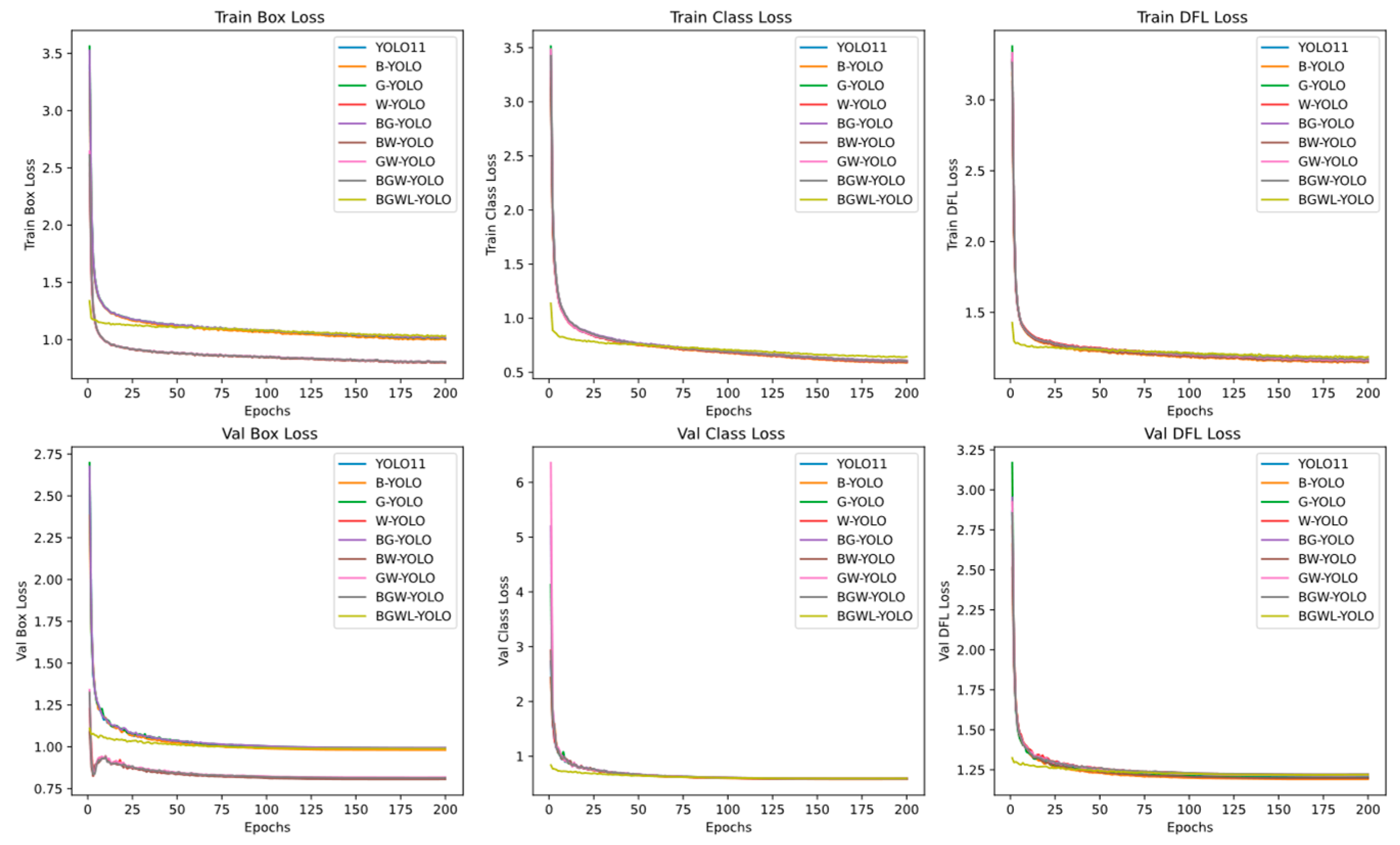

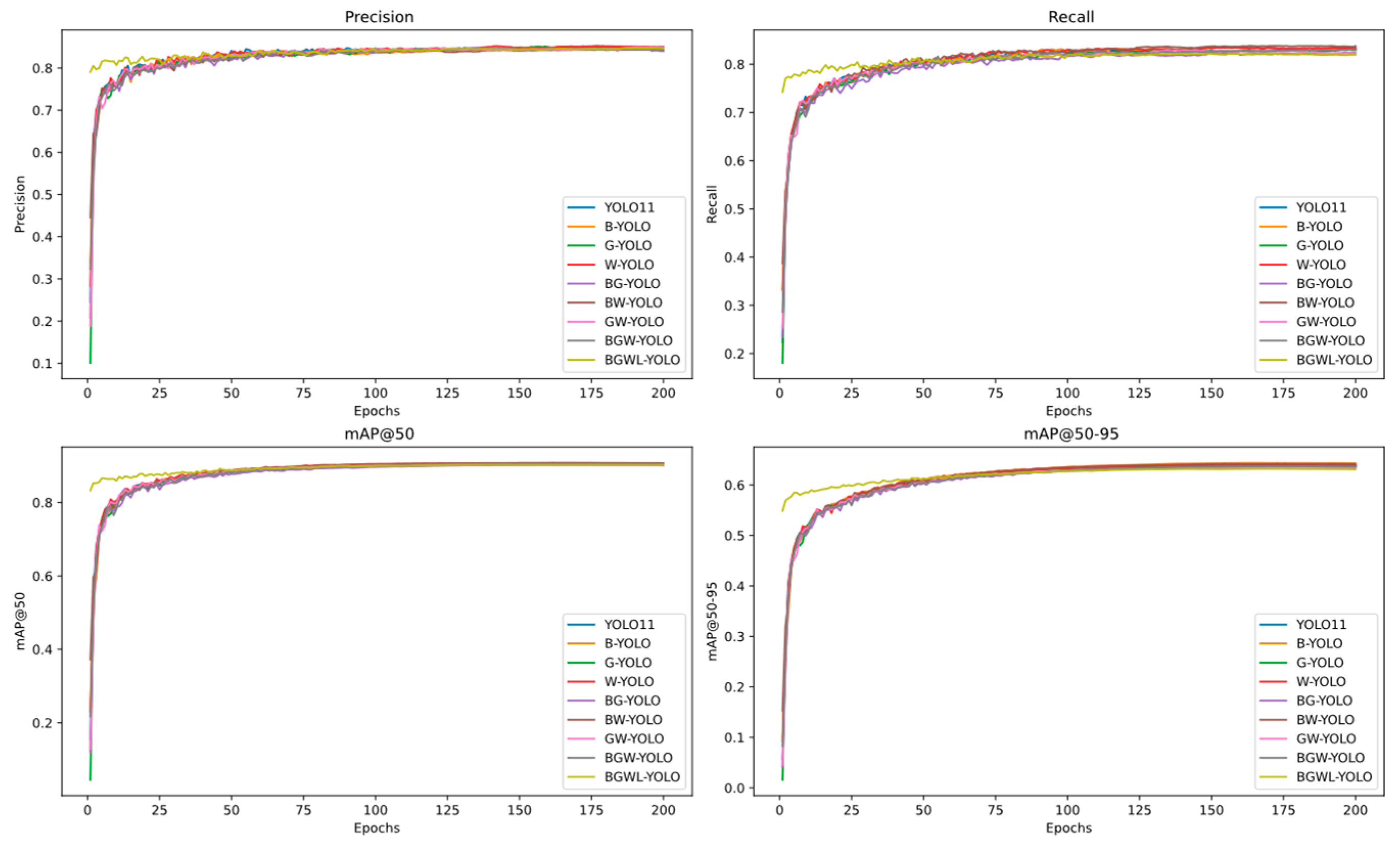

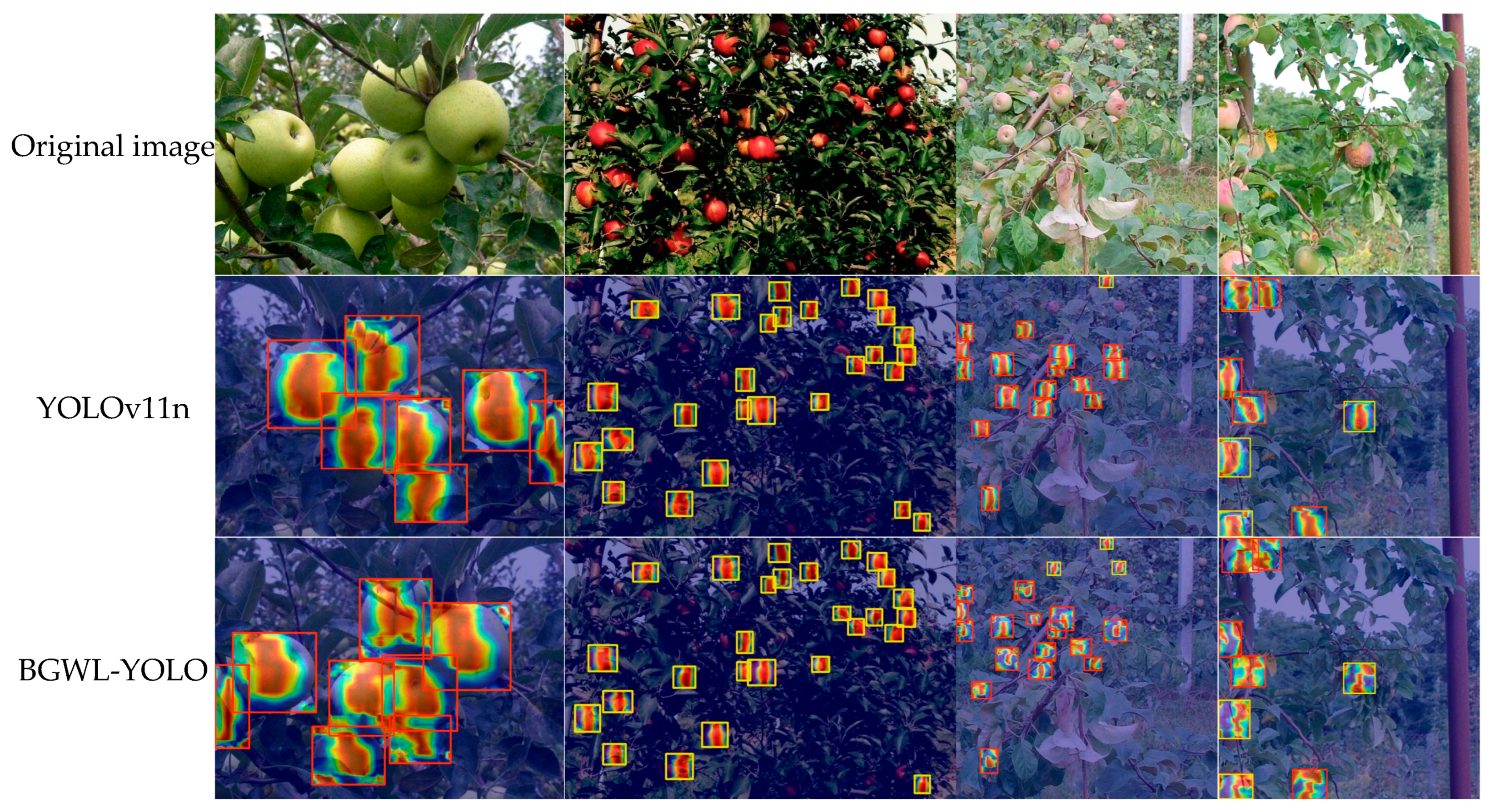

3.1. Visualization of the Model Training Process

3.2. Ablation Experiments

3.3. Pruning Experiment

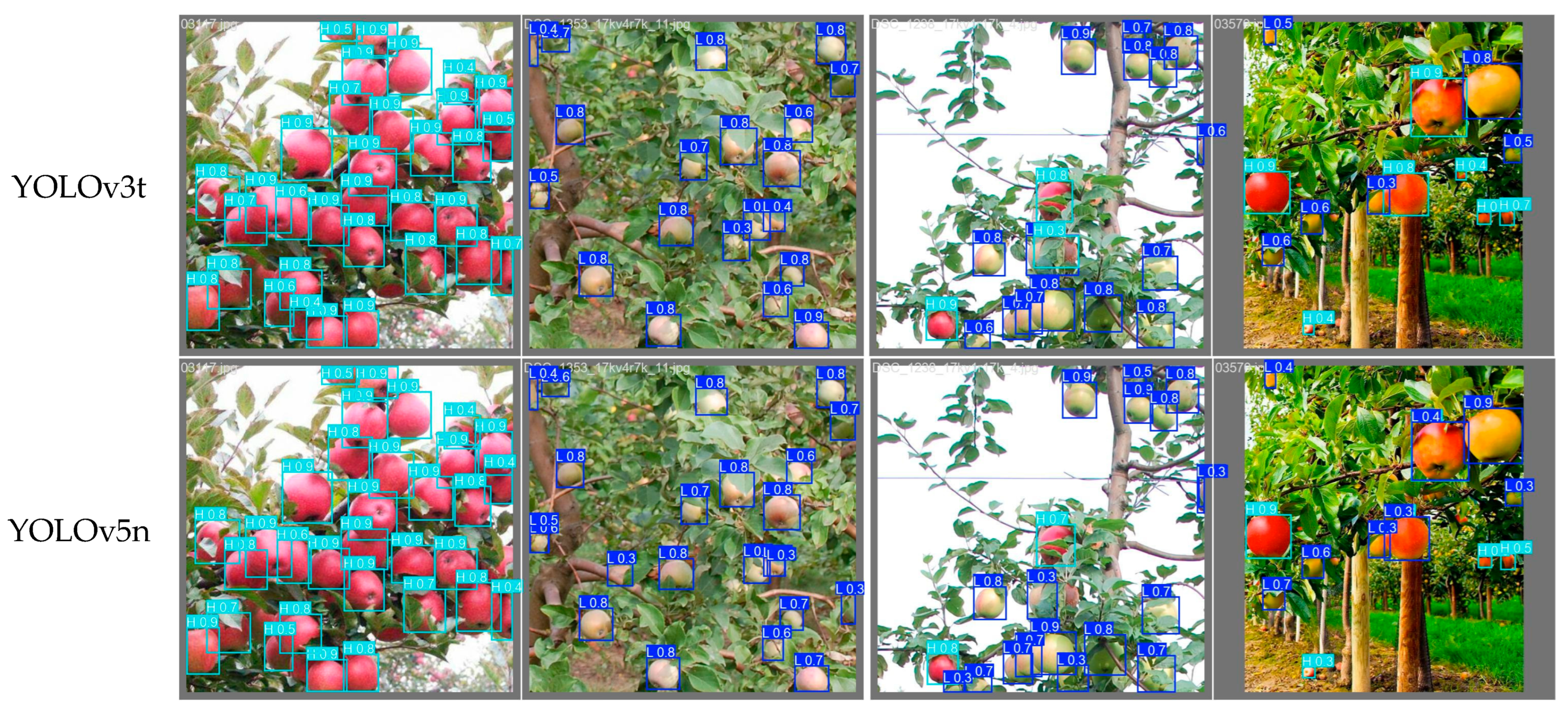

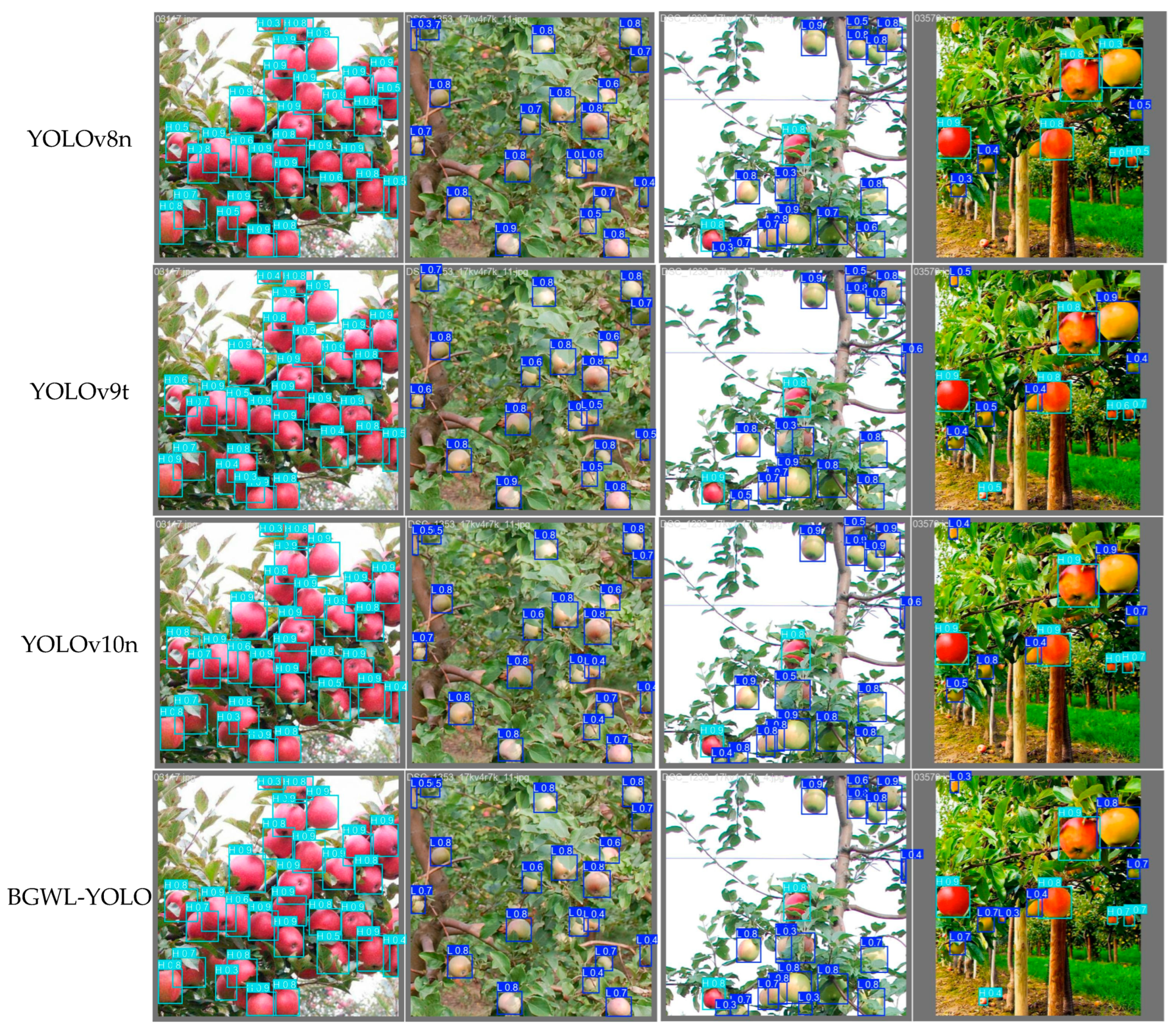

3.4. Comparative Experiments of Different Models

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A


| Variety | Superior Grade | First-Class Grade | Second-Class Grade |
|---|---|---|---|
| Fuji Series Apple | The apple color is over 90% red or striped red. | The apple color is over 80% red or striped red. | The apple color is over 55% red or striped red. |
| Marshal Series Apple | The apple color is over 95% red. | The apple color is over 85% red. | The apple color is over 60% red. |
| Qin Guan Apple | The apple color is over 90% red. | The apple color is over 80% red. | The apple color is over 55% red. |
| Golden Crown Series Apple | Golden yellow. | Yellow, greenish yellow. | Yellow, greenish yellow, yellowish green. |

| Dataset | Number of Images | Number of Low-Maturity Apples | Number of Mature Apples |
|---|---|---|---|
| Dataset A | 3169 | 40,048 | 4173 |
| Dataset B | 1838 | 1879 | 15,489 |
| Dataset C | 4733 | 2509 | 26,699 |
| Dataset D | 580 | 446 | 1491 |
| Ours | 10,320 | 44,882 | 47,852 |
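The counts in the "Ours" row equal the column sums of Datasets A to D, which suggests the final dataset is their union. Merging also offsets opposite imbalances: Dataset A is dominated by low-maturity apples, while Datasets B and C are dominated by mature ones. A quick check of the table's arithmetic:

```python
# (images, low-maturity apples, mature apples) per subset, from the table above
subsets = {
    "Dataset A": (3169, 40_048, 4173),
    "Dataset B": (1838, 1879, 15_489),
    "Dataset C": (4733, 2509, 26_699),
    "Dataset D": (580, 446, 1491),
}
images = sum(v[0] for v in subsets.values())
low = sum(v[1] for v in subsets.values())
mature = sum(v[2] for v in subsets.values())
instances = low + mature
print(images, low, mature)                            # → 10320 44882 47852
print(f"low-maturity share: {low / instances:.1%}")   # → low-maturity share: 48.4%
print(f"apples per image: {instances / images:.2f}")  # → apples per image: 8.99
```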

| Model | Size (pixels) | mAP50-95 (%) | CPU ONNX Speed (ms) | T4 TensorRT Speed (ms) | Number of Parameters (M) | FLOPs (B) |
|---|---|---|---|---|---|---|
| YOLO11n | 640 | 39.5 | 56.1 ± 0.8 | 1.5 ± 0.0 | 2.6 | 6.5 |
| YOLO11s | 640 | 47.0 | 90.0 ± 1.2 | 2.5 ± 0.0 | 9.4 | 21.5 |
| YOLO11m | 640 | 51.5 | 183.2 ± 2.0 | 4.7 ± 0.1 | 20.1 | 68.0 |
| YOLO11l | 640 | 53.4 | 238.6 ± 1.4 | 6.2 ± 0.1 | 25.3 | 86.9 |
| YOLO11x | 640 | 54.7 | 462.8 ± 6.7 | 11.3 ± 0.2 | 56.9 | 194.9 |

| Hyperparameter | Value |
|---|---|
| Image input size | 640 × 640 |
| Training epochs | 200 |
| Batch size | 16 |
| Number of data-loading workers | 1 |
| Optimizer | SGD |
| Initial learning rate | 0.01 |
| Learning rate decay schedule | Cosine |
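The hyperparameter table specifies SGD with an initial learning rate of 0.01 and a cosine decay schedule. The sketch below evaluates a standard cosine-annealing curve over the 200 training rounds listed in the table, read here as epochs. It assumes a final learning rate of 0.0001 (1% of the initial value, which matches the default `lrf=0.01` factor of Ultralytics training), since the table does not state the final rate.

```python
import math

def cosine_lr(epoch: int, total_epochs: int = 200, lr0: float = 0.01,
              lrf: float = 0.01) -> float:
    """Cosine annealing from lr0 down to lr0 * lrf (lrf is an assumed default)."""
    lr_min = lr0 * lrf
    progress = min(max(epoch / total_epochs, 0.0), 1.0)
    return lr_min + 0.5 * (lr0 - lr_min) * (1.0 + math.cos(math.pi * progress))

for e in (0, 50, 100, 150, 200):
    print(e, round(cosine_lr(e), 6))
```

Compared with step decay, the cosine curve holds the learning rate near its initial value early on and lowers it smoothly toward the end of training.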

| Model | GhostConv | BiFPN | WIMIoU | mAP50 (%) | Number of Parameters | GFLOPs | Model Size (MB) | FPS |
|---|---|---|---|---|---|---|---|---|
| A | | | | 89.8 | 2,582,542 | 6.3 | 5.21 | 232.6 |
| B | √ | | | 89.6 | 2,256,846 | 5.5 | 4.60 | 222.5 |
| C | | √ | | 90.2 | 1,923,018 | 6.3 | 3.98 | 207.0 |
| D | | | √ | 90.0 | 2,582,542 | 6.3 | 5.21 | 233.8 |
| E | √ | √ | | 89.8 | 1,620,202 | 5.3 | 3.43 | 197.9 |
| F | √ | | √ | 89.9 | 2,256,846 | 5.5 | 4.60 | 222.5 |
| G | | √ | √ | 90.1 | 1,923,018 | 6.3 | 3.98 | 208.8 |
| H | √ | √ | √ | 90.0 | 1,620,202 | 5.3 | 3.43 | 197.4 |

| Pruning Rate | mAP50 (%) | Number of Parameters | GFLOPs | Model Size (MB) | FPS |
|---|---|---|---|---|---|
| 1.5 | 90.3 | 757,846 | 3.5 | 1.82 | 222.7 |
| 2.0 | 90.1 | 490,870 | 2.6 | 1.31 | 246.1 |
| 2.5 | 89.7 | 378,831 | 2.1 | 1.08 | 258.9 |
| 3.0 | 89.4 | 297,752 | 1.7 | 1.41 | 283.7 |
| 3.5 | 88.7 | 250,856 | 1.5 | 0.86 | 294.7 |
| 4.0 | 88.7 | 223,726 | 1.3 | 0.81 | 311.2 |

| Pruning Method | mAP50 (%) | Number of Parameters | GFLOPs | Model Size (MB) | FPS |
|---|---|---|---|---|---|
| BGW-YOLO | 90.0 | 2,343,604 | 5.5 | 4.79 | 197.4 |
| Random | 80.7 | 1,086,630 | 2.6 | 2.45 | 285.0 |
| L1 | 88.4 | 1,440,468 | 2.6 | 1.86 | 249.5 |
| Group_Norm | 87.7 | 1,170,328 | 2.6 | 2.61 | 235.1 |
| LAMP | 90.1 | 490,870 | 2.6 | 1.31 | 246.1 |

| Model | mAP50 (%) | Number of Parameters | GFLOPs | Model Size (MB) | FPS1 | FPS2 |
|---|---|---|---|---|---|---|
| YOLOv3n | 88.3 | 12,128,692 | 18.9 | 23.20 | 173.7 | 11.9 |
| YOLOv5n | 89.2 | 2,503,334 | 7.1 | 5.02 | 242.8 | 24.5 |
| YOLOv8n | 89.5 | 3,006,038 | 8.1 | 5.95 | 240.0 | 22.6 |
| YOLOv9t | 89.9 | 1,971,174 | 7.6 | 4.41 | 178.0 | 19.7 |
| YOLOv10n | 90.2 | 2,265,558 | 6.5 | 5.48 | 224.7 | 22.6 |
| YOLOv11n | 89.8 | 2,582,542 | 6.3 | 5.21 | 232.6 | 22.9 |
| BGWL-YOLO | 90.1 | 490,870 | 2.6 | 1.31 | 246.1 | 30.4 |
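The relative changes along the pipeline can be computed directly from the tables. The sketch below takes the baseline and the unpruned model from rows A and H of the ablation table, and the final model from the comparison table. It uses FPS1, because the baseline's 232.6 FPS matches the single FPS column of the ablation table. The stage labels and the `pct_change` helper are illustrative.

```python
# (parameters, GFLOPs, model size in MB, FPS1) copied from the tables above
stages = {
    "YOLOv11n (baseline, row A)": (2_582_542, 6.3, 5.21, 232.6),
    "GhostConv + BiFPN + WIMIoU (row H)": (1_620_202, 5.3, 3.43, 197.4),
    "BGWL-YOLO (LAMP-pruned)": (490_870, 2.6, 1.31, 246.1),
}

def pct_change(new: float, old: float) -> float:
    """Signed percentage change from old to new."""
    return 100.0 * (new - old) / old

base = stages["YOLOv11n (baseline, row A)"]
for name, row in stages.items():
    params, gflops, size_mb, fps = (pct_change(n, o) for n, o in zip(row, base))
    print(f"{name}: params {params:+.1f}%, GFLOPs {gflops:+.1f}%, "
          f"size {size_mb:+.1f}%, FPS1 {fps:+.1f}%")
```

For BGWL-YOLO this gives roughly 81.0% fewer parameters, 58.7% fewer GFLOPs, and a 74.9% smaller model than YOLOv11n. mAP50 rises by 0.3 points, and FPS2 rises from 22.9 to 30.4 (about +32.8%).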


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Qiu, Z.; Ou, W.; Mo, D.; Sun, Y.; Ma, X.; Chen, X.; Tian, X. BGWL-YOLO: A Lightweight and Efficient Object Detection Model for Apple Maturity Classification Based on the YOLOv11n Improvement. Horticulturae 2025, 11, 1068. https://doi.org/10.3390/horticulturae11091068
