1. Introduction

The horticultural sector currently faces several critical challenges, one of the most pressing being the shortage of (skilled) workers and the costs associated with manual work [1]. Given that many essential tasks, such as growth monitoring and biotic and abiotic stress detection, are still predominantly performed manually, reducing the dependence of the sector on human labor has become a necessity [2,3]. A potential strategy to address this issue is the automation and digitalization of horticultural practices, as this can reduce the need for manual labor and lower associated labor costs [4,5]. In this context, close remote sensing has become a key enabling technology [6]. Close remote sensing refers to the collection of information about objects or areas without direct physical contact, typically through the measurement of radiation reflected or emitted from surfaces using sensors, especially with sufficiently high spatial resolution [7,8]. Various sensors and imaging platforms can be used to collect the data [9]. A commonly used aerial-based imaging platform is the unmanned aerial vehicle (UAV) since it can capture high-resolution images across large cultivation areas in a short time [10]. Although satellite platforms provide the advantage of even broader spatial coverage in a shorter time, their spatial and spectral resolutions are comparatively lower [11]. In addition, UAVs are well-suited for use across diverse landscapes, unlike ground-based mobile platforms that are often constrained by limited speed and reduced efficiency in uneven or hilly terrains [12]. UAVs can be equipped with a range of sensors, such as RGB, thermal, multispectral, hyperspectral, and Light Detection And Ranging (LiDAR), with the RGB camera being a popular choice due to its affordability and simplicity [13].

Remote sensing has been widely applied across a variety of domains such as archaeology [14], geology [15], ecology [16], agriculture [17], and horticulture [18]. A prominent application in agriculture and horticulture is High-Throughput Field Phenotyping (HTFP), which enables the rapid, objective, and non-destructive assessment of a wide range of plant traits [13]. It addresses the limitations of manual/visual assessments in terms of the quantity and quality of the collected data [3]. Traditionally, growth monitoring and stress detection rely on labor-intensive and time-consuming field surveys, which typically evaluate only a fraction of the field and are subject to human interpretation. In contrast, close remote sensing-based HTFP offers scalable, objective, and more consistent plant trait evaluation, which supports plant-level detection of growth defects and stress. This, in turn, allows for a more precise and site-specific application of agricultural inputs, an approach known as precision farming [4,19]. By improving resource use efficiency, this strategy offers a viable response to declining water availability, one of the most critical challenges in horticulture [2]. In the context of disease and pest management, precision farming provides a more sustainable and responsible use of pesticides, aligning with increasing regulatory pressure [2,6]. For instance, Rayamajhi et al. [12] estimated the required volume of agrochemicals for maple trees by calculating canopy volume using data acquired through an RGB sensor mounted on a UAV. Beyond crop management, HTFP also plays an important role in plant breeding by enabling the rapid evaluation of various selection traits across large plant populations [13,20,21]. Borra-Serrano et al. [22] successfully extracted traits such as plant height, plant shape, and floribundity from RGB UAV imagery of woody ornamentals to support selection decisions.

A critical first step in extracting plant traits involves distinguishing plants (foreground) from the background, such as soil and weeds, a process known as segmentation. A variety of algorithms is available, with detailed overviews provided by Yu et al. [23] and Cheng et al. [24]. In recent years, deep learning (DL) approaches have often been adopted due to their better performance compared to classical methods [25], as illustrated by Zhang et al. [26]. They evaluated three methods for the segmentation of chrysanthemums: two traditional computer vision methods, both relying on thresholding-based segmentation, with one operating in the RGB color space and the other in the HSV color space, and a deep learning-based approach employing Mask R-CNN. The results demonstrated that the Mask R-CNN model outperformed traditional methods, particularly in complex scenarios such as overlapping plants. Similarly, Zheng et al. [27], who applied Mask R-CNN for strawberry delineation, reported that the model remained robust under challenging conditions, including variations in illumination and overlapping or connected plants. For crown delineation from UAV-acquired RGB imagery, Mask R-CNN is commonly used [28,29,30]. However, other deep learning architectures have also been applied, including U-Net [30], U²-Net [31], ResU-Net [32], and YOLACT [33]. Although these supervised DL models generally perform well, with evaluation metrics exceeding 80%, they often fail when field conditions such as lighting (e.g., cloud cover), background (presence of weeds), plant species, planting density, or growth stage differ from those in the training data [34,35]. For example, the performance of the sNet CNN proposed by Potena et al. [36] for crop/weed classification decreased by 20–30% when tested on images captured at a different plant growth stage than those used for training. To maintain performance in new scenarios, the models often require retraining on task-specific datasets, a process that is costly, labor-intensive, and time-consuming [34]. Given the variability within the horticultural sector, a more generalized and flexible approach is needed [2]. Rayamajhi et al. [12] used the Segment Anything Model (SAM) for self-supervised segmentation of trees as an initial step in calculating tree canopy attributes. However, to avoid the segmentation of soil, they first applied height-based thresholding on the canopy height model (CHM), a classical segmentation approach that is likely to fail when tree crowns are in contact.

This study seeks to fill this gap by proposing a robust pipeline for the label-free segmentation of ornamental plants. Individual plants were detected using Segment Anything Model 2.1 (SAM 2.1), a promptable, zero-shot segmentation model [37]. As a zero-shot model, SAM 2.1 can segment objects it has not encountered during training, eliminating the need for retraining for new segmentation tasks. However, the model will segment all objects in an image, including weeds and soil. To guide the model towards the target objects, in this case the plants, prompts can be used. Given the availability of the CHM and the characteristic that individual plants typically exhibit a height peak relative to their surroundings, local maxima were extracted from the CHM and used as point prompts to indicate the positions of individual plants. The objective of this study was to develop a segmentation algorithm for ornamental plants, with chrysanthemum as a use case, that is (1) label-free and requires no training; (2) robust to variations in flower color, growth stage, plant morphology, and suboptimal lighting conditions; and (3) able to support the automated evaluation of plant traits. The goal is to support breeders and growers and reduce labor, time, and costs while increasing spatial and temporal coverage. The algorithm was evaluated for the estimation of chrysanthemum crown diameter.

2. Materials and Methods

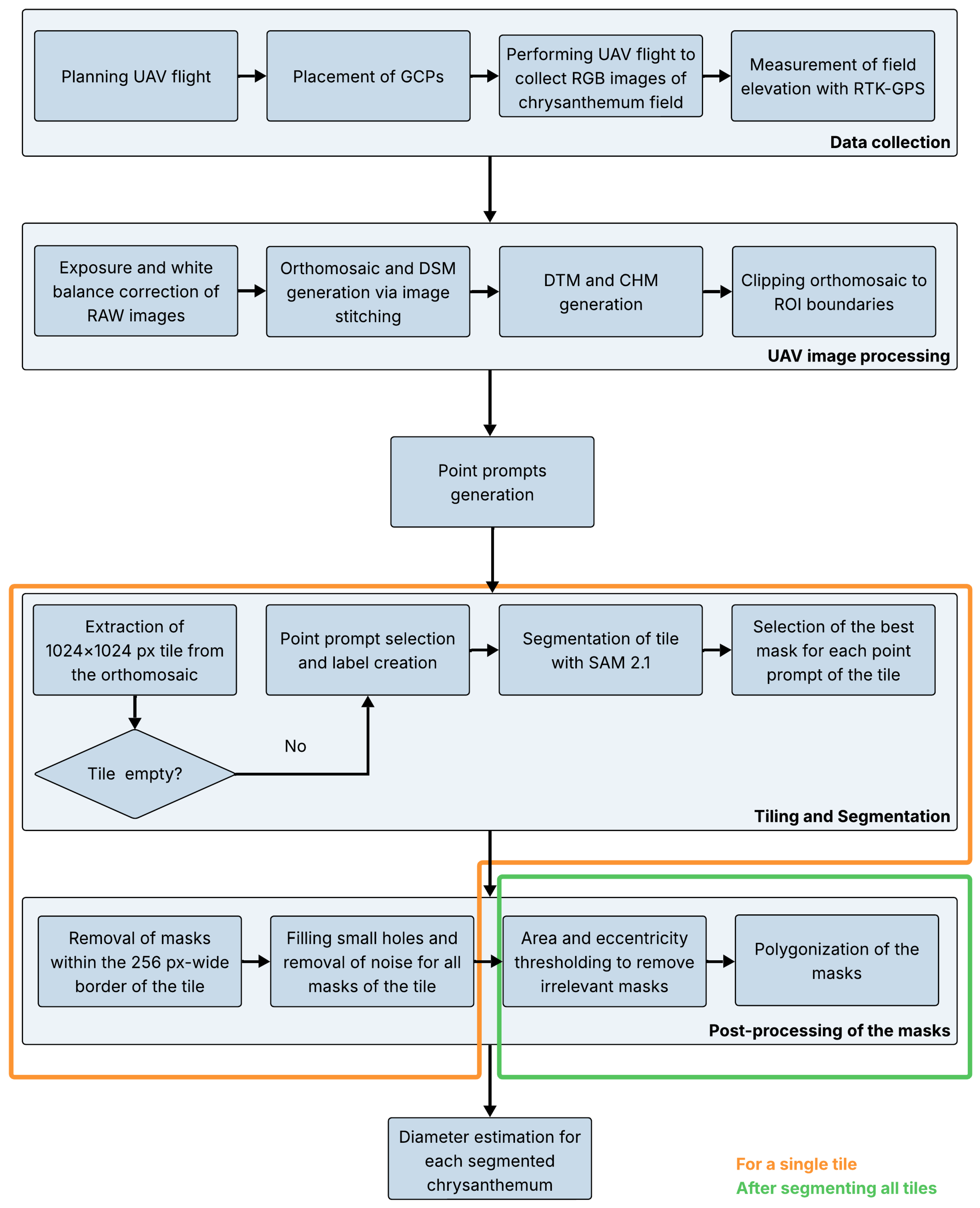

An overview of the pipeline is presented in Figure 1, with a more detailed version available in Appendix A (Figure A1). The process began with a UAV flight to capture high-resolution RGB images of the chrysanthemum field. Since the entire field could not be covered in a single high-resolution image, multiple overlapping images were taken and subsequently corrected and stitched to generate an orthomosaic of the field. In addition, a CHM, representing the height of the objects (plants and weeds) in the field, was calculated by subtracting the digital terrain model (DTM) from the digital surface model (DSM). Next, individual plants were segmented using SAM 2.1. For computational reasons, the orthomosaic was first divided into sections of 1024 × 1024 pixels, referred to as tiles, before being passed to the model. To avoid the segmentation of weeds and soil, the locations of the plants were determined based on local maxima in the CHM, and these coordinates were used as prompts for the segmentation. Lastly, the model’s predicted masks were post-processed to improve their quality. These masks could then be used to extract various traits for each segmented plant. In this study, the crown diameter of each chrysanthemum was determined. A single plant pot contained multiple chrysanthemum cuttings. In this article, the terms plant and (ball-shaped) chrysanthemum refer to the collective group of cuttings grown together in a single pot and sold as a unit.

2.1. Study Area

The observed area was part of a 5.2 ha chrysanthemum field (Chrysanthemum morifolium (Ramat)) located in Staden, West Flanders, Belgium (50°57’2” N, 3°04’31” E). The field is organized into blocks spaced approximately 16 m apart, each comprising five beds of five rows. Around 4200 different genotypes, featuring a range of flower colors, including green, white, yellow, orange, red, pink, and purple, were grown under open-field cultivation. In week 19 of 2024, chrysanthemum cuttings were planted in trays and after a three-week rooting period, they were transplanted into plant pots. Two weeks later, in week 24, the plants were transferred to the field. No fertilization was applied; only irrigation was provided.

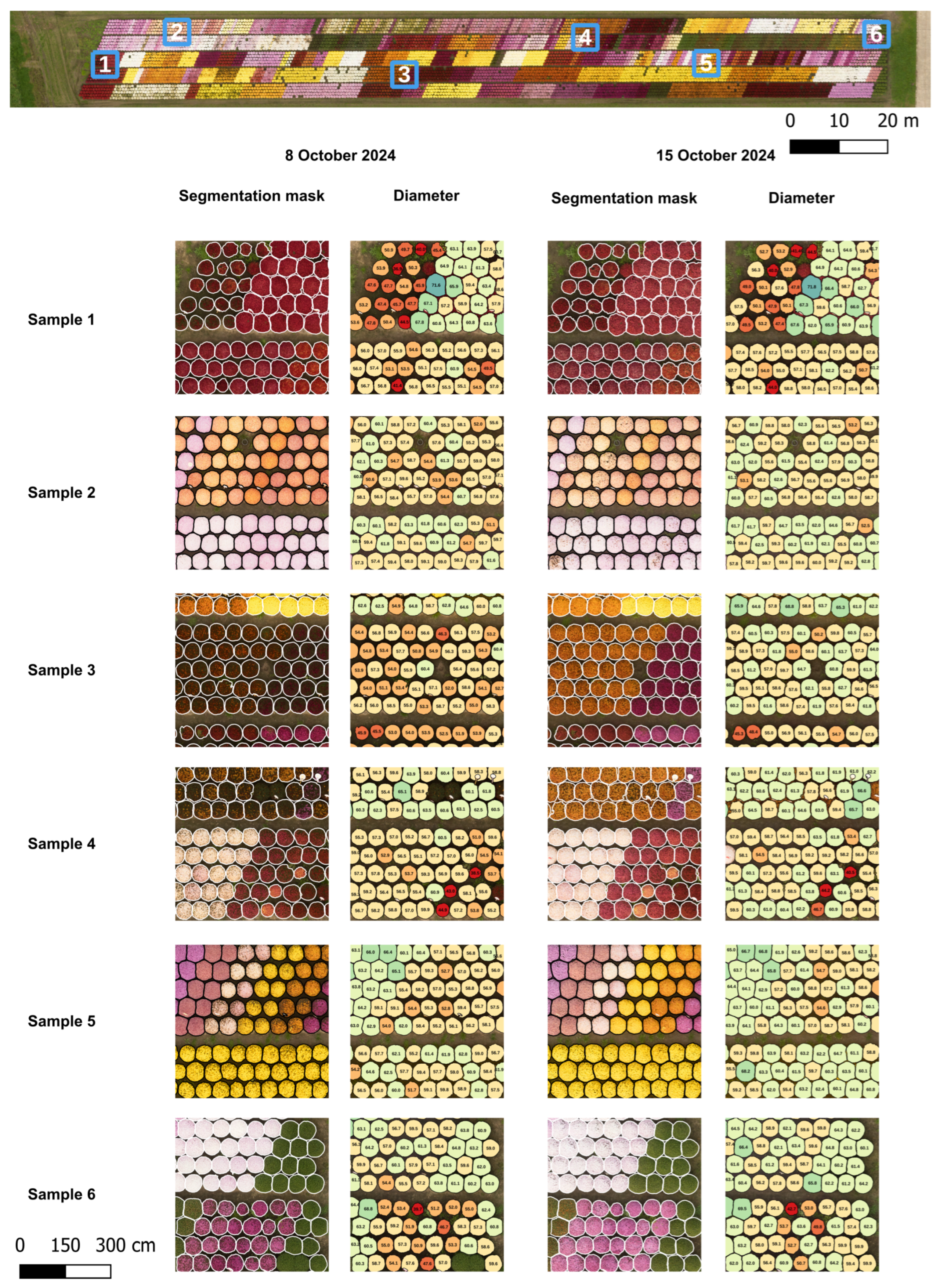

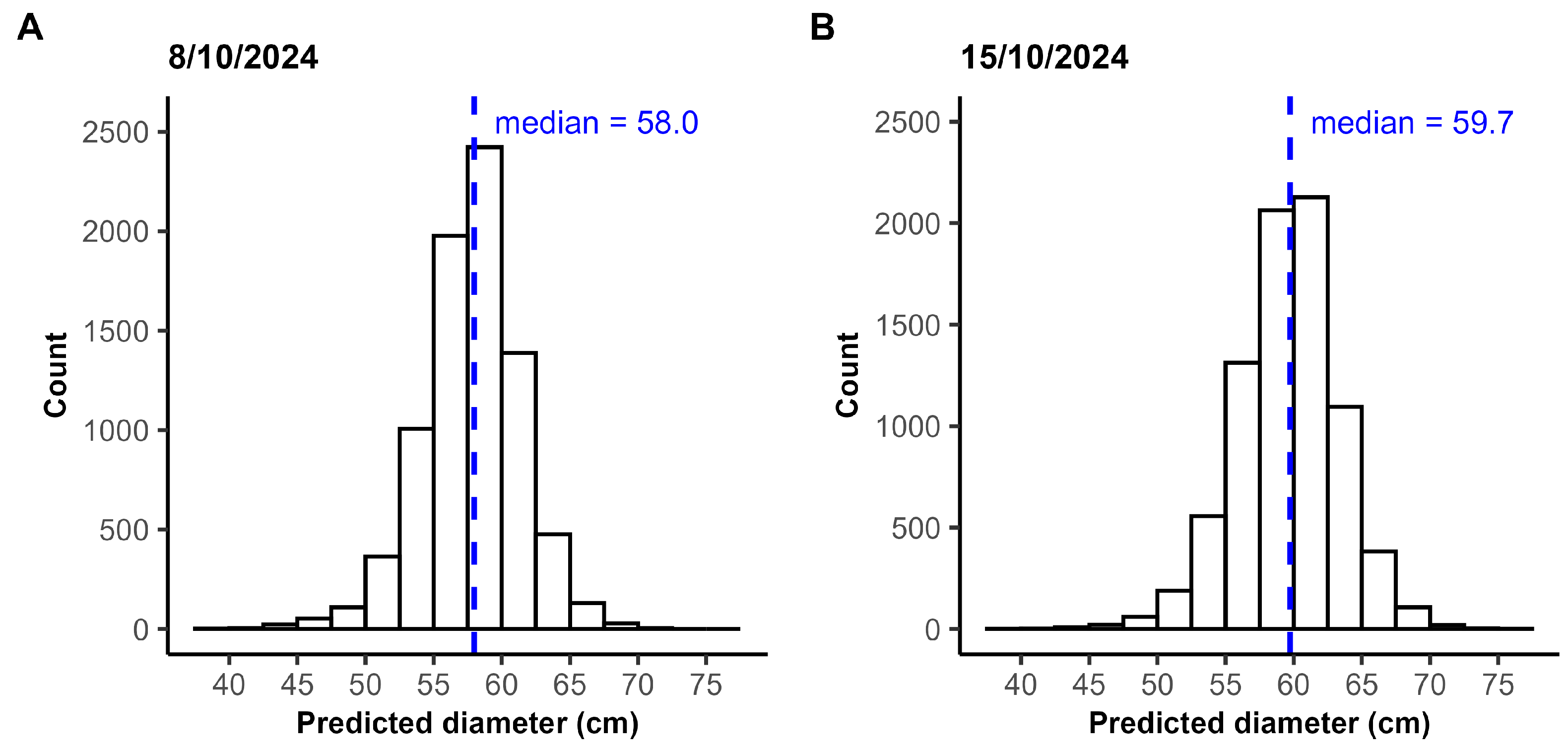

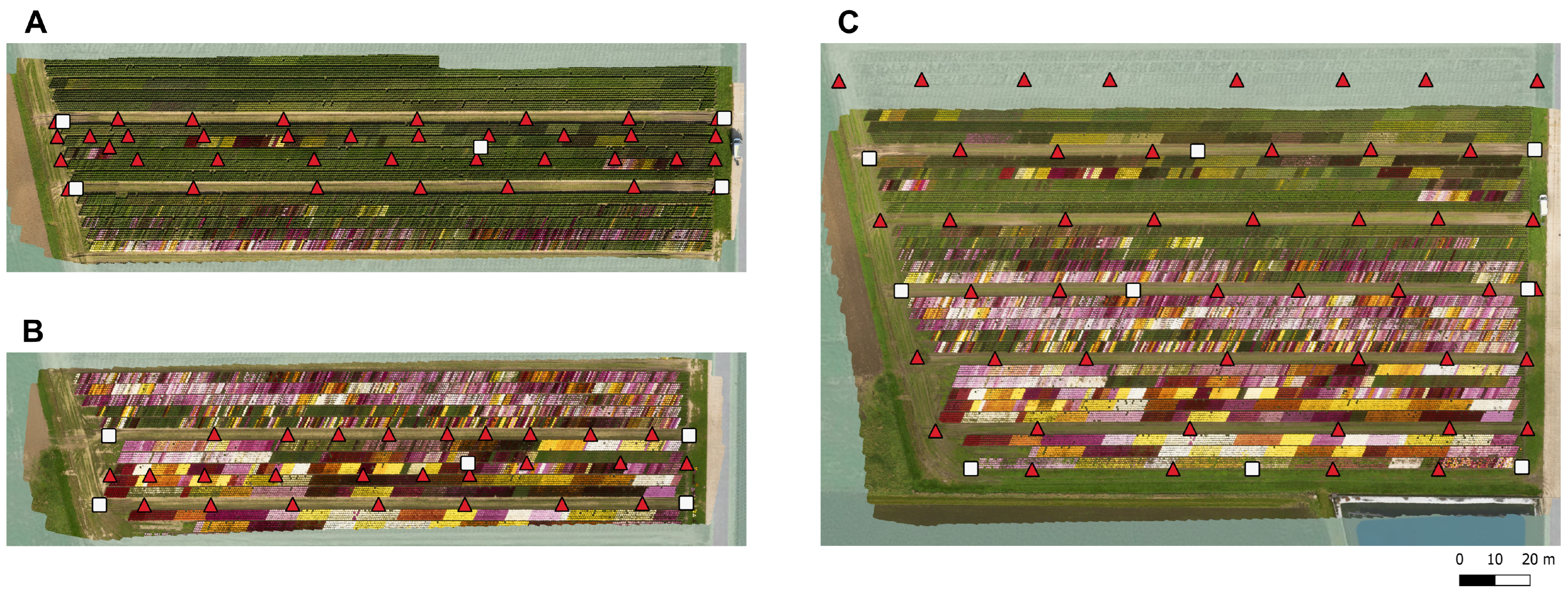

For the diameter measurements, two regions of interest (ROIs) were selected (Figure 2), each consisting of a single block of five beds containing chrysanthemums of various genotypes and flower colors. The growth stage of the plants varied, ranging from budding to flowering. ROI 1 covered an area of 3290 m² and ROI 2 an area of 2868 m². The total number of plants within each ROI is provided in Table 1. Image acquisition of the field was performed twice: in week 41 on 8 October and one week later on 15 October. Data were collected on two separate dates to introduce greater variation into the dataset, reflecting conditions likely to be encountered in practical applications, such as variations in lighting conditions.

2.2. Data Collection

On 8 October and 15 October 2024, UAV flights were performed to collect high-resolution RGB images of the chrysanthemum field. On the first date, two flights were carried out, one at 11:03 a.m. and the other at 12:25 p.m., each covering a different ROI. On the second date, the entire study area was captured during a single flight, performed around 12:42 p.m. The cloud cover and hence lighting conditions varied between the flights. The second flight on 8 October and the flight on 15 October took place under broken cloud cover (7/8), while the first flight on 8 October was performed under scattered cloud cover (2/8), causing shadow across the field.

For all flights, the Matrice 600 Pro (DJI, Shenzhen, China) equipped with an RGB (visible spectral range) camera with 6000 × 4000 pixels and a 35 mm lens (Sony α6400, Sony Corporation, Tokyo, Japan) was used. The camera was mounted on the UAV using a gimbal to orient the camera in the nadir position during image collection. Images were taken with 80% overlap in both flight and lateral directions. The ground sampling distance (GSD) was 3.71 mm/px, 3.74 mm/px, and 3.84 mm/px for the first flight on 8 October, the second flight on 8 October, and the flight on 15 October, respectively. The flight height and drone speed varied between the flights and are listed in Table A1. Shutter speed, aperture, and ISO were manually set before each flight and remained the same during the entire flight (Table A2). Each image was saved in both JPEG and RAW formats.

For georeferencing purposes, ground control points (GCPs) were laid out uniformly across the study area prior to each flight and their exact locations were determined using a real-time kinematic (RTK) GPS (Stonex S10 GNSS, Stonex SRL, Milan, Italy). In addition, the latitude, longitude, and elevation of several other regularly spaced points were measured to generate a more precise DTM. The locations of these points and the GCPs are visualized in the corresponding orthomosaics in Figure 3.

2.3. UAV Image Preprocessing

White balance and exposure of the RAW images of a single flight were corrected in Adobe Lightroom Classic (Adobe, San Jose, CA, USA) using a gray card (18% reference gray, Novoflex Präzisionstechnik GmbH, Memmingen, Germany) that was photographed during the flight. After correction, georeferencing and stitching were carried out in Agisoft Metashape Professional Edition v2.1.1 (Agisoft LLC, Saint Petersburg, Russia), resulting in an orthomosaic and DSM per flight, which were saved as GeoTIFF files. The orthomosaic was clipped using the package Rasterio v1.4.3 [38] to extract the data within the ROI. As the field was imaged on two separate dates and two distinct ROIs were selected, a total of four clipped orthomosaics were generated. On 15 October, both ROI 1 and ROI 2 were captured during a single flight, resulting in a single orthomosaic comprising both areas. However, on 8 October, two separate flights were performed to capture the two ROIs. As a result, ROI 1 was clipped from the orthomosaic of the 11:03 a.m. flight, while ROI 2 was clipped from the orthomosaic of the 12:25 p.m. flight.

A DTM was generated per flight using the elevation data from both the GCPs and additional measured field points. First, a regular grid with a resolution of 1 cm was constructed over the entire area of the input points. Elevation values at each grid node were estimated using linear barycentric interpolation within the triangles formed by applying Delaunay triangulation to the input points [39]. A DTM was also generated using only elevation data from the GCPs, and with reduced resolution, in order to evaluate the impact on segmentation performance and the accuracy of diameter estimation (Appendix B.1). The CHM was derived by subtracting the DTM from the DSM. Since the extent of the DSM was defined by the coverage of the UAV flight and the extent of the DTM was limited to the outermost points measured using the RTK GPS, the two surfaces did not fully overlap. To ensure accurate subtraction, both the DSM and DTM were reprojected to a common raster grid, defined by the intersection between them and the lowest resolution of the two datasets. Reprojection was performed with the package Rasterio v1.4.3 [38] using bilinear interpolation. The resolution of the DSM, DTM, and CHM per flight is listed in Table 2. The accuracy of the CHM produced using this method was validated in an earlier study by Borra-Serrano et al. [40].
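To make the interpolation step concrete, the following minimal sketch shows linear barycentric interpolation inside a single triangle and the subsequent DSM minus DTM subtraction for one grid node. It is an illustration under stated assumptions, not the code used in this study: the function name `barycentric_height` and the example coordinates are hypothetical, and in practice a library routine (for example SciPy's `LinearNDInterpolator`, which combines Delaunay triangulation with this type of interpolation) would typically handle all triangles at once.

```python
def barycentric_height(p, tri):
    """Linearly interpolate the elevation at p = (x, y) inside one triangle.

    tri holds three (x, y, z) vertices, e.g. three RTK-GPS points forming
    one Delaunay triangle. Returns None when p lies outside the triangle.
    """
    (x1, y1, z1), (x2, y2, z2), (x3, y3, z3) = tri
    x, y = p
    det = (y2 - y3) * (x1 - x3) + (x3 - x2) * (y1 - y3)
    # Barycentric weights of p with respect to the three vertices.
    w1 = ((y2 - y3) * (x - x3) + (x3 - x2) * (y - y3)) / det
    w2 = ((y3 - y1) * (x - x3) + (x1 - x3) * (y - y3)) / det
    w3 = 1.0 - w1 - w2
    if min(w1, w2, w3) < 0:
        return None
    return w1 * z1 + w2 * z2 + w3 * z3


# Hypothetical terrain points (x, y in m; z = elevation in m).
triangle = [(0.0, 0.0, 10.0), (4.0, 0.0, 14.0), (0.0, 4.0, 18.0)]
dtm_value = barycentric_height((1.0, 1.0), triangle)

# CHM at the same grid node: surface height minus terrain height.
dsm_value = 13.35  # hypothetical DSM elevation at (1, 1)
chm_value = dsm_value - dtm_value
```

Nodes outside every triangle receive no terrain value, which is consistent with the DTM being limited to the outermost measured points.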

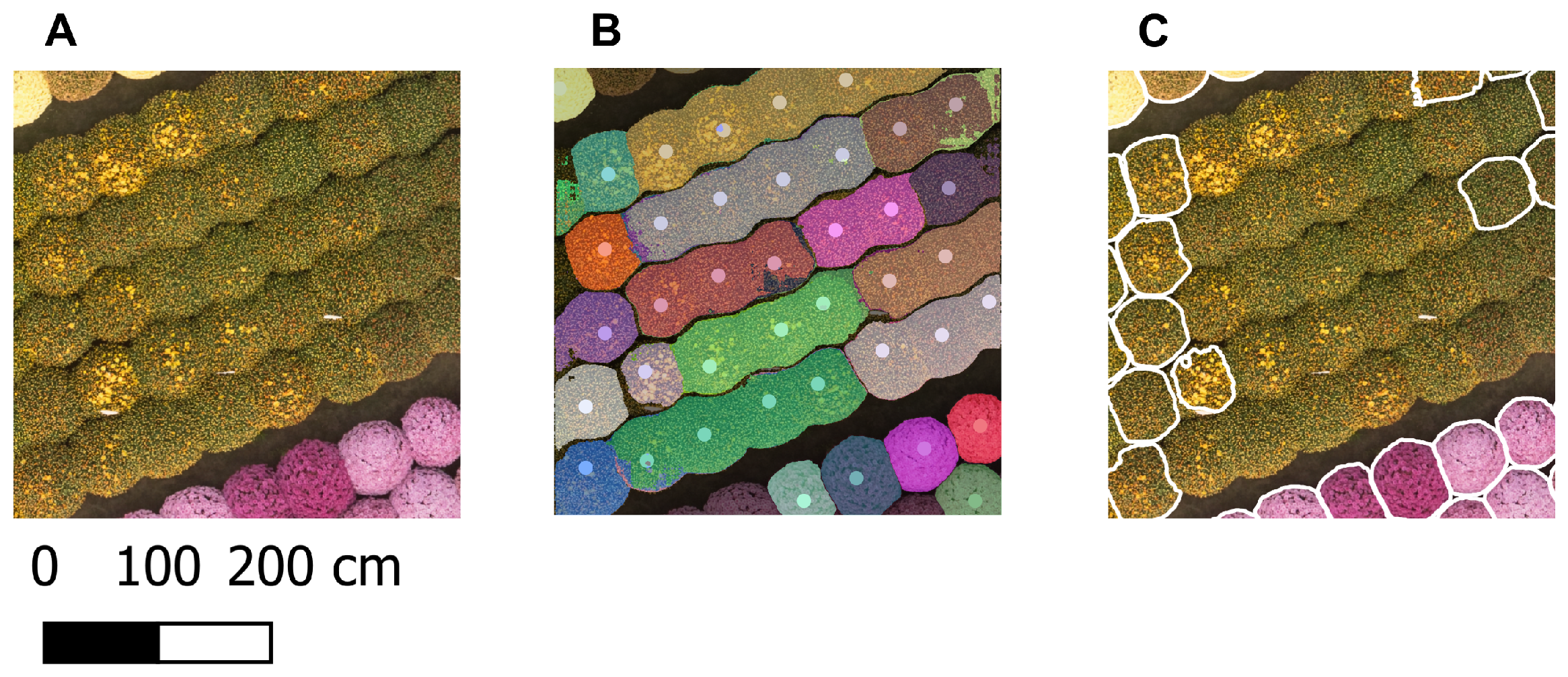

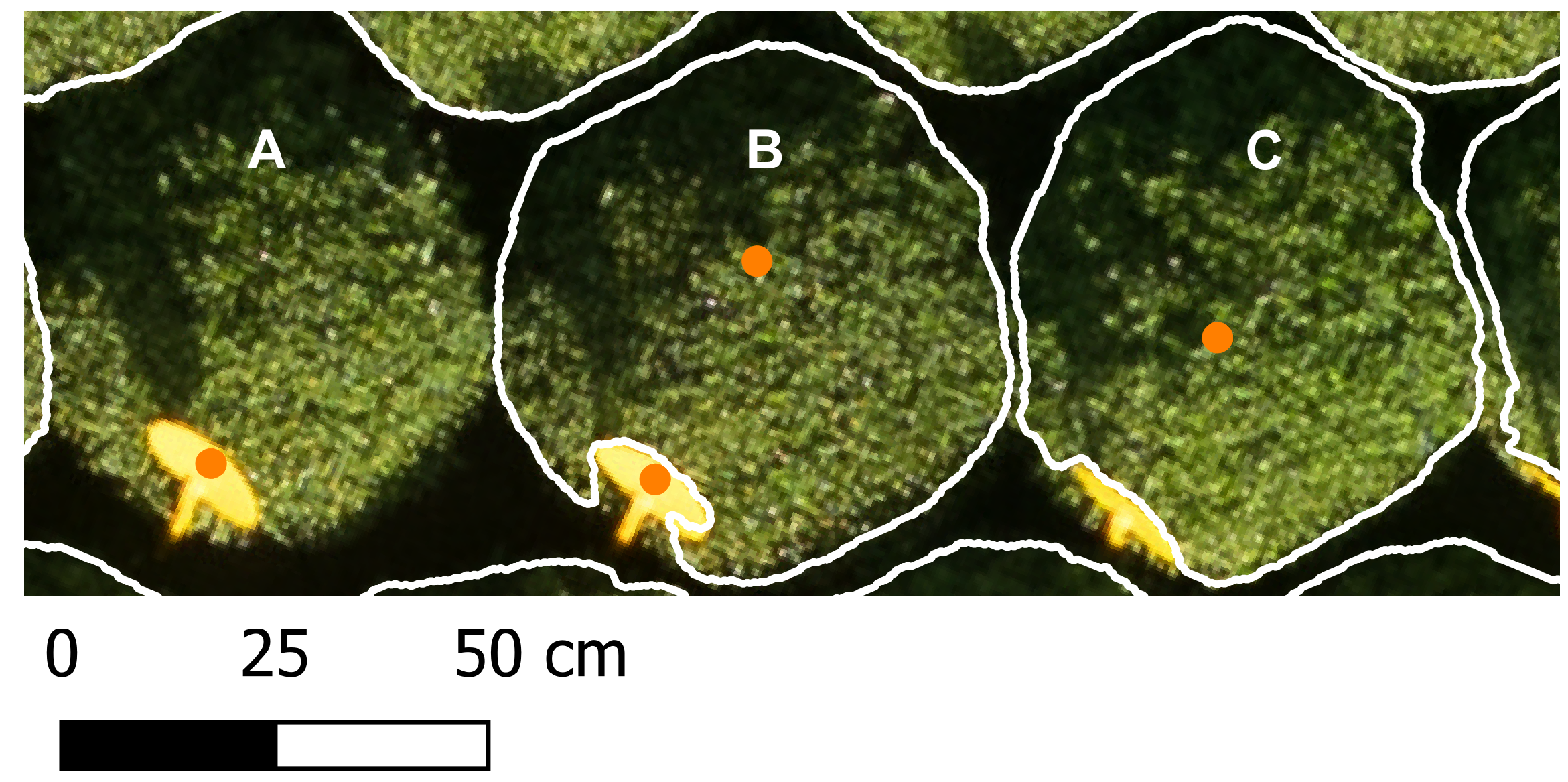

2.4. Point Prompts Generation

Positive point prompts, corresponding to the location of the plant tops, were used to define the objects for segmentation. For each ROI and each flight date, a different set of point prompts was generated by identifying local maxima in the CHM using the image processing library Scikit-image v0.25.2 [41]. To avoid multiple prompts per plant and prompts corresponding to background, such as weeds or soil, the minimum allowed distance between local maxima and the minimum peak height were manually adjusted until, based on a visual inspection, only points corresponding to the plants were obtained. The minimum peak height was defined as a user-defined quantile of all positive height values within the ROI, which, for our experiment, was set to the 0.3 quantile. The minimum distance between local maxima was 10 pixels for all four sets of point prompts. The impact of these parameters on segmentation accuracy and diameter estimation performance is discussed in Appendix B.2.
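The logic of this step can be sketched in plain Python as follows. This is a simplified stand-in for the Scikit-image peak detection, written only to illustrate the two parameters; the function names, the nearest-rank quantile, and the exact tie and distance rules are assumptions and may differ from the library's behavior.

```python
def quantile_threshold(chm, q=0.3):
    """Height at quantile q of all positive CHM values (nearest-rank style)."""
    positive = sorted(v for row in chm for v in row if v > 0)
    return positive[int(q * (len(positive) - 1))]


def local_maxima(chm, min_distance, min_height):
    """Return (row, col) peaks that are >= min_height and spaced apart.

    A pixel is a candidate when it is the maximum of its 3 x 3
    neighbourhood. Candidates are accepted from highest to lowest, and a
    candidate is dropped when an accepted peak lies closer than
    min_distance pixels (Chebyshev distance).
    """
    rows, cols = len(chm), len(chm[0])
    candidates = []
    for r in range(rows):
        for c in range(cols):
            h = chm[r][c]
            if h < min_height:
                continue
            neighbours = [chm[rr][cc]
                          for rr in range(max(r - 1, 0), min(r + 2, rows))
                          for cc in range(max(c - 1, 0), min(c + 2, cols))]
            if h >= max(neighbours):
                candidates.append((h, r, c))
    candidates.sort(reverse=True)
    peaks = []
    for _, r, c in candidates:
        if all(max(abs(r - pr), abs(c - pc)) >= min_distance for pr, pc in peaks):
            peaks.append((r, c))
    return peaks


# Toy CHM (heights in m): two plants and one low weed.
toy_chm = [
    [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.5, 0.0, 0.0, 0.0, 0.4, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.05, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],
]
prompts = local_maxima(toy_chm, min_distance=2, min_height=0.2)
```

In the toy example, the low weed is rejected by the height threshold, while increasing `min_distance` beyond the plant spacing would merge the two plants into a single prompt.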

The point prompts were originally defined as array indices within the CHM. To convert these indices to corresponding positions on the clipped orthomosaic, the array indices were first transformed into geographic coordinates using the affine transformation matrix of the CHM. Subsequently, these geographic coordinates were converted into array indices of the clipped orthomosaic by applying the inverse transformation using the affine transformation matrix of the clipped orthomosaic.
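As an illustration of this two-step conversion, the sketch below applies a forward affine transform followed by an inverse one. The six coefficients follow the common GDAL/Rasterio ordering `(a, b, c, d, e, f)` with `x = a*col + b*row + c` and `y = d*col + e*row + f`; the function names, the use of pixel centers, and the example transforms are assumptions made for this example.

```python
import math


def index_to_coords(row, col, t):
    """Map the center of pixel (row, col) to geographic (x, y)."""
    a, b, c, d, e, f = t
    x = a * (col + 0.5) + b * (row + 0.5) + c
    y = d * (col + 0.5) + e * (row + 0.5) + f
    return x, y


def coords_to_index(x, y, t):
    """Map geographic (x, y) to the (row, col) of the pixel containing it."""
    a, b, c, d, e, f = t
    det = a * e - b * d
    col = (e * (x - c) - b * (y - f)) / det
    row = (a * (y - f) - d * (x - c)) / det
    return math.floor(row), math.floor(col)


# Hypothetical north-up rasters sharing an origin but not a resolution.
chm_transform = (0.5, 0.0, 100.0, 0.0, -0.5, 200.0)
ortho_transform = (0.25, 0.0, 100.0, 0.0, -0.25, 200.0)

x, y = index_to_coords(10, 20, chm_transform)
ortho_row, ortho_col = coords_to_index(x, y, ortho_transform)
```

Because the orthomosaic in this example has twice the resolution of the CHM, the prompt lands at roughly twice the row and column index.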

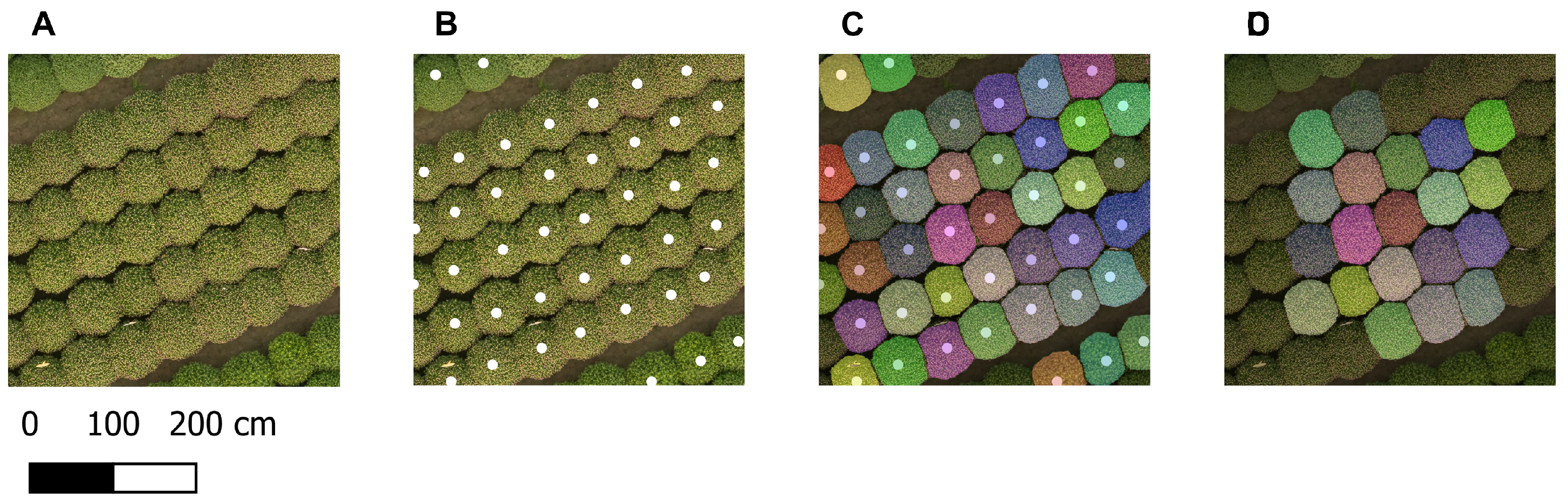

Figure 4B presents an example of the generated point prompts for a small part of the orthomosaic of 15 October.

2.5. Tiling and Segmentation

Since the clipped orthomosaics were too large to be segmented in a single pass, they were divided into tiles of 1024 × 1024 px (Figure 4A), which corresponded to the default input size of the SAM 2.1 encoder. The tiling was performed with 50% overlap in both vertical and horizontal directions to ensure accurate plant segmentation masks, including those at the borders of each tile. The plant rows did not align with the image borders because the orthomosaic was aligned with true north, while the rows were not, resulting in some tiles containing only nodata values. These were excluded from further processing to save memory and reduce computation time. For each remaining tile, point prompts located within the tile, including those on the tile border, were selected (Figure 4B). Positive labels (1) were generated for these prompts. Each individual tile, along with its corresponding prompts and labels, was given as input to the Image Predictor of the segmentation model SAM 2.1 [37]. The Hiera-L model was selected as the image encoder due to its higher accuracy compared to smaller options. Segmentation performance and diameter estimation accuracy for the different SAM 2.1 encoders are presented in Appendix B.4. For each point prompt, three segmentation masks were generated, but only the mask with the highest quality score, as predicted by SAM 2.1, was selected (Figure 4C).
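The tiling and prompt selection can be sketched as follows. This is a minimal illustration, not the implementation used in this study: the function names are hypothetical, the handling of a final partial tile (shifting it back so that it ends at the image edge) is an assumption, and the returned `(x, y)` order reflects the convention commonly expected for point prompts.

```python
def tile_origins(length, tile=1024, overlap=0.5):
    """Start offsets of tiles along one image axis with fractional overlap."""
    stride = int(tile * (1 - overlap))
    last = max(length - tile, 0)
    origins = list(range(0, last + 1, stride))
    if origins[-1] != last:
        # Assumption: add one extra tile flush with the image edge.
        origins.append(last)
    return origins


def prompts_in_tile(prompts, row0, col0, tile=1024):
    """Select (row, col) prompts inside a tile, including its border.

    Returns tile-local (x, y) coordinates, i.e. (col - col0, row - row0).
    """
    selected = []
    for row, col in prompts:
        if row0 <= row < row0 + tile and col0 <= col < col0 + tile:
            selected.append((col - col0, row - row0))
    return selected


# All tile origins for a hypothetical 2500 x 2048 px orthomosaic.
origins = [(r, c) for r in tile_origins(2500) for c in tile_origins(2048)]

# Prompts as (row, col) indices of the clipped orthomosaic.
local_prompts = prompts_in_tile([(10, 20), (600, 700), (1500, 100)],
                                row0=512, col0=0)
labels = [1] * len(local_prompts)  # all prompts are positive
```

With 50% overlap, every plant that is cut by the edge of one tile lies near the center of a neighbouring tile, which is what allows border masks to be discarded later without losing plants.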

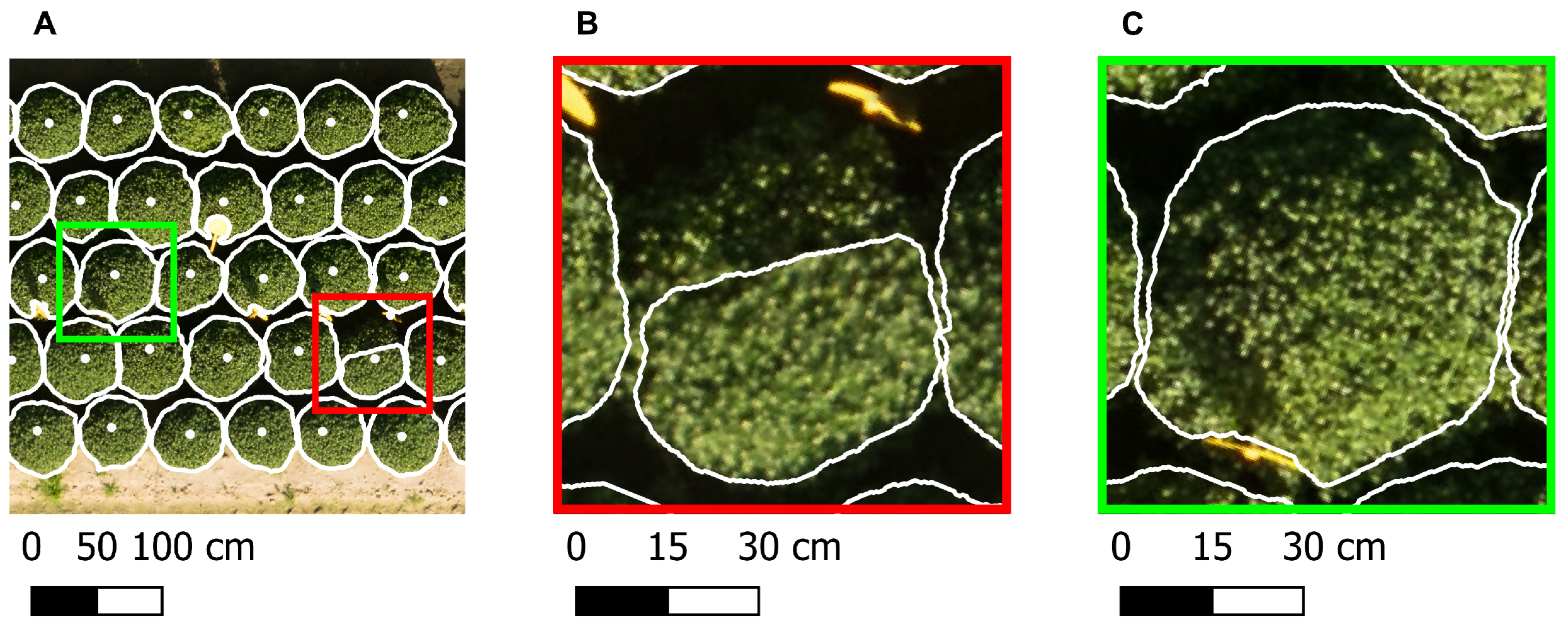

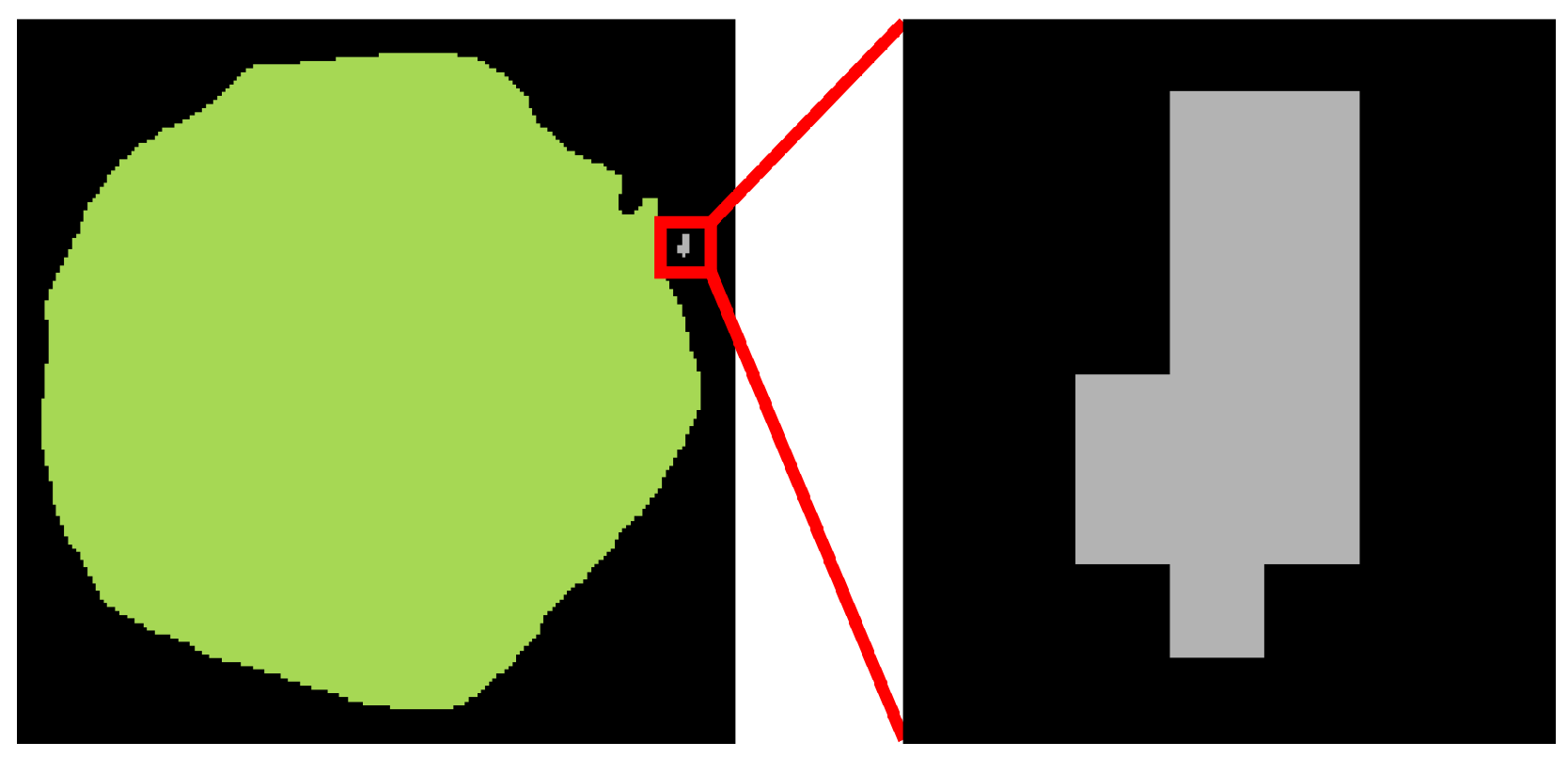

2.6. Post-Processing of the Masks

As a result of the tiling, plants at the borders of a tile were split over multiple tiles. Plant segmentation masks containing only pixels within the 256-pixel-wide border of the tile were excluded to prevent the presence of masks corresponding to fragmented plants in the field-level segmentation mask (Figure 4D). The quality of the remaining segmentation masks was improved by filling small holes using the binary fill holes function from the SciPy library (version 1.15.2) with default parameters [39]. Although the mask predicted by the segmentation model corresponded to a single object, it sometimes contained multiple connected regions, as illustrated in Figure 5. Since a ball-shaped chrysanthemum is a coherent structure, only the largest connected component of the mask, identified through connected component analysis using SciPy v1.15.2 [39], was maintained.
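A minimal sketch of the border check and the largest-component selection is given below, with masks represented as sets of `(row, col)` pixels instead of arrays. It stands in for the SciPy routines rather than reproducing them; the function names and the use of 4-connectivity are assumptions made for this illustration.

```python
from collections import deque


def only_in_border(pixels, tile=1024, border=256):
    """True when no mask pixel lies in the central region of the tile."""
    lo, hi = border, tile - border
    return all(not (lo <= r < hi and lo <= c < hi) for r, c in pixels)


def largest_component(pixels):
    """Return the largest 4-connected group of pixels in a mask."""
    remaining = set(pixels)
    best = set()
    while remaining:
        seed = remaining.pop()
        component, queue = {seed}, deque([seed])
        while queue:
            r, c = queue.popleft()
            for n in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                if n in remaining:
                    remaining.remove(n)
                    component.add(n)
                    queue.append(n)
        if len(component) > len(best):
            best = component
    return best


# A mask with a main body of three pixels and one stray pixel.
mask = {(300, 300), (300, 301), (301, 301), (400, 400)}
keep = not only_in_border(mask)
cleaned = largest_component(mask)
```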

After segmenting all tiles of an orthomosaic, low-quality masks and those corresponding to non-target objects were filtered out based on thresholds for the eccentricity (upper threshold) and area (lower and upper threshold). These features were computed using the package scikit-image [41]. For both the eccentricity threshold and the area thresholds, various values were tested per orthomosaic until the desired result was achieved. Appendix B.3 provides a detailed assessment of how these parameters affected both segmentation performance and diameter estimation accuracy. Eccentricity was defined as the ratio of the focal distance to the major axis length, yielding a value between 0 and 1, with 0 indicating a perfect circle. Considering that ball-shaped chrysanthemums typically have a nearly circular shape, a mask with a high eccentricity value was unlikely to correspond to a chrysanthemum. The threshold value for eccentricity was 0.75 for all four clipped orthomosaics. Plant segmentation masks with areas that were either excessively large or small were also unlikely to represent a single plant and were therefore excluded. To identify these outliers, area thresholds were defined based on the interquartile range (IQR): the upper threshold was set as 3 times the IQR above the third quartile (Q3), while the lower threshold was set as 3 times the IQR below the first quartile (Q1).
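The two filters can be written out as a short sketch. The eccentricity follows the definition given above (focal distance over major axis length of the fitted ellipse), and the area thresholds follow the Q1 − 3·IQR and Q3 + 3·IQR rule. The function names, the quartile interpolation method, and the use of non-strict inequalities are assumptions for illustration only.

```python
import math
import statistics


def eccentricity(major_axis, minor_axis):
    """Focal distance divided by major axis length (0 = perfect circle)."""
    a, b = major_axis / 2.0, minor_axis / 2.0
    return math.sqrt(a * a - b * b) / a


def area_thresholds(areas, k=3.0):
    """Lower and upper area limits at k times the IQR beyond Q1 and Q3."""
    q1, _, q3 = statistics.quantiles(areas, n=4, method="inclusive")
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


def keep_mask(area, ecc, lower, upper, max_ecc=0.75):
    """Keep masks that are roughly circular and of plausible size."""
    return ecc <= max_ecc and lower <= area <= upper


# Hypothetical mask areas (px) for one orthomosaic.
lower, upper = area_thresholds([10, 20, 30, 40, 50])
```

For example, an ellipse with axis lengths of 10 and 6 has an eccentricity of 0.8 and would be rejected at the 0.75 threshold, whereas a lower area limit that falls below zero, as in this toy data, simply has no effect.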

As a result of the 50% overlap between tiles, individual plants appeared in multiple tiles, resulting in multiple plant segmentation masks for the same plant. To retain a single mask per plant, an iterative procedure was applied. All masks were first sorted by descending area, and the iterative procedure started with the first of the sorted masks, corresponding to the largest mask. All masks with at least 80% of their area contained within this mask were considered to represent the same plant. Among these masks, the one with the highest quality score was selected as the final segmentation mask for that plant, and all these masks were excluded from future iterations to prevent duplicate detections. The quality score represented the predicted intersection over union (IoU) and was computed by SAM 2.1 during the segmentation process. This process was repeated for each subsequent mask, considering only those that had not previously been excluded. In each iteration, only the remaining, unprocessed masks were compared to the current one to check for overlap. The final selected plant segmentation masks were converted into polygons and saved as a GeoDataFrame. To transform the binary masks into polygons, the contours of the masks were first extracted using OpenCV v4.11.0 [42]. The masks were then converted into polygons and validated using the package Shapely v2.0.7 [43].
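The iterative duplicate removal described above can be sketched as follows. Masks are represented here as dictionaries holding a set of field-level `(row, col)` pixels and the quality score; this data layout and the function name `deduplicate` are assumptions made for the example, not the structures used in this study.

```python
def deduplicate(masks, containment=0.8):
    """Return indices of the masks kept after removing duplicates.

    masks: list of {"pixels": set of (row, col), "score": float}.
    """
    order = sorted(range(len(masks)),
                   key=lambda i: len(masks[i]["pixels"]), reverse=True)
    excluded = set()
    kept = []
    for i in order:
        if i in excluded:
            continue
        reference = masks[i]["pixels"]
        # Remaining masks with >= 80% of their area inside the reference
        # mask (the reference itself always qualifies).
        group = [j for j in order if j not in excluded
                 and len(masks[j]["pixels"] & reference)
                 >= containment * len(masks[j]["pixels"])]
        # Keep the mask with the highest predicted IoU within the group.
        kept.append(max(group, key=lambda j: masks[j]["score"]))
        excluded.update(group)
    return kept


# Two masks of the same plant from overlapping tiles, plus another plant.
plant_a = {(r, c) for r in range(10) for c in range(10)}
plant_a_dup = {(r, c) for r in range(10) for c in range(1, 10)}
plant_b = {(r, c) for r in range(20, 25) for c in range(20, 25)}
masks = [
    {"pixels": plant_a, "score": 0.90},
    {"pixels": plant_a_dup, "score": 0.95},
    {"pixels": plant_b, "score": 0.80},
]
selected = deduplicate(masks)
```

Note that the selected mask is not necessarily the largest one in its group: in the example, the slightly smaller duplicate is retained because its quality score is higher.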

2.7. Diameter Measurement

The crown diameter of each segmented plant was calculated as the diameter of a circle with an area equal to that of its corresponding post-processed mask since the shape of a ball-shaped chrysanthemum closely resembles that of a circle. Additionally, this approach was selected for its reduced sensitivity to shape extremities (e.g., more elongated, oval-shaped chrysanthemums), as opposed to using the diameter of the smallest enclosing circle.
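Written out, the equivalent-circle diameter is d = 2·sqrt(A/π), where A is the mask area. The short sketch below applies this to a pixel count; the function names and the conversion through the GSD are assumptions added for illustration.

```python
import math


def equivalent_diameter(area):
    """Diameter of the circle whose area equals the given mask area."""
    return 2.0 * math.sqrt(area / math.pi)


def crown_diameter_cm(n_pixels, gsd_mm):
    """Crown diameter in cm from a mask pixel count and the GSD in mm/px."""
    area_mm2 = n_pixels * gsd_mm ** 2
    return equivalent_diameter(area_mm2) / 10.0


# Hypothetical mask of 10,000 px at a GSD of 3.84 mm/px.
diameter_cm = crown_diameter_cm(10_000, 3.84)
```

Because the diameter depends only on the area, an elongated mask and a circular mask with the same pixel count yield the same value, which is the reduced sensitivity to shape extremities mentioned above.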

2.8. Analysis of the Data

Segmentation, post-processing, and diameter measurements of the chrysanthemum crowns were performed on each clipped orthomosaic. Since the field was imaged on two separate dates and two distinct ROIs were selected, a total of four analyses were conducted.

2.8.1. Evaluation of the Post-Processed Field-Level Segmentation Mask

To evaluate the quality of the segmentation and post-processing, the recall (Equation (1)), precision (Equation (2)), and F1 score (Equation (3)) were calculated. False negatives were defined as plants without a mask, while false positives corresponded to masks that either did not overlap a chrysanthemum or had a visually estimated IoU below 0.8. A threshold value of 0.8 was selected as masks exceeding this threshold demonstrated a visually acceptable fit, and it was believed that the corresponding diameter measurements would fall within the range of variability expected in practical applications. Since no ground truth masks were available, the IoU was estimated through visual inspection rather than calculated. False positives and false negatives were manually counted by overlaying the post-processed field-level segmentation mask on the corresponding orthomosaic in QGIS (QGIS Desktop 3.40.0, Bratislava) [44]. Each chrysanthemum was evaluated by first verifying the presence of a segmentation mask and, if one was present, the IoU was visually estimated by assessing how much of the plant was not covered by the mask and/or the degree to which the mask extended beyond the boundaries of the plant. The IoU was therefore considered an approximate rather than an absolute value. However, as most plants either lacked a mask entirely or had a mask that closely matched the outline of the plant, evaluation using this method was considered feasible. The number of true positives, corresponding to masks with an IoU of at least 0.8, was computed as the difference between the total number of masks and the number of false positives.

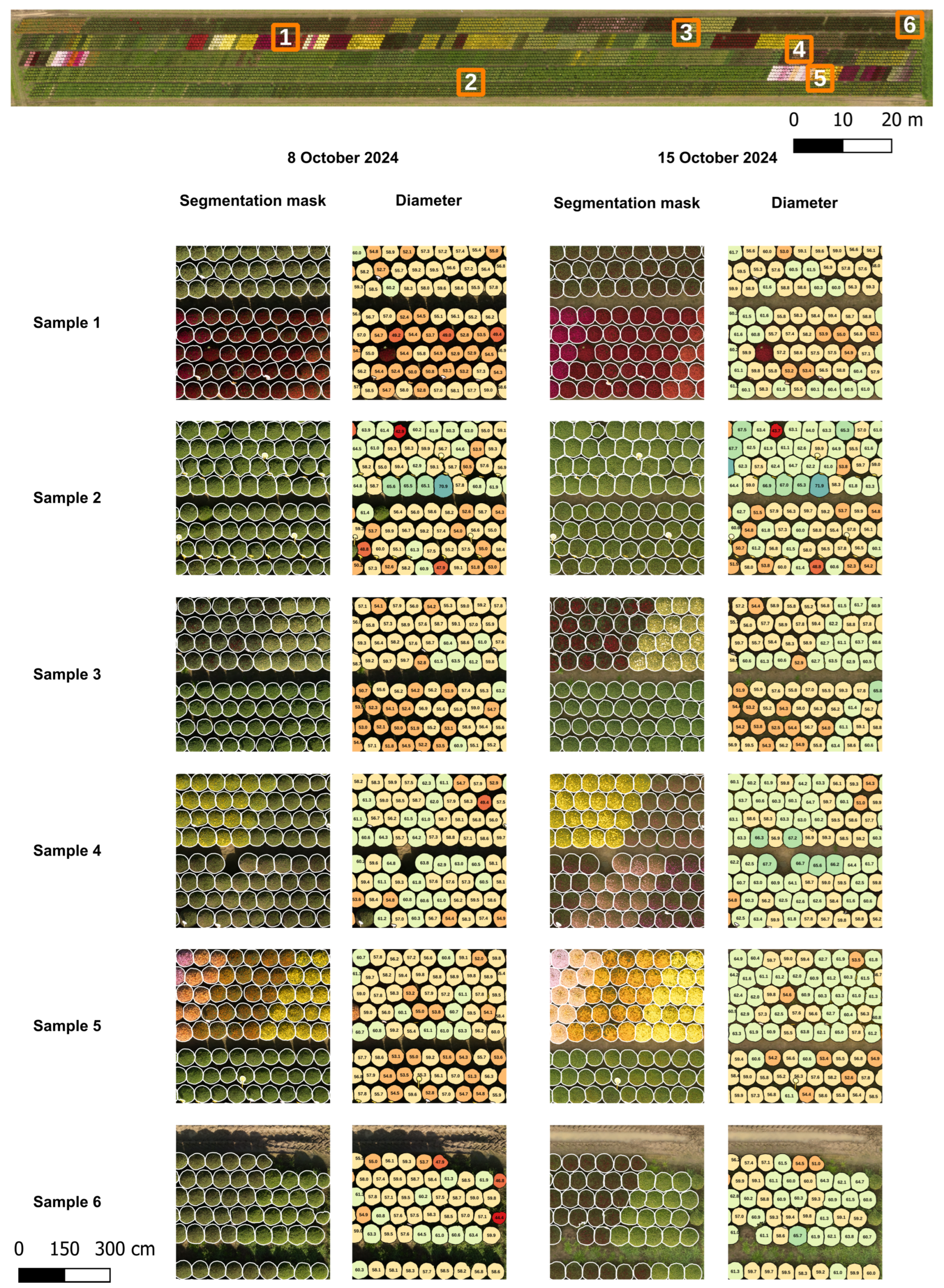

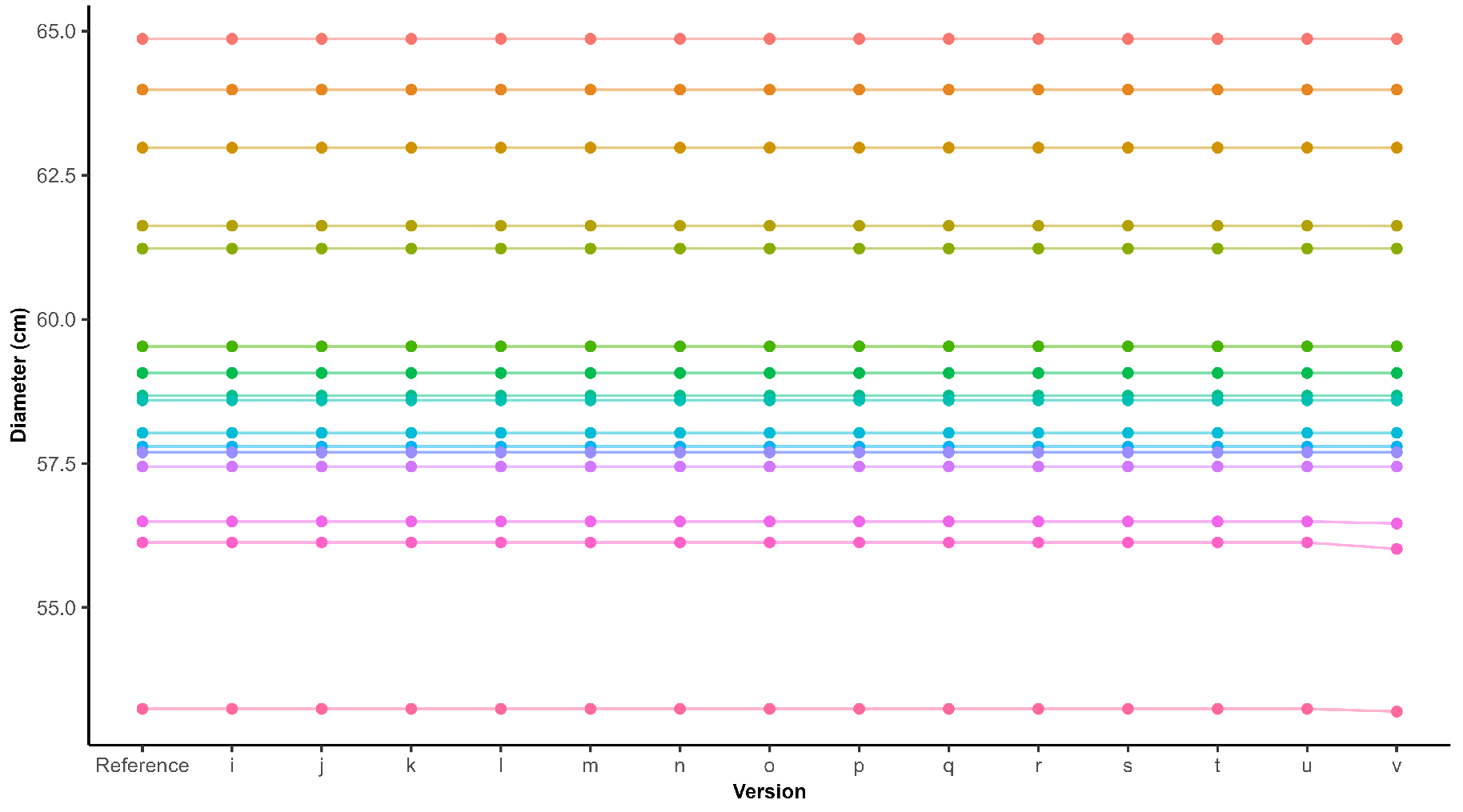
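As a worked illustration of how the three metrics follow from the manual counts, the sketch below assumes the standard definitions of precision, recall, and F1 score and derives the true positives as the total number of masks minus the false positives, as described above. The function name and the example counts are hypothetical.

```python
def detection_metrics(n_masks, false_pos, false_neg):
    """Precision, recall and F1 score from manually counted errors."""
    true_pos = n_masks - false_pos
    precision = true_pos / (true_pos + false_pos)
    recall = true_pos / (true_pos + false_neg)
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical counts: 100 masks, 10 false positives, 5 missed plants.
precision, recall, f1 = detection_metrics(100, 10, 5)
```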

2.8.2. Evaluation of the Diameter Measurement

Field measurements of the chrysanthemum diameter were not provided. Therefore, no ground truth was available to compare the predicted diameters with. To evaluate the accuracy of the proposed pipeline, 102 chrysanthemums distributed across three selected sections (sections 1, 3, and 6 in Figure 7) in ROI 2 were manually measured in QGIS and compared with the computed diameters. The chrysanthemums inside a section were selected using a systematic sampling approach, in which every second plant was measured, to ensure a representative distribution. The manual diameters were defined as the mean of the largest crown diameter and the diameter perpendicular to it, measured directly on the orthomosaic with QGIS. To prevent bias, segmentation masks were not displayed during the measurement process.

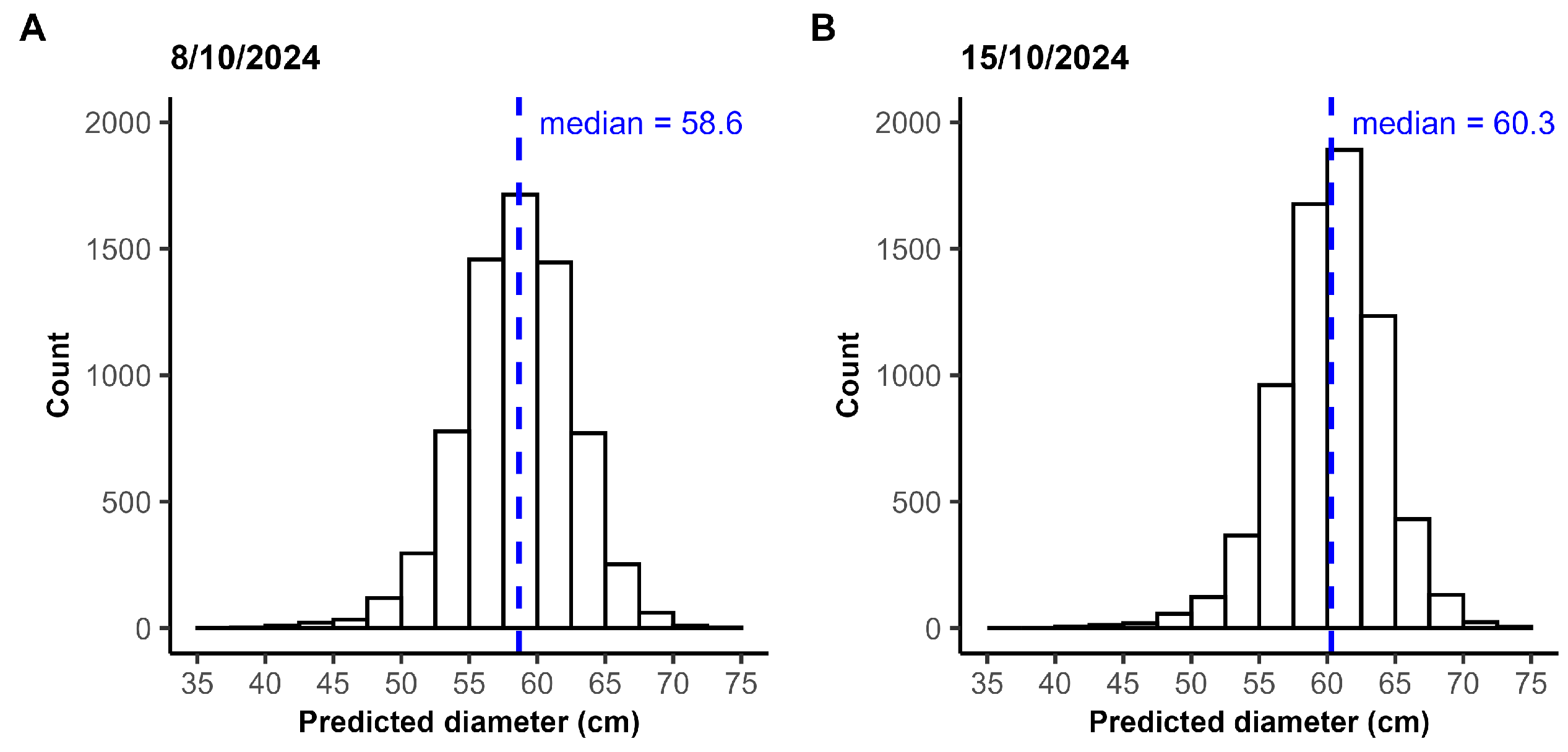

The predicted crown diameters were compared for the same plants across both flight dates. Both ROI 1 and ROI 2 were captured twice, with a one-week interval between acquisitions. Polygons representing the same plant across the two imaging dates were matched based on the value for the IoU, defined as the ratio of the overlapping area to the total combined area of the two polygons. For each polygon, the IoU was calculated with all polygons from the other date, and the polygon with the highest IoU was selected as the match, but only if it exceeded 50%.

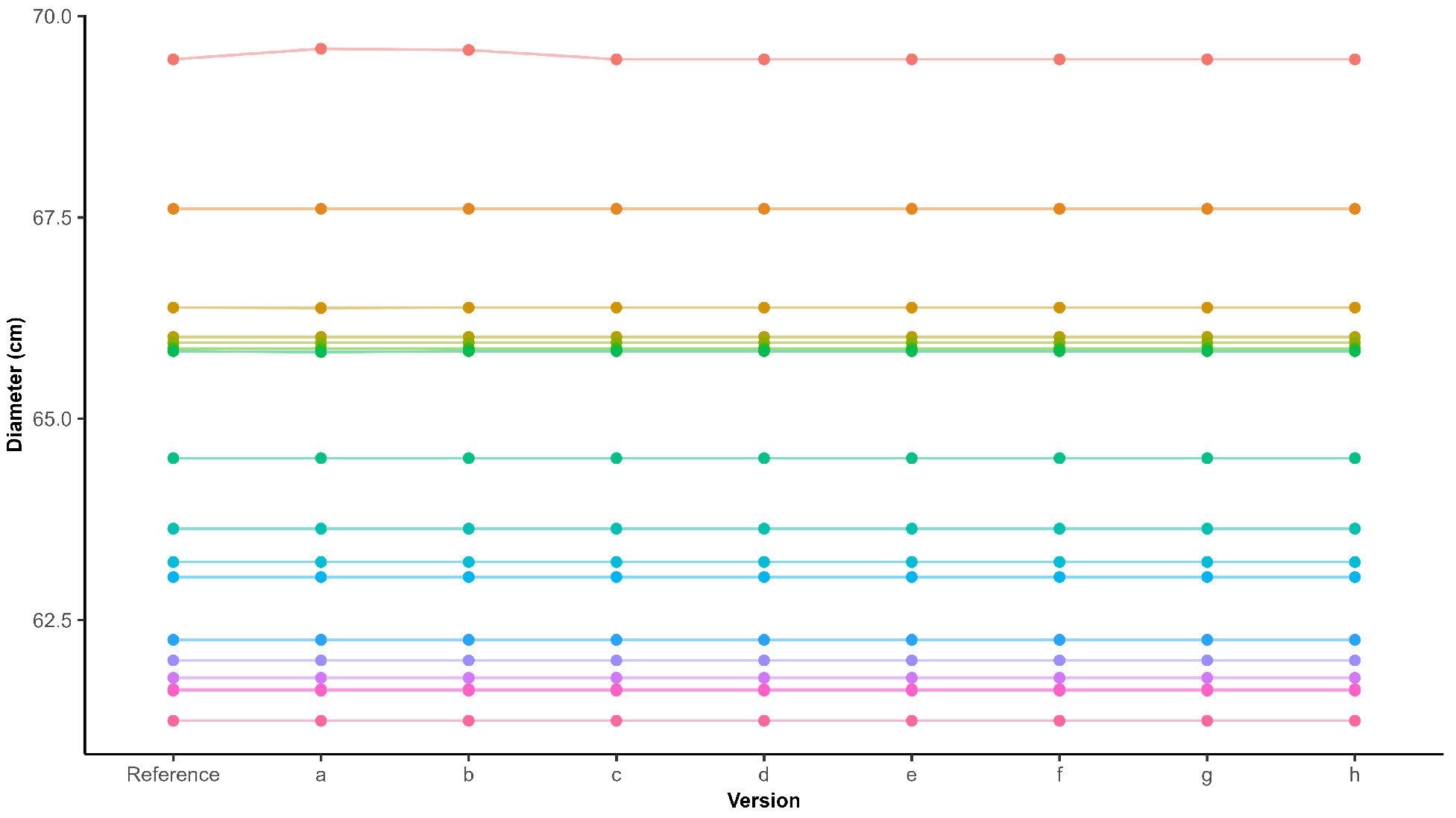
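The matching rule can be illustrated with a simplified sketch in which each plant is represented by an axis-aligned bounding box instead of a polygon. This simplification, the function names, and the example coordinates are assumptions; with polygon geometries, the intersection and union areas would be computed by a geometry library instead.

```python
def box_iou(a, b):
    """IoU of two boxes given as (xmin, ymin, xmax, ymax)."""
    iw = min(a[2], b[2]) - max(a[0], b[0])
    ih = min(a[3], b[3]) - max(a[1], b[1])
    inter = max(iw, 0) * max(ih, 0)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def match_plants(date1, date2, min_iou=0.5):
    """Map each plant index of date1 to its best match in date2.

    A match is only recorded when the highest IoU exceeds min_iou.
    """
    matches = {}
    for i, a in enumerate(date1):
        scores = [box_iou(a, b) for b in date2]
        if not scores:
            continue
        j = max(range(len(scores)), key=scores.__getitem__)
        if scores[j] > min_iou:
            matches[i] = j
    return matches


# Hypothetical plant outlines on the two flight dates.
plants_8_oct = [(0, 0, 10, 10), (20, 20, 30, 30)]
plants_15_oct = [(1, 0, 11, 10), (50, 50, 60, 60)]
matches = match_plants(plants_8_oct, plants_15_oct)
```

The first plant shifts by one unit between dates and is still matched, while the second has no counterpart above the threshold and remains unmatched.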

2.9. Sensitivity Analysis

A sensitivity analysis was performed to analyze the impact of manual settings on segmentation quality and diameter estimation. This included the evaluation of parameters used for point prompt generation (minimum peak height and minimum distance between local maxima; Section 2.4) and for mask post-processing (upper eccentricity threshold and upper and lower area thresholds; Section 2.6). In addition, the influence of the DTM resolution and the number of elevation points used in its calculation was evaluated. Since the resolution of the CHM was determined by the lowest resolution between the DSM and DTM, changes in DTM resolution directly affected the CHM. Finally, different encoders of SAM 2.1 were tested.

To automate the evaluation, a ground truth mask was created for all plants that lay at least partly within the sections visualized in Figure 7. The annotation was conducted in QGIS by manually drawing polygons on top of the orthomosaic of 15 October in freehand mode, and a total of 480 plants were evaluated. Predicted masks were compared against this ground truth to compute true positives (IoU ≥ 0.8), false negatives (no match with IoU ≥ 50%), and false positives (remaining predicted masks). Recall, precision, and F1 score were calculated as defined in Equations (1)–(3). The diameter estimations were evaluated by comparing predicted values to manual measurements (Section 2.8.2). Due to the labor-intensive annotation process, the sensitivity analysis was only performed for data collected on 15 October within ROI 2. This field was chosen for its diversity in plant size, flower color, and the presence of weeds and grass.

2.10. Computational Resources

The experiments were performed on a system running Windows Server 2022 Standard Edition, equipped with 384 GB RAM, two Intel Xeon Gold 6226R CPUs and an NVIDIA RTX A5000 GPU. Wall time and peak RAM usage were measured for processing ROI 1 of the orthomosaic acquired on October 15, covering the entire processing pipeline. The DTM was generated using elevation data from 55 points and covered an area of 2.00 ha, while the resulting CHM spanned 1.84 ha. The clipped orthomosaic was divided into 3913 tiles, of which 879 were segmented with SAM 2.1 using the Hiera-L encoder. The remaining tiles contained only nodata values due to the orientation of the clipped orthomosaic aligned to true north and were not processed. For each of the 8589 point prompts, three masks were predicted. The total processing time was 25 min, with tiling and segmentation accounting for the majority of the time (88%). The remaining time was distributed across the following steps: DTM generation (2%), CHM calculation (5%), point prompt generation (1%), post-processing (3%), and diameter estimation (1%). Peak RAM usage reached 23 GB during segmentation.

4. Discussion

This study demonstrates the applicability of SAM 2.1, combined with point prompts derived from the CHM, for the automatic extraction of ornamental plant traits from UAV-acquired RGB images to support ornamental growers and breeders in their decision-making. A novel aspect compared to similar work is the label-free nature of the proposed pipeline. The approach requires no annotated data and no model training, making it significantly faster and less labor-intensive than traditional supervised segmentation methods. Certain conditions must be met for the pipeline to be applicable: a CHM must be available and the plants should be relatively compact and not form a continuous, overlapping canopy.

The availability of an accurate CHM is necessary to generate point prompts for identifying the objects of interest. In the absence of a CHM, the model cannot target specific objects and instead attempts to segment all visible elements in the image, leading to noise and irrelevant segmentations. On the other hand, when an inaccurate CHM is used, point prompts may be misplaced, resulting in masks that correspond to background rather than the intended plants. The accuracy of the CHM itself depends on the quality of the DSM as well as the DTM. A precise DSM requires UAV flights to be performed with sufficient image overlap [40,45]. While increasing image overlap enhances DSM precision, it also prolongs the flight duration, reducing the area that can be captured in a single flight without requiring a battery change. Nevertheless, the area that can be evaluated with this method is still considerably larger than what could be assessed with the traditional approach. Generating an accurate DTM requires measuring the elevation of evenly distributed points across the ROI with an RTK GPS, which increases both the overall costs and the data collection time. However, as reported in Appendix B.1, no additional elevation measurements beyond the GCPs are required to obtain good results, provided the measured points cover the borders of the ROI and the terrain does not have abrupt slope changes. In addition, this measurement only needs to be performed once.

The method for generating point prompts relies on the detection of local height maxima. Consequently, non-target objects within the field that are equal to or taller than the target plants may also trigger prompt generation and subsequent segmentation. However, masks that deviate in size or shape are filtered out in the post-processing step, meaning that only non-target objects with characteristics similar to the target plants are likely to be retained. A potential solution to eliminate the need for point prompts and, thus, the CHM is to use PerSAM-F, a customized version of SAM that enables personalized object segmentation through one-shot fine-tuning [

46]. Although the fine-tuning is completed within 10 s, it still requires one annotated image. To avoid manual annotation, Osco et al. [

47] combined Grounding DINO with SAM to enable segmentation based on text prompts and compared this approach with the performance of SAM using point and bounding box prompts. The results indicated that text prompts generally resulted in lower segmentation accuracy, with point prompts proving more effective for identifying and segmenting small, individual objects. The performance of segmentation guided by text prompts relies on Grounding DINO’s ability to correctly interpret and localize textual descriptions within the image. The authors noted that this ability can be limited, especially when domain-specific terminology is not well represented in the model’s training data. In contrast, point prompts offer more direct control over the segmentation target, thereby improving reliability. In addition, the combination of Grounding DINO with SAM was applied to automatically generate a mask of the target object, which was then used to fine-tune PerSAM-F. The authors stated that the effectiveness of the approach depends on the accurate identification of the target object, as PerSAM-F cannot be fine-tuned in the absence of an initial segmentation. Whether this method is effective for the segmentation of ornamental plants remains uncertain and could be explored in future research. The CHM can also serve a purpose beyond generating point prompts. For instance, Rayamajhi et al. [

12] used it to estimate the height of individual plants.
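The prompt generation based on local height maxima, discussed at the start of this paragraph, can be sketched as follows. This is a minimal NumPy illustration rather than the implementation used in the pipeline: the function name and the parameters `min_distance` (in pixels) and `min_height` (in CHM units) are assumptions, and a flat plateau would yield several prompts in this simplified version.

```python
import numpy as np

def local_maxima_prompts(chm, min_distance, min_height):
    """Return (row, col) coordinates of local height maxima in a CHM.

    A pixel is kept when it equals the maximum of the square window of
    half-width `min_distance` around it and reaches `min_height`.
    """
    chm = np.asarray(chm, dtype=float)
    d = int(min_distance)
    h, w = chm.shape
    padded = np.pad(chm, d, mode="constant", constant_values=-np.inf)
    window_max = np.full(chm.shape, -np.inf)
    # Running maximum over all shifts inside the (2d+1) x (2d+1) window.
    for dr in range(2 * d + 1):
        for dc in range(2 * d + 1):
            window_max = np.maximum(window_max, padded[dr:dr + h, dc:dc + w])
    is_peak = (chm == window_max) & (chm >= min_height)
    return np.argwhere(is_peak)
```

Because the criterion only looks at height, any object that forms a sufficiently tall local maximum produces a prompt, whether or not it is a target plant. Segmentation models that expect prompts as (x, y) pairs would need the columns swapped, for example with `prompts[:, ::-1]`.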

The captured field included chrysanthemums of various genotypes, flower colors, sizes, and growth stages. An average recall of 96.86% demonstrates that the model is robust to all these variations, making it suitable for automatic diameter measurement across different chrysanthemum genotypes, flower colors, and both the bud and flowering stages. Furthermore, shadows between and on the chrysanthemums did not pose major issues, as evidenced by the similar recall and precision for the flights over ROI 1 on 8 October and 15 October, which were carried out under different lighting conditions. The coefficient of determination (R²) and the MAE indicated a close but not perfect match between the estimated and measured diameters, with the pipeline both underestimating and overestimating the crown diameter. It should be noted that the manual measurements used for comparison do not represent absolute ground truth and likely contain some expert-related measurement error themselves. Even if manual measurements were performed directly in the field rather than on the orthomosaic, some variability would remain, as different experts are unlikely to obtain identical diameter estimates for each plant.
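For reference, the agreement metrics mentioned above can be computed as in the sketch below. It assumes that R² is defined as one minus the ratio of the residual to the total sum of squares, taking the manual measurements as reference; a squared correlation coefficient from a fitted regression line would be a different, equally common convention. The mean signed error is added here only to show that under- and overestimation can cancel out in the bias while still contributing to the MAE.

```python
import numpy as np

def agreement_metrics(measured, estimated):
    """Return (R^2, MAE, mean signed error) of estimates against measurements."""
    measured = np.asarray(measured, dtype=float)
    estimated = np.asarray(estimated, dtype=float)
    errors = estimated - measured
    ss_res = np.sum(errors ** 2)
    ss_tot = np.sum((measured - measured.mean()) ** 2)
    r2 = 1.0 - ss_res / ss_tot
    mae = np.mean(np.abs(errors))
    bias = np.mean(errors)
    return r2, mae, bias
```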

Zhang et al. [

26] estimated chrysanthemum crown diameter using the bounding rectangle of the segmentation mask predicted by Mask R-CNN. Their reported RMSE of 2.29 cm, based on comparisons between estimated diameters and manually measured field values obtained using a measuring tape, was comparable to the values obtained using our approach. However, Mask R-CNN is a supervised learning model and its application to varying field conditions typically requires retraining [

34]. The researchers used a dataset containing 3014 annotated images with 3262 instances. Manually annotating that amount of data requires a substantial amount of time and labor. In contrast, our proposed method operates without the need for manual annotation or model training.
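A bounding-rectangle measurement of the kind described for Zhang et al. can be sketched as follows. The sketch assumes an axis-aligned rectangle and a known ground sampling distance `gsd_cm` (centimeters per pixel); how the two side lengths are combined into a single diameter is not specified here, so both are returned. The RMSE helper shows how such estimates would be compared with tape measurements.

```python
import numpy as np

def bounding_box_extent_cm(mask, gsd_cm):
    """Width and height (cm) of the axis-aligned bounding box of a binary mask."""
    rows, cols = np.nonzero(mask)
    height_cm = (rows.max() - rows.min() + 1) * gsd_cm
    width_cm = (cols.max() - cols.min() + 1) * gsd_cm
    return width_cm, height_cm

def rmse(measured, estimated):
    """Root mean square error between two equally long sequences."""
    diff = np.asarray(estimated, dtype=float) - np.asarray(measured, dtype=float)
    return float(np.sqrt(np.mean(diff ** 2)))
```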

There are still some opportunities to further optimize the pipeline. For example, to avoid reliance on manual height measurements with the RTK-GPS for DTM generation, an alternative approach could be to perform a flight before the chrysanthemums are present in the field, capturing the terrain for the DTM beforehand. This would further reduce the manual labor. In this case, however, an appropriate choice of sensor and flight parameters (flight altitude and overlap) is essential, as the resolution of the DSM and, consequently, the quality of the CHM depend on them. To be more user-friendly, the manual settings for the point prompt generation and post-processing could be specified using meaningful real-world metrics. For instance, instead of expressing the minimum distance between local maxima in pixels, defining this value in meters would be more intuitive, given that a reasonable estimate is often available. However, our findings indicate that these parameters primarily influence the segmentation performance (number of plants with/without a mask), rather than the accuracy of diameter measurements. Moreover, as long as the thresholds are not too strict, the segmentation performance remains satisfactory. Consequently, if sporadic errors are acceptable, the precise tuning of these manual settings becomes less critical. In this case, it is recommended to use low values for the minimum distance and peak height during point prompt generation and high values for maximum area and eccentricity, along with a low value for minimum area during post-processing.
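Expressing these settings in real-world units could look like the sketch below. All names are hypothetical, and `gsd_m` (ground sampling distance in meters per pixel) is assumed to be known from the orthomosaic. Eccentricity is computed here from the second moments of the mask pixels, that is, as the eccentricity of the ellipse with the same second moments; this is a common definition but not necessarily the one used in the pipeline.

```python
import numpy as np

def metres_to_pixels(distance_m, gsd_m):
    """Convert a ground distance to a whole number of pixels (at least 1)."""
    return max(1, int(round(distance_m / gsd_m)))

def eccentricity(mask):
    """Eccentricity of the ellipse with the same second moments as the mask."""
    rows, cols = np.nonzero(mask)
    if rows.size < 2:
        return 0.0
    minor, major = np.linalg.eigvalsh(np.cov(np.vstack([rows, cols])))
    if major <= 0:
        return 0.0
    return float(np.sqrt(max(0.0, 1.0 - minor / major)))

def keep_mask(mask, gsd_m, min_area_m2, max_area_m2, max_eccentricity):
    """Post-processing filter with area thresholds given in square meters."""
    area_m2 = float(np.count_nonzero(mask)) * gsd_m ** 2
    if not (min_area_m2 <= area_m2 <= max_area_m2):
        return False
    return eccentricity(mask) <= max_eccentricity
```

With a ground sampling distance of 1 cm, a minimum plant spacing of 0.30 m would translate to `metres_to_pixels(0.30, 0.01)` pixels, so the same setting could be reused across flights at different altitudes.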

For the segmentation, the largest model, Hiera-L, was selected as the SAM 2.1 image encoder due to its better segmentation accuracy, as reported in

Appendix B.4. This performance advantage comes at the cost of a longer processing time; however, the additional time remains relatively limited (

Table A3). In scenarios where fast processing is required, smaller image encoders (Hiera-S and Hiera-T) may serve as an alternative, but this comes with reduced segmentation performance and lower diameter accuracy.

Although the method has certain limitations, we believe it holds potential as a robust tool for the automatic extraction of various plant traits across different field conditions, with potential applications in both breeding (e.g., selection) and production (e.g., growth monitoring and stress detection) contexts. In future research, the algorithm could be applied to other crops, both in container fields and in the open field, to further test its robustness. Moreover, the predicted diameters could be validated against manual measurements performed by experts. In addition to diameter, other traits such as canopy volume and height could also be extracted. Monitoring these traits over time would allow for evaluation of the algorithm’s potential for supporting growth monitoring in a production environment, as illustrated by Vigneault et al. [

48]. Moreover, when multispectral imagery is available, vegetation indices can be calculated on a per-plant basis to support yield and biomass assessments and the detection of biotic and abiotic stresses, including drought and diseases [

19].