Abstract

This study proposes the PHD-YOLO model to improve the precision of passion fruit detection in complex orchard settings, where branch and leaf occlusion, illumination changes, and fruit overlap are the main challenges. First, a novel partial convolution module (ParConv) is introduced, which uses a channel grouping and independent processing strategy to reduce computational complexity. By combining independent convolution on each channel subgroup with channel concatenation, the module strengthens local feature extraction in dense fruit regions. Second, depthwise separable convolution (DWConv) replaces standard convolution. Decoupling spatial convolution from channel convolution retains multi-scale feature expression while substantially reducing model computation. Third, the HSV Attentional Fusion (HSVAF) module is integrated into the backbone network to fuse HSV color space characteristics with an adaptive attention mechanism, which enhances feature discriminability under dynamic lighting conditions. The experiments used a dataset built from 1212 original images collected at a planting base in Yunnan, China, covering multiple periods and angles. After augmentation, including rotation and noise injection, the dataset contains 2910 samples. On the test set, the improved model achieves a precision of 95.4%, a recall of 85.0%, an mAP@0.5 of 91.5%, and an F1 score of 90.0%, which are 0.7%, 3.5%, 1.3%, and 2.4% higher, respectively, than the baseline model YOLOv11n, with a single-frame inference time of 0.6 milliseconds. The model is robust in scenes with dense fruit, leaf occlusion, and backlighting, which validates the combined benefit of staged convolution optimization and hybrid attention mechanisms. This solution supports automated orchard monitoring while balancing accuracy and real-time performance.

1. Introduction

Advances in precision agriculture have made computer-vision-based fruit detection a pivotal technology for the autonomous operation of smart agricultural equipment. For passion fruit, a high-value tropical crop, automated detection is an important means of reducing harvesting costs and improving supply chain efficiency [1]. However, because of its vine-like growth pattern and dense clustering, passion fruit in natural environments is subject to leaf and branch occlusion, lighting variation, and fruit overlap. These conditions cause feature confusion and limit the generalization ability of existing detection models [2].

The existing computer vision-based precision agriculture detection paradigm is predicated on four aspects: (1) the optimization of the attention mechanism, (2) the innovation of feature fusion strategy, (3) the development of lightweight and embedded deployment, and (4) the optimization of all-weather detection. Concerning the optimization of attention mechanism, researchers have introduced channel attention [3,4,5], spatial attention [6,7], and their hybrid variants (e.g., CBAM [8], CA [9], SimAM [10], etc.) to enhance the model’s ability to focus on fruit features [11]. Ling et al. [12] introduced DyHead detection heads and lightweight modules into the EDYOLOv8s model, achieving a substantial enhancement in the accuracy of passion fruit ripeness recognition. Chen et al. [13] embedded the SimAM module into the ELAN layer of the YOLOv7-tiny network, thereby demonstrating its ability to enhance feature extraction capabilities without increasing the number of parameters. Liu et al. [14] proposed the V-YOLO model, which attains efficient performance in guava detection by improving the backbone network and attention mechanism. However, extant studies have also found that standard convolutions are prone to feature confusion in fruit occlusion scenarios [15], while conventional attention mechanisms suffer from overly smooth channel features under dynamic lighting conditions [16]. In terms of feature fusion strategies, researchers have proposed various innovative methods to address the challenges of dense object detection. Liu et al. [17] introduced the CBAM module into YOLOv5, thereby enhancing the localization accuracy of small objects. Ma et al. [18] designed a grape cluster detection network that significantly reduces feature redundancy through a multiscale feature extraction module (MSFEM) and a receptive field expansion module (RFAM). These methods have addressed the detection challenges in complex orchard environments to varying degrees. 
In the context of lightweight and embedded deployment, significant progress has been made in meeting the real-time and deployment cost requirements of orchard scenarios, and researchers have developed various lightweight models. For instance, Li et al. [19] improved the YOLOv5 model to achieve lightweight detection of passion fruit pests. Similarly, Sun et al. [20] proposed a model that uses a GhostNet backbone network combined with knowledge distillation to enhance the performance of lightweight networks. Yang et al. [21] designed the EGSS network in 2024, which employs an MCAttention module and a SlimPAN structure to achieve efficient apple detection. However, lightweight design often comes at the expense of detection accuracy in complex scenarios [22], which calls for careful consideration in practical applications.

Regarding all-weather detection, researchers have proposed a range of methods to mitigate the impact of low-light conditions and adverse weather on detection systems. Sasagawa et al. [23] improved the mAP of the YOLO model under low-light conditions by 111% through Gamma correction; Sun et al. [24] developed the YOLO-P model, which exhibited good stability in day-night pear detection. Despite these efforts, existing methods still struggle to balance “lightweight, accuracy, and robustness” [25], and Li et al. [26] found that lightweight models suffer significant accuracy declines in extreme environments.

In summary, although the field of computer vision has already achieved a considerable degree of maturity in the domain of precision agriculture, the automated detection of passion fruit in complex orchard scenes remains a challenging task. This is due to the presence of factors such as leaf and branch occlusion, dynamic changes in lighting, and dense fruit overlap, which collectively impose significant limitations on the practical application of automated harvesting technology. The presence of four technical impediments characterizes the present state of the discipline. First, standard convolution structures exhibit inadequate feature expression capabilities and redundant calculations in overlapping scenes. Second, conventional attention mechanisms struggle to balance the robustness of multiscale interference with the extended receptive field modeling of occluded targets. Third, there is an inherent contradiction between feature enhancement and real-time performance. Fourth, the stability of all-weather detection has not been systematically addressed. To remedy these issues, this paper proposes the PHD-YOLO model, which achieves a synergistic optimization of accuracy and efficiency through systematic innovation:

- (1) Designing a novel partial convolution module (ParConv) that employs a channel grouping strategy for independent processing. By decoupling the feature learning process, this approach effectively reduces feature confusion in fruit-dense regions while preserving multi-scale semantic information.

- (2) Developing an HSV Attentional Fusion hybrid attention mechanism that integrates hue, saturation, and brightness features from the HSV color space. This is combined with Halo Attention’s extended receptive field, dynamic channel weighting reconstruction, and spatial pyramid multi-scale fusion to enhance feature discriminability under complex lighting conditions.

- (3) Reconstructing the backbone network using depthwise separable convolutions to significantly improve computational efficiency while maintaining feature expression capabilities, resolving the trade-off between real-time performance and accuracy in mobile device deployments.

2. Materials and Methods

2.1. Datasets

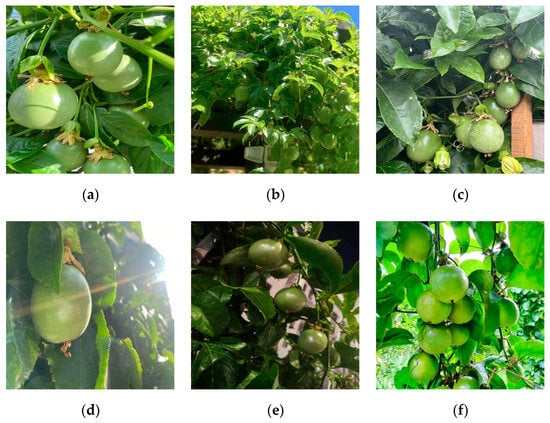

This study was conducted at a passion fruit cultivation base in Chenggong District, Kunming City, Yunnan Province, China. At this location, image data were collected using digital equipment with a resolution of 3072 × 4096 pixels, resulting in JPEG-format images. Data collection spanned three typical periods under natural lighting conditions: early morning, noon, and evening. Four shooting angles were utilized: front light, backlight, front view, and rear view. The study systematically documented a range of morphological features, including single-fruit close-ups, clusters of fruits, leaf and branch obstruction, and fruit overlap. This comprehensive documentation resulted in a total of 1212 raw images. The collected images are presented in Figure 1.

Figure 1.

Passion fruit images. (a) Front-lit; (b) shaded by branches and leaves; (c) overlapping fruits; (d) single-fruit backlighting; (e) nighttime; (f) multi-fruit aggregation.

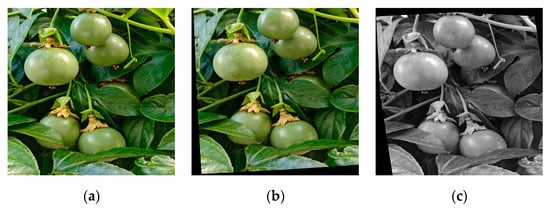

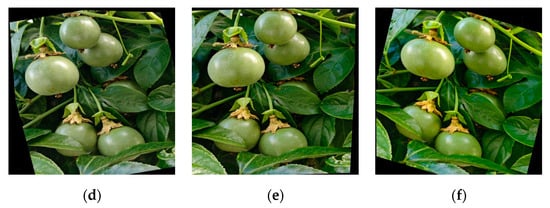

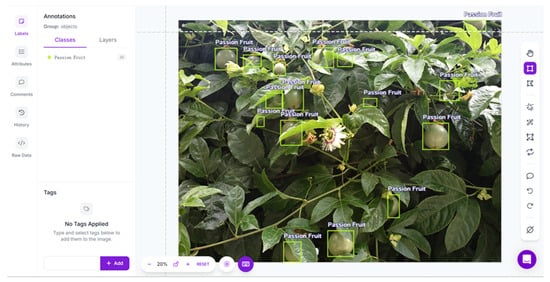

To enhance data diversity, the original data underwent multi-dimensional augmentation, including rotation, shear, noise addition, grayscale conversion, and saturation adjustment. Specifically, the rotation angle ranged from −15° to +15°, the horizontal and vertical shear ranged within ±10°, and 15% of the images were converted to grayscale. In addition, saturation was adjusted within −25% to +25%, noise was applied to 0.1% of pixels, and brightness was randomly adjusted by ±20%. Images were also subjected to horizontal flipping and random cropping. A selection of the augmented images is shown in Figure 2. After augmentation, a total of 2910 passion fruit images were obtained. These images were annotated with target classes and bounding boxes using Roboflow, an online annotation platform, which produced XML annotation files, as illustrated in Figure 3. The final dataset was randomly partitioned into training, validation, and test sets in a 7:2:1 ratio. The specific data division is given in Table 1.
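The random 7:2:1 partition described above can be sketched as follows. This is a minimal illustration, not the authors' script: the file names are hypothetical placeholders, the seed of 42 is borrowed from the training setup as an assumption, and the rule of assigning the rounding remainder to the test set is a choice made here.

```python
import random


def split_dataset(items, ratios=(7, 2, 1), seed=42):
    """Shuffle items reproducibly and split them into train/val/test by the given ratios."""
    items = list(items)
    random.Random(seed).shuffle(items)
    total = sum(ratios)
    n_train = len(items) * ratios[0] // total
    n_val = len(items) * ratios[1] // total
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # the remainder goes to the test set
    return train, val, test


# Hypothetical file names standing in for the 2910 augmented images.
names = [f"img_{i:04d}.jpg" for i in range(2910)]
train, val, test = split_dataset(names)
print(len(train), len(val), len(test))
```

With 2910 items this rule gives subsets of 2037, 582, and 291 images; the counts in the authors' Table 1 may differ if a different rounding rule was used.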

Figure 2.

Data enhancement. (a) Original; (b) rotation; (c) grayscale; (d) shear; (e) noise; (f) saturation.

Figure 3.

Data annotation.

Table 1.

Division of the passion fruit dataset.

2.2. PHD-YOLO Framework

2.2.1. Network Architecture Design

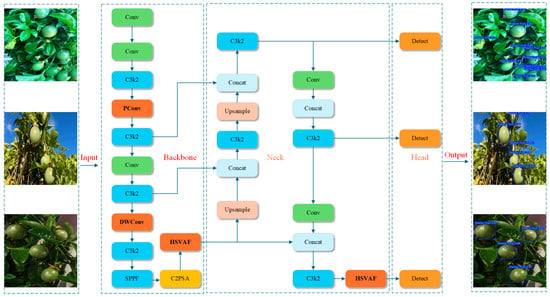

PHD-YOLO is built on the single-stage detection framework YOLOv11 [27], and its overall architecture is illustrated in Figure 4. The model consists of three components: a backbone network, a neck network, and a head network. Compared with its predecessors in the YOLO series, YOLOv11 introduces advances in architecture and training methods that further the development of real-time object detection. Notable innovations include C3k2 (Cross Stage Partial with kernel size 2) blocks, SPPF (Spatial Pyramid Pooling-Fast) modules, and C2PSA (Convolutional block with Parallel Spatial Attention) components, which together improve the model’s feature extraction capacity and computational efficiency.

Figure 4.

PHD-YOLO model architecture diagram.

The backbone network uses multi-level convolutions (Conv) as its basic component. A novel partial convolution module (ParConv) replaces specific conventional convolution layers, reducing computational redundancy through channel grouping and independent processing while preserving multi-scale feature representation. The feature extraction stage alternately stacks Conv and C3k2 modules to strengthen the joint representation of local texture details and global contextual information. The C3k2 module in YOLOv11 uses smaller convolution kernels in place of larger ones, which improves speed and reduces computational complexity while maintaining high accuracy.

The neck network adopts a multi-branch feature fusion design that enables cross-scale feature interaction through upsampling (Upsample) and concatenation (Concat) operations, and it incorporates the HSV Attentional Fusion hybrid attention mechanism. This module converts the input to the HSV color space and, on the hue, saturation, and value channels, applies Halo Attention to expand the receptive field, dynamic channel attention weighting, and a spatial pyramid multi-scale fusion strategy. This significantly improves feature discrimination for occluded targets and complex lighting scenes. The neck also uses depthwise separable convolution (DWConv) to improve computing efficiency and remove superfluous parameters.

The head network integrates a lightweight detection head (Detect), which combines the multi-scale pooling characteristics of the SPPF module with the cross-layer feature aggregation capabilities of the C2PSA module to optimize the localization accuracy of dense fruits. Throughout the network, C3k2 modules are stacked hierarchically and combined with feature pyramid structures to achieve multi-granularity information fusion. The data pass through feature extraction in the backbone network, cross-scale enhancement in the neck network, and localization regression in the head network, which produces the detection results as bounding boxes. The C2PSA module of YOLOv11 introduces a spatial attention mechanism, thereby enhancing the model’s robustness in complex orchard environments.

Figure 4 shows the complete pipeline from image input to detection output, including the deployment locations and data flow of the key modules HSVAF, ParConv, and DWConv. This layout underpins the model’s effectiveness and robustness in complex orchard environments.

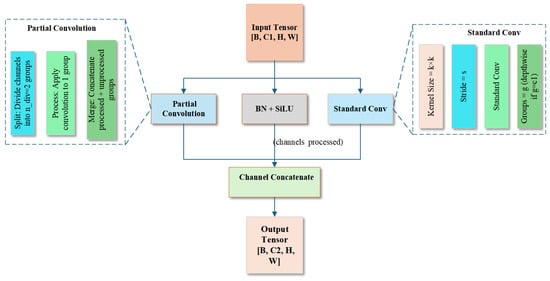

2.2.2. Novel Partial Convolution

This study proposes a convolutional layer, ParConv, to improve the computational efficiency of convolution. Its structure is shown in Figure 5. ParConv combines the benefits of partial convolution and standard convolution by splitting the input feature map channels into multiple subgroups, convolving each subgroup independently, and concatenating the results to form the output feature map, which lowers the computational cost. Specifically, the input feature map has size [B, C1, H, W], and each subgroup has size [B, n, H, W], where n is the number of channels in each subgroup. The convolution computation for each subgroup can be expressed as:
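A standard discrete convolution consistent with the symbols x, w, and K used in this section is sketched below. It is an assumed form rather than a confirmed reproduction of the authors' expression: the output symbol y, the subgroup index g, and the kernel offsets (u, v) are notation introduced here, and the sum over the channels inside a subgroup is omitted for brevity.

$$
y_g(i, j) = \sum_{u=0}^{K-1} \sum_{v=0}^{K-1} w_g(u, v)\, x_g(i + u,\; j + v)
$$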

Figure 5.

ParConv structure diagram.

Here, x(i, j) denotes the pixel value in the input feature map, w(i, j) the weight of the convolution kernel, and K × K the size of the convolution kernel. After convolution, the subgroup results are merged by channel concatenation to form the final output feature map of size [B, C2, H, W], where C2 = C1 is the number of output channels. Because each kernel operates on only one subgroup of channels, ParConv substantially reduces the computational burden and improves the model’s real-time performance, particularly when the volume of input data is large.
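The saving from processing channel subgroups independently can be illustrated with a simple weight count. The sketch below assumes that ParConv behaves like a grouped convolution with equal-sized subgroups, which the description suggests but does not confirm. The function names, the channel width of 64, and the choice of 4 subgroups are illustrative assumptions.

```python
def conv_params(c_in, c_out, k, groups=1):
    """Weight count of a K x K convolution whose channels are split into independent subgroups."""
    if c_in % groups or c_out % groups:
        raise ValueError("channel counts must be divisible by groups")
    # Each subgroup maps c_in/groups input channels to c_out/groups output channels.
    per_group = k * k * (c_in // groups) * (c_out // groups)
    return per_group * groups


def split_channels(c, groups):
    """Channel index ranges handled by each subgroup, in concatenation order."""
    size = c // groups
    return [range(g * size, (g + 1) * size) for g in range(groups)]


c1 = c2 = 64  # C2 = C1, as stated in the text
standard = conv_params(c1, c2, k=3)
grouped = conv_params(c1, c2, k=3, groups=4)  # 4 subgroups is an illustrative choice
print(standard, grouped, standard / grouped)
print([(r.start, r.stop) for r in split_channels(c1, 4)])
```

Under these assumptions the weight count drops by a factor equal to the number of subgroups, because each kernel sees only the channels of its own subgroup.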

In the passion fruit detection task, ParConv performs well. It extracts key features effectively in scenes with fruit occlusion, lighting changes, and overlapping fruits. When fruits are occluded, the partial convolutions process each channel group independently, so the model can still identify the key information of a fruit even if part of it is hidden. When multiple fruits overlap, the grouped convolutions in ParConv reduce interference between different fruits and thereby improve detection accuracy. Under backlighting or low illumination, ParConv speeds up the processing of low-brightness images by reducing the computational cost of each convolution operation, which preserves the model’s capacity for real-time detection.

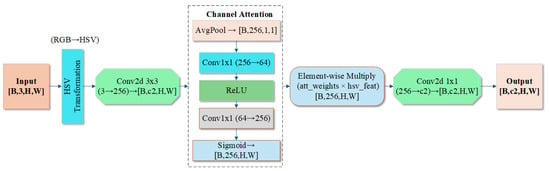

2.2.3. HSV Attentional Fusion

To address the challenges encountered in passion fruit detection, such as the significant influence of light intensity on fruit color, the large color gamut due to maturity differences, and the blurred local features caused by branch and leaf obstruction, this study proposes the HSV Attentional Fusion (HSVAF) feature enhancement module. This module integrates the characteristics of the HSV color space with an adaptive attention mechanism to construct a multi-dimensional feature enhancement channel. First, the input RGB image is converted to the HSV color space, and independent features are extracted from the three channels: hue (H), saturation (S), and value (V). The hue channel utilizes Halo Attention to broaden the receptive field and capture the periodic patterns inherent in fruit skin texture. The saturation channel utilizes Dynamic Channel Attention (DCA) to weigh features in high-saturation fruit regions and suppress interference from low-saturation background vegetation. The value channel utilizes Spatial Pyramid Attention (SPA) to fuse brightness features across different lighting conditions through multi-scale dilated convolutions. Following parallel processing, the three-channel features are fused through a learnable spatial-channel hybrid attention-gating mechanism.

As illustrated in Figure 6, the module employs a dual-path feature interaction architecture. The primary path maps RGB features to the HSV space through the HSV Transform layer, while the secondary path keeps the original spatial features to preserve geometric details. The HSV conversion is implemented through channel reorganization by permuting the dimensions of the tensor.
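The RGB-to-HSV mapping referred to above can be sketched for a single pixel using the standard conversion. This is not the authors' HSV Transform layer: the function name is hypothetical, the placement of the epsilon term is an assumption (only its value is taken from the paper), and the modulo on the red branch is one simple way to keep hue on its periodic range.

```python
EPS = 1e-7  # epsilon value quoted in the paper; where exactly it is applied is assumed here


def rgb_to_hsv(r, g, b, eps=EPS):
    """Convert one RGB pixel (components in [0, 1]) to (h, s, v), each in [0, 1]."""
    v = max(r, g, b)
    delta = v - min(r, g, b)
    s = delta / (v + eps)  # eps avoids 0/0 for black pixels
    if delta == 0.0:
        h = 0.0  # hue is undefined for grey pixels; 0 is used by convention
    elif v == r:
        h = ((g - b) / (delta + eps)) % 6.0  # wrap negative values onto the hue circle
    elif v == g:
        h = (b - r) / (delta + eps) + 2.0
    else:
        h = (r - g) / (delta + eps) + 4.0
    return h / 6.0, s, v


# Example pixels: an orange tone, a green tone, and black.
for pixel in [(1.0, 0.5, 0.0), (0.2, 0.6, 0.4), (0.0, 0.0, 0.0)]:
    print(pixel, rgb_to_hsv(*pixel))
```

A differentiable layer would apply the same arithmetic to whole tensors; the scalar form is used here only to keep the example free of framework dependencies.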

Figure 6.

HSV Attentional Fusion module architecture diagram.

HSV color decoupling: The RGB feature tensor is mapped to the HSV space through a differentiable HSV transformation layer. The transformation applies a hue period correction mask, and ϵ = 1 × 10⁻⁷ is added to avoid division by zero. Gradient truncation (detach) keeps backpropagation stable by avoiding the gradient oscillations caused by nonlinear transformations in the color space.

Cross-domain attention fusion: A dual-branch interaction network is constructed. The HSV branch captures the long-range dependencies of fruit peel textures through dilated convolution, and the spatial branch uses dynamic channel attention to reinforce key areas. A gated recurrent unit (GRU) then performs spatiotemporal feature alignment, with its hidden state refined over three iterative time steps.

Multi-scale feature aggregation: A spatial pyramid structure fuses the feature responses under different receptive fields, with a learnable weight for each scale applied through a channel-wise multiply-accumulate operation (denoted ⊗). Finally, a 1 × 1 convolution reduces the channel dimensionality.

This module offers substantial advantages in areas of dense passion fruit growth. The HSV color stream encodes the V channel for light invariance, which improves detection robustness in backlit scenes. The dynamic channel attention in the spatial branch reduces the false negative rate in scenes with leaf and branch occlusion. The GRU gating mechanism iteratively refines features along the temporal dimension to disentangle the overlapping features of fruits, which reduces the false positive rate.

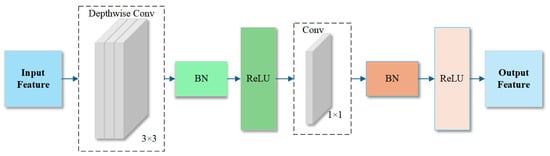

2.2.4. Depthwise Separable Convolution

In this study, the conventional convolution in the backbone is replaced by depthwise separable convolution (DWConv), which considerably improves the efficiency of the passion fruit detection task by decomposing standard convolution into two phases: depthwise convolution and pointwise convolution [28]. Its structure is illustrated in Figure 7.

Figure 7.

Depthwise separable convolution architecture diagram.

In standard convolution, assume that the input feature map has size $H \times W \times C_{in}$, the output feature map has $C_{out}$ channels, and the convolution kernel size is K × K. The number of parameters can be expressed as:

$$P_{std} = K \times K \times C_{in} \times C_{out}$$

In contrast, the parameters of a depthwise separable convolution consist of two components, the depthwise convolution and the pointwise convolution:

$$P_{dw} = K \times K \times C_{in}, \qquad P_{pw} = C_{in} \times C_{out}$$

Accordingly, the total number of parameters of the depthwise separable convolution is as follows:

$$P_{dws} = K \times K \times C_{in} + C_{in} \times C_{out}$$

In terms of computation, with an input feature map of size $H \times W \times C_{in}$ and an output feature map of size $H \times W \times C_{out}$, the total computation of the depthwise separable convolution is:

$$F_{dws} = H \times W \times C_{in} \times (K \times K + C_{out})$$

In practical applications, depthwise separable convolution helps address fruit occlusion and overlap and maintains stable detection performance under lighting variations and background interference. Its lower computational requirements make the model better suited to deployment on mobile devices or embedded systems, achieving a good balance between detection accuracy and real-time performance.
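The parameter and computation savings of depthwise separable convolution discussed in this section can be checked numerically. The sketch below uses the standard counting formulas with bias terms ignored. The function names and the layer size (64 input channels, 128 output channels, a 3 × 3 kernel, an 80 × 80 output map) are illustrative assumptions and are not taken from the PHD-YOLO configuration.

```python
def standard_conv_cost(c_in, c_out, k, h, w):
    """Parameters and multiply-accumulates of a standard K x K convolution (bias ignored)."""
    params = k * k * c_in * c_out
    return params, params * h * w


def dw_separable_cost(c_in, c_out, k, h, w):
    """Parameters and multiply-accumulates of depthwise plus pointwise convolution (bias ignored)."""
    depthwise = k * k * c_in  # one K x K filter per input channel
    pointwise = c_in * c_out  # 1 x 1 convolution that mixes channels
    params = depthwise + pointwise
    return params, params * h * w


# Illustrative layer size; h and w are the output feature-map height and width.
std_p, std_m = standard_conv_cost(64, 128, 3, 80, 80)
dw_p, dw_m = dw_separable_cost(64, 128, 3, 80, 80)
ratio = dw_p / std_p  # equals 1/c_out + 1/k**2
print(std_p, dw_p, round(ratio, 4))
```

For this layer the separable form needs roughly 12% of the parameters and multiply-accumulates of the standard convolution, matching the closed-form ratio 1/C_out + 1/K².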

3. Results

3.1. Experimental Environment

The experiments were run on the Windows 11 Professional operating system. The hardware platform was a Lenovo Legion Y9000P 2024 notebook equipped with an Intel® Core™ i9-14900HX processor (24 cores, 32 threads, maximum turbo frequency 5.8 GHz), an NVIDIA GeForce RTX 4060 Laptop GPU (8 GB GDDR6 video memory with DLSS 3 support), 32 GB of DDR5-5600 MHz RAM, and a 1 TB PCIe 4.0 NVMe SSD. The software was developed in PyCharm Professional 2023.2, using PyTorch 3.8 with GPU acceleration via CUDA 11.1 and cuDNN 8.0.5. The Python version was 3.12.7, and all dependencies were managed in a conda virtual environment to ensure the reproducibility of the experimental environment.

The experiments utilize the Ultralytics YOLO framework to implement PHD-YOLO and comparison models (e.g., YOLOv5, YOLOv8). The training was executed on a single NVIDIA GeForce RTX 4060 graphics card with mixed precision training (AMP) enabled to optimize graphics memory. The training was set to 150 epochs with a batch size of 4 and an input image size of 640 × 640, utilizing the AdamW optimizer (initial learning rate of 0.001 and weight decay of 0.05). The dataset is loaded from a local SSD, and multi-threaded pre-reading is implemented via PyTorch DataLoader, which is combined with a customized data enhancement pipeline to ensure training efficiency and result reliability. All experiments were performed under the same random seed (seed = 42) setting to ensure reproducibility.
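The training settings listed above map onto Ultralytics training arguments roughly as follows. This is a sketch under assumptions rather than the authors' training script: the model and dataset file names in the example call are hypothetical, and settings not stated in the text are left at the framework defaults.

```python
# Hyperparameters quoted in the text, written with Ultralytics training argument names.
TRAIN_ARGS = {
    "epochs": 150,
    "batch": 4,
    "imgsz": 640,
    "optimizer": "AdamW",
    "lr0": 0.001,          # initial learning rate
    "weight_decay": 0.05,
    "amp": True,           # mixed precision training
    "seed": 42,
}


def train(model_cfg, data_yaml):
    """Train a model with the settings above; both arguments are user-supplied paths."""
    # Imported lazily so the settings can be inspected without the package installed.
    from ultralytics import YOLO

    model = YOLO(model_cfg)
    return model.train(data=data_yaml, **TRAIN_ARGS)


# Example call with hypothetical file names:
# train("phd-yolo.yaml", "passion_fruit.yaml")
print(TRAIN_ARGS)
```

Keeping the settings in one dictionary makes it straightforward to reuse identical hyperparameters for every comparison model.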

3.2. Evaluation Indicators

Five metrics are used to evaluate the passion fruit detection task: precision, recall, F1-score, mean average precision (mAP), and inference time. Precision is the proportion of correctly detected positive samples among all samples predicted to be positive:

$$\mathrm{Precision} = \frac{TP}{TP + FP}$$

where TP denotes true positives and FP denotes false positives. Recall is the proportion of all actual positive samples that are correctly detected:

$$\mathrm{Recall} = \frac{TP}{TP + FN}$$

where FN denotes false negatives.

The F1-score is the harmonic mean of precision and recall and balances the two:

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$$

A higher F1-score indicates better combined precision and recall. Mean average precision (mAP) is a widely used metric in multi-category detection tasks. It evaluates the model by averaging, over all categories, the average precision computed across varying recall levels:

$$\mathrm{mAP} = \frac{1}{N} \sum_{i=1}^{N} AP_i$$

where N denotes the total number of categories and $AP_i$ represents the average precision of category i. Inference time is the duration the model needs to process an input and generate predictions, typically measured in milliseconds. It is critical for real-time detection tasks, since a shorter inference time means higher processing throughput. Together, these metrics provide comprehensive criteria for evaluating passion fruit detection models in complex environments and for checking that a model balances accuracy, robustness, and real-time performance.
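The evaluation metrics defined in this section can be computed with a few small functions, using the standard definitions of precision, recall, F1-score, and mAP. The detection counts in the example are hypothetical values chosen for illustration, not results from the paper.

```python
def precision(tp, fp):
    """Fraction of predicted positives that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Fraction of actual positives that are detected."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def f1_score(p, r):
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if (p + r) else 0.0


def mean_average_precision(ap_per_class):
    """Mean of the per-category average precision values."""
    return sum(ap_per_class) / len(ap_per_class)


# Hypothetical detection counts for illustration.
p = precision(tp=85, fp=5)
r = recall(tp=85, fn=15)
print(round(p, 3), round(r, 3), round(f1_score(p, r), 3))

# F1-score implied by the reported precision (95.4%) and recall (85.0%).
print(round(f1_score(0.954, 0.850), 3))
```

Applying the F1 formula to the reported precision and recall gives about 0.899, which is close to the reported F1 score of 90.0%; a small gap of this kind can arise when each metric is rounded or averaged over runs separately.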

3.3. Ablation Experiment Results and Analysis

Reproducibility was ensured by fixing the random seed, and all results were obtained by averaging three independent replicate experiments. The ablation experiments systematically evaluated the impact of each enhanced module and of their combinations on model performance. Specifically, the independent contributions of the ParConv, HSV Attentional Fusion, and depthwise separable convolution (DWConv) modules were evaluated when each was used alone and were compared with the complete model (i.e., PHD-YOLO), which includes all modules. The results of the ablation experiment are shown in Table 2.

Table 2.

Results of the ablation experiment.

The ParConv module demonstrated a noteworthy performance in terms of individual module operation, achieving an average detection accuracy of 94.3% and a recall rate of 84.1% on the test set. This performance is further evidenced by the module’s mAP@0.5, which reached 91.2%. A comparison of the ParConv module with the baseline model reveals a substantial enhancement in the module’s capacity for feature extraction in dense fruit regions. This enhancement is primarily attributable to the module’s effective reduction of feature confusion through channel grouping strategies. However, the module’s inherent complexity led to an increase in computational complexity. Following the introduction of the HSV Attentional Fusion module, the model achieved an average accuracy of 94.0% and a recall rate of 85.1% on the test set, demonstrating optimal performance under conditions of occlusion and complex lighting. This module enhances hue sensitivity and lighting robustness by decoupling the HSV space and fusing multimodal features, but the inference time increases to 0.8 milliseconds per frame. Finally, the DWConv module significantly improves the model’s computational efficiency, with an average single-frame inference time of 0.6 milliseconds. However, the recall rate is relatively low, especially in severely occluded scenes, suggesting that lightweight modifications may compromise the expressiveness of certain features.

Regarding module combinations, using ParConv together with DWConv substantially improves computational efficiency while maintaining an average accuracy of 94.3%. However, the improvement in recall is small, suggesting that lightweight optimization may weaken the detection of occluded targets. The combination of HSVAF and DWConv lacks a local feature decoupling mechanism, and the mean recall declines to 83.4%, which supports the role of ParConv in preserving features in fruit-dense regions. The combination of ParConv and HSVAF performs best in occlusion scenarios and shows substantial robustness, but its lower average accuracy indicates that the parameters need more careful balancing. Finally, the complete PHD-YOLO model, which integrates ParConv, HSVAF, and DWConv, attains a precision of 95.4% and a recall of 85.0% on the test set, with an mAP@0.5 of 91.5% and an F1 score of 90.0%, while the inference time remains at 0.6 milliseconds per frame. This combination improves both accuracy and computational efficiency and achieves the best balance among the configurations tested.

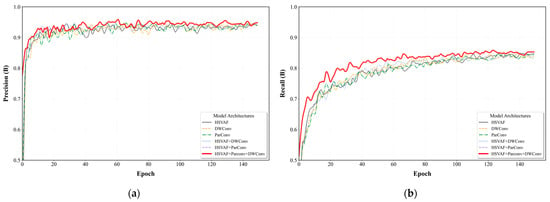

The comparison of accuracy and recall in the ablation experiments, shown in Figure 8, further supports these conclusions. Figure 8a shows how the accuracy of each module combination changes over the training epochs, and Figure 8b shows the corresponding trend in recall. As shown in Figure 8, PHD-YOLO performs best among all module combinations, with the highest accuracy and recall. This finding suggests that combining the advantages of all three modules yields the most effective detection results.

Figure 8.

Comparison of Precision and Recall metrics for module combinations in ablation experiments. (a) Comparison of Precision metrics for module combinations; (b) Comparison of recall metrics for module combinations.

3.4. Comparison with State-of-the-Art Models

To evaluate the proposed model comprehensively, this study conducted a systematic comparison with current mainstream lightweight object detection models under the same experimental environment. Six representative versions of the YOLO series were selected: YOLOv3-tiny, YOLOv5n, YOLOv6n, YOLOv8n, YOLOv10n, and YOLOv11n. All models were trained and tested on the same self-built dataset using the same evaluation metrics: precision, recall, mAP@0.5, mAP@0.5-0.95, F1 score, and single-frame inference time. This approach enables a comprehensive evaluation of both detection performance and computational efficiency. A detailed comparison of the results is presented in Table 3.

Table 3.

Comparison of the test results of different models.

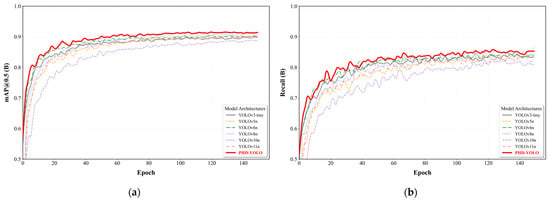

Additionally, to further validate the proposed method, a horizontal comparison was made with recent studies on passion fruit detection. Because datasets and experimental platforms vary across studies, comparing a single metric may not be entirely equitable. The present study therefore compared works with similar research objectives and methods to keep the evaluation reasonable and objective. Because some of the literature reports experimental data incompletely, the comparison results in Table 4 have certain limitations; however, they still reflect the relative advantages of the proposed method. Figure 9 and Table 3 support the efficacy of PHD-YOLO in passion fruit detection from two perspectives: training dynamics and testing performance. As illustrated in Figure 9, PHD-YOLO’s mAP@0.5 consistently exceeds 90% after 80 epochs and ultimately reaches 91.5% after 140 epochs (refer to Table 3 for detailed data), surpassing the 91.1% of YOLOv8n.

Table 4.

Comparison with different models in recent works.

Figure 9.

Comparison of mAP@0.5 and Recall metrics across different models. (a) Comparison of mAP@0.5 metrics for different models; (b) Comparison of Recall metrics for different models.

Additionally, the recall rate increases to 85.0%, as shown in Table 3, surpassing the 84.3% of YOLOv8n and the 81.5% of YOLOv11n. Furthermore, PHD-YOLO does not exhibit the overfitting observed in models such as YOLOv10n, whose recall rate decreased from 81.6% to 79.3%, which supports the robustness of the modular design against complex fruit features.

As shown in Table 3, the model balances false positives and false negatives, with a detection accuracy of 95.4% and an F1 score of 90.0%, which exceeds YOLOv8n’s F1 score of 89.1% by 0.9 percentage points. In terms of computational efficiency, PHD-YOLO attains the fastest single-frame inference time of 0.6 milliseconds, which is equivalent to that of YOLOv6n and surpasses YOLOv5n and YOLOv8n by 0.7 milliseconds. The mAP@0.5-0.95 of 80.8% is slightly lower than YOLOv8n’s 81.5% but higher than YOLOv6n’s 80.3% and YOLOv5n’s 80.5%, indicating good adaptability to multi-scale fruit detection tasks.
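Since the F1 score is the harmonic mean of precision and recall, the reported value can be checked against the other two figures. The short sketch below does this for the PHD-YOLO precision (95.4%) and recall (85.0%); the function name is chosen here for illustration.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall, both given as fractions in [0, 1]."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Reported PHD-YOLO test-set values: precision 95.4%, recall 85.0%.
phd_yolo_f1 = f1_score(0.954, 0.850)
print(f"F1 = {phd_yolo_f1:.3f}")
```

The result is approximately 0.899, which agrees with the reported 90.0% to within about 0.1 percentage points; the small gap is presumably due to rounding of the published precision and recall.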

The integration of training curves and test data reveals that PHD-YOLO attains a balanced enhancement in accuracy, recall rate, and real-time performance through module combination optimization in high-density agricultural scenarios, thereby providing dependable technical support for automated harvesting systems.

A comparison of the existing methods in Table 4 shows that Wu et al. [29] enhanced the detection accuracy of YOLOv3 by integrating DenseNet; however, the model’s computational efficiency was inadequate. Tu et al. [30] improved the recognition rate by integrating multimodal data in Faster R-CNN; nevertheless, the real-time performance of the two-stage detection framework was suboptimal. Ou et al. [31] employed a lightweight design to optimize YOLOv7; however, its performance in complex scenes was inadequate. Tu et al. [32] developed the YOLOv8n + OC-SORT + CRCM algorithm, which integrates object tracking and central region counting methods to facilitate real-time estimation of passion fruit yield in scenarios characterized by complex lighting and occlusion. Sun et al. [20] and Li et al. [26] developed lightweight models based on YOLOv5 and YOLOv8, respectively, achieving embedded deployment; however, they observed a significant decrease in detection accuracy in dense fruit scenarios. Yang et al. [10] proposed a tracking and counting algorithm with high error rates in occlusion scenarios, while Chen et al. [33] developed a disease detection model with limited functionality that cannot simultaneously meet maturity assessment requirements. These methods often involve trade-offs between accuracy and speed and adapt poorly to complex scenarios, which constrains their practical effectiveness.

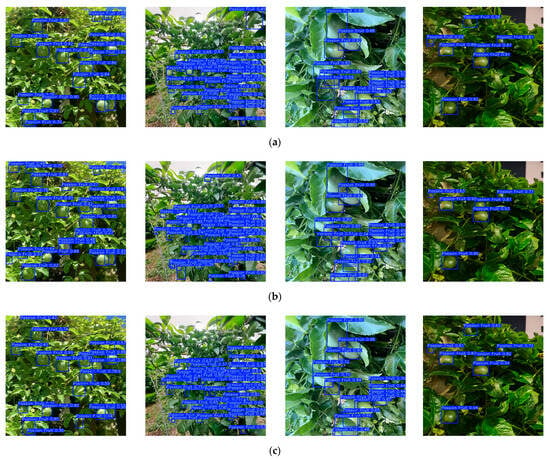

3.5. Comparison of Recognition Effect Before and After Improvement

To comprehensively evaluate the detection performance of the improved model, this study selected passion fruit images under various complex environmental conditions for comparative testing, including backlighting and shadows, multiple fruit clusters and overlaps, branch and leaf occlusions, and uneven lighting. The visual analysis of the detection results in Figure 10 shows that PHD-YOLO performed better in the majority of scenarios. Under dense fruit and shadow conditions, PHD-YOLO showed a notable reduction in false detections and multiple detections, similar to the improvements observed in the YOLOv5n and YOLOv6n models, and its prediction boxes were more precise. In scenarios involving fruit aggregation and overlap, as well as branch and leaf occlusion, all models produced some false detections and missed detections; however, PHD-YOLO performed comparatively better, with a lower false detection rate. Under uneven lighting and nocturnal conditions, where the YOLOv5n, YOLOv10n, and YOLOv11n models had difficulty detecting objects, PHD-YOLO remained stable and identified each passion fruit target. In terms of confidence, PHD-YOLO outperforms YOLOv5n, YOLOv6n, YOLOv8n, YOLOv10n, and YOLOv11n, although it is marginally lower than YOLOv3-tiny. However, its recall rate and F1 score are significantly higher than those of the other models, indicating that the improved model achieves a good balance between detection accuracy and false negative rate. Based on the comprehensive test results, PHD-YOLO demonstrates superior detection performance under complex environmental conditions, with strong robustness and generalization capabilities.

Figure 10.

Visual comparison of different models. (a) YOLOv3-tiny; (b) YOLOv5n; (c) YOLOv6n; (d) YOLOv8n; (e) YOLOv10n; (f) YOLOv11n; (g) PHD-YOLO.

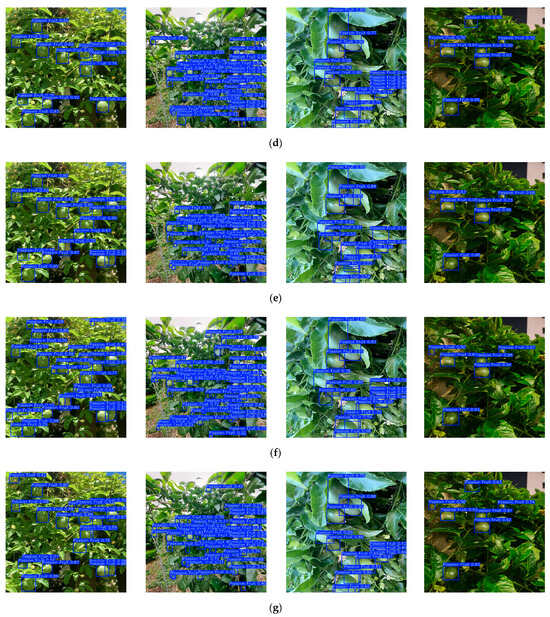

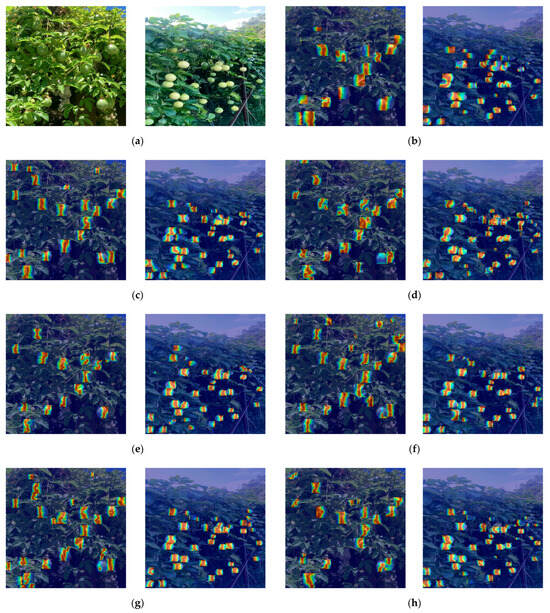

To further analyze and improve the model’s performance, this study employed Layer CAM (Layer-wise Class Activation Map) technology to conduct an in-depth visualization analysis of the feature extraction mechanism of the PHD-YOLO model [34]. As illustrated in Figure 11, this method utilizes a heat map to visually represent the model’s attention to critical features of passion fruit at various levels. This provides a significant foundation for comprehending the model’s decision-making process.
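For readers unfamiliar with how such heat maps are produced, the following is a minimal sketch of the commonly described Layer-CAM computation: the positive part of each gradient is used as an element-wise weight on the corresponding activation, the weighted maps are summed over channels, and the result is passed through ReLU. This assumes the standard formulation rather than the exact pipeline used in this study, and the toy arrays are invented; in practice the activations and gradients would come from a chosen layer of the network.

```python
def layer_cam(activations, gradients):
    """Layer-CAM style heat map from per-channel activation and gradient maps.

    Both arguments are lists of K channel maps, each an H x W nested list.
    Returns an H x W map scaled to [0, 1] when any response is positive.
    """
    height = len(activations[0])
    width = len(activations[0][0])
    cam = [[0.0] * width for _ in range(height)]

    for act_map, grad_map in zip(activations, gradients):
        for i in range(height):
            for j in range(width):
                weight = max(grad_map[i][j], 0.0)  # keep only positive gradients
                cam[i][j] += weight * act_map[i][j]

    # ReLU on the channel sum, then scale by the peak for display.
    cam = [[max(value, 0.0) for value in row] for row in cam]
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[value / peak for value in row] for row in cam]
    return cam


# Toy 2-channel, 2 x 2 example (invented values, not taken from PHD-YOLO).
toy_activations = [[[1.0, 2.0], [0.0, 1.0]], [[0.5, 0.0], [2.0, 1.0]]]
toy_gradients = [[[1.0, -1.0], [0.5, 0.0]], [[0.0, 1.0], [1.0, -2.0]]]
heat_map = layer_cam(toy_activations, toy_gradients)
print(heat_map)
```

Locations where activations and positive gradients coincide receive high values, which is what appears as the highlighted regions in a rendered heat map.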

Figure 11.

Comparison of the effect of heat map recognition among models for complex conditions in orchards. The highlighted areas in the figure are the regions of interest for the model detection. (a) Original figure; (b) YOLOv3-tiny; (c) YOLOv5n; (d) YOLOv6n; (e) YOLOv8n; (f) YOLOv10n; (g) YOLOv11n; (h) PHD-YOLO.

In a test scenario with leaf occlusion and backlight shadows, 20 sample images containing passion fruits were selected for analysis. The experimental results demonstrate that the 20 high-brightness response regions generated by the PHD-YOLO model are highly consistent with the actual fruit locations, with each response region precisely located on a specific fruit. In contrast, the performance of the mainstream comparison models varied: the YOLOv3-tiny model detected 15 fruits, the YOLOv5n model detected 21, the YOLOv6n model detected 19, the YOLOv8n model detected 14, the YOLOv10n model detected 23, and the YOLOv11n model detected 25. The number of objects detected by these models deviates from the ground truth, and their response regions correspond less precisely to the actual locations of the fruits.

Further analysis reveals that in the multi-fruit aggregation and overlapping scene (18 fruits), PHD-YOLO still accurately recognizes all the targets. In contrast, the detection results of the comparison models are 13 for YOLOv3-tiny, 17 for YOLOv5n, 15 for YOLOv6n, 12 for YOLOv8n, 19 for YOLOv10n, and 22 for YOLOv11n. In the branch and leaf occlusion scene (15 fruits), the PHD-YOLO model detected all fruits, while the comparison models deviated from the ground truth to varying degrees: 11 for YOLOv3-tiny, 14 for YOLOv5n, 13 for YOLOv6n, 10 for YOLOv8n, 16 for YOLOv10n, and 17 for YOLOv11n.
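The counts reported for the three scenes can be tabulated as signed deviations from the ground truth, where a negative value means fewer detections than fruits present and a positive value means more. The sketch below uses only the numbers given in the text; the scene labels and variable names are chosen here for illustration.

```python
# Ground-truth fruit counts per scene, as stated in the text.
SCENES = ("occlusion_backlight", "aggregation_overlap", "branch_leaf_occlusion")
GROUND_TRUTH = (20, 18, 15)

# Detected counts per model, in the same scene order.
DETECTED = {
    "YOLOv3-tiny": (15, 13, 11),
    "YOLOv5n": (21, 17, 14),
    "YOLOv6n": (19, 15, 13),
    "YOLOv8n": (14, 12, 10),
    "YOLOv10n": (23, 19, 16),
    "YOLOv11n": (25, 22, 17),
    "PHD-YOLO": (20, 18, 15),
}

# Signed deviation per scene and total absolute deviation per model.
deviations = {
    model: tuple(found - truth for found, truth in zip(counts, GROUND_TRUTH))
    for model, counts in DETECTED.items()
}
total_abs_deviation = {
    model: sum(abs(value) for value in values) for model, values in deviations.items()
}

for model, values in deviations.items():
    per_scene = ", ".join(f"{scene}: {value:+d}" for scene, value in zip(SCENES, values))
    print(f"{model:12s} {per_scene} | total |dev| = {total_abs_deviation[model]}")
```

A count deviation of zero does not by itself show that every detection is correctly placed, since misses and false detections can offset each other; the heat maps in Figure 11 address the localization aspect.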

A thorough, layer-by-layer analysis of feature response patterns was conducted, thereby unveiling the merits of PHD-YOLO. In the shallow network, the model established a primary feature representation based on texture and contours. In the deep network, the model concentrated on the precise localization of spatial relationships. This hierarchical learning strategy enabled the model to effectively distinguish closely adjacent fruits and maintain relatively stable detection under occlusion conditions. Additionally, the model demonstrated strong robustness to lighting variations, generating clear feature responses under backlighting and shadow conditions. Visualization results also show that PHD-YOLO can selectively focus on key features of passion fruits (such as fruit texture and edge contours) while effectively suppressing the influence of distracting factors like leaf texture. The efficacy of this feature selection mechanism is a primary factor contributing to the system’s high detection accuracy. These findings not only validate the superior performance of the PHD-YOLO model but also provide important insights into the feature learning patterns of deep learning models in agricultural vision detection tasks.

4. Conclusions

The present study proposes a PHD-YOLO passion fruit detection model based on the YOLOv11n framework. The incorporation of the partial convolution module (ParConv), depthwise separable convolution (DWConv), and the HSV Attentional Fusion (HSVAF) module improves the model’s ability to detect objects in complex orchard environments. The experimental results demonstrate that, while maintaining a single-frame inference time of 0.6 milliseconds, the model achieves a detection accuracy of 95.4%, a recall rate of 85.0%, and an mAP@0.5 of 91.5%, representing improvements of 0.7% and 3.5% in accuracy and recall rate, respectively, compared to the baseline YOLOv11n model. In challenging scenarios, such as dense fruit clusters, leaf occlusion, and backlighting, the model exhibited good robustness, thereby validating the effectiveness of the proposed method. The present study constructed a multi-time-period, multi-angle passion fruit dataset containing 2910 samples and further improved the model’s generalization ability through data augmentation strategies. However, this study has limitations: false negatives may occur in cases of extreme occlusion, and cross-regional adaptability remains to be improved. Subsequent research will explore 3D point cloud fusion technology to enhance detection performance in occlusion scenarios. Additionally, the dataset will be expanded to include passion fruit samples of various varieties and growth stages, enabling more precise multi-class detection. Moreover, the model framework can be further applied to orchard yield estimation and automated harvesting systems, providing technical support for innovative agriculture applications.

Author Contributions

Conceptualization, R.L. and R.Z.; methodology, R.L. and R.Z.; software, R.L.; validation, R.L. and R.Z.; formal analysis, R.L.; investigation, R.L. and R.Z.; resources, X.D., S.P. and F.C.; data curation, R.L.; writing—original draft preparation, R.L.; writing—review and editing, R.L. and R.Z.; visualization, X.D.; supervision, X.D.; project administration, X.D.; funding acquisition, X.D., S.P. and F.C. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the following projects: Major Science and Technology Special Project of Yunnan Province (No. 202302AO370003), Yunnan Province Basic Research Special Program (No. 202301AT070173), and Yunnan Province Basic Research Special Program (No. 202401AT070103).

Data Availability Statement

All data are contained within the article. To request the data and code, please send an email to the first or corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

1. Ni, X.; Li, C.; Jiang, H.; Takeda, F. Three-dimensional photogrammetry with deep learning instance segmentation to extract berry fruit harvestability traits. ISPRS J. Photogramm. Remote Sens. 2021, 171, 297–309.

2. Nan, Y.; Zhang, H.; Zeng, Y.; Zheng, J.; Ge, Y. Intelligent detection of Multi-Class pitaya fruits in target picking row based on WGB-YOLO network. Comput. Electron. Agric. 2023, 208, 107780.

3. Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11534–11542.

4. Qin, Z.; Zhang, P.; Wu, F.; Li, X. FcaNet: Frequency channel attention networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Nashville, TN, USA, 20–25 June 2021; pp. 783–792.

5. Zhang, X.; Li, J.; Hua, Z. MRSE-Net: Multiscale residuals and SE-attention network for water body segmentation from satellite images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 5049–5064.

6. Zhu, X.; Cheng, D.; Zhang, Z.; Lin, S.; Dai, J. An empirical study of spatial attention mechanisms in deep networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Long Beach, CA, USA, 15–20 June 2019; pp. 6688–6697.

7. Shen, Y.; Guo, J.; Liu, Y.; Xu, C.; Li, Q.; Qi, F. SMANet: Superpixel-guided multi-scale attention network for medical image segmentation. Biomed. Signal Process. Control 2025, 100, 107062.

8. Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

9. Zhao, Y.; Ju, Z.; Sun, T.; Dong, F.; Li, J.; Yang, R.; Fu, Q.; Lian, C.; Shan, P. TGC-YOLOv5: An enhanced YOLOv5 drone detection model based on transformer, GAM & CA attention mechanism. Drones 2023, 7, 446.

10. Yang, L.; Zhang, R.-Y.; Li, L.; Xie, X. SimAM: A simple, parameter-free attention module for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 11863–11874.

11. Xu, J.; Yang, S.; Liang, Q.; Zheng, Z.; Ren, L.; Fu, H.; Yang, P.; Xie, W.; Yang, D. Transillumination imaging for detection of stress cracks in maize kernels using modified YOLOv8 after pruning and knowledge distillation. Comput. Electron. Agric. 2025, 231, 109959.

12. Ling, H.; Tu, Z.; Li, G.; Wang, J. ED-YOLOv8s: An enhanced approach for passion fruit maturity detection based on YOLOv8s. In Proceedings of the 2024 5th International Seminar on Artificial Intelligence, Networking and Information Technology (AINIT), Nanjing, China, 29–31 March 2024; pp. 2320–2324.

13. Chen, Z.; Qian, M.; Zhang, X.; Zhu, J. Chinese Bayberry Detection in an Orchard Environment Based on an Improved YOLOv7-Tiny Model. Agriculture 2024, 14, 1725.

14. Liu, Z.; Xiong, J.; Cai, M.; Li, X.; Tan, X. V-YOLO: A Lightweight and Efficient Detection Model for Guava in Complex Orchard Environments. Agronomy 2024, 14, 1988.

15. Li, R.; Sun, X.; Yang, K.; He, Z.; Wang, X.; Wang, C.; Wang, B.; Wang, F.; Liu, H. A lightweight wheat ear counting model in UAV images based on improved YOLOv8. Front. Plant Sci. 2025, 16, 1536017.

16. Liang, Y.; Jiang, W.; Liu, Y.; Wu, Z.; Zheng, R. Picking-Point Localization Algorithm for Citrus Fruits Based on Improved YOLOv8 Model. Agriculture 2025, 15, 237.

17. Liu, Y.; Zheng, H.; Zhang, Y.; Zhang, Q.; Chen, H.; Xu, X.; Wang, G. “Is this blueberry ripe?”: A blueberry ripeness detection algorithm for use on picking robots. Front. Plant Sci. 2023, 14, 1198650.

18. Ma, J.; Xu, S.; Ma, Z.; Fu, H.; Lin, B. Grape clusters detection based on multi-scale feature fusion and augmentation. Sci. Rep. 2024, 14, 22701.

19. Li, K.; Wang, J.; Jalil, H.; Wang, H. A fast and lightweight detection algorithm for passion fruit pests based on improved YOLOv5. Comput. Electron. Agric. 2023, 204, 107534.

20. Sun, Q.; Li, P.; He, C.; Song, Q.; Chen, J.; Kong, X.; Luo, Z. A lightweight and high-precision passion fruit YOLO detection model for deployment in embedded devices. Sensors 2024, 24, 4942.

21. Yang, X.; Zhao, W.; Wang, Y.; Yan, W.Q.; Li, Y. Lightweight and efficient deep learning models for fruit detection in orchards. Sci. Rep. 2024, 14, 26086.

22. Tu, S.; Huang, Y.; Liang, Y.; Liu, H.; Cai, Y.; Lei, H. A passion fruit counting method based on the lightweight YOLOv5s and improved DeepSORT. Precis. Agric. 2024, 25, 1731–1750.

23. Sasagawa, Y.; Nagahara, H. YOLO in the dark - domain adaptation method for merging multiple models. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; Proceedings, Part XXI 16. pp. 345–359.

24. Sun, H.; Wang, B.; Xue, J. YOLO-P: An efficient method for pear fast detection in complex orchard picking environment. Front. Plant Sci. 2023, 13, 1089454.

25. Codes-Alcaraz, A.M.; Furnitto, N.; Sottosanti, G.; Failla, S.; Puerto, H.; Rocamora-Osorio, C.; Freire-García, P.; Ramírez-Cuesta, J.M. Automatic Grape Cluster Detection Combining YOLO Model and Remote Sensing Imagery. Remote Sens. 2025, 17, 243.

26. Li, H.; Chen, J.; Gu, Z.; Dong, T.; Chen, J.; Huang, J.; Gai, J.; Gong, H.; Lu, Z.; He, D. Optimizing edge-enabled system for detecting green passion fruits in complex natural orchards using lightweight deep learning model. Comput. Electron. Agric. 2025, 234, 110269.

27. Khanam, R.; Hussain, M. YOLOv11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

28. Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

29. Wu, X.; Tang, R. Fast Detection of Passion Fruit with Multi-class Based on YOLOv3. In Proceedings of the Chinese Intelligent Systems Conference; Springer: Singapore, 2020; pp. 818–825.

30. Tu, S.; Huang, J.; Lin, Y.; Li, J.; Liu, H.; Chen, Z. Automatic detection of passion fruit based on improved faster R-CNN. Res. Explor. Lab 2021, 40, 32–37.

31. Ou, J.; Zhang, R.; Li, X.; Lin, G. Research and explainable analysis of a real-time passion fruit detection model based on FSOne-YOLOv7. Agronomy 2023, 13, 1993.

32. Tu, S.; Huang, Y.; Huang, Q.; Liu, H.; Cai, Y.; Lei, H. Estimation of passion fruit yield based on YOLOv8n + OC-SORT + CRCM algorithm. Comput. Electron. Agric. 2025, 229, 109727.

33. Chen, D.; Lin, F.; Lu, C.; Zhuang, J.; Su, H.; Zhang, D.; He, J. YOLOv8-MDN-Tiny: A lightweight model for multi-scale disease detection of postharvest golden passion fruit. Postharvest Biol. Technol. 2025, 219, 113281.

34. Muhammad, M.B.; Yeasin, M. Eigen-CAM: Class activation map using principal components. In Proceedings of the 2020 International Joint Conference on Neural Networks (IJCNN), Glasgow, UK, 19–24 July 2020; pp. 1–7.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).