Maturity Classification of Blueberry Fruit Using YOLO and Vision Transformer for Agricultural Assistance

Abstract

1. Introduction

- A conventional model (a customized CNN) is trained so that its detection results can be compared with those of the proposed method.

- Classification results for all three dataset patterns are included.

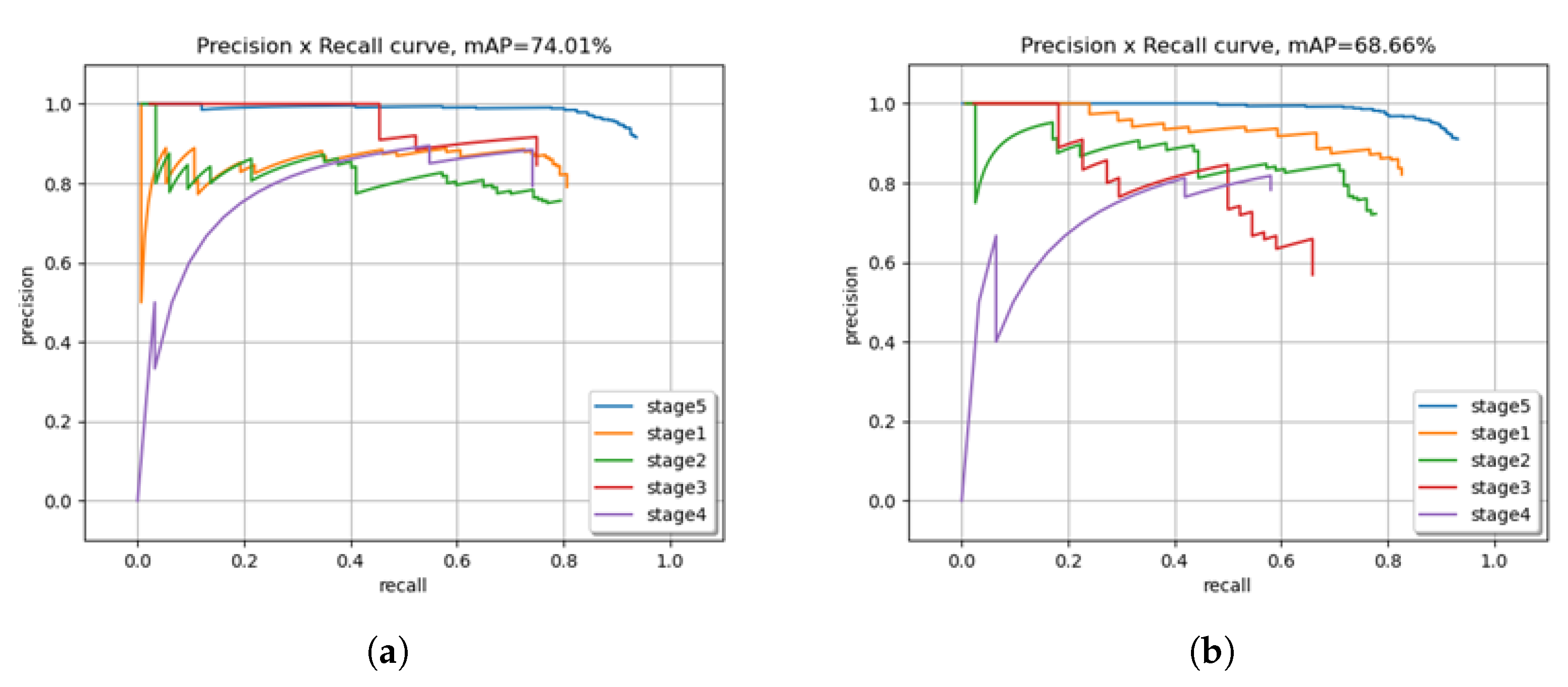

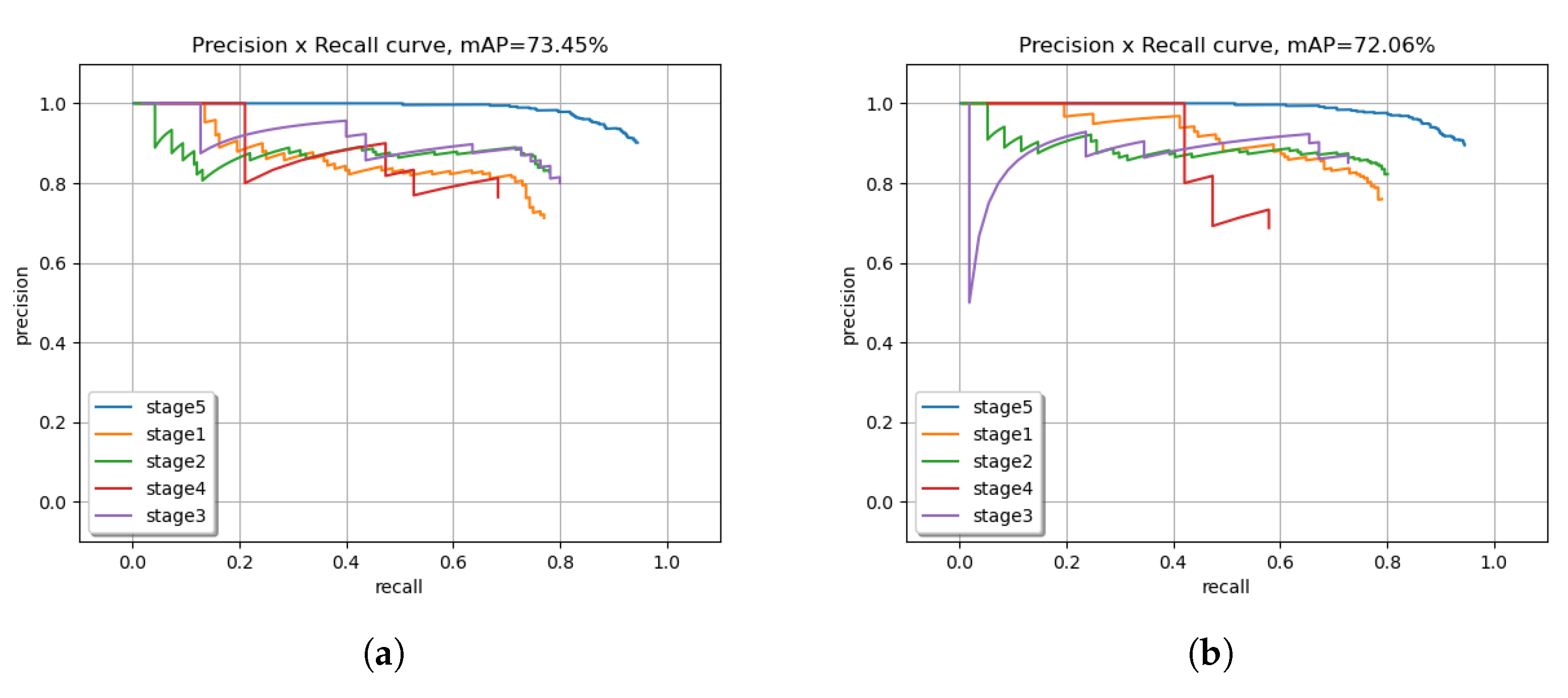

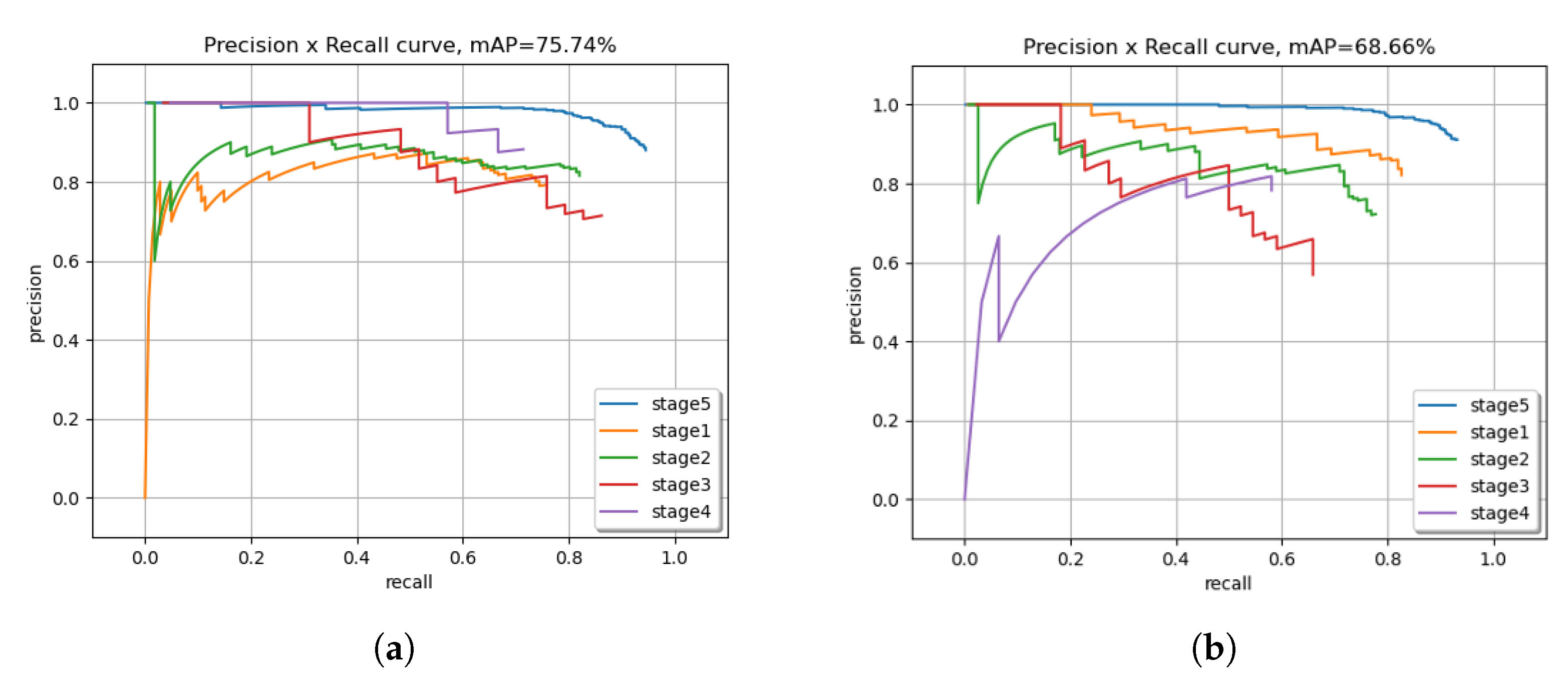

- Precision–recall curves for the pattern 2 and pattern 3 datasets are included.

- To demonstrate the effectiveness of the object detection module, segmentation results are compared with those of the proposed model.

2. Materials and Methods

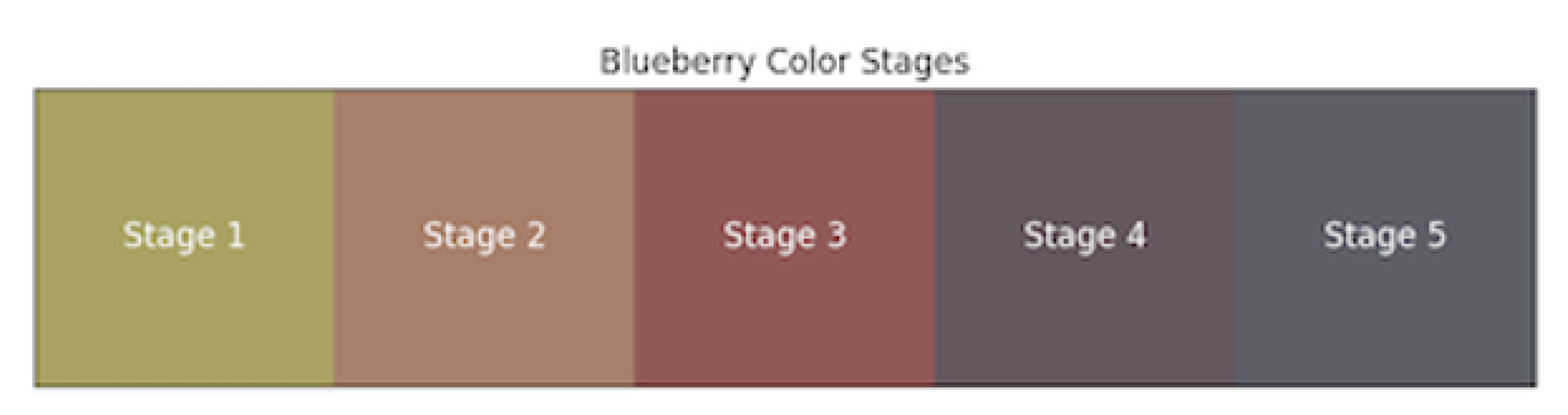

2.1. Maturity Levels of Blueberry Fruit

2.2. Dataset

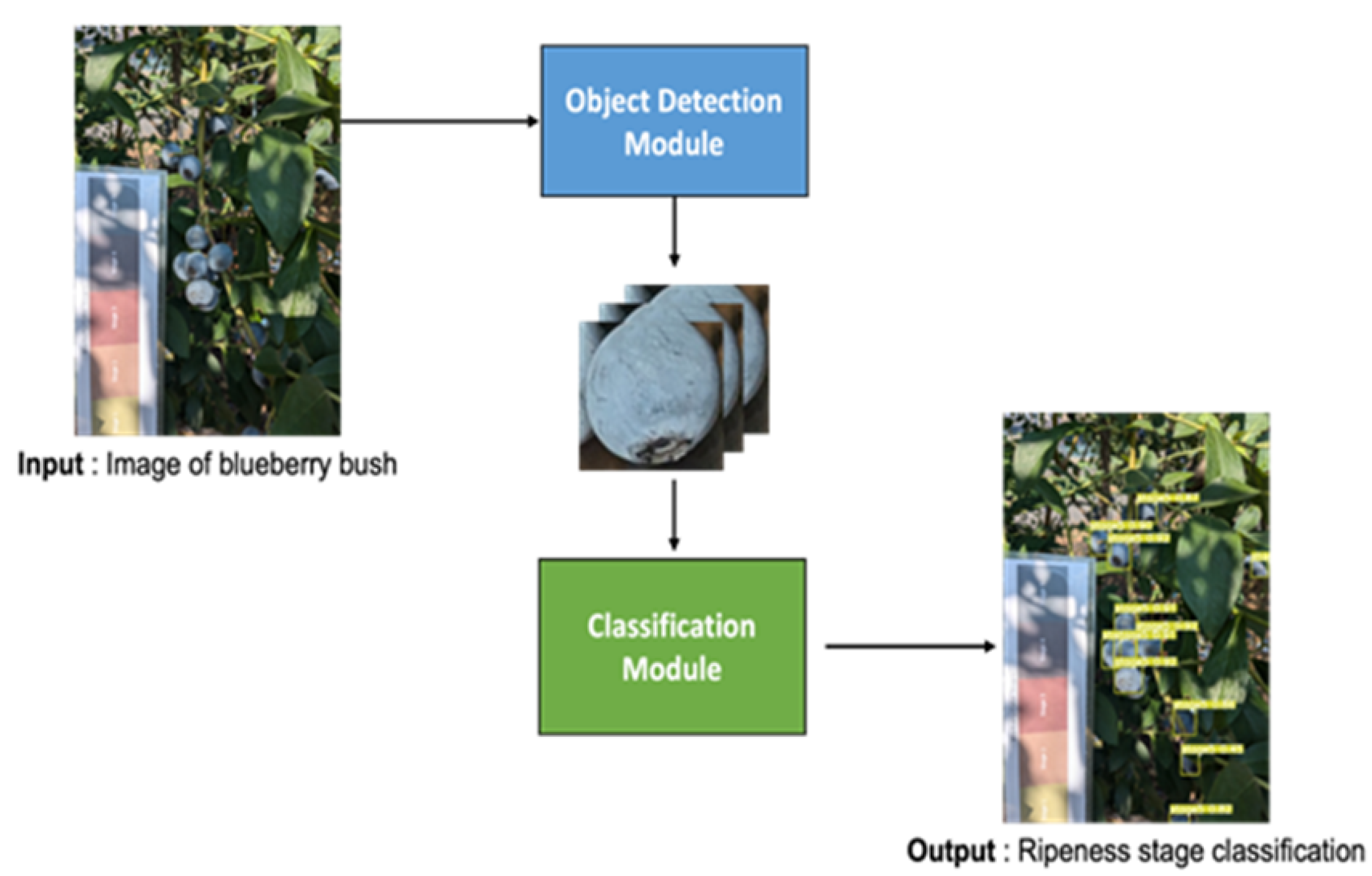

2.3. Maturity Classification Method
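The title pairs a YOLO detector with a Vision Transformer, and the ViT in Section 3.1 takes inputs of size 224. The detect-crop-classify flow this implies can be sketched as follows. This is a minimal illustration, not the authors' code: the (x1, y1, x2, y2) pixel box format, RGB channel order, nearest-neighbor resizing, and the names `crop_and_resize`, `classify_fruits`, `toy_detect`, and `toy_classify` are all hypothetical stand-ins for the trained models and their preprocessing.

```python
import numpy as np

# Assumed box format: (x1, y1, x2, y2) in pixels, as produced by a YOLO-style detector.
Box = tuple[int, int, int, int]


def crop_and_resize(image: np.ndarray, box: Box, size: int = 224) -> np.ndarray:
    """Crop one detected fruit and resize it to size x size (nearest-neighbor sampling)."""
    h, w = image.shape[:2]
    x1, y1, x2, y2 = box
    # Clamp the box to the image bounds.
    x1, x2 = max(0, x1), min(w, x2)
    y1, y2 = max(0, y1), min(h, y2)
    if x2 <= x1 or y2 <= y1:
        raise ValueError(f"empty box after clamping: {box}")
    crop = image[y1:y2, x1:x2]
    # Map each output row/column back to a source row/column in the crop.
    rows = (np.arange(size) * crop.shape[0] / size).astype(int)
    cols = (np.arange(size) * crop.shape[1] / size).astype(int)
    return crop[rows][:, cols]


def classify_fruits(image, detect, classify, size=224):
    """Detect fruits once, then assign a maturity stage (1-5) to each cropped fruit."""
    return [(box, classify(crop_and_resize(image, box, size))) for box in detect(image)]


# Hypothetical stand-ins for the trained YOLO detector and ViT classifier.
def toy_detect(img):
    return [(200, 100, 260, 150)]


def toy_classify(patch):
    # Calls a patch "Stage 5" when its mean blue value (RGB order assumed) is high.
    return 5 if patch[..., 2].mean() > 120 else 1


image = np.zeros((480, 640, 3), dtype=np.uint8)
image[100:150, 200:260] = (40, 60, 160)  # a bluish blob standing in for a ripe berry
print(classify_fruits(image, toy_detect, toy_classify))  # [((200, 100, 260, 150), 5)]
```

Running the detector once per image and the classifier once per crop keeps the two models independent, so either can be retrained or swapped without touching the other.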

3. Experiments

3.1. Experimental Condition

- Convolutional Neural Network

- Nearest-neighbor search in the L∗a∗b∗ color space of the image.

- Vision Transformer (proposed method): 5.7 M parameters, input size 224.
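The L∗a∗b∗ baseline listed above is described only as a nearest-neighbor search in L∗a∗b∗ color space. One plausible reading is sketched below: convert each fruit crop from 8-bit sRGB to CIE L∗a∗b∗ (D65 white point), summarize it by its mean color, and assign the stage of the nearest labeled training crop under Euclidean (CIE76) distance. The mean-color feature, the 1-NN rule, the helper names (`mean_lab`, `LabNearestNeighbor`), and the toy colors are assumptions for illustration, not details taken from the paper.

```python
import numpy as np

# sRGB -> CIE XYZ matrix and D65 reference white (standard sRGB definitions).
_RGB_TO_XYZ = np.array([[0.4124564, 0.3575761, 0.1804375],
                        [0.2126729, 0.7151522, 0.0721750],
                        [0.0193339, 0.1191920, 0.9503041]])
_D65_WHITE = np.array([0.95047, 1.0, 1.08883])


def srgb_to_lab(rgb: np.ndarray) -> np.ndarray:
    """Convert 8-bit sRGB values with shape (..., 3) to CIE L*a*b* (D65)."""
    c = np.asarray(rgb, dtype=float) / 255.0
    # Undo the sRGB transfer curve to obtain linear RGB.
    lin = np.where(c <= 0.04045, c / 12.92, ((c + 0.055) / 1.055) ** 2.4)
    t = (lin @ _RGB_TO_XYZ.T) / _D65_WHITE  # XYZ normalized by the white point
    d = 6 / 29
    f = np.where(t > d ** 3, np.cbrt(t), t / (3 * d ** 2) + 4 / 29)
    L = 116 * f[..., 1] - 16
    a = 500 * (f[..., 0] - f[..., 1])
    b = 200 * (f[..., 1] - f[..., 2])
    return np.stack([L, a, b], axis=-1)


def mean_lab(crop: np.ndarray) -> np.ndarray:
    """Summarize a fruit crop by its mean L*a*b* color (assumed feature)."""
    return srgb_to_lab(crop).reshape(-1, 3).mean(axis=0)


class LabNearestNeighbor:
    """1-nearest-neighbor stage classifier in L*a*b* space (hypothetical baseline)."""

    def fit(self, crops, stages):
        self.features = np.stack([mean_lab(c) for c in crops])
        self.stages = np.asarray(stages)
        return self

    def predict(self, crop) -> int:
        # Euclidean distance in L*a*b* is the CIE76 color difference (Delta E*ab).
        dist = np.linalg.norm(self.features - mean_lab(crop), axis=1)
        return int(self.stages[np.argmin(dist)])


def solid(rgb):
    return np.full((8, 8, 3), rgb, dtype=np.uint8)  # toy single-color "crop"


model = LabNearestNeighbor().fit(
    [solid((60, 140, 60)), solid((170, 60, 80)), solid((50, 60, 120))], [1, 2, 5])
print(model.predict(solid((55, 65, 125))))  # 5
```

Because such a classifier sees only average color, it cannot use shape or texture cues, which may help explain why a color-only baseline struggles on stages that differ mainly in small regions such as the area around the petiolar attachment.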

3.2. Results and Discussion

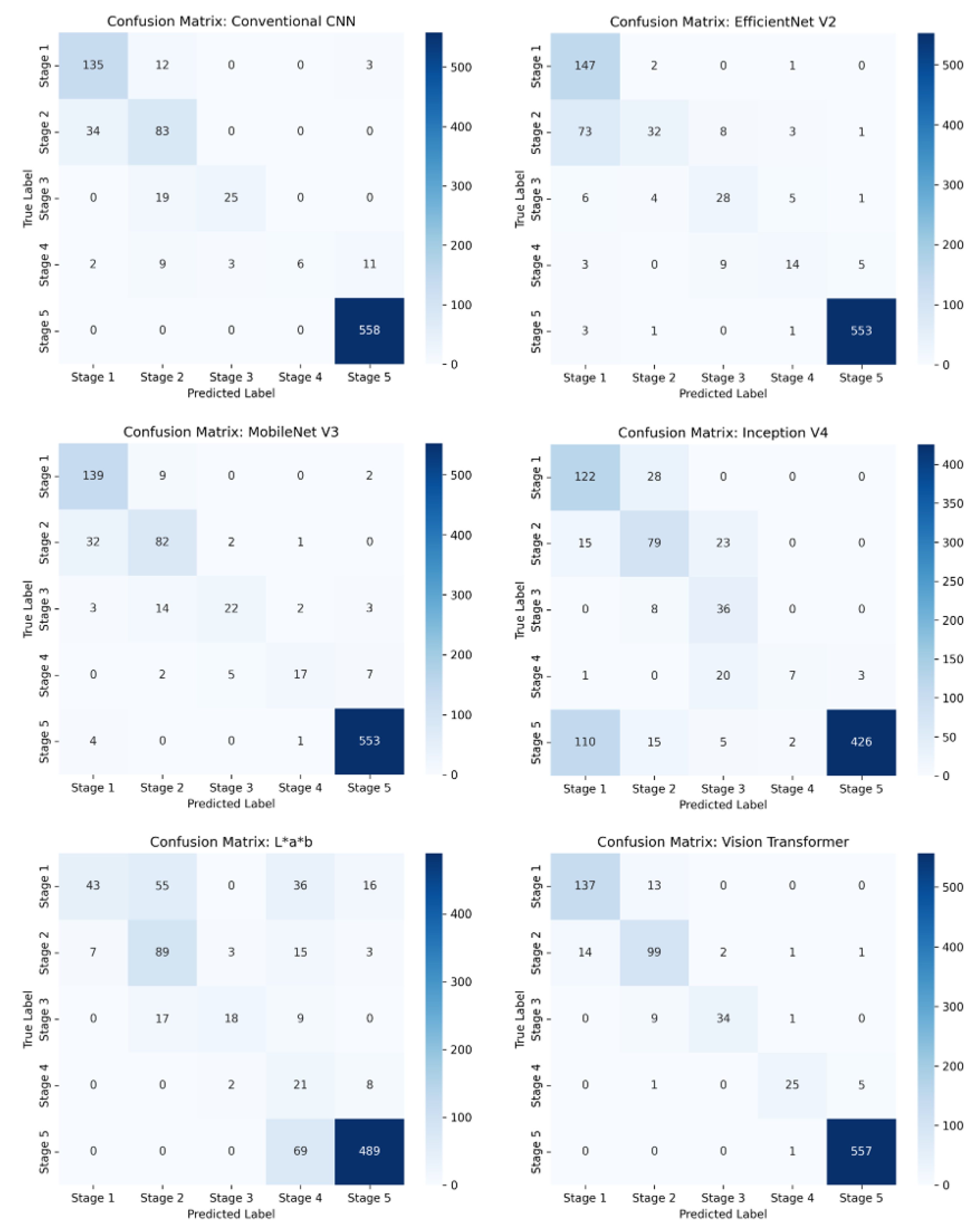

3.2.1. Classification Accuracy

3.2.2. Detection Approach

3.3. Ablation Analysis

3.3.1. Effectiveness of Transformer Module

3.3.2. Effectiveness of Classification Using Unified Dataset

4. Limitation

4.1. Dataset

4.2. Applicability to Robotic Harvesting

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Maturity Stage | Indicator by Peel Color | Color Stage |
|---|---|---|
| Stage 1 | The entire fruit is green with a slight reddish tinge on the nape. | Mature green |
| Stage 2 | About half of the fruit is reddish. | Green pink |
| Stage 3 | The fruit is reddish with a slight bluish tinge. | Blue pink |
| Stage 4 | The whole fruit is blue except around the small petiolar attachment. | Blue |
| Stage 5 | The entire fruit is blue, including the area around the small petiolar attachment. | Ripe |

| Stage 1 | Stage 2 | Stage 3 | Stage 4 | Stage 5 |
|---|---|---|---|---|
| 818 | 632 | 229 | 115 | 2712 |
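The stage counts above are strongly imbalanced: Stage 5 accounts for about 60% of the 4506 samples, roughly 24 times as many as Stage 4 (about 2.6%). The arithmetic is sketched below, together with inverse-frequency class weights N / (K · n_k), the same formula scikit-learn uses for `class_weight="balanced"`. The weighting is shown only as one common countermeasure for imbalance; it is an illustrative option, not a documented part of the authors' training setup.

```python
# Stage counts from the dataset table above.
counts = {1: 818, 2: 632, 3: 229, 4: 115, 5: 2712}

total = sum(counts.values())  # 4506
k = len(counts)

# Share of each stage and "balanced" inverse-frequency weight N / (K * n_k).
shares = {s: n / total for s, n in counts.items()}
weights = {s: total / (k * n) for s, n in counts.items()}

for s, n in counts.items():
    print(f"Stage {s}: {n:5d} ({shares[s]:5.1%}), weight {weights[s]:.2f}")

print(f"Stage 5 / Stage 4 ratio: {counts[5] / counts[4]:.1f}")
```

With these weights a Stage 4 sample counts about 24 times as much as a Stage 5 sample in a weighted loss, which is the same ratio as the raw counts.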

| Model | Stage 1 | Stage 2 | Stage 3 | Stage 4 | Stage 5 | All |
|---|---|---|---|---|---|---|
| Conventional Method | 90.0 | 70.9 | 56.8 | 19.4 | 100.0 | 89.7 |
| EfficientNet v2 | 98.0 | 27.4 | 63.6 | 45.2 | 99.1 | 86.0 |
| MobileNet v3 | 92.7 | 70.1 | 50.0 | 54.8 | 99.1 | 90.3 |
| Inception v4 | 81.3 | 67.5 | 81.8 | 22.6 | 76.3 | 74.4 |
| L∗a∗b∗ | 28.7 | 76.1 | 40.9 | 67.7 | 87.6 | 73.3 |
| Vision Transformer | 91.3 | 84.6 | 77.3 | 80.7 | 99.8 | 94.7 |

| Model | Stage 1 | Stage 2 | Stage 3 | Stage 4 | Stage 5 | All |
|---|---|---|---|---|---|---|
| Conventional Method | 73.0 | 70.8 | 12.7 | 0.0 | 99.6 | 82.2 |
| EfficientNet v2 | 81.1 | 62.5 | 52.7 | 47.4 | 98.3 | 84.4 |
| MobileNet v3 | 81.1 | 78.1 | 49.1 | 42.1 | 99.4 | 87.9 |
| Inception v4 | 91.9 | 73.4 | 81.8 | 42.1 | 95.1 | 88.3 |
| L∗a∗b∗ | 28.4 | 64.6 | 47.3 | 78.9 | 87.4 | 70.7 |
| Vision Transformer | 89.2 | 79.7 | 87.3 | 68.4 | 99.8 | 92.6 |

| Model | Stage 1 | Stage 2 | Stage 3 | Stage 4 | Stage 5 | All |
|---|---|---|---|---|---|---|
| Conventional Method | 78.7 | 84.4 | 34.5 | 0.0 | 99.8 | 89.3 |
| EfficientNet v2 | 79.4 | 79.0 | 44.8 | 28.6 | 98.6 | 88.7 |
| MobileNet v3 | 83.0 | 68.9 | 51.7 | 57.1 | 99.3 | 88.7 |
| Inception v4 | 89.4 | 65.9 | 58.6 | 38.1 | 92.2 | 84.6 |
| L∗a∗b∗ | 37.6 | 71.9 | 34.5 | 76.2 | 88.0 | 75.3 |
| Vision Transformer | 85.8 | 85.0 | 89.7 | 66.7 | 99.6 | 93.7 |

| Model | Stage 1 | Stage 2 | Stage 3 | Stage 4 | Stage 5 | All |
|---|---|---|---|---|---|---|
| Conventional Method | 80.6 | 75.4 | 34.7 | 6.5 | 99.8 | 87.1 |
| EfficientNet v2 | 86.2 | 56.3 | 53.7 | 40.4 | 98.6 | 86.4 |
| MobileNet v3 | 85.6 | 72.4 | 50.3 | 51.4 | 99.3 | 89.0 |
| Inception v4 | 87.5 | 68.9 | 74.1 | 34.3 | 87.9 | 82.4 |
| L∗a∗b∗ | 31.6 | 70.9 | 40.9 | 74.3 | 87.7 | 73.1 |
| Vision Transformer | 88.8 | 83.1 | 84.7 | 71.9 | 99.8 | 93.7 |
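In the table above, the "All" column appears to be pooled over all test samples, so it is dominated by Stage 5, which makes up about 60% of the dataset. Averaging the five per-stage values with equal weight (a macro average) gives a view less driven by that majority class. A minimal sketch, assuming the per-stage figures are per-class accuracies on a common scale:

```python
# Per-stage values (Stage 1-5) and the reported "All" value, copied from the table above.
rows = {
    "Conventional Method": ([80.6, 75.4, 34.7, 6.5, 99.8], 87.1),
    "EfficientNet v2": ([86.2, 56.3, 53.7, 40.4, 98.6], 86.4),
    "MobileNet v3": ([85.6, 72.4, 50.3, 51.4, 99.3], 89.0),
    "Inception v4": ([87.5, 68.9, 74.1, 34.3, 87.9], 82.4),
    "L*a*b*": ([31.6, 70.9, 40.9, 74.3, 87.7], 73.1),
    "Vision Transformer": ([88.8, 83.1, 84.7, 71.9, 99.8], 93.7),
}


def macro_average(per_stage):
    """Unweighted mean over stages: every maturity stage counts equally."""
    return sum(per_stage) / len(per_stage)


ranked = sorted(rows, key=lambda m: macro_average(rows[m][0]), reverse=True)
for model in ranked:
    per_stage, reported_all = rows[model]
    print(f"{model:20s} macro {macro_average(per_stage):5.1f}   All {reported_all:5.1f}")
```

With these values the Vision Transformer still ranks first (macro average 85.7), while the conventional CNN, third by the "All" column, drops to last (59.4) because of its 6.5 score on Stage 4.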

| Prediction / True | Stage 1 | Stage 2 | Stage 3 | Stage 4 | Stage 5 |
|---|---|---|---|---|---|
| Stage 1 | 103 | 14 | 0 | 0 | 1 |
| Stage 2 | 13 | 88 | 10 | 1 | 0 |
| Stage 3 | 0 | 2 | 29 | 1 | 0 |
| Stage 4 | 0 | 2 | 1 | 22 | 0 |
| Stage 5 | 0 | 0 | 0 | 3 | 469 |
| Background | 34 | 11 | 4 | 5 | 87 |
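The confusion matrix above has a Background row but no Background column. Reading rows as predicted stages and columns as true stages, as the header suggests, the Background row counts fruits the detector missed, so each stage's end-to-end recall factors into a detection rate and a classification accuracy among detected fruits. A sketch of that decomposition with NumPy; the row/column reading is an assumption:

```python
import numpy as np

# Rows: predicted stage 1-5, then "Background" (fruit not detected); columns: true stage 1-5.
cm = np.array([
    [103, 14, 0, 0, 1],
    [13, 88, 10, 1, 0],
    [0, 2, 29, 1, 0],
    [0, 2, 1, 22, 0],
    [0, 0, 0, 3, 469],
    [34, 11, 4, 5, 87],  # Background row
])

correct = np.diag(cm[:5])          # fruits detected and assigned the right stage
total_true = cm.sum(axis=0)        # all ground-truth fruits of each stage
detected = cm[:5].sum(axis=0)      # fruits the detector found (any stage)

detection_rate = detected / total_true
accuracy_if_detected = correct / detected
end_to_end_recall = correct / total_true  # equals detection_rate * accuracy_if_detected

for i in range(5):
    print(f"Stage {i + 1}: detected {detection_rate[i]:.1%}, "
          f"correct if detected {accuracy_if_detected[i]:.1%}, "
          f"end-to-end {end_to_end_recall[i]:.1%}")
```

For Stage 1, for example, 116 of 150 fruits are detected and 103 of those 116 are classified correctly, giving an end-to-end recall of 103/150 ≈ 68.7%. Because the matrix has no Background column, detections of non-fruit regions (false positives) cannot be counted from it.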

| Prediction / True | Stage 1 | Stage 2 | Stage 3 | Stage 4 | Stage 5 |
|---|---|---|---|---|---|
| Stage 1 | 92 | 11 | 0 | 0 | 1 |
| Stage 2 | 13 | 87 | 9 | 1 | 0 |
| Stage 3 | 0 | 2 | 30 | 1 | 0 |
| Stage 4 | 0 | 0 | 2 | 21 | 1 |
| Stage 5 | 0 | 0 | 0 | 3 | 468 |
| Background | 45 | 11 | 3 | 5 | 87 |

| Model | Stage 1 | Stage 2 | Stage 3 | Stage 4 | Stage 5 | All |
|---|---|---|---|---|---|---|
| EfficientNet v2 | 82.0% | 50.4% | 59.1% | 67.7% | 97.7% | 86.0% |
| MobileNet v3 | 68.0% | 47.9% | 56.8% | 54.8% | 93.5% | 80.2% |
| Inception v4 | 94.0% | 41.0% | 84.1% | 74.2% | 95.0% | 86.5% |
| Vision Transformer | 86.7% | 69.2% | 75.0% | 93.5% | 98.4% | 91.3% |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Esaki, I.; Noma, S.; Ban, T.; Sultana, R.; Shimizu, I. Maturity Classification of Blueberry Fruit Using YOLO and Vision Transformer for Agricultural Assistance. Horticulturae 2025, 11, 1272. https://doi.org/10.3390/horticulturae11101272

Esaki I, Noma S, Ban T, Sultana R, Shimizu I. Maturity Classification of Blueberry Fruit Using YOLO and Vision Transformer for Agricultural Assistance. Horticulturae. 2025; 11(10):1272. https://doi.org/10.3390/horticulturae11101272

Chicago/Turabian Style: Esaki, Ikuma, Satoshi Noma, Takuya Ban, Rebeka Sultana, and Ikuko Shimizu. 2025. "Maturity Classification of Blueberry Fruit Using YOLO and Vision Transformer for Agricultural Assistance" Horticulturae 11, no. 10: 1272. https://doi.org/10.3390/horticulturae11101272

APA Style: Esaki, I., Noma, S., Ban, T., Sultana, R., & Shimizu, I. (2025). Maturity Classification of Blueberry Fruit Using YOLO and Vision Transformer for Agricultural Assistance. Horticulturae, 11(10), 1272. https://doi.org/10.3390/horticulturae11101272