Abstract

The accurate identification of tomato maturity and picking positions is essential for efficient picking. Current deep-learning models face challenges such as large parameter sizes, single-task limitations, and insufficient precision. This study proposes MTS-YOLO, a lightweight and efficient model for detecting tomato fruit bunch maturity and stem picking positions. We reconstruct the YOLOv8 neck network and propose the high- and low-level interactive screening path aggregation network (HLIS-PAN), which achieves excellent multi-scale feature extraction through the alternating screening and fusion of high- and low-level information while reducing the number of parameters. Furthermore, we utilize DySample for efficient upsampling, bypassing complex kernel computations with point sampling. Moreover, context anchor attention (CAA) is introduced to enhance the model’s ability to recognize elongated targets such as tomato fruit bunches and stems. Experimental results indicate that MTS-YOLO achieves an F1-score of 88.7% and an mAP@0.5 of 92.0%. Compared to mainstream models, MTS-YOLO not only enhances accuracy but also optimizes the model size, effectively reducing computational costs and inference time. The model precisely identifies the foreground targets that need to be harvested while ignoring background objects, contributing to improved picking efficiency. This study provides a lightweight and efficient technical solution for intelligent agricultural picking.

1. Introduction

The tomato (Solanum lycopersicum) is one of the most widely cultivated and highest-yielding vegetables in the world [1]. As a major producer, China still heavily relies on manual labor for most tomato picking, leading to relatively low picking efficiency. With labor resources becoming increasingly scarce and the agricultural industry undergoing transformation and upgrading, the demand for automated and mechanized picking has grown more urgent [2]. The inconsistency in tomato maturity necessitates careful identification of maturity for each bunch to prevent economic losses caused by improper picking times. Distinguishing between different maturity levels of tomato fruit bunches and accurately locating the fruit stems are critical technical challenges [3]. Consequently, many researchers are focused on developing precise, efficient, and lightweight target-detection algorithms that are easy to deploy, significantly enhancing the recognition capabilities of picking robots [4].

Traditional image processing methods typically analyzed the color, texture, and shape of tomatoes, using algorithms such as support vector machines (SVM) and geometric techniques for classification and detection. Kumar et al. [5] proposed a non-destructive tomato sorting and grading system that utilized a three-stage classification process with SVM classifiers to effectively sort and grade tomatoes. However, solving the non-linear problem required cascading multiple SVM classifiers, which introduced significant computational complexity. Bai et al. [6] proposed a vision algorithm that combined shape, texture, and color features for tomato recognition, employing geometric processing methods like Hough circle detection and spline interpolation for precise picking point localization in fruit bunches. However, their experiments focused entirely on single fruit bunches, overlooking the complex background information typically found in tomato greenhouses.

With advancements in deep-learning technology and the accelerated transformation of smart agriculture, convolutional neural network-based target-detection algorithms have been widely applied in tomato fruit detection due to their strong feature learning capabilities. Early target-detection algorithms adopted two-stage detection methods, with Faster R-CNN [7] being a representative model. Sun et al. [8] proposed a method based on an improved feature pyramid network (FPN) to enhance the recognition of tomato organs, achieving significant performance improvements over Faster R-CNN models, with mean average precision reaching 99.5%. Mu et al. [9] used a tomato detection model combining Faster R-CNN and ResNet 101, achieving an average accuracy of 87.83% with intersection over union (IoU) ≥ 0.5. Seo et al. [10] reported an 88.6% detection accuracy for tomatoes grown in hydroponic greenhouses using Faster R-CNN.

In recent years, single-stage detection algorithms, which unlike two-stage algorithms do not generate candidate regions, have significantly improved detection speed while maintaining high accuracy. These algorithms have gradually become the preferred solution for fruit detection, with the single shot multibox detector (SSD) [11] and the you only look once (YOLO) series [12,13,14,15,16,17,18,19,20,21] being representative models. Yuan et al. [22] replaced SSD’s backbone network with Inception V2, achieving an average precision (AP) of 98.85% for small tomato recognition in greenhouse environments. Vasconez et al. [23] proposed an SSD integrated with MobileNet for accurate fruit counting in orchards, reaching a 90.0% success rate, thus facilitating improved decision making in agricultural practices. Zheng et al. [24] introduced RC-YOLOv4, which integrated R-CSPDarknet53 and depthwise separable convolutions to improve the detection accuracy of small, distant, and partially occluded objects in complex environments. Ge et al. [25] developed YOLO-deepSort, a target tracking network for recognizing and counting tomatoes at different growth stages, achieving average detection accuracies of 93.1%, 96.4%, and 97.9% for flowers, green tomatoes, and red tomatoes, respectively. Zeng et al. [26] achieved a lightweight implementation with an mAP@0.5 of 96.9% while deploying an Android mobile application based on an improved YOLOv5. Phan et al. [27] proposed four deep-learning frameworks (Yolov5m and Yolov5 based on ResNet-50, ResNet-101, and EfficientNet-B0, respectively) for classifying ripe, unripe, and damaged tomatoes, all of which achieved strong results. Li et al. [28] introduced the MHSA-YOLOv8 model for tomato maturity grading and fruit counting, which was suitable for practical production scenarios. Chen et al. [29] developed a cherry tomato multi-task detection network based on YOLOv7, which successfully handled cherry tomato detection as well as fruit and fruit cluster maturity grading, with an average inference time of 4.9 ms (RTX3080). Yue et al. [30] proposed an improved YOLOv8 network, RSR-YOLO, for long-distance recognition of tomato fruits, achieving accuracy, recall, F1-score, and mAP@0.5 of 91.6%, 85.9%, 88.7%, and 90.7%, respectively, while also designing a dedicated graphical user interface (GUI) for real-time tomato detection tasks.

In the study of tomato maturity recognition and classification, computational cost and model weight are key factors that directly impact the feasibility of the model in real-world deployment. As summarized in Table 1, a comparative analysis of key metrics related to tomato maturity recognition and classification research is conducted based on the cited references. Zheng et al. [24] improved YOLOv4 by incorporating depthwise separable convolutions to enhance the ability to capture small objects, achieving high-precision tomato maturity classification. However, they did not consider the model size and inference time needed for actual deployment. Zeng et al. [26] focused on deploying lightweight models on mobile devices with limited performance, significantly reducing model parameters by using the MobileNetV3 lightweight backbone and a pruned neck network, although there was still room for optimization in inference time. Li et al. [28] introduced the multi-head self-attention (MHSA) mechanism to enhance YOLOv8’s diverse feature extraction capabilities, enabling tomato maturity classification in complex scenarios with occlusion and overlap. However, to ensure accuracy improvement, the study did not reduce model parameters and computational costs, nor did it fully account for practical deployment needs. Chen et al. [29] added two additional decoders on the basis of YOLOv7 to detect tomato fruit bunches, fruit maturity, and bunch maturity, and they utilized scale-sensitive intersection over union (SIoU) to improve the model’s recognition accuracy. These improvements did not significantly increase inference time, but the high computational cost (103.3 G) limited the model’s further deployment. Yue et al. [30] enhanced feature fusion and used repulsion loss to improve YOLOv8 for tomato maturity classification and detection in large-area environments. However, its inference time (13.2 ms) and FLOPs (16.9 G) were higher than YOLOv8n’s 7.9 ms and 8.1 G. 
Overall, these advancements indicate progress in addressing the challenges of tomato maturity recognition and classification. However, there remains considerable opportunity for further research to reduce computational costs and model weight, which are crucial for practical deployment and scalability.

Table 1.

Comparative summary of key metrics for previous studies on tomato maturity classification and detection.

In prolonged robotic picking operations, models capable of performing multiple tasks simultaneously are essential for reducing computational costs [31,32,33]. Achieving accurate simultaneous recognition of tomato maturity and stem position is crucial for enhancing the performance and operational efficiency of tomato-picking robots, with further improvements needed in detection precision. Additionally, even on picking robots with limited computing power, lightweight designs can optimize operations and reduce energy consumption [34,35,36]. Current studies often overlook the necessity of distinguishing between foreground and background targets in picking scenarios [37,38]. In real tomato-picking environments, attention should be focused on the nearest row of targets while ignoring background elements.

To address these challenges, this study proposes an innovative lightweight model, MTS-YOLO, trained using a dataset annotated to include both the maturity of tomato fruit bunches and their stems. Compared to existing advanced detection methods, MTS-YOLO features fewer parameters, efficient feature fusion capabilities, and higher multi-task detection accuracy. Additionally, it tackles the challenge of distinguishing foreground picking targets from the background in practical picking scenarios. The specific contributions are as follows:

- (1) We propose the top-down select feature fusion module (TSFF), which enhances the MFDS-DETR [39] SFF module by replacing bilinear interpolation with DySample [40] upsampling, using point sampling to eliminate convolution operations, resulting in a lighter model with faster inference.

- (2) We propose HLIS-PAN, featuring the newly designed down-top select feature fusion module (DSFF), which fuses low-level features into high-level features, compensating for positional information loss and improving semantic understanding. Compared to the YOLOv8 neck network, HLIS-PAN is lighter and more efficient.

- (3) We integrate CAA [41] to sharpen the focus on central features, enhance elongated target recognition, and boost foreground detection precision, which contributes to optimizing the picking robot’s performance.

The remainder of this paper is organized as follows: Section 2 introduces the dataset utilized and provides a detailed description of the enhancements made to the model; Section 3 presents the experimental results, followed by validation and analysis; Section 4 discusses the current state of research on maturity and picking object-detection algorithms, the limitations of this study, and offers perspectives on future research directions; Section 5 concludes this study.

2. Materials and Methods

2.1. Experimental Dataset

2.1.1. Dataset Source

Our experimental data utilizes the publicly available 2022 Dataset of String Tomato in Shanxi Nonggu Tomato Town [42]. The data samples were collected from 23 July 2022, to 10 August 2022, and include images of tomato fruit bunches. These images were gathered at Shanxi Tiansen Dushi Tomato Technology Company in Tomato Town, Jinzhong National Agricultural High-tech Zone, Getou Village, Fancun Town, Taigu District, Jinzhong City, Shanxi Province. The collection environment was a greenhouse. After processing and filtering, 3665 images totaling 5.31 GB were selected. These images were annotated using the LabelImg tool, categorized into ‘mature’, ‘stem’, and ‘raw’ classes, focusing only on the nearest row of tomato fruit bunches in each image. The annotations were saved as TXT files in YOLO format, totaling 0.8 MB.

2.1.2. Dataset Sample Description

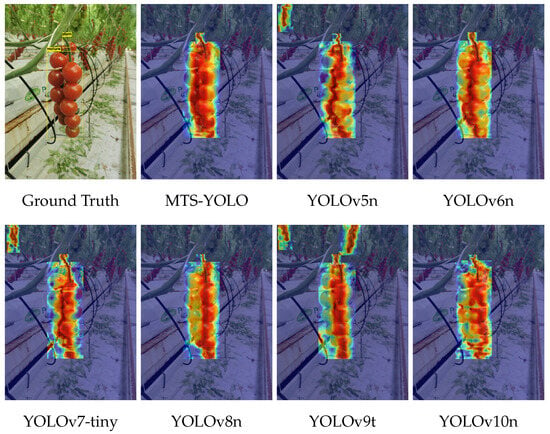

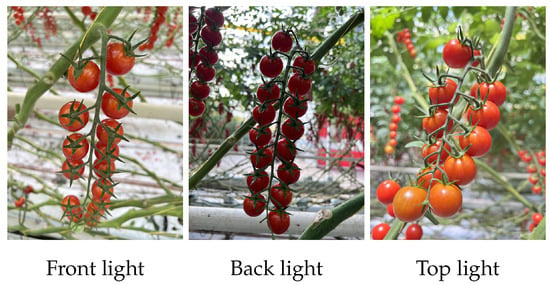

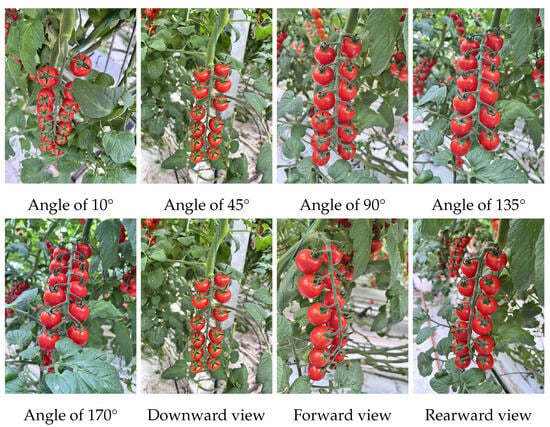

To facilitate the analysis of tomato fruit bunch characteristics and enhance the robustness of the training model, image data collection was conducted using multiple devices, time periods, and angles. The images were captured using iPhone 13 Pro Max, HUAWEI P30, HUAWEI Nova 5z, and OPPO A91 smartphones. The resolution of the images ranged from a minimum of 2736 × 3648 pixels to a maximum of 3000 × 4000 pixels. Figure 1 shows images taken at different times of the day, captured under various lighting conditions and orientations. A detailed description can be found in Appendix A Figure A1, Figure A2 and Figure A3.

Figure 1.

The images of tomato fruit bunches captured at different times of the day, under various lighting conditions and orientations.

2.1.3. Dataset Splitting

Based on a collection of 3665 valid images, the dataset is partitioned into training, validation, and test sets at a ratio of 8:1:1. The training set comprises 2932 images, the validation set includes 366 images, and the test set consists of 367 images. Specific details of the dataset are presented in Table 2.

Table 2.

Experimental dataset and data distribution.
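The split sizes reported above can be reproduced with simple integer arithmetic. The following is a minimal sketch, assuming the training and validation sizes are rounded down and the test set receives the remainder; the function name `split_counts` is hypothetical, and the rounding convention is an assumption that happens to match the reported counts.

```python
def split_counts(n_images, ratios=(8, 1, 1)):
    """Return (train, val, test) sizes for an integer ratio split.

    Train and validation sizes are rounded down; the test set takes
    the remainder so the three parts always sum to n_images.
    """
    total = sum(ratios)
    n_train = n_images * ratios[0] // total
    n_val = n_images * ratios[1] // total
    n_test = n_images - n_train - n_val
    return n_train, n_val, n_test


counts = split_counts(3665)
print(counts)  # (2932, 366, 367)
```

Assigning the remainder to the test set guarantees that no image is dropped when the total is not divisible by ten.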

To enhance the robustness and fairness of model comparisons, undersampling techniques are applied to balance the three classes within the dataset, resulting in a total of 1458 images. These images are split into training, validation, and test sets with an 8:1:1 ratio. The distribution details of this balanced dataset are shown in Table 3, while the results of the experimental comparisons can be found in Appendix B Table A1.

Table 3.

Balanced dataset and data distribution.

2.2. Model Introduction

2.2.1. Network Architecture of YOLOv8

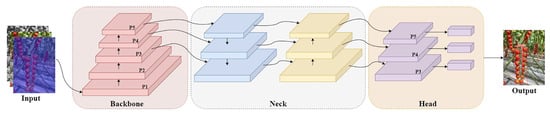

YOLOv8, introduced by Ultralytics, marks a significant leap forward in the YOLO series. Despite the subsequent releases of YOLOv9 and YOLOv10, YOLOv8 continues to stand out due to its anchor-free design and its task-aligned assigner for dynamically assigning positive and negative samples, which together provide an excellent balance between speed and accuracy [43]. With its stable and reliable performance, YOLOv8 has achieved significant success in industrial defect detection, vehicle recognition, and plant phenotype detection [44,45,46]. Additionally, YOLOv8 offers outstanding deployment capabilities for edge devices, making it highly effective for integration into picking robots and laying a solid foundation for future field deployments [47]. Among the YOLOv8 series, which includes x, l, m, s, and n versions, YOLOv8n is the most lightweight model. In our study, we choose YOLOv8n as the base model to minimize computational and memory costs, ensuring better performance on resource-limited or mobile devices. Figure 2 shows the YOLOv8 model and the structure of its modules. Its main components are the backbone network, neck network, and head network.

Figure 2.

Diagram of YOLOv8 network architecture.

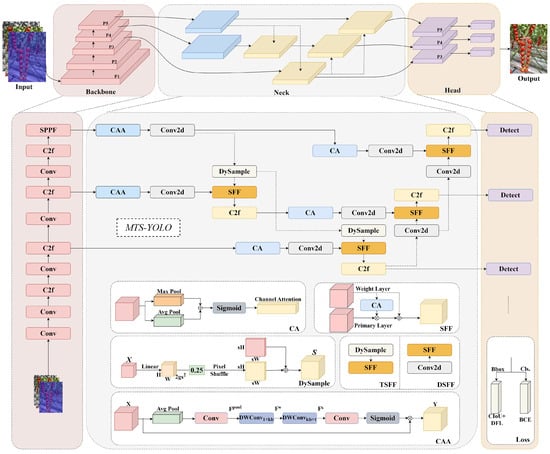

2.2.2. Network Architecture of MTS-YOLO

MTS-YOLO is an improvement based on the YOLOv8 architecture, and the network structure is shown in Figure 3. The entire network is divided into three parts: backbone, neck, and head. The backbone, head, and loss function inherit the design of YOLOv8, ensuring basic performance and stability. We design a new neck network, HLIS-PAN, based on the HS-FPN concept of MFDS-DETR. By utilizing the SFF module of HS-FPN, we design TSFF and DSFF, and combine them to achieve stronger multi-scale feature fusion capabilities. Moreover, in HLIS-PAN, we introduce the CAA module to capture long-range contextual information, thereby enhancing the network’s ability to capture slender shape features such as tomato fruit bunches and stems.

Figure 3.

Diagram of MTS-YOLO network architecture. The backbone (red) extracts multi-scale feature maps, which are passed to the neck network. In the neck, the CAA (blue) is applied to the feature maps from P4 and P5 to focus the model on relevant regions. Next, the CA (light blue) enhances the channel-wise importance of the feature maps. The SFF (orange) is used for selective feature fusion, and it is further improved into TSFF by integrating DySample (light yellow) for lightweight high-level feature filtering and fusion into low-level features. Additionally, the DSFF module combines Conv2d (gray) for further low-level feature filtering and fusion into high-level features. The features processed by TSFF and DSFF are passed to the C2f layer (yellow) for further refinement and aggregation. Finally, the detection layers (purple) output predictions for tomato maturity and stem positions.

2.3. High and Low-Level Interactive Screening Path Aggregation Network

As depicted in Figure 4, HLIS-PAN comprises two principal components: (1) the feature selection module and (2) the select feature fusion module. The neck network of YOLOv8 captures low-scale features through the large-parameter P3 and P4 layers, resulting in a significant increase in the number of parameters and computational load. In contrast, the P3 and P4 layers of HLIS-PAN have parameters consistent with the P5 layer. By establishing a bidirectional information exchange mechanism between low-level and high-level features, HLIS-PAN enhances their semantic and positional information. This not only integrates features at various levels effectively but also significantly reduces the number of parameters.

Figure 4.

Diagram of HLIS-PAN structure. The backbone (red) includes feature maps from P3, P4, and P5 layers. The feature selection module applies CAA (blue) and Conv2d (gray) operations to the feature maps from P4 and P5. The interactive screening feature fusion module contains TSFF (ochre) and DSFF (orange) modules. TSFF fuses high-level features into low-level features, while DSFF fuses low-level features into high-level features. The feature maps (beige-colored) represent the outputs generated after processing through the TSFF and DSFF modules.

Feature selection module: In the multi-scale feature maps generated by the backbone network, high-level features are rich in semantic information but lack clear localization details, whereas low-level features offer precise localization information but are limited in semantic content. HLIS-PAN introduces the CAA module, which enhances the target localization capabilities of high-level information and focuses more accurately on the feature information of elongated targets in the foreground.

Select feature fusion module: The SFF module of HS-FPN in MFDS-DETR screens and fuses low-level features through high-level features, achieving semantic enhancement of low-level features. However, this method does not use low-level features to assist high-level feature learning. Inspired by this, HLIS-PAN introduces a module called DSFF. After compensating for the lack of semantic information in low-level features, DSFF screens and fuses high-level features to enhance their localization ability, thus achieving the fusion of high-level and low-level interactive screening features. Additionally, we propose TSFF based on improvements to SFF, replacing the transposed convolutions and bilinear interpolation used in SFF with the lightweight upsampling method DySample. DySample employs dynamic point sampling technology to flexibly adjust the sampling grid, allowing for more comprehensive information perception. This reduces excessive kernel operations while achieving better feature representation capabilities.

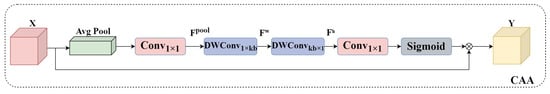

2.3.1. Context Anchor Attention

CAA employs strip convolutions along multiple axes to capture contextual relationships between distant pixels while simultaneously enhancing central features. This makes it particularly suitable for detecting elongated objects. A schematic diagram of the CAA model is shown in Figure 5. Initially, local features are obtained using average pooling followed by a 1 × 1 convolution, as shown in Equation (1):

Figure 5.

CAA module. The input feature map X (red cube) undergoes average pooling (green) to aggregate spatial information. A 1 × 1 convolution (light red) reduces channel dimensions. Vertical stripe depthwise convolution (blue) and horizontal stripe depthwise convolution (blue) capture directional information. Another 1 × 1 convolution (light red) is followed by a Sigmoid activation (gray). The resulting feature map is multiplied element-wise with the original X, producing the output Y (yellow cube).

Subsequently, we use vertical and horizontal stripe depthwise convolutions as approximations of the standard large kernel depth convolution, as shown in Equations (2) and (3):

Here, the strip kernel size is set to 11 + 2 × 1, giving the strip convolutions a sufficiently long receptive field to capture the features of slender objects. This operation achieves effects similar to those of standard large kernel depth convolution while effectively reducing computational complexity.

Finally, we use a 1 × 1 convolution followed by a sigmoid activation to generate attention weights. These weights are then multiplied with the input to complete the CAA operation, as shown in Equation (4):
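For reference, the four CAA steps described in this subsection can be restated compactly. This is a sketch rather than the exact published notation: the symbol names ($F^{\mathrm{pool}}$, $F^{w}$, $F^{h}$, $k_b$) and the order of the two strip convolutions are assumptions chosen for illustration, following the usual presentation of CAA [41]; $\odot$ denotes element-wise multiplication.

```latex
\begin{align}
F^{\mathrm{pool}} &= \mathrm{Conv}_{1\times 1}\big(\mathrm{AvgPool}(X)\big) \tag{1}\\
F^{w} &= \mathrm{DWConv}_{1\times k_b}\big(F^{\mathrm{pool}}\big) \tag{2}\\
F^{h} &= \mathrm{DWConv}_{k_b\times 1}\big(F^{w}\big) \tag{3}\\
Y &= \mathrm{Sigmoid}\big(\mathrm{Conv}_{1\times 1}(F^{h})\big) \odot X \tag{4}
\end{align}
```

Two strip kernels of sizes $1 \times k_b$ and $k_b \times 1$ use $2k_b$ weights per channel, compared with $k_b^2$ for a full $k_b \times k_b$ depthwise kernel, which is where the reduction in computational complexity comes from.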

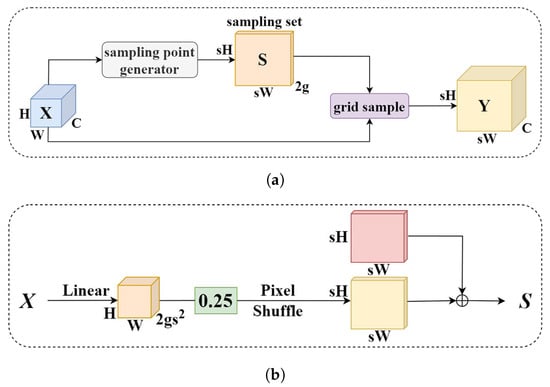

2.3.2. Top-Down Select Feature Fusion Module

As shown in Figure 6, DySample is an innovative dynamic upsampling method that enhances feature resolution by utilizing point sampling instead of dynamic convolution. It generates content-aware offsets through linear projection and then resamples the input feature maps through bilinear interpolation. Initially, given an upsampling ratio factor s = 2, a static range factor of 0.25, and a grouping g = 4, for a feature map X of size C × H × W, the output of a linear layer with C input channels and 2gs² output channels is multiplied by the static range factor to generate offsets O of size 2gs² × H × W. These offsets are then reshaped into 2g × sH × sW through a pixel shuffling operation, as shown in Equation (5). The sum of offsets O and the original sampling grid G produces the sampling set S, with the size of 2g × sH × sW, where 2g represents the x and y coordinates of g groups, as shown in Equation (6):

Figure 6.

DySample module. (a) Sampling-based dynamic upsampling. (b) Sampling point generator in DySample with the static scope factor.

Using the coordinates from the sampling set S, the input feature map X is upsampled via bilinear interpolation to obtain a new feature Y, with the dimensions C × sH × sW, as shown in Equation (7), where grid_sample represents the bilinear interpolation method.
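The three DySample steps described above can be summarized as follows. This is a restatement under the assumption that the equations take the form implied by the surrounding text; the operator names $\mathrm{Linear}$ and $\mathrm{PixelShuffle}$ are used here for illustration.

```latex
\begin{align}
O &= \mathrm{PixelShuffle}\big(0.25 \cdot \mathrm{Linear}(X)\big), & O &\in \mathbb{R}^{2g \times sH \times sW} \tag{5}\\
S &= G + O, & S &\in \mathbb{R}^{2g \times sH \times sW} \tag{6}\\
Y &= \mathrm{grid\_sample}(X, S), & Y &\in \mathbb{R}^{C \times sH \times sW} \tag{7}
\end{align}
```

With $s = 2$ and $g = 4$, the linear layer produces $2gs^2 = 32$ offset channels, which pixel shuffling rearranges into $2g = 8$ channels at twice the spatial resolution.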

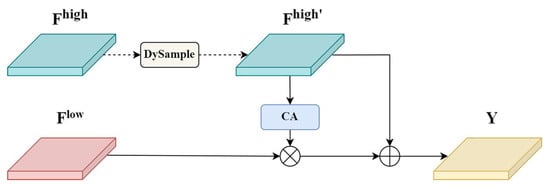

The structure of the TSFF module is shown in Figure 7. To achieve the screening and fusion of high-level features with low-level features, it is first necessary to unify their dimensions. Therefore, the given high-level features are upsampled using the DySample method so that they have the same dimensions as the low-level features, as shown in Equation (8). Then, the attention weights from the high-level features are captured using CA for screening the low-level features. Finally, the screened low-level features are fused with the attention weights of the high-level features to produce the output Y, which has the same dimensions as the low-level features, as shown in Equation (9):

Figure 7.

Diagram of TSFF structure. The high-level feature map (cyan) is upsampled by DySample (light yellow), producing an upsampled feature map (cyan). After channel attention by CA (light blue), it is fused with the low-level feature map (red) via element-wise addition. The output is then passed to Y (yellow).

DySample replaces the combination of transposed convolution and bilinear interpolation. Its design, which uses point sampling without the need for kernels, can reduce computational overhead and improve upsampling efficiency. The content-aware offset function allows for the adjustment of sampling points based on the feature map, providing a larger receptive field, more extensive capture and retention of beneficial features, and improved upsampling performance.
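The point-sampling idea can be illustrated with a small, dependency-free sketch. It is a simplification under stated assumptions, not the DySample implementation: it handles a single channel and a single group, takes the offsets as a given argument instead of producing them with a learned linear layer and pixel shuffle, and expresses offsets in input-pixel units. The function names `bilinear_sample` and `point_sample_upsample` are hypothetical.

```python
import math


def bilinear_sample(feat, y, x):
    """Bilinearly interpolate the 2D list `feat` at the real-valued point (y, x).

    Coordinates are clamped to the border of the feature map.
    """
    h, w = len(feat), len(feat[0])
    y = min(max(y, 0.0), h - 1.0)
    x = min(max(x, 0.0), w - 1.0)
    y0, x0 = int(math.floor(y)), int(math.floor(x))
    y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
    wy, wx = y - y0, x - x0
    top = feat[y0][x0] * (1 - wx) + feat[y0][x1] * wx
    bottom = feat[y1][x0] * (1 - wx) + feat[y1][x1] * wx
    return top * (1 - wy) + bottom * wy


def point_sample_upsample(feat, offsets=None, scale=2):
    """Upsample `feat` by sampling it at grid positions shifted by offsets.

    offsets[i][j] is a (dy, dx) pair for output pixel (i, j); it stands in
    for the content-aware offsets that DySample would predict. With no
    offsets, the result is plain bilinear upsampling.
    """
    h, w = len(feat), len(feat[0])
    out = []
    for i in range(h * scale):
        row = []
        for j in range(w * scale):
            # Original sampling grid G: centre of output pixel (i, j)
            # expressed in input-pixel coordinates.
            gy = (i + 0.5) / scale - 0.5
            gx = (j + 0.5) / scale - 0.5
            dy, dx = offsets[i][j] if offsets is not None else (0.0, 0.0)
            # Sampling set S = G + O, followed by bilinear resampling.
            row.append(bilinear_sample(feat, gy + dy, gx + dx))
        out.append(row)
    return out


feat = [[0.0, 1.0], [2.0, 3.0]]
plain = point_sample_upsample(feat)
print(len(plain), len(plain[0]))  # 4 4
```

No convolution kernel is evaluated per output pixel: each output value costs one bilinear lookup, and the offsets alone decide where that lookup lands.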

2.3.3. Down-Top Select Feature Fusion Module

In the HLIS-PAN, the DSFF is a newly added module, the structure of which is shown in Figure 8. The DSFF module is designed to achieve the screening and fusion of high-level features by low-level features. The given low-level features are processed through two-dimensional convolution to extract effective features and reduce dimensions, as shown in Equation (10), so that the processed features are consistent with the high-level feature dimensions. Subsequently, the following steps are consistent with those of the TSFF, using CA to capture the attention weights of the low-level features for screening the high-level features. Finally, the screened high-level features are fused with the attention weights of the low-level features to produce the output Y, which has the same dimensions as the high-level features, as shown in Equation (11):

Figure 8.

Diagram of DSFF structure. The high-level feature map (cyan) is fused with the low-level feature map (red), which has been processed by Conv2d (gray) and enhanced by the CA (light blue) module, producing the final output Y (yellow) through element-wise addition.

Through the DSFF module, the capability for multi-scale fusion can be further enhanced. Low-level features can screen and integrate beneficial information into high-level features, compensating for the high-level features’ lack of localization precision. This process not only maintains the abstract semantic information of the high-level features but also improves the localization accuracy of the overall feature map, thereby enhancing the model’s performance in object detection tasks.
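Read together, the TSFF and DSFF descriptions suggest the following compact form. This is a sketch that assumes both modules keep the fusion pattern of the SFF module in HS-FPN [39], in which channel-attention weights multiply the feature being screened and the resampled feature is added back; the symbols $X_{\mathrm{high}}$, $X_{\mathrm{low}}$, $\hat{X}_{\mathrm{high}}$, and $\hat{X}_{\mathrm{low}}$ are introduced here for illustration, and the exact fusion term may differ from the one used in the paper's equations.

```latex
\begin{align}
\hat{X}_{\mathrm{high}} &= \mathrm{DySample}(X_{\mathrm{high}}) \tag{8}\\
Y_{\mathrm{TSFF}} &= \mathrm{CA}\big(\hat{X}_{\mathrm{high}}\big) \odot X_{\mathrm{low}} + \hat{X}_{\mathrm{high}} \tag{9}\\
\hat{X}_{\mathrm{low}} &= \mathrm{Conv2d}(X_{\mathrm{low}}) \tag{10}\\
Y_{\mathrm{DSFF}} &= \mathrm{CA}\big(\hat{X}_{\mathrm{low}}\big) \odot X_{\mathrm{high}} + \hat{X}_{\mathrm{low}} \tag{11}
\end{align}
```

The two modules are mirror images: TSFF resamples upward and screens low-level features, while DSFF resamples downward and screens high-level features.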

3. Results and Analysis

3.1. Experimental Environment and Parameter Settings

The experiment is conducted on the Rocky Linux 9.4 (Blue Onyx) operating system, leveraging an Intel Xeon Silver 4214 CPU and an Nvidia RTX 4090 GPU. PyTorch version 1.13.1 serves as the deep-learning framework in conjunction with CUDA version 11.7, and Python 3.8 is used throughout the experiment.

The model is trained for a total of 300 epochs with a batch size of 16, and all input images are resized to dimensions of 640 × 640 pixels. Stochastic gradient descent (SGD) with a momentum of 0.937 serves as the optimizer, ensuring efficient and stable parameter updates. The training begins with an initial learning rate (Lr0) of 0.01, which is consistently maintained throughout the training process. For bounding box optimization, the complete intersection over union (CIoU) loss function is employed. To mitigate the risk of overfitting, a weight decay of 0.0005 is applied. A warmup phase is implemented at the beginning of training, consisting of 3.0 warmup epochs, during which the warmup momentum is set to 0.8 and the warmup bias learning rate is 0.1. The initial training parameters are detailed in Table 4.

Table 4.

Initial training parameters.
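The settings described in this subsection can be collected into a single configuration mapping. The sketch below assumes the key names mirror the Ultralytics training arguments; only values stated in the text are included, and Table 4 remains the authoritative list.

```python
# Training settings taken from the text; key names are assumed to follow
# the Ultralytics training-argument naming.
train_cfg = {
    "epochs": 300,            # total training epochs
    "batch": 16,              # batch size
    "imgsz": 640,             # inputs resized to 640 x 640 pixels
    "optimizer": "SGD",       # stochastic gradient descent
    "momentum": 0.937,        # SGD momentum
    "lr0": 0.01,              # initial learning rate
    "weight_decay": 0.0005,   # regularization against overfitting
    "warmup_epochs": 3.0,     # length of the warmup phase
    "warmup_momentum": 0.8,   # momentum during warmup
    "warmup_bias_lr": 0.1,    # bias learning rate during warmup
}

for name, value in train_cfg.items():
    print(f"{name}: {value}")
```

Keeping the values in one mapping makes it straightforward to log them with each run and to pass them unchanged to every model in a comparison.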

3.2. Model Evaluation Metrics

To assess both the performance and lightweight capability of our model, we employ eight metrics: precision, recall, F1-score, mAP@0.5, mAP@0.5:0.95, parameters, time, and FLOPs. Precision quantifies the ratio of correct positive predictions to all positive predictions, where TP represents correctly identified positive instances and FP represents false positive instances. It is calculated as Equation (12):

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{12}$$

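Recall determines the proportion of actual positives correctly identified by the model, where TP represents correctly identified positive instances and FN represents false negative instances. It is calculated as Equation (13):

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{13}$$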

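The F1-score, the harmonic mean of precision and recall, provides a metric that reflects the model’s classification ability, ensuring that neither precision nor recall dominates the evaluation. It is calculated as Equation (14):

$$F1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{14}$$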
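As a worked illustration of precision, recall, and F1-score, the sketch below computes all three from raw counts. The counts are hypothetical and are not taken from the experiments; the function name `precision_recall_f1` is likewise illustrative.

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall, and F1-score from detection counts.

    Each ratio falls back to 0.0 when its denominator is zero.
    """
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Hypothetical counts: 90 correct detections, 10 false alarms, 30 misses.
p, r, f1 = precision_recall_f1(tp=90, fp=10, fn=30)
print(f"precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
```

Because the F1-score is a harmonic mean, it stays closer to the lower of the two inputs, so a model cannot score well by maximizing only one of them.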

The mean average precision (mAP) is a key metric for evaluating the performance of object detection models across multiple classes. It is calculated by taking the mean of the AP values for each class. AP itself is determined by the area under the precision–recall curve for a particular class, reflecting the model’s ability to correctly identify the class at various confidence thresholds. It is calculated as Equation (15):

$$\mathrm{mAP} = \frac{1}{n}\sum_{i=1}^{n} AP_i \tag{15}$$

where $AP_i$ is the average precision for the i-th category, and n is the total number of classes.

mAP@0.5 refers to the mean average precision calculated at a fixed IoU threshold of 0.5. mAP@0.5:0.95 represents the mean average precision averaged over multiple IoU thresholds ranging from 0.5 to 0.95 in increments of 0.05.
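The role of the IoU threshold can be made concrete with a short sketch. It assumes boxes in (x1, y1, x2, y2) corner format and takes per-class AP values as given inputs rather than computing them from precision–recall curves; all function names and numbers are hypothetical.

```python
def box_iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# The ten thresholds used by mAP@0.5:0.95: 0.50, 0.55, ..., 0.95.
IOU_THRESHOLDS = [round(0.5 + 0.05 * i, 2) for i in range(10)]


def mean_ap(ap_per_class):
    """Mean of per-class AP values at a single IoU threshold."""
    return sum(ap_per_class) / len(ap_per_class)


def map_50_95(ap_table):
    """Average mAP over thresholds; ap_table[t][c] is the AP of class c
    at the t-th IoU threshold."""
    return sum(mean_ap(row) for row in ap_table) / len(ap_table)


# A prediction counts as a match at mAP@0.5 only if its IoU with the
# ground-truth box is at least 0.5.
iou = box_iou((0, 0, 2, 2), (1, 1, 3, 3))
print(iou >= 0.5)  # False: the boxes overlap by only 1/7
```

Since mAP@0.5:0.95 averages over stricter thresholds as well, it rewards tighter localization than mAP@0.5 and is usually the lower of the two numbers.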

Parameters indicate the total number of trainable parameters in the model, reflecting its complexity and storage requirements. The time represents the overall time the model takes to process an image. The time taken to process each image can be expressed as Equation (16):

Floating point operations (FLOPs) gauge the computational complexity and resource demands of the model; they count the operations required for a single forward pass and are reported here in units of G (10⁹). These metrics collectively offer a comprehensive evaluation of the model’s performance and efficiency.

3.3. Training and Testing Results of MTS-YOLO

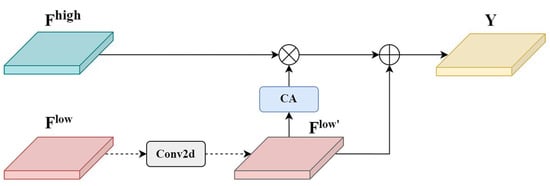

Figure 9a illustrates the validation loss curves for MTS-YOLO. The loss components for MTS-YOLO include ‘val/cls_loss’, ‘val/box_loss’, and ‘val/dfl_loss’. The ‘val/cls_loss’ represents the classification loss on the validation set, measuring the model’s accuracy in categorizing different targets. The ‘val/box_loss’ represents the bounding box loss on the validation set, assessing the precision of the model in locating target boundaries. The ‘val/dfl_loss’ represents the distribution focal loss (DFL) on the validation set, evaluating the accuracy of the model in predicting the distribution of bounding box boundary positions. The loss values exhibit a rapid decline initially and eventually stabilize as the epochs progress. Among the three losses, ‘val/cls_loss’ is the lowest, followed by ‘val/box_loss’, with ‘val/dfl_loss’ being the highest.

Figure 9.

Model training results. (a) The validation loss curve during MTS-YOLO training, (b) the precision–recall curves for various object classes, and (c) the normalized confusion matrix.

Figure 9b presents the precision–recall (P–R) curves for different object classes (‘mature’, ‘stem’, and ‘raw’) as well as the overall performance. The aggregate P–R curve, with an mAP@0.5 of 92.0%, highlights MTS-YOLO’s robust performance across all classes, despite some variability in individual class performance. The lower performance for the ‘stem’ class is due to its similarity to other objects and background interference, making it a more challenging detection task.

Figure 9c shows the normalized confusion matrix, which evaluates the model’s classification performance across different categories. The horizontal axis represents the true labels, while the vertical axis represents the predicted labels. The color intensity of each cell indicates prediction accuracy, with darker shades representing higher accuracy. For the ‘mature’ category, MTS-YOLO achieves very high accuracy, with a correct prediction rate of 96.0%. The ‘stem’ category poses a more complex classification challenge, with a correct prediction rate of 81.0%. This is partly due to the strong similarity between background and target stems, leading to many backgrounds being misclassified as stems. For the ‘raw’ category, MTS-YOLO shows a commendable accuracy rate of 93.0%.
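How a normalized confusion matrix of this kind can be obtained from raw counts is sketched below. The sketch assumes rows are predicted labels and columns are true labels, matching the axis description above, and that each column is normalized by its sum; the counts are hypothetical and the function name `normalize_by_true_label` is illustrative.

```python
def normalize_by_true_label(matrix):
    """Divide every column by its sum so each true-label column sums to 1.

    matrix[r][c] counts instances with predicted label r and true label c.
    Columns with no instances are left as zeros.
    """
    n_rows, n_cols = len(matrix), len(matrix[0])
    col_sums = [sum(matrix[r][c] for r in range(n_rows)) for c in range(n_cols)]
    return [
        [matrix[r][c] / col_sums[c] if col_sums[c] else 0.0 for c in range(n_cols)]
        for r in range(n_rows)
    ]


# Hypothetical two-class counts (rows: predicted, columns: true).
counts = [
    [8, 1],
    [2, 9],
]
normalized = normalize_by_true_label(counts)
print(normalized)
```

Under this convention, each diagonal entry is the fraction of a true class that was predicted correctly, which is how the per-category rates above can be read.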

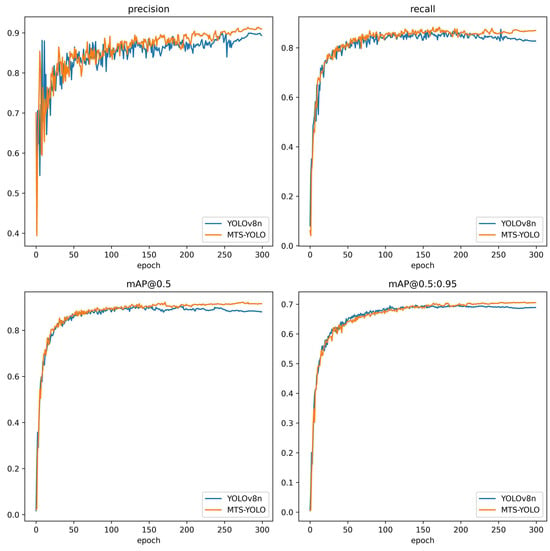

Based on the data in Table 5, MTS-YOLO shows clear advantages over YOLOv8n across multiple categories. In the ‘mature’ category, MTS-YOLO matches YOLOv8n in mAP@0.5 and mAP@0.5:0.95 but outperforms it by 2.0% in precision and 1.2% in F1-score, leading to more accurate classification of mature tomatoes. In the ‘stem’ category, despite a slight dip in precision, MTS-YOLO improves recall by 6.6%, resulting in a 3.5% increase in F1-score. This trade-off between precision and recall indicates that MTS-YOLO is better at reducing false negatives, which is crucial for real-world recognition. Additionally, MTS-YOLO achieves a 4.0% higher mAP@0.5 and a 1.2% higher mAP@0.5:0.95 in this category, enhancing its ability to accurately detect stem regions. In the ‘raw’ category, MTS-YOLO achieves a 7.2% increase in precision, a 3.4% increase in F1-score, and a 1.3% improvement in mAP@0.5:0.95. Overall, aggregating results across all categories, MTS-YOLO shows a 2.7% increase in precision, a 2.0% increase in recall, a 2.4% increase in F1-score, a 1.4% higher mAP@0.5, and a 0.9% higher mAP@0.5:0.95. These improvements highlight the model’s superior capability in distinguishing tomato fruit bunch maturity and detecting stems. The performance comparison curves of MTS-YOLO and YOLOv8n for precision, recall, mAP@0.5, and mAP@0.5:0.95 are shown in Appendix C, Figure A4.

Table 5.

Comparison experiments on the performance of MTS-YOLO and YOLOv8n in three categories.

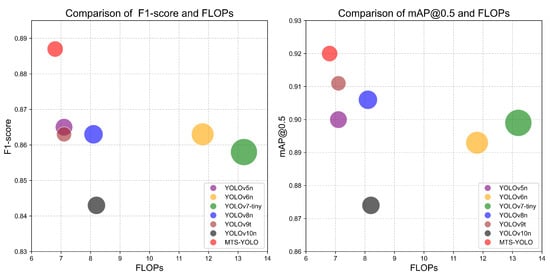
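The F1-score discussed for Table 5 is the harmonic mean of precision and recall, which is why a large gain in recall can outweigh a small loss in precision. The sketch below illustrates this with hypothetical precision and recall values; they are not the values in Table 5.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0 if both are 0)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Hypothetical baseline and improved model (illustrative values only).
baseline_f1 = f1_score(precision=0.85, recall=0.75)
# Precision dips slightly while recall rises noticeably.
improved_f1 = f1_score(precision=0.84, recall=0.82)
f1_gain = improved_f1 - baseline_f1
```

Because the harmonic mean is dominated by the smaller of the two inputs, raising the weaker metric (here recall) moves F1 more than an equal change in the stronger one.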

3.4. Comparison of Neck

In this study, we evaluate ten different modifications to the neck network of YOLOv8. We implement the following neck networks: YOLOv8-EfficientRepBiPAN [17], YOLOv8-GDFPN [48], YOLOv8-GoldYOLO [49], YOLOv8-CGAFusion [50], and YOLOv8-HS-FPN [36]. For MTS-YOLO, we replace the attention mechanisms with MTS-YOLO-SimAM [51], MTS-YOLO-CBAM [52], and MTS-YOLO-CAFM [53]. We also substitute the upsampling module with MTS-YOLO-CARAFE [54]. Table 6 presents the detailed performance parameters of these networks.

Table 6.

Comparison of neck network model improvements.

YOLOv8-EfficientRepBiPAN demonstrates better mAP@0.5 performance but does not show an advantage in inference time. YOLOv8-GDFPN exhibits good classification performance, although its mAP@0.5 performance is unsatisfactory. The addition of SimAM, CBAM, and CAFM attention mechanisms does not yield significant performance improvements. The CARAFE upsampling method delivers strong performance but noticeably increases inference time due to its content-aware processing, which involves compressing channels, encoding content, and normalizing kernels for each target location, thereby adding to the computational load. Although YOLOv8-HS-FPN has the smallest parameter count, the DySample upsampling used in MTS-YOLO results in shorter inference times. Additionally, MTS-YOLO’s HLIS-PAN demonstrates excellent feature fusion and extraction capabilities, leading to superior classification performance and mAP. Overall, MTS-YOLO shows the best comprehensive performance.

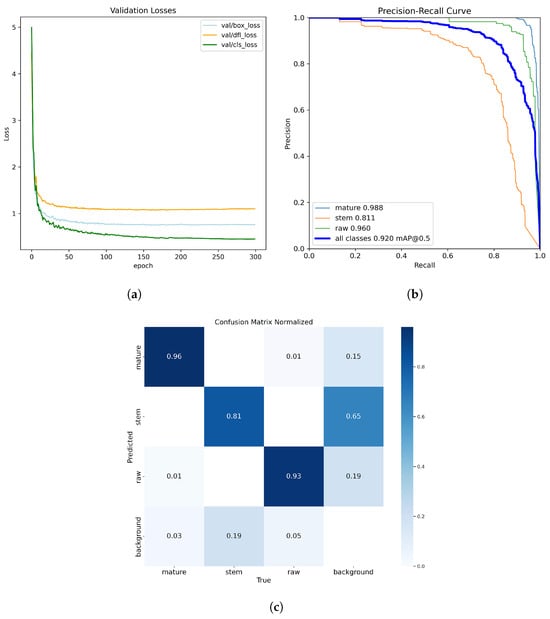

3.5. Comparison of Model Performance with Lightweight State-of-the-Art Models

To further validate the performance of MTS-YOLO, we compared it with current state-of-the-art object-detection algorithms. As shown in Table 7, the models compared include YOLOv5n, YOLOv6n, YOLOv7-tiny, YOLOv8n, YOLOv9t, and YOLOv10n. These are the lightweight versions of the most advanced object-detection algorithms and are designed to balance detection performance against model size and computational cost.

Table 7.

Comparison of detection performance between MTS-YOLO and lightweight state-of-the-art object-detection models.

Compared to these state-of-the-art lightweight object-detection models, MTS-YOLO exhibits the best performance in precision, recall, F1-score, mAP@0.5, and FLOPs. In terms of the F1-score, MTS-YOLO shows improvements of 2.2%, 2.4%, 2.9%, 2.4%, 2.4%, and 4.4% compared to YOLOv5n, YOLOv6n, YOLOv7-tiny, YOLOv8n, YOLOv9t, and YOLOv10n, respectively. For mAP@0.5, MTS-YOLO improves by 2.0%, 2.7%, 2.1%, 1.4%, 0.9%, and 4.6%. Additionally, MTS-YOLO reduces FLOPs by 4.2%, 42.4%, 47.7%, 16.0%, 4.2%, and 17.1%. Although the pruned YOLOv9 has fewer parameters, YOLOv9t employs a programmable gradient information (PGI) training strategy, and the addition of reversible branches significantly increases the training cost. Moreover, YOLOv9t does not have an advantage in inference time either. YOLOv10n eliminates the need for non-maximum suppression (NMS), resulting in very short inference times but at the expense of accuracy. Figure 10 clearly demonstrates that our model is both lighter and more effective in classification and mAP. MTS-YOLO not only outperforms these models in terms of performance but also shows significant advantages in parameter efficiency and computational effectiveness.

Figure 10.

Comparison of lightweight networks. The size of the circles in the two graphs represents the number of parameters.
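The FLOPs reductions quoted above are relative differences with respect to each baseline. The sketch below recomputes them from the FLOPs column of Table A1 in Appendix B, on the assumption that those values, which depend on the architecture and not on the dataset, are the same ones behind Table 7. The YOLOv9t entry uses the pruned variant marked with an asterisk, and the function name is illustrative.

```python
# FLOPs in G, copied from the FLOPs column of Table A1 (Appendix B).
baseline_flops_g = {
    "YOLOv5n": 7.1,
    "YOLOv6n": 11.8,
    "YOLOv7-tiny": 13.0,
    "YOLOv8n": 8.1,
    "YOLOv9t *": 7.1,   # pruned variant
    "YOLOv10n": 8.2,
}
MTS_YOLO_FLOPS_G = 6.8

def relative_reduction(baseline, ours):
    """Percentage by which `ours` is smaller than `baseline`."""
    return (baseline - ours) / baseline * 100.0

reductions = {
    name: round(relative_reduction(flops, MTS_YOLO_FLOPS_G), 1)
    for name, flops in baseline_flops_g.items()
}
```

Under that assumption, the rounded values reproduce the percentages stated in the text.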

3.6. Display of Visual Results

To validate the effectiveness of MTS-YOLO in practical application scenarios, we conducted detection on the test set images and compared its performance with that of other lightweight models. During the detection process, we set the confidence threshold to 0.6 and the IoU threshold to 0.45. Figure 11 shows images similar to those encountered by tomato-picking robots when harvesting mature tomatoes. It is evident that other models fail to detect two stems completely, whereas MTS-YOLO accurately identifies both the tomato fruit bunches and the stems. Figure 12 depicts a bunch of tomatoes, with the lower part being immature. Other models exhibit false detections or over-detections in this scenario, while only MTS-YOLO successfully detects the immature tomato fruit bunch. In summary, MTS-YOLO provides precise detection of tomato fruit bunch maturity and stems, making it highly suitable for application in tomato-picking robots by accurately focusing on foreground targets.

Figure 11.

Detection results of different lightweight detectors in a scene containing mature tomato fruit bunches and stems.

Figure 12.

Detection results of different lightweight detectors in an immature tomato fruit bunch environment.
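The confidence threshold of 0.6 and IoU threshold of 0.45 used for these visual results act at the post-processing stage: low-confidence boxes are discarded first, and non-maximum suppression (NMS) then removes boxes that overlap a higher-scoring box by more than the IoU threshold. The following is a minimal, class-agnostic sketch of that filtering in NumPy. It is an illustration under stated assumptions, not the pipeline used in the experiments, which typically applies NMS per class; the function names and toy boxes are hypothetical.

```python
import numpy as np

def iou_one_to_many(box, boxes):
    """IoU between one box and an array of boxes, all as (x1, y1, x2, y2)."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def filter_detections(boxes, scores, conf_thres=0.6, iou_thres=0.45):
    """Confidence filtering followed by greedy, class-agnostic NMS."""
    boxes = np.asarray(boxes, dtype=float)
    scores = np.asarray(scores, dtype=float)
    # Step 1: drop boxes below the confidence threshold.
    confident = scores >= conf_thres
    boxes, scores = boxes[confident], scores[confident]
    # Step 2: visit boxes from highest to lowest score.
    order = np.argsort(-scores)
    kept = []
    while order.size > 0:
        best = order[0]
        kept.append(best)
        rest = order[1:]
        if rest.size == 0:
            break
        # Suppress remaining boxes that overlap the kept box too much.
        overlaps = iou_one_to_many(boxes[best], boxes[rest])
        order = rest[overlaps <= iou_thres]
    kept = np.array(kept, dtype=int)
    return boxes[kept], scores[kept]

# Toy detections (hypothetical): two overlapping boxes, one separate box,
# and one low-confidence box.
toy_boxes = [[0, 0, 10, 10], [1, 1, 11, 11], [20, 20, 30, 30], [50, 50, 60, 60]]
toy_scores = [0.9, 0.8, 0.7, 0.5]
kept_boxes, kept_scores = filter_detections(toy_boxes, toy_scores)
```

A higher confidence threshold reduces false detections on background objects at the cost of possibly missing faint targets such as thin stems.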

Figure 13 displays the heatmap results of various lightweight state-of-the-art object-detection models for detecting mature tomato fruit bunches and stems. The heatmaps reveal that MTS-YOLO, YOLOv6n, YOLOv8n, and YOLOv10n successfully target mature tomato fruit bunches. However, YOLOv5n, YOLOv7-tiny, and YOLOv9t identify background fruit bunches that fall outside the picking robot’s operational range. Additionally, YOLOv5n, YOLOv8n, YOLOv9t, and YOLOv10n exhibit some inaccuracies when detecting stems. In contrast, MTS-YOLO demonstrates superior accuracy in identifying stems, effectively directing attention to the correct locations. This precision is particularly valuable for guiding the robot to the optimal picking points.

Figure 13.

Heatmap comparison of MTS-YOLO and lightweight state-of-the-art models.

3.7. Comparison of Model Performance with Larger State-of-the-Art Models

As shown in Table 8, MTS-YOLO, despite its lightweight design, delivers impressive performance. Compared to YOLOv7, which has significantly more parameters, MTS-YOLO only slightly lags behind in terms of mAP@0.5. When compared to YOLOv8s, which shares the same structure as YOLOv8n but has more parameters, MTS-YOLO exhibits comprehensive superiority. Although these larger models possess significantly more parameters, they do not demonstrate a noticeable improvement in feature learning. This underscores the efficiency of MTS-YOLO’s feature selection and fusion mechanisms. Additionally, MTS-YOLO shows advantages in inference time over these larger models. Overall, these comparisons highlight MTS-YOLO as a highly accurate, efficient, and computationally economical option for object detection.

Table 8.

Comparison of detection performance between MTS-YOLO and larger state-of-the-art object-detection models.

3.8. Ablation Experiment

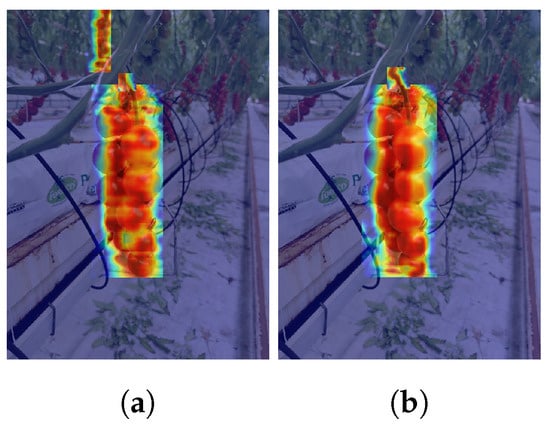

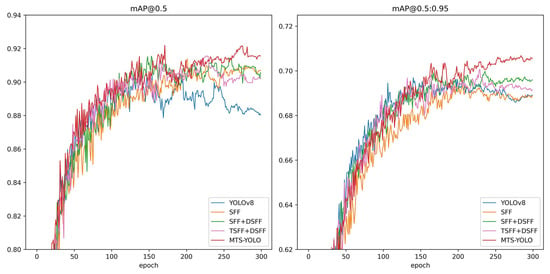

Based on the ablation study results in Table 9, we assess the impact of each component on model performance. Adding the TSFF module (Model B) demonstrates that using DySample for upsampling, without convolution, significantly reduces inference time and computational cost with minimal performance impact. Building on this, the integration of the DSFF module (Model E) enhances feature selection and fusion capabilities. Although this leads to a slight increase in parameter count and inference time, it results in improvements of 1.3% in F1-score and 1.0% in mAP@0.5. Finally, incorporating the CAA module (MTS-YOLO) yields additional gains of 1.0% in F1-score and 0.4% in mAP@0.5 without extending inference time. This is because strip convolutions have a lower computational load than large-kernel convolutions, so the computational cost barely increases. As shown in Figure 14, the CAA module effectively captures key features of tomato fruit bunches and stems. The performance curves for mAP@0.5 and mAP@0.5:0.95 from the ablation experiments involving the addition of the three modules to MTS-YOLO are presented in Appendix D, Figure A5.

Table 9.

Results and test process of the ablation experiment.

Figure 14.

Heatmap results of the CAA module ablation experiment. (a) Results without the introduction of the CAA module. (b) Results with the introduction of the CAA module.
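The cost argument for strip convolutions can be made concrete by counting weights. Assuming depth-wise convolutions, a k × k kernel needs k² weights per channel, whereas a 1 × k strip followed by a k × 1 strip needs only 2k while covering the same receptive field. The sketch below shows the arithmetic; the channel count and kernel size are hypothetical and are not taken from the MTS-YOLO configuration.

```python
def depthwise_square_params(channels, k):
    """Weights in one depth-wise k x k convolution (bias ignored)."""
    return channels * k * k

def depthwise_strip_pair_params(channels, k):
    """Weights in a depth-wise 1 x k plus k x 1 convolution pair (bias ignored)."""
    return channels * 2 * k

# Hypothetical setting for illustration only.
channels, k = 64, 11
square = depthwise_square_params(channels, k)
strips = depthwise_strip_pair_params(channels, k)
saving_ratio = square / strips  # equals k / 2
```

The ratio grows linearly with the kernel size, so the benefit is largest for the wide receptive fields needed to capture elongated targets such as stems.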

4. Discussion

4.1. Discussion of the Current Research Status

In recent years, numerous researchers have focused on developing object-detection algorithms for fruit maturity and picking point localization [55]. Liu et al. [56] proposed a YOLO-based algorithm with a Little-CBAM structure and MobileNetv3 backbone for blueberry maturity detection, achieving a 78.3% mAP, which is 9% higher than YOLOv5x. This approach successfully identified all blueberries within the field of view, demonstrating high detection success under strong lighting and in high-density conditions. Zhang et al. [57] introduced the YOLOMS model, optimizing YOLOv5s with a RepVGG structure and Focal-EIoU loss function. It achieved 82.42% mAP, 85.64% recall, 82.26% mean intersection over union (MIoU) in stem segmentation, 92.19% recognition accuracy, an 89.84% picking success rate, and an average localization time of 58.4 ms, effectively overcoming challenges such as leaf occlusion and fruit overlap. Similarly, Hou et al. [58] proposed a method combining CBAM with Mask R-CNN and Soft-NMS for precise citrus pedicel detection, achieving 95.04% precision and a 91.44% F1-score, effectively addressing the challenge of detecting small citrus pedicels that blend with the background. While these studies tackle issues like overlapping occlusions, varying lighting conditions, and background color interference, they focus on target recognition regardless of distance. This approach does not account for the limited operational range of picking robots. There is a need for algorithms that prioritize recognizing nearby targets while ignoring distant ones. MTS-YOLO addresses this challenge, enhancing the efficiency of detecting targets within the picking robot’s operational scope.

Robots deployed in plant factories or open-field environments often have limited edge computing capabilities [59]. Therefore, it is crucial to achieve a lightweight design while maintaining accuracy, which is a mainstream research focus [60]. Meng et al. [61] introduced a spatio-temporal convolutional neural network model with shifted window transformer fusion for detecting pineapples, achieving 92.54% accuracy. However, although the model is lightweight, its average inference time reached 16.3 ms, which was significantly higher than YOLOv8’s 7.0 ms. Chen et al. [62] introduced YOLOv8-GP, an improved model for detecting grape bunches and picking points, achieving 89.7% AP with a 47.73% reduction in parameters, enhancing detection performance while reducing deployment costs for mobile robots. However, its FPS decreased compared to YOLOv8. Zhong et al. [63] introduced Light-YOLO, a lightweight mango detection model based on Darknet53, achieving a 96.1% mAP@0.5 on the ACFR Mango dataset with only 1.96 M parameters and 3.65 G FLOPs. However, its inference time of 10.8 ms was higher than YOLOv8’s 6.0 ms. While these researchers reduced model parameters through lightweight techniques, the reduction came with longer inference times, which could negatively impact the recognition and picking efficiency of picking robots. Therefore, reducing inference time is also a key focus of research [64]. MTS-YOLO not only focuses on a lightweight design but also takes inference time into account, avoiding the reduction of parameters at the expense of computational cost. Compared with MTD-YOLO [29], which is used for detecting tomato fruit bunch maturity and has an inference time of 4.9 ms, MTS-YOLO achieves a lower inference time of 3.1 ms while also performing the additional task of stem recognition. MTS-YOLO achieves efficient detection by combining a low parameter count with reduced computational demands, making it well suited for deployment on picking robots.

4.2. Limitations and Future Work

Although MTS-YOLO achieves good results in multi-task detection of tomato fruit bunch maturity and stem positions, several issues merit further research and exploration.

First, while MTS-YOLO shows excellent performance in identifying tomato fruit bunch maturity, it faces challenges in stem position detection due to background confusion. To further improve detection accuracy, we will continue to optimize the selected feature fusion modules to achieve even better recognition results.

Secondly, current multi-task detection has its limitations. The maturity of the entire tomato bunch is successfully assessed, but the maturity of each individual tomato in the bunch varies. To address this practical issue, we plan to collect additional data at the tomato cultivation base of Huzhou Wuxing Jinnong Ecological Agriculture Development Limited Company. Our goal is to introduce further tasks based on this data, focusing on accurately identifying the maturity and stem position of individual tomatoes.

Lastly, while our model has been validated through computer simulations, it has yet to be deployed in real-world applications. We aim to conduct on-site testing at the tomato cultivation site using mobile devices to validate the model, alongside experiments with robotic arms for picking. These additional efforts will refine the model’s practical application capabilities, enhancing its viability and reliability for real-world detection tasks.

5. Conclusions

This study proposes MTS-YOLO, a lightweight model designed for detecting tomato fruit bunch maturity and stem positions. Its lightweight design makes it ideal for deployment on resource-constrained picking robots, offering lower inference times and high accuracy, which enhance picking performance. MTS-YOLO’s outstanding ability to recognize foreground targets effectively prevents the robot from straying from the intended picking area, thereby improving the overall efficiency of the picking process. The core of MTS-YOLO is the HLIS-PAN neck network, which excels in feature fusion while minimizing parameter redundancy. DySample is used for efficient upsampling, resulting in a lower computational load and reduced inference time. Additionally, the integration of CAA enhances the model’s focus on foreground targets, ensuring precise detection and improved recognition of elongated targets, even in complex picking scenarios. Experimental results demonstrate that MTS-YOLO achieves an F1-score of 88.7% and mAP@0.5 of 92.0%, outperforming several state-of-the-art models by notable margins, all while maintaining a significantly smaller number of parameters (2.05 M) and FLOPs (6.8 G). Compared with YOLOv5n, YOLOv6n, YOLOv7-tiny, YOLOv8n, YOLOv9t, and YOLOv10n, MTS-YOLO shows F1-score improvements of 2.2%, 2.4%, 2.9%, 2.4%, 2.4%, and 4.4%, respectively, and mAP@0.5 improvements of 2.0%, 2.7%, 2.1%, 1.4%, 0.9%, and 4.6%, respectively. Visualization and heatmap results validate the model’s precision in identifying mature fruit bunches and stems. The ablation studies further confirm the effectiveness of HLIS-PAN in enhancing the model’s recognition capabilities. In summary, MTS-YOLO excels in the multi-task detection of tomato fruit bunches and stems, offering a highly efficient technical solution for intelligent fruit picking in agriculture.

Author Contributions

Conceptualization, M.W., H.L., and B.Z.; methodology, M.W., H.L., and B.Z.; validation, H.L., S.Z., and B.Z.; formal analysis, B.Z.; investigation, M.W.; resources, X.S.; writing—original draft preparation, H.L.; writing—review and editing, H.L.; visualization, M.W.; supervision, M.W.; project administration, X.S.; funding acquisition, B.Z., S.Z., and H.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (61906066); the Scientific Research Fund of Zhejiang Provincial Education Department (Y202351126); the Key Science and Technology Project of Huzhou (2023GG11); and the Postgraduate Research and Innovation Project of Huzhou University (2024KYCX41).

Data Availability Statement

Our experiments use the publicly available 2022 Dataset of String Tomato in Shanxi Nonggu Tomato Town, accessible at https://cstr.cn/31253.41.sciencedb.05228.006C1CBB (accessed on 5 August 2024). The source code is available at https://github.com/linhanran/MTS (accessed on 9 September 2024).

Conflicts of Interest

Author Xingren Shi was employed by the company Huzhou Wuxing Jinnong Ecological Agriculture Development Limited Company. Author Maonian Wu was employed by the company Huzhou Jiurui Digital Science and Technology Limited Company. The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Appendix A

Figure A1 shows tomato fruit bunches captured at different times of day.

Figure A1.

The images of tomato fruit bunches captured at different times of the day.

Figure A2 shows tomato fruit bunches captured under different lighting conditions.

Figure A2.

The images of tomato fruit bunches captured under different lighting conditions.

The capture angle is referenced to the horizontal ground, with 0° as a top-down view and 180° as a bottom-up view. Figure A3 shows tomato fruit bunches captured from different angles and orientations.

Figure A3.

The images of tomato fruit bunches captured from different angles and orientations.

Appendix B

As shown in Table A1, MTS-YOLO achieves the best F1-score, mAP@0.5, and FLOPs on the balanced dataset, demonstrating that it improves detection accuracy while keeping the parameter count and computational demands low.

Table A1.

Comparison of detection performance between MTS-YOLO and lightweight state-of-the-art object-detection models on the balanced dataset.

| Model | F1-Score | mAP@0.5 | mAP@0.5:0.95 | Parameters | Inference Time | FLOPs |
|---|---|---|---|---|---|---|
| YOLOv5n | 82.2% | 83.6% | 66.7% | 2.39 M | 3.7 ms | 7.1 G |
| YOLOv6n | 84.3% | 86.1% | 69.1% | 4.04 M | 4.0 ms | 11.8 G |
| YOLOv7-tiny | 84.8% | 85.5% | 62.5% | 5.74 M | 7.5 ms | 13.0 G |
| YOLOv8n | 84.4% | 86.7% | 67.0% | 2.87 M | 4.0 ms | 8.1 G |
| YOLOv9t | 82.7% | 87.6% | 69.6% | 2.50 M | 8.9 ms | 10.7 G |
| YOLOv9t * | 82.7% | 87.6% | 69.6% | 1.79 M | 6.3 ms | 7.1 G |
| YOLOv10n | 81.3% | 83.2% | 63.9% | 2.57 M | 3.4 ms | 8.2 G |
| MTS-YOLO (ours) | 85.1% | 88.9% | 68.3% | 2.05 M | 3.8 ms | 6.8 G |

* The code used to prune the auxiliary branch heads of YOLOv9t is the same as that used for Table 7.

Appendix C

Figure A4 presents a comparison of performance metrics between YOLOv8n and MTS-YOLO during training, encompassing precision, recall, mAP@0.5, and mAP@0.5:0.95. Both models exhibit a rapid initial increase, but from the midpoint onward, MTS-YOLO demonstrates superior precision and recall, indicating enhanced true positive recognition and instance capture. Additionally, MTS-YOLO’s mAP shows consistent improvement, signifying better overall detection performance and robustness across varying IoU thresholds. These results underscore MTS-YOLO’s superiority over the baseline.

Figure A4.

Performance metric curves of YOLOv8n and MTS-YOLO.

Appendix D

As shown in Figure A5, the successive addition of the TSFF, DSFF, and CAA modules leads to performance improvements.

Figure A5.

Performance comparison of module ablation.

References

- FAO. World Food and Agriculture—Statistical Yearbook 2022; FAO: Rome, Italy, 2022. [Google Scholar]

- Xiao, X.; Wang, Y.N.; Jiang, Y.M. Review of research advances in fruit and vegetable harvesting robots. J. Electr. Eng. Technol. 2024, 19, 773–789. [Google Scholar] [CrossRef]

- Kalampokas, T.; Vrochidou, E.; Papakostas, G.; Pachidis, T.; Kaburlasos, V. Grape stem detection using regression convolutional neural networks. Comput. Electron. Agric. 2021, 186, 106220. [Google Scholar] [CrossRef]

- Ariza-Sentís, M.; Vélez, S.; Martínez-Peña, R.; Baja, H.; Valente, J. Object detection and tracking in Precision Farming: A systematic review. Comput. Electron. Agric. 2024, 219, 108757. [Google Scholar] [CrossRef]

- Kumar, S.-D.; Esakkirajan, S.; Bama, S.; Keerthiveena, B. A microcontroller based machine vision approach for tomato grading and sorting using SVM classifier. Microprocess. Microsyst. 2020, 76, 103090. [Google Scholar] [CrossRef]

- Bai, Y.H.; Mao, S.; Zhou, J.; Zhang, B.H. Clustered tomato detection and picking point location using machine learning-aided image analysis for automatic robotic harvesting. Precis. Agric. 2023, 24, 727–743. [Google Scholar] [CrossRef]

- Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1137–1149. [Google Scholar] [CrossRef]

- Sun, J.; He, X.F.; Wu, M.M.; Wu, X.H.; Shen, J.F.; Lu, B. Detection of tomato organs based on convolutional neural network under the overlap and occlusion backgrounds. Mach. Vis. Appl. 2020, 31, 1–13. [Google Scholar] [CrossRef]

- Mu, Y.; Chen, T.S.; Ninomiya, S.; Guo, W. Intact detection of highly occluded immature tomatoes on plants using deep learning techniques. Sensors 2020, 20, 2984. [Google Scholar] [CrossRef]

- Seo, D.; Cho, B.-H.; Kim, K.-C. Development of monitoring robot system for tomato fruits in hydroponic greenhouses. Agronomy 2021, 11, 2211. [Google Scholar] [CrossRef]

- Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A. Ssd: Single shot multiBox detector. In Proceedings of the 14th European Conference of the European Conference on Computer Vision (ECCV 2016), Amsterdam, The Netherlands, 11–14 October 2016; pp. 21–37. [Google Scholar]

- Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLO9000: Better, Faster, Stronger. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271. [Google Scholar]

- Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767. [Google Scholar]

- Bochkovskiy, A.; Wang, C.Y.; Liao, H.-Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934. [Google Scholar]

- Jocher, G. YOLOv5 Release v6.1. 2022. Available online: https://github.com/ultralytics/YOLOv5/releases/tag/v6.1 (accessed on 5 August 2024).

- Li, C.; Li, L.; Geng, Y.; Jiang, H.; Cheng, M.; Zhang, B.; Ke, Z.; Xu, X.; Chu, X. YOLOv6 v3.0: A full-scale reloading. arXiv 2023, arXiv:2301.05586. [Google Scholar]

- Wang, C.Y.; Bochkovskiy, A.; Liao, H.-Y.M. YOLOv7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 7464–7475. [Google Scholar]

- Jocher, G. Ultralytics YOLOv8. Available online: https://github.com/ultralytics/ultralytics (accessed on 5 August 2024).

- Wang, C.Y.; Yeh, I.-H.; Liao, H.-Y.M. Yolov9: Learning what you want to learn using programmable gradient information. arXiv 2024, arXiv:2402.13616. [Google Scholar]

- Wang, A.; Chen, H.; Liu, L.H.; Chen, K.; Lin, Z.J.; Han, J.G.; Ding, G.G. Yolov10: Real-time end-to-end object detection. arXiv 2024, arXiv:2405.14458. [Google Scholar]

- Yuan, T.; Lv, L.; Zhang, F.; Fu, J.; Gao, J.; Zhang, J.X.; Li, W.; Zhang, C.L.; Zhang, W.Q. Robust cherry tomatoes detection algorithm in greenhouse scene based on SSD. Agriculture 2020, 10, 160. [Google Scholar] [CrossRef]

- Vasconez, J.P.; Delpiano, J.; Vougioukas, S.; Cheein, F. Comparison of convolutional neural networks in fruit detection and counting: A comprehensive evaluation. Comput. Electron. Agric. 2020, 173, 105348. [Google Scholar] [CrossRef]

- Zheng, T.X.; Jiang, M.Z.; Li, Y.F.; Feng, M.C. Research on tomato detection in natural environment based on RC-YOLOv4. Comput. Electron. Agric. 2022, 198, 107029. [Google Scholar] [CrossRef]

- Ge, Y.H.; Lin, S.; Zhang, Y.H.; Li, Z.L.; Cheng, H.T.; Dong, J.; Shao, S.S.; Zhang, J.; Qi, X.Y.; Wu, Z.D. Tracking and counting of tomato at different growth period using an improving YOLO-deepsort network for inspection robot. Machines 2022, 10, 489. [Google Scholar] [CrossRef]

- Zeng, T.H.; Li, S.Y.; Song, Q.M.; Zhong, F.L.; Wei, X. Lightweight tomato real-time detection method based on improved YOLO and mobile deployment. Comput. Electron. Agric. 2023, 205, 107625. [Google Scholar] [CrossRef]

- Phan, Q.; Nguyen, V.; Lien, C.; Duong, T.; Hou, M.T.; Le, N. Classification of Tomato Fruit Using Yolov5 and Convolutional Neural Network Models. Plants 2023, 12, 790. [Google Scholar] [CrossRef]

- Li, P.; Zheng, J.S.; Li, P.Y.; Long, H.W.; Li, M.; Gao, L.H. Tomato maturity detection and counting model based on MHSA-YOLOv8. Sensors 2023, 23, 6701. [Google Scholar] [CrossRef] [PubMed]

- Chen, W.B.; Liu, M.C.; Zhao, C.J.; Li, C.J.; Wang, Y.Q. MTD-YOLO: Multi-task deep convolutional neural network for cherry tomato fruit bunch maturity detection. Comput. Electron. Agric. 2024, 216, 108533. [Google Scholar] [CrossRef]

- Yue, X.Y.; Qi, K.; Yang, F.H.; Na, X.Y.; Liu, Y.H.; Liu, C.H. RSR-YOLO: A real-time method for small target tomato detection based on improved YOLOv8 network. Discov. Appl. Sci. 2024, 6, 268. [Google Scholar] [CrossRef]

- Chen, J.Y.; Liu, H.; Zhang, Y.T.; Zhang, D.K.; Ouyang, H.K.; Chen, X.Y. A multiscale lightweight and efficient model based on YOLOv7: Applied to citrus orchard. Plants 2022, 11, 3260. [Google Scholar] [CrossRef]

- Yan, B.; Fan, P.; Lei, X.Y.; Liu, Z.J.; Yang, F.Z. A real-time apple targets detection method for picking robot based on improved YOLOv5. Remote Sens. 2021, 13, 1619. [Google Scholar] [CrossRef]

- Nan, Y.L.; Zhang, H.C.; Zeng, Y.; Zheng, J.Q.; Ge, Y.F. Intelligent detection of Multi-Class pitaya fruits in target picking row based on WGB-YOLO network. Comput. Electron. Agric. 2023, 208, 107780. [Google Scholar] [CrossRef]

- Chen, J.Q.; Ma, A.Q.; Huang, L.X.; Su, Y.S.; Li, W.Q.; Zhang, H.D.; Wang, Z.K. GA-YOLO: A lightweight YOLO model for dense and occluded grape target detection. Horticulturae 2023, 9, 443. [Google Scholar] [CrossRef]

- Cao, L.L.; Chen, Y.R.; Jin, Q.G. Lightweight Strawberry Instance Segmentation on Low-Power Devices for Picking Robots. Electronics 2023, 12, 3145. [Google Scholar] [CrossRef]

- Zhang, P.; Liu, X.M.; Yuan, J.; Liu, C.L. YOLO5-spear: A robust and real-time spear tips locator by improving image augmentation and lightweight network for selective harvesting robot of white asparagus. Biosyst. Eng. 2022, 218, 43–61. [Google Scholar] [CrossRef]

- Miao, Z.H.; Yu, X.Y.; Li, N.; Zhang, Z.; He, C.X.; Deng, C.Y.; Sun, T. Efficient tomato harvesting robot based on image processing and deep learning. Precis. Agric. 2023, 24, 254–287. [Google Scholar] [CrossRef]

- Zhu, X.Y.; Chen, F.J.; Zhang, X.W.; Zheng, Y.L.; Peng, X.D.; Chen, C. Detection the maturity of multi-cultivar olive fruit in orchard environments based on Olive-EfficientDet. Sci. Hortic. 2024, 324, 112607. [Google Scholar] [CrossRef]

- Chen, Y.F.; Zhang, C.Y.; Chen, B.; Huang, Y.Y.; Sun, Y.F.; Wang, C.M.; Fu, X.J.; Dai, Y.X.; Qin, F.W.; Peng, Y.; et al. Accurate leukocyte detection based on deformable-DETR and multi-level feature fusion for aiding diagnosis of blood diseases. Comput. Biol. Med. 2024, 170, 107917. [Google Scholar] [CrossRef] [PubMed]

- Liu, W.; Lu, H.; Fu, H.; Cao, Z. Learning to upsample by learning to sample. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; pp. 6027–6037. [Google Scholar]

- Cai, X.; Lai, Q.; Wang, Y.; Wang, W.; Sun, Z.; Yao, Y. Poly kernel inception network for remote sensing detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 17–21 June 2024; pp. 27706–27716. [Google Scholar]

- Song, G.Z.; Shi, Y.; Wang, J.; Jing, C.; Luo, G.F.; Sun, S.; Wang, X.L.; Li, Y.N. 2022 Dataset of String Tomato in Shanxi Nonggu Tomato Town. Sci. Data Bank. 2023. Available online: https://cstr.cn/31253.11.sciencedb.05228 (accessed on 5 August 2024).

- Feng, C.; Zhong, Y.; Gao, Y.; Scott, M.R.; Huang, W. TOOD: Task-Aligned One-Stage Object Detection. In Proceedings of the 2021 IEEE International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 10–17 October 2021; pp. 3490–3499. [Google Scholar]

- Dong, X.J.; Zhang, C.S.; Wang, J.H.; Chen, Y.; Wang, D.W. Real-time detection of surface cracking defects for large-sized stamped parts. Comput. Ind. 2024, 159, 104105. [Google Scholar] [CrossRef]

- Bakirci, M. Enhancing vehicle detection in intelligent transportation systems via autonomous UAV platform and YOLOv8 integration. Appl. Soft Comput. 2024, 164, 112015. [Google Scholar] [CrossRef]

- Solimani, F.; Cardellicchio, A.; Dimauro, G.; Petrozza, A.; Summerer, S.; Cellini, F.; Renò, V. Optimizing tomato plant phenotyping detection: Boosting YOLOv8 architecture to tackle data complexity. Comput. Electron. Agric. 2024, 218, 108728. [Google Scholar] [CrossRef]

- Gu, Y.; Hong, R.; Cao, Y. Application of the YOLOv8 Model to a Fruit Picking Robot. In Proceedings of the 2024 IEEE 2nd International Conference on Control, Electronics and Computer Technology (ICCECT), Jiling, China, 26–28 April 2024; pp. 580–585. [Google Scholar]

- Jiang, Y.Q.; Tan, Z.Y.; Wang, J.Y.; Sun, X.Y.; Lin, M.; Lin, H. GiraffeDet: A heavy-neck paradigm for object detection. arXiv 2022, arXiv:2202.04256. [Google Scholar]

- Wang, C.C.; He, W.; Nie, Y.; Guo, J.Y.; Liu, C.J.; Wang, Y.H.; Han, K. Gold-YOLO: Efficient object detector via gather-and-distribute mechanism. In Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), New Orleans, LA, USA, 10–16 December 2023; pp. 51094–51112. [Google Scholar]

- Chen, Z.X.; He, Z.W.; Lu, Z.M. DEA-Net: Single image dehazing based on detail-enhanced convolution and content-guided attention. IEEE Trans. Image Process 2024, 33, 1002–1015. [Google Scholar] [CrossRef]

- Yang, L.X.; Zhang, R.Y.; Li, L.D.; Xie, X.H. Simam: A simple, parameter-free attention module for convolutional neural networks. In Proceedings of the International Conference on Machine Learning (ICML), Online, 18–24 July 2021; pp. 11863–11874. [Google Scholar]

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Hu, S.; Gao, F.; Zhou, X.W.; Dong, J.Y.; Du, Q. Hybrid Convolutional and Attention Network for Hyperspectral Image Denoising. IEEE Geosci. Remote Sens. Lett. 2024, 21, 1–5.

- Wang, J.Q.; Chen, K.; Xu, R.; Liu, Z.W.; Loy, C.; Lin, D.H. CARAFE: Content-aware reassembly of features. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019; pp. 3007–3016.

- Xiao, F.; Wang, H.; Xu, Y.; Zhang, R. Fruit detection and recognition based on deep learning for automatic harvesting: An overview and review. Agronomy 2023, 13, 1625.

- Liu, Y.; Zheng, H.T.; Zhang, Y.H.; Zhang, Q.J.; Chen, H.L.; Xu, X.Y.; Wang, G.Y. “Is this blueberry ripe?”: A blueberry ripeness detection algorithm for use on picking robots. Front. Plant Sci. 2023, 14, 1198650.

- Zhang, B.; Xia, Y.Y.; Wang, R.R.; Wang, Y.; Yin, C.H.; Fu, M.; Fu, W. Recognition of mango and location of picking point on stem based on a multi-task CNN model named YOLOMS. Precis. Agric. 2024, 25, 1454–1476.

- Hou, C.J.; Xu, J.L.; Tang, Y.; Zhuang, J.J.; Tan, Z.P.; Chen, W.L.; Wei, S.; Huang, H.S.; Fang, M.W. Detection and localization of citrus picking points based on binocular vision. Precis. Agric. 2024, 1–35.

- ElBeheiry, N.; Balog, R. Technologies driving the shift to smart farming: A review. IEEE Sens. J. 2022, 23, 1752–1769.

- Tang, Y.; Qiu, J.; Zhang, Y.; Wu, D.; Cao, Y.; Zhao, K.; Zhu, L. Optimization strategies of fruit detection to overcome the challenge of unstructured background in field orchard environment: A review. Precis. Agric. 2023, 24, 1183–1219.

- Meng, F.; Li, J.H.; Zhang, Y.Q.; Qi, S.J.; Tang, Y.C. Transforming unmanned pineapple picking with spatio-temporal convolutional neural networks. Comput. Electron. Agric. 2023, 214, 108298.

- Chen, J.Q.; Ma, A.Q.; Huang, L.X.; Li, H.W.; Zhang, H.Y.; Huang, Y.; Zhu, T.T. Efficient and lightweight grape and picking point synchronous detection model based on key point detection. Comput. Electron. Agric. 2024, 217, 108612.

- Zhong, Z.Y.; Yun, L.J.; Cheng, F.Y.; Chen, Z.Q.; Zhang, C.J. Light-YOLO: A Lightweight and Efficient YOLO-Based Deep Learning Model for Mango Detection. Agriculture 2024, 14, 140.

- Miranda, J.; Gené-Mola, J.; Zude-Sasse, M.; Tsoulias, N.; Escolà, A.; Arnó, J.; Rosell-Polo, J.; Sanz-Cortiella, R.; Martínez-Casasnovas, J.; Gregorio, E. Fruit sizing using AI: A review of methods and challenges. Postharvest Biol. Technol. 2023, 206, 112587.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).