Multi-Scale Convolutional Neural Network for Accurate Corneal Segmentation in Early Detection of Fungal Keratitis

Abstract

1. Introduction

2. Background

3. Materials and Methods

3.1. Data Collation

3.2. Data Preprocessing and Augmentation

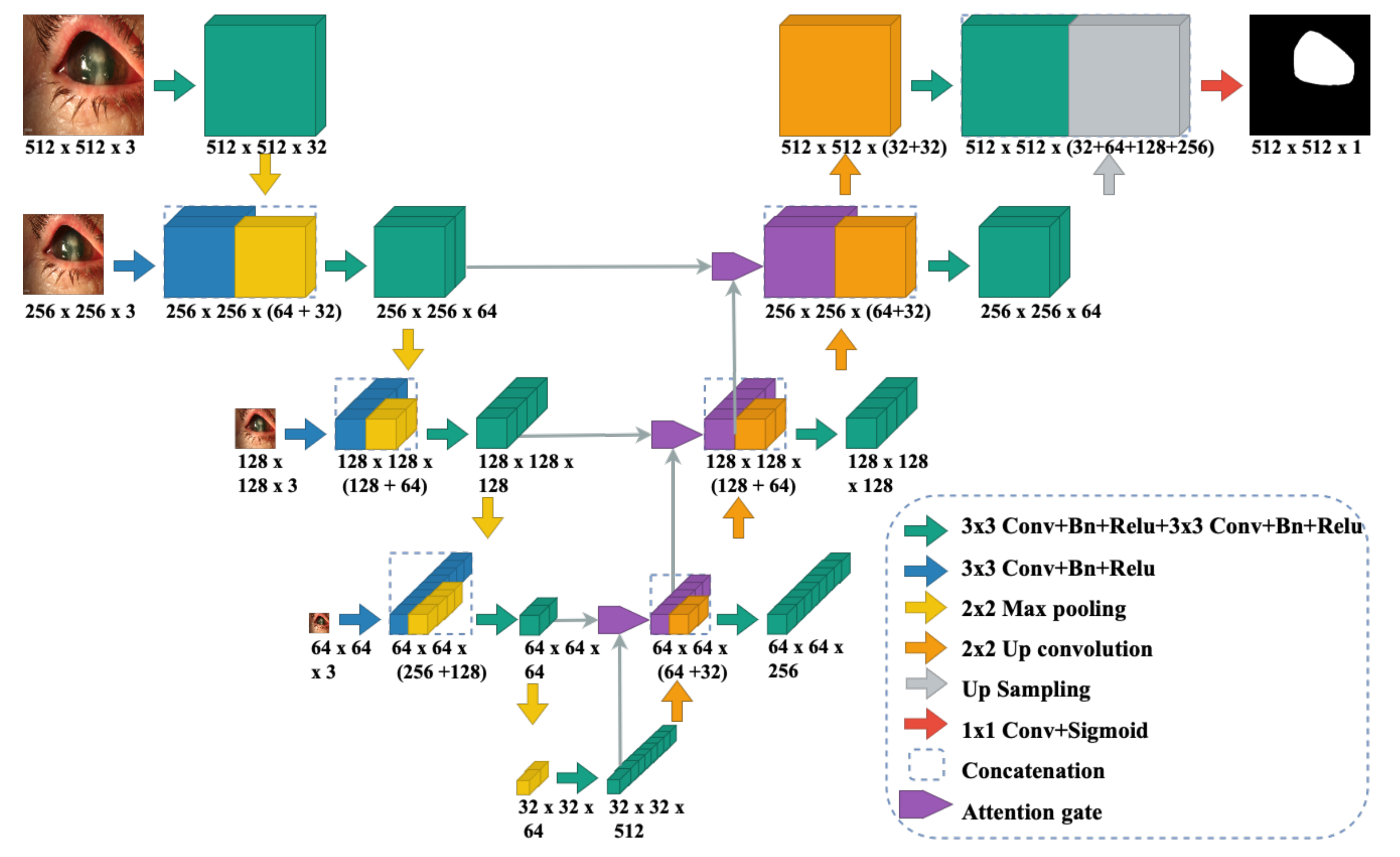

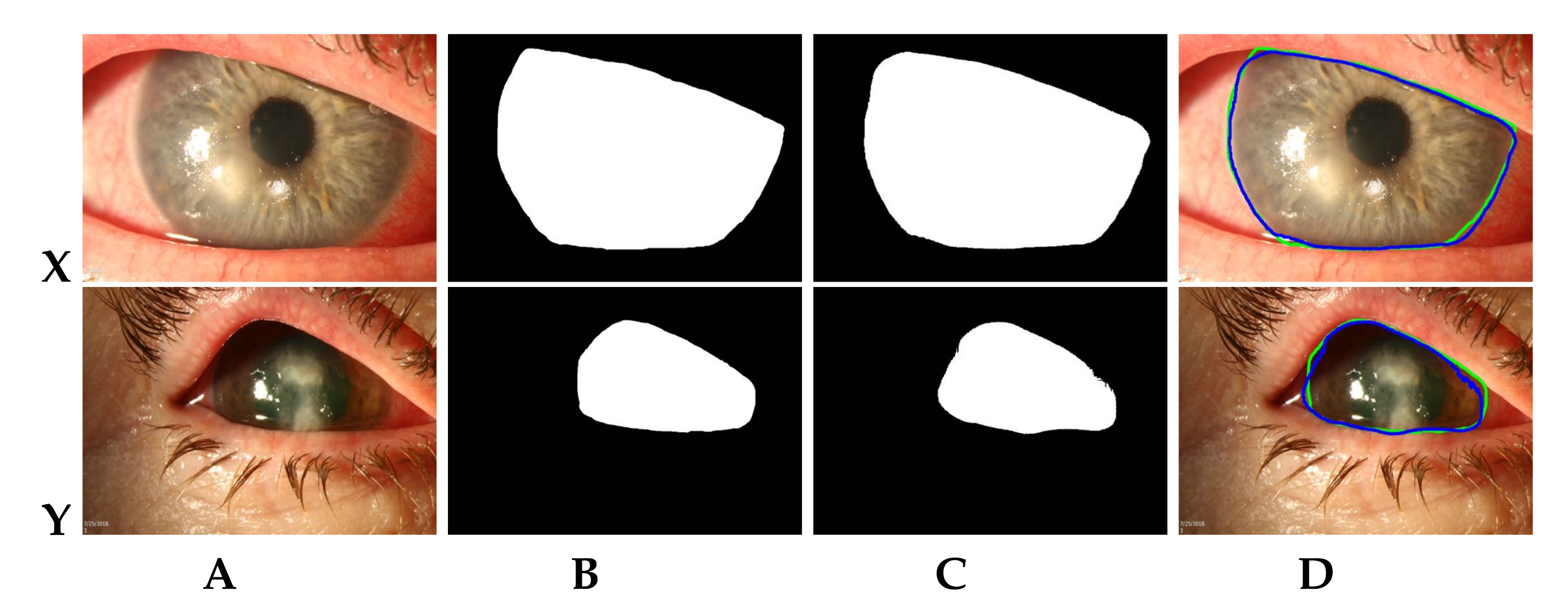

3.3. Multi-Scale CNN Model for RoI Segmentation

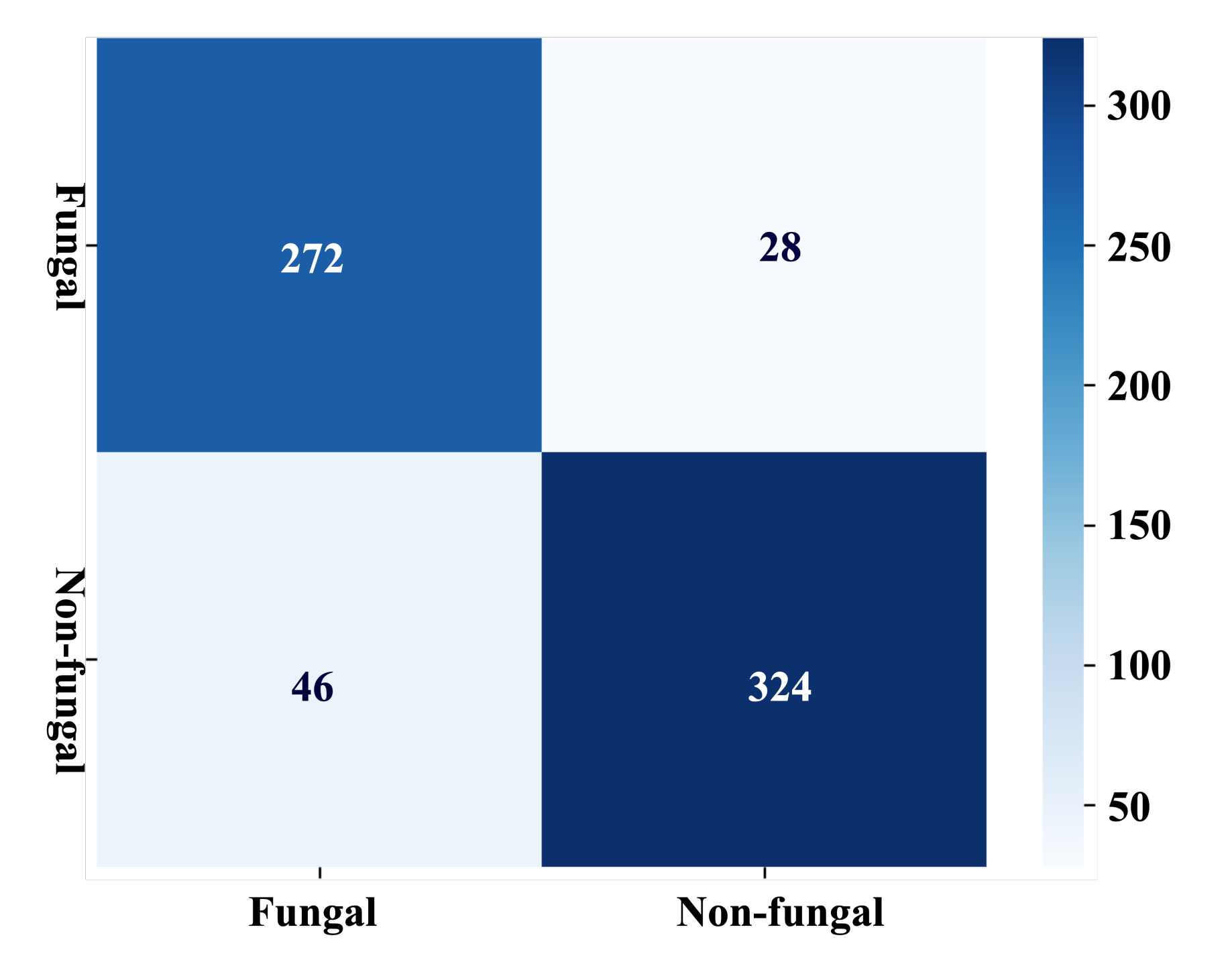

3.4. Disease Classification

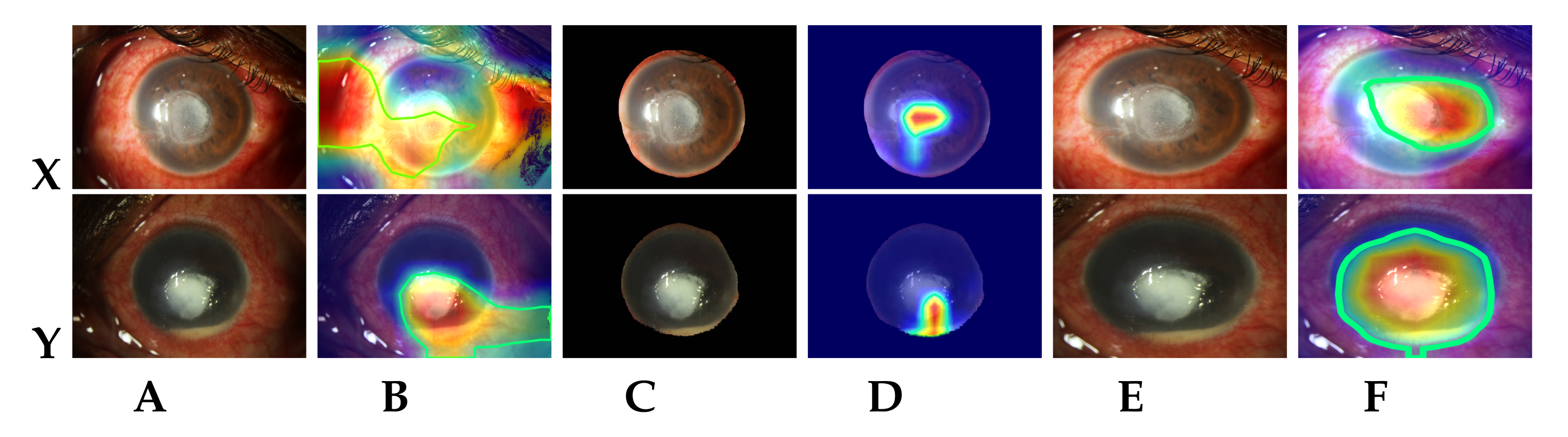

4. Experimental Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. WHO. World Health Organization Report on Vision; Online; WHO: Geneva, Switzerland, 2019.
2. Ung, L.; Bispo, P.J.M.; Shanbhag, S.; Gilmore, M.; Chodosh, J. The persistent dilemma of microbial keratitis: Global burden, diagnosis, and antimicrobial resistance. Surv. Ophthalmol. 2019, 64, 255–271.
3. Anutarapongpan, O.; Brien, T. Update on Management of Fungal Keratitis. Clin. Microb. 2014, 3, 1000168.
4. Schein, O.D. Evidence-Based Treatment of Fungal Keratitis. JAMA Ophthalmol. 2016, 134, 1372–1373.
5. Maharana, P.; Sharma, N.; Nagpal, R.; Jhanji, V.; Das, S.; Vajpayee, R. Recent advances in diagnosis and management of Mycotic Keratitis. Indian J. Ophthalmol. 2016, 64, 346–357.
6. Tananuvat, N.; Upaphong, P.; Tangmonkongvoragul, C.; Niparugs, M.; Chaidaroon, W.; Pongpom, M. Fungal keratitis at a tertiary eye care in Northern Thailand: Etiology and prognostic factors for treatment outcomes. J. Infect. 2021, 83, 112–118.
7. Ferrer, C.; Alió, J. Evaluation of molecular diagnosis in fungal keratitis. Ten years of experience. J. Ophthalmic Inflamm. Infect. 2011, 1, 15–22.
8. Dalmon, C.A.; Porco, T.; Lietman, T.; Prajna, N.; Prajna, L.; Das, M.; Kumar, J.A.; Mascarenhas, J.; Margolis, T.; Whitcher, J.; et al. The clinical differentiation of bacterial and fungal keratitis: A photographic survey. Investig. Ophthalmol. Vis. Sci. 2012, 53, 1787–1791.
9. Xu, Y.; Kong, M.; Xie, W.; Duan, R.; Fang, Z.; Lin, Y.; Zhu, Q.; Tang, S.; Wu, F.; Yao, Y.F. Deep Sequential Feature Learning in Clinical Image Classification of Infectious Keratitis. Engineering 2020.
10. Kuo, M.T.; Hsu, B.Y.; Yin, Y.K.; Fang, P.C.; Lai, H.Y.; Chen, A.; Yu, M.S.; Tseng, V. A deep learning approach in diagnosing fungal keratitis based on corneal photographs. Sci. Rep. 2020, 10, 1–8.
11. Liu, Z.; Shi, Y.; Zhan, P.; Zhang, Y.; Gong, Y.; Tang, X. Automatic Corneal Ulcer Segmentation Combining Gaussian Mixture Modeling and Otsu Method. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 6298–6301.
12. Loo, J.; Kriegel, M.; Tuohy, M.; Kim, K.; Prajna, V.; Woodward, M.; Farsiu, S. Open-Source Automatic Segmentation of Ocular Structures and Biomarkers of Microbial Keratitis on Slit-Lamp Photography Images Using Deep Learning. IEEE J. Biomed. Health Inform. 2021, 25, 88–99.
13. Kalkancı, A.; Ozdek, S. Ocular Fungal Infections. Curr. Eye Res. 2011, 36, 179–189.
14. Lopes, B.T.; Eliasy, A.; Ambrósio, R. Artificial Intelligence in Corneal Diagnosis: Where Are we? Curr. Ophthalmol. Rep. 2019, 7, 204–211.
15. Prajna, V.; Prajna, L.; Muthiah, S. Fungal keratitis: The Aravind experience. Indian J. Ophthalmol. 2017, 65, 912–919.
16. Thomas, P.; Leck, A.; Myatt, M. Characteristic clinical features as an aid to the diagnosis of suppurative keratitis caused by filamentous fungi. Br. J. Ophthalmol. 2005, 89, 1554–1558.
17. Leck, A.; Burton, M. Distinguishing fungal and bacterial keratitis on clinical signs. Community Eye Health 2015, 28, 6–7.
18. Dahlgren, M.A.; Lingappan, A.; Wilhelmus, K.R. The Clinical Diagnosis of Microbial Keratitis. Am. J. Ophthalmol. 2007, 143, 940–944.e1.
19. Raghavendra, U.; Gudigar, A.; Bhandary, S.; Rao, T.N.; Ciaccio, E.; Acharya, U. A Two Layer Sparse Autoencoder for Glaucoma Identification with Fundus Images. J. Med. Syst. 2019, 43, 1–9.
20. Mayya, V.; Kamath Shevgoor, S.; Kulkarni, U. Automated microaneurysms detection for early diagnosis of diabetic retinopathy: A Comprehensive review. Comput. Methods Programs Biomed. Update 2021, 1, 100013.
21. Nayak, D.R.; Das, D.; Majhi, B.; Bhandary, S.; Acharya, U. ECNet: An evolutionary convolutional network for automated glaucoma detection using fundus images. Biomed. Signal Process. Control 2021, 67, 102559.
22. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.
23. Hung, N.; Shih, A.K.Y.; Lin, C.; Kuo, M.T.; Hwang, Y.S.; Wu, W.C.; Kuo, C.F.; Kang, E.Y.C.; Hsiao, C.H. Using Slit-Lamp Images for Deep Learning-Based Identification of Bacterial and Fungal Keratitis: Model Development and Validation with Different Convolutional Neural Networks. Diagnostics 2021, 11, 1246.
24. Qin, X.; Zhang, Z.; Huang, C.; Dehghan, M.; Zaïane, O.R.; Jägersand, M. U2-Net: Going Deeper with Nested U-Structure for Salient Object Detection. Pattern Recognit. 2020, 106, 107404.
25. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.
26. Dutta, A.; Zisserman, A. The VIA Annotation Software for Images, Audio and Video. In Proceedings of the 27th ACM International Conference on Multimedia, Nice, France, 21–25 October 2019; ACM: New York, NY, USA, 2019; pp. 2276–2279.
27. Reza, A. Realization of the Contrast Limited Adaptive Histogram Equalization (CLAHE) for Real-Time Image Enhancement. VLSI Signal Process. 2004, 38, 35–44.
28. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Medical Image Computing and Computer-Assisted Intervention—MICCAI 2015; Springer: Munich, Germany, 2015; pp. 234–241.
29. Abraham, N.; Khan, N. A Novel Focal Tversky Loss Function With Improved Attention U-Net for Lesion Segmentation. In Proceedings of the 2019 IEEE 16th International Symposium on Biomedical Imaging (ISBI 2019), Venice, Italy, 8–11 April 2019; pp. 683–687.
30. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.
31. Xie, S.; Girshick, R.B.; Dollár, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 5987–5995.
32. Liu, S.; Deng, W. Very deep convolutional neural network based image classification using small training sample size. In Proceedings of the 2015 3rd IAPR Asian Conference on Pattern Recognition (ACPR), Kuala Lumpur, Malaysia, 3–6 November 2015; pp. 730–734.
33. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
34. Arlot, S.; Celisse, A. A survey of cross-validation procedures for model selection. Stat. Surv. 2010, 4, 40–79.

| Method | DSC (%) | 95% Confidence Interval | Trainable Parameters (Millions) |
|---|---|---|---|
| U-Net [12] | 91 | 74–100% | 34.51 [28] |
| U2-Net [24] | 95.10 | 93.54–96.66% | 44.01 |
| SLIT-Net [12] | 95 | 93–97% | 44.62 |
| MS-CNN | 96.42 | 95.65–97.19% | 5.67 |
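The DSC column reports the Dice similarity coefficient between a predicted corneal mask P and its ground-truth mask T, DSC = 2|P ∩ T| / (|P| + |T|), expressed as a percentage. As a minimal sketch, assuming binary masks given as equally shaped nested lists of 0/1 values, the metric can be computed as below; the function name `dice_coefficient` and the smoothing constant `eps` are illustrative choices, not the authors' code.

```python
from typing import Sequence


def dice_coefficient(
    pred: Sequence[Sequence[int]],
    truth: Sequence[Sequence[int]],
    eps: float = 1e-7,
) -> float:
    """Dice similarity coefficient between two binary (0/1) masks of equal shape.

    DSC = 2 * |P ∩ T| / (|P| + |T|). The small eps (an illustrative choice)
    keeps the ratio defined, and equal to 1.0, when both masks are empty.
    """
    if len(pred) != len(truth) or any(len(p) != len(t) for p, t in zip(pred, truth)):
        raise ValueError("masks must have the same shape")

    intersection = 0  # pixels labelled 1 in both masks
    pred_sum = 0      # |P|: foreground pixels in the prediction
    truth_sum = 0     # |T|: foreground pixels in the ground truth
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            pred_sum += p
            truth_sum += t
            intersection += p * t
    return (2.0 * intersection + eps) / (pred_sum + truth_sum + eps)


pred = [[1, 1, 0], [0, 1, 0]]
truth = [[1, 0, 0], [0, 1, 1]]
print(round(100 * dice_coefficient(pred, truth), 2))  # DSC as a percentage
```

For binary masks this quantity is the same as the pixel-wise F1 score, 2TP / (2TP + FP + FN). How per-image scores were aggregated into the reported percentages is not stated in this section.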

| Metric | Mean Value | 95% Confidence Interval |
|---|---|---|
| Accuracy | 88.96% | 87.43–90.48% |
| Sensitivity/Recall/TPR | 90.67% | 87.95–93.39% |
| Specificity/TNR | 87.57% | 85.45–89.69% |
| Precision/PPV | 85.65% | 83.59–87.75% |
| Negative predictive value/NPV | 92.18% | 90.01–94.33% |
| F1/Dice coefficient score/DSC | 88.01% | 86.32–89.70% |
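The metrics in the table above follow the standard definitions based on a binary confusion matrix. The sketch below assumes fungal keratitis is the positive class and uses a hypothetical `ConfusionCounts` container; it computes each metric and a symmetric interval over per-fold scores. The interval helper `mean_ci` defaults to the normal approximation, mean ± 1.96·s/√k; this is an assumption, since the section does not say how the reported intervals were obtained. With only a few folds, a Student-t critical value (for example 2.776 for k = 5, i.e. 4 degrees of freedom) gives a wider, more conservative interval.

```python
import math
import statistics
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ConfusionCounts:
    """Hypothetical container; positive class assumed to be fungal keratitis."""
    tp: int  # fungal cases predicted as fungal
    fp: int  # non-fungal cases predicted as fungal
    tn: int  # non-fungal cases predicted as non-fungal
    fn: int  # fungal cases predicted as non-fungal


def binary_metrics(c: ConfusionCounts) -> dict[str, float]:
    """Metrics listed in the table, computed from one confusion matrix."""
    total = c.tp + c.fp + c.tn + c.fn
    return {
        "accuracy": (c.tp + c.tn) / total,
        "sensitivity": c.tp / (c.tp + c.fn),        # recall / TPR
        "specificity": c.tn / (c.tn + c.fp),        # TNR
        "precision": c.tp / (c.tp + c.fp),          # PPV
        "npv": c.tn / (c.tn + c.fn),                # negative predictive value
        "f1": 2 * c.tp / (2 * c.tp + c.fp + c.fn),  # identical to Dice for binary labels
    }


def mean_ci(values: Sequence[float], critical: float = 1.96) -> tuple[float, float, float]:
    """Mean and interval mean ± critical * s / sqrt(k) over k per-fold scores.

    The default 1.96 is the normal-approximation value (an assumption);
    pass a Student-t quantile instead when k is small.
    """
    k = len(values)
    if k < 2:
        raise ValueError("need at least two per-fold values")
    mean = statistics.mean(values)
    half_width = critical * statistics.stdev(values) / math.sqrt(k)
    return mean, mean - half_width, mean + half_width


# Illustrative counts and fold scores only; not data from this study.
metrics = binary_metrics(ConfusionCounts(tp=45, fp=5, tn=40, fn=10))
fold_mean, ci_low, ci_high = mean_ci([0.88, 0.90, 0.89])
```

Note that the mean of per-fold F1 scores need not equal the F1 computed from the mean precision and mean recall, so small discrepancies between those table rows are expected.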

| Model | Accuracy (%) d | F1 Score (%) d |
|---|---|---|
| Proposed approach (Section 3.2 + Section 3.3 + Section 3.4) | 89.55 | 89.23 |
| Original images (Section 3.4) | 87.88−θ | 87.59−θ |
| Segmented corneal images (Section 3.4) | 84.62−θ | 84.62−θ |
| RoI cropped images (Section 3.2 + Section 3.3) | 76.12−η | 76.12−η |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mayya, V.; Kamath Shevgoor, S.; Kulkarni, U.; Hazarika, M.; Barua, P.D.; Acharya, U.R. Multi-Scale Convolutional Neural Network for Accurate Corneal Segmentation in Early Detection of Fungal Keratitis. J. Fungi 2021, 7, 850. https://doi.org/10.3390/jof7100850
