Analysis of Various Facial Expressions of Horses as a Welfare Indicator Using Deep Learning

Abstract

Simple Summary

Abstract

1. Introduction

2. Materials and Methods

2.1. Animals

- RH (n = 210): Horses that rested in their stables. We confirmed with the owners that the horses had not been exposed to any external stimuli, exercise, or horseshoeing in the 3 h before filming, and with veterinarians that the horses had no disease.

- HP (n = 163): Horses in pain. Most of them (n = 136/163) had painful conditions such as acute colic, radial nerve paralysis, laminitis, or joint tumors and were transported to an equine veterinary hospital of the KRA, where they were diagnosed by veterinarians and underwent surgery, treatment, and hospitalization. Fattened horses raised on fattening farms, primarily Jeju horses in South Korea, were also included (n = 8/163); feces and urine had not been removed from their stables, all of their hooves were turned, and they could not walk properly. In addition, images of horses in pain used in previously published papers were extracted and included in this study (n = 19/163).

- HE (n = 156): Well-trained horses that were exercised every day, included after veterinarians confirmed that they were free of edema and lameness. Their exercise consisted of 50 min of walking, trotting, cantering, and riding, and 40 min on a treadmill. Filming was performed immediately after exercise, once the bridle had been removed.

- HH (n = 210): Horses that required farriery treatment (horseshoeing) by farriers. On the day of horseshoeing, the horses were not exercised and rested in their individual stables. Images were taken in the stable corridor during farriery treatment, with each horse restrained by its halter secured with lead straps on both sides. Only horses without visible lameness before horseshoeing were included.

2.2. Image Collection

3. Proposed Methodology

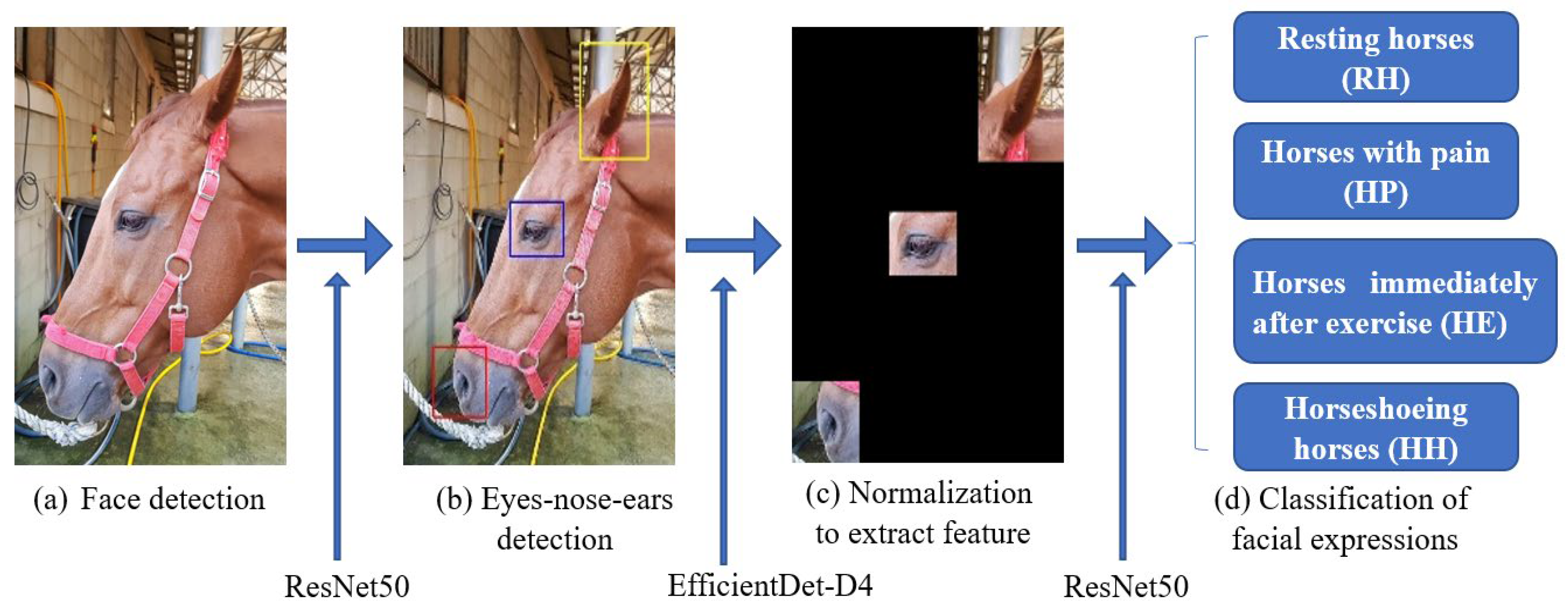

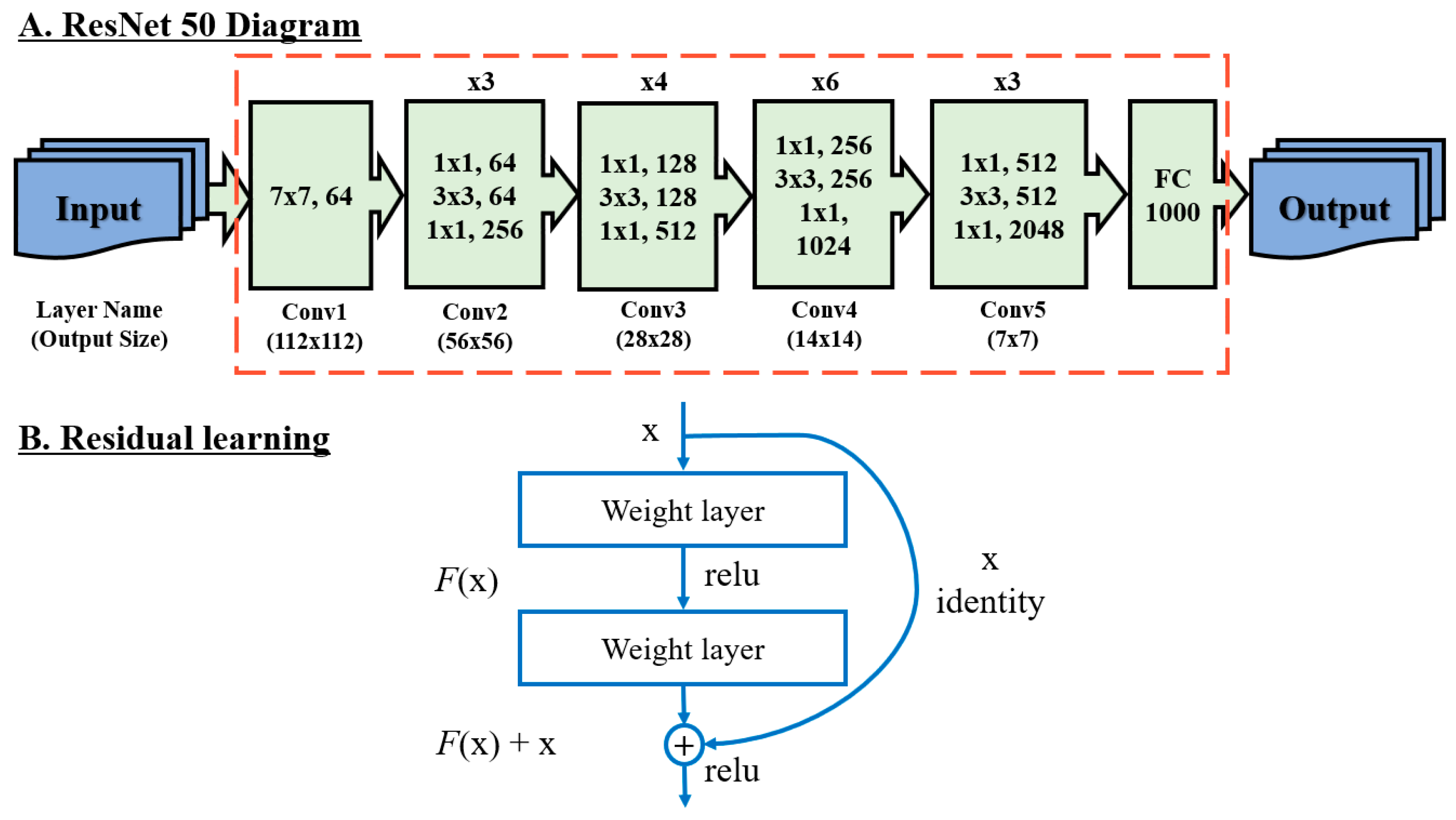

3.1. Method Overview

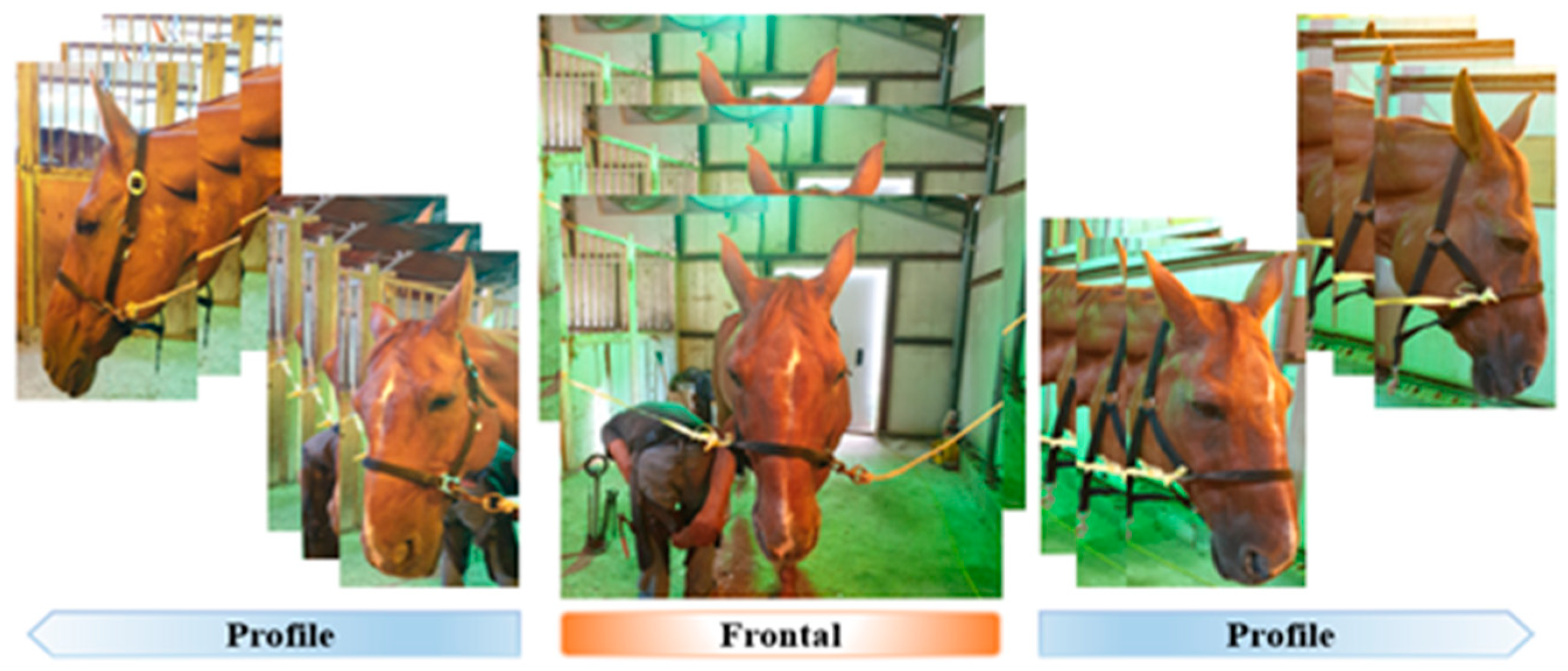

3.2. Normalization of Facial Posture

3.3. Equine Facial Keypoint Detection for Classification

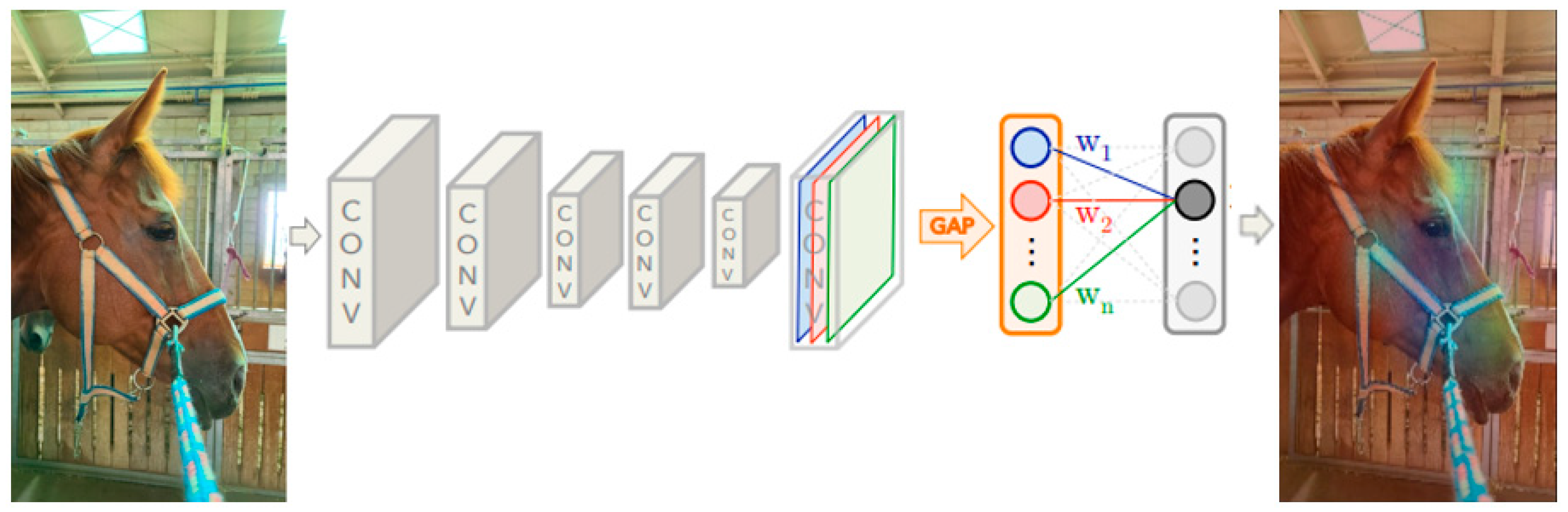

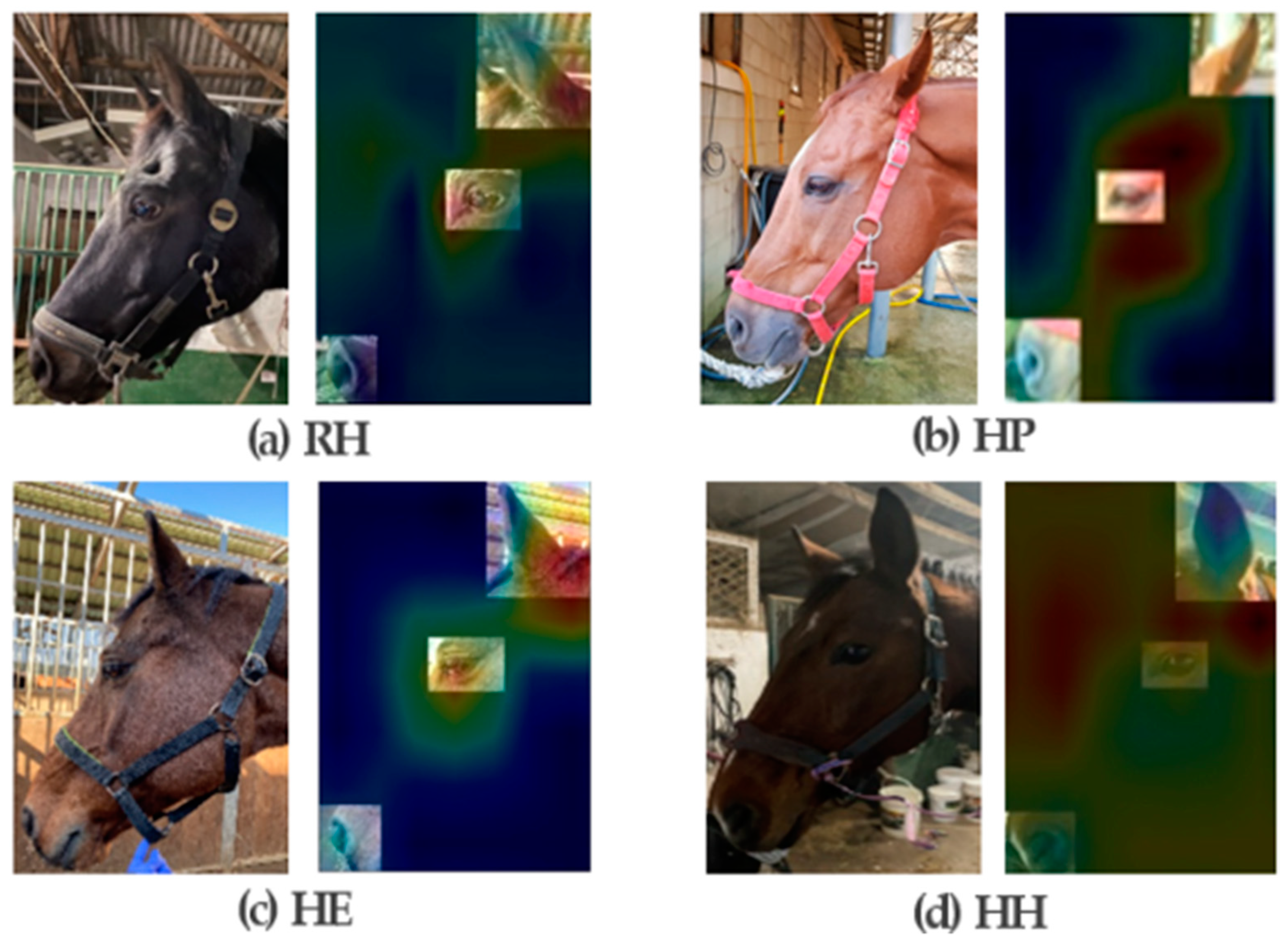

3.4. Class Activation Mapping

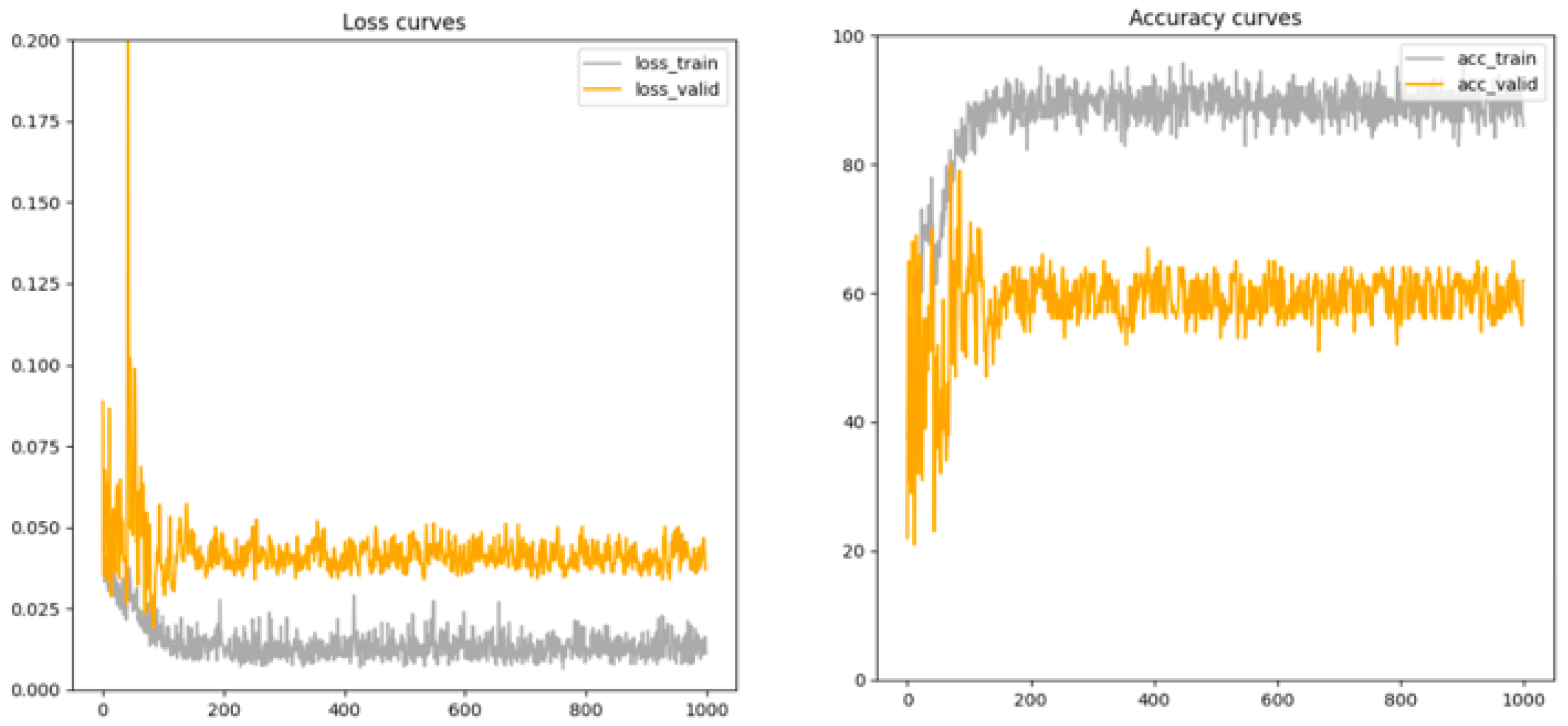

3.5. Model Convergence

4. Results

4.1. Normalization of Facial Posture Results

4.2. Visualization of Class Activation Mapping

4.3. Equine Facial Keypoint Detection Classification Performance

4.4. Model Convergence

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Model Classification Results | Actual Results: True | Actual Results: False |
|---|---|---|
| True | True positive (TP) | False positive (FP) |
| False | False negative (FN) | True negative (TN) |
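The 2×2 layout above is the standard binary confusion matrix. With four classes (resting, pain, exercise, horseshoeing), one common way to use it is to score each class one-vs-rest by collapsing the labels into this table. The minimal Python sketch below assumes that approach; the class and function names are illustrative, the four example predictions are hypothetical, and the paper may aggregate its metrics differently.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ConfusionCounts:
    """Binary confusion-matrix cells (rows: model output, columns: actual)."""
    tp: int  # model True, actual True
    fp: int  # model True, actual False
    fn: int  # model False, actual True
    tn: int  # model False, actual False

    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.tp + self.fp + self.fn + self.tn)

    def precision(self) -> float:
        # Undefined (ZeroDivisionError) if the model never predicts the class.
        return self.tp / (self.tp + self.fp)

    def recall(self) -> float:
        # Undefined (ZeroDivisionError) if the class never occurs.
        return self.tp / (self.tp + self.fn)


def one_vs_rest(y_true: Sequence[str], y_pred: Sequence[str], positive: str) -> ConfusionCounts:
    """Collapse multi-class labels into a 2x2 table for a single class."""
    tp = fp = fn = tn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive and actual == positive:
            tp += 1
        elif predicted == positive:
            fp += 1
        elif actual == positive:
            fn += 1
        else:
            tn += 1
    return ConfusionCounts(tp, fp, fn, tn)


# Hypothetical labels for four images, for illustration only.
y_true = ["Resting", "Feeling pain", "Feeling pain", "Exercising"]
y_pred = ["Resting", "Feeling pain", "Resting", "Exercising"]
pain = one_vs_rest(y_true, y_pred, positive="Feeling pain")
print(pain, pain.accuracy(), pain.precision(), pain.recall())
# ConfusionCounts(tp=1, fp=0, fn=1, tn=2) 0.75 1.0 0.5
```

Here the painful horse misread as resting counts as a false negative for the pain class, which lowers recall but not precision.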

| | N | Accuracy (%) |
|---|---|---|
| Frontal | 162/166 | 97.59 |
| Profile | 181/187 | 99.45 |
| Mean | 343/353 | 98.52 |

| | Training (n = 339) | Accuracy (%) | Validation (n = 137) | Accuracy (%) | Test (n = 273) | Accuracy (%) |
|---|---|---|---|---|---|---|
| Resting | 80/80 | 100.0 | 21/25 | 84.0 | 97/105 | 92.38 |
| Feeling pain | 77/78 | 98.72 | 20/27 | 74.07 | 47/58 | 81.03 |
| Exercising | 78/81 | 96.29 | 19/25 | 76.0 | 42/50 | 84.0 |
| Horseshoeing | 100/100 | 100.0 | 55/60 | 91.67 | 57/60 | 95.0 |
| Mean | | 98.75 | | 81.44 | | 88.1 |
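The summary row of this table agrees with the unweighted (macro) mean of the four per-class accuracies, not with the pooled fraction of correctly classified images; the two differ because the classes are not the same size (for example, 60 horseshoeing versus 25 resting images in the validation split). The sketch below recomputes both summaries from the (correct, total) counts in the table, which also serves as a check on the rounded percentages. The dictionary layout and function names are illustrative and not taken from the paper.

```python
# (correct, total) per class and split, copied from the classification table.
COUNTS = {
    "Training": {"Resting": (80, 80), "Feeling pain": (77, 78),
                 "Exercising": (78, 81), "Horseshoeing": (100, 100)},
    "Validation": {"Resting": (21, 25), "Feeling pain": (20, 27),
                   "Exercising": (19, 25), "Horseshoeing": (55, 60)},
    "Test": {"Resting": (97, 105), "Feeling pain": (47, 58),
             "Exercising": (42, 50), "Horseshoeing": (57, 60)},
}


def per_class_accuracy(split):
    """Accuracy (%) of each class within one split."""
    return {cls: 100.0 * correct / total for cls, (correct, total) in split.items()}


def macro_accuracy(split):
    """Unweighted mean of the per-class accuracies (every class counts equally)."""
    accs = per_class_accuracy(split)
    return sum(accs.values()) / len(accs)


def pooled_accuracy(split):
    """Percentage of all images in the split that were classified correctly."""
    correct = sum(c for c, _ in split.values())
    total = sum(n for _, n in split.values())
    return 100.0 * correct / total


for name, split in COUNTS.items():
    print(f"{name}: macro {macro_accuracy(split):.2f}%, pooled {pooled_accuracy(split):.2f}%")
# Training: macro 98.75%, pooled 98.82%
# Validation: macro 81.44%, pooled 83.94%
# Test: macro 88.10%, pooled 89.01%
```

The macro mean gives the smaller pain and exercise classes the same weight as the larger ones, which is usually the more informative summary when class sizes are unbalanced.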

| Classification | n = 58 | Accuracy (%) |
|---|---|---|
| Paper | 7/7 | 100 |
| Google * | 9/12 | 75 |
| Surgery | 17/19 | 89.47 |
| Laminitis | 6/6 | 100 |
| Fattened horses | 8/8 | 100 |
| Severe lameness | 0/6 | 0 |
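The six subgroups in this table partition the n = 58 test images of horses in pain, so their counts should add up to the Feeling pain row of the test split in the classification table. A minimal sketch of that reconciliation, with the counts copied from this table (the variable names are illustrative):

```python
# (correct, total) per source of pain images in the test split.
PAIN_TEST_SUBGROUPS = {
    "Paper": (7, 7),
    "Google": (9, 12),  # marked "Google *" in the table
    "Surgery": (17, 19),
    "Laminitis": (6, 6),
    "Fattened horses": (8, 8),
    "Severe lameness": (0, 6),
}

correct = sum(c for c, _ in PAIN_TEST_SUBGROUPS.values())
total = sum(n for _, n in PAIN_TEST_SUBGROUPS.values())

for name, (c, n) in PAIN_TEST_SUBGROUPS.items():
    print(f"{name}: {c}/{n} = {100 * c / n:.2f}%")
print(f"All subgroups: {correct}/{total} = {100 * correct / total:.2f}%")
# All subgroups: 47/58 = 81.03%
```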

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kim, S.M.; Cho, G.J. Analysis of Various Facial Expressions of Horses as a Welfare Indicator Using Deep Learning. Vet. Sci. 2023, 10, 283. https://doi.org/10.3390/vetsci10040283

Kim SM, Cho GJ. Analysis of Various Facial Expressions of Horses as a Welfare Indicator Using Deep Learning. Veterinary Sciences. 2023; 10(4):283. https://doi.org/10.3390/vetsci10040283

Chicago/Turabian Style: Kim, Su Min, and Gil Jae Cho. 2023. "Analysis of Various Facial Expressions of Horses as a Welfare Indicator Using Deep Learning" Veterinary Sciences 10, no. 4: 283. https://doi.org/10.3390/vetsci10040283

APA Style: Kim, S. M., & Cho, G. J. (2023). Analysis of Various Facial Expressions of Horses as a Welfare Indicator Using Deep Learning. Veterinary Sciences, 10(4), 283. https://doi.org/10.3390/vetsci10040283