Abstract

Robust credit risk prediction in emerging economies increasingly demands the integration of external factors (EFs) beyond borrowers’ control. This study introduces a scenario-based methodology to incorporate EF—namely COVID-19 severity (mortality and confirmed cases), climate anomalies (temperature deviations, weather-induced road blockages), and social unrest—into machine learning (ML) models for credit delinquency prediction. The approach is grounded in a CRISP-DM framework, combining stationarity testing (Dickey–Fuller), causality analysis (Granger), and post hoc explainability (SHAP, LIME), along with performance evaluation via AUC, ACC, KS, and F1 metrics. The empirical analysis uses nearly 8.2 million records compiled from multiple sources, including 367,000 credit operations granted to individuals and microbusiness owners by a regulated Peruvian financial institution (FMOD) between January 2020 and September 2023. These data also include time series of delinquency by economic activity, external factor indicators (e.g., mortality, climate disruptions, and protest events), and their dynamic interactions assessed through Granger causality to evaluate both the intensity and propagation of external shocks. The results confirm that EF inclusion significantly enhances model performance and robustness. Time-lagged mortality (COVID MOV) emerges as the most powerful single predictor of delinquency, while compound crises (climate and unrest) further intensify default risk—particularly in portfolios without public support. Among the evaluated models, CNN and XGB consistently demonstrate superior adaptability, defined as their ability to maintain strong predictive performance across diverse stress scenarios—including pandemic, climate, and unrest contexts—and to dynamically adjust to varying input distributions and portfolio conditions. Post hoc analyses reveal that EF effects dynamically interact with borrower income, indebtedness, and behavioral traits. This study provides a scalable, explainable framework for integrating systemic shocks into credit risk modeling. The findings contribute to more informed, adaptive, and transparent lending decisions in volatile economic contexts, relevant to financial institutions, regulators, and risk practitioners in emerging markets.

1. Introduction

Currently, artificial intelligence (AI), machine learning (ML), and process digitization are fundamental elements of daily life. This trend began in the 1990s with the global expansion of the Internet [1] and notably intensified during the COVID-19 pandemic [2]. Simultaneously, the increase in the use of online credit has generated large volumes of data [3], leading the financial industry to the imperative need to develop advanced technological tools for risk prediction and effective uncertainty management [4] that include exogenous variables in the process [5]. Presently, scoring models and machine learning techniques are common for risk prediction, applying innovations based on exhaustive data analysis, data-driven innovation (DDI) [6], and the big data paradigm—characterized by the use of massive, high-dimensional, and heterogeneous datasets for predictive modeling and decision-making [7].

In the context of rising demand, credit risk levels show a significant increase [8,9]. Despite the growing integration of DDI and ML in the financial sector, substantial challenges persist, leaving numerous applications unexplored. The application of ML techniques during unpredictable events, such as the 2008 housing crisis [2,10], the COVID-19 pandemic [11], and the impacts of climate change [12], has exposed critical limitations in risk assessment methodologies and revealed considerable uncertainties in their outputs [2,13,14,15]. These challenges underscore the need to examine the influence of external factors (EF) on the accuracy and robustness of ML models in credit risk evaluation [6,16], particularly concerning the inclusion of sequential or time-series data, which are commonly encountered in credit risk scenarios [17]. Moreover, these issues emphasize the need to ensure explainability in ML-driven predictions [18], both to facilitate informed decision-making by stakeholders and to maintain transparency for all parties involved [19].

The main objective of this article is to address the integration of variables sensitive to external risk factors into machine learning models for credit risk assessment, a critical yet insufficiently explored topic in the academic context. Peru, as a case study, represents a particularly relevant scenario: it was the country with the highest COVID-19 death rate per 100,000 inhabitants during the pandemic [20]; it faced the periodic and devastating effects of the El Niño phenomenon [12]; and it experienced intense social unrest following the attempted coup in December 2022 [21]. These events have directly impacted the microcredit market by targeting small businesses, a sector highly vulnerable to risk volatility [12].

While the influence of external factors—such as macroeconomic variables and pandemic-related disruptions—on credit risk has been the subject of substantial academic research, few studies have explored the simultaneous integration of multiple external shocks (pandemics, climate anomalies, and social unrest) into ML-based credit risk models, especially in emerging economies such as Peru. This study addresses that gap, offering valuable insights into the applicability and extrapolation of multifactor risk models in vulnerable financial systems [12]. To this end, EF-related variables are integrated into credit risk models through causality and stationarity tests [22]. These variables are derived from public time-series data and combined with proprietary credit data provided by a regulated Peruvian financial institution—hereafter referred to as FMOD—used under a confidentiality agreement. This strategy not only enhances the predictive power of ML models but also establishes a replicable methodology for integrating diverse EF indicators into future risk prediction studies.

The FMOD dataset plays a pivotal role in this study. It incorporates a unique variable that identifies loans supported by government intervention during periods influenced by EF, such as climate change, social unrest, and the COVID-19 pandemic. This variable allows for the detection and detailed analysis of the potential biases introduced by mitigation programs designed to address economic disruptions. By explicitly accounting for these interventions, the FMOD dataset provides a nuanced understanding of how state-led programs shape credit risk dynamics and borrower behavior under varying external conditions.

Moreover, the FMOD dataset includes monthly updated records of delinquency days, enabling granular analysis across distinct periods. This temporal precision facilitates an in-depth exploration of the interplay between EF and default patterns, thus enhancing the methodological rigor of credit risk assessment. By integrating time-series data with real-world financial indicators, the FMOD dataset offers a robust and replicable framework for evaluating the influence of EF and government programs on financial stability and credit behavior in emerging markets.

Finally, this study evaluates the impact of EF on the predictive accuracy and explainability of ML models. Particular attention is paid to identifying the most influential factors when incorporating external variables into predictive frameworks. By addressing these challenges, this research not only fills a critical gap in the existing literature but also advances the understanding of the effects of EF on credit risk in emerging economies. This contributes actionable insights for policymakers and financial institutions aiming to enhance resilience in volatile economic environments.

2. Related Work

Credit risk, an inherent component of financial activities, arises when borrowers fail to meet their credit obligations, typically due to inability rather than unwillingness to pay [23]. This form of credit deterioration is strongly associated with demographic and macroeconomic variables—such as household income and employment—which are, in turn, sensitive to external systemic shocks [9,23]. Enhancing the explainability of these complex relationships is essential to support data-driven decision-making for policymakers, financial institutions, and affected communities.

The recent literature has increasingly focused on the role of exogenous compound shocks—namely, pandemics, climate change, and social unrest—as emerging sources of credit instability. These phenomena often co-occur and interact in nonlinear ways, producing cascading effects on borrower behavior, portfolio quality, and systemic financial resilience.

- COVID-19 and Credit Risk: The COVID-19 pandemic exposed critical limitations in traditional credit risk models. Government interventions—such as moratoriums, liquidity injections, and public credit guarantees—generated structural breaks in borrower behavior, which challenged the assumptions of pre-pandemic models and led to inaccurate estimations of default risk [11]. These disruptions particularly affected financially vulnerable groups as noted in recent empirical analyses [24].Several studies have highlighted the necessity of using adaptive, data-driven approaches—such as deep learning and regularized regression models—to handle the nonlinearity and volatility introduced by systemic shocks [25,26,27]. In the Peruvian context, unemployment shocks and loan restructuring policies were found to be key drivers of delinquency in consumer and microfinance credit portfolios during the pandemic period [28,29]. Evidence also suggests that regularized machine learning models, including Lasso and Ridge regression, offer robust predictive performance under uncertainty, particularly in large-scale government programs such as “Reactiva Perú” [27].

- Climate-Related Risks: Climate change poses both physical risks (e.g., floods and droughts) and transition risks (e.g., carbon pricing and regulatory shifts), each with distinct implications for credit stability. Physical hazards can disrupt supply chains, damage infrastructure, and reduce household and firm-level repayment capacity [30], while transition risks expose high-emission sectors to market penalties and regulatory uncertainty [31,32].Empirical evidence shows that extreme climate events elevate loan impairment rates and increase provisioning requirements, particularly for microfinance institutions operating in vulnerable regions [12]. These dynamics underscore the urgency of integrating climate stress testing, early-warning systems, and network-aware modeling into credit risk frameworks [33,34].This study [35] analyzes the interaction between macroeconomic shocks and climate-driven economic pressures within the Peruvian context, using a vector autoregression (VAR) model with national time-series data. Their findings suggest that expansionary monetary policies during periods of climate-related stress may inadvertently elevate the credit risk of microfinance portfolios. This reinforces the need to develop predictive frameworks that account for both environmental and policy-related drivers of credit instability in emerging economies.

- Social Unrest and Political Instability: Social unrest—often triggered by inequality, political crises, or economic shocks—can significantly amplify credit risk by disrupting income flows, consumption patterns, and investor confidence. Empirical evidence from Chile shows that the 2019 protests led to a measurable increase in household default probability, with partial mitigation through pandemic-era relief programs [36]. Similarly, civil unrest has been shown to induce volatility in financial markets, exemplified by reverse herding behaviors among investors [37], and to influence regulatory responses such as foreclosure bans, which may inadvertently elevate long-term credit risk [38]. In the Middle East and North Africa (MENA) region, waves of political instability have prompted structural reforms such as bank privatization, producing mixed outcomes in terms of credit risk and financial system resilience [39].While regional and global analyses are increasingly available, country-level studies for Peru remain scarce. However, recent academic research suggests that social and health crises in Peru prompted a behavioral shift among microentrepreneurs, particularly those in lower-poverty districts, who increasingly substituted personal loans with government-backed business credit during times of elevated uncertainty. This substitution pattern reflects a form of borrower adaptation and highlights the indirect impact of public policy interventions—such as state guarantee programs—on the structure and resilience of credit portfolios in emerging markets [29].

- Compound External Shocks: An emerging body of research highlights that the simultaneous occurrence of exogenous stressors—such as pandemics, climate anomalies, and social unrest—can generate nonlinear, systemic risks that conventional credit risk models are ill-equipped to anticipate [40]. These compound shocks interact across temporal and spatial dimensions, producing cascading effects on borrower behavior, institutional solvency, and portfolio stability. Moreover, societal vulnerabilities such as institutional fragility, inequality, and economic informality intensify the transmission channels and feedback loops of these risks, particularly in emerging economies.To address this complexity, researchers have proposed integrated modeling frameworks that incorporate early-warning signals, tipping points, and network-aware propagation mechanisms spanning environmental, economic, and political domains [40]. These multidimensional approaches are considered essential for the development of next-generation credit risk systems capable of operating under deep systemic uncertainty.In the Peruvian context, recent analyses emphasize the need for credit scoring systems to account for borrower heterogeneity under compound volatility conditions [41]. Empirical studies support the use of hybrid models that integrate Explainable Artificial Intelligence (XAI) techniques—such as SHapley Additive Explanations (SHAP) and Local Interpretable Model-Agnostic Explanations (LIME)—into traditional risk evaluation processes, thereby enhancing model transparency and predictive performance in highly unstable environments [23]. These insights reinforce the urgency of designing robust, adaptive credit assessment tools tailored to complex and evolving risk landscapes.

Despite the growing body of work on machine learning applications for credit scoring in Peru, most studies focus on conventional financial variables without incorporating external systemic stressors. Prior research has evaluated multiple machine learning algorithms for microcredit assessment in rural areas but typically omits exogenous factors such as pandemic shocks or climate anomalies [42]. This reveals a gap in studies that simultaneously integrate multiple external variables into ML-based credit risk models, particularly within the Peruvian financial system.

The present study addresses this limitation by incorporating time-series-based exogenous indicators—including COVID-19 deaths, temperature anomalies, and climate-induced road blockades—into a wide range of ML models. We assess their influence on both predictive accuracy and model interpretability.

3. Materials and Methods

3.1. Research Questions and Data Description

Based on this assessment, this article poses the research questions outlined in Table 1, centering on the interplay between external factors and credit risk assessment. Particular emphasis is placed on the importance of explainability in ML-driven models, as understanding how EF impacts credit risk is critical for decision-making and actionable insights.

Table 1.

Research questions.

To explore these relationships, a study focusing on Peru from January 2020 to September 2023 was conducted. The analysis was based on a comprehensive dataset comprising nearly 8.2 million records, as summarized in Table 2. This dataset integrates diverse EF, such as COVID-19 positive cases and deaths, road blockages, and temperature anomalies, along with financial delinquency (see Table 3) and credit activity data (see Table 4), ensuring a multifaceted approach to understanding credit risk dynamics under external influences.

Table 2.

Datasets.

Table 3.

Description of variables in the time-series dataset on credit delinquency by economic activity.

Table 4.

Summary of variables used in the modeling experiments.

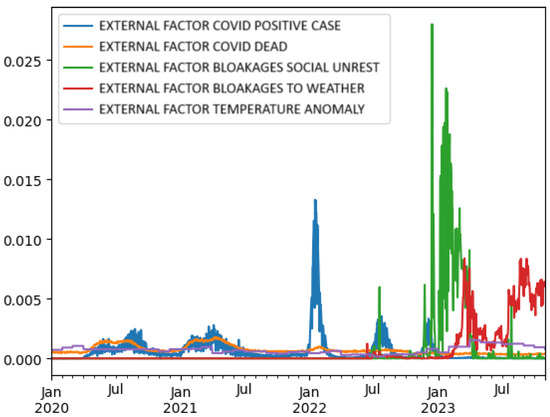

Figure 1 illustrates the trends of various external factors, including COVID-19 cases (positive and dead), social unrest, road blockages due to social unrest and weather, and temperature anomalies. Peaks in certain factors, such as COVID-19 cases and social unrest, were visible during specific periods, indicating their significant occurrence and potential impact on other events or systems.

Figure 1.

Time series visualization of external factors from January 2020 to September 2023. The plot includes normalized series (min–max scaled) for external factors such as COVID-19 confirmed cases, COVID-19 deaths, protest-related road blockages, weather-induced road blockages, and temperature anomalies. The y-axis ranges from 0 to 1, representing relative intensity over time for each factor. This normalization facilitates the comparison of dynamic patterns across factors with different units and magnitudes.

The main features of the credit dataset provided by FMOD are summarized in Table 5. This summary complements the structural descriptions in Table 3 and Table 4.

Table 5.

Descriptive statistics of the credit dataset used for modeling (FMOD).

Table 3 presents the structure of the time-series dataset used to analyze credit delinquency patterns across economic activities. Each row corresponds to an aggregated daily observation, capturing both the average and maximum number of overdue days among loans, grouped by income source, loan purpose, and economic sector. These variables are essential for modeling how different segments of the economy responded to external shocks over time.

By combining these features, the study constructed temporal indicators of financial stress, which were then aligned with a time series of external factors (e.g., COVID-19 indicators and climate anomalies). This temporal alignment enabled the application of causality analyses—such as Granger causality tests—and enhanced the interpretability of the model’s behavior under compound external disruptions.

This approach not only evaluates the predictive accuracy of machine learning models but also examines their capacity to provide transparent explanations for the relationships uncovered, ensuring their practical relevance for decision-making in volatile and complex environments.

Additionally, Table 4 summarizes the set of features used in the individual-level credit risk modeling. These include socio-demographic variables, financial indicators, and normalized business metrics. Each row corresponds to a credit evaluated during the study period, enriched with external factor indicators to support scenario-based experimentation.

Among the set of features, two representative variables were constructed with monthly frequency for this study: ‘DelinquencyDays_MMYYYY’, which captures the number of overdue days per month, and ‘ExternalFactorImpact_MMYYYY’, which flags the presence of external stressors—such as COVID-19, climate anomalies, or social unrest—during the same period. This temporal alignment enabled a dynamic analysis of credit behavior under external disruptions, strengthening both the predictive modeling and the interpretability of the results.

3.2. Cross-Industry Standard Process for Data Mining

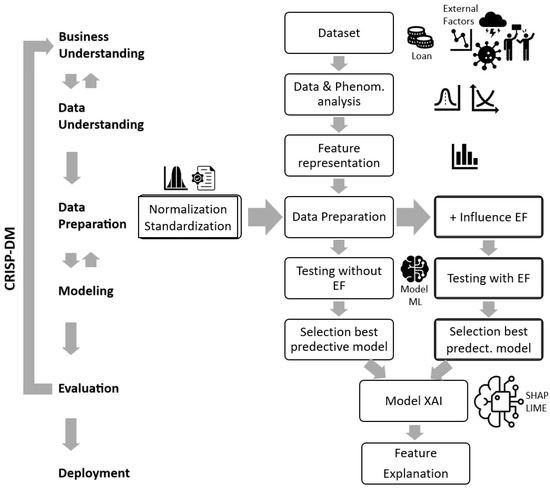

To evaluate the influence of EF on credit risk, we adopt the Cross-Industry Standard Process for Data Mining (CRISP-DM), a widely used framework that structures data mining projects into six iterative stages: Business Understanding, Data Understanding, Data Preparation, Modeling, Evaluation, and Deployment [43]. This methodological foundation provides a robust and flexible structure for guiding machine learning workflows across domains, and has also been successfully applied in prior studies analyzing external factors during crisis scenarios [44,45].

In the context of this study, the application of CRISP-DM allowed us to address two specific challenges associated with modeling credit risk under compound external shocks. First, during the Data Understanding and Preparation phases, the incorporation of exogenous time-series variables—such as pandemic indicators, climate anomalies, and social unrest—required additional preprocessing steps, including stationarity testing, temporal alignment, and Granger causality analysis. Second, during the Modeling and Evaluation phases, we conducted comparative experiments across multiple scenarios (with and without EF), enabling us to assess the marginal contribution and explanatory power of these external variables under volatile conditions.

As illustrated in Figure 2, the CRISP-DM framework provided a structured and iterative foundation for organizing each phase of the experiment. The process began with Business and Data Understanding, which guided the initial dataset exploration and external factor characterization. During Data Preparation, we performed normalization, standardization, and the integration of exogenous time-series variables. This integration was based on a monthly alignment strategy.

Figure 2.

CRISP-DM framework applied to EF-driven credit risk modeling. This diagram illustrates how the CRISP-DM phases structure the experimental workflow, from data understanding and preparation—including the monthly alignment and integration of exogenous time-series variables—to scenario-based modeling (with and without external factors) and the use of explainable AI techniques (SHAP and LIME) during evaluation. The variables DelinquencyDays_MMYYYY and ExternalFactorImpact_MMYYYY are constructed to support a time-aware analysis of credit behavior and its relationship to validated external shocks across economic sectors.

Importantly, the ‘ExternalFactorImpact_MMYYYY’ variable was not assigned uniformly across the dataset. Instead, it was derived by evaluating the declared economic activity of each credit and determining whether that activity exhibited a statistically significant relationship with a given external factor. This was performed using Granger causality tests between the monthly time series of average delinquency for each economic activity and the corresponding external factor series (e.g., COVID-19 deaths and road blockages). Only when a causal relationship was validated did the external factor impact flag become active for loans in that activity during the affected periods.

Each loan record was then linked to the corresponding external factor conditions (e.g., COVID-19, road blockages, and temperature anomalies) based on the month of disbursement or performance observation. This was operationalized through two representative monthly variables: ‘DelinquencyDays_MMYYYY’, capturing overdue status, and ‘ExternalFactorImpact_MMYYYY’, flagging the presence of external stressors. This structure enabled the inclusion of both defaulting and non-defaulting loans, allowing the model to learn from the evolution of payment behavior under varying external conditions.

In the Modeling and Evaluation stages, we compared predictive performance across parallel scenarios—with and without EF—to assess their marginal contribution. Finally, during the Evaluation phase, Explainable Artificial Intelligence (XAI) techniques, specifically SHAP and LIME, were incorporated to enhance transparency and facilitate the interpretation of model decisions concerning external shocks.

3.2.1. Workflow Description

- Dataset Definition and Feature Selection: The process begins by determining the relevant datasets and conducting a comprehensive analysis of the phenomena to be evaluated (EF in this study). Feature selection was guided by the established methodologies [46], identifying attributes with high relevance to credit risk prediction, including both internal (e.g., loan characteristics) and external (e.g., EF) variables. The selected features were refined using feature importance techniques, such as Recursive Feature Elimination (RFE) and Mutual Information (MI), ensuring that only the most significant predictors were retained.

- Data Preparation: Preprocessing involved standardizing and normalizing the dataset (using sum normalization) to homogenize the data scales. Additionally, missing data were handled using multiple imputation techniques to maintain dataset integrity [46].

- Integration of External Factors: To evaluate the impact of EF on credit risk prediction, additional variables corresponding to EF (e.g., COVID-19, temperature anomalies, and social unrest) were incorporated into the dataset. These variables were derived from time-series analyses and causal relationships, validated through statistical methods such as the Dickey–Fuller test and the Granger causality test [47].The Dickey–Fuller test was employed to verify the stationarity of the time-series data, ensuring that relationships between variables were not spurious and that the models yielded reliable results [47]. The stationarity of time series is critical, particularly when external factors such as COVID-19 cases, mortality rates, and roadblocks caused by weather events influence the temporal dynamics. The test is described mathematically asThe terms represent the following:

- : first difference in time series ();

- : a constant;

- : a trend term;

- : lagged value of the time series;

- : coefficients of lagged differences;

- : the error term;

- p: the number of lags.

Following the confirmation of stationarity, the Granger causality test was applied to evaluate the causal influence of these external factors on economic activities. The test, widely used in econometric studies, is defined asThe terms represent the following:- : lagged values of series ;

- , , and : the coefficients;

- : the error term.

Lag variables were calculated to capture delayed effects, with lag periods tailored to each EF (e.g., quarterly for temperature anomalies, and monthly for social unrest and COVID-19). These statistical methods ensured rigorous validation of the causal relationship between EF and economic activities that influence credit defaults. - ML Model Training and Hyperparameter Tuning: Advanced ML models were trained on datasets both with and without EF to assess their predictive contributions. Hyperparameter tuning was conducted using grid search and Bayesian optimization [48] to enhance the model performance. To address the class imbalance in the dataset, techniques such as the Synthetic Minority Oversampling Technique (SMOTE) [49] and class-weighted loss functions were employed [50,51].

- Evaluation and Explainability (XAI): Models were evaluated using metrics such as Accuracy (ACC), Area Under the Curve (AUC), Kolmogorov–Smirnov (KS) and F1-score (F1) across multiple folds (10-fold cross-validation). To enhance interpretability, post hoc explainability techniques such as SHAP and LIME were applied [52]. These methods highlight the most influential features in predicting credit delinquency both before and after incorporating EF [19].

- Comparison of Scenarios: The workflow was executed in parallel for scenarios excluding EF and those incorporating EF. This allowed for a direct comparison of the model performance and the added value of EF inclusion.

3.2.2. Key Enhancements in the Current Study

- The integration of explainability methods (SHAP and LIME) provides actionable insights for decision-makers in financial institutions.

- Comprehensive feature selection processes were implemented, ensuring that only the most predictive attributes were retained for model development.

- Rigorous hyperparameter optimization to maximize model performance and robustness.

By employing this adapted CRISP-DM workflow, this study contributes a systematic and replicable methodology for evaluating EF in credit risk contexts, particularly during crises.

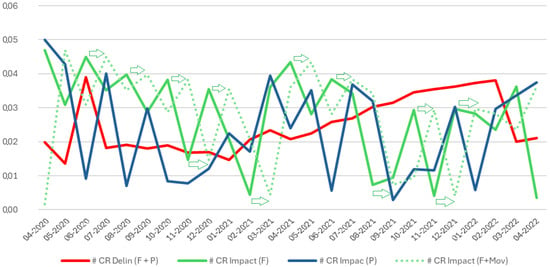

Figure 3 depicts the impact of COVID-19 on economic activities by comparing delinquency rates with the economic impacts over time. The graph highlights fluctuations in economic impact trends, particularly during pandemic peaks, and distinguishes patterns between financial and personal impacts (F + P) and movement-related impacts (CR Impact F + Mov).

Figure 3.

Impact on economic activities by external factor COVID-19. This figure presents normalized credit risk impact values across economic activity types under different pandemic-related external factor scenarios. The vertical axis represents a normalized metric (ranging from 0 to 1) that quantifies the relative intensity of impact observed for each external factor. External factor notations: F = COVID-19 Deaths, P = COVID-19 Positive Cases, F + P = Combined Impact of COVID-19 Deaths and Positive Cases, F + Mov = Combined Impact including Mobility Restrictions.

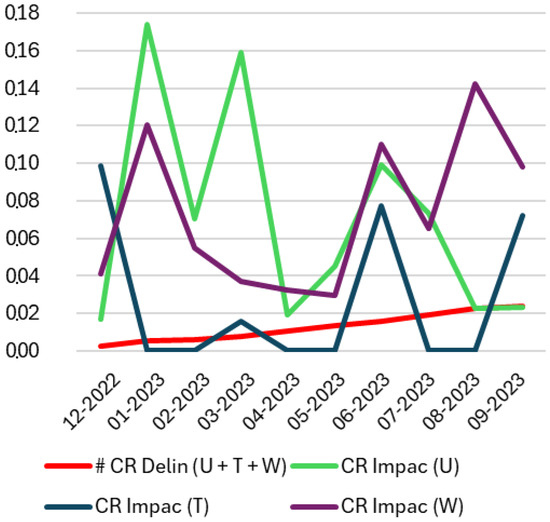

Figure 4 illustrates the impact of social unrest and climatic factors on economic activity from December 2022 to September 2023. It compares delinquency rates with the economic impact of social unrest, weather, and temperature anomalies, and shows significant spikes during periods of intensified external disruptions.

Figure 4.

Impact on economic activities by external factors: social unrest and climatic events. This figure presents normalized credit risk impact values across economic activity types under different external factor scenarios. The vertical axis represents a normalized impact metric (ranging from 0 to 1) that reflects the relative intensity of the effect across sectors. External factor notations: U = social unrest, T = temperature anomaly, W = weather-induced road blockages, U + T + W = combined effect of social unrest, temperature anomaly, and road blockages.

3.2.3. Research Strategy

The evaluation period for this study is defined based on the behavior of the monthly classification variables, starting from the onset of the impact of EF until stabilization as shown in Figure 3 and Figure 4. Stabilization is defined as the point at which the slope of the curve representing the proportion of bad payers (red line) began to level off.

Evaluation Periods

- COVID-19: The evaluation period spans March 2020 to January 2022. Loans disbursed until October 2021 are included to account for a 60-day grace period before delinquencies appeared, ensuring unbiased results (Figure 3).During the analysis of the COVID-19 external factors, it was identified that the trends in the number of positive cases and deaths often showed opposing behaviors. To address this, an additional experiment was conducted, in which the death curve was shifted from one period to the right, referred to as the COVID MOV. This adjustment considered the estimated incubation period of the disease and the time to potential fatality, typically ranging from 2 to 3 weeks. By aligning the death data with this temporal delay, this analysis aimed to capture the causal relationship between disease progression and its impact on credit delinquency rates. This methodological adjustment is illustrated in Figure 3 (green dotted line).

- Social Unrest and Climate Factors: Data from December 2022 to September 2023 were analyzed, with loans disbursed up to June 2023 considered under the same grace period logic (Figure 4).

In addition, the dataset includes a variable that identifies whether a credit was backed by a government guarantee. Based on this variable, two experimental configurations were defined: one including all government-backed credits (WGB_CR), and another excluding them (WOGB_CR), to evaluate potential biases introduced by public credit support programs.

To assess the influence of external stressors on credit risk, multiple experimental scenarios were constructed using different combinations of observed external factors (see Table 6). These include a baseline scenario SFE, as well as scenarios incorporating COVID-19-related metrics such as fatalities (F), confirmed positive cases (P), and a combined F + P scenario. Additionally, a variation labeled COVID MOV was considered, shifting fatality data forward by one month to account for incubation and reporting delays.

Table 6.

Acronyms of evaluated scenarios.

Other scenarios focused on sociopolitical and environmental disruptions, including social unrest indicators (U), temperature anomalies (T), and road blockages caused by extreme weather (W). Combined scenarios such as T + W (climatic anomalies and road disruptions) and U + T + W (integrating social unrest, climate variability, and climate-induced blockages) were also included to evaluate compound effects.

Data Preprocessing

- Data normalization and standardization were applied to homogenize the scales [53].

- Features with correlations above 90% were removed to prevent redundancy.

- SMOTE was used to balance the dataset [54].

Machine Learning Models

We implemented the following models to evaluate the binary classification tasks, prioritizing interpretability and predictive performance:

- Traditional Models: Linear Discriminant Analysis (LDA), Quadratic Discriminant Analysis (QDA), and k-Nearest Neighbors (KNN) [54].

- Advanced Models: Perceptron (PERCEP), Multi-layer Perceptron (MLP), Random Forest (RF) [3], Ridge Regression (Ridge) [55], and Extreme Gradient Boosting (XGB) [56].

- Explainable Models:

- –

- Explainable Neural Network (XNN), which directly integrates interpretability into the model architecture [17].

- –

- Explainable Boosting Machines (EBM), a method that combines high accuracy with feature interpretability through additive modeling [57].

- Feedforward Neural Networks: Variants of Feedforward Neural Networks (FFNN) were explored:

- –

- Standard Feedforward Neural Network (FFN): A classical architecture used as a baseline for comparison.

- –

- Feedforward Neural Network with Sparse Regularization (FFNSP): Incorporates sparsity constraints to enhance generalization and reduce overfitting.

- –

- Feedforward Neural Network with Local Penalty Regularization (FFNLP): Local penalty terms are applied to balance feature contributions and improve interpretability.

- Deep Learning: Convolutional Neural Networks (CNN) were included for their ability to capture complex relationships in both spatial and temporal data [25]. Although CNN are traditionally applied to image or time-series data, in this study, each tabular instance was reshaped into a 2D tensor of shape , allowing the use of 1D convolutional layers to capture localized patterns across the feature vector. This approach has been successfully applied in previous studies involving structured tabular data [58].

Each model was trained and evaluated using a stratified 10-fold cross-validation protocol, ensuring balanced class representation across folds [26]. In each fold, 90% of the data was used for model development and 10% was held out for testing. Within the development portion, 75% was used for training and 25% for internal validation, enabling unbiased hyperparameter tuning. We employed a combination of grid search and Bayesian optimization to identify optimal model configurations.

All performance metrics—including ACC, AUC, F1, and KS—were computed separately on the validation and test partitions for each fold. The final results reported in the manuscript correspond to the average values obtained across the 10 test folds.

Explainability with XAI

To address concerns about interpretability, SHAP and LIME were applied to identify the most influential features in predicting credit delinquency both before and after incorporating EF. Models such as XNN and EBM provided native interpretability, complementing these post hoc methods.

Statistical Evaluation

The impact of EF on model performance was assessed using Student’s t-test (Equation (3)), applied to metrics such as ACC, AUC, KS and F1 from the cross-validation folds, with a significance level of :

The terms represent the following:

- : mean of metrics from ten folds;

- : hypothetical mean under the null scenario;

- s: standard deviation of metrics;

- n: number of folds ().

Economic Activity Relevance

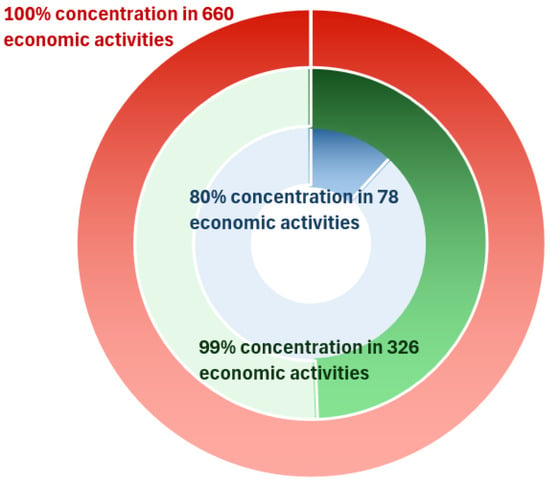

The dataset, comprising 367,000 loans (see Table 2), was analyzed based on its concentration across economic activities. This analysis is particularly relevant, as the impact of EF on these activities amplifies exposure to credit risk. The distribution of loans is as follows:

- 80% Concentration: 80% of the loans are concentrated in 78 economic activities.

- 99% Concentration: 99% of the loans are concentrated in 326 economic activities.

- 100% Concentration: 100% of the loans are distributed across 660 economic activities.

Figure 5 illustrates these groupings, highlighting the increased dispersion and noise as the number of activities increases. To ensure a comprehensive analysis, the results were further examined by including and excluding loans affected by government-backed programs, thereby isolating the potential biases introduced by such interventions.

Figure 5.

Relevance of Economic Activity.

Research Limitations and Biases

While this study provides valuable insights into the influence of EF on credit delinquency predictions, several limitations and potential biases must be considered:

- Context-Specific Findings: The analysis is based on data from the Peruvian context, which may limit the generalizability to other regions with different economic, social, and environmental dynamics. Future research should incorporate external validation by using datasets from diverse geographical areas to evaluate the transferability of the proposed methodology.

- Training Data Bias: Dataset imbalances and the under-reporting of delinquency during government interventions may introduce biases. Although SMOTE has been used to address class imbalances, residual biases related to data collection practices or policy impacts may persist.

- Model Transparency: While explainability techniques such as SHAP, LIME, and interpretable models (e.g., XNN and EBM) have been employed, the use of black-box models like CNN and XGB remains a challenge. This lack of inherent transparency could hinder their applicability to financial institutions, where explainability is crucial for regulatory compliance and stakeholder trust.

- Temporal Assumptions: The assumption that loans disbursed near the evaluation cutoff reflect the grace period impacts may not fully account for the delayed delinquency effects, particularly for loans with extended repayment terms.

- Data Quality and Noise: External factor measurements and institutional datasets may contain noise or inaccuracies that could affect model performance, despite preprocessing steps such as normalization and standardization. Future work should explore advanced noise-reduction techniques.

- Researcher Influence: Prior domain knowledge and experience can introduce subtle biases in model selection, EF, or preprocessing decisions. To mitigate this, the study adhered to best practices such as k-fold cross-validation, rigorous statistical testing, and methodological transparency.

Despite these limitations, the methodological rigor applied in this study, including robust preprocessing, comprehensive statistical validation, and the integration of explainable models, provides a solid foundation for future research. The findings highlight the need for the further exploration of EF impacts in broader contexts, paving the way for more robust, interpretable, and generalizable credit risk assessment frameworks.

4. Results

4.1. Stationarity Analysis and Dataset Integration

In this analysis, “economic activities” refer to the internal sector classification codes provided by the financial institution FMOD. These codes represent a customized segmentation structure used to group clients based on their type of business or productive sector, and are derived from operational risk models. Although not fully equivalent to official international standards, these codes are conceptually aligned with the International Standard Industrial Classification of All Economic Activities (ISIC Rev. 4), maintained by the United Nations Statistics Division. (https://unstats.un.org/unsd/classifications/Econ/isic, accessed on 5 April 2025).

Specifically, the evaluation window from April 2018 to February 2020 (as shown in Table 7) contains 718 unique activity codes, each linked to credit operations that remained active during that period. Other periods in the table include subsets of these activities, depending on whether data on overdue loans were available within each respective evaluation window.

Table 7.

Economic activity delinquency stationarity.

To assess the influence of EF on economic activity delinquency, we first applied the Dickey–Fuller stationarity test (see Equation (1)) to the normalized daily time series covering the period from January 2016 to September 2023 (see Table 2). These time series represent the number of delinquency days associated with the economic activities under study, examined both in periods with EF and without EF [47].

Key Stationarity Findings

Table 7 shows that, in periods without EF, between 90% and 91% of the activities exhibit non-stationary delinquency patterns, while 6–7% exhibit stationarity, and around 3% are indeterminate. In contrast, periods with EF present a lower proportion of non-stationary activities (75–85%) and a higher proportion of stationary (6–22%) or indeterminate patterns (2–9%). These differences suggest that exogenous shocks, such as pandemics, social unrest, or climatic disruptions, can induce more persistent or complex delinquency behaviors, consistent with similar observations in other emerging economies.

To assess the influence of EF on economic activity delinquency, the Dickey–Fuller stationarity test was applied (see Equation (1)) to the normalized time series of daily frequency. These series represent the days of delinquency associated with the analyzed economic activities. The data used for this analysis cover the period from January 2016 to September 2023 (see Table 2). The analysis was performed for both periods influenced by EF and those without EF [47].

The results indicate that during periods without EF influence, between 90% and 91% of economic activities exhibited non-stationary delinquency, while 7% and 6% showed stationarity. Additionally, 3% of the activities fell into the “indeterminate” category, where stationarity could not be conclusively determined. In periods with EF influence, the proportion of non-stationary activities decreased to 75% and 85%, whereas stationary activities increased to 22% and 6%. The “indeterminate” category also showed slight variations, with 2% and 9% of the activities falling into this group.

The “indeterminate” classification, though a minority, highlights cases where delinquency patterns are less predictable or fall outside the thresholds for stationarity detection. This suggests that EF may not only influence stationarity directly but may also introduce complexity in delinquency patterns, making it harder to classify definitively.

This behavior may be explained by the risk management practices of financial institutions during stable periods. In the absence of external shocks, institutions are more capable of identifying early signs of payment delays and can adjust their credit exposure accordingly—often by suspending or restricting lending to high-risk segments. These interventions disrupt emerging trends, resulting in more irregular default behavior that tends to deviate from stationary assumptions due to abrupt shifts in mean or variance.

Conversely, during systemic external shocks—such as pandemics or climate disruptions—multiple economic sectors experience distress concurrently, reducing the institution’s ability to react. This leads to prolonged and homogeneous delinquency patterns across broader borrower groups, increasing the likelihood of stationarity in the associated time series.

These findings are summarized in Table 7, which provides a detailed breakdown of the stationarity classifications across the different periods of evaluation. The results underscore the dynamic impact of external factors on the stationarity of delinquency days in economic activities, reinforcing the need to account for these factors in economic analyses and credit risk prediction models.

4.2. Definition of Evaluated Scenarios and Causality Testing

To evaluate the seasonal impact of EF on the default behavior of economic activities, the Granger causality test (see Equation (2)) was applied to the time series representing the days of default for each economic activity, in conjunction with the time series of each EF. For simplicity, a binary value of 1 was assigned when the p-value was less than 0.05, indicating statistically significant causality, and 0 otherwise. This binary classification was performed every month to effectively capture temporal variations.

The results of this causality analysis were subsequently integrated into the credit dataset and associated with the corresponding economic activity and period under analysis. Additionally, a global binary classification variable was calculated and appended to the dataset to identify the payment quality of credit holders (good or bad payers) based on monthly delinquency data. This enriched dataset provided the foundation for applying Algorithm 1 to the established datasets (see Figure 5) to compute the ACC, AUC, KS, and F1 metrics for the evaluated machine learning models. These models were assessed across the scenarios listed in Table 6. Based on the values obtained, the differences between the scenarios that include EF and SFE scenarios were calculated as shown in Table 8.

| Algorithm 1 Dataset evaluation using ML algorithms. |

|

Table 8.

Acronyms of the results for the evaluated scenarios.

The performance metrics (ACC, AUC, KS and F1) derived from the application of machine learning models for the binary classification of good and bad payers, evaluated across datasets stratified by relevance to established scenarios, are presented in Table A1, Table A2, Table A3, Table A4, Table A5, Table A6, Table A7, Table A8, Table A9, Table A10, Table A11, Table A12, Table A13, Table A14, Table A15 and Table A16 in Appendix A. These tables provide a detailed comparative analysis of the scenarios with and without the inclusion of EF related to COVID-19, social unrest, and climate change.

Additionally, Table A17, Table A18, Table A19, Table A20, Table A21 and Table A22 in Appendix A identify the machine learning models that achieved statistically significant improvements in their classification metrics when the EFs were incorporated. These tables delineate the conditions under which specific models demonstrate enhanced predictive capabilities, providing critical insights into the sensitivity and adaptability of various machine learning approaches to external factors.

The comprehensive dataset from these experiments forms a robust foundation for addressing the research questions that underpin this study. Given the extensive volume of information presented, the subsequent discussion focuses on the most significant results, with direct references to the relevant tables to highlight critical findings and their implications for predictive modeling under diverse scenarios.

- RQ1. How did the COVID-19 pandemic, through factors such as positive cases and mortality rates, influence credit defaults in different economic activities?

The first research question is focused on understanding the impact of the COVID-19 pandemic on credit delinquency, particularly through the inclusion of variables such as confirmed infections and mortality. A comparative analysis was conducted between baseline scenarios SFE and those influenced by pandemic conditions, including a time-shifted mortality scenario (COVID MOV). Across all evaluation metrics—AUC, ACC, KS, and F1—the results consistently indicate a marked shift in credit risk behavior during pandemic periods.

Among the pandemic-related variables, mortality rates—especially when temporally aligned to reflect delayed economic effects—proved to be the most influential predictor of default. This effect was most evident in the 3. D80 dataset, which encompasses high-contact economic sectors that are particularly vulnerable to pandemic restrictions and health crises. In this scenario, the inclusion of lag-adjusted mortality data significantly enhanced model performance, suggesting that delayed health outcomes capture the real-world propagation of credit risk better than contemporaneous case counts alone.

The presence of government-backed credit mechanisms also played a decisive role. In datasets where such guarantees were included (WGB_CR), the predictive impact of the pandemic was partially cushioned, resulting in lower volatility and more stable classification outcomes. Conversely, in non-guaranteed portfolios (WOGB_CR), default risks increased substantially, reflecting the lack of institutional buffers.

In terms of model performance, CNN and XGB emerged as the most effective across all partitions, consistently achieving top-tier predictive accuracy and discrimination. While models such as EBM demonstrated improvements in specific scenarios—particularly under external stressors—others like XNN exhibited limited effectiveness, especially in pandemic-related contexts, which constrained their applicability despite their inherent interpretability features.

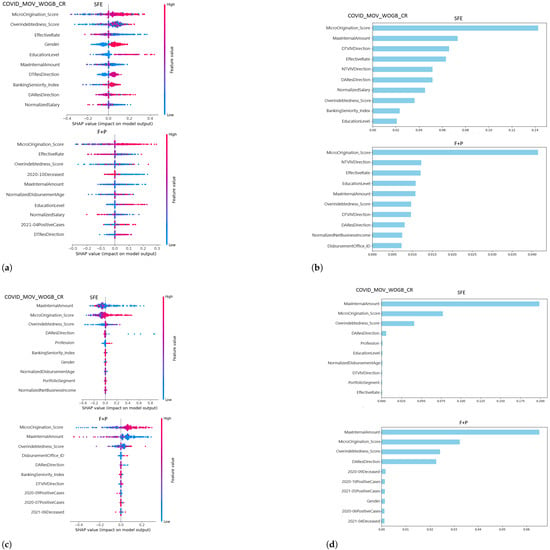

These findings are further reinforced by the interpretability analyses shown in Figure 6, where SHAP and LIME techniques reveal how the relative importance of predictive features shifts across scenarios. Variables related to debt burden and origination characteristics gained or lost prominence depending on whether pandemic indicators were present, reflecting the models’ dynamic response to exogenous health shocks.

Figure 6.

Top 10 features between without external factors (SFE) and COVID-19 stress scenarios (F + P). (a) XGB SHAP analysis, (b) XGB LIME analysis, (c) CNN SHAP analysis, and (d) CNN LIME analysis. Legend: F = COVID-19 confirmed cases; P = COVID-19 deceased; F + P = Combined scenaries; Mov = Mobility restrictions; WGB_CR = With Government-Backed Credit; WOGB_CR = Without Government-Backed Credit.

In summary, the COVID-19 pandemic had a substantial and measurable effect on credit delinquency patterns. Mortality—particularly when time-shifted—proved to be the most impactful factor, and its inclusion substantially improved model robustness. Government interventions, while partially effective, could not fully suppress the delinquency risks in the most exposed segments. These insights underscore the value of integrating lag-sensitive external variables into credit risk modeling, especially for sectors with heightened structural vulnerability.

Figure 6 provides interpretability insights into the predictive behavior of the XGB and CNN models under both baseline (SFE) and COVID-affected (F + P) scenarios, using SHAP and LIME explainability techniques.

Figure 6a,b illustrate the SHAP and LIME results for the XGB model, respectively. Under the SFE scenario, features such as MicroOrigination_Score, Overindebtedness_ Score, and MaxInternalAmount exhibit the greatest impact on prediction outputs. In the F + P setting, variables directly linked to pandemic conditions—such as 2021-04Positive Cases and 2020-10Deceased—emerge alongside financial indicators, suggesting an integration of external health shocks into the model’s risk prioritization.

Figure 6c,d display the corresponding SHAP and LIME analyses for the CNN model. Similar to XGB, the CNN model assigns greater relevance to features such as MaxInternalAmount and MicroOrigination_Score under the baseline (SFE) scenario. In the F + P scenario, feature importance shifts toward pandemic-related variables and demographic indicators (e.g., 2020-09PositiveCases, 2020-09Deceased, DAResDirection, Gender), reflecting the model’s responsiveness to contextual changes introduced by COVID-19.

Together, these visualizations underscore the dynamic interaction between model architecture and scenario context, revealing how external stressors—particularly COVID-19—reshape the prioritization of financial and behavioral risk indicators within advanced machine learning models.

- RQ2. To what extent do climate change indicators, such as temperature anomalies and road blockages due to weather, impact credit delinquency patterns?

The analysis of climate-related external factors—specifically temperature anomalies (T) and weather-induced road blockages (W)—reveals a measurable and statistically significant influence on credit delinquency behavior. While the magnitude of this effect is generally more moderate compared to pandemic-related variables, it remains consistent across scenarios and data partitions.

Table A9, Table A10, Table A11 and Table A12 (WGB_CR) and Table A13, Table A14, Table A15 and Table A16 (WOGB_CR) show notable improvements in classification metrics such as ACC, AUC, KS, and F1 upon including climate variables. These gains are particularly prominent in the absence of government guarantees, where borrowers are more directly affected by logistical disruptions. In portfolios without policy support (WOGB_CR), the effect of weather-related blockages is accentuated, often contributing to delays in income flows and operational closures—factors that increase credit risk exposure.

From a comparative perspective, the predictive gains attributed to climate anomalies may not be as large as those observed under pandemic mortality scenarios, yet they are highly context dependent. For instance, improvements in AUC of up to 3% and accuracy gains of over 30% have been recorded in neural network models, especially when the economic activities under analysis are concentrated in geographically exposed sectors. This supports the findings of [30], who demonstrated that road infrastructure disruptions can propagate liquidity shocks and elevate default risk, particularly in informal or underserved lending markets.

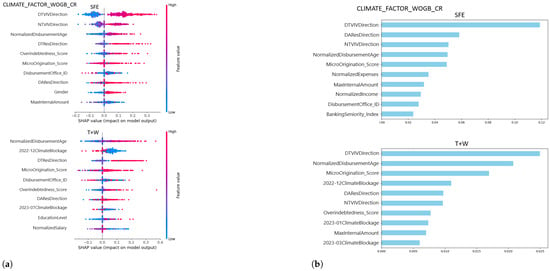

Figure 7 illustrates how the XGB model integrates climate variables into its decision structure, ranking them alongside key behavioral and demographic attributes. This interpretability result reaffirms that exogenous environmental stressors, while external to borrower control, are internalized by advanced algorithms as significant contributors to credit delinquency prediction.

Figure 7.

Top 10 features between scenarios without external factors (SFE) and with climate external factors (T + W): (a) XGB SHAP analysis. (b) XGB LIME analysis. Legend: SFE = Scenario without external factors; T = Temperature Anomaly; W = Climate Blockage; T + W = Combined scenarios; WGB_CR = With Government-Backed Credit; WOGB_CR = Without Government-Backed Credit.

In sum, the inclusion of climate indicators in credit risk models enhances their ability to reflect real-world disruptions and borrower vulnerability. The observed performance improvements are not only statistically valid but also economically meaningful, underscoring the importance of incorporating climate-related risks into financial predictive systems, particularly in emerging markets subject to environmental volatility.

Figure 7 provides interpretability insights into the behavior of the XGB model under climate-related stress conditions. Figure 7a presents the SHAP analysis comparing the baseline scenario SFE and the scenario that includes both temperature anomalies and weather-induced road blockages (T + W). The visualization highlights the ten most influential features in each setting, with color gradients indicating the direction and intensity of their contribution to the model’s predictions. High feature values are represented in red and low values in blue, allow a clear interpretation of how variable magnitudes relate to increased or decreased credit risk.

Figure 7b displays the corresponding LIME analysis for the same dataset (CLIMATE_ FACTOR_WOGB_CR). In both scenarios, DTResDirection emerges as the most critical driver of classification outcomes. Notably, under the T + W scenario, Gender gains relevance, suggesting that climate-related externalities may interact differently with sociodemographic characteristics. This contrast between the SFE and T + W contexts reinforces the need to account for latent vulnerabilities when modeling credit risk under environmental stress.

Together, these interpretability results confirm that the inclusion of climate factors not only enhances model accuracy but also reconfigures the internal prioritization of risk features. This dynamic adaptation reflects the capacity of machine learning models like XGB to absorb and reflect external shocks through learned decision boundaries.

- RQ3. What is the relationship between credit delinquency and social unrest, considering disruptions to economic activities and societal stability?

Social unrest—manifested through protests, strikes, and other large-scale disruptions—can severely affect economic activity by limiting mobility, reducing consumer demand, and disrupting informal and formal markets. These effects become particularly salient in borrower segments highly dependent on local or face-to-face interactions.

Our analysis confirms that the inclusion of social unrest indicators (U) in the datasets yields statistically significant improvements in credit delinquency prediction. These improvements are evident in both WGB_CR and WOGB_CR portfolios, although the magnitude of the effect is more pronounced in the latter. This distinction highlights the protective role of policy guarantees, which tend to buffer the financial impact of systemic disruptions.

In terms of model responsiveness, CNN, and XGB exhibit the highest sensitivity to unrest scenarios, achieving notable gains in metrics such as AUC, ACC, KS, and F1. Classical models, including LDA and Ridge, also show moderate but statistically consistent improvements. Notably, in the 3. D80 dataset—focused on high-contact economic activities—the accuracy and discrimination capacity of models improve substantially under social unrest scenarios, suggesting that these sectors are disproportionately vulnerable to institutional instability.

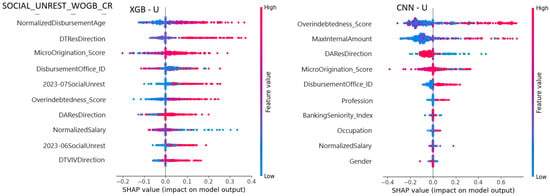

Interpretability analyses provide further insight. As shown in Figure 8, SHAP values for CNN and XGB highlight a shift in feature relevance when social unrest variables are introduced. Features such as Overindebtedness_Score and MaxInternalAmount become more prominent, reflecting the increased importance of financial stress indicators in volatile sociopolitical contexts.

Figure 8.

SHAP XGB and CNN: Top 10 Features in scenarios with social unrest (U). Legend: U = social unrest, represented by events of civil protest and political instability registered in 2023; WGB_CR = with government-backed credit; WOGB_CR = without government-backed credit.

In summary, the presence of social unrest introduces measurable and context-sensitive volatility in credit delinquency behavior. Its integration into predictive models not only improves classification performance but also enhances the capacity to identify borrower segments at heightened risk. These findings support the existing literature on the financial impacts of sociopolitical instability and underscore the importance of incorporating such variables into credit risk assessments, particularly in emerging economies where unrest events are recurrent and unevenly absorbed by the population.

Figure 8 presents the SHAP feature importance analysis for the XGB and CNN models under the “U scenarios” in the SOCIAL_UNREST_WOGB_CR dataset. The top 10 influential features are shown for each model, with Overindebtedness_Score and MaxInternalAmount being particularly significant in both, highlighting their impact on the model predictions.

- RQ4. How do the combined effects of external factors (COVID-19, climate change, and social unrest) contribute to variations in credit delinquency, and what are the most influential factors?

The combination of external factors—pandemic severity, climatic anomalies, and sociopolitical instability—amplifies the complexity and unpredictability of credit behavior. When analyzed jointly, these variables generate compounded stress conditions that exert a stronger influence on delinquency patterns than any of them in isolation. This effect is particularly evident in the absence of government-backed guarantees (WOGB_CR), where borrowers are fully exposed to systemic shocks.

Empirical results from multifactor scenarios, such as U + T + W and Climate+Unrest, show a higher density of statistically significant gains across all evaluation metrics. Notably, mortality indicators (especially in lag-adjusted form, i.e., COVID MOV) remain the dominant predictor of default, reflecting the profound economic dislocation associated with fatal health outcomes. Climatic variables, although more moderate in their individual contribution, reinforce vulnerability in certain borrower segments, particularly when the infrastructure is disrupted by weather-related events. Social unrest introduces further pressure by destabilizing labor income and limiting business continuity.

Model behavior under combined factor scenarios reveals distinct patterns. XGB consistently ranks as the most resilient and adaptive model, achieving significance across all multifactor experiments. CNN also demonstrates strong performance, although with some sensitivity to the structure of the input data and the intensity of external stressors. While XNN was included as an interpretable neural model, its performance was inconsistent, limiting its contribution beyond methodological comparison. Classical models such as Ridge and LDA continue to offer baseline robustness, particularly in datasets with partial shielding (e.g., WGB_CR), although their gains remain smaller and more scenario dependent.

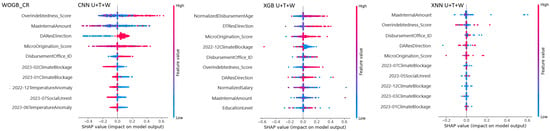

Figure 9 supports these findings by showing the SHAP analyses for CNN, XGB, and XNN in scenarios combining all external factors. Variables such as MaxInternalAmount, Overindebtedness_Score, and DAResDirection consistently emerge as top predictors, reflecting how borrower financial behavior interacts with macro-level disruptions. The shifting prominence of these features across models underscores the necessity of interpretability tools to contextualize model predictions in complex environments.

Figure 9.

SHAP CNN, XGB, and XNN: Top 10 features in scenarios combining external factors. Legend: U = social unrest (e.g., protests and road blockages); T = temperature anomalies; W = climate blockages; U + T + W = combined scenario including all three external factors; WOGB_CR = credit risk portfolio excluding government-backed loans.

In conclusion, the combined influence of external shocks intensifies credit risk dynamics and reveals structural fragilities in both the borrower base and the credit portfolios. The models’ ability to detect and adapt to these stressors is contingent not only on the algorithmic design but also on how exogenous variables are incorporated, preprocessed, and aligned temporally. These findings reinforce the importance of building integrated, context-aware credit risk models—particularly in economies where multiple external threats converge and the margin for institutional response is limited.

5. Discussion and Research Implications

This study examined how credit delinquency prediction is affected by the incorporation of EF, including pandemic severity, climate anomalies, and social unrest. The findings demonstrate that these variables introduce significant and context-dependent shifts in credit behavior, particularly in scenarios without government-backed guarantees. At the same time, the process revealed methodological challenges that are increasingly relevant in the application of ML in dynamic financial environments.

The first challenge relates to the quality and availability of data on external disruptions. Publicly available records on COVID-19 mortality, weather events, and protest activity often lack consistency and timeliness, complicating their integration into high-frequency credit datasets. Similar issues have been noted in prior empirical efforts conducted in Peru, where the construction of reliable time series for economic modeling has required substantial preprocessing [28,29]. In this study, the alignment of mortality data through temporal shifts proved essential, as it revealed the delayed but pronounced influence of pandemic fatalities on repayment capacity.

Another source of complexity was the divergence between credit portfolios with and without public guarantees. Government-backed loans mitigated part of the risk during systemic shocks but also altered the underlying statistical distribution of defaults. This distinction made model generalization more challenging but also more necessary for understanding the buffering effects of financial policy. Prior studies in Latin America suggest that the performance of ML classifiers can vary significantly depending on whether policy instruments like emergency credit programs are present [27].

Overfitting risks were also non-trivial, particularly when combining multiple exogenous factors in deep learning architectures. Although regularization and cross-validation strategies were implemented, the complexity of models such as CNN and XNN occasionally reduced transparency. While post hoc interpretability tools like SHAP and LIME help uncover shifts in feature relevance across scenarios, they do not entirely resolve concerns regarding model opacity—especially in contexts where regulatory clarity and explainability are essential [30,59].

Despite these challenges, several insights emerge. Mortality-related variables—especially when time-lagged—exert the strongest influence on credit behavior, particularly in high-contact economic sectors. Climate-related disruptions, though more moderate in effect, consistently improved predictive accuracy, especially in vulnerable portfolios. Social unrest also introduces nonlinear shocks, further reinforcing the need for adaptive models that account for real-world volatility.

The integration of multiple external stressors revealed a compounding effect on delinquency, a finding that conventional econometric approaches may fail to capture. Tree-based and neural models, particularly XGB and CNN, showed the greatest adaptability in this regard. At the same time, classical models like Ridge maintained stable performance under certain constrained scenarios, suggesting a role for hybrid modeling strategies.

In the Peruvian context, these findings align with recent efforts to modernize credit risk assessment through advanced analytics. Several local studies highlight the value of incorporating contextual variables and borrower segmentation into scoring systems, particularly in the wake of the pandemic and its economic aftermath [29,35]. Internationally, there is growing consensus around the importance of multidimensional modeling frameworks that include environmental and social dimensions alongside traditional financial indicators.

Overall, this study contributes to the ongoing development of risk prediction systems capable of responding to complex and evolving realities. It underscores the importance of aligning exogenous variables not only temporally but also conceptually with the credit cycle. Future implementations of ML in finance—particularly in emerging economies—should prioritize data interoperability, scenario partitioning, and explainable design to ensure both accuracy and transparency in risk-based decision-making.

6. Conclusions and Future Research

This study has demonstrated that credit delinquency prediction improves significantly when EF—such as COVID-19 severity, climate anomalies, and social unrest—are systematically integrated into machine learning workflows. Using a multi-scenario design and high-resolution time series, the models captured both the direct and delayed effects of these external shocks across various economic activities and portfolio compositions.

By incorporating explanatory tools such as SHAP and LIME, and validating the results with formal stationarity and causality tests, the study provided both predictive and interpretive value. Notably, the performance of key metrics—AUC, ACC, KS, and F1—was significantly enhanced in models exposed to time-shifted mortality and climate-related variables. This confirmed that delayed systemic shocks (e.g., COVID-related deaths) exert stronger predictive influence than contemporaneous infection rates, and that road infrastructure disruptions propagate liquidity risks, particularly in non-guaranteed portfolios.

The comparative evaluation revealed that CNN and XGB consistently outperformed other models across multiple relevance partitions and scenarios. While EBM proved especially useful in contexts requiring greater model transparency, XNN served as an exploratory reference for intrinsically interpretable architectures, although its predictive effectiveness was limited in high-volatility scenarios. Moreover, the role of government-backed guarantees emerged as central: their presence moderated the effects of external crises, whereas their absence magnified the volatility and default risks observed in the models.

Overall, these findings offer a scenario-driven framework for understanding how systemic shocks affect credit behavior. This framework is replicable and adaptable to emerging economies facing concurrent crises, combining domain-sensitive data transformations (e.g., lag structures) with advanced modeling and interpretability techniques. Importantly, the integration of KS and F1 metrics deepens the evaluation of not just model accuracy but also the discriminatory power and balance between false positives and false negatives—factors critical to financial risk management and regulatory compliance.

Looking forward, several research directions are both relevant and necessary. First, the use of static lag structures could be extended through adaptive or dynamic lag modeling, particularly in the case of gradual-onset crises such as climate change or prolonged social instability. Second, cross-regional replication would test the generalizability of these findings in other vulnerable markets, where the interaction between policy buffers and external shocks may differ. Third, in-depth analysis of credit policies—such as emergency moratoria or targeted guarantees—would enable the finer-grained modeling of institutional effectiveness under stress. Finally, explainability remains a methodological frontier: while SHAP and LIME provide insights, future work could explore intrinsically interpretable models or causal frameworks that support regulatory transparency and stakeholder trust.

In conclusion, this research confirms the value of integrating multidimensional exogenous variables into credit risk modeling, offering a robust and explainable approach to capturing volatility in increasingly complex economic environments. As global financial systems face overlapping crises, such tools will become indispensable for promoting resilience, fairness, and informed decision-making in credit allocation.

Author Contributions

Conceptualization, J.N. and J.H.; methodology, J.N.; validation, J.N., L.R. and J.H.; formal analysis, J.N., J.C. and J.H.; investigation, J.N.; resources, J.N.; writing—original draft preparation, J.N.; writing—review and editing, J.N., L.R., J.C. and J.H.; visualization, J.N. and J.C.; supervision, J.H.; project administration, J.N. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The datasets presented in this study are partly publicly available and partly proprietary. Public datasets (COVID-19 cases, deaths, road blockages, and temperature anomalies) are accessible through the official URLs listed in Table 2. Proprietary financial datasets (credit delinquency activity and credits with external factors) were used under confidentiality agreements and have been anonymized and aggregated. Metadata, modeling programs, and experimental results have been deposited and are openly available at Zenodo: https://zenodo.org/records/14890903. Further inquiries can be directed to the corresponding author(s).

Conflicts of Interest

The authors declare no conflicts of interest.

Appendix A. Results Tables

Table A1.

Average AUC and ACC across 10 folds of ML models with COVID EF WGB_CR, by evaluated scenario and relevance groups.

Table A1.

Average AUC and ACC across 10 folds of ML models with COVID EF WGB_CR, by evaluated scenario and relevance groups.

| AUC (%) | ACC (%) | |||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Scenarios | Results | Scenarios | Results | |||||||||||

| SFE | P | F + P | F | P - SFE | F + P - SFE | F - SFE | SFE | P | F + P | F | P - SFE | F + P - SFE | F - SFE | |

| WGB_CR | ||||||||||||||

| 1. D100 | ||||||||||||||

| cnn | 72.82 | 73.63 | 74.09 | 74.09 | 0.82 | 1.28 | 1.27 | 67.49 | 70.07 | 70.18 | 70.14 | 2.58 | 2.69 | 2.65 |

| ebm | 81.13 | 81.23 | 81.42 | 81.26 | 0.10 | 0.29 | 0.13 | 74.84 | 74.94 | 75.05 | 74.93 | 0.09 | 0.20 | 0.08 |

| ffn | 72.30 | 72.56 | 72.39 | 73.38 | 0.26 | 0.10 | 1.08 | 66.74 | 65.24 | 66.15 | 66.14 | −1.50 | −0.59 | −0.60 |

| ffnlp | 72.11 | 72.35 | 72.41 | 72.72 | 0.25 | 0.30 | 0.61 | 66.28 | 67.55 | 65.64 | 65.19 | 1.27 | −0.64 | −1.09 |

| ffnsp | 72.31 | 72.31 | 71.42 | 72.77 | 0.00 | −0.89 | 0.47 | 66.40 | 66.45 | 64.67 | 65.59 | 0.05 | −1.73 | −0.81 |

| knn | 67.21 | 66.92 | 66.93 | 66.93 | −0.29 | −0.28 | −0.28 | 63.23 | 63.00 | 62.97 | 62.98 | −0.22 | −0.26 | −0.24 |

| lda | 67.23 | 68.01 | 68.54 | 68.25 | 0.78 | 1.31 | 1.02 | 63.09 | 63.74 | 64.09 | 63.77 | 0.65 | 1.00 | 0.67 |

| mlp | 69.30 | 70.83 | 71.17 | 70.47 | 1.53 | 1.87 | 1.17 | 60.82 | 63.26 | 63.58 | 62.87 | 2.44 | 2.76 | 2.06 |

| percep | 57.96 | 58.44 | 59.88 | 59.46 | 0.48 | 1.92 | 1.50 | 44.58 | 57.99 | 56.37 | 49.31 | 13.41 | 11.78 | 4.73 |

| qda | 67.44 | 66.98 | 65.81 | 67.17 | −0.46 | −1.63 | −0.27 | 52.50 | 54.01 | 54.13 | 53.72 | 1.52 | 1.64 | 1.22 |

| rf | 81.74 | 81.51 | 81.21 | 81.46 | −0.23 | −0.53 | −0.29 | 75.51 | 75.44 | 75.22 | 75.48 | −0.07 | −0.29 | −0.03 |

| ridge | 66.94 | 67.55 | 68.05 | 67.81 | 0.62 | 1.12 | 0.87 | 62.93 | 63.39 | 63.70 | 63.43 | 0.46 | 0.77 | 0.50 |

| xgb | 80.76 | 81.00 | 81.10 | 81.07 | 0.23 | 0.33 | 0.30 | 74.77 | 74.98 | 75.03 | 74.98 | 0.21 | 0.26 | 0.22 |

| xnn | 50.14 | 50.38 | 50.10 | 50.37 | 0.24 | −0.05 | 0.22 | 53.42 | 57.99 | 62.76 | 54.22 | 4.57 | 9.34 | 0.80 |

| 2. D99 | ||||||||||||||

| cnn | 72.75 | 73.60 | 74.27 | 73.57 | 0.86 | 1.52 | 0.83 | 67.04 | 70.03 | 70.42 | 69.97 | 2.98 | 3.37 | 2.93 |

| ebm | 81.04 | 81.24 | 81.37 | 81.24 | 0.19 | 0.33 | 0.20 | 74.77 | 74.93 | 75.04 | 74.94 | 0.16 | 0.28 | 0.18 |

| ffn | 72.69 | 72.38 | 71.50 | 71.55 | −0.31 | −1.20 | −1.14 | 66.07 | 65.94 | 65.86 | 65.75 | −0.14 | −0.21 | −0.32 |

| ffnlp | 71.71 | 72.81 | 72.16 | 72.45 | 1.09 | 0.44 | 0.74 | 65.27 | 66.83 | 66.28 | 65.94 | 1.56 | 1.02 | 0.67 |

| ffnsp | 72.58 | 73.11 | 72.58 | 72.99 | 0.53 | −0.00 | 0.41 | 63.93 | 65.34 | 66.81 | 66.17 | 1.41 | 2.87 | 2.24 |

| knn | 67.10 | 66.88 | 66.88 | 66.88 | −0.22 | −0.21 | −0.21 | 63.24 | 63.02 | 63.02 | 63.04 | −0.23 | −0.22 | −0.21 |

| lda | 67.17 | 67.92 | 68.46 | 68.19 | 0.75 | 1.29 | 1.02 | 63.07 | 63.68 | 64.04 | 63.78 | 0.60 | 0.96 | 0.70 |

| mlp | 69.79 | 68.93 | 69.62 | 68.70 | −0.86 | −0.16 | −1.08 | 63.83 | 64.12 | 59.76 | 64.96 | 0.29 | −4.07 | 1.13 |

| percep | 55.09 | 57.67 | 54.19 | 56.99 | 2.58 | −0.90 | 1.90 | 55.48 | 58.56 | 53.93 | 50.60 | 3.07 | −1.55 | −4.88 |

| qda | 67.32 | 66.90 | 65.74 | 67.08 | −0.42 | −1.58 | −0.23 | 52.41 | 53.98 | 54.02 | 53.63 | 1.57 | 1.62 | 1.22 |

| rf | 81.68 | 81.33 | 81.02 | 81.30 | −0.35 | −0.66 | −0.38 | 75.63 | 75.32 | 75.09 | 75.38 | −0.31 | −0.54 | −0.24 |

| ridge | 66.85 | 67.47 | 67.97 | 67.74 | 0.62 | 1.12 | 0.89 | 62.94 | 63.39 | 63.68 | 63.49 | 0.45 | 0.73 | 0.55 |

| xgb | 80.72 | 80.96 | 80.97 | 80.95 | 0.24 | 0.25 | 0.24 | 74.81 | 74.97 | 74.89 | 74.86 | 0.16 | 0.08 | 0.04 |

| xnn | 50.47 | 50.25 | 50.48 | 50.59 | −0.23 | 0.00 | 0.11 | 57.50 | 53.55 | 59.22 | 54.37 | −3.95 | 1.71 | −3.14 |

| 3. D80 | ||||||||||||||

| cnn | 72.68 | 73.18 | 73.79 | 73.50 | 0.50 | 1.11 | 0.82 | 66.38 | 70.28 | 70.65 | 70.44 | 3.90 | 4.26 | 4.06 |

| ebm | 80.73 | 81.03 | 81.16 | 81.05 | 0.29 | 0.43 | 0.31 | 74.92 | 75.16 | 75.29 | 75.19 | 0.24 | 0.37 | 0.27 |

| ffn | 71.76 | 72.16 | 72.38 | 72.83 | 0.41 | 0.62 | 1.07 | 63.24 | 66.92 | 66.19 | 67.18 | 3.68 | 2.95 | 3.94 |

| ffnlp | 71.14 | 72.00 | 71.66 | 71.97 | 0.86 | 0.51 | 0.83 | 65.73 | 66.90 | 66.45 | 65.44 | 1.16 | 0.72 | −0.29 |

| ffnsp | 70.87 | 72.03 | 72.29 | 72.13 | 1.15 | 1.42 | 1.25 | 64.07 | 65.62 | 66.69 | 65.65 | 1.54 | 2.61 | 1.58 |

| knn | 66.57 | 66.57 | 66.57 | 66.57 | −0.01 | 0.00 | 0.00 | 62.98 | 62.95 | 62.95 | 62.96 | −0.02 | −0.03 | −0.01 |

| lda | 67.33 | 67.90 | 68.29 | 68.07 | 0.57 | 0.96 | 0.74 | 63.45 | 63.88 | 64.20 | 64.02 | 0.43 | 0.75 | 0.57 |

| mlp | 67.96 | 68.85 | 68.61 | 69.92 | 0.89 | 0.66 | 1.96 | 58.09 | 63.16 | 62.43 | 60.73 | 5.07 | 4.34 | 2.64 |

| percep | 57.44 | 55.39 | 59.27 | 56.66 | −2.05 | 1.83 | −0.78 | 52.88 | 57.22 | 50.16 | 56.66 | 4.35 | −2.71 | 3.78 |

| qda | 67.69 | 66.51 | 64.11 | 66.30 | −1.18 | −3.58 | −1.39 | 52.76 | 54.28 | 52.68 | 53.73 | 1.52 | −0.08 | 0.97 |

| rf | 81.18 | 81.03 | 80.68 | 80.96 | −0.15 | −0.51 | −0.22 | 75.47 | 75.57 | 75.26 | 75.52 | 0.11 | −0.20 | 0.05 |

| ridge | 66.67 | 67.23 | 67.59 | 67.40 | 0.56 | 0.92 | 0.73 | 63.06 | 63.35 | 63.73 | 63.55 | 0.29 | 0.67 | 0.49 |