Robust Medical Image Colorization with Spatial Mask-Guided Generative Adversarial Network

Abstract

1. Introduction

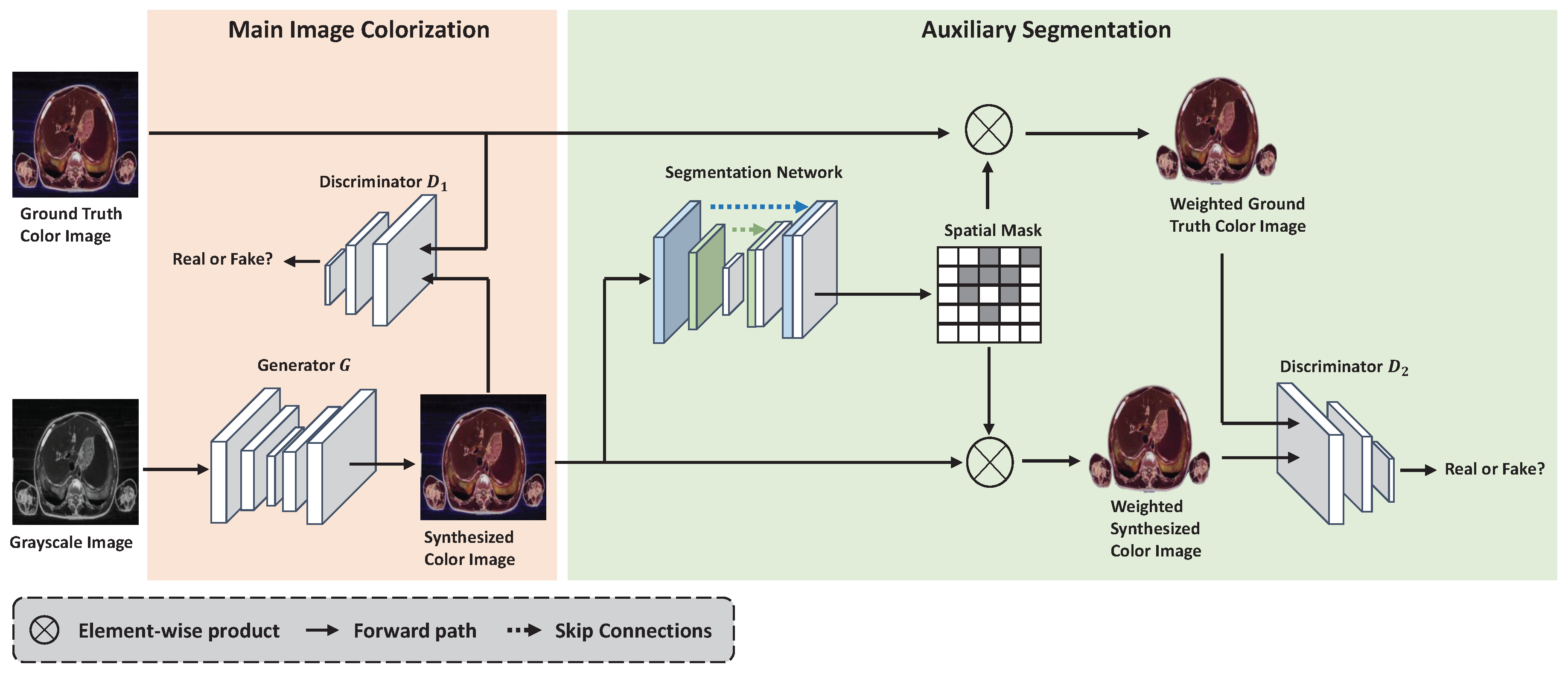

- We demonstrate that a foreground-aware module, built from a spatial mask embedded in a GAN-based colorization framework, makes the model emphasize foreground regions during colorization.

- We devise a novel adversarial loss function that helps the colorization model focus on foreground regions, which reduces visual artifacts and improves colorization performance.
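The idea of restricting a loss to foreground pixels can be illustrated with a small NumPy sketch. This is not the loss used by SMCGAN, whose definition is not reproduced in this section; it only shows how a binary spatial mask can make background errors contribute nothing to a pixel-wise term. The helper names `foreground_mask` and `masked_l1`, the thresholding rule, and the tolerance value are all assumptions made for illustration.

```python
import numpy as np

def foreground_mask(gray, background_value, tol=0.02):
    """Hypothetical helper: mark pixels that differ from a flat background."""
    return (np.abs(gray - background_value) > tol).astype(np.float32)

def masked_l1(pred, target, mask, eps=1e-8):
    """Mean absolute error computed over foreground pixels only."""
    # mask has shape (H, W); add a channel axis so it broadcasts over (H, W, C).
    weights = mask[..., None]
    abs_err = np.abs(pred - target) * weights
    # Normalize by the number of foreground values (pixels times channels).
    return float(abs_err.sum() / (weights.sum() * pred.shape[-1] + eps))

# Toy grayscale input: black background with a 2x2 foreground patch.
gray = np.zeros((4, 4), dtype=np.float32)
gray[1:3, 1:3] = 0.5
mask = foreground_mask(gray, background_value=0.0)

target = np.zeros((4, 4, 3), dtype=np.float32)
pred = target.copy()
pred[0, 0] = 1.0  # large error in the background, ignored by the mask
pred[1, 1] = 0.3  # small error in the foreground, counted

loss = masked_l1(pred, target, mask)
```

In this toy case only the foreground error at pixel (1, 1) enters the loss, so a model trained on such a term has no incentive to spend capacity on the flat background.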

2. Related Work

3. Methodology

3.1. SMCGAN Architecture

3.2. Loss Functions

4. Experiments and Analysis

4.1. Experimental Settings

4.2. Implementation Details

4.3. Experimental Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Methods | MAE ↓ (Black Background) | PSNR ↑ (Black Background) | SSIM ↑ (Black Background) | MAE ↓ (White Background) | PSNR ↑ (White Background) | SSIM ↑ (White Background) | MAE ↓ (Average) | PSNR ↑ (Average) | SSIM ↑ (Average) | p-Value |
|---|---|---|---|---|---|---|---|---|---|---|
| GAN | 0.080 | 13.58 | 0.886 | 0.074 | 14.06 | 0.896 | 0.077 | 13.82 | 0.891 | |
| DCGAN | 0.040 | 13.55 | 0.706 | 0.031 | 11.21 | 0.835 | 0.035 | 12.38 | 0.771 | |
| ChromaGAN | 0.029 | 26.50 | 0.964 | 0.026 | 24.56 | 0.989 | 0.028 | 25.53 | 0.976 | |
| WGAN | 0.014 | 26.46 | 0.989 | 0.024 | 23.94 | 0.988 | 0.019 | 25.20 | 0.988 | |
| WGAN-GP | 0.021 | 24.13 | 0.985 | 0.024 | 23.55 | 0.987 | 0.022 | 23.84 | 0.986 | |
| CycleGAN | 0.014 | 26.21 | 0.989 | 0.025 | 23.66 | 0.986 | 0.019 | 24.94 | 0.988 | |
| Ours | 0.019 | 27.42 | 0.983 | 0.015 | 27.97 | 0.995 | 0.017 | 27.70 | 0.989 | - |
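For readers who want to reproduce numbers of this kind, the sketch below shows the standard definitions of two of the reported metrics, MAE and PSNR. It assumes images are floating-point arrays scaled to [0, 1]; the evaluation protocol actually used for the table (value range, color space, per-image versus per-dataset averaging) is not stated in this section, so the `max_value` default and the function names are assumptions. SSIM is omitted here because it needs a windowed implementation.

```python
import numpy as np

def mae(pred, target):
    """Mean absolute error between two images of the same shape."""
    return float(np.mean(np.abs(pred - target)))

def psnr(pred, target, max_value=1.0):
    """Peak signal-to-noise ratio in dB: 10 * log10(MAX^2 / MSE)."""
    mse = float(np.mean((pred - target) ** 2))
    if mse == 0.0:
        return float("inf")  # identical images
    return float(10.0 * np.log10(max_value ** 2 / mse))

# Toy example: a prediction that is uniformly 0.1 too bright.
target = np.full((8, 8, 3), 0.5)
pred = target + 0.1

example_mae = mae(pred, target)
example_psnr = psnr(pred, target)
```

A uniform offset of 0.1 gives an MAE of 0.1 and an MSE of 0.01, so the PSNR is 20 dB under the [0, 1] assumption. Lower MAE and higher PSNR both indicate a closer match to the reference image, which is what the arrows in the table header denote.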

| Methods | MAE ↓ (Black Background) | PSNR ↑ (Black Background) | SSIM ↑ (Black Background) | MAE ↓ (White Background) | PSNR ↑ (White Background) | SSIM ↑ (White Background) | MAE ↓ (Average) | PSNR ↑ (Average) | SSIM ↑ (Average) |
|---|---|---|---|---|---|---|---|---|---|
| Ours | 0.025 | 21.31 | 0.972 | 0.042 | 19.34 | 0.970 | 0.033 | 20.33 | 0.971 |
| Ours | 0.019 | 27.42 | 0.983 | 0.015 | 27.97 | 0.995 | 0.017 | 27.70 | 0.989 |

| Methods | Black Background | White Background | Average | ||||||

|---|---|---|---|---|---|---|---|---|---|

| MAE ↓ | PSNR ↑ | SSIM ↑ | MAE ↓ | PSNR ↑ | SSIM ↑ | MAE ↓ | PSNR ↑ | SSIM ↑ | |

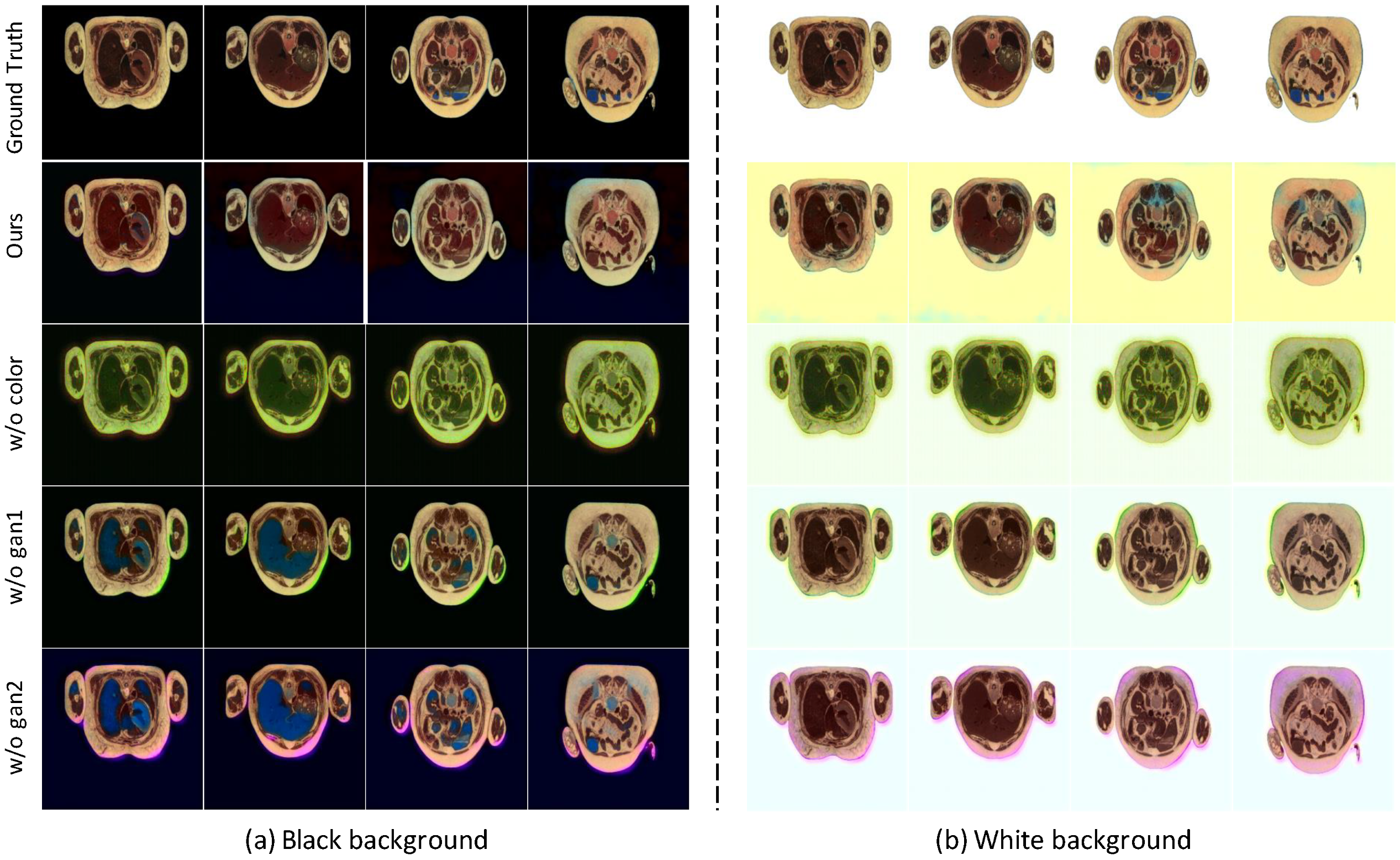

| w/o color | 0.040 | 20.59 | 0.947 | 0.049 | 19.28 | 0.966 | 0.045 | 19.94 | 0.957 |

| w/o gan1 | 0.015 | 27.88 | 0.987 | 0.022 | 25.37 | 0.994 | 0.019 | 26.63 | 0.991 |

| w/o gan2 | 0.049 | 20.97 | 0.901 | 0.028 | 23.50 | 0.991 | 0.039 | 22.24 | 0.946 |

| Ours | 0.019 | 27.42 | 0.983 | 0.015 | 27.97 | 0.995 | 0.017 | 27.70 | 0.989 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhang, Z.; Li, Y.; Shin, B.-S. Robust Medical Image Colorization with Spatial Mask-Guided Generative Adversarial Network. Bioengineering 2022, 9, 721. https://doi.org/10.3390/bioengineering9120721
