Hand Exoskeleton Design and Human–Machine Interaction Strategies for Rehabilitation

Abstract

1. Introduction

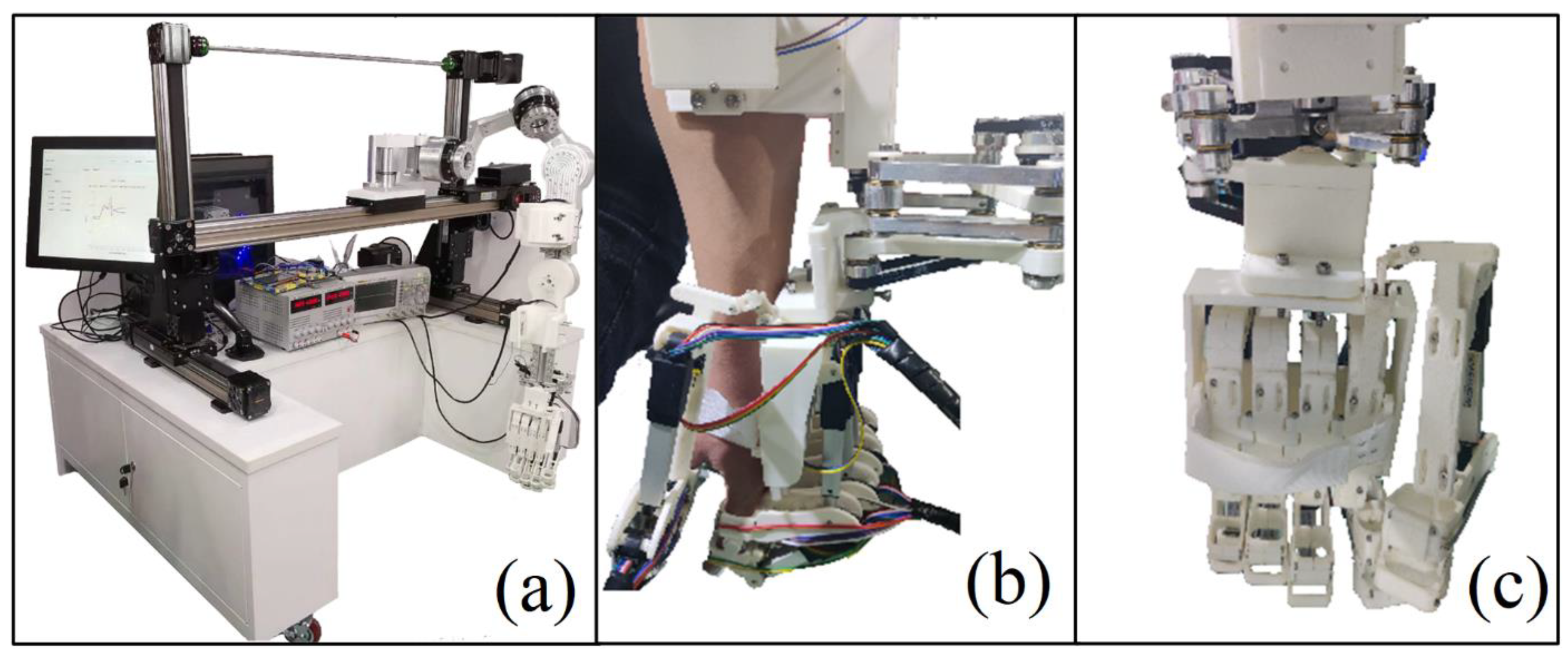

2. Design of Hand Exoskeleton

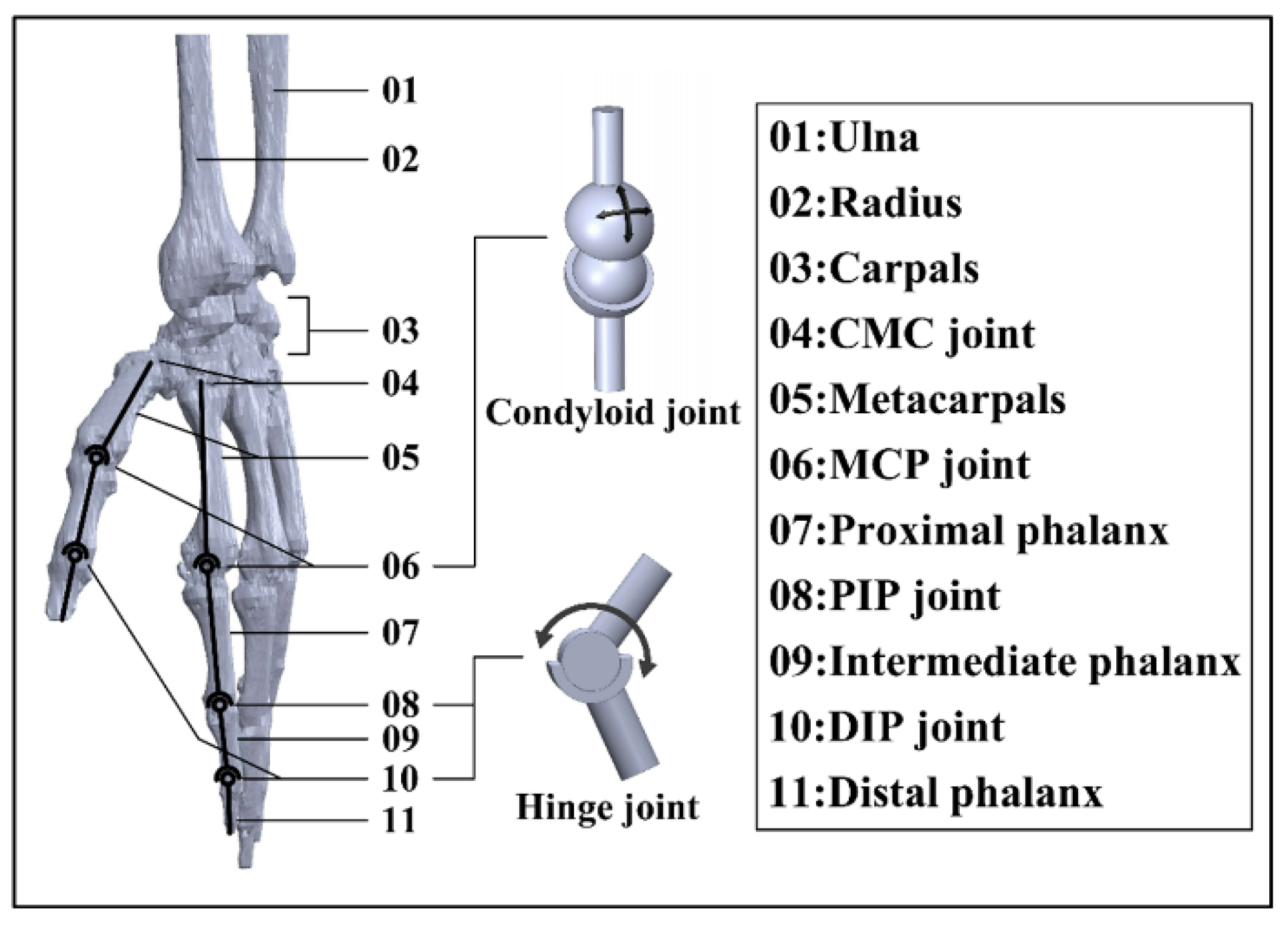

2.1. Hand Skeleton Model Construction

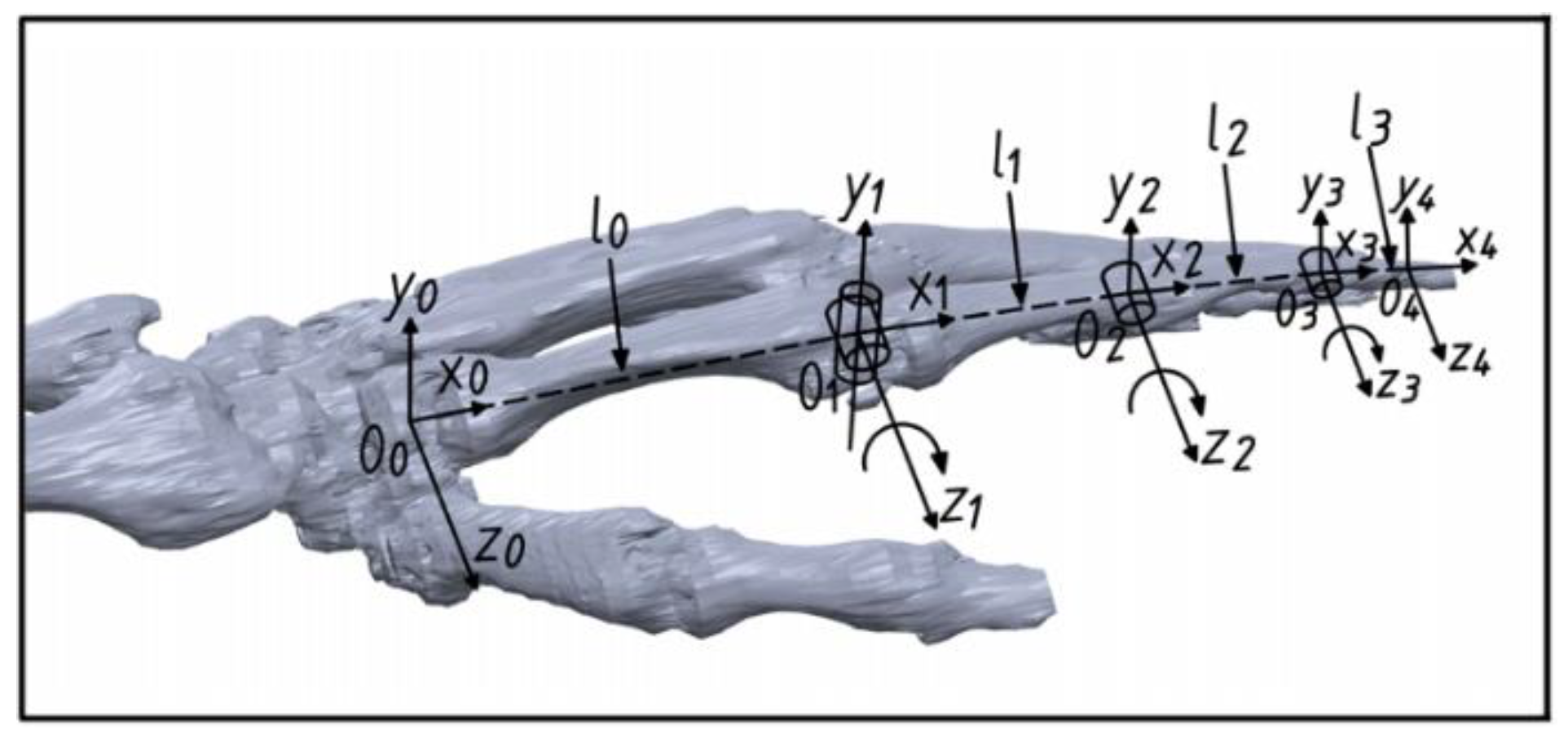

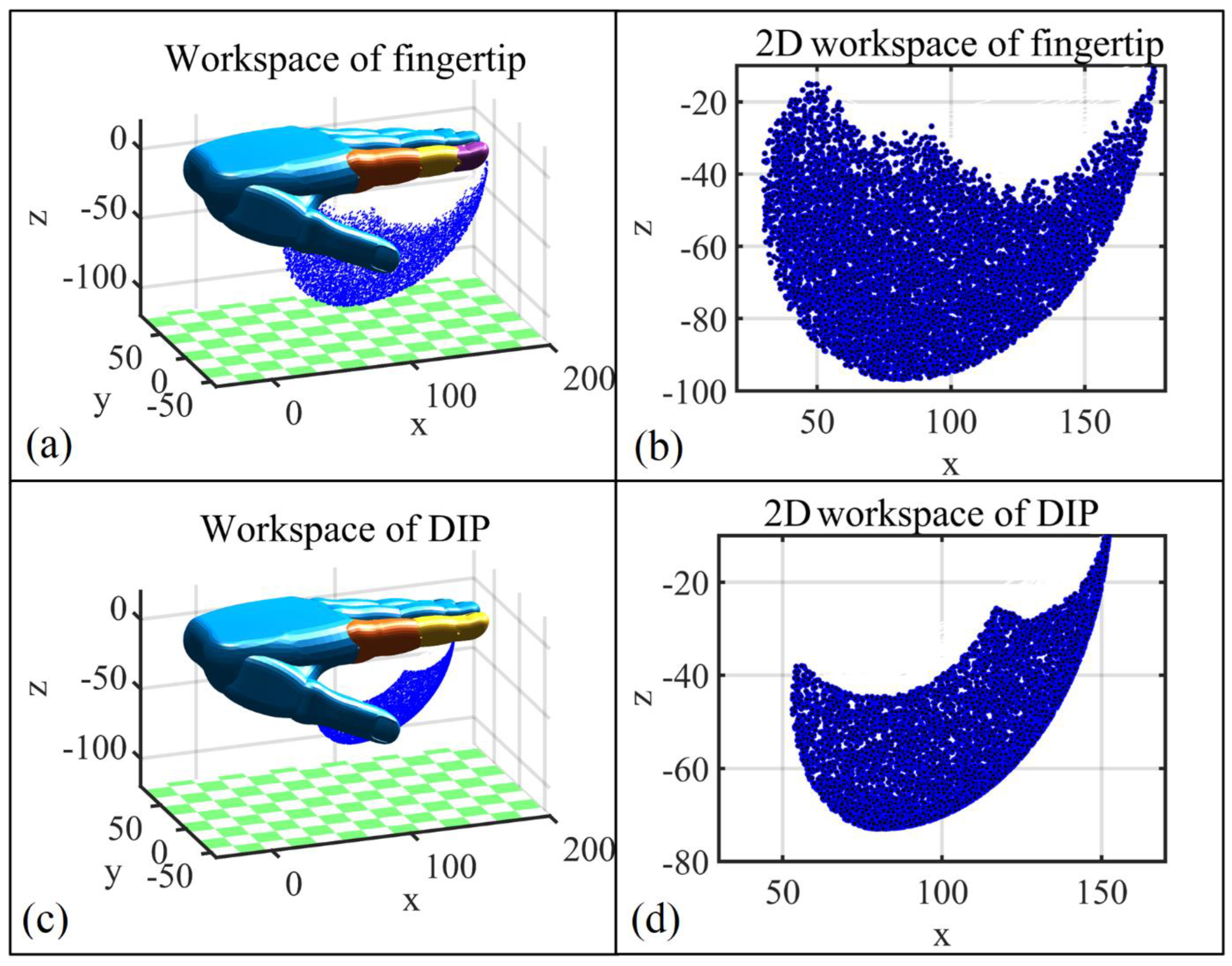

2.2. Finger Kinematics

2.3. Design of the Exoskeleton Structure

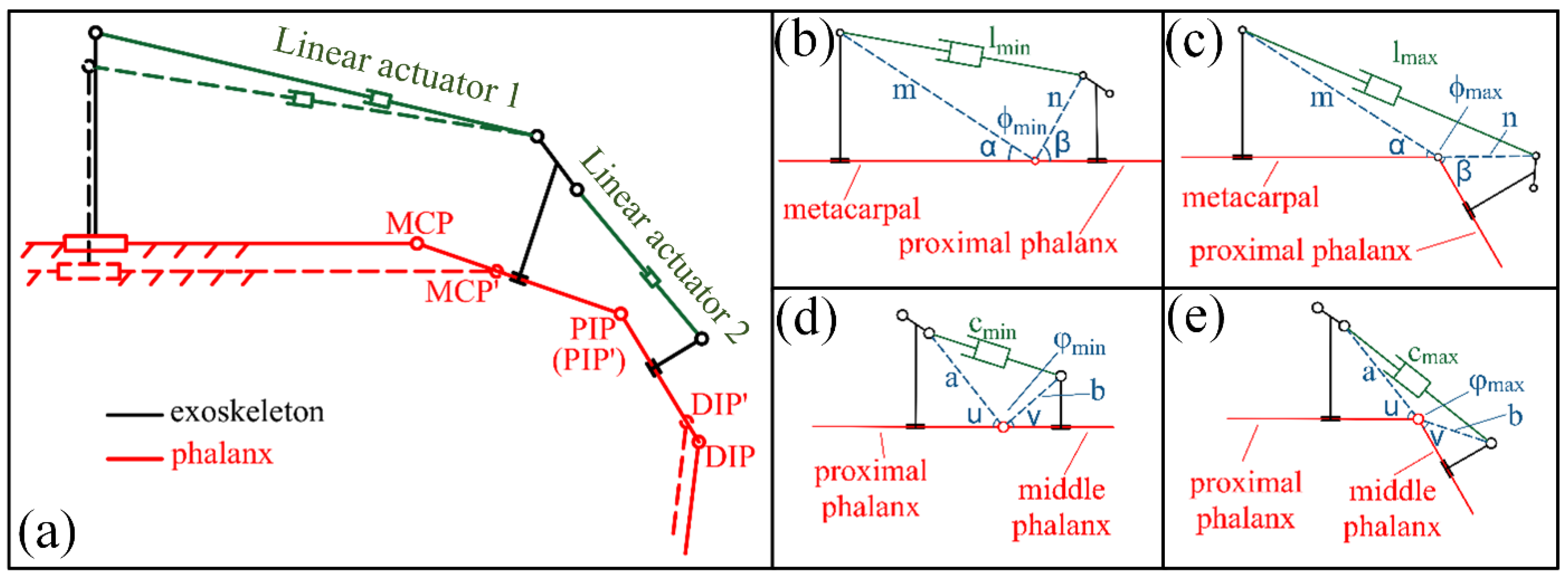

2.3.1. Structure Analysis

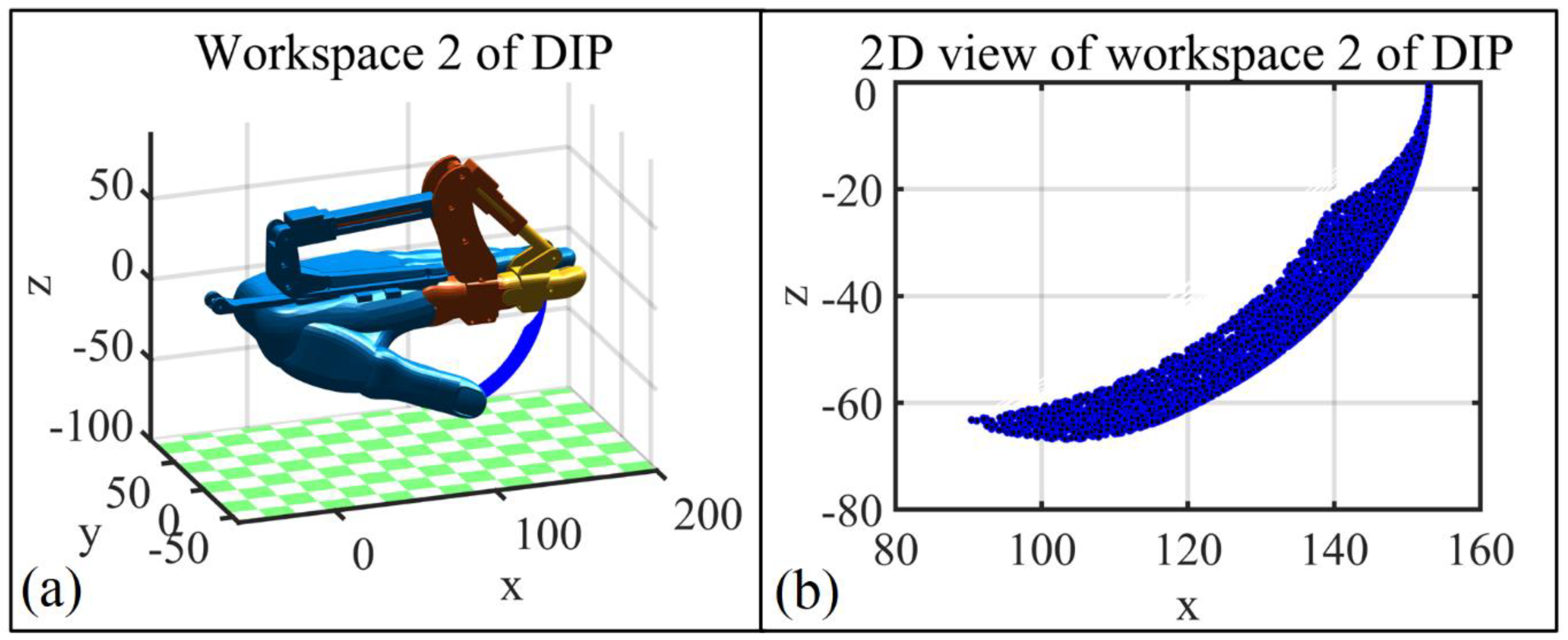

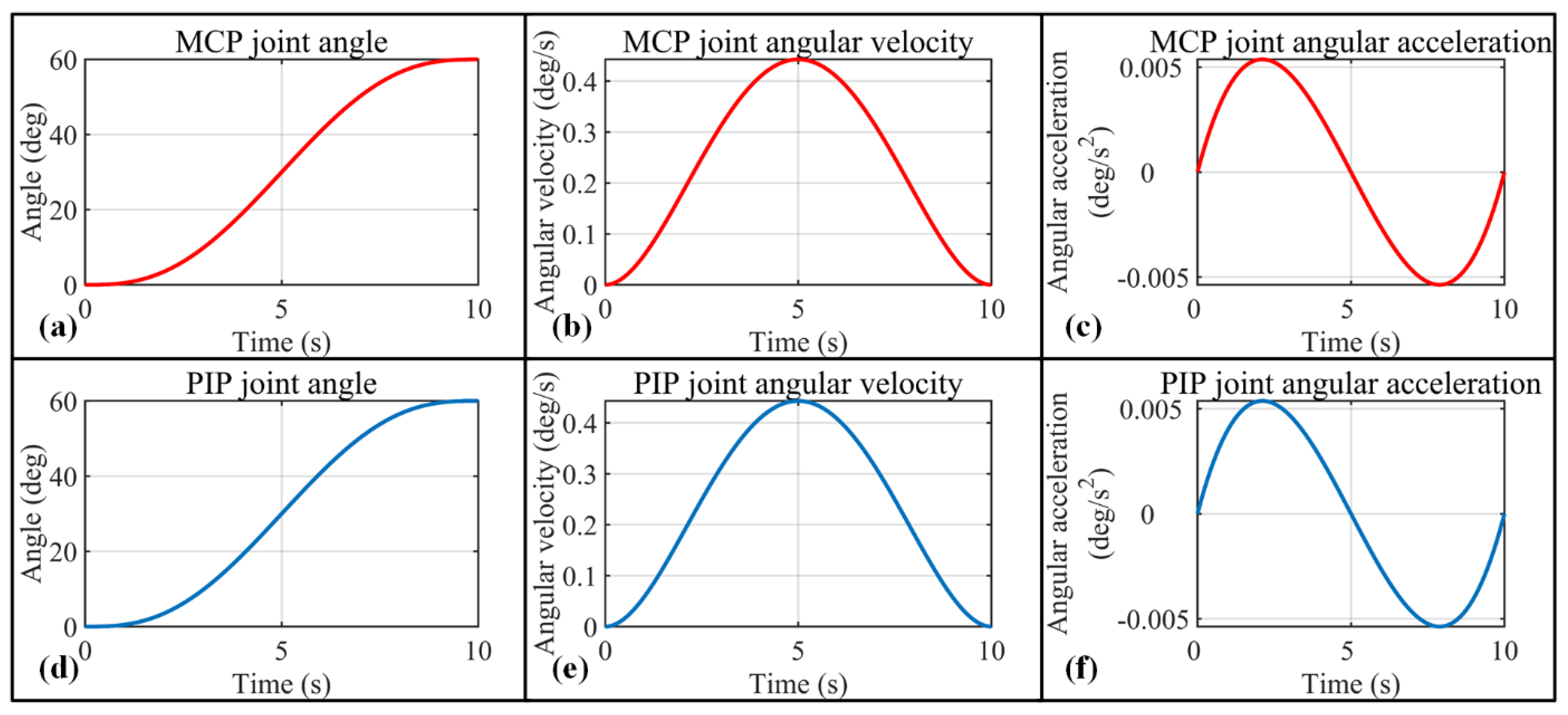

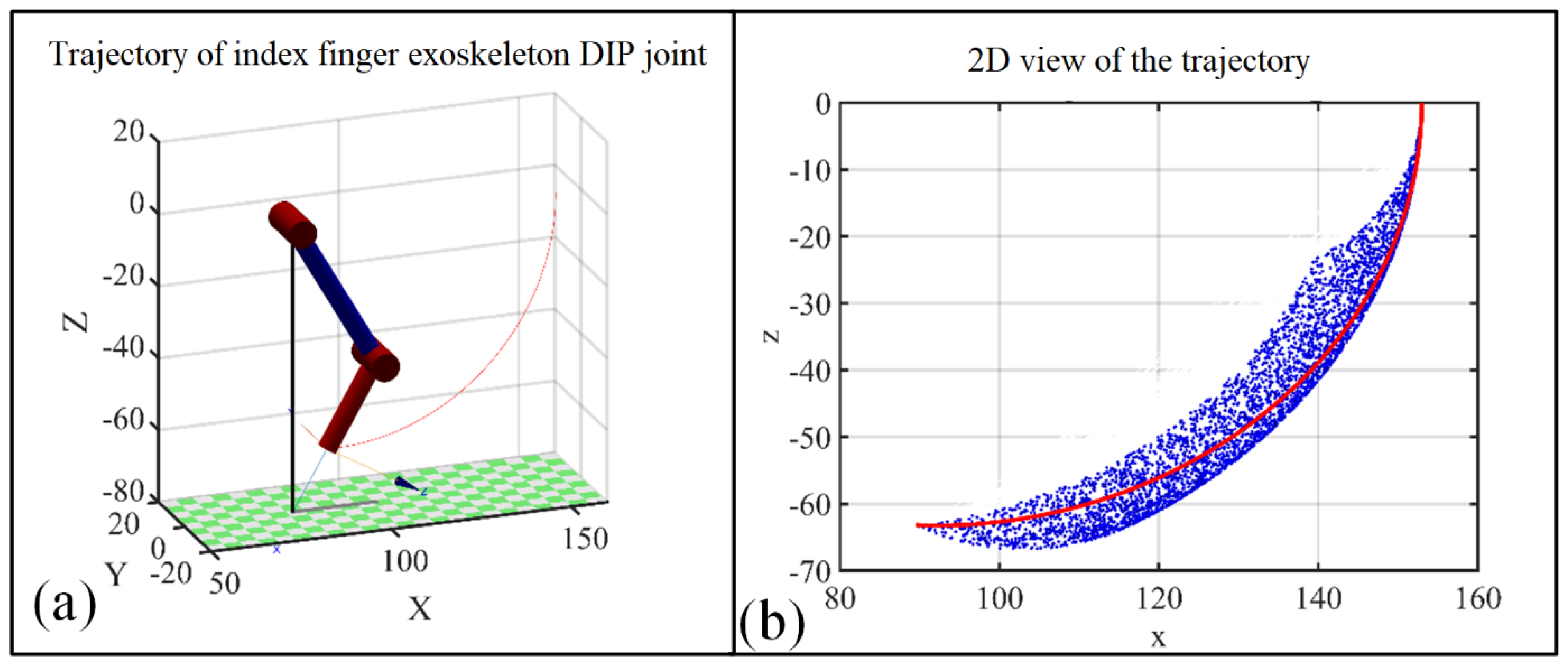

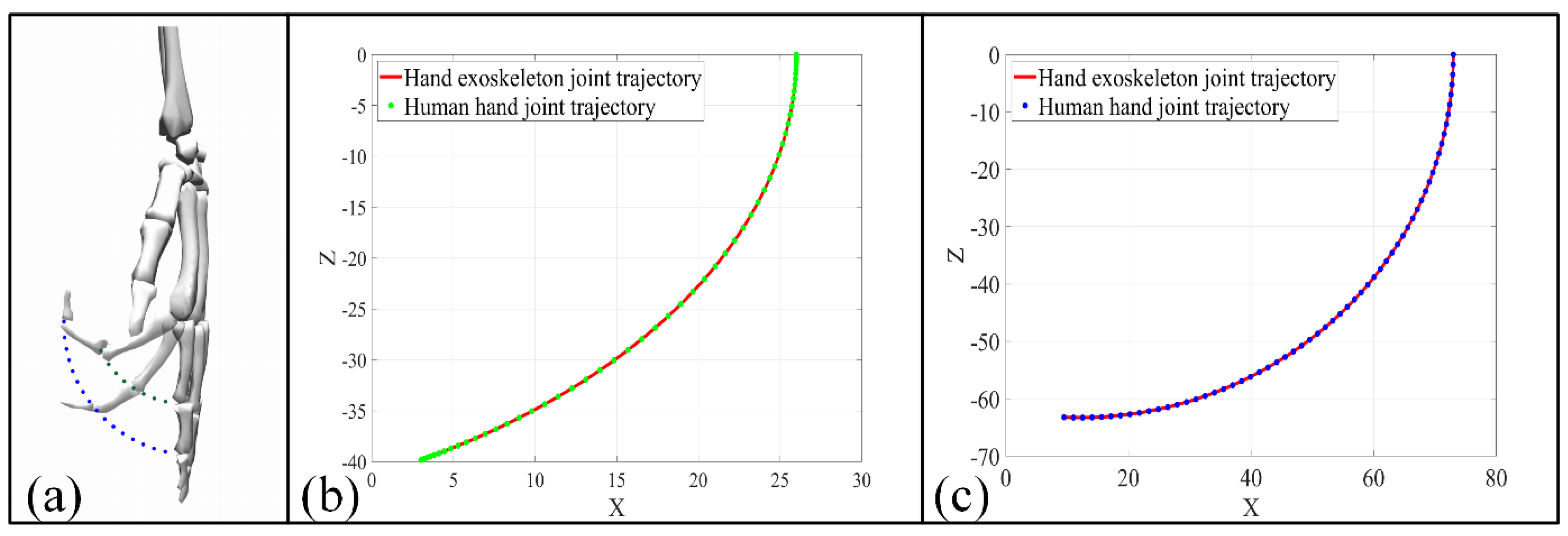

2.3.2. Kinematic Analysis

3. Hand Exoskeleton HMI Strategies

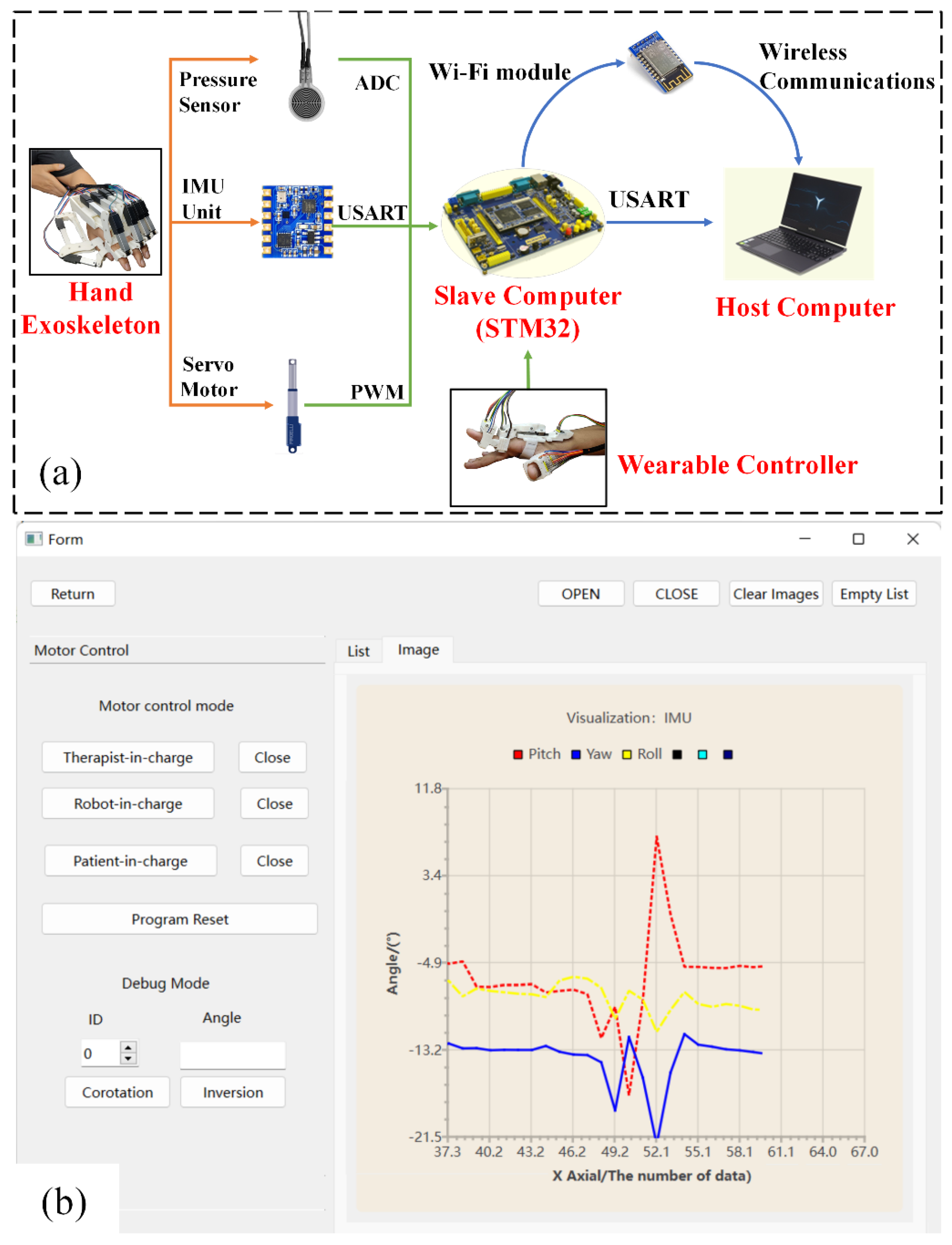

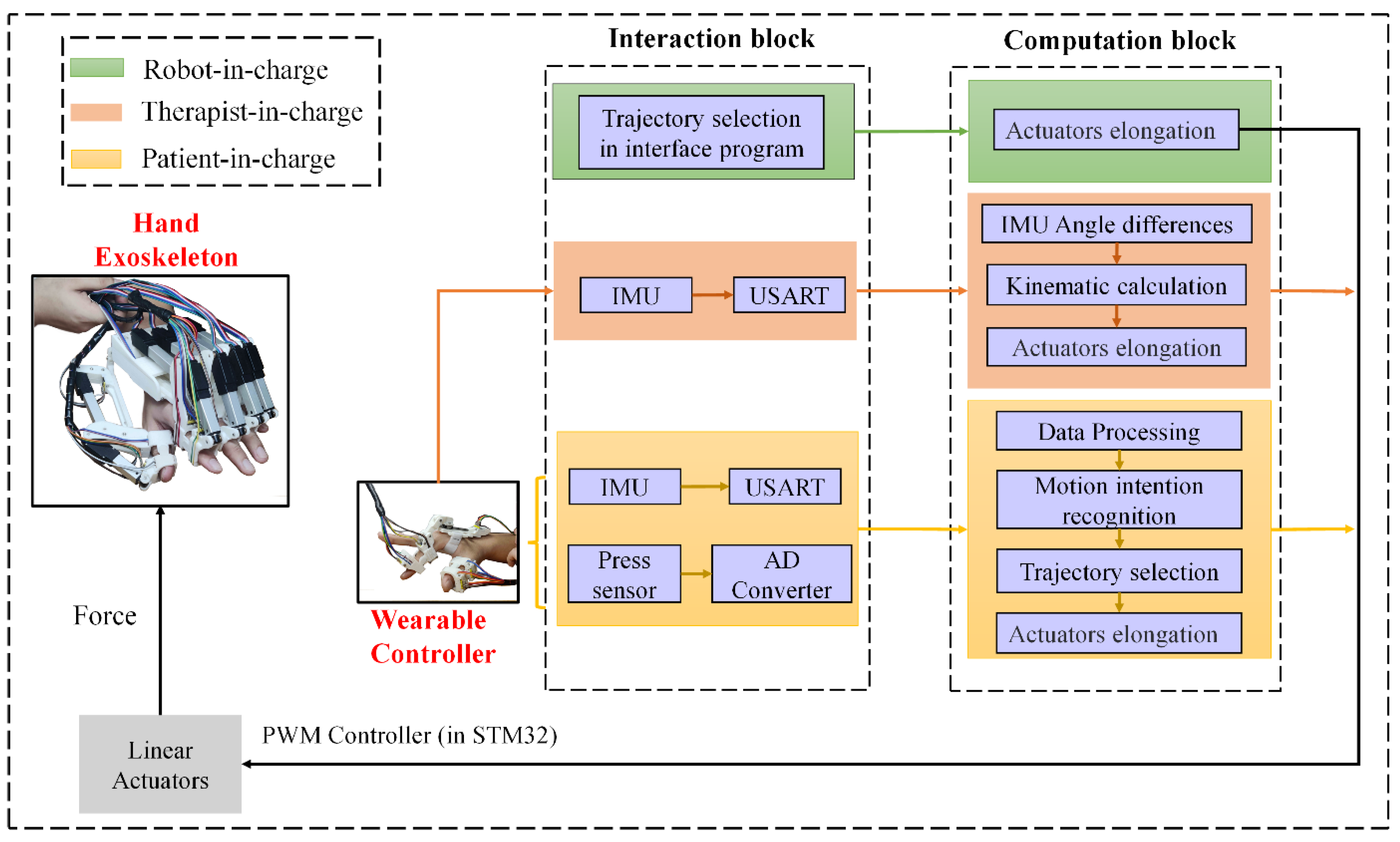

3.1. Hand Exoskeleton System Overview

3.2. Control Modes for Rehabilitation and Daily Life Activity Assistance

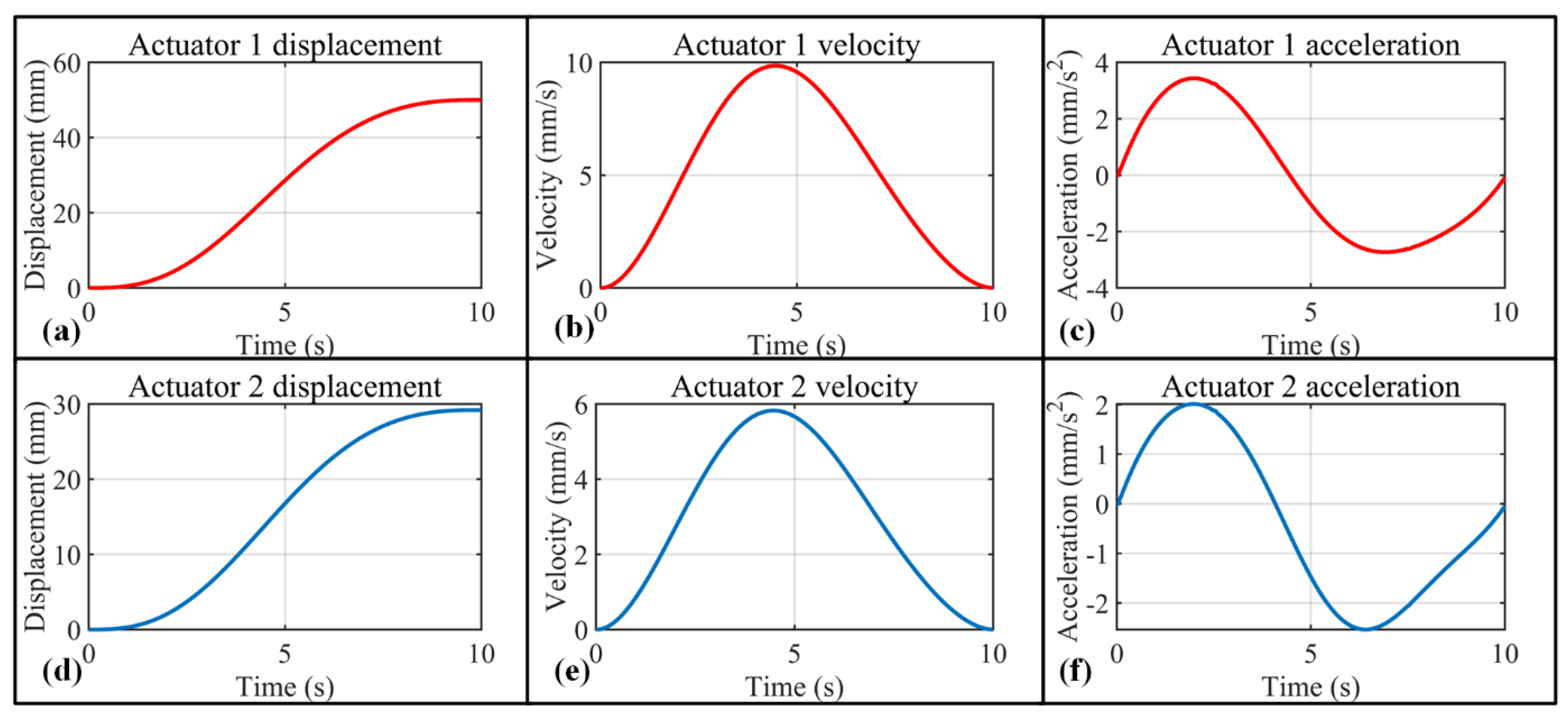

3.2.1. Robot-in-Charge Rehabilitation Mode

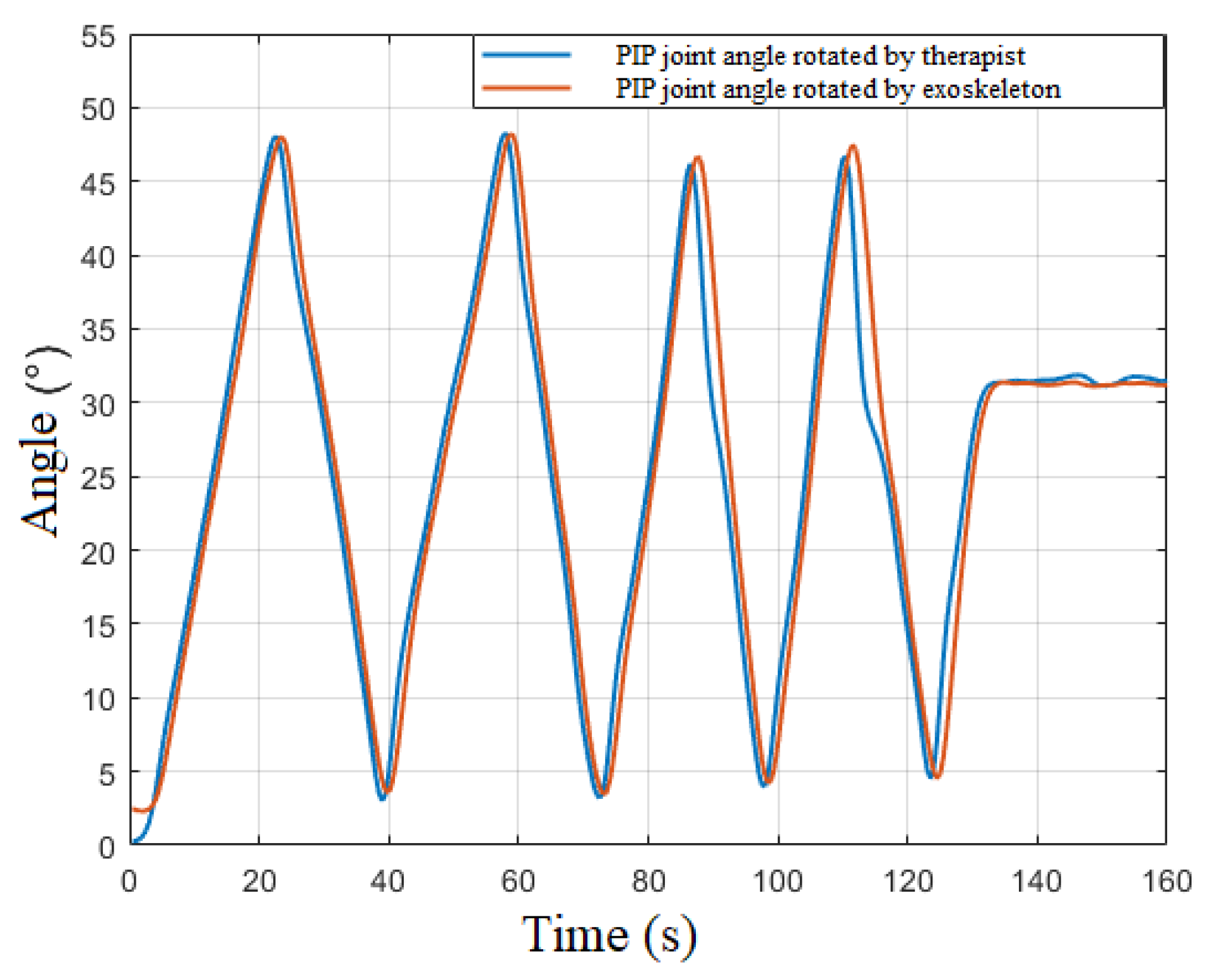

3.2.2. Therapist-in-Charge Rehabilitation Mode

3.2.3. Patient-in-Charge Rehabilitation Mode

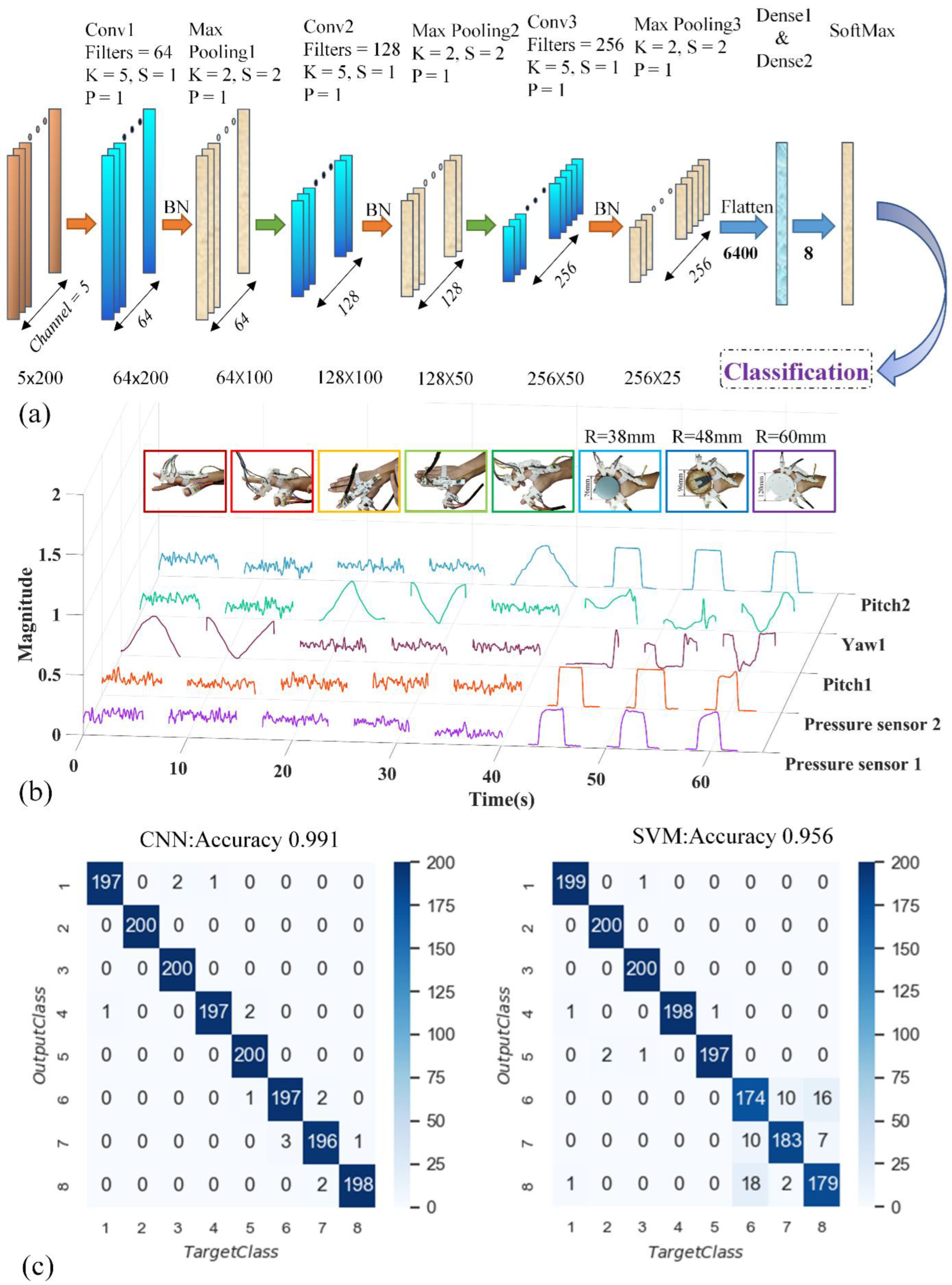

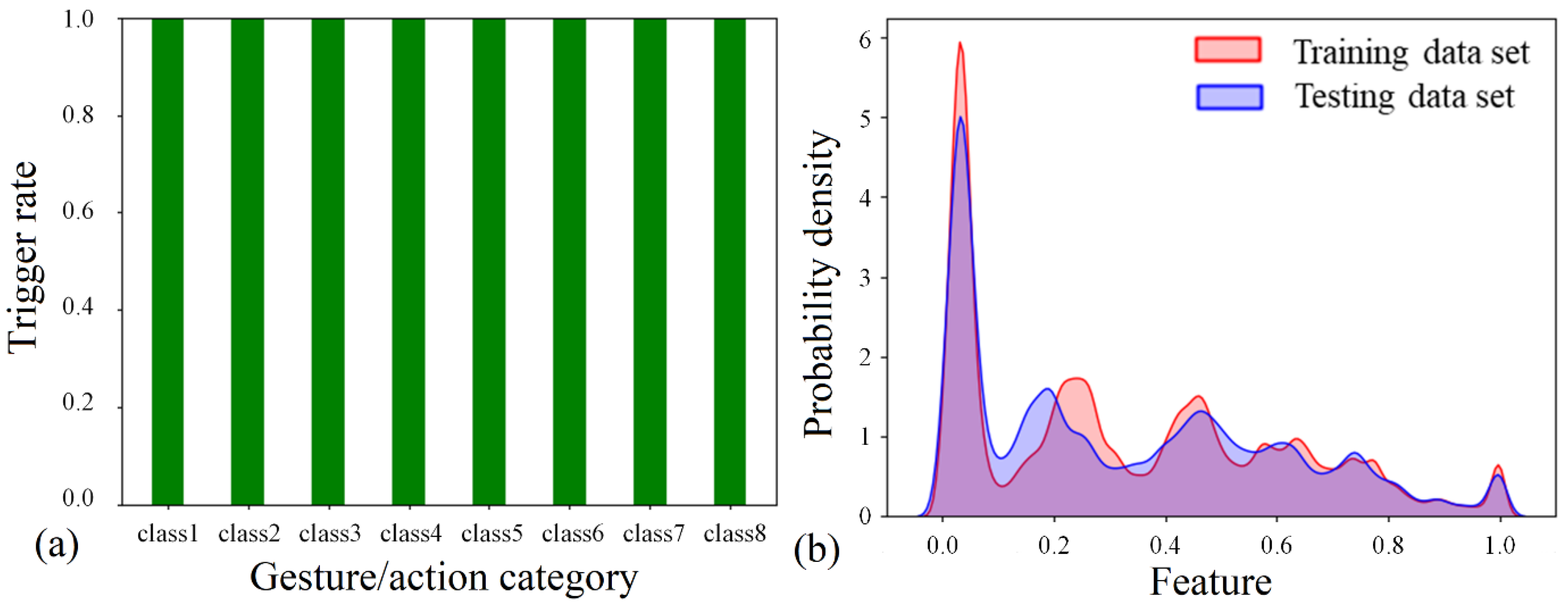

Data Acquisition and Processing

Intention-Recognition Model and Results

4. Discussion

4.1. Mechanical Design of the Exoskeleton

4.2. Intention Detection

4.3. Intention Recognition

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| | Thumb | Index Finger | Middle Finger | Ring Finger | Little Finger |
|---|---|---|---|---|---|
| Proximal phalanx | 36 | 46 | 47 | 46 | 39 |
| Middle phalanx | — | 27 | 28 | 27 | 24 |
| Distal phalanx | 31 | 24 | 25 | 24 | 22 |
| Metacarpal | 43 | 63 | 61 | 55 | 51 |

| | | | | |
|---|---|---|---|---|
| i = 0 | 80 | 0 | 0 | |
| i = 1 | 46 | 0 | 0 | |
| i = 2 | 27 | 0 | 0 | |
| i = 3 | 24 | 0 | 0 | |
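The values 46, 27 and 24 in the rows i = 1 to i = 3 match the index-finger proximal, middle and distal phalanx lengths in the phalanx table. As a minimal sketch of how such segment lengths feed a finger kinematics calculation, the following treats the index finger as a planar three-link chain. The planar simplification, the function name `fingertip_position`, the choice of origin at the first joint, and the sign convention for the joint angles are all assumptions made for illustration; they are not taken from the article, and the i = 0 row is not used.

```python
import math

# Index-finger segment lengths (proximal, middle, distal) as listed in the table.
# Units are whatever the table uses; the article text here does not state them.
INDEX_LENGTHS = (46.0, 27.0, 24.0)


def fingertip_position(joint_angles_deg, lengths=INDEX_LENGTHS):
    """Planar forward kinematics of a serial chain (illustrative only).

    joint_angles_deg: relative rotation of each segment with respect to the
    previous one, in degrees (hypothetical convention: positive = flexion).
    Returns the (x, y) position of the chain tip, with the first joint at
    the origin and the fully extended finger lying along +x.
    """
    x = y = 0.0
    cumulative = 0.0  # absolute orientation of the current segment
    for angle_deg, length in zip(joint_angles_deg, lengths):
        cumulative += math.radians(angle_deg)
        x += length * math.cos(cumulative)
        y += length * math.sin(cumulative)
    return x, y


if __name__ == "__main__":
    # Fully extended finger: the tip lies at the sum of the segment lengths.
    print(fingertip_position((0.0, 0.0, 0.0)))
    # An arbitrary flexed posture (angles chosen only for illustration).
    print(fingertip_position((30.0, 45.0, 20.0)))
```

With all joint angles at zero the tip sits at the summed segment length along the x axis, which is a quick sanity check on any more complete spatial model.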

| Run number | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | Average | Variance |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| CNN accuracy | 1.0 | 0.991 | 1.0 | 0.922 | 0.951 | 0.958 | 1.0 | 0.951 | 0.973 | 0.964 | 0.971 | 0.0276 |
| SVM accuracy | 0.931 | 0.956 | 0.981 | 0.961 | 0.882 | 0.411 | 0.979 | 0.949 | 0.921 | 0.871 | 0.884 | 0.162 |
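The averages reported for the two classifiers can be recomputed directly from the ten per-run accuracies listed above. The short sketch below does only that arithmetic; the helper name `summarize` is hypothetical, and it reports the mean and range rather than a dispersion figure because the exact definition behind the "Variance" row is not stated here.

```python
from statistics import mean

# Per-run accuracies as listed in the table (runs 1 to 10).
CNN_ACCURACY = [1.0, 0.991, 1.0, 0.922, 0.951, 0.958, 1.0, 0.951, 0.973, 0.964]
SVM_ACCURACY = [0.931, 0.956, 0.981, 0.961, 0.882, 0.411, 0.979, 0.949, 0.921, 0.871]


def summarize(accuracies):
    """Return the mean, minimum and maximum of a list of run accuracies."""
    return {
        "mean": mean(accuracies),
        "min": min(accuracies),
        "max": max(accuracies),
    }


if __name__ == "__main__":
    for name, runs in (("CNN", CNN_ACCURACY), ("SVM", SVM_ACCURACY)):
        stats = summarize(runs)
        print(f"{name}: mean={stats['mean']:.3f} "
              f"min={stats['min']:.3f} max={stats['max']:.3f}")
```

The CNN mean comes out at about 0.971 (97.1%) and the SVM mean at about 0.884, in line with the table. The SVM minimum of 0.411 in run 6 is the single value that pulls its average down most.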

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xia, K.; Chen, X.; Chang, X.; Liu, C.; Guo, L.; Xu, X.; Lv, F.; Wang, Y.; Sun, H.; Zhou, J. Hand Exoskeleton Design and Human–Machine Interaction Strategies for Rehabilitation. Bioengineering 2022, 9, 682. https://doi.org/10.3390/bioengineering9110682
