SelfCoLearn: Self-Supervised Collaborative Learning for Accelerating Dynamic MR Imaging

Abstract

1. Introduction

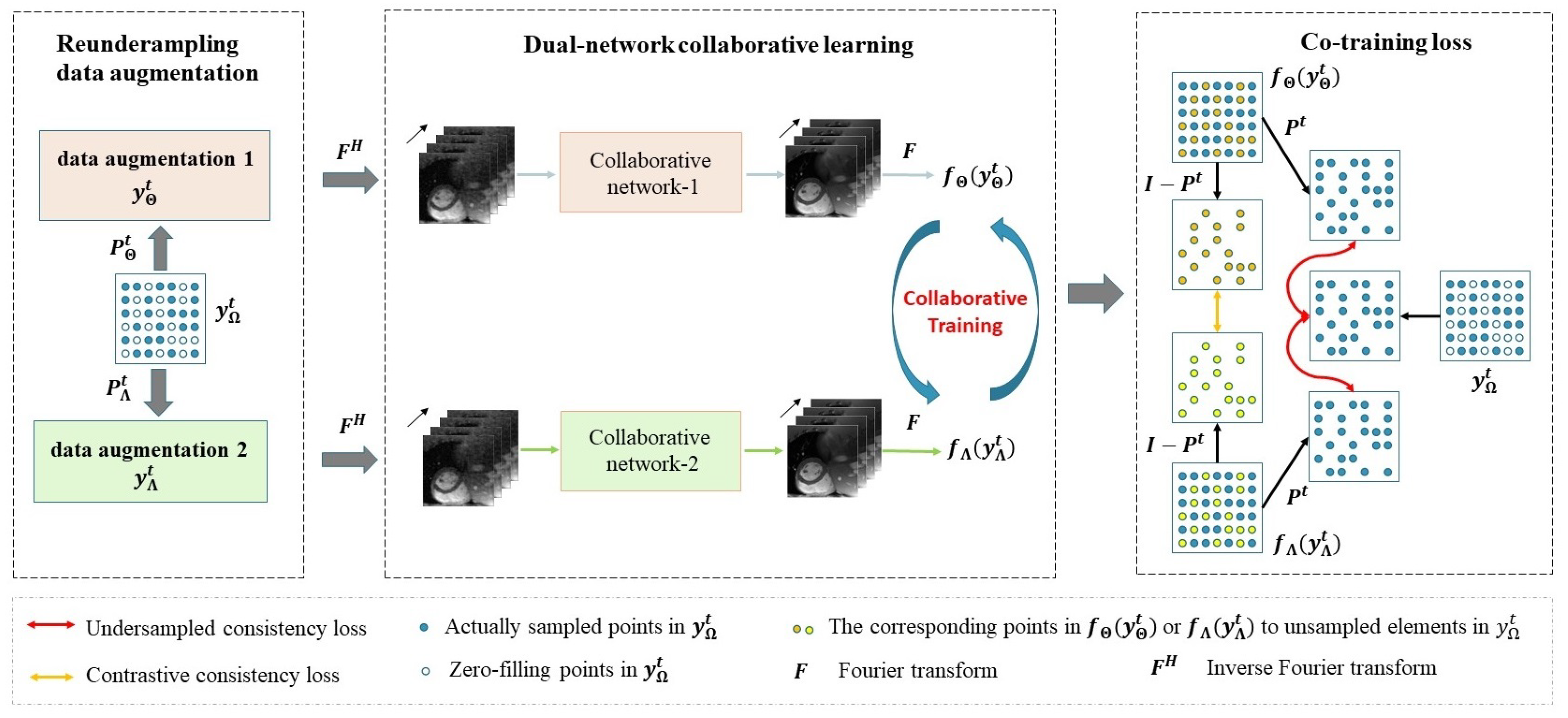

- We present a self-supervised collaborative learning framework with reundersampling data augmentation for accelerating dynamic MR imaging. The proposed framework is flexible and can be integrated with various model-based iterative unrolled networks;

- A co-training loss, comprising an undersampled consistency loss term and a contrastive consistency loss term, is designed to guide the end-to-end framework to capture essential and inherent representations from undersampled k-space data;

- Extensive experiments are conducted to evaluate the effectiveness of the proposed SelfCoLearn with different model-based iterative unrolled networks. SelfCoLearn obtains better results than the compared self-supervised methods.
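
To make the second contribution more concrete, the following is a minimal sketch of how a two-term co-training loss could be computed for two parallel reconstructions. It is an illustration under stated assumptions, not the authors' implementation: the function names `fft2c` and `co_training_loss`, the squared-error form of both terms, the choice to compare the two outputs only at unsampled k-space locations, and the `weight` parameter are all hypothetical.

```python
import numpy as np

def fft2c(x):
    """Orthonormal 2D FFT over the last two axes (image -> k-space)."""
    return np.fft.fft2(x, axes=(-2, -1), norm="ortho")

def co_training_loss(recon_a, recon_b, k_under, mask, weight=1.0):
    """Hypothetical two-term loss for two parallel reconstructions.

    recon_a, recon_b : complex arrays (t, ny, nx), outputs of the two networks
    k_under          : measured undersampled k-space, zeros where unsampled
    mask             : boolean array (t, ny, nx), True at acquired locations
    weight           : assumed balance between the two terms
    """
    k_a, k_b = fft2c(recon_a), fft2c(recon_b)
    # Undersampled consistency (assumed form): each prediction should
    # reproduce the samples that were actually measured.
    uc = (np.mean(np.abs(k_a[mask] - k_under[mask]) ** 2)
          + np.mean(np.abs(k_b[mask] - k_under[mask]) ** 2))
    # Contrastive consistency (assumed form): the two predictions should
    # agree at locations where no measurement exists.
    cc = np.mean(np.abs(k_a[~mask] - k_b[~mask]) ** 2)
    return float(uc + weight * cc)
```

Under these assumptions the loss is zero when both reconstructions equal an image whose k-space matches the measurements, and it grows when either network deviates from the measured samples or when the two networks disagree on unmeasured ones. No fully sampled reference enters the computation, which is the property a self-supervised scheme relies on.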

2. Methodology

2.1. Dynamic MR Imaging Formulation

2.2. The Overall Framework

2.3. Network Architectures

2.3.1. Model-Driven Deep Learning with Image-Domain Regularization

2.3.2. Model-Driven Deep Learning with Complementary Regularization

2.3.3. Model-Driven Deep Learning with Low-Rank Regularization

2.4. The Proposed Co-Training Loss

3. Experimental Results

3.1. Experimental Setup

3.1.1. Dataset

3.1.2. Reundersampling K-Space Data Augmentation
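
As a rough illustration of what reundersampling can look like in practice, the sketch below keeps a random subset of the already acquired phase-encoding lines. This is an assumption-laden example rather than the sampling scheme used in the experiments: the function name `reundersample`, the `keep_fraction` and `seed` parameters, and the independent per-line random selection are all hypothetical.

```python
import numpy as np

def reundersample(k_under, mask, keep_fraction=0.8, seed=0):
    """Hypothetical reundersampling of already undersampled k-space.

    k_under       : complex array (t, ny, nx), zeros where unsampled
    mask          : boolean array (t, ny), True for acquired lines per frame
    keep_fraction : assumed probability of keeping each acquired line
    seed          : seed for the random selection
    """
    rng = np.random.default_rng(seed)
    # Keep each acquired line independently with probability keep_fraction;
    # lines that were never acquired stay excluded.
    sub_mask = mask & (rng.random(mask.shape) < keep_fraction)
    # Broadcast the per-line mask along the readout axis.
    return k_under * sub_mask[..., None], sub_mask
```

Calling the function twice with different `seed` values gives two differently masked copies of the same acquisition, which could serve as the two inputs of a parallel-network setup.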

3.1.3. Evaluation Metrics
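
The result tables report PSNR, SSIM, and MSE. For orientation, MSE and PSNR can be sketched as below. The helper names `mse` and `psnr` are hypothetical, and the normalisation is an assumption: this version uses the peak magnitude of the reference as the signal maximum, which may differ from the convention behind the reported numbers.

```python
import numpy as np

def mse(ref, rec):
    """Mean squared error between reference and reconstruction (complex-safe)."""
    return float(np.mean(np.abs(ref - rec) ** 2))

def psnr(ref, rec):
    """PSNR in dB, taking the peak magnitude of the reference as signal maximum."""
    peak = np.max(np.abs(ref))
    return float(10.0 * np.log10(peak ** 2 / mse(ref, rec)))
```

SSIM is more involved. If scikit-image is available, `skimage.metrics.structural_similarity` provides an implementation that can be applied to magnitude images.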

3.1.4. Model Configuration and Implementation Details

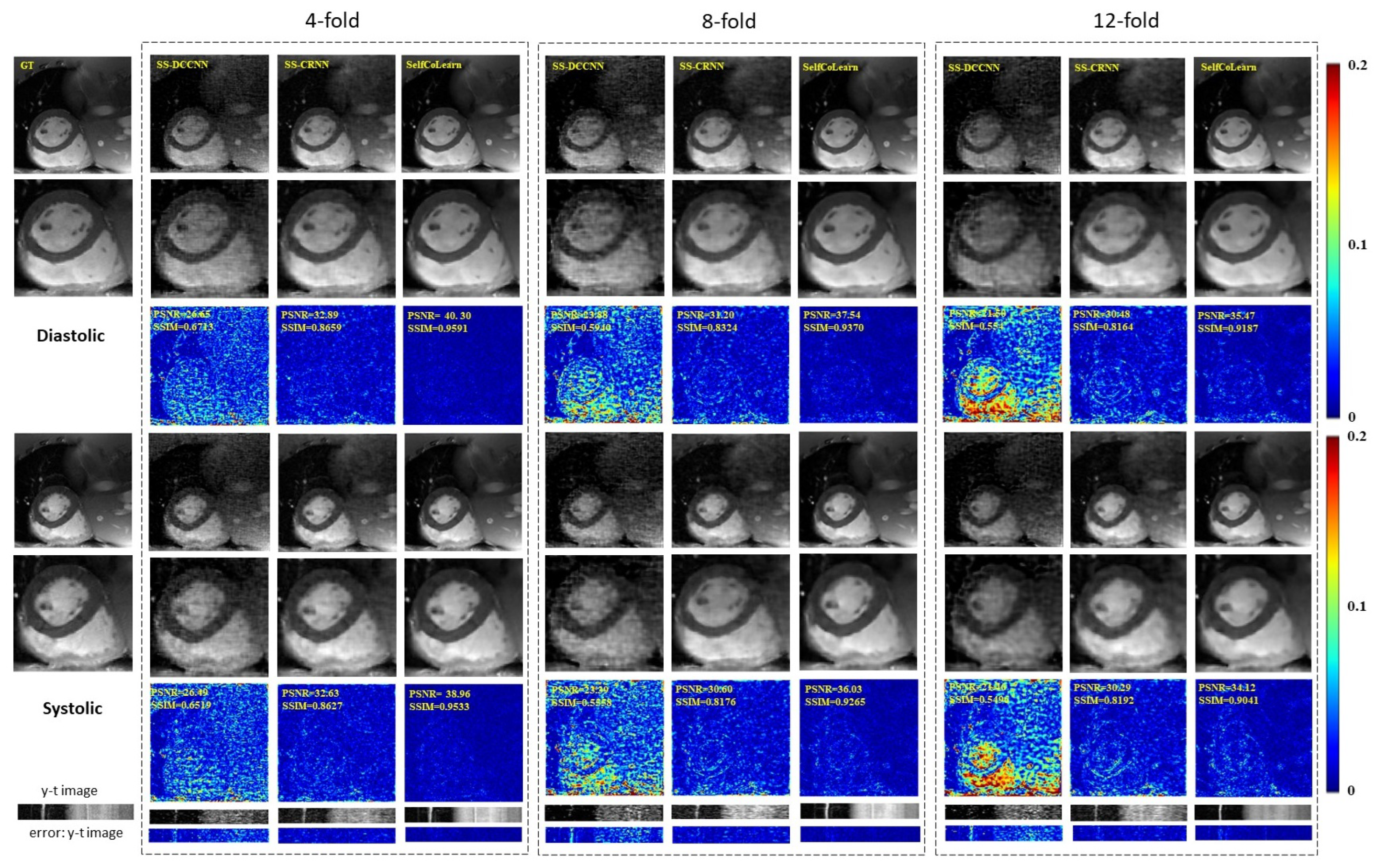

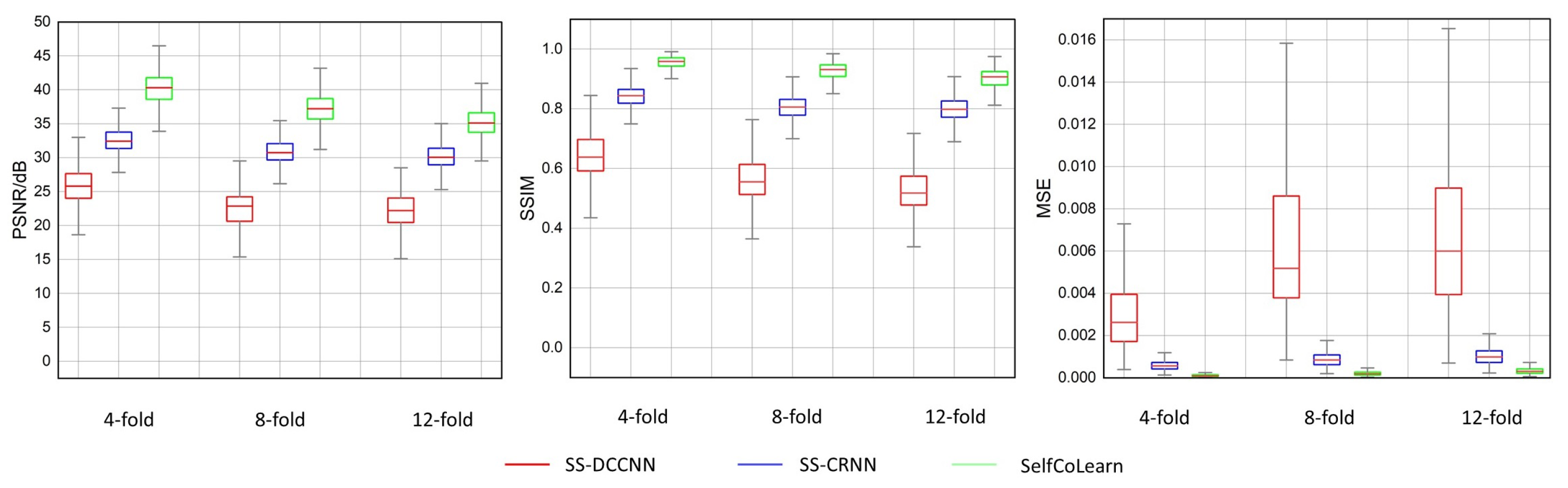

3.2. Comparisons to State-of-the-Art Unsupervised Methods

3.3. Comparisons to State-of-the-Art Supervised Methods

4. Discussion

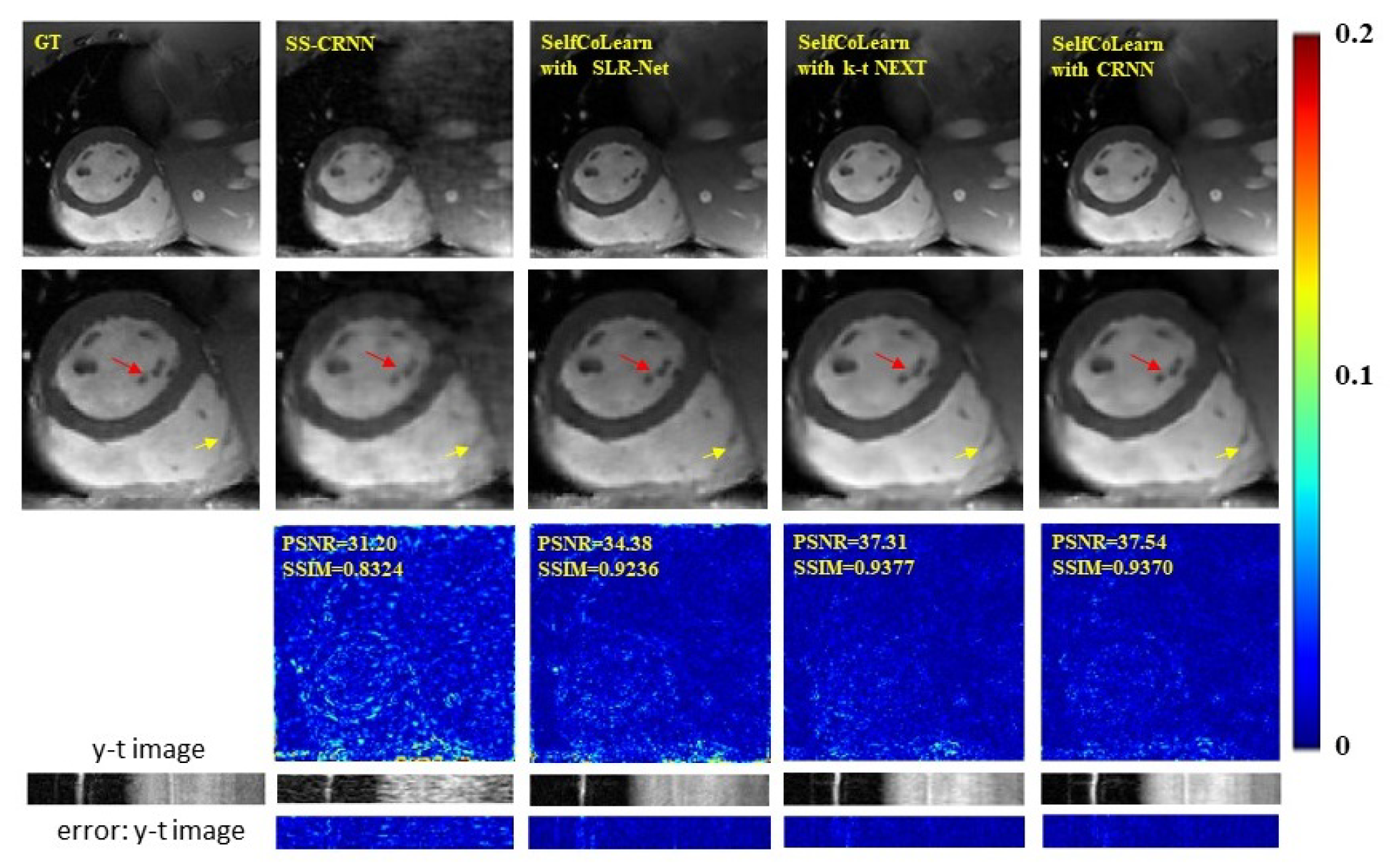

4.1. Network Backbone Architectures

4.2. Co-Training Loss Function

4.3. Loss Functions

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| AF | Methods | Training Pattern | PSNR (dB) | SSIM | MSE () |
|---|---|---|---|---|---|
| 4-fold | SS-DCCNN | Self-supervised | 25.81 ± 2.86 | 0.6409 ± 0.0739 | 32.81 ± 24.85 |
| 4-fold | SS-CRNN | Self-supervised | 32.49 ± 1.79 | 0.8383 ± 0.0387 | 6.14 ± 2.62 |
| 4-fold | SelfCoLearn | Self-supervised | 40.34 ± 2.69 | 0.9536 ± 0.0239 | 1.11 ± 0.72 |
| 8-fold | SS-DCCNN | Self-supervised | 22.56 ± 2.71 | 0.5615 ± 0.0732 | 67.87 ± 49.27 |
| 8-fold | SS-CRNN | Self-supervised | 30.81 ± 1.77 | 0.8015 ± 0.0427 | 9.02 ± 3.75 |
| 8-fold | SelfCoLearn | Self-supervised | 37.27 ± 2.40 | 0.9243 ± 0.0338 | 2.17 ± 1.22 |
| 12-fold | SS-DCCNN | Self-supervised | 22.17 ± 2.76 | 0.5270 ± 0.0702 | 74.89 ± 54.96 |
| 12-fold | SS-CRNN | Self-supervised | 30.14 ± 1.78 | 0.7943 ± 0.0444 | 10.54 ± 4.40 |
| 12-fold | SelfCoLearn | Self-supervised | 35.19 ± 2.24 | 0.8985 ± 0.0399 | 3.44 ± 1.78 |

| AF | Methods | Training Pattern | PSNR (dB) | SSIM | MSE () |
|---|---|---|---|---|---|
| 4-fold | U-Net | Supervised | 33.77 ± 1.96 | 0.8698 ± 0.0391 | 4.66 ± 2.22 |
| 4-fold | SelfCoLearn | Self-supervised | 40.34 ± 2.69 | 0.9536 ± 0.0239 | 1.11 ± 0.72 |
| 4-fold | CRNN | Supervised | 40.89 ± 2.90 | 0.9553 ± 0.0237 | 1.01 ± 0.68 |
| 8-fold | U-Net | Supervised | 32.63 ± 1.97 | 0.8329 ± 0.0456 | 6.06 ± 2.88 |
| 8-fold | SelfCoLearn | Self-supervised | 37.27 ± 2.40 | 0.9243 ± 0.0338 | 2.17 ± 1.22 |
| 8-fold | CRNN | Supervised | 38.09 ± 2.52 | 0.9269 ± 0.0342 | 1.83 ± 1.07 |
| 12-fold | U-Net | Supervised | 31.96 ± 1.88 | 0.8315 ± 0.0478 | 6.99 ± 3.03 |
| 12-fold | SelfCoLearn | Self-supervised | 35.19 ± 2.24 | 0.8985 ± 0.0399 | 3.44 ± 1.78 |
| 12-fold | CRNN | Supervised | 36.32 ± 2.29 | 0.9048 ± 0.0392 | 2.67 ± 1.42 |

| Methods | Training Pattern | PSNR (dB) | SSIM | MSE () |
|---|---|---|---|---|
| SS-CRNN | Self-supervised | 30.81 ± 1.77 | 0.8015 ± 0.0427 | 9.02 ± 3.75 |
| SelfCoLearn with SLR-Net | Self-supervised | 33.58 ± 2.24 | 0.9001 ± 0.0369 | 5.57 ± 10.48 |
| SelfCoLearn with k-t Next | Self-supervised | 36.95 ± 2.39 | 0.9226 ± 0.0343 | 2.34 ± 1.32 |
| SelfCoLearn with CRNN | Self-supervised | 37.27 ± 2.40 | 0.9243 ± 0.0338 | 2.17 ± 1.22 |

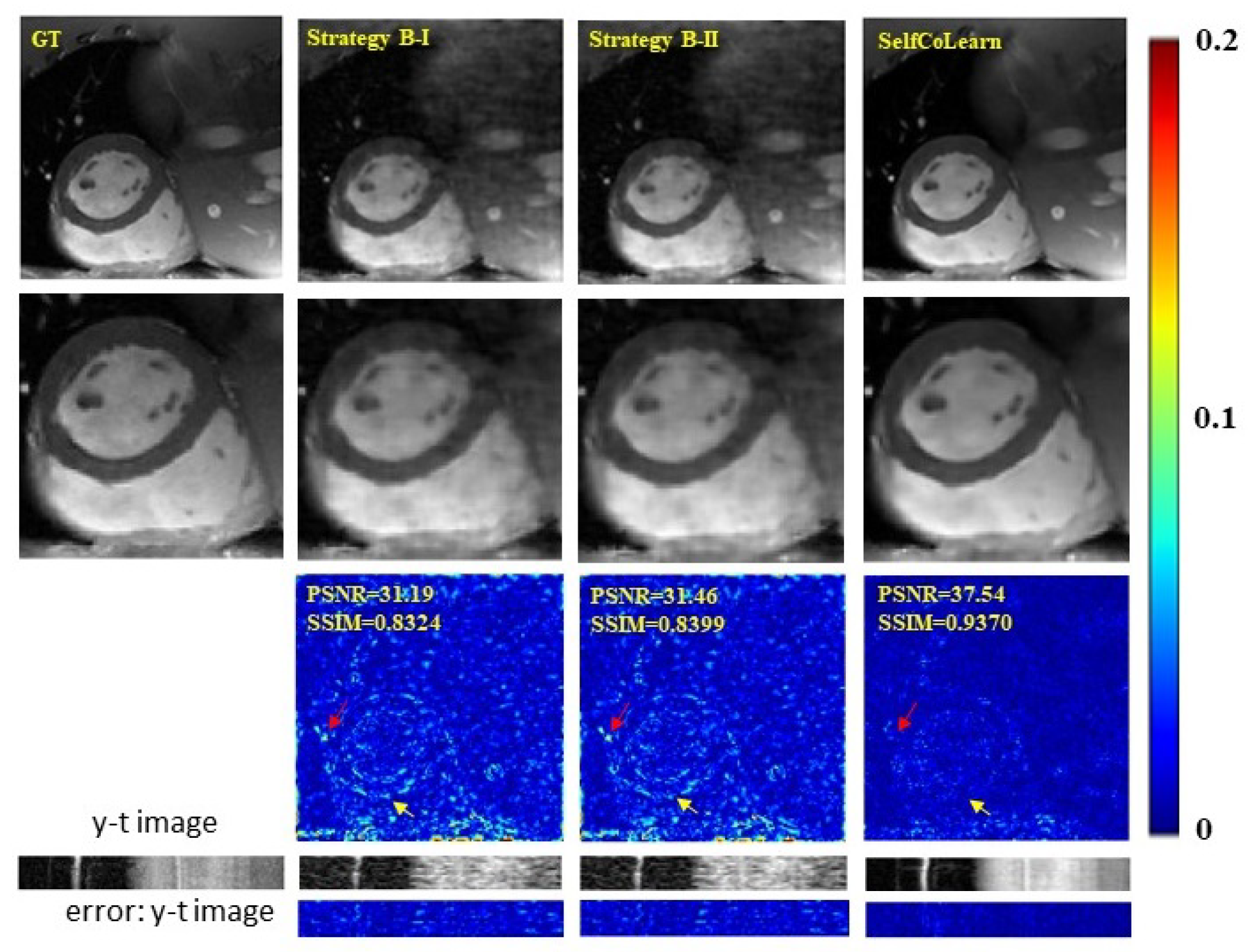

| Methods | Single-Net | Parallel-Net | | | PSNR (dB) | SSIM | MSE () |
|---|---|---|---|---|---|---|---|
| Strategy B-I | √ | × | × | × | 30.81 ± 1.77 | 0.8015 ± 0.0427 | 9.02 ± 3.75 |
| Strategy B-II | √ | × | √ | × | 31.04 ± 1.74 | 0.8102 ± 0.0411 | 8.53 ± 3.50 |
| SelfCoLearn | × | √ | √ | √ | 37.27 ± 2.40 | 0.9243 ± 0.0338 | 2.17 ± 1.22 |

| Methods | | | PSNR (dB) | SSIM | MSE () |
|---|---|---|---|---|---|
| Strategy C-I | x-t domain | k-space | 37.00 ± 2.35 | 0.9230 ± 0.0344 | 2.30 ± 1.29 |
| Strategy C-II | x-t domain | x-t domain | 37.20 ± 2.37 | 0.9235 ± 0.0343 | 2.20 ± 1.22 |
| Strategy C-III | k-space | k-space | 37.27 ± 2.40 | 0.9243 ± 0.0338 | 2.17 ± 1.22 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zou, J.; Li, C.; Jia, S.; Wu, R.; Pei, T.; Zheng, H.; Wang, S. SelfCoLearn: Self-Supervised Collaborative Learning for Accelerating Dynamic MR Imaging. Bioengineering 2022, 9, 650. https://doi.org/10.3390/bioengineering9110650