Enhancing Clinical Decision Support with Adaptive Iterative Self-Query Retrieval for Retrieval-Augmented Large Language Models

Abstract

1. Introduction

- (a) Adaptive Self-Query Retrieval (SQR): Clinically oriented schemas for metadata-aware retrieval are implemented, with a robust fallback mechanism for cases where reliable auto-population is not feasible.

- (b) Iterative Query Refinement (IQR): A self-critiquing and rewriting loop updates the structured queries and related data until a predefined quality criterion is met.

- (c) Composite context scoring: Evidence is prioritized using a principled blend of semantic similarity, lexical overlap, and length normalization to mitigate chunk-length bias.

- (d) Model-agnostic, modular design: LLM, embedders, and corpora are swappable without major architectural changes.

- (e) Dual evaluation: Clinician-facing metrics (accuracy and relevance) are paired with information-retrieval metrics (precision, recall, and F1) to link clinical utility with retrieval quality.

- (f) Empirical gains over a basic RAG baseline: All evaluated metrics increase steadily from the basic RAG model to the complete pipeline, which implements SQR with a fallback mechanism and IQR.

- (g) Statistical transparency: Results are reported in a standardized way (two-sided α = 0.05; ANOVA with Tukey for accuracy/relevance; IR metrics descriptive with clear definitions).

- (h) Clinically grounded proof-of-concept: Demonstrated on postoperative rhinoplasty queries, with a path to broader specialty-specific deployments.
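The refinement loop named in contribution (b) can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `refine_until_good`, the two callables, the threshold of 0.5, and the cap of three iterations are all hypothetical choices made for illustration. The sketch only shows the control flow of rewriting a query until a quality criterion is met while keeping the best-scoring iteration.

```python
from typing import Callable, List, Tuple


def refine_until_good(
    query: str,
    answer_and_score: Callable[[str], Tuple[str, float]],
    rewrite: Callable[[str, str, float], str],
    threshold: float = 0.5,  # hypothetical quality criterion
    max_iters: int = 3,      # hypothetical iteration cap
) -> Tuple[str, str, float, List[float]]:
    """Return (best_query, best_answer, best_score, score_history)."""
    # Iteration 0: answer and score the query as given.
    current_query = query
    current_answer, current_score = answer_and_score(current_query)
    best = (current_query, current_answer, current_score)
    history = [current_score]

    for _ in range(max_iters):
        if best[2] >= threshold:
            break  # quality criterion met, stop refining
        # Critique/rewrite step: produce a new query from the last attempt.
        current_query = rewrite(current_query, current_answer, current_score)
        current_answer, current_score = answer_and_score(current_query)
        history.append(current_score)
        # Keep the best-scoring iteration seen so far.
        if current_score > best[2]:
            best = (current_query, current_answer, current_score)

    return best[0], best[1], best[2], history
```

In practice, `answer_and_score` would wrap retrieval, generation, and scoring, and `rewrite` would wrap an LLM call that critiques the previous attempt; both are left abstract here so that the loop stays model-agnostic.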

2. Methods

2.1. Study Design and Setting

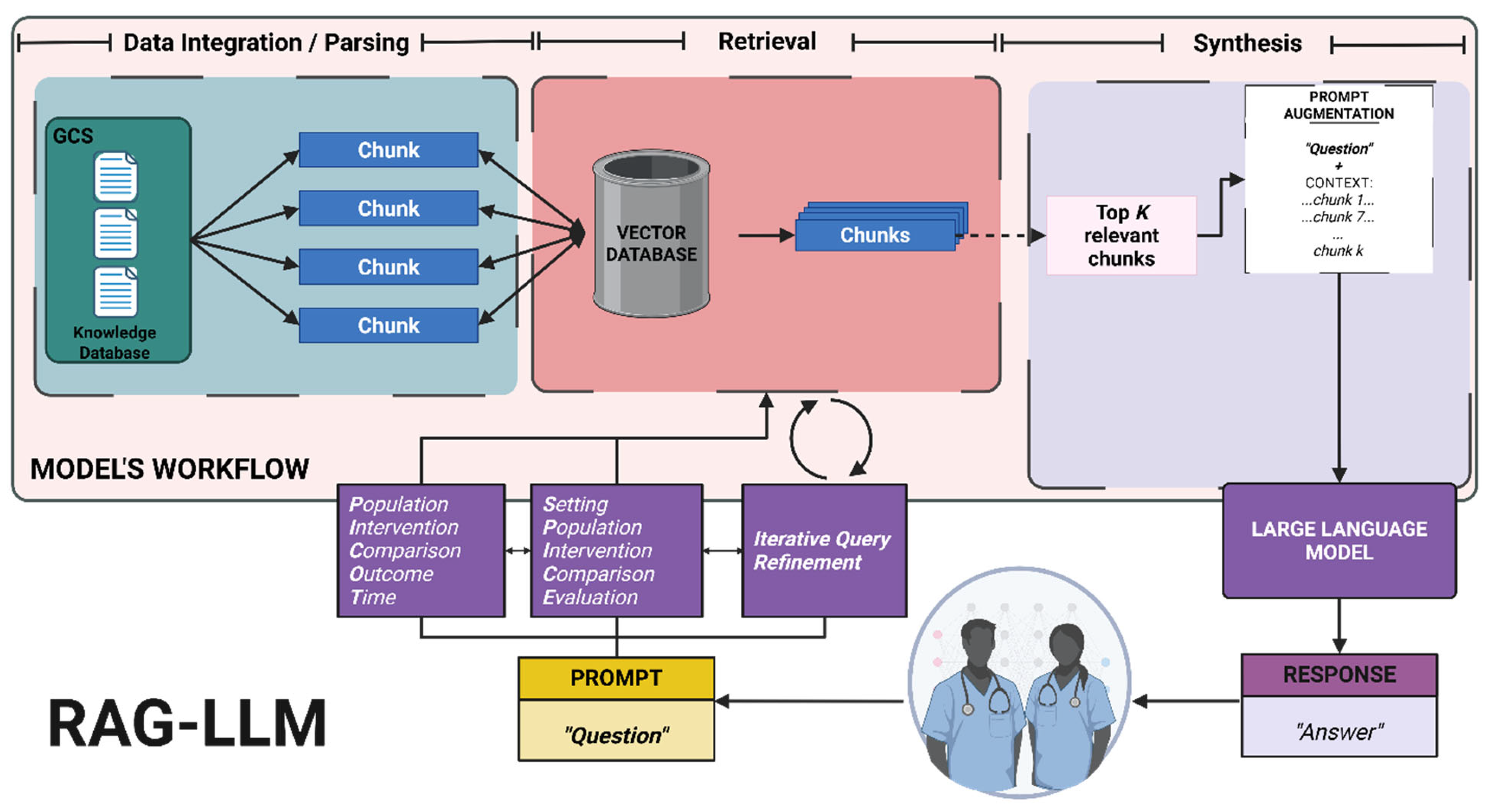

2.2. Pipeline Implementation

2.2.1. Query Decomposition and Self-Query Generation

- Population: “patient at postoperative day 2 after open rhinoplasty,”

- Intervention: “swelling control and pain management,”

- Comparison: “N/A” (if not explicitly stated),

- Outcome: “reduced edema and adequate analgesia,”

- Time: “48 h post-op.”
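A structured query of this kind can be represented as a small record, which also makes it easy to detect when too many components could not be populated. The sketch below is illustrative only: the `PICOT` class, the `choose_schema` helper, and the rule "fall back to SPICE when more than one component is missing" are assumptions, not details confirmed by the text.

```python
from dataclasses import dataclass, fields


def _is_missing(value: str) -> bool:
    """Treat empty strings and values starting with 'N/A' as unpopulated."""
    text = value.strip().lower()
    return text == "" or text.startswith("n/a")


@dataclass
class PICOT:
    population: str
    intervention: str
    comparison: str
    outcome: str
    time: str

    def missing_count(self) -> int:
        # Count components that could not be filled in from the query.
        return sum(1 for f in fields(self) if _is_missing(getattr(self, f.name)))


def choose_schema(query: PICOT, max_missing: int = 1) -> str:
    """Return 'PICOT' if the query is complete enough, else 'SPICE'.

    max_missing=1 is an assumed tolerance, not a value from the paper.
    """
    return "PICOT" if query.missing_count() <= max_missing else "SPICE"


example = PICOT(
    population="patient at postoperative day 2 after open rhinoplasty",
    intervention="swelling control and pain management",
    comparison="N/A",
    outcome="reduced edema and adequate analgesia",
    time="48 h post-op",
)
print(example.missing_count(), choose_schema(example))
```

With the example above, only the comparison is unpopulated, so the query stays in the PICOT schema under the assumed tolerance.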

2.2.2. Document Chunking via Recursive Character Splitting

2.2.3. Embedding Index Construction

2.2.4. Initial Retrieval and Composite Context Scoring

- Q is the free-text clinical question;

- refers to the embedded chunks.

- is the lexical overlap, defined as the proportion of shared keywords between the flattened query and the chunk .

- is a length-normalization term, equal to , which discourages overly long passages from dominating retrieval.
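As a rough illustration of the composite scoring idea, the sketch below blends cosine similarity, keyword overlap, and a length penalty into one score. The weights, the tokenizer, and in particular the form of the length-normalization term are assumptions made for this example; the exact formula used in the study is not reproduced here.

```python
import math
import re
from typing import Sequence, Set


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two embedding vectors (0.0 if either is zero)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def keywords(text: str) -> Set[str]:
    """Lowercased alphanumeric tokens; a stand-in for real keyword extraction."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def lexical_overlap(query: str, chunk: str) -> float:
    """Proportion of query keywords that also appear in the chunk."""
    q = keywords(query)
    return len(q & keywords(chunk)) / len(q) if q else 0.0


def length_norm(chunk: str) -> float:
    """Assumed penalty that shrinks as the chunk gets longer."""
    return 1.0 / (1.0 + math.log1p(len(chunk.split())))


def composite_score(
    query_vec: Sequence[float],
    chunk_vec: Sequence[float],
    query: str,
    chunk: str,
    w_sem: float = 0.6,  # hypothetical weights
    w_lex: float = 0.3,
    w_len: float = 0.1,
) -> float:
    return (
        w_sem * cosine(query_vec, chunk_vec)
        + w_lex * lexical_overlap(query, chunk)
        + w_len * length_norm(chunk)
    )
```

With equal semantic and lexical terms, the shorter of two chunks receives the higher score, which is the chunk-length bias correction described above.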

2.2.5. Initial Answer Generation and Scoring

2.2.6. Adaptive, Corrective Self-Refinement Loop

2.2.7. Fallback Mechanism and Final Answer Synthesis

2.2.8. Implementation Details

2.3. Evaluation Tools and Outcome Metrics

2.4. Statistical Analyses

3. Results

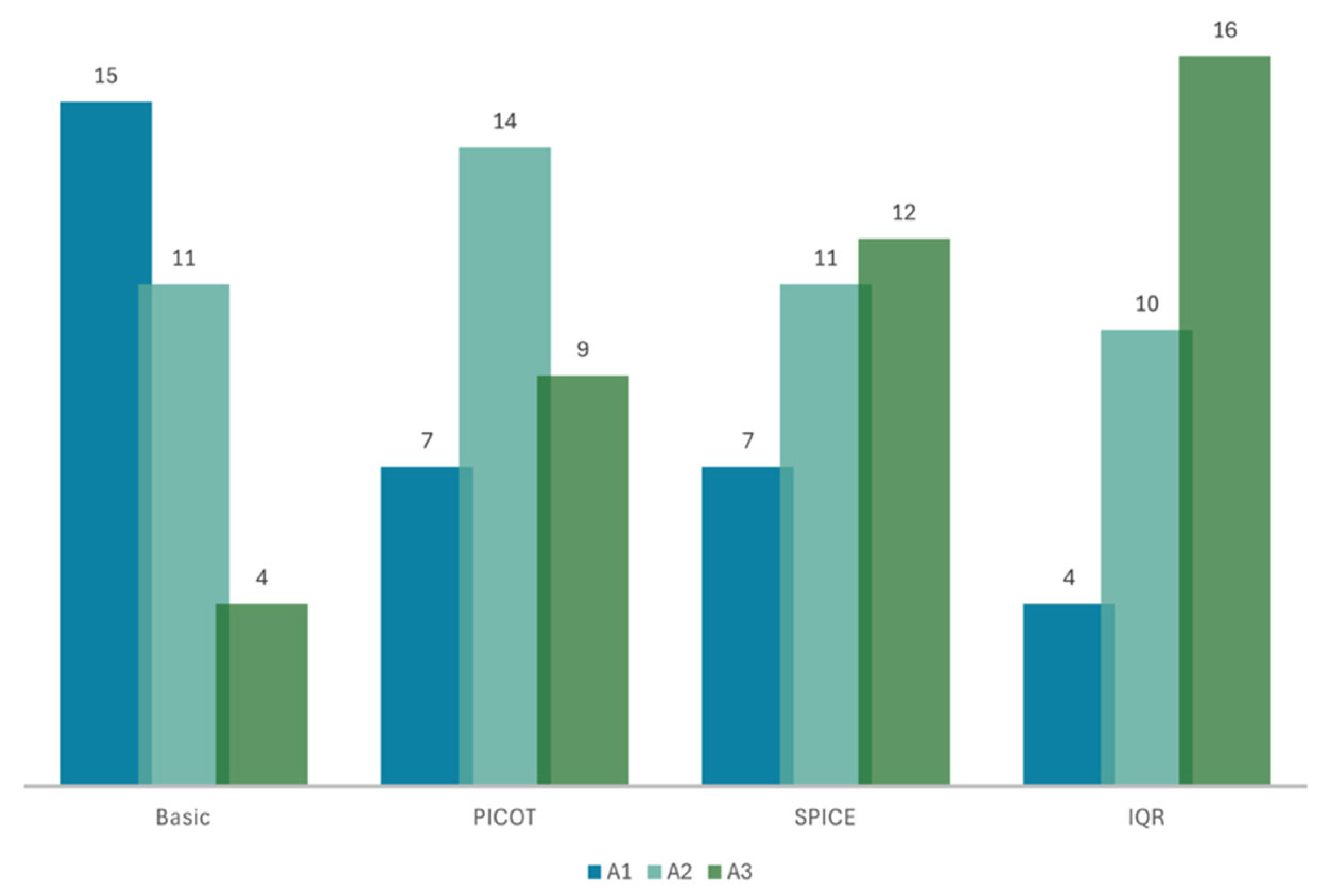

3.1. Medical Accuracy

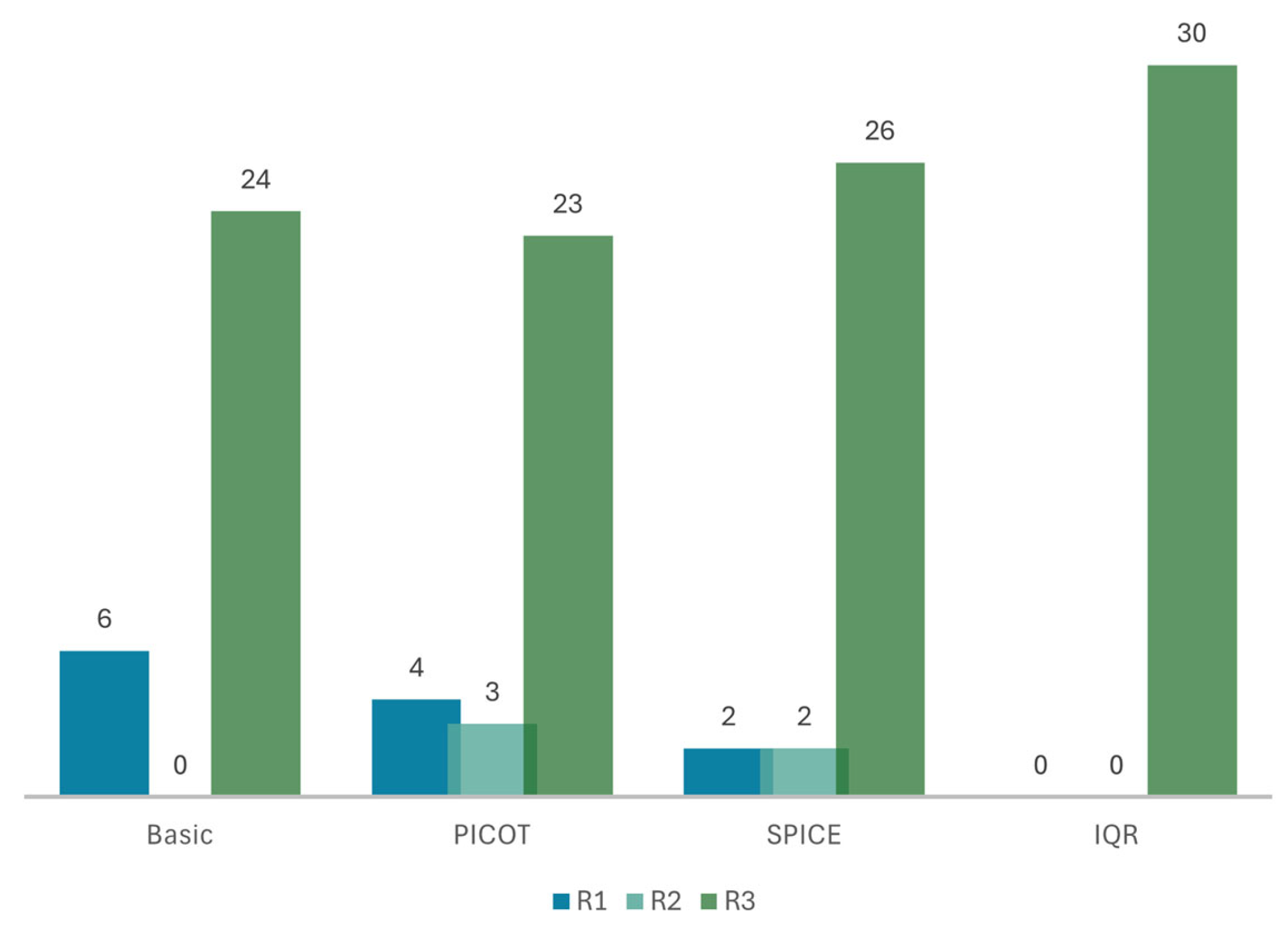

3.2. Clinical Relevance

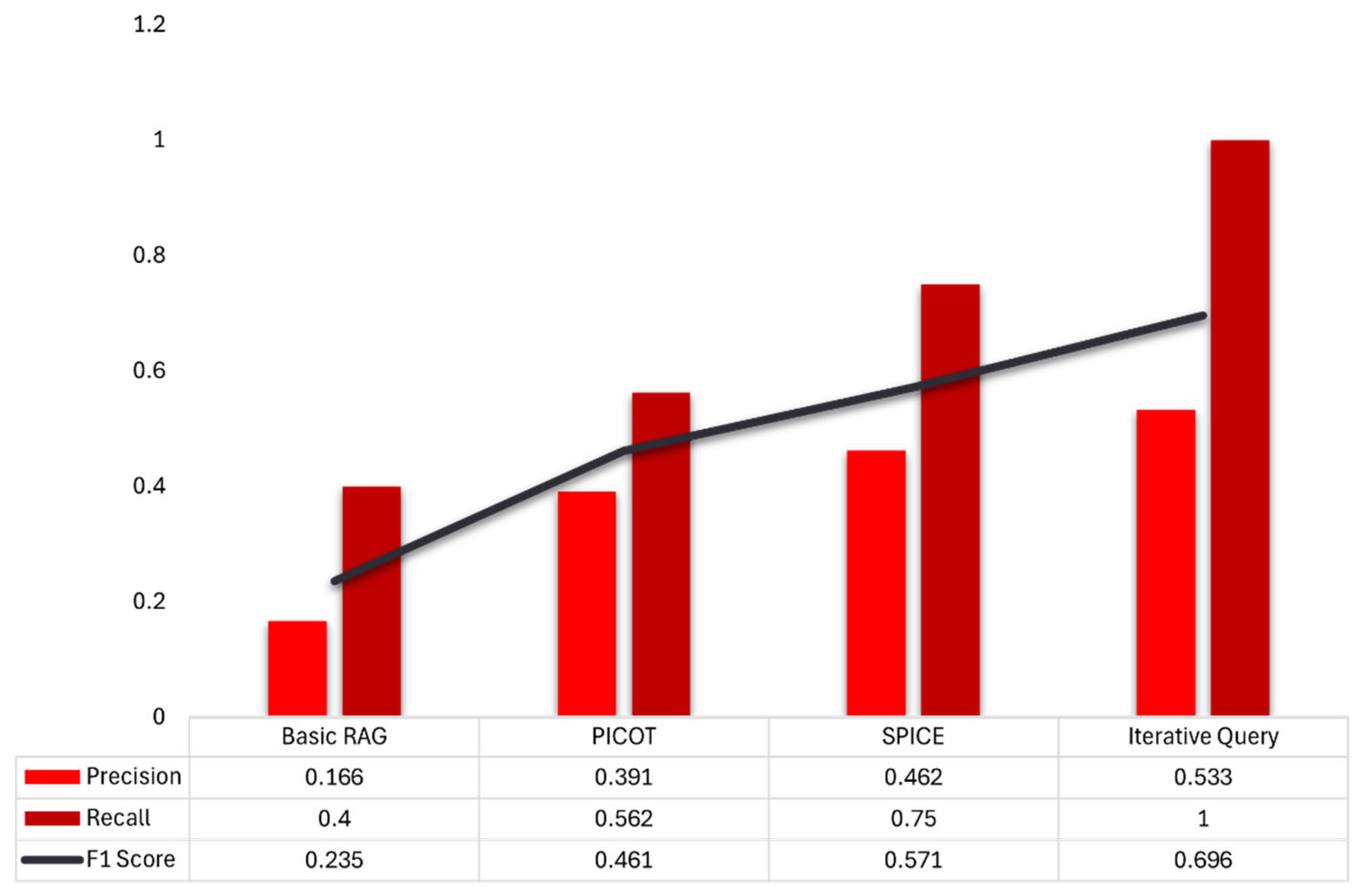

3.3. Information-Retrieval Performance

3.4. Self-Query Retrieval and Iterative-Query Refinement

4. Discussion

4.1. Interpretation of Findings

4.2. Comparison with Current Research

4.3. Impact on the Medical Practice

4.4. Limitations

5. Conclusions

6. Future Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| 1. Original query: “I had aesthetic rhinoplasty with an open technique three days ago. What should I apply to the incision?” | ||

| Worst scoring iteration: Population: Patients three days post-open rhinoplasty Intervention: Topical applications to the incision site Comparison: No treatment/standard postoperative care (this is implied as the query asks what can be applied, suggesting they are not currently using anything beyond standard care) or surgeon-recommended treatment (as the query specifically asks about surgeon recommendations, comparing other options to the surgeon’s advice is relevant) Outcome: Improved healing and minimized scarring Time: Three days post-surgery Refined Query = Three days after open rhinoplasty, what can I apply to my incision to aid healing and minimize scarring? Is there anything my surgeon specifically recommended I use? Score = 0.4847764803303612 | Best scoring iteration: Population: Patients three days post-open rhinoplasty Intervention: Topical application of ointments/creams or other treatments to the surgical incision Comparison: Keeping the incision dry, or alternative surgeon-prescribed treatments (implicitly including standard postoperative care) Outcome: Improved healing and minimized scarring Time: Three days post-operatively Refined Query = Three days after open rhinoplasty, what can I apply to my incision to aid healing and minimize scarring? Should I be using an ointment, keeping it dry, or something else prescribed by my surgeon? Score = 0.5628299422123852 | Final Response: After an open rhinoplasty, patients should do the following to the incision three days after the procedure: Gently clean the visible sutures in the columella (if present) and nasal vestibule to prevent crusting. To clean the sutures, use a solution of half-strength hydrogen peroxide (the patient may mix equal amounts of water and hydrogen peroxide) applied with a cotton-tipped applicator. Instruct patients before surgery that doing this consistently will make suture removal faster and less painful, and they will almost always comply. After cleaning the sutures, apply a thin coating of antibacterial ointment to the sutures. Clean in this way two to three times per day to prevent crust accumulation in most cases. Keep the cast dry if one has been applied. |

| 2. Original query: “I had aesthetic rhinoplasty with an open technique three days ago. When can I wear my glasses?” | ||

| Worst scoring iteration: Setting: Postoperative care, outpatient. Population: Patients who have undergone open rhinoplasty. Intervention: Wearing glasses resting on the bridge of the nose. Comparator: Not wearing glasses or wearing glasses without a bridge contact (e.g., taped to forehead). Evaluation: Time until safe resumption of wearing glasses on the bridge of the nose post-rhinoplasty (i.e., without risk of complications such as displacement of nasal bones or cartilage). Refined Query = I had open rhinoplasty three days ago. When can I safely rest glasses on the bridge of my nose again? Score = 0.3924790531410891 | Best scoring iteration: Setting: Postoperative (3 days post-op) Population: Adult patient who underwent open rhinoplasty Intervention: Wearing glasses Comparator: Not wearing glasses/Alternative methods of vision correction (e.g., contact lenses) Evaluation: Time until safe resumption of glasses wearing; Strategies to minimize nasal pressure Refined Query = I had open rhinoplasty 3 days ago. How long should I wait before wearing glasses, and what can I do to minimize pressure on my nose in the meantime? Score = 0.5274241349913857 | Final Response: 6 weeks: This is the general timeframe most surgeons recommend waiting before wearing glasses again after open rhinoplasty. This allows the nose adequate time to heal and minimize the risk of pressure or displacement on the newly restructured tissues. |

| 3. Original query: “I had aesthetic rhinoplasty with an open technique three days ago. I have a persistent headache. What should I do?” | ||

| Fallback and worst scoring iteration: Population: Patients who have undergone open aesthetic rhinoplasty Intervention: N/A (The intervention is the rhinoplasty itself, and we are looking at a consequence, not comparing interventions) Comparison: N/A (Not comparing different interventions or a lack of intervention) Outcome: Persistent headache Time: Three days postoperative → Missing components: 2 ↪ Iteration 0 PICOT failed/incomplete: Two of the PICOT components are missing. → Switching to SPICE fallback. Setting: Postoperative (3 days post-op) Population: Patients who have undergone open rhinoplasty Intervention: Experiencing persistent headache Comparator: (Implicit) Not experiencing a headache/Normal postoperative course Evaluation: Normality of the headache, Management/treatment options for the headache Refined Query = “Three days after open rhinoplasty, I’m experiencing a persistent headache. Is this normal, and what should I do about it?” Score = 0.429184089201252 | Best scoring iteration: Setting: Postoperative (3 days post-op) open rhinoplasty Population: Patients undergoing open rhinoplasty Intervention: Headache management strategies Comparator: N/A (The question is about managing an existing headache, not comparing interventions.) Evaluation: Headache resolution/reduction; Normality of persistent headache post-op. (Implicitly, the query also seeks to evaluate whether contacting the surgeon is necessary). Refined Query = “I had open rhinoplasty three days ago and I have a persistent headache. Is this normal, and what can I do to alleviate it? Should I contact my surgeon?” Score = 0.526509184295481 | Final Response: I understand you are concerned about sun exposure after your open aesthetic rhinoplasty a week ago. Based on the information in the provided context, here is what I can tell you: Excessive heat and sun exposure to the nose should be avoided for 3 months after the operation. This includes sunbathing, solarium treatments, and even prolonged exposure to direct sunlight. It is important to protect your nose while outdoors. Wear a wide-brimmed hat and apply sunscreen with an SPF of 30 or higher to the exposed areas of your face, including your nose. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Prabha, S.; Gomez-Cabello, C.A.; Haider, S.A.; Genovese, A.; Trabilsy, M.; Wood, N.G.; Bagaria, S.; Tao, C.; Forte, A.J. Enhancing Clinical Decision Support with Adaptive Iterative Self-Query Retrieval for Retrieval-Augmented Large Language Models. Bioengineering 2025, 12, 895. https://doi.org/10.3390/bioengineering12080895
